Abstract

Accurate molecular property prediction requires input representations that preserve substructural details and maintain syntactic consistency. SMILES (Simplified Molecular Input Line Entry System), while widely used, does not guarantee validity and allows multiple representations for the same compound. SELFIES (Self-Referencing Embedded Strings) addresses these limitations through a robust grammar that ensures structural validity. This study investigates whether a SMILES-pretrained transformer, ChemBERTa-zinc-base-v1, can be adapted to SELFIES using domain-adaptive pretraining without modifying the tokenizer or model architecture. Approximately 700,000 SELFIES-formatted molecules from PubChem were used for adaptation, completed within 12 h on a single NVIDIA A100 GPU. Embedding-level evaluation included t-distributed stochastic neighbor embedding (t-SNE), cosine similarity, and regression on twelve QM9 properties using frozen transformer weights. The domain-adapted model outperformed the original SMILES baseline and slightly outperformed the performance of ChemBERTa-77 M-MLM across most targets, despite a 100-fold difference in pretraining scale. For downstream evaluation, the model was fine-tuned end-to-end on ESOL, FreeSolv, and Lipophilicity, achieving root mean squared error (RMSE) values of 0.944, 2.511, and 0.746, respectively. These results demonstrate that SELFIES-based adaptation offers a cost-efficient alternative for molecular property prediction, without relying on molecular descriptors, 3D features, or large-scale infrastructure.

Similar content being viewed by others

Introduction

The chemical space is estimated to contain approximately 1060 drug-like compounds1,2. This scale presents a significant opportunity for identifying novel pharmaceuticals, materials, and catalysts. However, most of these compounds have not been experimentally characterized3,4. Computational methods are now central to property prediction and chemical space exploration and help mitigate the limitations of large-scale experimental screening5. Among these methods, are descriptor-based prediction6 and graph or geometry-based methods7. Descriptor-based prediction relies on predefined features (e.g., molecular weight, fingerprints) derived from tools like RDKit8which are then fed as inputs for machine learning prediction9. On the other hand, graph-based and geometry-based predictions use molecular graphs to model atoms and bonds, incorporating 2D connectivity and 3D spatial geometry to predict molecular and quantum mechanical properties10,11. Of these methods, geometric graph neural networks have shown state-of-the-art performance in several molecular property prediction benchmarks7,12.

However, there is a common limitation of descriptor-based and graph-based prediction paradigms: they often depend on molecular descriptors (e.g., from RDKit) or privileged 3D information13both of which may be unavailable and impractical to generate for large, novel chemical libraries14. This requirement impedes scalability when navigating the vast chemical space. Consequently, these methods may struggle to generalize efficiently in high-throughput or early-stage exploratory analyses, where computational overhead and minimal data prerequisites are critical15.

Recently, transformer architectures16 have assumed a central role, building on their early success in natural language processing (NLP) to address molecular modelling tasks17,18. Initially developed to handle long-range contextual relationships in text, transformers exploit multi-head attention to capture dependencies across entire sequences, a feature that proves advantageous when treating molecules as strings19. Moreover, transformers’ capacity for self-supervised training on large-scale unlabeled data precludes the need for molecular descriptors or expensive 3D information13,20. As a result, they can learn context-rich embeddings that generalize to novel molecules. This scalability is particularly valuable in high-throughput settings, where rapid exploration of vast chemical spaces is crucial. These properties make transformers a compelling alternative to traditional methods for molecular representation and property prediction.

SMILES (Simplified Molecular Input Line Entry System)21 is the most prevalent string-based format for molecules. It encodes chemical graphs into linear notations resembling sentences, allowing NLP models to operate as if they are processing language. Many large-scale molecular transformers, such as ChemBERTa-zinc-base-v1[22] , rely on SMILES for both pretraining and downstream predictions. A more performant variant, ChemBERTa-77 M-MLM20was trained on 77 million SMILES strings and achieved strong performance across a broad range of property prediction tasks, outperforming graph-based models such as D-MPNN23 on 6 out of 8 MoleculeNet24 benchmarks. This improvement can be attributed to use of a large-scale pretraining dataset (77 M SMILES strings) and transformers’ ability to automate feature extraction directly from molecular strings20.

However, SMILES exhibits notable limitations, including the absence of strict valency checks and multiple valid representations for a single compound25,26. These flaws can degrade performance in tasks requiring stable, unambiguous encodings27. SELFIES (Self-Referencing Embedded Strings)28 was introduced to mitigate these limitations. Every SELFIES string is guaranteed to represent a valid molecular structure, avoiding many of the shortcomings associated with SMILES. This could make SELFIES more robust for property prediction, as the encoding is always chemically valid28,29. SELFormer30a transformer model trained on around 2 million SELFIES strings, demonstrated state-of-the-art results on ESOL and SIDER benchmarks24. On ESOL, SELFormer improved RMSE by more than 15% over GEM7; a geometry-based graph neural network. On SIDER, it increased ROC-AUC by 10% over MolCLR31. SELFormer also outperformed ChemBERTa-77 M in key molecular property prediction tasks like BBBP, BACE, and SIDER30. These findings suggest that the SELFIES-based transformer can perform at least on par with, and in some cases surpass, the performance of both SMILES-based and graph-based models. Notably, SELFormer, which was pretrained on 2 million molecules30outperformed ChemBERTa-77 M, despite the latter having been pretrained on 77 million molecules20.

While SELFIES-based transformers such as SELFormer have shown strong predictive performance, their development requires training from scratch on large SELFIES corpora using customized tokenizers30. This process is computationally expensive and often inaccessible to groups without substantial computational resources[41]. Even well-resourced laboratories must allocate significant time and hardware to build such models. For smaller research groups, the costs may be prohibitive41,42. In natural language processing, domain-adaptive pretraining (DAPT) has been proposed as a lower-cost alternative, where a pretrained model is further adapted to a new domain using unlabeled data32. This paradigm has shown that the effectiveness of continued pretraining correlates with the degree of divergence between the target domain and the original pretraining corpus, a relationship often assessed through vocabulary overlap32. Even when starting from strong pretrained baselines such as RoBERTa33continued pretraining on domain-specific corpora—such as biomedical, scientific, or review texts—has been shown to yield measurable improvements when the target domain diverges from the original training distribution32.

Even though SELFIES is not a new semantic domain, it represents a distinct molecular notation that differs structurally from SMILES28. SELFIES encodes molecules using bracketed syntax and introduces unique tokens such as [Branch1] and [Ring1]. Despite these differences, SMILES and SELFIES share a substantial portion of their chemical vocabulary, including atomic symbols (e.g., C, O, N), bond indicators (e.g., =, #), and punctuation29. In DAPT, vocabulary overlap is used to estimate similarity between source and target domains. By this measure, the overlap between SMILES and SELFIES suggests that the degree of divergence remains within a range that allows effective adaptation using the original tokenizer.

Building on DAPT paradigm, this study examines whether a transformer pretrained on SMILES can be adapted to SELFIES without changing the tokenizer or reinitializing the model. Although SELFIES uses bracketed tokens and differs in syntax, it shares most of its vocabulary with SMILES, including atoms, bonds, and punctuation. The approach treats SELFIES not as a new domain but as an alternative notation within the same chemical language.

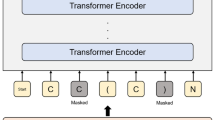

To evaluate this hypothesis, ChemBERTa-zinc-base-v122 was selected as the adaptation base. The model was originally trained on SMILES strings and uses a byte-pair tokenizer34. Approximately 700,000 molecules were sampled from PubChem35 and converted to SELFIES format. Adaptation was performed using masked language modeling36with no changes made to the tokenizer vocabulary. The training procedure was conducted on a single NVIDIA A100 GPU and completed within 12 h, using Google Colab Pro37. The overall methodology is summarized in Fig. 1.

Overview of the methodology for repurposing a SMILES-pretrained transformer to SELFIES. The workflow includes data collection from PubChem, SELFIES conversion, tokenization checks, domain adaptation via masked language modeling, embedding evaluation, and downstream fine-tuning on ESOL, FreeSolv, and Lipophilicity.

The adapted model was evaluated at the embedding level to determine whether it produced chemically coherent representations of SELFIES inputs. t-SNE38 projections and cosine similarity39 were used to analyze clustering and pairwise distances among embeddings for molecules with common functional groups. Also, frozen embeddings were used to predict twelve properties from the QM9 dataset40. These evaluations were designed to assess whether the adapted model retained chemically meaningful structure without requiring end-to-end retraining.

Downstream predictive performance was further tested through full model finetuning on three benchmarks: ESOL, FreeSolv, and Lipophilicity24. All datasets were scaffold-split24 during evaluation. The adapted model matched or exceeded the performance of SMILES-based transformer baselines and graph neural networks across all tasks.

These findings confirm that a SMILES-pretrained model can be adapted to SELFIES through representation-level domain adaptation. The approach avoids the need for specialized tokenization or pretraining from scratch, while producing embeddings that are both structurally coherent and predictive. Although the primary objective is not to establish new state-of-the-art results, the study provides a generalizable framework for extending pretrained molecular transformers to robust notations such as SELFIES.

In addition to achieving high-fidelity performance across multiple benchmarks, the model does not rely on molecular descriptors or three-dimensional structural information. This enables application to novel compounds and use cases where such data are unavailable. Also, the cost-efficient design makes it suitable for research groups with constrained computational resources41,42.

Methods

Tokenization feasibility

Before adaptation, it was necessary to determine whether the tokenizer used in ChemBERTa-zinc-base-v1, originally trained on SMILES, could process SELFIES strings. Two criteria were evaluated: the presence of unrecognized tokens [UNK] and the proportion of SELFIES sequences that exceeded the 512-token input limit33. The presence of unknown tokens would indicate that certain SELFIES symbols are not represented in the tokenizer’s vocabulary. This would break input consistency and lead to loss in essential information43. Sequences that exceed the maximum length are truncated at the input layer, which also results in the loss of information44. Both conditions are expected to reduce the reliability of downstream predictions43,44.

To evaluate tokenizer compatibility, approximately 700,000 SMILES strings were sampled from PubChem35 and converted to SELFIES using the Python “selfies” library45. Molecules that failed the conversion step were excluded. The resulting SELFIES strings were passed directly into the ChemBERTa-zinc-base-v1 tokenizer. The tokenizer vocabulary and merges remained unchanged. Each SELFIES string was treated as a complete input sequence, and segmentation followed the merge rules originally learned from SMILES. Sequences were padded or truncated to a fixed length of 512 tokens33. All inputs were formatted using standard transformer conventions. No additional encoding layers or syntax-specific adjustments were applied.

The resulting distribution of token counts for SMILES versus SELFIES, and example molecules along with the token counts for the two notations are summarized in Tables 1 and 2 respectively. Additionally, the frequency distribution of token lengths for both SMILES and SELFIES is shown in Fig. 2. Notably, none of the SELFIES strings contained [UNK] tokens, and only about 0.5% exceeded 512 tokens. Despite SELFIES often generating longer tokens—an average of 136 tokens compared to SMILES’ 36—the tokenizer consistently decomposed SELFIES expressions into tokens recognizable from the original merges. For instance, “acetone” spans 5 tokens in SMILES but 19 in SELFIES; however, such expansions did not produce either truncation or unknown tokens. A higher incidence of truncation or unknown tokens would have introduced systematic information loss and undermined the validity of subsequent adaptation43,44.

Although SELFIES strings were processed successfully using the SMILES-trained tokenizer, detailed inspection revealed that SELFIES-specific tokens such as [= Branch1] and [Ring1] were not tokenized as complete semantic units29,46. Instead, the tokenizer segmented these tokens at the character or sub-character level, producing tokens like ‘[‘, ‘=’, ‘Br’, ‘a’, ‘nc’, ‘h’, ‘1’, ‘]’ for [= Branch1] and ‘[‘, ‘R’, ‘i’, ‘n’, ‘g’, ‘1’, ‘]’ for [Ring1]. As expected, this behaviour reflects the fact that the original tokenizer, trained only on SMILES data, lacks specific merges for SELFIES bracketed terms and therefore falls back on character-level decomposition47. Therefore, SELFIES inputs tend to produce longer tokenized sequences compared to SMILES for the same molecule.

However, a closer analysis of molecules that exceeded the 512-token limit showed that these cases corresponded to compounds with extreme molecular complexity. The molecules exceeding the 512 threshold exhibited molecular formulas such as C₂₂₈H₃₈₂O₁₉₁ (6179 g/mol), C₁₂₄H₁₈₅N₉O₂₀₇S₃₆ (6268 g/mol), C₉₈H₁₄₇N₇O₁₆₁S₂₈ (4897 g/mol), and C₂₀₅H₃₆₆N₃O₁₁₇P₅ (4900 g/mol)—all notably exceeding typical molecule scales40. Moreover, when the original SMILES strings for these molecules were tokenized, they too exceeded 512 tokens, indicating that the sequence length issue arises from the molecules’ intrinsic sizes and branching complexity. For such ultra-large molecules, existing transformers may not be able to represent all structural information due to unavoidable truncation44. Addressing these cases would likely require specialized tokenization schemes or architectural modifications44. However, for the small, medium, and moderately long molecules that dominate benchmark datasets like ESOL, FreeSolv, and Lipophilicity and QM924,40; current models and tokenization strategies remain sufficient.

Tokenization analysis confirmed that the vocabulary and merges from ChemBERTa-zinc-base-v1 provided full coverage for SELFIES symbols. No additional merges or tokenizer modifications were necessary to process the SELFIES corpus.

Domain-adapting to SELFIES

Following the verification that the ChemBERTa-zinc-base-v1 tokenizer can process SELFIES representations without generating unknown tokens and with minimal sequence truncation, the model was subsequently domain-adapted using these SELFIES inputs. The same dataset comprising approximately 700,000 molecules—previously used in the initial feasibility assessment—was employed for domain adaptation. The domain adaptation process followed a standard masked language modeling (MLM)48 approach, masking 15% of tokens at random and training the model to reconstruct them. All domain adaptation procedures, including the masked token sampling and model updates, were performed using Hugging Face Trainer class49 to streamline data collator setup, optimization schedules, and logging.

The domain adaptation was conducted on an NVIDIA A100 GPU within Google Colab Pro50using a per-device batch size of 32. Seventeen epochs of MLM domain adaptation were completed under an Adam optimizer51 (learning rate = 5 × 10⁻⁵, weight decay = 0.01), and mixed-precision FP1652 was enabled to optimize GPU memory usage. The final training loss was 0.0268.

t-SNE visualization

A chemically diverse panel was selected, comprising alkanes, alkenes, alkynes, aromatics, ketones, thiols, and alcohols. Each molecule’s SELFIES string was fed through the domain-adapted model, generating a 768-dimensional embedding via average-pooling of the final-layer hidden states. A t-SNE projection38 (perplexity = 5, random state = 0) was performed, then reduced these embeddings to two dimensions for visualization. This analysis aimed to evaluate whether the repurposed model encodes functional group information in a chemically meaningful way. Distinct molecular classes—such as ketones and alkanes—should occupy separate regions in the resulting two-dimensional projection if the embeddings reflect structural differences. For reference, SMILES strings were processed using the original ChemBERTa-zinc-base-v1, and their embeddings were projected using the same t-SNE configuration to allow direct comparison.

Cosine similarity

Cosine similarity39 was evaluated using a selected subset of molecules, with all similarities computed relative to methane. Molecules with similar structures, such as ethane and propane, were expected to yield higher similarity scores, while dissimilar compounds like benzene or phenol were expected to yield lower scores. This comparison was used to assess whether the SELFIES-based embeddings reflect chemical similarity in latent space. The same procedure was applied to SMILES-based embeddings produced by the original ChemBERTa-zinc-base-v1 to enable direct comparison between the two representations.

QM9 regression using frozen embeddings

Molecular descriptors are highly effective inputs for machine learning models in property prediction13. Transformers offer a new alternative by taking raw SELFIES strings and automatically generating embeddings that act as learned descriptors, without the need for external toolkits or handcrafted features13,20,30. If these embeddings carry enough chemical information to predict properties accurately, they provide a direct and efficient replacement for traditional descriptor pipelines. To test this, we evaluated the domain-adapted model’s performance in property prediction using frozen embeddings53without any additional fine-tuning.

Embedding-level coherence, while informative, must translate into reliable property predictions to validate any practical advantage of our proposed methodology. The QM9 dataset40—which encompasses a variety of quantum, electronic, thermodynamic, and energetic properties—served as a regression benchmark in this study. Specifically, the following twelve properties were evaluated: Dipole Moment (μ), Isotropic Polarizability (α), HOMO Energy \(\in _{{HOMO}}\), LUMO Energy \(\in _{{LOMO}}\), Gap (HOMO–LUMO) \(\in _{{gap}}\), Electronic Spatial Extent \(\:<{\varvec{R}}^{2}>\), Zero-Point Vibrational Energy \(\:\left(\varvec{z}\varvec{p}\varvec{v}\varvec{e}\right)\), Internal Energy at 0 K \(\:{(\varvec{U}}_{0})\), Internal Energy at 298 K \(\:\left(\varvec{U}\right)\), Enthalpy at 298 K \(\:\left(\varvec{H}\right)\), Free Energy at 298 K \(\:\left(\varvec{G}\right)\), and Heat Capacity at 298 K \(\:{(\varvec{C}}_{\varvec{v}})\).

To ensure a consistent molecular subset for regression analysis, all QM9 molecules were first converted from SMILES to SELFIES using the standard implementation of the SELFIES Python library45. Molecules that failed the conversion step were removed. The remaining SELFIES strings were input to the domain-adapted model. Hidden states from the final transformer layer were extracted and averaged across all tokens to produce a 768-dimensional embedding for each molecule. These embeddings were used as fixed representations for the regression task.

This generated consistent 768-dimensional inputs (identical to what ChemBERTa-zinc-base-v1 yields with SMILES). A uniform feed-forward network54—with dropout55 and batch normalization56—was then trained on each property in a five-fold cross-validation57. The same network architecture and training protocols for two other models were implemented: ChemBERTa-zinc-base-v1 and ChemBERTa-77 M-MLM. The objective is to ensure that any observed performance differences are attributed to the underlying embeddings—whether from the domain-adapted SELFIES model, ChemBERTa-zinc-base-v1, or ChemBERTa-77 M-MLM—rather than variations in downstream network architecture.

The consistency of the feed-forward network (including dropout, batch normalization, and identical training procedures) isolates the effect of the embeddings from other confounding factors. Root-mean-squared error (RMSE) and coefficient of determination (R²) were the primary metrics to gauge prediction accuracy. Figure 3 shows the architecture of the feed-forward network. A single set of hyperparameters58 was used for all models. The configuration was selected through preliminary testing to ensure stable convergence across ChemBERTa-zinc-base-v1, ChemBERTa-77 M-MLM, and the domain-adapted model.

Performance on the QM9 regression tasks was used to compare the domain-adapted model against ChemBERTa-zinc-base-v1 and ChemBERTa-77 M-MLM. This evaluation was designed to assess whether adaptation to SELFIES yields improvements over the original SMILES model and whether the adapted model remains competitive with a significantly larger SMILES-pretrained transformer.

Fine-Tuning the repurposed model for lipophilicity, ESOL, and freesolv datasets

While frozen embedding evaluations assessed the structural quality of the learned representations, they do not reflect the model’s full potential under direct supervision. In transformer-based pipelines, task-specific fine-tuning is a standard stage following pretraining or adaptation36,59. This step evaluates whether the domain-adapted model maintains predictive accuracy when trained directly on supervised tasks, and whether the gains observed in frozen embedding evaluations extend to practical property prediction benchmarks. To evaluate practical predictive capacity, the domain-adapted model was fine-tuned60,61 end-to-end on three widely used molecular property prediction benchmarks: ESOL, FreeSolv, and Lipophilicity24.

These datasets are widely used in cheminformatics and drug discovery and represent chemically diverse and application-relevant endpoints62,63,64. The ESOL dataset consists of 1128 molecules with aqueous solubility values reported in log (mol/L). FreeSolv comprises 643 molecules with hydration free energies in water, expressed in kcal/mol63. The Lipophilicity dataset includes 4200 molecules with experimental logP values reflecting the octanol/water distribution coefficient, a proxy for lipophilicity that informs ADME-related properties64. These datasets provide a practical assessment of the model’s generalizability across real-world molecular property prediction tasks.

The datasets were partitioned using scaffold-based splits (80/10/10) to ensure generalization across distinct molecular scaffolds24. To enable direct comparison with existing benchmarks, the finetuning setup followed the SELFormer protocol30including architecture, optimization strategy, and evaluation procedures. The domain-adapted model was fine-tuned for 25 epochs with a batch size of 16 and a learning rate of 5 × 10⁻⁵, using the Adam optimizer and the Hugging Face Trainer API49. The CLS token65 output from the domain-adapted model was passed through dropout, a tanh-activated dense layer, and a regression head. RMSE, MSE, and MAE were recorded on validation splits, and final test predictions were inverse transformed to the original property scale. All hyperparameters and architectural components were held constant across datasets to ensure comparability.

Results & discussion

t-SNE clusters

After domain adaptation, a chemically diverse subset of molecules was selected to examine the structure of the learned embedding space using t-distributed stochastic neighbor embedding (t-SNE). The set included linear alkanes (methane, ethane, propane), aromatic compounds, and molecules containing functional groups such as ketones, thiols, and alcohols. As shown in Fig. 4, embeddings obtained from the domain-adapted model produced clearer separations between functional groups compared to those from the SMILES-pretrained baseline. Alkanes formed a more compact cluster, with separation from other chemical classes such as thiols and aromatics. Molecules containing aromatic rings occupied more localized regions, consistent with the presence of explicit bracketed tokens in SELFIES for ring specification28. Although the tokenizer was not trained on SELFIES syntax, continued masked language modeling48 was sufficient for the model to process bracketed tokens consistently. The adapted model produced more coherent latent representations across functional groups, even when operating with a tokenizer derived from SMILES. The shared use of atomic symbols, bond characters, and punctuation across both notations enabled this adaptation without modifying the tokenizer or introducing new merges.

t-SNE clustering of selected functional groups in (top) ChemBERTa-zinc-base-v1-based embeddings versus (bottom) SELFIES-adapted embeddings. Points represent final-layer mean-pooled vectors for diverse small molecules (e.g., alkanes, aromatics, and thiols). More distinct grouping is visible in the SELFIES embeddings, suggesting bracket expansions help clarify substructural features.

Cosine similarities

Cosine similarity39 was used to quantify the distance between molecular embeddings and assess whether chemical structure was preserved in latent space. Methane was used as the reference molecule, and similarity scores were computed for a set of small compounds covering multiple functional classes, including alkanes, alkenes, alkynes, alcohols, ketones, thiols, and aromatics. The objective was to determine whether the model distinguishes between these classes in a manner consistent with established chemical intuition.

As shown in Fig. 5, the SMILES-pretrained model produced inconsistent similarity rankings. Alkynes such as acetylene and propyne received higher similarity scores to methane than linear alkanes, despite their increased structural complexity. Alkenes also ranked closer to methane than some saturated hydrocarbons. These outcomes suggest that the ChemBERTa-zinc-base-v1 embeddings do not reliably encode structural proximity.

Cosine similarity of various molecules against methane’s embedding, comparing the ChemBERTa-zinc-base-v1 model (top) to the SELFIES-domain-adapted model (bottom). Alkanes (ethane, propane, butane) show higher similarity than ring-containing or heteroatom-rich structures (benzene, phenol). The SELFIES-based model often yields sharper functional distinctions.

The domain-adapted model exhibited a consistent and chemically interpretable pattern in cosine similarity values relative to methane. Within each functional group, shorter molecules were assigned higher similarity scores, while larger or more substituted analogues received progressively lower values. This trend was observed across alkanes, alkenes, alkynes, as well as molecules containing functional groups such as ketones and thiols. The gradation in similarity aligns with structural proximity to the reference molecule and suggests that the adapted model encodes molecular relationships in a manner consistent with chemical intuition.

These results are consistent with the clustering behaviour observed in the t-SNE analysis and support the conclusion that adaptation to SELFIES improved the structural resolution of molecular embeddings. The next section examines whether these differences extend to supervised regression tasks.

QM9 regression using frozen embeddings

The final stage of the embedding-level evaluation used frozen transformer embeddings to predict twelve molecular properties from the QM9 dataset40. Representations were extracted from the final transformer layer and were used as input to a feed-forward neural network trained using five-fold cross-validation.

Three models were compared: ChemBERTa-zinc-base-v1 (v1), ChemBERTa-77 M-MLM (v2), and the domain-adapted model trained on SELFIES (SELFIES-DA). Table 3 summarizes the coefficient of determination (R2), and root mean squared error (RMSE) for each property. SELFIES-DA outperformed the smaller SMILES baseline (v1) across all twelve targets. For polarizability, R² increased from 0.681 to 0.969, and RMSE was reduced by more than half. Similar improvements were observed for \(\in _{{HOMO}}\), \(\in _{{LUMO}}\), and thermodynamic properties such as \(\:\varvec{U}\), \(\:\varvec{H}\), and \(\:{\varvec{C}}_{\varvec{v}}\).

Compared to ChemBERTa-77 M-MLM, SELFIES-DA achieved similar or slightly better performance on most properties, despite being adapted on a dataset roughly 100 times smaller (700,000 vs. 77 million molecules). These results are consistent with trends observed in the t-SNE and cosine similarity analyses and show that SELFIES-based adaptation can yield high-quality embeddings under constrained data and compute conditions. The original ChemBERTa study noted that performance improves with pretraining scale22. It remains possible that significant improvements could be achieved with a larger SELFIES corpus, though the current results demonstrate that stable and accurate representations can already be obtained under modest adaptation conditions. Since the transformer remained frozen during regression, the gains reflect changes in embedding quality introduced by the adaptation process.

The next section evaluates whether similar performance trends hold when the model is fine-tuned end-to-end on supervised property prediction tasks, in contrast to the frozen-embedding approach used for QM9.

Fine-Tuning the repurposed model for lipophilicity, ESOL, and freesolv datasets

To further assess the predictive performance, the domain-adapted model was fine-tuned36,59 on three standard benchmarks: ESOL (aqueous solubility), FreeSolv (hydration free energy), and Lipophilicity (logD). These datasets are widely used for evaluating molecular property prediction24. All tasks used scaffold-based splits, and test performance was measured using root mean squared error (RMSE). Table 4 presents the results alongside established baselines, including SELFormer30ChemBERTa-77 M-MLM20and a range of graph-based models7,18,23,66,67,68. Performance results for the graph-based models were sourced from the GEM study7while results for ChemBERTa-77 M-MLM20 and SELFormer30 were obtained from their respective original publications.

While the SELFIES-fine-tuned model (SELFIES FT) does not outperform the graph-based models, the results in Table 4 show that it achieves competitive performance across all three benchmarks. On ESOL, the model reached an RMSE of 0.944, outperforming several models such as ChemBERTa-77 M-MLM20PretrainGNN66 and GROVERbase18. On FreeSolv, it achieved an RMSE of 2.511, outperforming SELFormer and several graph-based baselines, though still behind geometry-enhanced models such as GEM7. On Lipophilicity, the model reached 0.746, closely aligned with SELFormer (0.735), slightly above AttentiveFP68 (0.721) and GEM7 (0.660).

Representative predictions are shown in Fig. 6. For ESOL, polar molecules such as 2-Methyloxirane and 2-pyrrolidone were associated with high predicted solubility, while large polyaromatic systems like 3,4-Benzopyrene and 3,4-Benzchrysene yielded low logS values. For FreeSolv, the model assigned relatively high hydration free energy to hydrophobic structures such as Octafluorocyclobutane, and low values to polar, hydrogen-bonding compounds such as caffeine and Dexketoprofen. These examples are consistent with expected behaviour and provide evidence that the model learns interpretable relationships between structure and target properties.

Representative predictions from the ESOL and FreeSolv test sets using the SELFIES FT model. Predicted values reflect known solubility and hydration trends, with polar structures ranked higher in ESOL and more negative in FreeSolv. The observations align with chemical intuition and demonstrate that the model produces interpretable outputs across structurally diverse molecules.

Although the model was trained on only 700,000 molecules and without any architectural modifications or tokenizer extensions, it achieved results comparable to models trained on substantially larger corpora or those augmented with 3D geometry7,20. The SELFIES-based adaptation provides a reliable alternative for settings with limited computational resources41,42. It produces accurate predictions without relying on molecular descriptors or structurally privileged inputs, two considerations of immense importance when dealing with novel molecules13. This characteristic provides an advantage over models that depend on engineered features or 3D structural data, especially in early-stage or high-throughput applications15.

The results presented in this study highlight the effectiveness of adapting a SMILES-pretrained transformer to SELFIES without modifying the tokenizer or model architecture. The SELFIES notation, with its context-free grammar, enforces structural validity and eliminates notational ambiguity28,29. These properties appear sufficient to support the learning of chemically relevant patterns across diverse prediction tasks. The use of a preexisting tokenizer, originally trained on SMILES, did not limit model performance when applied to SELFIES. This may suggest that notational standardization can offset the benefits typically associated with large-scale pretraining.

Conclusion

This study presents a cost-efficient strategy for repurposing a SMILES-pretrained transformer to SELFIES without modifying the tokenizer or model architecture. A default SMILES tokenizer was used to process approximately 700,000 SELFIES-formatted molecules, with no unknown tokens or excessive truncation encountered. The domain adaptation was completed in under 12 h on a single NVIDIA A100 GPU. Embedding-level evaluations—including t-SNE, cosine similarity, and QM9 regression—confirmed that SELFIES-based representations captured chemically meaningful structure with greater consistency than the SMILES baseline. On ESOL, FreeSolv, and Lipophilicity benchmarks, the adapted model performed competitively with both string-based and graph-based models.

These results demonstrate that SELFIES can serve as a robust and scalable alternative to SMILES for transformer-based molecular modeling. The approach requires no molecular descriptors, 3D geometry, or custom tokenizer, which makes it accessible to researchers operating under constrained resources. While the model does not outperform the graph-based methods, it achieves comparable and reliable predictions with minimal infrastructure. This work establishes that standardized string-based notations, when paired with domain-adaptive training, can yield practical gains without full-scale retraining. Future work could examine whether scaling the SELFIES corpus improves downstream performance or whether adapting a pretrained language model, such as RoBERTa33 provides additional benefits. Given the structured grammar and limited vocabulary of SELFIES relative to natural language, such models may capture its syntax efficiently and converge with fewer training epochs.

Data availability

The datasets and codes generated during and/or analysed during the current study are available in the Mendeley Data repository: https://data.mendeley.com/datasets/27j2zg6f5x/4.

References

Polishchuk, P. G., Madzhidov, T. I. & Varnek, A. Estimation of the size of drug-like chemical space based on GDB-17 data. J. Comput. Aided Mol. Des. 27, 675–679 (2013).

Reymond, J. L. The chemical space project. Acc. Chem. Res. 48, 722–730 (2015).

Schneider, G. & Fechner, U. Computer-based de Novo design of drug-like molecules. Nat. Rev. Drug Discov. 4, 649–663 (2005).

Segler, M. H. S., Preuss, M. & Waller, M. P. Planning chemical syntheses with deep neural networks and symbolic AI. Nature 555, 604–610 (2018).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry.Preprint at https://arXiv.org/abs/1704.01212 (2017).

David, L., Thakkar, A., Mercado, R. & Engkvist, O. Molecular representations in AI-driven drug discovery: a review and practical guide. J. Cheminform. 12, 56 (2020).

Fang, X. et al. Geometry-enhanced molecular representation learning for property prediction. Nat. Mach. Intell. 4, 127–134 (2022).

Duarte, R., Matos, G., Pak, S. & Rizzo, R. C. Descriptor-Driven de Novo design algorithms for DOCK6 using RDKit. J. Chem. Inf. Model. 63, 5803–5822 (2023).

Seko, A., Togo, A. & Tanaka, I. Descriptors for Machine Learning of Materials Data. in Nanoinformatics 3–23 (Springer, 2018). https://doi.org/10.1007/978-981-10-7617-6_1

Chen, C., Ye, W., Zuo, Y., Zheng, C. & Ong, S. P. Graph networks as a universal machine learning framework for molecules and crystals. Chem. Mater. 31, 3564–3572 (2019).

Pocha, A., Danel, T., Podlewska, S., Tabor, J. & Maziarka, L. Comparison of Atom Representations in Graph Neural Networks for Molecular Property Prediction. in International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2021). 1–8 (IEEE, 2021). (2021). https://doi.org/10.1109/IJCNN52387.2021.9533698

Li, H. et al. A knowledge-guided pre-training framework for improving molecular representation learning. Nat. Commun. 14, 7568 (2023).

Ross, J. et al. Large-scale chemical language representations capture molecular structure and properties.Preprint at https://arXiv.org/abs/2106.09553 (2021).

Reeves, S. et al. Assessing methods and obstacles in chemical space exploration. Applied AI Letters 1, (2020).

Nigam, A., Pollice, R. & Aspuru-Guzik, A. Parallel tempered genetic algorithm guided by deep neural networks for inverse molecular design. Digit. Discovery. 1, 390–404 (2022).

Vaswani, A. et al. Atten. Is all You Need Preprint at https://arXiv.org/abs/1706.03762. (2017).

Schwaller, P. et al. Molecular transformer: A model for Uncertainty-Calibrated chemical reaction prediction. ACS Cent. Sci. 5, 1572–1583 (2019).

Rong, Y. et al. Self-supervised graph transformer on large-scale molecular data. Preprint at https://arXiv.org/abs/2007.02835 (2020).

Rives, A. et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proceedings of the National Academy of Sciences 118, (2021).

Ahmad, W., Simon, E., Chithrananda, S., Grand, G. & Ramsundar, B. ChemBERTa-2: Towards chemical foundation models.Preprint at https://arXiv.org/abs/2209.01712 (2022).

Weininger, D. SMILES, a chemical Language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36 (1988).

Chithrananda, S., Grand, G., Ramsundar, B. & ChemBERTa large-scale self-supervised pretraining for molecular property prediction.Preprint at https://arXiv.org/abs/2010.09885 (2020).

Gasteiger, J., Groß, J. & Günnemann, S. Directional message passing for molecular graphs.Preprint at https://arXiv.org/abs/2003.03123 (2020).

Wu, Z. et al. MoleculeNet: a benchmark for molecular machine learning. Chem. Sci. 9, 513–530 (2018).

O’Boyle, N. M. Towards a universal SMILES representation - A standard method to generate canonical SMILES based on the InChI. J. Cheminform. 4, 22 (2012).

Jin, W., Barzilay, R. & Jaakkola, T. Junction tree variational autoencoder for molecular graph generation. Preprint at https://arXiv.org/abs/1802.04364 (2018).

Xie, E. et al. An AI-Driven framework for discovery of BACE1 inhibitors for alzheimer’s disease. Preprint At. https://doi.org/10.1101/2024.05.15.594361 (2024).

Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-Referencing Embedded Strings (SELFIES): A 100% robust molecular string representation. (2019). https://doi.org/10.1088/2632-2153/aba947

Krenn, M. et al. SELFIES and the future of molecular string representations. Patterns 3, 100588. Preprint at https://arXiv.org/abs/2204.00056.(2022).

Yüksel, A., Ulusoy, E., Ünlü, A. & Doğan, T. SELFormer: molecular representation learning via SELFIES Language models. Mach. Learn. Sci. Technol. 4, 025035 (2023).

Wang, Y., Wang, J. & Cao, Z. Barati farimani, A. Molecular contrastive learning of representations via graph neural networks. Nat. Mach. Intell. 4, 279–287 (2022).

Gururangan, S. et al. Association for Computational Linguistics, Stroudsburg, PA, USA,. Don’t Stop Pretraining: Adapt Language Models to Domains and Tasks. in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics 8342–8360 (2020). https://doi.org/10.18653/v1/2020.acl-main.740

Liu, Y. et al. RoBERTa: A robustly optimized BERT pretraining approach.Preprint at https://arXiv.org/abs/1907.11692 (2019).

Schmidt, C. W. et al. Tokenization Is More Than Compression. in Proceedings of the Conference on Empirical Methods in Natural Language Processing 678–702 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2024). 678–702 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2024). (2024). https://doi.org/10.18653/v1/2024.emnlp-main.40

Kim, S. et al. PubChem substance and compound databases. Nucleic Acids Res. 44, D1202–D1213 (2016).

Devlin, J., Chang, M. W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. Preprint at https://arXiv.org/abs/1810.04805 (2018).

Carneiro, T. et al. Performance analysis of Google colaboratory as a tool for accelerating deep learning applications. IEEE Access. 6, 61677–61685 (2018).

Cai, T. T. & Ma, R. Theoretical foundations of t-SNE for visualizing high-dimensional clustered data. Preprint at https://arXiv.org/abs/2105.07536 (2021).

Guo, K. Testing and validating the cosine similarity measure for textual analysis. SSRN Electron. J. https://doi.org/10.2139/ssrn.4258463 (2022).

Ramakrishnan, R., Dral, P. O. & Rupp, M. Lilienfeld, O. A. Quantum chemistry structures and properties of 134 Kilo molecules. Sci. Data. 1, 140022 (2014). von.

Costa, C. J., Aparicio, M., Aparicio, S. & Aparicio, J. T. The democratization of artificial intelligence: theoretical framework. Appl. Sci. 14, 8236 (2024).

Seger, E. et al. Multiple meanings, goals, and methods. Preprint at https://arXiv.org/abs/2303.12642 (2023).

Luong, T., Sutskever, I., Le, Q., Vinyals, O. & Zaremba, W. Addressing the Rare Word Problem in Neural Machine Translation. in Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) 11–19Association for Computational Linguistics, Stroudsburg, PA, USA, (2015). https://doi.org/10.3115/v1/P15-1002

Guo, M. et al. LongT5: efficient text-to-text transformer for long sequences. Preprint at https://arXiv.org/abs/2112.07916. (2021)

Lo, A. et al. Recent advances in the self-referencing embedded strings (SELFIES) library. Digit. Discovery. 2, 897–908 (2023). arXiv:2302.03620.

Cheng, A. H. et al. Group SELFIES: a robust fragment-based molecular string representation. Digit. Discovery. 2, 748–758 (2023).

Xu, Z. et al. Enhancing character-level understanding in LLMs through token internal structure learning. Preprint at https://arXiv.org/abs/2411.17679 (2024).

Zhao, M., Lin, T., Mi, F., Jaggi, M. & Schütze, H. Masking as an Efficient Alternative to Finetuning for Pretrained Language Models. in Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP) 2226–2241 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). 2226–2241 (Association for Computational Linguistics, Stroudsburg, PA, USA, 2020). (2020). https://doi.org/10.18653/v1/2020.emnlp-main.174

Wolf, T. et al. HuggingFace’s transformers: State-of-the-art natural language processing.Preprint at https://arXiv.org/abs/1910.03771 (2019).

Bisong, E. Google colaboratory. in Building Machine Learning and Deep Learning Models on Google Cloud Platform 59–64 (A, Berkeley, CA, doi:https://doi.org/10.1007/978-1-4842-4470-8_7. (2019).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. Preprint at https://arXiv.org/abs/1412.6980 (2014).

Micikevicius, P. et al. Mixed precision train. Preprint at https://arXiv.org/abs/1710.03740. (2017)

Artetxe, M., Ruder, S. & Yogatama, D. On the Cross-lingual Transferability of Monolingual Representations. in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics 4623–4637Association for Computational Linguistics, Stroudsburg, PA, USA, (2020). https://doi.org/10.18653/v1/2020.acl-main.421

Bebis, G. & Georgiopoulos, M. Feed-forward neural networks. IEEE Potentials. 13, 27–31 (1994).

Liu, Z., Xu, Z., Jin, J., Shen, Z. & Darrell, T. Dropout reduces underfitting .Preprint at https://arXiv.org/abs/2303.01500. (2023)

Balestriero, R. & Baraniuk, R. G. Batch Normalization Explained (2022). 2209.14778.

Friedl, H. & Stampfer, E. Cross-Validation. in Encyclopedia of Environmetrics (Wiley, https://doi.org/10.1002/9780470057339.vac062. (2012).

Claesen, M. & De Moor, B. Hyperparameter search in machine learning. Preprint at https://arXiv.org/abs/1502.02127 (2015).

Li, J., Sun, A., Han, J. & Li, C. A survey on deep learning for named entity recognition. IEEE Trans. Knowl. Data Eng. 34, 50–70 (2022).

Ziegler, D. M. et al. Fine-tuning language models from human preferences. Preprint at https://arXiv.org/abs/1909.08593 (2019).

Neerudu, P. K. R., Oota, S., Marreddy, M., Kagita, V. & Gupta, M. On Robustness of Finetuned Transformer-based NLP Models. in Findings of the Association for Computational Linguistics: EMNLP 2023 7180–7195Association for Computational Linguistics, Stroudsburg, PA, USA, (2023). https://doi.org/10.18653/v1/2023.findings-emnlp.477

Wen, N. et al. A fingerprints based molecular property prediction method using the BERT model. J. Cheminform. 14, 71 (2022).

Dutschmann, T. M. & Baumann, K. Evaluating High-Variance leaves as uncertainty measure for random forest regression. Molecules 26, 6514 (2021).

Hasebe, T. Knowledge-Embedded Message-Passing neural networks: improving molecular property prediction with human knowledge. ACS Omega. 6, 27955–27967 (2021).

Rogers, A., Kovaleva, O. & Rumshisky, A. A primer in bertology: what we know about how BERT works. Trans. Assoc. Comput. Linguist. 8, 842–866 (2020). arXiv:2002.12327.

Hu, W. et al. Strategies for pre-training graph neural networks. Preprint at https://arXiv.org/abs/1905.12265. (2019)

Liu, S., Demirel, M. F. & Liang, Y. N-Gram Graph: simple unsupervised representation for graphs, with applications to molecules. Preprint at https://arXiv.org/abs/1806.09206 (2018).

Xiong, Z. et al. Pushing the boundaries of molecular representation for drug discovery with the graph attention mechanism. J. Med. Chem. 63, 8749–8760 (2020).

Author information

Authors and Affiliations

Contributions

All authors contributed to study design, data analysis, and manuscript preparation. O.A. wrote the codes, curated datasets, and drafted the initial manuscript. M.A. developed sections of the code and refined the text. M.T. validated results, optimized model parameters, and edited the manuscript. A.E. provided theoretical insights and helped shape the research direction. A.A. supervised the project and approved the final manuscript. All authors reviewed and agreed on the final version.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alhmoudi, O.K., Aboushanab, M., Thameem, M. et al. Domain adaptation of a SMILES chemical transformer to SELFIES with limited computational resources. Sci Rep 15, 23627 (2025). https://doi.org/10.1038/s41598-025-05017-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-05017-w