Abstract

Deep Learning methods are notorious for relying on extensive labeled datasets to train and assess their performance. This can cause difficulties in practical situations where models should be trained for new applications for which very little data is available. While few-shot learning algorithms can address the first problem, they still lack sufficient explanations for the results. This research presents a workflow that tackles both challenges by proposing an explainable few-shot learning workflow for detecting invasive and exotic tree species in the Atlantic Forest of Brazil using Unmanned Aerial Vehicle (UAV) images. By integrating a Siamese network with explainable AI (XAI), the workflow enables the classification of tree species with minimal labeled data while providing visual, case-based explanations for the predictions. Results demonstrate the effectiveness of the proposed workflow in identifying new tree species, even in data-scarce conditions. With a lightweight backbone, e.g., MobileNet, it achieves an F1-score of 0.86 in 3-shot learning, outperforming a shallow CNN. A set of explanation metrics, i.e., correctness, continuity, and contrastivity, accompanied by visual cases, provide further insights about the prediction results. This approach opens new avenues for using AI and UAVs in forest management and biodiversity conservation, particularly concerning rare or understudied species.

Similar content being viewed by others

Introduction

Earth Observation imagery, such as satellites and Unmanned Aerial Vehicles (UAVs), combined with modern Artificial Intelligence (AI) algorithms, enables rapid mapping and proves its use for numerous sustainable development goals. For example, monitoring invasive species in natural ecosystems. One significant challenge, however, is that these AI algorithms depend on large amounts of labeled data for training. While some regions have extensive datasets, labeled forest data is often scarce in Low- and Middle-Income Countries (LMICs) 1. Indeed, a review of various open forest benchmark datasets 2,3,4,5,6 revealed that no data was available for Atlantic forest species The lack of labels induces two challenges: 1) it is difficult to train Deep Learning models for the task at hand, and 2) it is difficult to evaluate these models to see if they are performing well. Researchers focus on the first challenge through the development of few-shot learning algorithms. However, the second challenge is often neglected, as few-shot learning papers use an evaluation set that still requires labeled samples to assess whether a model is performing well. If labeled data is unavailable in the field for training an algorithm, it will also not be available to assess its performance. To this end, this manuscript proposes a workflow that combines few-shot learning with explainable AI for a novel study that aims to identify invasive species in the Atlantic forests of Brazil. These species are typically identified in the field by experts walking along forest paths.

Effective, continuous monitoring of forests is crucial to manage and conserve forests efficiently and their ecosystem services from threats such as deforestation, climate change, stress and diseases, and invasive species 7. Remote sensing offers several advantages over traditional field-based methods for monitoring forests where experts walk along forest paths to identify species, including scalability, multi-sensor capabilities, cost, and time effectiveness 8. Satellite imagery is suitable for covering large areas. However, more recently, UAVs have emerged as adaptive tools to collect higher-resolution data for monitoring forests, and they are offering promising solutions to overcoming the challenges of satellite-based data in terms of spatial and temporal resolution 9. Both satellite and UAV imagery can be coupled with AI techniques, such as Machine Learning, to automate the extraction of information, such as identifying invasive species, from the imagery.

The added spatial detail provided by UAVs shows promising results for identifying and characterizing the Atlantic rainforests. In 2022, one study manually delimited tree crowns over hyperspectral UAV imagery and then performed a random forest classification to identify eight species 10. Another research in 2023 combined hyperspectral UAS and LiDAR to identify eight tree species 11. Other studies used UAS-LiDAR and hyperspectral data to predict tree characteristics such as aboveground biomass, canopy height, and leaf area index 12. Similarly, 3D characteristics from UAV-LiDAR were processed with random forests to detect Araucaria angustifolia 13 and distinguish between stages of forest regeneration 14. Hyperspectral and LiDAR systems are generally more expensive, but research has used low-cost UAS that capture only RGB imagery to monitor Atlantic forests. For example, Dasilva et al. compared random forest and SVM to identify invasive species 15, and Albuquerque et al. used random forest classification to distinguish characteristics such as tree density, tree height, and vegetation cover 16. Deep Learning methods are also gaining traction; some studies use ResNet 17 or Faster RCNN 18 to identify tree species from the RGB UAV imagery. The lack of labeled data is a significant hurdle in using AI methods for tree species detection. While many Machine Learning-based strategies depend on large quantities of well-prepared training and test samples, few-shot learning addresses scenarios where a limited number of labeled samples are available for training. In forest monitoring, few-shot learning can be beneficial. At the same time, a limited number of samples are available and can support transfer learning, where we can reuse the knowledge learned from a similar task in a less accessible region. Moreover, due to the dynamic nature of the forest, few-shot learning provides rapid adoption of pre-trained models to the specific forest type, region, or situation 19.

There have been a few examples of few-shot learning in Earth Observation. One study tested Siamese networks with contrastive learning for few-shot learning 20, inspired by the work of Wang et al 21. Another study proposes SCL-P-Net 22, which incorporates contrastive learning into prototypical networks for tree species classification from hyperspectral imagery. Despite the limited attention paid to few-shot learning for forest species detection, it is very relevant from a practical point of view. While data is more readily available in High-Income Countries (HICs), finding labeled datasets of local tree species in other regions is challenging. Therefore, it is essential to develop strategies that combine the advantages of Deep Learning and automatic object detection and classification with the capability of working with a few training samples.

On the other hand, not only is it essential to develop a model with a high classification accuracy, but there is increasing awareness of the importance of developing explainable methods, which can help give the user confidence in results and encourage the faster development of accurate models. Interpretability and explainability are gaining traction in Earth Observation as well 23. Explainability as a concept has many different meanings. Here, we follow other publications in Earth Observation, which relate explainability to the ability to understand how models derive a prediction through the lens of specific domain knowledge 24. Activation maps are a popular explainability method amongst publications (e.g., 25,26). Such activation maps highlight parts of an image that have a relatively strong influence on the model decision 27. For example, such attention maps have been used to provide interpretable visual cues for ship remote sensing image retrieval using Siamese networks 28. However, there are many criticisms of such saliency or activation maps. Some argue that similar saliency maps are obtained for randomly initialized weights and trained models 29 or when queried for different classes 30. Therefore, the “explanations” provided through salience maps are complex and non-self-evident.

Example-based explanations could be more similar to human reasoning. Research on human understanding implies that human decision-making tends to be contrastive and relatively simple 31 – only focusing on one or two reasonings rather than deriving complex answers. Moreover, a study of user preferences for explanations including saliency maps, SHAP, and example-based explanations demonstrated that users greatly preferred example-based explanations over Grad-CAM, a saliency map method (90% vs 50% respectively) for image classification tasks 32. Other user studies suggest that showing just one similar example as an explanation can have similar effectiveness as explanations that the user can interact with 33 and that example-based explanations can help users judge the correctness of classification outputs in domains that they are not familiar with 34. Therefore, the utility of example-based explanations is grounded in the scientific theory of human decision-making and demonstrated through user studies comparing various explanations.

In the domain of Earth Observation, a group of researchers presented a method to verify the reliability of a Deep Learning model by showing a single image from the training dataset, which is similar to the test sample to be classified, obtaining reasonable results 35. From the medical domain, one study deploys an example-based explainability method by using nearest-neighbor to select the closest example from the same class and the closest example from a different class in the latent space 36. Using examples from the same and different classes is similar to the approach presented here. Another study uses case-based explanations to explain the workings of a Siamese network in a class-to-class setup 37. The literature above suggests that a case-based explanation is compatible with Siamese Networks in a few-shot learning classification setup and would be more meaningful to the user. As mentioned above, one limitation of many XAI studies 23 is that explanations are presented but rarely evaluated. Therefore, in this study, we will build on an existing XAI evaluation framework 38 to qualitatively and quantitatively evaluate the explanation provided.

To address all the challenges discussed above, this paper presents an innovative end-to-end workflow for a few-shot learning-based strategy demonstrated for detecting invasive and native species in the mid-Atlantic rainforest from UAV-based imagery. This paper presents a methodological contribution by introducing a generalizable workflow that integrates few-shot learning with example-based explanations. The proposed use of UAVs can capture these species in a spatially consistent manner from the sky, and the XAI workflow subsequently enables the automatic identification of tree species of interest. A shallow CNN-based Siamese network and a lightweight backbone network, MobileNet 39, are suitable in environments with limited high-performance computing hardware. Finally, a case-based strategy for explanation generation is also proposed, and the quality of this explanation is evaluated.

Materials and methods

Study-area

Most of the remaining areas of the Atlantic Forest in S\(\tilde{a}\)o Paulo, Brazil, are on the city’s edges, where the five city’s natural parks (integral protection conservation units aiming to preserve natural ecosystems of ecological relevance) are located. Despite being conservation areas, and thus protected from urban expansion, the remaining Atlantic Forest in these parks also suffers from the expansion of invasive exotic species over the native forest. This is indeed the case in the Bororé Natural Park, which was selected as a case study for this project. To define the actions that prevent biodiversity loss caused by invasive species, experts from the Municipal Green and Environment Secretariat (SVMA) conduct field inspections in the natural parks to elaborate biodiversity assessments of some existing plant formations and species. As natural parks are large areas, these assessments are carried out in only parts of the parks, that is, in a sample area. Therefore, there is no mapping of individual invasive trees in the municipal parks, let alone outside parks or conservation units. In the Bororé Natural Park, the SVMA assessment found 141 trees of invasive exotic species. Based on this assessment, a section of this sampling with the highest concentration of identified species was selected for planning the UAV flights. A DJI Mavic Pro with an FC220 RGB sensor was utilized. The UAV images were processed with Agisoft Metashape to produce the dense point cloud and the orthomosaic. Individuals of the Archontophoenix cunninghamiana (popular name: Seafórtia), an invasive Australian palm, were identified as the invasive species of interest (Species 7 and 10 below). Seafórtia reaches eight to ten meters in height in relatively homogeneous agglomerations. Animals, especially birds, used to be attracted by their red seeds, and they were disseminated around the forest areas. As an invasive species, Seafórtia competes with native species in the natural environment, and its expansion can significantly alter habitats, causing the local extinction of native species and generating other ecosystem complications. Adequate management and coping with this issue to protect the native forest demand adequate and efficient mapping of these species’ individuals once they know their location, which is the first step in any action.

Methods

The overall workflow of the proposed methodology is depicted in Fig. 1. The proposed workflow takes as input a UAV orthomosaic of a forested region, where the goal is to detect and explain the classification of invasive or native tree species. It also requires a few manually labeled bounding boxes indicating the species of interest and benchmark data of aerial imagery of tree canopies of known species. The first processing step is an object detection model that automatically extracts individual tree canopies from the UAV orthomosaic, ensuring each cutout contains a single tree. This objection detection can be performed manually based on expert knowledge or using pre-trained models such as Netflora, which is used to retrieve species 1 and 2 shown in Fig. 2. Next, a Siamese network is trained using these extracted tree canopies and benchmark data. We evaluate two variants: (i) a shallow CNN-based Siamese network trained from scratch, and (ii) a lightweight backbone-based (MobileNet trained on ImageNet 39) Siamese network. The aim is to explore feature extractions suitable for various computational capacities. The second step is to include the manually labeled samples in a few-shot setup where the trained model is refined to recognize the new species. This is critical for practical applications, as these samples are often rare and costly to obtain manually. The third step is to add the explainable AI component to the workflow. The result of the proposed workflow is the recognition of individual tree canopies of invasive or native species with an explanation for each classification.

When deploying this workflow in practice, the steps described in Algorithm 1 can be followed. For each test sample \(I_n\), similarity-based classification is performed using a Siamese network that computes similarity scores against a labeled support set \(\mathscr {S} = \{(S_1, Y_1), (S_2, Y_2), \dots , (S_M, Y_M)\}\), where each \(S_m\) is a support image with class label \(Y_m\). The predicted label \(\hat{Y}(I_n)\) is assigned based on the most similar support samples, and the top-K matches are retained as an explanation set \(E_n \subseteq \mathscr {S}\), specific to each test image.

Overall workflow of the study, showing the three main processing steps: (1) the pre-training of a base Siamese network using images of tree canopies from existing benchmarks and automatically generated from the UAV image of the study area, (2) refining the networks in a few-shot learning setup, and (3) adding a post-hoc explanation to visually inform users whether the network can distinguish the new tree species.

Data preparation

The tree species’ square cutouts (i.e., tiles) are generated to build training data for the Siamese network’s classification workflow. The training cutouts are generated from two sources: (1) tiles generated from the UAV orthomosaic using the Netflora object detection workflow 40 and (2) tiles from the Reforestree benchmark dataset 2. Netflora is a vanilla Machine Learning workflow that detects forest species from aerial imagery. It was applied to the UAV image, and nine species with various numbers of samples were detected. We extracted two of the nine species, resulting in Species 1 and 2 shown in Fig. 2. The species were selected based on the number of predicted objects and their visual distinguishability from other species. Species 1 was selected based on its asymmetrical canopy. Species 2 was selected based on its needle-shaped leaves and symmetric canopy feature. The Reforestree dataset is a labeled dataset of tropical forests based on drone and field data. We utilized the labels banana, cacao, and fruit from this dataset, respectively, resulting in Species 3, 4, and 5 shown in Fig. 2. This open dataset also labels other vegetation classes (e.g., citrus and timber) not included in the cutouts. Citrus and timber are excluded because they have fewer labeled samples than the other classes, and including them would lead to an unbalanced dataset and difficulties in training the model.

Data cleaning is applied to all selected cutouts to ensure accuracy and relevance. During the process, the cutouts that display multiple tree canopies of different species within a single cutout are manually detected and removed. The Reforestree dataset had an original spatial resolution of 1 cm, whereas the UAV image of our study has a spatial resolution of 6 cm. This was homogenized by resampling the cutouts from the Reforestree dataset to 6 cm to match the resolutions from both datasets. The size of the cutouts is also cut or padded to a uniform \(128 \times 128\) with zero-padded pixel values. Examples of selected and cleaned cutouts are shown in Fig. 2, where one example cutout of each of the five selected species is visualized, and Fig. 3 displays the images used in the refinement step. Table 1 presents the final number of selected cutout samples.

Example cutouts generated from the UAV image and Reforestree dataset. One example cutout from each species is shown. Species 1 and 2 are generated using the Netflora workflow from the UAV image of the study area. Species 3, 4, and 5 are banana, cacao, and fruit, respectively, from the Reforesttree dataset.

Training the shallow and deep Siamese networks

Similar and dissimilar pairs of cutouts from Species 1 to 5 are generated to train the Siamese Networks. To ensure the model’s accuracy, this process considers data balancing in two aspects: 1) the balance in the number of samples between the species and 2) the balanced number of samples between similar and dissimilar pairs. In each of the five classes, 13 samples are randomly selected as candidate cutouts if there are more than 13 samples. To increase the number of candidate cutouts, we apply the following data augmentation methods to each cutout: (i) randomly rotate by arbitrary degrees, (ii) horizontal flipping, (iii) vertical flipping, (iv) randomly rotate the image and add a Gaussian noise (\(\mu =0, \sigma =25\)) to all RGB channels, and (v) randomly crop the cutout. The Gaussian noise parameters (\(\mu =0, \sigma =25\)) were chosen to avoid systematic image shifts while empirically maximizing model robustness. We tested \(\sigma\) values from 1 to 50 and found that \(\sigma\) = 25 provided the best performance. This value represents moderate noise relative to the 8-bit RGB scale and aligns with prior literature on Gaussian noise modeling in image processing 41. All pixel values were clipped to the [0, 255] range after noise addition.

Together with the original cutouts, the dissimilar pairs are created by exhaustively pairing candidate cutouts between classes, resulting in 82810 dissimilar pairs. We randomly select 10,000 out of 82,810 dissimilar pairs as training data. Similar pairs are created by pairing candidate cutouts within the same class. This results in 20,475 similar pairs. We randomly select 10,000 out of 20,475 similar pairs as training data. The random selections of both similar and dissimilar pairs are performed by generating a uniformly distributed index within each candidate dataset, assuring a non-biased selection. Ultimately, we acquired a balanced training dataset that includes 20,000 sample pairs.

The same training dataset was used to pre-train the shallow CNN-based and MobileNet-based Siamese neural networks, called the base models. The shallow CNN-based Siamese network is initiated with two identical feature extractors, each with four convolution layers. The outputs of the feature extractors are compared with an Euclidean distance layer, resulting in a similarity score between 0 (most dissimilar) and 1 (most similar). We substitute the four convolution layers using the MobileNet backbone trained on the ImageNet dataset 39 to compare with a more expressive feature extractor. The shallow CNN-based and the MobileNet-based Siamese networks have 393K and 3,502K trainable parameters, respectively. Even though the MobileNet-based Siamese network is much more extensive than the shallow CNN-based Siamese network, both networks are lightweight. They can be deployed on devices with limited computational capacity. For example, based on the training tests conducted, we expect that the shallow network could be trained on a standard laptop with 8 CPU cores and 8 GB of memory space in approximately 30 minutes.

The similarity scores between the query object and each support set cutout will be obtained through the Siamese networks, thus resulting in multiple similarity scores between the tree canopy in question and the support set. We propose two methods to predict the species of the query cutout based on the predicted similarity scores. Method 1 classifies the object as the support set species with the highest average similarity score. Method 2 applies the K-Nearest-Neighbor (K-NN) method, where the K highest similarity scores are selected, and the majority class amongst these K most similar samples will be the predicted label.

Few-shot learning

The next step is to use unseen samples for the few-shot learning task where the Siamese networks are refined by new classes representing the target invasive and native species from the study area in S\(\tilde{a}\)o Paulo. This dataset comprises six species; see Table 1 and Fig. 2. These cutouts were manually labeled by the experts in Brazil. Similar to the data preparation for training the base models, the cutouts were padded or cut to the same size as the ones in the training dataset above. A maximum of six samples were randomly selected from the six species, and the same augmentation methods were applied to generate balanced similar and dissimilar pairs to refine the base models. To reduce the number of support samples as much as possible, we refined the base models using up to three of the six samples in each class. In other words, we tested the refinement from 1-shot to 3-shot learning. Due to the limited number of remaining samples for testing, we partitioned this dataset using an n-fold cross-validation setting to reduce the random effect on performance evaluation. Namely, the support and test samples were iteratively alternated for each experiment run, and we report the average performance in the Results Section.

Explanation

Once the classification is performed, the object will be compared to the support set again by visualizing the highest similarity scores per support set class. If the classes with higher similarity scores also appear more visually similar, the user will have more confidence that the classification is correct. If the network has not learned to consistently identify specific classes, this will likely be evident in the list of similar examples. A standard limitation of XAI studies is the lack of evaluation of the quality of the explanation itself 23. We utilize the Co-12 concepts proposed in 38 to evaluate the explanation provided. The Co-12 explanation of quality properties is divided into properties regarding the content, presentation, and user dimensions. Here, we will focus on evaluating the content. Evaluating the presentation and user dimensions would require extensive additional experiments with the XAI method’s intended end-users, which we will leave to further research. The six Co-12 properties related to the content of the explanation are: correctness, completeness, consistency, continuity, contrastivity, and covariate complexity. Correctness refers to how faithful the explanation is compared to the workings of the Machine Learning model. Here, the correctness will be quantified by counting how often the result of the explanation (i.e., the class that would be assigned if only taking the majority of the most similar samples) is the same as the classification assigned through the model. Completeness refers to how much of the Machine Learning method is described by the explanation. In our case, the workflow consists of object detection, a Siamese Network to determine similarity, and a classification assigned based on these similarity measures. The explanation only considers the final step, where the class label is assigned based on the similarity metrics calculated by the Siamese networks. This limits the completeness of the explanation but heightens its correctness. Consistency refers to the deterministicness of the explanation. That is to say, is the explanation sensitive to changes if the model is run multiple times? In our case, the weights of the trained model are static, so the similarity metrics will not change if a query pair is run through it multiple times. Given the same support set of images, the explanation will be consistent. Continuity refers to how stable the explanation is to small perturbations in the input data. This will be tested by slightly changing an input image and verifying whether the explanation changes. Contrastivity refers to the variety of explanations created for different samples. This will be evaluated by quantifying the diversity of the selection of support images for the explanation. Covariate complexity refers to the complexity of an explanation of semantic meaning. Quantitatively measuring this complexity would involve user studies, which are not included due to the same reasoning as the presentation and user dimension Co-12 concepts.

Experimental setup and evaluation metrics

This proposed workflow (Algorithm 1) is tested through three sets of experiments:

-

1.

From Siamese Networks to multi-class classification for the base models – This set of experiments aims to establish a baseline of classification performance before the few-shot learning step. It uses the training dataset in Table 1 to compare whether better results are obtained when utilizing the average similarity score versus K-NN to convert the similarity scores to a classification label, as well as comparing the accuracy that can be achieved by the shallow CNN-based versus MobileNet-based Siamese networks. The classification performance is measured in standard metrics, including Precision, Recall, F1-score, and Accuracy.

-

2.

Few-shot learning for the refinement of the base models – The second set of experiments aims to test the accuracy of the few-shot learning task for recognizing new species by refining the base models. Experiments are performed to evaluate the n-fold k-shot classification. Namely, we iteratively alternated the partitioning of support and test samples for each independent training of models to avoid random effects on evaluation, and we varied the number of support samples per class, i.e., shots (k) from 0 to 3. Results are presented for the refined shallow CNN-based and MobileNet-based Siamese Networks using the optimum base models determined in the first set of experiments to assign class labels.

-

3.

Evaluation of explanation - Using the Siamese network, which obtains the best performance, example local explanations are given for the prediction of the unseen classes. The correctness \(C_{\textrm{cor}}\), continuity \(C_{\textrm{cty}}\), and contrastivity \(C_{\textrm{cst}}\) of the explanation are quantified by the following equations. Let \(\{I_1, I_2, \dots , I_N\}\) denote the set of test images, where each \(I_n\) has a true class label \(Y(I_n)\) and a predicted class label \(\hat{Y}(I_n)\). Let \(\mathscr {S} = \{(S_1, Y_1), \dots , (S_M, Y_M)\}\) denote the full labeled support set. For each test image \(I_n\), a subset \(E_n = \{(S_{n1}, Y_{n1}), \dots , (S_{nK}, Y_{nK})\} \subseteq \mathscr {S}\) of size K is selected as the explanation set, based on the highest similarity scores to \(I_n\). The correctness is quantified by comparing the number of samples in the explanation that have the class predicted by the model as follows:

$$\begin{aligned} \delta (n, k)= & {\left\{ \begin{array}{ll} 1 & \text {if } \hat{Y}(I_n) = Y(S_{nk}) \text { and } \hat{Y}(I_n) = Y(I_n), \\ 0 & \text {otherwise} \end{array}\right. } \end{aligned}$$(1)$$\begin{aligned} C_{\text {cor}}= & \frac{1}{N} \cdot \frac{1}{K} \sum _{n=1}^N \sum _{k=1}^K \delta (n, k) \end{aligned}$$(2)The continuity considers whether the explanation changes with slight variations in the input data. For each test image \(I_n\), we apply random augmentation to generate \(I_n'\), and recompute the explanation set \(E_n'\). Let \(S_{ni} \in E_n\) and \(S_{ni}' \in E_n'\) be the respective explanation images. We then see whether the images selected for the explanation are changed and calculate the proportion of explanation images that have remained unchanged for all samples as follows:

$$\begin{aligned} \gamma (n, i)= & {\left\{ \begin{array}{ll} 1 & \text {if } S_{ni} \in E_n \cap E_n', \\ 0 & \text {otherwise} \end{array}\right. } \end{aligned}$$(3)$$\begin{aligned} C_{\text {cty}}= & \frac{1}{N} \cdot \frac{1}{K} \sum _{n=1}^N \sum _{i=1}^K \gamma (n, i) \end{aligned}$$(4)The contrastivity considers the diversity in the images selected for the explanations. Let \(p(S_k)\) be the probability of a support sample \(S_k \in \mathscr {S}\) being selected in any explanation set \(E_n\).Contrastivity is then defined as follows:

$$\begin{aligned} C_{\textrm{cst}} = \frac{1}{N} \sum _{n=1}^N \left( - \sum _{k=1}^K p(S_k) \log _2 p(S_k) \right) \Bigg / \log _2(K) \end{aligned}$$(5)The contrastivity score \(C_{\textrm{cst}}\) is the normalized Shannon entropy over explanation selections, with normalization ensuring that values range from 0 (no diversity) to 1 (maximum diversity), making the contrastivity value independent of the number of sample explanations.

Results and discussion

From Siamese networks to multi-class classification

The output of the Siamese network is a list of similarity scores for an input test image and images from a support set. This first set of experiments analyzed the sensitivity of the classification results to (1) using average accuracy or K-NN to assign the class and (2) the performance of the shallow CNN-based versus MobileNet-based Siamese Networks. Results for the base models are presented in Table 2. Generally, both base models achieved satisfactory classification performance, e.g., with a Precision reaching 1.00. This is because an adequate number of samples, i.e., 13 in each class, was used to train the models to match the test and support sample images. However, the MobileNet-based Siamese model achieves much higher Recall, F1-score, and Accuracy than that of the shallow CNN-based Siamese model, indicating a much more robust feature extractor pre-trained on the ImageNet dataset 39. Interestingly, as the models already reached a stable, high-performance level based on very few support samples, e.g., \(k=1\), the average and different K-NN metrics to assign the class did not lead to a noticeable performance difference.

Few-shot learning

The second set of experiments analyzed the performance of the few-shot learning, refining the base models determined from the first set of experiments using a few support samples. To more intuitively show the benefits of refining the base models using increasing support samples (\(k=1, 2, 3\)) for classifying new species, the performance differences for both the shallow CNN- and MobileNet-based models are compared before and after the refinement.

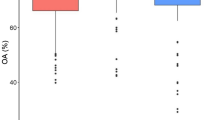

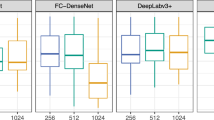

The classification results are measured in Precision (Fig. 4a), Recall (Fig. 4b), F1-score (Fig. 4c), and Accuracy (Fig. 4d). First, with few-shot learning, both the shallow CNN- and MobileNet models performed better after being refined using a few support samples than the zero-shot learning (i.e., before the refinement), and the more support samples, the better the performance. Second, the MobileNet-based Siamese network performs consistently better than the shallow CNN-based models in all the classification metrics from 1-shot to 3-shot learning, except for the 1-shot learning measured in Precision. The 3-shot learning from the MobileNet-based Siamese network achieved the best classification performance, e.g., Precision 0.90, F1-score 0.86, underscoring the effectiveness of using a few support samples to detect newly invasive tree species. Last, it is interesting to observe that the performance gap between the shallow CNN- and MobileNet-based Siamese networks is more profound for 3-shot learning than 1- or 2-shot learning. As the MobileNet engages more trainable parameters than the shallow CNN (3,502K versus 393K), the MobileNet feature extractor becomes more effective when more support samples are leveraged to refine the model.

Explanation

In addition to the classification performance, the evaluation of the results’ explanation is measured in correctness (\(C_{\text {cor}}\)), continuity (\(C_{\text {cty}}\)), and contrastivity (\(C_{\text {cst}}\)), as reported in Table 3. Because only one support sample image exists in the 1-shot learning, \(C_{\text {cst}}\) cannot be measured. The correctness and continuity are similar to the classification results for the shallow CNN- and MobileNet-based Siamese networks. Both models demonstrated a very high contrastivity for the support samples.

Moreover, Fig. 5 shows the qualitative results of the 3-shot learning for the new tree species. In addition to the similarity score, the classification results become more interpretable thanks to example explanations and associated explanation metrics, i.e., correctness, continuity, and contrastivity. For example, the correctness of prediction results for the input Species 7 is 0.67 because the third support sample image has a very low similarity score (0.07) to the input, which was a false positive support. Another interesting example is the third support sample for the input Species 10. One can quickly notice that the third support sample is more visually different than the other support samples to the input sample, even though they belong to the same class. Consequently, its similarity score is much lower than the other support samples.

Example explanations of the prediction results for 3-shot learning. The left-most column shows the input image and the other columns show the support sample images, and each row represents a different species. In addition to the similarity score (sim), the explanation of the visual results measured in Correctness, Continuity, and Contrastivity is on top of each row.

Public implications

Brazil’s Atlantic Forest is a critical biodiversity hotspot facing severe threats from invasive exotic tree species 42, which can affect the species composition and functioning 43, decrease biodiversity 44, and spread diseases 45. As discussed above, monitoring forests by the SVMA is generally conducted manually, causing high data collection costs and limiting inspections to areas that are accessible and close to paths. Our results demonstrate that UAV-based monitoring combined with an explainable few-shot learning model can greatly enhance biodiversity protection and forest management in such data-scarce regions by rapidly detecting and mapping these invaders with minimal training data. The explainable AI component is crucial, as it provides transparent, interpretable outputs that foster trust in the results and provide a visual indication of whether the model seems to be performing well for a new tree species. By specifically addressing issues such as limited training data and computational resources, the workflow presented here facilitates the integration of drone-derived species maps into biodiversity governance, policy design, and operational conservation programs on the ground. This approach directly supports Sustainable Development Goal (SDG) 15 (Life on Land) by safeguarding native forest ecosystems and, by preserving carbon-rich and resilient landscapes, it contributes to SDG 13 (Climate Action). More broadly, the synergy of explainable AI and remote sensing illustrated here is applicable to numerous applications in data-scarce LMICs. For example, UAV imagery can be utilized to detect various objects to support informal settlement upgrading projects 46 for better urban planning, or to quickly detect damage after a disaster 47. Such contexts are also characterized by a need for rapid interpretation of objects or classes of UAV imagery for which large benchmarks are lacking. In summary, the public and environmental implications of this explainable few-shot UAV workflow extend beyond invasive species control, offering a scalable tool for informed decision-making in sustainability and conservation at large.

Conclusions

This study presents a simple but effective Siamese-based method for recognizing tree species in the Atlantic Forest in S\(\tilde{a}\)o Paulo, Brazil, using RGB images captured by UAVs. One of the main contributions of this manuscript is in combining state-of-the-art techniques in an overall workflow from data collection to classification, which considers the practical constraints often faced by public institutions in LMICs - namely, limited data availability and limited computational capacity. Our proposed method uses few-shot learning to minimize the need for extensive labeled data, making it practical for real-world applications. Two networks, a shallow CNN-based and a MobileNet-based Siamese network, were proposed. Despite the scarcity of training data, the proposed model achieved strong performance. In particular, the MobileNet-based Siamese classifier attained 0.90 precision (with an F1-score of 0.86) given only three training samples per class. Though larger, the MobileNet-based network is still lightweight and suitable for mobile deployment. At the same time, the integrated case-based explanation incorporated in the presented workflow offers intuitive visual justifications for each prediction, helping domain experts verify and trust the results by examining analogous reference images. Overall, this study highlights how combining few-shot learning with XAI can tackle the twin challenges of data scarcity and lack of interpretability in ecological monitoring. By facilitating accurate and transparent detection of invasive species in a vulnerable ecosystem, our approach supports proactive forest management and aligns with global sustainability goals—specifically UN SDG 15 (Life on Land) and SDG 13 (Climate Action). The workflow can also easily be extended to other object detection and classification tasks using UAV imagery in data-scarce regions.

Data availability

The training datasets for the experiments are available on Zenodo (https://zenodo.org/records/13833791), and all code to reproduce the experiments is available on an open GitHub repository (https://github.com/XAI4GEO/Tree_Classification).

References

Rodríguez-Veiga, P. et al. Forest biomass retrieval approaches from earth observation in different biomes. Int. J. Appl. Earth Obs. Geoinf. 77, 53–68. https://doi.org/10.1016/j.jag.2018.12.008 (2019).

Reiersen, G. et al. Reforestree: A dataset for estimating tropical forest carbon stock with deep learning and aerial imagery. In Proceedings of the AAAI Conference on Artificial Intelligence 36, 12119–12125 (2022).

Ahlswede, S. et al. TreeSatAI Benchmark Archive: A multi-sensor, multi-label dataset for tree species classification in remote sensing. Earth Syst. Sci. Data 15, 681–695. https://doi.org/10.5194/essd-15-681-2023 (2023).

Idtrees (2017).

Kampe, T. U. Neon: The first continental-scale ecological observatory with airborne remote sensing of vegetation canopy biochemistry and structure. J. Appl. Remote Sens. 4, 043510. https://doi.org/10.1117/1.3361375 (2010).

Beery, S. et al. The auto arborist dataset: A large-scale benchmark for multiview urban forest monitoring under domain shift. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 21294–21307 (2022).

Food and Agriculture Organization of the United Nations. The State of the World’s Forests (Food and Agriculture Organization of the United Nations, 2020).

Cohen, W. B. & Goward, S. N. Landsat’s role in ecological applications of remote sensing. BioScience54, 535–545. https://doi.org/10.1641/0006-3568(2004)054[0535:LRIEAO]2.0.CO;2 (2004)

Torresan, C. et al. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 38, 2427–2447. https://doi.org/10.1080/01431161.2016.1252477 (2017).

Takahashi Miyoshi, G., Imai, N. N., Garcia Tommaselli, A. M., Antunes de Moraes, M. V. & Honkavaara, E. Evaluation of hyperspectral multitemporal information to improve tree species identification in the highly diverse atlantic forest. Remote Sens.. https://doi.org/10.3390/rs12020244 (2020).

Pereira Martins-Neto, R. et al. Tree species classification in a complex brazilian tropical forest using hyperspectral and lidar data. Forests. https://doi.org/10.3390/f14050945 (2023).

de Almeida, D. et al. Monitoring restored tropical forest diversity and structure through UAV-borne hyperspectral and lidar fusion. Remote Sens. Environ. 264, 112582. https://doi.org/10.1016/j.rse.2021.112582 (2021).

Saad, F. et al. Detectability of the critically endangered araucaria angustifolia tree using worldview-2 images, google earth engine and uav-lidar. Land. https://doi.org/10.3390/land10121316 (2021).

Scheeres, J. et al. Distinguishing forest types in restored tropical landscapes with UAV-borne lidar. Remote Sens. Environ. 290, 113533. https://doi.org/10.1016/j.rse.2023.113533 (2023).

da Silva, S. et al. Modeling and detection of invasive trees using UAV image and machine learning in a subtropical forest in Brazil. Eco. Inform. 74, 101989. https://doi.org/10.1016/j.ecoinf.2023.101989 (2023).

Albuquerque, R. W. et al. Forest restoration monitoring protocol with a low-cost remotely piloted aircraft: Lessons learned from a case study in the brazilian atlantic forest. Remote Sens. https://doi.org/10.3390/rs13122401 (2021).

Ferreira, M. P. et al. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 475, 118397. https://doi.org/10.1016/j.foreco.2020.118397 (2020).

Moura, M. M. et al. Towards amazon forest restoration: Automatic detection of species from UAV imagery. Remote Sens. https://doi.org/10.3390/rs13132627 (2021).

Wei, Z. et al. Mapping large-scale plateau forest in sanjiangyuan using high-resolution satellite imagery and few-shot learning. Remote Sens. 14, 388. https://doi.org/10.3390/rs14020388 (2022).

Chen, J. et al. Multiscale object contrastive learning-derived few-shot object detection in VHR imagery. IEEE Trans. Geosci. Remote Sens. 60, 1–15. https://doi.org/10.1109/TGRS.2022.3229041 (2022).

Wang, X., Huang, T. E., Darrell, T., Gonzalez, J. E. & Yu, F. Frustratingly simple few-shot object detection. In Proceedings of the 37th International Conference on Machine Learning, ICML’20. https://doi.org/10.5555/3524938.3525858 (JMLR.org, 2020).

Chen, L., Wu, J., Xie, Y., Chen, E. & Zhang, X. Discriminative feature constraints via supervised contrastive learning for few-shot forest tree species classification using airborne hyperspectral images. Remote Sens. Environ. 295, 113710. https://doi.org/10.1016/j.rse.2023.113710 (2023).

Gevaert, C. M. Explainable AI for earth observation: A review including societal and regulatory perspectives. Int. J. Appl. Earth Obs. Geoinf. 112, 102869. https://doi.org/10.1016/j.jag.2022.102869 (2022).

Roscher, R., Bohn, B., Duarte, M. F. & Garcke, J. Explainable machine learning for scientific insights and discoveries. IEEE Access 8, 42200–42216. https://doi.org/10.1109/ACCESS.2020.2976199 (2020).

Livieris, I. E., Pintelas, E., Kiriakidou, N. & Pintelas, P. Explainable image similarity: Integrating siamese networks and grad-cam. J. Imaging. https://doi.org/10.3390/jimaging9100224 (2023).

Madan, S., Chaudhury, S. & Gandhi, T. K. Explainable few-shot learning with visual explanations on a low resource pneumonia dataset. Pattern Recognit. Lett. 176, 109–116. https://doi.org/10.1016/j.patrec.2023.10.013 (2023).

Molnar, C. Interpretable Machine Learning (Lulu. com, 2020).

Xiong, W., Xiong, Z., Cui, Y., Huang, L. & Yang, R. An interpretable fusion Siamese network for multi-modality remote sensing ship image retrieval. IEEE Trans. Circuits Syst. Video Technol. 33, 2696–2712. https://doi.org/10.1109/TCSVT.2022.3224068 (2022).

Adebayo, J. et al. Sanity checks for saliency maps. Adv. Neural Inf. Process. Syst. 31 (2018).

Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215. https://doi.org/10.1038/s42256-019-0048-x (2019).

Miller, T. Explanation in artificial intelligence: Insights from the social sciences. Artif. Intell. 267, 1–38. https://doi.org/10.1016/j.artint.2018.07.007 (2019).

Jeyakumar, J. V., Noor, J., Cheng, Y.-H., Garcia, L. & Srivastava, M. How can i explain this to you? An empirical study of deep neural network explanation methods. Adv. Neural. Inf. Process. Syst. 33, 4211–4222 (2020).

Gates, L., Leake, D. & Wilkerson, K. Cases are king: A user study of case presentation to explain cbr decisions. In International Conference on Case-Based Reasoning, 153–168. https://doi.org/10.1007/978-3-031-40177-0_10 (Springer, 2023).

Ford, C. & Keane, M. T. Explaining classifications to non-experts: An XAI user study of post-hoc explanations for a classifier when people lack expertise. In International Conference on Pattern Recognition, 246–260. https://doi.org/10.1007/978-3-031-37731-0_15 (Springer, 2022).

Nosuke Ishikawa, S. et al. Example-based explainable AI and its application for remote sensing image classification. Int. J. Appl. Earth Observ. Geoinf. 118, 103215. https://doi.org/10.1016/j.jag.2023.103215 (2023).

Silva, W., Fernandes, K., Cardoso, M. J. & Cardoso, J. S. Towards complementary explanations using deep neural networks. In Understanding and Interpreting Machine Learning in Medical Image Computing Applications, 133–140. https://doi.org/10.1007/978-3-030-02628-8_15 (Springer, 2018).

Ye, X., Leake, D., Huibregtse, W. & Dalkilic, M. Applying class-to-class siamese networks to explain classifications with supportive and contrastive cases. In International Conference on Case-Based Reasoning, 245–260. https://doi.org/10.1007/978-3-030-58342-2_16 (Springer, 2020).

Nauta, M. et al. From anecdotal evidence to quantitative evaluation methods: A systematic review on evaluating explainable AI. ACM Comput. Surv. 55, 1–42. https://doi.org/10.1145/3583558 (2023).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4510–4520 (2018).

Karasinski, M. A. Netflora. https://github.com/NetFlora/Netflora (2024).

Zhang, K., Zuo, W., Chen, Y., Meng, D. & Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 26, 3142–3155. https://doi.org/10.1109/TIP.2017.2662206 (2017).

da Silva, S. et al. Modeling and detection of invasive trees using UAV image and machine learning in a subtropical forest in Brazil. Eco. Inform. 74, 101989. https://doi.org/10.1016/j.ecoinf.2023.101989 (2023).

Le Maitre, D. C. et al. Impacts of invasive Australian acacias: Implications for management and restoration. Divers. Distrib. 17, 1015–1029. https://doi.org/10.1111/j.1472-4642.2011.00816.x (2011).

Bellard, C., Cassey, P. & Blackburn, T. M. Alien species as a driver of recent extinctions. Biol. Lett. 12, 20150623. https://doi.org/10.1098/rsbl.2015.0623 (2016).

Nuñez, M. A., Pauchard, A. & Ricciardi, A. Invasion science and the global spread of sars-cov-2. Trends Ecol. Evol. 35, 642–645. https://doi.org/10.1016/j.tree.2020.05.004 (2020).

Gevaert, C. M., Persello, C., Sliuzas, R. & Vosselman, G. Monitoring household upgrading in unplanned settlements with unmanned aerial vehicles. Int. J. Appl. Earth Obs. Geoinf. 90, 102117. https://doi.org/10.1016/j.jag.2020.102117 (2020).

Koukouraki, E., Vanneschi, L. & Painho, M. Few-shot learning for post-earthquake urban damage detection. Remote Sens. https://doi.org/10.3390/rs14010040 (2022).

Acknowledgements

This publication is part of the project “Bridging the gap between artificial intelligence and society: Developing responsible and viable solutions for geospatial data” (with project no. 18091) of the research program Talent Program Veni 2020, which is (partly) financed by the Dutch Research Council (NWO). The authors would also like to thank the São Paulo Municipal Green and Environment Secretariate for the procurement and labeling of the UAV data and in-situ labels, and the teams behind the Reforestree and Netflora projects for sharing their code and data online.

Author information

Authors and Affiliations

Contributions

C.G., P.C., and A.A.P. contributed to the conceptualization of the ideas. A.A.P. supported the data curation. H.C., O.K., S.G., F.N., and P.C. contributed to the development of the methods and execution of the experiments. C.G., F.D.J., H.C., O.K., S.G., and A.A.P. contributed to the writing of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gevaert, C.M., Aguiar Pedro, A., Ku, O. et al. Explainable few-shot learning workflow for detecting invasive and exotic tree species. Sci Rep 15, 23238 (2025). https://doi.org/10.1038/s41598-025-05394-2

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-05394-2