Abstract

Emotional facial expressions often take place during communicative face-to-face interactions. Yet little is known as to whether natural speech processing can be modulated online by emotional expressions. Furthermore, the functional independence of syntactic processing from other cognitive and affective processes remains a long-standing debate in the literature. To address these issues, this study investigated the influence of masked emotional facial expressions on syntactic speech processing. Participants listened to sentences that could contain morphosyntactic anomalies while a masked emotional expression was presented for 16 ms (i.e., subliminally) just preceding the critical word. A larger Left Anterior Negativity (LAN) amplitude was observed for both emotional faces (i.e., happy and angry) compared to neutral ones. Moreover, a larger LAN amplitude was found for angry faces than for happy faces. Finally, a reduced P600 amplitude was observed only for angry faces when compared to neutral faces. Collectively, the results presented here indicate that first-pass syntactic parsing is influenced by emotional visual stimuli even under masked conditions and that this effect extends also to later linguistic processes. These findings constitute evidence in favor of an interactive view of language processing as embedded within a complex, integrated system for human communication.

Introduction

A growing body of research supports the interplay between language and emotion in the human brain1,2,3. In this regard, prior accounts suggest that cognition and emotion are not categorically different but are deeply intertwined in the human brain4,5. However, the extent to which language and emotion processes interact with each other, and in which manner, deserves further investigation. In addition, visual bodily signals–including facial expressions–are fundamental when it comes to pragmatic processes in human communication6. This study addresses this issue by investigating the possible influence of subliminal emotional facial expressions on syntactic processing while listening to connected speech, as indexed by event-related brain potentials (ERPs).

Relevant to our aims, syntactic violations during sentence processing (e.g., gender or number violations), relative to syntactically correct sentence elements, typically elicit a biphasic ERP response: an early left anterior negativity (E/LAN) followed by a late posterior positivity (P600)7,8,9. Past research showed that the LAN component is typically linked to the early (and probably highly automatic) detection of a morphosyntactic mismatch based on the agreement relations for structure-building7,8,10. Some studies have also observed LAN modulations in response to verbal working memory operations11,12 (see also13,14). This negative deflection usually peaks at left frontal electrodes –albeit fronto-central distributions have also been reported– between 300 and 500 milliseconds (ms) after the onset of a syntactic anomaly10,11. Possible neural sources of the LAN component include Brodmann area (BA) 44 in the inferior frontal gyrus, the frontal operculum (FOP), and the anterior superior temporal gyrus (aSTG), all of which are considered key nodes within the syntactic network7,8 (for a review, see15). Subsequently, the P600 component, a centro-parietal positivity peaking around 600 ms after the stimulus onset, is often associated with more controlled language-related processes (which are relatively more strategic and context-sensitive), thus reflecting a later phase of sentence-level integration and processes of syntactic reanalysis and repair7,8,16 (for an alternative view, see17,18). Although the neural sources of the P600 remain an open question in the current literature, the posterior STG (pSTG) and the superior temporal sulcus (STS) have been proposed as candidates, which would underlie the integration of different sources of information, including semantics and syntax7,8.

Interestingly, the pSTS may serve as a key hub for integrating visual and auditory signals in speech, including emotion perception. Neural models of face processing19,20 proposed the pSTS as a key brain structure for the visual analysis of changeable aspects, such as expression, eye gaze, or lip movement. More recently, Deen et al.21 demonstrated that face-sensitive areas within the STS also respond to voice perception compared to nonvocal music and environmental sounds, which supports the notion that this brain region reflects the multimodal processing of voice and face signals22. Furthermore, neuroimaging studies indicated that the STS, alongside the ventromedial prefrontal cortex (vmPFC), encodes perceived emotion categories (e.g., happiness, anger) in an abstract, modality-independent fashion23,24. Similarly, current research suggests that the anterior temporal lobe (ATL) is also a critical region involved in multimodal language and emotion processing22,25,26,27. Satpute & Lindquist3, for instance, noted that brain regions often involved in semantic processing –including the ATL– are also engaged during the perception and experience of discrete emotional experiences, as they provide the basis of conceptual knowledge to make meaning of one’s bodily sensations. Besides, the ATL contains subregions functionally linked to emotion-related structures, such as the orbitofrontal cortex or the amygdala26,27. Collectively, extant literature supports a potential interaction between language and emotion.

Of note, an increasing number of studies show that syntactic violations can be processed subliminally, i.e., under reduced levels of perceptual awareness10,28,29,30. Batterink and Neville28, for instance, observed similar early negativities (LAN) in response to both undetected and detected syntactic violations in a dual task. They found a later positivity (P600) only in response to detected/conscious violations, suggesting that this component depends more on conscious awareness and controlled processing. Jiménez-Ortega et al.10,30, in turn, showed that masked emotional adjectives, which could contain morphosyntactic anomalies, modulate the syntactic processing of ongoing unmasked sentences both at early and late stages of syntactic processing (as indicated by LAN and P600 components). Furthermore, Hernández-Gutiérrez et al.31 observed an interaction between emotional facial expressions (happy, neutral, and fearful) presented supraliminally and morphosyntactic correctness but only during the P600 component (450–650 ms). Consequently, a growing body of evidence suggests that social and emotional information can impact syntactic operations under both supraliminal31,32,33,34,35,36,37 and subliminal10,30,41 conditions. Taken together, these studies reveal how both conscious and unconscious information–including emotional and linguistic stimuli–may impact ongoing language processes, paving the way to study how subliminally presented emotional expressions affect the processing of supraliminal syntactic information. Indeed, it is of interest to explore the degree and mode of sensitivity of the linguistic processor to subtle social and emotional signals. This raises the question of how language processing aligns with an ecological perspective, in which syntax processing may be embedded within a complex, integrated system for human communication1,2,3,42.

Importantly, there is a long-standing debate in psycholinguistics as to the functional independence of syntactic processing from other perceptual and cognitive processes. In this regard, mixed findings can be found in the current literature. By and large, the studies reviewed above are in line with an interactive view of syntax. This view holds that different types of information (i.e., phonological, lexical, syntactic, semantic, and context integration) can be accessed in parallel during the early stages of linguistic processing, possibly allowing for free information exchange between processing subcomponents43,44. In contrast, other studies did not observe effects on early syntactic processing by emotional or social information45,46,47,48, in line with traditional views of syntax, in which syntactic processing is opaque or encapsulated to other perceptual and cognitive processes49,50,51.

The present study

Although recent accounts support the interplay between emotion and language1,2,3,4,5, it remains unclear whether syntactic processing can be modulated by subliminal affective visual stimuli, especially when it comes to automatic first-pass syntactic parsing (as reflected by the LAN component)31,38. This study, therefore, investigates the influence of subliminal emotional facial expressions on syntactic speech processing, using ERPs as the main research tool. Building on prior work, we used the same paradigm as in Rubianes et al.41 in which participants saw a scrambled face while listening to emotionally neutral spoken sentences that could contain morphosyntactic anomalies (based on number or gender agreement). Right before the target word (16 ms), in that study, the face identity (one’s own face, a friend’s face, or a stranger’s face) appeared for 16 ms and was masked by the scrambled stimulus. Here, instead of manipulating face identities, we presented emotional expressions from unfamiliar identities under masked conditions, thus maintaining the same procedure. With this approach, both the target word and emotional expression are presented almost simultaneously in order to test the effects of these processes.

If syntactic processing is context-sensitive and permeable, we hypothesized that it would be modulated by subliminal emotional expressions, as indexed by LAN and P600 components. More specifically, we predict that angry faces, relative to happy and neutral faces, would elicit the largest LAN amplitude, followed by a reduced P600 amplitude, in line with previous studies33,37,41. Alternatively, another possibility that might be expected is that the LAN effect would be reduced or even vanish as a result of capturing processing resources by emotional expressions. This observation could be compatible with prior results for both social and emotional stimuli10,47. In either scenario, these results would speak against the view that syntactic processing is modular and encapsulated from other cognitive or emotional processes. On the contrary, if syntactic processing is indeed modular and encapsulated, we would expect no modulation of either the LAN or the P600 components by subliminal emotional faces.

Methods

Participants

Thirty-six native Spanish speakers (twenty-four females; mean age = 23.24 years; standard deviation = 4.78) with no history of neurological or cognitive disorders and with self-reported normal or corrected-to-normal vision were included. Participants were recruited from a participant pool consisting of undergraduate and graduate students from the Complutense University of Madrid (Faculties of Psychology and Education). They all received 20€ for their participation. According to the Edinburgh Handedness Inventory52, all participants were right-handed (mean +88; range +72 to +100). Written informed consent was obtained from all participants before the experiment. In addition, the study was conducted following the international ethical protocol for human research (Helsinki Declaration of the World Medical Association) and approved by the Ethics Committee of the Faculty of Psychology of the Complutense University of Madrid.

An a priori power analysis was conducted using G*Power software53. Based on an effect size of f = 0.25 derived from Hernández-Gutiérrez et al.31, the analysis indicated that a minimum sample size of 28 participants was required to achieve a statistical power of 0.80 at an alpha level of 0.05. Nevertheless, a total of thirty-six participants were included in the present study to ensure that all possible combinations of the stimulus features were adequately counterbalanced. These features were (see also below): sentence structure (three levels), voice type (two levels), correctness (two levels), and emotional expressions (three levels), resulting in thirty-six condition combinations. In line with prior studies31,41, the presentation sequence of these features was counterbalanced for each participant. Thus, the order in which participants encountered different combinations of sentence structure, voice type, correctness, and facial expression varied across individuals. For example, if one participant was presented with a particular sentence structure along with a specific voice and a neutral face, the next participant would be presented with a different, randomly generated sequence of these stimulus combinations. This approach was intended to mitigate any systematic influence of presentation order on the results. No participant was excluded from the sample.
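As a minimal sketch of how the thirty-six combinations arise, the factor levels can be enumerated in Python. The level labels below (e.g., `structure_1`, `voice_a`) are placeholders introduced for illustration, and the per-participant shuffle is only an assumption about how a randomized order might be generated; it is not the authors' counterbalancing script.

```python
import itertools
import random

# Factor levels; the label names are hypothetical placeholders.
FACTORS = {
    "structure": ["structure_1", "structure_2", "structure_3"],
    "voice": ["voice_a", "voice_b"],
    "correctness": ["correct", "incorrect"],
    "expression": ["happy", "neutral", "angry"],
}


def all_combinations():
    """Return every combination of the four stimulus features."""
    names = list(FACTORS)
    return [dict(zip(names, levels))
            for levels in itertools.product(*FACTORS.values())]


def participant_order(participant_id):
    """Return a reproducible shuffled order for one participant (assumed scheme)."""
    combos = all_combinations()
    random.Random(participant_id).shuffle(combos)
    return combos


print(len(all_combinations()))  # 3 * 2 * 2 * 3 = 36
```

Seeding the shuffle with the participant identifier keeps each order reproducible while still varying it across individuals.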

Design and stimuli

The study used a within-subjects design in which Emotional Expression (three: happy, neutral, and angry) and Correctness (two: correct and incorrect sentences) were manipulated.

The linguistic material consisted of two-hundred and forty sentences in Spanish with three different structures elaborated from previous studies (see Table 1 for more details; see also54). According to the structure of the sentence, the critical word (gender or number agreement) might be an adjective (structure one) or a noun (structures two and three), and it was pseudo-randomly shuffled between sentences. The set of sentences was spoken with neutral prosody by two different female and male voices. The length of target words varied between two and five syllables, and linguistic characteristics such as word frequency, concreteness, imageability, familiarity, and emotional content were controlled by presenting every word in each voice across all experimental conditions. Some examples of the linguistic material are provided in Table 1 (critical words are highlighted in bold):

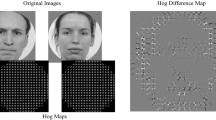

The participants listened to sentences that could contain morphosyntactic anomalies while viewing emotional facial expressions masked with a scrambled face. The scrambled version was elaborated by using 30 × 40 matrices in Adobe Photoshop© from a neutral face. This control stimulus keeps most physical characteristics intact while keeping the facial features unidentifiable. The facial stimuli were obtained from validated datasets, namely, the Chicago Face Database55 (CFD) and the Warsaw Set of Emotional Facial Expression Pictures56 (WSEFEP). Each emotional facial expression was presented for 16 ms masked with the scrambled face. All facial stimuli were processed in Adobe Photoshop© with the aim of normalizing several parameters (grayscale, black background, luminance, and facial proportions). In total, 240 sentences (half of them incorrect) along with one hundred and twenty different facial stimuli according to each emotional expression were presented to each participant. Thus, each sentence (both correct and incorrect) was counterbalanced by each facial expression (happy, neutral and angry).

Procedure

Participants were first informed that they would be participating in a study on syntactic processing in the human brain. In the lab, they were informed that their task was to listen to spoken sentences, some of which would contain syntactic anomalies (e.g., number or gender agreement or disagreement), while viewing a visual stimulus on the monitor (i.e., this was the scrambled stimulus). Participants were instructed to judge whether each sentence was grammatically correct or incorrect (i.e., grammaticality judgment task) by pressing one of two designated buttons after the end of each sentence. Participants were also asked not to blink while the sentences were presented, if possible. A small set of practice trials (5–10) was conducted before the EEG recording. These practice trials were excluded from the experimental material.

As shown in Fig. 1, the procedure was as follows: a blank screen appeared 500 ms after the onset of the fixation cross, followed 300 ms later by the scrambled stimulus. The scrambled stimulus was then replaced by the emotional face, presented for 16 ms, after a randomized interval of 100–1700 ms. The scrambled stimulus then reappeared until the end of the sentence. Following this, two blank screens (300 ms each) and a fixation cross (500 ms) were presented before the response window (1500 ms). The alternatives (correct or incorrect) were displayed on either side of the screen corresponding to the index and middle fingers, respectively. The response button was counterbalanced across participants.

Following the EEG recording session, participants carried out a visibility task in order to test the degree of awareness of the masked faces. This task consisted of 40 trials that were identical to the experimental procedure of the EEG session, but they were asked to respond if they detected anything beyond the visual (scrambled) stimulus and communicate to the experimenter what they saw. This task is a subjective measure of visibility57, and it has been employed successfully in previous work using masked adjectives10,30 and facial stimuli41. Moreover, previous research using a forced-choice questionnaire to assess the subliminal perception of complex visual stimuli has demonstrated that conscious awareness is almost non-existent with a presentation of 16 milliseconds58. According to our visibility task, sixteen participants reported detecting the shape of a face, but none of them (including the rest of the participants) declared to be able to recognize the facial stimuli (in terms of expression or identity). In fact, all participants were amazed by the explanation that they saw the facial stimuli corresponding to happy, neutral, and angry expressions during the EEG experiment.

Regarding the timing of the experiment, the EEG session started with an EEG setup, which took approximately 30–45 min per participant. Subsequently, participants completed the experimental task, lasting around 25 min. To mitigate potential fatigue effects, the experimental session included three breaks (with one break after completing 60 sentences). Participants could resume the task at their own pace (break durations typically averaged around 5 min). Finally, the visibility task lasted around 10 min. This timeline is consistent with previous EEG experiments conducted in our lab31,41.

EEG recordings and analysis

Continuous EEG was registered using 59 scalp electrodes (EasyCap; Brain Products, Gilching, Germany) following the international 10–20 system. EEG data were recorded with a BrainAmp DC amplifier at a sampling rate of 250 Hz with a band-pass from 0.01 to 100 Hz. All scalp electrodes plus the left mastoid were all referenced to the right mastoid during the EEG recording, and then re-referenced off-line to the average of the right and left mastoids. The impedance of all electrodes was kept below 5 kΩ. The ground electrode was located at AFz. Eye movements were monitored using two vertical (VEOG) and two horizontal (HEOG) electrodes placed above and below the left eye and on the outer canthus of both eyes, respectively.
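The off-line re-referencing step follows from simple arithmetic: if every channel was recorded against the right mastoid, subtracting half of the recorded left-mastoid signal yields data referenced to the average of both mastoids. The Python sketch below illustrates this identity on synthetic data; the function and variable names are illustrative assumptions and do not correspond to the software used in the study.

```python
import numpy as np


def rereference_to_linked_mastoids(eeg, left_mastoid):
    """Re-reference right-mastoid-referenced data to the mastoid average.

    eeg: array (n_channels, n_samples), each row recorded as V_ch - V_RM.
    left_mastoid: array (n_samples,), recorded as V_LM - V_RM.
    """
    # V_ch - (V_LM + V_RM) / 2  ==  (V_ch - V_RM) - (V_LM - V_RM) / 2
    return eeg - 0.5 * left_mastoid[np.newaxis, :]


# Synthetic check: start from "true" potentials and compare both routes.
rng = np.random.default_rng(0)
true_scalp = rng.normal(size=(59, 1000))   # 59 scalp channels, as reported
true_lm = rng.normal(size=1000)
true_rm = rng.normal(size=1000)

recorded = true_scalp - true_rm            # online reference: right mastoid
recorded_lm = true_lm - true_rm
expected = true_scalp - 0.5 * (true_lm + true_rm)

print(np.allclose(rereference_to_linked_mastoids(recorded, recorded_lm), expected))
```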

EEG data were preprocessed with the software Brain Vision Analyzer® (Brain Products). Raw data were filtered offline with a band-pass of 0.1–30 Hz and subsequently segmented into 1200 ms epochs starting 216 ms prior to the onset of the critical word. Baseline correction was applied from -216 to -16 ms relative to the onset of the critical word. Trials with incorrect or omitted responses were excluded from the analyses. Trials exceeding a threshold of 100 microvolts (μV) in any of the channels were automatically rejected. Common artifacts (eye movements or muscle activity) were corrected through infomax independent component analysis59 (ICA). After preprocessing, the EEG data for each condition were exported to Fieldtrip60, an open-source Matlab toolbox (R2021b, MathWorks, Natick, MA, USA), for further analyses.
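The segmentation, baseline correction, and amplitude-based rejection described above can be sketched with NumPy. This is an illustration under stated assumptions rather than the Brain Vision Analyzer pipeline: the function names are hypothetical, filtering and ICA are omitted, and the 100 μV criterion is assumed to apply to the absolute baseline-corrected amplitude (the text does not say whether an absolute or peak-to-peak criterion was used).

```python
import numpy as np

SFREQ = 250          # sampling rate in Hz, as reported
PRE_MS = 216         # epoch starts 216 ms before critical-word onset
EPOCH_MS = 1200      # total epoch length
BASE_END_MS = -16    # baseline runs from -216 to -16 ms
REJECT_UV = 100.0    # rejection threshold in microvolts


def ms_to_samples(ms):
    """Convert a duration in milliseconds to a number of samples."""
    return int(round(ms * SFREQ / 1000))


def epoch_and_clean(data, onsets):
    """Cut, baseline-correct, and threshold-reject epochs.

    data: (n_channels, n_samples) in microvolts.
    onsets: sample indices of critical-word onsets.
    Returns an array of shape (n_kept, n_channels, n_epoch_samples).
    """
    pre = ms_to_samples(PRE_MS)                     # 54 samples
    length = ms_to_samples(EPOCH_MS)                # 300 samples
    base_len = ms_to_samples(PRE_MS + BASE_END_MS)  # 200 ms -> 50 samples
    kept = []
    for onset in onsets:
        start = onset - pre
        if start < 0 or start + length > data.shape[1]:
            continue  # epoch would run past the recording
        epoch = data[:, start:start + length].astype(float)
        epoch -= epoch[:, :base_len].mean(axis=1, keepdims=True)
        if np.max(np.abs(epoch)) > REJECT_UV:
            continue  # assumed absolute-amplitude criterion
        kept.append(epoch)
    if not kept:
        return np.empty((0, data.shape[0], length))
    return np.stack(kept)
```

At 250 Hz each sample spans 4 ms, so the 216 ms pre-stimulus interval and the 1200 ms epoch both map onto whole numbers of samples.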

Cluster-based permutation tests

Cluster-based permutation tests were conducted to statistically evaluate the ERP data using functions implemented in Fieldtrip60. The significance probability is computed from the permutation distribution using the Monte-Carlo method and the cluster-based test statistic61. The permutation distribution was formed by randomly reassigning the values corresponding to each condition across all participants 8000 times. If the p-value for a cluster (computed under the permutation distribution of the maximum cluster-level statistic) was smaller than the critical alpha level (0.05), the two experimental conditions were considered significantly different. This statistical test was applied to evaluate the difference between experimental conditions in our design: 3 Emotional Expression (happy, neutral and angry) \(\times\) 2 Correctness (correct and incorrect). To calculate the interaction effects, we first tested for differences between the three conditions using a cluster-based F-test. These three conditions were obtained by computing the mean difference between incorrect and correct sentences for each emotional expression (happy, neutral and angry). We then identified specific differences between pairs of conditions by means of cluster-based permutation t-tests. Thus, pairwise cluster-based t-tests were performed (i.e., happy vs. angry, happy vs. neutral, angry vs. neutral) and the critical alpha value was corrected for multiple comparisons (0.05/3 ≈ 0.017). These analyses included the whole time window and all channels.
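To make the logic of the test concrete, the following Python sketch implements a simplified paired cluster-mass permutation test along the time dimension only. It is not the Fieldtrip implementation: spatial clustering across channels is omitted, the cluster-forming threshold of 2.03 is an assumed value (roughly the two-sided 0.05 critical t for 35 degrees of freedom), and all function names are hypothetical. For a within-subject contrast, randomly swapping the two condition labels within a participant is equivalent to flipping the sign of that participant's difference wave, which is what the loop does.

```python
import numpy as np


def paired_t(diff):
    """One-sample t-values over subjects; diff is (n_subjects, n_times)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def max_cluster_mass(tvals, threshold):
    """Largest summed |t| over contiguous, same-sign, supra-threshold runs."""
    best = 0.0
    for sign in (1, -1):
        run = 0.0
        for t in sign * tvals:
            run = run + t if t > threshold else 0.0
            best = max(best, run)
    return best


def cluster_permutation_test(cond_a, cond_b, threshold=2.03, n_perm=8000, seed=0):
    """Return the observed maximum cluster mass and its Monte-Carlo p-value."""
    rng = np.random.default_rng(seed)
    diff = cond_a - cond_b
    observed = max_cluster_mass(paired_t(diff), threshold)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Swapping condition labels within a subject flips the sign of the difference.
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        null[i] = max_cluster_mass(paired_t(diff * flips), threshold)
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_value


# Bonferroni-corrected alpha for the three pairwise comparisons.
BONFERRONI_ALPHA = 0.05 / 3
```

Because only the maximum cluster statistic of each permutation enters the null distribution, the comparison controls the family-wise error rate across all time points at once. The pairwise tests are then evaluated against `BONFERRONI_ALPHA` rather than 0.05.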

To estimate the magnitude of the effects in the data, both effect size62 (Cohen’s d) and mean difference for an average of all channels during the latency reported by the cluster permutation tests were calculated.
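A paired effect size of this kind can be sketched as follows. The text does not state which variant of Cohen's d was computed, so the example assumes the within-subject form (mean of the paired differences divided by their standard deviation); the function name and inputs are illustrative.

```python
import numpy as np


def cohens_d_paired(cond_a, cond_b):
    """Return the mean difference and Cohen's d for paired samples (assumed d_z variant).

    cond_a, cond_b: one value per participant, e.g. the amplitude averaged over
    all channels within the latency range of a significant cluster.
    """
    diff = np.asarray(cond_a, dtype=float) - np.asarray(cond_b, dtype=float)
    mean_diff = diff.mean()
    return mean_diff, mean_diff / diff.std(ddof=1)
```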

We also explored the main effects of typical components related to face expression perception, as a way of controlling the success of our manipulations, as well as an exploration of these effects under subliminal conditions. This was made by selecting a priori time windows (including all channels) based on previous research63,64: for the N170 component (100–200 ms) and for the EPN component (200–300 ms). Since our linked mastoid reference might attenuate the effects on these components65, the data were re-referenced to the average of all scalp channels only for the statistical analysis of both these components.

Results

Behavioral results

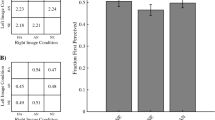

To examine the reaction times, a repeated-measures ANOVA was conducted with the factors Emotional Expression and Correctness. In this regard, the main effect of Emotional Expression was nonsignificant (F(2,70) = 2.518; p = 0.088; \({\upeta }_{\text{p}}^{2}\) = 0.067). However, the ANOVA indicated a significant main effect of Correctness (F(1,35) = 26.436; p < 0.001; \({\upeta }_{\text{p}}^{2}\) = 0.43). Post hoc analysis showed a shorter reaction time for incorrect sentences than for correct ones (\(\Delta\) = -32.71 ±6.36 ms; p < 0.001), as shown in Table 2. In addition, the interaction between Emotional Expression and Correctness was nonsignificant (F(2,70) = 0.557; p = 0.57; \({\upeta }_{\text{p}}^{2}\) = 0.01). As for the response accuracy, which was measured as the percentage of having correctly identified the sentence in terms of syntactic correctness, the ANOVA indicated that the main effect of Emotional Expression was not significant (F(2,70) = 1.995; p = 0.14; \({\upeta }_{\text{p}}^{2}\) = 0.05), while a significant effect of Correctness was found (F(1,35) = 30.129; p < 0.001; \({\upeta }_{\text{p}}^{2}\) = 0.46). Particularly, accuracy was better for correct sentences compared to incorrect ones (\(\Delta\) = -5.432 ±0.99%; p < 0.001). The interaction between Emotional Expression and Correctness was not significant (F(2,70) = 0.141; p = 0.87; \({\upeta }_{\text{p}}^{2}\) = 0.01).
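For readers who wish to relate the reported F statistics to the partial eta squared values, the standard identity \(\eta_{p}^{2} = F \cdot df_{\text{effect}} / (F \cdot df_{\text{effect}} + df_{\text{error}})\) can be applied. The short Python sketch below evaluates it for three of the effects reported above; it illustrates the identity and is not a description of how the statistical software computed the values.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects of Correctness (reaction times and accuracy).
print(round(partial_eta_squared(26.436, 1, 35), 2))  # 0.43
print(round(partial_eta_squared(30.129, 1, 35), 2))  # 0.46
# Main effect of Emotional Expression on reaction times.
print(round(partial_eta_squared(2.518, 2, 70), 3))   # 0.067
```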

Electrophysiological results

Face-related components

The cluster permutation tests yielded a significant main effect of Emotional Expression during the N170 time window (F(2,70) = 4.5; p = 0.01). In particular, a smaller N170 was found in response to angry faces compared to happy (negative cluster: p = 0.007) and neutral faces (negative cluster: p = 0.026), while the difference between happy and neutral faces did not reach statistical significance (p = 0.091). These significant differences were more pronounced over parieto-occipital channels, as depicted in Fig. 2. In turn, no significant differences were observed during the EPN time window, which typically peaks between 200 and 300 ms around parieto-occipital channels (p < 0.14).

Language-related components

The cluster-based permutation tests first indicated a significant difference between incorrect and correct sentences for each emotional expression. These effects were first associated with the LAN when happy (negative cluster: p < 0.001; \(\Delta\) = −0.49; Cohen’s d = −0.25), neutral (negative cluster: p = 0.010; \(\Delta\) = −0.93; d = −0.44), and angry faces were displayed (negative cluster: p < 0.001; \(\Delta\) = −1.49; d = −0.58), according to their latency and topographical distribution (see Fig. 3A). Notably, they consisted of a large, long-lasting negativity, particularly for angry faces (between 220 and 960 ms approximately) in contrast with happy faces (380–860 ms approximately). For neutral faces, it spanned 550–850 ms approximately. Thereafter, the P600 component emerged in response to morphosyntactic anomalies preceded by happy (positive cluster: p = 0.003; \(\Delta\) = 1.99; d = 0.71), neutral (positive cluster: p < 0.001; \(\Delta\) = 2.18; d = 0.93), and angry faces (positive cluster: p = 0.015; \(\Delta\) = 1.93; d = 0.57). As shown in Fig. 3B, the latency of the P600 component was similar between emotional expressions (840–1200 ms approximately).

Fig. 3. Grand average of LAN (A) and P600 (B) waveforms and their topographical distributions when comparing morphosyntactic correctness for each emotional expression. Note that the ERPs were time-locked to the onset of the subliminal face, the critical word starting 16 ms later. Box plots for the interaction effects between Correctness and Emotional Expression during the LAN (C) and the P600 (D) time windows. * p < .05, ** p < .01, *** p < .001.

After observing both LAN and P600 components in response to each emotional expression, a cluster-based F-test was conducted (including the whole time window and all channels) to examine whether there was an interaction effect between Emotional Expression and Correctness. This analysis showed a significant effect (F(2,70) = 3.66; p = 0.031). Subsequently, cluster-based permutation t-tests were performed to assess the specific differences between pairs of conditions (alpha corrected by the number of comparisons: 0.05/3 ≈ 0.017). Relevant to our aims, this analysis showed a larger LAN amplitude for angry faces in comparison with happy (negative cluster: p = 0.010; \(\Delta\) = −1.43; d = −0.40) and neutral faces (negative cluster: p = 0.014; \(\Delta\) = −1.10; d = −0.42). Additionally, a larger LAN amplitude was found in response to happy faces compared to neutral faces (negative cluster: p = 0.010; \(\Delta\) = −1.07; d = −0.46; latency: 400–470 ms), as can be observed in Fig. 3C. As for the P600 effects, the analysis showed that a smaller P600 amplitude was observed for angry faces relative to neutral faces (positive cluster: p = 0.012; \(\Delta\) = 0.28; d = 0.11), while nonsignificant differences were found between angry and happy faces (positive cluster: p = 0.39; \(\Delta\) = 0.04; d = 0.01), as well as between happy and neutral faces (positive cluster: p = 0.11; \(\Delta\) = 0.33; d = 0.11) (see Fig. 3D).

Discussion

This study investigated whether syntactic processing can be affected by masked visual stimuli of emotional expressions. Typical morphosyntactic-related ERP components (LAN and P600) were observed across all emotional expressions. Interestingly, however, these components varied as a function of the specific masked emotional expression, supporting an impact of the latter on syntactic processing. Namely, the results revealed a long-lasting negativity (LAN effect) at frontocentral electrodes that was larger for angry expressions compared to other expressions. Similarly, a larger LAN amplitude was observed for happy expressions relative to neutral ones. These findings show that first-pass syntactic processing can be biased by emotional information even under reduced levels of visual awareness, as shown by instances of angry and happy expressions. Furthermore, a reduced P600 effect was found for angry expressions relative to neutral ones. Taken together, these results support interactive accounts of language comprehension43,44,66,67, which in general terms suggest that different types of information (phonological, syntactic, semantic, and contextual), including emotionally relevant cues, are processed in parallel and can rapidly influence each other to reach an overall interpretation. From this perspective, language processing seems to unfold in continuous interaction with affective and cognitive processes1,2,3,30,31,40,41.

Threat-related signals, such as anger or fear, might show a prioritized access to cognitive resources, as they are key to individuals’ survival and protection68,69,70 (see also71). Besides their phylogenetic and adaptive value, past research has shown that threat-related stimuli are powerful cues for visual attention72,73,74, even without being consciously perceived75,76. The experimental manipulation of this study provides further evidence on the neural correlates of emotional facial expression processing when perceptual levels of visual awareness are reduced, which is a matter of current debate in the literature64,77,78. The data obtained here add evidence to this line of research by showing a smaller N170 amplitude in the presence of angry faces relative to other facial expressions under masked conditions. This emotion-selective response might reflect an early detection of threat-related signals, relative to other emotional content, that is needed to respond efficiently to potential threats in the social environment. By contrast, an increased N170 amplitude to happy expressions was found, which is in line with past research (for a recent review, see64). The observation of these face-related ERP modulations in response to our masked emotional stimuli suggests an early access to emotional processing, occurring irrespective of morphosyntactic processing. In addition, no differences were found for the EPN component, probably due to the brief presentation of subliminal stimuli. Likewise, it should be noted that participants were performing a grammaticality judgment task during the processing of facial expressions.

Notably, this study provides novel evidence that emotional facial expressions can modulate first-pass syntactic processing under subliminal conditions. In this regard, the patterns observed here extend prior work. For instance, Martín-Loeches et al.37, manipulating the emotional valence of adjectives during sentence comprehension, observed an increase in the LAN amplitude for negative words and a decrease in the LAN amplitude for positive words, as compared to neutral ones. Similarly, other LAN modulations in response to emotional information have been reported in the literature10,30,33,35. Accordingly, and taking into account that the LAN component is often considered to reflect an early detection of a morphosyntactic anomaly7,79 (see also11,13), the LAN increase for angry expressions could be linked to summoning more processing resources during first-pass syntactic parsing. This interpretation is consistent with the notion that negative content typically involves more processing resources (i.e., negativity bias) relative to neutral information37,80 (see also81). It is also plausible that this negativity bias triggered a shift in the processing strategy used for syntactic agreement violations, favoring a more analytic and rule-based approach, which is in line with the nature of early syntactic processes15,28,30,79. Consistent with this, prior research has interpreted modulations of syntactic processing in response to emotional information as reflecting a similar shift towards analytic and rule-based strategies when encountering syntactic agreement violations33,39 (for a review, see1), as opposed to more heuristic or associative processing styles, which, in turn, may trigger N400-like effects10,30,34. Collectively, this evidence contrasts with proposals that syntactic processing, especially at its earlier stages, is encapsulated and largely unaffected by information provided by other perceptual and cognitive systems. It further calls into question whether the LAN component reflects a modular process, suggesting instead more flexible and context-sensitive processes30,41.

Our results showed that the late stages of syntactic operations can also be modulated by masked facial expressions of anger, as indexed by the P600. Our finding is in general agreement with past research showing a smaller P600 amplitude for negative than for positive conditions32,38. Indeed, similar morphosyntax-related ERP patterns have been previously reported in the literature. For instance, Rubianes et al.41, using the same spoken sentences but presenting facial identities (self, friend, and unknown faces) instead of emotional expressions, also found a larger LAN amplitude followed by a reduced P600 amplitude only in response to self-faces, while a larger LAN amplitude with no reduction of the P600 amplitude was observed for friend faces as compared to unknown faces. This might indicate that both emotional and self-relevant information concurring with language processing would capture processing resources in the service of rapidly adapting behavior to environmental conditions82,83. Moreover, considering the brief presentation of facial stimuli (16 ms) and the masking procedure, these data indicate that both socially and emotionally relevant stimuli may influence syntactic processing without the need for explicit recognition, most likely engaging low-level attentional capture and bottom-up mechanisms. This suggests that subtle or briefly presented affective stimuli can influence ongoing cognitive processing and decision-making, even under reduced levels of perceptual awareness.

It should be noted that we presented neutrally-valenced spoken sentences, which implies that facial expressions of anger could not capture attentional resources towards sentence parts with negative valence. Specifically, we interpreted our main result (a larger LAN effect followed by a reduced P600 effect in response to facial expressions of anger) as reflecting increased processing resources during first-pass syntactic parsing (LAN), which in turn reduced the need for subsequent syntactic reanalysis or repair processes (P600). Similar biphasic patterns have been observed in the literature, suggesting that fewer reanalysis/repair processes are necessary to successfully resolve the morphosyntactic mismatch when the earlier stages have summoned increased processing resources34,41,84. In that vein, prior research also suggests that this biphasic pattern might reflect more efficient syntactic processing30,33,36,85, which would tentatively fit, in our study, with the notion that threat-related signals are important for survival. We consider, however, that interpretations of facilitatory effects should be supported by corresponding behavioral measures, such as shorter reaction times or higher accuracy rates. Due to the absence of such behavioral effects in our study, we have refrained from interpreting this biphasic pattern as indicative of facilitation, focusing instead on the mobilization of processing resources during early and late syntactic processes, which addresses our main research question.

It is also worth noting that a larger LAN amplitude was observed in response to happy faces as compared to neutral ones, with no differences in the P600 amplitude. This result might reflect that first-pass syntactic parsing was modulated by emotionally arousing (i.e., both happy and angry) compared to neutral (non-arousing) content, even if emotional valence may have differential effects. Comparable results can be found in the extant literature. For instance, Espuny et al.33, who presented emotion-laden words with different emotional valences (equated in arousal) preceding neutral sentences that could contain morphosyntactic anomalies, observed a larger LAN amplitude for both positive and negative conditions relative to neutral ones. This pattern was consistent with Jiménez-Ortega et al.86. In these studies33,86, no differences were found between positive and negative conditions during the LAN window, which suggests that the observed effects were modulated by arousal. In our study, however, a larger LAN amplitude was observed for angry expressions compared to happy faces, presumably due to emotional valence. Nevertheless, our study cannot fully elucidate the independent contributions of valence and arousal due to the constraints of the databases used (Chicago Face Database and the Warsaw set of emotional facial expression pictures), which are based on emotional categories (e.g., anger, happiness) rather than quantitative valence and arousal ratings. Hence, further research is needed to disentangle the effects of valence and arousal from affective subliminal visual stimuli on syntactic processing.

In sum, the data presented here seem to indicate that language processes may unfold over time in continuous interaction with other perceptual, affective, and cognitive processes, which is in line with an interactive view of language processing1,2,43,44,66,67. More broadly, this notion is also consistent with prior accounts that suggest that cognition and emotion are intertwined in the brain, as opposed to reflecting isolated processes3,4,5.

Several limitations apply to the present study. One is the near-simultaneous presentation of visual (face) and auditory (critical word) stimuli, with only a 16 ms difference. Consequently, we cannot strictly isolate the effects of the face presentation itself from auditory inputs. Nevertheless, this temporal overlap was necessary to directly investigate our main research question, which focuses on the interplay between facial expressions of emotion and syntactic processing. Another limitation of our study is the lack of facilitatory effects in the behavioral data. In that vein, any potential facilitation of syntactic processes driven by emotional expressions would ideally manifest in observable behavioral advantages (e.g., shorter reaction times or higher accuracy rates). The absence of behavioral effects might be due to several factors, such as the delay in participants’ response time, ERP procedural constraints, or the response window, which was placed after the end of the sentence in our task, thereby obscuring any subtle differences between conditions. It is important to note, however, that this is the case in most studies in the field30,31.

A critical question for future research is whether social and emotional information facilitates or impedes language processing. More broadly, it is necessary to elucidate the mechanisms by which emotional processes and language comprehension mutually influence one another. Current models of language comprehension do not yet adequately describe the precise mechanisms underlying this interaction7,43,44,67. For instance, it remains unclear whether non-linguistic (e.g., emotional faces) and linguistic (e.g., emotion-laden words) information shares the same mechanism influencing core language components such as phonology, syntax, or semantics (for a recent discussion, see87,88,89). To this end, cross-domain studies might prove valuable90, possibly leading to different ERP patterns and accounting for mixed findings in the extant literature.

To conclude, this study shows that emotional facial expressions can be decoded under reduced levels of awareness (i.e., subliminally). On the modularity of syntax, the data obtained here indicate that both first-pass and late syntactic processes can be affected by affective visual stimuli even if the latter are outside awareness, as reflected by the mobilization of processing resources. These results support an interactive view of language processing in which the processing of emotional and morphosyntactic features may interact during the early and late computation processes of agreement dependencies. Altogether, syntax appears to be sensitive to subtle emotional signals, which supports the depiction of language as integrated within a more complex and integrated system devoted to human communication.

Data availability

See Supplementary material for a full report of all statistical comparisons. Raw data and scripts are available upon request by contacting the corresponding author.

References

Chwilla, D. J. Context effects in language comprehension: The role of emotional state and attention on semantic and syntactic processing. Front. Hum. Neurosci. 16, 1014547. https://doi.org/10.3389/fnhum.2022.1014547 (2022).

Hinojosa, J. A., Moreno, E. & Ferré, P. Affective neurolinguistics: Towards a framework for reconciling language and emotion. Language Cogn. Neurosci. 35(7), 813–839. https://doi.org/10.1080/23273798.2019.1620957 (2019).

Satpute, A. B. & Lindquist, K. A. At the neural intersection between language and emotion. Affective Sci. 2(2), 207–220. https://doi.org/10.1007/s42761-021-00032-2 (2021).

Lindquist, K. A. & Barrett, L. F. A functional architecture of the human brain: emerging insights from the science of emotion. Trends Cogn. Sci. 16(11), 533–540. https://doi.org/10.1016/j.tics.2012.09.005 (2012).

Pessoa, L., Medina, L., Hof, P. R. & Desfilis, E. Neural architecture of the vertebrate brain: implications for the interaction between emotion and cognition. Neurosci. Biobehav. Rev. 107, 296–312. https://doi.org/10.1016/j.neubiorev.2019.09.021 (2019).

Drijvers, L. & Holler, J. The multimodal facilitation effect in human communication. Psychon. Bull. Rev. 30(2), 792–801. https://doi.org/10.3758/s13423-022-02178-x (2023).

Friederici, A. D. The brain basis of language processing: from structure to function. Physiol. Rev. 91(4), 1357–1392. https://doi.org/10.1152/physrev.00006.2011 (2011).

Friederici, A. D. Language in Our Brain: The Origins of a Uniquely Human Capacity. MIT Press. (2017).

Urbach, T. P. & Kutas, M. Cognitive Electrophysiology of Language. In Shirley-Ann Rueschemeyer & M. Gareth Gaskell (Eds.), The Oxford Handbook of Psycholinguistics (2nd ed.). Oxford Academic. https://doi.org/10.1093/oxfordhb/9780198786825.013.40 (2018).

Jiménez-Ortega, L., Espuny, J., de Tejada, P. H., Vargas-Rivero, C. & Martín-Loeches, M. Subliminal Emotional Words Impact Syntactic Processing: Evidence from Performance and Event-Related Brain Potentials. Front. Hum. Neurosci. 11, 192. https://doi.org/10.3389/fnhum.2017.00192 (2017).

Martín-Loeches, M., Muñoz, F., Casado, P., Melcón, A. & Fernández-Frías, C. Are the anterior negativities to grammatical violations indexing working memory?. Psychophysiology 42(5), 508–519. https://doi.org/10.1111/j.1469-8986.2005.00308.x (2005).

Kolk, H. H., Chwilla, D. J., van Herten, M. & Oor, P. J. Structure and limited capacity in verbal working memory: a study with event-related potentials. Brain Lang. 85(1), 1–36. https://doi.org/10.1016/s0093-934x(02)00548-5 (2003).

Bornkessel-Schlesewsky, I. & Schlesewsky, M. Toward a Neurobiologically Plausible Model of Language-Related Negative Event-Related Potentials. Front. Psychol. 10, 298. https://doi.org/10.3389/fpsyg.2019.00298 (2019).

Tanner, D. & Van Hell, J. G. ERPs reveal individual differences in morphosyntactic processing. Neuropsychologia 56, 289–301. https://doi.org/10.1016/j.neuropsychologia.2014.02.002 (2014).

Maran, M., Friederici, A. D. & Zaccarella, E. Syntax through the looking glass: A review on two-word linguistic processing across behavioral, neuroimaging and neurostimulation studies. Neurosci. Biobehav. Rev. 142, 104881. https://doi.org/10.1016/j.neubiorev.2022.104881 (2022).

Leckey, M. & Federmeier, K. D. The P3b and P600(s): Positive contributions to language comprehension. Psychophysiology 57(7), e13351. https://doi.org/10.1111/psyp.13351 (2020).

Aurnhammer, C., Delogu, F., Brouwer, H. & Crocker, M. W. The P600 as a continuous index of integration effort. Psychophysiology https://doi.org/10.1111/psyp.14302 (2023).

Sassenhagen, J. & Fiebach, C. J. Finding the P3 in the P600: Decoding shared neural mechanisms of responses to syntactic violations and oddball targets. Neuroimage 200, 425–436. https://doi.org/10.1016/j.neuroimage.2019.06.048 (2019).

Haxby, J. V., Hoffman, E. A. & Gobbini, M. I. The distributed human neural system for face perception. Trends Cogn. Sci. 4(6), 223–233. https://doi.org/10.1016/s1364-6613(00)01482-0 (2000).

Bernstein, M., Erez, Y., Blank, I. & Yovel, G. An Integrated Neural Framework for Dynamic and Static Face Processing. Sci. Rep. 8(1), 7036. https://doi.org/10.1038/s41598-018-25405-9 (2018).

Deen, B., Saxe, R. & Kanwisher, N. Processing communicative facial and vocal cues in the superior temporal sulcus. Neuroimage https://doi.org/10.1016/j.neuroimage.2020.117191 (2020).

Young, A. W., Frühholz, S. & Schweinberger, S. R. Face and Voice Perception: Understanding Commonalities and Differences. Trends Cogn. Sci. 24(5), 398–410. https://doi.org/10.1016/j.tics.2020.02.001 (2020).

Peelen, M. V., Atkinson, A. P. & Vuilleumier, P. Supramodal representations of perceived emotions in the human brain. J. Neurosci. 30(30), 10127–10134. https://doi.org/10.1523/JNEUROSCI.2161-10.2010 (2010).

Lettieri, G., Handjaras, G., Cappello, E. M., Setti, F., Bottari, D., Bruno, V., Diano, M., Leo, A., Tinti, C., Garbarini, F., Pietrini, P., Ricciardi, E. & Cecchetti, L. Dissecting abstract, modality-specific and experience-dependent coding of affect in the human brain. Sci. Adv. 10(10), eadk6840. https://doi.org/10.1126/sciadv.adk6840 (2024).

Visser, M., Jefferies, E., Embleton, K. V. & Lambon Ralph, M. A. Both the middle temporal gyrus and the ventral anterior temporal area are crucial for multimodal semantic processing: distortion-corrected fMRI evidence for a double gradient of information convergence in the temporal lobes. J. Cogn. Neurosci. 24(8), 1766–1778. https://doi.org/10.1162/jocn_a_00244 (2012).

Hung, J., Wang, X., Wang, X. & Bi, Y. Functional subdivisions in the anterior temporal lobes: a large scale meta-analytic investigation. Neurosci. Biobehav. Rev. 115, 134–145. https://doi.org/10.1016/j.neubiorev.2020.05.008 (2020).

Pascual, B. et al. Large-scale brain networks of the human left temporal pole: a functional connectivity MRI study. Cereb. Cortex 25(3), 680–702. https://doi.org/10.1093/cercor/bht260 (2015).

Batterink, L. & Neville, H. J. The human brain processes syntax in the absence of conscious awareness. J. Neurosci. 33(19), 8528–8533. https://doi.org/10.1523/JNEUROSCI.0618-13.2013 (2013).

Batterink, L., Karns, C. M., Yamada, Y. & Neville, H. The role of awareness in semantic and syntactic processing: an ERP attentional blink study. J. Cogn. Neurosci. 22(11), 2514–2529. https://doi.org/10.1162/jocn.2009.21361 (2010).

Jiménez-Ortega, L. et al. The Automatic but Flexible and Content-Dependent Nature of Syntax. Front. Hum. Neurosci. https://doi.org/10.3389/fnhum.2021.651158 (2021).

Hernández-Gutiérrez, D. et al. How the speaker’s emotional facial expressions may affect language comprehension. Language Cogn. Neurosci. https://doi.org/10.1080/23273798.2022.2130945 (2022).

Espuny, J. et al. Event-related brain potential correlates of words’ emotional valence irrespective of arousal and type of task. Neurosci. Lett. 670, 83–88. https://doi.org/10.1016/j.neulet.2018.01.050 (2018).

Espuny, J. et al. Isolating the effects of word’s emotional valence on subsequent morphosyntactic processing: An event-related brain potentials study. Front. Psychol. 9, 2291. https://doi.org/10.3389/fpsyg.2018.02291 (2018).

Hinchcliffe, C. et al. Language comprehension in the social brain: Electrophysiological brain signals of social presence effects during syntactic and semantic sentence processing. Cortex 130, 413–425. https://doi.org/10.1016/j.cortex.2020.03.029 (2020).

Hinojosa, J. A. et al. Effects of negative content on the processing of gender information: an event-related potential study. Cogn. Affect. Behav. Neurosci. 14(4), 1286–1299. https://doi.org/10.3758/s13415-014-0291-x (2014).

Jiménez-Ortega, L., Casado-Palacios, M., Rubianes, M., Martínez-Mejias, M., Casado, P., Fondevila, S., Hernández-Gutiérrez, D., Muñoz, F., Sánchez-García, J. & Martín-Loeches, M. The bigger your pupils, the better my comprehension: an ERP study of how pupil size and gaze of the speaker affect syntactic processing. Soc. Cognitive Affect. Neurosci. 19(1), nsae047. https://doi.org/10.1093/scan/nsae047 (2024).

Martín-Loeches, M. et al. The influence of emotional words on sentence processing: electrophysiological and behavioral evidence. Neuropsychologia 50(14), 3262–3272. https://doi.org/10.1016/j.neuropsychologia.2012.09.010 (2012).

Poch, C., Diéguez-Risco, T., Martínez-García, N., Ferré, P. & Hinojosa, J. A. I hates Mondays: ERP effects of emotion on person agreement. Language Cogn. Neurosci. 38(10), 1451–1462. https://doi.org/10.1080/23273798.2022.2115085 (2023).

Verhees, M. W., Chwilla, D. J., Tromp, J. & Vissers, C. T. Contributions of emotional state and attention to the processing of syntactic agreement errors: evidence from P600. Front. Psychol. 6, 388. https://doi.org/10.3389/fpsyg.2015.00388 (2015).

Maquate, K., Kissler, J. & Knoeferle, P. Speakers’ emotional facial expressions modulate subsequent multi-modal language processing: ERP evidence. Language Cogn. Neurosci. https://doi.org/10.1080/23273798.2022.2108089 (2022).

Rubianes, M. et al. The self-reference effect can modulate language syntactic processing even without explicit awareness: An electroencephalography study. J. Cogn. Neurosci. 36(3), 460–474. https://doi.org/10.1162/jocn_a_02104 (2024).

Holler, J. & Levinson, S. C. Multimodal language processing in human communication. Trends Cogn. Sci. 23(8), 639–652. https://doi.org/10.1016/j.tics.2019.05.006 (2019).

Pulvermüller, F., Shtyrov, Y. & Hauk, O. Understanding in an instant: Neurophysiological evidence for mechanistic language circuits in the human brain. Brain Lang. 110(2), 81–94. https://doi.org/10.1016/j.bandl.2008.12.001 (2009).

McClelland, J. L., St. John, M. & Taraban, R. Sentence comprehension: A parallel distributed processing approach. Lang. Cogn. Process. 4, 287–336. https://doi.org/10.1080/01690968908406371 (1989).

Díaz-Lago, M., Fraga, I. & Acuña-Fariña, C. Time course of gender agreement violations containing emotional words. J. Neurolinguist. 36, 79–93. https://doi.org/10.1016/j.jneuroling.2015.07.001 (2015).

Fraga, I., Padrón, I., Acuña-Fariña, C. & Díaz-Lago, M. Processing gender agreement and word emotionality: New electrophysiological and behavioural evidence. J. Neurolinguist. 44, 203–222. https://doi.org/10.1016/j.jneuroling.2017.06.002 (2017).

Hohlfeld, A., Martín-Loeches, M. & Sommer, W. The nature of morphosyntactic processing during language perception. Evidence from an additional-task study in Spanish and German. Int. J. Psychophysiol. 143, 9–24. https://doi.org/10.1016/j.ijpsycho.2019.06.016 (2019).

Padrón, I., Fraga, I. & Acuña-Fariña, C. Processing gender agreement errors in pleasant and unpleasant words: An ERP study at the sentence level. Neurosci. Lett. https://doi.org/10.1016/j.neulet.2019.134538 (2020).

Ferreira, F. & Clifton, C. Jr. The independence of syntactic processing. J. Mem. Lang. 25, 348–368. https://doi.org/10.1016/0749-596X(86)90006-9 (1986).

Fodor, J. D. On modularity in syntactic processing. J. Psycholinguist. Res. 17, 125–168. https://doi.org/10.1007/BF01067069 (1988).

Hauser, M. D., Chomsky, N. & Fitch, W. T. The faculty of language: what is it, who has it, and how did it evolve?. Science 298(5598), 1569–1579. https://doi.org/10.1126/science.298.5598.1569 (2002).

Oldfield, R. C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9(1), 71–113. https://doi.org/10.1016/0028-3932(71)90067-4 (1971).

Faul, F., Erdfelder, E., Buchner, A. & Lang, A. G. Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149 (2009).

Hernández-Gutiérrez, D. et al. Situating language in a minimal social context: how seeing a picture of the speaker’s face affects language comprehension. Soc. Cognitive Affect. Neurosci. 16(5), 502–511. https://doi.org/10.1093/scan/nsab009 (2021).

Ma, D. S., Correll, J. & Wittenbrink, B. The Chicago face database: A free stimulus set of faces and norming data. Behav. Res. Methods 47(4), 1122–1135. https://doi.org/10.3758/s13428-014-0532-5 (2015).

Olszanowski, M. et al. Warsaw set of emotional facial expression pictures: a validation study of facial display photographs. Front. Psychol. 5, 1516. https://doi.org/10.3389/fpsyg.2014.01516 (2015).

Ramsøy, T. Z. & Overgaard, M. Introspection and subliminal perception. Phenomenol. Cogn. Sci. 3, 1–23. https://doi.org/10.1023/B:PHEN.0000041900.30172.e8 (2004).

Ionescu, M. R. Subliminal perception of complex visual stimuli. Roman. J. Ophthalmol. 60(4), 226–230 (2016) (PMID: 29450354).

Bell, A. J. & Sejnowski, T. J. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7(6), 1129–1159. https://doi.org/10.1162/neco.1995.7.6.1129 (1995).

Oostenveld, R., Fries, P., Maris, E. & Schoffelen, J. M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. https://doi.org/10.1155/2011/156869 (2011).

Maris, E. & Oostenveld, R. Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164(1), 177–190. https://doi.org/10.1016/j.jneumeth.2007.03.024 (2007).

Cohen, J. Statistical Power Analysis for the Behavioral Sciences (2nd ed.). Lawrence Erlbaum Associates. (1988).

Eimer, M., Gosling, A., Nicholas, S. & Kiss, M. The N170 component and its links to configural face processing: a rapid neural adaptation study. Brain Res. 1376, 76–87. https://doi.org/10.1016/j.brainres.2010.12.046 (2011).

Schindler, S., Bruchmann, M. & Straube, T. Beyond facial expressions: A systematic review on effects of emotional relevance of faces on the N170. Neurosci. Biobehav. Rev. https://doi.org/10.1016/j.neubiorev.2023.105399 (2023).

Joyce, C. & Rossion, B. The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clin. Neurophysiol. 116(11), 2613–2631. https://doi.org/10.1016/j.clinph.2005.07.005 (2005).

Tanenhaus, M. K., Spivey-Knowlton, M. J., Eberhard, K. M. & Sedivy, J. C. Integration of visual and linguistic information in spoken language comprehension. Science 268(5217), 1632–1634. https://doi.org/10.1126/science.7777863 (1995).

Hagoort, P. The core and beyond in the language-ready brain. Neurosci. Biobehav. Rev. 81, 194–204. https://doi.org/10.1016/j.neubiorev.2017.01.048 (2017).

Fridlund, A. J. Human facial expression: An evolutionary view. Academic Press Inc. (1994).

Öhman, A., Juth, P. & Lundqvist, D. Finding the face in a crowd: Relationships between distractor redundancy, target emotion, and target gender. Cogn. Emot. 24(7), 1216–1228. https://doi.org/10.1080/02699930903166882 (2010).

Öhman, A., Lundqvist, D. & Esteves, F. The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 80(3), 381–396. https://doi.org/10.1037/0022-3514.80.3.381 (2001).

Wormwood, J. B., Quigley, K. S. & Barrett, L. F. Emotion and threat detection: The roles of affect and conceptual knowledge. Emotion 22(8), 1929–1941. https://doi.org/10.1037/emo0000884 (2022).

Burra, N. & Kerzel, D. Task Demands Modulate Effects of Threatening Faces on Early Perceptual Encoding. Front. Psychol. 10, 2400. https://doi.org/10.3389/fpsyg.2019.02400 (2019).

Gong, M. & Smart, L. J. The anger superiority effect revisited: a visual crowding task. Cogn. Emot. 35(2), 214–224. https://doi.org/10.1080/02699931.2020.1818552 (2021).

Becker, D. V. & Rheem, H. Searching for a face in the crowd: Pitfalls and unexplored possibilities. Atten. Percept. Psychophys. 82(2), 626–636. https://doi.org/10.3758/s13414-020-01975-7 (2020).

Tamietto, M. & de Gelder, B. Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11(10), 697–709. https://doi.org/10.1038/nrn2889 (2010).

Zhang, D., Liu, Y., Wang, L., Ai, H. & Luo, Y. Mechanisms for attentional modulation by threatening emotions of fear, anger, and disgust. Cogn. Affect. Behav. Neurosci. 17(1), 198–210. https://doi.org/10.3758/s13415-016-0473-9 (2017).

Hinojosa, J. A., Mercado, F. & Carretié, L. N170 sensitivity to facial expression: A meta-analysis. Neurosci. Biobehav. Rev. 55, 498–509. https://doi.org/10.1016/j.neubiorev.2015.06.002 (2015).

Schindler, S. & Bublatzky, F. Attention and emotion: An integrative review of emotional face processing as a function of attention. Cortex 130, 362–386. https://doi.org/10.1016/j.cortex.2020.06.010 (2020).

Molinaro, N., Barber, H. A. & Carreiras, M. Grammatical agreement processing in reading: ERP findings and future directions. Cortex 47(8), 908–930. https://doi.org/10.1016/j.cortex.2011.02.019 (2011).

Carretié, L., Albert, J., López-Martín, S. & Tapia, M. Negative brain: An integrative review on the neural processes activated by unpleasant stimuli. Int. J. Psychophysiol. 71(1), 57–63. https://doi.org/10.1016/j.ijpsycho.2008.07.006 (2009).

Barros, F. et al. The angry versus happy recognition advantage: the role of emotional and physical properties. Psychol. Res. 87(1), 108–123. https://doi.org/10.1007/s00426-022-01648-0 (2023).

de Valk, J. M., Wijnen, J. G. & Kret, M. E. Anger fosters action. Fast responses in a motor task involving approach movements toward angry faces and bodies. Front. Psychol. https://doi.org/10.3389/fpsyg.2015.01240 (2015).

Klein, S. B. A role for self-referential processing in tasks requiring participants to imagine survival on the savannah. J. Exp. Psychol. Learn. Mem. Cogn. 38(5), 1234–1242. https://doi.org/10.1037/a0027636 (2012).

van de Meerendonk, N., Kolk, H. H., Vissers, C. T. & Chwilla, D. J. Monitoring in language perception: mild and strong conflicts elicit different ERP patterns. J. Cogn. Neurosci. 22(1), 67–82. https://doi.org/10.1162/jocn.2008.21170 (2010).

Coulson, S. & Kutas, M. Getting it: human event-related brain response to jokes in good and poor comprehenders. Neurosci. Lett. 316(2), 71–74. https://doi.org/10.1016/s0304-3940(01)02387-4 (2001).

Jiménez-Ortega, L. et al. How the emotional content of discourse affects language comprehension. PLoS ONE https://doi.org/10.1371/journal.pone.0033718 (2012).

Kissler, J. Affective neurolinguistics: a new field to grow at the intersection of emotion and language? – Commentary on Hinojosa et al., 2019. Language Cogn. Neurosci. 35(7), 850–857. https://doi.org/10.1080/23273798.2019.1694159 (2019).

Hinojosa, J. A., Moreno, E. M. & Ferré, P. On the limits of affective neurolinguistics: a “universe” that quickly expands. Language Cogn. Neurosci. 35(7), 877–884. https://doi.org/10.1080/23273798.2020.1761988 (2020).

Herbert, C. Where are the emotions in written words and phrases? Commentary on Hinojosa, Moreno and Ferré: Affective neurolinguistics: Towards a framework for reconciling language and emotion (2019). Language Cogn. Neurosci. 35(7), 844–849. https://doi.org/10.1080/23273798.2019.1660798 (2020).

Bayer, M. & Schacht, A. Event-related brain responses to emotional words, pictures, and faces - a cross-domain comparison. Front. Psychol. 5, 1106. https://doi.org/10.3389/fpsyg.2014.01106 (2014).

Funding

This study was supported by the Ministerio de Ciencia, Investigación y Universidades, Programa Estatal de Investigación Científica y Técnica de Excelencia, Spain (Grant number: PSI2017-82357-P), the Ministerio de Ciencia e Innovación (Grant numbers: PID2021-123421NB-I00; PID2021-124227NB-I00), and the Ministerio de Ciencia, Innovación y Universidades (Grant number: FPU18/02223).

Author information

Contributions

MR: Data curation; Formal analysis; Investigation; Methodology; Software; Visualization; Writing original draft & editing. LJ-Ó: Conceptualization; Funding acquisition; Investigation; Project administration; Writing-review & editing. FM: Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Supervision; Visualization; Writing original draft & editing. LD: Data curation; Formal analysis; Investigation; Methodology; Software; Visualization; Writing-review & editing. TA-R: Investigation; Writing-review & editing. JS-G: Investigation; Writing-review & editing. SF: Investigation; Writing-review & editing. PC: Investigation; Writing-review & editing. MM-L: Conceptualization; Data curation; Formal analysis; Funding acquisition; Investigation; Methodology; Project administration; Supervision; Visualization; Writing-review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rubianes, M., Jiménez-Ortega, L., Muñoz, F. et al. Effects of subliminal emotional facial expressions on language comprehension as revealed by event-related brain potentials. Sci Rep 15, 20449 (2025). https://doi.org/10.1038/s41598-025-06037-2

DOI: https://doi.org/10.1038/s41598-025-06037-2