Abstract

With the application of artificial intelligence and deep learning to the generation of animated films, improving the quality and accuracy of generated images to enhance the visual communication effects of animated films has become an important research direction. This work aims to optimize the first order motion model (FOMM) to enhance its performance in generating animated character images. To this end, the convolutional block attention module (CBAM) is introduced into FOMM and then redesigned to strengthen the network’s ability to focus on important features, especially in complex backgrounds. Meanwhile, to address the image distortion problem caused by severe pose changes, a repainting image repair module is proposed. Through multi-scale upsampling and occlusion map prediction mechanisms, it improves the coherence and completeness of image reconstruction. The resulting enhanced FOMM (E-FOMM) model couples the attention mechanism with the reconstruction module, forming a more robust end-to-end character image generation framework. Experimental results on the VoxCeleb1 and TaiChiHD datasets show that the E-FOMM model outperforms existing models in terms of generated image quality, keypoint detection accuracy, and pose reconstruction. Additionally, the model’s generated images exhibit a minimum peak signal-to-noise ratio increase of 1.11 dB and a minimum structural similarity index improvement of 0.014, indicating superior pixel-level, structural, and perceptual quality. This work intends to enhance the quality of generated character images in animated films, providing a technical pathway for achieving high-quality visual effects.

Introduction

Research background and motivations

In the modern digital age, animated films have become an indispensable part of the entertainment industry. As audiences continue to raise their expectations for visual effects, the production of animated films faces increasing challenges. The traditional animation production process is often time-consuming and labor-intensive, requiring extensive manual intervention and a high level of artistic skill1,2,3. The rapid progress of Artificial Intelligence (AI) and deep learning (DL) brings new possibilities for visual communication in animated films. In particular, DL has shown exceptional capabilities in image recognition, image generation, and image enhancement4,5,6. These technological advancements provide robust support for the automatic generation and optimization of animated film images.

In animated film production, the accuracy and efficiency of motion models directly impact the quality of the final images3,7. Although the First Order Motion Model (FOMM) can describe the motion trajectories of objects to some extent, it often struggles with complex motions and detailed changes8,9. This limitation results in insufficient quality of the generated animated images, failing to meet the growing demands of the audience. Therefore, the primary issue of this work is how to improve image generation quality in complex scenes while maintaining the efficiency of motion models. The Convolutional Block Attention Module (CBAM), as an emerging technology, is gradually being introduced into the research of animated image generation. By dynamically adjusting the focus areas of neural networks, CBAM enhances the accuracy and efficiency of networks in handling complex images10,11,12.

To address the above issues, this work introduces the CBAM module into FOMM and redesigns the CBAM. By incorporating channel-wise max pooling and spatial convolution mechanisms, the model’s ability to focus on important features is enhanced, which improves the accuracy of generated images in complex backgrounds. Meanwhile, to tackle the image distortion problem caused by severe pose changes, a repainting image repair module is proposed. Through multi-scale upsampling and occlusion map prediction mechanisms, it effectively improves the coherence and completeness of animated image reconstruction. Ultimately, the proposed model achieves deep coupling between the attention mechanism and the reconstruction module in its structural design, and a more robust end-to-end character image generation framework is constructed. This approach not only alleviates the workload of animation production but also significantly enhances the visual effects of animated films, meeting the audience’s demand for high-quality visual experiences. This work is expected to provide an effective technical pathway for animated film production, improving the generation quality and efficiency of animated images and thereby promoting the development of the animated film industry.

Research objectives

The research objective is to enhance the performance of FOMM in animated film character image generation by introducing an optimized CBAM and proposing a repainting image repair module. The specific research objectives are as follows:

1. Explore in depth the application principles of attention modules in image processing and their impact on the quality of animated image generation. Through systematic theoretical analysis and experimental validation, this work intends to clarify the advantages of attention modules in enhancing image feature extraction and improving image generation accuracy.

2. Design and implement an optimized FOMM model. This model combines convolutional attention mechanisms and the repainting image repair module, enabling dynamic adjustment of the neural network’s focus areas. It captures motion features in character images and, in particular, improves image quality and coherence when there are significant pose changes, thereby enhancing the quality and efficiency of animated film image generation.

3. Conduct experimental validation to assess the effectiveness of the proposed optimized model in actual animated image generation. Comparative experiments with other animated image generation methods are performed to verify the improvements in image quality achieved by the optimized model.

By achieving these objectives, this work not only expands the theoretical and practical foundation of character animation image generation technology but also provides practical solutions for animated film production. This, in turn, promotes innovation and development within the animation film industry.

Literature review

The visual communication of animated films is an interdisciplinary research area that encompasses computer graphics, artificial intelligence, image processing, and visual arts. With the swift progress of AI and DL, there has been extensive research and discussion in the academic community regarding AI and DL-based animated image generation technologies. Xu et al. reviewed DL image enhancement algorithms and classified them into three categories: model-free, model-based, and optimization strategies, to facilitate the selection of suitable methods and the design of new algorithms13. Jiang et al. proposed a text-driven controllable framework called Text2Human. This framework generated high-quality and diverse human images in two steps: converting poses into parse maps and generating the final images based on texture attributes. This method utilized a hierarchical texture codebook and a diffusion model, enabling the generation of more realistic and diverse images than existing methods14. Zhang et al. proposed StyleSwin, a Generative Adversarial Network (GAN) based on pure Transformers, which combined local attention and dual attention mechanisms to improve generation quality and address artifacts in high-resolution synthesis. This model was specifically designed for high-resolution image generation15. Ding et al. introduced CogView, a 4-billion-parameter Transformer with a Vector Quantized Variational AutoEncoder tokenizer, to address text-to-image generation. Moreover, it achieved state-of-the-art Fréchet Inception Distance (FID) on the blurred MS COCO dataset16. In related research on visual quality assessment, Min et al. reviewed both subjective and objective video quality assessment methods, including general and application-specific evaluation measures. They analyzed the latest advancements and provided a systematic overview of the video quality assessment field for researchers17. 
Zhai and Min reviewed subjective image quality databases and objective assessment methods, and compared the performance of the latest measures. They pointed out that quality assessment was divided into subjective and objective categories, with objective assessment being more favored due to its high efficiency and ease of deployment18. Min et al. systematically reviewed the background, features, methods, benchmarks, technical advancements, unresolved challenges, and future research directions of screen content quality assessment19. These studies not only provide an in-depth theoretical foundation for the field of visual quality assessment but also offer valuable references for deep learning-based animation generation and optimization models, particularly in terms of image and video quality assessment metrics.

Moreover, FOMM plays a significant role in animated image generation. Oshiba et al. proposed an improved FOMM method that used facial feature points as facial expression targets, achieving anime character facial expression generation. This method had less noise, and outperformed other methods in generating anime character facial images20. Xu et al. proposed an enhanced FOMM method to address poor visual effects in video animation generation with large-scale pose changes by improving dense motion and repair capabilities21. Zhang et al. introduced a new micro-expression generation task combining FOMM with facial prior knowledge, extracting facial features, estimating facial motion, and generating micro-expression videos22. Kodama and Saitoh proposed using FOMM to generate a single speaker’s video sequence from speech scenes, transforming the speaker-independent recognition task into a speaker-dependent recognition task23.

Despite the significant progress made in the field of animated image generation, there are still some unresolved issues. For instance, while FOMM performs well in generating simple motion images, it has certain limitations when it handles complex motions and detail-rich images. Specifically, FOMM often struggles to produce high-quality animated images when faced with complex backgrounds and large pose variations. Additionally, current research mostly focuses on optimizing single features or tasks, lacking exploration into enhancing the overall performance across multiple tasks. Therefore, based on existing literature, this work proposes an optimized FOMM model incorporating an attention module and a repainting image repair module. By dynamically adjusting the focus areas of the neural network, the model improves image quality and coherence, and effectively enhances the quality and efficiency of animated image generation. The goal of this work is to improve the model’s generation quality and accuracy when handling complex backgrounds and detail-rich images by introducing the attention module and repainting image repair module into FOMM. Through innovative technological integration and optimization, this work aims to address the existing gaps in current studies, advance the development of visual communication technologies in animated films, and provide valuable references for research and practice in related fields.

Research model

Analysis of the FOMM principles

FOMM is an advanced AI method for generating animated images through keypoint detection and dense motion field prediction. It excels in producing high-quality, realistic animated images, particularly demonstrating significant advantages in handling facial expressions and simple movements24,25,26. Other DL models, such as the GAN and Variational Autoencoders (VAE), have widespread applications in image generation, but they face certain limitations when it comes to animated image generation. GAN requires a large amount of training data and is prone to artifacts and instability during the generation of high-quality animations. VAE does not perform as well as GAN or FOMM in generating detail-rich images. In contrast, FOMM can better capture motion features in images and produce more natural and realistic animated images through keypoint detection and dense motion field prediction. Additionally, the modular structure of FOMM makes it easier to optimize and expand, allowing it to better adapt to various animation generation needs. Therefore, FOMM is chosen as the core model for generating character images in animated films. FOMM mainly consists of three core modules: the key point detector, the dense motion prediction network, and the generator module. These modules work collaboratively to achieve high-quality animated image generation. Figure 1 illustrates the network structure27,28,29.

In FOMM, the primary task of the keypoint detector is to identify a set of key points from the input image, which represent the motion characteristics of the main objects in the image30. The keypoint detector utilizes a U-Net architecture to achieve efficient keypoint detection by extracting multi-scale features. It is assumed that the pixel coordinates (a,b) in the image are denoted as q, and Q represents the set of all pixel coordinates, and \({W}^{i}(q)\) is the weight of the i-th heatmap at pixel coordinate q. Then, the position of the keypoint \({p}^{i}\) is given by:
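The description above corresponds to a heatmap-weighted average of pixel coordinates, presumably \({p}^{i}=\sum_{q\in Q}{W}^{i}(q)\,q\). A minimal NumPy sketch follows, assuming that the weights come from a softmax over the raw detector output (the normalization step and the function name `keypoint_from_heatmap` are assumptions, not taken from the text):

```python
import numpy as np

def keypoint_from_heatmap(logits):
    """Soft-argmax: expected pixel coordinate (a, b) under a normalized heatmap."""
    h, w = logits.shape
    # Softmax over all pixels gives weights W^i(q) that sum to 1 (assumed normalization).
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    a = float((weights * rows).sum())   # weighted mean row index
    b = float((weights * cols).sum())   # weighted mean column index
    return a, b
```

Because the result is a weighted mean rather than a hard argmax, it is differentiable and can return sub-pixel positions.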

In addition, the keypoint detection module uses the heatmaps of the key points to predict the affine transformation \({Z}_{X\leftarrow Y}^{i}\) from the reference frame Y to the source frame X and the affine transformation \({Z}_{O\leftarrow Y}^{i}\) from the reference frame to the driving frame O. Thus, the affine transformation \({Z}_{X\leftarrow O}^{i}\) from the driving frame key points to the source frame key points is predicted as follows:
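One plausible reading of this step, following the original FOMM formulation, is that the two reference-frame transformations are chained through an inverse: \({Z}_{X\leftarrow O}^{i}={Z}_{X\leftarrow Y}^{i}{({Z}_{O\leftarrow Y}^{i})}^{-1}\). The sketch below illustrates that composition with 3 × 3 homogeneous matrices; the matrix representation and the function name are assumptions made for illustration.

```python
import numpy as np

def compose_source_from_driving(z_x_from_y, z_o_from_y):
    """Return Z_{X<-O} = Z_{X<-Y} @ inv(Z_{O<-Y}) for 3x3 homogeneous affine matrices."""
    # Going driving -> reference undoes Z_{O<-Y}; reference -> source applies Z_{X<-Y}.
    return z_x_from_y @ np.linalg.inv(z_o_from_y)
```

The abstract reference frame Y never has to be rendered: it only serves as the common coordinate system through which the driving and source keypoints are related.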

The task of the dense motion prediction network is to predict the motion field for each pixel in the input image. This module also employs a U-Net architecture, which generates dense motion field predictions through multi-scale feature extraction and fusion31. This module combines heatmap and sparse optical flow field predictions, outputting intermediate feature maps and dense optical flow fields. For each key point, the heatmap \({G}_{i}\left(q\right)\) for each transformation is first computed as follows:
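As a hedged illustration, the original FOMM builds each heatmap as the difference of two Gaussians, one centred on the keypoint in the driving frame and one on the keypoint in the source frame. The sketch below assumes that form, a negative exponent, and coordinates normalized to [-1, 1]; all three are assumptions, as are the function and argument names.

```python
import numpy as np

def difference_heatmap(kp_driving, kp_source, size, delta=0.01):
    """Gaussian around the driving keypoint minus a Gaussian around the source keypoint."""
    axis = np.linspace(-1.0, 1.0, size)              # normalized pixel coordinates (assumed)
    ys, xs = np.meshgrid(axis, axis, indexing="ij")

    def gaussian(kp):
        # kp is (x, y); delta controls how sharply the response falls off.
        dist_sq = (xs - kp[0]) ** 2 + (ys - kp[1]) ** 2
        return np.exp(-dist_sq / delta)

    return gaussian(kp_driving) - gaussian(kp_source)
```

With a small value such as 0.01 the two bumps are narrow, so the heatmap is close to +1 near the driving keypoint, close to -1 near the source keypoint, and near zero elsewhere.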

δ is a hyperparameter, typically set to 0.01. Next, the sparse optical flow field \({C}_{i}\left(q\right)\) for each key point is calculated as follows:

The optical flow field prediction module combines the heatmap \({G}_{i}\left(q\right)\) with the warped result of the source frame using \({C}_{i}\left(q\right)\) to output an intermediate feature map \(\phi\). Finally, \(\phi\) is processed through a 7 × 7 convolution to output the predicted dense optical flow field \(c\left(q\right)\) and the occlusion map \({H}_{X\leftarrow Y}\). The equations are as follows:

The generator module’s task is to generate new animated images based on the dense motion field and keypoint positions. The generator module uses an encoder-decoder structure to encode image features and decode motion features to create animated images32. This module also ensures that the generated images meet expectations in terms of motion and details through optical flow field distortion and occlusion handling. The source frame image is input into the generator module and processed through two downsampling convolution modules to obtain a feature map \(\zeta \in {\mathbb{R}}^{{G}{\prime}*L{\prime}}\) with a dimension \({G}{\prime}*L{\prime}\). However, this source frame does not yet align pixel-to-pixel with the target driving image. To address this misalignment, the FOMM uses the dense optical flow field \(c\left(q\right)\) to warp the source frame’s feature map. Additionally, FOMM holds that when there is occlusion in the source frame X, the optical flow field may not be sufficient to generate the driving frame O from the source frame X. This is because occluded parts cannot be repaired by image warping alone. Therefore, FOMM introduces an occlusion map \({H}_{X\leftarrow Y}\in {(\text{0,1})}^{{G}{\prime}*L{\prime}}\) to mask out regions of the feature map that need inpainting. The processed feature map is then given by:

\({f}_{\omega }(*,*)\) denotes the backward warping operation, and \(\odot\) represents the element-wise multiplication operation. Finally, the processed feature map is fed into subsequent layers of the generator module to produce the final driving frame O.
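The warping and masking step can be sketched in a few lines of NumPy. This is an illustrative simplification: it uses nearest-neighbour sampling, whereas practical implementations typically sample bilinearly, and it assumes the flow stores absolute (x, y) source coordinates in pixel units. The function name and array layouts are hypothetical.

```python
import numpy as np

def warp_and_mask(features, flow, occlusion):
    """features: (C, H, W); flow: (H, W, 2) source (x, y) per output pixel; occlusion: (H, W)."""
    _, h, w = features.shape
    # Round the sampling positions and clamp them to the image bounds.
    xs = np.clip(np.rint(flow[..., 0]).astype(int), 0, w - 1)
    ys = np.clip(np.rint(flow[..., 1]).astype(int), 0, h - 1)
    warped = features[:, ys, xs]                 # backward warping: output pixel pulls from the source
    return occlusion[None, :, :] * warped        # element-wise product, broadcast over channels
```

Regions where the occlusion map is close to 0 are suppressed, which signals to the later generator layers that those pixels must be inpainted rather than copied from the source.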

FOMM-CBAM model

The dense motion field generation module of FOMM often suffers from background noise and other factors when handling complex image backgrounds, leading to suboptimal animation results. Particularly in predicting optical flow fields, existing models frequently struggle to accurately capture detailed motion information, impacting the quality of the generated images33,34. The CBAM can enhance the network’s feature representation ability in both channel and spatial dimensions. By guiding the network to focus more on key areas while ignoring irrelevant background information, it improves the clarity and structural consistency of the generated images. Therefore, in optimizing the FOMM model, this work incorporates the CBAM module to improve the image reconstruction quality under complex motion poses. In comparison, while SENet performs well in channel attention modeling, it only adjusts feature weights in the channel dimension, neglecting the importance of spatial location information. This leads to inadequate spatial structure modeling, particularly when processing animated videos with complex backgrounds and dynamic changes, which affects pose restoration and local detail in the reconstructed images. Additionally, although the Transformer structure has shown powerful capabilities in feature extraction in recent years, its high computational complexity, slow inference speed, and heavy dependence on large-scale animation sequence training make it less suitable for practical video generation tasks. It especially lacks sufficient advantages in edge devices or real-time scenarios. The CBAM structure is simple, and it is easy to integrate with existing convolutional frameworks. It significantly improves optical flow prediction and image reconstruction quality with only a slight increase in computational overhead. To further enhance the model’s performance, a repainting image repair module is proposed to repair image details across multiple scales. 
By leveraging the occlusion map repair capability, this module corrects pixel values in the generated images and achieves better visual effects. Simultaneously, the CBAM is redesigned to reduce conflicts between the repainting image repair module and the CBAM module. This ultimately leads to the formation of the E-FOMM model. The architecture of the E-FOMM model is shown in Fig. 2.

Attention module

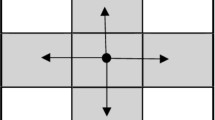

Based on CBAM, a more suitable attention module for the E-FOMM model is proposed. In the proposed attention module, attention maps for intermediate feature layers are inferred in both channel and spatial dimensions. Then, the attention maps are multiplied by the original input feature layers to perform adaptive feature refinement35. This attention module consists of a Channel Attention Module (CAM) and a Spatial Attention Module (SAM), which apply weights to features along the channel and spatial dimensions, respectively, to enhance the accuracy of feature representation. Figure 3 displays the flow of this process.

The attention module first applies weighting to the features along the channel dimension through the CAM, and then applies weighting along the spatial dimension through the SAM. Finally, it outputs the enhanced feature map36. In the CAM, the maximum value of the features along each channel is obtained using channel-wise max pooling. This value is then input to a Multi-Layer Perceptron (MLP) to obtain the channel-wise maximum value weight. After that, it is multiplied by a hyperparameter σ, which is typically set to 2 based on empirical experience. The channel attention weight is then calculated through a sigmoid operation, and this weight is applied to the original feature map’s channels to produce the channel-refined feature map. Figure 4 illustrates the CAM structure.

The primary task of the SAM is to further improve the precision of feature representation by focusing on different spatial locations within the feature map37. The SAM initially performs max pooling and average pooling operations on the input feature map, resulting in two feature maps. These are then concatenated along the channel dimension and passed through a convolutional layer to generate spatial attention weights.

When given an intermediate feature layer \(\phi \in {\mathbb{R}}^{A*G*L}\) as input, the attention module will derive a one-dimensional channel attention map \(CAM(\phi )\in {\mathbb{R}}^{A*1*1}\) and a two-dimensional spatial attention map \(SAM(\dot{\phi })\in {\mathbb{R}}^{1*G*L}\) sequentially according to Eqs. (8) and (9):
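Given the shapes above and the sequential order of the two sub-modules, Eqs. (8) and (9) presumably take the standard CBAM form, written here with the same symbols (a reconstruction from the surrounding definitions, not a quotation):

```latex
\begin{align}
\dot{\phi} &= CAM(\phi) \otimes \phi \tag{8} \\
\ddot{\phi} &= SAM(\dot{\phi}) \otimes \dot{\phi} \tag{9}
\end{align}
```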

\(\otimes\) denotes element-wise multiplication. Where dimensions do not match, \(CAM(\phi )\) and \(SAM(\dot{\phi })\) are expanded to the shape of the feature map by broadcasting, as in Python array libraries. The final output feature is denoted as \(\ddot{\phi }\). This process can be simplified using Eq. (10) to replace Eqs. (8) and (9).
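The attention module described in this subsection can be sketched in NumPy as follows. Several details are assumptions made only to obtain runnable code: the MLP has one ReLU hidden layer, the spatial branch ends in a sigmoid, and the spatial kernel is passed in by the caller (its size is not stated in the text). All function and parameter names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(phi, w1, w2, sigma=2.0):
    """phi: (A, G, L). Channel-wise max pooling -> MLP -> scale by sigma -> sigmoid."""
    pooled = phi.max(axis=(1, 2))                   # (A,) maximum of each channel
    hidden = np.maximum(w1 @ pooled, 0.0)           # ReLU hidden layer (assumed)
    weights = sigmoid(sigma * (w2 @ hidden))        # (A,) channel weights
    return weights[:, None, None]                   # (A, 1, 1), broadcastable over G x L

def spatial_attention(phi, kernel):
    """phi: (A, G, L); kernel: (2, k, k), k odd. Max/mean over channels -> conv -> sigmoid."""
    stacked = np.stack([phi.max(axis=0), phi.mean(axis=0)])    # (2, G, L)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(stacked, ((0, 0), (pad, pad), (pad, pad)))
    g, l = phi.shape[1:]
    out = np.zeros((g, l))
    for i in range(g):                              # plain sliding-window convolution
        for j in range(l):
            out[i, j] = (padded[:, i:i + k, j:j + k] * kernel).sum()
    return sigmoid(out)[None, :, :]                 # (1, G, L), broadcastable over channels

def attention(phi, w1, w2, kernel, sigma=2.0):
    phi_dot = channel_attention(phi, w1, w2, sigma) * phi       # channel-refined features, Eq. (8)
    return spatial_attention(phi_dot, kernel) * phi_dot         # spatially refined features, Eq. (9)
```

The two multiplications rely on broadcasting: the (A, 1, 1) channel weights and the (1, G, L) spatial weights are each expanded to (A, G, L) before the element-wise product.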

Therefore, the final dense optical flow field in E-FOMM is obtained by modifying Eq. (5) to Eq. (11) as shown:

\({Conv}_{7*7}\) is a convolution operation with an output channel of K + 1 (where K is the number of keypoints), a kernel size of 7*7, and a padding of 3.
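A padding of 3 is what keeps the spatial size unchanged for a 7*7 kernel. The short check below applies the standard convolution output-size formula, assuming a stride of 1 and no dilation (neither is stated in the text):

```python
def conv_output_size(size, kernel=7, padding=3, stride=1):
    """Spatial size after a convolution (standard formula, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1
```

For a 64 × 64 input this gives (64 + 6 - 7) + 1 = 64, so the dense optical flow field has the same resolution as the intermediate feature map, with K + 1 output channels.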

Repainting image repair module

To address the issue of poor visual quality in reconstructed images when the animated object undergoes significant pose transformations, a repainting image repair module is proposed. Figure 5 displays its structure.

The repainting image repair module takes the intermediate features \(\phi\) output from the optical flow field prediction module as input. The feature map passes through two upsampling modules, Upblock2d, where each Upblock2d module consists of a bilinear upsampling module, a convolution module (Conv), normalization, and a ReLU activation function. After passing through these two Upblock2d modules, two intermediate feature layers are output with dimensions (64,128,128) and (32,256,256), respectively. Finally, convolution with a kernel size of 1 is applied to reduce the channel count of each feature layer to 1. Together with the input-resolution features, this yields three occlusion maps at resolutions (64,64), (128,128), and (256,256). Accordingly, by introducing the repainting image repair module, Eq. (6) is modified to Eq. (12):
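The shape bookkeeping of this module can be traced with a small NumPy sketch. It is deliberately simplified and should not be read as the authors' implementation: nearest-neighbour upsampling stands in for bilinear upsampling, a 1 × 1 channel mix stands in for the convolution and normalization, the final sigmoid is an assumption, and the input channel count is whatever the caller supplies.

```python
import numpy as np

def up_block(x, weight):
    """Stand-in for Upblock2d: 2x nearest-neighbour upsampling, 1x1 channel mixing, ReLU."""
    x = x.repeat(2, axis=1).repeat(2, axis=2)
    return np.maximum(np.einsum("oc,chw->ohw", weight, x), 0.0)

def to_occlusion_map(x, weight):
    """1x1 convolution down to one channel, squashed to (0, 1) with a sigmoid (assumed)."""
    return 1.0 / (1.0 + np.exp(-np.einsum("c,chw->hw", weight, x)))

def multi_scale_occlusion(phi, rng):
    """phi: (C, 64, 64). Returns occlusion maps at 64, 128 and 256 pixels."""
    c = phi.shape[0]
    f128 = up_block(phi, rng.normal(size=(64, c)) * 0.1)       # (64, 128, 128)
    f256 = up_block(f128, rng.normal(size=(32, 64)) * 0.1)     # (32, 256, 256)
    feats = [phi, f128, f256]
    # Random weights are placeholders for learned 1x1 convolution kernels.
    return [to_occlusion_map(f, rng.normal(size=f.shape[0]) * 0.1) for f in feats]
```

Each returned map matches the resolution of one stage of the generator's decoder, which is what allows a separate occlusion mask to be applied at every scale.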

\(\Theta (\bullet )\) represents the relevant network layer operations in the repainting image repair module.

Generator module

The generator module in the E-FOMM model adopts a variational autoencoder structure and combines feature reconstruction with occlusion repair mechanisms to construct an end-to-end network architecture consisting of an encoder and a decoder. The encoder is composed of two consecutive DownBlock modules. Each DownBlock progressively compresses the spatial resolution of the image features through convolution operations while expanding the feature channels, allowing for more efficient capture of the image’s semantic representation. Ultimately, the encoder outputs an intermediate feature representation with dimensions (256,64,64). To transfer motion information between the source frame and the driving frame, a dense optical flow field is applied after the encoder’s output to perform spatial transformations on the intermediate features, thus completing pose-based feature warping. The dense optical flow field is assisted by the attention module, enabling a more accurate capture of motion trends in the driving frame. Moreover, to enhance the model’s ability to model high-level semantic information, six ResBlock2d modules are added in the middle part of the generator for deep feature abstraction and fusion, improving the consistency of details and structural integrity in the generated image.

The decoding stage begins with the intermediate feature map, which sequentially passes through two UpBlock modules for feature map restoration. Each UpBlock combines upsampling and convolution to halve the number of channels and double the image size, gradually restoring the original spatial structure of the image. In each upsampling phase, an occlusion map corresponding to the resolution is introduced for feature repair. The occlusion map, output by the repainting image repair module, effectively eliminates image damage in areas with intense motion. The features output by the decoder are finally passed through a 1 × 1 convolution layer with 3 channels for channel compression, and then mapped to the pixel value range of [0, 1] via a Sigmoid activation function, resulting in the final reconstructed animation image.
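The tensor shapes implied by this description can be traced with a few lines of Python. The function below is only a bookkeeping sketch; its name and default arguments are chosen for illustration, with the starting shape (256,64,64) taken from the encoder output stated earlier.

```python
def decoder_shapes(channels=256, size=64, num_up_blocks=2, out_channels=3):
    """Trace (channels, height, width) through the decoder described above."""
    shapes = [(channels, size, size)]
    for _ in range(num_up_blocks):
        # Each UpBlock halves the channel count and doubles the spatial size.
        channels, size = channels // 2, size * 2
        shapes.append((channels, size, size))
    shapes.append((out_channels, size, size))    # final 1 x 1 convolution to 3 channels
    return shapes
```

The trace gives (256,64,64), (128,128,128), (64,256,256), and finally (3,256,256), so the three decoder resolutions coincide with the three occlusion map resolutions of 64, 128, and 256.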

Evaluation metrics for generated image animations

The evaluation metrics for image animations include \({L}_{1}\) error, Average Keypoint Distance (AKD), Missing Keypoint Rate (MKR), as well as Average Euclidean Distance (AED)38,39. Among these, the \({L}_{1}\) error measures the pixel-level difference between the generated and real image. It calculates the average of the absolute differences between each pixel value in the generated image and its corresponding pixel value in the real image:

n is the number of video frames. A is the height of the images. B is the width of the images. \({S}_{ab}\) and \({\widehat{S}}_{ab}\) respectively stand for the pixel value at position (a,b) in the real video frame and the reconstructed frame.
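With these symbols, the \({L}_{1}\) error is the mean absolute pixel difference over all n frames and all A × B positions. A minimal NumPy sketch, assuming both videos are stored as arrays of identical shape such as (n, A, B):

```python
import numpy as np

def l1_error(real, generated):
    """Mean absolute pixel difference between two equally shaped frame stacks."""
    # Cast to float first so that unsigned 8-bit images do not wrap around on subtraction.
    real = np.asarray(real, dtype=np.float64)
    generated = np.asarray(generated, dtype=np.float64)
    return float(np.abs(real - generated).mean())
```

The explicit cast matters in practice: subtracting two `uint8` arrays directly would overflow and silently understate the error.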

AKD is used to assess the accuracy of the pose in the generated image. Key points are extracted from the reconstructed frame and the driving frame, and the average distance between corresponding key points quantifies the similarity of the pose. MKR measures the proportion of missing key points in the generated image, computed as the ratio of key points that are present in the real frame but absent in the reconstructed frame. AED evaluates the identity preservation of the reconstructed image. Feature representations are extracted from both the reconstructed and real video frames, and the AED between these features quantifies the difference in identity between the generated and real images.
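AKD and MKR can be sketched together once keypoints have been extracted by an external detector. The convention used below, in which an undetected keypoint is stored as a row of NaN, is an assumption made for this example, as is the function name.

```python
import numpy as np

def akd_and_mkr(kp_real, kp_generated):
    """kp_*: (N, 2) arrays of (x, y); a keypoint the detector missed is a row of NaN."""
    kp_real = np.asarray(kp_real, dtype=float)
    kp_generated = np.asarray(kp_generated, dtype=float)
    in_real = ~np.isnan(kp_real).any(axis=1)
    in_generated = ~np.isnan(kp_generated).any(axis=1)
    both = in_real & in_generated
    # AKD: mean Euclidean distance over keypoints detected in both frames.
    akd = float(np.linalg.norm(kp_real[both] - kp_generated[both], axis=1).mean())
    # MKR: share of real-frame keypoints that are missing from the generated frame.
    mkr = float((in_real & ~in_generated).sum() / in_real.sum())
    return akd, mkr
```

The two numbers are complementary: AKD only sees keypoints found in both frames, so a model that loses many keypoints could look good on AKD alone, which is why MKR is reported alongside it.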

Additionally, the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) are taken as image quality evaluation metrics for the generated images. PSNR is a standard metric for assessing image quality, primarily used to measure differences between images.

R represents the maximum possible pixel value of the image. MSE stands for Mean Squared Error and it is calculated through the following equation:
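For reference, the standard definitions of PSNR and MSE, written with the symbols used in this section, are as follows (the index ranges are assumed to run over all A × B pixels):

```latex
\begin{aligned}
PSNR &= 10 \log_{10}\left(\frac{R^{2}}{MSE}\right) \\
MSE &= \frac{1}{A B} \sum_{i=1}^{A} \sum_{j=1}^{B} \left( S(i,j) - \widehat{S}(i,j) \right)^{2}
\end{aligned}
```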

\(S\left(i,j\right)\) and \(\widehat{S}\left(i,j\right)\) refer to the pixel values at position (i,j) in the original image and the generated image, respectively. A and B indicate the height and width of the images. SSIM measures the structural similarity between images, considering brightness, contrast, and structural information. The calculation equation for SSIM is40:

\({\mu }_{x}\) and \({\mu }_{y}\) denote the mean pixel values of images x and y, respectively; \({\sigma }_{x}^{2}\) and \({\sigma }_{y}^{2}\) denote the variances of images x and y; \({\sigma }_{xy}\) is the covariance between images x and y. \({\alpha }_{1}\) and \({\alpha }_{2}\) are constants added to avoid division by zero, typically set as \({\alpha }_{1}={({K}_{1}L)}^{2}\) and \({\alpha }_{2}={({K}_{2}L)}^{2}\), where K1 and K2 are usually set to 0.01 and 0.03, respectively, and L is the dynamic range of the image.
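Both quality metrics can be sketched in NumPy. The SSIM function below uses the standard formula but computes its statistics over the whole image in one pass, which is a simplification: common implementations average SSIM over local sliding windows, so the values will differ from those of library routines. Function names and the default 8-bit dynamic range are assumptions.

```python
import numpy as np

def psnr(original, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    diff = np.asarray(original, dtype=float) - np.asarray(generated, dtype=float)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_value ** 2 / mse))

def global_ssim(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """SSIM from whole-image statistics (single window, no local averaging)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    a1 = (k1 * dynamic_range) ** 2              # stabilizing constants alpha_1, alpha_2
    a2 = (k2 * dynamic_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + a1) * (2 * cov_xy + a2)
    denominator = (mu_x ** 2 + mu_y ** 2 + a1) * (var_x + var_y + a2)
    return float(numerator / denominator)
```

PSNR grows without bound as the images converge, while SSIM is capped at 1 for identical images, which is why gains in the two metrics are reported on different scales (dB versus a unitless index).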

In addition to the traditional PSNR and SSIM, deep learning-based perceptual quality assessment metrics such as Learned Perceptual Image Patch Similarity (LPIPS)41 and Neural Radiance Fields—No-Reference Quality Assessment (NeRF-NQA)42 are also introduced to further evaluate the visual quality and detail restoration of the generated images. LPIPS measures perceptual similarity between images through learned deep features, while NeRF-NQA performs a no-reference quality assessment for the generated scenes. These new evaluation methods help to gain a more comprehensive understanding of the perceptual quality of the generated images.

Experimental design and performance evaluation

Datasets collection

The datasets used are VoxCeleb143, TaiChiHD44, and ImageNet-1k45. VoxCeleb1 is a large-scale dataset for speech and speaker recognition, consisting of over 150,000 video clips. Its extensive video samples and detailed dynamic changes make it well suited to evaluating the image generation capabilities of the FOMM-CBAM model. Downsampling the VoxCeleb1 videos to a resolution of 256 × 256 retains the details of speaker dynamics, which is valuable for assessing the model’s ability to generate high-quality animated images and capture intricate motion details. Therefore, the VoxCeleb1 dataset is selected for testing the performance of the FOMM-CBAM model in terms of generated image quality and motion detail.

The TaiChiHD dataset focuses on high-quality Tai Chi action videos, providing up to 30,000 high-resolution video clips. Its rich action samples and high-resolution images contribute to validating the performance of the FOMM-CBAM model when dealing with complex dynamic backgrounds and detail-rich movements. Processing the TaiChiHD dataset into 256 × 256 images aids in assessing the model’s accuracy and effectiveness in generation and reconstruction tasks. Therefore, the TaiChiHD dataset is selected to evaluate the capabilities of the FOMM-CBAM model in generating high-quality images and demonstrating intricate motion performance. Although VoxCeleb1 and TaiChiHD primarily focus on real-life videos, their detailed dynamic changes and high-quality image data are valuable for research in animation film visual communication. Specifically, the VoxCeleb1 dataset contains rich audio and speaker video data, with high-resolution videos and detailed dynamic changes that provide important references for the visual effects of animated films. The subtle movements and facial expression changes captured in this dataset are crucial for training and evaluating the animation model’s ability to generate similar delicate performances. By processing these real videos, the model’s capability to capture complex expressions and motion details during animation image generation can be enhanced, thereby increasing the realism and expressiveness of animated films. The TaiChiHD dataset, with its detailed dynamic changes and high-resolution images, provides abundant training data for the model in generating animations. Accurately capturing and presenting intricate motion details is essential for animated films. The data from TaiChiHD can assist the model in improving expressiveness when dealing with complex actions and backgrounds, ultimately enhancing the quality and authenticity of the generated animations. 
Therefore, selecting these datasets as the research foundation can effectively enhance the dynamic effects and visual representation in animated films. This work utilizes the VoxCeleb1 and TaiChiHD high-quality datasets. This ensures that in the visual communication of animated films, the model can generate detailed and realistic images and accurately capture a wealth of motion details, achieving a higher level of animation effects.

ImageNet-1k is one of the most commonly used subsets of the ImageNet dataset, containing over 1.4 million labeled images. This dataset is widely used for evaluating classification performance across various visual tasks. ImageNet-1k is chosen as the test dataset primarily to assess the performance of the CBAM module in classification tasks, because its rich labeled data and extensive category coverage can effectively validate the classification accuracy and feature representation capability of the module.

To improve training efficiency and simplify the model training process, the VoxCeleb1 and TaiChiHD datasets are refined and optimized. Although the VoxCeleb1 and TaiChiHD datasets contain rich motion details and high-quality image data, their complexity is relatively high, especially when dealing with dynamic backgrounds and complex movements. Therefore, simpler facial expression change samples from the VoxCeleb1 dataset are selected, and the TaiChiHD dataset is filtered to focus on segments with fewer movements and simpler actions, in order to simplify the training and testing process. By simplifying and optimizing the datasets, the model can be trained more efficiently, while ensuring accuracy and expressiveness when generating detailed animated images.

Experimental environment and parameters setting

Table 1 displays the experimental environment and parameter settings.

The parameter settings in Table 1 mainly consider the following points:

1. The learning rate is set to 0.0002, a common initial value in DL models. The learning rate is a key parameter that controls the magnitude of weight updates during model training. A learning rate that is too high may cause the model to diverge, while one that is too low may result in excessively slow training. This value is chosen to balance convergence speed and stability.

2. The number of iterations is set to 300 based on the convergence speed and performance of the model during training. The model’s loss values and performance metrics are monitored throughout the experiments to ensure its convergence and generalization capability.

3. Batch size is set to 32 to maximize memory usage efficiency while maintaining training stability. A larger batch size can enhance training efficiency but may require more memory resources. A size of 32 represents a balanced choice between memory consumption and computational capacity.

4. The number of epochs is set to 100 through experimental tuning to ensure effective training without overfitting. The number of training epochs must consider the complexity of the model and the scale of the dataset.

5. The Adam optimizer is employed. This widely used gradient-based optimization algorithm combines adaptive learning rates with a momentum mechanism, which accelerates training and makes it effective in various DL tasks, especially when dealing with sparse gradients and large datasets. It typically converges faster than other optimizers.

6. The number of keypoints is set to 10, determining the number of keypoints the model needs to detect when processing input images. An appropriate number of keypoints is chosen based on the complexity of the task and the detail requirements of the images, balancing the model’s ability to capture details with the computational burden.
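The settings above can be collected in a small configuration object, and the adaptive update that Adam performs can be written out for a single scalar parameter. The following is a minimal sketch, not the authors' training code: the names `TrainConfig` and `adam_step` are hypothetical, and the values of `beta1`, `beta2`, and `eps` are the commonly used Adam defaults, which the text does not state.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as listed in the text."""
    learning_rate: float = 2e-4
    iterations: int = 300
    batch_size: int = 32
    epochs: int = 100
    num_keypoints: int = 10
    # Assumption: common Adam defaults; the text does not report these values.
    beta1: float = 0.9
    beta2: float = 0.999
    eps: float = 1e-8


def adam_step(theta, grad, m, v, t, cfg):
    """One Adam update for a single scalar parameter; the step counter t starts at 1."""
    m = cfg.beta1 * m + (1 - cfg.beta1) * grad         # first moment (momentum)
    v = cfg.beta2 * v + (1 - cfg.beta2) * grad * grad  # second moment (gradient scale)
    m_hat = m / (1 - cfg.beta1 ** t)                   # bias correction
    v_hat = v / (1 - cfg.beta2 ** t)
    theta = theta - cfg.learning_rate * m_hat / (math.sqrt(v_hat) + cfg.eps)
    return theta, m, v


cfg = TrainConfig()
theta, m, v = adam_step(theta=1.0, grad=0.5, m=0.0, v=0.0, t=1, cfg=cfg)
print(theta)
```

On the first step the bias-corrected moments reduce to the gradient and its square, so the parameter moves by almost exactly one learning rate in the direction opposite to the gradient, regardless of the gradient's magnitude. This is the sense in which the step size is adaptive.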

Performance evaluation

Performance validation of the attention module

The performance of the improved attention module is validated through a comparison with the Squeeze-and-Excitation Networks (SENet) module. Both modules are incorporated into the ResNet46, WideResNet47, and ResNeXt48 architectures to evaluate their classification results on the ImageNet-1k dataset. Top-1 Error and Top-5 Error are used as evaluation metrics. Figure 6 shows the results.

Figure 6 displays the Top-1 Error and Top-5 Error of the ResNet, WideResNet, and ResNeXt models with the improved attention module. The Top-1 Errors are 21.51%, 24.84%, and 21.07%, respectively, while the Top-5 Errors are 5.69%, 7.63%, and 5.59%, all of which are lower than those of the corresponding models with the SENet module. This indicates that the improved attention module is more effective than SENet at enhancing the network’s ability to focus on important features and extract information. The improved attention module enhances the feature maps along both the channel and spatial dimensions, thereby improving classification accuracy and model performance.
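For reference, Top-k Error is the fraction of samples whose true label is not among the k classes with the highest predicted scores. The sketch below illustrates the metric on made-up scores for four samples and six classes; the helper name `topk_error` and the numbers are illustrative assumptions and are unrelated to the ImageNet results reported above.

```python
def topk_error(scores, labels, k):
    """Fraction of samples whose true label is not among the k highest scores."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Toy class scores (illustrative only).
scores = [
    [0.10, 0.60, 0.05, 0.05, 0.10, 0.10],  # true class 1 is ranked first
    [0.30, 0.25, 0.20, 0.10, 0.10, 0.05],  # true class 2 is ranked third
    [0.04, 0.06, 0.10, 0.10, 0.20, 0.50],  # true class 0 is ranked last
    [0.70, 0.10, 0.05, 0.05, 0.05, 0.05],  # true class 0 is ranked first
]
labels = [1, 2, 0, 0]

top1 = topk_error(scores, labels, k=1)
top5 = topk_error(scores, labels, k=5)
print(top1, top5)
```

Top-5 Error is never larger than Top-1 Error, because every Top-1 hit is also a Top-5 hit.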

Performance validation of the E-FOMM model

The proposed E-FOMM model is compared with the FOMM, X2Face49, and AniPortrait50 models. The results on the TaiChiHD dataset are shown in Fig. 7.

Figure 7 suggests that on the TaiChiHD dataset, the E-FOMM model outperforms the other models across all four evaluation metrics. Specifically, the E-FOMM model achieves \({L}_{1}\) error, AKD, MKR, and AED values of 0.058, 5.84, 0.023, and 0.170, respectively. Compared with AniPortrait, the best-performing of the other models, the E-FOMM model reduces the \({L}_{1}\) error, AKD, MKR, and AED by 4.92%, 4.49%, 25.81%, and 13.48%, respectively. This indicates that the E-FOMM model exhibits lower pixel-level differences, greater accuracy in pose reconstruction, clear advantages in keypoint detection integrity and accuracy, and better fidelity in reconstructing image identity, so the images it generates are more similar to real images. Figure 8 presents the results of the model performance comparison on the VoxCeleb1 dataset.

Figure 8 suggests that on the VoxCeleb1 dataset, the E-FOMM model also outperforms the other models. Compared with AniPortrait, the best-performing of the other models, the E-FOMM model reduces the \({L}_{1}\) error, AKD, and AED by 6.52%, 2.76%, and 5.11%, respectively. Overall, by introducing the attention module and the repainting image repair module, the E-FOMM model effectively improves the various image generation metrics and handles complex image backgrounds and fine details better.
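To make the reconstruction metrics concrete, the sketch below computes the \({L}_{1}\) error, AKD, and MKR on toy data, using the definitions commonly adopted in image animation benchmarks: mean absolute pixel difference, mean distance between matched keypoints, and the share of keypoints detected in the ground-truth frame but not in the generated one. It is an illustration under those assumptions, not the evaluation pipeline used here. The function names are hypothetical, the keypoints would normally come from an external pretrained detector, and AED is omitted because it requires an identity embedding network.

```python
import math


def l1_error(generated, target):
    """Mean absolute pixel difference between two equally sized flat images."""
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)


def akd_and_mkr(gen_kps, gt_kps):
    """Average keypoint distance and missing keypoint rate.

    Each keypoint is an (x, y) tuple, or None when the detector found nothing.
    """
    # Only keypoints that exist in the ground-truth frame are considered.
    in_gt = [(g, t) for g, t in zip(gen_kps, gt_kps) if t is not None]
    matched = [(g, t) for g, t in in_gt if g is not None]
    akd = sum(math.dist(g, t) for g, t in matched) / len(matched)
    mkr = (len(in_gt) - len(matched)) / len(in_gt)
    return akd, mkr


# Toy data (illustrative only).
generated = [0.2, 0.5, 0.9, 0.4]
target = [0.1, 0.5, 1.0, 0.6]
gt_kps = [(0, 0), (10, 10), (20, 5), (7, 7)]
gen_kps = [(3, 4), (10, 10), None, (7, 13)]

l1 = l1_error(generated, target)
akd, mkr = akd_and_mkr(gen_kps, gt_kps)
print(l1, akd, mkr)
```

In this toy case one of four ground-truth keypoints is missing from the generated frame, and the three matched keypoints lie 5, 0, and 6 pixels from their targets.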

Comparison of generated image quality

The quality of the images generated by the four models on the VoxCeleb1 and TaiChiHD datasets is compared. Figure 9 shows the results.

Figure 9 shows that on the VoxCeleb1 dataset, the PSNR and SSIM values of the E-FOMM model are 29.76 dB and 0.897, respectively, and on the TaiChiHD dataset, they are 32.45 dB and 0.912, both of which are higher than those of other models. This indicates that the quality of the generated images in terms of pixel-level accuracy, structure, and perceptual quality is superior. Compared to other models, the E-FOMM model shows a minimum improvement of 1.11 dB in PSNR and 0.014 in SSIM. Table 2 displays the results of LPIPS and NeRF-NQA.
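As a reminder of what the PSNR figures measure, PSNR is computed from the mean squared error (MSE) between a reference image and a generated image as \(10\log_{10}(\mathrm{MAX}^{2}/\mathrm{MSE})\). The sketch below is a minimal pure-Python version for flat pixel sequences. The pixel values are made up and the helper name `psnr` is hypothetical. SSIM is not shown because it requires windowed local statistics.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """PSNR in dB between two equally sized flat pixel sequences."""
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 8-pixel "images" (illustrative only).
reference = [52, 55, 61, 59, 79, 61, 76, 61]
generated = [54, 55, 60, 59, 77, 62, 76, 63]
value = psnr(reference, generated)

# For a single image pair, a gain of d dB corresponds to the MSE shrinking
# by a factor of 10 ** (d / 10).
mse_ratio = 10 ** (1.11 / 10)
print(round(value, 2), round(mse_ratio, 3))
```

Under this relation, a 1.11 dB gain on one image pair corresponds to an MSE roughly 1.29 times smaller. Because the reported values are dataset averages, this conversion is only an approximate guide to their magnitude.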

Table 2 suggests that the LPIPS value of the E-FOMM model is lower than that of other models on both the VoxCeleb1 and TaiChiHD datasets, indicating that the images generated by E-FOMM have better perceptual similarity in terms of quality. In particular, on the TaiChiHD dataset, E-FOMM shows significant advantages, demonstrating its superiority in handling complex human movements and details. At the same time, the E-FOMM model’s NeRF-NQA scores are higher than those of FOMM, X2Face, and AniPortrait on both datasets, further suggesting that the images generated by E-FOMM are more visually appealing and rich in detail. Especially on the TaiChiHD dataset, E-FOMM shows the best performance in handling complex dynamic backgrounds and details. In conclusion, the E-FOMM model outperforms traditional models like FOMM, X2Face, and AniPortrait on both LPIPS and NeRF-NQA perceptual quality evaluation metrics, indicating significant improvements in the generation of animated images, particularly in terms of details, visual consistency, and perceptual quality. These results further validate the advantages of E-FOMM in generating high-quality animated character images. The animation quality generated by E-FOMM and FOMM models is compared.

The visual comparison of the images generated by the two models illustrates the performance differences between E-FOMM and FOMM in animation image generation quality. On the TaiChiHD dataset, when the character undergoes large posture changes, the FOMM model struggles to accurately capture and restore key posture information, leading to distorted or even incorrect character poses in the generated frames. Particularly during body turns, FOMM may even show the character facing the wrong direction. On the VoxCeleb1 dataset, FOMM also fails to sufficiently capture the features of the source frame when handling large face rotations, resulting in incorrect eye orientation and missing details in the reconstructed image. In contrast, E-FOMM, by introducing the improved attention mechanism, effectively enhances the accuracy of optical flow field prediction, ensuring that the motion trajectory of both the character’s face and body in the driving frame is more consistent with the source frame. Additionally, the multi-scale occlusion maps generated by the repainting image repair module further improve the model’s ability to handle complex postures and occluded areas, leading to more refined image restoration. Overall, E-FOMM generates better results than the original FOMM model on both datasets, demonstrating higher posture reconstruction accuracy and more stable image consistency. This verifies the effectiveness of the proposed method in animation generation tasks.

Although the E-FOMM model demonstrates strong image generation capabilities in most scenarios, there are still some failure cases under extreme conditions. In the TaiChiHD dataset, when the driving frame contains rapid and intense movements (such as high leg lifts or fast turns), the model struggles to accurately model complex body displacement relationships, resulting in blurred body edges or limb misalignment in the generated frames. This issue mainly arises from the instability of key point distribution during intense movements, and the decline in optical flow field prediction accuracy in fast-changing areas. In the VoxCeleb1 dataset, when the face undergoes large-angle rotations (such as turning from the front to the back) or is partially occluded by objects (such as hands or microphones), the reconstructed facial contours may exhibit significant deformation, and even facial features may be lost. While the attention mechanism introduced can mitigate the impact of background interference to some extent, the model still struggles to accurately restore the original image details in areas with severe occlusion or information loss. Overall, the current model still faces challenges in scenarios with severe occlusion, intense movements, or significant structural information loss. Future improvements could focus on incorporating stronger global modeling mechanisms or integrating temporal information and multi-frame fusion strategies to further enhance the robustness and generalization ability of the model.

Ablation experiment

To verify the impact of the number of key points on animation generation accuracy, structural restoration, and other metrics, four different key point settings (5, 10, 15, and 20) are selected for comparison on the TaiChiHD dataset. The results are shown in Table 3.

Table 3 suggests that when the number of key points is set to 10, the model achieves optimal performance across the four structural and pose evaluation metrics. In terms of image quality evaluation metrics, the setting of 10 key points also results in the highest PSNR and SSIM values. This indicates that the images generated by the model at this point have higher fidelity both at the pixel and structural levels. Too few key points lead to incomplete motion modeling and larger image errors, while too many key points, although capturing more detailed local movements, introduce redundancy and noise, negatively affecting overall reconstruction. Therefore, setting the number of key points to 10 is the most suitable for this model, as it balances both generation quality and pose reconstruction accuracy.

To further analyze the benefits brought by the proposed attention module and the repainting image repair module, a second ablation experiment is conducted. The ablation results on the TaiChiHD dataset with 10 key points are shown in Fig. 10.

Figure 10 shows that the proposed E-FOMM model outperforms the other comparison models across all metrics, especially in terms of \({L}_{1}\) error and AKD, with values of 0.058 and 5.84. These are significantly better than the original FOMM’s 0.077 and 10.8. Compared with models that incorporate only the CBAM module or only the optimized attention module, E-FOMM further enhances generation accuracy and pose reconstruction ability. This indicates that the attention mechanism and the repainting image repair module both play a positive role in improving model performance. Additionally, although the FOMM + CBAM + repainting image repair module configuration improves on FOMM, it still falls short of E-FOMM. This suggests that the proposed attention module and repair module work together more effectively, enabling better modeling of human actions and visual details. Overall, the collaboration between the two modules significantly improves the structural and motion performance of the generated images, verifying the rationality and effectiveness of their design.
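The size of the gap between FOMM and E-FOMM can be expressed as a relative reduction, computed directly from the \({L}_{1}\) error and AKD values quoted above. The helper name `relative_reduction` is hypothetical; the four input numbers are the ones stated in the text.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


l1_drop = relative_reduction(0.077, 0.058)  # FOMM vs. E-FOMM, L1 error
akd_drop = relative_reduction(10.8, 5.84)   # FOMM vs. E-FOMM, AKD
print(f"L1 error: {l1_drop:.2f}%  AKD: {akd_drop:.2f}%")
```

On these figures, E-FOMM lowers the \({L}_{1}\) error by roughly a quarter and the AKD by a little under half relative to the original FOMM.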

To evaluate the practical performance of the proposed E-FOMM model, inference time and memory consumption tests are conducted under the same hardware conditions for different models. The test dataset used is TaiChiHD, and the results are shown in Table 4.

Table 4 shows that E-FOMM incurs a slight increase in both inference time (100 ms) and GPU memory consumption (2550 MB) compared with FOMM. Although adding the attention module increases both inference time and memory consumption, E-FOMM still sustains about 10 frames per second (FPS). This indicates that the model can still maintain a smooth generation speed in real-time application scenarios. In contrast, the original FOMM model has the shortest inference time (85 ms) and the smallest memory requirement (2300 MB), generating approximately 11.76 FPS. However, despite its higher generation speed, FOMM may lack sufficient precision and detail in more complex animation generation scenes. The FOMM + Transformer model requires significantly more computational resources, with an inference time of 250 ms and GPU memory consumption reaching 3500 MB, generating only about 4 FPS. This result suggests that, while the Transformer model may have advantages in feature modeling, its computational demands pose a significant performance bottleneck for real-time animation generation tasks.
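The frame rates quoted above follow from the per-frame inference times, since FPS is 1000 divided by the latency in milliseconds. The short sketch below reproduces that arithmetic and also expresses E-FOMM's overhead relative to FOMM. The dictionary and function names are hypothetical; the latency and memory values are those stated in the text.

```python
def fps_from_latency(latency_ms):
    """Frames per second for a given per-frame inference time in milliseconds."""
    return 1000.0 / latency_ms


latency_ms = {"FOMM": 85, "E-FOMM": 100, "FOMM + Transformer": 250}
memory_mb = {"FOMM": 2300, "E-FOMM": 2550, "FOMM + Transformer": 3500}

fps = {name: round(fps_from_latency(ms), 2) for name, ms in latency_ms.items()}

# Overhead of E-FOMM relative to the original FOMM, in percent.
time_overhead = 100.0 * (latency_ms["E-FOMM"] - latency_ms["FOMM"]) / latency_ms["FOMM"]
mem_overhead = 100.0 * (memory_mb["E-FOMM"] - memory_mb["FOMM"]) / memory_mb["FOMM"]
print(fps, round(time_overhead, 2), round(mem_overhead, 2))
```

On these numbers, E-FOMM costs roughly 18% more time per frame and roughly 11% more GPU memory than FOMM.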

Discussion

This work significantly improves the performance of the FOMM model in image generation tasks by introducing and optimizing the attention module. The results indicate that, with the optimized attention module, E-FOMM performs well in fine action capture and image quality enhancement.

The effectiveness of the CBAM module is also supported by several related studies. Lu and Hu introduced a novel network based on self-attention mechanisms. They embedded CBAM into dense blocks and constructed spatial modules to improve visual quality and quantitative evaluation in super-resolution tasks51. Their findings showed that embedding CBAM into the network could effectively improve the details and structure of images. Similarly, this work enhances the details and quality of generated images by introducing an optimized attention module into the FOMM model, demonstrating the versatility and effectiveness of the attention module across different tasks. Niu et al. proposed a method using CBAM for simultaneous image super-resolution and frontal face generation. By improving both the frontal face generation module and the super-resolution module, they effectively addressed low-resolution and large-pose frontal face synthesis issues52. Their study further supports the advantages of the attention module in handling complex image backgrounds and capturing fine details. Here, the E-FOMM model also performs well when handling intricate actions and backgrounds, indicating that the attention module effectively enhances the model’s ability to handle diverse and high-complexity image tasks. Additionally, Cao et al. incorporated CBAM into Visual Geometry Group network layers, enhancing facial expression recognition accuracy and stability, and thereby improving human–computer interaction experiences53. They emphasized the role of CBAM in enhancing feature representation and model performance. Consistent with that finding, E-FOMM demonstrates the potential and advantages of the attention module in generation tasks by improving various metrics of image generation. This not only improves the quality of the generated images but also enhances the model’s ability to handle complex backgrounds and intricate action details.

In addition to the implicit attention mechanism used, recent years have seen the widespread application of attention and saliency in multimedia processing, particularly in tasks such as audio–video quality assessment, image generation, and processing. For instance, a study54 proposed a multimodal saliency-based model for audio–video matching, while other research explored attention-guided neural networks for full-reference and no-reference audio–video quality assessment55. Moreover, subjective and objective quality evaluation studies have shown that saliency and attention mechanisms can effectively improve the accuracy of quality assessment in multimodal signal processing56. These related studies provide important theoretical support for this work, demonstrating that attention mechanisms have significant advantages in improving generated image quality, accurately capturing details, and optimizing visual performance. Therefore, the E-FOMM model adopts an improved attention mechanism to optimize image generation effects without adding excessive computational complexity, especially when handling complex backgrounds and detail-rich animation scenes.

In image and video quality assessment, quality control has always been a critical issue. In recent years, many no-reference image quality assessment methods have been proposed and widely applied in various visual processing tasks. For example, researchers have proposed blind image quality estimation through distortion aggravation57 and quality assessment methods based on pseudo-reference images58, which can evaluate image quality in the absence of high-quality reference images. Additionally, research has explored subjective quality information in image quality assessment59 and proposed unified no-reference quality assessment methods applicable to different image types, such as compressed natural, graphic, and screen-content images60. For dehazing, relevant studies have also addressed the objective quality evaluation of dehazed images61 and the quality evaluation of dehazing methods using synthetic hazy images62. These studies provide important references for image generation and optimization, helping to further improve the quality of generated images.

Recent advancements in Transformers have made significant progress in image generation and offered new solutions for complex actions and long-distance dependencies through their global modeling capabilities. However, the large model size and high training costs remain considerable challenges. In contrast, E-FOMM, with the help of CBAM and the local redrawing mechanism, is better suited for animation production processes that demand real-time performance and deployment efficiency. As a result, E-FOMM achieves excellent image generation quality and structural restoration ability while maintaining low computational costs, demonstrating strong engineering adaptability.

In summary, the results of this work indicate that the introduction of the CBAM module significantly enhances the FOMM-CBAM model in terms of image quality, detail, and realism. These findings help people better understand the role and advantages of CBAM in image generation tasks and further advance research in complex image processing and generation. By comparing existing studies, the broad applicability and effectiveness of the CBAM module across various image generation tasks can be confirmed, providing important theoretical support and a practical basis for future research in related fields.

Conclusion

Research contribution

This work analyzes the FOMM method for generating animated images and optimizes it by incorporating a simple and easily integrable attention module. Additionally, a repainting image repair module is introduced to construct the E-FOMM model for generating character animation images, thus enhancing the visual communication effect of animated films. The following conclusions are drawn based on experimental verification of the image quality generated by the E-FOMM model:

1. The Top-1 Error and Top-5 Error of ResNet, WideResNet, and ResNeXt with the attention module are all lower than those of the corresponding models with the SENet module. This demonstrates that the optimized attention module is more effective than SENet at improving the network’s ability to focus on important features and extract information.

2. The E-FOMM model outperforms traditional models in terms of the quality of generated character images, keypoint detection, and pose reconstruction accuracy. Moreover, ablation experiments also confirm the effectiveness of the attention module and the repainting image repair module in improving the performance of image generation tasks.

3. The E-FOMM model achieves higher PSNR and SSIM values than the other models on both datasets, indicating that its generated images are superior in terms of pixel-level quality, structural integrity, and perceptual quality.

Future works and research limitations

While this work has achieved significant results in enhancing FOMM character image generation quality, there are still some limitations. The performance enhancement of the attention module may be limited in certain specific scenarios due to the nature of the dataset. In particular, when handling images with extreme poses or complex backgrounds, there may still be room for improvement. Future research could further explore the performance of attention modules in a broader range of application scenarios, and consider combining them with other advanced attention mechanisms to achieve higher-quality image generation. Additionally, integrating multimodal data and cutting-edge technologies such as reinforcement learning could bring further breakthroughs to animated film image generation.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Zhongbin Huang on reasonable request via e-mail t283061473@163.com.

References

Yusuf, A. & Noor, N. M. Research trends on learning computer programming with program animation: A systematic mapping study. Comput. Appl. Eng. Educ. 31(6), 1552–1582 (2023).

Abidin, D. et al. Development of android-based interactive mobile learning to learn 2D animation practice. J. Sci. 12(01), 138–142 (2023).

Reddy, V. S., Kathiravan, M. & Reddy, V. L. Revolutionizing animation: Unleashing the power of artificial intelligence for cutting-edge visual effects in films. Soft. Comput. 28(1), 749–763 (2024).

Celard, P. et al. A survey on deep learning applied to medical images: From simple artificial neural networks to generative models. Neural Comput. Appl. 35(3), 2291–2323 (2023).

Ghandi, T., Pourreza, H. & Mahyar, H. Deep learning approaches on image captioning: A review. ACM Comput. Surv. 56(3), 1–39 (2023).

Ning, X. et al. Multi-view frontal face image generation: A survey. Concurr. Comput. Pract. Exp. 35(18), e6147 (2023).

Wibowo, M. C., Nugroho, S. & Wibowo, A. The use of motion capture technology in 3D animation. Int. J. Comput. Digit. Syst. 15(1), 975–987 (2024).

Zhou, J. et al. ULME-GAN: A generative adversarial network for micro-expression sequence generation. Appl. Intell. 54(1), 490–502 (2024).

Rashid, M. M. et al. High-fidelity facial expression transfer using part-based local–global conditional gans. Vis. Comput. 39(8), 3635–3646 (2023).

Ijaz, A. et al. Modality specific CBAM-VGGNet model for the classification of breast histopathology images via transfer learning. IEEE Access 11, 15750–15762 (2023).

Muhtar, Y. et al. Fc-resnet: A multilingual handwritten signature verification model using an improved resnet with cbam. Appl. Sci. 13(14), 8022 (2023).

Mun, J. et al. Design and implementation of defect detection system based on YOLOv5-CBAM for lead tabs in secondary battery manufacturing. Processes 11(9), 2751 (2023).

Xu, M. et al. A comprehensive survey of image augmentation techniques for deep learning. Pattern Recogn. 137, 109347 (2023).

Jiang, Y. et al. Text2human: Text-driven controllable human image generation. ACM Trans. Graph. 41(4), 1–11 (2022).

Zhang, B., Gu, S., Zhang, B., et al. Styleswin: Transformer-based gan for high-resolution image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 11304–11314 (2022).

Ding, M. et al. Cogview: Mastering text-to-image generation via transformers. Adv. Neural. Inf. Process. Syst. 34, 19822–19835 (2021).

Min, X. et al. Perceptual video quality assessment: A survey. Sci. China Inf. Sci. 67(11), 211301 (2024).

Zhai, G. & Min, X. Perceptual image quality assessment: A survey. Sci. China Inf. Sci. 63, 1–52 (2020).

Min, X. et al. Screen content quality assessment: Overview, benchmark, and beyond. ACM Comput. Surv. 54(9), 1–36 (2021).

Oshiba, J., Iwata, M. & Kise, K. Face image generation of anime characters using an advanced first order motion model with facial landmarks. IEICE Trans. Inf. Syst. 106(1), 22–30 (2023).

Xu, Y. et al. Improved first-order motion model of image animation with enhanced dense motion and repair ability. Appl. Sci. 13(7), 4137 (2023).

Zhang, Y., Zhao, Y., Wen, Y., et al. Facial prior based first order motion model for micro-expression generation. In Proceedings of the 29th ACM International Conference on Multimedia 4755–4759 (2021).

Kodama, M. & Saitoh, T. Replacing speaker-independent recognition task with speaker-dependent task for lip-reading using first order motion model. In Thirteenth International Conference on Graphics and Image Processing (ICGIP 2021), vol. 12083, 652–659. (SPIE, 2022).

Mallya, A., Wang, T. C. & Liu, M. Y. Implicit warping for animation with image sets. Adv. Neural. Inf. Process. Syst. 35, 22438–22450 (2022).

Zeng, B. et al. FNeVR: Neural volume rendering for face animation. Adv. Neural. Inf. Process. Syst. 35, 22451–22462 (2022).

Sun, K., Li, X. & Zhao, Y. A keypoints-motion-based landmark transfer method for face reenactment. J. Vis. Commun. Image Represent. 100, 104138 (2024).

Hatakeyama, T., Furuta, R. & Sato, Y. Simultaneous control of head pose and expressions in 3D facial keypoint-based GAN. Multimed. Tools Appl. 8, 1–18 (2024).

Guo, C. et al. Human image animation via semantic guidance. Comput. Graph. 118, 102–110 (2024).

Fan, X., Shahid, A. R. & Yan, H. Edge-aware motion based facial micro-expression generation with attention mechanism. Pattern Recogn. Lett. 162, 97–104 (2022).

An, C. H. & Choi, H. C. CaPTURe: Cartoon pose transfer using reverse attention. Neurocomputing 554, 126619 (2023).

Lu, J. & Zheng, Q. Ultra-lightweight face animation method for ultra-low bitrate video conferencing. ZTE Commun. 21(1), 64 (2023).

Suo, Y. et al. Jointly harnessing prior structures and temporal consistency for sign language video generation. ACM Trans. Multimed. Comput. Commun. Appl. 20(6), 1–18 (2024).

Alnaim, N. M. et al. DFFMD: A deepfake face mask dataset for infectious disease era with deepfake detection algorithms. IEEE Access 11, 16711–16722 (2023).

Kleinlogel, E. P. et al. From low invasiveness to high control: How artificial intelligence allows to generate a large pool of standardized corpora at a lesser cost. Front. Comput. Sci. 5, 1069352 (2023).

Wang, S. H. et al. AVNC: Attention-based VGG-style network for COVID-19 diagnosis by CBAM. IEEE Sens. J. 22(18), 17431–17438 (2021).

Ma, K., Chang’an, A. Z. & Yang, F. Multi-classification of arrhythmias using ResNet with CBAM on CWGAN-GP augmented ECG Gramian Angular Summation Field. Biomed. Signal Process. Control 77, 103684 (2022).

Bhuyan, P., Singh, P. K. & Das, S. K. Res4net-CBAM: A deep cnn with convolution block attention module for tea leaf disease diagnosis. Multimed. Tools Appl. 83(16), 48925–48947 (2024).

Barroso-Laguna, A. & Mikolajczyk, K. Key net: Keypoint detection by handcrafted and learned cnn filters revisited. IEEE Trans. Pattern Anal. Mach. Intell. 45(1), 698–711 (2022).

Solimun, S. & Fernades, A. A. R. Cluster analysis on various cluster validity indexes with average linkage method and euclidean distance (study on compliant paying behavior of bank X customers in Indonesia 2021). Math. Stat. 10(4), 747–753 (2022).

Ding, K. et al. Image quality assessment: Unifying structure and texture similarity. IEEE Trans. Pattern Anal. Mach. Intell. 44(5), 2567–2581 (2020).

Zhang, R., Isola, P., Efros, A. A., et al. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 586–595 (2018).

Qu, Q., Liang, H., Chen, X., et al. Nerf-nqa: No-reference quality assessment for scenes generated by nerf and neural view synthesis methods. IEEE Trans. Visual. Comput. Graph. (2024).

Nagrani, A. et al. Voxceleb: Large-scale speaker verification in the wild. Comput. Speech Lang. 60, 101027 (2020).

Zhang, Y. et al. Self-supervised part segmentation via motion imitation. Image Vis. Comput. 120, 104393 (2022).

Salman, H. et al. Do adversarially robust imagenet models transfer better? Adv. Neural. Inf. Process. Syst. 33, 3533–3545 (2020).

McNeely-White, D., Beveridge, J. R. & Draper, B. A. Inception and ResNet features are (almost) equivalent. Cogn. Syst. Res. 59, 312–318 (2020).

Barrett, D., Hill, F., Santoro, A., et al. Measuring abstract reasoning in neural networks. In International Conference on Machine Learning 511–520 (PMLR, 2018).

Balnarsaiah, B. et al. Parkinson’s disease detection using modified ResNeXt deep learning model from brain MRI images. Soft. Comput. 27(16), 11905–11914 (2023).

Wu, X. et al. F3A-GAN: Facial flow for face animation with generative adversarial networks. IEEE Trans. Image Process. 30, 8658–8670 (2021).

Wei, H., Yang, Z. & Wang, Z. Aniportrait: Audio-driven synthesis of photorealistic portrait animation. arXiv preprint arXiv:2403.17694 (2024).

Lu, E. & Hu, X. Image super-resolution via channel attention and spatial attention. Appl. Intell. 52(2), 2260–2268 (2022).

Niu, C., Nan, F. & Wang, X. A super resolution frontal face generation model based on 3DDFA and CBAM. Displays 69, 102043 (2021).

Cao, W. et al. Facial expression recognition via a CBAM embedded network. Proc. Comput. Sci. 174, 463–477 (2020).

Min, X. et al. A multimodal saliency model for videos with high audio-visual correspondence. IEEE Trans. Image Process. 29, 3805–3819 (2020).

Cao, Y. et al. Attention-guided neural networks for full-reference and no-reference audio-visual quality assessment. IEEE Trans. Image Process. 32, 1882–1896 (2023).

Min, X. et al. Study of subjective and objective quality assessment of audio-visual signals. IEEE Trans. Image Process. 29, 6054–6068 (2020).

Min, X. et al. Blind image quality estimation via distortion aggravation. IEEE Trans. Broadcast. 64(2), 508–517 (2018).

Min, X. et al. Blind quality assessment based on pseudo-reference image. IEEE Trans. Multimed. 20(8), 2049–2062 (2017).

Min, X. et al. Exploring rich subjective quality information for image quality assessment in the wild. IEEE Trans. Circuits Syst. Video Technol. 6, 25 (2025).

Min, X. et al. Unified blind quality assessment of compressed natural, graphic, and screen content images. IEEE Trans. Image Process. 26(11), 5462–5474 (2017).

Min, X. et al. Objective quality evaluation of dehazed images. IEEE Trans. Intell. Transp. Syst. 20(8), 2879–2892 (2018).

Min, X. et al. Quality evaluation of image dehazing methods using synthetic hazy images. IEEE Trans. Multimed. 21(9), 2319–2333 (2019).

Author information

Contributions

Weiran Cao: Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing (original draft preparation). Zhongbin Huang: Writing (review and editing), visualization, supervision, project administration, funding acquisition.

Ethics declarations

Ethics approval

The studies involving human participants were reviewed and approved by the Ethics Committee of the School of Art and Archaeology, Hangzhou City University (Approval Number: 2022.65412033). The participants provided written informed consent to participate in this study. All methods were performed in accordance with relevant guidelines and regulations.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Cao, W., Huang, Z. Character generation and visual quality enhancement in animated films using deep learning. Sci Rep 15, 23409 (2025). https://doi.org/10.1038/s41598-025-07442-3

DOI: https://doi.org/10.1038/s41598-025-07442-3