Abstract

Türkiye is highly susceptible to earthquakes due to its active tectonic structure and the presence of major fault lines. The accurate estimation of earthquake magnitudes is essential for effective risk mitigation and structural resilience. This study proposes an integrated methodology combining clustering, statistical modeling, and deep learning techniques for the analysis and forecasting of earthquake magnitudes. Initially, earthquakes are classified into three distinct regions using the Fuzzy C-Means (FCM) clustering algorithm. For each region, statistical distributions are applied to characterize magnitude behavior. Subsequently, the Long Short-Term Memory (LSTM) model is used to predict future earthquake magnitudes. The joint application of these three methods provides a comprehensive framework for regional seismic analysis. The findings suggest that the Gumbel distribution offers the best fit for modeling return periods of earthquakes with magnitudes greater than Mwg 5.18, where Mwg denotes the global moment magnitude. Estimated return periods range from 2.56 to 3.63 years in the first region, 2.53 to 3.55 years in the second region, and 2.71–4.22 years in the third region, based on probability levels between 25 and 95%. The LSTM model forecasts that the third region is likely to experience relatively stronger seismic activity, with maximum magnitudes ranging from 2.4 to 6.5 between October 2021 and March 2029. For the same period, expected magnitudes in the first and second regions range from 2.0 to 5.7. These forecasts are supported by model performance metrics that confirm the projected magnitudes are within an acceptable and reliable range of accuracy for medium-term seismic forecasting.

Similar content being viewed by others

Introduction

Earthquakes are powerful natural disasters that can cause severe destruction, affecting lives and property worldwide. Analyzing the impacts of earthquakes is crucial, as seismic events can damage infrastructure and lead to long-term economic consequences1. Türkiye faces significant earthquake risks, especially in the northwestern and western regions, where both the North Anatolian Fault Zone (NAFZ) and the Western Anatolian Fault Zone (WAFZ) contribute to destructive seismic activity2. The westward movement of the Anatolian plate increases the earthquake threat in cities near the NAFZ and active fault lines in the Aegean region. Istanbul, a major city located on the NAFZ, experienced the devastating August 17, 1999 earthquake (magnitude 7.28), which resulted in over 17,000 deaths and widespread damage3. Southern cities such as Düzce and Bursa are also vulnerable, as demonstrated by the November 12, 1999 Düzce earthquake (magnitude 7.06)4. Provinces like Balıkesir and Çanakkale were affected by the Yenice-Gönen earthquake (magnitude 7.28) in 1953. The Aegean city of İzmir has experienced several strong earthquakes, including the June 6, 1970 event (magnitude 7.06), which resulted in 1,086 deaths, and the October 30, 2020 earthquake (magnitude 6.73), which triggered aftershocks and a tsunami5. These events underscore the ongoing seismic threat and the urgent need for preparedness in these high-risk areas.

Western Anatolia is one of the most active seismotectonic regions in Türkiye, characterized by frequent historical and recent earthquakes. This high-risk nature of the region necessitates detailed and multidisciplinary studies, particularly concerning the safety of settlements and the planning of engineering structures. In recent years, many studies have contributed to a better understanding of ground motion behavior and to improving the accuracy of earthquake impact predictions. For instance, Bayrak and Çoban6 examined ground motion parameters and Coulomb stress changes related to the 2019 Bozkurt (Denizli) earthquake, offering insights into seismic hazard. Paradisopoulou et al.7 used stress transfer models to explain the timing and spatial distribution of large earthquakes in Western Türkiye. Bayrak and Bayrak8 assessed seismic hazard across Western Anatolia by combining historical and instrumental data. Çoban and Sayıl9 estimated the probabilities of major potential earthquakes in the Hellenic Arc using statistical distribution models. Tepe et al.10 prepared a new local and updated historical earthquake catalog for Izmir and its immediate surroundings by examining many original sources, records and old international earthquake catalogs in addition to the existing national catalogs used in seismicity studies in Türkiye and determining new geological, seismological and environmental data. Uzelli et al.11 investigated changes in groundwater levels following the 2020 Samos earthquake in İzmir, highlighting the relationship between earthquakes and hydrogeology. Mozafari et al.12 identified seismic activity over the past 16,000 years through 36Cl dating of carbonate fault surfaces. Finally, Ermiş and Curebal13, focused on seismic activity prediction by applying decision tree models by examining earthquake records in the Izmir province in western Türkiye. These studies clearly emphasize the importance of closely monitoring Western Anatolia in terms of seismicity.

Earthquake prediction remains one of the most challenging problems in geosciences and has been addressed from various theoretical and practical perspectives. Classical approaches include deterministic models that attempt to forecast earthquakes based on stress accumulation, fault interactions, and physical precursors14,15. However, such models are often limited by the complex and chaotic nature of tectonic processes. As an alternative, statistical and probabilistic models such as Poisson, renewal, and time-dependent hazard models have been widely adopted16,17,18. These models aim to describe the frequency and magnitude of earthquakes over time and are typically used in seismic hazard maps and risk assessments. Despite their usefulness, they often assume spatial and temporal stationarity and struggle to capture evolving seismic behaviors in heterogeneous regions like Western Anatolia. To overcome these limitations, recent studies have increasingly explored the potential of machine learning (ML) and deep learning (DL) methods in earthquake prediction. Algorithms such as Support Vector Machines (SVM), Random Forests, and especially neural networks like Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM) networks have demonstrated promising results in modeling nonlinear temporal dependencies and classifying seismic patterns19,20,21. For instance, Wang et al.22 used LSTM models to predict magnitude time series in China, while Aryal et al.23 applied LSTM to forecast seismic activity in Nepal and its surrounding regions. Other researchers24,25 combined LSTM with optimization techniques such as genetic algorithms or attention mechanisms to improve forecast accuracy. In parallel, clustering and unsupervised learning techniques have been employed to identify latent structures in earthquake catalogs. These include hierarchical clustering, k-means, and fuzzy clustering methods such as Fuzzy c-Means (FCM)26,27, which allow for the detection of overlapping seismic zones an important consideration in tectonically complex regions.

Statistical analyses are also used to better understand how and why earthquakes occur16,17,28. Cluster analysis aims to identify distinct groups within a dataset, where observations in the same cluster share similar characteristics. In seismology, cluster analysis is often applied to classify earthquakes based on features such as magnitude and depth26,27. The FCM algorithm allows data points to belong to multiple clusters with varying degrees of membership. In this study, the FCM method is preferred over traditional clustering techniques due to its advantages in handling the complex nature of earthquake data, which often involves uncertainty and lacks clearly defined boundaries. This method contributes significantly to earthquake prediction, classification, and hazard assessment by uncovering patterns and influential factors in seismic activity. In addition, statistical distributions of earthquake magnitudes have been widely used to evaluate seismicity across different regions. The Gumbel distribution is a commonly applied model in earthquake studies, as it focuses on extreme magnitudes to assess seismic risk. Although Kaila and Narain29 criticized this approach for neglecting smaller events, Bath30 argued that focusing on larger earthquakes is advantageous, as their magnitudes are more accurately recorded and have greater impact. Compared to other models such as the exponential and Weibull distributions, the Gumbel distribution is particularly effective for analyzing extreme seismic events.

The LSTM method is distinguished by its ability to effectively handle the irregular structure of earthquake magnitudes through hyper parameter tuning and to manage complex, multidimensional data. These features facilitate efficient analysis of earthquake data, improve the accuracy of predictions, and support the advancement of earthquake risk management. Due to its strong learning capacity, LSTM can simultaneously learn complex patterns and temporal dependencies. This capability makes it widely used for modeling multidimensional and complex processes in earthquake data. Wang et al.22 utilized LSTM networks to estimate earthquake magnitudes, demonstrating the effectiveness of this method in capturing the intricate dynamics of complex time series data. Jena et al.31 applied the LSTM model in combination with Geographic Information Systems (GIS) techniques to assess earthquake safety across India. In a more recent study, Berhich et al.32 used earthquake data from Japan spanning 1900 to 2021 to estimate magnitudes and compared the results with those obtained from other deep learning models. Sadhukhan et al.33 examined LSTM, bidirectional LSTM (Bi-LSTM), and a converter model to forecast earthquake magnitudes in three different seismic regions. Aryal et al.34 employed the LSTM model to predict earthquake magnitudes in Nepal and its neighboring regions and found that the model effectively captured overall seismic trends and maintained consistent performance over time. Ye et al.24 developed an earthquake forecasting system that combines the Elite Genetic Algorithm, used for feature selection, with the LSTM model, which captures the impact of past events on future predictions. The study concluded that this combined system improved prediction performance compared to other models and the standard LSTM approach. As demonstrated by these studies, LSTM is selected as the preferred deep learning method in the present study, owing to its proven success in numerous applications, particularly in earthquake magnitude estimation.

Despite these developments, comprehensive hybrid frameworks that simultaneously incorporate spatial clustering, magnitude modeling using extreme value distributions, and deep learning–based forecasting are still scarce in the literature. The present study aims to fill this gap by proposing an integrated methodology for localized and data-driven earthquake prediction, combining unsupervised classification (FCM), extreme value analysis (Gumbel distribution), and temporal magnitude forecasting (LSTM) tailored for the Western Anatolian seismic zone. Although numerous studies have addressed the seismicity and earthquake hazard of Türkiye2,35,36,37, particularly in the Aegean and Western Anatolian regions, many rely on conventional methodologies such as tectonic zonation, Gutenberg–Richter-based recurrence models, and probabilistic seismic hazard mapping38,39. While these methods provide valuable insights, they often assume regional homogeneity and do not account for the spatial heterogeneity, nonlinear dynamics, and temporal evolution of earthquake occurrence. Moreover, traditional models rarely incorporate data-driven clustering or modern predictive techniques, limiting their capacity to identify evolving patterns in regional seismic activity. To address these limitations, the present study proposes a novel hybrid framework that integrates FCM clustering40, Gumbel-based extreme value modeling41, and LSTM neural networks42. This approach enables the objective delineation of seismic sub-regions based on observed data, the characterization of magnitude extremes for each cluster, and the forecasting of regional seismic trends based on historical magnitude patterns. By combining unsupervised learning, statistical distribution modeling, and deep learning, this study advances beyond traditional hazard assessments and contributes a flexible, adaptive, and predictive tool for localized seismic risk evaluation.

The main objective of this study is to divide the Aegean region into sub-regions based on seismic events that occurred between 1970 and 2021, to analyze the distributional characteristics of these sub-regions, and to predict the maximum earthquake magnitudes that may occur in future periods. In this context, the region is initially partitioned into three sub-regions using the FCM method, which is robust to anomalies in earthquake data. By identifying the statistical distributions and characteristics of each sub-region, the suitability of the clustering results was evaluated. Subsequently, the LSTM method, which has a strong learning and adaptation mechanism based on historical seismic data, was applied to forecast future earthquake magnitudes in each sub-region. Then, the Aegean region was successfully divided into appropriate sub-regions, and potential seismic activity was analyzed in detail for the first time using this combined methodology. The structure of the paper is presented in Fig. 1. Section "Study area" provides a detailed overview of the study area and the earthquake catalog. Section "Methodology" explains the methodology, including FCM, LSTM, and statistical distribution models. Section "Results and discussion" presents the results, and Section "Conclusion" concludes with a summary of key findings and their implications.

Study area

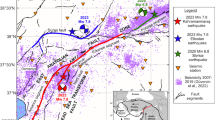

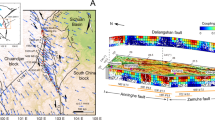

Türkiye is located at the intersection of Western Asia and Eurasia, within the seismically active Alpine-Himalayan belt43. Its complex geology and geodynamic setting give rise to numerous active fault lines44,45. The Aegean Region, situated near the Gediz Graben system, is one of the most seismically active areas in Türkiye, with a long history of frequent earthquakes2,46,47. The region’s tectonic complexity includes several distinct fault lines, and Türkiye has been classified into four neotectonic provinces by Şengör48,49 and Emre et al.50. This study focuses on earthquakes with a moment magnitude of Mwg ≥ 2.43 that occurred in the Aegean Region between 1970 and 2021. Figure 2 presents a map illustrating the active fault zones.

Study area and active fault lines within the Western Anatolian extensional regime (ArcGIS Online, (Esri, accessed 2025); https://www.arcgis.com/).

The dataset used in this study was compiled by integrating earthquake records from three major sources: the Disaster and Emergency Management Presidency Earthquake Department (AFAD-DDA), the Kandilli Observatory and Earthquake Research Institute (KOERI), and the catalog prepared by Tan51. Within the study area, these catalogs respectively documented 202,080, 13,950, and 252,594 earthquakes between 1900 and 2021. To construct a unified and comprehensive dataset, events were matched across catalogs using two criteria: (i) a time difference of less than 3 min and (ii) a spatial difference of less than 0.5 degrees in both latitude and longitude. A strong correlation was observed, particularly between the AFAD-DDA and Tan51 catalogs, especially for events with magnitudes greater than Mwg ≥ 2.43. Following the matching process, unmatched events and missing records were incorporated into the AFAD-DDA dataset, and all magnitude values were standardized using moment magnitude conversion equations to ensure consistency across sources.

To avoid statistical bias caused by dependent events such as aftershocks, foreshocks, and earthquake swarms, the compiled catalog was declustered using the Reasenberg algorithm52, which identifies and removes time–space-magnitude correlated event clusters. This procedure was implemented through the ZMAP software environment53, which is widely used in seismological research for catalog cleaning and event independence analysis. The declustering algorithm identified 5,284 dependent event clusters, resulting in the removal of 30,107 earthquakes, predominantly short-interval aftershocks. The remaining catalog consisted of 16,503 independent seismic events, spanning from January 1970 to September 2021, which formed the basis of the subsequent clustering, statistical modeling, and forecasting procedures. By removing temporally and spatially correlated events, the declustering process ensured that the dataset would represent the background seismicity of the region. This is crucial for the validity of clustering and modeling steps, as the presence of dependent events would artificially inflate seismic density in certain locations and lead to misleading interpretations of seismic hazard. The spatial distribution of moment magnitudes (Mwg) and depths (km) for the declustered dataset is presented in Fig. 3.

Spatial distributions of earthquake magnitudes (a) and depths (b) in the declustered catalog (Mwg ≥ 2.43) for the period 1970–2021 (R version 4.3.1; https://www.r-project.org/).

Various empirical relationships have been employed to convert different magnitude types to moment magnitude (Mw). In this study, the conversion formulas proposed by Kadirioğlu and Kartal54, Pınar et al.55, Tan51, and Scordilis56 were considered. The primary reason for selecting these relations is that they were derived from the same or similar earthquake catalogs used in this study (e.g.51,55) and are widely accepted for magnitude conversion in the region (e.g.56). The equations representing the original magnitude-to-moment magnitude (Mw) relationships taken from Kadirioğlu and Kartal54 and used for homogenizing the earthquake catalog are provided below:

The conversion relationships between original magnitude types and moment magnitude (Mw) proposed by Pınar et al.55 are given below:

The original magnitude-to-moment magnitude (Mw) conversion relationships proposed by Tan51 are as follows:

The original magnitude-to-moment magnitude (Mw) conversion relationships proposed by Scordilis56, and used for homogenizing the earthquake catalog, are presented below:

However, the Mw scale is available for large earthquakes (> 7.5), it has not been validated globally for magnitudes below 7.5 and is not suitable for use. In addition, the Mw scale is based on surface waves and may not be valid for all earthquake depths. To address these shortcomings, Das et al.57 introduced the Das Magnitude Scale (Mwg) using global data covering 25,708 earthquakes between 1976 and 2006. Mwg uses body wave magnitude instead of surface waves and is applicable to all depths, making it more suitable for a wider range of earthquakes. Mwg is a more accurate measure of energy release than Mw and provides a better representation of earthquake power58. Therefore, after obtaining the Mw magnitudes in the study, the seismic moment (Mo) proposed by Hanks and Kanamori59 was first calculated and then the Mwg scale was calculated to be used in the analyses throughout the study. The equations used are as follows, respectively:

To visually represent the epicentral distribution and Kernel density of the earthquakes in the declustered earthquake catalog, maps were generated using ArcGIS and are showcased in Fig. 4a,b respectively. These steps were pivotal in ensuring the compilation of a reliable and declustered earthquake catalog for the study.

Map of the epicenter distribution (a) and Kernel density (b) of the non-clustered catalog of earthquakes with Mwg ≥ 2.4 (ArcGIS; https://www.arcgis.com/)60.

Methodology

FCM algorithm

The Fuzzy C-Means (FCM) method is a widely used approach in multivariate statistical analysis for partitioning data into distinct subsets. Unlike hard clustering methods, FCM allows each data point to belong to multiple clusters with varying degrees of membership, providing a more flexible and realistic representation of the data structure. In this method, the standardized Euclidean distance is commonly used as the distance metric61. A crucial initial step in the FCM algorithm is determining the appropriate number of clusters62. Several algorithms have been proposed in the literature to identify the optimal number of clusters in FCM. Two widely used methods for this purpose are the Silhouette score and the Elbow criterion63. The Silhouette score evaluates how well each data point fits its assigned cluster by considering both cohesion and separation. A value close to 1 indicates strong clustering performance, while a value near -1 suggests poor clustering. Higher Silhouette scores reflect better model suitability for the dataset. The Elbow criterion, on the other hand, analyzes the sum of squared errors (SSE) across different values of k (number of clusters). By plotting SSE against k, the “elbow” point where the rate of decrease sharply changes helps determine the optimal number of clusters64,65. Using both the Silhouette score and the Elbow criterion, the optimal number of clusters and clustering performance can be effectively assessed. This combined approach increases the flexibility and reliability of cluster analysis in multivariate contexts. After choosing the number of clusters (\({c}_{i}, i=\text{1,2},\dots ,c),\) the cluster centers are obtained by

where N is the number of observations, m is called the turbidity coefficient that any real number greater than 1. Here, \({u}_{ij}\) represents the membership degree of \({X}_{i}\) in jth cluster and it is given by

Here, \({d}_{ij}\) is the Euclidean distance between the cluster centers and the data as \({d}_{ij}=\Vert {c}_{i}-{X}_{j}\Vert\). Then, the objective function is given by

If the amount of change is small enough, the algorithm ends. The flowchart of the FCM algorithm is presented in Fig. 5.

Flowchart of the FCM Algorithm66.

Statistical distributions

The most widely used formula for determining the magnitude-frequency relationship in earthquakes is the Gutenberg-Richter formula67. The Gutenberg-Richter formula is defined as

where N(M) is the number of earthquakes equal or larger than M magnitude. The constants (a) and (b) in the magnitude-frequency relation represent key parameters. The average yearly seismic activity index is determined by the value of (a), which is influenced by both the observation period and the level of seismic activity. Parameter (b) governs the slope of the linear function. Regional-scale estimates of the b-value typically fall within the range of 0.5 to 1.568. Besides, the average regional scale estimates of the b-value tend to hover around 169. The b-value serves as an indicator of different tectonic sources within the given area70. The a-value is directly computed as the intercept in the Gutenberg-Richter relationship. By leveraging the parameters in this formula, probabilities of earthquake occurrences and return periods can be estimated using statistical distribution models such as Poisson, Gumbel, and others71,72.

The normal distribution is a symmetrical probability distribution centered around the mean and primarily used to model continuous and naturally occurring phenomena. Although it is not typically the most appropriate model for characterizing earthquake magnitudes—particularly due to the skewed and heavy-tailed nature of seismic data—it remains important as a baseline reference distribution when comparing the fit of alternative statistical models. In the context of seismology, the normal distribution can provide a useful benchmark for identifying deviations from symmetry and assessing the appropriateness of alternative models such as Gumbel, Log-Normal, or Weibull, which are more suitable for modeling extreme events. While earthquakes do not follow a strictly Gaussian behavior, understanding the behavior of the normal distribution enables researchers to assess whether the observed data exhibit asymmetry or heavier tails, which is critical for selecting accurate seismic hazard models. The cumulative distribution function (cdf) and probability density function (pdf) of the normal distribution are given below73:

where \(-\infty <\mu <\infty\) is a location and \({\sigma }^{2}>0\) is a squared scale parameter and \(\Phi\) is the cdf of the standard normal distribution and \(erf\) is the complementary error function.

The gamma distribution is frequently used in seismology to model earthquake inter-event times, energy release, and the duration between major seismic events. It serves as a generalization of the Poisson process by allowing for variable waiting times between events, making it particularly suitable for characterizing clustered or time-dependent seismic sequences. Unlike the exponential distribution, which assumes a constant hazard rate, the Gamma distribution accommodates rate variability through its shape parameter α\alphaα, thereby offering greater flexibility in modeling temporal dependencies and memory effects in earthquake occurrence patterns. For example, a shape parameter α > 1 corresponds to a system where the hazard rate increases with time, which is often observed in aftershock sequences or stress accumulation processes16,74. Additionally, the gamma distribution is capable of representing cumulative energy dissipation, as it can model skewed and heavy-tailed distributions, a characteristic commonly observed in seismic energy release patterns. In this context, it has been applied to model both the frequency of earthquake occurrences within fixed time windows and the distribution of seismic moments or inter-event durations75,76. The cdf and pdf of the Gamma distribution are given below

where \(\alpha >0\) is a shape and \(\beta >0\) is a rate parameter and \(\gamma \left(\alpha ,\beta \right)\) is the lower incomplete gamma function.

The exponential distribution is one of the most commonly used models in seismology for representing the time intervals between successive earthquake events, particularly in memoryless processes where the occurrence of one event does not influence the likelihood of another in the near future. It is often employed to model the inter-event times in a Poisson process, where events occur independently and with a constant average rate77,78. This distribution is especially relevant in early-stage seismic hazard assessments or when modeling background seismicity, where earthquake occurrences are assumed to be random and temporally independent. Its main advantage lies in its simplicity and closed-form expressions for both the pdf and cdf

where \(\lambda >0\) is rate or inverse scale parameter.

In the context of earthquake modeling, the exponential distribution serves as a benchmark model for more complex time-dependent models. For instance, if observed inter-event times significantly deviate from an exponential distribution, this may indicate temporal clustering, stress transfer, or aftershock triggering processes, suggesting the need for alternative models like Gamma, Weibull, or ETAS (Epidemic-Type Aftershock Sequence) models79,80. Although it provides a reasonable approximation for low-magnitude background seismicity, the exponential model generally underestimates the likelihood of short inter-event times during aftershock sequences and fails to account for the long-term memory or rate changes in seismic activity, which are often critical for hazard prediction. Nonetheless, due to its interpretability and mathematical tractability, the exponential distribution remains a foundational tool in probabilistic seismic hazard analysis81 and is widely used in parametric models to approximate recurrence intervals, triggering probabilities, and return periods in earthquake-prone regions.

The Weibull distribution is widely employed in seismic hazard analysis due to its flexibility in modeling the distribution of earthquake magnitudes, inter-event times, and energy release. It is particularly effective in reliability-based models where failure times or extreme occurrences are of interest. The distribution’s scale \((\alpha\)) and shape (λ) parameters allow it to capture various behaviors of seismic events, including those with increasing or decreasing hazard rates75,82. The cdf and pdf of the Weibull distribution are given below

where \(a>0\) is scale and \(\lambda >0\) is a shape parameter.

In seismology, a shape parameter α < 1 may suggest aftershock decay, while α > 1 indicates increasing hazard, potentially modeling stress accumulation in fault zones. The Weibull distribution has been successfully used to model earthquake recurrence intervals and lifespan of seismic sequences, particularly when the assumption of constant hazard rate (as in exponential models) does not hold83.

The log-normal distribution is frequently used to describe earthquake magnitudes and energy release, particularly when these variables exhibit positive skewness. In this distribution, the logarithm of the variable (e.g., magnitude) follows a normal distribution, making it appropriate for modeling skewed data in seismology30,84. The cdf and pdf of the log-normal distribution are defined as follows:

where \(-\infty <\mu <\infty\) and \(\sigma >0\) are the mean and standard deviation of the logarithm of the distribution, respectively and \(\Phi\) is the cdf of the standard normal distribution and \(erf\) is the complementary error function.

The Gumbel distribution, one of the primary families in extreme value theory (EVT), is particularly suitable for modeling maximum (or minimum) values of a dataset. In seismology, it is frequently used to analyze extreme earthquake magnitudes, particularly for estimating return periods and the probability of rare but catastrophic events41,85. Its widespread use in seismic hazard analysis stems from several theoretical and empirical advantages. The Gumbel distribution arises as the limiting distribution of the maximum of a large number of independent identically distributed (i.i.d.) random variables. This fits the context of modeling the largest magnitudes observed over certain time periods or regions. The cdf and pdf of the Gumbel distribution are, respectively, given by.

where \(\alpha >0\) is a location and \(\beta >0\) is a scale parameter.

Earthquake magnitudes exhibit a positive skew with no upper bound (theoretically unbounded). Gumbel’s right-skewed nature and unbounded support match this empirical behavior well. The location parameter α approximates the central tendency of extremes (e.g., the threshold magnitude), and the scale parameter β captures the variability, allowing straightforward interpretation for risk communication.

LSTM method

Although RNNs are effective in addressing long-term dependency issues, they may encounter challenges such as gradient vanishing in certain applications86. To overcome this limitation, Hochreiter and Schmidhuber42 introduced the Long Short-Term Memory (LSTM) model, a specialized type of RNN. Since its development, various researchers—such as Rodriguez et al.87, Medsker and Jain88, Hermans and Schrauwen89, and Graves90—have proposed enhancements to improve its performance. As a result, LSTM has become a widely adopted model in time series forecasting. By incorporating memory cells and gating mechanisms to control information flow, LSTM effectively addresses the gradient vanishing problem and outperforms classical RNNs91. Furthermore, LSTM provides strong performance in time series applications by overcoming limitations of traditional forecasting methods92.

LSTM units consist of three main components: the input gate, forget gate, and output gate. The cell state plays a crucial role in carrying information through the network22. The input gate determines whether new information should be incorporated based on its relevance. The forget gate enhances learning by deciding which information should be retained or discarded. The output gate structures and transmits the processed information93,94,95. LSTM has proven to be an effective algorithm for time series modeling, and its operational structure is illustrated in Fig. 6.

Schematic representation of a basic LSTM architecture96.

The input gate is used to define what new information should be recorded in the cell state. It has two main components, namely, the sigmoid layer and tanh layer. The sigmoid determines what information to record in the cell state, while tanh generates a vector of new candidate values to place in the cell state. The outputs of the sigmoid layer and tanh layer are computed in the following way:

New information to be added to the cell state is calculated by combining the outputs of the sigmoid layer and tanh layer:

The forget gate output is then used in the element-wise multiplication represented by the symbol ⊙. The forget gate output, \({f}_{t}\), determines the data to be extracted from the cell state. It is calculated using \({X}_{t}\) and \({h}_{t-1}\), and a sigmoid layer. The output of the forget gate is a value between 0 and 1, where 0 means that the data could be completely forgotten and 1 means that it should be retained.

The forget gate \({f}_{t}\) output combines the previous memory cell signal with the signal \({c}_{t-1}\). The resulting element-wise multiplication (⊙) with \({c}_{t-1}\) determines which data to keep and which to forget:

The output gate determines which data is output. It makes a major contribution to the selection of the part of the LSTM memory that will be effective. It uses a sigmoid layer followed by a tanh layer, mimicking the process of the input gate. The sigmoid layer calculates the importance and is multiplied element-wise by its output using a non-linear tanh function. The process is obtained by

The vector of candidate cell values \(\widetilde{{c}_{t}}\) is given by and the vector of the memory cell by \({c}_{t}\). Furthermore, the sigmoid function \(\sigma\) and the output matrix \({h}_{t}\) are defined. Weight matrices \({W}_{i}\), \({W}_{f}\), \({W}_{o}\) and \({W}_{c}\), along with bias vectors \({b}_{i}\), \({b}_{f}\), \({b}_{o}\) and \({b}_{c}\) are used. The result output \({h}_{t}\) is the element-wise product of \({o}_{t}\) and the hyperbolic tangent of the current memory cell state \({c}_{t}\). This result carries the data to form the prediction in the LSTM process. The sources cited for these informations are Reyes et al.97, Gürsoy et al.98 and Jia and Ye99.

Results and discussion

Clustering with FCM of earthquake catalog

In this section, we first present the basic characteristics of the earthquake catalog prior to conducting the clustering analysis using the FCM algorithm. Although the complete catalog includes seismic events recorded between 1900 and 2021, the majority of events documented in the KOERI and Tan51 catalog occurred after 1970. Given the lack of sufficient and reliable data before this date, the analyses in this study are limited to the period from January 1970 to September 2021. Table 1 presents the key descriptive statistics for earthquake magnitudes and depths over this selected timeframe. For depth, the first quartile (Q1) is 6.81 km, the median (Q2) is 10 km, and the third quartile (Q3) is 16.32 km. The average depth is 16.06 km, with a standard deviation of 21.25 km. The skewness of 3.84 and kurtosis of 20.79 indicate a highly right-skewed and heavy-tailed distribution. Regarding earthquake magnitudes during the same period, Q1 is 2.54, the median is 2.76, and Q3 is 3.20. The mean magnitude is 2.96, with a standard deviation of 0.60. The skewness (1.54) and kurtosis (5.38) values again suggest a right-skewed distribution with heavier tails than a normal distribution.

Figure 7 presents the histograms of earthquake depth and magnitude for the period between 1970 and 2021. Both distributions are positively skewed, indicating that most earthquakes occurred at shallow depths and had relatively low magnitudes. The depth histogram (left) shows that the majority of earthquakes occurred at depths less than 20 km, with a sharp decline in frequency as depth increases. Similarly, the magnitude histogram (right) reveals that most events were of low to moderate magnitude, predominantly clustered around 3.0–4.0 Mwg.

The box plots in Fig. 8 illustrate the yearly distribution of earthquake magnitudes (Mwg) recorded between 1970 and 2021. A noticeable downward trend in magnitudes is observed after 1990. This apparent decline is likely attributable to the increased sensitivity and improved resolution of earthquake recording instruments introduced in the early 1990s, which allowed for more frequent detection of lower-magnitude events.

The FCM algorithm was employed to partition the earthquakes into homogeneous subgroups. The analysis was based on the latitude, longitude, and magnitude values of earthquakes occurring within the defined study area. The FCM algorithm was applied for varying numbers of clusters, specifically from 2 to 5, to evaluate different levels of granularity in spatial segmentation. The resulting cluster structures for each case are illustrated in Fig. 9a–d.

Spatial clustering results of the declustered earthquake catalog using the FCM algorithm (R version 4.3.1; https://www.r-project.org/).

The clustering results shown in Fig. 9 are based on the FCM algorithm, where each event has a degree of membership in multiple clusters. For visualization purposes, each event is assigned to the cluster in which it has the highest membership value. Unlike deterministic clustering methods, FCM does not create sharp boundaries, as events near the cluster edges exhibit overlapping characteristics. The cluster regions should, therefore, be interpreted as areas of influence rather than strictly separated zones. Figure 10 displays the elbow criterion and the silhouette score results for the FCM analysis. These graphs are crucial in determining the appropriate cluster number in clustering analysis. The Silhouette Score graph illustrates the clustering quality for different numbers of clusters and the Elbow Criterion graph shows the explained variance versus the number of clusters.

According to both the silhouette score and the Elbow method, the optimal number of clusters for the FCM analysis is determined to be three. The silhouette plot indicates a peak at K = 3, suggesting well-defined and compact clusters, while the Elbow plot shows a clear inflection point at the same value. Figure 10b visually supports this conclusion by illustrating the sharp decrease in within-cluster variance up to K = 3, after which the marginal gain diminishes. Accordingly, the clustering results for K = 3 are further analyzed and presented in the following figures, and the details of each cluster are summarized in Table 2.

In Table 2, the earthquake catalogue is divided into three clusters, each with different characteristics. The first cluster, which contains the largest number of earthquakes (5813), includes earthquakes with depths of up to 164 km and magnitudes between 2.43 Mwg and 6.62 Mwg. The average magnitude of the earthquakes occurring in this cluster is 2.89 Mwg. The second cluster, consisting of 4682 earthquakes, has a similar average magnitude of 2.85 Mwg. This cluster mainly includes events occurring on land, with earthquake depths reaching 159.2 km. The third cluster, containing 5350 earthquakes, is concentrated in offshore areas, with an average magnitude of 3.15 Mwg and a maximum depth of 183.8 km. In particular, the devastating Izmir earthquake of October 30, 2020 is part of this cluster. Cluster 1 stands out for having the largest membership compared to the others, while Cluster 2, despite having the fewest observations, contains some of the strongest earthquakes in the region. The box plot and histogram graphs for the clusters are, respectively, presented in Fig. 11a,b. From these graphs, it can be observed that Cluster 3 is slightly different from the other clusters.

The seismicity of seismic sources is also assessed using the Gutenberg-Richter relation. It is obtained as \(Log N\left(M\right)=6.80-0.99M\) for the first cluster, \(Log N\left(M\right)=6.01-0.84M\) for the second cluster and \(Log N\left(M\right)=7.31-1.07M\) for the third cluster, as illustrated in Fig. 12, respectively. The Gutenberg–Richter plots indicate distinct seismic characteristics for each cluster. Cluster 1 exhibits moderate seismic activity with a typical b-value close to 1, suggesting a balanced occurrence of small and large earthquakes. Cluster 2 shows the lowest seismic activity, as indicated by its relatively low a-value; however, its b-value below 1 suggests that larger earthquakes are more likely in this region. In contrast, Cluster 3 demonstrates the highest seismic activity (highest a-value), and a b-value greater than 1, implying that small-to-moderate magnitude events dominate over large ones.

Statistical modeling of magnitude distributions

In this section, the D’Agostino skewness test is applied to evaluate the asymmetry in the distribution of earthquake magnitudes for each cluster, while the Anscombe–Glynn kurtosis test is employed to assess tail behavior. The results of these statistical tests, along with descriptive measures for magnitudes, are presented in Table 3.

Table 3 summarizes the descriptive statistics and normality test results for earthquake magnitudes (Mwg) across the three clusters. In Cluster 1, the magnitude distribution has a mean of 3.4 and median of 2.6, with a moderate spread (St. Dev. = 0.5). The distribution is positively skewed (Skewness = 1.85; Z = 39.59; P = 0.000) and exhibits high kurtosis (6.8; Z = 22.67; P = 0.000), indicating a significant deviation from normality due to the presence of heavy tails and asymmetry. In Cluster 2, the magnitudes exhibit slightly less skew (Skewness = 2.00) but a similar pattern of deviation (Kurtosis = 7.73). The variability is highest here (St. Dev. = 0.6), and the maximum observed value reaches 7.5. The normality test again reveals a statistically significant deviation from normality (P < 0.001), confirming the influence of extreme values. Cluster 3 displays a more concentrated magnitude range with a mean of 3.1, median of 3.0, and standard deviation of 0.6. Although the skewness is lower (1.07), the kurtosis is 3.81, and the normality test still yields significant results (Z = 8.78; P = 0.000), implying a sharper peak and non-normal characteristics.

Figure 13e,f suggest that the Gumbel distribution provides a relatively better fit for Cluster 3. However, for Clusters 1 and 2, a definitive conclusion regarding the best-fitting distribution could not be drawn based solely on visual inspection of Fig. 13a–d. To complement the graphical analysis, two formal goodness-of-fit tests Kolmogorov–Smirnov (K–S) and Cramér–von Mises (CVM) were conducted. The results of these tests are presented in Table 4. The K–S test measures the maximum deviation between the empirical cumulative distribution function (cdf) and the theoretical cdf. The CVM test, similar in purpose, places greater emphasis on discrepancies throughout the entire distribution, especially in the tails.

To evaluate the performance of the fitted distributions and support model selection, we employ two widely used information criteria: the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). The AIC is based on maximum likelihood estimation and offers a trade-off between model fit and complexity. It is calculated using the formula AIC = –2 × log-likelihood + 2p, where p represents the number of parameters. Lower AIC values indicate a better-fitting model by penalizing over-parameterization. The BIC, derived from Bayesian principles, introduces a stronger penalty for model complexity and is computed as BIC = –2 × log-likelihood + log(n) × p, where n is the sample size. As with AIC, lower BIC values suggest a more suitable model. Table 5 presents the AIC and BIC values for each distribution fitted to the three clusters. According to the results, the Gumbel distribution consistently yields the lowest AIC and BIC values across all clusters, indicating the best overall fit. The corresponding maximum likelihood estimates (MLEs) of the distribution parameters are provided in Table 6.

The suitability of the Gumbel distribution for modeling earthquake magnitudes in this study is supported by both seismological considerations and theoretical properties of extreme value modeling. Earthquake magnitudes are inherently right-skewed, bounded below by a catalog completeness threshold (e.g., Mwg ≥ 2.43), and unbounded above, with the primary interest often focused on large, destructive events. The Gumbel distribution, a member of the EVT family, is specifically designed to model the statistical behavior of maximum values and thus offers a natural framework for capturing the tail behavior of seismic data41. In the context of the Aegean region, the magnitude data exhibit asymmetry and heavy-tailed behavior. This structure aligns well with the shape characteristics of the Gumbel distribution, which provides flexibility in modeling rare and extreme events without imposing an artificial upper bound. Previous studies have shown that Gumbel-based models perform reliably in modeling maximum yearly magnitudes, extreme aftershocks, and peak ground motion parameters83,85. In addition to its theoretical appropriateness, the Gumbel distribution outperformed alternative models such as the Normal, Log-Normal, Weibull, and Gamma distributions in this study based on goodness-of-fit criteria (K–S, CVM, and AD tests) and information criteria (AIC and BIC). These results reinforce its validity as a robust tool for representing the extreme behavior of regional earthquake magnitudes.

Figure 14 illustrates the hazard functions for earthquake magnitudes across the three clusters. In reliability and survival analysis, the hazard function, also known as the failure rate or instantaneous event rate, is defined as the conditional probability that an event occurs in an infinitesimally small interval, given that it has not occurred before that time. In the context of this study, the hazard function is interpreted as the instantaneous probability of an earthquake of a given magnitude occurring, given that it has not yet occurred. The hazard function is given by

where \(f\left(x\right)\) and \(\text{F}\left(x\right)\) are the pdf and cdf of the fitted Gumbel distribution, and \(\alpha >0\) and \(\beta >0\) are location and scale parameters, respectively108.

This approach has been used in various earthquake hazard and extreme value modeling studies to better understand the likelihood of occurrence of large-magnitude events41,83. As seen in Fig. 14, while Cluster 3 exhibits the steepest rise in the hazard function, indicating a concentrated risk within a narrower magnitude range, Cluster 2 shows a broader hazard profile, consistent with its potential for large-magnitude earthquakes due to its tectonic setting. Cluster 1 falls in between, with a moderate hazard function. These differences reflect the varying seismic characteristics of each cluster. Although Cluster 2 exhibits a lower hazard curve than Cluster 3, its lower b-value (Fig. 12) and tectonic setting suggest a higher likelihood of large-magnitude earthquakes, consistent with subduction zone dynamics.

The return periods of earthquakes with Mwg ≥ 5.5 using the Gumbel model are presented for each cluster in Table 7. As seen from the table, the return periods in Cluster 1 increase from 2.56 years at the 25% probability level to 3.63 years at the 95% level. In Cluster 2, the return periods follow a similar trend, ranging from 2.53 to 3.55 years, reflecting slightly shorter recurrence intervals compared to Cluster 1. Cluster 3, on the other hand, exhibits noticeably longer return periods across all probability levels, with values ranging from 2.71 to 4.22 years, suggesting less frequent occurrence of earthquakes above magnitude 5.5 within this group.

Magnitude prediction results with LSTM algorithm

After the clusters were obtained by FCM method, the LSTM analysis was used for each cluster to predict the monthly maximum magnitudes along the Aegean Region. The data was split into 70% for training and 30% for testing, with Min–Max normalization applied for consistency100. The LSTM model architecture was defined with the tanh activation function and "return_sequences = True" to maintain output sequences. Stochastic gradient descent was used for optimization, with root mean squared error (RMSE) as the loss function and k-fold cross-validation was employed to assess the model’s generalization performance. After training, the model was used for predictions, providing insights into potential monthly maximum earthquake magnitudes for each cluster. Other hyper parameters for the regions are shown in Table 8.

Figure 15 presents the iteration number and the associated loss function values for the clusters which show the error amounts of the clusters. When the number of iterations and loss function values of the clusters are compared in Fig. 15, it is not found any significant difference between them.

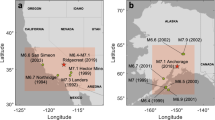

Figure 16 shows the forecasted values and confidence limits for the maximum earthquake magnitudes until March 2029. Figure 16 indicates that the maximum earthquake magnitudes in the upcoming years closely align with the maximums in the 1st (the region between the cities of Istanbul, Uşak, Athens and Thessaloniki) and 2nd (the region between the cities of Istanbul, Zonguldak, Aksaray and Antalya) clusters. It should be emphasized that the maximum magnitudes within the 3rd cluster (the region between the cities of Izmir, Athens, Crete and Antalya) exhibit a tendency toward higher values.

When examining the forecasts from October 2021 to March 2029, the highest maximum predicted magnitudes obtained for the 1st, 2nd and 3rd clusters are 5.7, 5.0, and 6.5, respectively. This implies that the LSTM-generated prediction model anticipates no earthquakes exceeding a monthly maximum magnitude of 5.7 for the first and second clusters over the period 10.2021–03.2029. However, for the third cluster, it is forecasted that earthquakes of a monthly maximum magnitude of 6.5 may occur in the year 2028. The region in the third cluster includes major metropolitan areas such as Izmir, Muğla, Athens, Crete, Antalya (on the West Anatolian fault line) and also half of the Aegean islands. Additionally, it was observed from Table 9 that four earthquakes ranging between magnitudes 5.5 and 5.9 were associated with the third cluster. To substantiate our findings, we collected data on the main seismic events occurring in the region between the years 2021 and 2024. As indicated in Table 9, numerous earthquakes have occurred within the range of magnitude 4.0 to 4.4. When compared with the LSTM graphs provided in Fig. 16, it is evident that the results accurately reflect reality.

A lot of aftershocks occur together with destructive earthquakes. Therefore, the occurrence of an earthquake with a monthly maximum magnitude of 5 is an indicator of destructive earthquakes. As illustrated in Fig. 16, the upper-bound maximum magnitude estimates obtained from the third cluster reveal the risk of destructive earthquakes from the beginning of 2025 to 2029.

We also compare our findings with some similar studies about the same region. Çam and Duman101 conducted a seismic magnitude prediction study for the same region using RNN models other than LSTM. Overall, when evaluating the prediction results, the network accurately predicted earthquakes that would not occur in all regions to a high degree. While the predicted earthquakes that did occur were somewhat accurate, there were also instances of earthquakes that were not predicted or incorrectly predicted. Although the artificial neural network (ANN) generally achieved the desired predictions within certain intervals in the available database, it could not provide highly accurate predictions for earthquakes with a high degree of certainty. Future studies have suggested that incorporating different earthquake parameters into the input parameters used in the models, such as Radon gas density, could lead to more consistent predictions. A similar earthquake magnitude prediction study using LSTM for the region is also attributed to Karcı and Şahin102. This study aimed to predict earthquakes based on historical data of earthquakes occurring in Türkiye between 1970 and 2021. Although the dataset used differs, the LSTM model yielded the best predictions, and it was observed that the model selection criteria in our study, obtained through FCM clustering of earthquakes, were more favorable than those of Karcı and Şahin102. We also consider that in other studies conducted on same region, the direct utilization of raw catalog data from databases and the filtering out of aftershocks and foreshocks may have influenced the outcome.

Several studies have previously applied clustering methods to earthquake classification and prediction. Novianti et al.103, Kamat and Kamath104, and Rifa et al.105 utilized the k-means clustering algorithm for earthquake classification, while Mirrashid26 and Sharma et al.27 employed FCM to predict earthquake magnitudes. These approaches have provided valuable insights into seismic activity; however, the proposed methodology in this study enhances the analysis by integrating additional parameters, thus enabling a more comprehensive assessment of both current and potential future seismic activities.

Conclusion

This study presents a comprehensive and data-driven seismic analysis of earthquakes that occurred in the Western Anatolian Region between 1970 and 2021. By applying the Fuzzy C-Means (FCM) clustering algorithm, earthquakes were classified into three spatially and seismically distinct sub-regions. This approach allowed for the identification of homogeneous seismic zones within a geologically complex setting and provided an alternative to conventional tectonic zoning methods that often rely solely on expert judgment or deterministic fault mapping. Unlike previous studies that focused primarily on historical seismicity statistics or tectonic segmentation (e.g.2,35), this study utilized a hybrid methodological framework. By combining unsupervised clustering (FCM), probabilistic modeling (Gumbel distribution), and machine learning (LSTM), it bridges the gap between empirical statistical modeling and physical tectonic interpretation. The monthly maximum earthquake magnitudes of each cluster are then taken into account, and their estimates are obtained with the LSTM algorithm. Since the predicted results obtained here show the monthly maximum earthquake magnitudes, estimates with magnitudes of 3 or 5 show the monthly maximum results. It is predicted that large earthquakes will occur during times when the monthly maximum magnitude is close to 5, as large earthquakes are often accompanied by aftershocks.

This multidisciplinary integration is crucial for developing seismic hazard assessments that are both data-informed and physically meaningful. Each cluster was examined in terms of its spatial extent and seismotectonic characteristics. Cluster 1, covering Istanbul, Izmir, Manisa, and Balıkesir, is located within the Western Anatolian Extensional Province, characterized by active normal faulting and distributed seismicity. The relatively high b-value observed in this cluster reflects the predominance of moderate-magnitude earthquakes and is consistent with the tectonic regime of frequent crustal extension and energy release across a network of grabens (e.g., Gediz, Büyük Menderes). Cluster 2 encompasses regions such as Ankara, Antalya, and Düzce, partially influenced by the Hellenic subduction system, representing a compressional regime with long recurrence intervals and a higher likelihood of large-magnitude earthquakes. The observed low b-value in this cluster, coupled with broad magnitude variability, supports this interpretation. However, the hazard function from the Gumbel model showed less frequent but higher-magnitude behavior, aligning with subduction-related seismic patterns reported in prior global studies (e.g.85). Cluster 3, including Aydın, Muğla, Crete, and Samos, is transitional between extensional and compressional tectonic zones, making it particularly complex. While the Gutenberg–Richter b-value is intermediate, the Gumbel-based hazard analysis indicated a relatively higher occurrence of moderate-to-large magnitude events within a narrower range, possibly due to the interaction between shallow crustal and deeper subduction-related sources.

One of the novel contributions of this study is the parallel use of two statistical approaches: The b-value analysis, which provides long-established insight into seismicity rate and magnitude-frequency distribution and the extreme value modeling via the Gumbel distribution, which focuses on the behavior of extremes and hazard estimation. By interpreting these methods together, the study reveals not just where earthquakes occur, but how magnitudes behave within different tectonic regimes, offering richer insight into regional hazard characteristics.

In the second phase of the study, an LSTM neural network was implemented to predict monthly maximum earthquake magnitudes up to March 2029. The LSTM architecture, which excels at learning long-range temporal dependencies, allowed for mid-term forecasting of seismic behavior. The outputs revealed distinct temporal patterns. Cluster 1 forecasts ranged between 2.0 and 5.7 Mwg, reflecting moderate activity yet retaining potential for large events, particularly in the Istanbul region, which remains a critical area for risk mitigation. Cluster 2 exhibited forecasts between 2.0 and 5.0 Mwg, moderate-magnitude crustal activity. Cluster 3 forecasts ranged from 2.4 to 6.5 Mwg, supporting the idea of persistent, suggesting high and persistent seismic activity. The LSTM analysis findings indicate that the maximum earthquake magnitudes in the 1st region (comprising Istanbul, Izmir, Manisa, Çanakkale, Balıkesir, Edirne) depicted in yellow in Fig. 8b are anticipated to range from 2.0 to 5.7. Conversely, the maximum earthquake magnitudes in the 2nd region (including Konya, Ankara, Antalya, Eskişehir, Bolu, Düzce, İzmit), shown in red in Fig. 8b, are predicted to range from 2.0 to 5.0. Conversely, the maximum earthquake magnitudes in the 3rd region (comprising Aydin, Muğla, Denizli, Burdur, Isparta, Samos, Santorini and Crete) delineated in green in Fig. 8b are projected to range from 2.4 to 6.5. In summary, the most severe earthquakes are expected to occur around Santorini until March 2029.

Unlike earlier LSTM applications that aimed to predict individual earthquake occurrences or binary event classifications (e.g.106,107), this study focused on monthly maximums, providing a more reliable indicator of temporal risk build-up rather than isolated predictions. These results emphasize the importance of interpreting seismic hazard not only through conventional geological knowledge or isolated statistical modeling, but through a comprehensive, data-driven framework that integrates physical, statistical, and computational dimensions. In conclusion, the findings indicate that Cluster 3, particularly the half of the Aegean islands region, is likely to experience relatively higher seismic activity in the coming years, both in terms of frequency and magnitude variability. The methodology proposed here is not only applicable to the Aegean region but also scalable and transferable to other seismically active regions of the world. It opens the door to more dynamic and predictive approaches in seismic hazard zoning, earthquake forecasting, and early warning system design. Ultimately, this study demonstrates that meaningful insights into earthquake behavior require both statistical sophistication and geophysical interpretation—a combination that can only be achieved through cross-disciplinary integration. The framework established here serves as a foundational model for next-generation seismic risk analysis in both academic and applied contexts.

Compared to previous studies that rely primarily on historical earthquake catalog statistics or tectonic interpretations alone, this study offers an integrated framework combining clustering analysis, extreme value modeling, and deep learning-based forecasting. This multifaceted approach enables both a better understanding of past seismic behavior and more informed projections of future activity. In conclusion, the results indicate that Cluster 3, particularly the half of the Aegean islands region, is likely to experience relatively higher seismic activity in the coming years. The methodology applied in this study provides a scalable and adaptable model for regional seismic hazard assessment and may serve as a foundation for more dynamic earthquake risk management and early warning strategies.

Data availability

The data used in this research were obtained under the Scientific and Technical Research Council of Türkiye (TÜBİTAK) project number 124F059. The datasets used or analyzed in the current study can be obtained from the corresponding author upon reasonable request.

References

Bilham, R. The seismic future of cities. Bull. Earthq. Eng. 7, 839–887 (2009).

Bozkurt, E. Neotectonics of Turkey–a synthesis. Geodin. Acta 14, 3–30 (2001).

Parsons, T. Recalculated probability of M≥ 7 earthquakes beneath the Sea of Marmara, Turkey. J. Geophys. Res.: Solid Earth. 109 (2004).

Barka, A. et al. The surface rupture and slip distribution of the 17 August 1999 Izmit earthquake (M 7.4), North Anatolian fault. Bull. Seismol. Soc. Am. 92, 43–60 (2002).

Dogan, G. G. et al. The 30 October 2020 Aegean Sea tsunami: Post-event field survey along Turkish coast. Pure Appl. Geophys. 178, 785–812 (2021).

Bayrak, E. & Coban, K. H. Evaluation of 08 August 2019 Bozkurt (Denizli-Turkey, Mw 6.0) earthquake in terms of strong ground-motion parameters and Coulomb stress changes. Environ. Earth Sci. 82, 470 (2023).

Paradisopoulou, P. M. et al. Seismic hazard evaluation in western Turkey as revealed by stress transfer and time-dependent probability calculations. Pure Appl. Geophys. 167, 1013–1048 (2010).

Bayrak, Y. & Bayrak, E. An evaluation of earthquake hazard potential for different regions in Western Anatolia using the historical and instrumental earthquake data. Pure Appl. Geophys. 169, 1859–1873 (2012).

Coban, K. H. & Sayıl, N. Conditional probabilities of Hellenic arc earthquakes based on different distribution models. Pure Appl. Geophys. 177, 5133–5145 (2020).

Tepe, Ç., Sözbilir, H., Eski, S., Sümer, Ö. & Özkaymak, Ç. Updated historical earthquake catalog of İzmir region (western Anatolia) and itsimportance for the determination of seismogenic source. Turk. J. Earth Sci. 30, 779–805 (2021).

Uzelli, T. et al. Effects of seismic activity on groundwater level and geothermal systems in İzmir, Western Anatolia, Turkey: The case study from October 30, 2020 Samos Earthquake. Turk. J. Earth Sci. 30, 758–778 (2021).

Mozafari, N. et al. Seismic history of western Anatolia during the last 16 kyr determined by cosmogenic 36Cl dating. Swiss J. Geosci. 115, 1–26 (2022).

Ermiş, İ & Cürebal, İ. Earthquake probability prediction with decision tree algorithm: The example of Izmir. Türkiye. J. Artif. Intell. Data Sci. 4, 59–67 (2024).

Geller, R. J., Jackson, D. D., Kagan, Y. Y. & Mulargia, F. Earthquakes cannot be predicted. Science 275, 1616–1617 (1997).

Scholz, C. H. The mechanics of earthquakes and faulting (Cambridge University Press, 2019).

Utsu, T. Representation and analysis of the earthquake size distribution: A historical review and some new approaches. Seismicity patterns, their statistical significance and physical meaning, 509–535 (1999).

Kagan, Y. Y. Seismic moment distribution revisited: I. Statistical results. Geophys. J. Int. 148, 520–541 (2002).

Marzocchi, W. & Lombardi, A. M. Real-time earthquake forecasting. Geophys. Res. Lett. 36 (2009).

Asim, M., Siddique, M. A. B. & Shafiq, M. A hybrid machine learning approach for earthquake prediction. IJACSA 8, 206–211 (2017).

Kong, Q. et al. Machine learning in seismology: Turning data into insights. Seismol. Res. Lett. 90, 3–14 (2019).

Mousavi, S. M., Ellsworth, W. L., Zhu, W., Chuang, L. Y. & Beroza, G. C. Earthquake transformer—an attentive deep-learning model for simultaneous earthquake detection and phase picking. Nat. Commun. 11, 3952 (2020).

Wang, Q., Guo, Y., Yu, L. & Li, P. Earthquake prediction based on spatio-temporal data mining: an LSTM network approach. IEEE Trans. Emerg. Top. Comput. 8, 148–158 (2017).

Aryal, M., Tiwari, R. K. & Paudyal, H. Prediction of earthquakes in Nepal and the adjoining regions using lstm. BMC J. Sci. Res. 7, 12–26 (2024).

Ye, T., Zhang, M. & Chen, Y. An improved LSTM-based earthquake forecasting model using hybrid feature selection. Geosci. Front. 15, 112–125 (2024).

Berhich, A., Belouadha, F. Z. & Kabbaj, M. I. An attention-based LSTM network for large earthquake prediction. Soil Dyn. Earthq. Eng. 165, 107663 (2023).

Mirrashid, M. Earthquake magnitude prediction by adaptive neuro-Fuzzy inference system (ANFIS) based on Fuzzy C-means algorithm. Nat. Hazards 74, 1577–1593 (2014).

Sharma, A., Nanda, S. J. & Vijay, R. K. A model based on Fuzzy C-means with density peak clustering for seismicity analysis of earthquake prone regions. In Soft computing for problem solving, 173–185. (Springer, Singapore; 2021).

Yegulalp, T. M. & Kuo, J. T. Statistical prediction of the occurrence of maximum magnitude earthquakes. Bull. Seismol. Soc. Am. 64, 393–414 (1974).

Kaila, K. L. & Narain, H. A new approach for preparation of quantitative seismicity maps as applied to Alpide belt-Sunda arc and adjoining areas. Bull. Seismol. Soc. Am. 61, 1275–1291 (1971).

Båth, M. The seismology of Greece. Tectonophysics 98, 165–208 (1983).

Jena, R. et al. Earthquake vulnerability assessment for the Indian subcontinent using the Long Short-Term Memory model (LSTM). Int. J. Disaster Risk Reduct. 66, 102642 (2021).

Berhich, H., Nouicer, A. & Chellal, A. Comparative deep learning models for earthquake magnitude prediction in Japan. Eng. Appl. Artif. Intell. 122, 105750 (2023).

Sadhukhan, B., Chakraborty, S., Mukherjee, S. & Samanta, R. K. Climatic and seismic data-driven deep learning model for earthquake magnitude prediction. Front. Earth Sci. 11, 1082832 (2023).

Aryal, A., Sharma, A. & Bhattarai, S. LSTM-based forecasting of earthquake magnitude trends in Nepal. Nat. Hazards 119, 77–94 (2024).

Kalafat, D., Kekovalı, K., Kara, M., Yılmazer, M. & Güneş, Y. A revised and extended earthquake catalog for Turkey (Boğaziçi University, 2011).

Erdik, M., Durukal, E., Sesetyan, K., Demircioğlu, M. B. & Zülfikar, C. Earthquake risk assessment for urban areas. In 13th world conference on earthquake engineering (2004).

Unal, C., Ozel, G. & Eroglu Azak, T. A Markov chain approach for earthquake sequencing in the Aegean Graben system of Turkey. Earth Sci. Inform. 16, 1–13 (2023).

Özmen, B., Harmandar, E., Akyüz, S. Earthquake hazard map of Turkey. Disaster and Emergency Management Authority (AFAD), (2018).

Sesetyan, K. et al. Probabilistic seismic hazard assessment for Turkey. Bull. Earthq. Eng. 16, 3193–3220 (2018).

Bezdek, J. C., Ehrlich, R. & Full, W. FCM: The fuzzy c-means clustering algorithm. Comput. Geosci. 10, 191–203 (1984).

Coles, S. An Introduction to Statistical Modeling of Extreme Values (Springer Series in Statistics. Springer-Verlag, 2001).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Cabalar, A. F., Canbolat, A., Akbulut, N., Tercan, S. H. & Isik, H. Soil liquefaction potential in Kahramanmaras, Turkey. Geomatics Nat. Hazards Risk. 10, 1822–1838 (2019).

Korbar, T. et al. Active tectonics in the kvarner region (external dinarides, croatia)—An alternative approach based on focused geological mapping, 3D seismological, and shallow seismic imaging data. Front. Earth Sci. 8, 582797 (2020).

Roche, V. et al. Structural, lithological, and geodynamic controls on geothermal activity in the Menderes geothermal Province (Western Anatolia, Turkey). Int. J. Earth Sci. 108, 301–328 (2019).

Sarica, N. The Plio-Pleistocene age of Büyük Menderes and Gediz grabens and their tectonic significance on N-S extensional tectonics in West Anatolia: mammalian evidence from the continental deposits. Geol. J. 35, 1–24 (2000).

Dilek, Y. & Sandvol, E. Seismic structure, crustal architecture and tectonic evolution of the Anatolian-African Plate Boundary and the Cenozoic Orogenic Belts in the Eastern Mediterranean Region. Geol. Soc. London Special Publ. 327, 127–160 (2009).

Sengör, A. M. C. Mid-Mesozoic closure of Permo-Triassic Tethys and its implications. Nature 279, 590–593 (1979).

Sengör, A. M. C. The north Anatolian transform fault: Its age, offset and tectonic significance. J. Geol. Soc. 136, 269–282 (1979).

Emre, Ö. et al. Active fault database of Turkey. Bull. Earthq. Eng. 16, 3229–3275 (2018).

Tan. O. Turkish homogenized earthquake catalogue (TURHEC). Natural Hazards and Earth System Sciences (NHESS). Zenodo (2021).

Reasenberg, P. Second-order moment of central California seismicity, 1969–1982. J. Geophys. Res. Solid Earth 90, 5479–5495 (1985).

Wiemer, S. A software package to analyze seismicity: ZMAP. Seismol. Res. Lett. 72, 373–382 (2001).

Kadirioğlu, F. T. & Kartal, R. F. The new empirical magnitude conversion relations using an improved earthquake catalogue for Turkey and its near vicinity (1900–2012). Turk. J. Earth Sci. 25, 300–310 (2016).

Pınar, A., Coşkun, Z., Mert, A. & Kalafat, D. Frictional strength of North Anatolian fault in eastern Marmara region. Earth, Planets and Space 68, 1–17 (2016).

Scordilis, E. M. Empirical global relations converting MS and mb to moment magnitude. J. Seismol. 10, 225–236 (2006).

Das, R., Sharma, M. L., Wason, H. R., Choudhury, D. & Gonzalez, G. A seismic moment magnitude scale. Bull. Seism. Soc. Am. 109, 1542–1555 (2019).

Das, R. & Das, A. Limitations of Mw and M scales: Compelling evidence advocating for the das magnitude scale (Mwg)—A critical review and analysis. Indian Geotech. J. 1–19 (2025).

Hanks, T. C. & Kanamori, H. A moment magnitude scale. J. Geophys. Res. Solid Earth 84, 2348–2350 (1979).

Karakavak, H. N., Cekim, H. O., Ozel, G. & Tekin, S. Seismic microzonation and earthquake forecasting in Western Anatolia using k-means clustering and entropy-based magnitude volatility analysis. Geotech. Geol. Eng. 43, 1–26 (2025).

Yang, P., Yin, X. & Zhang, G. Seismic data analysis based on Fuzzy clustering. In 2006 8th international conference on signal processing 4, IEEE (2006).

Govender, P. & Sivakumar, V. Application of k-means and hierarchical clustering techniques for analysis of air pollution: A review (1980–2019). Atmos. Pollut. Res. 11, 40–56 (2020).

Dembele, D. & Kastner, P. Fuzzy C-means method for clustering microarray data. Bioinformatics 19, 973–980 (2003).

Nainggolan, R., Perangin-angin, R., Simarmata, E. & Tarigan, A. F. Improved the performance of the K-means cluster using the sum of squared error (SSE) optimized by using the Elbow method. J. Phys. Conf. Ser. 1361 (2019).

Humaira, H., & Rasyidah, R. Determining the appropiate cluster number using elbow method for k-means algorithm. In Proceedings of the 2nd workshop on multidisciplinary and applications (WMA) 1–8 (2020).

Li, K. et al. A spacecraft electrical characteristics multi-label classification method based on off-line FCM clustering and on-line WPSVM. PLoS ONE 10, e0140395 (2015).

Gutenberg, B. & Richter, C. F. Frequency of earthquakes in California. Bull. Seismol. Soc. Am. 34, 185–188 (1944).

Oncel, A. O. & Wyss, M. The major asperities of the 1999 M w= 7.4 Izmit earthquake defined by the microseismicity of the two decades before it. Geophys. J. Int. 143, 501–506 (2000).

Frohlich, C. & Davis, S. D. Teleseismic b values; or, much ado about 1.0. J. Geophys. Res. Solid Earth 98, 631–644 (1993).

Kagan, Y. Y. & Jackson, D. D. Worldwide doublets of large shallow earthquakes. Bull. Seismol. Soc. Am. 89, 1147–1155 (1999).

Oncel, A. O., Wilson, T. H. & Nishizawa, O. Size scaling relationships in the active fault networks of Japan and their correlation with Gutenberg-Richter b values. J. Geophys. Res. Solid Earth 106, 21827–218415 (2001).

Oncel, A. O. & Wilson, T. H. Space-time correlations of seismotectonic parameters: Examples from Japan and from Turkey preceding the Izmit earthquake. Bull. Seismol. Soc. Am. 92, 339–349 (2002).

Wackerly, D. D. Mathematical statistics with applications (Thomson Brooks/Cole, 2008).

Lomnitz, C. Fundamentals of earthquake prediction (John Wiley & Sons, 1994).

Gupta, I. D. The state of the art in seismic hazard analysis. ISET J. Earthq. Technol. 39, 311–346 (2002).

Corral, Á. Long-term clustering, scaling, and universality in the temporal occurrence of earthquakes. Phys. Rev. Lett. 92, 108501 (2004).

Ogata, Y. Statistical models for earthquake occurrences and residual analysis for point processes. J. Am. Stat. Assoc. 83, 9–27 (1988).

Vere-Jones, D. Forecasting earthquakes and earthquake risk. Int. J. Forecast. 11, 503–538 (1995).

Ogata, Y. Estimating the hazard of rupture using uncertain occurrence times of paleoearthquakes. J. Geophys. Res. Solid Earth. 104, 17995–18014 (1999).

Zhuang, J., Ogata, Y. & Vere‐Jones, D. Analyzing earthquake clustering features by using stochastic reconstruction. J. Geophys. Res. Solid Earth. 109 (2004).

Cornell, C. A. Engineering seismic risk analysis. Bull. Seismol. Soc. Am. 58, 1583–1606 (1968).

Kramer, S. L. Geotechnical earthquake engineering (Prentice Hall, 1996).

Pisarenko, V. F. & Sornette, D. Characterization of the frequency of extreme earthquake events by the generalized Pareto distribution. Pure Appl. Geophys. 160, 2343–2364 (2003).

Sornette, D., Knopoff, L., Kagan, Y. Y. & Vanneste, C. Rank-ordering statistics of extreme events: Application to the distribution of large earthquakes. J. Geophys. Res. Solid Earth. 101, 13883–13893 (1996).

Kijko, A. & Graham, G. Parametric-historic procedure for probabilistic seismic hazard analysis—Part I: Estimation of maximum regional magnitude mmax. Pure Appl. Geophys. 152, 413–442 (1998).

Cao, J., Li, Z. & Li, J. Financial time series forecasting model based on CEEMDAN and LSTM. Phys. A: Stat. Mech. Appl. 519, 127–139 (2019).

Rodriguez, P., Wiles, J. & Elman, J. L. A recurrent neural network that learns to count. Connect. Sci. 11, 5–40 (1999).

Medsker, L. R. & Jain, L. Recurrent neural networks. Design App., 5 (2001).

Hermans, M. & Schrauwen, B. Training and analysing deep recurrent neural networks. Adv. Neural Inf. Process. Syst. 26 (2013).

Graves, A. Generating sequences with recurrent neural networks. arXiv preprint arXiv:1308.0850 (2013).

Livieris, I. E., Pintelas, E. & Pintelas, P. A CNN–LSTM model for gold price time-series forecasting. Neural Comput. Appl. 32, 17351–17360 (2020).

Sagheer, A. & Kotb, M. Time series forecasting of petroleum production using deep LSTM recurrent networks. Neurocomputing 323, 203–213 (2019).

Subhan, F., Wu, X., Bo, L., Sun, X. & Rahman, M. A deep learning-based approach for software vulnerability detection using code metrics. IET Softw. 16, 516–526 (2022).

Wu, Y. & Ma, X. A hybrid LSTM-KLD approach to condition monitoring of operational wind turbines. Renew. Energy. 181, 554–566 (2022).

Shrivastava, G. K., Pateriya, R. K. & Kaushik, P. An efficient focused crawler using LSTM-CNN based deep learning. Int. J. Syst. Assur. Eng. Manag. 14, 391–407 (2023).

Al-Selwi, S. M. et al. RNN-LSTM: From applications to modeling techniques and beyond—Systematic review. J. King Saud Univ. Comput. Inf. Sci. 102068 (2024).

Reyes, J., Morales-Esteban, A. & Martínez-Álvarez, F. Neural networks to predict earthquakes in Chile. Appl. Soft Comput. 13, 1314–1328 (2013).

Gürsoy, G., Varol, A. & Nasab, A. Importance of machine learning and deep learning algorithms in earthquake prediction: a review. In 2023 11th international symposium on digital forensics and security (ISDFS) 1–6 IEEE (2023).

Jia, J. & Ye, W. Deep learning for earthquake disaster assessment: Objects, data, models, stages, challenges, and opportunities. Remote Sens. 15, 4098 (2023).

Pranolo, A. et al. Enhanced multivariate time series analysis using LSTM: A comparative study of min-max and Z-score normalization techniques. ILKOM J. Ilm. 16, 210–220 (2024).

Çam, H. & Duman, O. Earthquake prediction with artificial neural network method: the application of West Anatolian fault in Turkey. Gümüshane University Electronic Journal of the Institute of Social Science. 7 (2016). (in Turkish)

Karcı, M. & Şahin, İ. Earthquake prediction by using deep learning methods. Artif. Intell. Stud. 5, 23–34 (2022) (in Turkish).

Novianti, P., Setyorini, D. & Rafflesia, U. K-Means cluster analysis in earthquake epicenter clustering. Int. J. Adv. Intell. Inform. 3, 81–89 (2017).

Kamat, R. & Kamath, R. Earthquake cluster analysis: K-means approach. J. Chem. Pharm. Sci. 10, 250–253 (2017).

Rifa, I. H., Pratiwi, H. & Respatiwulan, R. Clustering of earthquake risk in indonesia using K-medoids and K-means algorithms. Media Stat. 13, 194–205 (2020).

Berhich, A., Belouadha, F. Z. & Kabbaj, M. I. LSTM-based models for earthquake prediction. In Proceedings of the 3rd international conference on networking, information systems & security. 1–7 (2020).

Berhich, A., Belouadha, F. Z. & Kabbaj, M. I. LSTM-based earthquake prediction: Enhanced time feature and data representation. Int. J. High Perform. Syst. Archit. 10, 1–11 (2021).

Kotz, S., & Nadarajah, S. Extreme value distributions: theory and applications. World Scientific (2000).

Acknowledgements

The study presented in this research was funded by The Scientific and Technical Research Council of Türkiye (TÜBİTAK) under the project 124F059: Earthquake Magnitude Prediction with Bidirectional Deep Learning Methods for Western Anatolia Region. We would like to thank Assist. Prof. Dr. Tuba Azak for their efforts in the preparation of the data set.

Author information

Authors and Affiliations

Contributions