Abstract

The stomach is one of the main digestive organs in the GIT, essential for digestion and nutrient absorption. However, various gastrointestinal diseases, including gastritis, ulcers, and cancer, affect health and quality of life severely. The precise diagnosis of gastrointestinal (GI) tract diseases is a significant challenge in the field of healthcare, as misclassification leads to late prescriptions and negative consequences for patients. Even with the advancement in machine learning and explainable AI for medical image analysis, existing methods tend to have high false negative rates which compromise critical disease cases. This paper presents a hybrid deep learning based explainable artificial intelligence (XAI) approach to improve the accuracy of gastrointestinal disorder diagnosis, including stomach diseases, from images acquired endoscopically. Swin Transformer with DCNN (EfficientNet-B3, ResNet-50) is integrated to improve both the accuracy of diagnostics and the interpretability of the model to extract robust features. Stacked machine learning classifiers with meta-loss and XAI techniques (Grad-CAM) are combined to minimize false negatives, which helps in early and accurate medical diagnoses in GI tract disease evaluation. The proposed model successfully achieved an accuracy of 93.79% with a lower misclassification rate, which is effective for gastrointestinal tract disease classification. Class-wise performance metrics, such as precision, recall, and F1-score, show considerable improvements with false-negative rates being reduced. AI-driven GI tract disease diagnosis becomes more accessible for medical professionals through Grad-CAM because it provides visual explanations about model predictions. This study makes the prospect of using a synergistic DL with XAI open for improvement towards early diagnosis with fewer human errors and also guiding doctors handling gastrointestinal diseases.

Similar content being viewed by others

Introduction

Early and accurate diagnosis of disorders in the gastrointestinal tract (GIT) is important to improve long-term healthcare outcomes. In the gastrointestinal tract, the stomach is commonly characterized as a muscular hollow organ localized in the upper part of the abdomen and having a major function at the beginning of the second phase of digestion. The stomach is surrounded by a smooth membrane called the peritoneum that acts as a shield and protects it from external damage. Stomach walls are composed of different muscle tissue layers that mix and churn food. A pyloric sphincter is a muscular valve that brings food from the stomach to the small intestine to regulate the flow and food digestion. The stomach is a highly differentiated endocrine organ with unique physiological, biochemical, immunological, and microbiological properties. Technically, the stomach is one of the most crucial parts of the gastrointestinal tract (GIT) due to its important role in digestion; it is the point through which all ingested substances, including nutrients, pass1. Out of multiple tracts in the human body, GIT is a complex network of organs starting from mouth to anus. This network of organs is responsible for food processing and nutrient absorption. The stomach, one of the primary organs of the GIT, secretes which contain digestive enzymes and hydrochloric acid. This acidic gastric juice help to breaks down food into smaller particles, further digests, absorbs nutrients, and expels waste products. A healthy GIT is a prime requirement for a healthy body, and its functionality should not be disrupted by any other factor2. A survey performed by the World Health Organization shows that digestive tract diseases cause 25% of global deaths, through 2 million annual deaths3. Stomach and gastrointestinal diseases affect digestion and health, making these medical conditions among the most feared conditions in contemporary healthcare. The major stomach conditions affect patients with gastritis while also causing peptic ulcers and leading to stomach cancer4 while GI tract ailments also include Crohn’s disease, ulcerative colitis, and both colorectal cancer and irritable bowel syndrome (IBS) are additional conditions that affect the digestive system5. These illnesses show symptoms like abdominal pain, bloating, nausea, and vomiting combined with changes in bowel movements that cause severe medical conditions in patients. Timely and precise identification of these diseases is important since complications and life-threatening outcomes usually accompany the delayed diagnosis. Patient health and recovery can be improved by early identification of disorders6.

Endoscopy is the most popular technique for diagnosing stomach and other GI diseases. It is mainly an invasive process that entails the passing of a flexible tube equipped with a tiny camera into the gastrointestinal tract to capture visual images of the gastric and intestinal mucosa. A detailed view of the digestive tract helps to diagnose various conditions like ulcers, lesions, inflammation, and tumours via endoscopy.7. However, visual interpretation of endoscopic images for diagnosis often depends on the clinician’s knowledge and skills, which sometimes results in variability or overlooking of some objects by clinicians due to human error.8. The use of AI has been increasing in healthcare, especially in identifying diseases that are not easily recognizable by the human eye.9. In the context of gastrointestinal disease detection, methods that are used to extract features of texture help to assess the severity of these conditions10. However, the extraction of relevant features from images of the gastrointestinal tract is still a problem, and errors in this case will hamper the accuracy of the diagnosis.11. To address this, machine learning techniques are employed that extract useful features such as edges, color, and texture from endoscopic images to diagnose diseases. CNNs have significantly enhanced medical image analysis because of their advancements that overcome feature extraction problems12. However, the deep learning models outperformed compared to human expertise in clinical image analysis.13. This makes deep learning-based computer-aided diagnostic tools highly promising since they may be equally efficient as experienced physicians14.

Currently, there is a growing transition from deep learning (DL) through enhanced demands for explainable artificial intelligence (XAI) in medical diagnostics15. Although DL models achieve a high degree of accuracy, they act as Black-box and their decisions lack transparency and are not easily interpretable. XAI can narrow down the gap between model decisions and clinical acceptance, as well as patient safety. XAI is a human-centric approach to improve the ability of AI systems to explain their output. Rather than being a specific technical process, XAI is a more general term that encompasses all the strategies and actions aimed at addressing issues that are related to trust and interpretability of AI16. As pointed out in17, XAI aims to create models that provide more interpretability in the procedure of reaching their conclusions, implemented at the same time with the essential level of predictive accuracy, usually assessed by such criteria as accuracy. However, many traditional AI models often work as ‘Black box models,’ which means that clinicians are often unable to understand the reason behind the prediction generated by the AI models. To address this, a novel hybrid AI architecture is proposed that integrates the attention layers with Grad Cam to accurately highlight optimized features that help to classify stomach diseases and enhance clinical decision support. In this proposed work, DCCN and Swin transformer are also integrated, which targets specific areas in endoscopic images, and Grad Cam enables a better understanding of which region of the provided image impacted the AI decision. This co-implementation improves the diagnostic outcomes and offers visual explanations that help clinicians increase the chances of early diagnosis when diseases such as ulcers, gastritis, or stomach cancer are at their preliminary stages. The study focuses on the improvement of the diagnostic capabilities of deep learning and explainable AI in staging stomach diseases using endoscopic images. This visual evidence enhances clinical interpretability and decision confidence, unlike previous research that focuses on performance metrics. Thus, this research seeks to improve diagnostic performance to support clinicians by using interpretability techniques and integrating the algorithms to assist in managing stomach and GI tract diseases.

The rest of the paper includes the following sections: Key attributes of the proposed research are presented in “Contribution” section, “Literature review” section presents a detailed study analysis of different research. “Proposed methodology” section presents the workflow of this proposed technique and how different models are integrated in a hybrid model. Experimental outcomes and their visualization are presented in “Results and discussion” section. The paper concludes its discussion in “Conclusion” section.

Contribution

In this research work, a hybrid framework is proposed by integrating DCNN (EfficientNet-B3, ResNet-50) with the Swin Transformer, which together extracts robust features and efficiently captures local and global dependencies in medical imaging, compared to traditional single-layer architectures. This hybrid model also enhances classification accuracy with the combination of Grad-CAM, which not only improves model interpretability but also provides a visual explanation that helps clinicians visualize the key regions influencing predictions. Such visualization builds trust in medical professionals in automated systems. To further improve classification results, meta-loss-based learning classifiers are employed as post-processing that optimize decision boundaries and improve generalization. This hybrid approach is designed to improve model interpretability, together with adaptive learning. This hybrid model not only improves accuracy but also enhances clinical reliability through visual explainability in diverse medical conditions. The main key contribution of this proposed research is defined as:

-

Swin Transformer with DCNN features are combined to reduce false negatives and improve diagnostic confidence for efficient classification of gastrointestinal tract diseases.

-

Fine-tuned hierarchical components, such as attention heads and encoding methods, were optimized, which directly impacts performance in medical imaging data. Furthermore, the positional encoding is adapted to better encode spatial relationships in medical images, further improving feature extraction.

-

The layer normalization was recalibrated, and advanced activation functions like GeLU (Gaussian Error Linear Unit) were applied to enhance convergence and stability. In addition, dropout rates were fine-tuned within the Transformer layers to prevent overfitting on the medical dataset.

Takeaway 1: The study proposed a new method that uses both deep learning (DL) and explainable Artificial Intelligence (XAI) techniques to improve the diagnosis of gastrointestinal conditions affecting the stomach during our research |

Literature review

Recently, the diagnosis of diseases of disease using medical imaging techniques has gained significant attention, especially in gastrointestinal (GI) healthcare. Polyp segmentation has emerged as a critical area of focus due to the increasing availability of labelled datasets. Secondly, the classification of GI diseases remains an active research domain. While traditional ML approaches have shown reasonable effectiveness18 But DL methods consistently outperform and achieve higher accuracy and performance in terms of generalization, making them more precise for medical image analysis19.

Wireless Capsule Endoscopy (WCE) is a state-of-the-art invasive diagnostic imaging technique that is extensively applied for the identification of gastrointestinal disorders, such as bleeding, ulcers, and polyps. However, manual interpretation of WCE data is time-consuming and subjective. A study20 addresses this by proposing a deep learning technique for ulcer classification to help medical practitioners. An enhanced Mask RCNN identified ulcers, and a reconstructed ResNet101 detected features learned employing grasshopper optimization to extract deep features. The classifier used was a Multi-class Support Vector Machine (MSVM) with a cubic kernel, which recorded a classification accuracy of 99.13%. In general, the proposed technique provides higher accuracy compared to the current methods to be used for the diagnosis of gastrointestinal diseases. The CADx system is presented in21 to differentiate gastric cancer from benign lesions such as polyps, gastritis, bleeding, and ulcers. The Xception deep learning model distinguishes the cancer and non-cancer situations based on the depth-wise separable convolutional layers. The features include Auto Augment for Image Enhancement and the simple linear iterative clustering (SLIC) superpixel with FRFCM for segmentation. The study22 presented a deep semantic segmentation model with DeepLabv3 and ResNet-50 for real-time and precise 3D segmentation and pixel class separation. To address this issue, deep features of the studied stomach lesions are extracted through the pre-trained ResNet-50 model. The study also discussed the issues of uncertainty estimation and model interpretability in the context of lesion classification. As for the prediction scores, the proposed approach got 90% and proved the improvement of both segmentation and classification performances for the stomach infection diagnosis. Another study23 utilized XAI via the LIME model to create trust-based models for pre-EC identification. CNN, together with multiple transfer learning models, was employed on the Kvasir-v2 dataset with DenseNet-201, giving an accuracy of about 88.75%. LIME was applied to interpret image classifications, explaining which parts affected malignancy predictions. This integration of deep learning and XAI improved the results’ interpretability and increased trustworthiness in the model without requiring expert validation. In the detection and diagnosis of GI diseases from endoscopic images, the study24 introduces a segmentation classification-based encoder-decoder pipeline is introduced. This framework employs XceptionNet and XAI techniques for better performance. The process involved data augmentation, contour drawing of contours around affected regions. Classification is done employing several classifiers, of which the most efficient yields an accuracy of 98.32%, a recall of 96.13%. The current approach surpasses the traditional techniques in which optimum results include a dice score of 82.08% and mIOU of 90.30%.

Despite these advancements, precise identification of stomach lesions still remains, a challenge especially due to the differences in size and shape of the lesions. Moreover, training deep neural networks requires extensive datasets and face challenges like slow learning, overfitting and tends to get trapped in local optima. The work25 address this issue by adopting the Maximally Stable Extremal Regions (MSER) technique integrated with Morphological operations employing a Hypothesis-Testing Genetic Algorithm. Technical features detected in endoscopic images are achieved by utilizing the Deep Belief Networks (DBNs). As for the other methods, recall, precision, as well as accuracy indicate that the proposed method offers the best performance. To segment the stomach and GI tract for radiation therapy, another study26 proposed a Se-ResNet50-based U-Net model framework. Images of MR scans from the dataset of UW-Madison Carbone are used for training purposes. Experimental results indicate that the proposed model showed better performance, producing a Dice Coefficient score of 0.848, greater than other models including EfficientNetB0, ResNet50, and EfficientNetB1. The study27 developed a DL-based automatic diagnosis system – the PD-CNN-PCC-EELM is used for the classification of GI tract diseases from the GastroVision dataset which consists of 8000 images. A novel, lightweight feature extractor called Parallel Depth-wise Separable Convolutional Neural Network (PD-CNN), and a Pearson Correlation Coefficient (PCC) for feature selection are presented. The Ensemble Extreme Learning Machine (EELM), with the application of pseudo inverse and L1 ELM regularized ELM enhances the identifying accuracy of diseases. The system encompasses a set of image-enhancing methods and yields high accuracy; (Precision = 88.12%). The techniques for interpretability are applied to improve the results used in the diagnostics of real-world cases by XAI. Table 1 represents the summary of the literature review. A study is conducted in28 that focus on diagnosis and staging of colon cancer. Some analyses are performed that use gene expression from the Cancer Genome Atlas dataset. Weighted Gene Co-expression is performed to select gene modules that are highly correlated with cancer, Lasso is utilized to extract key genes for differential expression, and Protein–Protein Interaction is employed to efficiently identify 289 genes for clustering and survival analysis. Random forest outperforms best on this dataset and shows 99.81% accuracy for colon cancer diagnosis, but for staging classification it achieves 91.5% accuracy. The work29 is an automated system that uses endoscopic images for ulcerative colitis diagnosis. UCFN-Net is employed to extracts small lesion features and enhances its accuracy, and Noise Suppression Gating is used that reduces noise by feature gating and attention grid. Models are trained and tested on two different public datasets with an accuracy of 85.47% and MCC of 80.42%, and with the private dataset, and achieve 89,57% accuracy and 85.52% MCC. Similarly, in another study30, early-stage gastric cancer is predicted through lymph node metastasis. A Monocentric study is also performed on frozen postoperative samples. The three-gene meth light technique is applied to 129 ECG signals and 129 tissue samples. Model achieves 84% accuracy on ECG samples and 78% accuracy on tissues. A novel classification framework is presented in study31 that employed the Quantum Cat Swarm Optimization algorithm (QCSO) for feature selection. For enhanced classification and clustering techniques, K-means clustering and SVM are utilized. This proposed QCSO effectively selects the most significant features that help to improve accuracy from 81 to 100%.

After a detailed study of existing studies, it is demonstrated that there is significant improvement and success in GI disease diagnosis due to the integration of deep techniques with XAI methods. This success relies on publicly available datasets and traditional DL methods that often fail to introduce innovations. Moreover, there are specific challenges in this domain, particularly in early-stage identification of tumors, the integration of multimodal data, and real-time decision-making. The proposed study bridges this gap with a novel domain-driven approach that can efficiently combine advanced optimization techniques with customized feature engineering for GI tract diagnosis.

Proposed methodology

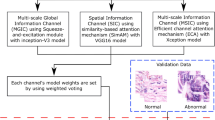

The proposed methodology aims to enhance medical classification accuracy with a combination of hybrid Deep Convolutional Neural Network (DCNN) and Swin Transformer. Features are extracted from an initial branch of DCNN by utilizing EfficientNet B3 and ResNet50 and a second branch based on Swin Transformer. Features extracted from the DCNN layer pass to image patches after conversion to embeddings, while the Swin Transformer extracts feature in parallel. These outputs are fused and delivered to several base classifiers \((\text{C}1,\text{ C}2, ...,\text{ Cm})\) in a preliminary stage for differentiation, each of the different classifiers comes up with a meta-loss. For localization of map features and features selected by the models, Grad-CAM is drawn. Another layer consisting of meta-classifier then further enhances the predictions where the output from every base classifier is gathered together & the final loss function Lmeta is minimized. It adds individual losses and the final aggregated loss while making predictions. This framework presented in Fig. 1 aims to improve the prediction and the prediction model’s interpretability, especially when it is used in medical image analysis.

Takeaway 2: The hybrid model proposed in this study focuses on the interpretability of diagnostic models because medical decision-making has both aspects as essential factors |

Data preprocessing

The Kvasir dataset36 consists of more than 8,000 endoscopic images that contain eight different classes. Images in this dataset differ in resolution, such as 576 × 720 to 1920 × 1072 pixels, and are captured within anatomical locations that can be helpful according to diverse real-world conditions. To standardize input across deep learning models, a further pre-processing step involves resizing images to ensure uniformity and compatibility with the models’ input specifications. This is a crucial step in standardizing the model’s input data. After preprocessing, images are according to the input specification of deep learning models. In this approach, images of varying sizes from \(576\times 720\) up to \(1920\times 1072\) are resized into \(244\times 244\) pixels, this resizing preserves the structural features with consistent dimensions. This resizing step is quite important in maintaining the standard of the images for successful processing by the models in the next phases without size distortion. Data augmentation is used while working with medical image classification tasks to reduce the chances of overfitting37. Some other transformations are applied such as random horizontal flips, rotations, and color jitter. This assists in enhancing the model’s strength since it covers variations in images in the real world. Further, the data is normalized by following the standard ImageNet norms for mean and standard deviations as presented below.

where \(I\left(i,j\right)\) Is the original pixel value of images and \({I}^{\prime}\left(i^{\prime},j^{\prime}\right)\) is the resized pixels, \({s}_{i}\) and \({s}_{j}\) Are the scaling factor for image height and width, this ensures that all the images are resized into \(244\times 244\) dimensions which is followed as:

Patch encoding

After preprocessing images are resized into a \(244\times 244\) dimension, in patch encoding, images are transformed into patches, which can be processed by any transformed-based models. In this process, there are some steps to be performed, first image. \({I^{\prime}}_{{i}^{\prime},{j}^{\prime}}\left(244\times 244, 3\right)\) are divided into non-overlapping \(\text{p x p}\) Patches which are represented as:

In the next step, these patches \(\text{p }\times \text{ p }\times 3\) flatten into 1D vector. \({p}^{2}\) after flattening each patch linear projection is applied where the flattened pattern \({p}^{2}\) is passed through the learnable layer which layer maps each pixel into a feature vector with embedding next step is to add positional encoding which retains the positional information. The final step is patch embedding which is represented as:

Hybrid model feature extraction

Feature extraction is the major component of this hybrid model, with each element designed to extract different kinds of information from the input images. The incorporation of each model allows the new proposed model to learn most of the features for future predictions. The proposed architecture is a combination of three high-performance models that work collectively to provide the best results in the classification task. This methodology utilizes the two-branch structure for feature extraction DCNN branch peculiar to EfficientNet B3, and ResNet50 to extract hierarchical features and skin transformer branch to obtain local and global information about the input images by the system. The input images are divided into patch segments, through patch embedding. Patch segments are then passed to the DCNN branch to be processed. Models such as EfficientNet B3 and ResNet50, and at the same time Swin Transformer extract these features in parallel. DCNN branch utilizes two pre-trained deep models EfficientNet B3 which exploits compound scaling to balance model depth, width, and input resolution. This appropriately optimizes the extraction of features while at the same time achieving a lean design. It incorporates high spatial resolution to capture detailed spatial information together with low-level image information of kvasir endoscopic images for classification. EfficientNet’s output features are extracted from each image of the dataset, in combination with ViT and ResNet, to make the final decision. It captures both high spatial resolution and low-level image details which are represented as:

where \({f}_{\text{EfficientNet B}3}\) indicate compound scaling and \({F}_{\text{EfficientNet B}3}\) indicate output feature map and \({\theta }_{\text{EfficientNet B}3}\) denote the learnable parameters of EfficientNet B3.

The second fine-tuned model that is used in this branch is ResNet50 which is residual learning and has connections by skipping layers in the network hence avoiding vanishing gradient problems when using deep networks. This design helps it to extract more shallow and hierarchical features of stomach images. It captures mid- and high-level abstract features that enhance object differentials and hence disease detection of the GI tract. This architecture is of special use in learning hierarchical features from GI tract images. Similarly, ResNet50 extracts spatial hierarchical features, which are represented as:

where \({f}_{\text{ResNet}50}\) indicate residual connection and \({F}_{\text{ResNet}50}\) indicate output feature map which represents mid and high-level features and \({\theta }_{\text{ResNet}50}\) denote the learnable parameters of ResNet50.

The final output of this DCNN branch is represented as:

Swin Transformer (Swin-T) branch is a hierarchical vision transformer architecture, that uses window-based self-attention. Unlike convolutional networks, it incorporates global contextual information while being computationally efficient. They obtain features in the form of patches from the input image. These features are passed through the transformer architecture which handles relationships between patches to capture global dependencies. Each input image represented as \(X\in {R}^{H\times W\times C}\) (where h, w, and c represent the height, width of the image, and number of channels respectively) is first divided into non-overlapping patches and then mapped linearly into embeddings. This unique attention is known as Window Multi-Head Self-Attention (W-MSA) and acts directly on local windows by computing its attention as follows:

where Q, K, and V are query, key, and value respectively. The output feature of the swin transformer is \({F}_{Swin}\) which is computed as:

where \({f}_{\text{Swin}}\) indicate swin transformer model features and \({F}_{\text{Swin}}\) indicate final output obtained from swin transformer and \({\theta }_{\text{Swin}}\) denote the learnable parameters of the swin transformer.

Once features are extracted from both individual branches the results are combined into a single feature vector:

The concatenation of the hybrid model involves Low-level features that are part of the Efficient Net, Global contextual features in Swin Transformer; Hierarchical features and abstract features included in ResNet50. This fusion yields a rich and robust representation of Kvasir images with high resilience to noise.

After the dual branch feature extraction, the combined feature vector of all three models is passed through a fully connected layer for classification. This layer brings down the feature maps dimensionality of the concatenated features and produces final results for the classes.

where \({O}_{final}\) is the output prediction of each class, W and b are the weight matrix and bias vector, and σ shows the ReLU activation function. The first fully connected layer is 3 times the number of input classes because the features from all three models are combined. After passing through an activation ReLU function, there is another FC layer with the ability to reduce dimension to the number of classes to make the final prediction.

Multi-branch feature extraction techniques enhance performance for classification tasks. The framework consists of three different branches with the integration of DCNN EfficientNet-B3 and ResNet-50 with Swin Transformer, respectively. EfficientNet-B3 enables precise identification of fine details while using an efficient parameter, ResNet-50 obtains deep hierarchical features, which increases model resistance toward various medical image variations. Whereas, Swin Transformer employs self-attention operations to discover and integrate contextual relationships from input data. Different feature representations are obtained with these diverse models, allowing our model to develop a comprehensive understanding that improves GI track representation. This multi-branch feature extraction model extracts both local and global patterns that help to enhance classification results with explainable feature representation and also minimize redundant features. The explainable features are made possible through Grad-CAM, thereby supporting clinical decision-making processes.

The final stage of the model further refines how accurate the final predictions are by combining the results of several base classifiers. It employs meta loss function to integrate the base classifiers for aggregation of losses thereby making the final prediction as follows:

where \(L_{meta} \left( {y,\hat{y}} \right)\) is meta-loss for the predicted output \(\hat{y}\) and actual output \(y\), \(n\) shows the number of base classifiers, \({w}_{i}\) indicates the class weights, L is the Cross-entropy loss for each base classifier \({C}_{i}\left(x\right)\), g represents the aggregating loss function and \({L}_{final}\) is the final loss.

Meta-classifier ensures that each output contributed by a base classifier is properly scaled to give better performance. Moreover, the last prediction is refined from the output of all the classifiers, reducing the rate of errors. Combination of stacked machine learning classifiers with meta-loss enhances system predictions and lowers generalization mistakes and enhances decision boundary performance. Three decision boundary algorithms are employed independently and extract patterns, resulting in predictive modeling. XGBoost, LightGBM, and SVM are used together as a stacked ensemble, XGBoost establishes effective models that recognize complex non-linear relations between variables, LightGBM provides fast model execution and enhances the model, and SVC achieves better generalization results. Multiple classifiers are combined into a single prediction which improves the classification results. However, traditional loss functions process all the input samples equally, but medical diagnosis requires critical supervision for false negative results. Learning process adjustment through meta-loss uses sample difficulty assessments to give higher weights to cases that are incorrectly classified or complex to understand during optimization. Integration of stacked classifiers produces better diagnostic results while decreasing errors and creates a reliable diagnostic system which healthcare professionals can apply during clinical work.

Takeaway 3: A meta-classifier enhances medical diagnosis by refining predictions, enhancing decision boundaries, reducing errors, and improving diagnostic reliability |

Grad-CAM visualization

Grad-CAM makes AI-driven GI tract disease detection easier for medical practitioners by offering visual explanations of model predictions. The system produces heatmaps that show which image areas are more important while making its prediction. Visual explanations Grad-CAM provides AI predictions, enabling clinicians to develop more trust and utilize AI technology efficiently in practical medical applications. Grad-CAM also evaluates incorrect diagnoses through error analysis that improve model training procedures and data quality standards. Medical professionals can also take advantage of AI-assisted second opinions through this system as it provides both clinical decision support and educational resources for training medical professionals about AI-driven diagnostics.

For the interpretation of class predictions by the model, Grad-CAM (Gradient-weighted Class Activation Mapping) is applied. It is used to understand which part of the input image influences the model prediction most. It operates by using gradients of the targeted class score, \({y}_{c}\) (from the final fully connected layer) propagating back through the architecture to the final convolutional layer of the branches such as EfficientNet B3, ResNet50, or Swin Transformer and their feature maps \({A}^{k}\).The weights \({\alpha }_{k}\) are then element-wise multiplied with the feature maps \({A}^{k}\) to generate the Grad-CAM heatmap as follows:

In the proposed architecture, the heatmap in Fig. 2 reveals the interesting regions of the image on which the hybrid model focuses while making decisions for better interpretation across the feature extractors. The visualizations demonstrate how features localized and global features contribute to classification. Thus, the hybrid architecture through the integration of the features minimizes misclassification and exploits the meta-classifier in providing more accurate prediction from the base classifiers. Moreover, the Grad-CAM maps enhance the explanation of the model’s decisions while pinpointing what areas of the image are employed in the prediction.

Takeaway 4: Grad-CAM provides the visual representation of predictions that increase the trust of doctors and assist them in decision making |

Results and discussion

The Kvasir dataset36 of images is endoscopic images obtained in the GI tract, hierarchically divided into three primary and critical pathologies. It has also been classified into two categories regarding the endoscopic polyp removal procedure. Specially trained endoscopists were involved in the classification and annotation of this data sample. The dataset comprises 4000 endoscopic images of the GIT and the number of images per class is 500. For experimental use, the data set split is 70%, for training and 30%, for testing. For model evaluation/testing, both the Kvasir and private dataset are utilized to ensure robustness and generalization. The study has been performed on Google Collab, an online tool for running Python code. T4 GPU for Colab is utilized for this purpose, and Google Chrome is used as a browser. To fine-tune this proposed model, hyperparameters are selected with batch size of 16 for optimal memory optimization through 25 epochs with a learning rate set at 0.001 for stable optimization. Normal weight initialization combined with L2 regularization (λ = 0.0001) for class-wise performance.

The proposed model has shown a precision of 93.58% with a recall of 93.43% and F1-Score of 93.41%. The proposed model has achieved an accuracy of 93.43%. Table 2 shows class-wise results of the proposed model. This table shows that the model has performed very well for each class. This table shows the Precision, Recall, and F1-Score of each class. Each class has shown more than 88% precision, with the highest precision of 97%. While each class has shown more than 84% recall, with the highest recall of 97%. Similarly, each class has shown more than 89% F-1 score with the highest F-1 score of 97%. Class-wise results show a significant reduction in FNR while dealing with high-risk classes including ulcers and polyps. An improved diagnostic decision boundary developed by the combination of meta-loss and stacked classifiers provides better accuracy in medical diagnoses. Reduction in FNR in medical diagnostics is essential for timely treatment. Figure 3 shows the confusion matrix of the proposed model. It shows that the proposed model has shown better results for each class.

The class-wise result of the proposed model is analyzed in a heat map in Fig. 4. This result shows that all classes performed well for the model and have shown good results. The high correlation in values suggests that the proposed model has performed much better for each class.

The proposed model has been trained for 25 epochs. Below Fig. 5 shows the accuracy of training and validation over epochs. The result shows that the model has shown improvement in the accuracy of training and validation over epochs. The accuracy of validation has reached 93% while that of training has reached 97%.

The stacked model applied to the proposed model has shown a precision of 94.64% with a recall of 93.79% and an F1-score of 93.91%. The stacked model has achieved an accuracy of 93.79%. Below are the wise results of the proposed model. This table shows that the model has performed very well for each class. Table 3 shows the Precision, Recall, and F1-score of each class. Each class has shown more than 72% precision, with the highest precision of 100% while each class has shown more than 81% recall, with the highest recall of 100%. Similarly, each class has shown more than 80% F-1 score, with the highest F-1 score of 100%. Figure 6 shows the confusion matrix of the proposed model. It shows that the proposed model has shown better results for each class.

The class-wise result of the stacked model is analyzed in the heat map in Fig. 7 . This result shows that all classes performed well for the stacked model which is applied to the proposed model. The high correlation in values suggests that the stacked model has shown much better for each class.

The stacked model of ML classifiers based on the loss function is applied to the proposed model. A stacked Model is applied to the original model to improve results as it is combined with the strength of multiple models. Figure 8 shows a bar plot comparison of results before and after stacking. It can be clearly shown that the stacking has improved results. The result of the model is analyzed and compared in the heatmap, as shown in Fig. 9 . This result shows that the model has improved its performance after stacking.

In Fig. 10, performance based on recall before and after stacking is compared across different categories. There is a clear gap between the recall percentage before applying stacking and after stacking, which indicates a strong ability for accurate identification of class instances. These results suggest that these classes are more prone to misclassification. This variation after stacking highlights the tendency for the model to perform better after stacking.

Figure 11 compares performance based on precision before and after stacking across different categories. There is a clear gap between the recall percentage before and after stacking, which indicates a strong ability to identify class instances accurately. These results suggest that these classes are more prone to misclassification. This variation after stacking highlights the tendency for the model to perform better after stacking.

Figure 12 compares the F-1 Score across categories before and after stacking. Infinity and similar measures, along with the large improvement in recall pre-stacking and post-stacking, indicate good class instance identification capability. These results indicate that these classes are misclassified more frequently. There is a difference after stacking the model is more likely to work better after stacking.

In Fig. 13 class-wise results are illustrated using a heat map where different intensity colors represent different performance measures such as recall, precision, and F1-score. In heat maps usually dark shades represent the highest scores, similarly, in this head map dark red shows the classes that acquire the highest results whereas the lighter shades are used to describe the areas where the model does not perform well. As illustrated in the heat map, Dyed-lifted-polyps show overall better performance in all metrics indicating that this class is best across all-in-one glance. This visual representation helps to identify the model’s behavior against targeted improvements.

A comparison of FNR with and without stacking is illustrated in Fig. 14. This graph indicates the reduction in FNR when stacking is applied. This shows that the stacking technique is effective in the reduction of false negative occurrences. This graph also increases our confidence while choosing stacking as the best technique and enhancing model performance.

Takeaway 5: The stacked machine learning classifiers improved the diagnostic efficacy of the model, and there is a notable increase in the model’s performance metrics accuracy, precision, recall, and F1-score and reduction in rates of false negatives is also reported |

Figure 15 shows a heat map comparison of FNR before and after stacking. Both these diagrams show that FNR is reduced in most classes after stacking, while some classes have shown 0% FNR. This shows that stacking played a vital role in reducing FNR.

A comparative analysis of this proposed hybrid model with other models is presented in Table 4 which presents different performance parameters in terms of MCC, training time, inference time, and other matrices. The Hybrid Model archives an accuracy of 92.93% with a balanced MCC of 0.9195 but the cost of training time increases.

An explainable AI technique Grad Cam evaluation is applied for analysis of decision-making. It shows features that were used by models while making predictions. Figure 16 shows a grad cam evaluation of the proposed model, and it has been clearly shown that the model has used the most useful features for classification. This diagram shows the heatmap overlay where red and yellow color shows the most impactful part and only useful features are used for making predictions.

The proposed model is compared with different SOTA models in Table 5. This proposed model has achieved an accuracy of 93.79%, which is much higher than SOTA methods and also provides higher outcomes in terms of precision, recall, and f-1 score. The proposed model has improved more than 3% accuracy compared to the SOTA model and also explains the features that are most active in model prediction, which shows model interpretability.

The implemented framework reduces false negative medical diagnoses in GI tract disease evaluation that contribute to real-world implications for early disease detection and timely treatment. This deep-based Explainable AI model also provides medical professionals with accurate disease prediction and visual understanding. The main additions to our research include EfficientNet-B3 and ResNet-50 along with the Swin transformer are used to extract detailed features and complete contextual information that improve diagnostic classification accuracy. Grad-CAM technology adds explainability to AI systems because it enables medical professionals to examine algorithm-based decisions that establish trust in diagnostic automation. Stacked Machine Learning Classifiers are implemented with Meta-Loss by coupling XGBoost with LightGB and SVC through ensemble learning operations that improve classification boundary accuracy and generalization. The combination of DCCN with Swin transformer and Explainable AI enhances diagnostic precision while assisting medical decisions and decreases diagnosis errors in practical healthcare settings. Although this proposed model achieves better accuracy and also visualizes features, there is a key limitation that is time complexity, extensive time is required for computational operations performed through this model. This can be a challenge to process a large amount of data or in an environment with limited computational resources. Performance of the proposed model may also depend on specialized hardware. The ablation study of the proposed model is described in Table 6.

Conclusion

This research provides a way for more efficient, accurate, and reliable diagnostic processes in clinical settings by reducing the possibilities for human error, and as such providing a trustworthy explainable AI-driven tool.odels and ViT that enhance feature extraction and classification. The stacked machine learning classifiers are also used that improved the diagnostic efficacy of the model, and notable increases in the model’s performance metrics accuracy, precision, recall, and F1-score, as well as a decrease in the rates of false negatives, have been reported. The critical aspect of this research is the implementation of XAI approaches, which address the often criticized “black box” characteristic of deep learning models. Then the results are justified with a visual representation through Grad-CAM that enhances the trust and model adoption.

Theoretically, this proposed system enhances explainability of medical image through the Grad-CAM and other interpretative processes, this model can shed light on the rationale behind certain decisions, allowing the results to be contextually appreciated by clinicians. Grad-CAM provides the visual representation of predictions that increase the trust of doctors and assist them in decision making. They can also apply this technique as a second thought for their decisions. Although this hybrid model enhances medical diagnosis and improves classification and feature extraction.

The hybrid model proposed in this study focuses on the improvement of not only precision but also the interpretability of diagnostic models because medical decision-making has both aspects as essential factors. The success of this type of hybrid model indicates that the model will be useful for healthcare professionals in the early diagnosis of stomach or gastrointestinal disorders, hence bettering patient results. This research provides a way for more efficient, accurate, and reliable diagnostic processes in clinical settings by reducing the possibilities for human error, and as such providing a trustworthy explainable AI-driven tool.

One major limitation of this proposed model is the computational resources complexity while preprocessing high resolution images. In the future we will focus to enhance its computational power so this model can perform better on high resolution images. More extensive applications may include further research combining DL with XAI in particular within broader clinical applications and further validation using real-world datasets. The code is available here.

Data availability

The datasets analysed during the current study are publicly available in the Kvasir dataset repository and can be accessed at https://www.kaggle.com/datasets/meetnagadia/kvasir-dataset. Additionally, the code utilized for this study is provided in the conclusion section of the article.

References

Hunt, R. H. et al. The stomach in health and disease. Gut 64(10), 1650–1668 (2015).

Greenwood-Van Meerveld, B., Johnson, A.C., Grundy, D. Gastrointestinal physiology and function. Gastrointestinal pharmacology 1–16 (2017).

Renjith, V. & Judith, J. A Review on Explainable Artificial Intelligence for Gastrointestinal Cancer using Deep Learning. in 2023 Annual International Conference on Emerging Research Areas: International Conference on Intelligent Systems (AICERA/ICIS) 1–6 (EEE, 2023).

Milosavljević, T., Kostić-Milosavljević, M., Krstić, M. & Sokić-Milutinović, A. Epidemiological trends in stomach-related diseases. Dig. Dis. 32(3), 213–216 (2014).

Quaglio, A. E. V., Grillo, T. G., De Oliveira, E. C. S., Di Stasi, L. C. & Sassaki, L. Y. Gut microbiota, inflammatory bowel disease and colorectal cancer. World J. Gastroenterol. 28(30), 4053 (2022).

Vattikuti, M. C. A comprehensive review of ai-based diagnostic tools for early disease detection in healthcare. Res. Gate J. 6(6) 2020.

Chadebecq, F., Lovat, L. B. & Stoyanov, D. Artificial intelligence and automation in endoscopy and surgery. Nat. Rev. Gastroenterol. Hepatol. 20(3), 171–182 (2023).

Ali, S. Where do we stand in AI for endoscopic image analysis? Deciphering gaps and future directions. NPJ Digit. Med. 5(1), 184 (2022).

Hamet, P. & Tremblay, J. Artificial intelligence in medicine. Metabolism 69, S36–S40 (2017).

Sasaki, Y., Hada, R., Yoshimura, T., Hanabata, N., Mikami, T. & Fukuda, S. Computer-aided estimation for the risk of development of gastric cancer by image processing. in Artificial Intelligence in Theory and Practice III: Third IFIP TC 12 International Conference on Artificial Intelligence, IFIP AI 2010, Held as Part of WCC 2010, Brisbane, Australia, September 20–23, 2010. Proceedings 3 197–204 (Springer, 2010).

Bangash, J. I. et al. Multiconstraint-aware routing mechanism for wireless body sensor networks. J. Healthc. Eng. 2021(1), 5560809 (2021).

Hirasawa, T. et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer 21, 653–660 (2018).

Liu, X. et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 1(6), e271–e297 (2019).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518(7540), 529–533 (2015).

Sadeghi, Z. et al. A review of explainable artificial intelligence in healthcare. Comput. Electr. Eng. 118, 109370 (2024).

Weber, L., Lapuschkin, S., Binder, A. & Samek, W. Beyond explaining: Opportunities and challenges of XAI-based model improvement. Inf. Fusion 92, 154–176 (2023).

Minh, D., Wang, H. X., Li, Y. F. & Nguyen, T. N. Explainable artificial intelligence: A comprehensive review. Artif. Intell. Rev. 55, 1–66 (2022).

Wong, G. L. H. et al. Machine learning model to predict recurrent ulcer bleeding in patients with history of idiopathic gastroduodenal ulcer bleeding. Aliment. Pharmacol. Ther. 49(7), 912–918 (2019).

Majid, A. et al. Classification of stomach infections: A paradigm of convolutional neural network along with classical features fusion and selection. Microsc. Res. Tech. 83(5), 562–576 (2020).

Khan, M. A. et al. Gastrointestinal diseases segmentation and classification based on duo-deep architectures. Pattern Recogn. Lett. 131, 193–204 (2020).

Lee, S.-A., Cho, H. C. & Cho, H.-C. A novel approach for increased convolutional neural network performance in gastric-cancer classification using endoscopic images. IEEE Access 9, 51847–51854 (2021).

Amin, J., Sharif, M., Gul, E. & Nayak, R. S. 3D-semantic segmentation and classification of stomach infections using uncertainty aware deep neural networks. Complex Intell. Syst. 8(4), 3041–3057 (2022).

Shaw, P., Sankaranarayanan, S. & Lorenz, P. Early esophageal malignancy detection using deep transfer learning and explainable AI. in 2022 6th International Conference on Communication and Information Systems (ICCIS) 129–135 (IEEE, 2022).

Nouman Noor, M., Nazir, M., Khan, S. A., Ashraf, I. & Song, O.-Y. Localization and classification of gastrointestinal tract disorders using explainable AI from endoscopic images. Appl. Sci. 13(15), 9031 (2023).

Alhajlah, M. Automated lesion detection in gastrointestinal endoscopic images: Leveraging deep belief networks and genetic algorithm-based Segmentation. Multimed. Tools Appl. https://doi.org/10.1007/s11042-024-20439-w (2024).

Chen, N. A Se-ResNet50 Based Deep Learning Method for Stomach and Tract Segementation. in 2024 4th International Conference on Neural Networks, Information and Communication (NNICE) 1744–1747 (IEEE, 2024).

Ahamed, M. F. et al. Detection of various gastrointestinal tract diseases through a deep learning method with ensemble ELM and explainable AI. Expert Syst. Appl. 256, 124908 (2024).

Su, Y. et al. Colon cancer diagnosis and staging classification based on machine learning and bioinformatics analysis. Comput. Biol. Med. 145, 105409 (2022).

Li, H. et al. UCFNNet: Ulcerative colitis evaluation based on fine-grained lesion learner and noise suppression gating. Comput. Methods Programs Biomed. 247, 108080 (2024).

Chen, S. et al. Evaluation of a three-gene methylation model for correlating lymph node metastasis in postoperative early gastric cancer adjacent samples. Front. Oncol. 14, 1432869 (2024).

Mohammed, M. A. & Ali, A. M. Enhanced cancer subclassification using multi-omics clustering and quantum cat swarm optimization. Iraqi J. Comput. Sci. Math. 5(3), 37 (2024).

Ahamed, M. F., Shafi, F. B., Nahiduzzaman, M., Ayari, M. A. & Khandakar, A. Interpretable deep learning architecture for gastrointestinal disease detection: A Tri-stage approach with PCA and XAI. Comput. Biol. Med. 185, 109503 (2025).

Ahamed, M. F. et al. Automated detection of colorectal polyp utilizing deep learning methods with explainable AI. IEEE Access 12, 78074–78100 (2024).

Sarkar, O. et al. Multi-scale CNN: An explainable AI-integrated unique deep learning framework for lung-affected disease classification. Technologies 11(5), 134 (2023).

Himel, G. M. S., Hasan, M. S., Salsabil, U. S. & Islam, M. M. MedLingua: A conceptual framework for a multilingual medical conversational agent. MethodsX 12, 102614 (2024).

Pogorelov, K. et al. Kvasir: A multi-class image dataset for computer aided gastrointestinal disease detection. in Proceedings of the 8th ACM on Multimedia Systems Conference 164–169 (2017).

Razzak, M. I., Naz, S., Zaib, A. Deep learning for medical image processing: Overview, challenges and the future. in Classification in BioApps: Automation of decision making 323–350 (2018).

Acknowledgements

The Authors are thankful for Prince Sattam bin Abdulaziz University project number (PSAU/2025/R/1446).

Author information

Authors and Affiliations

Contributions

J.H.S. and F.D. provided the problem statement and proposed the model. R.S. and T.A. contributed to technical writing and analytical writing. M.H. worked on implementation and result analysis. M.A. performed technical proofreading and result gathering.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Dahan, F., Shah, J.H., Saleem, R. et al. A hybrid XAI-driven deep learning framework for robust GI tract disease diagnosis. Sci Rep 15, 21139 (2025). https://doi.org/10.1038/s41598-025-07690-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-07690-3