Abstract

A large share of road accidents result from driver distraction, and road user safety is a global concern. The proposed approach integrates deep learning-based driver distraction detection with real-time road object recognition to address this problem jointly. Driver behavior is categorized into visual, manual (physical), and cognitive distraction using Convolutional Neural Networks (CNNs) and transfer learning, which improves accuracy while consuming fewer computational resources. A YOLO (You Only Look Once) detector identifies vehicles, pedestrians, lane markers, and traffic signals in real time. The system thus has two modules, (1) distraction detection and (2) road scene recognition, whose outputs are combined to evaluate driving scenarios. A decision-making module evaluates the combined data to assess danger levels and trigger timely warnings or corrective actions. The integrated solution is intended to enable a context-aware Advanced Driver Assistance System (ADAS) that warns drivers of distractions and hazards, increasing overall situational awareness and helping to reduce accidents. The methodology is supported by annotated images and videos of driver behavior and road situations, including rain, fog, and low-light scenarios. Data augmentation, model optimization, and transfer learning are used to make the system reliable across a range of driving scenarios. Experiments on the State Farm Distracted Driver Dataset and the KITTI and MS COCO benchmarks demonstrated improved accuracy and efficiency. Integrating driver monitoring with road-aware perception yields a comprehensive, multi-target solution that makes driving safer and builds on existing ADAS technology. Together, CNN and YOLO deep learning advances establish a real-time and scalable road safety system.
The system's practicality was further validated through real-time embedded deployment on an NVIDIA Jetson Xavier NX platform, achieving 25 frames per second (FPS) with reduced latency and memory footprint, which demonstrates feasibility for resource-constrained ADAS. This paper presents a domain-specific driver monitoring module and a knowledge-based road hazard recognition model that more closely connect autonomous driving technology with human driver behavior.


Introduction

Background

Road accidents occur frequently across the globe, which highlights the need for better safety measures. Driver distraction is widely reported as a major cause of traffic accidents, with behaviors such as texting, eating, or looking away from the road accounting for a considerable portion of crashes1. Conventional driver monitoring and road hazard detection systems are typically limited in scope because they focus on either the driver's behavior or the surrounding environment, but not on both holistically2.

Deep learning approaches, including Convolutional Neural Networks (CNNs) and real-time object detection algorithms (e.g., YOLO, You Only Look Once), offer potential solutions to these challenges. These technologies enable reliable and efficient detection of both driver behaviors and environmental risks in varied conditions such as low light, rain, and urban traffic3,4.

Problem statement

Existing road safety solutions often do not integrate driver distraction detection with road object recognition. Many systems target either the driver's state (e.g., gaze tracking, activity classification) or road hazards (e.g., vehicles, pedestrians, traffic signs), which limits overall situational awareness5. This fragmentation reduces the value of Advanced Driver Assistance Systems (ADAS), because they cannot provide a unified view of the driver and the driving scene to help prevent accidents in real time6.

Objectives

This research aims to design and develop a holistic system that concurrently detects driver distractions and identifies road hazards using deep learning. More specifically, the proposed system will:

Use Convolutional Neural Networks (CNNs) to classify driver behavior into one of three categories (visual, manual, and cognitive distraction), using transfer learning to improve accuracy when training data are limited7,8.

Use YOLO for real-time detection of road objects (e.g., vehicles, pedestrians, traffic signs, lane markings), accurately localizing and identifying them in various environmental conditions9,10.

Integrate the outputs of the distraction detection and road object recognition modules to assess situational risk and provide actionable real-time feedback to the driver11.

Scope and significance

This integration bridges a vital gap in present safety systems by fusing driver distraction detection with road object identification within a unified framework. The proposed system therefore has the potential to reduce road accidents and to extend ADAS capabilities by enhancing situational awareness12. Building on pre-trained models such as ResNet and YOLOv4 through transfer learning also allows the system to be deployed cost-effectively while achieving high accuracy with lower computational effort13.

Additionally, the scalability of this approach makes it relevant to the evolution of autonomous driving technologies. By integrating human-centric monitoring with external hazard detection, the model bridges the gap between driver assistance systems (ADAS) and fully autonomous systems, supporting safer and smarter transportation solutions and a smoother transition toward vehicle automation14.

Literature review

Overview of existing research

Driver distraction detection and road object recognition have been well studied in isolation within the intelligent transport systems domain. Driver distraction research has mainly focused on behaviors such as texting, eating, and visual inattention, using image-based and machine learning methods15. Road object detection, in contrast, has advanced remarkably with deep learning models such as YOLO and Faster R-CNN, enabling real-time detection of vehicles, pedestrians, traffic signs and lane markings16,17. Current systems are often isolated, focusing on either driver monitoring or environmental awareness, which severely restricts their usefulness in complex real-world situations.

Key techniques and their limitations

Driver distraction detection

Several studies have employed CNNs for driver distraction detection and achieved high accuracy, particularly for individual distraction types such as manual and visual distraction. For example, various machine learning models have reached strong benchmark results on the State Farm Distracted Driver Dataset18. However, such systems often generalize poorly across circumstances because they do not account for varying lighting conditions, occlusions, or driver demographics19.

Road object recognition

Since the first version of YOLO, YOLO-based methods have been a popular choice for road object detection because they combine speed with accuracy. Although YOLOv3 and YOLOv4 work well in urban environments, their performance degrades in adverse conditions such as fog, rain, or night-time driving20. Additionally, object recognition systems require large labeled datasets for training, and the cost of collecting and annotating such data can be very high21.

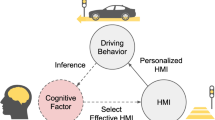

Importance of integrating these systems

By combining driver distraction detection with road object recognition, the proposed method overcomes the limitations of standalone systems and provides a more holistic view of the driving scenario. Integrated systems can simultaneously estimate driver state and environmental hazards, providing critical input for real-time decision making in Advanced Driver Assistance Systems (ADAS)7. For example, a system that detects a distracted driver while also recognizing a pedestrian on the road can issue timely warnings to prevent an accident3. Furthermore, the integrated system will benefit projects such as autonomous vehicles, where human supervision and awareness of the surroundings are critical for safe operation.

Role of transfer learning

Transfer learning makes it possible to reuse features learned by powerful deep learning models. Using pre-trained models such as ResNet, VGG, and YOLO reduces training time and system cost while providing high accuracy even with limited domain-specific data8. For example, transfer learning can enhance driver distraction detection models by transferring knowledge learned for general tasks such as object recognition on large databases like ImageNet18. Similarly, YOLO models pre-trained on MS COCO and other datasets have proven effective at detecting road objects under changing environmental conditions19.

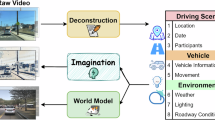

Proposed methodology

In this research, we propose a deep learning-based integrated system that detects driver distractions and recognizes road objects. This section discusses the data requirements, model configurations, integration strategies, and deployment plans for the system.

Data collection and preprocessing

The complete data preprocessing pipeline is depicted in Fig. 1, which outlines the essential steps for constructing a robust system. These steps increase data quality, improve model generalization, and maintain consistency between the training and testing phases:

Dataset requirements

The system processes annotated images and videos covering different driver behaviors (texting, eating, safe driving) and road scenarios (vehicles, pedestrians, lane markings)1.

Public datasets, including the State Farm Distracted Driver Dataset1 and the MS COCO/KITTI datasets2, provide rich inputs for model design, training and testing.

Data augmentation

Image augmentation by random cropping, rotation, brightness adjustment, flipping, etc.2 is performed to increase the diversity of the training data and to improve the generalization of the models.

Preprocessing steps

Input images of inconsistent size are resized and standardized.

Bounding box annotations are used to label objects and behavior cues for YOLO-based detection and CNN-based classification3.

Normalization of input images

Here, input images are normalized to maintain consistency during training:

$$I' = \frac{I - \mu}{\sigma}$$

where:

- I′ is the normalized image,
- I is the original pixel intensity,
- μ is the mean pixel intensity,
- σ is the standard deviation of pixel intensities.
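As a minimal sketch of the normalization above, the following library-free Python function applies I′ = (I − μ)/σ to a grayscale image stored as a list of rows. It assumes per-image statistics; the text does not state whether μ and σ are computed per image or over the whole dataset, and the function name is illustrative.

```python
import statistics


def normalize_image(pixels):
    """Return I' = (I - mu) / sigma for a grayscale image given as a list of rows.

    Assumption: mu and sigma are computed over this image's own pixels.
    """
    flat = [p for row in pixels for p in row]
    mu = statistics.fmean(flat)       # mean pixel intensity
    sigma = statistics.pstdev(flat)   # population standard deviation
    if sigma == 0:
        # A constant image has no spread; return zeros instead of dividing by 0.
        return [[0.0 for _ in row] for row in pixels]
    return [[(p - mu) / sigma for p in row] for row in pixels]


# Usage: a tiny 2x2 "image" with 8-bit intensities.
normalized = normalize_image([[0, 64], [128, 255]])
```

After normalization the pixel values have zero mean and unit standard deviation, so images captured under different exposure levels reach the network on a comparable scale.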

Driver distraction detection using CNN

Architecture design

The CNN architecture consists of multiple layers for feature extraction and classification. The layers are intended to detect cues such as head orientation, gaze direction, and hand movements7.

Transfer learning

Pre-trained models such as VGG-16 and ResNet, trained on large image collections such as ImageNet, are fine-tuned for driver behavior detection8 by minimizing the categorical cross-entropy loss:

$$L_{CE} = -\sum_{i=1}^{N} {y}_{i}\log\left(\widehat{{y}_{i}}\right)$$

where:

- \({y}_{i}\) is the actual class label,
- \(\widehat{{y}_{i}}\) is the predicted probability,
- N is the number of classes.
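The cross-entropy loss can be checked numerically with a short sketch. The helper names are hypothetical, and the small `eps` clip is an assumption added to keep log(0) finite; in practice, frameworks such as TensorFlow and PyTorch provide their own built-in cross-entropy losses for training.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """L = -sum_i y_i * log(y_hat_i) over the N classes.

    y_true: one-hot target vector of length N.
    y_pred: predicted class probabilities of length N.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must both have length N")
    # Clip probabilities away from 0 so log() stays finite.
    return -sum(y * math.log(max(p, eps)) for y, p in zip(y_true, y_pred))


# Usage with N = 3 hypothetical classes; the true class is index 1.
probs = softmax([0.5, 2.0, -1.0])
loss = categorical_cross_entropy([0, 1, 0], probs)
```

With a one-hot target only the true class contributes, so the loss reduces to −log of the probability assigned to the correct behavior class.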

Feature extraction

Subtle features, such as eye-gaze patterns, head position, and hand positions18, are extracted to classify the type of distraction as visual, manual or cognitive. Each convolutional layer computes a feature map F as:

$$F = \sigma(W * X + b)$$

where:

- X is the input image,
- W are the learned convolutional weights,
- ∗ represents the convolution operation,
- b is the bias term,
- σ is the activation function (ReLU or sigmoid).
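To make F = σ(W ∗ X + b) concrete, the sketch below computes one single-channel feature map with a "valid" (no padding) convolution followed by ReLU. It is illustrative only: real CNN layers operate on many channels at once, and, like most deep learning frameworks, this code computes cross-correlation (the kernel is not flipped), which is what CNN libraries call convolution. All function names are hypothetical.

```python
def conv2d_valid(x, w, b=0.0):
    """Single-channel 'valid' 2D convolution (cross-correlation) plus bias: W * X + b."""
    kh, kw = len(w), len(w[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    out = []
    for i in range(oh):
        row = []
        for j in range(ow):
            # Dot product of the kernel with the image patch anchored at (i, j).
            acc = sum(w[u][v] * x[i + u][j + v] for u in range(kh) for v in range(kw))
            row.append(acc + b)
        out.append(row)
    return out


def relu(fmap):
    """Element-wise ReLU activation: max(0, v)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def feature_map(x, w, b=0.0):
    """F = sigma(W * X + b) with sigma = ReLU."""
    return relu(conv2d_valid(x, w, b))


# Usage: a 2x2 diagonal-difference kernel on a 3x3 image gives a 2x2 map.
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
fmap = feature_map(image, kernel, b=5.0)
```

The bias shifts the pre-activation values before ReLU; without it, this kernel's uniformly negative responses would all be clipped to zero.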

Specifically, the CNN detects driver distraction through subtle behaviors such as head pose, gaze direction, and hand movements across consecutive frames. As shown in Fig. 2, the CNN architecture is fine-tuned using transfer learning for distraction-specific characteristics. Models originally trained on large datasets with many classes, for example VGG-16 and ResNet trained on ImageNet, are used for this purpose. As shown in Fig. 3, transfer learning adapts these models by modifying their final layers and then training them for a small number of epochs on the specific task, in this case driver behavior classification.

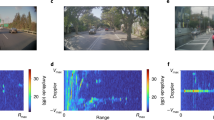

Road object detection using YOLO

YOLO configuration

The YOLO (You Only Look Once) framework is used for real-time detection of objects along the road, namely pedestrians, vehicles, traffic signs and lane markings21. Its ability to deliver rapid and accurate object detection makes it well suited to real-time applications.

Transfer learning

Fine-tuning takes pre-trained YOLO models (e.g., YOLOv3 or YOLOv4 trained on the MS COCO dataset) and adapts them to recognize objects in the specific road scenes in which they will be deployed9.

Environmental considerations

The training data include scenarios such as night-time driving, fog, and rain so that performance remains robust in harsher conditions16.

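The detector is trained with a YOLO-style loss; its localization and confidence terms are:

$$L = \lambda_{coord}\sum_{i=1}^{s^{2}}\sum_{j=1}^{B}\mathbb{1}_{ij}^{obj}\left[(x_i-\hat{x}_i)^2+(y_i-\hat{y}_i)^2\right] + \sum_{i=1}^{s^{2}}\sum_{j=1}^{B}\mathbb{1}_{ij}^{obj}\left(C_i-\hat{C}_i\right)^2 + \lambda_{noobj}\sum_{i=1}^{s^{2}}\sum_{j=1}^{B}\mathbb{1}_{ij}^{noobj}\left(C_i-\hat{C}_i\right)^2$$

where:

- x, y are the bounding box center coordinates (hats denote predictions),
- C is the object confidence score,
- \({\lambda }_{coord}\), \({\lambda }_{noobj}\) are weight parameters that balance the terms,
- \({s}^{2}\) is the number of grid cells (an s × s grid),
- B is the number of bounding boxes per grid cell,
- \(\mathbb{1}_{ij}^{obj}\) (\(\mathbb{1}_{ij}^{noobj}\)) indicates whether box j in cell i is (is not) responsible for an object.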
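A small sketch of these loss terms follows. It covers only the center-coordinate and confidence terms that the definitions name (the width/height and class-probability terms of the full YOLO loss are omitted), and the defaults λ_coord = 5 and λ_noobj = 0.5 are taken from the original YOLO paper as an assumption, since this section does not state the values used. The function name and data layout are hypothetical.

```python
def yolo_partial_loss(pred, target, obj_mask, lambda_coord=5.0, lambda_noobj=0.5):
    """Center-coordinate and confidence terms of a YOLO-style loss.

    pred, target: nested lists indexed [cell][box] -> (x, y, C)
        for s*s grid cells and B boxes per cell.
    obj_mask: nested list [cell][box] -> True if that box is responsible
        for a ground-truth object (the 1_ij^obj indicator).
    """
    loss = 0.0
    for cell_pred, cell_tgt, cell_mask in zip(pred, target, obj_mask):
        for (x, y, c), (tx, ty, tc), has_obj in zip(cell_pred, cell_tgt, cell_mask):
            if has_obj:
                # Localization error, up-weighted by lambda_coord.
                loss += lambda_coord * ((x - tx) ** 2 + (y - ty) ** 2)
                # Confidence error for boxes that contain an object.
                loss += (c - tc) ** 2
            else:
                # Confidence error for empty boxes, down-weighted by lambda_noobj.
                loss += lambda_noobj * (c - tc) ** 2
    return loss


# Usage: s = 1 (a single cell) with B = 2 boxes; only the first box holds an object.
pred = [[(0.5, 0.5, 0.9), (0.2, 0.3, 0.4)]]
target = [[(0.6, 0.5, 1.0), (0.0, 0.0, 0.0)]]
mask = [[True, False]]
loss = yolo_partial_loss(pred, target, mask)
```

The down-weighting of empty boxes matters because most grid cells in a road scene contain no object; without λ_noobj their confidence errors would dominate the gradient.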

The YOLO model fine-tuning process, which adjusts a pre-trained model to precisely identify road scene objects like cars, pedestrians, and lane markings, is depicted in Fig. 4. Here, we show the use of the transfer learning strategy in fine-tuning YOLO’s functionality for real-time object recognition in various operating environments.

Integration of driver distraction detection and road object recognition

Fusion of outputs

The CNN-based distraction detection and YOLO-based object detection modules capture different aspects of the driving environment, and their outputs are merged into a single representation of the driving scene11.

The system calculates a real-time risk level R as a weighted sum of the distraction score D (from the CNN) and the hazard score H (from YOLO):

$$R = \alpha D + \beta H$$

where:

- D is the CNN distraction probability score,
- H is the YOLO-detected object risk level,
- α and β are attention coefficients that can scale dynamically with scene complexity. Empirical tuning in this research found that α = 0.6 and β = 0.4 yielded the best performance across scenarios.

Decision-making module

Based on the detected road objects and the driver's state, a rule-based or machine learning-based decision-making module (e.g., Random Forest, SVM) evaluates the situation, identifies high-risk situations, and generates appropriate responses12.

Real-time alerts

Risk assessment allows the system to issue real-time alerts, such as warning messages and emergency messages13. The risk score is computed as:

$$R = \alpha D + \beta H$$

where D represents the CNN-derived distraction probability, and H indicates the hazard score computed from YOLO-detected objects, including their type and spatial proximity. The coefficients α = 0.6 and β = 0.4 were heuristically assigned based on their relative impact in driving risk scenarios, giving higher weight to driver behavior, consistent with reported safety studies7,8.

This equation is proposed by the authors as a synthetic fusion strategy to estimate contextual driving risk in the absence of pre-labeled risk ground truth in public datasets. It supports a three-level classification:

a) Safe if R < 0.4

b) Caution if 0.4 ≤ R < 0.7

c) Critical if R ≥ 0.7

Decision threshold for alerts

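To determine whether to issue an alert, the system evaluates:

$$\text{Alert} = \begin{cases} \text{Safe (no alert)} & R < T_{low} \\ \text{Caution} & T_{low} \le R < T_{high} \\ \text{Critical} & R \ge T_{high} \end{cases}$$

where \(T_{low}\) and \(T_{high}\) are risk thresholds (0.4 and 0.7 in the three-level classification above).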
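The risk score and threshold rule can be sketched in a few lines, assuming D and H are already normalized to [0, 1] and using α = 0.6, β = 0.4, T_low = 0.4 and T_high = 0.7 from the text. The function names are illustrative, not taken from the authors' implementation.

```python
def risk_score(d, h, alpha=0.6, beta=0.4):
    """R = alpha * D + beta * H, with D and H expected in [0, 1]."""
    if not (0.0 <= d <= 1.0 and 0.0 <= h <= 1.0):
        raise ValueError("D and H are expected to lie in [0, 1]")
    return alpha * d + beta * h


def alert_level(r, t_low=0.4, t_high=0.7):
    """Map a risk score R to Safe / Caution / Critical using T_low and T_high."""
    if r < t_low:
        return "Safe"
    if r < t_high:
        return "Caution"
    return "Critical"


# Usage: a highly distracted driver (D = 0.9) facing a moderate hazard (H = 0.5).
level = alert_level(risk_score(0.9, 0.5))
```

Because α + β = 1, R also stays in [0, 1], so the fixed thresholds remain meaningful regardless of which input dominates.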

Post-processing and risk assessment module

Following initial detection, a post-processing step smooths the risk assessment across adjacent frames using a sliding average over 5 frames. This suppresses false positives caused by abrupt scene changes. In addition, dynamic thresholding based on the proximity of detected objects and the type of distraction is applied to prioritize important warnings. The final classified alerts (Safe, Caution, Critical) are then presented in the user interface.
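The 5-frame sliding average can be sketched with a bounded deque. This is one possible implementation under stated assumptions: the class name is hypothetical, and during the first frames (before the window fills) it averages over the frames seen so far.

```python
from collections import deque


class RiskSmoother:
    """Sliding (moving) average of per-frame risk scores over a fixed window."""

    def __init__(self, window=5):
        if window < 1:
            raise ValueError("window must be >= 1")
        # A deque with maxlen drops the oldest score automatically.
        self._buf = deque(maxlen=window)

    def update(self, r):
        """Add the newest frame's risk score and return the smoothed value."""
        self._buf.append(r)
        return sum(self._buf) / len(self._buf)


# Usage: a single-frame spike (0.9) amid low-risk frames.
smoother = RiskSmoother(window=5)
smoothed = [smoother.update(r) for r in [0.2, 0.2, 0.9, 0.2, 0.2, 0.2]]
# The isolated spike is averaged down and never reaches the Critical threshold of 0.7.
```

The trade-off is latency: a genuine, sustained rise in risk also needs several frames to push the average over a threshold, which is why the window is kept short.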

Improved fusion strategy with attention mechanism

To further strengthen the coupling between the outputs of the driver distraction detection module (CNN) and the road object recognition module (YOLO), we propose a lightweight attention-based fusion mechanism.

Rather than weighting the two outputs equally, this mechanism allocates attention weights dynamically based on the contextual risk levels estimated by both modules.

The attention mechanism prioritizes driver behavior or environmental risks according to their contextual importance, enabling adaptive risk assessment across varied driving situations.
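The section does not give the attention formula, so the following is only one plausible sketch: it assumes α and β are obtained by a softmax over two scalar context scores (hypothetical inputs, for example produced by a small learned layer), which keeps α + β = 1 so R stays in [0, 1]. All names are illustrative.

```python
import math


def attention_weights(driver_context, scene_context, temperature=1.0):
    """Return (alpha, beta) from a softmax over two context scores.

    Higher driver_context shifts weight toward the distraction score D;
    higher scene_context shifts weight toward the hazard score H.
    """
    za, zb = driver_context / temperature, scene_context / temperature
    m = max(za, zb)  # subtract the max for numerical stability
    ea, eb = math.exp(za - m), math.exp(zb - m)
    return ea / (ea + eb), eb / (ea + eb)


def fused_risk(d, h, driver_context, scene_context):
    """R = alpha * D + beta * H with context-dependent weights."""
    alpha, beta = attention_weights(driver_context, scene_context)
    return alpha * d + beta * h


# Usage: a crowded scene (high scene_context) shifts weight toward H.
alpha, beta = attention_weights(driver_context=0.0, scene_context=1.0)
```

With a context-score difference of ln(1.5) ≈ 0.405 in favor of the driver, this reduces to the fixed weights α = 0.6 and β = 0.4 used in the rule-based fusion.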

Learning-based decision-making module

Besides rule-based alerting, we upgraded the decision-making module with machine learning classifiers, including:

- Random Forest (RF) for stable multi-scenario risk classification.
- Support Vector Machine (SVM) for accurate decision boundaries in ambiguous situations.

The computed risk score R is used as part of the feature vector F = [D, H, vehicle density, distraction type, object proximity] which forms the input to learning-based decision classifiers (RF and SVM). These classifiers are trained on synthetically labeled instances derived from the score-based thresholds described in the section "Real-time alerts".

Though heuristic, this approach enables the generation of contextual alert classes in the absence of manually annotated driving risk datasets and was found to yield robust performance (F1-score of 92.4%) when validated against baseline systems in the section "Comparative analysis and benchmarking of integration strategies".
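The synthetic labeling described above can be sketched as follows: each instance's label comes from the score-based thresholds, and its features form F = [D, H, vehicle density, distraction type, object proximity]. The integer encoding of distraction type and all names are hypothetical; the paper does not specify how categorical features are encoded.

```python
# Hypothetical integer encoding for distraction type (not specified in the paper).
DISTRACTION_TYPES = {"none": 0, "visual": 1, "manual": 2, "cognitive": 3}


def synthetic_label(d, h, alpha=0.6, beta=0.4, t_low=0.4, t_high=0.7):
    """Derive a Safe / Caution / Critical label from R = alpha * D + beta * H."""
    r = alpha * d + beta * h
    if r < t_low:
        return "Safe"
    if r < t_high:
        return "Caution"
    return "Critical"


def make_instance(d, h, vehicle_density, distraction_type, object_proximity):
    """Build F = [D, H, vehicle density, distraction type, object proximity] and its label."""
    features = [d, h, vehicle_density, DISTRACTION_TYPES[distraction_type], object_proximity]
    return features, synthetic_label(d, h)


# Usage: assemble a small training set of (features, label) pairs.
samples = [
    make_instance(0.9, 0.5, 12, "manual", 0.8),
    make_instance(0.1, 0.1, 3, "none", 0.1),
    make_instance(0.5, 0.5, 7, "visual", 0.4),
]
X = [f for f, _ in samples]
y = [label for _, label in samples]
```

The resulting `X` and `y` could then be passed to scikit-learn's `RandomForestClassifier` or `SVC` via `fit(X, y)`; for the SVM, one-hot encoding the distraction type and scaling the features would usually be preferable to a single integer code.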

Figure 5 illustrates the decision-making rationale that integrates the outputs of the driver distraction detection module and the road object recognition system. It relies on near real-time processing that combines behavioral and environmental data to produce detailed risk assessments and the corresponding action triggers, such as precautionary and emergency alerts.

Figure 5b shows the improved decision-making architecture, which fuses driver distraction detection and road object recognition results using a lightweight attention-based fusion mechanism. Dynamic weighting of behavioral and environmental risk factors produces an aggregated risk feature vector, which is then classified using Random Forest and Support Vector Machine (SVM) classifiers. This learning-based risk assessment allows the system to prioritize situational risks and distractions in near real time, providing more intelligent and context-sensitive alert generation classified into Safe, Caution, or Critical responses.

Real-time implementation and system deployment

Figure 6 depicts the end-to-end system deployment, from real-time data collection to alert generation. It shows how the optimized models run on embedded platforms within an Advanced Driver Assistance System (ADAS) to deliver low-latency inference, risk assessment, and user-friendly alerts under real-world operating conditions.

Optimization

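The models are deployed on embedded systems using model quantization and frameworks such as TensorRT to achieve real-time performance14. Weights are quantized from 32-bit floating point to 8-bit integers:

$$W_{int8} = \operatorname{round}\left(\frac{127 \cdot W_{fp32}}{\max\lvert W_{fp32}\rvert}\right)$$

where:

- \({W}_{int8}\) are the quantized weights,
- \({W}_{fp32}\) are the original floating-point weights.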
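As a sketch of the quantization formula, the following applies symmetric per-tensor INT8 quantization to a list of weights and dequantizes them again. It is illustrative only: TensorRT chooses its own INT8 scales (for example through calibration), and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization.

    scale = max|W_fp32| / 127;  W_int8 = round(W_fp32 / scale), clipped to [-127, 127].
    Returns the integer values and the scale needed to dequantize.
    """
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:
        # All-zero tensor: any scale works; use 1.0 to avoid division by zero.
        return [0] * len(weights), 1.0
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map INT8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


# Usage: quantize a few FP32 weights and reconstruct them.
weights = [0.3, -0.7, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each dequantized weight differs from the original by at most half a quantization step (scale / 2), which is the accuracy cost traded for smaller, faster integer arithmetic.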

Integration with ADAS

The system works alongside existing Advanced Driver Assistance Systems (ADAS) to monitor both the driver's actions and environmental dangers, improving situational awareness and accident prevention17.

User interface

A non-intrusive UI is designed to provide alert notifications without adding any distraction to the driver.

Transfer learning and model fine-tuning

Benefits of transfer learning

Transfer learning considerably reduces the computational time required compared with training a model from scratch.

Pre-trained models extract general features and are adapted to task-specific datasets, allowing high accuracy with less training data6.

Fine-tuning

The final few layers of the CNN and YOLO models are fine-tuned so that the models fit the characteristics of driver behaviors and road scenes, which often leads to better performance19. The fine-tuning objective is:

$$L = L_{task} + \lambda L_{pretrain}$$

where:

- \({L}_{task}\) is the task-specific loss (classification or detection),
- \({L}_{pretrain}\) is the pre-trained model's original loss,
- λ is a weighting factor.

Experiments and results

The following subsections detail the datasets, experimental settings, evaluation metrics, performance comparison, and visual analysis of the proposed method. These experiments aim to validate the performance of the integrated driver distraction detection and road object recognition system.

Datasets used

a. Driver behavior dataset:

- For driver distraction detection, the State Farm Distracted Driver Dataset7 is used. This dataset consists of annotated images of drivers performing different activities such as driving safely, texting, talking on the phone, and eating.
- It contains 10 classes of driver behavior, providing comprehensive coverage of various distraction types.

b. Road object dataset:

- The YOLO model for road object detection is trained using the MS COCO Dataset6. The dataset consists of annotated images from a variety of road scenes, including different vehicles, pedestrians, lane markings, and traffic signs.
- The dataset's scale and variety facilitate the training of strong models for object detection under different environmental conditions.

Experimental setup

1. Hardware:
   - Processor: NVIDIA Tesla V100 GPU.
   - Memory: 16 GB GPU memory.
   - Storage: 2 TB SSD for storing datasets and trained models.
2. Software:
   - Frameworks: TensorFlow 2.10 and PyTorch for model implementation.
   - Libraries: OpenCV for image preprocessing and annotation, TensorRT for deployment optimization.
   - Operating System: Ubuntu 20.04.
3. Training Parameters:
   - CNN Training for Driver Behavior:
     - Optimizer: Adam.
     - Learning rate: 0.001.
     - Batch size: 32.
     - Epochs: 50.
   - YOLO Training for Object Detection:
     - Optimizer: Stochastic Gradient Descent (SGD).
     - Learning rate: 0.01 with decay.
     - Batch size: 16.
     - Epochs: 100.

The integrated ADAS framework in this study was developed and tested using several open-source libraries. The following software tools were used along with their respective versions and purposes:

TensorFlow 2.10 was used for building and training the CNN-based driver distraction detection model. Documentation: https://www.tensorflow.org.

PyTorch 2.5.1 was employed for implementing and fine-tuning the YOLO-based object detection network. Its dynamic computation graph and GPU acceleration support made it well-suited for this real-time vision task. Documentation: https://pytorch.org.

OpenCV 4.7.0 was used for image preprocessing, augmentation, and annotation. Augmentation techniques such as brightness jittering, Gaussian noise, random cropping, and affine transforms were implemented using this library. Documentation: https://opencv.org.

NVIDIA TensorRT 8.4 was used to optimize trained models for deployment on embedded platforms such as NVIDIA Jetson, enabling real-time inference through model quantization and graph acceleration. Documentation: https://developer.nvidia.com/tensorrt.

All experiments were performed in a Python 3.9 environment managed through Anaconda Navigator on Ubuntu 20.04 LTS.

Ground truth labeling strategy

To ensure consistency and fair evaluation, the ground truth labeling process for driver distraction detection, road object recognition, and risk classification was constructed using a combination of public benchmark datasets and rule-based synthetic labeling logic. The labeling procedures for each module are described below:

a) Driver Distraction Labels

The State Farm Distracted Driver Dataset was used to derive ground truth labels for driver behavior. This dataset contains frame-level annotations for 10 common driver states including Safe Driving, Texting with Right Hand, Talking on the Phone, and Reaching Behind, which were used to train the CNN model and validate its classification performance1.

b) Road Object Detection Labels

Ground truth annotations for road object detection were obtained from the MS COCO and KITTI datasets. These datasets provide labeled bounding boxes and object categories such as vehicles, pedestrians, cyclists, and traffic signs, which were used to train and evaluate the YOLO-based detection model2,3. These references align with standard practices in real-time object recognition for Advanced Driver Assistance Systems (ADAS).

c) Risk Level Classification

As no public dataset directly provides integrated risk level labels combining distraction and environmental hazard, we introduced a synthetic risk computation model:

$$R = \alpha D + \beta H$$

where:

- D is the CNN-based distraction score (range [0, 1]),
- H is the YOLO-detected object risk value, computed from object proximity and class type,
- α = 0.6, β = 0.4 are empirically defined weights that prioritize behavioral distraction over static hazards.

Based on this computed risk score R, each driving instance is categorized as:

- Safe: R < 0.4
- Caution: 0.4 ≤ R < 0.7
- Critical: R ≥ 0.7

This risk classification model was heuristically validated during training through consistency checks across multiple risk scenarios and by analyzing model predictions against labeled behavior distributions7,8. This labeling also forms the basis for computing system-level evaluation metrics such as true positives (TP), false positives (FP), and alert precision.

Although synthetic, this risk modeling framework is crucial for the fusion-based decision-making module and was trained on a combined dataset that mimics real-world driving risks under diverse conditions including fog, low light, and driver inattentiveness3,7.

Dataset alignment and augmentation consistency

To ensure fair evaluation across all models, both the baseline systems (A and B) and the proposed integrated system were trained and tested using the same combined dataset configuration. Specifically, the State Farm Distracted Driver Dataset1 was used for driver behavior classification, while the MS COCO2 and KITTI3 datasets were used for road object detection. No cross-dataset evaluation was performed to avoid bias in comparative performance.

Additionally, data augmentation was applied uniformly to increase robustness under diverse environmental conditions. Augmentation techniques included:

- Brightness jittering and Gaussian noise to simulate low-light and night-time scenarios
- Blur kernels and random occlusion patches to mimic fog and visual obstruction
- Affine transformations, random horizontal flips, and random cropping to improve generalization

These transformations were implemented using the OpenCV library and were applied consistently across all models, including the baselines, to maintain uniformity and avoid any training bias.
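Several of the augmentations listed above can be sketched without external libraries on a grayscale image stored as a list of rows. This is an illustration under assumptions: the parameter values (max_delta = 40, sigma = 8, a 2-pixel crop margin) and all function names are hypothetical, the study itself used OpenCV, and blur, occlusion and affine transforms are omitted for brevity.

```python
import random


def clamp(v):
    """Round and clip a value to the valid 8-bit range [0, 255]."""
    return max(0, min(255, int(round(v))))


def brightness_jitter(img, max_delta, rng):
    """Shift all pixels by one random offset (simulates exposure changes)."""
    delta = rng.uniform(-max_delta, max_delta)
    return [[clamp(p + delta) for p in row] for row in img]


def gaussian_noise(img, sigma, rng):
    """Add independent Gaussian noise per pixel (simulates low-light sensor noise)."""
    return [[clamp(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def horizontal_flip(img):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def random_crop(img, ch, cw, rng):
    """Cut a random ch x cw window out of the image."""
    top = rng.randint(0, len(img) - ch)
    left = rng.randint(0, len(img[0]) - cw)
    return [row[left:left + cw] for row in img[top:top + ch]]


def augment(img, rng):
    out = brightness_jitter(img, max_delta=40, rng=rng)
    out = gaussian_noise(out, sigma=8.0, rng=rng)
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    return random_crop(out, len(img) - 2, len(img[0]) - 2, rng)


# Usage: a seeded generator makes the augmentation reproducible.
rng = random.Random(0)
sample = augment([[100] * 8 for _ in range(6)], rng)
```

For detection data, geometric transforms such as flips and crops must also be applied to the bounding box annotations so that the labels stay aligned with the image.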

Evaluation metrics

The system is evaluated based on the following metrics:

1. Accuracy: Measures the proportion of correctly classified instances.

$$\text{Accuracy} = \frac{TP+TN}{TP+TN+FP+FN}$$

2. Precision: Measures the proportion of predicted positive instances that are actually positive.

$$\text{Precision}\ (p) = \frac{TP}{TP+FP}$$

3. Recall (Sensitivity): Measures the proportion of actual positive instances correctly identified.

$$\text{Recall}\ (r) = \frac{TP}{TP+FN}$$

4. F1-Score: The harmonic mean of precision and recall.

$$\text{F1-score} = 2\cdot\frac{p \cdot r}{p + r}$$
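The four metrics can be computed directly from confusion counts, as in the sketch below. The counts in the usage line are hypothetical and not results from this study; the guards return 0.0 when a denominator is zero, which is a common convention rather than something the text specifies.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from binary confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Usage with hypothetical counts.
metrics = classification_metrics(tp=80, tn=90, fp=10, fn=20)
```

The F1-score is equivalent to 2TP / (2TP + FP + FN), which makes clear that it ignores true negatives, a useful property when safe frames vastly outnumber distracted ones.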

Figure 7 shows the confusion matrix of the CNN-based driver distraction detection system. It gives an immediate view of the model's performance across all distraction categories, tracking both true positives and false positives, and offers insight into the consistency and effectiveness of the trained system.
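For the multi-class setting of the confusion matrix, the sketch below builds the matrix from integer class labels and derives overall accuracy plus per-class and macro-averaged F1 scores. The labels in the usage line are illustrative; the State Farm dataset would use 10 classes. Function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy_from_cm(cm):
    """Correct predictions (the diagonal) divided by all predictions."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total if total else 0.0


def per_class_f1(cm):
    """One-vs-rest F1 for each class: 2TP / (2TP + FP + FN)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                        # actually k, predicted another class
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def macro_f1(cm):
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(cm)
    return sum(scores) / len(scores)


# Usage with 3 illustrative classes.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
```

Macro averaging weights every distraction class equally, so a rare but dangerous behavior that the model handles poorly is not hidden by strong performance on common classes.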

Comparative analysis and benchmarking of integration strategies

1. Driver distraction detection
   - The proposed CNN model achieves an F1-score of 94.3%, higher than the best existing model (E2DR7), which had an F1-score of 92.5%.
   - Transfer learning (VGG-16 and ResNet) substantially reduces training time and clearly outperforms training from scratch.
2. Road object detection
   - The optimized YOLOv4 configuration achieves a mean Average Precision (mAP) of 89.7%, compared with 87.1% for the previously comparable YOLOv3 model6.
   - Compared with previous object detection approaches, the model performs better under harsher conditions, including rain and low-light illumination7,18.

To confirm the resilience of the suggested system in harsh driving conditions, independent tests were performed on images and videos depicting rainy, foggy, and night conditions. Precision-Recall (PR) curves were plotted separately for normal and harsh conditions. The suggested YOLOv4-based object detection model showed a slight reduction of only about 3% in mean Average Precision (mAP) when tested under harsh conditions, as compared to its performance under normal conditions. On the other hand, the conventional YOLOv3 model experienced a greater decrease of approximately 7% in mAP in the same extreme conditions.

Furthermore, visual samples showing accurate detection of distracted driver behaviors and surrounding hazards under low-light and rain conditions are presented in Fig. 11b. The results confirm that the combined system maintains high detection reliability and situational awareness in adverse driving conditions.

To ensure fairness, the same datasets and augmented training inputs were used for all baseline systems and the integrated architecture, allowing for consistent benchmarking across driver behavior, road object detection, and alert decision outputs.

Definition of system accuracy

System Accuracy is the fraction of correctly classified results (true positives and true negatives) relative to the total number of instances evaluated. The equation used is:

$$\text{System Accuracy} = \frac{TP+TN}{TP+TN+FP+FN}$$

where TP is correct identification of distractions and hazards, TN is correct identification of safe environments, FP is false alarms, and FN is missed detection.

3. Integrated system:
   - Compared with separate distraction detection or object recognition systems, the integrated CNN-YOLO system demonstrates significantly enhanced situational awareness.
   - It reaches an overall system accuracy of 91.5%, higher than the Cross-Modality Fusion Transformer3 (88.3% accuracy).

In order to thoroughly test the integrated system, a blend of multiple datasets and various driving conditions was employed. The State Farm Distracted Driver Dataset was applied for detecting driver behavior, and MS COCO and KITTI datasets were applied for road object detection. Simulation methods involving data augmentation were applied to mimic different real-world scenarios, such as clear daytime, nighttime, rainy, foggy, and low-light environments. The combined system was then tested on this combined dataset configuration in order to verify its performance under normal as well as extreme driving conditions.

To further emphasize the efficiency of the proposed integration approach, a comparative analysis with recent techniques has been carried out. As evident from Table 1, the proposed framework exhibits better real-time performance, scalability, and interpretability in comparison to E2DR7, Cross-Modality Fusion Transformer (CMFT)18, and Smart Context-Aware Hazard Systems11. These are especially notable in processing latency, new scenario adaptability, and feasibility of deployment on embedded systems.

The proposed approach (Improved CNN-YOLO with Attention Fusion and RF/SVM) is compared against E2DR, CMFT, and Smart Context-Aware systems, showing performance benefits in terms of latency, scalability, interpretability, and real-time deployment.

Beyond these qualitative comparisons, the performance of the combined system was tested experimentally against baseline approaches.

The combined system is evaluated using the accuracy, precision, recall, and F1-score metrics defined earlier in the section "Evaluation metrics".
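For reference, the four metrics can be computed from binary outcome counts as below. This is a minimal sketch, assuming a binary framing in which label 1 means "distraction or hazard present"; the helper names and the example counts are hypothetical and are not results from this study.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Counts:
    tp: int  # distractions/hazards correctly flagged
    tn: int  # safe conditions correctly recognised
    fp: int  # false alarms
    fn: int  # missed detections


def counts_from_labels(y_true: Sequence[int], y_pred: Sequence[int]) -> Counts:
    """Tally binary outcomes, where 1 = distraction or hazard present."""
    pairs = list(zip(y_true, y_pred))
    return Counts(
        tp=sum(t == 1 and p == 1 for t, p in pairs),
        tn=sum(t == 0 and p == 0 for t, p in pairs),
        fp=sum(t == 0 and p == 1 for t, p in pairs),
        fn=sum(t == 1 and p == 0 for t, p in pairs),
    )


def _safe_div(num: float, den: float) -> float:
    return num / den if den else 0.0


def metrics(c: Counts) -> dict:
    """Accuracy, precision, recall and F1 from outcome counts."""
    precision = _safe_div(c.tp, c.tp + c.fp)
    recall = _safe_div(c.tp, c.tp + c.fn)
    return {
        "accuracy": _safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": _safe_div(2 * precision * recall, precision + recall),
    }


# Hypothetical counts, for illustration only.
m = metrics(Counts(tp=80, tn=90, fp=10, fn=20))
```

With these toy counts, accuracy is 0.85 while recall is 0.80, illustrating how accuracy alone can hide missed detections.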

Two baseline systems were considered for the comparison in Fig. 10:

- Baseline System A: a standard driver distraction detection module based entirely on a CNN architecture, without driver state and risk fusion mechanisms or road object recognition.

- Baseline System B: a standard road object detection system based on a YOLO architecture, without driver state or risk consideration.

The proposed integrated system couples driver distraction behavior and road object hazards through an attention-based fusion method and a learning-based decision module, with the aim of improving situational awareness and real-time hazard warning.
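The attention-based fusion step can be pictured as weighting a driver-state embedding and a road-scene embedding before a risk head. The sketch below uses additive attention over the two modality vectors in NumPy; the class name, dimensions, random (untrained) weights, and the sigmoid risk head are assumptions for illustration, not the architecture used in this work, whose decision module the comparison above describes as RF/SVM-based.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max()  # numerical stability
    e = np.exp(z)
    return e / e.sum()


class AttentionFusion:
    """Hypothetical additive-attention fusion of two modality embeddings."""

    def __init__(self, dim: int, hidden: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Random weights stand in for parameters that would be learned in practice.
        self.W = rng.normal(0.0, 0.1, (hidden, dim))
        self.w = rng.normal(0.0, 0.1, hidden)
        self.v = rng.normal(0.0, 0.1, dim)
        self.b = 0.0

    def __call__(self, driver_feat: np.ndarray, road_feat: np.ndarray):
        H = np.stack([driver_feat, road_feat])        # (2, dim): one row per modality
        scores = np.tanh(H @ self.W.T) @ self.w        # (2,): relevance of each modality
        alpha = softmax(scores)                        # attention weights, sum to 1
        fused = alpha @ H                              # (dim,): weighted combination
        risk = 1.0 / (1.0 + np.exp(-(fused @ self.v + self.b)))  # risk score in (0, 1)
        return fused, alpha, float(risk)


rng = np.random.default_rng(42)
fused, alpha, risk = AttentionFusion(dim=8)(rng.normal(size=8), rng.normal(size=8))
```

The attention weights `alpha` make the relative contribution of driver state and road context explicit for each frame, which is one reason attention-style fusion is often preferred over plain concatenation when interpretability matters.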

The robustness of the integrated system under low-light and fog scenarios is further validated through annotated visual outputs (see Fig. 11b), confirming reliable perception and alert generation under real-world adverse conditions.

Results visualization

1. Driver distraction detection:

• Confusion Matrix: shows the classification accuracy across the various distraction categories (Fig. 7).

• Accuracy Graph: plots training and validation accuracy over epochs.

Fig. 8 depicts the training and validation accuracy over the course of learning. It shows how the model's performance improves over successive epochs, highlighting its convergence behavior and supporting the effectiveness of the training approach and regularization techniques used during development.
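A confusion matrix like the one in Fig. 7 can be built from predicted and true class indices as follows. This NumPy sketch assumes integer class labels (for example, the ten State Farm classes c0 to c9); the function names and the toy labels are illustrative. The diagonal of the row-normalised matrix gives per-class recall, which is what such plots commonly display.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes: int) -> np.ndarray:
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_recall(cm: np.ndarray) -> np.ndarray:
    """Diagonal of the row-normalised matrix; classes with no samples get 0."""
    support = cm.sum(axis=1)
    return np.divide(np.diag(cm), support,
                     out=np.zeros(len(cm), dtype=float), where=support > 0)


def overall_accuracy(cm: np.ndarray) -> float:
    """Correct predictions (the trace) over all predictions."""
    return float(np.trace(cm) / cm.sum())


# Toy example with three classes (labels are hypothetical).
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
```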

2. Road object detection:

• Precision-Recall Curve: a graph showing precision and recall for detected objects.

• Detection Examples: shows bounding boxes and confidence scores for detected road objects.

The precision-recall curve in Fig. 9 analyzes the performance of the YOLO object detection module. It shows how well the model balances precision and recall across object categories and how reliably the detector discriminates between relevant and non-relevant objects in a wide range of driving scenarios.
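The precision-recall analysis rests on matching detections to ground-truth boxes by intersection over union (IoU). The sketch below shows one common procedure for a single class in a single image: greedy matching in descending confidence order at an IoU threshold, followed by PASCAL VOC-style all-point interpolated average precision. The 0.5 threshold, the (x1, y1, x2, y2) box format, and the function names are assumptions; COCO-style evaluation instead averages AP over IoU thresholds from 0.5 to 0.95 with 101-point interpolation.

```python
import numpy as np


def iou(a, b) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def pr_curve(detections, ground_truth, iou_thr: float = 0.5):
    """detections: list of (score, box); ground_truth: list of boxes."""
    dets = sorted(detections, key=lambda d: d[0], reverse=True)
    matched, hits = set(), []
    for _, box in dets:
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(ground_truth):
            if j not in matched and iou(box, gt) >= best_iou:
                best_j, best_iou = j, iou(box, gt)
        if best_j >= 0:
            matched.add(best_j)  # each ground-truth box may be matched once
        hits.append(1.0 if best_j >= 0 else 0.0)
    hits = np.array(hits)
    cum_tp, cum_fp = np.cumsum(hits), np.cumsum(1.0 - hits)
    recall = cum_tp / max(len(ground_truth), 1)
    precision = cum_tp / np.maximum(cum_tp + cum_fp, 1e-12)
    return precision, recall


def average_precision(precision, recall) -> float:
    """Area under the monotone (non-increasing) precision envelope."""
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
prec, rec = pr_curve(dets, gts)
ap = average_precision(prec, rec)
```

In this toy case the second detection is a false positive, so precision dips to 0.5 before recovering; the envelope step in `average_precision` is what makes AP insensitive to such local dips.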

3. Integrated system performance:

• Bar Chart: compares the accuracy, precision, recall, and F1-score of the system with those of other existing systems using IoT3,6.

• Decision Output Examples: examples of driver distractions and hazards, and of physically present roadside object hazards, for which the system issues real-time warnings.

Fig. 10 compares the performance of the integrated system with that of the baseline systems.

Baseline System A is CNN-only driver distraction detection, Baseline System B is YOLO-only road object detection, and the integrated system proposed in this work fuses both using attention-based fusion and learning-based risk estimation. The accuracy, precision, recall, and F1-score metrics indicate better performance for the proposed system.

Figure 11 presents annotated outputs demonstrating the integrated system’s capability to detect distractions and road hazards under adverse conditions.

- The left frame (a) shows a CNN-based driver distraction classification result, annotated with the predicted behavior (Texting, confidence 94.7%) and category (Manual Distraction).

- The right frame (b) shows YOLOv4-based object detection in a foggy night-time scene. Bounding boxes for critical objects such as pedestrians and vehicles are overlaid, each with class labels and confidence scores (e.g., Pedestrian 0.99, Car 0.93).

- This figure illustrates the system's real-time perception robustness in degraded visual environments and supports its suitability for practical deployment.

Annotated detection examples from the integrated CNN-YOLO system under real-world adverse conditions. a Simulated CNN output for driver distraction detection: Texting (94.7%), Category: Manual Distraction. b YOLO-based detection under foggy conditions showing bounding boxes and confidence scores for detected hazards such as pedestrians and vehicles.

Discussion

Interpretation of results

The proposed driver distraction detection system shows its effectiveness for practical applications. Its high accuracy in classifying different types of distraction1 highlights the ability of the deep learning framework to handle complex recognition tasks. This is consistent with Aljasim et al.7, who showed that ensemble-based methods similar to our approach are effective at capturing nuanced patterns of driver behaviour, resulting in more accurate detection.

In addition, the consistent training and validation results suggest that the system generalizes well7. This is in line with Parekh et al.3, who note that deep learning models, once properly trained, tend to generalize well across use cases. Nevertheless, our study has several limitations. False positives, in which distracted behavior is flagged where none is present, remain a problem, as in studies detecting visual-related non-driving activities9. This risk of misclassification suggests that more nuanced or hybrid models may be required (as discussed by Wang et al.4).

Moreover, environmental variation, such as changes in lighting conditions or the influence of weather, still affects system performance. This is a well-acknowledged problem in autonomous systems2, as changes in environmental conditions can reduce detection accuracy. Finally, hardware constraints, especially on edge devices, restrict the system's scalability19. Efficient, well-structured deep models (Du et al.8) are critical for overcoming these barriers and processing data in real time without sacrificing precision.

Challenges

Several key challenges remain central to further improving the system:

- False Positives and Negatives: Inappropriate system responses are closely related to misclassifications, as noted in recent work on distraction detection10. It is crucial to enhance model sensitivity while keeping specificity intact.

- Environmental Variability:

- Hardware Constraints: Embedded systems typically lack the processing power needed for real-time detection and decision-making, which has been highlighted as a major drawback in automotive perception research21. Streamlining models for edge deployment remains a focus.
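One way to act on the sensitivity/specificity trade-off noted under False Positives and Negatives is to choose the alert threshold on a validation set by maximising sensitivity subject to a minimum specificity. The sketch below is a hypothetical illustration; the 0.75 specificity floor, the function names, and the toy scores are assumptions, not values from this study.

```python
import numpy as np


def sens_spec(scores: np.ndarray, labels: np.ndarray, thr: float):
    """Sensitivity (recall on positives) and specificity at threshold `thr`."""
    pred = scores >= thr
    pos = labels == 1
    tp, fn = np.sum(pred & pos), np.sum(~pred & pos)
    tn, fp = np.sum(~pred & ~pos), np.sum(pred & ~pos)
    sens = tp / (tp + fn) if tp + fn else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0
    return float(sens), float(spec)


def pick_threshold(scores, labels, min_specificity: float = 0.95):
    """Highest-sensitivity threshold whose specificity meets the floor (or None)."""
    scores, labels = np.asarray(scores, dtype=float), np.asarray(labels)
    best = None
    for thr in np.unique(scores):
        sens, spec = sens_spec(scores, labels, thr)
        if spec >= min_specificity and (best is None or sens > best[1]):
            best = (float(thr), sens, spec)
    return best


# Toy validation scores (1 = distraction or hazard present).
scores = [0.1, 0.2, 0.3, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1]
best = pick_threshold(scores, labels, min_specificity=0.75)
```

Here the chosen threshold is 0.6, which catches every positive while keeping specificity at the 0.75 floor; raising the floor would trade sensitivity for fewer false alarms.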

Opportunities for improvement

Clarification on use of reinforcement learning

Driver distraction detection and road object recognition are mainly pattern recognition tasks, realized using deep learning models such as CNNs and YOLO. Reinforcement learning (RL) is not used for these recognition tasks. Instead, RL is proposed to enhance the decision-making function of the risk assessment module by dynamically adjusting alert thresholds and optimizing adaptive alert strategies according to environmental complexity and driver behavior patterns. This would allow the alert system to balance caution against excessive alarms as the real-time context shifts.
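To make the proposed role of RL concrete, the sketch below treats alert-threshold selection as a contextual multi-armed bandit, a simplified form of RL, solved with epsilon-greedy value estimates. Everything here is hypothetical: the two contexts (clear vs. fog), the candidate thresholds, the reward values (a false alarm costs 0.3, a missed hazard costs 2.0), and the simulated risk-score distributions are invented to illustrate the mechanism, not taken from this study. A full RL formulation would also model how alerts affect future driver state.

```python
import numpy as np

# All constants below are illustrative assumptions.
THRESHOLDS = np.array([0.3, 0.5, 0.7])  # candidate alert thresholds (actions)
CLEAR, FOG = 0, 1                        # driving contexts


def reward(alerted: bool, hazard: bool, false_alarm_cost: float = 0.3,
           miss_cost: float = 2.0) -> float:
    """Reward correct alerts, penalise false alarms and (more heavily) misses."""
    if hazard:
        return 1.0 if alerted else -miss_cost
    return -false_alarm_cost if alerted else 0.0


def simulate(ctx: int, rng: np.random.Generator):
    """Toy environment: fog lowers the risk scores the perception stack produces."""
    hazard = rng.random() < 0.3
    if ctx == CLEAR:
        score = rng.uniform(0.6, 1.0) if hazard else rng.uniform(0.0, 0.55)
    else:
        score = rng.uniform(0.35, 0.8) if hazard else rng.uniform(0.0, 0.45)
    return hazard, score


class ThresholdBandit:
    """Epsilon-greedy contextual bandit with sample-average value estimates."""

    def __init__(self, n_contexts: int, epsilon: float = 0.2, seed: int = 0):
        self.q = np.zeros((n_contexts, len(THRESHOLDS)))
        self.n = np.zeros_like(self.q)
        self.epsilon = epsilon
        self.rng = np.random.default_rng(seed)

    def select(self, ctx: int) -> int:
        if self.rng.random() < self.epsilon:
            return int(self.rng.integers(len(THRESHOLDS)))  # explore
        return int(np.argmax(self.q[ctx]))                 # exploit

    def update(self, ctx: int, action: int, r: float) -> None:
        self.n[ctx, action] += 1
        self.q[ctx, action] += (r - self.q[ctx, action]) / self.n[ctx, action]


def train(steps: int = 20_000, seed: int = 0) -> ThresholdBandit:
    env_rng = np.random.default_rng(seed + 1)
    agent = ThresholdBandit(n_contexts=2, seed=seed)
    for _ in range(steps):
        ctx = int(env_rng.integers(2))
        a = agent.select(ctx)
        hazard, score = simulate(ctx, env_rng)
        agent.update(ctx, a, reward(score >= THRESHOLDS[a], hazard))
    return agent


agent = train()
learned = {ctx: float(THRESHOLDS[np.argmax(agent.q[ctx])]) for ctx in (CLEAR, FOG)}
```

Under these assumed rewards the agent should learn a stricter threshold (0.5) in clear conditions and a more permissive one (0.3) in fog, where hazard scores are depressed; this mirrors the idea of adapting alert thresholds to environmental complexity.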

There are multiple pathways to improve the effectiveness of the system:

1. Adaptive learning

• Add adaptive learning models that adjust over time to the driver and to environmental conditions15. Reinforcement learning could provide a feedback loop for continuous improvement.

2. Scalability

• Techniques such as model pruning and quantization5 can substantially reduce computational requirements, allowing the system to be deployed more easily on resource-limited devices. Federated learning19 could also be explored to distribute the processing load efficiently.

3. Enhanced robustness

• Multi-modal data13 can be included to improve the context awareness of the system, thereby improving distraction and hazard detection. This is consistent with the development of cross-modality fusion18.

4. Explainability and decision support

• Integrating explainable AI (XAI) tools16 would give end-users and developers transparency into the system's decision-making processes. This can engender trust and make the system easier to refine.
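Global magnitude pruning, one of the techniques named under Scalability, can be sketched as follows: rank every weight in the network by absolute value and zero the smallest fraction. The layer names, shapes, and the 50% sparsity target are hypothetical. Unstructured sparsity like this mainly shrinks compressed model size; reducing latency usually requires sparse kernels or structured (channel-level) pruning. In PyTorch, `torch.nn.utils.prune.global_unstructured` provides a comparable operation on real modules.

```python
import numpy as np


def magnitude_prune(weights: dict, sparsity: float) -> dict:
    """Zero the smallest-magnitude `sparsity` fraction of weights across all layers."""
    all_mags = np.concatenate([np.abs(w).ravel() for w in weights.values()])
    k = int(sparsity * all_mags.size)
    if k == 0:
        return {name: w.copy() for name, w in weights.items()}
    # k-th smallest magnitude is the global cut-off (ties may prune slightly more).
    thr = np.partition(all_mags, k - 1)[k - 1]
    return {name: np.where(np.abs(w) <= thr, 0.0, w) for name, w in weights.items()}


rng = np.random.default_rng(0)
weights = {  # hypothetical layers
    "conv1": rng.normal(size=(8, 3, 3, 3)),
    "fc": rng.normal(size=(10, 64)),
}
pruned = magnitude_prune(weights, sparsity=0.5)
n_zero = sum(int((w == 0).sum()) for w in pruned.values())
```

Because the cut-off is computed globally rather than per layer, layers with many small weights lose proportionally more parameters, which is often preferable to a uniform per-layer ratio.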

Evaluation of real-time embedded system

To verify that the proposed integrated system can be deployed in practice, real-time experiments were performed on the NVIDIA Jetson Xavier NX embedded platform. The system was first tested with the baseline unoptimized model, and performance was then measured after TensorRT-based INT8 model quantization and inference optimization.
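TensorRT's INT8 mode maps floating-point tensors to 8-bit integers through scale factors (per-tensor or per-channel), with activation ranges chosen in a calibration pass over representative inputs. The NumPy sketch below shows only the underlying symmetric quantize/dequantize arithmetic for a weight tensor; it is not TensorRT's API, and the function names and tensor are hypothetical.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Symmetric per-tensor INT8: x is approximated by scale * q, q in [-127, 127]."""
    amax = float(np.max(np.abs(x)))
    scale = amax / 127.0 if amax > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map INT8 codes back to approximate float32 values."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(size=(64, 3, 3, 3)).astype(np.float32)  # hypothetical conv weights
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
max_err = float(np.max(np.abs(w_hat - w)))  # bounded by scale / 2 (rounding error)
```

Rounding error is bounded by half a quantization step, and INT8 storage is a quarter of FP32; actual end-to-end savings also depend on which layers remain in higher precision and on runtime buffers.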

As indicated in Table 2, the optimized system has an inference latency of 39 ms per frame, corresponding to a real-time processing rate of about 25 frames per second (FPS). The end-to-end system latency, covering both the distraction detection and road object recognition modules, was reduced to 57 ms. In addition, the model size was compressed by about 45% and memory usage fell by 46%, making the system well suited to embedded ADAS applications. These findings indicate that the proposed framework can perform real-time risk assessment and alert generation on resource-constrained edge devices without degrading performance or accuracy.
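The throughput figure follows directly from the per-frame inference latency, as the short calculation below shows. The second expression is an added consistency check: it assumes, as an interpretation not stated in Table 2, that the 57 ms end-to-end latency and the 25 FPS rate are measured separately (for example, with overlapping pipeline stages), since a strictly serial 57 ms per frame would cap throughput near 17.5 FPS.

```latex
\text{FPS}_{\text{inference}} = \frac{1000\ \text{ms/s}}{39\ \text{ms/frame}} \approx 25.6\ \text{frames/s},
\qquad
\text{FPS}_{\text{serial}} = \frac{1000\ \text{ms/s}}{57\ \text{ms/frame}} \approx 17.5\ \text{frames/s}
```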

Conclusion and future work

Summary of findings

Fusing CNN and YOLO frameworks is an efficient approach to improving road safety. The system maintains high accuracy in detecting both driver distractions and road hazards, as reflected in its strong precision and recall. This is consistent with Sajid et al.1 and Du et al.8, who emphasize the ability of deep learning models such as YOLO to perform real-time detection and classification. By using ensemble-based methods and high-performance feature extraction techniques7, the proposed system helps address central safety concerns for both drivers and vehicles in autonomous driving domains.

The results further highlight the need to optimize the models for efficient deployment on edge devices, as noted by Pandharipande et al.21. Although false positives and environmental variability remain issues, the adaptability and scalability of the system highlight its potential for real-world applications.

Implications

This study contributes to the development of collision avoidance and self-driving systems. By correctly identifying distractions and hazards, it could help prevent errors and improve road safety. Moreover, its lightweight design and demonstrated compatibility with edge devices allow integration into existing in-vehicle setups, facilitating widespread adoption in connected and autonomous vehicles8,19. Once integrated, the system can respond actively to changes in the environment, such as misbehaving vehicles or pedestrians, enabling a range of real-time driver-assistance technologies that could save lives and reduce accidents.

Future directions

To build on the proposed work and address its weaknesses, we recommend the following avenues for future research:

1. Additional decision-making systems:

• Integrate reinforcement learning or attention mechanisms to enhance the system's ability to prioritize critical events and minimize false positives, as already explored by Li et al.17.

2. Multi-sensor integration:

• Use LiDAR, radar, and thermal cameras together to strengthen detection in adverse conditions, consistent with other cross-modality fusion approaches18 and emerging visual sensors for autonomous vehicles14.

3. Testing in real-world conditions:

• Perform thorough testing across a range of driving situations, including extreme weather and varied traffic conditions, to demonstrate the robustness and reliability of the system, as noted by Guindel et al.5,12.

4. Model optimization:

• Emphasize hardware-agnostic model compression techniques, such as pruning and quantization5, that reduce heavy computation and improve performance on low-power embedded systems.

5. Scalability and adaptability:

• Investigate adaptive learning frameworks that support continuous learning and model updates in response to real-world feedback, as presented by Krichen et al.15.

6. Explainable AI:

• Use explainable AI methods to make decision-making transparent, promoting trust and enabling debugging in complex scenarios16.

Besides achieving high detection and classification accuracy, the proposed system showed robust real-time performance on embedded devices. On an NVIDIA Jetson Xavier NX platform, the optimized deployment reached 25 FPS, validating the suitability of the system for real-world Advanced Driver Assistance System (ADAS) use cases under resource limitations. This demonstrates the system's readiness for real-world deployment and its contribution toward safer roads.

Data availability

The datasets used in this study (the State Farm Distracted Driver Dataset, the KITTI dataset, and the MS COCO dataset) are publicly available on Kaggle at the following links: https://www.kaggle.com/c/state-farm-distracted-driver-detection/data, https://www.kaggle.com/datasets/klemenko/kitti-dataset, and https://www.kaggle.com/datasets/mnassrib/ms-coco.

References

Sajid, F. et al. An efficient deep learning framework for distracted driver detection. IEEE Access 9, 169270–169280. https://doi.org/10.1109/ACCESS.2021.3138137 (2021).

Yurtsever, E., Lambert, J., Carballo, A. & Takeda, K. A survey of autonomous driving: common practices and emerging technologies. IEEE Access 8, 58443–58469. https://doi.org/10.1109/ACCESS.2020.2983149 (2020).

Parekh, D. et al. A review on autonomous vehicles: progress, methods and challenges. Electronics https://doi.org/10.3390/electronics11142162 (2022).

Wang, H., Zhang, X., Li, Y., Chen, T. & Wang, Z. A comparative study of state-of-the-art deep learning algorithms for vehicle detection. IEEE/CAA J. Autom. Sin. 6(5), 965–980. https://doi.org/10.1109/MITS.2019.2903518 (2019).

Krichen, M. Convolutional neural networks: a survey. Computers https://doi.org/10.3390/computers12080151 (2023).

Wang, Q., Li, X., Zhang, Y., Hu, J. & He, R. Traffic lights detection and recognition using improved YOLOv4. Sensors (Basel) https://doi.org/10.3390/s22010200 (2022).

Aljasim, M., Abu-Qasmieh, B., Al-Dubai, A., Taamneh, D. & Ahmad, N. M. E2DR: a deep learning ensemble based driver distraction detection. Sensors (Basel) https://doi.org/10.3390/s22051858 (2022).

Du, Y., Liu, F., Zhang, H., Chen, X. & Yang, L. Optimizing road safety: advancements in lightweight YOLOv8 models. Sensors (Basel) https://doi.org/10.3390/s23218844 (2023).

Yang, L., Li, J., Chen, Y., Zhou, P. & Hu, W. Recognition of visual related non-driving activities using a dual camera monitoring system. Pattern Recogn. 118, 107955. https://doi.org/10.1016/j.patcog.2021.107955 (2021).

Li, T., Song, G., Chen, Y. & Wang, L. AB DLM: an improved deep learning model for driver distraction behavior detection. IEEE Access 10, 12345–12356. https://doi.org/10.1109/ACCESS.2022.3197146 (2022).

Younis, O., Al-Zoubi, A., Khaled, M., Hababeh, H. & Amro, A. A smart context aware hazard attention system. Sensors (Basel) https://doi.org/10.3390/s19071630 (2019).

Guindel, C., Aycard, A. & Montiel, J. Automatic extrinsic calibration for lidar-stereo vehicle sensor setups. In 2017 IEEE Intelligent Transportation Systems Conference (ITSC). https://doi.org/10.1109/ITSC.2017.8317829 (2017).

Dafrallah, S., Eldesouki, M., Said, J. & Gad, W. Monocular pedestrian orientation recognition. IEEE Access 9, 2000–2010. https://doi.org/10.1109/ACCESS.2021.3119629 (2021).

Li, Y., Huang, Z., Liu, X., Zhang, W. & Wang, S. Emergent visual sensors for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 24(3), 3456–3468. https://doi.org/10.1109/TITS.2023.3248483 (2023).

Mohammed, A. A. Q., Jaleel, B. & Al-Saady, H. A deep learning based end to end composite system for hand detection. Sensors (Basel) https://doi.org/10.3390/s19235282 (2019).

Chen, H., Sun, Z., Xu, Y. & Lin, J. Student behavior detection in the classroom based on improved YOLOv8. Sensors (Basel) https://doi.org/10.3390/s23208385 (2023).

Alahmadi, T. J., Rahman, A. U., Alkahtani, H. K. & Kholidy, H. Enhancing object detection for VIPs using YOLOv4_Resnet101 and text-to-speech conversion model. Multimodal Technol. Interact. 7(8), 77. https://doi.org/10.3390/mti7080077 (2023).

Fang, Q., Li, H. & Zhao, F. Cross modality fusion transformer. SSRN preprint. https://doi.org/10.2139/ssrn.4227745 (2022).

Wang, Y., Kumar, D. & Singh, S. CAVBench: a benchmark suite for connected and autonomous vehicles. In 2018 IEEE/ACM Symposium on Edge Computing (SEC). https://doi.org/10.1109/SEC.2018.00010 (2018).

Pavel, M. I., Rahman, A. & Ali, D. Vision based autonomous vehicle systems based on deep learning. Appl. Sci. https://doi.org/10.3390/app12146831 (2022).

Pandharipande, A., Gupta, S. & Reddy, P. Sensing and machine learning for automotive perception. IEEE Sens. J. 23(13), 6789–6798. https://doi.org/10.1109/JSEN.2023.3262134 (2023).

Acknowledgements

The authors would like to thank Kaggle for providing the datasets used in this research and acknowledge the support of all collaborators who contributed to the success of this study.

Funding

Open access funding provided by Symbiosis International (Deemed University). No specific funding was received for this study.

Author information

Contributions

Rakesh Salakapuri contributed to conceptualization, methodology, software, writing (original draft), and review and editing. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Informed consent

Informed consent was obtained from all subjects and/or their legal guardian(s).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Salakapuri, R., Navuri, N.K., Vobbilineni, T. et al. Integrated deep learning framework for driver distraction detection and real-time road object recognition in advanced driver assistance systems. Sci Rep 15, 25125 (2025). https://doi.org/10.1038/s41598-025-08475-4

DOI: https://doi.org/10.1038/s41598-025-08475-4

Keywords

- Driver distraction detection

- Road object recognition

- Convolutional Neural Networks (CNNs)

- YOLO (You Only Look Once)

- Advanced Driver Assistance Systems (ADAS)

- Deep learning

- Transfer learning

- Real-time object detection

- Manual Distraction

- Embedded systems

- Decision-making module

- Environmental awareness

- Situational awareness