Abstract

Accurate detection of rice pests helps farmers take timely control measures. This study compares different attention mechanisms for rice pest detection in complex backgrounds and demonstrates that a human vision-inspired Bionic Attention (BA) mechanism outperforms most traditional attention mechanisms in this task and is applicable to all major mainstream and novel models. Bionic Attention (BA) assists the main branch in recognition by additionally labeling important features of each rice pest category and inputting the additional category labels as bionic information into the network during the input stage. This study applies Bionic Attention to dominant entity classical and novel networks, including YOLOv5s, YOLOv8n, SSD, Faster R-CNN, YOLOv9-e, and YOLOv10-X, and compares it with classical attention mechanisms such as CBAM, SE, and SimAM to verify its feasibility. Meanwhile, this study introduces more detailed evaluation metrics to assess Bionic Attention, including Classification Error, Localization Error, Cls and Loc Error, Duplicate Detection Error, Background Error, and Missed GT Error. Experimental results show that Bionic Attention improves detection performance by indirectly enhancing the loss function, allowing the model to acquire more fine-grained information during the feature extraction stage, thereby improving detection accuracy.

Similar content being viewed by others

Rice grains account for 20% of the world’s dietary energy supply, and more than 3 billion people consume rice daily1. It is estimated that rice hosts more than 100 pest species. Frequent occurrence of rice pests can lead to a significant decline in rice yields, severely limiting agricultural production and posing a severe threat to global food security2. Therefore, accurate and real-time automated monitoring and forecasting is crucial to controlling pests and preventing yield reduction.

However, due to the wide variety of rice pests and their high degree of similarity to the surrounding environment, in traditional agricultural production scenarios, the detection of rice pests still relies on manual surveys among rice fields. This method is not only labor-costly and inefficient in continuously monitoring rice pests, but its accuracy also depends on technicians’ experience. It is challenging to ensure identification accuracy, and it may lead to incorrect diagnosis and pesticide misuse. Therefore, developing an accurate, real-time automated monitoring and early warning system is essential for effectively controlling rice pests and avoiding rice yield loss.

In recent years, the rapid development of deep learning has led to the gradual application of computer vision to various fields, such as industrial, military, and medical. In particular, using target detection technology to diagnose agricultural pests and diseases has become a research hotspot in agricultural information technology. However, traditional target recognition algorithms often need help recognizing small targets accurately. Taking rice pests and diseases as an example, Preethi Muruganandam et al. tested the fusion of the Canny algorithm, Prewitt algorithm, and Laplace edge detection technique to VGG16 and GoogLeNet for detecting rice pests and diseases3. Shuaifeng Li et al. proposed a Self-Attention Feature Fusion Model for Rice Pest Detection (SAFFPest) and achieved good results on the Faster R-CNN network4. Md Suzauddola et al. proposed CMPestNet, a framework that employs multi-scale feature extraction for pest characteristic representation, demonstrating superior performance in both intra-crop and cross-crop pest detection scenarios5. Yuqi Hu et al. introduced the Swin Transformer and proposed a deep neural network, YOLO-GBS, which achieved an average mAP of 79.8% on a publicly available dataset6. Shuai Zhang et al. enhanced the YOLOv7 architecture through strategic integration of an ELAN-P module and P6 detection layer, demonstrating superior detection accuracy compared to state-of-the-art counterparts including YOLOv8s7. In addition, Liu Si-Tao et al. constructed a GA-Mask R-CNN (Generative Adversarial Based Mask Region Convolutional Neural Network) intelligent recognition model by adding the channel attention ECA module in Mask R-CNN and improving the connectivity of residual blocks in ResNet101 of the backbone network, the final average accuracy (AP) on the multi-source dataset was 92.71%, recall (R) is 89.28%, and balanced score F1 is 90.96%, which achieves good detection results8. Although many studies using attention mechanisms exist and have achieved excellent results in the rice pest detection research field, such studies could be better targeted and often require many manual experiments to explore the combinations of attention mechanisms and their insertion locations in neural networks. In addition, most attentional mechanisms need to effectively extract features between the target and the background in a given dataset, leading to poor recognition and robustness of the algorithms.

Primates are very good at recognizing objects. Despite decades of engineering efforts, their visual systems’ performance level and their image degradation robustness still outperform the best computer vision systems9. Biologically, humans acquire external information and interact with the external world through the senses of sight, touch, hearing, and smell. Vision is the basis of human perception and can compute and process analog information in parallel. The human visual system has a strong screening ability for external information, and the visual cortex of the brain has a multilevel interconnected structure, with nerve cells processing information in parallel at different levels at high speed, extracting image features layer by layer, and ultimately realizing cognitive understanding of objects10. Therefore, from the perspective of human vision, we can accurately recognize targets in complex environments due to the critical role of the “edges” and “key parts” of the target object. However, the proportion of these essential features in the whole image is often tiny. Moreover, when neural networks perform feature extraction, they tend to focus on the overall features of the target, thus ignoring the critical local features. As a result, this leads to a decrease in the model recognition accuracy, which leads to wrong and missed detection of rice pests.

To solve the above problems, we demonstrate that a bionic attention method based on human vision, Bionic Attention (BA), outperforms traditional attention mechanisms under this task. From the perspective of data preprocessing, this method first annotates the 14 types of rice pests as a whole as the dominant entity. Then, it follows the example of human vision by individually annotating the crucial features of each type of rice pest observed by the human eye as an auxiliary branch to be introduced into the input of the model. This helps the model acquire the “key parts” of the target during the learning process, thus reducing the errors and omissions of rice pests to cope with multiple types of rice pests in complex scenarios. The main contributions of our research are as follows:

-

1.

A high-quality data set of significant rice pests, RPest14, was constructed by specialized plant protection personnel after manually classifying rice pests.

-

2.

Comparison of the combined performance of different attentional mechanisms under this task.

-

3.

Bionic Attention (BA) is observed to show relative advantages compared to most other traditional attention mechanisms under this task from certain analytical perspectives.

-

4.

A YOLOv5s_BA detection model for rice pests was constructed, which can improve the mean Average Precision to 92.5% based on a weight file of only 13.8 MB.

The following sections will describe the experimental dataset and methodology, including data preprocessing and how Bionic Attention is used. Subsequently, the role of Bionic Attention in the model will be described in detail. Then, this paper will introduce the model evaluation metrics. In the Results and Discussion section, the paper will show the model training results, the comparison experiments with other models, the ablation experiments, and the analysis of the results. Finally, this paper will summarize the study’s results, show the detection effect of Bionic Attention visually, discuss the limitations, and outline future research directions.

Materials and methods

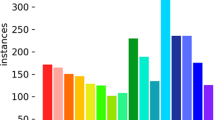

Data acquisition

The dataset used in this study is a significant rice pest dataset, consisting of 14 classes of rice pests with a total of 3548 images, named RPest14, as shown in Fig. 1. The dataset is divided into two versions: RPest141.0 includes 3548 images and corresponding overall labeling data of the pests, and RPest142.0 introduces the rice pests’ characteristic labels. We invited professional plant protection personnel to complete image quality screening, pest category confirmation, and feature label screening on the original publicly available IP102 dataset and finally compiled RPest141.0 and RPest142.0 as the baseline datasets for this study11.

While screening the original IP102 data, we found that data was mixed in several categories in the dataset. For example, pest data from other categories were mixed under each category, which could severely damage the detection accuracy. Using manual screening, we eliminated erroneous and poorly performing image data. In the rice pest image samples, pests belonging to different species, such as brown planthopper, white-backed planthopper, and gray planthopper, may have high similarity in appearance, especially in the same growth period. The same pests show different morphologies at different growth periods, such as eggs, nymphs, and adults. Since the eggs and nymphs of the pests pose much less damage to rice than the adults. Therefore, the baseline dataset of this study was entirely tested with adults, excluding the eggs and nymphs of rice pests, and the images were labeled with features.

Model of detection

The task of target detection is to accurately and efficiently identify and localize many object instances of predefined categories from an image12. Depending on the presence or absence of a candidate frame generation phase, the dominant entity deep learning-based target detection algorithms can be categorized into two-stage and single-stage target detection algorithms. The two-stage target detection algorithm extracts candidate frames from the image and obtains the final detection results by making secondary corrections to these candidate regions. This algorithm has high detection accuracy but is slow, and standard two-stage models are R-CNN13 and Faster R-CNN14.On the other hand, the single-stage target detection algorithm directly computes the entire image, generates candidate boxes directly in the image, and performs target detection, thus achieving fast detection speed. However, its detection accuracy is usually low, and standard one-stage models are You Only Look Once(YOLO)15 and Single Shot MultiBox Detector(SSD)16.

SSD is a typical single-stage target detection model with a simple network structure, fast computation speed, suitable for real-time applications, and multi-scale detection capability. YOLOv5 utilizes Mosaic data enhancement technology to stitch multiple images at the model input; meanwhile, it adopts an innovative Focus structure as the Backbone. The uniqueness of this structure is that it improves the receptive field. The unique feature of this structure is that it improves sensory wildness and avoids information loss at the same time. These designs enable YOLOv5 to fuse richer features when dealing with rice pest datasets, significantly improving detection efficiency.YOLOv8 retains the CSP concept, feature fusion method (PAN-FPN), and SPPF module from YOLOv5. In addition, YOLOv8 adopts an anchorless algorithm to reduce the number of predictions, which speeds up the NMS post-processing and ensures a lightweight model. Faster R-CNN is a typical two-stage detection model, which introduces region candidate networks (RPNs) and domain-of-interest pooling (ROI pooling) to improve detection accuracy. This model can accomplish the task of accurately detecting rice pests to a certain extent. Compared to YOLOv8, YOLOv9 analyses the CNN architecture based on reversible functions designed with PGI and auxiliary reversible branches. This innovative architecture enables the model to utilize the parameters more efficiently, improving accuracy and inference speed. Meanwhile, YOLOv9 achieves higher parameter utilization than state-of-the-art deep divisible convolutional designs based on the state-of-the-art by introducing designs such as GELAN17. YOLOv10 builds on this by utilizing consistent dual assignment to eliminate the need for an NMS, thereby reducing inference latency. It also incorporates large kernel convolution and a partial self-attention module to improve performance without adding significant computational cost18.

Bionic attention mechanism based on human vision

Convolutional Neural Networks (CNNs)—such as DenseNet—are a foundational class of deep learning models that have achieved state-of-the-art results in image classification, object detection, semantic segmentation, super-resolution, and many other vision tasks19. However, under complex backgrounds where target and background share similar appearance, CNNs can still be misled by distracting context, leading to weakened detection performance20. In contrast, human vision exploits a saliency-based search strategy—selectively focusing on local features like color contrasts, shapes, and textures—to identify objects even when they are camouflaged within cluttered scenes21.

Inspired by this, we introduce a Bionic Attention (BA) mechanism to bolster pest detection. In addition to the usual whole-object labels, we manually annotate those pest parts whose biological features differ most from the background (e.g., distinctive shape or texture). These part-level annotations form an auxiliary branch during training, guiding the CNN to jointly learn global and local representations. By mimicking human-like saliency focus, BA helps the model uncover the implicit relationships between pest parts and wholes, thereby improving overall detection accuracy in challenging field conditions.

When using Bionic Attention to annotate image data, we found that different rice pests possess different distinguishing features. Therefore, based on the overall annotation of various rice pests using the image annotation tool Labelimg22, in this study, two categories of pests, rice fly and rice green stink bugs, were labeled with features of the dorsal plate, one category of stem borer was labeled with features of the dorsal spots, one category of rice stem borer was labeled with features of the eyes, and the rest of the pests were labeled with features of the head. Figure 2 illustrates the specific feature labeling, and Table 1 shows each category and the corresponding number of labels.

Multi-branch detection model

Multi-scale and different source input models can improve the accuracy and detection of the algorithm23. This experiment uses feature labels as bionic information and an overall label combination input model. Where the label of the whole is used as the main branch, and the feature label is used as the auxiliary branch. It has been verified through comparative experiments that this method can improve the accuracy of target detection tasks for pest identification. Taking the rice fly as an example, the rice fly is mainly divided into a grey fly, white-backed fly, and brown fly; for this, the whole of the rice fly is labeled first, and then the features between different rice flies are labeled, such as the back plate of rice fly, which leads to the model not only focusing on the pest as a whole, but also the significant differences between pests and pests, and pests and the environment, which solves the problem of misdetection and omission of the model for rice fly.

Figure 3 illustrates the multi-branch detection model based on Bionic Attention. As shown in the figure, the three species of rice hoppers are assigned both overall labels and BA-labeled feature-specific annotations. These labels are integrated within a single image for training. The overall labeling serves as the primary branch, while the feature-specific labeling functions as the auxiliary branch. Both branches are fed into the target detection network, where their joint loss computation enhances detection accuracy.

Training environment and evaluation indicators

This experiment was conducted on a computer based on the Ubuntu 20.04 operating system. The CPU model is a 24 vCPU AMD EPYC 7642 48-Core Processor, the GPU model is NVIDIA GeForce RTX 3090 GPU (24GB), Pytorch 1.10.0 framework is used, and Python version is 3.8.

When training the experimental model, the relevant hyperparameters are set as follows:

-

The model receives images with a resolution of 640 × 640 pixels as a uniform input.

-

The initial learning rate of the model is 0.01, and the momentum of the learning rate is 0.937.

-

The optimizer uses SGD, and the batchsize in the experiments is 8.

-

The number of training iterations of the model is uniformly 100 epoch.

It is important to note that the network structure parameters used in this experiment are based on experiments conducted under the default parameters of the authors of the original model.In order to evaluate the model more comprehensively, this study will evaluate it in terms of detection accuracy and inference speed. For detection precision, Precision rate (P), Recall rate (R), F1 score (F1), and Mean Average Precision (mAP) are introduced; and for inference speed, we compare the size of the model weight file under the same parameters.

where True Positive(TP) denotes the number of pest samples that were correctly identified, False Positives(FP) denotes the number of samples in which a particular type of pest was incorrectly identified as other pests, and False Negatives (FN) denotes the number of samples in which pests were incorrectly identified. Mean Average Precision (mAP) denotes the mean accuracy, where AP is calculated by integrating the area enclosed by the P(Precision)R(Recall) curve with the axes. mAP denotes the mean value of AP for different categories.

Results

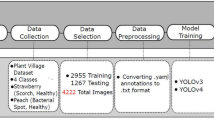

Ablation experiments of mainstream models

Four mainstream target detection models and two novel models are shown in Table 2, including YOLOv5s, YOLOv8n, SSD, Faster R-CNN, and YOLOv9-e, YOLOv10-X. In this experiment, the models were inputted with RPest141.0 and RPest142.0, respectively. The test metrics of the models were compared with each other and the use of migration learning and pre-training weights were added during training to improve the accuracy and performance of the model. Based on the results in Table 2, it can be seen that the SSD model and Faster R-CNN achieved the highest Precision and Recall rate, respectively, on the original dataset RPest141.0. where YOLOv9-e achieved the highest mAP@50, which was 92.1%. Among many weight files, the weight file of YOLOv8n is only 5.96 MB.

In order to improve the detection of the original dataset, the concept of feature labeling is introduced in this study. Feature labels are added as auxiliary branch inputs using Bionic Attention (BA), and the feature labels are discarded during testing, retaining only the metrics of the original labels. After introducing feature labels, each model improves compared to the previous one. YOLOv5s improves mAP@50 by 1.6%, the accuracy rate by 2%, and the model size is reduced by 0.3 MB. YOLOv5s has the most room for improvement after adding feature labels using BA.

Among all models, YOLOv8n_BA achieved a high mAP@50 and the smallest model size, 92.9% and 5.95 MB, respectively. In addition, YOLOv8n performed exceptionally well regarding several metrics, including precision and recall. After considering the evaluation metrics of each model, this study concludes that YOLOv8n has the best overall performance after adding BA to the dataset RPest141.0. It should be noted that SSD with Faster R-CNN has a large model size. Although some metrics are im-proved after introducing feature labels, their performance is average compared to the other models, and the larger model affects the inference speed. This illustrates that a larger network model sometimes leads to better detection performance and results.

Comparison of attention mechanism

By conducting ablation experiments on the mainstream models mentioned above, this study chose YOLOv5s, which has more room for enhancement and is more main-stream, as the base model. We added the more commonly used attentional mechanisms, SE, SimAM, and CBAM, to compare with Bionic Attention. Specifically, the SE, SimAM, and CBAM attention mechanisms are added to the SPPF layer in the YOLOv5 network structure to form three different versions of the network structure. To ensure fairness, none of the comparison experiments used transfer learning. The results for YOLOv5s, YOLOv5s_CBAM, YOLOv5s_SE, YOLOv5s_SimAM, and YOLOv5s_BA are presented in Table 3.

According to the results in Table 3, Bionic Attention outperforms all other attention mechanisms in the table from an overall perspective. Compared to the original YOLOv5s model, YOLOv5s_BA with the addition of the Bionic Attention mechanism has a 7.5% increase in precision rate, a 3.6% increase in recall, and a 5% increase in mAP@50 with no change in the model size and only a 0.53% increase in the total number of parameters. Compared with YOLOv5s_CBAM, the precision rate is increased by 11.5%, the recall rate is increased by 4.9%, and the mAP@50 is increased by 5.5%. 4.4% improvement in precision rate, 2% improvement in recall, and 3.9% improvement in mAP@50 compared to YOLOv5s_SE. 6.7% increase in precision rate, 2.6% increase in recall, and 4.9% increase in mAP@50 compared to YOLOv5s_SimAM. YOLOv5s_BA shows good performance and model accuracy with a similar number of total model parameters. This is because adding the Bionic Attention branch makes the model more inclined to determine the rice pest category by feature labels. The misdetection problem can be solved by learning the dorsal plate features of similar-looking pests such as white-backed, gray and brown flies to improve their dis-crimination ability. At the same time, the feature labels can also help the model extract the ability to distinguish the pests from their surroundings, which reduces the interference of the background to a certain extent, thus solving the problem of missed detection. When the problem of misdetection and omission is solved, mAP@50 is improved.

On the contrary, the classical attention mechanisms, such as SE and SimAM, although they play a specific role in helping the model in the task of detecting rice pests, with YOLOv5s_SE being the highest, reaching a good result of 86% for mAP@50, the interpretability and targeting are poorer when these attention mechanism modules are added. At the same time, the number of total parameters to be trained is also raised, and thus, the model size is also increased. Even after adding the CBAM attention mechanism, YOLOv5s_CBAM shows a 0.5% decrease in mAP@50 when the number of total training parameters increases by about 7.04% and the model increases by 1 MB. The above shows that adding classical attention does not necessarily achieve good results and may even lead to a decrease in accuracy, requiring multiple trials, such as the location and number of added attention mechanisms, etc., before a better situation may be found, resulting in poor targeting and interpretability. Compared with Bionic Attention, it only requires a certain amount of labor cost to achieve better accuracy.

In contrast, the total amount of training parameters added increases by 0.53% compared to the original model, which is almost negligible. It also does not require multiple trials to find the most suitable insertion position and number, reducing the trial cost as much as possible. Compared to the classical attention mechanism, Bionic Attention significantly improves the effectiveness of rice pest detection on small-scale datasets while reducing the experimental cost and complexity.

mAP@50 only succinctly summarizes the model’s performance in a single figure. It does not provide a concrete understanding of the direction from which Bionic Attention (BA) addresses the problems of the original model. Therefore, this experiment introduces more detailed indicators to validate further that BA is a targeted approach to solving the misdetection and omission of rice pests. In the study, all FPs and FNs in the model were categorized into one of six types (see Fig. 4). FP can be paired with FN for some error types (classification and localization). In this study, IoUmax will represent the overlap of the maximum IoU of FP with the ground truth of a given category. Unless otherwise stated, the foreground IoU threshold is denoted as TF, and the back-ground threshold is denoted as TB and set to 0.5 and 0.124.

Classification Error: accurate localization for rice pests, but incorrect classification (IoUmax ≥ TF for GT of the incorrect class);

-

1.

Localization Error: for rice pests classified correctly but not localized accurately (TB ≤ IoUmax ≤ TF for GT of the correct class);

-

2.

Both Cls and Loc Error: for rice pest misclassification and inaccurate localization (TB ≤ IoUmax ≤ TF for GT of the incorrect class);

-

3.

Duplicate Detection Error: Duplicate detection for a correctly classified rice pest (IoUmax ≥ TF for GT of the correct class, but another higher-scoring detection already matched that GT);

-

4.

Background Error: detects background as rice pest (IoUmax ≤ TB for all GT);

-

5.

Missed GT Error: Rice pests that should have been detected were not (All undetected ground truth (false negatives) not already covered by classification or localization error).

According to the results in Table 4, it can be further verified that Bionic Attention is superior to the other attention mechanisms in the table. Taking YOLOv5s as the benchmark index, the SE attention mechanism brings the most significant improvement to AP50, precisely 2.00% among the traditional attention mechanisms. The improvement brought by the SE attention mechanism is mainly because it reduces to a certain extent the interference brought by the misdetection and background of the original model, in which the Cls decreases by 0.69, the FP decreases by 2.00, and the Bkg decreases by 0.25. The enhancement brought by the SimAM attention mechanism is mainly due to the more accurate localization of pests compared to the original model, in which Loc decreased by 0.03. The CBAM attention mechanism decreased by 0.57% compared to the original model AP50, and although it reduces the miss-detection and inaccurate localization of the original model to a certain extent, its increase in Cls compared to the original model was 1.48. FP increased by 0.71, which proves that it brings more misdetections and leads to a decrease in accuracy. Compared with the original model and other traditional attention mechanisms, Bionic Attention has only 7.82 Cls, 10.24 FP, and even 0.00 Missed. The enhancement brought by Bionic Attention is pronounced, and its AP50 reaches 88.84%, which is an increase of 5.05% compared with the original model, and the reduction of Cls is 4.03, FP is 5.26, and Missed is 0.07. The above results prove that Bionic Attention is overall better than the other attention mechanisms in the table. The above results prove that Bionic Attention is overall better than the other attention mechanisms in the table. The significant improvement of Bionic Attention to the original model is due to its reduction of wrong detection and omission of targets in the original model.

Compared with the original model and other traditional attention mechanisms, Bionic Attention has only 7.82 Cls, 10.24 FP, and even 0.00 Missed. The enhancement brought by Bionic Attention is pronounced, and its AP50 reaches 88.84%, which is an increase of 5.05% compared with the original model, and the reduction of Cls is 4.03, FP is 5.26, and Missed is 0.07. The above results prove that Bionic Attention is overall better than the other attention mechanisms in the table. The significant improvement of Bionic Attention to the original model is due to its reduction of wrong detection and omission of targets in the original model.

Figure 5 illustrates the contribution of the six different categories of errors to the impact on model accuracy, where the pie charts show the relative contribution of each error and the bar charts show their absolute contribution. As seen in Fig. 2, Cls and Bkg have the most significant impact on model accuracy, regardless of the type of attention. It proves that on this dataset, misdetection due to the similarity between pests and pests and underdetection due to the similarity between pests and background have the most significant impact on the model accuracy. Bionic Attention, on the other hand, can solve this problem well.

Analysis of results

According to the results in Table 3, Bionic Attention improves detection mainly by reducing Classification Error (Cls) and Missed GT Error (Missed). From the perspective of model analysis, it mainly optimizes the loss function so that the model accuracy is improved. The total loss function of the original model is:

where \(\:{L}_{global}\) represents the total loss of detecting the overall label.

After introducing the feature labels, the BA model total loss function is added as:

where \(\:{L}_{feature}\) represents the total loss of detected feature labels.

This demonstrates that adding feature labels is equivalent to introducing more constraints in the loss function. This makes the optimization process richer and the model can minimize more error terms during the training process, thus improving the overall performance. By minimizing L_total2, the model learns to identify the whole and features, making the model more robust.

Taking the classification loss function in YOLOv5 as an example, the classification loss is used to measure the model’s prediction accuracy for the target category, and the model’s loss function is:

where C represents the total number of categories, \(\:{x}_{i}\) is the current category prediction, \(\:\widehat{{y}_{i}}\) is the probability of the current category obtained after the activation function, and \(\:{y}_{i}\) is the actual value of the current category. Figure 6 shows the classification loss of the five algorithms, and YOLOv5s_BA converges the fastest within 100 epochs. Adding feature labels increases the number of categories C, and the model needs to learn more categories. This can refine the model’s classification ability, making it easier to distinguish between different categories and features, reducing classification errors, and speeding up the convergence of the model. At the same time, feature labeling allows the model to obtain more fine-grained information in the feature extraction phase. This information can be better conveyed in the middle layer of the network, enabling the model to recognize the target more accurately in the prediction stage.

Visualization of rice pest detection

In order to visualize the difference between Bionic Attention and other attentional mechanisms for rice pest detection in complex backgrounds, this study uses the trained YOLOv5s, YOLOv5s_CBAM, YOLOv5s_SE, and YOLOv5s_BA models to rea-son about the test set images. As shown in Fig. 7, in the first row, there are specific interference terms in the original images, which make the YOLOv5s model and YOLOv5s_SimAM model incorrectly recognize the interference terms in the images as other pests, while YOLOv5s_CBAM, YOLOv5s_SE, and YOLOv5s_BA solve this problem well. At the same time, YOLOv5s_BA has the highest confidence level of 88% for predicting such rice pests. In the second row, YOLOv5s_BA and YOLOv5s_SimAM predicted the Asiatic rice borer with the highest confidence level, precisely 85%. In the third row, the similarity of this pest to its surroundings caused all types of models of YOLOv5s to fail to predict the rice pest in this figure, which resulted in a missed detection, whereas YOLOv5s_BA succeeded in detecting this pest with a confidence level of 67%. In the fourth row, YOLOv5s and YOLOv5s_SE should be more careful in predicting the results of this pest. The anchor box does not select the pest thoroughly. YOLOv5s_SimAM can select the pest ultimately, but with low confidence, YOLOv5s_CBAM fails to predict this type of pest, and there is a case of missed detection, whereas YOLOv5s_BA can select the pest entirely in the case, also achieved a high confidence level, precisely 66%. The results show that the YOLOv5s model with Bionic Attention can effectively solve the problems of misdetection and omission of the original model. At the same time, its performance and accuracy are better than other attention mechanisms.

The rows (from top to bottom) represent YOLOv5s_BA, YOLOv5s_CBAM, YOLOv5s, YOLOv5s_SE, and YOLOv5s_SimAM, while the columns (from left to right) correspond to the following modifications: (A) extreme high contrast, (B) extreme low contrast, (C) lower contrast, (D) rotated by + 15°, (E) rotated by − 45°, and (F) rotated by 60°. This figure illustrates the robustness and performance of the respective models under different challenging imaging conditions.

To demonstrate the robustness of BA across diverse scenarios, this study conducted extreme contrast adjustments (± 100% and − 80% relative to baseline) and rotational transformations (15°, 30°, and 45°) on randomly selected pest images. In Column A (200% contrast enhancement), BA maintained effective recognition of anatomical features with 76% MAP@50 for body segmentation, closely approaching the 80% performance of YOLOv5s_SE. However, under severe contrast reduction (Column B, 20% baseline contrast), only YOLOv5s_BA and YOLOv5s_SimAM achieved target detection, albeit with erroneous classifications. Moderate contrast conditions (Column C, 50% baseline) revealed notable performance recovery, with all architectures except YOLOv5s_SimAM attaining approximately 85% MAP@50. Rotational analysis demonstrated BA’s angular stability: Columns D-E showed consistent BA performance (> 77% MAP@50) compared to competing models exhibiting > 20% performance degradation between matching rotations. Notably, YOLOv5s produced critical misclassifications in Column E, erroneously identifying pest heads as complete specimens. Under extreme rotation (Column F, 45°), BA maintained detection efficacy alongside YOLOv5s_SE and YOLOv5s_SimAM (MAP@50 reductions < 5%), while YOLOv5s and YOLOv5s_CBAM suffered significant declines (21% and 25% respectively). These findings, as illustrated in Fig. 8, collectively indicate BA’s superior robustness in handling challenging imaging conditions compared to existing attention mechanisms.

In order to investigate the regions attended by the neural network before and after adding different attention mechanisms, this study introduced the Gradient Weighted Class Activation Mapping (Grad-CAM) algorithm25 to evaluate the images predicted by the model. Grad-CAM generates the class activation maps by calculating the gradient of the target class over the input feature maps, assigning different weights to different pixel points, and denoting the network with different colors to indicate the attention level. Among them, the red areas represent the regions with higher weights, i.e., where the network focuses more attention, while the blue areas indicate lower weights. To ensure interpretability and consistency, we selected the last convolutional layer (i.e., layer 21) of the YOLOv5s backbone as the target feature layer for Grad-CAM visualization. This layer retains rich semantic information and has sufficient spatial resolution, making it suitable for highlighting attended regions.

As shown in Fig. 9, the heat maps generated from YOLOv5s_SE (fourth column) and YOLOv5s_SimAM (fifth column) show attention regions that are often scattered in the background, mistakenly treating background textures as target features, which leads to poor detection results. In contrast, the YOLOv5s_BA model (sixth column) guides the network to focus more accurately on the pest areas by incorporating feature labels, covering the entire object while reducing background interference. This demonstrates that the YOLOv5s model with Bionic Attention exhibits superior detection and localization capabilities. Its attention is more concentrated on the true object regions, thereby mitigating false positives and missed detections in complex scenes like rice pest environments.

Discussion

In the context of rice pest detection, two key challenges arise: the variation in pest scale and the similarity in pest features. These factors can negatively affect the performance of recognition algorithms. In our experiments, we observed that the network was trained multiple times on the same feature of the same pest type, which led to overfitting. This overfitting caused the model to repeatedly recognize the same feature, while the recognition of the overall pest shape remained average. As a result, there was a slight improvement in the two metrics: Localization Error and Duplicate Detection Error. Additionally, we found that Bionic Attention (BA) tends to perform better on small to medium-sized datasets. This is primarily due to the labor costs associated with creating feature labels, which increase with dataset size. When applied to larger datasets, the benefits of Bionic Attention are less pronounced compared to traditional attention mechanisms. This suggests that BA may not always be the most efficient solution for large-scale datasets. To address these limitations, future research could focus on gradually reducing the impact of feature labels on overall labeling. One potential approach is to introduce a new parameter that regulates the contribution of feature labels to overall label recognition. Alternatively, incorporating more advanced state-of-the-art models could help improve recognition accuracy and enhance the overall performance of the algorithm.

Conclusions

This study compares the performance of different attention mechanisms in rice pest detection and demonstrates that Bionic Attention (BA) offers notable improvements over traditional attention mechanisms for this task, particularly on small-scale datasets. Our experiments show that BA generally outperforms other methods, though its effectiveness may vary depending on the dataset and model. Two rice pest datasets, RPest141.0 and RPest142.0, were constructed, with RPest142.0 including feature labels added to RPest141.0. These datasets, which involve 14 categories and 3548 images, were used to evaluate the models. To address the challenges of missed detections due to complex backgrounds and incorrect detections caused by pests with similar appearances, we utilized human-annotated feature labels as auxiliary branches in the model. These feature labels were combined with the overall labels for training in models such as YOLOv5s, YOLOv8n, SSD, Faster R-CNN, and YOLOv9-e. The addition of these feature labels led to improvements in mAP@50 by 1.6%, 1.5%, 1.2%, 1.1%, and 1.1%, respectively, compared to the original models. Furthermore, to validate the effectiveness of Bionic Attention, we compared it with widely-used attention mechanisms, including CBAM, SE, and SimAM, and introduced additional evaluation metrics such as Classification Error (Cls) and Missed GT Error (Missed). The results show that while YOLOv5s_SE, incorporating classical attention, achieves the best detection effect with 86.0% mAP@50, YOLOv5s_BA, which integrates Bionic Attention, outperforms it with 88.9% mAP@50. Moreover, the Cls of YOLOv5s_BA is reduced by 4.03 compared to the original model, indicating a significant improvement. In contrast, the Cls of YOLOv5s_SE, the best-performing model with traditional attention, is reduced by only 0.69, suggesting that Bionic Attention generally offers better performance and robustness across models. While the results demonstrate the potential of Bionic Attention, it is important to note that its performance is still influenced by dataset characteristics and the choice of model. Further research, particularly with larger and more diverse datasets, will help explore its scalability and refine its application.

Data availability

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation. Data can be requested from the corresponding author at lzy@sicau.edu.cn.

Abbreviations

- BA:

-

Bionic Attention

References

Mishra, R., Joshi, R. K. & Zhao, K. Genome editing in rice: recent advances, challenges, and future implications. Front. Plant Sci. 9, 409924 (2018).

Yang, Y. et al. FCBTYOLO: A Lightweight and High-Performance Fine Grain Detection Strategy for Rice Pests (IEEE Access, 2023).

Hassan, S. I., Alam, M. M., Illahi, U. & Mohd Suud, M. A new deep learning-based technique for rice pest detection using remote sensing. PeerJ Comput. Sci. 9, e1167. https://doi.org/10.7717/peerj-cs.1167 (2023).

Li, S., Wang, H., Zhang, C. & Liu, J. A self-attention feature fusion model for rice pest detection. IEEE Access. 10, 84063–84077 (2022).

Suzauddola, M. et al. Advanced Deep Learning Model for crop-specific and cross-crop Pest IdentificationVolume 274126896 (Expert Systems with Applications, 2025).

Hu, Y. et al. Detection of rice pests based on self-attention mechanism and multi-scale feature fusion. Insects 14 (3), 280 (2023).

Zhang, S. et al. JutePest-YOLO: A deep learning network for jute pest identification and detection. IEEE Access 12, 72938–72956. https://doi.org/10.1109/ACCESS.2024.3403491 (2024).

Liu, S. et al. Research on insect pest identification in rice canopy based on GA-Mask R-CNN. Agronomy 13 (8), 2155 (2023).

Serre, T., Oliva, A. & Poggio, T. A feedforward architecture accounts for rapid categorization. Proc. Natl. Acad. Sci. 104 (15), 6424–6429 (2007).

Yang, X. et al. Brain-inspired models for visual object recognition: an overview. Artif. Intell. Rev. 55 (7), 5263–5311 (2022).

Wu, X., Zhan, C., Lai, Y. K., Cheng, M. M. & Yang, J. Ip102: A large-scale benchmark dataset for insect pest recog-nition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8787–8796). (2019).

Zou, Z., Chen, K., Shi, Z., Guo, Y. & Ye, J. Object detection in 20 years: A survey. Proc. IEEE. 111 (3), 257–276 (2023).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 580–587). (2014).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: towards real-time object detection with region proposal net-works. IEEE Trans. Pattern Anal. Mach. Intell. 39 (6), 1137–1149 (2016).

Jiang, P., Ergu, D., Liu, F., Cai, Y. & Ma, B. A review of Yolo algorithm developments. Procedia Comput. Sci. 199, 1066–1073 (2022).

Liu, W. et al. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14 (pp. 21–37). Springer International Publishing. (2016).

Wang, C. Y., Yeh, I. H. & Liao, H. Y. M. YOLOv9: learning what you want to learn using programmable gradient information. In Computer Vision - ECCV 2024. Lecture Notes in Computer Science, Vol. 15089 (eds Leonardis, A. et al.) 3–20 (Springer, Cham, 2025). https://doi.org/10.1007/978-3-031-72751-1_1.

Wang, A. et al. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 37, 107984–108011 (2024).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In Pro-ceedings of the IEEE conference on computer vision and pattern recognition (pp. 4700–4708). (2017).

Alcorn, M. A. et al. Strike (with) a pose: Neural networks are easily fooled by strange poses of familiar objects. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4845–4854). (2019).

Itti, L. & Koch, C. A saliency-based search mechanism for overt and Covert shifts of visual attention. Vision. Res. 40 (10–12), 1489–1506 (2000).

Tzutalin, L. I. Git code (2015). https://github.com/tzutalin/labelImg

Yan, J., Zhao, L., Diao, W., Wang, H. & Sun, X. Af-ems detector: improve the multi-scale detection performance of the anchor-free detector. Remote Sens. 13 (2), 160 (2021).

Bolya, D., Foley, S., Hays, J. & Hoffman, J. Tide: A general toolbox for identifying object detection errors. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16 (pp. 558–573). Springer International Publishing. (2020).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision (pp. 618–626). (2017).

Funding

This work was funded by the Sichuan Provincial Natural Science Foundation Project (Grant No. 2024NSFSC0337, No. 2025ZNSFSC0162); and Innovation and entrepreneurship training program for College Students (Grant No. S202310626014); and Sichuan Provincial Science and Technology Department Regional Innovation Cooperation Project (Grant No. 2024YFHZ0177).

Author information

Authors and Affiliations

Contributions

YX: Conceptualization, Data curation, Funding acquisition, methodology, software, Visualization, writing - original draft. XZ: Project administration, supervision, writing – review & editing. ZC: Project administration, supervision, writing – review & editing. JT: Investigation, data curation, formal analysis. LZ: Data curation, Visualization. LX: formal analysis, Project administration. CJ: data curation, funding acquisition. ZL: Project administration, funding acquisition. All authors contributed to the article and approved the submitted version.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xiao, Y., Zhang, X., Chen, Z. et al. Comparative analysis of attentional mechanisms in rice pest identification. Sci Rep 15, 21397 (2025). https://doi.org/10.1038/s41598-025-08869-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-08869-4