Abstract

This paper presents a new architecture for multimodal sentiment analysis exploiting hierarchical cross-modal attention mechanisms, as well as two parallel lanes for audio analysis. Traditional sentiment analysis approaches are mainly based on text data, which can be inefficient as valuable sentiment information may reside within images and audio. Aiming at solving this issue, the model provides a unified framework that integrates three modalities (text, image, audio) based on BERT text encoder, ResNet50 visual features extractor and hybrid CNN-Wav2Vec2.0 pipeline for audio representation. Specifically, its main innovation is a dual audio pathway augmented with a dynamic gating module and a cross-modal self-attention layer that enables fine-grained interaction among modalities. Our model reports state-of-the-art performance on various benchmarks, outperforming recent approaches: CLIP, MISA and MSFNet. Such that, the results reveal an improvement of classification accuracy especially with missing or noisy modality data. The system has robustness and reliability, which is validated with an exhaustive analysis through metrics like precision, recall, F1-score, and confusion-matrices. In addition, such an architecture demonstrates modular scalability and adaptability across domains, making it proficient for applications in healthcare, social media, and customer service. By providing a framework for developing affective AI systems that can decode human emotion from intricate multimodal features, the study lays the groundwork for future research into further processing of such data streams in the longer term, including real-time processing, domain-specific adjustments, and extending the analysis to the addition of multi-channel sensor input combining physiological and temporal data streams.

Similar content being viewed by others

Introduction

Sentiment Analysis, which is an important subdomain of the field of Natural Language Processing (NLP), is the identification, extraction, and classification of subjective information from text. It has been found useful in diverse fields such as public opinion mining, customer review analysis and social media content analysis. Historically, sentiment analysis techniques were based on rule-based methods and numerical models such as lexicons, bag-of-words, naive bayes1, and support vector machines2. RNNs3, CNNs4, and later transformer-based models such as BERT5 have revolutionized these methods in terms of building more accurate and reliable approaches to sentiment predictions.

However, sentiment analysis based exclusively on text input has its limitations. Human communication is multimodal in nature, including textual information, audio, visual, and at times even physiological signals6. The text itself does not always convey the full range of emotional nuance, especially in cases of sarcasm, irony, and context-rich expressions. Multimodal sentiment analysis (MSA) overcomes this limitation by utilizing the joint information from different modalities (e.g., facial expressions, vocal tone, body language), that is critical for expressing a sentiment in a more holistic manner7. This is especially important for platforms that support rich audiovisual content, such as YouTube and TikTok, where sentiment is not only expressed through speech but also by a person’s physical appearance and overlay text.

Multimodal learning, in recent times, has shown that integrating various data modalities, when possible, tremendously improved sentiment classification8. Multimodal methods take advantage of the benefits offered by each individual modality for a more complete perspective of the emotional expression experience9. Unlocking the potential of [multimodal] will raise challenges such as effectively fusing heterogeneous data streams and capturing modality-aware and cross-modal contextual dependencies10.

Building on these principles, this paper presents a new multimodal sentiment analysis framework, which utilizes hierarchical cross-modal (and inherent) attention mechanisms and dual audio pathways (base and high level) to improve multimodal embeddings’ representation and fusion. It uses sophisticated deep-learning models: textual data are processed by transformer-based architectures11, CNNs4 extract visual features, and encoders extract audio signals. We propose a hierarchical attention mechanism to learn cross-modal interactions on multiple granularity levels in parallel and use a dual-audio branch to obtain a richer acoustic representation that includes low-level prosodic and mid-level semantic features. This would enhance the accuracy and robustness of the classification against the modalities of the real multimedia environment, allowing deeper cross-modal understanding.

In fact, discovering and understanding the reactions across audio, text and video has been widely accepted as multimedia analysis, and after understanding the impact of human representation of emotions we led them to venture beyond unimodal analysis12 while realizing the restrictive nature of unimodal-based analysis12. Multi modal methods take a highly complimentary stance to make the best use of leverage modalities, or other greater modality context or emotion for which other modalities are not sufficient. Although deep learning has previously given us great practical results and insight from models such as the vision and language model, recently, it has started to show promise to be gradually applicable to a generic framework to process critical elements for the processing and synthesis of different data types. This sets the groundwork for our current project, which we will describe, which merges text and visual information so that sentiment prediction can be performed better and more accurately.

Related work

There has been promising progress in multimodal sentiment analysis (MSA), with increasingly developed frameworks that are robust to missing or noisy modalities. A line of work extended CLIP-based foundational models13, specifically targeting the issue of transforming visual inputs to virtual text in a stable manner, more so under noise. The performance of these models was evaluated on popular datasets such as CMU-MOSI14 and CMU-MOSEI15, which are based on YouTube video data. Another important avenue is to develop interaction networks specifically to capture the synergy of text and image. They have been learned on datasets such as MVSA-Single16 and MVSA-Multiple17, which incorporate thousands of image-text combinations from Twitter. However, their performance can drop significantly when the textual descriptions do not match emotionally salient regions in their respective images.

The application of deep learning techniques has recently improved performance in emotion recognition systems based on speech (SER). Earlier systems treated speech features in isolation, but recent architectures, especially dual-stream CNN transformer networks, capture spatial and temporal information simultaneously from MFCCs and Mel spectrograms18. These systems achieve state-of-the-art performance of 99.42% accuracy on TESS benchmark datasets. Context-aware models also improve accuracy, as seen in the CCTG-NET19 model, which contextualizes convolutional transformer-gru networks. It integrates conversational context and achieves a 3% increase in unweighted accuracy on the IEMOCAP dataset20. In addition, some models apply hierarchical attention, combining contextual modeling with MFCCs and Mel-spectrograms demonstrating a 5.8% increase in unweighted accuracy on the same dataset. These advances highlight the importance of context and integrated acoustic representations in developing SER systems that are reliable, precise, and lightweight to enhance sentiment analysis in real-world applications21.

As mentioned in our introduction, numerous survey-based papers have documented the advances made in fusion approaches to the MSA field, over a range of datasets, which include YouTube videos, the new MOUD22,23 and the new ICT-MMMO24. These surveys, while informative, tend to focus on aspects such as advanced feature extraction and multitask learning while missing deeper analysis in other areas24. Several frameworks proposed using cross-modal contrastive learning approaches25 for sentiment-aware pre-training strategies to improve interactions between image and text modalities. Although such approaches utilize available resources (e.g., VSO, MVSA-Multiple, and Twitter datasets26), there are still restrictions when constructing large-scale, sentiment-rich multimodal datasets.

End-to-end systems based on transformers have also been proposed to perform multimodal fusion of text, visual, and acoustic information27. These systems perform well using datasets such as CMU-MOSI14, MELD28, and IEMOCAP20. However, these systems accommodate only up to three modalities, excluding physiological data. Other models, on the other hand, try to consolidate all modalities in one transformer architecture29. Although these models are quite effective on datasets such as the CMU-MOSI and CMU-MOSEI datasets, they typically rely heavily on text masking during pre-training, with limited exploration of audio or visual masking techniques.

Difference between contrastive and dissimilarity approaches as well as the approach of decomposing modalities into similarity and dissimilarity has also been explored. These approaches have been validated on known datasets (e.g.: CH-SIMS, CMU-MOSI, CMU-MOSEI) and yielded good results, but they show poor performance on noisy data or incomplete/inconsistened modality labels. Text-visual30 and text-audio31 interactions are modeled separately through bimodal fusion methods. Though effective for datasets including CMU-MOSEI and UR-FUNNY32, their limitation is that the proposed modalities are restricted to three and are also not extensible to physiologically available signals.

The classification of emotional states through dynamic speech signals is termed as Speech Emotion Recognition (SER)18. It is a significant branch of affective computing. Considerable progress has been made in acoustic-based SER, although model generalization and scalability are hampered by a lack of labeled data–small and imbalanced datasets. Some recent literature has focused on addressing these gaps with the focus on data augmentation, domain adaptation, and multi-level feature fusion, but thorough reviews applying these methods are still lacking33. Also gaining traction is bimodal SER which uses both textual and acoustic information for added emotional context. A great deal of recent progress in deep learning–especially concerning attention and fusion techniques–has been geared toward increasing the robustness and accuracy of bimodal SER systems. Integrated models appear to be more effective than single-technique models; studies report that DBMER style models incorporating CNNs4, RNNs , and multi-head attention outperform others. However, issues such as cross-corpus variability, multilingual SER, and scarce bidirectional-multimodal datasets still pose challenges toward large-scale implementation34.

Recent benchmarking studies based on CNN features extraction have offered a baseline of performance comparison across modalities (text, image, audio). These studies, while informative, tend to suffer from speaker dependence and limited assessment of how modality affects results. There have been detailed surveys that outline the complete landscape of MSA approaches, datasets, and challenges. Although these reviews were comprehensive, they sometimes fail to reflect the complexity of fusion methods7 and the subjectivity involved in multimodal sentiment analysis35.

Recent benchmarking studies based on CNN features extraction have offered a baseline of performance comparison across modalities (text, image, audio). These studies, while informative, tend to suffer from speaker dependence and limited assessment of how modality affects results. Detailed surveys have been conducted that outline the complete landscape of MSA approaches, datasets, and challenges. Although these reviews were comprehensive, they sometimes fail to reflect the complexity of fusion methods and the subjectivity involved in multimodal sentiment analysis6. There have also been testing of ensemble approaches that combine multiple pre-trained models (e.g., BERT with ResNet or gpt-2 with VGG). These models yield superior performance on CMU-MOSI and CMU-MOSEI, yet grapple with issues of data sparsity, complexity of fusion between differing models, and risks of overfitting. Strong robustness and higher accuracy of sentiment classification have been introduced via joint multi-task frameworks consisting of both intra- and inter-task dynamics. The models tested on a diverse range of datasets (including CMU-MOSI, CMU-MOSEI, and UR-FUNNY) are complex and sensitive to low sentiment signals36. Another approach to aspect-level multimodal sentiment analysis has been co-attention mechanisms for further enhanced feature fusion. These approaches are heavily reliant on text-image pairs and may not transfer well to diverse modalities or linguistic settings. Using MLP based low-cost models, an interpretation providing mixed model across features has been presented with the ability to scale well. Nonetheless, they are mostly limited to text, audio, and visual modalities and may not be robust37. To further understand the trend or evolution of sentiment analysis from being a unimodal to multimodal is represented in Fig. 1.

Method

Existing models of multimodal sentiment analysis utilize either early fusion, which concatenates features of individual modalities, or late fusion, which computes the average prediction from each modality. Traditional methods still struggle to demonstrate the intricate interdependencies among modalities. In comparison, the proposed framework utilizes multilevel fusion but is augmented with two main innovations: a double-channel acoustic feature processing module and a cross-modal attention mechanism for better feature combinations. Instead of extracting hand-crafted audio spectral features and training a separate classifier in traditional cascaded architectures, our model jointly learns CNN-based spectrogram features and Wav2Vec2-based contextual speech embeddings with an adaptive gating mechanism. This setup allows for a richer extraction of acoustic and linguistic characteristics from audio streams. Moreover, the multi-head attention mechanism enables the model to attend dynamically to different modalities, ensuring that the model has a way to optimize which modality to trust most given various conflicting sources of information.

Dataset description

The dataset used to build this multimodal sentiment analysis system comprises of three different datasets, one for each type of modality. Figure 2 depicts the structure of dataset.

For the text modality, we use Kaggle “Emotions” dataset with more than 400,000 text samples, each labeled with one of the six distinct categories: joy, anger, sadness, fear, love, and surprise. In terms of natural language processing, it is a rich and well-organized dataset which is widely used for training the sentiment analysis model. The dataset is populated by the corresponding emotion label of each entry: a sentence, being the ground truth for supervised learning tasks. This detailed annotation enables the extraction of semantic and linguistic features necessary for emotion recognition in a multimodal sentiment analysis setting.

For image modality, AKSNAZAR dataset has been used. It represents a comprehensive database of images of facial expressions corresponding to different basic state of emotions like happy/sad/angry/surprised/neutral. The dataset contains the images taken with different lighting conditions and with different angles during the acquisition, which improves the robustness and generalization of computer vision models developed for the task of facial emotion recognition. Each image is labelled carefully according to the expression it shows, so it is very informative and allows for supervised training in visual sentiment analysis.

For the audio modality, the modalities were combined to form a composite dataset comprising audio data from various sources. The mappings were created from the filenames with their corresponding metadata for each audio file and the sentiment label, and were used to create a CSV file. The labels follow a structured approach, providing supervision to vocal and acoustic signals.

In order to balance the representation and to account for the class imbalance found in the original datasets, 10,000 samples from each modality text, image and audio were chosen as a curated subset for each modality. We split this subset into 8000 samples for training and 2000 samples for testing to ensure modality consistency, which allows us to evaluate model performance on a fair platform.

In order to achieve consistency across modalities which stemmed from datasets with varying counts of emotional classes (six for images, five for text, and variable for audio), we implemented a standard binarization method. All emotion designations were reduced to two classes of sentiment as per well-known affective valence categorizations. For example, emotions “joy,” “love,” and “surprise” were labeled as positive, while “anger,” “fear,” and “sadness” were labeled as negative. This method ensured uniformity at the level of sentiment but more importantly, it enabled the model to concentrate on binary sentiment classification, a far more practical approach in cases of content moderation and feedback analysis. Because data were independently sourced, modality specific features were learned independently using specialized pipelines and later merged through a cross modal attention mechanism. This framework aligns sentiment representations at the embedding level rather than requiring instance-level alignment across modalities, which is more restrictive. The fusion mechanism guarantees that the features from different modalities capture aligned emotional states, enhancing cross-modal sentiment inference despite heterogeneity of the sources.

To ease the classification target and since the original multi-class distributions still maintains an imbalance, the model was constructed to accomplish binary sentiment classification. It concerns differentiating positive and negative sentiment in each modality. The binary setup is maintained consistently throughout the model training, evaluation and result reporting stages. No three-class sentiment classification was performed. Using this method leads to more stable training, and allows for meaningful comparisons between sentiment predictions based on text, image and audio data.

Proposed methodology

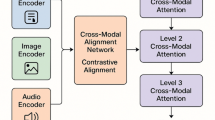

This paper proposes a novel neural network-based double-path audio architecture for multimodal sentiment analysis. To accurately perform the sentiment classification task, this system employs three key input modalities–text, image, and audio–through unique feature extraction pipelines. The detailed architecture of the model is shown in Fig. 3.

In the text, a frozen BERT model is used for creating contextual representations for words and phrases. Then, these embeddings are fed into a specialized Text Processing Layer, which incorporates a linear (fully-connected) layer, a ReLU activation function, and a dropout for deeper feature extraction and generalization.

The image modality is processed through a frozen network called ResNet50, which is able to extract high-level semantic features from visual data. The features from the resulting image are sequenced into an Image Processing Layer that is similar in structure to the text pathway, transforming each image feature with a linear transformation, ReLU activation and dropout operations intended to fortify the features before they are used downstream.

The dual-branch structure for audio processing is another key innovation in the architecture. For example one branch adopts CNN-based method to extract spectral features, and the second the Wav2Vec2.0, a pretrained network capable of fine-grained temporal audio understanding. Both branch outputs are merged into an Audio Fusion Module with a Dynamic Gating Mechanism that assigns adaptive importance weights depending on the relevance of each branch. These fused audio features also pass through the same Audio Processing Layer as for the text and image pathways.

One of the important breakthroughs in the model’s design is the dual-branch audio feature extraction. The first branch captures the prosodic features of speech at a low level by extracting pitch, tone, and spectral energy patterns using a CNN-based encoder. The Wav2Vec2.0 model’s second branch captures contextualized representations directly from raw waveforms and semantically rich features. The two branches complement each other so that the model utilizes both localized temporal features and higher-level semantic abstractions. The outputs from the branches are integrated using a dynamic gating mechanism that assigns variable importance weights to each branch depending on the input signal. This technique allows the system to enhance the most informative stream per instance, improving expression and flexibility in acoustic representation prior to fusion with other modalities. The system is designed this way because emotional signals in speech span a wide range of time scales and diverse features. It would be complex and insufficient to capture this using a single branch.

The modality-specific feature vectors are then concatenated and the resulting representation passes through a series of linear transformations (Linear \(\rightarrow\) ReLU \(\rightarrow\) Dropout) in a Feature Fusion Layer to generate a final joint embedding. During the training phase, this fused feature vector is routed to separate modality-based Sentiment Heads (text, image, and audio). These heads promote modality-specific learning by providing auxiliary sentiment prediction tasks . The Unified Sentiment Classifier (USC) performs the final sentiment classification that consolidates the outputs from all three sentiment heads and then classifies the output into one of the two sentiment classes: positive or negative, consistent with the binary sentiment classification setup used throughout this study.

The whole architecture, from the encoders to the processing layers, the attention modules, the fusion mechanism, the sentiment heads to the classifier, is implemented with modular class definitions in Python. Thus, it serves as a reliable and efficient framework for heterogeneous multimodal inputs by tailoring the dual-branch audio strategy with dynamic feature fusion and cross-modal attention to facilitate the acquisition of sentiment information.

Mathematical formulation of cross-modal attention fusion

To allow rich cross-modal interactions, we implement multi-head self-attention. The usage of multi-head self-attention layer is used to fuse the modality-specific features obtained from text, image and audio inputs.

Let the modality-specific embeddings be denoted as

where \(n\) is the number of tokens or segments and \(d\) is the embedding dimension. These are concatenated to form a unified input:

For each attention head \(h\), the input \(\mathbf{X}\) is projected into query, key, and value spaces:

where \(\mathbf{W}_h^Q, \mathbf{W}_h^K, \mathbf{W}_h^V \in \mathbb {R}^{d \times d_k}\) are learnable projection matrices and \(d_k\) is the dimensionality per head.

Each head computes scaled dot-product attention:

The outputs of all heads are concatenated and linearly transformed to obtain the final attention output:

where \(H_i\) is the attention output from head \(i\), and \(\mathbf{W}^O \in \mathbb {R}^{H d_k \times d}\) is the output projection matrix.

This multi-head formulation enables the model to capture diverse semantic and temporal correlations across modalities, facilitating more robust sentiment representation before classification.

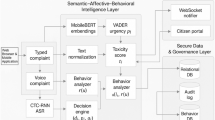

Training process

This section discussed the steps of execution of how the model is trained and saved. Figure 4 depicts the flowchart of the process in detail.

The multimodal sentiment analysis model is trained using a systematic and efficient approach, which ensures high levels of reliability and performance. This starts with a setup task in the training run, including important library imports and hardware connections (e.g. to a GPU or CPU) Checkpoint paths are also defined in order to allow resuming from checkpoints mid-training.

At first, it uses pretrained models–Bert for text, ResNet50 for images, and Wav2Vec2. 0 for audio on the head loaded as foundational encoders for all modality. Simultaneously, the datasets for each modality (text, image and audio) are done preprocessing and structured for optimized access in training.

We then initialize the model architecture using a common framework covering all specific feature extraction modules, fusion components, and classification layers as designed. Subsequently, important elements for training, such as loss function, optimizer, and metrics like accuracy and F1 score which help in evaluating model performance, are configured.

Before the training loop starts, it checks for existing checkpoints. Training continues at the last epoch if a checkpoint exists; if not, it starts from scratch. The training loop—iterates over the dataset for a preset number of epochs. In each epoch, the model takes performs forward propagation to get predictions, loss calculation, followed by backpropagation for the appropriate weight updates. After every epoch, the model is validated on another dataset to check for generalization and to keep an eye on overfitting.

Metrics like loss/accuracy are logged during the training. Checkpoints are created periodically, thus allowing the training progress to be preserved and resumed without loss of information. Usually, the best-performing model on the validation set is saved separately from regular checkpoints.

After iterating all epochs, the process enters the final phase and summary of this process is also produced, including total training time, total compute time, and evaluation results in this stage. Similarly, an inference function is also defined, allowing the model to make predictions on new, unseen multimodal data using another method. The final result of the training pipeline is the JSON dump of all critical results, including the models configurations, actual performances, and checkpoints.

The clear structure and modularization of the system make it robust and easy to reproduce and debug, which is crucial for multimodal sentiment analysis because it relies on the sound processing of synchronized batches of different data modalities.

Evaluation metrics

To evaluate the performance of multimodal sentiment analysis model on multimodal inputs text, image and audio a comprehensive set of evaluation metrics is required and proposes. These are critical metric especially when it comes to balanced and accurate assessment, particularly if you are using imbalanced data sets. This work uses a similar evaluation framework which contains commonly used performance metrics like accuracy, precision, recall, F1-score, confusion matrix, and AUC-ROC curve. All of them are useful in their own way in figuring out the strengths and weaknesses of the model in classifying sentiments.

Accuracy acts as a simple performance metric that calculates the number of correctly predicted sentiment labels as a proportion of the total number of predictions made. Nevertheless, the accuracy rarely represents the true story, especially when the sentiment classes are imbalanced, because accuracy is biased toward the dominant classes. The equation is given in 1:

Precision measures accuracy for positive sentiment predictions, specifically the fraction of accurately labeled positive content in relation to all textual predictions labeled positive. They are particularly important in applications involving emotionally charged or impact heavy decision-making contexts, where false positives can have disastrous consequences. The equation is given in 2:

Recall measures how many of the actual (“relevant”) instances of a sentiment class were predicted by the model. So, high recall means most of the true positive are being retrieved, but it can also lead to a high number of false positives. High recall means sentiment cues are always likely to be identified, but that does risk including some false positives. The equation is given in 3:

F1-score gives a harmonic mean of precision and recall, and it’s a good starting point when both false positives and false negatives are required to be minimized. In multimodal systems, prediction uncertainty and class imbalance can be introduced by the interplay of modalities and therefore this metric is particularly relevant. The equation is given in 4

Confusion Matrix provides a comprehensive view of the model’s performance by showing the number of true positives, true negatives, false positives, and false negatives for each sentiment class. This matrix helps us recognise particular instances of misclassification, e.g., if ‘positive’ and ‘negative’ examples are being confused a lot) and proves to be useful in model tuning and during the analysis of individual modality performance.

The AUC-ROC Curve (Area Under the Receiver Operating Characteristic Curve) is a useful measure of model performance over all the classification thresholds. The ROC curve plots the true positive rate (recall) against the false positive rate, providing information about the model’s ability to discriminate between sentiment classes. A larger AUC indicates better overall performance (throughout all possible thresholds, or the TPR-FPR), and represents the model’s ability to accurately predict observations irrespective of the decision threshold. The equations are given in 5 and 6:

All of these different evaluation metrics provide a strong framework for evaluating the performance of the proposed multimodal approach to sentiment analysis. They guarantee high average performance, as well as fair and credible classification throughout the spectrum of sentiment categories and input modalities. This holistic assessment is particularly crucial in multimodal setups, where separate modalities–whether they be textual, visual, or auditory, may each play varying roles in informing the ultimate sentiment judgment.

Results and discussions

This section discusses the results obtained after training and testing multiple baseline models along with the proposed models. Table 1 gives you detailed analysis of results of various baseline models and proposed models. All results in this section are reported for a binary classification task involving two sentiment classes–positive and negative. No multi-class classification (e.g., including a neutral class) was conducted in this study.

MISA, MSFNet, CLIP, CLIP+BERT and the Proposed Model comparison chart on Text, Image and Audio performance evaluation in the above integrated view. All models are evaluated for their performance based on outcome level of three modalities on Accuracy (A), Precision (P), Recall (R) and F1 Score (F1) metrics. Aim is to express the accuracy of each model’s sentiment prediction for each modality and determine the best model for Multimodal Sentiment Analysis. To better understand the model’s efficiency the training summary of the proposed model is added in Table 2.

Within the three data streams, classifying sentiment from text proved to be the toughest hill to climb. Nevertheless, our model still posted a respectable F1-score of 0.84 for that channel, pointing to solid meaning capture and tagging. By contrast, MISA and MSFNet lagged behind, showing precision figures of only 0.25 and 0.51, well below par. Such scores hint that both systems often label neutral or weakly informative sentences as positive because they miss the fine details of shifting language. CLIP and its CLIP+BERT cousin fared better, reaching moderate accuracies of 0.79 and 0.80, thanks to off-the-shelf embeddings, yet they stopped short of full text-centric tuning. Our framework, however, pulls BERT-based embeddings through extra polish layers, letting it lock on to the subtlest emotional signals and boosting sentiment judgments when text teams up with images or audio.

The performance of imaging modality is at high benchmark across all models where Proposed Model achieved highest (all scored at 0.94). This indicates that the visual components are utilized correctly in sentiment prediction, probably because of the utilization of robust pretrained CNN models like ResNet50. CLIP and CLIP+BERT are in second place with scores in the 0.92–0.94 range, which is to be expected as those models excel at vision-language pair tasks. MISA and MSFNet provide slightly worse performance (accuracy of 0.86 and 0.84 respectively), which indicates that indeed they do ok with the image sentiment extraction, but they do not seem to possess the advanced fusion or attention architecture that more recent models such as CLIP and the proposed model employ. For audio performance, its score and variance are usually lower than that of text and images. Once again, the Proposed Model outperforms all remaining ones with a 0.85 in all metrics, reflecting the better handling of audio sentiment signals seemed due to the dual stream audio architecture and fusion (Wav2Vec2. 0, ECOM, and CNN based gated frameworks). At the other end of the scale, MISA, MSFNet and CLIPBERT gave low scores, featuring 0.51–0.57 on MISA and MSFNet, and (perhaps unsurprisingly) more misery for CLIP+BERT, at 0.55. Next, CLIP scores 0.79–0.80 top of the lead, demonstrating its inability to characterize auditory sentiment features, but less than the proposed model. Several of the outcomes noted above suggest that even more powerful feature extraction and integration of audio may be beneficial, and the proposed model clearly excels over other models at implementing such strategies.

The Proposed Model outperforms all existing architectures for all evaluation metrics within all 3 modalities. The most significant performance gains were identified in the text and audio modalities, which typically poses generalization or model accuracy issues in other models. Results further validate the effectiveness of multi-modal fusion strategy, self-attention model for cross-modal interactions, and pipeline exhaustive feature integrations in introducing drop-out and ReLU fusion layers. Further, the scores, such as that for the proposed model metrics being equal per modality indicates a good model lingua with low overfitting and instabilities over epochs. The structured evaluation grants the endorsed model a lasting favor over other tested counterparts, substantiating its strength in prediction and performance for all sentiments.

The effectiveness of the proposed architecture is evident from the performance progression across modalities. As shown in Figs. 5, 6, 7 and 8, the integration of hierarchical cross-modal attention and dual audio pathways leads to consistent improvements in overall sentiment analysis (Fig. 5), as well as modality-specific gains in image (Fig. 6), text (Fig. 7), and audio (Fig. 8) channels, demonstrating the model’s ability to leverage rich, complementary features from each modality.

To assess how well the unified model as well as each individual modality performed, we calculated confusion matrices and ROC curves for the text, image and audio branches. As highlighted in the confusion matrices, all three modalities exhibit balanced classification of the image modality demonstrating the strongest performance with with 940 true positives and 917 true negatives followed by audio and text. This underscores the advantage gained through the use of pretrained visual encoders like ResNet50 in emotion recognition based on facial expression cues. The audio modality results, obtained from a dual-branch CNN and Wav2Vec2.0 fusion, demonstrate competitive performance which justifies the proposed acoustic pathway’s efficacy. Image modality also performed well in ROC curve analysis with AUC of 0.962, audio and text closely following with AUCs of 0.923 and 0.922 respectively. The unified model, which integrates all modalities by multi-head attention and fusion, shows strong AUC of 0.922 confirming consistent and reliable sentiment prediction across varying streams. The relevant plots are displayed in Figs. 9, 10, 11 and 12.

Limitations

The proposed multimodal sentiment analysis approach has several limitations, despite its promising results. First, the use of pretrained models like BERT, ResNet50, and Wav2Vec2.0 make the system computationally reliant. This type of strain puts a cap on both real-time and remote resource settings. Second, the fusion algorithm works under the assumption that all three modalities–text, image, and audio is aligned and clean which is rarely the case in real-life situations like social media posts or video calls that contain background noise. Additionally, the model’s behavior on out-of-distribution or domain-specific data has not been evaluated which raises issues with generalizability. Another limitation is the neutral and mixed sentiments are classified as binary, losing vital context despite added layers of complexity. Furthermore, a lack of focus on blended emotions poses additional challenges. Lastly, there is very little focus on explainability within sensitive sectors, as verifiability is weak due to gaps in interpreting the attention mechanisms applied in the fusion processes. These gaps make it increasingly difficult to apply the technology in practice. Addressing these issues can enhance the robustness and real-world applicability of the system.

Additionally, there is a problem with the data quality which has affected model’s performance. The AKSNAZAR dataset which is used to train the model on image modality has an issue which content-based imbalance. Out of all the images classified as negative, most of the images contain a human face, where as in images classified as positive, no such imbalance is observed. Therefore, the final model predicts most images that have human faces as negative even if the image is of a happy face.

Conclusion

In this paper, we introduce a multimodal sentiment analysis framework that integrates three modalities for sentiment prediction, namely text, image and audio. The initial foundation of this framework is based on advanced pretrained models: BERT for text, ResNet50 for image feature extraction and Wav2Vec2.0 for audio representation. Through exemplary experiments, we demonstrate that the multilevel framework successfully overcomes the limitations of existing unimodal sentiment analysis methods and proves the effectiveness of the model. By integrating a cross-modal self-attention mechanism and dynamic audio fusion layer, the proposed system can adequately accommodate the complex inter modal dependencies and conduce to a more grounded and semantically richer sentiment prediction.

The comparative scores show that the proposed model consistently with all three modalities consistently outperforms established baseline architectures on relevant performance metrics: precision, recall and F1-score. This highlights the value of integrating diverse data sources to gain novel and more precise insights into sentiment, especially in elaborate, real-world settings—including social media platforms, customer experience systems, and interactive AI applications. These results confirm that multimodal sentiment analysis provides a more accurate and holistic view of emotional expression than unimodal approaches.

Data availability

The datasets generated and/or analysed during the current study are available in the kaggle repository, https://www.kaggle.com/datasets/nelgiriyewithana/emotions, https://www.kaggle.com/datasets/penhangara1/aksnazar5, https://www.kaggle.com/datasets/uwrfkaggler/ravdess-emotional-song-audio, and https://www.kaggle.com/datasets/uwrfkaggler/ravdess-emotional-speech-audio.

References

Dey, L., Chakraborty, S., Biswas, A., Bose, B. & Tiwari, S. Sentiment analysis of review datasets using Naive Bayes and k-nn classifier. arXiv preprint arXiv:1610.09982 (2016).

Ahmad, M., Aftab, S. & Ali, I. Sentiment analysis of tweets using svm. Int. J. Comput. Appl. 177(5), 25–29 (2017).

Ain, Q. T. et al. Sentiment analysis using deep learning techniques: A review. Int. J. Adv. Comput. Sci. Appl. 8(6) (2017).

Liao, S., Wang, J., Yu, R., Sato, K. & Cheng, Z. CNN for situations understanding based on sentiment analysis of twitter data. Procedia Comput. Sci. 111, 376–381 (2017).

Hoang, M., Bihorac, O. A. & Rouces, J. Aspect-based sentiment analysis using bert. In Proceedings of the 22nd Nordic Conference on Computational Linguistics 187–196 (2019).

Das, R. & Singh, T. D. Multimodal sentiment analysis: A survey of methods, trends, and challenges. ACM Comput. Surv. 55(13s), 1–38 (2023).

Gandhi, A., Adhvaryu, K., Poria, S., Cambria, E. & Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 91, 424–444 (2023).

Soleymani, M. et al. A survey of multimodal sentiment analysis. Image Vis. Comput. 65, 3–14 (2017).

Yadav, S. K., Bhushan, M., Gupta, S. & Multimodal sentiment analysis: Sentiment analysis using audiovisual format. In 2nd International Conference on Computing for Sustainable Global Development (indiacom), 2015 1415–1419 (IEEE, 2015).

Huddar, M. G., Sannakki, S. S. & Rajpurohit, V. S. Multi-level context extraction and attention-based contextual inter-modal fusion for multimodal sentiment analysis and emotion classification. Int. J. Multimed. Inf. Retr. 9(2), 103–112 (2020).

Kokab, S. T., Asghar, S. & Naz, S. Transformer-based deep learning models for the sentiment analysis of social media data. Array 14, 100157 (2022).

Xu, H. Unimodal sentiment analysis. In Multi-Modal Sentiment Analysis 135–177 (Springer, 2023).

Zhao, X., Poria, S., Li, X., Chen, Y. & Tang, B. Toward robust multimodal sentiment analysis using multimodal foundational models. Expert Syst. Appl. 276, 126974 (2025).

Zadeh, A. et al. CMU multimodal opinion sentiment and emotion intensity dataset. Accessed May 2025. http://multicomp.cs.cmu.edu/resources/cmu-mosi-dataset/.

Zadeh, A. B., Liang, P. P., Poria, S., Cambria, E. & Morency, L. P. Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics Vol. 1: Long Papers, 2236–2246 (2018).

Yang, X., Feng, S., Wang, D. & Zhang, Y. Image-text multimodal emotion classification via multi-view attentional network. IEEE Trans. Multimed. 23, 4014–4026 (2020).

Yadav, A. & Vishwakarma, D. K. A deep multi-level attentive network for multimodal sentiment analysis. ACM Trans. Multimed. Comput. Commun. Appl. 19(1), 1–19 (2023).

Tellai, M., Gao, L. & Mao, Q. An efficient speech emotion recognition based on a dual-stream CNN-transformer fusion network. Int. J. Speech Technol. 26(2), 541–557 (2023).

Tellai, M. & Mao, Q. CCTG-NET: Contextualized convolutional transformer-GRU network for speech emotion recognition. Int. J. Speech Technol. 26(4), 1099–1116 (2023).

Busso, C. et al. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 42, 335–359 (2008).

Tellai, M., Gao, L., Mao, Q. & Abdelaziz, M. A novel conversational hierarchical attention network for speech emotion recognition in dyadic conversation. Multimed. Tools Appl. 83(21), 59699–59723 (2024).

Chenworth, M. et al. Methadone and suboxone® mentions on twitter: Thematic and sentiment analysis. Clin. Toxicol. 59(11), 982–991 (2021).

Zhu, L., Zhu, Z., Zhang, C., Xu, Y. & Kong, X. Multimodal sentiment analysis based on fusion methods: A survey. Inf. Fusion 95, 306–325 (2023).

Poria, S., Chaturvedi, I., Cambria, E. & Hussain A. Convolutional MKL based multimodal emotion recognition and sentiment analysis. In IEEE 16th International Conference on Data Mining (ICDM), 2016 439–448 (IEEE, 2016).

Yang, S., Cui, L., Wang, L. & Wang, T. Cross-modal contrastive learning for multimodal sentiment recognition. Appl. Intell. 54(5), 4260–4276 (2024).

Wagh, R. & Punde, P. Survey on sentiment analysis using twitter dataset. In Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), 2018 208–211 (IEEE, 2018).

Shahade, A. K., Walse, K. H. & Thakare, V. M. Deep learning approach-based hybrid fine-tuned Smith algorithm with Adam optimiser for multilingual opinion mining. International Journal of Computer Applications in Technology, Vol. 73, 50–65. Accessed June 2025. https://www.inderscience.com/info/inarticle.php?artid=133253.

Poria, S., Hazarika, D., Majumder, N., Naik, G., Cambria, E. & Mihalcea, R. Meld: A multimodal multi-party dataset for emotion recognition in conversations. arXiv preprint arXiv:1810.02508 (2018).

Wang, Z., Wan, Z. & Wan, X. Transmodality: An end2end fusion method with transformer for multimodal sentiment analysis. In Proceedings of the Web Conference 2020 2514–2520 (2020).

Chaudhuri, A. Visual and Text Sentiment Analysis Through Hierarchical Deep Learning networks (Springer, 2019).

Lin, F., Liu, S., Zhang, C., Fan, J. & Wu, Z. StyleBERT: Text-audio sentiment analysis with bi-directional style enhancement. Inf. Syst. 114, 102147 (2023).

Hasan, M. K., Rahman, W., Zadeh, A., Zhong, J., Tanveer, M. I., Morency, L. P. et al. UR-FUNNY: A multimodal language dataset for understanding humor. arXiv preprint arXiv:1904.06618 (2019).

Kakuba, S. & Han, D. S. Addressing Data Scarcity in Speech Emotion Recognition: A Comprehensive Review (ICT Express, 2024).

Kakuba, S., Poulose, A. & Han, D. S. Deep learning approaches for bimodal speech emotion recognition: Advancements, challenges, and a multi-learning model. IEEE Access 11, 113769–113789 (2023).

Shahade, A. K. & Deshmukh, P. V. A unified approach to text summarization: Classical, machine learning, and deep learning methods. Ingénierie des Systèmes d’Information 30(1), 169–179. https://doi.org/10.18280/isi.300114 (2025).

Rahman, W., Hasan, M. K., Lee, S., Zadeh, A., Mao, C., Morency, L. P., et al. Integrating multimodal information in large pretrained transformers. In Proceedings of the Conference. Association for Computational Linguistics. Meeting Vol. 2020, 2359 (2020).

Sun, H., Wang, H., Liu, J., Chen, Y. W. & Lin, L. CubeMLP: An MLP-based model for multimodal sentiment analysis and depression estimation. In Proceedings of the 30th ACM International Conference on Multimedia 3722–3729 (2022).

Funding

Open access funding provided by Symbiosis International (Deemed University).

Author information

Authors and Affiliations

Contributions

D Vamsidhar: Model design and implementation, result analysis, and original draft preparation. Parth Desai: Model design and implementation, result analysis, and original draft preparation. Aniket K. Shahade: Conceptualization, supervision, and original draft preparation. Shruti Patil: Supervision, Review and Editing. Priyanka V. Deshmukh: Conceptualization, Supervision, Review and Editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Vamsidhar, D., Desai, P., Shahade, A.K. et al. Hierarchical cross-modal attention and dual audio pathways for enhanced multimodal sentiment analysis. Sci Rep 15, 25440 (2025). https://doi.org/10.1038/s41598-025-09000-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-09000-3