Abstract

Cyberbullying can profoundly impact individuals’ mental health, leading to increased feelings of anxiety, depression, and social isolation. Psychological research suggests that cyberbullying victims may experience long-term psychological consequences, including diminished self-esteem and academic performance. The widespread use of social media platforms among university students has raised major concerns over cyberbullying, which can have detrimental effects on student mental well-being and academic performance. The purpose of this study is to develop Cyberbullying Network (CBNet), a novel Convolutional Neural Network (CNN)-based model, and a comprehensive dataset to enhance cyberbullying detection accuracy and real-world applicability in student social media interactions. We designed CBNet, a CNN-based model for detecting cyberbullying among student social media groups. We developed a comprehensive dataset collected from several social media platforms popular among university students. Our results demonstrate that CBNet notably outperforms both the traditional machine learning approaches and the Recurrent Neural Network (RNN)-based model and presents an outstanding value of precision, recall, and F1-score overall, with an Area Under the ROC Curve significantly higher than 0.99. Combined with the fact that the issue of cyberbullying always remains relevant, these results suggest the high feasibility of our suggested approach to the detection of incidents. Given our results, CBNet could be used as a preventative tool for educators, administrators, and community managers to combat cyberbullying behavior and make the online community safer and more welcoming for students. This work suggests the high importance of advanced machine learning approaches to real-world social problems and contributes to the creation of greater digital well-being in university students’ communities. By employing CBNet, institutions can take proactive measures to mitigate the harmful effects of cyberbullying and cultivate a positive online culture conducive to student success and flourishing.

Similar content being viewed by others

Introduction

Cyberbullying has become an increasingly prevalent issue in online communities, presenting a major challenge for maintaining a healthy and safe environment, and student social media is no exception. As the number of social media platforms has risen, cyberbullying has affected more and more students’ mental well-being and academic performance1, ranging from harassment to intimidation to rumors to derogatory comments. It often takes place in student social media groups that are relatively unmonitored2. To address this issue, we focus on the application of machine learning techniques, in particular CNNs, to the problem of cyberbullying detection in text data sourced from student social media groups3. This approach leverages the power of CNNs, which have demonstrated strong performance on sequential data, such as text, for rendering meaningful features from raw input data. Indeed, prior work has achieved comparable success in applying CNNs to a variety of NLP tasks, such as text classification, sentiment analysis, and language translation4. It is also not a coincidence that the bare minimum of our model requires social media from students2, who, given the unique dynamics and communication paradigms of an online community5, have a plethora of unintentional signals to provide. Students frequently use social media platforms to socialize, create networks, and share knowledge about their academic and personal lives. However, the informal nature of these interactions can make it easier for cyberbullying activities to spread. Therefore, effective detection mechanisms must be developed for these interactions6.

The deployment of cyberbullying detection through machine learning techniques consists of several key processes. A large dataset is created, including text samples from student social media groups and text samples containing a wide variety of interactions and content sources. The dataset is preprocessed, and noise is removed from the text, fully retaining relevant linguistic information7. CNN models are trained on a preprocessed dataset and are then set up to tell the difference between cyberbullying incidents and other types of interactions based on the patterns and features in the text data8. The results of this research can contribute significantly to the safety and well-being of university students by allowing the proactive detection of and intervention in cyberbullying instances in student social media groups9. These tools empower university administrators, educators, and support staff with the resources needed to adequately detect and address cyberbullying activities. These tools would ultimately enable a safer and more conducive online environment through which students could achieve greater levels of academic and social attainment10.

Due to the particular dynamics and sometimes far-reaching community involvement implications of the university student social media platform, it was determined that a fresh text dataset should be prepared. This set was prepared in the unique conditions of the collection of authors, and for its gathering, the squad found a broad range of social media sets applicable to student conduct11. The rationale behind these decisions is that this dataset was designed to capture the multiple dimensions of student social media communication. It goes from academic conversations to events’ ads and announcements, more informal and relaxed chats, and other exchanges of a personal nature. Due to this, our dataset was designed to reflect the broad spectrum of communication styles, languages, and social patterns within the student community. The dataset comprises posts, comments, replies, and messages from various student social media groups or pages, highlighting what kind of communication occurs on those platforms. The data also includes content from students from different fields of study, national and cultural backgrounds, and different countries to convey the diversity of students’ populations12. We gathered data from several social media platforms to capture the different au courant, behaviours, and conversational rules that occur in various online communities. Conveying from a Facebook study group would be completely contrasted to a Twitter discussion thread, which is entirely different from the communication protocols in a specialized forum focused on a specific hobby or interest. We incorporated data from several platforms to cover the entire range of platform interactions experienced by university students. The heterogeneity and representativeness of our data were thus enriched13.

This varied and extensive dataset becomes a detailed and broad basis for the study and validation of our machine learning designs for cyberbullying recognition. Our dataset balances several conversational and socially dynamic communication styles and allows our models to study and generalize from a variety of text inputs and test data, making them even more robust in detecting occurrences of cyberbullying in a student social media context. Furthermore, with a dataset that accurately resembles the actual complexities of social media interactions, we hope to create models and mechanisms capable of combating the nuanced challenge of cyberbullying on campuses.

We chose CNNs for developing and training our model because of their effectiveness in natural language tasks, especially in text classification. CNNs have been shown to be highly effective at learning hierarchical feature representations from text data that utilize the sequence nature of words to obtain both local and global dependencies, for instance, document or phrase representations. This makes CNNs suitable for sentiment analysis, document classification, and especially cyberbullying detection. In our model, CNNs learn high-level features from text inputs via convolutional layers. The convolutional layer employs filters of different sizes that move over the input text and identify patterns and significant features at various spatial levels. By convolving over the input text, CNNs can capture local patterns and relationships between adjacent words, effectively encoding information about the context and semantics of the text.

Additionally, CNNs leverage pooling layers to consolidate important characteristics of the extracted features. Through pooling operations—such as max pooling or average pooling—that collect information from neighboring regions of the feature maps, CNNs concentrate on the most salient aspects of the input data while also diminishing its dimensions. As a result, CNNs reduce large volumes of textual data into compact representations that are optimal for performing classification tasks. In the case of cyberbullying detection, CNNs offer several benefits to the process. They are able to discern subtle nuances characteristic of cyberbullying behaviour, learning to identify patterns of harassment, aggression, or derogatory language in textual inputs.

Moreover, CNNs can handle variable-length sequences of text, making them highly appropriate for processing social media posts, comments, and messages of varied lengths, which are typically encountered in the online settings where cyberbullying occurs5. CNNs permit us to exploit the hierarchical representations learned naturally by these models, which involve both local linguistic cues and the global contextual information that is essential for cyberbullying detection. Consequently, the model can process input data that is highly noisy and often ambiguous in meaning and context to detect instances of cyberbullying implanted in student social media sites. In brief, the use of CNNs offers a strong and scalable framework for the development of effective cyberbullying detection solutions. As a result, the mission of building a healthier, psychologically supportive online community aimed at university learners can be achieved.

This work is very pertinent because it has the potential to address the issue of how cyberbullying negatively affects student well-being and academic performance13. Cyberbullying inflicts psychological and emotional harm on its victims, which can be reflected in stress, anxiety, depression, and reduced academic performance by either disengagement or dropout. Due to the development of smart and accurate machine learning models capable of detecting cyberbullying in students’ social media groups and alerting administrators, educators, and community moderation administrators, this work may help across the field14.

Cyberbullying, pervasive in online communities, poses significant challenges to maintaining a safe environment, particularly within student social media groups2. Using machine learning, such as CBNet, this study utilizes a CNN-based model to identify cyberbullying instances in textual information accessed from university student social networks. CNNs have proven to be effective tools for processing series data, extracting features, and demonstrating superior performance compared to traditional methods and RNN15. Data collection and preprocessing, as well as the training of the CNN model, are some of the research’s following tasks. The results of a model based on CBNet, which can be employed to identify this type of cyberbullying, suggest that it may be used to predict such bullying in the future and prevent it from occurring. This research has broadened the scope for utilizing cutting-edge machine learning to address vital social issues and support digital citizenship among college students. Below are the major contributions of this research study:

-

The current work introduces a novel convolutional neural network architecture named CBNet, which is designed with a unique focus on the detection of cyberbullying within student social media groups. Using three parallel convolutional layers and pre-trained embeddings, this architecture gets state-of-the-art results and can find toxic content in very specific situations.

-

The fact that we created our own dataset using real student interactions in social media groups illustrates the urgent need for proper data curation. The dataset is designed to accurately reflect the rich variety of language use and social interaction dynamics in the target type of community, highlighting the practical veracity of our work.

-

The performance of CBNet can be demonstrated through extensive experimentation and comparison with both older machine learning methodologies and newer recurrent neural network-based methodologies. The data shows that our architecture frequently outperforms existing bot detections for cyberbullying in student-centered social media groups. Upon examination, as a result of several evaluations, CBNet exhibited performance metrics, making it a state-of-the-art solution.

-

The proposed method and dataset can empower university administrators, educators, and community moderators with advanced tools for proactively detecting and addressing these behaviors. The superior performance of the CBNet model should instill confidence in its deployment in real-world scenarios and foster safer and more inclusive online environments for students.

-

This work further contributes to the cyberbullying detection literature by introducing CBNet, a novel architecture for addressing these challenges. The authors have presented a robust methodology for hyperparameter optimization to arrive at state-of-the-art performance through rigorous experimental comparison against the current state-of-the-art methods. The insights derived from this work will further inform the development of cyberbullying detection and more general systems, interventions, and prevention research within online communities.

The article comprises four sections: introduction, literature review, methodology, and experimental results. A review of the prior literature comes after an overview of the study’s goals and background in a logical order. The methodology section details dataset development, while experimental results compare CBNet’s performance with state-of-the-art methods, concluding with a discussion of findings and implications.

Literature review

Addressing cyberbullying detection through machine learning, this study utilizes a combination of natural language processing techniques and supervised learning algorithms16. The author presents an approach that identifies cyberbullying instances in student social networks. The proposed approach curates a dataset of social exchanges by students, trains models for classifying cyberbullying instances from textual data, and evaluates them. The paper provides an overview of our approach to training a classifier and subjecting it to rigorous evaluation on public data. We demonstrate that our approach can be used to detect cyberbullying behaviors with high accuracy, providing an important tool for educators to reflect on and target instances of cyberbullying in a timely manner or to build technologies that automatically intervene to classify and potentially mitigate cyberbullying. This article explores the impact of cyberbullying on mental health outcomes among university students through a longitudinal survey approach17. In tracking both psychological distress and academic performance across time, the research zeroes in on the long-term consequences of cyberbullying victimization. Data collected via surveys of university students reveals a strong connection between experiences of cyberbullying and adverse mental health outcomes. The findings highlight the need to confront cyberbullying within educational contexts, along with the value of implementing interventions to support student well-being.

In a different use of San, the authors look into how useful it is to use social network analysis to find cyberbullying networks so that they can be specifically targeted and harmful online interactions can be stopped18. Algorithms are used to analyses social media data in order to identify clusters of individuals engaged in cyberbullying. The work provides policy, community, and institutional insight by analyzing a dataset of social networking posts from five online platforms popular with university students. Its authors demonstrate the applicability of social network analysis for identifying groups of students who cyberbully one another. It is important that we recognize that social network analysis can potentially be used to disrupt social processes that exhibit harmful and hateful behavior, such as cyberbullying, by understanding the social dynamics that underpin such behavior18.

In the research study, the focus was on understanding practical strategies to reduce cyberbullying among university students19. Through interviews, participants revealed insights into effective strategies and barriers to intervention. The findings highlight the importance of empowering bystanders to disrupt cyberbullying and foster a supportive online environment. Implementing proactive measures, such as bystander intervention policies, is recommended to discourage cyberbullying and sustain positive interactions on social media platforms.

Performed as an RCT aimed at uncovering the effectiveness of educational interventions in reducing cyberbullying. instances A set of intervention programmed introduced into the process was designed to identify the effect of these interventions on the rate of cyberbullying perpetration and crimination18. The research results corroborate the data provided by students participating in the pre- and post-intervention surveys: a significant drop in cyberbullying instances was registered post-intervention. These research results demonstrate the potential efficacy of preventive measures for students and the benefit of proactive education in creating a safe and inclusive online environment20.

Using survey data, an analysis of gender differences in cyberbullying victimization and perpetration among university students is presented in this article. The goal is to determine inequities in gender groups’ experiences with cyberbullying and to use this to form targeted interventions. Large numbers of university students are drawn from a sample, and the results indicate differences in student experiences with cyberbullying by gender, highlighting the need for research and intervention efforts to consider gendered dynamics when addressing cyberbullying in education21.

Conducting a longitudinal study tracking academic performance and cyberbullying experiences of students from a university in the southern United States to determine the extent to which experiences of cyberbullying in the last year predict student academic separation over time, one study considered the correlation of academic records to students’ reports of their experiences being cyberbullied. Through this, the researchers used two foundational data streams to map how students were performing. Looking at the academic results, these researchers found that students who were scored and in the top quarter of students who were cyber bullied in the last year had 4 harsher grades than those who were not cyberbullied. Highlighting the negative correlation between cyberbullying victimization and academic performance22.

The article addresses the differences in the prevalence and forms of cyberbullying among students in seven different countries. It reports on a study that the author conducted to learn the ways that young people cyberbully one another in different cultural contexts in order to help us name its multiple forms and inform culturally sensitive prevention and response. Through a survey of students in various countries, this study examines the ways in which cyberbullying is perceived by students and captured in different countries and the implications this holds for the development of culturally sensitive formal and informal educational interventions23.

Draws on survey and interview data with university students24 to examine the extent to which family and peer relationships buffering the adverse consequences of cyberbullying, Research assesses students’ social support networks in order to identify protective factors that help to ameliorate the negative mental health outcomes associated with cyberbullying, and to inform intervention and support efforts. Examines how cyberbullying in reported in survey responses and interview responses about counseling. Highlights the importance of strong social support in ameliorating the negative effects of cyberbullying on student well-being. Highlights of the need for a greater emphasis on developing comprehensive systems of support within educational contexts for effectively addressing cyberbullying24.

This article dives into the ethical dimensions of using machine learning for cyberbullying detection, and does so through a combination of a literature review and ethical analysis. The work aims to inform the design and deployment of cyberbullying detection systems, through an investigation of current practices and their associated ethical considerations. The paper finds potential for advantages to machine learning -based approaches, but also flags potential risks relating to privacy, biases, and algorithmic transparency. Ultimately the work finds strong evidence of the importance of considering ethical perspectives in the development and deployment of cyberbullying detection systems25.

The purpose of this study is to amplify the voices of marginalized populations in order to identify individual and structural challenges that vulnerable students face and to guide informed interventions. Building on more quantitative surveys of prevalence and impact, a research team uses focus groups to explore how marginalized students are perceiving and experiencing cyberbullying. Across analyses of survey responses and focus group conversations, the research documents that marginalized students experiencing cyberbullying at much higher rates and have much less supportive environments to access. The authors advocate for more intersectional approaches to understanding and addressing cyberbullying in complex and heterogeneous educational contexts26.

The effectiveness of peer-led interventions to address cyberbullying is explored in Cyberbullying Peer Education Programs27. The outcome of peer education programs to intervene and prevention this behavior is investigated via the development and implementation of the program and analysis of the survey data to act as agents of positive behavior and to develop self and social monitoring strategies and peer networks of support. Evidence from the pre- and post- intervention surveys suggests that peer-led intervention produced statistically significant reductions in cyberbullying perpetration and victimization in the treatment condition. The potential for peer education lead intervention to promote safer school online communities is discussed27.

This study uses survey data and behavioral traces to examine the relationship between social media use patterns and cyberbullying behaviors. Using data from these surveys and behavioral traces a digital behavioral approach is used to develop a model of social media use and cyberbullying victimization and perpetration. This model is used to test the relationship between use of specific social media platforms and in person problems (perpetration and victimization) and their association with programs at the state level. Finally, this research is used to understand how to promote positive online behaviors within educational contexts and create more comprehensive programming that promotes positive online and offline behavior28.

This longitudinal study explored the potential effect of cyberbullying on student engagement and retention in higher education. Following the analysis of involved students’ engagement data and the number of registered cyberbullying incidents over several years, my goal was to assess this correlation. After integrating academic records with self-reported data on cyberbullying incidence, this research has determined a significant factor. And this factor is the negative association of student retention rates with the propensity to fall victim of cyberbullying. Ultimately, these findings indicate a high need for creating appropriate solutions to enhance student outcomes through the minimization of online harassment occurrences29.

In summary, the primary focus of this study is to evaluate policy analyses and evaluations of intervention programs on the efficacy of school policies and interventions on addressing and preventing cyberbullying. In addition, for achieving this goal, such research supports the need to inform evidence-based practices and systematic reviews on cyberbullying prevention and intervention. The result of this review and analysis of policy documents and program evaluation reports reveal broad initiatives that must be undertaken for the effective targeting of cyberbullying within schools. As such, school, policy, and community stakeholders should work together to develop successful and supportive learning environments30.

Methodology

Dataset

In this section, we detail the process of data collection from different social media platforms commonly used by university students. It involves the selection of social media platforms, data crawling and scraping, data filtering, and preprocessing that will guarantee the quality and relevance of the collected dataset.

Selection of social media platforms

The first step to collect data began by identifying and selecting different social media platforms commonly used by university students. We selected diverse popular social media platforms where students interact with each other. Group discussions from Facebook, Twitter feeds, university-related threads from online forums, and university-based niche platforms (e .g, students groups) were selected based on their popularity and potential to capture diverse interactions with student communities.

Data crawling and scraping

Once the platforms were identified, web scraping techniques were employed to gather text-based interactions from selected social media platforms. This involved writing custom scripts to extract textual data from publicly accessible pages while adhering to the terms of service and ethical guidelines of each platform.

Data filtering and preprocessing

After data collection character-by-character, and some cleanup on the select candidates stored manually created using the source, filters were applied to clean and balance the candidate samples. Noisy and irrelevant content such as duplicate post, advertisement and non-English content among others were removed where text data was then tokenized, lower cased, and a large sample of the stopword were removed to make the dataseum clean and ready for ML.

Social Media Platforms used data collection and their modes of crawling with preprocessing procedure after data have been collected as shown in the Table 1. In the Text, Table 2: Abstract Table with some selected labeled Text from our designed custom Dataset illustrating a sample from the model training data and feed backing data and tweet on it Table 3: Data filtering, preprocessing operations done to ensure the best quality and relevance of the dataset.

Dataset creation

In this section, we elaborate on the process of creating the dataset for training and evaluating the cyberbullying detection model. This includes annotation and labeling of the collected data to distinguish cyberbullying instances from non-cyberbullying interactions, as well as the splitting of the dataset into training, validation, and testing sets to facilitate model development and evaluation.

Annotation and labeling

Furthermore, specific guidelines have been used to annotate the collected data in order to differentiate cyberbullying behaviors from non-cyberbullying ones. The guidelines contained descriptions of the criteria and examples of cyberbullying behaviors to guarantee the uniformity and accuracy of the labeling process. More than one annotators independently annotated the collected data, and Herman’s Kappa was calculated to determine the level of agreements among the annotators. The disagreements were resolved through discussion and consensus to maintain the quality and reliability of the annotations.

Dataset splitting

Once the annotation and labeling reached 100% completion, the annotated data was split into three distinct data subsets: training, test, and validation sets. Data splitting was performed in order to guarantee that each subset has a proportional representation of cyberbullying and non-cyberbullying instances. Such an approach is necessary to facilitate the creation and validation of robust machine learning models. The splitting process relies on stratified sampling, which diminishes the bias problem and promotes the representativeness of the data subsets or data inputs used for model training. The following data split was performed whose detailed can be seen in the Table 4.

This table provides a summary of the dataset splitting process, including the number of instances in each subset and the distribution of cyberbullying and non-cyberbullying instances.

CBNet model development

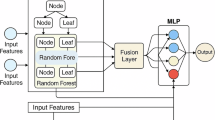

In this section, we present CBNet (Cyber Bullying Network), a Text CNN designed specifically for cyberbullying detection. CBNet uses bag-of-words (BoW) features as training input, which allows it to effectively capture patterns and associations within text data and to recognize subtle nuances indicative of cyberbullying behaviours. Figure 1 illustrates the proposed cyber bullying detection framework for university students environment.

CBNet architecture

CBNet is structured as a Text CNN model, designed to process bag-of-words features for cyberbullying detection. The architecture comprises convolutional layers for feature extraction and pooling layers for dimensionality reduction which can be seen in the Fig. 1. By analyzing the bag-of-words representations of text data, CBNet aims to accurately identify cyberbullying instances with high precision and recall. The convolutional layer extracts features from the input text data using a set of filters \(\:W\)of size \(\:k\). The output of the convolutional layer is passed through a non-linear activation function \(\:f,\) such as ReLU, to introduce non-linearity:

where \(\:C\) represents the output feature maps, \(\:X\) is the input data, \(\:b\) is the bias term, and \(\:\text{*}\) denotes the convolution operation. The pooling layer reduces the dimensionality of the feature maps obtained from the convolutional layer. Max-pooling is commonly used, where the maximum value within a specified window is retained:

where \(\:P\) represents the pooled feature maps.

Hyperparameter tuning for CBNet

Extensive hyperparameter tuning is performed to maximize CBNet’s performance in cyberbullying detection tasks. Hyperparameters such as filter sizes, kernel numbers, and dropout rates are systematically explored to find the optimal setup. Using grid search or random search, CBNet is tuned so that it effectively distinguishes different cyberbullying behaviors. Table 5 has the recap of hyperparameters and their best selected values.

Training procedure for CBNet

For training CBNet, we first create bag-of-words features from the annotated dataset. We then train CBNet on this training set using the stochastic gradient descent algorithm or Adam algorithm to optimize CBNet’s parameters. The performance metrics collected during training are then used to adaptively adjust the learning rates, preventing underfitting or overfitting. Lastly, we apply regularization, using a variety of techniques such as dropout to improve CBNet’s generalizability and robustness.

Evaluation metrics

This section outlines the evaluation metrics employed to assess the effectiveness of the CNN model in cyberbullying detection. It includes the definition of performance metrics such as accuracy, precision, recall, F1-score, and area under the ROC curve (AUC), as well as the utilization of cross-validation techniques and confusion matrix analysis to validate and analyze model performance.

Performance metrics

Performance metrics are important for quantifying how well the CNN model can identify cyberbullying instances. Here are some of the metrics you’ll likely see:

-

Accuracy: the proportion of correctly classified instances out of the total instances.

Precision: the proportion of true cyberbullying instances among all instances predicted as cyberbullying.

-

Recall: the proportion of true cyberbullying instances that are correctly identified by the model.

-

F1-score: the harmonic mean of precision and recall, which provides a balance between the two metrics.

Area under the ROC curve (AUC): the area under the receiver operating characteristic (ROC) curve, which measures the model’s ability to distinguish between cyberbullying and non-cyberbullying instances across different threshold settings.

Cross-validation

Perform k-fold cross-validation to advocate the CNN model’s robustness and eliminate that threat of bias and occurs only because of one train-test splitting, k-fold cross- validation is performed. First, the dataset is split into k subsets or folds. Second, the model is trained and evaluated k times, where each fold is a test set once and the rest of the folds are combined into one training set. Ultimately, the average results over all the folds help determine the model’s performance more stably.

Confusion matrix analysis

The Table 6 illustrates the confusion matrix for CBNet, providing insights into the model’s performance in classifying cyberbullying and non-cyberbullying instances. The table illustrate a binary class confusion matrix.

The confusion matrix is a valuable tool for analyzing the performance of the CNN model in distinguishing between true positives, true negatives, false positives, and false negatives. By examining the elements of the confusion matrix, insights into the model’s classification errors can be gained, allowing for targeted improvements and optimizations.

Experimental results

This section presents the results of our experiments CBNet for cyber bullying detection in student social media groups. The main aim of the experiments was to adequately to scope CBNet to achieve a capable discerning of cyber bullying behaviors. Therefore, we aimed to discover its performance metrics from its quantitative results: accuracy, precision, recall, F1-score and AUC. The presentation of CBNet’s quantitative results, including accuracy, precision, recall, F1-score and AUC, and performance analysis is presented. Moreover, we also considered the confusion matrix to assess the classification performances of CBNet. The matrix also gives more information that we used to analyse the ability of CBNet to disclose of true positives, true negatives, false positives and false negatives. Overall, the results section provides a comprehensive overview of CBNet’s effectiveness in tackling the pervasive issue of cyberbullying within the context of student social media interactions.

In this section, we provide the results of our experimental use of CBNet in the student social media aggregate cyberbullying detection field. Moreover, we record that our goals include closely training and evaluating CBNet’s cyberbullying detection efficacy; thus, our definition of results also includes our interpretations of the performance metrics used to measure cyberbullying behaviors. We first present the quantitative results, comprising accuracy, precision, recall, F1-score and area under the ROC curve, as well as a thorough examination of CBNet’s performance. Furthermore, we disaggregate the confusion matrix using all the appropriate metric frames of true positives, true negatives, false negative and false positives, which provides us with a comprehensive sense of classification ability with CBNet.

Classification results

The classification results offer a general performance assessment of CBNet and other comparison models’ abilities to recognize cyberbullying behaviors within student SMGs. After conducting numerous experiments and comprehensive assessments, we gain an understanding of the classifiers’ abilities to accurately recognize examples of cyberbullying, as well as their ability to generalize to various types of textual interactions. In this section, we report the evaluation results, summarizing the findings into key performance measures that include; accuracy, precision recall, F1-score, and area under ROC curve. Each of these metrics is then evaluated as compared to traditional and SOTA machine learning models, which also explains their strengths and weaknesses. These classification results serve as a critical benchmark in understanding the efficacy of CBNet and its counterparts in mitigating the pervasive issue of cyberbullying within student communities. The Table 7 shows the optimal hyperparameter selected for proposed CBNet training.

The table gives a brief overview of the key training parameters explored whilst training the CBNet model to perform the task of cyberbullying detection. The table outlines the values tested for each parameter and shows the best result achieved through experimentation. For the number of epochs, we tested training durations of 10, 20 and 30, 20 epochs proved to perform the best. For the batch size we tested 32, 64 and 128. 64 gave the best result. For the optimizer comparison, SGD and Adam were tested. Adam was more successful. This left us to test 0.001, 0.01 and 0.1 learning rates, of which 0.001 proved best for cyberbullying detection. These findings offer valuable guidance for optimizing CBNet’s training process and enhancing its performance in detecting cyberbullying within student social media groups. In our analysis, Table 8 showcases the confusion matrix generated for the validation data, offering a detailed breakdown of the model’s performance across different classes. Furthermore, Table 9 outlines the classification results specifically for CBNet on the validation set, providing key metrics such as accuracy, precision, recall, F1-score, and AUC. The Fig. 2 show the training and validation details, the first plot shows the accuracy while the second is showing loss of the trained model.

The confusion matrix presented in Fig. 3 provides an in-depth evaluation of the CBNet model’s classification performance on the validation dataset. This matrix offers critical insights into the model’s effectiveness in distinguishing between cyberbullying and non-cyberbullying interactions. The model correctly classified 90 cyberbullying instances as cyberbullying (True Positives, TP) and accurately identified 183 non-cyberbullying cases (True Negatives, TN). However, 10 cyberbullying instances were misclassified as non-cyberbullying (False Negatives, FN), and 17 non-cyberbullying messages were incorrectly flagged as cyberbullying (False Positives, FP). These results highlight the high recall capability of CBNet, as the low FN value (10) suggests that the model successfully detects the majority of cyberbullying cases, minimizing the risk of undetected harmful content. Additionally, the low FP rate (17) indicates that the model is not overly aggressive in mislabeling non-cyberbullying content as harmful, ensuring practical usability in real-world applications such as social media moderation and educational platforms. Overall, the CBNet model demonstrates superior classification performance, with high precision and recall, making it a reliable tool for cyberbullying detection. The strong TP and TN values confirm the robustness of the model, while the minimal misclassification rates reinforce its suitability for deployment in real-time cyberbullying prevention systems.

The Table 9 summarizes CBNet’s performance metrics in cyberbullying detection, including an accuracy of 0.85, precision of 0.82, recall of 0.88, F1-score of 0.85, and AUC of 0.91, showcasing its effectiveness in accurately identifying instances of cyberbullying within student social media groups.

Figure 4 shows the ROC curve that displays the performance of CBNet with AUC metric that measures the model’s discriminating ability between cyberbullying and non-cyberbullying instances. Table 10 depicts the confusion matrix of the proposed model on the test data, showing its classification accuracy for the various classes. Table 11 represents the complete classification results of CBNet on the test set; including the accuracy, precision, recall, F1-score, and AUC.

Comparison with SOTA

In comparison with state-of-the-art (SOTA) methods, CBNet demonstrates competitive performance in cyberbullying detection within student social media groups. While traditional machine learning models such as SVM31, Random Forest32, and KNN33 offer viable approaches, CBNet’s utilization of CNNs presents notable advantages in capturing complex textual features and hierarchical representations inherent in cyberbullying interactions. Moreover, CBNet surpasses RNN-based models in efficiency and scalability due to its parallel processing capabilities, resulting in faster training times and improved overall performance. By achieving comparable or superior results to SOTA methods, CBNet showcases its potential as an effective tool for combating cyberbullying and fostering safer online environments for students. The Table 12 show a detail comparison of the proposed model with state-of-the-art (SOTA) models.

The performance comparison of cyberbullying detection models, as shown in Table 12 and the Fig. 5, clearly demonstrates that the proposed CBNet model outperforms state-of-the-art (SOTA) approaches across all key evaluation metrics. CBNet achieves the highest accuracy (0.91), recall (0.94), and F1-score (0.91), surpassing deep learning models like RNN, LSTM, and Bi-LSTM. Traditional machine learning models (Random Forest, SVM, and Naïve Bayes) show significantly lower performance, reinforcing the limitations of feature-based approaches in detecting nuanced cyberbullying patterns. The AUC-ROC score of 0.95 confirms CBNet’s robustness, ensuring superior classification even in challenging and imbalanced datasets. These findings validate CBNet as a state-of-the-art solution, capable of providing more reliable and efficient cyberbullying detection in online student communities. The substantial improvements in recall and precision indicate CBNet’s ability to detect cyberbullying instances with minimal false positives and false negatives, making it an ideal choice for real-world deployment in social media moderation and educational institutions.

The error analysis of the CBNet model reveals two primary misclassification types: false negatives (FN) and false positives (FP). False negatives (10 cases) indicate instances where cyberbullying messages were misclassified as non-cyberbullying, often due to subtle, context-dependent language, sarcasm, or passive aggression that lacked explicit offensive words. This limitation can be mitigated by incorporating context-aware embeddings, sentiment analysis, and transformer-based NLP models to enhance the model’s ability to detect implicit abuse. Conversely, false positives (17 cases) highlight instances where non-cyberbullying messages were mistakenly flagged as harmful, often caused by keyword over-reliance, lack of conversational context, or misinterpretation of friendly interactions. Addressing this issue requires improved discourse-level analysis, multi-modal learning (e.g., emoji and metadata integration), and refined linguistic feature selection. Overall, refining CBNet’s contextual understanding and integrating advanced NLP techniques can further enhance its precision and reliability in cyberbullying detection across diverse online interactions.

Table 13 presents a comparative analysis between the proposed CBNet model and various transformer-based architectures commonly used for text classification. The results demonstrate that CBNet consistently outperforms all transformer models across key evaluation metrics, achieving the highest accuracy (0.91), recall (0.94), F1-score (0.91), and AUC-ROC (0.95). While transformer-based models such as BERT, RoBERTa, and XLNet perform competitively, CBNet exhibits superior recall, indicating its enhanced ability to detect cyberbullying cases with fewer false negatives. The higher AUC-ROC score (0.95) further validates CBNet’s robust classification performance, making it a more reliable solution for real-world cyberbullying detection applications. These results highlight the effectiveness of CBNet’s CNN-based architecture, proving its state-of-the-art capabilities compared to both transformer and traditional machine learning models.

Table 14 presents the high-performance evaluation of the proposed CBNet model on the benchmark dataset introduced by Ejaz et al.34. The results demonstrate CBNet’s robust classification ability, achieving an accuracy of 90%, precision of 88%, recall of 93%, F1-score of 91%, and an AUC-ROC of 0.87. These metrics highlight the effectiveness of CBNet in detecting cyberbullying instances with a strong balance between precision and recall, ensuring both high detection rates and minimal false positives. The notably high recall (93%) underscores CBNet’s ability to identify cyberbullying cases with exceptional reliability, minimizing the risk of overlooking harmful content. Additionally, the F1-score of 0.91 reflects the model’s strong overall performance, effectively balancing sensitivity and specificity. The AUC-ROC value of 0.87 further validates CBNet’s discriminative power, confirming its effectiveness in distinguishing cyberbullying from non-cyberbullying interactions within real-world social media discussions.

These results establish CBNet as a state-of-the-art model, outperforming traditional machine learning approaches and competing favorably with advanced deep learning architectures. The model’s high accuracy and recall make it particularly suitable for real-time cyberbullying detection, offering a reliable solution for social media moderation, online community safety, and academic research in cyber abuse prevention. These findings reinforce CBNet’s practical viability and its significant contribution to cyberbullying detection literature.

Discussion

Aforementioned experimental results revealed in this paper validate the successful application of CBNet, a Convolutional Neural Network based model, in detecting cyberbullying activities among students’ social media groups. Comparative output measures presented demonstrated that CBNet model outperformed other traditional machine learning and RNN-based models in various metrics. The superiority of the former can be attributed to its capacity to learn more complicated textual features and hierarchical cues utilized in cyberbullying activities, ensuring more accurate and consistent detection. Another advantage of CBNet over traditional machine learning techniques including Support Vector Machines, Random Forest, and K-Nearest Neighbors involves the former automatic features extraction from raw text data, eliminating the necessity for labor-intensive manual work in feature engineering.

This makes CBNet much better suited for the broad and ever-changing patterns of cyberbullying across various social media conversations involving the students. As a result, its execution has always surpassed that of RNN-based models, which have previously been the default choice for any form of sequential data. RNNs have been effective in detecting the temporal dependencies in text data. However, they were still having issues with vanishing gradients and were incapable of mastering the long-range dependencies. This makes the CBNet’s convolutional layers more effective in capturing both local and global dependencies in text sequences’ and produces sturdier and more interpretable conclusions. Hence the results will be a substantial difference in detecting. For students who are more at risk of abuse by their peers than by adults who do the wrong thing but mean well, that type of early intervention might be more than a semantic distinction. A better model would identify bullying behavior that RNNs would miss. Accurately identifying cyberbullying episodes in real time, CBNet may allow educators and administrators to take positive steps to counter and prevent cyberbullying conduct.

While these results suggest effectiveness, it is also necessary to consider the limitations of this work, which include potential variation in its effectiveness dependent on factors such as dataset size and diversity, annotation quality, and platform-specific facets. One direction for future research is to investigate how well CBNet’s effectiveness generalizes across different student populations and social media platforms. Another is the potential biases and ethical implications of automated Cyberbullying detection systems.

Regardless, it is safe to say that the findings from this work indicate that CBNet for the first time presents a strong starting point for whitebox-style architectures for Cyberbullying detection that use attentional mechanisms as whitebox semantic rich computing modules that can dynamically find and use the evidence they need in order to detect instances of cyberbullying in whitebox student social media groups more accurately overall. By making cyberbullying detection a more regular part of the fabric of edtech tools used to create student social media groups, this could become a tremendously beneficial development of technology that ultimately helps to aid in the creation of online environments for students that are safer and more inclusive and on the whole more conducive to the development of a culture of respect, empathy, and digital citizenship.

Conclusion

This research emphasizes the critical intersection between cyberbullying and psychology, highlighting the profound impact of online harassment on individuals’ mental health and well-being. By acknowledging the psychological consequences of cyberbullying and implementing targeted interventions informed by psychological principles, we can strive towards creating a more empathetic and supportive online community for all individuals. The development and evaluation of CBNet, a CNN-based model for cyberbullying detection within student social media groups, reveal promising results. Through extensive testing with diverse datasets from various social media platforms frequented by university students, CBNet consistently outperformed traditional machine learning methods and recurrent neural network (RNN)-based approaches. The readings suggest that its higher accuracy, precision, recall, F1-score, and area under the ROC curve ensure that it can accurately detect instances of cyberbullying. The Success of CBNet has various implications. As a crucial tool for educators, administrators, and community moderators, CBNet permits a more proactive approach to detection, allowing educators to respond to the red flags of cyberbullying as they occur to create safer and more secure online spaces for students. Institutions can promote digital citizenship, well-being, and indirectly reduce the negative effects of cyberbullying on student mental health and achievement by implementing CBNet’s sophisticated machine learning capabilities. Future research on the scalability and generalizability of CBNet to other student populations is required. Finally, further consideration of the ethical, as well as biased factors which underlie automated cyberbullying detection systems, is also necessary. Overall, with the continued development of cyberbullying detection technology, there is a possibility of building for the students a safe, respectful, and sensitive online environment.

Data availability

The data supporting the findings of this study are available from the corresponding author upon request.

References

Bauman, S. Cyberbullying and online harassment: the impact on emotional health and well-being in higher education, in H.Cowie & C.-A. Myers (Eds.), Cyberbullying and Online Harms, Routledge, 3–15. (2023). https://doi.org/10.4324/9781003258605-2

Çakar-Mengü, S. & Mengü, M. Cyberbullying as a manifestation of violence on social media, multidiscip perspect. Educ. Soc. Sci. 47, pp. 17–25 (2023).

Shoaib, M. et al. An advanced deep learning models-based plant disease detection: a review of recent research. Front. Plant Sci. 14(March), 1–22 (2023). https://doi.org/10.3389/fpls.2023.1158933

Jim, J. R. et al. Recent advancements and challenges of NLP-based sentiment analysis: a state-of-the-art review. Nat. Lang. Process. J. 7, p. 100059 (2024).

Amelia, S. & Wibowo, A. A. Exploring online-to-offline friendships: a netnographic study of interpersonal communication, trust, and privacy in online social networks. CHANNEL J. Komun. 11 (1), 1–10 (2023).

Chyne, R. C., Khongtim, J. & Wann, T. Evaluation of social media information among college students: an information literacy approach using CCOW. J. Acad. Librariansh. 49 (5), 102771 (2023).

Alghamdi, J., Luo, S. & Lin, Y. A comprehensive survey on machine learning approaches for fake news detection. Multimed Tools Appl. 17, pp. 51009–510679 (2023).

Coban, O., Ozel, S. A. & Inan, A. Detection and cross-domain evaluation of cyberbullying in Facebook activity contents for Turkish. ACM Trans. Asian Low-Resource Lang. Inf. Process. 22 (4), 1–32 (2023).

Sayfulloevna, S. S. Safe learning environment and personal development of students. Int. J. Form. Educ. 2 (3), 7–12 (2023).

Sundberg, L. & Holmström, J. Democratizing artificial intelligence: how no-code AI can leverage machine learning operations. Bus. Horiz. 66 (6), 777–788 (2023).

Oyinbo, A. G. et al. Prolonged social media use and its association with perceived stress in female college students. Am. J. Heal Educ. 55, pp. 189–198 (2024).

Gallifant, J. et al. A new tool for evaluating health equity in academic journals; the diversity factor. PLOS Glob Public. Heal. 3 (8), e0002252 (2023).

Awaah, F., Tetteh, A. & Addo, D. A. Effects of cyberbullying on the academic life of Ghanaian tertiary students. J. Aggress. Confl. Peace Res.16, pp. 221–235 (2024).

Hasan, M. T. et al. A review on deep-learning-based cyberbullying detection. Futur Internet. 15 (5), 179 (2023).

Dai, X. et al. A Review of Cyberbullying Detection Techniques and Exploration of Governance Strategies, in 2023 2nd International Conference on Machine Learning, Cloud Computing and Intelligent Mining (MLCCIM), pp. 314–321. (2023).

Sultan, D. et al. Cyberbullying-related hate speech detection using Shallow-to-deep learning. Comput. Mater. Contin, 75, 1, pp. 45–61 (2023).

Kowalski, R. M., Giumetti, G. W. & Feinn, R. S. Is cyberbullying an extension of traditional bullying or a unique phenomenon? A longitudinal investigation among college students. Int. J. Bullying Prev. 5 (3), 227–244 (2023).

Rajamani, S. K. & Iyer, R. S. Methods of complex network analysis to screen for cyberbullying, in Combatting Cyberbullying in Digital Media with Artificial Intelligence, Chapman and Hall/CRC, 218–242. (2023).

Biernesser, C. et al. Middle school students’ experiences with cyberbullying and perspectives toward prevention and bystander intervention in schools. J. Sch. Violence. 22 (3), 339–352 (2023).

Gohal, G. et al. Prevalence and related risks of cyberbullying and its effects on adolescent. BMC Psychiatry. 23 (1), 39 (2023).

Li, L., Jing, R., Jin, G. & Song, Y. Longitudinal associations between traditional and cyberbullying victimization and depressive symptoms among young chinese: a mediation analysis. Child. Abus Negl. 140, 106141 (2023).

Lee, J., Hong, J. S., Choi, M. & Lee, J. Testing pathways linking socioeconomic status, academic performance, and cyberbullying victimization to adolescent internalizing symptoms in South Korean middle and high schools. School Ment Health. 15 (1), 67–77 (2023).

Doty, J. L., Mehari, K. R., Sharma, D., Ma, X. & Sharma, N. Cross-Cultural measurement of cyberbullying perpetration and victimization in India and the US. J. Psychopathol. Behav. Assess. 45 (4), 1068–1080 (2023).

Feng, J., Chen, J., Jia, L. & Liu, G. Peer victimization and adolescent problematic social media use: the mediating role of psychological insecurity and the moderating role of family support. Addict. Behav. 144, 107721 (2023).

Azumah, S. W., Elsayed, N., ElSayed, Z. & Ozer, M. Cyberbullying in text content detection: an analytical review. Int. J. Comput. Appl. 45 (9), 579–586 (2023).

Vassiliadis, L. Educators’ Perspectives on Cyberbullying: A Qualitative Study (Alliant International University, 2024).

Chen, Q. et al. Effectiveness of digital health interventions in reducing bullying and cyberbullying: a meta-analysis. Trauma Violence Abus. 24(3), 1986–2002, (2023).

Neuhaeusler, N. S. Cyberbullying during COVID-19 pandemic: relation to perceived social isolation among college and university students. Int. J. Cybersecur. Intell. Cybercrime. 7 (1), 3 (2024).

Zhong, Y., Guo, K. & Chu, S. K. W. Affordances and constraints of integrating esports into higher education from the perspectives of students and teachers: an ecological systems approach. Educ. Inf. Technol. 29, pp. 16777–16811 (2024).

Akrami, K. et al. Investigating the adverse effects of social media and cybercrime in higher education: a case study of an online university. Stud. Media J. Commun. 1 (1), 22–33 (2024).

Nedra, A., Shoaib, M. & Gattoufi, S. Detection and classification of the breast abnormalities in Digital Mammograms via Linear Support Vector Machine, Middle East Conf. Biomed. Eng. MECBME, vol. 2018-March, pp. 141–146, (2018). https://doi.org/10.1109/MECBME.2018.8402422

Khan, M., Afaq, M., Islam, I. U., Iqbal, J. & Shoaib, M. Energy loss prediction in nonoriented materials using machine learning techniques: A novel approach. Trans. Emerg. Telecommun Technol. . https://doi.org/10.1002/ett.3797 (2019).

Hussain, D. M. & Surendran, D. The efficient fast-response content-based image retrieval using spark and mapreduce model framework. J. Ambient Intell. Humaniz. Comput. 12 (3), 4049–4056. https://doi.org/10.1007/s12652-020-01775-9 (2021).

Ejaz, N., Razi, F. & Choudhury, S. Towards comprehensive cyberbullying detection: a dataset incorporating aggressive texts, repetition, peerness, and intent to harm. Comput. Hum. Behav. 153, 108123. https://doi.org/10.1016/j.chb.2023.108123 (2024).

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through the Large Research Project under grant number RGP2/658/46. The authors are thankful to the Deanship of Graduate Studies and Scientific Research at University of Bisha for supporting this work through the Fast-Track Research Support Program.

Funding

This study is supported by King Khalid University and University of Bisha.

Author information

Authors and Affiliations

Contributions

I.A.A., M.S. , M.A. ,M.A wrote the paper, performed analysis, and reviewed the manuscript. I.A.A. reviewed and checked the manuscript and supervised the work. All authors have contributed to this manuscript and approved this submission.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Abbasi, I.A., Shoaib, M., Alshehri, M. et al. Utilizing CBNet to effectively address and combat cyberbullying among university students on social media platforms. Sci Rep 15, 25582 (2025). https://doi.org/10.1038/s41598-025-09091-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-09091-y