Abstract

Watershed macrotrash contamination is difficult to measure and requires tedious, labor-intensive processes. This work proposes an automated approach to waste counting that uses computer vision, deep learning, and object tracking algorithms to acquire accurate counts of plastic bottles as they advect down rivers and streams. Using a combination of several publicly available labeled trash and plastic bottle image datasets, the YOLOv8 object detection model was trained to achieve high performance. This was paired with the Norfair object tracking library and a novel post-processing algorithm to filter out false positives. The model performed accurately when the camera was well positioned, with just one false positive across all tests and recalls of at least 0.947 in every test except the two sub-optimal camera-angle trials.

Introduction

Geoscience researchers are leveraging machine learning (ML) and artificial intelligence (AI) algorithms to address a wide range of challenges1,2,3,4,5,6. In water resources, AI and ML techniques have opened new research pathways, including water management models based on Markov decision processes, water depth retrieval with convolutional neural networks, and infiltration rate modeling with the Firefly Algorithm7,8,9. One popular approach, computer vision, extracts information from images or video and is widely applied in the medical sciences, autonomous vehicles, and manufacturing. Computer vision offers advantages such as low-cost video recording sensors, real-time analysis, and data-rich inputs.

Computer vision has also been applied to trash detection and classification. For example, a deep convolutional neural network was used to detect and classify roadway waste in images recorded by a vehicle-mounted camera; in this, one of the first trash studies, the researchers collected and annotated nearly 20,000 images for their application10. To address the limited availability of training data, a trash debris dataset was developed with over 48,000 annotations11. Other trash datasets include Trash Annotations in Context (1,500 images), Plastic Bottles in the Wild (8,000 images), the Unmanned Aerial Vehicle - Bottle Dataset (25,000 images), and Images of Waste (9,000 images)12,13,14,15.

Previous trash-detection software has focused on general solutions capable of detecting multiple trash types16. Some of these tools have been applied in waste sorting systems, including recycling stations17,18,19. However, they are not easily adapted to outdoor environments because they rely on cameras close to the objects, controlled conditions (e.g., lighting and background), and a limited set of test items. Several general-purpose tools have been developed for watershed trash detection20,21,22,23,24,25,26,27. Yet these tools face key challenges, such as the absence of counting algorithms, lower accuracy for distant objects, and high false-positive rates.

This project addresses these limitations by developing a specialized tool that counts only plastic bottles in aquatic ecosystems as they move across the video frame. The program, called botell.ai, implements an object detection model that finds the presence and location of bottles within each frame28. botell.ai then matches detections across frames and tracks each item's movement. In a final post-processing stage, the tracked objects are analyzed for motion, and stationary items are filtered out of the counts. Additionally, this project follows Integrated, Coordinated, Open, Networked (ICON) best practices, with publicly available source code, input video files, and output result videos29.

Quantifying macroplastic debris using software like botell.ai is essential for addressing plastic pollution in aquatic ecosystems. Water bottles are a common source of plastic debris, frequently found as pollutants worldwide30,31. Over time, these bottles degrade, contributing to the growing presence of microplastics and nanoplastics in both freshwater and marine environments32,33,34,35.

Methods

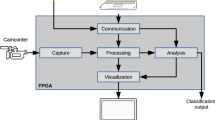

The bottle detection application combines computer vision with object tracking to count plastic bottles floating downstream. The process begins with training YOLOv8, an object detection model. Field videos are then processed by the trained model, which identifies and logs each bottle's location and unique ID. Next, Norfair, a tracking library, links the detections across frames and calculates velocity and overall movement. Finally, a post-processing script refines the output. The pseudo-code for this model is shown in Fig. 1.

Object Detection

YOLO (You Only Look Once) is a popular and effective real-time object detection and classification model36. The algorithm combines object localization and class prediction into a single network that predicts both simultaneously. The image is divided into a grid of cells, and for each cell the model predicts class probabilities together with bounding boxes and their confidence scores. Framing detection as a single regression problem, rather than a multi-step classification pipeline, simplifies the task. The YOLO framework is fast, accessible, and runs on diverse hardware platforms. YOLO can process video faster than real-time playback, especially on modern graphics processing units (GPUs).
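The grid idea can be illustrated with a short sketch of how the original YOLO decides which cell is responsible for an object: the cell containing the box center. This is a conceptual illustration only; the 7×7 grid follows the original YOLO paper, while YOLOv8 predicts at several scales without anchor boxes, so it does not assign objects exactly this way. The function name `responsible_cell` is hypothetical.

```python
def responsible_cell(box, img_w, img_h, grid=7):
    """Return (row, col, x_offset, y_offset) for the grid cell holding the box center.

    box is (x1, y1, x2, y2) in pixels. The offsets give the center's position
    inside that cell as fractions of the cell size, as in the original YOLO.
    """
    x1, y1, x2, y2 = box
    # Box center normalized to [0, 1] by the image size.
    cx = (x1 + x2) / 2 / img_w
    cy = (y1 + y2) / 2 / img_h
    # Clamp so a center lying exactly on the right or bottom edge stays in the grid.
    col = min(int(cx * grid), grid - 1)
    row = min(int(cy * grid), grid - 1)
    return row, col, cx * grid - col, cy * grid - row


print(responsible_cell((300, 200, 340, 280), 640, 640))  # (2, 3, 0.5, 0.625)
```

Because one forward pass predicts boxes and classes for every cell at once, no separate region-proposal step is needed, which is where YOLO's speed comes from.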

Since its inception, YOLO has undergone many iterations, both as a research tool37 and in commercial applications38. This project uses YOLOv8, a state-of-the-art model developed by the Ultralytics team39. While retaining the speed and simplicity of the YOLO architecture, YOLOv8 is a collection of models of increasing complexity (from simplest to most complex: nano, small, medium, large, and extra large), with a host of built-in preprocessing and configuration options. The included image augmentation library enlarges and diversifies the training dataset by rotating, recoloring, and otherwise transforming images.

In botell.ai, YOLOv8 first identifies the plastic bottles floating downstream and assigns each a label ID. It then calculates a confidence score for each item, ranging from 0 (lowest likelihood) to 1 (highest likelihood), and creates a bounding box with its pixel location. This information is then passed to the next step, Norfair, for object tracking and counting.
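A minimal sketch of the per-frame detection record described above, assuming each raw detection arrives as an `(x1, y1, x2, y2, confidence)` tuple in pixels. With the Ultralytics package, such values are exposed on each result's `boxes` attribute (`xyxy` and `conf`); the `Detection` class, the `confident_detections` helper, and the 0.5 cutoff are hypothetical illustrations, not botell.ai's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One bounding box from the detector, in pixel coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float
    confidence: float  # 0 = least likely, 1 = most likely to be a bottle

    @property
    def center(self) -> tuple[float, float]:
        # The box center is the point a tracker would follow between frames.
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


def confident_detections(boxes, min_confidence=0.5):
    """Wrap raw (x1, y1, x2, y2, conf) tuples, dropping those below the (hypothetical) cutoff."""
    return [Detection(*b) for b in boxes if b[4] >= min_confidence]


dets = confident_detections([(0, 0, 10, 20, 0.9), (5, 5, 6, 6, 0.2)])
print(dets[0].center)  # (5.0, 10.0)
```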

Object Tracking

Norfair40 is a customizable, lightweight Python library for real-time multi-object tracking. Norfair is used in conjunction with compatible detection software, such as YOLOv8, to produce tracks from the supplied detections. Norfair uses a Kalman filter for motion estimation, similar to SORT41 and its successor DeepSORT, and includes an extensive customization and visualization toolbox.
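The Kalman idea can be shown with a minimal constant-velocity filter for a single image axis. This is an illustration only: Norfair's own filter tracks every coordinate of each detection and is configured through its own API, so it may differ. The class name, the noise values `q = 0.01` and `r = 1.0`, and the simulated drift of 2 pixels per frame are all assumptions.

```python
class ConstantVelocityKalman1D:
    """Kalman filter for one image axis with state [position, velocity]."""

    def __init__(self, q=0.01, r=1.0, initial_var=100.0):
        self.x = [0.0, 0.0]                                   # [position, velocity]
        self.P = [[initial_var, 0.0], [0.0, initial_var]]     # state covariance
        self.q, self.r = q, r                                 # process / measurement noise (assumed)

    def predict(self, dt=1.0):
        p, v = self.x
        self.x = [p + v * dt, v]                              # constant-velocity motion model
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                  # innovation variance (only position is measured)
        k0, k1 = a / s, c / s           # Kalman gain
        y = z - self.x[0]               # innovation: measured minus predicted position
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P, with H = [1, 0]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


kf = ConstantVelocityKalman1D()
for t in range(100):
    kf.predict()
    kf.update(2.0 * t)  # simulated bottle drifting 2 px per frame along this axis
print(f"position={kf.x[0]:.1f}, velocity={kf.x[1]:.2f}")
```

The estimated velocity is what lets a tracker predict where a bottle should appear in the next frame, so brief missed detections do not immediately break its track.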

The bottle tracking algorithm was designed for a top-down view, such as from a camera mounted on a bridge or walkway over the waterway. This view increases the likelihood that bottles are tracked continuously and reduces occlusions from waves, floating debris, or other obstructions. The code is designed as a flexible tool and can also be used with cameras mounted along the stream bank or on a drone, albeit with reduced robustness.

Detection Filtering

Detection filtering is performed in two stages. The first stage, shown in Fig. 2, applies OpenCV's Gaussian mixture model for background removal42. The filter creates a binary mask of suspected moving objects and removes small noisy elements (e.g., leaves or small waves) with a morphological closing operation. The mask is then smoothed into a continuous map of suspected movement and normalized to range from -0.33 (no movement) to 0 (expected motion). Finally, these values are added to the detections' confidence scores, so detections of stationary objects are penalized.

The lower bound of -0.33 was selected through testing to sufficiently penalize non-moving detections. Users can tune this value and the minimum confidence threshold for tracking to suit their environment. In our testing, the background removal filter, combined with appropriate threshold tuning, consistently filtered out non-moving objects while preserving valid detections. Detection filtering is applied only when the camera remains stationary; with a moving camera, as in drone applications, background subtraction cannot separate moving objects from the background.
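The confidence adjustment in the first filtering stage can be sketched as follows, assuming the smoothed mask holds values from 0 (background) to 255 (moving foreground), that the penalty is interpolated linearly between -0.33 and 0, and that the mask is sampled at each detection's center. In an OpenCV pipeline the mask might come from `cv2.createBackgroundSubtractorMOG2().apply(frame)`, followed by `cv2.morphologyEx(mask, cv2.MORPH_CLOSE, kernel)` and `cv2.GaussianBlur`, but the authors' exact parameters are not given here. The function names and the 0.5 threshold are hypothetical.

```python
def motion_penalty(motion_value, floor=-0.33, mask_max=255):
    """Map a smoothed mask value (0 = still, mask_max = moving) linearly onto [floor, 0]."""
    fraction = min(max(motion_value / mask_max, 0.0), 1.0)
    return floor * (1.0 - fraction)


def filter_by_motion(detections, motion_mask, min_confidence=0.5, floor=-0.33):
    """Penalize stationary detections, then apply the (hypothetical) tracking threshold.

    detections: list of (cx, cy, confidence) with pixel centers.
    motion_mask: 2-D list indexed [row][col] holding the blurred foreground mask.
    """
    kept = []
    for cx, cy, conf in detections:
        # Sample the motion map at the detection center (an assumption of this sketch).
        adjusted = conf + motion_penalty(motion_mask[int(cy)][int(cx)], floor)
        if adjusted >= min_confidence:
            kept.append((cx, cy, adjusted))
    return kept


mask = [[0, 255], [0, 255]]  # left column still, right column moving
print(filter_by_motion([(0, 0, 0.7), (1, 0, 0.7)], mask))  # [(1, 0, 0.7)]
```

A stationary detection loses up to 0.33 of confidence, so only strong detections of still objects survive the threshold, which matches the tuning trade-off described above.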

The second stage, post-processing, analyzes how long each object is tracked and how far it moves across the video frame. Most false positives either have an extremely short duration (e.g., one or two frames) or do not travel across the majority of the frame. These false positives are most likely noise (e.g., image disturbances from reflections or waves) rather than bottle-like shapes: they have little in common between frames, lack a consistent direction, and travel short distances. This additional filter increases the overall robustness of the model. Both the minimum tracking time (number of frames) and the minimum distance traveled (across the frame) are user-controlled parameters.
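A minimal sketch of the duration and distance filter, assuming each track is stored as a list of per-frame `(x, y)` centers. The thresholds of 10 frames and 200 pixels are hypothetical, and measuring net displacement between the first and last positions (rather than total path length) is an assumption chosen so that back-and-forth jitter from glare or waves does not count as travel.

```python
import math


def keep_track(positions, min_frames=10, min_distance=200.0):
    """Return True if a track looks like a bottle advecting downstream.

    positions: list of (x, y) centers, one per frame the object was tracked.
    """
    if len(positions) < min_frames:
        return False  # too short-lived: likely a glint or wave
    (x0, y0), (x1, y1) = positions[0], positions[-1]
    # Net displacement, so jitter in place does not accumulate as distance.
    return math.hypot(x1 - x0, y1 - y0) >= min_distance


def filter_tracks(tracks, **thresholds):
    """tracks: dict mapping track ID -> list of (x, y) centers."""
    return {tid: pts for tid, pts in tracks.items() if keep_track(pts, **thresholds)}


tracks = {
    1: [(0, 30 * k) for k in range(20)],   # steady drift down the frame: kept
    2: [(5, 5), (6, 6)],                   # two-frame blip: dropped
    3: [(100, 100), (110, 100)] * 10,      # long-lived but stationary jitter: dropped
}
print(sorted(filter_tracks(tracks)))  # [1]
```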

Training Data

The botell.ai model was trained, tested, and validated with 13,480 images from three publicly accessible datasets. The model was trained on 11,964 images (88.8%) and tested with 492 images (3.6%). It was then validated with 1,024 images (7.6%) to calculate overall accuracy and skill. The datasets were selected for their size and consistent label quality and, in combination, include natural, staged, and synthetic data. This diversity helps the model generalize across camera types, environmental conditions, and visibility ranges. The three datasets are the following:

racnhua43 is a publicly available, Roboflow-hosted dataset of 9,889 plastic bottle images. It came pre-split into training, testing, and validation sets containing 8,415, 1,011, and 505 images, respectively. Several images were converted from segmentation annotations to bounding boxes.

The Unmanned Aerial Vehicle - Bottle Dataset (UAV-BD) is a large collection of more than 25,000 images containing over 34,000 plastic bottle instances14. Despite the high quality and quantity of these images, they have very low variability in background and lighting. For this reason, UAV-BD was reduced to approximately one third of its size by random selection, which prevents overfitting the detection model to a single type of image.

Trash Annotations in Context12 (TACO) is a set of 6,004 images covering 18 categories of trash and litter. Because the plastic bottle category contained many mislabeled annotations, we excluded all images containing plastic bottles, removed all remaining annotations, and used this dataset solely as a source of background images to reduce false positives.
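The dataset preparation steps above can be sketched with the standard library alone. This is a minimal sketch under several assumptions: segmentation labels follow the Ultralytics YOLO text format (`class x1 y1 ... xn yn`, normalized), TACO annotations are read as a COCO-format dictionary, and the TACO bottle category names (e.g., "Clear plastic bottle") are illustrative. The kept TACO images would then be paired with empty label files so they act as pure background examples. The seeds, file names, and helper names are hypothetical, and because racnhua came pre-split, the generic random split shown here need not match the authors' actual assignment.

```python
import random


def polygon_to_yolo_box(line):
    """Convert a YOLO segmentation label ("cls x1 y1 ... xn yn") to a box ("cls cx cy w h").

    All coordinates are normalized to [0, 1]; the box is the polygon's extent.
    """
    cls, *coords = line.split()
    xs = [float(v) for v in coords[0::2]]
    ys = [float(v) for v in coords[1::2]]
    return (f"{cls} {(min(xs) + max(xs)) / 2:.6f} {(min(ys) + max(ys)) / 2:.6f} "
            f"{max(xs) - min(xs):.6f} {max(ys) - min(ys):.6f}")


def subsample(items, fraction=1 / 3, seed=0):
    """Randomly keep about `fraction` of the items, without replacement."""
    items = list(items)
    return random.Random(seed).sample(items, round(len(items) * fraction))


def background_images(coco, excluded_names):
    """File names of images in a COCO-format dict with no annotation in excluded categories."""
    excluded = {c["id"] for c in coco["categories"] if c["name"] in excluded_names}
    tainted = {a["image_id"] for a in coco["annotations"] if a["category_id"] in excluded}
    return [im["file_name"] for im in coco["images"] if im["id"] not in tainted]


def split_dataset(items, n_train, n_test, n_val, seed=0):
    """Shuffle reproducibly and cut into disjoint train/test/validation lists."""
    if n_train + n_test + n_val != len(items):
        raise ValueError("split sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return (shuffled[:n_train], shuffled[n_train:n_train + n_test],
            shuffled[n_train + n_test:])


print(polygon_to_yolo_box("0 0.2 0.3 0.4 0.3 0.4 0.7 0.2 0.7"))
# 0 0.300000 0.500000 0.200000 0.400000

images = [f"img_{i:05d}.jpg" for i in range(13_480)]  # placeholder file names
for name, part in zip(("train", "test", "val"), split_dataset(images, 11_964, 492, 1_024)):
    print(f"{name}: {len(part)} images ({100 * len(part) / len(images):.1f}%)")
# train: 11964 images (88.8%)
# test: 492 images (3.6%)
# val: 1024 images (7.6%)
```

Fixing the random seed makes the one-third UAV-BD reduction and the split reproducible, which matters when the same training set must be regenerated later.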

Training

The YOLOv8 object detection model comes in several configurations suited to different task and efficiency requirements, ranging from YOLOv8n (nano) to YOLOv8x (extra large). For initial testing and model development, this project used YOLOv8n (nano) and YOLOv8s (small) to reduce training time. The final model used the medium size, YOLOv8m, for its balance of training time, inference speed, and model performance.

YOLOv8 defaults to an input image size of 640x640, meaning all images are resized to this resolution before any further processing. Custom resolutions are possible but were not needed given the high precision and recall achieved. Model training was performed on an NVIDIA RTX A4000 GPU with an AMD Ryzen Threadripper PRO 5995WX (64-core) CPU and 32 GB of RAM.
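The effect of the 640-pixel input size on field video can be worked out with a letterbox-style resize: scale the longer side to 640 and pad the shorter side. This sketch assumes square padding to 640x640, as used in training; at inference, Ultralytics may pad to a smaller rectangle, but the scale factor is the same. The function name is hypothetical, and the 30-pixel bottle is an illustrative size.

```python
def letterbox_geometry(width, height, target=640):
    """Scale factor, resized size, and per-side padding for a square letterbox."""
    scale = target / max(width, height)          # shrink the longer side to `target`
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x, pad_y = (target - new_w) / 2, (target - new_h) / 2
    return scale, (new_w, new_h), (pad_x, pad_y)


scale, size, pad = letterbox_geometry(1920, 1080)  # a 1080p frame
print(size, pad)            # (640, 360) (0.0, 140.0)
print(round(30 * scale))    # a 30-px bottle shrinks to about 10 px
```

This threefold reduction is one reason distant bottles, which already span few pixels, become hard to detect, and why cropping or moving the camera closer helps.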

The model was trained in single-class mode with a batch size of 32 and a maximum of 500 epochs. An epoch is one complete pass through the training dataset, while the batch size is the number of images processed before the model's gradients are computed and its parameters updated. Images were combined in groups of four into 2x2 mosaics. YOLOv8 applied four further augmentations to increase the variability of the training set: copy-paste (a random chance for an object instance to be pasted onto a different background), scaling (the mosaic is scaled by a random factor between 0.5 and 1.5), rotation (the mosaic is rotated by a random angle between 0 and 180 degrees), and flipping (the mosaic has a random chance of being flipped horizontally).
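The configuration above can be collected into keyword arguments for Ultralytics training. The keys are standard Ultralytics train settings, but this is a sketch rather than the authors' script: the dataset file name `bottles.yaml` is hypothetical, the copy-paste and flip probabilities are assumed because the text gives only "a random chance", and Ultralytics samples rotation uniformly in ±`degrees`, which may not match the 0 to 180 degree range described.

```python
def training_arguments(data_yaml="bottles.yaml"):
    """Keyword arguments mirroring the training setup described above."""
    return {
        "data": data_yaml,     # hypothetical dataset config file
        "imgsz": 640,          # default input size
        "epochs": 500,         # upper limit on training epochs
        "batch": 32,
        "patience": 50,        # early-stopping window (see Validation)
        "single_cls": True,    # treat every label as one "bottle" class
        "mosaic": 1.0,         # always build 2x2 mosaics
        "scale": 0.5,          # random scale gain in [0.5, 1.5]
        "degrees": 180.0,      # random rotation; Ultralytics samples +/- this value
        "fliplr": 0.5,         # probability of a horizontal flip (assumed)
        "copy_paste": 0.5,     # copy-paste probability (assumed)
    }


print(training_arguments()["batch"])  # 32
```

With the `ultralytics` package installed, these would be passed as `YOLO("yolov8m.pt").train(**training_arguments())` after `from ultralytics import YOLO`. Keeping the settings in a plain dictionary makes them easy to log alongside the results.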

Validation

After each epoch, validation metrics are computed and compared with those of previous epochs. To prevent overfitting, YOLOv8 uses patience, a parameter that stops training if performance on the validation set has not improved for a set number of epochs, and keeps the best-performing weights. The botell.ai model was set to a patience value of 50. Training stopped at epoch 338, 50 epochs after the best validation performance at epoch 288. The final precision-recall curve (epoch 288) is shown in Fig. 3, and validation testing with confidence scores is shown in Fig. 4.
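The patience rule can be re-created in a few lines to show why a best epoch of 288 with patience 50 ends training at epoch 338. Ultralytics implements this internally, and its epoch indexing may differ in detail; the `early_stop` function and the toy fitness curve are hypothetical.

```python
def early_stop(fitness_per_epoch, patience=50):
    """Return (stop_epoch, best_epoch), with epochs numbered from 1.

    Training stops once `patience` epochs pass without a strictly better
    validation fitness; the weights from best_epoch are the ones kept.
    """
    best, best_epoch = float("-inf"), 0
    for epoch, fitness in enumerate(fitness_per_epoch, start=1):
        if fitness > best:
            best, best_epoch = fitness, epoch
        if epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(fitness_per_epoch), best_epoch


# Toy curve that peaks at epoch 288 and never improves afterwards.
curve = [min(e, 288) - (e > 288) for e in range(1, 501)]
print(early_stop(curve))  # (338, 288)
```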

Performance

The trained YOLOv8 model performs reliably across a wide range of conditions, provided that the bottle's contour is visible. This holds true regardless of camera angle or water clarity, as long as there is a clear line of sight to the object's edges. While lighting conditions do affect performance, the impact largely depends on the camera's sensor quality. In low-light environments, limited light reaching the sensor can constrain detection accuracy. The model therefore performs best in well-lit settings.

Results and Discussion

botell.ai was tested under controlled conditions in which researchers placed bottles in the environment. The first four trials were conducted in Sligo Creek, a small subwatershed in Maryland, using a GoPro HERO12 Black camera (1080p at 60 frames per second). Tests were carried out on a pedestrian bridge and atop a weir (a small dam), with each setup filmed from both an optimal (top-down) and a sub-optimal (30-degree) angle. This dual-angle approach was used to assess the impact of camera positioning and evaluate the robustness of the algorithm.

The fifth test was also filmed in Sligo Creek but used a cellphone camera mounted at the side of the stream. The last test was conducted with a drone in Yucatán, Mexico. The results of the six tests are shown in Table 1. All bottles released into the environment were collected by researchers at the end of the experiment.

Pedestrian Bridge

The first location used two cameras placed on a pedestrian bridge, about eight feet above the water surface. The optimal camera pointed directly down at the water, while the sub-optimal camera had a shallower, more oblique view. After positioning the cameras, the researchers released 38 bottles into the stream. The videos were then cropped and zoomed to a 1:1 aspect ratio.
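Cropping to a 1:1 aspect ratio amounts to choosing a square window inside each frame. This minimal sketch assumes 1920×1080 frames and a centered crop; the authors also zoomed, so their window may have been smaller or off-center. The function name is hypothetical; with a NumPy image array, the crop would be `frame[y:y + side, x:x + side]`.

```python
def center_square_crop(width, height):
    """Return (x, y, side) of the largest centered square in a width x height frame."""
    side = min(width, height)
    return (width - side) // 2, (height - side) // 2, side


print(center_square_crop(1920, 1080))  # (420, 0, 1080)
```

A square input also avoids the letterbox padding that a 16:9 frame would otherwise receive at a 640x640 model input, so more of the model's pixels cover the water.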

The botell.ai algorithm counted 36 of the 38 bottles in the optimal-angle test (recall of 0.95); example frames are shown in Fig. 5. One of the false negatives (bottom image of Fig. 5) occurred because two bottles joined and floated downstream as a single item. The sub-optimal angle counted only 12 of the 38 bottles advecting downstream (recall of 0.32). The sub-optimal video had many tracking issues due to the tortuous water path and tracking dropouts, as seen in Fig. 6. In that frame, the model detected a large boulder as a potential bottle, but it was filtered out because it did not move and was not tracked beyond the threshold period.

Weir

The second test location was filmed atop a weir discharge, where water flowed through a narrow concrete opening. The cameras were stationed less than two feet from the water's surface. No video cropping or zooming was necessary in this test. The test counted 51 of the 52 bottles at the optimal angle (recall of 0.98), as shown in Fig. 7. However, the sub-optimal angle captured only 37 of the 52 bottles (recall of 0.71) because the camera was too close to the water surface, as shown in Fig. 8. The weir tests outperformed the pedestrian bridge tests because the camera was closer to the water's surface, so bottles occupied a larger portion of the frame.

Sideview

The fifth test was filmed from the shore using a cellphone camera. The video included 7 bottles, and botell.ai successfully counted all 7. With no false positives or false negatives, recall and precision were both 1.0. An image from this test video is shown in Fig. 9. The bottles were relatively close to the camera and easily detectable.

Drone

The last test used drone footage recorded in a controlled setting in Yucatán, Mexico. Plastic bottles were introduced into a small swimming structure while a drone flying overhead captured continuous video. The model captured all of the bottles but was affected by sun reflections: of the 6 bottles in the video, the algorithm counted all of them but double-counted one as it passed the sun spot on the water. An image from the test video is shown in Fig. 10. While the recall was 1.0, the precision was limited to 0.86 (6 of 7 counts).

The drone footage was not processed with the detection filtering described in the Detection Filtering section, because the bottles stayed in a fixed location relative to their background, so background subtraction could not distinguish them as moving objects.
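The recall and precision values in this section follow from the reported counts. The sketch below transcribes those counts, assuming the drone double count is the only false positive, in line with the single false positive reported across the six tests; the `TESTS` table and helper name are hypothetical.

```python
# (test, bottles released, bottles counted correctly, false positives),
# transcribed from the counts reported in this section.
TESTS = [
    ("Bridge, optimal", 38, 36, 0),
    ("Bridge, sub-optimal", 38, 12, 0),
    ("Weir, optimal", 52, 51, 0),
    ("Weir, sub-optimal", 52, 37, 0),
    ("Side view", 7, 7, 0),
    ("Drone", 6, 6, 1),
]


def recall_precision(released, true_positives, false_positives):
    recall = true_positives / released                                # share of bottles counted
    precision = true_positives / (true_positives + false_positives)  # share of counts that were bottles
    return recall, precision


for name, released, tp, fp in TESTS:
    r, p = recall_precision(released, tp, fp)
    print(f"{name:20s} recall={r:.2f} precision={p:.2f}")
```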

Computational Performance

botell.ai's computational performance varies significantly with hardware, with GPU processing substantially faster than CPU processing. On a high-performance desktop with an NVIDIA A4000 GPU, the system processes 30 to 60 frames per second, depending on video resolution. In contrast, on a CPU, specifically a notebook with an Intel i7-1260P (12 cores), processing drops to approximately 3 frames per second. Although performance can be improved by reducing the input frame rate, doing so may compromise accuracy, especially when tracking fast-moving objects.
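These throughput figures translate directly into wall-clock time. The sketch below assumes a 10-minute, 60 fps recording (matching the GoPro settings used in the field tests) and treats 45 frames per second as a representative GPU rate within the reported 30 to 60 range; the function name and the frame-skip factor of 4 are hypothetical.

```python
def processing_minutes(video_minutes, video_fps, processing_fps, frame_skip=1):
    """Estimated wall-clock minutes to process a video.

    frame_skip = n means only every n-th frame is analyzed.
    """
    frames = video_minutes * 60 * video_fps / frame_skip
    return frames / processing_fps / 60


print(processing_minutes(10, 60, 3))                # CPU: 200.0 minutes
print(processing_minutes(10, 60, 3, frame_skip=4))  # CPU, every 4th frame: 50.0 minutes
print(processing_minutes(10, 60, 45))               # GPU at ~45 fps: about 13.3 minutes
```

Skipping frames cuts time proportionally, but it also enlarges each bottle's jump between analyzed frames, which is why fast-moving objects become harder to track.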

Model Use

botell.ai is an open-source package written in Python using the Anaconda distribution platform and is available on GitHub28. The model runs from the command line with several options, including frame skipping, the minimum time an object is on frame, the minimum distance traveled, a video display for debugging, and the detection threshold. After downloading and installing botell.ai, users can run the following command for help: python bottledetector.py --help.

The application requires only a video path as an input argument and outputs a text file with all model inputs as a header, followed by a list of detections that includes each detection's ID number, confidence, start and end times, and total pixels traveled. A simple run uses the command python bottledetector.py Example.mp4, where Example.mp4 is the name of the sample video.
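The kinds of options described above map naturally onto Python's standard `argparse` module. The parser below is a hypothetical sketch: its option names and defaults are illustrative and are not botell.ai's actual flags, which are listed by `python bottledetector.py --help`.

```python
import argparse


def build_parser():
    """Illustrative CLI; option names and defaults are hypothetical, not botell.ai's."""
    parser = argparse.ArgumentParser(description="Count bottles in a video.")
    parser.add_argument("video", help="path to the input video file")
    parser.add_argument("--frame-skip", type=int, default=1,
                        help="analyze every n-th frame")
    parser.add_argument("--min-frames", type=int, default=10,
                        help="minimum number of frames an object must be tracked")
    parser.add_argument("--min-distance", type=float, default=200.0,
                        help="minimum pixels an object must travel")
    parser.add_argument("--threshold", type=float, default=0.5,
                        help="minimum detection confidence")
    parser.add_argument("--display", action="store_true",
                        help="show annotated frames for debugging")
    return parser


args = build_parser().parse_args(["Example.mp4", "--frame-skip", "2"])
print(args.video, args.frame_skip, args.display)  # Example.mp4 2 False
```

Making the video path the only required argument, with every tuning parameter defaulted, matches the minimal invocation shown above.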

Each video requires manual parameter tuning based on the physical environment (e.g., water velocity, camera distance, and lighting). For long videos, a shorter test segment can be used to determine optimal settings, which can then be applied to the rest of the footage. Reducing the frame rate and resolution can also speed up the model.

Conclusion

botell.ai demonstrated excellent performance, accurately detecting, tracking, and counting nearly every bottle in the optimal-angle tests (recalls of 0.95 and 0.98). The sub-optimal angle tests performed poorly (recalls of 0.32 and 0.71), highlighting the importance of video quality (e.g., camera angle, resolution, frame rate, and distance from the object). The drone and side-view tests also showed promising results. Owing to the filtering algorithm, only one false positive occurred across the six tests.

Overall, the detection model does not work well on videos with excessive glare, large waves, or distant camera placement. Additional testing was conducted with a TLC2020 time-lapse camera; however, its low frame rate prevented continuous tracking over the video.

The botell.ai program is a flexible bottle detection model that can be applied across a wide range of environments. Tracking bottles instead of all forms of trash increased the model’s robustness and accuracy, and nearly eliminated false positives. The next steps for this tracking model are to deploy cameras throughout the watershed and track the movement of bottles over storms and extended time periods.

Data availability

The datasets supporting the conclusions of this article are available in CUAHSI's HydroShare repository44 at https://www.hydroshare.org/resource/4c6424e5569a4ff89841a7b5b47d31bd/. The software, botell.ai v24.10, is publicly available on GitHub28 and can be accessed via doi: 10.5281/zenodo.13951962. The software is platform independent, is written in Python, and is released under the Creative Commons Attribution 4.0 license. The training data, software, and testing videos are all open source and publicly available. The three training datasets are published and accessible from the references12,14,43.

References

Karpatne, A., Ebert-Uphoff, I., Ravela, S., Babaie, H. A. & Kumar, V. Machine learning for the geosciences: Challenges and opportunities. IEEE Trans. Knowledge Data Eng. 31, 1544–1554 (2018).

Bergen, K. J., Johnson, P. A., de Hoop, M. V. & Beroza, G. C. Machine learning for data-driven discovery in solid earth geoscience. Science 363, eaau0323 (2019).

Dramsch, J. S. 70 years of machine learning in geoscience in review. Adv. Geophys. 61, 1–55 (2020).

Lary, D. J., Alavi, A. H., Gandomi, A. H. & Walker, A. L. Machine learning in geosciences and remote sensing. Geosci. Front. 7, 3–10 (2016).

Sun, Z. et al. A review of earth artificial intelligence. Comput. Geosci. 159, 105034 (2022).

Mamalakis, A., Barnes, E. A. & Ebert-Uphoff, I. Investigating the fidelity of explainable artificial intelligence methods for applications of convolutional neural networks in geoscience. Artific. Intell. Earth Syst. 1, e220012 (2022).

Xiang, X., Li, Q., Khan, S. & Khalaf, O. I. Urban water resource management for sustainable environment planning using artificial intelligence techniques. Environ. Impact Assess Rev. 86, 106515 (2021).

Ai, B. et al. Convolutional neural network to retrieve water depth in marine shallow water area from remote sensing images. IEEE J. Selected Topics in Appl. Earth Observat. Remote Sens. 13, 2888–2898 (2020).

Sayari, S., Mahdavi-Meymand, A. & Zounemat-Kermani, M. Irrigation water infiltration modeling using machine learning. Comput. Electron. Agri. 180, 105921 (2021).

Rad, M. S. et al. A computer vision system to localize and classify wastes on the streets. In Computer Vision Systems (eds Liu, M. et al.) 195–204 (Springer, Cham, 2017).

Tharani, M., Amin, A. W., Rasool, F., Maaz, M. & Murtaza Taj, A. M. Trash detection on water channels. In ICONIP (2021).

Proença, P. F. & Simões, P. TACO: Trash annotations in context for litter detection. arXiv preprint arXiv:2003.06975 (2020).

Sah, S. Plastic bottles in the wild image dataset. https://www.kaggle.com/datasets/siddharthkumarsah/plastic-bottles-image-dataset/data.

Wang, J. et al. Bottle detection in the wild using low-altitude unmanned aerial vehicles. In 2018 21st International Conference on Information Fusion (FUSION), 439–444, https://doi.org/10.23919/ICIF.2018.8455565 (2018).

Bilrein. Images of waste dataset. https://universe.roboflow.com/bilrein/images_of_waste (2024). Visited on 2024-07-11.

Jia, T. et al. Deep learning for detecting macroplastic litter in water bodies: a review. Water Res. 231, 119632 (2023).

Shukhratov, I. et al. Optical detection of plastic waste through computer vision. Intell. Syst. Appl. 22, 200341 (2024).

Ramsurrun, N., Suddul, G., Armoogum, S. & Foogooa, R. Recyclable waste classification using computer vision and deep learning. In 2021 Zooming Innovation in Consumer Technologies Conference (ZINC), 11–15 (IEEE, 2021).

Lu, W. & Chen, J. Computer vision for solid waste sorting: A critical review of academic research. Waste Manag. 142, 29–43 (2022).

Cowger, W. et al. Trash AI: a web GUI for serverless computer vision analysis of images of trash. J. Open Source Software 8, 5136. https://doi.org/10.21105/joss.05136 (2023).

Tharani, M. et al. Trash detection on water channels. In Neural Information Processing: 28th International Conference, ICONIP 2021, Sanur, Bali, Indonesia, December 8–12, 2021, Proceedings, Part I 28, 379–389 (Springer, 2021).

Tomas, J. P. Q., Celis, M. N. D., Chan, T. K. B. & Flores, J. A. Trash detection for computer vision using Scaled-YOLOv4 on water surface. In Proceedings of the 11th International Conference on Informatics, Environment, Energy and Applications, 1–8 (2022).

Kraft, M., Piechocki, M., Ptak, B. & Walas, K. Autonomous, onboard vision-based trash and litter detection in low altitude aerial images collected by an unmanned aerial vehicle. Remote Sens. 13, 965 (2021).

Bose, C., Pathak, S., Agarwal, R., Tripathi, V. & Joshi, K. A computer vision based approach for the analysis of acuteness of garbage. In Advances in Computing and Data Sciences: 4th International Conference, ICACDS 2020, Valletta, Malta, April 24–25, 2020, Revised Selected Papers 4, 3–11 (Springer, 2020).

Lin, F., Hou, T., Jin, Q. & You, A. Improved YOLO-based detection algorithm for floating debris in waterway. Entropy 23, 1111 (2021).

van Lieshout, C., van Oeveren, K., van Emmerik, T. & Postma, E. Automated river plastic monitoring using deep learning and cameras. Earth and Space Sci. 7, e2019EA000960 (2020).

Jia, T., Vallendar, A. J., de Vries, R., Kapelan, Z. & Taormina, R. Advancing deep learning-based detection of floating litter using a novel open dataset. Front. Water 5, 1298465 (2023).

Davison, J., Heller, A. & Jacobs, M. botell.ai Water Bottle Computer Vision Detection Software, https://doi.org/10.5281/zenodo.13951962 (2024).

Acharya, B. S. et al. Hydrological perspectives on integrated, coordinated, open, networked (ICON) science. Earth and Space Sci. 9, e2022EA002320 (2022).

Ryan, P. G., Pichegru, L. & Connan, M. Tracing beach litter sources: Drink lids tell a different story from their bottles. Marine Pollut. Bullet. 201, 116186 (2024).

Ryan, P. G. Land or sea? What bottles tell us about the origins of beach litter in Kenya. Waste Manag. 116, 49–57 (2020).

Liro, M., Zielonka, A. & Mikuś, P. First attempt to measure macroplastic fragmentation in rivers. Environ. Int. 191, 108935 (2024).

Thacharodi, A. et al. Microplastics in the environment: A critical overview on its fate, toxicity, implications, management, and bioremediation strategies. J. Environ. Manag. 349, 119433 (2024).

Nabi, I. et al. Biodegradation of macro- and micro-plastics in environment: A review on mechanism, toxicity, and future perspectives. Sci. Total Environ. 858, 160108 (2023).

Kawecki, D. & Nowack, B. Polymer-specific modeling of the environmental emissions of seven commodity plastics as macro- and microplastics. Environ. Sci. Technol. 53, 9664–9676 (2019).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016).

Diwan, T., Anirudh, G. & Tembhurne, J. V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 82, 9243–9275 (2023).

Mirhaji, H., Soleymani, M., Asakereh, A. & Mehdizadeh, S. A. Fruit detection and load estimation of an orange orchard using the YOLO models through simple approaches in different imaging and illumination conditions. Comput. Electron. Agri. 191, 106533 (2021).

Jocher, G., Chaurasia, A. & Qiu, J. Ultralytics YOLO (2023).

Alori, J. et al. tryolabs/norfair: v2.2.0, https://doi.org/10.5281/zenodo.7504727 (2023).

Bewley, A., Ge, Z., Ott, L., Ramos, F. & Upcroft, B. Simple online and realtime tracking. In 2016 IEEE international conference on image processing (ICIP), 3464–3468 (IEEE, 2016).

Zivkovic, Z. Improved adaptive Gaussian mixture model for background subtraction. In Proceedings of the 17th International Conference on Pattern Recognition, 2004. ICPR 2004., vol. 2, 28–31 (IEEE, 2004).

diep. racnhua dataset. https://universe.roboflow.com/diep-omxgu/racnhua (2023). Visited on 2024-07-24.

Davison, J. H. et al. Water bottle river video for computer vision and detection. HydroShare (2024).

Acknowledgements

Dr. Jason Davison and the AnthroHydro Lab were funded by the Office of Naval Research award N00014-22-1-2410, Chesapeake Bay Trust, and Montgomery County, MD.

Author information

Contributions

AH and AJ developed the methodology, created the test data, and drafted the manuscript. MJ developed the framework and provided advice. GAG, JAH, and ML created the drone video. AB and JB created the test videos. WG, EO, RR, and BT worked on the concept design. RK and GPD advised on the concept design. JHD developed the methodology and advised the project. All authors reviewed and provided feedback on the manuscript.

Ethics declarations

Competing interests

The author(s) declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heller, A., Jacobs, M., Acosta-González, G. et al. Plastic water bottle detection model using computer vision in aquatic environments. Sci Rep 15, 24851 (2025). https://doi.org/10.1038/s41598-025-09300-8

DOI: https://doi.org/10.1038/s41598-025-09300-8