Abstract

Brain tumor segmentation plays a crucial role in clinical diagnostics and treatment planning, yet accurate and efficient segmentation remains a significant challenge due to complex tumor structures and variations in imaging modalities. Multi-feature selection and region classification depend on continuous homogeneous features to improve the precision of tumor detection. This classification is required to suppress the discreteness across various extraction rates to consent to the smallest segmentation region that is infected. This study proposes a Finite Segmentation Model (FSM) with Improved Classifier Learning (ICL) to enhance segmentation accuracy in Positron Emission Tomography (PET) images. The FSM-ICL framework integrates advanced textural feature extraction, deep learning-based classification, and an adaptive segmentation approach to differentiate between tumor and non-tumor regions with high precision. Our model is trained and validated on the Synthetic Whole-Head Brain Tumor Segmentation Dataset, consisting of 1000 training and 426 testing images, achieving a segmentation accuracy of 92.57%, significantly outperforming existing approaches such as NRAN (62.16%), DSSE-V-Net (71.47%), and DenseUNet+ (83.93%). Furthermore, FSM-ICL enhances classification precision to 95.59%, reduces classification error to 5.67%, and minimizes classification time to 572.39 ms, demonstrating a 10.09% improvement in precision and a 10.96% boost in classification rates over state-of-the-art methods. The hybrid classifier learning approach effectively addresses segmentation discreteness, ensuring continuous and discrete tumor region detection with superior feature differentiation. This work has significant implications for automated tumor detection, personalized treatment strategies, and AI-driven medical imaging advancements. Future directions include incorporating micro-segmentation and pre-classification techniques to further optimize performance in dense pixel-packed datasets.

Similar content being viewed by others

Introduction

Brain tumor segmentation is a process that identifies the brain tissues and tumor types based on the scanned images. Brain tumor segmentation is a complicated task to perform which requires proper data for the segmentation process1. Positron emission tomography (PET) is used to scan the brain tissue activities of patients. PET scan images are used for clinical diagnosis to identify the impact and level of brain tumors. PET images reduce the latency and complexity level in the brain tumor segmentation process2,3. Segmentation methods address the region detection and classification problems by differentiating multiple indistinct features extracted. The precise problem of region classification, segment extraction, and inflated feature detection remains a problem due to noise and errors in the acquired images4. An efficient wavelet-based image fusion technique is used to address the above issues. The wavelet-based technique detects the size and texture of infected brain tissues by reducing errors and disclosing the actual regions for validation. The image fusion technique extracts the relevant patterns and details from PET images to classify the noise-prone regions5.

Feature-based region segmentation methods are used for brain tumors. The actual region of interest (ROI) range is detected using features. Features provide optimal brain tumor information for segmentation and detection processes6. Combined features are used for region segmentation in brain tumors. A hybrid method based on texture features is used for segmentation. The exact ROI and features of brain tissues are detected using PET and magnetic resonance imaging (MRI)7. The texture features provide the structures, size, types, and condition of the tumors. Extracting such features increases the complexity and feature-based repetitions to improve the precision. Therefore, a limited count of iteration or learning rate is less feasible for this process. Therefore, hybrid methods with high feature fusion and pattern classification with free-hand iteration are required for the purpose8. An ROI-aided deep learning technique is also used for tumor segmentation. The ROI-aided technique is an automatic brain tumor segmentation which reduces the time consumption in the computation process9. The ROI-aided technique localizes the structure and texture of tumors based on MRI images acquired from different datasets. A raw dataset provides information with discreteness wherein the false positives are undetectable. The techniques used must focus on reducing the error level due to imbalanced/discrete information available across various segmented regions10.

Deep learning (DL) methods and algorithms are commonly used for the detection and prediction process. The DL method is mainly used to improve the overall accuracy of the detection process11. A 3D U-Net deep neural network-based classification method is used for brain tumors. A convolutional neural network (CNN) algorithm is used in the method to detect types of tumors12. The U-Net-based technique analyses the structure and condition of tumors from the given MRI images. The main aim is to classify the exact types of tumors which provide important information for the tumor diagnosis process13. The U-Net-based technique facilitates the feasibility range of the diagnosis process. The MRI images provide clinical data for the decision-making process which reduces the latency in the tumor classification process14. An adaptive-adaptive neuro-fuzzy inference system (Adaptive-ANFIS) classifier-based method is used for tumor classification. The adaptive-ANFIS classifies the types and classes of tumors using MRI images. The ANFIS classifier identifies the exact segmentation of the tumor from the MRI images. The INFIS-based method increases the accuracy range of the brain tumor segmentation process15. This article proposes a novel segmentation model using improved classifier learning to mitigate the aforementioned issues in brain tumor detection. This segmentation model classifies features based on textural differences to identify maximum differences and coexisting features. Based on this classification, the discrete pixel-related features are identified to improve the region segmentation. The difference parameter is used to train the classifier learning to ensure further classifications across various features extracted. The contributions are summarized as follows:

-

1.

Introducing a novel finite segmentation model using a modified classifier for brain tumor detection from PET images.

-

2.

Modifying the conventional classifier process for discrete segmentation differentiation using feature existence and unanimous matching over different regions.

-

3.

To analyze the performance of the proposed segmentation model using the metrics precision, classifications, error rate, classification time, and segmentation accuracy.

-

4.

To compare the performance of the proposed model with the existing NRAN27, DSSE-V-Net17, and DenseUNet+23 using the above metrics.

Related works

The related works section provides a brief description of different methods proposed by various authors. In this section, the methods discussed the pros and cons of the previous proposals with the description of the methodologies proposed. The end of the section presents a research gap between the surveyed methods and emphasizes the need for the proposed method.

Zhuang et al.16 developed an aligned cross-modality interaction network (ACMINet) for brain tumor segmentation. Magnetic resonance images (MRI) are used in the method which provides relevant data for tissue segmentation. Volumetric feature alignment is used here which provides high-level features and patterns for further processes. It is mostly used as a 3D network which reduces the complexity of segmentation. The developed ACMINet increases the effectiveness of the tumor diagnosis process.

Liu et al.17 proposed an encoder-decoder neural network for brain tumor segmentation. A deep supervised 3D squeezer and excitation V-net (DSSE-V-Net) is implemented in the method to classify the tumors. The encoder and decoder are mainly used here to segment the tumor based on the given datasets. The V-Net is used here to identify the exact types of brain tumors. The proposed network improves the performance range in the tumor segmentation process.

Ilyas et al.18 introduced a hybrid weight alignment with a multi-dilated attention network (Hybrid-DANet) for brain tumor segmentation. The hybrid DANet used an automatic segmentation method which minimizes the complexity of the detection process. It investigates the segments that are produced by the images. The hybrid DANet reduces the optimization problems during segmentation. The introduced method increases the accuracy level in the segmentation process.

Yan et al.19 designed a squeeze and excitation network-based U-Net (SEResU-Net) model for brain tumor segmentation. It is mostly used for small-scale tumors which require a proper segmentation process. MRI is used in the model which provides significant information for the detection and diagnosis process. U-Net segments the exact types of tumors that reduce the latency in the diagnosis process. The designed model increases the performance range of tumor segmentation.

Metlek et al.20 developed a new convolution-based hybrid model for brain tumor segmentation. The main aim of the model is to identify the exact type and structure of the tumor from the given MRI images. Region of interest (ROI) is detected from the image that provides feasible data for the further detection process. The developed model reduces the energy consumption in the computation process. When compared with other models, the developed model improves the accuracy of the segmentation process.

Zhou et al.21 proposed an attention-aware fusion-guided multi-modal for brain tumor segmentation. A 3D U-Net is implemented in the modal which extracts the important features for segmentation. The segmentation features are evaluated and produce optimal data for the diagnosis process. The proposed modal increases the feasibility and reliability range of the systems. The proposed modal reduces the overall complexity level in segmentation.

Gao et al.22 introduced a deep mutual learning with a fusion network for brain tumor segmentation. The actual goal of the method is to identify the regions and sub-regions of tumors from given magnetic resonance (MR) images. The MR images reduce the time consumption level in the segmentation process. The introduced method increases the performance range of the network.

Çetiner et al.23 developed a new hybrid segmentation approach using multi-modality images for brain tumor segmentation. MRI images are used here to perform tumor segmentation which improves the efficiency range of the systems. A U-Net architecture is used in the approach which identifies the dense blocks from the MRI images. The dense block contains the necessary information for tumor segmentation. The developed approach maximizes the accuracy level of the segmentation process.

Chen et al.24 designed a multi-threading dilated convolutional network (MTDCNet) for brain tumor segmentation. A pyramid matrix fusion (PMF) algorithm is used in the network to identify the important characteristics for segmentation. The PMF algorithm also detects the structural and semantic information to recognize the exact types of tumors. Experimental results show that the designed MTDCNet improves the performance range of automatic segmentation systems.

Bidkar et al.25 proposed a salp water optimization-based deep belief network (SWO-based DBN) approach for brain tumor classification. It identifies the important patterns and features from the MRI images. The identified features produce relevant information for the tumor classification process. SWO is mainly used here to reduce the error ratio in tumor classification. The proposed network increases the accuracy of brain tumor classification.

Sindhiya et al.26 introduced a hybrid deep learning (DL) based approach for brain tumor classification. An adaptive kernel fuzzy c means clustering technique is used in the approach which selects the necessary features. The c-means clustering technique enhances the energy-efficiency range of the systems. The introduced approach maximizes the performance and feasibility range of tumor classification systems.

Sun et al.27 proposed a semantic segmentation using a residual attention network for tumor segmentation. An improved residual attention block (RAB) is used here to segment the blocks for the segmentation process. The RAB utilizes the necessary features that reduce the error in tumor prediction and detection processes. The proposed method enhances the overall accuracy level of the segmentation process.

AboElenein et al.28 developed an inception residual dense nested U-net (IRDNU-Net) for brain tumor segmentation. The main aim of the model is to increase the width of the structure and to reduce the computational complexity level. The IRDN extracts the important information for segmentation which minimizes the latency in the computation process. The developed model improves the reliability and robustness range of the tumor segmentation process.

Shaukat et al.29 introduced a 3D U-Net architecture for brain tumor segmentation. A deep convolutional neural network (DCNN) algorithm is implemented in the method to train the datasets. The DCNN produces optimal data which reduces the complexity of the computation process. It is used as a path extraction scheme that segments the sub-regions of the images. The introduced architecture improves the accuracy range of tumor segmentation.

Kumar et al.30 proposed a convolutional neural network (CNN) based brain tumor segmentation and classification method. MRI images are used here which high quality images for the classification process. It differentiates the exact types of tumor that produce necessary information for further diagnosis process. The proposed method increases the performance and feasibility level of tumor classification and segmentation processes.

Vimala et al.31 projected a CNN-based brain tumor differentiation method to identify the survival probability of patients. The authors used MRI inputs operated by median filtering and growth distribution depth to achieve 97% high tumor classification. Weighted tumor support factor is used in this method to perform optimal classification of features extracted from the input images.

Amsaveni et al.32 proposed a novel medical image watermarking concept for data embedding to ensure the security of critical clinical inputs. This method used the pixel pairing concept to embed data with fair mapping through a maximum similarity index. The similarity index-based validations are performed using random transform computations. This method achieved a 96% high irreversibility of the embedded data in medical images.

Satheesh Kumar and Jeevitha, Manikandan33 employed artificial neural networks for COVID-19 diagnosis in cardiovascular systems. This network is used to categorize the type of cardiovascular disease that is exposed due to the virus infection by accounting for the sensor-based observations from different intervals. This method uses spectral features and Lyapunov exponent to classify the cardiovascular disease caused by COVID-19.

Positron Emission Tomography (PET) is an imaging technique that is utilized in clinical settings for brain tumor detection by visualizing tissue metabolic activity. Compared to the MRI-based diagnosis, PET is challenging due to its limited spatial pixel representations. This confines the image size, pixel distribution, and variants due to feature extraction. However, illuminating the PET images is manually challenging. Besides, the noise interference due to the illuminating characteristics of the PET is high where in feature and resolution are influenced by the noise. The challenges from the above methods are range-based as in17,28, multi-feature dependent as in16,24, and sub-region classifier-based as in19,20,22. Such processes result in multiple discreteness between the identified region/feature and the consecutive representation. These problems fail to improve the accuracy of the input based on pixel correlation or labeled input training. This research work aims to handle the problem of discrete segmentation using the Finite Segmentation Model (FSM) with Improved Classifier Learning (ICL). Different from the above-discussed methods, problems such as visual classification of regions, feature range of the infected pixels, and sub-region detection are addressed in this proposed model. The segmentation is based on textural differences regardless of the feature extractable regions. Considering the discreteness between each pixel distribution, the classification based on differences other than homogeneity is performed. Therefore the visual classification or region-excluded feature differentiation problems are mitigated using this classifier learning.

Methodology

The methodology section presents the discussion of the proposed model with a detailed description, illustrative figures, and mathematical models. The different sections in the proposed model are explained in various subsections numbered below.

Finite segmentation model (FSM) using improved classifier learning (ICL)

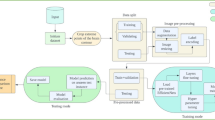

The FSM supports both existing and improved classifier functions to efficiently segment regions of interest within the images that correspond to the tumors. In the existing segmentation process, the algorithm determines the different regions depending on textural differences in the images. This involves relating the labeled inputs to innovate the distinct segments. In difference, the altered classifier process takes a more developed approach. It uses the defined characteristic that delivers discrete and continuous region detection. These features are distinguished by their presence and the maximum difference between tumor and non-tumor regions. Figure 1 portrays the proposed segmentation process.

In the above representation, PET images are used for detecting tumors other than MRI or CT inputs. PET images are reliable in detecting cell/tissue level changes due to tumor cells. Such changes are reflected in the image surface over the disease spread and classification. Irrespective of the overview of the regions, the change in tissue/cell level features is observed from PET. This serves as the smaller level of region detection between the pixel distribution sequences. The classifier learning is used for differentiating the feature existence with minimum or maximum difference under continuous iterations. The classification is instigated based on the textural differences and matching features that are extracted. Thus, the conventional image processing steps with the classification process are extended to the classification process for discreteness detection. The training of the conventional and modified classifiers revolves around achieving the highest feasible precision in segmenting the tumor regions. The acquired characteristics help in the training procedures. These features help the approach to understand the modulations of these regions, allowing for accurate classification. The accuracy of the classification is identified using its low false positives and precise region segmentation. In particular, the change in textural differences that are unnoticed impacts the feature extraction process. This is specific to retaining the segmentation precision of the proposed model. Following the representation in Fig. 1 the flow graph of the proposed process is illustrated in Fig. 2.

The flow graph of the proposed FSM-ICL is depicted in the above Fig. 2. The extracted features are validated for their textural differences. Using the computed textural differences, the region with a current high difference is classified. If the difference is the maximum among all the features extracted, that region is segmented and used for training. The rest of the region features are used for pattern matching and similarity estimation. If the difference of the current extraction is low, then the pixel existence is verified to augment the new feature extraction. The uniqueness of the approach lies in its adaptability. The modified finite process is merged into the conventional classifier operation, but only if the segmentation of tumor regions is highly precise. This procedure optimizes the segmentation process, specifically when handling both discrete and continuous PET image segments. This paper presents a Finite Segmentation Model combined with Improved Classifier Learning to evaluate the challenges of discrete segmentation in PET image-based intelligent analysis.

The PET images are taken as the input image for the further feature extraction operation. These images establish the perceptions of the metabolic activities of the tissues. These images are utilized as the initial point for observing brain tumors. Characteristics extraction involves determining and capturing the consequential features from these images. By supporting experienced approaches, particular frameworks, textures, and differences within the PET images are determined. These acquired features are separated and transferred into distinct features and these characteristics are necessary for training the segmentation model. They establish the required information to distinguish between the tumor and non-tumor regions, enabling the method to make precise classifications. The feature extraction procedure acts as a cross-over between the raw PET images and then the subsequent stages of observation, assisting the approach’s ability to understand and differentiate the difficult visual information. The process of extracting the features from the given PET input images is explained using Eq. (1)10,16.

.

Where \(\:\beta\:\) is denoted as the feature extraction operation, \(\:G\) is represented as the given PET images, \(\:t\) is denoted as the subsequent stages of analysis. Now the classification process is performed in the segmentation procedure. This step involves classifying the different regions within the images to differentiate the tumor and non-tumor regions. This classification operation uses a set of priory-defined measures and learned frameworks to decide these distinctions. The FSM with the ICL introduces two different methods in determining the textural difference and then the matching images. The process of classifying the tumor and non-tumor regions is explained using Eq. (2).

.

where \(\:\sigma\:\) is represented as the regions in the acquired PET input images, \(\:\gamma\:\) is denoted as the distinctions, \(\:j\) is denoted as the variance occurred in the operation, \(\:i\) is represented as the necessary frameworks. The classification process undergoes the training operation to attain its maximum accuracy. This helps in utilizing the features for further differentiation procedures with the help of the conventional neural network. CNN in the deep learning algorithm helps in this process and interprets visual data like PRT images. The conventional classification process is illustrated in Fig. 3.

The extracted \(\:\:\beta\:\)is used for classifying the regions of the input image for \(\:\:j\)and\(\:\:\gamma\:\). In this process, the \(\:\:j{\prime\:}s\) difference and \(\:\:i{\prime\:}s\)similarity are used under conventional segregation and complicated subsequent analysis. Based on the \(\:\:\gamma\:\) the \(\:\:V\) variation and\(\:\:Z\) analysis are performed. This is utilized for new\(\:\:\beta\:\) such that \(\:\:\alpha\:\) is profounded for difference analysis (Fig. 2). They analyze the extracted characteristics from the PET images, recognizing complicated frameworks and structures that represent the tumor regions. This allows it to recognize difficult relationships, which enables the accurate classification of these regions as either tumor or non-tumor. This integration of the CNNs improves the segmentation procedure by supporting their capability in the classification process. The process of utilizing the CNN in the classification process is explained using Eq. (3).

.

Where \(\:V\) is denoted as the textures of the acquired images, \(\:X\) is represented as the complicated relationships, \(\:Z\) is represented as the structures in the images, \(\:\alpha\:\) is denoted as the integration operation. Now the textural difference from the classification is determined in the segmentation process with the help of the CNN. As the CNN analyzes the extracted characteristics from the PET images, it effectively determines and classifies the difference in the textures and patterns of those manifesting different tissue regions. Through the framework of conventional layers, the CNN helps in capturing the complicated visual understanding which is difficult to detect through the conventional methods. These learned characteristics enable the CNN to distinguish between the tumor and non-tumor regions based on their distinct textural signatures. This is computed using Eq. (4).

.

By operating the image data through the various convolutional and merging layers, the CNN becomes the high analyzing the variations, edges, and structures that distribute to the textural variations. The CNN’s ability to efficaciously interpret these textural differences importantly improves the segmentation operation, by allowing for accurate determination of tumor regions within the PET images. The process of determining the textural difference in the segmentation process by CNN is explained by the following equation given above. Where \(\:B\) is denoted as the textural difference, \(\:F\) is represented as the visual understanding operation, \(\:P\) is represented as the tumor tissues of the images. Now the matching features are determined in the segmentation process by using the CNN technique. The features that are extracted from the PET images are framework and aspects that confine importance in differentiating between tumor and non-tumor regions. The CNN engages its layer to evaluate the complicated regions within these characteristics, by allowing it to determine the related patterns between the extracted features and distributed measures for tumor detection. The CNN refines its capability to match these extracted features to known tumor characteristics. This process ensures that the CNN becomes efficient in determining even matching features that indicate the presence of the tumor. It helps in acquiring the matching features from input PET images. The process of acquiring the matching features in this segmentation process with the help of the CNN is explained using Eq. (5).

:

Where \(\:L\) is denoted as the matching features. Now from the textural differences, the classification process is performed. This is the modified classification operation that is performed based on the textural difference. In this classification process, the distinctive features are extracted from the PET images, capturing an understanding of textures that differentiate between the tumor and non-tumor regions. These features are then analyzed using the CNN which aids in recognizing the complex patterns and textures. The process of modified classification is explained using Eq. (5).

.

Where \(\:U\) is denoted as the modified classification which is performed based on the acquired textural difference. Now the existing features are determined from the modified classification. These features denote the unique characteristics extracted from the PET images that grip important information about the tissue regions. The modified classification process is illustrated in Fig. 4.

The similarity rate for the identified\(\:\:\sigma\:\) is used for validating the difference and\(\:\:Z\). Across the varying segments, two factors are extracted namely\(\:\:L\) and\(\:\:\alpha\:\). Based on the \(\:\:X\in\:t\) and \(\:\:B\) the visual operations on \(\:\:P\) is performed. Compared to the previous process the modification in classification is performed for \(\:\frac{d\beta\:}{dt}\left(t\right)\) until\(\:\:\frac{\partial\:\beta\:}{\partial\:t}\:\left(t,\alpha\:\right)={G}_{\sigma\:}\left(t,\beta\:\left(t,\alpha\:\right)\right)\) Thus the training for \(\:\:V\) (repeated) is performed under\(\:\:P\) or\(\:\:L\) feature for identifying tumors (Refer to Fig. 3). Through CNN, the modified classification process extracts and focuses on these characteristics, holding complex patterns, etc. The classification algorithm is given in Algorithm 1.

Algorithm 1

Classification Process.

Input: The feature classification is estimated across the segments.

Output: Identify the tumor.

Functions:

1. if\(\:\:\sigma\:=Z\), then//variance detected is the same as the differences in the extracted regions.

2.

\(\text{i}\text{f}\:\beta\:\left(0\:\right)>P,\:\text{o}\text{r}\:P<Z,\) then

3. Compute the current differences.

4. else.

5. Compute the current Similarity rate.

6. end if.

7. else.

8. if \(\:\alpha\:<\beta\:\left(0\right)\) then//extracted features are high than the difference features

9. Compute the current feature’s segment-matching rate.

10. else.

11. for\(\:\:V+\left(P*L\right)\approx\:\beta\:\) do

12. \(\:\beta\:=\left(t,\alpha\:\right)\text{*}{G}_{\sigma\:}\)

13.end if.

14. else.

15. if\(\:\:{G}_{\sigma\:}\left({\alpha\:}_{i}\right)=Z\), then//Similarity rate of the extracted regions is the same as the non-variance regions

16. Declare the available features.

17. else.

18. Initialize the training process.

19. end if.

20. end if.

The existing features enclose the abundance of the data which enables the algorithm to tackle the regions accurately. The process of detecting the existing features from the modified classification is explained using Eq. (6)15.

.

Where \(\:\rho\:\) is denoted as the existing features, \(\:\phi\:\) is denoted as the unique characteristics from the modified classification. Now the maximum difference is determined from the existing features of the modified classification. This represents the most important difference between the features related to the tumor and non-tumor regions within the PET images. By determining the features that distribute the maximum variance between these two types of regions, the method effectively spots the differentiating features that are most complex of tumor presence. The process of detecting the maximum difference is explained using Eq. (7).

.

Where \(\:\varnothing\:\) is denoted as the maximum difference, \(\:W\) is represented as the efficacious spots in the tumor regions. Based on the modified classification outcome, the training is given to the classification process by CNN. The outputs include the features and then the maximum differences, which serve as important information for enhancing the CNN. The CNN is trained to recognize and correlate these features with the presence of the brain tumor regions. It helps in acquiring the accurate distinctions between the tumor and non-tumor regions within the PET images. The process of training the CNN for the enhancement of accuracy is explained using Eq. (8).

.

Where \(\:A\) is denoted as the training operation to the CNN in the classification of the segmentation process. Also, the modified classification helps in determining the continuous and discrete regions. The continuous and discrete regions are identified based on the different feature distributions for multiple regions that ease true positive analysis. The true positives for the different segments are used to improve the precise detection based on monotonous pixel distributions. Based on different feature extraction rates, the change in the monotonous nature is used to identify the discreteness. This is categorized by the varying features coexisting in the same region as the feature extracted and variance estimated regions. At first the detection of the continuous regions using finite features based on the high maximum accuracies. These continuous regions are detected through the altered classification process, demonstrating the substantial difference between the tumor and non-tumor regions. By designating the features with the highest accuracy in selecting these differences, the algorithm aims for the most reliable representation of the continuous tumor regions. This method ensures that the segmentation model enhances the aspects of the image data, which results in precise identification during the analysis. This is obtained using Eqs. (9) and (10).

.

The process of detecting the continuous regions is explained by the following equation given above. Where \(\:K\) is denoted as the continuous region based on the modified classification. Now the discrete region is extracted in the detection operation. This process involves determining the particular, isolated regions that provide the distinctive features, feasibly representing the presence of tumors. This process associates the finite features, extracted depending on their existence and maximum difference between the tumor and non-tumor regions. The difference estimation is presented in Algorithm 2.

Algorithm 2

Difference Estimation.

Input: Region for detection.

Output: Find the difference in the region.

-

1.

If \(\:\:K<\beta\:\left(G\right)\), then//Difference in feature of the current region is less than the extracted ones

-

2.

If\(\:\:{\beta\:}_{0}\left({\alpha\:}_{i}\right)={G}_{\sigma\:}\) then

-

3.

Compute the maximum matching features of the region.

-

4.

Else.

-

5.

Calculate the modified classification rate.

-

6.

End if.

-

7.

Else.

-

8.

if\(\:\:{\alpha\:}_{i}\left(K\right)>\left({\alpha\:}_{\sigma\:}\right)\), then//High variance check between the identified and extracted regions

-

9.

Identify the finite feature.

-

10.

else.

-

11.

if\(\:\:\alpha\:{U}_{i}+\alpha\:{U}_{j}>\beta\:\left(G\right)\), then

-

12.

if\(\:\:\beta\:\left(G\right)=\rho\:t\), then

-

13.

Compute the feature differences.

-

14.

else.

-

15.

Identify maximum region differences.

-

16.

end if.

-

17.

for\(\:\:{\alpha\:}_{i}\left(K\right)=\beta\:\left(G\right)\) then, do//for all the features that represent the regions with no variance

18. \(\:\beta\:\left(G\right)={\alpha\:}_{i}\left(K\right)\)

-

19.

Else.

-

20.

if\(\:\:\stackrel{\sim}{{G}_{\sigma\:}}\:\left(t,\beta\:\left(t\right)\right)>K\left(G\right)\), then//variance difference is higher than the actual variance estimated

-

21.

Identify the distinctive feature.

-

22.

else.

-

23.

Identify the discrete region.

-

24.

end if.

-

25.

end if.

-

26.

end if.

By analyzing these features and their differences, the discrete regions were detected. The process of determining the discrete regions that represent the presence of a tumor is explained using Eqs. (11) and (12)2,12.

.

Where \(\:\pi\:\) is represented as the discrete regions that represent the tumor. The training operation for discrete and continuous region detection is illustrated in Fig. 5.

The second classification identifies the need for training under \(\:\:P{\prime\:}s\)process. First, if\(\:\:\rho\:=true\:\) then maximum matching is pursued for validating \(\:\:\psi\:=true\) or not. If\(\:\:\psi\:\) is true then the \(\:\:\rho\:=\beta\:\) is valid and hence the region is continuous without\(\:\:j\). Contrarily there are two cases where\(\:\:\rho\:\) is not true and\(\:\:\psi\:\) is false. If \(\:\:\rho\:=false\:\) then the max difference is identified for training. The\(\:\:\psi\:=\) true case performs integration of \(\:\:\rho\:\) and\(\:\:\beta\:\in\:\psi\:\). This integration identified \(\:\:A\) different from \(\:\:K\) region (Fig. 4). This process helps in detecting the presence of a tumor with the maximum accuracy. The segmentation process is efficacious with the help of the CNN technique. This process helps in reducing errors while handling the discrete and continuous PET image segments with high adaptability. The training is given to the CNN until it attains the précised segmentation.

Performance assessment

In the performance assessment section, the discussion of experimental results using external dataset images and comparative analysis using metrics and methods are described. The performance assessment is discussed using experimental and comparative analysis. For the experimental analysis, the images from34 (Synthetic whole-head brain tumor segmentation dataset) are used for tumor segmentation. This dataset provides 3D segmented images with 0 to 2 labels indexing background, forehead, and tumor region in order35. The number of training and testing images used are 1000 and 426 respectively. The images are split into 10 regions maximum. The training is initiated with 800 iterations extended up to 1200 (for large images). The training rate is set from 0.6 to 1.0 for which the minimum epochs is 3 and the maximum is 5. The learning requires a minimum of 3–4 epochs to classify the output texture. The classifier learning is trained at a rate between 0.6 and 1 with a drop rate between consecutive 2 intervals. Besides, the classification iteration is halted for the maximum saturation between the 3–5 epochs. With this information, the experimental analysis using MATLAB is summarized in Tables 1 and 2 using the sample inputs36,37. The MATLAB software is deployed in a computer with a 2.8 GHz intel processor, 256GB secondary storage, and 8GB physical memory.

Apart from the above experimental analysis, the following section presents a discussion on metric-based comparisons. The metrics precision, classifications, error rate, classification time, and segmentation accuracy are considered in this comparative analysis. The number of regions and features are varied up to 10 and 12 respectively for analysis. The methods NRAN27, DSSE-V-Net17, and DenseUNet+23 are considered in this comparative analysis along the proposed method38. The parameters used for this comparative study include image height and variances which are used to find whether it is normalized or not. The characteristic of the image consists of noise and contrast, the obtained image is in grayscale, so the noise can be easily identified\(\:\:\beta\:\left(0\right)*\sum\:_{{\alpha\:}_{i}}\rho\:\left(t\right)\). The validation of image segmentation is done for the precise output with segmentation accuracy. Here, it relates to the two formats such as continuous, and discrete, based on this representation the image classification is processed. The matching is estimated whether it is a tumor or not and provides the resultant. Based on the matching process, the differences are estimated for the image parameter\(\:\:G\left(\beta\:\right)*{\alpha\:}_{i}\). From the matching, the \(\:k\) regions are extracted where it is given to the integration process to analyze the region39. The precision and error metrics are inferred from \(\:{\gamma\:}_{\sigma\:}\left(t,\beta\:\left(t\right)\right)\) and\(\:\:G\left(t,\beta\:\left(t\right)\right)\) estimation that inversely validates the identified regions. The mismatching of continuous and discrete textures results in increasing false rates. If the false rate is not satisfying\(\:\:{A}^{*}=\beta\:\left(G\right)\)condition, then the error increases and thereby precision decreases. The case of\(\:\:\psi\:=true\) ensures high classification regardless of the number of matching cases from which the precision is improved40. Therefore, references for error and precision are inferred from these parameters for validation. Following the above, the hyperparameter analysis for sensitivity is tabulated in Table 3 based on the maximum difference identified. This analysis of sensitivity identifies the maximum true positives irrespective of the true positive rates.

The sensitivity analysis for the varying regions and their corresponding difference ranges is tabulated in Table 3 above. As the difference rate increases, the sensitivity fluctuates without precise identification of the maximum difference region. The classifier learning operates on both the difference and variance of the extracted features to improve the sensitivity. This identifies the maximum true positives to leverage the region differences rather than avoiding them from the computations. As this process is iterated until the maximum regions are identified, the change in sensitivity is observed. The p-values and the corresponding error values for uncertainty are tabulated in Table 4 below. This tabulation considers the number of regions for the same difference ranges.

The region extraction is computed with the higher classification rate to reduce the noise and periodically analyze the height of the input image. The error rate is reduced based on the parameter used for the identification of tumor from the PET image\(\:\:\sum\:_{G}\left|\beta\:\left(G\right)-G\right|\). This evaluation of image segmentation is derived as the output from the continuous and discrete image. The maximum difference is used to estimate the region and provides the training based on its image segmentation and it is described as\(\:\:{\alpha\:}_{i}\left(K\right)*\left({\alpha\:}_{\sigma\:}\right)\). The tumor cell is used to improve the accuracy rate from the classification method and ensure the segmentation. The available and differences in the image features are clumped together and ensure the image training which is not on the detection boundary. The maximum region difference is extracted by using the mapping function and estimating the validation function for the precise output. The maximum region is extracted and the segmentation with the parameters such as noise and contrast of PET image. The image characteristics are considered for the segmentation where the regions are split into smaller portions and found for the mapping. The boundary-based image region is extracted and estimates the training by matching the difference whether it is a tumor or not. The labeled region is used to find the tumor cell in an ordered manner and improves the accuracy and it is described as\(\:\:\left(1+{t}^{2}+{y}^{2}\left(t\right)\right)\). Here, it illustrates the region of the image by testing the image and producing the output with higher precision by decreasing the computation time. The similarity rate is enhanced for the textural classifier where the discrete and continuous images are evaluated for the segmented output. The correlation is used to find the tumor and non-tumor from the discrete PET image. Considering the parameters such as characteristics, size, and pattern of the image are difficult to detect. These difficulties are identified by introducing CNN which improves the classification level of PET images and results in accurate detection of tumor region. The tumor detection is in the form of a discrete image where the region is split into a smaller portion \(\:\rho\:t\left(\beta\:\right)*{A}_{0,n}\left(t,\beta\:\right)\). From the parameters used for the improvement in this comparative study, the characteristic of the image is considered along with the nature, size, and height of the PET image. Based on these characteristics the normal and abnormal region is identified with the reference output. From the parameter used in this proposed work the precision, and classification are improved whereas, the error rate and classification time are reduced.

Precision

The precision is effective in this process with the help of classifier learning. This operation integrates both the conventional and modified classifier functions, each distributing to refine the accuracy of segmentation in PET image analysis. The CNN classifier supports finite features and high-accuracy matching to achieve discrete and continuous region detection. Through iterative training, these classifiers adjust their acquired input values to enhance the accuracy of the images. The finite characteristics represent the most variant features while capturing the essential distinctions between the tumor and non-tumor regions. By using classifier learning, the variations are detected precisely, which leads to high precision in segmentation procedures. The inclusion of the modified finite operation within the conventional classifier utilizes its procedures. This inclusion depends on the accuracy of the tumor region segmentation. By incorporating the modified process when segmentation accuracy is high, the errors are minimized and then the overall precision of the operation is enhanced as shown in Fig. 6.

Classifications

The classification is effective in this process after the extraction of the input PET image features. These features help distinguish between the tumor and non-tumor regions. After the features are extracted, these features are sent as the input to the classification process, which engages the CNN. The CNN accurately analyzes the extracted characteristics, recognizing the complex patterns, frameworks, and differences within the PET images. By supporting the CNN, it understands the complex association within the input PET image data. This enables them to precisely differentiate the region as either tumor or non-tumor. The acquired features allow the CNN to make the solutions on the distinctive attributes of each region within the PET images. As the classification process incorporates, the CNN training helps in the classification process with the maximum accuracy. This operation leads to an overall precision of the segmentation operation. Then the integration of the classification after the feature extraction process enhances the accuracy and reliability of the determining brain tumor regions within the PET images as shown in Fig. 7.

Error rate

The error rate is lesser in this process with the help of the precise segmentation procedure and classifier learning. The CNN helps in the segmentation process by ensuring the accurate determination of both continuous and discrete tumor regions within the PET images. Through repetitive training, the classifiers enhance their accuracy after knowing their outcomes. The proper features which are selected based on their distinctiveness and difference; enhance the classifier’s ability to distinguish between the tumor and non-tumor regions. The help of the modified classification with the conventional classifier further mitigates the errors. By integrating the altered operation when segmentation precision is maximum, this operation becomes more vigorous by reducing the errors. The symbiosis between the precise segmentation operation and classifier learning delivers a lower rate. This association ensures that the brain tumor regions are identified more accurately without any errors and also it enhances the precision of the procedure as shown in Fig. 8.

Classification time

The time taken for the classification process is less in this process with the help of CNN. The textural difference and then the matching features are determined in this classification procedure. These differences help in determining the complex patterns and differences delivering the distinction between the tumor and non-tumor regions. The CNN determines the matching features by observing the extracted features. Through the layers of the CNN and combining, these are effectively correlated for precise tumor detection. This method utilizes the efficiency of the classification operation by fleet processing and determining the complex elements that distinguish between the regions. The CNN ability is enhanced to process information from the given PET images, the classification operation becomes faster and more précised. The orderly determination of the textural difference and matching features ensures that the method efficiently determines the brain tumor regions within the PET images, making the procedure more time-effective as shown in Fig. 9.

Segmentation accuracy

The segmentation accuracy is higher in this process with the help of the CNN in classification and modified classification procedures. CNN with their developed pattern recognition abilities helps in enhancing the precision of segmenting the brain tumor within the PET images. During the classification process, the CNN analyzes the extracted features, refining the intricate patterns, and textures that are the representation of the tumor regions. Thus this analysis enables the precise determination and categorization of these regions, delivering the maximum segmentation accuracy. The modified classification operation helps in determining the complex features that distinguish the tumor and non-tumor regions. This process enhances the accuracy of segmenting the discrete and continuous tumor areas. The incorporation of the CNN utilizes the segmentation precision by enhancing the CNN abilities. The accurate classification and determination of the textural differences, combined with the efficacious recognition of the features, ensure precision in detecting the brain tumor regions within the PET images as shown in Fig. 10.

Specificity

In Table 5, the specificity comparisons are presented. In this comparative analysis, the methods IRDNN-Net28 and MTDC-Net24 are augmented along the methods considered previously.

The specificity of the proposed model is high compared to the other methods of the same kind as presented in the above table. The proposed model is reliable in performing the classification of features based on differences and variances. This process is iterated to induce various classification instances across multiple segments and regions identified. The matching and un-matching features are identified through this classification to improve the specificity regardless of the regions. In this process, the variance is estimated as high or low depending on the number of segments across various feature extraction rates. In this process, the classification differentiates the maximum regions based on the\(\:\:\alpha\:\) and\(\:\:\beta\:\) variants to leverage the detection accuracy. Therefore the true negatives are identified from these classifications for multiple deviations identified.

In Tables 6 and 7, the comparative analysis results are tabulated for each variant used.

The proposed FSM improves precision, classification, and segmentation accuracy by 10.09%, 10.96%, and 10.03% respectively. This method reduces error and classification time by 11.29% and 10.24% respectively.

The proposed FSM improves precision, classification, and segmentation accuracy by 8.43%, 8.51%, and 9.23% respectively. This method reduces error and classification time by 9.34% and 10.4% respectively.

From the above results, it is seen that the proposed model is reliable in handling smaller variations between the consecutive regions identified. The classification process is used to improve the segmentation accuracy ahead of the iterations. Therefore, any changes in the feature extraction results in computations of the differences across multiple segments. In the difference estimation for the patterns identified, the regions are used for feature matching which is a lagging concept in the existing network models. Therefore, the specificity measure consents to the sensitivity feature to improve the precision.

Conclusion

This paper discussed the working process of the novel finite segmentation model using improved classifier learning. The proposed model aimed and succeeded at improving the segmentation accuracy by addressing the discrete problem defined. The problem is addressed using dual functions of the classifier: the conventional and the modified. In the conventional process, the segments are identified based on correlation using the labeled inputs. In contrast to the conventional process, the modified classifier identifies the discrete and continuous regions using specific feature parameters. In these cases, the difference and high matching factors are used for classification accordingly. Based on these two factors, the training process is pursued to improve the segmentation process. Therefore the error-causing discrete sequences are identified through recurrent training using feature existence and its uniqueness. Thus from the comparative analysis, it is seen that the proposed model improves precision by 10.09%, classifications by 10.96%, and reduces the error by 11.29% for the varying regions. However, the backward training in this model requires additional feature-matching instances that are less feasible for dense pixel-packed inputs. For handling this problem, micro-segmentation approaches with pre-classification are planned to be incorporated in future work. The pre-classification is reliable in identifying variance regions as patches other than segmenting the whole region. Therefore, the micro-segmentation for the variance region is alone performed to reduce the true negatives. This is monotonous for any rate of feature and region extractions.

Data availability

The datasets generated during and/or analyzed during the current study are not publicly available but are available from the corresponding author on reasonable request. https://ieee-dataport.org/documents/synthetic-whole-head-mri-brain-tumor-segmentation-dataset.

References

Sbei, K., ElBedoui, W., Barhoumi & Maktouf, C. Gradient-based generation of intermediate images for heterogeneous tumor segmentation within hybrid PET/MRI scans. Comput. Biol. Med. 119, 103669. https://doi.org/10.1016/j.compbiomed.2020.103669 (2020).

Ahmed et al. Brain tumor detection and classification in MRI using hybrid ViT and GRU model with explainable AI in Southern Bangladesh. Sci. Rep. 14 (1), 22797 (2024).

Ganesh, S., Kannadhasan, S. & Jayachandran, A. Multi class robust brain tumor with hybrid classification using DTA algorithm. Heliyon 10(1), e23610. https://doi.org/10.1016/j.heliyon.2023.e23610 (2023). PMID: 38187263; PMCID: PMC10770571.

Huang, Z. et al. Isa-Net: Improved Spatial Attention Network for PET-CT tumor segmentation, Computer Methods and Programs in Biomedicine, vol. 226, p. 107129, (2022). https://doi.org/10.1016/j.cmpb.2022.107129

Alongi et al. Radiomics analysis of brain [18F] FDG PET/CT to predict alzheimer’s disease in patients with amyloid PET positivity: A preliminary report on the application of SPM cortical segmentation, pyradiomics and machine-learning analysis. Diagnostics 12 (4), 933 (2022).

Alqazzaz, S. et al. Combined features in region of interest for brain tumor segmentation. J. Digit. Imaging. 35 (4), 938–946. https://doi.org/10.1007/s10278-022-00602-1 (2022).

Li, S., Liu, J. & Song, Z. Brain tumor segmentation based on region of interest-aidedlocalization and segmentation U-Net, (2021). https://doi.org/10.21203/rs.3.rs-627205/v1

Wang, T. et al. Deep learning-based automated segmentation of eight brain anatomical regions using head CT images in PET/CT. BMC Med. Imaging. 22 (1). https://doi.org/10.1186/s12880-022-00807-4 (2022).

Ali et al. Applications of artificial intelligence, deep learning, and machine learning to support the analysis of microscopic images of cells and tissues. J. Imaging. 11 (2), 59 (2025).

Zhang, C. et al. Hematoma evacuation via Image-Guided Para-Corticospinal tract approach in patients with spontaneous intracerebral hemorrhage. Neurol. Therapy. 10 (2), 1001–1013. https://doi.org/10.1007/s40120-021-00279-8 (2021).

Zhang, C. et al. Clot removal with or without decompressive craniectomy under ICP monitoring for supratentorial intracerebral hemorrhage (CARICH): a randomized controlled trial. Int. J. Surg. 110 (8), 4804–4809. https://doi.org/10.1097/JS9.0000000000001466 (2024).

Xu, X. et al. Large-field objective lens for multi-wavelength microscopy at mesoscale and submicron resolution. Opto-Electronic Adv. 7 (6), 230212. https://doi.org/10.29026/oea.2024.230212 (2024).

Zhang, G., Zhou, J., He, G. & Zhu, H. Deep fusion of multi-modal features for brain tumor image segmentation. Heliyon 9 (8). https://doi.org/10.1016/j.heliyon.2023.e19266 (2023).

Ramprasad, M. V., Rahman, M. Z. & Bayleyegn, M. D. A deep probabilistic sensing and learning model for brain tumor classification with fusion-net and HFCMIK segmentation. IEEE Open. J. Eng. Med. Biology. 3, 178–188. https://doi.org/10.1109/ojemb.2022.3217186 (2022).

Solanki, S., Singh, U. P., Chouhan, S. S. & Jain, S. Brain tumor detection and classification using intelligence techniques: an overview. IEEE Access. 11, 12870–12886. https://doi.org/10.1109/access.2023.3242666 (2023).

Cao, Z., Zhu, J., Wang, Z., Peng, Y. & Zeng, L. Comprehensive pan-cancer analysis reveals ENC1 as a promising prognostic biomarker for tumor microenvironment and therapeutic responses. Sci. Rep. 14 (1), 25331. https://doi.org/10.1038/s41598-024-76798-9 (2024).

Agrawal, P., Katal, N. & Hooda, N. Segmentation and classification of brain tumor using 3D-unet deep neural networks. Int. J. Cogn. Comput. Eng. 3, 199–210. https://doi.org/10.1016/j.ijcce.2022.11.001 (2022).

Wang, H. et al. Merge-and-Split graph convolutional network for Skeleton-Based interaction recognition. Cyborg Bionic Syst. 5, Article0102. https://doi.org/10.34133/cbsystems.0102 (2024).

Nanda, R. C., Barik & Bakshi, S. SSO-RBNN driven brain tumor classification with saliency-K-means segmentation technique. Biomed. Signal Process. Control. 81, 104356. https://doi.org/10.1016/j.bspc.2022.104356 (2023).

Kalam, R., Thomas, C. & Rahiman, M. A. Brain tumor detection in MRI images using adaptive-ANFIS classifier with segmentation of tumor and edema. Soft. Comput. 27 (5), 2279–2297. https://doi.org/10.1007/s00500-022-07687-4 (2022).

Luan, S. et al. Deep learning for fast super-resolution ultrasound microvessel imaging. Phys. Med. Biol. 68 (24), 245023. https://doi.org/10.1088/1361-6560/ad0a5a (2023).

Zhuang, Y., Liu, H., Song, E. & Hung, C. C. A 3D cross-modality feature interaction network with volumetric feature alignment for brain tumor and tissue segmentation. IEEE J. Biomedical Health Inf. 27 (1), 75–86. https://doi.org/10.1109/jbhi.2022.3214999 (2023).

Liu, P., Dou, Q., Wang, Q. & Heng, P. A. An encoder-decoder neural network with 3D squeeze-and-excitation and deep supervision for brain tumor segmentation. IEEE Access. 8, 34029–34037. https://doi.org/10.1109/access.2020.2973707 (2020).

ILYAS, N., Song, Y. & Lee, B. Hybrid-danet: An encoder-decoder based hybrid weights alignment with multi-dilated attention network for Automatic Brain Tumor Segmentation, (2022). https://doi.org/10.36227/techrxiv.19083590.v1

Yan, C. et al. SERESU-net for multimodal brain tumor segmentation. IEEE Access. 10, 117033–117044. https://doi.org/10.1109/access.2022.3214309 (2022).

Metlek, S. & Çetıner, H. ResUNet+: A new convolutional and attention block-based approach for brain tumor segmentation. IEEE Access. 11, 69884–69902. https://doi.org/10.1109/access.2023.3294179 (2023).

Zhou, T. & Zhu, S. Uncertainty quantification and attention-aware fusion guided multi-modal Mr brain tumor segmentation. Comput. Biol. Med. 163, 107142. https://doi.org/10.1016/j.compbiomed.2023.107142 (2023).

Gao, H., Miao, Q., Ma, D. & Liu, R. Deep mutual learning for brain tumor segmentation with the fusion network. Neurocomputing 521, 213–220. https://doi.org/10.1016/j.neucom.2022.11.038 (2023).

Çetiner, H. & Metlek, S. DenseUNet+: A novel hybrid segmentation approach based on multi-modality images for brain tumor segmentation. J. King Saud Univ. - Comput. Inform. Sci. 35 (8), 101663. https://doi.org/10.1016/j.jksuci.2023.101663 (2023).

Sun, W., Jang, M., Zhan, S., Liu, C., Sheng, L., Lee, J. H.,… Yang, H. Y. (2025).Tumor-targeting and redox-responsive photo-cross-linked nanogel derived from multifunctional hyaluronic acid-lipoic acid conjugates for enhanced in vivo protein delivery. International Journal of Biological Macromolecules, 314, 144444. doi: https://doi.org/10.1016/j.ijbiomac.2025.144444.

Yu, X., Luan, S., Lei, S., Huang, J., Liu, Z., Xue, X.,… Zhu, B. (2023). Deep learning for fast denoising filtering in ultrasound localization microscopy. Physics in Medicine & Biology, 68(20), 205002. doi: 10.1088/1361-6560/acf98f

Chen, W. et al. MTDCNet: A 3D multi-threading dilated convolutional network for brain tumor automatic segmentation. J. Biomed. Inform. 133, 104173. https://doi.org/10.1016/j.jbi.2022.104173 (2022).

Bidkar, P. S., Kumar, R. & Ghosh, A. SegNet and salp water optimization-driven deep belief network for segmentation and classification of brain tumor. Gene Expr. Patterns. 45, 119248. https://doi.org/10.1016/j.gep.2022.119248 (2022).

Sindhiya Devi, R., Perumal, B. & Pallikonda Rajasekaran, M. A hybrid deep learning based brain tumor classification and segmentation by stationary wavelet packet transform and adaptive kernel fuzzy C means clustering. Adv. Eng. Softw. 170, 103146. https://doi.org/10.1016/j.advengsoft.2022.103146 (2022).

AboElenein, N. M., Songhao, P. & Afifi, A. IRDNU-net: inception residual dense nested u-net for brain tumor segmentation. Multimedia Tools Appl. 81 (17), 24041–24057. https://doi.org/10.1007/s11042-022-12586-9 (2022).

Shaukat, Z. et al. Efficient scheme to perform semantic segmentation on 3-D brain tumor using 3-D u-net architecture. Multimedia Tools Appl. https://doi.org/10.1007/s11042-023-16458-8 (2023).

Kumar, S. & Kumar, D. Human brain tumor classification and segmentation using CNN. Multimedia Tools Appl. 82 (5), 7599–7620. https://doi.org/10.1007/s11042-022-13713-2 (2022).

Vimala, M., SatheeshKumar Palanisamy, S., Guizani, H. & Hamam Efficient GDD feature approximation based brain tumour classification and survival analysis model using deep learning. Egypt. Inf. J. 28, 1110–8665. https://doi.org/10.1016/j.eij.2024.100577 (2024).

Amsaveni, S. K., Palanisamy, S., Guizani, H. & Hamam Next-Generation secure and reversible watermarking for medical images using hybrid Radon-Slantlet transform, results in engineering, 24, 103008, ISSN 2590 – 1230, (2024). https://doi.org/10.1016/j.rineng.2024.103008

Satheesh Kumar, P., Jeevitha, M. & Springer Diagnosing COVID-19 Virus in the Cardiovascular System Using ANN. In: Oliva, D., Hassan, S.A., Mohamed, A. Artificial Intelligence for COVID-19. Studies in Systems, Decision and Control, vol 358. Cham. (2021). https://doi.org/10.1007/978-3-030-69744-0_5

Acknowledgements

Not applicable.

Funding

Princess Nourah bint Abdulrahman University Researchers Supporting Project number, (PNURSP2025R512), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

K. Murugan: Writing – original draft, Visualization, Project administration, SatheeshKumar Palanisamy-Conceptualization, Formal analysis, Writing – original draft, Tagrid Abdullah N. Alshalali: Visualization, Validation, Investigation, Writing – review & editingN. Sathishkumar:-Conceptualization, Writing – original draft,

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Murugan, K., Palanisamy, S., Sathishkumar, N. et al. Advanced finite segmentation model with hybrid classifier learning for high-precision brain tumor delineation in PET imaging. Sci Rep 15, 25666 (2025). https://doi.org/10.1038/s41598-025-09638-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-09638-z