Abstract

The growing need for energy efficiency in buildings has driven significant improvements in digitalisation and intelligent energy management. Traditional energy management techniques often fail to address dynamic energy demands and user preferences, leading to inefficiencies and increased costs. This paper proposes a framework that integrates Digital Twin (DT) systems with Artificial Intelligence (AI) algorithms for intelligent building energy consumption assessment by developing real-time virtual twin representations. Unlike conventional DT models used mainly for visualization or simulation, the proposed approach leverages DTs as adaptive, data-driven decision-making tools that evolve through continuous IoT sensor feedback. This dynamic representation of physical systems enables real-time energy optimization and facilitates intelligent control to enhance both efficiency and sustainability. The system categorizes buildings into energy-efficient and non-energy-efficient groups with an accuracy of 98% by leveraging IoT sensor data, along with Random Forest, Deep Neural Networks, Long Short-Term Memory networks, and Bidirectional Long Short-Term Memory networks. The framework encompasses comprehensive data preprocessing, feature engineering, and the implementation of cutting-edge AI techniques, highlighting the transformative potential of this integrated approach. The results, illustrated through various graphical representations, demonstrate the critical role of DT and AI in optimising energy management, minimising waste, and driving sustainability in industrial and urban environments. Confusion matrices and performance metric graphs reveal that the Random Forest model outperforms other techniques. Meanwhile, training curves and feature importance visualizations provide insights into model behaviour and key factors influencing energy efficiency. This research underscores the significance of combining real-time DT environments with intelligent learning models to address modern energy efficiency challenges and support the development of adaptive, sustainable building systems.

Similar content being viewed by others

Introduction

The increasing demand for energy efficiency in buildings has driven advancements in digitalisation and intelligent energy management1. Approximately 60 per cent of the global energy is consumed by residential and commercial buildings (with a role of inefficient energy usage in increasing costs and environmental concerns2). However, such traditional approaches for energy management, such as peak shaving3 and dual tariff4, do not consider energy demands and user preferences dynamically. This highlights the need for data-driven, real-time systems that can adapt to dynamic energy usage patterns and provide intelligent decision support. Therefore, this study addresses this gap by proposing an intelligent and adaptive framework for more effective monitoring, prediction, and optimisation of building energy consumption.

DT technology, together with the wide and fast growth of Internet of Things (IoT) devices and Artificial Intelligence (AI), offers a revolutionary method of managing energy in real time5,6. A DT is a digital twin of a physical system that reflects the real-time operational states and behaviours of a physical system7. In the context of this work, Digital Twins are not just static simulations, but continuously updated virtual replicas that evolve based on live sensor data and enable automated energy decision-making. DTs use IoT-enabled sensory data to create dynamic models of buildings, appliances and energy systems for building energy management context8. These digital replicas facilitate continuous monitoring, predictive analytics, and self-adjusted targeting, which enable improvements in energy efficiency and sustainability9.

This study utilizes data to develop a DT and an AI-based energy consumption classification framework. This framework is proposed by integrating IoT sensors, AI-based classification models, and cloud-based digital representations that enable real-time energy monitoring, consumption analysis, and optimization strategies. The integration of these technologies enables what we define as intelligent energy modeling, where energy usage patterns are automatically analysed and classified, and actionable feedback is generated to enhance building performance. The main goal is to reduce energy waste at a reduced cost to occupant comfort and productive performance while improving energy efficiency through the prediction of occupant operations and automated decision-making.

Figure 1 shows a digitally simultaneous monitoring and energy-consuming building management framework. These real-time data are collected from the temperature, humidity, light levels, occupancy and energy usage using IoT sensors installed on the building. Once this data is transmitted to an IoT gateway, it is relayed to the cloud, creating a virtual model of the building. This data is fed into an AI classification model that analyzes and interprets variations in energy consumption patterns, enabling an interactive energy dashboard to generate actionable insights. This continuous feedback loop between the physical environment and the virtual model enables intelligent, real-time adjustments, allowing the system to optimise both energy usage and building performance dynamically.

Research scope

The proposed framework is made up of three main components:

-

IoT data collection: Real-time monitoring through distributed IoT sensors that capture the environmental and operational parameters of the building.

-

AI-based energy classification: Machine learning models that classify energy consumption patterns and predict anomalies for efficient energy management.

-

DT representation: A cloud-based digital model that mirrors the physical building’s real-time state, facilitating predictive analysis and automated optimisations.

By combining these elements, this research contributes to the development of intelligent energy management strategies, paving the way for sustainable and cost-effective energy consumption in modern buildings.

Contributions

The main research contributions are as follows:

-

The research introduces a framework that merges Digital Twin (DT) with AI algorithms for assessing energy consumption by developing real-time virtual twin representations.

-

The study emphasises a comprehensive data-driven approach that includes data preprocessing, feature engineering, and the application of IoT sensor data. This methodology enables adaptive energy efficiency classification, providing insights into the relationships between building features and energy consumption patterns, ultimately contributing to more sustainable energy management practices.

-

The research evaluates various machine learning models, achieving an impressive accuracy of 98% in classifying buildings into energy-efficient and non-energy-efficient categories, and further, it shows the effectiveness of DT and AI techniques in optimising energy management strategies.

Organisation

The rest of the paper is structured as follows. Section 2 discusses the related work on energy consumption classification. The methodology is outlined in Sect. 3, which details the integration of DTs and AI for energy consumption analysis. Section 4 presents the experimental analysis and results, evaluating the effectiveness of the proposed approach. Discussions and findings are provided in this Section, highlighting key insights and comparisons. Finally, Sect. 5 concludes the paper with a summary of findings and future research directions.

Related work

DTs in smart buildings revolutionise management, monitoring, and optimisation by creating real-time digital replicas of physical structures10. These twins integrate IoT sensors, IT, and OT systems to collect and process real-time data, enhancing efficiency, sustainability, and security11. IoT devices measure temperature, humidity, and user behaviour, enabling precise modelling and analysis12. Blockchain integration ensures data integrity and transparency13, while machine learning and predictive analytics generate actionable insights14. This bi-directional communication allows real-time adaptation, making DTs essential for cost reduction, optimised building management, and sustainable urban development15. Integrating DTs with IoT-enabled control systems has proven to be a viable solution for optimising energy use in smart buildings. Authors in16 developed a DT prototype capable of predicting energy usage under different scenarios using Message Queuing Telemetry Transport (MQTT) interfaces, leading to improved energy efficiency and resource management. Beyond lighting control, advanced data-driven DTs have been developed for building energy management.

Furthermore, authors in17 illustrated the potential of embedding measured energy consumption data within as-built BIM models to encourage energy-saving behaviour and automate data transmission between sensors and digital platforms. Renewable energy integration is another key area where DTs enhance energy efficiency. Authors in18 leveraged artificial neural networks and multi-objective genetic algorithms to optimise energy consumption, achieving savings between 10.8% and 13.2% during the summer. However, further research is needed to assess seasonal variations in energy efficiency19. Proposed a cloud-based DT framework for historic public buildings in Sweden, using machine learning to predict energy consumption and optimise resource use. Meanwhile, authors in20 investigated the impact of DTs on thermal comfort and energy efficiency in smart buildings, yet failed to assess user preferences or quantify energy savings. Advanced AI-driven strategies have further enhanced DT applications for energy efficiency. Additionally, Authors in21 developed a data-model fusion dispatch strategy for fault tolerance and privacy protection, emphasising the growing role of DTs in intelligent energy management. While significant progress has been made in utilizing DTs for energy monitoring, tracking, and optimization, future research should focus on making DT applications more interactive, intuitive, and capable of real-time decision-making to maximize energy efficiency in buildings.

Machine learning (ML) applications in energy consumption prediction have gained significant attention, enabling more accurate and efficient energy management strategies. One of the notable advancements in this field is the integration of Extreme Gradient Boosting (XGBoost) with Multi-Objective Feature Selection (MOFS), as demonstrated by22. The XGB_MOFS model enhances energy consumption prediction by incorporating a causal inference framework to identify influential factors, thereby balancing predictive accuracy and interpretability. Case studies confirmed its robustness, particularly in regulating Tunnel Boring Machine (TBM) energy consumption, making it a reliable tool for complex industrial applications. Time-series forecasting models have also played a crucial role in predicting energy consumption23. Introduced a comparative forecasting framework using Long Short-Term Memory (LSTM) and Bidirectional LSTM (Bi-LSTM). Their model outperformed traditional neural networks and statistical models, such as ARIMA and SARIMA, achieving up to 50.6% lower RMSE in test scenarios. This approach effectively addresses activation imbalances between consumers and prosumers, making it particularly useful in dynamic energy markets where consumption patterns vary over time.

Beyond time-series approaches, hybrid models combining multiple computational techniques have demonstrated superior performance24. Proposed an Agent-Based Modelling (ABM), System Dynamics (SD), and Building Information Modelling (BIM)-integrated ML framework for energy consumption prediction across different indoor layouts. Their approach generated highly precise datasets, allowing ML models to achieve an MSE of 0.03, RMSE of 0.17, and an R² score of 0.92, highlighting its potential for capturing complex energy consumption patterns in diverse architectural configurations. In the domain of HVAC optimisation and thermal comfort,25 employed a Support Vector Machine (SVM), Artificial Neural Network (ANN), Random Forest (RF), and XGBoost for predictive modelling. Using sensor-collected data from Raspberry Pi devices, the study found that RF and XGBoost achieved superior accuracy (96.7% and 9.64%, respectively) in predicting Predicted Mean Vote (PMV) values, ensuring enhanced energy efficiency in building management systems.

Optimisation techniques have also been integrated into ML models to refine energy consumption forecasts26. Introduced a hybrid model combining the Random Forest algorithm with meta-heuristic optimisation to estimate heating energy consumption in residential buildings. The model leveraged six Random Forest-meta-heuristic combinations, considering architectural design, occupancy, and temperature factors, to achieve an \(R^{2}\) of 0.977 and a TIC of 0.0149 using the Ant Lion Optimiser. This approach significantly outperformed traditional forecasting methods, demonstrating the effectiveness of hybrid ML models in optimising residential energy consumption. As data privacy and decentralised processing become increasingly important, adaptive federated learning has emerged as a novel approach to energy forecasting27. Developed an adaptive learning framework that integrates federated learning and edge computing. By aggregating locally trained LSTM models on a central server, the framework effectively reduced communication overhead, lowered forecast errors by 8%, and cut training time by 80% while ensuring data privacy. This decentralised approach offers a scalable and efficient solution for large-scale energy management applications. Deep learning models have demonstrated remarkable potential in specialised energy forecasting applications28. Introduced a deep learning-based CNN approach to predict energy consumption in mosques, utilising 3D laser scanning and energy modelling to generate a simulated dataset. Performance evaluation showed that CNN performed better than Support Vector Regression, measured with MAPE 4.5% and \(R^{2}\) of 0.98. Hence, it proved to be a good tool for energy load optimization in mosques under different operational strategies.

The integration of IoT sensors and MQTT-based communication has significantly contributed to the real-time streaming of data for intelligent energy management. One important contribution in this domain is the Optimised Multi-scale Attention Convolutional Neural Network for Microgrid EMS (OMACNN-MGEMS-Iot) introduced in29. Real-time energy data is processed in the microgrid using an IoT-based energy management system using MQTT. For this purpose, the Regularized Bias-aware Ensemble Kalman Filter (RBAEKF) was used to remove outliers and update missing values, thereby enabling accurate data representation. Further, fault detection was also performed using a Multi-scale Attention CNN (MACNN), and its parameters were optimised using the Sheep Flock Optimisation Algorithm (SFOA). The model was evaluated and confirmed to be effective in reducing costs in current IoT-based microgrid energy management solutions, as well as in aiding real-time monitoring and enhancing cost efficiency. IoT-enabled sensor networks exemplify real-time process control in different industrial sectors beyond microgrids, as explained by30 by investigating the use cases, technologies and challenges of IoT-enabled process monitoring. The study identified data overload, scalability issues, and concerns about energy efficiency as key impediments to widespread adoption. To address these issues, they developed a framework for an IoT sensor network that utilizes the advanced MQTT and CoAP protocols for data transfer and decision-making. Improved operational efficiency, scalability, and safety, along with case studies from smart cities, agriculture, healthcare, and manufacturing, were demonstrated. Nevertheless, there are several areas for further research, including security, energy consumption, and scalability.

Using an IoT-based system comprising linked smart submeters, a mini-PC gateway, and cloud analytics, the authors in31 designed an IoT system for real-time tracking of energy use. For essential energy-consuming gadgets, such as chillers, boilers, and HVAC systems, this system was implemented using Modbus over RS-485 to fetch data. Node-RED was utilized to process the data, and MQTT was employed to upload it to the cloud. The system provides real-time information on energy use, which industries can use to improve their consumption patterns and minimize waste, thereby increasing operational efficiency. Findings proved the system scalable, capable of adaptive control, and secure real-time monitoring, making it an effective tool for industries interested in energy management strategies based on data. The security and reliability of IoT-enabled energy monitoring are crucial. Authors in32 devised an intelligent and secure monitoring network for hybrid energy systems using AI coupled with MQTT and CoAP protocols. Typically, traditional energy monitoring systems exhibit high latency and limited scalability, which severely reduces their real-time responsiveness. This paper presented advanced IoT communication protocols for low latency and highly efficient data streaming. They evaluated the system in terms of a 35% reduction in latency, a 96.87% increase in data transmission efficiency, a 28% increase in reliability, a 25% increase in scalability, and a 20% reduction in energy consumption. These results highlight the effectiveness of secure MQTT-based frameworks in enhancing observability and control in hybrid energy environments.

Proposed framework

This section describes the data preprocessing method, feature engineering, and classification of buildings into energy efficiency categories. This approach involves a well-structured process for building an energy classification model using DT and AI through feature engineering, preprocessing, and dataset selection, as illustrated in Fig. 2. This process begins with the Data Collection stage, which relies on the UCI dataset (for building features), IoT sensors (for real-time sensor streams via MQTT), and DT parameters as they appear in building plans, among other sources, to feed the system with the necessary data for analysis.

After data collection, it underwent preprocessing, which consisted of several steps. First, we perform data cleaning from the missing values and normalise (feature values) using Min-Max scaling to make them standard. To extract meaningful attributes, feature engineering is performed, and then energy labels are formed into low-energy and high-energy based on median values. Secondly, a DT is created as a virtual representation of a building. JSON/XML schema structures the model, integrating sensor data for real-time updates. However, its state is continually updated by the continuously received new sensor data to maintain a perfect reflection of real-world conditions. It also supports simulation scenarios to ’what-if’ and predicts future energy consumption based on different configurations and external factor influences. The third phase of AI Classification is when a random forest classifier is trained using an 80/20 train-test split. The trained model receives real-time data from IoT sensors via MQTT, classifies energy efficiency, and updates the visualization dashboard with 3D scatter plots. A feedback mechanism ensures that AI insights refine the DT, thereby improving prediction accuracy.

Dataset selection and preprocessing

Dataset selection is crucial in determining the model’s reliability and relevance. This study uses the publicly available “Energy Efficiency” dataset33. The dataset was generated by34 using the Ecotect building energy simulation software, which simulates energy usage based on varying thermal and design parameters. 768 samples were generated by simulating 12 base building shapes with multiple configurations, varying eight critical building features: Relative Compactness, Surface Area, Wall Area, Roof Area, Overall Height, Orientation, Glazing Area, and Glazing Area Distribution. These characteristics were selected due to their significant influence on building thermal behaviour. The dataset includes two continuous target variables: Heating Load (y1) and Cooling Load (y2), representing energy demand under standard climatic assumptions. Table 1 summarises the input and output variables used in this study, which were derived from simulated energy performance data.

It is important to clarify that no physical IoT sensors were deployed in this study, as the dataset is entirely simulation-based. Consequently, no specific sensor types, deployment locations, or collection intervals exist. To bridge this gap and align with the DT framework, we synthetically mapped the simulation parameters to a virtual building environment using a custom JSON-based schema (see Sect. 3.2). This approach aligns each input feature with its corresponding architectural or operational component, effectively emulating what real-time IoT sensors (e.g., for temperature, light, or occupancy) would report in a physical smart building. Each sample thus represents a DT of a unique building configuration with simulated, sensor-equivalent outputs.

This abstraction facilitates integration with AI models and supports future extensions toward real-time sensor-based DT systems. Additionally, a descriptive analysis of the dataset’s input features is provided to enhance understanding of the dataset’s characteristics and statistical distribution. Exploratory Data Analysis (EDA) was conducted to understand the statistical properties, distribution, and variability of the dataset’s features. The dataset comprises 768 samples and includes eight numerical input features that influence building energy performance, along with two continuous target variables: Heating Load (Y1) and Cooling Load (Y2). Table 2 provides summary statistics of the dataset features.

The summary statistics indicate a broad range of building configurations. Relative Compactness, Surface Area, Wall Area, and Roof Area represent structural dimensions, while Glazing Area and its distribution capture facade properties. Orientation and Height introduce additional variability in thermal behaviour. Both target variables (Heating Load and Cooling Load) exhibit moderate variance, supporting the model’s need for non-linear modelling approaches.

The Algorithm 1 establishes a DT for a building by integrating real-time IoT sensor data for energy monitoring and classification. It begins by initialising the DT with essential building parameters, such as area, Height, and wall structure, while setting up an energy profile and a sensor mapping system. The twin continuously updates its state by processing incoming data from an MQTT stream, mapping sensor values like temperature, humidity, and energy consumption to their respective locations. The algorithm calculates and normalises the total energy load based on the building’s area. Additionally, it enables scenario simulation by modifying DT parameters and predicting changes in energy usage. The main execution loop listens for new sensor data, updates the DT, calculates energy metrics, and periodically classifies energy efficiency using an AI model. When a predefined time interval passes, the system updates the classification and refreshes the dashboard, ensuring real-time monitoring of the building’s energy performance.

The Algorithm 2 integrates DT technology with AI-based energy classification through a structured four-phase approach. First, it preprocesses the energy dataset by removing missing values, normalising features, and labelling energy usage as “High” or “Low” based on the median consumption. Next, it creates DTs for buildings, mapping relevant structural features and energy consumption data. A Random Forest Classifier is then trained using historical data, with model evaluation performed on test data. Finally, the algorithm utilizes MQTT integration to receive real-time updates from IoT sensors. When new sensor data arrives, the corresponding DT updates its energy profile and extracts key features for classification using the trained model. The system continuously processes sensor data, predicts energy efficiency, and maintains an up-to-date digital representation of energy consumption trends for better decision-making.

Data preprocessing involves transforming raw data into a structured and clean format suitable for machine learning models. This step includes data cleaning, handling missing values, and feature engineering. Data cleaning is essential to ensure the integrity and consistency of the dataset. The dataset is loaded from the UCI Machine Learning Repository using its direct URL. Column names are updated for better readability by replacing default names with more descriptive ones such as Relative_Compactness, Surface_Area, and Heating_Load (HL). The dataset’s shape is also printed to provide an overview of the number of samples and features. Descriptive statistics are computed using the following Eq. (1) to gain insights into the dataset.

where D represents the dataset, and \(D_{stats}\) contains the computed summary statistics for numerical features.

Handling missing values is important to prevent biased results and inefficiencies in machine learning models. The dataset is checked for missing values, and appropriate imputation techniques are applied if necessary to ensure data completeness. Feature engineering involves transforming and creating new features to enhance model performance. A new feature, EF, is introduced, representing the total energy consumption of a building by Eq. (2):

where HL and \(Cooling\_Load (CL)\) are the building’s heating and cooling energy requirements.

To convert EF into a binary classification problem, the median value is used as a threshold, as shown in Eq. (3):

The use of the median ensures that the energy consumption is divided into two groups that are as balanced as possible based on the central tendency of the dataset. Buildings with energy consumption below the median are labelled Low Energy, while those above the median are labelled High Energy. A new binary column, Binary_Label, is added to the dataset as shown in Eq. (4), where:

This classification method was chosen because it provides a simple, data-driven approach to creating the two energy groups, without requiring external benchmarks or arbitrary thresholds. The target variable, Binary_Label, is encoded using LabelEncoder, converting textual labels into numerical values (0 for Low Energy and 1 for High Energy). The feature set (X) is prepared by removing unnecessary columns as shown in Eq. (5):

To ensure all features have zero mean and unit variance, feature standardisation is performed using StandardScaler, represented by Eq. (6):

where \(\mu _X\) and \(\sigma _X\) are the mean and standard deviation of X. Finally, the dataset is split into training and testing sets using a 70–30% split, as shown in Eq. (7).

This ensures the model is trained on a subset of data and evaluated on unseen data, improving its generalisation performance.

To create multi-class labels for energy efficiency, this study calculates efficiency thresholds based on the tertiles of the ’EF’ values, effectively dividing the data into three equal groups: low, medium, and high energy efficiency.

A function named assign_efficiency_label is defined to categorise each building’s energy efficiency based on the calculated thresholds. This function is applied to the ’EF’ column to create a new column, ’Multiclass_Label’, which contains the corresponding energy class for each building. The distribution of these multi-class labels is printed to provide an overview of the number of buildings falling into each category and the specific efficiency thresholds used for classification. Then, prepares the data for modelling by dropping the original ’HL’, ’CL’, ’EF’, and ’Multiclass_Label’ columns from the feature set, resulting in a new DataFrame X that contains only the relevant features for classification. The target variable y_multiclass is defined as the multi-class labels. The multi-class labels are encoded into a numerical format to facilitate model training using LabelEncoder, which transforms the categorical labels into integers. The encoded class mapping is printed to show the relationship between the original labels and their corresponding encoded values. Next, the features are standardised using StandardScaler, ensuring they have a mean of zero and a standard deviation of one. The dataset is then split into training and testing sets using train_test_split, with 30% of the data reserved for testing. The stratified parameter is used to maintain the proportion of each class in both the training and testing sets. Finally, the original feature names are stored for later reference, and a dictionary named model_results is initialised to store the performance metrics of various models that will be trained, including accuracy, precision, recall, F1-score, training time, and inference time.

DT modeling

Developing a comprehensive JSON-based model forms the cornerstone of our DT implementation for energy efficiency classification. This structured representation encapsulates buildings’ physical and operational characteristics as machine-readable, hierarchical data objects. The schema begins with fundamental architectural parameters, including surface area, wall area, roof dimensions, height, orientation, and glazing properties, all of which are critical determinants of thermal behavior. For instance, a representative JSON object may begin as follows:

{ “building_id”: “B001”, “surface_area”: 450.0, “wall_area”: 220.0, “roof_area”: 180.0, “height”: 15.0, “orientation”: “South”, “glazing_area”: 12.5, “glazing_distribution”: “Uniform”, “hvac”: { “type”: “VRF”, “efficiency_rating”: “A++” }, “lighting”: { “type”: “LED”, “control”: “automatic” }, “occupancy_pattern”: { “weekday”: “8AM-6PM”, “weekend”: “10AM-4PM” }, “sensors”: { “temperature”: 22.4, “humidity”: 45, “co2”: 550 }, “last_updated”: “2025-05-10T09:00:00Z” }

This example demonstrates how key-value pairs enable fast, semantically meaningful I/O updates from IoT sensor streams. The schema is specifically designed for both human readability and computational efficiency. Each building instance, represented in JSON, acts as a virtual counterpart that is continuously updated with live data, ensuring the DT remains synchronized with the physical asset. These current representations directly inform the AI classification model for real-time analysis. Additionally, the schema incorporates metadata such as “created_at”: “2025-01-01T12:00:00Z” and “version”: “1.3.2” to support traceability and version control throughout the DT’s lifecycle. The JSON format’s compatibility with cloud platforms, web dashboards, and analytics pipelines ensures broad interoperability across the entire DT ecosystem.

AI model selection

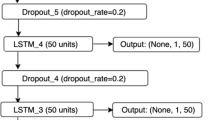

Binary and multi-classification are implemented and evaluated using four different machine learning models: Random Forest, Deep Neural Network (DNN), Long Short-Term Memory Network (LSTM), and Bidirectional Long Short-Term Memory Network (BiLSTM). The dataset is split into training (X_train, y_train) and test (X_test, y_test) sets and then each model is trained. The Random Forest model is trained first using the RandomForestClassifier with 100 decision trees, and its performance is evaluated using accuracy, precision, recall, and F1-score. Next, a deep neural network is defined using the Keras Sequential API, consisting of three dense layers with a dropout layer, and utilizing the Adam optimizer and binary cross-entropy loss. The model is trained with early stopping and model checkpointing to save the best-performing model. The trained model is then evaluated in a manner similar to the Random Forest model. The LSTM model is designed for sequential data modelling with two LSTM layers, dropout layers and dense layers, requiring the input data to be reshaped into a 3D format (samples, timesteps, features). The model is trained with the exact early stopping mechanism and then evaluated on test data. Finally, the Bidirectional LSTM (BiLSTM) model is implemented, consisting of bidirectional LSTM layers that process the input sequence in forward and backwards directions to enhance feature learning. Like the other models, it is trained with early stopping and evaluated for accuracy and other performance metrics. Each model’s training and inference times are measured throughout the script, and their performance metrics are saved using the save_model_performance function.

Hyperparameter optimization

To ensure optimal performance across all models, we conducted empirical tuning of hyperparameters using grid search and validation monitoring. For the Random Forest classifier, the number of trees (n_estimators) was set to 100 after testing values in the range [50, 100, 150], where 100 yielded the best trade-off between accuracy and training time. For the DNN, LSTM, and BiLSTM models, we selected hidden layer sizes, dropout rates, and batch sizes based on experiments using validation loss as the criterion for selection. Specifically, we fixed the batch size at 32, dropout at 0.2, and employed early stopping with a patience of 20 epochs to prevent overfitting. The number of epochs was capped at 100, with early stopping typically halting training around 70–80. All deep models utilized the Adam optimizer and binary cross-entropy loss, with layer configurations (e.g., 64-32-16 neurons) determined through trials that prioritized convergence speed and validation performance. Table 3 explains the Hyperparameter Optimisation Summary for All Models.

Implementation stages

Five key steps are involved in the process: data preprocessing, AI model training, IoT data simulation, real-time prediction, and visualisation.

Step 1: Data preprocessing: The first step is to prepare the dataset for training the AI model. The dataset is loaded, and its columns are renamed for easy readability. Another essential part of this step is to create an energy efficiency label by categorizing buildings into different energy load categories. The median energy load is used as a threshold for separating the consumption load of buildings into high-energy-consuming and low-energy-consuming categories. Supervised learning requires this labelling so the AI model can classify buildings based on energy efficiency.

Step 2: AI model training: A Random Forest model is trained with the processed dataset. The Random Forest algorithm is one of the powerful ensemble learning methods that builds a bunch of decision trees and aggregates their predictions to achieve high accuracy and robustness. Three validity criteria are used to evaluate the model: accuracy, precision, and recall, which measure its ability to classify buildings correctly. Overall correctness is focused on accuracy; precision explains how many energy-efficient buildings the model predicts, and recall determines how often the model picks up all the energy-efficient buildings. It ensures the model’s reliability before being deployed to a real-time IoT environment.

Step 3: IoT data simulation: As real-time energy efficiency prediction entails generating the IoT sensor data, we simulated it by creating random sensor data for several buildings. The readings presented are similar to those found in real-world smart building data, including temperature, humidity, energy usage, and other environmental factors. After that, the simulated data is published to an MQTT broker under the building/energy topic. MQTT is a lightweight messaging protocol specifically designed for IoT applications that require real-time data transmission between devices. This step is used to create a dynamic data stream that will be used to make real-time AI predictions.

Step 4: Real-time prediction: To perform real-time classification, the AI model needs to keep analysing data fed by the sensor. The system listens to the MQTT “building/energy” topic to receive new IoT sensor readings. The Random Forest model is trained to build hypotheses and classify each incoming data point as high or low energy efficient by passing it through the output of the live sensor values. This real-time decision-making capability enables instant monitoring and alerts based on inefficient signs in the buildings.

Step 5: Visualization: Plotly is used to visualize the classification results with 3D scatter plots. Interactive visualisation plays a key role in the entire visualisation process, and the most popular ones include plots. The classification results are presented in a 3D scatter plot, which maps different sensor readings across three dimensions to display the classification of buildings based on their energy efficiency. It provides a clearer visual representation of the AI model’s predictions and energy consumption patterns across buildings, enabling stakeholders, such as facility managers and energy analysts, to gain a deeper understanding of the model’s predictions. Integrating IoT with AI enables real-time monitoring of energy efficiency, facilitating smart building management and sustainable energy optimization.

Results and discussion

Table 4 provides a comparative analysis of four models: Random Forest, Deep Neural Network (DNN), Long Short-Term Memory (LSTM), and Bidirectional LSTM (BiLSTM) based on precision, recall, F1-score, and support for two classes (Class 0 and Class 1). For Random Forest, Class 0 has a precision of 1.00, a recall of 0.97, and an F1-score of 0.98, while Class 1 has a precision of 0.96, a recall of 1.00, and an F1-score of 0.98. The weighted average metrics show 98% accuracy. The DNN model presents identical results, with Class 0 and Class 1 having the same precision, recall, and F1-score values, leading to 98% accuracy. The LSTM model shows slight variations, where Class 0 has a precision of 0.99, a recall of 0.97, and an F1-score of 0.98, and Class 1 has a precision of 0.96, a recall of 0.99, and an F1-score of 0.98. The overall accuracy remains 98%. The BiLSTM model produces the same results as LSTM, with Class 0 having 0.99 precision, 0.97 recall, and 0.98 F1-score, and Class 1 having 0.96 precision, 0.99 recall, and 0.98 F1-score, maintaining 98% accuracy.

Table 5 presents the average feature importance values across four models, Random Forest, DNN, LSTM, and BiLSTM, for a binary classification task. Among all the features, Overall_Height stands out with the highest average importance (0.1032), indicating its relatively greater influence on model predictions. Roof_Area, Wall_Area, and Surface_Area follow, though with significantly lower importance scores, suggesting a modest contribution to predictive performance. Features like Relative_Compactness, Glazing_Area, and Glazing_Area_Distribution have minimal influence, while Orientation shows a negative importance score, implying it may have a negligible or even slightly adverse effect on the models’ performance.

Figure 3 presents the graphical representation of Model Performance Analysis for a binary class. Figure 3a presents the confusion matrices for different models used in the study. A confusion matrix is a key tool in classification analysis, displaying the number of true positives, true negatives, false positives, and false negatives. The RF and DNN models performed accurately, correctly classifying 122 low-energy and 105 high-energy buildings. There were 4 Low Energy buildings misclassified as High Energy, and no High Energy buildings were misclassified. Both models detect High-energy buildings accurately. The LSTM and BiLSTM models correctly classified 122 low-energy and 104 high-energy buildings. There were 4 Low Energy buildings misclassified as High Energy and 1 High Energy building misclassified as Low Energy. Figure 3b shows the training and validation accuracy and loss over epochs for deep learning models: DNN, LSTM, and BiLSTM. Each subplot represents a different model, allowing for a comparative analysis of how each architecture learns over time. The x-axis represents the number of epochs, while the y-axis represents accuracy and loss values. The accuracy graph depicts the progression of training and validation accuracy over 80 epochs for the DNN model. The training accuracy starts at 65% at the \(0^{th}\) epoch and increases rapidly, reaching around 95% by the \(2({\text{nd}})\) epoch and showing a slight fluctuation stabilising close to 98–99%. The validation accuracy starts at 89% at \(0^{th}\) epoch. It increases to 97%, remaining close to the training accuracy, and the loss graph presents the reduction in training and validation loss for the DNN model. The training loss starts high at approximately 0.6 in the \(0^{th}\) epoch, rapidly decreases to 0.1 by the \(10{\text{th}}\) epoch, and continues to decline gradually. The validation loss follows a similar downward trend. The accuracy graph for the LSTM model shows a sharp increase in training accuracy within the first few epochs, stabilising around 96-97% after the \(10{\text{th}}\) epoch. The validation accuracy mirrors this trend. The loss graph for the LSTM model depicts a steep decline in training loss from 0.7 in the \(1{\text{st}}\) epoch to a much lower value by the \(10{\text{th}}\) epoch, eventually stabilising at a minimal loss. The validation loss follows a similar trend. The accuracy graph for the BiLSTM model shows a rapid rise in training accuracy within the first few epochs, with a steady level of around 98% maintained throughout. However, the validation accuracy exhibits slight fluctuations. Moreover, the loss graph for the BiLSTM model shows a rapid drop in training loss from approximately 0.65 in the \(1{\text{st}}\) epoch to a low, stable value. The validation loss follows a similar declining trend.

Figure 4 presents the accuracy scores obtained during a 5-fold cross-validation process for the Random Forest model. The x-axis represents different folds, while the y-axis displays accuracy values. Each bar corresponds to a specific fold, and a horizontal line represents the mean accuracy of 0.9818 across all folds. The accuracy values range from a low of 0.9481 in the first fold to a high of 0.9869 in the fourth, indicating that the model’s performance varies between attempts. Notably, four of the five folds achieve accuracy levels above the mean, suggesting that the model is generally performing well during the validation process. The slight variability in accuracy across the folds may indicate differences in the data distribution or the complexity encountered in each subset. The RF model consistently achieves high accuracy values in classification tasks.

Figure 5 presents a heatmap visualisation of the correlation between building features and energy efficiency. Darker colours indicate strong relationships, either positive or negative. “Relative Compactness” negatively correlates with heating load, meaning more compact buildings require less heating energy. Conversely, a strong positive correlation between “Surface Area” and “Cooling Load” suggests that larger buildings consume more cooling energy. The heatmaps reveal key patterns in building attributes and energy consumption. Surface Area and Wall Area combinations within the ranges of 514–551 and 330–416 show the highest energy usage, suggesting inefficient designs. Surface Area vs. Overall Height indicates that shorter, wider buildings consume more energy, favoring taller, compact designs for greater efficiency. Wall Area vs. Glazing Area highlights that high Wall Areas with minimal Glazing lead to increased energy consumption, underscoring the role of glazing in thermal regulation. These findings emphasise the impact of architectural choices on energy efficiency.

Figure 6 presents violin plots illustrating the distribution of various building features across energy efficiency classes. These plots reveal differences in feature distributions and help identify outliers that may influence model predictions. Low-energy buildings tend to have higher Relative Compactness (\(\approx\)0.9), lower Surface, Wall, and Roof Areas, and reduced Overall Height (<5 units), suggesting that compact designs minimize energy consumption. In contrast, High-energy buildings exhibit larger surface exposure, higher glazing areas, and greater Height (up to 7 units), contributing to increased energy use. Glazing Area Distribution is more uniform in low-energy buildings, while high-energy structures show greater variability. These results highlight the impact of building compactness, surface exposure, and glazing on energy efficiency, emphasising the need for optimised design strategies.

Figure 7a presents a 3D bubble chart visualising the relationships between the Glazing Area, Wall Area, and Overall Height concerning energy efficiency. Each point represents a building sample, with colour indicating energy efficiency class and bubble size corresponding to the glazing area. The graph reveals that buildings with higher glazing areas tend to cluster towards greater overall heights and larger wall areas, contributing to higher energy efficiency. Green points, representing lower energy efficiency, are concentrated in the lower regions of the graph, where buildings have smaller glazing, wall areas, and heights. In contrast, red points, signifying higher energy performance, occupy the upper Section, indicating that larger dimensions correlate with improved efficiency. This trend suggests a positive relationship between increased glazing area and energy performance, emphasising the importance of structural dimensions in energy-efficient building design. Figure 7b presents a 3D scatter plot illustrating the relationships between Surface Area, Wall Area, and Overall Height, with points colour-coded by energy efficiency classification and sized according to Glazing Area. Green circles represent correctly classified low-energy buildings, which cluster at lower Surface and Wall Areas, indicating that smaller dimensions correspond to lower energy consumption. Red crosses denote misclassified low-energy buildings, which share attributes similar to those of correctly classified buildings but fall outside expected regions. Red circles indicate high-energy buildings positioned at higher values across all axes, confirming that larger Surface and Wall Areas correlate with increased energy usage. The visualisation effectively distinguishes between low and high energy classifications, revealing how building dimensions influence energy efficiency.

Figure 8 presents a scatter plot comparing Surface Area and Wall Area, with points colour-coded by energy class and styled to indicate correct or incorrect predictions. The x-axis represents the Surface Area, while the y-axis represents the Wall Area. Lighter shades indicate Low-energy buildings, whereas darker shades represent High-energy buildings. Grey points mark correctly classified buildings, while black points highlight misclassified ones. The plot reveals a correlation between higher Wall Areas and larger Surface Areas. Misclassified points indicate areas where the model’s predictions did not align with actual classifications, highlighting potential limitations in accuracy.

Figure 9 contains box plots summarising the distribution of key building features across Low and High Energy classes. Each plot shows the median, quartiles, and outliers, allowing class comparison. Low Energy buildings tend to have larger Surface Areas (median \(\approx\)750) and Roof Areas (\(\approx\)200) but lower Relative Compactness (\(\approx\)0.7), indicating a less compact design. In contrast, High-energy buildings are generally more compact (\(\approx\)0.9) with smaller Surface Areas (\(\approx\)650) and Roof Areas (\(\approx\)140), suggesting efficiency-driven architectural choices. Overall, Height remains similar across both classes ( 5 units), indicating it is less predictive of energy classification. These results emphasise the impact of surface exposure and compactness on energy efficiency.

Figure 10 shows a histogram with a Kernel Density Estimate (KDE) overlay, visualising the overall distribution of energy efficiency values. Vertical lines mark the median and quantile thresholds, offering insights into the dataset’s distribution. The graph reveals a prevalent energy efficiency pattern, with most values clustering around lower levels, particularly between 20 and 40. The high frequency at approximately 30 points suggests that many units operate at relatively low efficiency, indicating potential areas for improvement. The median of 40.97 indicates that, while the population is skewed towards lower efficiencies, a segment of higher-performing units also exists. The clear distinctions between the 33% and 67% thresholds further outline the spread of efficiency levels; with many units falling below the 33% threshold, it presents an opportunity for energy improvements.

Figure 11 illustrates multiple bar plots comparing training and inference time for different models. Random Forest has the fastest training time, making it ideal for scenarios where quick model training is essential. However, DNN, LSTM, and Bi-LSTM require significantly longer training times. Random Forest remains the most efficient for inference time, while LSTM and Bi-LSTM exhibit higher inference times. Random Forest offers efficiency in training and inference, whereas DNN, LSTM, and Bi-LSTM provide better accuracy at the cost of increased computational demands. The analysis highlights the trade-offs between performance and efficiency when selecting a model for binary classification tasks.

Figure 12 represents the graphical visualisation of Permutation Testing of all models for binary data. The bar graph in Fig. 12a illustrates the permutation importance scores of eight architectural features across all predictive models. Permutation importance quantifies how much each feature contributes to the model’s performance, with higher scores indicating greater importance. These values are shown along the y-axis, representing the mean decrease in performance when a feature is randomly shuffled, along with error bars to indicate standard deviation. The most important feature across all models is Overall Height. For the DNN model, it has the highest importance score of approximately 0.155, followed by BiLSTM at around 0.145, and LSTM at about 0.115. The Random Forest model shows a minimal value for this feature (close to 0.005) but with a larger error margin, indicating potential variability in its contribution. Roof Area is the second most influential feature for the DNN (0.095) and has moderate importance in the BiLSTM (0.03) and LSTM (0.028) models. Its influence is minimal in Random Forest, where the score is close to 0.005. Features such as Wall Area, Surface Area, and Relative Compactness exhibit lower importance values, ranging from 0.01 to 0.03, across LSTM-based models and DNN. For example, Wall Area scores 0.017 in DNN and 0.03 in LSTM and BiLSTM. Surface Area has values near 0.012–0.022, while Relative Compactness peaks around 0.02 in BiLSTM. The remaining features, Glazing Area, Glazing Area Distribution, and Orientation, have very low importance across all models, with scores mostly at or near 0, indicating that they have a negligible influence on model accuracy. Overall Height and Roof Area are the dominant features, especially in neural network-based models, indicating their strong predictive power for the target variable. The heatmap in Fig. 12b visualises the feature importance scores across four models based on permutation importance. Each cell contains a numeric value representing the decrease in model performance when the corresponding feature is shuffled, with higher values indicating greater importance. From the heatmap, Overall_Height is the most influential feature across all deep learning models. It achieves the highest importance score of 0.1532 in the DNN, followed by 0.1446 in BiLSTM and 0.1169 in LSTM. In contrast, its importance in the Random Forest model is negligible, even slightly negative (− 0.0017), indicating a possible lack of sensitivity to this feature or noise in its estimation. Roof_Area ranks as the second most important feature, particularly for the DNN (0.0961), and also shows moderate importance in LSTM (0.0294) and BiLSTM (0.0260). The Random Forest model shows a very low importance for this feature (0.0017). Other features like Wall_Area, Surface_Area, and Relative_Compactness have lower but still noticeable contributions. For example, Wall_Area holds importance scores of 0.0130, 0.0294, and 0.0303 in the DNN, LSTM, and BiLSTM, respectively. Surface_Area scores range from 0.0052 in Random Forest to 0.0208 in BiLSTM. Relative_Compactness remains low in all models, peaking at 0.0095 in LSTM. In contrast, features such as Glazing_Area, Glazing_Area_Distribution, and Orientation show minimal to no importance across all models. For instance, all models assign 0.0000 importance to Glazing_Area_Distribution and Orientation, while Glazing_Area only has a slightly positive score (0.0130) in Random Forest and 0.0000 elsewhere. Orientation even has a slightly negative score (−0.0043) in Random Forest, indicating it may introduce noise. The bar chart in Fig. 12c displays the permutation testing time required by four models. The y-axis uses a logarithmic scale to represent time in seconds, highlighting the computational efficiency of each model during the evaluation of the importance of permutation. The Random Forest model is the most efficient, completing the permutation testing in just 0.53 seconds, significantly faster than all other models. In contrast, the DNN model takes 5.63 seconds, over ten times longer than Random Forest, reflecting the increased complexity and computation involved in neural network models. Moving to recurrent neural networks, LSTM requires 12.73 seconds, more than twice the time of DNN, indicating its additional overhead due to temporal sequence processing. The BiLSTM model, being the most computationally intensive, takes 20.73 seconds, making it nearly 40 times slower than Random Forest and almost four times slower than DNN.

Table 6 provides a comparative analysis of four models, Random Forest, DNN, LSTM, and BiLSTM, which are summarised through precision, recall, and F1-score for three classes, along with weighted averages. Random Forest achieves the highest overall accuracy, with a weighted precision, recall, and F1-score of 0.97. It excels in Class 0, attaining a perfect recall of 1.00 and a precision of 0.95, ensuring no false negatives. For Class 1, it maintains a strong balance with a precision and recall of 0.97, while for Class 2, its recall slightly drops to 0.92, though it retains a high F1-score of 0.95. DNN closely follows, with a weighted precision, recall, and F1-score of 0.94. It performs consistently across Class 0 and Class 1, achieving a precision and recall of 0.96. However, its performance in Class 2 is slightly lower, with a precision of 0.90 and a recall of 0.92, leading to an F1-score of 0.91. LSTM exhibits a decline in classification performance, with a weighted precision and recall of 0.84. It struggles particularly with Class 2, where precision drops to 0.79 and recall to 0.69, resulting in an F1-score of 0.74. Class 1 achieves a relatively higher recall of 0.90 but a lower precision of 0.79, leading to an F1-score of 0.84. Class 0 remains stable with a precision and recall of 0.92. BiLSTM improves upon LSTM’s performance, reaching a weighted precision, recall, and F1-score of 0.88. It achieves strong results for Class 0, with a precision of 0.97 and a recall of 0.95. For Class 1, recall increases to 0.91, but precision is slightly lower at 0.83, leading to an F1-score of 0.87. Class 2 shows an improvement compared to LSTM, with a precision of 0.85 and a recall of 0.79, resulting in an F1-score of 0.82.

Table 7 presents the average feature importance across four models, Random Forest, DNN, LSTM, and BiLSTM, for a multi-class classification task related to building performance. The results indicate that Glazing Area and Wall Area are the most influential features, with the highest average importance values of 0.2048 and 0.1987, respectively, suggesting their significant role in model predictions due to their impact on building energy efficiency. Roof Area and Overall Height show moderate importance, while Relative Compactness, Glazing Area Distribution, Surface Area, and Orientation have relatively lower average importance, implying they contribute less to the predictive performance.

Figure 13 presents the graphical representation of model performance analysis for multi-class. Figure 13a presents the confusion matrices of the multi-classes of all four models. The confusion matrix of models classifies energy levels into high, medium, and low energy categories. The RF model correctly classifies all 77 High Energy instances without misclassification. For Low Energy, 75 instances are accurately classified, while 2 instances are misclassified as Medium Energy. For Medium Energy, 71 instances are correctly classified, whereas 4 instances are misclassified as High Energy and 2 as Low Energy. Overall, the model demonstrates high accuracy. The DNN model correctly classified 73 High and Low Energy instances, respectively, while 4 were misclassified as Medium Energy. For Medium Energy, 71 instances are correctly classified, whereas 3 instances are misclassified as High Energy and 3 as Low Energy. The LSTM model correctly classified 71 and 61 instances of High and low energy, respectively, while 6 of high and 8 of low energy are misclassified as Medium Energy. For Medium Energy, 53 instances are correctly classified, whereas 6 instances are misclassified as High Energy and 18 as Low Energy. The BiLSTM model correctly classified 73 and 70 instances of High and Low Energy, respectively, while 4 high and 7 low energy instances are misclassified as Medium Energy. For Medium Energy, 61 instances are correctly classified, whereas 2 instances are misclassified as High Energy and 14 as Low Energy. Figure 13b shows the training and validation accuracy and loss over epochs. The DNN accuracy graph shows that the training accuracy starts at approximately 60% in the \(1{\text{st}}\) epoch and steadily increases, reaching around 90% after the \(50{\text{th}}\) epoch, continuing to improve slightly until the final epoch. The validation accuracy begins lower but follows a similar increasing trend, stabilising around 85% with fluctuations. Similarly, the LSTM accuracy graph indicates that the training accuracy rises rapidly from 55% in the \(1{\text{st}}\) epoch to around 85% by the \(20{\text{th}}\) epoch and remains stable thereafter. The validation accuracy also increases but fluctuates more, stabilising near 80% after the \(30^{th}\) epoch. For the BiLSTM model, the training accuracy starts at 60%, increasing steadily to about 90% after the \(50{\text{th}}\) epoch. The validation accuracy initially lags but shows a consistent upward trend, stabilising at approximately 85% with some fluctuations. The loss graphs illustrate the reduction in training and validation loss over the epochs. The DNN loss graph shows that the training loss starts at approximately 1.0 in the \(1{\text{st}}\) epoch and declines steadily, reaching around 0.2 by the final epoch. The validation loss follows a similar downward trend but remains slightly higher than the training loss. Similarly, the LSTM loss graph indicates that the training loss begins at approximately 1.1, dropping sharply within the first few epochs and then stabilising around 0.3. The validation loss follows a similar pattern but fluctuates slightly more. The BiLSTM loss graph shows that the training loss decreases rapidly from nearly 1.0 in the \(1{\text{st}}\) epoch, reaching 0.2 by the \(50{\text{th}}\) epoch. The validation loss also declines but remains slightly higher, stabilising around 0.3.

Figure 14 presents a bar chart illustrating the accuracy scores obtained during the 5-fold cross-validation of the Random Forest model. Fold 1 achieves the highest accuracy (0.9026), indicating strong model performance, while Fold 2 (0.8701) and Fold 3 (0.8766) maintain relatively consistent results. However, a noticeable decline occurs in Fold 4 (0.8039) and Fold 5 (0.7778), suggesting potential challenges in these datasets. The mean accuracy across all folds is 0.8462, demonstrating overall strong performance but highlighting variability.

Figure 15 displays a combined metrics comparison chart for multiple models. Random Forest is optimal for speed-focused scenarios, while LSTM and BiLSTM may be better suited for tasks that require complex pattern recognition despite their higher computational costs.

Figure 16a provides a 3D visualisation of the relationships among ’Surface_Area’, ’Wall_Area’, and ’Overall_Height’. High-energy buildings (red circles) are primarily clustered in the upper and back regions, suggesting they generally have larger surface and wall areas with greater Height. Medium-energy buildings (orange markers) show correct and incorrect classifications, indicating feature overlap with other energy classes. Low-energy buildings (green markers) also exhibit some misclassifications, particularly overlapping with medium-energy buildings. The presence of incorrect classifications (crosses) suggests that the current feature set may not always provide clear separability between energy classes. This visualisation highlights the need for refined classification criteria or additional features to improve accuracy and reduce misclassification. Figure 16b presents a plot illustrating the relationship between ’Surface_Area’ and ’Wall_Area’, with points coloured according to the energy class. Medium Energy buildings (red points) cluster around higher Wall Area values but exhibit more incorrect classifications, indicating challenges in distinguishing this class. High-energy buildings (orange points) are fewer but consistently classified correctly, suggesting more apparent feature separation. Low Energy buildings (green points) form a dense cluster at lower Surface and Wall Area values, showing a distinct trend in classification. The presence of misclassified Medium Energy samples suggests that Surface Area and Wall Area effectively differentiate High and Low Energy classes but may not provide sufficient distinction for Medium Energy, leading to higher misclassification rates.

Figure 17 presents box plots illustrating the distribution of selected features across energy efficiency classes, highlighting variations in medians, interquartile ranges, and potential outliers. The analysis reveals that high-energy-efficient buildings typically have smaller glazing and surface areas, supporting a compact design that minimises heat loss. In contrast, medium-energy buildings exhibit larger surface and wall areas, suggesting less efficient energy use. Relative compactness is lowest in high-energy buildings, reinforcing that compact structures enhance energy retention. Roof areas are larger in medium-energy buildings, whereas low and high-energy buildings favour more compact designs. Glazing area distribution and overall Height remain relatively consistent across energy classes, indicating minimal impact on efficiency. Orientation is highest in medium-energy buildings, suggesting that strategic adjustments in building orientation may contribute to improved efficiency. These findings emphasise that compact, well-optimised designs are key to high energy efficiency, while medium-energy structures may reflect less efficient architectural choices.

Figure 18 presents a kernel density estimate (KDE) plot that visualises the distribution of energy efficiency values across classes. Vertical dashed lines indicate class thresholds, helping to understand the spread and separation between energy efficiency categories. The green density curve for low energy efficiency peaks around 20, showing a high concentration of observations in this category. The yellow curve, representing medium energy efficiency, has a significant density near 50, with some overlap with the low energy class, indicating variability in efficiency. Thresholds at 31.34 (low-medium) and 58.53 (medium-high) mark the transitions between categories. The red curve for high energy efficiency rises beyond 58.53, but appears less frequent than the other classes. This distribution highlights that most observations fall into the low and medium energy categories, with fewer instances of high energy use.

Figure 19 presents a pair plot illustrating the pairwise relationships among the top features, with points coloured according to energy class. This visualisation helps identify feature interactions and trends within the dataset. The scatter and density plots highlight key relationships influencing energy classification. The glazing Area exhibits a complex distribution across energy classes, with variations in its interaction with other features. Surface Area is nonlinearly correlated with Glazing Area, where specific ranges distinguish energy classifications. Relative Compactness is inversely related to Surface Area, indicating that as surface area increases, compactness decreases. Roof Area forms clusters concerning glazing and surface areas, emphasising its role in energy classification. The density plots along the diagonals further reinforce distribution differences among energy classes, underscoring critical trends in feature relationships.

Figure 20 represents the graphical visualisation of Permutation Testing of all models for binary data. The bar chart in Fig. 20a presents the permutation testing time required by all models. Among the models, Random Forest is the fastest, completing the permutation importance test in just 0.53 seconds. This reflects its relatively simple structure and lower computational overhead. In contrast, the DNN takes 5.63 seconds, indicating a tenfold increase in processing time compared to Random Forest due to the deeper architecture and more complex parameter tuning. The LSTM model requires even more time, taking 12.73 seconds to complete the testing, which is more than double the time of the DNN. This increase is attributed to the sequential nature of LSTM, which processes data over time steps. The most time-consuming model is BiLSTM, which takes 20.73 seconds, almost four times the time of the DNN and nearly 40 times slower than Random Forest. This is expected, as BiLSTM processes information in both forward and backwards directions, effectively doubling the computation compared to standard LSTM. The Fig. 20b illustrates a heatmap that visualises the importance of various features in predicting energy efficiency parameters across models. Among all features, Wall Area showed the highest importance in the BiLSTM model with a value of 0.3532, suggesting it is the most significant predictor for this model. Similarly, Glazing Area exhibited high importance across all models, with scores of 0.2052 (Random Forest), 0.2156 (DNN), 0.1922 (LSTM), and 0.2061 (BiLSTM), indicating its consistent contribution. The Roof Area feature was also notably influential, particularly in BiLSTM (0.2052) and DNN (0.1532), but less so in Random Forest (0.0139) and LSTM (0.1065). Overall Height had a moderate importance in DNN (0.1810) but was negligible in other models, such as LSTM (0.0087) and Random Forest (0.0069). Relative Compactness contributed the most in BiLSTM (0.1013) compared to other models, which remained under 0.04. The Glazing Area Distribution showed low but relatively consistent importance across models, ranging from 0.0147 (LSTM) to 0.0398 (Random Forest). Less impactful features include Surface Area and Orientation, with importance scores below 0.05 in all models. Specifically, Surface Area had the highest value in BiLSTM (0.0407), while Orientation remained the least significant across all models, peaking at only 0.0130 in DNN. Wall Area and Glazing Area are identified as the most critical features for energy efficiency prediction, especially in BiLSTM and DNN models, while Orientation and Surface Area have minimal influence across all approaches. The bar graph in Fig. 20c presents a comparative analysis of the four models’ training time (in seconds). The Random Forest model exhibited the shortest training time, completing in just 4.93 seconds, highlighting its efficiency and suitability for rapid training tasks. In contrast, the DNN model required the longest training time, taking 29.02 seconds, due to its multiple dense layers and iterative backpropagation process, which generally makes deep learning models more computationally demanding. The LSTM model, designed to capture sequential dependencies, recorded a moderate training time of 9.55 seconds, reflecting its recurrent structure and memory components. The BiLSTM model, which processes input sequences in both forward and backwards directions, took 16.41 seconds to train-considerably longer than the standard LSTM but still significantly faster than the DNN.

Discussion and comparison

Figure 21 presents a bar plot comparing the accuracy of different models for energy efficiency classification in both binary and multi-class settings. The x-axis represents various models, while the y-axis displays accuracy scores, ranging from 0.85 to 1.0, for better visibility of performance differences. Each bar is annotated with its accuracy score for a straightforward comparison. Figure 21a presents a bar plot for binary classification; Random Forest and DNN achieved the highest accuracy, scoring approximately 0.9827, demonstrating their effectiveness in correctly predicting outcomes. LSTM and BiLSTM performed slightly lower, each scoring around 0.9784, indicating their capability but slightly reduced efficiency compared to Random Forest and DNN. Figure 21b presents a bar plot of multi-class classification; Random Forest remained the top performer with 96.54% accuracy, highlighting its robustness in classification tasks. DNN followed with 93.94%, while LSTM scored 83.55%, suggesting challenges in handling multi-class data. BiLSTM improved upon LSTM with 88.31%, showing that bidirectional processing enhances context capture but still lags behind Random Forest and DNN. Random Forest consistently outperformed other models in both classification settings, likely due to its adaptability and ability to handle diverse data distributions. While deep learning models, particularly BiLSTM, have improved multi-class classification, ensemble methods like Random Forest have proven to be the most reliable for achieving high accuracy.

Without standardised benchmarks or empirical baseline data for categorising energy efficiency in the simulation-based dataset, the Energy Feature (EF) median value was used as a statistically neutral threshold for binary classification. This approach ensures a balanced class distribution, which is particularly important for training supervised machine learning models. While this method may not accurately reflect domain-specific thresholds, it provides a practical solution for enabling model training and performance comparison across classification algorithms. The study acknowledges this limitation and suggests that future work could involve mapping these classifications to real-world energy efficiency standards or incorporating empirical thresholds from building energy codes or green certifications. This study focuses on applying machine learning models to classify buildings based on their energy efficiency. The features selected for this classification, such as building shape, surface area, roof area, and glazing distribution, are essential factors that influence the thermal performance of buildings. These features were specifically chosen because they are known to significantly impact the building’s energy consumption patterns, and thus are highly relevant to energy efficiency analysis. The study’s results have a direct impact on building energy management, including the classification of buildings into low-energy and high-energy categories. The binary classification approach, using median values for categorization, enables us to make data-driven decisions about energy efficiency and helps simulate energy optimization in a practical, real-world context. Furthermore, the study’s use of machine learning algorithms aligns with current trends in the building energy sector, where predictive modelling is increasingly employed to make real-time energy consumption assessments and drive energy-saving strategies. Future work will explore real-time data collection through IoT sensors to better connect the methodology to building energy domain objectives, integrating live building data into the DT framework. This will allow the models to be tested in dynamic environments, with real-time performance feedback, providing more relevant insights into energy optimisation practices.

Conclusion and future scope

This research highlighted the significant impact of integrating DT technology and AI in energy consumption classification. Besides high accuracy in energy efficiency prediction, the developed framework can provide real-time monitoring and energy consumption management in smart buildings. These results demonstrate that the Random Forest model outperforms the classification tasks with an accuracy of above 98%. In contrast, the DNN, LSTM, and BiLSTM models already demonstrate satisfactory performance, with accuracies ranging from 94% to 97%. Graphical analyses, including confusion matrices, training curves, and feature importance plots, provide a comprehensive understanding of model performance and the relationships between building features and energy efficiency. For instance, the confusion matrices illustrate the models’ ability to correctly classify energy-efficient and non-energy-efficient buildings, while training curves reveal the learning dynamics of deep learning models. The feature-important visualisations highlight critical factors influencing energy consumption and guiding future architectural and design decisions. These findings underscore the importance of utilising IoT data and advanced machine learning techniques to optimise energy usage, contributing to sustainability goals. Future work should focus on deploying this framework in real-world scenarios, enhancing its capabilities with more sophisticated AI models, and integrating cloud-based solutions for improved data management and accessibility. Additionally, exploring the framework’s scalability and adaptability to various building types and energy systems will be crucial for broader implementation and impact.

Data availability

All data generated or analysed during this study are included in this published article.

References

Bera, M. et al. Advancing energy efficiency: innovative technologies and strategic measures for achieving net zero emissions. Carbon Footprints 4, N–A (2025).

Billanes, J. D., Ma, Z. G. & Jørgensen, B. N. Data-driven technologies for energy optimization in smart buildings: A scoping review. Energies 18, 19961073 (2025).

Wang, S. et al. Grid peak shaving and energy efficiency improvement: Advances in gravity energy storage technology and research on its efficient application. Energies 18, 996 (2025).

Silva, B. N., Khan, M. & Han, K. Futuristic sustainable energy management in smart environments: A review of peak load shaving and demand response strategies, challenges, and opportunities. Sustainability 12, 5561 (2020).

Bibri, S. E., Huang, J., Jagatheesaperumal, S. K. & Krogstie, J. The synergistic interplay of artificial intelligence and digital twin in environmentally planning sustainable smart cities: A comprehensive systematic review. Environmental Science and Ecotechnology. 100433 (2024).

Alnaser, A. A., Maxi, M. & Elmousalami, H. Ai-powered digital twins and internet of things for smart cities and sustainable building environment. Appl. Sci. 14, 12056 (2024).

Kabir, M. R. & Halder, D. Ray, S. A Survey. (EEE Access, Digital twins for IoT-driven energy systems, 2024).

Agho, M. O., Eyo-Udo, N. L., Onukwulu, E. C., Sule, A. K. & Azubuike, C. Digital twin technology for real-time monitoring of energy supply chains. Int. J. Res. Innov. Appl. Sci. 9, 564–592 (2024).

Arsecularatne, B., Rodrigo, N. & Chang, R. Digital twins for reducing energy consumption in buildings: A review. Sustainability 16, 9275 (2024).

Grieves, M. & Vickers, J. Digital twin: Mitigating unpredictable, undesirable emergent behavior in complex systems. In Transdisciplinary Perspectives on Complex Systems: New Findings and Approaches. 85–113 (2017).

El Saddik, A. et al. Dtwins: A digital twins ecosystem for health and well-being. IEEE COMSOC MMTC Commun. Front 14, 39–43 (2019).

Corrado, C. R., DeLong, S. M., Holt, E. G., Hua, E. Y. & Tolk, A. Combining green metrics and digital twins for sustainability planning and governance of smart buildings and cities. Sustainability 14, 12988 (2022).

Brilakis, I. et al. Built environment digital twining (International Workshop on Built Environment Digital Twinning presented by, 2019).

Agostinelli, S., Cumo, F., Guidi, G. & Tomazzoli, C. Cyber-physical systems improving building energy management: Digital twin and artificial intelligence. Energies 14, 2338 (2021).

Alibrandi, U. Risk-informed digital twin of buildings and infrastructures for sustainable and resilient urban communities. ASCE-ASME J. Risk Uncertainty Eng. Syst. Part A Civil Eng. 8, 04022032 (2022).

Vering, C. et al. Unlocking potentials of building energy systems’ operational efficiency: Application of digital twin design for hvac systems. In 16th International Building Performance Simulation Association (IBPSA) 1304–1310 (2019).

Francisco, A., Truong, H., Khosrowpour, A., Taylor, J. E. & Mohammadi, N. Occupant perceptions of building information model-based energy visualizations in eco-feedback systems. Appl. Energy 221, 220–228 (2018).

Hosamo, H., Hosamo, M. H., Nielsen, H. K., Svennevig, P. R. & Svidt, K. Digital twin of hvac system (hvacdt) for multiobjective optimization of energy consumption and thermal comfort based on bim framework with ann-moga. Adv. Build. Energy Res. 17, 125–171 (2023).

Ni, Z., Eriksson, P., Liu, Y., Karlsson, M. & Gong, S. Improving energy efficiency while preserving historic buildings with digital twins and artificial intelligence. In IOP Conference Series: Earth and Environmental Science, 863, 012041 (IOP Publishing, 2021).

Clausen, A. et al. A digital twin framework for improving energy efficiency and occupant comfort in public and commercial buildings. Energy Inf. 4, 1–19 (2021).

Song, Y., Xia, M., Chen, Q. & Chen, F. A data-model fusion dispatch strategy for the building energy flexibility based on the digital twin. Appl. Energy 332, 120496 (2023).