Abstract

When k-Nearest-Neighbors (\(k\)-NN) was conceived more than 70 years ago, computation, as we use it now, would be hardly recognizable. Since then, technology has improved by orders of magnitude, including unprecedented connectivity. However, \(k\)-NN has remained virtually unchanged, exposing its shortcomings for today’s needs: becoming overwhelmed when presented with large, high-dimensional data. Although space partitioning data structures, especially k-d trees and ball-trees, have improved performance in larger data, they remain inadequate when data is also high-dimensional. Experiments confirm that space partitioning becomes ineffective in high-dimensional data because most of the search space is explored needlessly. Our strategy is to partition the data into small groups of points similarly distanced from a reference point in a B+ tree data structure and use this data structure to limit the search space of a \(k\)-NN query. Further, we establish that the limited search space chosen by the B+ tree structure can be effectively explored by any indexing techniques applicable to the entire data. We then present our algorithm \(k\)-NN with partitioning (ti\(k\)-NN), including computational analysis and experiments. Our detailed evaluation demonstrates significant speedup achieved by ti\(k\)-NN over the naive, \(k\)-d tree, \(ball\)-tree based \(k\)-NN and other state-of-the-art approximate \(k\)-NN search approaches in high dimensional data.

Introduction

Most machine learning (ML) algorithms currently in use were conceived in the last century, predating computation as we know it now. Extraordinary technological improvements partnered with ubiquitous demand have presented not only challenges, mostly due to so-called big data, but also opportunities1,2. One of the most widely used ML algorithms, k-Nearest-Neighbors (\(k\)-NN), is more than seventy-one years old3. \(k\)-NN is currently used for classification and regression. For a datum p, this non-parametric learning technique relies on the k closest data to determine outcomes. No model is built; instead, the data itself is used for prediction, but the penalty is that search becomes exhaustive. When performing \(k\)-NN on massive data, i.e., in practical settings, search becomes prohibitive; consequently, efforts have generally focused on reducing the search space either directly through partitioning, indirectly through indexing, or through a combination of the two.

Using \(k\)-NN over “big data” has been mostly conducted through space partitioning to deal with not only data size but also dimension. The literature is split between exact and approximate nearest neighbor search (NNS). The NNS problem was first formulated in4,5 to reduce the time needed to find nearest neighbors. In the approximate setting, the original goal is relaxed by returning any q whose distance from the query is at most c times the distance to the true nearest neighbor, for some \(c \ge 1\), making NNS computationally less expensive.

Existing state-of-the-art (SOTA) literature has various approaches to solving NNS, such as the tree-based approaches for large data, in which binary partitioning is used to build a tree of the data sets6. Each leaf node of the tree contains objects that are near each other. The triangle inequality is used during the query to prune nodes that cannot have potential neighbors. Similarly, cluster-based (or partition) approaches make use of clustering as a means to reduce the search space7. The triangle inequality property determines whether a cluster is explored for a given query. While the performance of cluster-based approaches mainly depends on the quality of clusters formed, this approach has been shown to outperform tree-based approaches in high-dimensional data6. Graph-based methods rely upon neighborhood graphs8, most notably the k-nearest neighbor graph (\(k\)-NNG), a graph in which an edge connects two vertices p and q if the distance between p and q is among the k smallest distances from p to other objects in the dataset. The time required to build a \(k\)-NNG for a dataset with n points is \(O(n^2)\), rendering it infeasible for large datasets. Locality-Sensitive Hashing (LSH)9,10 makes use of several hash functions that are locality-sensitive; similar points are hashed into the same bucket with high probability, so that sharing a bucket indicates likely proximity. As a \(k\)-NN query is posed, the query point is hashed, and data points in the resulting bucket are probed.

In the proposed work, we make the following contributions: (1) a novel sequence of indices that together produce a structure that effectively and efficiently reduces the search space and outperforms current SOTA \(k\)-NN algorithms; (2) theoretical proof of obtained speed-up; (3) extensibility of this approach as a framework allowing integration of any suitable suite of indexing techniques. We call our work ti\(k\)-NN (\(k\)-NN using telescope indexing), since the sequence is akin to multiple lenses on a telescope in producing a final image.

The remainder of this paper is organized as follows. In Sec. ii., background and related work are presented for conventional \(k\)-NN (brute-force and naïve) and the state-of-the-art for both \(k\)-NN and NNS. In Sec. iii., details of ti\(k\)-NN are presented as well as proof of speed-up. In Sec. iv., comparisons among naïve \(k\)-NN, state-of-the-art \(k\)-NN, NNS algorithms, and ti\(k\)-NN are made using data sets that are large, high dimensional, and both. Sec. v. contains conclusions, and Sec. vi. outlines directions for future work.

Background & related work

Assume a dataset \(D \subset {\mathbb {R}}^d\) with \(|D| = n\). k-NN classification11 for a query point \(p \in D\) has only two steps: (1) compute the Euclidean distances e(p, q) between p and all other points \(q \in D \setminus \{p\}\) while keeping the k points closest to p; (2) return the most frequent class label among those k points. The obvious drawback of \(k\)-NN classification is the time cost of step (1). The time complexity of \(k\)-NN is \(O(n\cdot d)\). Even though \(k\)-NN is linear in n, it becomes infeasible to employ when n is routinely \(> 10^6\) (the ordered list must be constructed for each query) and deteriorates further when \(d \gg 10\).
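The two steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the implementation evaluated in this paper; the function name `knn_classify` and the toy data are assumptions made for the example, and the query here is a new point rather than a member of D.

```python
import heapq
import math
from collections import Counter

def knn_classify(points, labels, query, k):
    """Brute-force k-NN: scan all n points (O(n*d)) and vote among the k closest."""
    # Step 1: Euclidean distance from the query to every point.
    dists = ((math.dist(query, p), i) for i, p in enumerate(points))
    # Keep only the k smallest distances.
    nearest = heapq.nsmallest(k, dists)
    # Step 2: return the most frequent label among the k neighbours.
    votes = Counter(labels[i] for _, i in nearest)
    return votes.most_common(1)[0][0]

# Illustrative toy data (hypothetical, not from the paper's datasets).
points = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8)]
labels = ["a", "a", "b", "b", "b"]
prediction = knn_classify(points, labels, (1.0, 0.9), k=3)
```

Every query touches all n points, which is the cost that the indexing schemes discussed below try to avoid.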

The \(k\)-d tree12 is a data structure, which generates k hyperplanes to organize data in a k-dimensional space. Each non-leaf node in \(k\)-d tree represents a single split, where only one of the k dimensions is used as a discriminator (key) to partition the space. At higher dimensions, however, \(k\)-d tree’s performance degrades13,14,15 due to the curse of dimensionality16. Splitting on a single dimension in a high-dimension space seldom yields insight into the entire space.

Another partitioning data structure considered is the \(ball\)-tree17 that builds “balls” (or hyperspheres) to partition space. A ball tree recursively divides the data into nodes defined by a centroid C and radius r, and each point in the node lies within the hypersphere defined by r and C. The number of candidate points for a neighbor search is reduced through triangle inequality. Because of the spherical geometry of the \(ball\)-tree nodes, it can out-perform a \(k\)-d tree in high dimensions13,14,15. In practice, the performance is highly dependent on the structure of the training data and again affected by the curse of dimensionality16.

A B+ tree structure to partition the data was introduced in18, which is referred to as the iDistance method. Each data point is converted into a single-dimensional value based on its distance to the closest reference point and indexed to the corresponding partition. The query point is also converted into a single-dimensional value, and the nearest neighbors are searched within a radius. If the required neighbors are not found within the specified radius, then the radius is increased in small steps until the necessary neighbors are found. A drawback of this method is that the number of partitions and reference points must be determined prior to index creation. If the number is too small or too large, the resulting partitions will be too densely populated or too sparsely populated, respectively. The partitions become increasingly overlapping when high-dimensional data is reduced to a single dimension, thereby increasing the number of candidate partitions and searchable data. Another problem with reducing a high-dimensional data point to a single-dimensional value is information loss. For example, data points in opposite directions of a reference point may get reduced to the same or similar value. The brute-force exploration of the candidate partitions and the exploration of unnecessary data due to the lossy nature of indexing lead to an increased search time.

Another indexing method based on multiple random projection trees (MRPT) was proposed for fast \(k\)-NN Search19,20. This technique combines multiple sparse random projection trees using a voting scheme. The final search focuses only on points occurring most frequently among points retrieved from multiple trees. The complexity of building the index is \(\Theta (T\cdot \log \sqrt{d})\) and of query is \(O(T\cdot d\cdot (\frac{\ell }{\sqrt{d}} + \frac{n}{2^\ell }))\), where \(\ell\) is some predetermined depth, and T is the number of trees.

An approach based on \(k\)-means, proposed in21, performs better than tree-based approaches in high dimensions; \(k\)-means, however, tends to create clusters with large radii, negatively impacting the triangle inequality condition. Fixed Width Clustering (FWC)22 addresses this issue by keeping the radii of clusters “fixed”. A drawback is that FWC can create clusters that are either too dense or too sparse. kNNVWC6 introduces a Variable Width Clustering (VWC) approach addressing both aspects by limiting the number of members within a given cluster. VWC results in better data clustering and provides significant performance improvements. caKD+23 also proposes a clustering method that is coupled with feature extraction relying on a Deep Neural Network (DNN) and a two-level \(k\)-d tree structure for improved performance.

NN-Descent24 approximates \(k\)-NNG construction with arbitrary similarity measures based on local search. Candidate neighbors are selected under the assumption that the neighbors-of-neighbors of a node are likely to be its nearest neighbors as well, thereby avoiding the comparison of every node with every other node. The work proposed in25 divides the data into smaller groups using locality-sensitive hashing (LSH), and one \(k\)-NNG is constructed on each group. The union of all smaller \(k\)-NNGs is considered an approximation of the \(k\)-NNG of the entire data. The \(k\)-NN of a query can be found by searching only the \(k\)-NNG of the group to which the query belongs. Static degree-adjustment26 tunes the indegrees and outdegrees of the \(k\)-NNG, deriving an “adjusted” graph which is further improved by the dynamic-adjustment method. A path-adjustment method, which removes unnecessary shortcut edges that have alternative paths in order to reduce degrees, can significantly reduce the query time. Hierarchical Navigable Small World (HNSW)27 proposes a multilayer graph structure in which only a fixed portion of connections can be searched independent of the network size, thus allowing the scalability of the search. A greedy search starts from the upper-most layer until a local minimum is reached and then restarts the search in lower layers with shorter links from the same local minimum. HVS28 proposes another novel hierarchical graph structure in which multiple layers correspond to subspaces that are divided coarse-to-fine, and the search is accelerated by using a virtual Voronoi diagram in each layer.

Collision Counting LSH (C2LSH)29 proposes an LSH scheme that uses a base of m single hash functions to construct compound hash functions dynamically. C2LSH defines a collision threshold (l) to determine the quality of the examined candidate point. Only those candidates that collide with a query object (q) in greater than l hash functions are considered. Traditional LSH and its variants, including C2LSH, do not consider query object during the construction of LSH functions; thus, data objects closer to q can be partitioned into different buckets. Query Aware LSH scheme (QALSH)30 addresses this concern by introducing an LSH scheme that uses q as an anchor for bucket partition. In QALSH, the LSH function is defined as a combination of random projection and a query-aware bucket partition, eliminating the need for random shifts required by traditional LSH functions.

Proposed work

Telescope index structure for \(k\)-NN: ti\(k\)-NN

From both literature and practice, indexing plays the most significant role in reducing the number of candidates examined before finding the k-Nearest-Neighbors. ti\(k\)-NN introduces the concept of telescope-indexing, combining two complementary indices. The primary index of ti\(k\)-NN is a B+ tree constructed over the entire data. The telescope indices, which act as the secondary indices, are built at each leaf node of the B+ tree and only include the data in the leaf node. The primary index B+ tree is of order b, where the order specifies the maximum number of keys that a single node can hold; thus, each node contains at most b keys in sorted order and \(b+1\) pointers to the next level. The root node is in the first level. At a subsequent level i, at most \((b+1)^i\) nodes can exist. The internal nodes contain keys and pointers that help navigate toward the leaf node that most likely contains the data. Finally, the data resides at leaf nodes. As for the telescope index at leaf nodes, ti\(k\)-NN provides the flexibility of using any existing \(k\)-NN or NNS index.

The data is indexed on a B+ tree of order b based on their distances to a single reference point. Each leaf node of the resulting B+ tree contains at most b data points and represents a group of maximum size b points that are similarly distanced from the reference point. By indexing the data based on distances, we run into the problem of grouping oppositely positioned points in the same node; that is, two equidistant points placed in opposite directions of the reference point might get indexed into the same leaf node. During a \(k\)-NN search, searching one of the two points is unnecessary. This problem is exacerbated in higher dimensional data. In ti\(k\)-NN we address this problem by introducing the telescope-index.

It is important to note that (1) the leaf nodes are disjoint sets, i.e., no two leaf nodes will have intersecting data points indexed in them; (2) while indexing the data, the distances are used as the keys for indexing, and the data points are not modified; hence, they are a precise subset of the actual data; (3) the telescope index at each leaf node can be built using any index applicable to the entire data without loss of generality, and various \(k\)-NN and NNS indices, such as randomized \(k\)-d tree, \(ball\)-tree, MRPT, etc., help in avoiding searching unnecessary data points within a leaf node; and (4) in the worst-case scenario, where the entire leaf node is explored, the total distance computations are bounded by \(b \ll n\).
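The distance-keyed grouping described above can be illustrated with a small Python sketch. It is a simplification under stated assumptions: a sorted list cut into chunks of at most b points stands in for the leaf level of the B+ tree (a real B+ tree reaches a comparable distance-ordered leaf level through insertions and node splits, and its leaves are generally not all full). The function name `build_partitions` and the toy points are hypothetical.

```python
import math

def build_partitions(points, reference, b):
    """Group points into leaves of at most b points, ordered by distance to reference."""
    # Key every point by its distance to the reference; the points themselves
    # are not modified, only their indices are stored.
    keyed = sorted((math.dist(p, reference), i) for i, p in enumerate(points))
    # Consecutive runs of at most b keys play the role of B+ tree leaves.
    leaves = [keyed[s:s + b] for s in range(0, len(keyed), b)]
    # Largest key in each leaf, usable later to route a query to a leaf.
    bounds = [leaf[-1][0] for leaf in leaves]
    return leaves, bounds

# Illustrative 2D points (hypothetical); note that (-7, 0) and (8, 0) lie in
# opposite directions from the reference yet land in the same leaf.
points = [(1, 0), (0, 2), (3, 0), (0, -4), (5, 0), (0, 6), (-7, 0), (8, 0)]
leaves, bounds = build_partitions(points, reference=(0, 0), b=4)
leaf_ids = [[i for _, i in leaf] for leaf in leaves]
```

The leaves are disjoint by construction, and each holds at most b indices into the unmodified data, matching notes (1), (2), and (4).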

The figure on the left shows the data partitioning created by ti\(k\)-NN (B+ + \(ball\)-tree) of order 4. The blue sections are partitions created by the primary index (B+), and the green sections indicate the telescope index (\(ball\)-tree) at partition V5. The table on the right is the data, having two features \(X_1, X_2\) and a label Y. The tuple ID TID is used to identify individual data.

Querying using ti\(k\)-NN

The ti\(k\)-NN approach in answering a k-Nearest-Neighbor search is straightforward. When a query is issued, the B+ tree is traversed based on the query data point’s distance to the same reference point until a leaf node is reached. Once the leaf node to which the query point belongs is found, the telescope index of the leaf node is used to query \(k\)-NN of the data point.

Fig. 1 (left) shows an example ti\(k\)-NN (B+ + \(ball\)-tree) index of order 4 (b=4) built on sample 2D data and the resulting partitions (V1, V2, V3, V4, and V5). When the query for the nearest neighbor of (8, 10) is issued, the first level of search occurs at the primary index (B+). The first level of search returns the leaf node representing the outermost partition (V5) containing four candidate data points (B, C, 0, 1). It is clear, however, that data points 0, 1, and C should not be searched since they are far away from the query. To efficiently explore these data points, the second level of search is issued to the associated telescope index (\(ball\)-tree) at partition V5. We can observe that out of the four data points, only data point B and the query are part of the same “ball” in the \(ball\)-tree index; thus, the distance computations to the other three data points are avoided.
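The two-level query can be sketched as follows. This is only an illustration under assumptions, not the authors' implementation: a sorted array with binary search stands in for the B+ tree descent, and a brute-force scan of the selected leaf stands in for the telescope index (a \(ball\)-tree, \(k\)-d tree, or MRPT would go there). The names `build_index` and `query` and the toy data are hypothetical.

```python
import bisect
import heapq
import math

def build_index(points, reference, b):
    """Distance-ordered leaves of at most b point indices, plus each leaf's largest key."""
    keyed = sorted((math.dist(p, reference), i) for i, p in enumerate(points))
    starts = range(0, len(keyed), b)
    leaves = [[i for _, i in keyed[s:s + b]] for s in starts]
    bounds = [keyed[min(s + b, len(keyed)) - 1][0] for s in starts]
    return leaves, bounds

def query(points, reference, leaves, bounds, q, k):
    # First level: route the query by its distance to the same reference point.
    key = math.dist(q, reference)
    leaf_no = min(bisect.bisect_left(bounds, key), len(leaves) - 1)
    # Second level: search only inside the selected leaf. A brute-force scan
    # stands in here for the telescope index built on that leaf.
    candidates = leaves[leaf_no]
    return heapq.nsmallest(k, candidates, key=lambda i: math.dist(points[i], q))

points = [(1, 0), (0, 2), (3, 0), (0, -4), (5, 0), (0, 6), (-7, 0), (8, 0)]
reference = (0, 0)
leaves, bounds = build_index(points, reference, b=4)
neighbours = query(points, reference, leaves, bounds, q=(6, 1), k=2)
```

In this toy run the query is routed to the outer leaf and only its four points are examined; a telescope index would additionally let the search skip the far-away points (0, 6) and (-7, 0) inside that leaf.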

Hyperparameters in ti\(k\)-NN

The choice of reference point plays a crucial role in partitioning the data. If the reference point is a good representation of the data set, the resulting index will be better, leading to faster searches. A simple choice for the reference point is the mean or the median. In our experiments, we consider different options and evaluate their impact on the index and prediction. Further, the order of the tree determines the size of the smaller groups formed by the B+ tree index, impacting both the effectiveness and correctness of the query. In ti\(k\)-NN, both the reference point and the order of the tree (b) are treated as hyperparameters to be tuned.

Based on our experiments presented in Section 4, we find that setting the order of tree to explore around 5–7% of the data and using simple statistical reference points–such as the mean, median, or cluster centroids–yield consistently strong performance. These can serve as effective default values for constructing the ti\(k\)-NN index.
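As a rough illustration of the simple reference-point options mentioned above, the sketch below computes a mean, a median, and a random data point using only the standard library. Two assumptions are made that the text does not specify: the median is taken coordinate-wise, and the function name `reference_candidates` is hypothetical. The cluster-centroid option is omitted because it requires a clustering step.

```python
import random
import statistics

def reference_candidates(points, seed=0):
    """Return candidate reference points for a list of equal-length tuples."""
    columns = list(zip(*points))  # one tuple of values per dimension
    return {
        "mean": tuple(statistics.fmean(c) for c in columns),
        # Coordinate-wise median: an assumption made for this sketch.
        "median": tuple(statistics.median(c) for c in columns),
        "random": random.Random(seed).choice(points),
    }

# Illustrative data (hypothetical).
points = [(0.0, 0.0), (2.0, 0.0), (4.0, 9.0)]
refs = reference_candidates(points)
```

With a dataset of n points, an order covering roughly 5–7% of the data would correspond to b between about 0.05·n and 0.07·n.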

Analysis

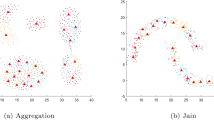

The motivation for using partitioning as a space indexing technique is to consider fewer elements when detecting the k-NN set of a queried point Q. Any partitioning scheme is subject to error when a data point X that actually belongs to the k-NN set of Q is placed into a different partition; the better data is then ignored during the search process, limiting accuracy or even incurring greater cost. In other words, the question is how likely it is that at least one of the data points from the k-nearest neighbor set of Q is not in the partition returned by the proposed indexing scheme. We now analyze this as depicted in Fig. 2.

The B+ tree partitions the d dimensional data points into a number of d dimensional hypervolumes according to their distances to the chosen reference point. Every leaf node of the B+ tree defines such a volume by including up to b points. We enumerate the leaves of the tree from left to right. The leftmost leaf is the closest d dimensional volume \(V_1\) to the reference, which is a hypersphere (HS) with the radius \(r_1\). The next sibling of this leaf to the right is the second volume \(V_2\) and can be defined as the volume between the hyperspheres with radii \(r_1\) and \(r_2\). Similarly, \(V_i\), for \(1\le i\le m\), denotes the volume between the hyperspheres with radii \(r_{i-1}\) and \(r_i\), where \(r_0=0\). We assume the data points are spread uniformly at random in the space such that all volumes \(V_1\) to \(V_m\) occupy equal space, i.e., \(V_1=V_2= \cdots = V_m\), and each contains b data points. We also assume wlog that the total number of data points n is divisible by b for simplicity.

The d dimensional volume of a hypersphere with a radius r can be computed with \(HS(r) = \kappa \cdot r^d\), for \(\kappa = \pi ^{d/2}/\Gamma (d/2+1)\). The innermost hypersphere with the radius of \(r_1\), thus, defines the volume \(V_1 = \kappa \cdot r_1^d\) and includes b points. The next hypersphere, which has a radius of \(r_2\) with respect to the reference, includes 2b points so that \(V_2=HS(r_2) - HS(r_{1})=V_1\). Similarly, \(i\cdot b\) points are present in the \(i^{th}\) hypersphere with radius \(r_i\). The relation \(r_i = r_1\cdot \sqrt[d]{i}\) holds since \(HS(r_i) = i \cdot HS(r_1)\) under this assumption.
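The relation between \(r_i\) and \(r_1\) follows in one line from the volume formula; written out as a short worked step using only the symbols defined above:

```latex
\[
HS(r_i) = \kappa\, r_i^{d} = i \cdot HS(r_1) = i \cdot \kappa\, r_1^{d}
\;\Longrightarrow\; r_i^{d} = i \cdot r_1^{d}
\;\Longrightarrow\; r_i = r_1 \sqrt[d]{i},
\qquad
r_i - r_{i-1} = r_1\left(\sqrt[d]{i} - \sqrt[d]{i-1}\right).
\]
```

The second expression is simply the radial thickness of \(V_i\) implied by the first; for a fixed i it shrinks as d grows, because \(\sqrt[d]{i}\) approaches 1.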

While searching the k nearest neighbors of a queried point Q, we first locate the volume in which the point Q resides and then check all data points that are in between the detected ith and \((i-1)\)th hyperspheres, which is the volume \(V_i\) represented at the ith leaf of the tree. A secondary indexing for the points in a leaf node may reduce the number of points that need to be explored. Here, we focus only on the performance of the B+ tree partitioning.

An error may occur if one of the \(k\)-NN points of the query is not in the selected volume \(V_i\) but in another volume \(V_j\), since in that case the point is not considered at all in the search phase. The question to be answered is: how likely is this scenario to be encountered?

Let CQX represent the triangle such that C is the reference point, Q is a point in \(V_i\), which is the space in between the ith and \((i-1)\)th hyperspheres, and X is one of the k-NN of Q which is in another volume \(V_j\) other than \(V_i\). The following conditions hold, where |AB| is the Euclidean distance between points A and B. The inequalities in (1) and (2) are derived from the definitions of the hypersphere and the coordinates of the data points C, Q, and X. Eq. (3) is obtained by summing (1) and (2). Notice that \(|QX|\le |CQ|+|CX|\) is dictated by the property that the sum of the lengths of two sides of a triangle is larger than or equal to the remaining side’s length, and combining this fact with the upper bound given in (3) provides us with Eq. (4).
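The numbered conditions are not reproduced in this text. One reconstruction that is consistent with the definitions of \(V_i\) and \(V_j\), with the description of how (3) and (4) are obtained, and with the bound \(r_i+r_j\) used in the following paragraph would read as below; it should be treated as an assumption about the original display rather than a quotation of it.

```latex
\begin{align}
r_{i-1} &\le |CQ| \le r_i \tag{1}\\
r_{j-1} &\le |CX| \le r_j \tag{2}\\
r_{i-1} + r_{j-1} &\le |CQ| + |CX| \le r_i + r_j \tag{3}\\
|QX| &\le |CQ| + |CX| \le r_i + r_j \tag{4}
\end{align}
```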

Consider a hypersphere centered at Q with a radius of |QX|. In the worst case, when X is exactly the kth nearest neighbor of Q, there can be at most \((k-1)\) points in that hypersphere, so we consider the probability of observing more than \((k-1)\) points in the volume HS(|QX|). Observe that this probability becomes maximum when |QX| is assumed to take its maximum value \(r_i+r_j\). If \(i<j\), then \(r_i<r_j\) and \(2r_i< r_i+r_j < 2r_j\), and the volume of the \(HS(r_i+r_j)\) becomes smaller than the volume of the \(HS(2r_j)\), which occupies \(2^d\cdot {j}\) times larger volume than the \(V_1=HS(r_1)\) as \(r_j = r_1 \sqrt[d]{j}\). According to our assumption \(V_1(r_1)=\kappa \cdot r_1^d\) includes b points, and thus, \(V_Q=HS(r_i+r_j)\) is expected to include more than \(b\cdot 2^d\cdot j\) points. In such a volume, considering \(b\cdot 2^d\cdot j \le k-1\) would be rare when \(b>k\), and becomes increasingly less probable with increasing values of b, d and j. For sufficiently large b values, therefore, the probability that the \(k\)-NN points reside in a different leaf node of the B+ tree is expected to be very low, particularly in high dimensions d and at the outskirts of the data points (specified by j). Our analysis is reinforced with relevant experiments discussed in the next section. Notice that if we assume the reverse \(j<i\), then the same analysis holds by exchanging the index j with i.

Sketch of a possible error in which the proposed scheme misses the data point X, which is among the \(k\)-NN of a queried data point Q. When X occurs in a different volume \(V_j\) than the volume \(V_i\) of the query Q, it cannot be considered as a candidate \(k\)-NN of Q, as X and Q will then reside in different leaves of the created B+ tree.

Algorithms and run-time analysis

We now describe the approach for ti\(k\)-NN. The creation of a B+ tree index is shown in Algorithm 1. Creating a B+ tree index involves inserting data into the structure using their distance to the reference point as the key. The keys within each node are maintained in sorted order. Inserting a new data point into the B+ tree begins at the root node by determining the closest key to the data point with respect to its distance to the reference point. Since the keys are sorted, we can make use of a binary search to find the closest key. Next, the left sub-tree of the closest key is traversed if the distance is strictly less than that of the key; otherwise, the right sub-tree is traversed. The traversal continues until a leaf node is reached. The data point is finally inserted into the leaf node by finding an appropriate position based on its proximity to the reference point. If the insertion results in an overflow, the node is split into two nodes, each containing an equal number of keys. Further, the overflows at any node result in the insertion of a key in the parent node that represents the median of data points stored in its children. These overflows are recursively resolved until the root node.

Algorithm 2 describes searching and prediction on a data point using ti\(k\)-NN. Both searching and prediction primarily involve membership–determining the leaf node the data point belongs to; in other words, the smaller group of data points to which the data belongs. It is important to note that the search operation only determines the leaf node where the data point may reside. Hence, the search succeeds irrespective of whether the data point is part of the index. Search begins with traversing the tree from the root. Since the data points are sorted based on their distance to the reference point, binary search is used to get the closest key to the query. The algorithm then recursively traverses its left or right sub-tree based on distance comparison until a leaf node is reached. Once a leaf node is reached, querying the \(k\)-NN can be achieved from the associated telescope-index at the node.

To predict using ti\(k\)-NN, we initially use the B+ tree index to search the leaf node that likely contains the neighbors, then \(k\)-NN is subsequently queried from the telescope-index at the leaf node. The formal complexity analyses of each algorithm are addressed below.

Insertion

Insertion of a single data point can be done in \(O(\log n)\) time for a fixed dimension d. In the worst-case scenario, the tree is traversed twice: once top-down to find the leaf node to insert the data and once bottom-up, restructuring and balancing the tree. Each traversal visits \(\log _b n\) nodes. At each node, the closest key can be found with \(O(\log _2 b)\) comparisons via a binary search over the at most b sorted key values, where each comparison is made in O(d) time. Thus, the time complexity of inserting a single item becomes \(O(\log _b n \cdot d \cdot \log _2 b)\).

Creation

Creation of a B+ tree index involves inserting the entire data set. A single data point can be inserted in \(O(\log _b n \cdot d \cdot \log _2 b)\) time. Therefore, insertion of all n data points can be done in \(O(n\cdot \log _b n \cdot d \cdot \log _2 b )\) time.

Search

Searching a data point in the B+ tree involves traversing the path from the root to a leaf and requires visiting \(\log _b n\) nodes. At each node, the closest key can be found in \(O(d \cdot \log _2 b)\) time via a binary search over the sorted b items, where each comparison between items takes O(d) time. Therefore, searching can be achieved in \(O(\log _b n \cdot d \cdot \log _2 b )\) time.
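A small numeric check helps in reading this bound. Since \(\log _b n \cdot \log _2 b = \log _2 n\), the number of key comparisons on one root-to-leaf descent does not depend on the order b; what b changes is the leaf size handed to the telescope index. The sketch below is illustrative only, and the function name `descent_comparisons` and the chosen values of n and b are assumptions.

```python
import math

def descent_comparisons(n, b):
    """Approximate key comparisons for one root-to-leaf descent."""
    levels = math.log(n, b)    # nodes visited: log_b n
    per_node = math.log2(b)    # binary search inside a node: log_2 b
    return levels * per_node

n = 10**6
# Same n, three different tree orders.
costs = {b: descent_comparisons(n, b) for b in (16, 256, 4096)}
```

All three entries of `costs` agree with `math.log2(n)` up to floating-point rounding; multiplying by d gives the search bound stated above.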

Prediction

Prediction involves searching the B+ tree and then querying the \(k\)-NN using the node-level index. Prediction time complexity is \(O(\max (\log _b n \cdot d \cdot \log _2 b,\ node\_level\_index))\). For example, if MRPT is used, then prediction time complexity will be \(O(\max (\log _b n \cdot d \cdot \log _2 b,\ T\cdot d \cdot (\frac{\ell }{\sqrt{d}} + \frac{b}{2^\ell })))\).

In this approach, the dimension of the data set becomes a very significant parameter, playing a critical role in index creation, search, and prediction with the node-level index, since the term \(d\cdot \log b\) is linear in the dimension d and may become the dominant factor when compared to \(\log _b n\). Therefore, for increasingly higher dimensional data, filtering becomes more important, and the B+ tree helps significantly reduce the elapsed time, consistent with our experiments.

Experiments and results

Experimental setup

The current implementation of ti\(k\)-NN is in Python 3.9.10, and no parallelism is used other than any present in the underlying linear algebra libraries. All experiments were carried out on an Intel Broadwell 16 vCPU and 16 GB memory VM.

The ti\(k\)-NN index has two hyperparameters that influence the quality and performance of the index: The Order Of the Tree and the Reference Point. Experiments 1 (EX1) and 2 (EX2) aim to independently assess the impact of hyperparameters on the quality of the index and its performance. Experiment 3 (EX3) is a comparative study between ti\(k\)-NN and other SOTA algorithms for \(k\)-NN and NNS.

Baselines

ti\(k\)-NN has been compared with (1) iDistance and the traditional \(k\)-d tree and \(ball\)-tree implementations of the scikit-learn library31; (2) tree-based NNS approaches such as the randomized \(k\)-d tree and \(k\)-means implementations of the FLANN library13, and the MRPT index19,20,32; (3) graph-based NNS implementations such as NN-Descent24, NGT26, and HNSW27; and (4) the LSH implementation provided by the faiss library33, which is developed at Meta’s Fundamental AI Research group, and query-aware LSH (QALSH)30. To illustrate the flexibility of building different telescope-indices, we experiment with building each of the mentioned indices as a telescope index in ti\(k\)-NN.

Datasets

To illustrate the performance of our approach, we have selected datasets of varying sizes and dimensionality from UCI repository39. Table 1 provides pertinent details. The first column lists the name of the datasets and their publicly accessible links, the second column lists the dimensionality of the data, and the last column is the number of samples.

Evaluation metrics

To assess the efficiency and effectiveness of the ti\(k\)-NN, the following evaluation metrics have been considered: (1) Index creation time, (2) Speedup Rate (SR), (3) Memory usage, (4) Prediction Error, and (5) ROC-AUC scores. While Prediction Error and ROC-AUC score help us compare and understand the quality of predictions, the SR metric allows us to assess how much faster ti\(k\)-NN is compared to the alternatives. Memory usage comparison indicates the memory overheads being imposed by the telescope-index structure of ti\(k\)-NN, allowing us to determine if an effective procedure is actually feasible.

Speedup Rate (SR) for two comparing algorithms A and B with run-times \(t_A\) and \(t_B\), respectively is calculated as: \(\frac{t_A}{t_B}\). A SR value of \(>1\) indicates algorithm B is faster than A, \(<1\) indicates A is faster than B, and equal to 1 indicates that both the algorithms have similar run times. Memory is reported as relative usage between two algorithms with memory-usages \(m_A\) and \(m_B\) as \(1-\frac{m_A}{m_B}\). A positive value for relative memory usage indicates that algorithm B consumes more memory than A, and a negative value indicates otherwise.
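The two reporting formulas above translate directly into code. The sketch below is a plain restatement of those definitions; the function names and the sample run-times and memory figures are hypothetical and are not measurements from the experiments.

```python
def speedup_rate(t_a, t_b):
    """SR = t_A / t_B; a value above 1 means algorithm B is faster than A."""
    return t_a / t_b

def relative_memory(m_a, m_b):
    """1 - m_A / m_B; a positive value means B consumes more memory than A."""
    return 1 - m_a / m_b

# Hypothetical numbers for illustration only.
sr = speedup_rate(t_a=12.0, t_b=3.0)
mem = relative_memory(m_a=50, m_b=200)
```

With these illustrative inputs, B would be four times faster than A while using more memory than A.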

EX1: order of the tree

Setup

In EX1, the impact of the order of the tree (OOT) parameter on ti\(k\)-NN index is examined. The telescope-index structure used for this experiment is (B+, \(ball\)-tree). The OOT is chosen to be 1%-10% of the entire data set.

Observations

The graph in Fig. 3 captures the change in prediction error rate w.r.t. the OOT. The x-axis represents the OOT values, and the y-axis records the prediction error of ti\(k\)-NN (B+, \(ball\)-tree) index. An increase in OOT leads to a decrease in prediction error. Additionally, for all the six datasets, ti\(k\)-NN achieved a good error rate (or prediction accuracy) by only exploring a modest amount of 5%-7% of the total data. Fig. 4 captures how OOT impacts the speedup achieved by ti\(k\)-NN. The x-axis represents the OOT values, and the y-axis records the speedup achieved by ti\(k\)-NN (B+, \(ball\)-tree) over \(ball\)-tree. Prediction time increases at higher OOT values due to the increased number of data points that need to be explored and hence the speedup achieved drops. Fig. 5 indicates how indexing is affected by OOT. The x-axis represents the OOT values, and the y-axis records the log of time taken to create a ti\(k\)-NN (B+, \(ball\)-tree) index over the datasets. ti\(k\)-NN can index the data with different order of tree values in nearly linear time.

EX2: reference points

Setup

In EX2, we examine the impact of the reference point chosen to index the dataset. Four reference points are considered: (1) the mean of the dataset; (2) the centroid of the largest cluster found using k-means40,41 clustering; (3) the median of the dataset; (4) a random data point from the dataset. For this experiment, the order of the tree (OOT) was fixed at 5% of the data, a \(ball\)-tree was used as the telescope-index, and 100 queries were issued. As evaluation metrics, we captured the time taken to create the ti\(k\)-NN index, the Speedup Rate (SR) over the traditional \(ball\)-tree index, and the prediction error for \(k=[5, 10, 15, 20, 25, 50, 70, 100]\).
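The four candidate reference points can be sketched as follows, using only the Python standard library. Several details are assumptions made for illustration: the median is taken coordinate-wise, the k-means step is a plain Lloyd iteration with a fixed seed, and all function names are hypothetical. The number of clusters used in the experiment is not stated here, so `n_clusters` is left as a parameter.

```python
import math
import random
import statistics


def mean_point(points):
    """Coordinate-wise mean of the dataset."""
    return tuple(statistics.fmean(col) for col in zip(*points))


def median_point(points):
    """Coordinate-wise median (an assumption about how the median is taken)."""
    return tuple(statistics.median(col) for col in zip(*points))


def largest_kmeans_centroid(points, n_clusters, n_iter=20, seed=0):
    """Centroid of the most populated cluster after plain Lloyd iterations."""
    rng = random.Random(seed)
    centroids = rng.sample(points, n_clusters)
    for _ in range(n_iter):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Keep the previous centroid when a cluster ends up empty.
        centroids = [mean_point(c) if c else centroids[j]
                     for j, c in enumerate(clusters)]
    largest = max(range(len(clusters)), key=lambda j: len(clusters[j]))
    return centroids[largest]


def random_point(points, seed=0):
    """A data point drawn uniformly at random."""
    return random.Random(seed).choice(points)


# Toy data: four points on a unit square plus one distant outlier.
data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (10.0, 10.0)]
candidates = {
    "mean": mean_point(data),
    "median": median_point(data),
    "kmeans": largest_kmeans_centroid(data, n_clusters=2),
    "random": random_point(data),
}
```

The extra clustering pass in `largest_kmeans_centroid` is the kind of preprocessing that makes the k-means reference point more expensive to obtain than the other three.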

Observations

In Fig. 6, the x-axis of both charts represents the datasets; the y-axis represents the index creation time in seconds in the top chart and the speedup achieved by ti\(k\)-NN (B+, \(ball\)-tree) over \(ball\)-tree in the bottom chart. Except for the \(k\)-means reference point, the index creation time is not significantly impacted by the reference point chosen. The high indexing time for \(k\)-means is caused by the preprocessing step of running \(k\)-means over the data. The choice of reference point, however, does impact the quality of the index created. While the Mean, Median, and \(k\)-means reference points achieve similar SR, the ti\(k\)-NN index built over a random data point yields the lowest speedup, indicating that the resulting partitions are of lower quality. Fig. 7 further confirms that a random data point chosen as the reference point results in the greatest error rates across all datasets.

EX3: comparative study

Setup

In EX3, we compare the performance of ti\(k\)-NN against SOTA \(k\)-NN and NNS approaches. To illustrate the potential of ti\(k\)-NN to integrate with any indexing technique to build a telescope-index, we also build ti\(k\)-NN with each of these algorithms as its telescope-index. For example, we compare \(k\)-d tree index with ti\(k\)-NN (B+, \(k\)-d tree).

Four evaluation metrics are used for different values of \(k=[5, 10, 15, 20, 25, 50, 100]\): (1) prediction error; (2) ROC-AUC scores; (3) Speedup Rate (SR), calculated as \(\frac{t_A}{t_B}\) for 100 queries, where \(t_A\) is the time taken by the \(k\)-NN or NNS algorithm and \(t_B\) is the time taken by ti\(k\)-NN with the same algorithm as telescope-index; and (4) Memory usage, calculated as \(1-\frac{m_A}{m_B}\), where \(m_A\) is the memory used by the \(k\)-NN or NNS index and \(m_B\) is the memory used by ti\(k\)-NN with the same algorithm as telescope-index.

Observations

Fig. 8 shows that the ti\(k\)-NN prediction error closely matches that of exact nearest neighbor search (\(k\)-d tree and \(ball\)-tree) and of all other NNS approaches, indicating the effectiveness of the partitioning created by ti\(k\)-NN.

Table 2 summarizes the speedup achieved and memory usage by ti\(k\)-NN over other \(k\)-NN and NNS algorithms. The speedup values indicate how fast the ti\(k\)-NN index is compared to a given algorithm. For example, for \(k\)-d tree on the census dataset, a speedup of 2.43 indicates that ti\(k\)-NN (B+, \(k\)-d tree) is 2.43 times as fast as \(k\)-d tree alone. The outcomes show some general trends: (1) ti\(k\)-NN provides a significant speedup over both \(k\)-NN approaches (\(k\)-d tree and \(ball\)-tree) across all datasets, and (2) ti\(k\)-NN provides a speedup on high-dimensional data over all NNS algorithms except flann-\(k\)-d tree. While the telescope-index structure consistently improves performance on higher-dimensional datasets, the gains are less pronounced in low-dimensional settings. There, the overhead introduced by building and traversing the two-level index structure might outweigh the benefits of candidate set reduction, primarily for two reasons: (1) distance computations are relatively inexpensive, as their cost scales modestly with the number of data points and dimensions; and (2) distances are more informative and effectively differentiate the data42.

One exception where ti\(k\)-NN fails to achieve a speedup over low and high dimensional data is the flann-\(k\)-d tree which implements the randomized \(k\)-d tree that splits the data randomly over the top \(N_D=5\) dimensions with the greatest variance13. If the data points within a partition of \(k\)-d tree do not result in a reasonable split across the \(N_D\) dimensions, then the \(k\)-d tree built over such data will be slow in search due to the increased overhead. For other NNS algorithms, however, as the dimensionality of data increases, the performance of ti\(k\)-NN becomes better than the standalone NNS algorithm.
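The split rule described above can be sketched as follows: compute the variance of every dimension, keep the \(N_D\) dimensions with the greatest variance, and pick one of them at random. The choice of the mean as split value, the function name `choose_split`, and the toy data are assumptions made for illustration; this is not the flann implementation.

```python
import random
import statistics


def choose_split(points, n_d=5, rng=None):
    """Pick a split dimension at random among the n_d highest-variance ones.

    Assumption: the split value is the mean along the chosen dimension.
    Returns (dimension, threshold, candidate dimensions).
    """
    rng = rng or random.Random(0)
    dims = range(len(points[0]))
    variances = [statistics.pvariance([p[d] for p in points]) for d in dims]
    top = sorted(dims, key=lambda d: variances[d], reverse=True)[:n_d]
    dim = rng.choice(top)
    threshold = statistics.fmean([p[dim] for p in points])
    return dim, threshold, top


# Toy partition: dimensions 0-2 are constant, dimensions 3-7 vary.
gen = random.Random(1)
data = [tuple([0.0] * 3 + [gen.uniform(-1, 1) for _ in range(5)])
        for _ in range(50)]
dim, threshold, top = choose_split(data, n_d=5, rng=random.Random(0))
```

If a small partition has little variance along the selected dimensions, a split of this kind separates the points poorly, which is consistent with the slowdown discussed above.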

Similarly, the memory usage values in Table 2 represent how much more memory the ti\(k\)-NN index uses compared to the standalone use of its telescope-index. For example, for \(k\)-d tree on the census dataset, a memory usage value of 0.26 means that, under the definition \(1-\frac{m_A}{m_B}\), the \(k\)-d tree alone occupies 74% of the memory used by ti\(k\)-NN (B+, \(k\)-d tree); equivalently, ti\(k\)-NN uses roughly 35% more memory than the \(k\)-d tree alone. This overhead results from the additional primary index that ti\(k\)-NN builds over the original dataset, which works alongside the telescope-index to enable efficient querying of data. While for tree-based and clustering-based telescope-indices the memory usage value is under 0.10 in four of the six datasets, the graph-based and LSH implementation of faiss as telescope-index shows a general trend of higher memory usage, with values of 0.40 or more.

This increase is mainly because specific indexing methods, such as HNSW27 and faiss43, are designed for global optimization over large-scale datasets. When applied as secondary indices over small partitions, they lose the benefits of global structure sharing and optimization. For instance, graph-based indices like HNSW incur redundancy in neighbor links and hierarchical layers. Similarly, each secondary index using faiss must maintain its own quantizers, inverted lists, and buffers, leading to duplicated memory overhead across many small index partitions.

Table 3 summarizes the ROC-AUC scores for all the algorithms and the corresponding ti\(k\)-NN indices. We again observe that the ROC-AUC scores of ti\(k\)-NN closely match those of the original algorithms, reaffirming the index's quality. The results for the flann library implementations are of particular interest: the ti\(k\)-NN index with flann implementations as telescope-index tends to generate a better ROC-AUC score than the standalone algorithms. The results highlight that, even though ti\(k\)-NN adds a slight overhead for flann implementations, the telescope-index generates better quality output than the original algorithm.

Comparison with iDistance

The performance of the ti\(k\)-NN index is compared against iDistance separately, as both implementations are B+ tree-based indices. We used 64 reference points in the iDistance18 implementation. The comparison uses the Speedup Rate (SR) and Memory usage metrics. We compared the ti\(k\)-NN index with \(ball\)-tree as the telescope-index, i.e., (B+, \(ball\)-tree), against the iDistance implementation. Table 4 summarizes the speedup achieved and memory usage between the two. ti\(k\)-NN (B+, \(ball\)-tree) is significantly faster than iDistance across all the datasets, with the highest speedup achieved on the larger datasets (covtype and poker). The speedup achieved by ti\(k\)-NN over iDistance can be directly attributed to the telescope-index structure, which avoids brute-force search and eliminates the examination of unnecessary data. However, the memory usage of ti\(k\)-NN is higher than that of iDistance due to the creation of telescope indices.
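For readers unfamiliar with iDistance, the sketch below shows the one-dimensional key it stores in the B+ tree, as described in the iDistance paper18: each point is assigned to its nearest reference point \(O_i\) and receives the key \(i \cdot c + dist(p, O_i)\), where \(c\) is a constant large enough to keep the partitions apart. The single-reference key shown next to it is an assumption about how a distance-to-reference ordering could be keyed; the function names and values are hypothetical.

```python
import math


def idistance_key(point, references, c):
    """Key = i * c + dist(point, O_i) for the nearest reference point O_i."""
    i = min(range(len(references)),
            key=lambda j: math.dist(point, references[j]))
    return i * c + math.dist(point, references[i])


def single_reference_key(point, reference):
    """With a single reference point the key reduces to the distance itself."""
    return math.dist(point, reference)


# Two illustrative reference points; c exceeds any distance in this toy space.
refs = [(0.0, 0.0), (10.0, 0.0)]
key_near_first = idistance_key((3.0, 4.0), refs, c=100.0)   # partition 0
key_near_second = idistance_key((9.0, 0.0), refs, c=100.0)  # partition 1
```

In both schemes the B+ tree orders points by a scalar key; the schemes differ in what happens inside the selected key range, where ti\(k\)-NN delegates to a secondary index.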

Remarks

The two hyperparameters, OOT and reference point, play a significant role in the quality and efficiency of the ti\(k\)-NN index. The experiments indicate that the ti\(k\)-NN index can achieve a good error rate (or prediction accuracy) by exploring only a modest 5%-7% of the total data across all datasets, and that a statistically representative reference point such as the Mean, Median, or a cluster centroid results in better indices. ti\(k\)-NN achieves significant speedup over \(k\)-NN algorithms (\(k\)-d tree and \(ball\)-tree) on all datasets and over NNS algorithms on high-dimensional data.

Limitations

While ti\(k\)-NN introduces a robust framework for a telescope-index structure, it also has several limitations:

- In low-dimensional data, the search-time overhead introduced by the telescope-index structure outweighs the gains from the reduced search space.

- NNS indices such as the randomized \(k\)-d tree, which partitions the data over a smaller subset of features, and the \(k\)-means tree, which relies on clustering the data for partitioning, do not gain as much as other NNS algorithms.

- The telescope-index structure introduces additional memory overhead, which is more pronounced when graph-based NNS algorithms and certain LSH-based implementations are used as secondary indices.

Conclusions

In this work, a novel telescope-index for \(k\)-NN called ti\(k\)-NN is shown to significantly improve the performance of \(k\)-NN classification on large-scale, high-dimensional data compared with a single index. We have demonstrated that any indexing technique applicable to the data can be used in the telescope-index structure proposed in ti\(k\)-NN. The ti\(k\)-NN index requires two parameters to establish the partitions: the order of the tree and the reference point. Analysis shows why telescope-indexing is superior: the most likely neighbors are found in one traversal, the inherent ordering of data within the tree allows quicker traversal to the leaf nodes, and the telescope-index avoids unnecessary distance computations. The B+ tree structure is easy to persist in secondary storage while loading nodes only as needed, making it an excellent fit for large datasets. This approach can be extended to build multiple B+ tree indexes and distribute the data over them, so that the model can run in a distributed setting to improve performance significantly. An impactful consequence is that our work can be easily transformed into a framework. Lastly, we demonstrated the performance improvements of ti\(k\)-NN on data sets of low and high dimensions and compared the results against existing SOTA algorithms for \(k\)-NN and NNS.

Future work

The selection of hyperparameters, such as the reference point and the order of the tree, affects the quality of the index constructed by the ti\(k\)-NN algorithm. In this context, emerging technologies such as deep learning can help automate and optimize this selection process. Previous works, such as caKD+23, demonstrate the use of deep learning for feature extraction, where an encoder deep neural network (DNN) is trained to project data into a lower-dimensional, linearly separable space. This transformation facilitates more effective clustering using algorithms like c-means, and each cluster is subsequently represented by its centroid. Along similar lines, we plan to investigate how the encoding capability of neural networks can be used to guide the selection of reference points and order of the tree, thus improving the quality of the index.

Another promising direction is enabling distributed deployment of ti\(k\)-NN indices. The B+ tree-based structure of ti\(k\)-NN is well-suited for cloud-native and distributed deployment. The nature of the index enables it to be offloaded to secondary storage, reducing memory overhead by loading only relevant nodes on demand. Index creation can also be parallelized, as each leaf node and its secondary index can be built independently. In a distributed environment, leaf nodes can be stored across multiple machines, and queries can be efficiently routed to the appropriate nodes, enabling scalable and distributed execution.
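One way such routing could look is sketched below: every leaf is summarized by the smallest distance key it holds, leaves are dealt to workers, and a query is routed by a binary search over those lower bounds. Everything here is hypothetical, including the function names, the round-robin placement, and the use of the distance to a single reference point as the routing key; it illustrates the direction rather than an existing implementation.

```python
import bisect
import math


def build_routing_table(points, reference, leaf_size, n_workers):
    """Return (sorted leaf lower-bound keys, worker id for each leaf)."""
    keys = sorted(math.dist(p, reference) for p in points)
    lower_bounds = [keys[i] for i in range(0, len(keys), leaf_size)]
    # Assumption: leaves are dealt to workers round-robin.
    owners = [leaf % n_workers for leaf in range(len(lower_bounds))]
    return lower_bounds, owners


def route(query, reference, lower_bounds, owners):
    """Find the leaf whose key range covers the query's distance key."""
    key = math.dist(query, reference)
    leaf = max(0, bisect.bisect_right(lower_bounds, key) - 1)
    return leaf, owners[leaf]


# Toy setup: 12 points on a line, 3 points per leaf, 2 workers.
ref = (0.0, 0.0)
pts = [(float(i), 0.0) for i in range(12)]
bounds, owners = build_routing_table(pts, ref, leaf_size=3, n_workers=2)
leaf, worker = route((7.5, 0.0), ref, bounds, owners)
```

Because only the lower bounds and the ownership list are needed to route a query, the routing table stays small even when the leaves themselves live on other machines or on secondary storage.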

From an implementation perspective, our current implementation of the ti\(k\)-NN algorithm is in Python 3.9.10, leveraging optimized numerical libraries such as NumPy, which internally utilize efficient C-based routines for performance-critical operations44. However, exploring reimplementation in C++ or other low-level languages is a valuable direction for enhancing real-time performance.

Finally, we plan to extend the applicability of ti\(k\)-NN to specialized domains such as images and temporal data. A complete probabilistic analysis that is sensitive to different multivariate distributions would likely provide a performance advantage if some sampling is propitiously used. Another line of work is to consider \(\ell\)-tuples of indices, which will likely be needed as we move squarely into the zettabyte range.

Data availability

References and publicly accessible links to all data analyzed during this study are included in Table 1 of this article.

References

Zhou, L., Pan, S., Wang, J. & Vasilakos, A. V. Machine learning on big data: Opportunities and challenges. Neurocomputing 237, 350–361 (2017).

Nguyen, G. et al. Machine learning and deep learning frameworks and libraries for large-scale data mining: a survey. Artificial Intelligence Review 52(1), 77–124 (2019).

Fix, E. & Hodges, J. L. Discriminatory analysis. Nonparametric discrimination: Consistency properties. Int. Stat. Rev. 57(3), 238–247 (1989).

Arya, S. & Mount, D. M. Approximate nearest neighbor queries in fixed dimensions. SODA 93, 271–280 (1993).

Bern, M. Approximate closest-point queries in high dimensions. Information Processing Letters 45(2), 95–99 (1993).

Almalawi, A. M., Fahad, A., Tari, Z., Cheema, M. A. & Khalil, I. \(k\) nnvwc: An efficient \(k\)-nearest neighbors approach based on various-widths clustering. IEEE Trans. Knowl. Data. Eng. 28(1), 68–81 (2015).

Prerau, M. J. & Eskin, E. Unsupervised anomaly detection using an optimized k-nearest neighbors algorithm. Undergraduate Thesis, Columbia University: December, (2000).

Prokhorenkova, L. & Shekhovtsov, A. Graph-based nearest neighbor search: From practice to theory. in International Conference on Machine Learning. PMLR, pp. 7803–7813. (2020).

Indyk, P. & Motwani, R. Approximate nearest neighbors: towards removing the curse of dimensionality. in Proceedings of the thirtieth annual ACM symposium on Theory of computing, pp. 604–613 (1998).

Andoni, A. & Indyk, P. Near-optimal hashing algorithms for approximate nearest neighbor in high dimensions. in 47th Annual IEEE Symposium on Foundations of Computer Science (FOCS’06). IEEE, pp. 459–468 (2006).

Cover, T. & Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13(1), 21–27 (1967).

Bentley, J. L. Multidimensional binary search trees used for associative searching. Communications of the ACM 18(9), 509–517 (1975).

Muja, M. & Lowe, D. G. Scalable nearest neighbor algorithms for high dimensional data. IEEE Trans. Pattern Anal. Mach. Intell. 36(11), 2227–2240 (2014).

Abbasifard, M. R., Ghahremani, B. & Naderi, H. A survey on nearest neighbor search methods. Int. J. Comput. Appl. 95, 25 (2014).

Bhatia, N. et al. Survey of nearest neighbor techniques. arXiv preprint arXiv:1007.0085 (2010).

Köppen, M. The curse of dimensionality. in 5th Online World Conference on Soft Computing in Industrial Applications (WSC5), vol. 1, pp. 4–8 (2000).

Omohundro, S. M. Five balltree construction algorithms (International Computer Science Institute Berkeley, 1989).

Jagadish, H. V., Ooi, B. C., Tan, K.-L., Yu, C. & Zhang, R. idistance: An adaptive b+-tree based indexing method for nearest neighbor search. ACM Transactions on Database Systems (TODS) 30(2), 364–397 (2005).

Hyvönen, V. et al. Fast k-nn search. arXiv preprint arXiv:1509.06957, (2015).

Hyvönen, V. et al. Fast nearest neighbor search through sparse random projections and voting. in Big Data (Big Data), 2016 IEEE International Conference on. IEEE, pp. 881–888 (2016).

Wang, X. A fast exact k-nearest neighbors algorithm for high dimensional search using k-means clustering and triangle inequality. in The 2011 International Joint Conference on Neural Networks. IEEE, pp. 1293–1299 (2011).

Eskin, E., Arnold, A., Prerau, M., Portnoy, L. & Stolfo, S. A geometric framework for unsupervised anomaly detection. in Applications of data mining in computer security. Springer, pp. 77–101. (2002).

Gallego, A. J., Rico-Juan, J. R. & Valero-Mas, J. J. Efficient k-nearest neighbor search based on clustering and adaptive k values. Pattern Recognition 122, 108356 (2022).

Dong, W., Moses, C. & Li, K. Efficient k-nearest neighbor graph construction for generic similarity measures. in Proceedings of the 20th international conference on World wide web, pp. 577–586 (2011).

Zhang, Y.-M., Huang, K., Geng, G. & Liu, C.-L. Fast knn graph construction with locality sensitive hashing, in Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, pp. 660–674 (2013).

Iwasaki, M. & Miyazaki, D. Optimization of indexing based on k-nearest neighbor graph for proximity search in high-dimensional data. arXiv preprint arXiv:1810.07355, (2018).

Malkov, Y. A. & Yashunin, D. A. Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE Trans. Pattern Anal Mach. Intell. 42(4), 824–836 (2018).

Lu, K., Kudo, M., Xiao, C. & Ishikawa, Y. Hvs: hierarchical graph structure based on voronoi diagrams for solving approximate nearest neighbor search. Proceedings of the VLDB Endowment 15(2), 246–258 (2021).

Gan, J., Feng, J., Fang, Q. & Ng, W. Locality-sensitive hashing scheme based on dynamic collision counting. in Proceedings of the 2012 ACM SIGMOD international conference on management of data, pp. 541–552 (2012).

Huang, Q., Feng, J., Zhang, Y., Fang, Q. & Ng, W. Query-aware locality-sensitive hashing for approximate nearest neighbor search. Proceedings VLDB Endowment. 9(1), 1–12 (2015).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Jääsaari, E., Hyvönen, V. & Roos, T. Efficient autotuning of hyperparameters in approximate nearest neighbor search, in Pacific-Asia Conference on Knowledge Discovery and Data Mining. Springer, p. In press (2019).

Johnson, J., Douze, M. & Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 7(3), 535–547 (2019).

Kohavi, R. et al. Scaling up the accuracy of naive-bayes classifiers: A decision-tree hybrid. Kdd 96, 202–207 (1996).

Guyon, I., Gunn, S., Ben-Hur, A. & Dror, G. Result analysis of the NIPS 2003 feature selection challenge. Adv. Neural Inf. Process. Syst. 17 (2004).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998).

Blackard, J. A. & Dean, D. J. Comparative accuracies of artificial neural networks and discriminant analysis in predicting forest cover types from cartographic variables. Comput. Electron. Agric. 24(3), 131–151 (1999).

Cattral, R., Oppacher, F. & Deugo, D. Evolutionary data mining with automatic rule generalization. Recent Advances in Computers, Computing and Communications 1(1), 296–300 (2002).

Dua, D. & Graff, C. UCI machine learning repository. [Online]. Available: http://archive.ics.uci.edu/ml (2017).

Kurban, H. & Dalkilic, M. M. A novel approach to optimization of iterative machine learning algorithms: over heap structure. in 2017 IEEE International Conference on Big Data (Big Data). IEEE, pp. 102–109 (2017).

Jenne, M., Boberg, O., Kurban, H. & Dalkilic, M. Studying the milky way galaxy using paraheap-k. Computer 47(9), 26–33 (2014).

Beyer, K., Goldstein, J., Ramakrishnan, R. & Shaft, U. When is “nearest neighbor” meaningful? in Database Theory-ICDT’99: 7th International Conference, Jerusalem, Israel, January 10–12, 1999, Proceedings. Springer, pp. 217–235 (1999).

Douze, M. et al. The faiss library. (2024).

Harris, C. R. et al. Array programming with numpy. Nature 585(7825), 357–362 (2020).

Author information

Contributions

M.K, H.K, O.K, M.D have contributed equally to the conception, design, research, writing, and revision of this manuscript. All authors have read and approved the final version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

K R, M., Kurban, H., Kulekci, O.M. et al. Telescope indexing for k-nearest neighbor search algorithms over high dimensional data & large data sets. Sci Rep 15, 24788 (2025). https://doi.org/10.1038/s41598-025-09856-5

DOI: https://doi.org/10.1038/s41598-025-09856-5