Abstract

Forecasting and early warning of agricultural product prices is a crucial task in stream data event analysis and agricultural data mining. Existing methods for forecasting agricultural product prices suffer from inefficient feature engineering and challenges in handling imbalanced sample data. To address these issues, we propose a novel predictive model selection approach based on time series image encoding. Specifically, we utilize Gramian Angular Fields (GAF), Markov Transition Fields (MTF), and Recurrence Plots (RP) to transform time series data into image representations. We then introduce an Information Fusion Feature Augmentation (IFFA) method to effectively combine these time series images, ensuring that all relevant event information is preserved. The combined time series images (TSCI) are subsequently fed into a Convolutional Neural Network (CNN) classifier for model selection. Furthermore, to accommodate the unique characteristics of the data, we incorporate Transfer Learning (TL) and S-Folder Cross Validation (S-FCV) to optimize the model selection process, thereby mitigating overfitting due to limited or imbalanced data. Experimental results demonstrate that the proposed IFFA-TSCI-CNN-SFCV method outperforms existing approaches in terms of both efficiency and accuracy.

Introduction

Accurate forecasting of food commodity prices is one of the most challenging yet crucial tasks for agricultural economists. Timely and reliable price predictions not only support market participants in making informed production and risk management decisions, but also contribute to optimizing resource allocation and enhancing overall market efficiency. Among the most widely cultivated feed grains globally, corn and wheat account for over 95\(\%\) of total production and consumption1. These commodities serve as key inputs for various industries, including ethanol production and animal feed, making their price fluctuations significantly impactful on the broader food industry.

The outbreak of COVID-19 in 2019 acted as a “black swan” event, leading to drastic changes in the agricultural futures market environment. The pandemic created unprecedented volatility, disrupting global supply chains and economic stability. The uncertainty surrounding the timing, pattern, and form of economic recovery continues to pose challenges for both consumers and producers. In this context, agricultural futures2, as a vital financial instrument, play an essential role in price discovery, hedging, and risk management. During the pandemic, an increasing number of investors turned to agricultural futures for risk mitigation, underscoring the importance of studying the price dynamics of agricultural futures.

This paper is structured as follows. The “Related work” section gives a brief overview of the existing literature on forecasting agricultural futures prices using time series analysis. The “Methodology” section introduces the foundational theoretical concepts relevant to our study. The “Experiment” section details the proposed approach for predicting agricultural commodity prices. The “Results” section presents the experimental setup and results, and the “Discussion” section gives the conclusions.

Related work

In the field of futures price prediction, existing models can generally be categorized into three types: statistical models, artificial intelligence models, and hybrid models. Statistical models are commonly employed to analyze and predict price fluctuations in futures markets. For example, Evans’ study demonstrated that U.S. economic news announcements have a significant correlation with intra-day price volatility in the futures market. Stoll et al.3 found that the transmission of trading signals is time-sensitive, with 5-minute high-frequency data outperforming 10-minute data in predicting price volatility. Brooks4 used the ARCH model to study price volatility in futures markets, revealing that volatility follows a non-homogeneous distribution. Bunnag5 applied the GARCH model to predict crude oil futures prices and calculate the optimal oil investment portfolio weights. Huang et al.6 utilized GARCH and EGARCH models to estimate and forecast price volatility of four agricultural commodity futures, confirming the effectiveness of GARCH models in this domain. Despite the effectiveness of traditional statistical models like GARCH, they often rely on strict statistical assumptions7, which may not hold in rapidly changing futures markets. Futures price time series often exhibit nonlinear and non-stationary characteristics, and traditional statistical models have limitations when dealing with these complex features, thus affecting predictive accuracy.

In contrast, machine learning-based models, particularly those utilizing decomposition and reconstruction frameworks, have garnered increasing attention in recent years8. For instance, Wen et al.9 highlighted that a hybrid model combining Singular Spectrum Analysis and Support Vector Machines (SVM) significantly outperforms both SVM-only models and other hybrid models in financial price prediction. Zhu et al.10 demonstrated the superiority of a combination of Variational Mode Decomposition (VMD) and Bidirectional Gated Recurrent Unit (BiGRU) in forecasting rubber futures prices, showing marked improvement over standalone BiGRU models. Liu et al.11 combined VMD with Artificial Neural Networks for predicting energy and metal prices, with experimental results showing that VMD-based models significantly outperform traditional methods. Wang et al.12 proposed a hybrid neural network model based on Empirical Wavelet Transform (EWT) for oil price forecasting, with results indicating that EWT effectively extracts both the overall trend and local volatility features of price movements, thereby improving forecasting accuracy. However, while decomposition methods significantly enhance predictive performance, using all decomposed components in the prediction model can lead to increased computational complexity. Moreover, Yu et al.13 pointed out that during the final ensemble prediction process, errors from all components may accumulate and negatively impact the final results. To address these challenges, recent research has focused on decomposition-reconstruction ensemble methods14, which optimize prediction accuracy by removing components with minimal contribution (e.g., those contributing less than 2%)15.

Despite the success of these machine learning approaches, two key challenges remain: (i) most existing methods rely on manual feature selection, which reduces flexibility and heavily depends on domain knowledge. In some cases, local dynamics in time series contain critical information (e.g., early changes in medical signals or abnormal weather patterns), which necessitates an automated feature extraction process; (ii) current futures price prediction literature has not achieved full automation, and it is challenging to effectively test model performance in large-scale data settings. As a result, recent research has increasingly turned to deep learning models16.

For example, Li and Zheng17 proposed a framework combining a value-based Deep Q Network with a key behavioral model for profit, which effectively addresses noise and non-stationarity in financial data. This model incorporates Stacked Denoising Autoencoders and Long Short-Term Memory networks, achieving stable risk-adjusted returns in stock index futures trading. Jeong and Kim18 improved the DQN model by integrating Deep Neural Networks to predict trading volumes. Wu et al.19 introduced the combination of Gated Recurrent Units and deep reinforcement learning, proposing GDQN and GDPG futures trading strategies, which performed well under various market conditions, especially in volatile markets. Gao et al.20 optimized DQN training by incorporating a Prioritized Experience Replay (PER) mechanism, showing that it outperformed ten traditional strategies, including the buy-and-hold strategy (B&H).

Building on the latest advancements in deep learning, Jiang et al.21,22 proposed a novel framework for predicting abnormal price fluctuations in agricultural futures, based on time-series images. The approach first transforms one-dimensional time series data into two-dimensional images and then applies IFFA for data preprocessing, sorting 15-day time series data numerically. To address small sample overfitting, transfer learning is employed, and a CNN model selector is trained to compute prediction errors and compare them with existing methods. This framework not only improves the prediction accuracy for agricultural futures prices but also provides effective early-warning support, enhancing the capacity for agricultural price risk management.

The main contributions of this study are as follows:

1. A novel automated time-series forecasting model selection method based on IFFA-TSCI-CNN is proposed, enabling automatic feature extraction and improving prediction accuracy.

2. Transfer learning is introduced to address the small sample problem in agricultural futures data, preventing overfitting.

3. By improving the prediction and early-warning capabilities for agricultural futures prices, the framework enhances strategic decision-making and strengthens agricultural price risk management.

Methodology

Gramian angular field

GAF represents time series data in a polar coordinate system23. The method constructs a matrix of temporal correlations for each pair of time points \((x_{i},x_{j})\) from the series X: the matrix entry at position (i, j) is the cosine of the sum of the angles associated with the values of the time series at times i and j. Prior to computing these correlations, the values of X are rescaled to the range \([-1,1]\) or [0, 1] using min-max normalization, giving a rescaled series \(\tilde{X}\). The rescaled series is then represented in polar coordinates, where each value is encoded as the cosine of an angle and the time index is used as the radial coordinate, as outlined in Eq. (1).

\[\phi _{i} = \arccos (\tilde{x}_{i}), \qquad r_{i} = \frac{t_{i}}{N}, \qquad i = 1, \ldots , N \tag{1}\]

In Eq. (1), \(t_i\) is the timestamp in the time series, \(\tilde{x}_{i}\) is the min-max normalized value, and N is the sequence length. After converting the time series into polar coordinates, the corresponding GAF pixel matrices, the summation field (GASF) and the difference field (GADF), can be generated using Eq. (2).

\[\mathrm{GASF}_{ij} = \cos (\phi _{i} + \phi _{j}), \qquad \mathrm{GADF}_{ij} = \sin (\phi _{i} - \phi _{j}) \tag{2}\]

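To reduce the dimensionality of the \(n\times n\) matrix generated from a time series with n observations, we apply Piecewise Aggregate Approximation (PAA), which replaces each block of consecutive observations with its mean before the image is built.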
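As an illustration of the PAA step, the following is a minimal sketch rather than the authors' implementation. The function name `paa` and the parameter `n_segments` are hypothetical, and `np.array_split` is used as a simplification for lengths that are not an exact multiple of the segment count.

```python
import numpy as np

def paa(series, n_segments):
    """Piecewise Aggregate Approximation: one mean value per segment."""
    x = np.asarray(series, dtype=float)
    if not 1 <= n_segments <= x.size:
        raise ValueError("n_segments must be between 1 and len(series)")
    # array_split tolerates lengths that are not a multiple of n_segments
    # (assumption: near-equal chunks are an acceptable simplification).
    return np.array([chunk.mean() for chunk in np.array_split(x, n_segments)])

# 12 observations reduced to 4 segment means, so the image shrinks
# from 12 x 12 to 4 x 4 pixels.
reduced = paa(np.arange(12.0), 4)
```

With this reduction, an image built from the shortened series has `n_segments` rows and columns instead of n.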

The GAF pixel matrices are converted to images using the NumPy library (https://pypi.org/project/numpy/) under Python 3.10.12, as shown in Fig. 1.
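The polar encoding and the two Gramian matrices described in this section can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions: rescaling to \([-1,1]\) is chosen, the function name `gramian_angular_fields` is hypothetical, and the price values are made up for illustration.

```python
import numpy as np

def gramian_angular_fields(series):
    """Return (GASF, GADF) pixel matrices for a 1D series."""
    x = np.asarray(series, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        raise ValueError("a constant series cannot be min-max rescaled")
    # Min-max rescaling to [-1, 1] (assumed here; [0, 1] is the alternative).
    x_scaled = 2.0 * (x - lo) / (hi - lo) - 1.0
    x_scaled = np.clip(x_scaled, -1.0, 1.0)  # guard against rounding error
    phi = np.arccos(x_scaled)                # angular coordinate of each value
    gasf = np.cos(phi[:, None] + phi[None, :])  # cosine of the angle sum
    gadf = np.sin(phi[:, None] - phi[None, :])  # sine of the angle difference
    return gasf, gadf

prices = np.array([2.1, 2.4, 2.2, 2.9, 3.0, 2.7])  # illustrative values only
gasf, gadf = gramian_angular_fields(prices)
```

The GASF is symmetric and the GADF is antisymmetric with a zero diagonal, which is a quick sanity check for any implementation.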

Markov transition field

Let X denote the time-series data, which is divided into \(S=\{s_{1},s_{2},...,s_{n}\}\) segments. For each segment \(s_{i}\), a total of \(f_{m}\) features are extracted, as described in the previous section. To categorize these features, we define Z quantile bins \(\{q_1, q_2,..., q_Z\}\) based on Gaussian quantiles. Each feature \(f_{i}\) is then mapped to the corresponding bin using Symbolic Aggregate approXimation (SAX)24. This process ensures that each feature vector contains only non-zero numerical values. Three division strategies are available: uniform, quantile, and normal. Uniform division gives every region the same amplitude width within each sample; quantile division gives every region the same number of samples; and normal division makes the number of samples per region follow a normal distribution. The probabilities of samples at consecutive time steps transitioning from quantile bin \(q_k\) to \(q_l\) are then calculated, and these probabilities P are used as the elements \(\omega _{kl}\) of the Markov state transition matrix W of size \(Z \times Z\):

\[W = \begin{bmatrix} \omega _{11} & \cdots & \omega _{1Z} \\ \vdots & \ddots & \vdots \\ \omega _{Z1} & \cdots & \omega _{ZZ} \end{bmatrix}, \qquad \omega _{kl} = P\left( x_{t+1} \in q_{l} \mid x_{t} \in q_{k}\right)\]

The Markov Transition Field (MTF) M expands the state transition matrix W to explicitly represent temporal dynamics by recording the transition probabilities between each pair of time points. For a time series of length T, M is a \(T \times T\) matrix where each element \(m_{ij}\) corresponds to the transition probability between the quantile bins at times i and j:

\[m_{ij} = \omega _{kl}, \qquad \text{where } x_{i} \in q_{k} \text{ and } x_{j} \in q_{l}\]
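The construction of W and M can be sketched as follows. This is an illustrative sketch, not the authors' code: it uses empirical quantile bins (the "quantile" division strategy) rather than Gaussian quantiles, and the names `markov_transition_field` and `n_bins` are hypothetical.

```python
import numpy as np

def markov_transition_field(series, n_bins=4):
    """Return (W, M): the bin transition matrix and the T x T field."""
    x = np.asarray(series, dtype=float)
    # Interior quantile edges give a bin index in {0, ..., n_bins - 1}.
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    bins = np.digitize(x, edges)
    # Count transitions between consecutive time steps (bin k -> bin l).
    W = np.zeros((n_bins, n_bins))
    np.add.at(W, (bins[:-1], bins[1:]), 1.0)
    # Row-normalise counts into probabilities; empty rows stay at zero.
    row_sums = W.sum(axis=1, keepdims=True)
    W = np.divide(W, row_sums, out=np.zeros_like(W), where=row_sums > 0)
    # Spread W over every pair of time points: M[i, j] = W[bin(i), bin(j)].
    M = W[bins[:, None], bins[None, :]]
    return W, M

series = np.sin(np.linspace(0.0, 6.0, 30))  # synthetic example series
W, M = markov_transition_field(series, n_bins=4)
```

Each non-empty row of W sums to one, while M has one row and one column per time point regardless of the number of bins.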

Recurrence plots

Time series data exhibit uncertainty and irregularity, with state recursion being a common characteristic of dynamic nonlinear systems or random processes. Recurrence Plots (RP)25 serve as a visual tool to examine M-dimensional phase space trajectories through their recursive two-dimensional representation. The Heaviside step function \(\Theta\) and a threshold distance \(\varepsilon\) are used to construct the RP: points (i, j) are marked if the Euclidean distance (\(\ell _2\)-norm) between \(x_i\) and \(x_j\) falls below \(\varepsilon\), and left unmarked otherwise. This provides visualization of phase space periodicity while capturing the system’s dynamical evolution. For a time series X, the recurrence plot is formally defined as:

\[R_{ij} = \Theta \left( \varepsilon - \Vert x_{i} - x_{j} \Vert _2\right), \qquad i, j = 1, \ldots , N\]

where \(\Vert \cdot \Vert _2\) denotes the Euclidean norm, and \(\varepsilon > 0\) is the recurrence threshold.
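The thresholded distance matrix described above can be sketched as follows. This sketch rests on assumptions: the embedding parameters `dim` and `delay` and the function name `recurrence_plot` are hypothetical, and distances exactly equal to the threshold are counted as recurrent.

```python
import numpy as np

def recurrence_plot(series, eps, dim=1, delay=1):
    """Binary recurrence matrix of a (time-delay embedded) 1D series."""
    x = np.asarray(series, dtype=float)
    n_states = x.size - (dim - 1) * delay
    if n_states < 1:
        raise ValueError("series too short for this embedding")
    # Time-delay embedding: row i is the state (x[i], x[i + delay], ...).
    states = np.stack(
        [x[k * delay : k * delay + n_states] for k in range(dim)], axis=1
    )
    # Pairwise Euclidean distances between all states.
    dists = np.linalg.norm(states[:, None, :] - states[None, :, :], axis=-1)
    # Heaviside step: 1 where the distance is within the threshold.
    return (dists <= eps).astype(np.uint8)

rp = recurrence_plot([0.0, 1.0, 0.1, 1.05], eps=0.2)
```

In this toy series the first and third values are close, as are the second and fourth, so those pairs are marked while the others are not.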

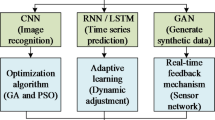

CNN

CNNs are similar to traditional artificial neural networks, comprising neurons that self-optimize by processing inputs through operations like scalar products and nonlinear functions. The network outputs a perceptual score or weight. The primary distinction between CNNs and traditional neural networks lies in their design for image pattern recognition. This focus has led researchers to incorporate image-specific feature encoding techniques, which allow CNNs to reduce the number of parameters needed for model construction. This efficiency makes CNNs particularly well-suited for tasks in computer vision. We chose three different types of neural networks as experimental subjects.

ResNet is an advanced CNN architecture designed for extremely deep networks, enabling the training of models with over 1000 layers. Unlike traditional CNNs, which sequentially stack layers of convolutions, nonlinear activations, and pooling, ResNet introduces residual modules with shortcut connections. These shortcuts directly link input to output, facilitating the flow of information across layers without degradation due to multiple nonlinear transformations. This residual learning approach focuses on approximating the residual mapping \(F(x) = H(x) - x\), which is often more effective than learning the original mapping, especially when it is close to an identity map. By utilizing identity connections, ResNet prevents the vanishing gradient problem and mitigates overfitting, even with increased depth, thus enabling the efficient training of very deep models26.

VGGNet is a deep neural network known for its simplicity and excellent classification performance. It enhances network depth by adding three \(3\times 3\) convolutional layers, while reducing the number of neurons through max-pooling layers. The fully connected layers are reduced to 4096 neurons to minimize computational complexity. In image processing, VGGNet uses 64 filters in the first convolutional layer (\(3\times 3\) size) to extract features from the input image, followed by max-pooling to reduce dimensionality. A feature vector is generated through fully connected layers and passed to the SoftMax function for classification. VGGNet’s architecture systematically investigates the effect of network depth on recognition accuracy, with successful models such as VGG-16 (13 convolutional and 3 fully connected layers) and VGG-19 (16 convolutional and 3 fully connected layers)27.

DenseNet employs a unique architecture where each layer in a dense block is connected to all preceding layers, promoting compactness and reducing overfitting. This dense connectivity enables layers to access earlier feature maps, enhancing feature correlation. Direct supervision through shortcuts further improves learning. DenseNet is effective for pixel-wise prediction and image recognition, eliminating the need for pre-training. Its architecture includes a \(7\times 7\) convolution, \(3\times 3\) max-pooling, transition layers, and dense blocks with \(1\times 1\) and \(3\times 3\) convolutions. The final classification layer uses global average pooling and a SoftMax function, achieving high accuracy in image classification28.

Information fusion feature augmentation-time series combined image based CNN (IFFA-TSCI-CNN)

Information Fusion Feature Augmentation (IFFA) is an advanced data processing technique in pattern recognition, operating at three levels: pixel, feature, and decision. Among these, feature-level enhancement through multi-classifier combinations has become a prominent research focus29. IFFA leverages the extraction of multiple feature vectors from the same pattern, optimizing their combination to preserve essential recognition information while reducing redundancy, which is critical for improving classification accuracy. Two primary IFFA methods are employed: serial and parallel feature enhancement. The serial method integrates feature vectors into a joint vector, extracting features in a high-dimensional real vector space, while the parallel method combines feature vectors using complex vectors to extract features in a complex vector space. Both methods enhance recognition performance, with the selection of the approach depending on the data characteristics. Since the temporal images in this study do not involve complex vector spaces, the serial enhancement method is utilized. The principle of this method is as follows: Let \(\omega _{1},\omega _{2},...,\omega _{c}\) denote c known pattern classes, and \(\Omega =\{\xi |\xi \in \mathbb {R}\}\) represent the training sample space. Given two feature representations \(x\in \mathbb {R}^{p}\) and \(y\in \mathbb {R}^{q}\) extracted from the same sample \(\xi\) using different methods, we consider them as random vectors in their respective spaces. For dimensionality reduction and feature enhancement, we project these vectors using weight matrices \(W_x \in \mathbb {R}^{p \times d}\) and \(W_y \in \mathbb {R}^{q \times d}\):

After the linear transformations in (6) and (7), we perform two distinct feature fusion operations:

The transformation matrix is given by formula (10) and (11):

To address the issue of feature loss introduced by convolution operations in Convolutional Neural Networks (CNNs) when applied to sequential images, this study employs sequential image encoding techniques to transform time series data into GADF, GASF, MTF, and RP representations. The Information Fusion Feature Augmentation (IFFA) method is then applied to combine these four image types from the same time series into a unified square composite image. As a result, the feature vectors derived from the four different time series images are fused into a joint feature vector within a high-dimensional vector space, enabling serial feature fusion. This approach significantly enhances the feature richness of the original time series images. Finally, the combined time series images are classified using CNN classifiers, leading to the development of the IFFA-TSCI-CNN classification method. The key advantages of the IFFA-TSCI-CNN method are:

(1) The cosine and sine functions in GASF and GADF are bijective within their respective intervals, effectively capturing the temporal dependencies in the time series. MTF encodes statistical information that represents the characteristic transition probabilities of a first-order Markov chain within the time series. RP visualizes trajectory periodicity in phase space and incorporates all relevant dynamic information of the time series. The IFFA method combines these four complementary, non-overlapping feature sets into a single image, facilitating serial feature enhancement in a high-dimensional feature space. This enriches the feature dimension and mitigates feature loss during the time series image encoding process.

(2) In the GASF and GADF pixel matrices, the cosine values of the boundary and diagonal elements encapsulate the cosine values of all other positions in the matrix. This indicates that the boundary and diagonal elements contain the majority of the feature information in GAFs. For instance, the diagonal often represents equal angles in the cosine function, forming a linear shape in the time series image. This diagonal line exhibits higher pixel values than the surrounding regions, thereby encapsulating richer classification features. After stitching the images together, two edges from the original images are merged into the interior of the mosaic, which enhances the CNN’s ability to extract edge features and reduces feature loss. Previous work by Wang et al.30 used GAFs and MTF images of the same size to construct a two-channel image (GASF-MTF-GADF), which showed improved classification performance on UCR datasets. The advantage of this approach lies in the combination of static and dynamic statistics inherent in the original time series, which enhances the accuracy of classification. The GAF and MTF pixel matrices represent time-dependent and transition probability information, respectively. While GAF encodes static information, MTF captures dynamic information, and these two features are independent of each other. In this context, GAF and MTF can be considered “orthogonal” channels, analogous to different colors in the RGB image space. In this paper, we extend this approach by incorporating an RP diagram to capture the periodicity of the visual trajectory in the phase space of the time series image.

(3) In CNN-based image recognition, the convolution kernel slides over the image’s pixel matrix line by line to extract feature information. Except for 1\(\times\)1 convolution kernels, larger kernels can only extract boundary features once during the sliding process, whereas features closer to the center of the matrix are extracted multiple times. To minimize feature loss, particularly for the boundary and diagonal lines, which contain dominant features, the IFFA method is employed to concatenate four types of time series images into a square composite image. From a macro perspective, half of the 16 boundaries of the four individual images are merged into the interior of the mosaic, as shown in Fig. 2. This arrangement enables the convolution kernel to repeatedly extract features from the concatenated time series images, reducing feature loss and leading to more complete and richer boundary features, thus enhancing classification performance. From a micro perspective, the feature vectors of the four types of time series images are combined into a single, concatenated feature vector in high-dimensional space. When classifying these spliced feature vectors, the CNN can recognize a greater degree of differentiation, improving classification accuracy. The CNN’s color patch extraction process functions as a moving average, enhancing several receptive fields within the nonlinear unit through distinct training weights. However, it is not merely a simple moving average; rather, it integrates two-dimensional time dependencies across different time intervals, which helps preserve temporal information. The operational flow of the IFFA-TSCI-CNN method is illustrated in Fig. 2.
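The stitching step and the boundary argument in point (3) can be illustrated with a short sketch. The 2 x 2 tile order (GASF, GADF on top; MTF, RP below) is an assumption, since the layout is only shown in Fig. 2, and the helper names `stitch_2x2` and `kernel_coverage` are hypothetical.

```python
import numpy as np

def stitch_2x2(gasf, gadf, mtf, rp):
    """Tile four equally sized square images into one composite image."""
    imgs = [np.asarray(a, dtype=float) for a in (gasf, gadf, mtf, rp)]
    n = imgs[0].shape[0]
    if any(a.shape != (n, n) for a in imgs):
        raise ValueError("all four images must be square and of equal size")
    # Assumed layout: GASF | GADF on top, MTF | RP below.
    return np.block([[imgs[0], imgs[1]], [imgs[2], imgs[3]]])

def kernel_coverage(size, k):
    """Count how many k x k windows (stride 1, no padding) cover each pixel."""
    cover = np.zeros((size, size), dtype=int)
    for r in range(size - k + 1):
        for c in range(size - k + 1):
            cover[r:r + k, c:c + k] += 1
    return cover

n = 4
tiles = [np.full((n, n), v) for v in (1.0, 2.0, 3.0, 4.0)]  # dummy images
composite = stitch_2x2(*tiles)
single = kernel_coverage(n, 3)       # coverage inside one stand-alone tile
merged = kernel_coverage(2 * n, 3)   # coverage inside the stitched mosaic
```

In this toy setting the bottom-right corner pixel of the first tile is covered by one 3 x 3 window when the tile stands alone, but by nine windows once the tile sits inside the mosaic, which is the effect the boundary argument relies on.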

Experiment

Experimental data

To assess the applicability of the proposed forecasting model selection method across different agricultural products, we selected the spot prices of corn and wheat as case studies for empirical analysis. The average spot prices of corn and wheat were obtained from the Wind database (www.wind.com.cn); the corn spot price is available at daily frequency from January 1989 to December 2019, and the wheat spot price at daily frequency from January 1999 to December 2019, as shown in Table 1. Because of the epidemic, more recent data could not be obtained at the time of writing.

Experimental design

There are two primary forecasting model selection schemes: Transfer Learning (TL)31 and S-Folder Cross Validation (S-FCV)32. The IFFA-TSCI-CNN-TL Method is chosen in this study due to its ability to address the issue of insufficient data. The time series data selected for this experiment includes 10,950 daily price timestamps for corn and 7,300 daily price timestamps for wheat. However, these two time series are relatively short and consecutive, limiting the model’s robustness due to insufficient training data. To mitigate this, the TL scheme is employed to enhance model training. The experimental procedure can be broadly divided into four stages: time series preprocessing, time series image coding, transfer learning, and forecasting model selection.

Time Series Preprocessing: Given the large number of data points in the selected time series for corn and wheat, directly encoding them into images is impractical (e.g., converting the corn time series into an image results in a pixel matrix of size 10,950\(\times\)10,950, which is computationally prohibitive). Therefore, both time series are divided into sub-series of 50 data points each, with the resulting sub-series subsequently allocated to test sets. Missing or incomplete data are addressed using interpolation methods, and labels are assigned to each sub-series after segmentation. For model selection, we consider four linear forecasting models, namely the Autoregressive Integrated Moving Average model (ARIMA), the Exponential Smoothing State Space model (ETS), Random Walk (RW), and the Theta model (Theta), and two nonlinear models, namely the Backpropagation Neural Network (BPNN) and the Extreme Learning Machine (ELM), as candidates. We use the forecast package, version 8.21.1 (https://cran.r-project.org/package=forecast), in R 4.3.1 (https://cran.r-project.org/) to forecast each generated sub-series with the six candidate models. The model that yields the lowest prediction error, measured by the Mean Absolute Percentage Error (MAPE), is selected as the optimal model for each sub-series.
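The segmentation and labeling procedure can be sketched in Python as follows. This is only an illustration of the idea: the two toy forecasters stand in for the six candidate models (which the study fits in R), the hold-out `horizon` of 5 points is an assumption that the text does not specify, and the price path is synthetic.

```python
import numpy as np

def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return 100.0 * np.mean(np.abs((actual - predicted) / actual))

def segment(series, length=50):
    """Cut a series into consecutive, non-overlapping sub-series."""
    x = np.asarray(series, dtype=float)
    n_full = x.size // length          # an incomplete tail is dropped
    return x[: n_full * length].reshape(n_full, length)

# Toy stand-ins for the candidate models (hypothetical, not the paper's six).
def random_walk(history, horizon):
    return np.full(horizon, history[-1])

def historical_mean(history, horizon):
    return np.full(horizon, history.mean())

CANDIDATES = {"RW": random_walk, "MEAN": historical_mean}

def label_subseries(sub, horizon=5):
    """Label a sub-series with the candidate that has the lowest MAPE."""
    history, future = sub[:-horizon], sub[-horizon:]
    errors = {name: mape(future, f(history, horizon))
              for name, f in CANDIDATES.items()}
    return min(errors, key=errors.get), errors

rng = np.random.default_rng(0)
prices = 100.0 + np.cumsum(rng.normal(size=230))  # synthetic price path
subs = segment(prices, 50)
labels = [label_subseries(s)[0] for s in subs]
```

Each label then becomes the class target of the image built from the same sub-series, so the classifier learns to map image patterns to the best-performing forecaster.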

Time Series Image Coding: Four types of time series images are generated from the segmented agricultural product price data. These images are then combined using the IFFA method, with corresponding labels assigned to each composite image.

Transfer Learning: In the transfer learning phase, the public M3 dataset is used as the transfer learning library for both the training and validation sets, while the agricultural product price time series serves as the test set. After applying the IFFA-TSCI-CNN procedure to the training data, a predictive model classifier is synthesized. Applying the IFFA-TSCI-CNN algorithm to the M3 dataset enhances the features of the time series images in the M3 transfer learning library, which in turn improves the robustness and generalization ability of the transfer learning models.

Forecasting Model Selection: In the final stage, the segmented time series images of agricultural product prices are input into the trained forecasting model. The optimal prediction model is selected based on the MAPE of each time series, and the mean MAPE of all time series is computed to evaluate overall model performance.

The alternative approach, S-FCV, consists of four stages: time series preprocessing, time series image coding, classifier training, and forecasting model selection.

IFFA-TSCI-CNN-SFCV Method: In the preprocessing stage, the corn and wheat time series are segmented into sub-series of 15 data points. The segmented sub-time series are split into training, validation, and test sets in a 6:2:2 ratio. Given that the total number of sub-sequences is less than 1,000, overfitting may occur. Therefore, S-FCV is applied to the validation and test sets, enabling the entire training set to be utilized for both training and validation. This approach allows for rapid parameter adjustments and improves the model’s ability to handle insufficient data.
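The fold rotation behind S-fold cross-validation can be sketched with NumPy as follows. This is a generic illustration rather than the authors' pipeline: the generator name `s_fold_indices` and the fixed `seed` are hypothetical, and three folds are used only because that value is listed among the experimental parameters.

```python
import numpy as np

def s_fold_indices(n_samples, n_folds=3, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled S-fold cross-validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)        # shuffle once up front
    folds = np.array_split(order, n_folds)    # near-equal folds
    for i in range(n_folds):
        val_idx = folds[i]
        train_idx = np.concatenate(
            [folds[j] for j in range(n_folds) if j != i]
        )
        yield train_idx, val_idx

splits = list(s_fold_indices(10, n_folds=3))
```

Every sample is used for validation exactly once and for training in the remaining rounds, which is why the scheme is attractive when fewer than 1,000 sub-series are available.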

Time Series Image Coding: In the image coding phase, 1D sub-time series are converted into 2D time series images (2D-TSI), with the optimal prediction model assigned as the label. The IFFA method is used to concatenate time series images, enhancing features and improving classification accuracy.

Classifier Training: Sub-time series images from the training and validation sets, after data and feature enhancement, are input into a CNN model for training. The trained CNN classifier is then used to classify the sub-time series in the test set.

Forecasting Model Selection: In the model selection stage, the optimal prediction model for each sub-time series is assigned based on the labels, and the MAPE of the test set is computed. The MAPE is then averaged across all sub-time series to assess the model’s overall performance.

The flowcharts for the TL and S-FCV experimental schemes are illustrated in Fig. 3.

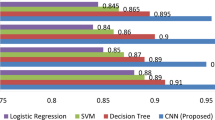

The baseline models include SVM, MLP, DTW, TSI-CNN, IFFA-TSCI-CNN, and the six candidate single models. They fall into four groups: linear single models, nonlinear single models, machine learning models, and CNN time series image models.

Experimental parameter

For model training and evaluation, we implement the following experimental settings:

- Cross-validation with 3 folds and shuffling (random state=np.random)

- Batch size: 16 for CNN training

- Learning rate: 0.0001 with decay factor 0.93 per epoch

- Training epochs: 200 (until loss convergence)
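The learning-rate setting above can be made concrete with a small sketch. It assumes that "decay factor 0.93 per epoch" means a multiplicative exponential decay, i.e. the rate at epoch e is 0.0001 times 0.93 to the power e; the source does not state the exact scheduler, and the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, base_lr=1e-4, decay=0.93):
    """Learning rate at a given epoch under per-epoch multiplicative decay."""
    return base_lr * decay ** epoch

# Schedule over the 200 training epochs listed above.
schedule = [learning_rate(e) for e in range(200)]
```

Under this assumption the rate falls below 1e-10 by the final epoch, so almost all weight updates of practical size occur in the early epochs.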

We evaluate prediction accuracy using Mean Absolute Percentage Error (MAPE), defined as:

\[\mathrm{MAPE} = \frac{100\%}{n} \sum _{t=1}^{n} \left| \frac{Y_t - \hat{Y}_t}{Y_t} \right|\]

where:

- \(Y_t\) is the true value at time t

- \(\hat{Y}_t\) is the predicted value at time t

- n is the total number of samples

Results

Discussion

The experimental results of the Transfer Learning (TL) scheme are presented in Tables 1-5, from which the following conclusions can be drawn:

(1) Nonlinear forecasting models outperform linear models for highly volatile time series

As shown in Table 2, the baseline model demonstrates that nonlinear models exhibit a slightly superior forecasting performance compared to linear models. This observation is particularly evident in the context of agricultural product price fluctuations, which have remained relatively volatile in recent years. The nonlinear models are better equipped to capture the complexities and non-linear patterns inherent in such volatile time series.

(2) Advantages of deep learning networks

Tables 2 and 3 illustrate that, within the TL framework, traditional machine learning models struggle to perform effectively when confronted with time series data of large volume and complexity. In contrast, deep learning models, which feature more advanced network architectures, are better suited for handling such data. The ability of deep learning models to perform multi-level feature extraction is crucial for addressing the challenges posed by complex and high-dimensional time series data.

(3) Deep learning networks demonstrate superior accuracy in model selection for IFFA-TSCI

As shown in Tables 4, 5, and 6, DenseNet-121, which features the deepest network layers, delivers the best performance in selecting prediction models for Time Series Images (TSI) and the IFFA-TSCI method. The increased depth and complexity of the network architecture are particularly advantageous for handling time series data characterized by significant fluctuations. Furthermore, the IFFA-TSCI-CNN approach outperforms other methods on the actual dataset, effectively demonstrating the superiority of the proposed methodology in terms of both accuracy and robustness.

These results underscore the importance of leveraging deep learning models with deeper architectures for time series forecasting, particularly when dealing with highly volatile data such as agricultural prices. The proposed IFFA-TSCI-CNN method represents a significant improvement over traditional methods, offering enhanced predictive accuracy and better handling of complex, fluctuating time series data.

(4) Comparison between the TL scheme and the S-FCV scheme

A comparison between the TL scheme and the S-FCV scheme, as shown in Tables 2 and 7, reveals that the prediction errors of the single prediction models in the TL scheme are generally lower than those in the S-FCV scheme. This difference can be attributed to the fact that the subsequences in the TL scheme are longer than those in the S-FCV scheme. With longer time series, the prediction model has the opportunity to capture the underlying trends and cycles over a greater number of lag periods, leading to improved prediction accuracy.

As presented in Tables 8, 9 and 10, within the TL framework, the TSI-CNN model selection method consistently outperforms traditional machine learning methods in terms of prediction accuracy. Notably, the IFFA-TSCI-CNN method, which we propose, surpasses the TSI-CNN model across three distinct CNN architectures. This comparison strongly supports the superior performance of the IFFA-TSCI-CNN method for time series forecasting tasks.

In contrast, the S-FCV scheme (as shown in Tables 9, 10 and 11) exhibits higher model selection errors for DenseNet-121-TSI when compared to VGG-11-TSI and ResNet-18-TSI. This can be explained by the relatively limited amount of agricultural product price data available, which affects the performance of the DenseNet model. DenseNet, with its deeper network layers, requires larger datasets to fully leverage its capacity for learning complex features. In scenarios with insufficient data, its performance is hindered, whereas VGG-11 and ResNet-18, which have fewer layers, are better suited for such data constraints.

Finally, the MAPE comparisons for each method in both the TL and S-FCV schemes are presented in Figs. 4–13, providing further insight into the relative forecasting accuracy of the methods under consideration.

These findings underscore the importance of subsequence length and model architecture in time series forecasting, particularly when working with datasets of varying sizes and complexities. The results highlight the advantages of the TL scheme, and specifically the IFFA-TSCI-CNN method, over traditional machine learning models, especially in handling longer time series with more complex patterns.
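To make the MAPE-based comparison concrete, the following minimal Python sketch computes MAPE for several candidate forecasts of one subsequence and takes the lowest-error model as that subsequence's class label, which is how model selection can be read as a classification task. The function names, model names, and numbers are hypothetical and chosen only for illustration; they are not taken from the experiments reported here.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


def best_model_label(actual, forecasts):
    """Return (name of the lowest-MAPE model, dict of per-model MAPE)."""
    scores = {name: mape(actual, pred) for name, pred in forecasts.items()}
    return min(scores, key=scores.get), scores


# Hypothetical prices and forecasts for a single subsequence (illustrative only).
actual = [100.0, 110.0, 120.0, 125.0]
forecasts = {
    "linear": [90.0, 99.0, 108.0, 112.5],         # every forecast 10% too low
    "nonlinear": [105.0, 115.5, 126.0, 131.25],   # every forecast 5% too high
}

label, scores = best_model_label(actual, forecasts)
print(label, scores)
```

In this toy case the "nonlinear" forecast has the smaller MAPE, so it becomes the label that a classifier would be trained to predict from the image of that subsequence.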

Conclusion

In this study, we apply a temporal classification method based on image encoding technology and convolutional neural networks (CNNs) to time series prediction. The primary objective is to integrate domain-specific knowledge with advanced classification techniques and leverage these methods for forecasting agricultural price time series. The key contributions of this work are as follows:

(1) Transformation of Predictive Modeling into Time Series Image Classification. We redefine the problem of model selection for time series forecasting as a classification problem for time series images. Rather than relying on traditional feature engineering techniques, we utilize computer vision approaches to automatically extract features from time series images through CNNs. This approach significantly reduces the manual effort required for feature selection, offering a more efficient solution for model development.

(2) Enhancement of Feature Representation in CNN Training. To address the potential feature loss that may arise during the CNN training process when dealing with time series images, we introduce the IFFA-TSCI-CNN method. This method involves the splicing of time series images, thereby enhancing feature representation and improving the overall accuracy of model selection in the context of time series classification. This enhancement helps to capture more meaningful patterns within the data, which in turn improves forecasting performance.

(3) Superior Performance on Agricultural Price Data. Our proposed IFFA-TSCI-CNN model achieves the highest selection accuracy when applied to spot price data for corn and wheat. To ensure the reliability and robustness of our findings, we conduct extensive ablation experiments, systematically validating the model’s performance across different configurations. These results demonstrate the effectiveness of the IFFA-TSCI-CNN method in forecasting agricultural prices.

Additionally, we recognize the growing importance of multivariate approaches for time series forecasting, particularly in practical applications where the diversity of information plays a critical role. Future work will focus on extending the algorithm’s capabilities to multivariate time series forecasting. We also aim to integrate methodological advances with real-world practices more closely, enhancing the practical applicability of our approach.

These contributions collectively advance the state of the art in time series forecasting, particularly for agricultural prices, and provide a foundation for future research into multivariate and more complex time series prediction tasks.
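The image encoding and splicing summarized in contribution (2) can be illustrated with a minimal NumPy sketch. It encodes one series as a Gramian Angular Summation Field, a Markov Transition Field, and a Recurrence Plot, then concatenates the three images side by side. The side-by-side layout, the number of quantile bins, the recurrence threshold `eps`, and all function names are assumptions made for illustration; the sketch is not the IFFA implementation used in this study.

```python
import numpy as np


def rescale(x):
    """Min-max rescale a non-constant 1-D series to [-1, 1]."""
    x = np.asarray(x, dtype=float)
    return 2.0 * (x - x.min()) / (x.max() - x.min()) - 1.0


def gasf(x):
    """Gramian Angular Summation Field: entry (i, j) is cos(phi_i + phi_j)."""
    phi = np.arccos(np.clip(rescale(x), -1.0, 1.0))
    return np.cos(phi[:, None] + phi[None, :])


def mtf(x, n_bins=4):
    """Markov Transition Field built from quantile bins (n_bins is an assumption)."""
    x = np.asarray(x, dtype=float)
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    bins = np.digitize(x, edges)                 # bin index in 0..n_bins-1
    counts = np.zeros((n_bins, n_bins))
    for a, b in zip(bins[:-1], bins[1:]):        # first-order transitions
        counts[a, b] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    probs = np.divide(counts, row_sums,
                      out=np.zeros_like(counts), where=row_sums > 0)
    return probs[bins[:, None], bins[None, :]]   # spread probabilities over time


def recurrence_plot(x, eps=0.1):
    """Binary recurrence plot: 1 where two rescaled points are within eps."""
    x = rescale(x)
    return (np.abs(x[:, None] - x[None, :]) <= eps).astype(float)


def splice(x):
    """Concatenate the three encodings into one n x 3n image with values in [0, 1]."""
    return np.hstack([(gasf(x) + 1.0) / 2.0, mtf(x), recurrence_plot(x)])


# Hypothetical price subsequence (illustrative only).
prices = 100.0 + 10.0 * np.sin(np.linspace(0.0, 4.0 * np.pi, 32))
image = splice(prices)
print(image.shape)
```

The GASF is shifted from [-1, 1] to [0, 1] before splicing so that all three panels share one value range, which is a design choice of this sketch; the resulting array could then be passed to a CNN classifier as a single-channel image.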

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ahmadi, H. et al. Impact of varied tillage practices and phosphorus fertilization regimes on wheat yield and grain quality parameters in a five-year corn-wheat rotation system. Sci. Rep. 14, 14717. https://doi.org/10.1038/s41598-024-65784-w (2024).

Zhang, Y. et al. Covid-19, the Russia-Ukraine war and the connectedness between the US and Chinese agricultural futures markets. Humanit. Soc. Sci. Commun. 11, 477. https://doi.org/10.1057/s41599-024-02852-6 (2024).

Stoll, H. & Whaley, R. The dynamics of stock index and stock index futures returns. J. Financ. Quant. Anal. 25, 441–468 (1990).

Brooks, R. Power ARCH modelling of the volatility of emerging equity markets. Emerg. Mark. Rev. 124–133 (2007).

Bunnag, T. Hedging petroleum futures with multivariate GARCH models. Int. J. Energy Econ. Policy 5, 105–120 (2015).

Huang, W., Huang, Z., Matei, M. & Wang, T. Price volatility forecast for agricultural commodity futures: the role of high frequency data. J. Econ. Forecast. 83–103 (2012).

Zhu, B., Ye, S., Wang, P., Chevallier, J. & Wei, Y. Forecasting carbon price using a multi-objective least squares support vector machine with mixture kernels. J. Forecast. 41, 100–117 (2022).

Ribeiro, M., da Silva, R., Moreno, S. et al. Variational mode decomposition and bagging extreme learning machine with multi-objective optimization for wind power forecasting. Appl. Intell. 54, 3119–3134. https://doi.org/10.1007/s10489-024-05331-2 (2024).

Wen, F., Xiao, J., He, Z. et al. Stock price prediction based on SSA and SVM. Procedia Comput. Sci. 31, 625–631 (2014).

Zhu, Q. et al. A hybrid vmd-bigru model for rubber futures time series forecasting. Appl. Soft Comput. 84, 105739 (2019).

Liu, W. et al. Ensemble forecasting for product futures prices using variational mode decomposition and artificial neural networks. Chaos Solitons Fract. 146, 110822 (2021).

Wang, B. & Wang, J. Deep multi-hybrid forecasting system with random ewt extraction and variational learning rate algorithm for crude oil futures. Expert Syst. Appl. 161, 113686 (2020).

Yu, L., Wang, Z. & Tang, L. A decomposition–ensemble model with data characteristic-driven reconstruction for crude oil price forecasting. Appl. Energy 156, 251–267 (2015).

Lingyu, T., Jun, W. & Chunyu, Z. Mode decomposition method integrating mode reconstruction, feature extraction, and elm for tourist arrival forecasting. Chaos Solitons Fract. 143, 110423 (2021).

Wang, J. & Li, X. A combined neural network model for commodity price forecasting with ssa. Soft Comput. 22, 5323–5333 (2018).

Gurina, E. et al. Forecasting the abnormal events at well drilling with machine learning. Appl. Intell. 52, 9980–9995. https://doi.org/10.1007/s10489-021-03013-x (2022).

Li, Y., Zheng, W. & Zheng, Z. Deep robust reinforcement learning for practical algorithmic trading. IEEE Access 7, 108014–108022 (2019).

Jeong, G. & Kim, H. Improving financial trading decisions using deep q learning: Predicting the number of shares, action strategies, and transfer learning. Expert Syst. Appl. 117, 125–138 (2019).

Wu, X. et al. Adaptive stock trading strategies with deep reinforcement learning methods. Inf. Sci. 538, 142–158 (2020).

Gao, Z., Gao, Y., Hu, Y., Jiang, Z. & Su, J. Application of deep q-network in portfolio management. In 2020 5th IEEE International Conference on Big Data Analytics (ICBDA), 268–275 (IEEE, 2020).

Jiang, W. et al. Time series to imaging-based deep learning model for detecting abnormal fluctuation in agriculture product price. Soft Comput. 27, 14673–14688 (2023).

Jiang, W., Zhang, D., Ling, L. et al. Time series classification based on image transformation using feature fusion strategy. Neural Process. Lett. 54, 3727–3748 (2022).

Ghasemieh, A. & Kashef, R. An enhanced wasserstein generative adversarial network with gramian angular fields for efficient stock market prediction during market crash periods. Appl. Intell. 53, 28479–28500 (2023).

Chai, X. et al. Tpe-mm: Thumbnail preserving encryption scheme based on markov model for jpeg images. Appl. Intell. 54, 3429–3447 (2024).

Faisal, M. et al. Nddnet: a deep learning model for predicting neurodegenerative diseases from gait pattern. Appl. Intell. 53, 20034–20046. https://doi.org/10.1007/s10489-023-04557-w (2023).

Wu, Y. et al. Da-resnet: dual-stream resnet with attention mechanism for classroom video summary. Pattern Anal. Applic. 27, 32. https://doi.org/10.1007/s10044-024-01256-1 (2024).

Vignesh, S. et al. A novel facial emotion recognition model using segmentation vgg-19 architecture. Int. J. Inf. Technol. 15, 1777–1787. https://doi.org/10.1007/s41870-023-01184-z (2023).

Zhu, C. et al. Image classification based on tensor network densenet model. Appl. Intell. 54, 6624–6636 (2024).

Liu, Q. Application research and improvement of weighted information fusion algorithm and Kalman filtering fusion algorithm in multi-sensor data fusion technology. Sens. Imaging 24, 43. https://doi.org/10.1007/s11220-023-00448-z (2023).

Wang, Y. & Ouyang, Y. A new time series classification approach based on gramian angular field and markov transition field. Knowledge-Based Syst. 88, 33–46. https://doi.org/10.1016/j.knosys.2015.05.017 (2015).

Bao, J. et al. Redirected transfer learning for robust multi-layer subspace learning. Pattern Anal. Applic. 27, 25. https://doi.org/10.1007/s10044-024-01233-8 (2024).

Dupierris, V. et al. Validation of MS/MS Identifications and Label-Free Quantification Using Proline, vol. 2426 of Methods in Molecular Biology, 1–12 (Humana, New York, NY, 2023).

Acknowledgements

This work was supported by the Guangdong Basic and Applied Basic Research Foundation (2023A1515110618) and the Wuxi University Research Startup Fund for High-Level Talents.

Author information

Contributions

Wentao Jiang: conceptualization, methodology, resources, writing - original draft. Quan Wang: validation, writing - review and editing. Hongbo Li: funding acquisition, project administration, supervision.

Corresponding author

Correspondence to Wentao Jiang.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jiang, W., Wang, Q. & Li, H. Two forecasting model selection methods based on time series image feature augmentation. Sci Rep 15, 27217 (2025). https://doi.org/10.1038/s41598-025-10072-4

DOI: https://doi.org/10.1038/s41598-025-10072-4