Abstract

The timely detection of abnormal health conditions is crucial in achieving successful medical intervention and enhancing patient outcomes. Despite advances in health monitoring, existing methods often struggle with achieving high accuracy, sensitivity, and specificity in real-time detection. This work addresses the need for improved performance in health monitoring systems in real time sensor data. In this work, real-time health monitoring data is obtained through the utilization of MAX 30102 and LM35 sensors, which capture the physiological features such as heart rate, blood oxygen levels and body temperature. The acquired data from these sensors is then transmitted to ThingSpeak, a cloud-based platform developed for the Internet of Things (IoT), where the data are analysed. In order to ensure consistency, the sensed features are subjected to a standardization process, ensuring they are scaled uniformly. In this work joint process estimator rider optimization algorithm (JPEROA) for Deep stack auto-encoder is proposed to perform the classification task. In JPEROA algorithm line coefficients and delay coefficients parameters are estimated to improve the performance of the system. The performance of the proposed method is compared with other five machine learning algorithms, including Support Vector Machine, Random Forest, Gradient Boosting, Naive Bayes, and Multilayer Perceptron neural networks. The proposed method also evaluated using PTB Diagnostic dataset signals. The performance of the algorithms is assessed using multiple performance metrics such as accuracy, sensitivity and specificity. The proposed method provides a maximum accuracy of 0.9625 and maximum sensitivity of 0.975 and specificity of 0.95.

Similar content being viewed by others

Introduction

In the healthcare industry, the timely detection of abnormal health conditions is of utmost importance, as it can significantly impact patient outcomes and healthcare costs. With the advent of sensor technology, health monitoring applications have become more sophisticated and effective, as sensors are capable of detecting and measuring various physical quantities, such as heart rate, blood oxygen levels, and body temperature, which are essential indicators of an individual’s health status. Moreover, the integration of machine learning algorithms with sensor technology has paved the way for a more efficient and accurate approach to detecting abnormal health conditions. Large sensor data sets can be analyzed by machine learning algorithms to produce insights that are difficult to obtain using conventional statistical techniques. They can learn patterns and trends from the data and provide predictions with a high degree of accuracy.

A fog computing-based event triggering technique for remote health monitoring was described by Verma et al.1. The work uses a Bayesian belief network classifier to classify data on a cloud server. The work also analyses distributed storage, embedded data mining, and notification services. For remote patient monitoring, Kar et al.2 described the analytic Hierarchy method and dynamic multi-attribute caching technique. Based on the doctors decision, the sensor data in the work are medically rated. The method’s latency is 23.8% lower than FIFO. Automatic signal quality analysis for an IoT-enabled ECG monitoring system was explained by Satija et al.3. The battery life of IoT-enabled devices is also examined in this work. The accuracy and dependability of unsupervised diagnosis systems are enhanced by the autonomous signal quality analysis method. An IOT system designed by Pathak et al.4 does not rely on physical ports. The technique has the benefit of having a compact form factor and supports the simultaneous operation of many homogenous sensors. The mesh network arrangement has an average packet delivery ratio of 0.98, whereas the star network configuration offers an average of 0.92. Additionally, the system offers good reliability.

A health-care data management method was described by Souvik Sengupta et al.5 in order to store huge amount of health data on the cloudlet’s server. The store health data is handled in response to end-user enquiries. The data is stored on the cloud server using the NoSQL approach. The system’s performance is evaluated based on energy usage and packet loss. Lindquist et al.6 suggested an IOT architecture to improve the adoption and efficacy between the variety of IOT Sensors. The architecture examines IOT-based mobile health applications. The method influence the design of more generalized IoT architectures for health-specific solutions. The Finite Element Analysis approach was described by Fawad et al.7 for the creation of a structural health monitoring system. The technique makes use of MEMS accelerometers and liquid levelling sensors for monitoring. In the process of creating the virtual environment, 3D Game Engine and BIM technologies are utilized. A smart textile-based long-range wearable antenna for short- and long-range temperature and heart rate monitoring was described by iman et al.8. The technique evaluates the rate at which electromagnetic radiation is absorbed. The technique improves wearable devices functionality.

Li et al.9 used a PPG sensor and a multichannel data collecting board to develop a health monitoring system. The sensor’s bending radius is 66.6 μm, and its power consumption is 30.4 mW. The method provide an average correlation of 96.21%. For applications involving remote human health monitoring, Phukan et al.10 described an indexed system for physical activity data. The convolutional neural network model examines the datasets for human activity recognition to determine whether it is feasible in real time. The method achieved an accuracy of 89.05% with processing time of 0.142ms. For Internet of Things applications, wearable environmental monitoring devices were explained by Mamun et al.11. To find connections between environments, the approach uses a fine-grain based spatiotemporal distribution.

A labor progress tracking system was designed by Gong et al.12 to help medical professionals intervene when pregnant women start having contractions. To identify contraction activity, a sensor device is affixed to the abdomen. For contraction categorisation, the edge service approach is employed, and the system’s accuracy is more than 96%. IoT-based vital sign monitoring of intensive care unit patients was described by Wang et al.13. This technique measures physiological parameters without making contact by using optical sensors. The cardio-respiratory measurement is done using the photoplethysmography algorithms. The accuracy of the approach was 94.5%. Spachos et al.14 discussed voice activated IoT devices with low power. The approach examines the patterns and particular difficulties of voice-activated sensors in the medical field. Jaffino et al.15,16 described how machine learning methods improve the accuracy of sensor-based accident detection systems based on IOT technology. To improve neural network performance, different optimization strategies depending on rider parameters are used. In the field of health monitoring, the utilization of various sensor networks enables the collection of data from nodes in different states. This data can then be analyzed using guided waves, hierarchical non-linear primary component analysis, and machine learning techniques to detect and classify the abnormality. Additionally, this study encompasses the implementation of vibration-based and vision-based surveillance methods, along with the exploration of recent developments in smart health monitoring. Multi-modal fusion method is used in U-net for classifying Alzheimer’s Disease. The Densely Connected Feature Aggregator Module is used to analyze the features of EEG signals17,18. Various Optimization techniques for training the Deep learning network are discussed in19,20,21,22,23,24,25. These optimization method are examined in terms of accuracy, sensitivity and specificity. To overcome these accuracy challenges, it is imperative to explore potential improvements and employ corrective measures in the deep learning algorithms. Khan et al.26 suggested an IOT-based fertilizer recommendation system based on the real-time soil fertility. Multi-modal fusion method and various machine learning models27 was discussed for various engineering applications. The connected feature Aggregator Module is used to analyze the various features extracted from the raw data. Deep dual-patch attention mechanism28 was used to improve the stability of the deep learning networks. This method transforms the bio signals into data blocks. For categorization tasks, a variety of machine learning models are employed, including K-Nearest Neighbour and Support Vector Machine.

The existing methods of health monitoring system have limited accuracy, sensitivity, and specificity in real-time abnormal health condition detection. The traditional machine learning algorithms have low performance on large datasets, sensitivity to noise, and potential for high false positive and false negative rates.The objective of this research work is to develop and implement the Joint Process Estimator Rider Optimization Algorithm (JPEROA) within a Deep Stack Auto-Encoder framework for real-time health monitoring. The second objective is to evaluate the effectiveness of the JPEROA method in improving the classification accuracy, sensitivity, and specificity of detecting abnormal health conditions. The work also compares the performance of the JPEROA approach with other established machine learning algorithms (Support Vector Machine, Random Forest, Gradient Boosting, Naive Bayes, and Multilayer Perceptron) using the PTB Diagnostic dataset.

The major contributions of this research work are listed below:

Proposed embedded system for measuring the bio signals: This research presents a novel embedded system that is built upon the ESP32 Wi-Fi module and incorporates the Max30102 sensor and LM35 sensor. The primary objective of this embedded system is to capture and measure the bio signals of patients.

Proposed joint process estimator rider optimization for deep stack auto-encoder: In JPEROA algorithm line coefficients and delay coefficients parameters are estimated to improve the performance of the optimization algorithm. The gradient method is used to adjust the coefficients to reduce the error, which improves the accuracy of the system. The deep stack auto encoder network is trained using the proposed joint process estimator which is obtained by integrating the joint process estimation in rider optimization algorithm.

Proposed method

The proposed method for detecting abnormal health conditions of a person using joint process estimator-based rider optimization for Deep stack auto-encoder is shown in Fig. 1. In this work the health monitoring system capture the data such as heart rate, blood oxygen level, and body temperature using MAX 30102 and LM35 sensors. The collected sensor data is transferred to Thing Speak, a cloud-based platform for the Internet of Things, and utilized for the analysis.

The proposed method employs a feature selection process to ensure that the model exclusively trains on relevant features, while the target variable is separated for training purposes. Subsequently, the program proceeds with hyperparameter tuning, wherein the search space for hyperparameters, such as learning rate, maximum depth, and the number of estimators, is defined for various algorithms. Grid search is then utilized to perform hyperparameter tuning on the model. This technique randomly selects combinations of hyperparameters from the defined search space and evaluates their performance using cross-validation. The objective is to identify the optimal hyperparameters that enable the model to achieve its highest performance potential. Once the hyperparameters are tuned, a distinct classifier model is constructed using the selected hyperparameters and trained on the pre-processed training data. During the model training phase, the program utilizes the pre-processed data to instruct the model on how to make accurate predictions. Following the model training, the system evaluates its performance on unfamiliar data. The system provides the predicted health status of unknown patients using the trained model, along with the best hyperparameters identified through machine learning algorithms. Additionally, a classification report is generated, which offers a comprehensive analysis of the model’s performance in each class, presenting precision, recall, f1-score, and support for each class. This analysis aids in identifying areas that require improvement, thereby facilitating the enhancement of patient outcomes.

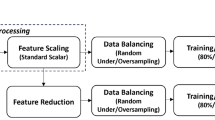

Pre-processing

Several pre-processing steps were undertaken in this research to preprocess the dataset and prepare it for the subsequent training and testing of machine learning models. The first step in pre-processing was to find the missing values from the dataset. Missing values are a common issue in datasets and can significantly affect the performance of the resulting models. Therefore, it is crucial to identify and find any missing values in the dataset. The missing values are handled using Linear Regression method. This approach predicts missing values by constructing a regression model based on the relationships between other features in the dataset. This method was chosen due to its effectiveness in preserving the linear relationships inherent in the data, ensuring that the imputed values are consistent with the existing data patterns. In this work a threshold of 10% was set for acceptable levels of missing data per features. The second pre-processing step was outlier removal. Outliers are data points that are significantly different from the rest of the data and can affect the model’s performance. Therefore, it is important to identify and remove any outliers in the dataset to ensure that the model is not affected by them. Finally, standardization was applied to scale the features in the dataset to the same scale. This is done to ensure that each feature has a similar impact on the model and to prevent features with larger scales from dominating the model. Standardization is a common preprocessing step in machine learning and is often used to improve the performance of the resulting model. By removing missing values and outliers and standardizing the features in the dataset, the resulting models were able to achieve high levels of accuracy and performance.

Support vector machine

The support vector machine (SVM) algorithm is a powerful tool utilized for classification and regression tasks. Its primary objective is to identify the optimal hyperplane that effectively separates data points into distinct classes. The hyperplane, determined by the SVM algorithm, maximizes the margin between these classes. The margin represents the distance between the hyperplane and the nearest data points from each class. The SVM model’s performance is enhanced when the margin is maximized, leading to improved generalization capabilities and better overall performance. The hyperplane of SVM is described as the decision boundary that separates the data into distinct classes, which is expressed as.

where w is the weight vector, x is the input vector, and b is the bias. The objective of the classifier is to find the values of w and b that satisfy the following constraints.

where yi is the class label (+ 1 or − 1) of the i-th data point. This constraint ensures that all data points are correctly classified and that they are on the correct side of the hyperplane.The margin of the classifier is expressed as.

where yi is the class label (+ 1 or − 1) of the i-th data point. This constraint ensures that all data points are correctly classified and that they are on the correct side of the hyperplane.The margin of the classifier is expressed as.

Where ||w|| is the norm of the weight vector. The primary objective of the support vector machine (SVM) algorithm is to maximize the margin while adhering to the specified constraint. To achieve this, the Lagrange multipliers technique is employed to determine the optimal values for the weight vector and bias. By converting the optimization problem into a dual problem, the Lagrange multipliers enable more efficient solution finding. Once the optimal values for the weight vector and bias are obtained, the classifier can be utilized to classify new data points. In essence, the SVM algorithm is a potent machine learning approach that operates by identifying the hyperplane that maximizes the margin between two classes. It accomplishes this by mapping the data to a higher-dimensional space and determining the appropriate values for the weight vector and bias. The SVM algorithm finds widespread application in various domains, including image classification, text classification, and bioinformatics.

Random forest classifier

Random Forest, a prominent algorithm in the field of machine learning, harnesses the collective power of multiple decision trees to yield accurate predictions in both classification and regression scenarios. Each decision tree in the ensemble is constructed by randomly selecting a subset of the training data and a subset of the features. This deliberate randomization strategy mitigates the risk of overfitting and enhances the algorithm’s ability to generalize to unseen data. Moreover, the algorithm assesses the significance of each feature using the mean decrease impurity metric, which quantifies the impact on model accuracy when a particular feature is randomly permuted. In classification tasks, the final prediction is determined by majority voting among the individual tree predictions, while regression tasks utilize the average of the individual tree predictions as the final output. The Random forest prediction is expressed as

Here \(\hat {H}\) represent the predicted class label for the input data x and mode (⋅) denotes the mode function that returns the most frequent prediction among the decision trees. \({m_T}(x)\)represent the prediction of t-th decision tree in the RF classifier for input x. The prediction of \({m_t}(x)\) is for a given decision tree is expressed as

The combined prediction of the overall decision tree is described as

Here d denotes the class label, \({m_t}(x)\) is the prediction of the t-th decision tree, and K() is the indicator function that returns 1 if the condition is true and 0 otherwise. The class label with the highest sum of indicators is chosen as the final prediction.

Naive Bayes

Naive Bayes, a widely employed classification algorithm in machine learning, stands out for its effectiveness across diverse applications. The algorithm draws its foundations from Bayes theorem, a fundamental theorem in the realm of probability theory. Naive Bayes operates by computing the conditional probability of each class, considering the feature values of a new data point, and subsequently selects the class with the highest probability as the predicted class. The algorithm employs a simplifying assumption that all features are independent of each class, thus allowing for efficient and scalable calculations.

In naïve bayes classifier C denote the set of class labels and x be the sensor data values. The prediction of naïve bayes classifier is based on maximum a posteriori (MAP) estimation which is computed as

The likelihood function \(P({{{m_i}} \mathord{\left/ {\vphantom {{{m_i}} c}} \right. \kern-0pt} c})\) is expressed as

where \({\mu _{c,i}}\) is the mean and \({\sigma _{c,i}}\) is the standard deviation for feature i in class c

Gradient boosting

Gradient Boosting, an influential machine learning algorithm, has garnered substantial attention for its ability to generate robust predictive models through the fusion of multiple weak models. The algorithm employs a sequential strategy, wherein each subsequent model is trained on the residual errors left unexplained by the previous models. This iterative process enables the algorithm to progressively refine its predictions by capitalizing on the accumulated knowledge of prior models. At the outset, the Gradient Boosting model is initialized with a constant value, typically the mean of the target variable. Subsequently, in each iteration, a weak learner is fitted to the negative gradient of the loss function, which guides the algorithm towards the most substantial reduction in the loss function relative to the current model’s prediction.

In gradient bosting algorithm M represent the number of base learners. The final prediction for an input sensor data is calculated as

where \(\eta\)is the learning rate and \({m_i}(x)\) is the prediction of the i-th base learner on input feature vector x. The training algorithm update equation of gradient boosting algorithm is expressed as

where \({\alpha _t}\)is the optimal weight values,\({h_t}\)is the base learner, H is the loss function and \({F_{t - 1}}\) is the current prediction.

Multilayer perceptron

The multilayer perceptron (MLP) is a neural network architecture characterized by its interconnected neurons or nodes, organized into multiple layers. It finds wide applications in supervised learning scenarios, encompassing regression and classification tasks. The output of each neuron is determined by a non-linear activation function, which computes the weighted sum of inputs received by the neuron. This output is then propagated to the subsequent layer of neurons, continuing until the final output layer is reached, generating the network’s prediction. The weights and biases of the MLP are learned through a process called backpropagation. Backpropagation involves adjusting the weights and biases based on the disparity between the network’s prediction and the actual output. It accomplishes this by computing the gradient of the error with respect to the weights and biases and utilizing it to update their values. Stochastic gradient descent, an optimization algorithm, is commonly employed in this weight and bias adjustment process. Each neuron employs a non-linear activation function to produce an output. The MLP’s weights and biases are updated through backpropagation, allowing the network to make precise predictions on new data.

The weighted sum of input for each neuron j in layer i is expressed as

where \({w^i}_{{jm}}\) is the weight connecting neuron m in layer i-1 to neuron j in layer i, \({x^i}_{m}\)is the output of neuron m in layer i-1 and \({b^i}_{j}\) is the bias of neural network. The weights and bias are updated based on the learning rate \(\eta\) and loss function L which is expressed as

Proposed joint process estimator based rider optimization algorithm based deep SAE

The proposed JPE-ROA algorithm is obtained by integrating the Rider optimization algorithm with linear combinations of backward prediction errors. The line coefficients and delay coefficients are extracted from the features to enhance the performance of the algorithm. The proposed algorithm is designed using rider parameters, such as attacker, follower, over taker, and bye pass rider, as described in19.The initialization of the JPE-ROA is expressed as

Here C denote the total number of riders, and \({H_r}(t,m)\)indicate location of \({t^{th}}\) rider in \({m^{th}}\)dimension at \({r^{th}}\)time instant. To determine whether the rider parameters were successful, the rider’s location is updated. The proposed algorithm’s update equation is written as

Here, h is interrelate selector, \({H^G}\) represent the location of success rider, G indicate fastest rider, \(\phi _{{t,h}}^{r}\)denote the navigate angle of \({t^{th}}\)rider in \({h^{th}}\)coordinate, \(j(k)\)is the joint process estimator and \(\partial _{t}^{r}\)represent the distance vector. The joint process estimator parameter of the method is derived by using line coefficient parameter.

The number of riders is evaluated with the count of riders of each group and is given by,

where, N be the number of riders, B signifies bypass rider, F represents follower, O signifies over taker, A represents attacker, and R signifies rag bull rider. The success rate of the rider parameters are estimated using the Eq.

where, Vp symbolizes the position of pth rider and Vt indicates the target position.

The ROA algorithm’s error is decreased by using the joint process estimator. The error reducing coefficients are extracted from the sensed IOT features. The line coefficient of the algorithm is expressed as

where j represents the line coefficient. Each line coefficient of the algorithm is estimated using adaptive method and the equation is represented as

Here \(g(m)\)is the arbitrary process estimation from the feature vector and \({e_k}(m)\)is the prediction error. The gradient method is used to adjust the coefficients to reduce the error which is formulated as

Here \(\eta\)is the step size to reduce the error and \(e(m)\) is the prediction error. The update feature coefficient is formulated as

Here the joint process estimator is converged based on the prediction error. The pseudo code of the proposed method is tabulated in Table 1.

Deep stacked autoencoder

The layers of the deep stacked autoencoder are trained using the output of the preceding autoencoder and are composed of many autoencoder layers. The SAE network has high capability to acquire knowledge from complex features. The autoencoder is composed of encoder and decoder module in which the encoder is used to decrease the depth of the feature vector and the decoder is used to regenerate the actual feature value from the decreased depth feature values. The primary objective of the SAE is to decrease the error value. The mapping function of encoder to transform the input feature vector m is represented as

Here \({\psi _1}\) denote the weight vector and R indicate the bias value and G1 represent the regenerated value. The regeneration of the decoder based on hidden network is represented as

where \({\psi _2}\) represents weight value and z represent the hidden networks and G2 denote the bias value. The Proposed Joint Process Estimator based Rider optimization algorithm is used to decrease the squared regeneration error of the deep SAE network.

Results and discussion

This work aimed to predict the health condition of a person using different machine learning models. The performance of these models was evaluated by comparing their accuracy, sensitivity, Specificity, precision, recall, F1 score and Matthews Correlation Coefficient. The method determined the best hyperparameters to improve the classification accuracy. These hyperparameters were then used to classify the health condition of the person. In this work 80% of the feature values are used for training the classifier and 20% of the feature values are used for testing the performance of the classifier. In the domain of machine learning, the evaluation of classification models holds significant importance. Assessing the accuracy of these models is crucial for determining their effectiveness in various applications. The classification report serves as a valuable tool in this evaluation process, offering a comprehensive overview of the model’s performance through the utilization of different metrics. The energy efficiency of the sensors and the embedded system used in this work are analysed. The MAX30102 sensor consumes 600 µA during active measurement of the data and 0.7 µA in standby mode. we will incorporate a detailed comparison of the power consumption of each model under various operational scenarios. The Efficient power management ensures the sensors for minimal energy consumption during periods of low activity. The LM35 sensor consumes 60 µA, with a maximum current of 1 mA under high load conditions. The LM35 sensor consume less quiescent current that ensures minimal energy consumption during continuous monitoring, so it is suitable for energy-sensitive application. The ESP32 microcontroller consumes current between 160 mA to 240 mA when actively transmitting or receiving data. The power consumption was peak during intensive tasks, such as streaming sensor data or handling multiple sensor inputs. In standby mode the ESP32 consumes around 20 mA to 80 mA. The ESP32 is highly energy-efficient for IoT applications that require instant data transmission.The computational load of the system was analysed in terms of resource utilization, scalability and latency Impact. The ESP32 have 4 MB of flash memory, which is used for storing the firmware, user applications, and configuration files. The ESP32 has approximately 520 KB of SRAM, split between two banks. This memory is used for runtime variables, stacks, and buffers. Efficient memory management, such as dynamic memory allocation and garbage collection, is crucial to avoid memory leaks. The deep stacked autoencoder is used in this work. . The architecture is structured as follows:

Input Layer: 3 neurons.

Encoder: Two hidden layers, each with 5 neurons.

Decoder: Two hidden layers, each with 5 neurons.

Output Layer: 1 neuron.

The hardware specification of the components used in this work are tabulated in Table 2.

Performance metrics

True Positive (TP): The number of classes where the actual class is positive (y = 1) and the predicted class is also positive (ŷ = 1).

True Negative (TN): The number of cases where the actual class is negative (y = 0) and the predicted class is also negative (ŷ = 0).

False Positive (FP): The number of cases where the actual class is negative (y = 0) but the predicted class is positive (ŷ = 1).

False Negative (FN): The number of cases where the actual class is positive (y = 1) but the predicted class is positive (ŷ = 0). Precision is used to evaluate the performance of a classification model. It measures the proportion of true positive predictions (i.e., instances correctly classified as positive) among all instances that were classified as positive. In other words, precision gives an estimate of how often the model is correct when it predicts a positive class.

The precision is given as

Recall is a performance metric commonly used to evaluate the effectiveness of a classification model. It measures the proportion of true positive predictions among all instances that are positive. In other words, recall gives an estimate of how often the model correctly identifies positive instances. The recall is expressed as

The F1-score is a widely used metric to evaluate the overall performance of a classification model. It is the harmonic mean of precision and recall and provides a balanced view of the model’s performance across both measures. The F1-score is calculated using the formula.

Matthews Correlation Coefficient is expressed as

Performance assessment

The accuracy of the designed joint process estimator based deep stack encoder is evaluated. The Fig. 2 represents the performance metric accuracy analysis of the proposed method with other existing classifier such as Support Vector Machine, Random Forest, Gradient Boosting, Naive Bayes, and Multilayer Perceptron neural networks. he proposed method achieved the highest accuracy, recording a value of 0.9625. In comparison, the Support Vector Machine (SVM) classifier achieved an accuracy of 0.9375, while the Multilayer Perceptron Neural Network (MLP) yielded 0.9. The Random Forest classifier had an accuracy of 0.85, Gradient Boosting reached 0.9075, and Naïve Bayes recorded an accuracy of 0.88. These findings indicate that the proposed method outperforms the other models in terms of accuracy.

The sensitivity analysis of the proposed method is shown in Fig. 3. The proposed JPEROA-based deep stack autoencoder demonstrated the highest sensitivity, achieving a maximum value of 0.975. In comparison, the Support Vector Machine (SVM) classifier reached a sensitivity of 0.95, while the Multilayer Perceptron Neural Network (MLP) recorded 0.925. The Random Forest model produced a sensitivity of 0.875, Gradient Boosting achieved 0.91, and Naïve Bayes provided a sensitivity value of 0.9. These results indicate that the JPEROA-based deep stack autoencoder outperforms all other models in terms of sensitivity, with SVM showing strong performance as well.

The specificity analysis of the proposed method compared with other exiting methods is shown in Fig. 4. The proposed JPEROA-based deep stack autoencoder achieved the highest specificity, with a value of 0.95. In comparison, the Support Vector Machine (SVM) classifier obtained a specificity of 0.925, while the Multilayer Perceptron Neural Network (MLP) recorded 0.875. The Random Forest classifier had a specificity of 0.825, and both the Gradient Boosting classifier and Naïve Bayes achieved a specificity of 0.86. These results highlight the superior performance of the JPEROA-based deep stack autoencoder in terms of specificity,

The performance analysis of the Performance analysis of proposed method with existing methods is summarized in Table 3. In terms of recall, the JPEROA-based deep stack autoencoder achieved the highest recall at 0.975, indicating its ability to identify positive instances. The Support Vector Machine (SVM) followed with a recall of 0.95, while the Multilayer Perceptron Neural Network (MLP) recorded 0.925. Random Forest exhibited the lowest recall of 0.875. Gradient Boosting and Naïve Bayes models achieved recall values of 0.91 and 0.9, respectively. In terms of precision, JPEROA-based autoencoder once again outperformed the other models, attaining a precision of 0.9512. SVM demonstrated a precision of 0.9268, followed by Gradient Boosting with 0.9054. MLP, Naïve Bayes, and Random Forest yielded precision values of 0.8809, 0.8653, and 0.8333, respectively. The F1 score, which balances precision and recall, was highest for the JPEROA-based autoencoder at 0.9629. The SVM classifier followed with an F1 score of 0.9382, while Gradient Boosting achieved 0.9077. MLP had an F1 score of 0.9024, with Naïve Bayes at 0.8823 and Random Forest at 0.8537. The JPEROA-based autoencoder attained the best Matthews Correlation Coefficient value of 0.9252, indicating strong overall performance. The SVM classifier followed with an MCC of 0.8752, while Gradient Boosting and MLP recorded MCC values of 0.8150 and 0.8010, respectively. Naïve Bayes had an MCC of 0.7606, and Random Forest scored the lowest with 0.7008.

The performance of the proposed JPEROA-based deep stack autoencoder (JPE + ROA SAE) is evaluated using the PTB Diagnostic dataset, alongside other classification models, including Support Vector Machine (SVM), Multilayer Perceptron (MLP) Neural Networks, Random Forest (RF) Classifier, Gradient Boosting (GB), and Naïve Bayes. In this work, the analysis of the method is performed by changing the features used to training phase. The accuracy of the proposed JPE-ROA-based deep stack autoencoder for different training features is shown in Fig. 5.

The accuracy performance of several classifiers at different percentages of training data, such as 50%, 60%, 70%, 80%, and 90%, is explained in Fig. 5. The accuracy of SVM classifier is 0.8375 at 50% training sample and 0.9325 at 90% training sample. The multilayer perceptron neural provides an accuracy of 0.8075 for 50% training data and 0.9 for 90% training data. The performance of MLPNN is less during the early stage of training phase. For a 50% training sample and a 90% training sample, the random forest classifier yields an accuracy of 0.7975 and 0.905, respectively. An accuracy of 0.8075 for a 50% training sample and 0.8425 for a 90% training sample is produced using the gradient boosting algorithm. When compared to other methods, the accuracy performance of the naïve Bayes classifier is moderate. The proposed JPEROA-based deep stack autoencoder provide maximum accuracy of 0.84 for 50% training sample and 0.97 for 90% training sample. For the PTB Diagnostic dataset, the proposed JPEROA-based deep stack autoencoder perform well than the existing classifiers.

Figure 6 displays the results of the sensitivity analysis for the proposed JPE ROA Stack autoencoder. When given 50%, 60%, 70%, 80%, and 90% of the training data, the sensitivity of the SVM classifier is 0.85 for 60%, it is 0.875 for 70%, it is 0.88 for 80% and for 90%, it is 0.95. The findings show that the sensitivity performance of the SVM classifier is steadily increasing when increasing the training sample. The multilayer perceptron neural provides sensitivity of 0.84 for 50% training data and 0.925 for 90% training data. The random forest classifier produces a sensitivity of 0.79 for a 50% training sample and 0.875 for a 90% training sample. The gradient boosting approach yields a sensitivity of 0.91 for a 90% training sample and 0.825 for a 50% training sample. For a 50% training sample and a 90% training sample, the naïve bayes classifier yielded a sensitivity of 0.8 and 0.9, respectively. The sensitivity measure of the proposed JPE ROA Stack autoencoder is 0.85 for 50% training sample, 0.89 for 60% training sample, 0.925 for 70% training sample value, 0.95 for 80% training value, and 0.975 for 90% training value. For the PTB Diagnostic dataset, the proposed JPEROA-based deep stack autoencoder perform well than the existing classifiers in terms of sensitivity measure29.

Figure 7 displays the results of the specificity analysis of the proposed JPEROA Stack autoencoder. The specificity of SVM classifier for 50% training sample is 0.825 and 0.925 for 90% training sample. The false positive and false negative in the model indicates the misclassification of sample values. The multilayer perceptron neural provides specificity of 0.775 for 50% training data and 0.875 for 90% training data. The specificity value in MLPNN increases slightly as the training sample increases. The random forest classifier produces specificity of 0.805 for a 50% training sample and 0.825 for a 90% training sample. The specificity value is slightly increased from 50 to 90% of training sample. The gradient boosting method yields a sensitivity of 0.79 for 50% training sample and 0.905 for a 90% training sample. The gradient boosting method shows less improvement in specificity when compared to SVM and Random Forest classifier. Specificity was 0.78 for 50% of the training sample and 0.86 for 90% of the training sample according to the naïve bayes algorithm. In addition, this strategy not performed well when compared to other methods. The specificity measure of the proposed JPE ROA Stack autoencoder is 0.83 for 50% training sample, 0.85 for 60% training sample, 0.91 for 70% training sample value, 0.925 for 80% training value, and 0.95 for 90% training value. With 50% training sample, the proposed JPE ROA method provide high specificity that specify that it is more successful than other methods.

Comparative discussion

The evaluation is based on key metrics such as accuracy, sensitivity, and specificity of the comparative assessment tabulated in Table 4. The proposed JPEROA-based deep stack autoencoder achieved the highest accuracy at 0.97, demonstrating its superior ability to correctly classify instances compared to other models. The SVM classifier followed with an accuracy of 0.9325, while MLP Neural Networks recorded 0.9. The Random Forest classifier performed similarly to MLP with an accuracy of 0.905, whereas Gradient Boosting and Naïve Bayes achieved lower accuracy values of 0.8425 and 0.7925, respectively. The JPEROA-based deep stack autoencoder also recorded the highest sensitivity value of 0.975, indicating its strong performance in identifying positive instances. SVM came close with a sensitivity of 0.94, while the Random Forest classifier recorded 0.9. MLP, Gradient Boosting, and Naïve Bayes had lower sensitivity values of 0.875, 0.825, and 0.775, respectively. In the proposed JPEROA method the key parameters such as line coefficient and error prediction are estimated using joint process estimation, which improve the performance of the proposed method.

The results highlight the robustness of the proposed method in detecting positive cases compared to the other models. In terms of specificity, the JPEROA-based autoencoder once again outperformed the other models, achieving 0.965 indicating its superior ability to correctly identify negative instances. SVM and MLP Neural Networks followed with specificity values of 0.925 each. The Random Forest classifier recorded a specificity of 0.91, while Gradient Boosting and Naïve Bayes lagged behind with values of 0.86 and 0.81 respectively. The deep stacked autoencoder used in this work require large amount of training data for effective learning. The network is overfit for limited training data.

Conclusion

In this work joint process estimator-based rider optimization algorithm is developed for training the deep stack autoencoder to classify the sensor data stored in cloud server. The embedded system was designed using ESP32 Wi-Fi module and integrate with Max30102 sensor and LM35 sensor to transfer the sensed data to IOT cloud server. The sensed data are pre-processed to identify any missing values in the dataset. The deep stack auto encoder network is trained using the proposed joint process estimator rider optimization algorithm. The performance of the proposed method is compared with other existing algorithms such as Support Vector Machine, Random Forest, Gradient Boosting, Naive Bayes, and Multilayer Perceptron neural networks. The proposed method provides a maximum accuracy of 0.9625 and maximum sensitivity of 0.975 and specificity of 0.95. These results obtained in this work demonstrate that the proposed JPEROA-based deep stack autoencoder perform well when compared to other machine learning models.

Several potential areas of future research could build upon the findings of this study. One possibility is to further optimize the machine learning models developed in this study to improve their performance, or hyperparameters to enhance the accuracy and precision of the models. Improving the power efficiency of the ESP32-based embedded system can be a critical future direction. Low-power optimization techniques can extend battery life, making the system more suitable for wearable healthcare devices. The JPEROA algorithm can be extended to optimize other neural network architectures, such as convolutional neural networks (CNNs) or transformers, for tasks like image-based diagnostics, predictive analytics, or medical data classification.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Verma, P. & Sood, S. K. Fog assisted-IoT enabled patient health monitoring in smart homes. IEEE Internet Things J. 5 (3), 1789–1796 (2018).

Kar, P., Chen, K. & Shi, J. DMACN: A dynamic multi-attribute caching mechanism for NDN-based remote health monitoring system. IEEE Trans. Comput. 72 (5), 1301–1313 (2022).

Satija, U., Ramkumar, B. & Sabarimalai Manikandan, M. Real-time signal quality-aware ECG telemetry system for IoT-based health care monitoring. IEEE Internet Things J. 4 (3), 815–823 (2017).

Pathak, N., Misra, S., Mukherjee, A. & Kumar, N. HeDI: healthcare device interoperability for IoT-based e-health platforms. IEEE Internet Things J. 8 (23), 16845–16852 (2021).

Sengupta, S. & Suman Sankar Bhunia. Secure data management in cloudlet assisted IoT enabled e-health framework in smart City. IEEE Sens. J. 20 (16), 9581–9588 (2020).

Lindquist, W., Helal, S., Khaled, A. & Hutchinson, W. IoTility: architectural requirements for enabling health IoT ecosystems. IEEE Trans. Emerg. Top. Comput. 9(3), 1206–1218 (2019).

Fawad, M. et al. Integration of Bridge health monitoring system with augmented reality application developed using 3D game engine–Case study. IEEE Access. 12, 16963–16974 (2024).

Iman, U. et al. IoT-Enabled real-time health monitoring via smart textile integration with LoRa technology across diverse environments. IEEE Trans. Ind. Inform. (2024).

Li, H., Qu, T., Liu, L., Li, Y. & Xu, J. Zhiliang Hong, and An IoT-Enabled wearable health monitor for synchronized exg, PPG, and BioZ measurement. IEEE Trans. Instrum. Meas. (2025).

Phukan, N., Mohine, S., Mondal, A., Sabarimalai Manikandan, M. & Ram Bilas, P. Convolutional neural network-based human activity recognition for edge fitness and context-aware health monitoring devices. IEEE Sens. J. 22 (22), 21816–21826 (2022).

Al Mamun, M., Abdulla & Mehmet Rasit, Y. Sensors and systems for wearable environmental monitoring toward IoT-enabled applications: A review. IEEE Sens. J. 19 (18), 7771–7788 (2019).

Gong, Li, Z. et al. An IoT-based wearable labor progress monitoring system for remote evaluation of admission time to hospital. IEEE J. Biomed. Health Inf. 27 (6), 3037–3048 (2023).

Wang, H., Huang, J., Wang, G., Lu, H. & Wang, W. Contactless patient care using hospital iot: CCTV-camera-based physiological monitoring in ICU. IEEE Internet Things J. 11 (4), 5781–5797 (2023).

Spachos, P., Gregori, S. & Jamal Deen, M. Voice activated IoT devices for healthcare: design challenges and emerging applications. IEEE Trans. Circuits Syst. II Express Briefs. 69 (7), 3101–3107 (2022).

Jaffino, G., Palaniappan, T., Gugapriya, M. K. S. S., manikya Kumar, G. & Jose, J. P. M., IoT-based framework for automatic accident intimation system. In 2022 8th International Conference on Advanced Computing and Communication Systems (ICACCS), vol. 1, 1568–1572. (IEEE, 2022).

Jaffino, G., Jose, J. P. & Sundaram, M. Grey wolf optimization with deep recurrent neural network for epileptic seizure detection in EEG signals. In 2021 International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), 1–5. (IEEE, 2021).

Md, M., Islam, A., Rahaman & Islam, M. R. Development of smart healthcare monitoring system in IoT environment. SN Comput. Sci. (2020).

Khan, A. A., Madendran, R. K., Thirunavukkarasu, U. & Faheem, M. D2PAM: epileptic seizures prediction using adversarial deep dual patch attention mechanism. CAAI Trans. Intell. Technol. 8 (3), 755–769 (2023).

Johnrose, P., Jose, S., Muniasamy & Georgepeter, J. Rag-bull rider optimisation with deep recurrent neural network for epileptic seizure detection using electroencephalogram. IET Signal Proc. 15 (2), 122–140 (2021).

Jaffino, G. & Jose, J. P. Contour-and texture-based analysis for victim identification in forensic odontology. Data Technol. Appl. 56 (1), 146–116 (2021).

Jose, J. P., Sundaram, M. & Jaffino, G. Adaptive rag-bull rider: A modified self-adaptive optimization algorithm for epileptic seizure detection with deep stacked autoencoder using electroencephalogram. Biomed. Signal Process. Control. 64, 102322 (2021).

Jaffino, G., Sundaram, M. & Jose, J. P. Weighted 1D-local binary pattern features and Taylor-Henry gas solubility optimization based deep maxout network for discovering epileptic seizure using EEG. Digit. Signal Proc. 122, 103349 (2022).

Jaffino, G., Jose, J. P. & Sundaram, M. Deer hunting optimization with deep neural network for automated arrhythmia diagnosis in ECG signals. In 2021 Fourth International Conference on Electrical, Computer and Communication Technologies (2021).

Ashok, R., Sundaram, M., Jaffino, G. & Prabin, J. Jose. Weighted Moth-flame optimization algorithm for edible oil quality detection using microwave technologies. Food. Anal. Methods. 16(9), 1487–1497 (2023).

Jaffino, G., Palaniappan, T., Gugapriya, M. K. S. S., manikya Kumar, G. & Jose, J. P. M., IoT Based Frame work for Automatic accident Intimation System. In 2022 8th International Conference on Advanced Computing and Communication Systems (ICACCS), vol. 1, 1568–1572. (2022).

Khan, A., Ahmad, M. & Faheem, R. N. Internet of things (IoT) assisted context aware fertilizer recommendation. IEEE Access. 10, 129505–129519 (2022).

Celik, O. A research on machine learning methods and its applications. J. Educ. Technol. Online Learn. 1, Issue.3, (2018).

Khan, A., Ahmad, R. K., Mahendran, K., Perumal & Faheem, M. Dual-3DM 3-AD: mixed transformer based semantic segmentation and triplet pre-processing for early multi-class alzheimer’s diagnosis. IEEE Trans. Neural Syst. Rehabil. Eng. (2024).

Govindaraju, S. Intelligent transportation system’s machine learning-based traffic prediction. J. Appl. Data Sci. 5 (4), 1826–1837. https://doi.org/10.47738/jads.v5i4.364 (2024).

Acknowledgement

The author extends their appreciation to the Deanship of Scientific Research, the King Khalid University of Saudi Arabia, for funding this work through the Large Groups Research Project under grant number: (RGP.2/437/45).

Author information

Authors and Affiliations

Contributions

All authors contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical statement

This paper does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jose, J.P., Jaffino, G., Al Awadh, M. et al. IoT enabled health monitoring system using rider optimization algorithm and joint process estimation. Sci Rep 15, 27584 (2025). https://doi.org/10.1038/s41598-025-10199-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-10199-4