Abstract

With the profound convergence and advancement of the Internet of Things, big data analytics, and artificial intelligence technologies, edge computing—a novel computing paradigm—has garnered significant attention. While edge computing simulation platforms offer convenience for simulations and tests, the disparity between them and real-world environments remains a notable concern. These platforms often struggle to precisely mimic the interactive behaviors and physical attributes of actual devices. Moreover, they face constraints in real-time responsiveness and scalability, thus limiting their ability to truly reflect practical application scenarios. To address these obstacles, our study introduces an innovative physical verification platform for edge computing, grounded in embedded devices. This platform seamlessly integrates KubeEdge and Serverless technological frameworks, facilitating dynamic resource allocation and efficient utilization. Additionally, by leveraging the robust infrastructure and cloud services provided by Alibaba Cloud, we have significantly bolstered the system’s stability and scalability. To ensure a comprehensive assessment of our architecture’s performance, we have established a realistic edge computing testing environment, utilizing embedded devices like Raspberry Pi. Through rigorous experimental validations involving offloading strategies, we have observed impressive outcomes. The refined offloading approach exhibits outstanding results in critical metrics, including latency, energy consumption, and load balancing. This not only underscores the soundness and reliability of our platform design but also illustrates its versatility for deployment in a broad spectrum of application contexts.

Similar content being viewed by others

Introduction

With the rapid development of Internet of Things (IoT) technology, edge computing, an emerging computing paradigm that moves computation and data storage to the edge of the network, is receiving widespread attention from both academia and industry. By decentralizing processing power to devices or endpoints, edge computing significantly reduces data processing delays and improves efficiency, thereby injecting new vitality into IoT applications. However, in practical applications, especially in changing and complex real-world environments, the performance testing and verification of edge computing are particularly important and challenging1.

There are three major technical shortcomings in current edge computing test platforms. First, in terms of physical interaction simulation, mainstream software simulation tools cannot accurately replicate physical coupling effects such as electromagnetic interference and thermal conduction between devices. Second, at the system scalability level, existing test platforms suffer from architectural limitations in supporting the integration of new sensors and over-the-air firmware updates, which hampers functional expansion of edge devices. Third, regarding data processing efficiency, current technical frameworks struggle to address engineering challenges such as multimodal data synchronization and real-time feature extraction, particularly facing performance bottlenecks like high processing latency and low resource utilization in heterogeneous data fusion scenarios. As edge computing evolves toward a distributed architecture, adopting a multi-node collaborative processing model has become a key approach to enhancing system performance. This requires the development of a new computing offloading model, whose core lies in: establishing an optimization mechanism for task distribution among heterogeneous devices, designing lightweight data transmission protocols, and developing elastic scheduling strategies adaptable to dynamic environmental changes2.

We have keenly observed a surge of technological innovation and convergence trends in the field of edge computing. As a critical component of the Internet of Things (IoT) technology ecosystem, embedded systems possess advantageous physical characteristics such as compact hardware size, low power consumption, and high integration of functional modules, making them especially suitable for deploying data acquisition, local processing, and transmission control functions at the edge3. KubeEdge, as a cutting-edge edge computing framework, seamlessly extends the powerful capabilities of cloud computing to edge devices, significantly enhancing the synergy between cloud and edge computing. Additionally, Serverless technology has injected new vitality into edge computing. It enables dynamic, on-demand resource allocation, not only substantially improving resource utilization but also reducing operational costs, allowing developers to focus more on implementing core business logic. With the increasing maturity of the cloud service market, more and more cloud platforms now offer support for edge computing. Leading domestic cloud service providers such as Alibaba Cloud and Tencent Cloud have already launched edge computing-specific solutions. These solutions leverage the robust infrastructure and rich services of cloud platforms to provide stable and reliable backend support for edge computing. In particular, the introduction of elastic computing services allows edge computing environments to easily handle growing data processing demands, ensuring system scalability and high availability.

To address these challenges, this paper innovatively integrates the KubeEdge framework, Serverless technology, and cloud services to build an efficient edge-IoT verification platform. Leveraging KubeEdge’s resource management and scheduling capabilities, combined with the on-demand resource allocation features of Serverless, and supported by the stability of cloud services, we aim to construct a system that is both efficient and intelligent4. The core objective of this research is to develop a high-performance, intelligent edge-IoT verification platform and conduct comprehensive performance evaluations. By deploying sophisticated edge offloading strategies and deeply collecting key metrics such as latency, energy consumption, and load balancing, our platform demonstrates significant advantages in resource utilization and system scalability. Experimental results confirm that compared to traditional architectures, our proposed solution more effectively addresses the ever-growing demand for data processing, providing solid support for the in-depth development of IoT applications.

The main contributions and innovations of this paper are as follows:

-

1)

A practical edge computing testbed is established by employing embedded devices to emulate real-world network nodes, which enables the collection and analysis of key performance metrics such as latency, energy consumption, and load balancing under realistic operational conditions.

-

2)

An efficient and intelligent edge-IoT verification platform is implemented through the integration of Serverless architecture with KubeEdge, thereby enhancing system stability and scalability while enabling dynamic and resource-efficient management in response to increasing data processing demands.

-

3)

High system stability and scalability are ensured by leveraging the robust capabilities of the Alibaba Cloud platform, effectively supporting the continuously growing requirements for data processing in edge computing scenarios.

-

4)

Extensive experimentation and performance analysis demonstrate that the proposed platform achieves significant advantages in terms of resource utilization, extensibility, and data offloading efficiency, providing strong technical support for addressing the escalating demands of data processing and advancing the development of military IoT applications.

In the following chapters, we will deeply analyze the current research status (Section “Background review and related works”), introduce the system model and problem description in detail (Section “System architecture and problem description”), and conduct comprehensive verification and evaluation of the system through experiments (Section “Experimental evaluation”). Finally, in Section “Conclusion”, we will summarize this research and look forward to possible future research directions.

Background review and related works

In the context of edge computing environments, offloading strategies constitute a pivotal aspect. Over recent years, researchers have been committed to developing highly efficient offloading algorithms aimed at optimizing the allocation and execution of computational tasks. These algorithms typically take into account multiple factors, such as network latency, computational resources, and energy consumption, to ensure tasks are executed at the most suitable location. The exploration of offloading strategies not only enhances the efficiency of edge computing but also provides more reliable performance guarantees for real-time applications. Su et al.5 introduces a simulator called EasiEI, designed for flexible modeling of complex edge computing environments. This simulator aids researchers in simulating and analyzing various types of edge computing scenarios, encompassing diverse network topologies, device configurations, and application contexts. It serves as a crucial tool and platform for the design and optimization of edge computing systems. However, the article lacks practical use cases or experimental results to demonstrate the simulator’s effectiveness and practicality, along with an evaluation of its performance and accuracy. Additional practical validations and discussions on application scenarios could further strengthen the study’s credibility and practicality. Elias et al.6 presents a scalable simulator for modeling cloud, fog, and edge computing platforms, supporting mobility. By integrating these three computing platforms and considering device mobility, it offers researchers a comprehensive simulation environment. This allows for better evaluation and comparison of the performance, reliability, and efficiency of different platforms across various scenarios. Nevertheless, the literature is deficient in quantitative assessments of the simulator’s scalability and accuracy, as well as case studies and experimental results from practical application scenarios. Furthermore, Ren et al.7 introduces a method for rapid task offloading and power allocation tailored to edge computing environments. This approach employs intelligent techniques to achieve environment-adaptive task offloading and power allocation, thereby enhancing system efficiency and performance. It considers environmental factors, data transmission, and processing delays, utilizing machine learning algorithms and dynamic programming techniques to make decisions regarding task offloading and power allocation. This renders the system more intelligent, adaptive, and efficient. However, the literature lacks further experimental validations and case studies from practical scenarios, which could reinforce the article’s persuasiveness and practicality, as well as enhance the feasibility and reliability of the proposed method.

KubeEdge, as an open-source platform connecting the cloud and the edge, has garnered widespread attention and research in recent years. By offering features such as cloud-edge collaboration, device management, and data synchronization, it significantly enhances the flexibility and reliability of edge computing. Researchers continuously optimize the performance of KubeEdge and explore its best practices in various application scenarios, thereby promoting the widespread application of edge computing. Kim et al.8 presents a novel local scheduling strategy in the KubeEdge edge computing environment, which effectively reduces inter-node traffic forwarding, improves system throughput, and decreases service latency. However, experimental validation may be limited to specific environments and scenarios, failing to fully demonstrate the strategy’s versatility under different conditions. Bahy et al.9 compares the resource utilization of three edge computing frameworks: KubeEdge, K3s, and Nomad. This provides a valuable reference for resource management and optimization in edge computing environments. Yet, it doesn’t comprehensively consider performance in dynamic environments or cover other evaluation metrics beyond resource utilization.

Serverless computing, an emerging computing model, has also shown tremendous potential in the field of edge computing. This model allows developers to focus on implementing application logic without concerning themselves with the underlying infrastructure. In recent years, significant progress has been made in applying serverless computing to edge computing, particularly in elastic resource management and automated operation and maintenance (Rodriguez et al., 2023). These advancements offer developers more efficient and convenient edge computing solutions. Russo et al.10 introduces an innovative framework designed to enable effective offloading and migration of serverless functions within the edge-cloud continuum. Through an intelligent decision-making mechanism, it dynamically determines the optimal execution location for functions, optimizing resource utilization and response time. This demonstrates the system’s flexibility and efficiency. Nevertheless, the article lacks an in-depth evaluation of scalability and performance in complex, large-scale network environments. The innovation of11, “EcoFaaS,” lies in its reconsideration of the design of serverless environments to enhance energy efficiency. However, the article may not adequately discuss the feasibility, cost-effectiveness, and potential technical challenges of these new methods in practical deployments.

As the edge computing market continues to expand, leading cloud providers have introduced their own edge computing solutions. These solutions, which often integrate the providers’ technological strengths and market positioning, offer users a one-stop edge computing service. For instance, AWS Greengrass and Alibaba Cloud’s Link Edge are actively exploring the best practices and application scenarios for edge computing (Amazon, 2023; Alibaba, 2023). The research and practices of these cloud providers not only advance the development of edge computing technology but also provide users with more diversified options. In exploring the practical experience of elastic resource allocation on Alibaba Cloud, Xu et al.12 proposes a new method for dynamically allocating resources in large-scale microservice clusters. Although it lacks specific case studies and technical details, it shares valuable practical insights with readers. However, the article does not compare its findings with other companies or academia, nor does it provide a clear assessment of results. This leaves room for deeper analysis and evaluation to further validate the effectiveness and merits of this practice. On the other hand, Hefiana et al.13 compares Alibaba Cloud’s Elastic Compute Service (ECS) instances with Azure’s virtual machines, highlighting the distinct features of the two cloud service providers in terms of elastic computing resources. It provides an in-depth comparative analysis, aided by intuitive examples and data, to help readers understand the differences and choices between the two, offering valuable reference information for cloud computing users.

Embedded systems, such as the Raspberry Pi, can be easily integrated into existing network environments as intelligent edge devices. These systems are capable of collecting and processing information from various sensors or other data sources in real-time, significantly reducing data transmission delays and costs. This is particularly crucial in applications that require a rapid response, such as intelligent transportation and smart manufacturing. Kum et al.14 introduces an AI management platform with an embedded edge cluster, combining artificial intelligence and edge computing technologies to provide faster and more efficient data processing and decision-making capabilities. By deploying AI models on edge devices, the platform enables more effective real-time data analysis and applications. However, the article lacks comparative analysis and experimental results to support the advantages and disadvantages of this approach. Additionally, the article does not adequately evaluate and discuss challenges related to the platform’s scalability, security, and performance. Nikos et al.15 proposes an edge platform with embedded intelligence, integrating MLOps (Machine Learning Operations) with edge computing. This platform aims to provide more powerful edge computing and machine learning capabilities for future 6G systems. Implementing embedded intelligence at the edge offers a new approach for real-time data processing and decision-making. Nevertheless, the literature fails to compare this method with other similar studies or platforms, making it difficult to accurately assess its advantages and disadvantages relative to existing solutions.

After delving into offloading strategies, KubeEdge technology, serverless computing, and edge computing solutions from cloud providers, we have discovered that each of these technologies possesses unique strengths and potentials. Currently, most of these technologies are being researched and applied in isolation, lacking a comprehensive platform to fully leverage their synergies. KubeEdge technology provides a robust foundation for cloud-edge collaboration, enabling efficient communication and resource management between the cloud and edge devices. On the other hand, serverless computing’s elastic resource management and automated operation and maintenance capabilities can significantly simplify the development and deployment processes of edge computing applications. Meanwhile, edge computing solutions from cloud providers offer users a wealth of services and tool options. However, the lack of full integration among these technologies hinders their maximum effectiveness in practical applications. Therefore, this study focuses on the organic integration of KubeEdge technology, serverless computing, and edge computing solutions from cloud providers. We are committed to building a comprehensive verification platform to test and optimize the synergies among these technologies. Incorporating embedded systems (such as the Raspberry Pi) into edge computing research not only enriches the application scenarios of edge computing but also enhances its efficiency and flexibility. Hence, in this study, we will fully consider the role of embedded systems in edge computing and explore their optimal integration with KubeEdge technology, serverless computing, and edge computing solutions from cloud providers. Consequently, we need to continue in-depth research and explore innovative methods to drive further development in the field of edge computing.

System architecture and problem description

This section first introduces the system model and overall architecture, elaborating on the functionalities and interconnections of its key components. Subsequently, we describe in detail the deployment process of the system, aiming to ensure the accuracy and efficiency of each operational step. To enable a scientifically rigorous evaluation of system performance, we construct a comprehensive performance evaluation framework based on mathematical modeling. This framework not only enables precise quantification of various performance metrics—such as latency, energy consumption, and load balancing—but also offers actionable insights for optimizing system performance and resource allocation. By systematically presenting and analyzing these aspects, we aim to provide readers with a clear, in-depth, and holistic understanding of the proposed system.

System model

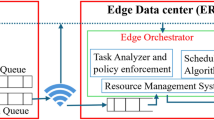

The three-tier architecture of cloud-edge-device collaboration has become a key framework for processing big data and providing real-time services, as illustrated in Fig. 1. Our system model is built upon this concept, aiming to achieve more intelligent, flexible, and efficient computing services through efficient resource allocation and data processing16.

-

1)

Cloud Layer

The cloud layer serves as the core of the entire system, offering powerful computing, storage, and data processing capabilities. Operating on cloud computing platforms like Alibaba Cloud, it utilizes ACK for container orchestration and management. Additionally, it deploys serverless functionality to support automatic application scaling and an event-driven architecture. The cloud layer is also responsible for centralized data storage, analysis, intelligent decision-making, as well as unified identity authentication and access management. Through an API gateway, the cloud layer provides a unified service interface for both the edge and device layers.

-

2)

Edge Layer

The edge layer consists of distributed edge nodes that are located close to data sources and users. It runs KubeEdge to enable cloud-edge collaboration. This layer handles data preprocessing, caching, and analysis to reduce latency and network load. It also provides a service mesh and message queues to ensure efficient communication and data synchronization between services. The edge layer possesses autonomous capabilities, ensuring the continuous operation of critical services even when cloud connectivity is unstable or disconnected.

-

3)

Device Layer

The device layer comprises various embedded devices such as Raspberry Pi, sensors, and mobile devices. These devices are responsible for real-time data collection and initial processing, supporting instant response and control. They interact with the edge layer through lightweight communication protocols, enabling data reporting and command reception. In case of network instability, the device layer can utilize local caching and autonomy mechanisms to ensure business continuity and stability.

The edge layer serves as a critical bridge connecting the cloud and device layers. In this layer, we have introduced a serverless architecture to achieve efficient resource utilization and rapid service response. By incorporating serverless technology, developers can focus on the code itself without worrying about server operation and management, significantly improving development efficiency and resource utilization. The edge layer dynamically allocates computing, storage, and network resources based on actual demands. This elastic scaling capability not only avoids resource wastage but also ensures stable operation in high-concurrency scenarios.

-

(a)

Serverless computing allows the execution of serverless functions. At the edge layer, we deploy serverless functions using this technology. These functions can be invoked instantly when needed to process data from the device layer. After processing, the functions automatically release resources, enabling true on-demand computing.

-

(b)

To simplify service invocation and integration, we provide a unified API interface. Users and applications do not need to concern themselves with the specific deployment location of services. They can easily access the required functionalities through standard RESTful APIs.

-

(c)

State monitoring and reporting: Edge servers continuously monitor their execution status, resource usage, network bandwidth, and other information. They report these data to the control layer, facilitating timely problem detection and optimization adjustments.

Another significant advantage of serverless technology is its elastic scaling capability. When data processing demands increase, serverless functions can automatically scale to meet those demands. Conversely, when demands decrease, resources are automatically released, effectively controlling costs. KubeEdge provides powerful container orchestration capabilities for edge computing. By combining it with serverless technology, we can achieve more refined resource management and scheduling. KubeEdge handles container deployment and monitoring, while serverless technology manages function execution and dynamic resource allocation.

System architecture

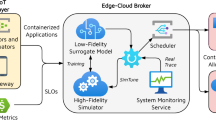

To address these challenges, we innovatively propose a cloud-edge collaborative Serverless architecture model. This model aims to leverage the elasticity of the cloud and the low-latency characteristics of the edge to construct an efficient, flexible, and secure computing environment. Through a layered design, the architecture model clearly delineates different functions and responsibilities, enabling modular management and optimization. This layered approach ensures that our architecture model not only meets the current needs of edge computing but also possesses scalability and adaptability for future application scenarios. In the following sections, we will elaborate on the design principles, key technologies, and implementation details of each layer17.

In this paper, we introduce a comprehensive cloud-edge collaborative system architecture, which consists of eight layers. Each layer performs specific functions and services, collectively forming an efficient and secure cloud-edge computing environment, as illustrated in Fig. 2.

-

1)

The Cloud Center Layer serves as the computational core of the entire architecture. It relies on Alibaba Cloud Service (ACK) to provide powerful container orchestration and management capabilities. Additionally, we have deployed Knative Serving to enable Serverless functionalities, including automatic scaling and event-driven characteristics. Meanwhile, Alibaba Cloud Object Storage (OSS) securely stores static resources and application images, while Alibaba Cloud Log Service (SLS) is responsible for centrally managing and analyzing log data generated at the cloud edge.

-

2)

The Edge Computing Layer extends the capabilities of the cloud center to edge nodes seamlessly through KubeEdge. This not only achieves edge autonomy but also strengthens the collaborative work between the cloud and the edge. Edge nodes, deployed near data sources, efficiently execute edge computing tasks and run containerized applications. Furthermore, we have created a Serverless function execution environment on edge nodes to facilitate rapid responses to local event triggers.

-

3)

The Network Communication Layer utilizes Istio/Linkerd to deploy a service mesh, optimizing communication management among microservices. Simultaneously, messaging queues and data synchronization components like Kafka and RabbitMQ ensure real-time data synchronization and smooth communication between the cloud and the edge.

-

4)

The Security and Identity Layer is crucial for system security. We adopt Alibaba Cloud Identity Service (RAM) for unified user identity and permission management. By configuring security groups and network access control lists (ACLs), we rigorously protect the network security of the cloud center and edge nodes. Additionally, an automated certificate management system guarantees data transmission security.

-

5)

The Data Management and Analysis Layer provides robust data processing capabilities. It includes Alibaba Cloud database services such as RDS and Table Store for persistent data storage, as well as big data processing services like MaxCompute and DataWorks to support deep data analysis and mining.

-

6)

The Application and Service Layer supports user-developed custom cloud-edge collaborative applications, which can be Web applications, IoT services, and more. Meanwhile, the API gateway serves as a unified entry point, efficiently handling tasks such as request routing and load balancing.

-

7)

The Monitoring and Operation Layer utilizes Alibaba CloudMonitor Service (CloudMonitor) to monitor the performance and status of the cloud center and edge nodes in real-time. Integrated with an alert system, we can set alert thresholds based on Cloud Monitor data to ensure timely responses to system anomalies.

-

8)

The User Access Layer involves direct interaction with users through devices such as smartphones and IoT devices. Additionally, our edge autonomy service guarantees service continuity and availability during network instability or disconnections.

This carefully layered architectural design not only achieves efficient cloud-edge collaborative computing but also ensures data security and real-time processing, providing robust support for various user applications.

By introducing Serverless technology and integrating with Alibaba Cloud, the brand-new KubeEdge architecture design has achieved remarkable improvements and innovations in terms of efficiency, flexibility, and security. These improvements and innovations are crucial for meeting the demands of complex edge computing scenarios and are significant in advancing the development of edge computing technology.

Deployment process

The deployment strategy of this model aims to ensure seamless integration and collaborative work at every level from cloud to edge through a series of carefully designed steps. These steps encompass the preparation of the cloud service environment, the deployment of the edge computing layer, the integration of end-side devices, comprehensive testing and verification of the system, as well as continuous operation, maintenance, and optimization. Through this strategy, we can not only achieve rapid response and intelligent processing but also ensure the stability and reliability of the system, meeting the needs of future intelligent applications. Next, we will detail the key tasks and implementation details of each deployment phase18.

Phase 1: Cloud Layer Deployment

-

1)

Cloud Service Environment Preparation: The initial stage involves initializing an ACK cluster on the Alibaba Cloud platform, selecting suitable regions and resource allocations to meet expected computing and storage needs. Simultaneously, load balancers, security groups, and network ACLs are carefully configured to establish a secure network environment, protecting the system from unauthorized access and potential network threats.

-

2)

Knative Serving Deployment: Based on the ACK cluster, the Knative Serving component is deployed, encompassing crucial serverless functionalities like auto-scalers and build templates. Knative Serving is meticulously configured to support serverless application deployment and runtime auto-scaling, addressing dynamically changing workload demands.

-

3)

Central Database and Storage Configuration: To persist application data and support complex analytics, Alibaba Cloud RDS or OSS services are deployed. These services not only provide data persistence solutions but also enable large-scale data processing and analysis.

Phase 2: Edge Layer Deployment

-

1)

Edge Node Preparation: Select hardware devices, such as Raspberry Pis, as edge nodes based on their computing power, size, and cost-effectiveness to meet the specific needs of the edge computing environment.

-

2)

KubeEdge Installation: Deploy KubeEdge, including its cloud core and edge core services, on the edge nodes. This step is crucial for achieving cloud-edge collaboration, ensuring seamless integration of edge nodes into the cloud architecture.

-

3)

Configuring Edge Autonomy: Equip edge nodes with autonomous operation capabilities, allowing them to maintain critical service operations even when cloud connectivity is unstable or unavailable.

-

4)

Service Mesh Deployment: Deploy service mesh technologies like Istio or Linkerd on edge nodes to enable intelligent communication, discovery, and load balancing between services.

Phase 3: Device Layer Integration

-

1)

Device Preparation: Configure devices such as Raspberry Pis, installing necessary operating systems and drivers to ensure effective communication with the edge layer.

-

2)

Data Acquisition and Processing: Develop lightweight applications deployed on devices for data collection and initial processing, reducing network transmission burdens and latency.

-

3)

Network Communication Configuration: Establish secure communication links between devices and edge nodes, guaranteeing secure data transmission and privacy protection.

Phase 4: System Integration and Testing

-

1)

End-to-End Deployment: Deploy applications on ACK and create and manage services through Knative Serving, enabling full lifecycle application management.

-

2)

Edge Integration: Deploy business logic to KubeEdge nodes for data preprocessing, decision-making, and instant response.

-

3)

Testing and Validation: Conduct comprehensive system tests, including unit, integration, and performance tests, to verify the collaborative work and performance of system components.

-

4)

Monitoring and Logging Configuration: Utilize Alibaba Cloud’s CloudMonitor and SLS to establish system monitoring and log management mechanisms, supporting stable system operation and problem diagnosis.

Phase 5: Operations and Optimization

-

1)

Automated Deployment: Adopt CI/CD processes for automated application deployment and seamless updates, enhancing development efficiency and system reliability.

-

2)

Performance Monitoring: Continuously monitor system performance, collecting key performance indicators to provide data support for system optimization.

-

3)

Troubleshooting and Recovery: Develop system troubleshooting and recovery strategies to ensure high availability and business continuity19.

-

4)

System Optimization: Continuously adjust and optimize system configurations based on performance monitoring data and user feedback, improving application performance and user experience.

Create the instance, deploy the project, install the necessary dependencies, and when the deployment is successful, return the message as shown in the figure, indicating that it can run successfully on the machine, as shown in Fig. 3.

Problem description

In order to construct a complex mathematical model for edge offloading scenarios based on latency and energy consumption, we can consider several factors, including the size of the task, transmission rate, processing speed, offloading decision, energy consumption, etc. The commonly used symbols are shown in Table 1.

-

1)

Delay Model

-

(a)

Transmission Delay

Considering channel conditions, transmission power, noise power, and task partitioning ratio, the transmission delay \(\:{d}_{\text{trans}}\) can be derived from Shannon’s formula as:

$$d_{{trans}} = \frac{{S \times t_{i} }}{{B \times \log _{2} \left( {1 + \frac{{P_{{tx}} \times h}}{{\sigma ^{2} }}} \right)}}$$(1)where \(\:S\) represents the task partitioning ratio,\(\:{t}_{i}\)is the task size, \(\:B\) is the channel bandwidth, \(\:{P}_{\text{tx}}\) is the transmission power, \(\:h\) is the channel gain, and \(\:{\sigma\:}^{2}\) is the noise power.

-

(b)

Processing Delay

Taking into account a multi-server environment, task allocation ratio, number of processing cores, and frequency, the processing delay \(\:{d}_{\text{proc}}\) is given by:

$$d_{{proc}} = \mathop \sum \limits_{{j = 1}}^{M} a_{{ij}} \times \frac{{\left( {1 - S} \right) \times t_{i} \times C}}{{N_{0} \times \omega }}$$(2)In this equation, \(\:{a}_{ij}\:\)denotes the allocation ratio of task \(\:i\) on server \(\:j\), \(\:M\) is the number of servers, \(\:C\) represents task complexity, \(\:{N}_{0}\) is the basic processing capability, and \(\:\omega\:\) is the processing frequency.

-

(c)

Total Delay

Combining transmission and processing delays, the total delay \(\:D\) is:

$$D = \mathop \sum \limits_{{i \in T}} \left[ {\frac{{S \times t_{i} }}{{B \times \log _{2} \left( {1 + \frac{{P_{{{\text{tx}}}} \times h}}{{\sigma ^{2} }}} \right)}} + \mathop \sum \limits_{{j = 1}}^{M} a_{{ij}} \times \frac{{\left( {1 - S} \right) \times t_{i} \times C}}{{N_{0} \times \omega }}} \right]$$(3)

-

(a)

-

2)

Energy Consumption Model

-

(a)

Transmission Energy Consumption

Transmission energy consumption is related to transmission power and task partitioning ratio, calculated as follows:

$$e_{{{\text{trans}}}} = S \times t_{i} \times P_{{{\text{tx}}}}$$(4) -

(b)

Processing Energy Consumption

Considering a multi-server environment, task allocation ratio, and dynamic power consumption of processing cores, the processing energy consumption \(\:{e}_{\text{proc}}\) is:

$$e_{{{\text{proc}}}} = \mathop \sum \limits_{{j = 1}}^{M} a_{{ij}} \times \left( {1 - S} \right) \times t_{i} \times C \times \kappa \omega ^{2}$$(5)where \(\:{\upkappa\:}\) is the power consumption coefficient.

-

(c)

Total Energy Consumption

The total energy consumption \(\:E\) is the sum of transmission and processing energy consumptions:

$$E = \mathop \sum \limits_{{i \in T}} \left[ {S \times t_{i} \times P_{{{\text{tx}}}} + \mathop \sum \limits_{{j = 1}}^{M} a_{{ij}} \times \left( {1 - S} \right) \times t_{i} \times C \times \kappa \omega ^{2} } \right]$$(6)

-

(a)

In this model, multiple objectives such as delay, energy consumption, and server load balancing can be considered for optimization. The optimization problem can be formulated as:

where \(\:{\upalpha\:}\) and \(\:\left(1-{\upalpha\:}\right)\) represent the weights of delay and energy consumption in the optimization objective, respectively. \(\:\text{Var}\left({a}_{ij}\right)\) denotes the variance of the task allocation ratio, which measures the load balancing among servers. \(\:{\uplambda\:}\) is the weight factor for load balancing.

Our overall optimization goal is total utility, which is a negatively correlated linear weighted sum of total energy consumption, total delay, and load balancing. A reasonable task offloading strategy will result in less energy consumption, lower delay, and better load balancing, thus achieving higher total utility20.

Experimental evaluation

In this section, we introduce an adaptive offloading strategy into the model and deploy a practical edge computing scenario based on multiple types of embedded terminals. We first verify the performance and advantages of our proposed innovative edge computing architecture, which is based on KubeEdge and Serverless technology. We have carefully designed detailed experimental settings and established a rigorous performance evaluation system.

Experimental setup

To build a stable and high-performance edge computing platform, we utilize Alibaba Cloud Elastic Compute Service (ECS) instances. These high-performance ECS instances not only provide us with powerful computing capabilities but also ensure the reliability and security of the platform. Each ECS instance is equipped with a 4-core CPU, 8GB of memory, and a 50 Mb/s bandwidth to meet the stringent requirements of edge computing for real-time performance and data processing capabilities.

In our experiments, Raspberry Pi 4 (1.5 GHz) with 8GB RAM is used as the edge server, while Raspberry Pi 3B+ (1.4 GHz) and Raspberry Pi 3B (1.2 GHz) with 1GB RAM are employed as terminal smart devices. All Raspberry Pis are configured with the same version of the Raspbian operating system and related system software. We connect these Raspberry Pis to the same local wireless network, assuming they are fully connected. Each Raspberry Pi is linked to the Alibaba Cloud service we use to synchronize data and commands between the Raspberry Pi and the cloud.

We measure the system energy consumption of the Raspberry Pi with the help of an external device - the USB power meter UM25C. This power meter can transmit energy consumption data in real-time to its accompanying Windows Bluetooth application and display this information directly on the screen. To better acquire and process these data, we have developed two core software components. The first is the energy interface, responsible for data transmission and communication; the second is the smart meter application, used to read electricity data directly from the screen.

Offloading scheme and test cases

In this section, we present our proposed offloading schemes and compare their performance to validate the capabilities of our platform.

Comparison of offloading schemes

This paper compares four common offloading schemes, as illustrated in Fig. 4.

-

1)

Local Computing Scheme (LCS)

The LCS becomes particularly crucial when the communication between IoT nodes and edge servers is disrupted. This scheme relies entirely on the node’s own resources for processing, ensuring basic functionality even in the event of a connection loss. Despite its intuitive simplicity, we suggest using LCS as a benchmark to better highlight the advantages of other schemes.

-

2)

Edge Computing Scheme (ECS)

Contrary to LCS, ECS adopts a comprehensive offloading strategy when a connection is available, disregarding the energy consumption and execution time of applications on the node. In this scheme, the communication quality between IoT nodes and edge servers directly affects the offloading cost. Therefore, ECS may not be the optimal choice when channel quality is poor.

-

3)

Cloud Computing Scheme (CCS)

CCS alleviates the computational pressure on IoT nodes by providing efficient, flexible, and scalable computing resources, enabling efficient processing of complex tasks. IoT nodes are no longer isolated computing units but are connected to the cloud through high-speed networks, leveraging the cloud’s powerful computing capabilities to handle complex data analysis and processing tasks. This approach not only frees IoT nodes from computational stress, allowing them to focus on data collection and preliminary processing, but also ensures efficient task execution and rapid response. Additionally, its rich data storage and processing services, coupled with strict security measures, provide robust support and guarantees for IoT applications.

-

4)

Adaptive Scheme (ACS)

The aforementioned schemes have different emphases, whether it’s local processing, offloading, energy consumption, or execution speed. However, in practical applications, multiple factors often need to be considered comprehensively. The adaptive scheme is designed based on this need. It dynamically adjusts processing strategies according to the current network conditions, energy reserves, task requirements, and other factors to find the optimal balance among various costs. For instance, in certain scenarios, although TPS can deliver the fastest execution time, the adaptive scheme might achieve both faster execution speed and lower energy consumption through a certain degree of time tolerance21. Based on this, we propose an adaptive offloading scheme modeled using Eq. 7 for system overhead. To ensure a fair and convenient comparison, we apply Z-Score Normalization. The calculation formula is as follows:

where \(\:x\) denotes the original data point, \(\:\mu\:\) and \(\:\sigma\:\) both represent the standard deviation of the original data. \(\:{x}_{norm}\) signifies the normalized data point.

In selecting the four offloading strategies discussed in this study, we followed three key criteria: representativeness, comparability, and practical applicability. First, the selected strategies cover a full spectrum—from fully local processing (LCS) to full offloading (ECS, CCS), and finally to dynamic adaptation (ACS)—ensuring that our analysis is representative of common offloading paradigms. Second, these strategies emphasize different performance objectives (e.g., latency reduction vs. energy saving), enabling meaningful comparative analysis. Finally, all schemes have been validated in prior studies or real-world deployments, ensuring their practical relevance. This selection allows for a comprehensive evaluation of our proposed adaptive offloading approach under diverse edge computing scenarios.

Test cases

To comprehensively evaluate the practicality and reliability of the proposed solution and ensure its adaptability to diverse real-world application scenarios, we have meticulously designed the following three representative test cases. These cases cover lightweight, medium-weight, and heavyweight application requirements, enabling validation of the solution’s performance across different application levels. Furthermore, they are closely aligned with current industry needs and typical application scenarios, demonstrating the solution’s performance in practical contexts and thereby ensuring the broad applicability and persuasiveness of the test results.

-

1)

Lightweight: Temperature Sensor Data Monitoring

In the realm of the Internet of Things (IoT), temperature sensor data monitoring is a pivotal application scenario for edge computing. By collecting real-time data from temperature sensors, this case assesses the edge computing platform’s capability in handling simple sensor data streams. The experiment will monitor data processing latency and accuracy, verifying the edge node’s response speed to real-time data monitoring, enabling timely detection of potential equipment failures or environmental anomalies, and ensuring production safety and efficiency. This test case aims to validate the response speed and accuracy of the edge computing platform when processing large volumes of simple sensor data streams, which is crucial for enhancing the real-time performance and reliability of IoT applications.

-

2)

Medium-weight: Multimedia Services

With the proliferation of video streaming, real-time transcoding and analysis have become critical components of multimedia services. Edge computing platforms can significantly enhance user experience by reducing data transmission delays and improving processing efficiency. This test procedure involves processing multimedia content, such as real-time transcoding and analysis of video streams. The experiment will test the platform’s performance in multimedia content processing, including transcoding speed, analysis accuracy, and bandwidth utilization. Evaluating the platform’s performance in this context is vital for optimizing video streaming services and enhancing user satisfaction.

-

3)

Heavyweight: Smith-Waterman Gene Sequence Analysis

In bioinformatics, gene sequence alignment is a computationally intensive task that demands high computational capacity and efficiency. Edge computing platforms, through distributed processing and optimized algorithms, can accelerate gene sequence analysis, reducing research cycles. This test case involves executing gene sequence alignment, a computationally intensive task, and the experiment will verify the edge computing platform’s computational capacity and efficiency when processing large-scale bioinformatics datasets. Assessing the platform’s performance and stability in handling complex bioinformatics datasets is of great significance for advancing biomedical research and innovation.

Through these meticulously designed test cases, not only do we comprehensively cover lightweight, medium-weight, and heavyweight application requirements, validating the solution’s performance across different application levels, but we also closely align with current industry needs and typical application scenarios, demonstrating its performance in practical applications21.

Evaluation metrics

In edge computing environments, resource allocation plays a critical role in determining the efficiency and overall performance of computation offloading. As offloading increasingly involves collaborative processing across cloud servers, edge nodes, and end devices, the complexity of resource allocation has significantly increased. Based on the determined offloading decisions, the core objective of resource allocation is to allocate system resources (e.g., bandwidth, CPU cycles, storage) rationally to offloaded tasks, aiming to optimize system performance—including reduced latency, lower energy consumption, and improved load balancing—while meeting the quality-of-service requirements of each task. Therefore, an efficient resource allocation mechanism not only affects the execution efficiency of individual tasks but also has a profound impact on system stability, scalability, and energy sustainability.

The load balancing index is a unitless relative metric that measures the uniformity of task distribution within the system. The index typically ranges from 0 to 1, where 1 indicates a perfectly balanced task distribution, and 0 indicates complete imbalance. Computational accuracy is expressed as the average percentage error. The task success rate represents the proportion of tasks that are successfully completed.

Experimental results and analysis

To comprehensively evaluate the performance of four offloading strategies (LCS, ECS, CCS, and ACS) across applications with varying complexities, a series of experiments were designed in this section, covering lightweight, midweight, and heavyweight application scenarios. By systematically collecting and analyzing data from these strategies while handling various tasks, we aim to thoroughly explore their advantages and disadvantages under different conditions. Furthermore, the experimental results not only provide theoretical insights into understanding each offloading strategy but also offer practical guidance for selection and configuration in real-world applications. Through comparative analysis, it is possible to identify the most suitable offloading strategy for specific scenarios, thereby optimizing overall system performance and resource utilization.

-

1)

Energy efficiency validation

The experiment compared and analyzed energy consumption data of the four offloading strategies, as shown in Fig. 5. To ensure fairness in the experiment, an equal number of tasks were processed for each task magnitude, and the average energy consumption values for each task magnitude were calculated. After normalizing the energy consumption data of all tasks, significant differences in energy consumption among different offloading schemes when processing applications of the same magnitude were observed. As the application magnitude increased, the energy consumption of all offloading schemes showed an upward trend. This trend aligns with expectations because higher application complexity typically requires more computational resources and time, leading to greater energy consumption. Comparatively, LCS exhibits the lowest energy consumption in small-scale applications, but its energy consumption increases rapidly as the application scale grows. In contrast, ECS and CCS have relatively higher energy consumption in small-scale applications, and this increase accelerates even more with the rise in application scale. The primary reason for this phenomenon is that ACS demonstrates relatively lower energy consumption across various application scales due to its intelligent offloading strategy, which dynamically adjusts resource allocation based on task requirements and network conditions.

-

2)

Latency sensitivity validation

The experiment conducted a comparative analysis of the latency performance of different offloading schemes in handling multiple concurrent tasks, as shown in Fig. 6. Overall, as the number of concurrent tasks increased, the latency of all schemes exhibited an upward trend. This is primarily due to the intensified competition and consumption of system resources (such as CPU, memory, and network bandwidth), which leads to longer processing times. Among them, LCS shows a relatively faster increase in latency. The main reason is the limited local resources—resource contention becomes more severe with an increasing number of tasks, resulting in performance degradation. Additionally, the latency data of LCS also show certain fluctuations, which may be caused by interference from background processes and varying workload conditions in the actual experimental environment. The latency performance of ECS and CCS is relatively close. However, as the number of tasks increases, network transmission delay may become a bottleneck. The latency of ECS is generally higher than that of CCS. This difference can be attributed to variations in server configurations and network conditions in the experimental setup. In contrast, ACS demonstrates relatively lower latency. This is mainly because ACS dynamically adjusts its offloading strategy based on current conditions to minimize latency. Through comparative analysis, the most suitable offloading scheme can be selected according to specific application scenarios and requirements. For instance, ACS may be a better choice for latency-sensitive applications, whereas LCS could be more appropriate for resource-constrained environments. Moreover, the experimental results remind us that various uncertain factors should be fully considered in practical applications in order to more accurately evaluate and optimize system performance.

-

3)

Resource scheduling and allocation validation

The load balancing index is a dimensionless relative metric used to measure the uniformity of task distribution across the system. As shown in Fig. 7, the experiment analyzed how the load balancing index of the four strategies changes under different system loads. The results indicate that as the system load increases, the load balancing index of LCS drops sharply. This reflects its inability to effectively handle increasing task volumes under limited local resources, leading to severe imbalance in workload distribution—particularly evident under high-load conditions where performance degradation is most significant. In contrast, ECS and CCS demonstrate more stable performance. Under scenarios with sufficient resources and favorable network conditions, these two strategies achieve relatively good load balancing. However, as the load continues to increase, their load balancing indices gradually decline due to limitations in communication quality and network conditions. Among all tested strategies, ACS performs the best. Its load balancing index remains consistently high, and even under heavy load, ACS significantly outperforms the other schemes in terms of load balancing performance. This is attributed to its ability to dynamically adjust task allocation strategies, thereby maintaining superior performance under various load conditions. Nevertheless, under extreme load conditions, increased network latency and congestion, along with resource bottlenecks, affect ACS’s efficiency in acquiring resources and allocating new tasks, which in turn has a certain impact on the overall load balancing.

-

4)

Reliability validation

As shown in Fig. 8, the experiment conducted a comparative analysis of the computational accuracy and reliability of different schemes in task processing. The experimental results indicate that LCS performs significantly worse than the other three strategies. This is mainly due to the limitations of local device performance—when handling complex tasks, the computational capability of local devices may not be sufficient to achieve optimal accuracy, leading to a decline in the precision of computation results. In contrast, ECS and CCS benefit from utilizing more powerful edge server resources, resulting in a notable improvement in computational accuracy. In particular, CCS achieves higher accuracy than ECS. However, ACS demonstrates the best performance in terms of computational accuracy. This is attributed to its ability to dynamically adjust based on task requirements and network conditions. This flexible mechanism enables ACS to maintain high accuracy when processing various types of complex tasks, thereby meeting the demands of high-precision computing. From the perspective of task success rate, LCS again shows the lowest performance. The limited local resources often lead to task failures or timeouts. On the other hand, both ECS and CCS improve the task success rate through distributed processing, with CCS achieving a slightly higher success rate than ECS, further confirming the advantages of CCS in task processing strategies. Nevertheless, ACS once again exhibits a clear advantage in task success rate. This is closely related to its dynamic adjustment capability, which allows real-time adaptation based on current network conditions and task demands, ensuring efficient and accurate task completion.

Through the comparison and analysis of the four offloading schemes based on experimental data, the following conclusions can be drawn: LCS performs poorly in both computational accuracy and task success rate due to resource constraints. It is suitable for scenarios with limited resources or where high computational accuracy is not required. ECS and CCS enhance computational accuracy and task success rate by leveraging edge server resources. These strategies are appropriate for applications requiring moderate levels of computational precision and reliability. ACS, with its dynamic adjustment capability, demonstrates strong advantages in both computational accuracy and task success rate. Its flexibility and efficiency make it the preferred solution for handling complex and high-precision computing tasks, especially in environments with stringent reliability requirements.

-

5)

Platform stress testing

To evaluate the actual performance of the four offloading strategies under different application workloads, the experiment conducted a detailed comparative analysis based on the total utility across lightweight, midweight, and heavyweight applications. Through repeated experiments and statistical analysis, we adopted box plots to intuitively and rigorously present these results, clearly illustrating the performance distribution and differences among the various offloading strategies.

As shown in Fig. 9, in lightweight applications, LCS and CCS demonstrate relatively stable performance, with data points closely clustered and high median values. This indicates that these two strategies offer high reliability and consistency when handling lightweight tasks. In contrast, ECS and ACS show greater performance fluctuations, with more dispersed data points. This may be due to the low resource consumption in lightweight scenarios, which increases the sensitivity to strategy selection.

As task complexity increases, as illustrated in Fig. 10, the performance differences among the offloading strategies become more pronounced. ACS demonstrates a clear advantage, with the most concentrated data distribution at the highest level, indicating its efficiency and stability in processing midweight tasks. ECS also exhibits good performance, with data mainly clustered at higher levels, suggesting potential for further improvement. CCS performs at an intermediate level between LCS and ECS. Meanwhile, LCS shows relatively poor performance in midweight applications, possibly failing to meet the demands of more complex tasks.

In heavyweight applications, as shown in Fig. 11, the performance gaps among the offloading strategies are further amplified. ACS continues to lead, maintaining the most concentrated data distribution toward higher positive values and having the highest median value, demonstrating excellent performance and stability in handling complex tasks. CCS also shows relatively stable performance, although with slightly higher dispersion, its median remains high. ECS performs at a moderate level, while LCS shows the worst performance in heavyweight applications, with widely scattered data and the lowest median.

Based on the above stress testing results across different application scales, the following conclusions can be drawn:

-

(a)

ACS demonstrates the best overall performance in most cases, especially in midweight and heavyweight applications. Its stability and efficiency significantly outperform other strategies. This is largely attributed to ACS’s ability to flexibly integrate the advantages of local computing, edge computing, and cloud computing.

-

(b)

CCS maintains relatively stable performance across all three application scales. Although it does not surpass ACS, it still shows competitiveness in handling complex tasks.

-

(c)

ECS exhibits larger performance fluctuations in lightweight applications but performs well in midweight and heavyweight scenarios. This is because ECS better leverages the advantages of edge computing when dealing with more complex tasks.

-

(d)

LCS consistently performs the worst across all application scales, particularly lagging behind in midweight and heavyweight applications. This is primarily due to its reliance solely on limited local resources and its inability to fully utilize the benefits of edge and cloud computing.

The four offloading strategies exhibit clear performance differences, as shown in Table 2. LCS is energy-efficient in lightweight tasks but performs poorly under heavy loads, especially in load balancing and task success rate, making it suitable only for simple, resource-constrained scenarios. ECS reduces latency and improves reliability under good network conditions, yet its performance fluctuates under high load or unstable networks. CCS delivers high accuracy and stability for complex tasks but suffers from long communication delays, limiting its efficiency in lightweight applications. ACS, in contrast, consistently outperforms the others by dynamically adapting to workload and network changes, achieving the best overall performance in energy efficiency, latency, load balancing, and reliability — albeit with slightly higher algorithmic complexity.

Limitations and constraints

Despite the notable achievements in the construction of an edge computing physical verification platform and the optimization of offloading strategies, this study still encounters several limitations and potential biases that necessitate further exploration and resolution in future research. Firstly, The current experimental setup is primarily based on a limited set of devices and simulated conditions. While this allows for controlled comparisons and highlights the performance advantages of the proposed adaptive offloading strategy, it may not fully reflect the diversity and dynamics of real-world edge computing environments. For instance, variations in hardware configurations, network quality, and user behavior are not adequately captured, which could lead to an overestimation of system stability and efficiency. As a result, the generalizability of the findings—especially when applied to large-scale or heterogeneous deployments—may be limited. In addition, the evaluation metrics used in this study mainly focus on computational latency, energy consumption, and task success rate. Although these are essential indicators for assessing offloading performance, they do not account for other critical aspects such as system security, fault tolerance, data privacy, and user experience. Neglecting these non-functional dimensions may reduce the practical applicability of the proposed approach, particularly in safety-critical or privacy-sensitive applications like healthcare monitoring or industrial automation. Therefore, future work should expand the evaluation framework to include a broader range of metrics that reflect both functional and non-functional requirements. Furthermore, the current model assumes relatively stable network and device conditions, which does not align with the uncertainties often encountered in real-world environments—such as wireless signal fluctuations, device failures, or resource degradation over time. These dynamic factors can significantly affect the reliability and effectiveness of offloading decisions. To improve the robustness and realism of the results, future studies should incorporate real-time environmental monitoring, adaptive fault detection mechanisms, and predictive resource allocation models. Furthermore, exploring cross-platform interoperability, including standardized interfaces and coordination protocols, will enhance the deployment potential of the proposed strategy in heterogeneous infrastructures. Integrating emerging technologies such as 5G communication, edge AI, and lightweight machine learning models also holds great promise for addressing current limitations and advancing the field.

To further enhance the practical relevance and robustness of future research, several actionable steps can be taken. First, expanding the experimental setup to include diverse hardware configurations—such as low-end IoT devices, high-performance edge servers, and heterogeneous communication protocols—can help evaluate the adaptability of offloading strategies under real-world heterogeneity. Second, incorporating additional evaluation metrics, such as system security (e.g., data integrity and access control), fault tolerance (e.g., recovery time and redundancy mechanisms), and user experience (e.g., QoE indicators like response smoothness and interface latency), would provide a more comprehensive understanding of system performance beyond traditional efficiency benchmarks. Third, designing dynamic and realistic experimental conditions, such as introducing network instability, fluctuating workloads, and device failures into simulations, can better reflect the unpredictable nature of real deployment environments. Finally, exploring integration with emerging technologies, including lightweight machine learning models for fast inference at the edge, 5G/6G communication for reduced transmission delay, and digital twin-based simulation for virtual testing, can significantly improve the scalability, responsiveness, and resilience of offloading strategies.

Conclusion

With the deep integration of the IoT and edge computing, the selection of offloading strategies has become particularly critical, directly affecting system performance, resource utilization, and task processing efficiency. This chapter focuses on edge computing offloading strategies in embedded systems, and innovatively integrates KubeEdge and Serverless technologies to construct an efficient, flexible, and intelligent edge-IoT verification platform. On this platform, the system comprehensively evaluates the performance of four offloading strategies—Local Computing Strategy (LCS), Edge Computing Strategy (ECS), Cloud Computing Strategy (CCS), and Adaptive Computing Offloading Strategy (ACS)—across three applications with varying levels of complexity. Experimental results reveal significant differences among these strategies in terms of latency, energy consumption, and resource utilization, providing strong support for optimizing offloading configurations in real-world systems.

This study not only achieves a systematic comparison of multiple offloading strategies at the technical level but also proposes a dynamic selection mechanism tailored for resource-constrained scenarios, contributing to enhanced task processing capabilities and energy efficiency of embedded devices. The research outcomes hold significant implications for advancing edge computing toward greater intelligence and lightweight design, particularly benefiting application domains with stringent requirements for real-time performance and reliability, such as battlefield IoT, emergency response, and industrial control. Moreover, this work lays a practical foundation and provides reference pathways for the deployment and optimization of future edge computing systems. Future work may further enrich the experimental environment by exploring cross-platform interoperability, multimodal task scheduling, and AI-driven intelligent decision-making mechanisms, aiming to build a more efficient, secure, and sustainable edge computing ecosystem.

Data availability

The data that support the findings of this study are available from the corresponding author, [Zhi Yu], upon reasonable request.

References

Merzougui, S. E. Leveraging edge computing and orchestration platform for enhanced pedestrian safety application: The DEDICAT-6G approach. https://doi.org/10.1109/CCNC51664.2024.10454879 (2024).

Ferreira, V. et al. NETEDGE MEP: A CNF-based multi-access edge computing platform. https://doi.org/10.1109/ISCC58397.2023.10217942 (2023).

Horisaki, S., Matama, K., Goto, R., Naito, K. & Suzuki, H. Intercommunication method between local edge computing devices using QUIC-based CYPHONIC. https://doi.org/10.1109/ICCE59016.2024.10444375 (2024).

Tuan, N. A., Xu, R., Moe, S. J. & Kim, D. Federated recognition architecture based on voting and feedback mechanisms for accuracy object classification in distributed edge intelligence environment. IEEE Sens. J. 23(22), 27478–27489. https://doi.org/10.1109/JSEN.2023.3285618 (2023).

Su, X., Qi, J., Wang, J., Wang, R. & Yao, Y. EasiEI: A simulator to flexibly modeling complex edge computing environments. IEEE Internet Things J. 11(1), 1558–1571. https://doi.org/10.1109/JIOT.2023.3289870 (2024).

Del-Pozo-Puñal, E., García-Carballeira, F. & Camarmas-Alonso, D. A scalable simulator for cloud, fog and edge computing platforms with mobility support. Future Gener. Comput. Syst. 144, 117–130. https://doi.org/10.1016/j.future.2023.02.010 (2023).

Ren, T., Hu, Z., He, H., Niu, J. & Liu, X. FEAT: Towards Fast environment-adaptive task offloading and power allocation in MEC. https://doi.org/10.1109/INFOCOM53939.2023.10228946. (2023).

Kim, S. H. & Kim, T. Local scheduling in KubeEdge-based edge computing environment. Sensors 23, 1522. https://doi.org/10.3390/s23031522 (2023).

Bahy, M. B., Dwi Riyanto, N. R., Fawwaz Nuruddin Siswantoro, M. Z. & Santoso, B. J. Resource utilization comparison of KubeEdge, K3s, and Nomad for edge computing. In 2023 10th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Palembang, Indonesia 321–327. https://doi.org/10.1109/EECSI59885.2023.10295642 (2023).

Russo, G. R., Cardellini, V. & Presti, F. L. A framework for offloading and migration of serverless functions in the Edge-Cloud Continuum. Pervasive Mob. Comput. 100, 101915 (2024).

Stojkovic, J., Iliakopoulou, N., Xu, T., Franke, H. & Torrellas, J. EcoFaaS: Rethinking the design of serverless environments for energy efficiency. In Proceedings of the 51st Annual International Symposium on Computer Architecture (ISCA’24) (2024).

Xu, M. et al. Practice of Alibaba cloud on elastic resource provisioning for large-scale microservices cluster. Softw. Pract. Exp. 54(1), 39–57 (2024).

Hefiana, R. & Fernando, Y. Analisis perbandingan elastic compute service (ECS) instance Alibaba cloud Dengan virtual machine azure. KLIK Kajian Ilmiah Inf. Komput. 4(4), 2158–2168 (2024).

Kum, S., Yu, M., Kim, Y., Moon, J. & Cretti, S. AI Management platform with embedded edge cluster. In 2021 IEEE International Conference on Consumer Electronics (ICCE). https://doi.org/10.1109/ICCE50685.2021.9427731 (2021).

Psaromanolakis, N. etal. MLOps meets edge computing: An edge platform with embedded intelligence towards 6G systems. https://doi.org/10.1109/EUCNC/6GSUMMIT58263.2023.10188244 (2023).

Wu, L. et al. Simulation for urban computing scenarios: An overview and research challenges. https://doi.org/10.1109/CSCloud-EdgeCom58631.2023.00012 (2023).

Kaiser, S., Şaman Tosun, A. & Korkmaz, T. Benchmarking container tech-nologies on ARM-based edge devices. IEEE Access 11, 107331–107347. https://doi.org/10.1109/ACCE-SS.2023.3321274 (2023).

Lingayya, S. et al. Dynamic task offloading for resource allocation and privacy-preserving framework in Kubeedge-based edge computing using machine learning. Clust. Comput. https://doi.org/10.1007/s10586-024-04420-8 (2024).

Pham, K. Q. & Kim, T. Elastic federated learning with Kubernetes vertical pod Autoscaler for edge computing. Future Gener. Comput. Syst. 158, 501–515 (2024).

Fathoni, H. et al. Empowered edge intelligent aquaculture with lightweight Kubernetes and GPU-embedded. Wirel. Netw. https://doi.org/10.1007/s11276-023-03592-2 (2024).

Yuan, Y. et al. Edge-Cloud collaborative UAV object detection: Edge-embedded lightweight algorithm design and task offloading using fuzzy neural network. IEEE Trans. Cloud Comput. 12, 306–318. https://doi.org/10.1109/TCC.2024.3361858 (2024).

Author information

Authors and Affiliations

Contributions

Conceptualization: Z.Y. and J.C.; Data curation: M.C. and J.Y.; Formal analysis: J.C. and B.Z.; Funding acquisition: J.Y.; Writing – original draft: J.C.; Software: J.C. and M.C.; Supervision: Z.Y. and J.Y.; Validation: J.C. and B.Z. Writing – review & editing: Z.Y. , J.Y. and J.C.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Cao, J., Yu, Z., Zhu, B. et al. Construction and efficiency analysis of an embedded system-based verification platform for edge computing. Sci Rep 15, 26114 (2025). https://doi.org/10.1038/s41598-025-10580-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-10580-3