Abstract

In this paper, we develop a combination of algorithms, including camera motion detector (CMD), deep learning models, class activation mapping (CAM), and periodical feature detector for the purpose of evaluating human gastric motility by detecting the presence of gastric peristalsis and measuring the period of gastric peristalsis. Moreover, we use visual interpretations provided by CAM to improve the sensitivity of the detection results. We evaluate the performance of detecting peristalsis and measuring period by calculating accuracy, F1, and area under curve (AUC) scores. Also, we evaluate the performance of the periodical feature detector using the error rate. We perform extensive experiments on the magnetically controlled capsule endoscope (MCCE) dataset with more than 100,000 frames (100,055 specifically). We have achieved high accuracy (0.8882), F1 (0.8192), and AUC scores (0.9400) for detecting human gastric peristalsis, and low error rate (8.36%) in measuring peristalsis periods from the clinical dataset. The proposed combination of algorithms has demonstrated the feasibility of assisting in the evaluation of human gastric motility.

Introduction

In the normal digestion process, food is propelled through the digestive tract by rhythmic muscle contractions known as peristalsis. The resulting movement of contents is referred to as gastrointestinal motility (throughout the digestive tract) or gastric motility (when limited to the stomach)1. Gastric motility disorder occurs when the normal peristalsis is disrupted, which may cause severe constipation, recurrent vomiting, bloating, diarrhea, nausea, and even death. Gastric motility may be assessed through various techniques. While direct measurements such as gastric emptying scintigraphy and wireless motility capsules (WMCs) quantify food movement, other modalities, including manometry, electrogastrography (EGG), and ultrasound, evaluate the factors affecting gastric motility. However, traditional methods of evaluating gastric motility have their limitations. Manometry involves intranasal intubation protocols, which may cause discomfort to patients and lead to the use of sedation2. Nuclear medicine is required for gastric emptying scintigraphy, which exposes patients to the risk of radiation3. EGG has many variations in the recording system. Despite recent efforts to standardize EGG for body surface gastric mapping4, EGG remains susceptible to inter-individual physiological variability, such as differences in body mass index. Gastric ultrasound suffers from the trade-off between penetration depth and resolution5. Wireless motility capsules measure physiological parameters related to gastric peristalsis, such as temperature, pH, and pressure, as they travel through the gastrointestinal tract6. However, as the WMCs passively transit through the gastrointestinal tract, they may fail to accurately assess motility at specific anatomical landmarks7. On the other hand, the magnetically controlled capsule endoscope (MCCE) is an emerging tool for the diagnosis of gastric diseases, which provides real-time, true-color visualization of the gastric environment. With active magnetic control, MCCE enables precise localization and visualization of anatomical landmarks. Moreover, the MCCE provides direct, multi-angle visualization of contraction waves, which enables comprehensive and effective analysis of gastric motility. In addition, the MCCE offers the advantages of comfort, safety, and no need for anesthesia8.

However, evaluating gastric motility using MCCE requires an extensive amount of manual labor from clinical staff. For example, each MCCE frame needs to be inspected for the presence of peristalsis, and the period of peristalsis needs to be manually counted. Thus, there is a need to develop automatic algorithms for evaluating gastric motility using MCCE systems. Deep learning algorithms have been used in the field of medical imaging9,10,11 as well as in assisting diagnosis with MCCE systems12,13. Convolutional neural networks (CNNs) have been used in detecting polyps14,15, ulcers16, tumors, and mucosa12. Moreover, Deep Reinforcement Learning (DRL) approaches have been used in the automated navigation of MCCE capsules within the human stomach. However, the existing research focuses on detecting gastric lesions, anomaly detection, segmentation, and navigation17 based on single MCCE frames, instead of utilizing the temporal information from MCCE frame sequences.

In this paper, we develop a combination of algorithms for evaluating human gastric motility by detecting and measuring gastric peristalsis using the MCCE system. During the MCCE examination, an external magnetic head guides the capsule to move and capture images within the human stomach, which poses a challenge for action recognition algorithms18. To mitigate the sudden movement of the MCCE capsule, we develop a camera motion detector (CMD) for processing MCCE frame sequences. We develop a framework for detecting gastric peristalsis, which is compatible with CNN + long short-term memory (LSTM)19,20 and transformer-based models21. The human gastric contraction waves present features in both spatial and temporal domains. In the spatial domain, the waves have morphological shapes; in the temporal domain, the shape of the waves changes over time. The CNN model is capable of capturing the spatial features and the LSTM model is capable of capturing the temporal features, while the Video Swin Transformer21 is capable of analyzing patches across the spatial and temporal dimensions. For detection and classification algorithms in most medical applications, reducing false negatives is more important than reducing false positives22. False negative results may lead to an omission in detecting gastric peristalsis, which will lead to an underestimation of human gastric motility. The class activation mapping (CAM)23 is capable of highlighting the regions within the stomach where the peristalsis occurs. To make the detection results more reliable (i.e. have fewer false negatives in detecting peristalsis frames in the dataset), we improve the detection sensitivity using the visual interpretations provided by CAM. Moreover, we develop a periodical feature detector for measuring the period of human gastric peristalsis based on the analysis of feature maps of MCCE frames.

We conducted extensive experiments on our MCCE dataset, which includes over 100,000 frames (specifically 100,055) from 30 subjects for the training and validation sets, and 24,183 frames from 11 subjects for the testing set. Our combination of algorithms is capable of evaluating gastric motility by detecting the presence of peristalsis as well as measuring the period of gastric peristalsis. The proposed algorithms have great potential to be developed in clinical devices for assisting the evaluation of gastric motility.

Methods

MCCE dataset

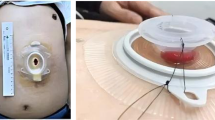

The MCCE dataset was acquired by the department of research and development at AnX Robotica. Using the NaviCam MCCE system, inspection videos of internal volunteers were collected. The MCCE system consists of four components: a swallowable, wireless, and magnetically controlled capsule endoscope (11.8 \(\times\) 27 mm), a guidance magnetic robot, a data recorder, and a computer workstation with the corresponding software. An example of the components of the NaviCam MCCE system is shown in Fig. 1. The videos captured by MCCE were recorded at 2 fps, with a size of 480 \(\times\) 480 pixels. The MCCE videos were treated as frame sequences. Our training and validation set contains more than 100,000 MCCE frames (specifically 100,055) from 30 subjects.

An illustration of the NaviCam MCCE system (https://www.anxrobotics.com/products/navicam-stomach-capsule-system/). (a) Controlled capsule endoscope. (b) Guidance magnetic robot. (c) Data recorder and computer workstation.

Design of camera motion detector

We design the CMD for filtering the MCCE frames which are deteriorated by camera movement. The proposed CMD takes two consecutive frames, Frame N-1 and Frame N, as inputs and determines the camera motion by analyzing histograms. The details of the CMD are described in Algorithm 1. A normalized Gaussian function with \(\mu\) = 128 and \(\sigma\) = 20 is adopted as the mask M. The choice of \(\mu\) and \(\sigma\) is based on empirical research. With a higher threshold T, the mean length of the resulting video sequences is longer, leading to higher computational cost; with a lower threshold T, the resulting video sequences contain less motion. We empirically set the threshold T to 200 to strike a trade-off between the sequence length and the camera motion within sequences.
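The scoring step described above can be sketched as follows. This is a minimal illustration and not a reproduction of Algorithm 1: it assumes grayscale frames given as nested sequences of integers in 0..255, a residual defined as the absolute per-pixel difference, a Gaussian mask scaled so that its peak equals 1, and a score equal to the sum of the masked histogram. The function names are hypothetical.

```python
import math

def gaussian_mask(mu=128.0, sigma=20.0, bins=256):
    """Gaussian mask M over residual bins; peak scaled to 1 (assumed normalization)."""
    weights = [math.exp(-0.5 * ((b - mu) / sigma) ** 2) for b in range(bins)]
    peak = max(weights)
    return [w / peak for w in weights]

def cmd_score(prev_frame, frame, mask):
    """Sum of the masked histogram H_M of the residual between two frames."""
    hist = [0] * len(mask)
    for prev_row, row in zip(prev_frame, frame):
        for a, b in zip(prev_row, row):
            hist[abs(b - a)] += 1  # residual assumed to be the absolute difference
    return sum(h * m for h, m in zip(hist, mask))

def is_camera_moving(prev_frame, frame, mask, threshold=200.0):
    """A frame pair is flagged as 'camera moving' when the score exceeds T."""
    return cmd_score(prev_frame, frame, mask) > threshold
```

Under these assumptions, residual values near 0 and near 255 receive almost no weight, so small illumination shifts between stable frames contribute little to the score, while mid-range residuals produced by large scene changes dominate it.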

Workflow of detecting human gastric peristalsis

The workflow of detecting human gastric peristalsis is shown in Fig. 2a. For training, the MCCE dataset will be processed by the CMD, which provides stable MCCE frames. For prediction (testing), the testing MCCE data will go through a post-processing step. In the post-processing step, the CMD is involved in determining the quality of the MCCE frames. The frames with camera movement above the threshold will be marked as ‘camera moving’; the stable MCCE frames which pass the CMD will be sent to the pre-trained deep-learning model for prediction, which generates outputs of ‘wave’ or ‘nowave’. The proposed framework is compatible with various deep-learning models. In Fig. 2b, we demonstrate the method of using CAM for improving the sensitivity of the framework. In Fig. 2c, we demonstrate the ensemble of the CNN and LSTM for detecting human gastric peristalsis.
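The routing of frames at prediction time can be sketched as below. This is only an illustration under stated assumptions: `cmd_scores` and `wave_probabilities` are hypothetical per-frame inputs standing in for the CMD output and the deep-learning model output, and the 0.5 decision threshold on the model output is an assumption, not a value given in the text.

```python
def label_frames(cmd_scores, wave_probabilities,
                 cmd_threshold=200.0, wave_threshold=0.5):
    """Assign 'camera moving', 'wave', or 'nowave' to each frame."""
    labels = []
    for score, probability in zip(cmd_scores, wave_probabilities):
        if score > cmd_threshold:
            # Unstable frame: the model prediction is not used.
            labels.append("camera moving")
        elif probability >= wave_threshold:
            labels.append("wave")
        else:
            labels.append("nowave")
    return labels
```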

(a) The workflow of detecting human gastric peristalsis using MCCE frames and deep learning algorithms. During the training and inferencing phases, the CMD is used to filter the unstable MCCE frames, and deep learning algorithms are used to detect gastric peristalsis based on both spatial and temporal information. (b) The protocol for improving detection sensitivity using CAM. Using the counted activated pixels, we further calibrate the prediction results from the CNN-LSTM model. (c) The ensemble of CNN and bi-directional LSTM model for detecting gastric peristalsis. The CNN model extracts spatial features and LSTM model extracts temporal features.

Improving sensitivity using CAM

We use the CAM to calibrate the detection results of the deep learning model. The CAM for a particular category indicates the discriminative image regions used by the CNN to identify that category. We calculate the CAM for each MCCE frame k. Then we use a threshold \(\hbox {T}_{p}\) to select the activated pixels in the CAM and count them. If the number of activated pixels of a frame is larger than the threshold \(\hbox {T}_{c}\), the frame will be classified as ‘wave’ in the modified label list c. Then we perform a calibration between the modified list c and the original prediction list p. If \(c_k\) or \(p_k\) is ‘wave’, the final calibrated prediction result \(pr_k\) will be ‘wave’. The algorithm is described in Algorithm 2. The parameter \(\hbox {T}_{p}\) determines the threshold for choosing positive CAM pixels and \(\hbox {T}_{c}\) determines the number of positive CAM pixels needed to consider a frame as positive. The higher these two parameters, the stricter the CAM filter. For example, if \(\hbox {T}_{p}\) is set to 1 and \(\hbox {T}_{c}\) is set to 230,400 (the total number of pixels in an MCCE frame with a size of 480\(\times\)480), then no frame can pass the CAM filter and the sensitivity of the CNN+LSTM model will not be improved. If both \(\hbox {T}_{p}\) and \(\hbox {T}_{c}\) are set to 0, then every MCCE frame will pass the CAM filter, and the sensitivity will be 1. Following some of the existing research24,25, we set \(\hbox {T}_{p}\) = 0.8. We set \(\hbox {T}_{c}\) to 400 based on empirical research.
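The calibration rule can be sketched as follows. The sketch assumes that each CAM is a 2D grid of values normalized to the range 0 to 1, that a pixel counts as activated when its value is at least \(\hbox {T}_{p}\), and that a frame passes when the count is strictly larger than \(\hbox {T}_{c}\); the function names are hypothetical and Algorithm 2 may differ in these details.

```python
def count_activated_pixels(cam, t_p=0.8):
    """Number of CAM pixels whose value reaches the pixel threshold T_p."""
    return sum(1 for row in cam for value in row if value >= t_p)

def calibrate_predictions(predictions, cams, t_p=0.8, t_c=400):
    """Combine model predictions p with CAM-derived labels c by a logical OR."""
    calibrated = []
    for p_k, cam in zip(predictions, cams):
        c_k = "wave" if count_activated_pixels(cam, t_p) > t_c else "nowave"
        # A frame is 'wave' if either the model or the CAM filter says so.
        calibrated.append("wave" if "wave" in (p_k, c_k) else "nowave")
    return calibrated
```

Because the two label lists are combined with a logical OR, the calibration can only turn ‘nowave’ predictions into ‘wave’, never the reverse, which is why it raises sensitivity at the possible cost of additional false positives.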

Periodical feature detector for human gastric peristalsis

We design a periodical feature detector for measuring periods of human gastric peristalsis. The inputs of the periodical feature detector are a range of intervals, MCCE frames, and two thresholds \(T_{l}\) and \(T_{u}\). The periodical feature detector calculates the feature difference score S of feature maps across certain intervals i. For each interval i, a score \({\textbf {S}}_{i}^{mean}\) is calculated for the MCCE frames. The period of the human gastric peristalsis is determined by the local minimum P between thresholds \(T_{l}\) and \(T_{u}\). The details of the periodical detector are described in Algorithm 3. The intervals i were set from 5 s to 50 s, with an increment of 0.5 s (2 fps). \(T_l\) is set to 10 s and \(T_u\) is set to 40 s. The choice of \(T_l\) and \(T_u\) is determined by the minimum number of frames needed to detect human gastric peristalsis and the average period of normal human gastric peristalsis. We will show experimental details in the Results section to confirm that 10 s (20 MCCE frames) achieves optimal performance in detecting gastric peristalsis. Thus, we assume 10 s to be the lower bound for the periodical feature detector and set \(T_l\) to 10 s. We set \(T_u\) (40 s) to twice the average period of normal gastric peristalsis, which is around 20 s26. Note that the periodical feature detector will detect both the period and multiples of the period. Thus, setting \(T_u\) = 40 s can remove the multiples of the period of normal human gastric peristalsis. We use EfficientNet_b727 to generate the feature maps of the MCCE frames.
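The interval search can be sketched as follows. This is an illustration under stated assumptions rather than Algorithm 3 itself: each frame is represented by a flat feature vector (a stand-in for the EfficientNet_b7 feature map), the feature difference is the Euclidean distance, and when several local minima fall between \(T_l\) and \(T_u\) the one with the lowest score is returned. The function names are hypothetical.

```python
import math

def mean_feature_difference(features, interval):
    """Mean Euclidean distance between feature vectors `interval` frames apart."""
    pairs = zip(features[:-interval], features[interval:])
    distances = [math.dist(a, b) for a, b in pairs]
    return sum(distances) / len(distances)

def detect_period(features, fps=2.0, i_min=5.0, i_max=50.0, t_l=10.0, t_u=40.0):
    """Return the detected period in seconds, or None if no local minimum exists."""
    first, last = round(i_min * fps), round(i_max * fps)
    if len(features) <= last:
        raise ValueError("sequence is too short for the largest interval")
    intervals = list(range(first, last + 1))  # one step per frame (0.5 s at 2 fps)
    scores = [mean_feature_difference(features, i) for i in intervals]
    best = None
    for j in range(1, len(intervals) - 1):
        seconds = intervals[j] / fps
        is_local_min = scores[j] < scores[j - 1] and scores[j] < scores[j + 1]
        if is_local_min and t_l <= seconds <= t_u:
            if best is None or scores[j] < best[1]:
                best = (seconds, scores[j])
    return None if best is None else best[0]
```

On a synthetic sequence whose features repeat every 50 frames, the score drops to nearly zero at an interval of 50 frames, so the sketch reports a period of 25 s at 2 fps.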

Evaluation setup

Using the CMD, we acquire 32,431 stable MCCE frames (wave: 9501, nowave: 22,930) from the training set. The 32,431 stable MCCE frames are divided into 1028 MCCE frame sequences (wave: 336, nowave: 692); each sequence consists of more than 20 frames. Each MCCE frame sequence corresponds to a single label of either ‘wave’ or ‘nowave’. For training and cross-fold validation, we used the 1028 stable MCCE frame sequences. For testing, we used 30 additional MCCE records from another 11 individuals (24,183 frames), which were acquired after the training data. The training cohort and testing cohort were divided according to the time of data acquisition. The training and testing data were acquired from the same center, using the same MCCE system.

Network training parameters

For training the CNN+LSTM and Video Swin Transformer models, we set the batch size to eight (frame sequences). We used weights pre-trained on ImageNet28 for all the models in this project. For the CNN+LSTM models, we acquired the pretrained weights from the Torchvision29 package; for the Video Swin Transformer model, we acquired the pretrained weights from the mmaction30 package. We trained all the models for 200 epochs, by which point the loss function had reached a plateau for all models. We used the first five epochs for warm-up, in which we only trained the CNN model. The learning rate was initialized to \(10^{-4}\) and halved every 10 epochs. The experiments were carried out on a single RTX 3080 GPU.
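The learning-rate schedule and warm-up described above can be written down in a few lines. This sketch assumes that epochs are counted from 0 and that the first halving takes effect at epoch 10; the function names and the "cnn"/"lstm" part labels are hypothetical.

```python
def learning_rate(epoch, base_lr=1e-4, decay_every=10, factor=0.5):
    """Step decay: the rate is multiplied by `factor` every `decay_every` epochs."""
    return base_lr * factor ** (epoch // decay_every)

def trainable_parts(epoch, warmup_epochs=5):
    """During warm-up only the CNN is trained; afterwards the LSTM is trained as well."""
    return ("cnn",) if epoch < warmup_epochs else ("cnn", "lstm")
```

Under these assumptions, the learning rate in the final epoch (epoch 199) is \(10^{-4} \times 0.5^{19}\), roughly \(1.9 \times 10^{-10}\).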

Evaluation metrics

We used accuracy, F1 score, and area under the curve (AUC) to evaluate the classification performance. The accuracy score evaluates the ability of the model to correctly predict (true positive and true negative) the wave/nowave video frames; the F1 score evaluates the performance of the model with the consideration of falsely predicted cases (false negative and false positive). In this medical-related study, we need to evaluate model performance for both correctly and falsely predicted cases. The AUC score evaluates how well the trained model can distinguish between the wave and nowave video sequences.

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

where TP stands for true positive; TN stands for true negative; FP stands for false positive; FN stands for false negative.

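\[ \text{F1} = \frac{2 \times \text{precision} \times \text{sensitivity}}{\text{precision} + \text{sensitivity}} \]

where precision is defined by \(\frac{TP}{TP+FP}\); sensitivity is defined by \(\frac{TP}{TP+FN}\).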

The AUC score tells how much the classification model is capable of distinguishing between classes, which can be calculated by the receiver operating characteristic (ROC) curve. The AUC ranges in value from 0 to 1. A model whose predictions are 100% wrong has an AUC of 0; one whose predictions are 100% correct has an AUC of 1.
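The metrics above can be computed from confusion-matrix counts and prediction scores as in the following sketch. It uses the standard definitions of accuracy and F1, and computes AUC as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (ties count as one half), which equals the area under the ROC curve. The function names are hypothetical, and this is not necessarily how the scores in this study were computed.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, sensitivity, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "precision": precision,
            "sensitivity": sensitivity, "f1": f1}

def auc_score(labels, scores):
    """AUC via pairwise comparison of positive (1) and negative (0) samples."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))
```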

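We define the error rate to quantify the performance of the periodical feature detector as the absolute difference between the detected period and the manually counted period, relative to the counted period:

\[ \text{Error rate} = \frac{|P_{detected} - P_{counted}|}{P_{counted}} \times 100\% \]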

Ethics declarations

The MCCE data adopted in this research were collected from internal healthy volunteers at Ankon, using the NaviCam system. The NaviCam system is a medical device registered with the National Medical Products Administration (NMPA).

The data collection protocol was designed and performed by the Medical Department in Ankon, which is in accordance with the Ankon Internal Volunteer Protocol and Declaration of Helsinki.

Informed consent for the data collection protocol and reuse of the data for research purpose were acquired from all the internal healthy volunteers. The internal healthy volunteers received monetary compensation.

An example of applying the camera motion detector to stable (upper row) and unstable (lower row) MCCE frame sequences. The MCCE frames are the inputs of the proposed CMD. The histogram (H), mask (M), and masked histogram (\(\hbox {H}_M\)) are interim results. The output of the CMD is a score that evaluates the movement between two consecutive MCCE frames.

Results

Effects of CMD

We show the effect of using the CMD on stable and unstable MCCE frame sequences. An example of applying CMD to stable (upper row) and unstable (lower row) MCCE images is demonstrated in Fig. 3. On the upper row, we apply the CMD to two consecutive MCCE frames captured when the capsule is stable. In this case, the main body of the histogram \({\textbf {H}}\) of the residual images between the two frames is zero. However, the high band (right) and low band (left) in the histogram have high values. This is caused by the small motion of the capsule, which is equipped with a light source. The slight changes in the light source will change the positions of bright/dark regions, which will be captured by the high/low bands of the histogram of the residual image. Using the mask \({\textbf {M}}\), we can filter out the high/low bands of \({\textbf {H}}\), which results in \({\textbf {H}}_M\). On the bottom row, we apply the CMD to two consecutive MCCE frames captured when the capsule is unstable, which leads to a high CMD score S (2616).

Detecting human gastric peristalsis

We train the CNN+LSTM models using different memory lengths (1, 5, 10, and 20 video frames). The model performance of different memory lengths is reported in Table 1. We observe the model performance increases with the value of the memory length. The CNN+LSTM model with a memory length of 20 shows the best performance.

We implemented different types of CNN models with an LSTM of memory length 20, including ResNet18, ResNet50, ResNet10132, ShuffleNet_v233, EfficientNet_b0, and EfficientNet_b727. We also implemented the Video Swin Transformer21 for detecting human gastric peristalsis. The results are shown in Table 2. The CNN+LSTM with memory length of 20 shows the best performance. The Video Swin Transformer model shows worse performance compared to the CNN+LSTM models. Although the Video Swin Transformer has the potential to demonstrate superior performance in classifying natural images with multiple classes21, CNN+LSTM models perform better for detecting gastric peristalsis in our dataset. EfficientNet_b7 performs better than the other CNN models27. The multi-objective neural architecture search algorithm in EfficientNet_b7, which searches for an optimal model design, may lead to superior performance over other CNN models.

Still images from inference results of four representative cases in the testing set. The EfficientNet_b7+LSTM with memory length of 20 is used for the inference. (a) Case14 (Supplementary Video case14.mp4). (b) Case19 (Supplementary Video case19.mp4). (c) Case20 (Supplementary Video case20.mp4). (d) Case24 (Supplementary Video case24.mp4).

Improving the detection sensitivity using CAM

In Fig. 4, we demonstrate the still images from the inference results of four representative cases in the testing set. We follow the inference protocol in Fig. 2a. The inference results are shown in black bold fonts. Also, we calculate CAM for the MCCE frames during inferencing. The CAM is projected to a heatmap, where red corresponds to high intensity (1) and blue corresponds to low intensity (0). In inference videos, the human gastric peristalsis is highlighted by the red regions in the CAM. The CAM provides visual explanations of the CNN+LSTM model for detecting gastric peristalsis.

By analyzing the activated regions in CAM (described in Algorithm 2) and calibrating the original prediction results, we improve the sensitivity of the CNN+LSTM model in detecting gastric peristalsis. The results are reported in Fig. 5. With the explainable information provided by CAM, we are capable of reducing the false negative results in detecting gastric peristalsis, compared to the vanilla CNN+LSTM model.

An example of applying the periodical feature detector on an MCCE frame sequence (case2 in the testing set). For each interval value I from 10 to 50 s, the periodical detector will generate a corresponding feature difference score. The I (between predefined \(T_l\) and \(T_u\)) with the local minimum feature difference score will be identified as the detected period P. In this case, the detected period of human gastric peristalsis is 17.5 s (denoted by the red line), which is close to the counted period (19.2 s).

Measuring period of human gastric peristalsis

To capture the period of human gastric peristalsis, we develop the periodical feature detector in Algorithm 3. The proposed detector extracts the periodical information by analyzing the difference of MCCE feature maps given different intervals. An example of applying the periodical feature detector on an MCCE frame sequence is shown in Fig. 6. In this case (case2 in the testing set), the detected period is 17.5 s (an error rate of 8.85% compared to the counted period of 19.2 s).
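Assuming the error rate is the absolute difference between the detected and counted periods divided by the counted period, the figure reported for case2 can be checked by hand:

\[ \frac{|17.5 - 19.2|}{19.2} = \frac{1.7}{19.2} \approx 0.0885 = 8.85\% \]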

We apply the periodical detector to the testing set of 30 MCCE frame sequences. The counted and detected periods are reported in Fig. 7. The proposed periodical feature detector achieves a mean error rate of 8.36% with a standard deviation of 12.84%.

Discussion

In this paper, we explored deep learning and image processing algorithms for detecting human gastric peristalsis and measuring its period. We developed a generic framework for detecting human gastric peristalsis using deep learning. We explored multiple CNN+LSTM models and the Video Swin Transformer for detecting gastric peristalsis using the proposed framework. Also, we developed a CMD for filtering the MCCE frames which are deteriorated by camera movement. The current design of the CMD is based on processing MCCE frames. In the future, we may add additional information, such as magnetic positioning data from the NaviCam, and optimize the design of the CMD for various devices. On our MCCE dataset with more than 100,000 MCCE frames (100,055 specifically) from 30 subjects, we achieved 0.8882 accuracy, 0.8192 F1, and 0.9400 AUC scores for detecting gastric peristalsis. In the future, we will train and test the proposed algorithms on different MCCE systems from different medical centers to evaluate the algorithms’ generalization ability. Moreover, we improved the sensitivity of detecting gastric peristalsis using the visual interpretation provided by the CAM. To measure the period of the gastric peristalsis, we designed a periodical feature detector. The proposed periodical feature detector achieves a mean error rate of 8.36% in our dataset, which outperforms the existing method in our previous research31. We notice that in case26, the periodical feature detector has the highest error rate (67.68%). We investigate case26 in Fig. 8. In case26, the MCCE frame sequence captures a substantial amount of mucus. The mucus has shape features and motions different from those of gastric peristalsis, which may lead to the deteriorated performance of the periodical feature detector. To mitigate the performance drop of the periodical feature detector caused by the presence of mucus, image noise removal algorithms may be adopted. Also, the performance of peristalsis detection can be improved by involving more diverse training data, such as datasets with more debris and mucus present. With a more diverse dataset, the deep learning model can learn to ignore the noise (e.g. debris and mucus) and focus on detecting the gastric peristalsis. Moreover, image-denoising algorithms can be adapted to preprocess the data to reduce the level of noise.

Still image of the MCCE frame sequence case26 (Supplementary Video case26.mp4) in the testing set. The MCCE frames are de-identified. The MCCE frame sequence captures the presence of mucus.

The proposed algorithms have great potential to be integrated into clinical workflows. For example, the algorithms can be integrated into data recorders and computer workstations of MCCE systems (shown in Fig. 1c). During the clinical diagnosis, the collected data can be analyzed to provide real-time gastric motility evaluation. Moreover, the proposed algorithms can run off-line and retrospectively analyze the MCCE data collected from previous clinical diagnoses. However, more diverse training data is needed before adopting the proposed algorithms in real clinical scenarios. In particular, the training data should cover a wide range of stomach environments, e.g. different genders, age ranges, ethnic groups, and previous medical conditions.

Conclusion

As an exploratory study on automatic detection and measurement of human gastric peristalsis, the algorithms developed in this research have great potential to help both clinicians and patients. Using the proposed algorithms, the extensive manual labor in evaluating gastric peristalsis, such as inspection of each MCCE frame and counting the period of peristalsis, can be reduced for clinicians; and patients can benefit from reliable examination results. The proposed algorithms contribute to an efficient and reliable workflow that we envision for MCCE systems. However, the algorithms, especially the periodical feature detector, are developed based on a clean gastric environment without the presence of debris and mucus. Although we improved the sensitivity of the CNN+LSTM model using the explainable visual interpretations provided by CAM, the model performance may deteriorate in the presence of gastric debris and mucus. In the future, we will improve the algorithm design, focusing on the presence of debris and mucus, by acquiring more data. With a more diverse dataset, the deep learning model can learn to be robust to the noise present in the image, including the debris and mucus. Also, we may add image de-noising algorithms to pre-process the MCCE frames. We optimized the parameters (\(\mu\), \(\sigma\), and T in Algorithm 1, \(\hbox {T}_p\) and \(\hbox {T}_c\) in Algorithm 2) for the NaviCam MCCE system. We will keep optimizing the parameters using more data and for MCCE systems from other manufacturers. Moreover, our current dataset was collected from healthy volunteers. We will extend our dataset to involve patients with gastric disease. We aim to further improve the robustness of the proposed algorithms with the extended dataset. In addition, we will enable the proposed algorithms to detect and classify human gastric diseases based on peristalsis.

Data availability

The datasets generated during the current study are not publicly available due to the policy of AnX Robotica but are available from the corresponding author upon reasonable request.

References

Szarka, L. A. & Camilleri, M. Methods for measurement of gastric motility. Am. J. Physiol. Gastrointest. Liver Physiol. 296, G461–G475. https://doi.org/10.1152/ajpgi.90467.2008 (2009).

Christian, K. E., Morris, J. D. & Xie, G. Endoscopy- and monitored anesthesia care-assisted high-resolution impedance manometry improves clinical management. Case Rep. Gastrointest. Med. 2018, 9720243. https://doi.org/10.1155/2018/9720243 (2018).

Kar, P., Jones, K. L., Horowitz, M., Chapman, M. J. & Deane, A. M. Measurement of gastric emptying in the critically ill. Clin. Nutr. 34, 557–564. https://doi.org/10.1016/j.clnu.2014.11.003 (2015).

O’Grady, G. et al. Principles and clinical methods of body surface gastric mapping: Technical review. Neurogastroenterol. Motil. 35, e14556. https://doi.org/10.1111/nmo.14556 (2023).

Lento, P. H. & Primack, S. Advances and utility of diagnostic ultrasound in musculoskeletal medicine. Curr. Rev. Musculoskelet. Med. 1, 24–31. https://doi.org/10.1007/s12178-007-9002-3 (2008).

Saad, R. J. & Hasler, W. L. A technical review and clinical assessment of the wireless motility capsule. Gastroenterol. Hepatol. 7, 795–804 (2011).

Thwaites, P. A. et al. Comparison of gastrointestinal landmarks using the gas-sensing capsule and wireless motility capsule. Aliment. Pharmacol. Therap. 56, 1337–1348. https://doi.org/10.1111/apt.17216 (2022).

Zhang, Y., Zhang, Y. & Huang, X. Development and application of magnetically controlled capsule endoscopy in detecting gastric lesions. Gastroenterol. Res. Pract. 2021, 2716559. https://doi.org/10.1155/2021/2716559 (2021).

Shen, D., Wu, G. & Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 19, 221–248. https://doi.org/10.1146/annurev-bioeng-071516-044442 (2017).

Wu, J., Ye, X., Mou, C. & Dai, W. Fineehr: Refine clinical note representations to improve mortality prediction. In 2023 11th International Symposium on Digital Forensics and Security (ISDFS) 1–6. https://doi.org/10.1109/ISDFS58141.2023.10131726 (2023).

Ye, X., Wu, J., Mou, C. & Dai, W. Medlens: Improve mortality prediction via medical signs selecting and regression. In 2023 IEEE 3rd International Conference on Computer Communication and Artificial Intelligence (CCAI) 169–175. https://doi.org/10.1109/CCAI57533.2023.10201302 (2023).

Xia, J. et al. Use of artificial intelligence for detection of gastric lesions by magnetically controlled capsule endoscopy. Gastrointest. Endosc. 93, 133-139.e4. https://doi.org/10.1016/j.gie.2020.05.027 (2021).

Qin, K. et al. Convolution neural network for the diagnosis of wireless capsule endoscopy: A systematic review and meta-analysis. Surg. Endosc. 36, 16–31. https://doi.org/10.1007/s00464-021-08689-3 (2022).

Nadimi, E. S. et al. Application of deep learning for autonomous detection and localization of colorectal polyps in wireless colon capsule endoscopy. Comput. Electric. Eng. 81, 106531. https://doi.org/10.1016/j.compeleceng.2019.106531 (2020).

LaLonde, R., Kandel, P., Spampinato, C., Wallace, M. B. & Bagci, U. Diagnosing colorectal polyps in the wild with capsule networks. In 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI) 1086–1090. https://doi.org/10.1109/ISBI45749.2020.9098411 (2020).

Alaskar, H., Hussain, A., Al-Aseem, N., Liatsis, P. & Al-Jumeily, D. Application of convolutional neural networks for automated ulcer detection in wireless capsule endoscopy images. Sensors 19, 1265. https://doi.org/10.3390/s19061265 (2019).

Muruganantham, P. & Balakrishnan, S. M. A survey on deep learning models for wireless capsule endoscopy image analysis. Int. J. Cogn. Comput. Eng. 2, 83–92. https://doi.org/10.1016/j.ijcce.2021.04.002 (2021).

Wu, S., Oreifej, O. & Shah, M. Action recognition in videos acquired by a moving camera using motion decomposition of lagrangian particle trajectories. In 2011 International Conference on Computer Vision 1419–1426. https://doi.org/10.1109/ICCV.2011.6126397 (2011).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735 (1997).

van Houdt, G., Mosquera, C. & Nápoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 53, 5929–5955. https://doi.org/10.1007/s10462-020-09838-1 (2020).

Liu, Z. et al. Video swin transformer. In 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 3192–3201. https://doi.org/10.1109/CVPR52688.2022.00320 (2022).

Hashemi, S. R. et al. Asymmetric loss functions and deep densely-connected networks for highly-imbalanced medical image segmentation: Application to multiple sclerosis lesion detection. IEEE Access 7, 1721–1735. https://doi.org/10.1109/ACCESS.2018.2886371 (2019).

Zhou, B., Khosla, A., Lapedriza, À., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2921–2929 (2016).

Sun, K., Shi, H., Zhang, Z. & Huang, Y. Ecs-net: Improving weakly supervised semantic segmentation by using connections between class activation maps. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV) 7263–7272. https://doi.org/10.1109/ICCV48922.2021.00719 (2021).

Lee, S., Lee, J., Lee, J., Park, C. & Yoon, S. Robust tumor localization with pyramid grad-cam. CoRR. http://arxiv.org/abs/1805.11393 (2018).

O’Grady, G., Gharibans, A. A., Du, P. & Huizinga, J. D. The gastric conduction system in health and disease: A translational review. Am. J. Physiol. Gastrointest. Liver Physiol. 321, G527–G542. https://doi.org/10.1152/ajpgi.00065.2021 (2021).

Tan, M. & Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. http://arxiv.org/abs/1905.11946 (2019).

Deng, J. et al. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255. https://doi.org/10.1109/CVPR.2009.5206848 (2009).

TorchVision maintainers and contributors. TorchVision: PyTorch’s Computer Vision Library. https://github.com/pytorch/vision (2016).

MMAction2 Contributors. OpenMMLab’s Next Generation Video Understanding Toolbox and Benchmark. https://github.com/open-mmlab/mmaction2 (2020).

Li, X., Gan, Y., Duan, D. & Yang, X. Detecting and measuring human gastric peristalsis using magnetically controlled capsule endoscope. In 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI) 1–5 (2023).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 770–778. https://doi.org/10.1109/CVPR.2016.90 (2016).

Zhang, X., Zhou, X., Lin, M. & Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition 6848–6856. https://doi.org/10.1109/CVPR.2018.00716 (2018).

Acknowledgements

The authors would like to thank Dr. Guohua Xiao for promoting the collaboration during this research.

Author information

Contributions

X.L.: conceptualization, methodology, formal analysis, validation, software, data curation, and writing. Y.G.: methodology, reviewing, and editing. D.D.: conceptualization, methodology, and reviewing. X.Y.: conceptualization, methodology, data analysis, and editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Information 1.

Supplementary Information 2.

Supplementary Information 3.

Supplementary Information 4.

Supplementary Information 5.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, X., Gan, Y., Duan, D. et al. Toward automatic and reliable evaluation of human gastric motility using magnetically controlled capsule endoscope and deep learning. Sci Rep 15, 25955 (2025). https://doi.org/10.1038/s41598-025-10839-9

DOI: https://doi.org/10.1038/s41598-025-10839-9