Abstract

Precise and timely detection of plant diseases is important for limiting crop losses and maintaining global food security. Much work has been done on detecting plant diseases with deep learning methods. However, deep learning techniques demand large quantities of labeled data to train models for diagnosis and classification. Few-shot learning has emerged as a way to mitigate this drawback. Therefore, this work presents GRCornShot, a novel model for corn disease diagnosis that uses few-shot learning with Prototypical Networks, a metric-learning approach. Metric learning measures the similarity between data points through distances computed in a learned embedding space. By addressing the challenge of limited labeled data in this way, GRCornShot effectively distinguishes healthy corn leaves from diseased ones and classifies the diseases. Furthermore, a Gabor filter layer is incorporated into the ResNet-50 backbone to extract texture features and enhance classification performance. The experiments show the promise of few-shot learning in agronomic applications, providing a robust solution for detecting corn diseases precisely with minimal data. Under 4-way 2-shot, 3-shot, 4-shot, and 5-shot settings, GRCornShot achieves accuracies of 96.19%, 96.54%, 96.90%, and 97.89%, respectively.


Introduction

Agriculture forms the backbone of many economies worldwide, and crop health is paramount for ensuring food security and economic stability1. Corn is a staple crop globally and plays a significant role in food security and the economy because of its extensive cultivation and its use in food, animal feed, and industrial products. However, the productivity and quality of corn are often affected by a host of leaf diseases2, leading to considerable economic losses. Early and precise detection of corn leaf diseases is critical for enhancing yield, minimizing reliance on harmful chemicals, and improving the overall quality of agricultural production3,4,5,6,7,8,9. Traditionally, experts diagnose diseases by visually examining the plants, which is labor-intensive, time-consuming, and vulnerable to human error10. Furthermore, this technique does not scale well, especially across large agricultural areas. Recent advances in AI, particularly in deep learning, offer a scalable alternative for large-scale agricultural monitoring and can help address these problems11,12.

Recent advances in computer-aided diagnosis (CAD) systems, particularly those leveraging deep learning, have significantly improved the accuracy and speed of disease identification in plant leaves. Recent research in corn disease detection has used deep learning models to achieve high accuracy and efficiency. For instance, advanced architectures such as MaxViT with SE blocks and GRN-based MLPs have significantly improved inference speed while handling large datasets13. Several recent works have focused on lightweight and attention-enhanced models to support real-world agricultural applications. Shafik et al. proposed AgarwoodNet, a robust, lightweight model that accurately classifies multiple plant stresses while keeping a model size small enough for edge deployment in the field14. Bera et al. proposed a technique that integrates partial region descriptors and neighborhood awareness to enhance discriminative power, achieving near-perfect accuracy on multiple datasets15. Similarly, Maruthai et al. used a hybrid vision-based GNN to identify pests in coffee crops, capturing interrelationships among visual features to improve accuracy and enable early detection16. Furthermore, RAFA-Net, proposed by Bera et al., integrates region attention and pyramidal pooling to enhance contextual understanding and generalizes across food and agricultural stress datasets, including PlantDoc-2717. Hybrid methods, such as a multi-scale residual-based network (MResNet), have enhanced feature optimization and interpretability18. Approaches using VGGNET, Inception V3, and ResNet50 have demonstrated high precision and recall through deep transfer learning19. Additionally, integrating segmentation techniques such as SLIC with VGG16 and other networks has enabled practical web and mobile applications for real-time diagnostics20. Paul et al. proposed a lightweight CNN-based approach for tomato leaf disease classification, emphasizing deployment on mobile and web platforms for real-time prediction21. Das et al. introduced PCA DeepNet, a hybrid deep learning framework combining PCA and GANs to improve tomato leaf disease detection, achieving high accuracy and precision22. Pacal et al. used state-of-the-art Vision Transformer (ViT) models and ensemble techniques to achieve exceptional accuracy in corn leaf disease detection23. Das et al. also proposed XLTLDisNet, an explainable lightweight model that integrates Grad-CAM and LIME for more transparent tomato leaf disease identification24. Furthermore, Sangar et al. introduced a computationally efficient potato leaf disease detection model using EfficientNet-LITE with KE-SVM optimization, suitable for deployment on edge devices25. While these advances have made significant strides in applying deep learning to corn and other plant leaf disease detection, they typically rely on large labeled datasets, which are often infeasible to obtain in agricultural contexts. This presents a research gap that few-shot learning (FSL) aims to fill, particularly when data are scarce.

AI techniques automate disease detection and classification and thus provide a scalable solution for large-scale agricultural monitoring. Researchers are paying increasing attention to deep learning techniques for image recognition tasks26,27,28. Convolutional Neural Networks (CNNs) are deep neural networks widely used to identify plant diseases from leaf images. However, typical deep learning models need large amounts of labeled data to learn effectively, which is a major limitation in agriculture, where building such datasets requires expert knowledge and significant time. To address this limitation, this work adopts few-shot learning (FSL), a branch of machine learning that enables models to learn from only a few labeled examples29. Specifically, it uses a prototypical network, one of the most popular FSL algorithms. This network is based on metric learning and operates in two stages: meta-training and meta-testing. In the meta-training phase, the model learns an embedding in which each class prototype is computed by averaging the embeddings of that class's support-set images. In the meta-testing phase, the model classifies query images by computing the distance from each query embedding to the prototypes and assigning the label of the most similar one. Figure 1 shows the process of N-way K-shot few-shot learning. Although few-shot learning is well suited to data-scarce settings where conventional methods struggle, its application to corn disease detection remains limited. These developments collectively motivate frameworks like GRCornShot, which uses few-shot learning to handle limited data, visual complexity, and deployment in real farming environments.

The primary objectives of this research paper are:

-

To develop a robust corn disease classifier based on few-shot learning, overcoming the dependence of deep learning methods on large amounts of labeled data.

-

To implement Prototypical Networks, which employ metric learning to calculate the similarity between data points, for effective disease identification with limited labeled data.

-

To improve feature extraction by combining a Gabor filter layer with the ResNet-50 backbone, enhancing the model’s ability to capture texture-based disease patterns and its classification accuracy.

-

To evaluate the model’s performance in 4-way 2-shot, 3-shot, 4-shot, and 5-shot settings for identifying healthy and diseased corn samples with minimal data.

The contributions of this paper are the design of a new few-shot learning architecture specifically for corn disease detection, the construction of a curated, class-balanced corn disease dataset for few-shot learning, and a comparative study of the proposed model against state-of-the-art deep learning approaches. The rest of the paper is organized as follows: Sect. Related work reviews work related to few-shot learning, Sect. Proposed methodology describes the methodology of the GRCornShot model, Sect. Results and discussion presents and discusses the results, and Sect. Conclusion concludes the paper.

Related work

Few-shot learning (FSL) has emerged as a viable approach to plant disease identification in settings where obtaining large labeled datasets is challenging or infeasible. Recent developments in few-shot learning have demonstrated its potential in agriculture30,31. Li et al.32 presented a novel task-driven meta-learning framework for FSL in pest and plant classification, pioneering the application of this paradigm in agriculture. They conducted extensive experiments, establishing benchmarks and analyzing how factors such as the N-way and K-shot settings and domain shift affect classification performance. Rezaei et al.33 addressed the limitations of AI methods that require substantial annotated data and proposed a few-shot pipeline combining pre-training, meta-learning, and fine-tuning (PMF) with a feature attention (FA) module. Their method, employing ResNet50 and Vision Transformers (ViT), consistently outperformed baseline models, achieving an accuracy of 90.12% in complex field conditions. The FA module emphasized discriminative image regions while mitigating background noise, yielding significant improvements in real-time plant disease detection. Their results indicate that combining few-shot learning with pre-training, fine-tuning, and feature attention can substantially improve plant disease detection accuracy even with only five images per class. Tassis et al.34 evaluated metric learning methods for classification and severity estimation of biotic stresses in coffee leaves. Their work highlights the effectiveness of embedding learning in reducing training data requirements while maintaining high accuracy, which is particularly relevant for agricultural applications where large datasets are unavailable.
Li et al.35 proposed a semi-supervised few-shot learning approach that takes advantage of unlabeled data for plant leaf disease recognition. Egusquiza et al.36 trained a Siamese network with triplet loss on a very small dataset, improving plant disease prediction and classification. This research demonstrates how metric learning strengthens FSL models. Chen et al.37 used LFM-CNAPS to improve FSL for plant disease detection; the model is trained on few examples and relies on metric-based feature matching to improve performance.

Table 1 summarizes various applications of few-shot learning in agriculture, illustrating the flexibility and capability of this technique. In livestock farming, for example, a meta-learning-based model recognizes individual cows from only a few training examples with good accuracy38. Porto et al.39 demonstrate the significance of few-shot learning in agriculture for plant disease detection and pest identification. Few-shot semantic segmentation techniques are also effective for cross-domain tasks such as identifying forest coverage across different landscapes40,41,42,43.

Comparisons across similar studies show that few-shot learning models, particularly those based on metric learning such as Prototypical Networks and Siamese Networks, perform well in data-scarce environments. These models are effective at disease classification, feature extraction, and similarity measurement, making them well suited to agricultural applications where large annotated datasets are difficult to acquire. Alongside few-shot learning, techniques such as multi-modal learning, generative models, and semi-supervised learning can also be explored to improve generalization and accuracy. Such improvements could provide more flexible and efficient solutions to the practical problems farmers face. Several studies have applied FSL to plant disease classification to overcome data scarcity while achieving high classification accuracy. Table 2 presents a literature survey of recent work on few-shot learning in plant disease detection.

Boulila et al.44 introduced an FSL model incorporating the Performer-attention mechanism to enhance learning efficiency from limited examples. Their approach demonstrated exceptional performance, with accuracy rates of 92.15%, 98.12%, and 99.12% in 1-shot, 5-shot, and 10-shot scenarios on the PlantVillage dataset. This study underscores the importance of effectively integrating attention mechanisms to address sample scarcity.

To further improve plant disease severity estimation, Pan et al.45 introduced a two-stage approach that uses Faster R-CNN and a Siamese network to detect strawberry leaf scorch. The model performed well in complex scenarios, achieving 94.56% mean average precision (mAP) for patch detection and 96.67% accuracy for disease identification, and it generalized robustly to field-acquired images, suggesting applicability in diverse agricultural environments. Argüeso et al.46 explored Siamese networks and triplet loss on the PlantVillage dataset. By splitting the dataset into source and target domains, they demonstrated that few-shot models can achieve competitive accuracy with significantly less training data. Their experiments showed that FSL can efficiently learn new plant disease types from limited samples, outperforming traditional transfer learning techniques. In hyperspectral imaging, Cai et al.47 applied few-shot class-incremental learning (class-IL) to Chrysanthemum classification. Using a replay training strategy, they achieved 80.13% accuracy with only 30 samples per class, showing that their approach scales better than traditional supervised methods, which required significantly more data. This study highlighted the advantages of combining hyperspectral imaging and FSL for plant disease detection in real-world scenarios. Zhong et al.48 proposed a generative model based on conditional adversarial autoencoders (CAAE) for few-shot disease diagnosis in Citrus aurantium L. Their model effectively synthesized visual features, achieving 53.4% harmonic mean accuracy for zero-shot recognition, a substantial improvement over conditional variational autoencoders (CVAE). These findings indicate the feasibility of using generative models to detect plant diseases with very few labeled samples.

These studies highlight the different methodologies in few-shot learning for plant disease detection. Integrating attention mechanisms, generative models, and class-incremental learning has significantly improved the robustness and efficiency of plant disease classification, paving the way for practical implementations in agriculture. The future research direction lies in further optimizing these models for real-time applications, addressing domain shifts, and improving the generalization capabilities in complex field environments.

Proposed methodology

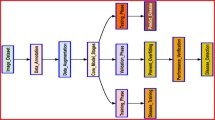

Figure 2 outlines the training and evaluation process of the GRCornShot model. First, images of diseased and healthy corn leaves are collected. The images are then preprocessed, including resizing and normalization, to prepare the data for training. Data augmentation techniques such as rotation, color adjustment, and flipping add variation to the training data, which makes the model more general and reduces the risk of overfitting. This work introduces a robust approach to corn disease detection that integrates Gabor filters with a ResNet backbone network within a Prototypical Few-Shot Learning framework. The proposed method enhances feature extraction, focusing particularly on texture variations in diseased corn leaves, and addresses the challenge of limited labeled data for agricultural disease diagnosis. The Gabor-ResNet backbone extracts features from the input images, which the few-shot learning model then uses. In the prototype computation step of prototypical few-shot learning, a prototype is calculated for each class by extracting features from the support set images and averaging their embeddings. Prototype computation is followed by query classification, in which each query image is assigned to a class according to its distance from the computed prototypes. These steps are described in detail in the following subsections.

Benchmark datasets in plant disease detection

In this study, the dataset is created by merging and enhancing publicly available corn disease datasets from the Roboflow and Kaggle platforms50,51. The two sources have complementary characteristics: the Kaggle datasets primarily contain images captured under controlled conditions with minimal background noise, whereas the Roboflow datasets include images with varied lighting, field backgrounds, and environmental noise, representing more realistic agricultural scenarios. To ensure a balanced class distribution, an initial audit of both sources is conducted to count the images per disease class. This audit revealed a significant class imbalance, with certain disease categories under-represented. To address this, targeted data augmentation, including rotation, horizontal and vertical flipping, and color jittering, is applied preferentially to the minority classes. This augmentation not only increases the size of under-represented classes but also increases intra-class variability, making the model more robust to unseen data during testing. Combining samples from both controlled and field-based datasets and augmenting with class-balancing objectives mitigates potential biases in model learning and reduces the risk of overfitting to majority classes. Finally, a post-augmentation distribution check is performed to ensure that each class contributes proportionally during the meta-training and meta-testing phases of the few-shot learning framework.
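The class-balancing step can be illustrated with a small calculation. The sketch below assumes a simple "oversample every class up to the majority class" target; the real per-class targets for this dataset are those reported in Table 4, and the class names and counts used here are hypothetical placeholders, not the paper's figures.

```python
import math


def augmentation_plan(class_counts: dict[str, int]) -> dict[str, tuple[int, int]]:
    """For each class, return (extra images needed, max variants per original).

    Assumes the target size is that of the largest class; augmented images
    (rotation, flips, color jitter) would be sampled until each class reaches it.
    """
    target = max(class_counts.values())
    plan = {}
    for name, count in class_counts.items():
        needed = target - count
        # Upper bound on augmented variants generated from each original image.
        per_image = math.ceil(needed / count) if needed > 0 else 0
        plan[name] = (needed, per_image)
    return plan


def is_balanced(class_counts: dict[str, int]) -> bool:
    """Post-augmentation check: every class has the same number of images."""
    return len(set(class_counts.values())) == 1
```

For hypothetical counts of 120, 120, 90, and 45 images, `augmentation_plan` reports that the last two classes need 30 and 75 extra images, drawn from at most 1 and 2 augmented variants per original, respectively; `is_balanced` then serves as the post-augmentation check.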

This class-balanced, diversity-enriched dataset creates a real-world, yet manageable testbed for evaluating the GRCornShot few-shot learning model in corn disease detection.

Data collection and preprocessing

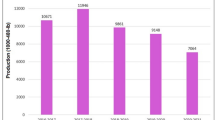

In the proposed work, the corn leaf image dataset is collected from publicly available sources: Roboflow Universe50 and Kaggle51. It includes images of three common corn diseases along with healthy corn leaves, covering a diverse range of visual symptoms. The original dataset consists of 1,886 images in four classes, providing a solid foundation for training and evaluating the proposed model. Table 3 summarizes the dataset, and Table 4 gives the class-wise distribution before and after augmentation, highlighting the steps taken to address the initial class imbalance. The primary reason for combining data from two sources is to improve the model’s generalization. The sources vary in lighting conditions, image resolution, background complexity, and capture angle, which together expose the model to a wider range of data distributions. This variation helps the model learn more general features and improves performance on unseen test images. All images are resized to 224\(\times\)224 pixels to match the input size expected by the ResNet-50 backbone, and random horizontal and vertical flips are each applied with 50% probability. Figure 3 shows sample images from all four classes under both controlled and field conditions. This preprocessing pipeline ensures that the model is trained on a balanced, diverse, and realistic dataset, improving its robustness and generalization for real-world deployment.
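The flip augmentation can be sketched in plain Python on a single-channel image stored as a list of rows. This is an illustrative stand-in that assumes each flip is drawn independently with probability 0.5; in a PyTorch pipeline the same behavior is typically obtained with `torchvision.transforms.Resize((224, 224))`, `RandomHorizontalFlip(p=0.5)`, and `RandomVerticalFlip(p=0.5)`, although the source does not state which library calls were used.

```python
import random

Image = list[list[float]]  # one channel, stored row by row (illustrative format)


def random_flip(img: Image, rng: random.Random, p: float = 0.5) -> Image:
    """Independently apply a horizontal and a vertical flip, each with probability p."""
    out = [row[:] for row in img]  # copy so the input image is left unchanged
    if rng.random() < p:
        out = [row[::-1] for row in out]  # horizontal flip: mirror each row
    if rng.random() < p:
        out = out[::-1]  # vertical flip: reverse the order of the rows
    return out
```

With `p=1.0` both flips always fire, which amounts to a 180-degree rotation: `random_flip([[1, 2], [3, 4]], random.Random(0), p=1.0)` returns `[[4, 3], [2, 1]]`.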

Gabor-ResNet architecture

The backbone of the proposed model is ResNet, pre-trained on the ImageNet dataset and known for deep residual learning, which mitigates the vanishing gradient problem in deep neural networks52. The initial layers of ResNet-50 mainly extract general low-level features such as edges and shapes. However, these features are learned during training and depend on the quality of the training data. Gabor filters53 provide handcrafted low-level features that are well suited to edge and texture detection, complementing the network’s ability to learn high-level features in deeper layers. Gabor filters resemble the responses of cells in the human visual cortex, which is especially well adapted to edge and texture detection. Since plant disease detection often requires identifying patterns that humans can distinguish visually, Gabor filters align well with this requirement. Instead of relying on ResNet-50 to learn all relevant features from scratch, the Gabor filters pre-extract key patterns (e.g., ridges, spots, striations). This lightens part of the workload of the early ResNet-50 layers, allowing the network to concentrate on mid-level and high-level abstractions. Therefore, a Gabor convolutional layer is placed before the standard convolutional layers of ResNet to enhance the model’s ability to capture spatial, frequency, and orientation-specific features. The Gabor filter applied in this layer is defined in Eq. 1:

\[
g(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right)\cos\left(2\pi\frac{x'}{\lambda} + \psi\right), \qquad x' = x\cos\theta + y\sin\theta, \quad y' = -x\sin\theta + y\cos\theta \tag{1}
\]

where:

\((x, y)\) are the pixel coordinates relative to the filter center and \((x', y')\) are the same coordinates rotated by \(\theta\); \(\lambda\) is the wavelength of the sinusoidal component, which lets the filter detect features of different sizes; \(\theta\) is the orientation angle, which makes the filter respond to patterns in different directions; \(\psi\) is the phase offset, which controls the symmetry of the detected features; \(\sigma\) is the standard deviation of the Gaussian envelope, which tunes the focus on local versus global features; and \(\gamma\) is the spatial aspect ratio, which controls the ellipticity of the Gabor function and distinguishes elongated (streak) features from circular (spot) features.
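To make the Gabor filter concrete, the sketch below samples the standard real-valued Gabor function (a Gaussian envelope multiplied by a cosine carrier along the axis rotated by \(\theta\)) on a square grid centered at the origin. It illustrates the formula only; the kernel size and parameter values in the usage note are arbitrary choices, not those used in GRCornShot. OpenCV's `cv2.getGaborKernel` implements the same functional form and can serve as a cross-check.

```python
import math


def gabor_kernel(size: int, lam: float, theta: float, psi: float,
                 sigma: float, gamma: float) -> list[list[float]]:
    """Sample the Gabor function on a size x size grid; rows index y, columns index x."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            # Gaussian envelope: sigma sets its width, gamma its ellipticity.
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            # Sinusoidal carrier: lam is the wavelength, psi the phase offset.
            row.append(envelope * math.cos(2 * math.pi * xr / lam + psi))
        kernel.append(row)
    return kernel
```

For example, `gabor_kernel(7, 4.0, 0.0, 0.0, 2.0, 0.5)` equals 1.0 at its center, and rotating \(\theta\) by \(\pi/2\) transposes the kernel, which is how a bank of such filters covers several orientations.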

In the proposed model, the Gabor layer consists of 32 channels, each parameterized with a unique combination of the parameters in Eq. 1.

This diversity allows the network to capture the various texture patterns and orientations present in corn disease symptoms. The Gabor layer output is then fed into the ResNet-50 architecture, whose standard convolutional layers extract deeper hierarchical features. Placing the Gabor layer ahead of the ResNet blocks ensures that low-level texture features are preserved and further refined by deeper layers.
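The 32-channel Gabor layer can be pictured as a bank of fixed kernels, each correlated with the input to produce one feature map. The sketch below assumes a hypothetical grid of 8 orientations × 4 wavelengths = 32 filters with fixed \(\sigma = 2\) and \(\gamma = 0.5\), a single grayscale input, and "valid" correlation without padding; the actual parameter values, padding, and the way the 32 maps are passed to ResNet-50 are not specified here.

```python
import math
from itertools import product


def gabor_kernel(size, lam, theta, psi=0.0, sigma=2.0, gamma=0.5):
    """Real Gabor kernel; rows index y, columns index x."""
    half = size // 2
    c, s = math.cos(theta), math.sin(theta)
    return [[math.exp(-((x * c + y * s) ** 2 + (gamma * (-x * s + y * c)) ** 2)
                      / (2 * sigma ** 2))
             * math.cos(2 * math.pi * (x * c + y * s) / lam + psi)
             for x in range(-half, half + 1)]
            for y in range(-half, half + 1)]


def correlate_valid(img, k):
    """2-D cross-correlation without padding (what CNN 'convolutions' compute)."""
    kh, kw = len(k), len(k[0])
    return [[sum(img[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(len(img[0]) - kw + 1)]
            for i in range(len(img) - kh + 1)]


# Hypothetical parameter grid: 8 orientations x 4 wavelengths = 32 channels.
THETAS = [t * math.pi / 8 for t in range(8)]
LAMBDAS = [3.0, 5.0, 7.0, 9.0]
BANK = [gabor_kernel(7, lam, theta) for theta, lam in product(THETAS, LAMBDAS)]


def gabor_layer(img):
    """Return one feature map per filter in the bank (32 maps)."""
    return [correlate_valid(img, k) for k in BANK]


def energy(fmap):
    """Sum of squared responses: how strongly a filter fires on the image."""
    return sum(v * v for row in fmap for v in row)
```

On a 20 × 20 image of vertical stripes with period 5, `gabor_layer` returns 32 maps of 14 × 14, and the \(\theta = 0, \lambda = 5\) channel (index 1) carries far more energy than the \(\theta = \pi/2, \lambda = 5\) channel (index 17). This orientation and scale selectivity is what the bank contributes before the learned ResNet layers.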

Few-shot learning and prototypical networks

Few-shot learning is a branch of machine learning for training models with only a few labeled examples while still achieving high prediction accuracy, unlike traditional deep learning methods, which often require large amounts of labeled data. Few-shot learning leverages prior knowledge gained from related tasks to adapt to new tasks with minimal data. This is helpful in applications such as medical diagnosis, wildlife species identification, and plant disease detection, especially when acquiring large datasets is not feasible. Few-shot learning is often built on meta-learning, or “learning to learn”: the model is trained on a series of tasks instead of individual samples, enabling it to generalize to new tasks from only a few examples. Benchmarks based on the Omniglot and ImageNet datasets drew researchers’ attention to few-shot learning. Each few-shot task typically involves two sets of data: a support set for learning and a query set for evaluation.

Few-shot learning is typically framed as N-way K-shot classification, where N is the number of classes in each task and K is the number of labeled examples per class available for learning. For example, a 5-way 1-shot task includes five classes with one labeled example each, whereas a 4-way 5-shot task, as used in this work, provides five labeled examples for each of four classes. In 2017, Snell et al. proposed Prototypical Networks, a widely used architecture in few-shot learning. The core idea is to compute the mean embedding of all support examples of a class, known as the class prototype. The prototype serves as a reference point: distances between a query embedding and each prototype determine the class to which the query belongs. Prototypical Networks use a distance function, such as Euclidean distance or cosine similarity, to compare query embeddings with class prototypes, and each query is assigned to the class of its closest prototype in the embedding space.

The proposed work uses 4-way 2-shot, 3-shot, 4-shot, and 5-shot scenarios, which represent real-world situations where annotated data are limited. Training on mini-tasks (episodes) that resemble the target few-shot tasks encourages the model to learn a robust feature representation. Each episode defines two sets: a support set S containing n examples for each of m classes, and a query set Q containing separate examples from the same m classes, which is used to evaluate the model (in the N-way K-shot notation above, m = N and n = K). This episodic approach follows meta-learning principles: the model learns to generalize to new tasks by adapting quickly with minimal data, and task-level training enables it to handle various few-shot settings.

In an m-class n-sample task, the data are divided into a support set S and a query set Q, expressed as Eqs. (9) and (10), respectively:

\[
S = \{(\textbf{x}_i, c_i)\}_{i=1}^{m \times n} \tag{9}
\]

\[
Q = \{(\textbf{x}_j, c_j)\}_{j=1}^{m \times r} \tag{10}
\]

where \(\textbf{x}\) is an input data point, c is its class label, n is the number of support samples per class, and r is the number of query samples per class.
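Episode construction following Eqs. (9) and (10) can be sketched as below: m classes are drawn, and for each class n + r distinct samples are split into support and query parts so the two sets never overlap. This is an illustrative helper, not the authors' sampler; the function name and the assumption that samples are drawn without replacement within an episode are ours.

```python
import random
from collections import defaultdict


def sample_episode(dataset, m_way, n_shot, r_query, rng):
    """Draw one m-way n-shot episode from a list of (x, c) pairs.

    Returns (S, Q): S has m_way * n_shot pairs, Q has m_way * r_query pairs,
    and no sample appears in both.
    """
    by_class = defaultdict(list)
    for x, c in dataset:
        by_class[c].append(x)
    # Keep only classes with enough samples for both the support and query parts.
    eligible = sorted(c for c, xs in by_class.items() if len(xs) >= n_shot + r_query)
    if len(eligible) < m_way:
        raise ValueError("not enough classes with n_shot + r_query samples")
    support, query = [], []
    for c in rng.sample(eligible, m_way):
        picked = rng.sample(by_class[c], n_shot + r_query)  # without replacement
        support += [(x, c) for x in picked[:n_shot]]
        query += [(x, c) for x in picked[n_shot:]]
    return support, query
```

For a 4-way 5-shot episode with 3 query images per class, `sample_episode(data, 4, 5, 3, random.Random(0))` yields 20 support and 12 query pairs. The query count r = 3 is an arbitrary choice for illustration; the source does not state it.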

As depicted in Fig. 3, feature embeddings are generated from the input samples of the support set. The similarity between a query example and each class, represented by a prototype computed from the support set embeddings, is then measured with a distance metric such as Euclidean distance or cosine similarity. This distance computation determines how closely the query example matches each class and therefore its final classification.

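For each class m, the prototype \(\textbf{c}_m\) is derived by averaging the feature representations of all support instances \(\textbf{x}\) of that class in the support set S:

\[
\textbf{c}_m = \frac{1}{|S_m|} \sum_{(\textbf{x}_i, c_i) \in S_m} f_\phi(\textbf{x}_i)
\]

where \(S_m \subseteq S\) contains the support examples labeled m (so \(|S_m| = n\)) and \(f_\phi\) denotes the embedding function, with parameters \(\phi\), that maps an input example to the feature space.

Once the class prototypes are established, the model calculates the Euclidean distance between each query example \(\textbf{x}_q\) and the prototypes \(\textbf{c}_m\):

\[
d(\textbf{x}_q, \textbf{c}_m) = \left\lVert f_\phi(\textbf{x}_q) - \textbf{c}_m \right\rVert_2
\]

Each query example is assigned to the class whose prototype has the minimum distance to the query embedding:

\[
\hat{y}_q = \arg\min_{m} \, d(\textbf{x}_q, \textbf{c}_m)
\]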
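The prototype, distance, and arg-min steps reduce to a few lines once the support and query embeddings are available. In the sketch below the embeddings are hypothetical 2-D vectors standing in for backbone outputs; in GRCornShot they would come from the Gabor-ResNet feature extractor.

```python
import math


def prototypes(support):
    """Mean embedding per class from (embedding, label) pairs."""
    sums, counts = {}, {}
    for emb, label in support:
        acc = sums.setdefault(label, [0.0] * len(emb))
        for i, value in enumerate(emb):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def euclidean(a, b):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(query_emb, protos):
    """Label of the nearest prototype (arg-min over distances)."""
    return min(protos, key=lambda label: euclidean(query_emb, protos[label]))
```

With support embeddings `[0, 0]` and `[2, 0]` for class "a" and `[10, 10]` and `[12, 10]` for class "b", the prototypes are `[1.0, 0.0]` and `[11.0, 10.0]`, and a query at `[2, 1]` is assigned to "a".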

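To obtain a probability distribution over classes, the negated distances are passed through a softmax, giving the likelihood that the query sample \(\textbf{x}_q\) belongs to each class m:

\[
p(y = m \mid \textbf{x}_q) = \frac{\exp\left(-d(\textbf{x}_q, \textbf{c}_m)\right)}{\sum_{m'} \exp\left(-d(\textbf{x}_q, \textbf{c}_{m'})\right)}
\]

For training, the cross-entropy loss is minimized over the few-shot classification tasks (episodes):

\[
\mathcal{L} = -\frac{1}{|Q|} \sum_{(\textbf{x}_q, y_q) \in Q} \log p(y = y_q \mid \textbf{x}_q)
\]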
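The softmax over negated distances and the cross-entropy loss described above can be written directly as code. The sketch assumes the query-to-prototype distances have already been computed (one row per query, one column per class) and uses the log-sum-exp trick for numerical stability; in PyTorch the same quantity is commonly obtained by passing the negated distances as logits to `torch.nn.functional.cross_entropy`.

```python
import math


def log_probs_from_distances(distances):
    """log p(y = m | x_q) = -d_m - log(sum_m' exp(-d_m')), computed stably."""
    logits = [-d for d in distances]
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
    return [z - log_norm for z in logits]


def episode_loss(distance_rows, targets):
    """Mean cross-entropy over the query set Q."""
    total = 0.0
    for row, target in zip(distance_rows, targets):
        total -= log_probs_from_distances(row)[target]
    return total / len(targets)
```

With equal distances to all four prototypes the model is maximally uncertain and the loss equals \(\log 4 \approx 1.386\); moving the query closer to its true prototype lowers the loss.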

This loss encourages the model to assign higher probability to the correct class, thereby minimizing classification error. The network parameters are optimized with a stochastic gradient-based method (Adam; see Experimental setup), and the model is evaluated on its ability to generalize across various few-shot settings. Algorithm 1 presents the training and evaluation process of the Prototypical Network with the Gabor filter-integrated ResNet-50 architecture for corn disease detection and classification.

In short, the GRCornShot model is designed specifically to overcome the difficulties of identifying corn diseases when data are scarce. Combining Gabor filters with ResNet-50 improves texture feature extraction for identifying disease patterns, while Prototypical Networks enable efficient few-shot classification by learning robust class prototypes from few examples. Datasets curated from Kaggle and Roboflow, with tailored data augmentation and preprocessing, help the model generalize to real-world settings. Through episodic training under the meta-learning paradigm, GRCornShot handles few-shot tasks effectively and provides a scalable, versatile, and efficient solution for early diagnosis of corn diseases in agricultural applications. The following section presents the experimental configuration, evaluation measures, results, and comparative analysis demonstrating the effectiveness of the proposed solution.

Results and discussion

Experimental setup

The Prototypical Network is implemented in PyTorch and trained with the Adam optimizer at a learning rate of 0.001 and a weight decay of 0.0005, using cross-entropy as the loss function. Training runs for 30 epochs with a batch size of 32. Experiments are carried out on a workstation with an NVIDIA RTX 3080 GPU, 128 GB of RAM, and an Intel i7 processor. This section presents and analyzes the visualizations and metrics obtained from the proposed GRCornShot model trained with Prototypical Networks for corn disease detection. The t-SNE plots, confusion matrices, ROC curves, and Precision-Recall (PRC) curves together illustrate the model’s performance across the few-shot learning scenarios.
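The optimizer settings above (learning rate 0.001, weight decay 0.0005) correspond to `torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=5e-4)` in PyTorch, where `model` stands for the network; the source does not show the authors' code, so this is an assumption. In that optimizer, weight decay is an L2 term added to the gradient before the adaptive update, unlike the decoupled decay of `AdamW`. The scalar sketch below reproduces one such update step with PyTorch's default betas and epsilon to show how the two hyperparameters interact.

```python
import math


def adam_step(param, grad, state, lr=1e-3, weight_decay=5e-4,
              betas=(0.9, 0.999), eps=1e-8):
    """One Adam update on a scalar parameter with L2 weight decay folded into
    the gradient (the coupled form used by torch.optim.Adam's weight_decay)."""
    b1, b2 = betas
    g = grad + weight_decay * param  # L2 penalty added to the raw gradient
    state["t"] = state.get("t", 0) + 1
    state["m"] = b1 * state.get("m", 0.0) + (1 - b1) * g      # first moment
    state["v"] = b2 * state.get("v", 0.0) + (1 - b2) * g * g  # second moment
    m_hat = state["m"] / (1 - b1 ** state["t"])  # bias corrections
    v_hat = state["v"] / (1 - b2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)
```

On the first step the bias-corrected ratio is close to ±1, so the parameter moves by roughly the learning rate: `adam_step(1.0, 0.5, {})` returns approximately 0.999. Because the decay term is rescaled by the adaptive denominator, a coupled weight decay of 0.0005 acts more weakly than the same value in `AdamW`.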

Results and validation

Figure 4 shows t-SNE (t-distributed Stochastic Neighbor Embedding) visualizations, which project high-dimensional embeddings into two dimensions. Each panel corresponds to one of the four few-shot configurations: Fig. 4a 4-way 2-shot, Fig. 4b 4-way 3-shot, Fig. 4c 4-way 4-shot, and Fig. 4d 4-way 5-shot. The t-SNE plots show that the model clusters data points of the same class together while keeping different classes well separated in the embedding space. As the number of shots increases from 2 to 5, the clusters become more distinct, indicating that the model learns and represents the underlying structure of the data better with more examples per class. This trend is visible in Fig. 4: from 2 to 5 shots, all four classes become separated, including grey leaf spot and blight (green and blue). This supports the effectiveness of the Prototypical Network in generalizing from a few examples and shows the model’s discriminative ability improving as the number of shots increases.

Figure 5 presents the confusion matrices for the same four few-shot scenarios: Fig. 5a 4-way 2-shot, Fig. 5b 4-way 3-shot, Fig. 5c 4-way 4-shot, and Fig. 5d 4-way 5-shot. Accuracy improves from the 2-shot to the 5-shot scenario, with fewer misclassifications in the 5-shot configuration. The improvement is most notable for the classes the model found most difficult in the low-shot settings, demonstrating that providing more support examples per class improves the model’s ability to identify diseases correctly.

Figure 6 displays the Receiver Operating Characteristic (ROC) curves for the different few-shot settings. Figs. 6a to 6d correspond to the 4-way 2-shot, 3-shot, 4-shot, and 5-shot settings, respectively. The AUC (Area Under the Curve) summarizes the ROC curve as a single scalar between 0 and 1, and values closer to 1 indicate better performance. As the ROC curves show, the AUC increases with the number of shots, and the proposed model achieves its best classification in the 5-shot setting.
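The AUC described above can be computed without tracing the curve point by point by using its probabilistic interpretation. The ROC AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. For a multi-class problem such as this 4-way task, a common convention is to compute a one-vs-rest AUC per class and average the results. That averaging is an assumption here, since the paper does not state which one it uses.

```python
from typing import List, Sequence


def binary_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:  # O(P * N) pairwise comparison; fine for small evaluation sets
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(prob_rows: Sequence[Sequence[float]], y_true: Sequence[int],
                  n_classes: int) -> float:
    """Average of one-vs-rest AUCs, scoring class c by its predicted probability."""
    aucs: List[float] = []
    for c in range(n_classes):
        scores = [row[c] for row in prob_rows]
        labels = [1 if y == c else 0 for y in y_true]
        aucs.append(binary_auc(scores, labels))
    return sum(aucs) / n_classes
```

For example, the scores `[0.9, 0.8, 0.3, 0.1]` with labels `[1, 0, 1, 0]` rank three of the four positive-negative pairs correctly, which gives an AUC of 0.75.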

Finally, Fig. 7 illustrates the Precision-Recall curves (PRCs) for the four few-shot learning scenarios. Figs. 7a to 7d correspond to the 4-way 2-shot, 3-shot, 4-shot, and 5-shot settings, respectively. PRCs plot precision (positive predictive value) against recall (sensitivity) and are particularly informative when data are imbalanced. The area under the PRC (AUC-PR) gives a single metric for evaluating the model. As with the ROC curves, precision and recall improve as the number of shots increases. The PRC for the 5-shot scenario shows both high precision and high recall, meaning the model identifies most true positives while producing few false positives. Taken together, Figs. 7a to 7d give a clear picture of how well the GRCornShot model classifies corn diseases with few-shot learning. The results show that performance improves with the number of shots across all measures considered: clustering quality, classification accuracy, and the ROC and PRC metrics. These results indicate that the Prototypical Network, applied within a few-shot learning framework, is robust to the scarcity of labeled data in corn disease detection.
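Average precision is often used to estimate the area under the PRC. Samples are ranked by score, and the precision measured at the rank of each true positive is averaged. The sketch below implements this step-wise estimator for one class, treated as the positive class in a one-vs-rest fashion. It assumes 0/1 labels and breaks score ties arbitrarily by sort order, which is a simplifying assumption. Other AUC-PR estimators, such as trapezoidal interpolation, can give slightly different values.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Step-wise AUC-PR estimate: mean precision at the rank of each positive."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(labels)  # labels are assumed to be 0 (negative) or 1 (positive)
    if n_pos == 0:
        raise ValueError("need at least one positive sample")
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this recall step
    return precision_sum / n_pos
```

With scores `[0.9, 0.8, 0.3, 0.1]` and labels `[1, 0, 1, 0]`, the positives sit at ranks 1 and 3. Their precisions are 1 and 2/3, so the average precision is 5/6.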

The performance of the Prototypical Network with the ResNet-50 backbone for corn disease detection is compared across the different few-shot learning scenarios below.

- 2-Shot Learning Scenario: The model performs strongly in the 2-shot scenario and reaches a validation accuracy of 98.20% in its final epoch. Its average accuracy is 96.19%, with a precision of 96.35%, recall of 96.60%, F1-score of 96.65%, Cohen’s Kappa of 0.943, and Matthews Correlation Coefficient (MCC) of 0.939. This indicates that the model classifies diseases accurately with only two examples per class.

- 3-Shot Learning Scenario: In the 3-shot scenario, the model performs strongly and reaches a validation accuracy of 98.60% in the final epochs. Its average accuracy is 96.54%, and its precision, recall, and F1-score are 97.64%, 97.77%, and 97.69%, respectively. The model’s Cohen’s Kappa reaches 0.954 and its MCC is 0.951.

- 4-Shot Learning Scenario: The model is more robust in the 4-shot case, with validation accuracy ranging from 98.95% to 99.05% over the final epochs. It reaches an accuracy of 96.90%, precision of 96.92%, recall of 96.95%, and F1-score of 96.98%, together with a Cohen’s Kappa of 0.960 and an MCC of 0.957.

- 5-Shot Learning Scenario: The model performs best in the 5-shot scenario and reaches a validation accuracy of 99.30% in the final epoch. It achieves an accuracy of 97.89%, precision of 97.90%, recall of 98.61%, and F1-score of 97.95%, along with the highest Cohen’s Kappa (0.974) and MCC (0.971). These results support the conclusion that the model generalizes well when five examples per class are available, with improvements in all the classification metrics considered.
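The Cohen’s Kappa and MCC values reported above can both be computed from a multi-class confusion matrix. The sketch below assumes that rows index true classes and columns index predicted classes. Kappa compares the observed agreement with the agreement expected by chance from the row and column totals. MCC is computed with the standard multi-class generalization, which reduces to the familiar binary formula when there are two classes. The example matrices are hypothetical and are not the paper's data.

```python
import math
from typing import List

Matrix = List[List[int]]


def cohens_kappa(cm: Matrix) -> float:
    """(observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[r][c] for r in range(n)) for c in range(n)]
    p_observed = sum(cm[i][i] for i in range(n)) / total
    p_chance = sum(row_sums[i] * col_sums[i] for i in range(n)) / total ** 2
    if p_chance == 1.0:
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


def multiclass_mcc(cm: Matrix) -> float:
    """Multi-class Matthews Correlation Coefficient from a confusion matrix."""
    n = len(cm)
    s = sum(sum(row) for row in cm)                          # total samples
    c = sum(cm[i][i] for i in range(n))                      # correctly classified
    t = [sum(row) for row in cm]                             # true-class totals
    p = [sum(cm[r][k] for r in range(n)) for k in range(n)]  # predicted totals
    numerator = c * s - sum(pk * tk for pk, tk in zip(p, t))
    denominator = math.sqrt((s * s - sum(pk * pk for pk in p))
                            * (s * s - sum(tk * tk for tk in t)))
    return numerator / denominator if denominator else 0.0
```

For the 4-class matrix `[[1, 0, 1, 0], [0, 2, 0, 0], [1, 0, 1, 0], [0, 0, 0, 2]]`, both functions return 2/3. For a 2-class matrix, `multiclass_mcc` matches the binary formula (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)).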

Table 5 compares all four scenarios and shows that performance is similar across them, with small margins between the evaluated metrics. The 5-shot scenario obtains the highest overall metrics because the model benefits from having more examples per class. This shows the effectiveness of combining Prototypical Network few-shot learning with a Gabor filter in ResNet-50. The proposed GRCornShot model may therefore be a good alternative approach for corn disease detection, especially when collecting many labeled samples is difficult. Its strong performance in few-shot scenarios suggests that it is applicable to real-world settings, where a scarcity of labeled data is a common problem.

These properties make GRCornShot a valuable tool for agricultural disease management. Table 6 compares the GRCornShot model against other state-of-the-art few-shot metric learning techniques. GRCornShot achieves higher accuracy than the compared techniques, which supports its reliability and applicability in real-world agricultural fields. The table also shows that the model reaches high accuracy with just a few labeled samples, making the approach suitable and scalable for early corn disease diagnosis.

High classification accuracy is important, but computational cost and training duration are equally significant. This is especially true when disease detection models are deployed on low-resource platforms, such as the mobile phones or edge computing devices frequently used in agricultural fields. To address this aspect, GRCornShot is compared with three widely used baseline deep learning models: VGG16, ResNet-50, and MobileNetV2. All models are trained and evaluated under identical experimental conditions, using the same training dataset and the same hardware configuration. GRCornShot has significantly lower computational complexity than VGG16 and ResNet-50 while maintaining comparable or superior accuracy. Its parameter count (~23 million) is much closer to that of lightweight models such as MobileNetV2, yet it outperforms MobileNetV2 in classification accuracy. This gives an effective balance between accuracy and computational efficiency. In addition, the measured inference time per image for GRCornShot is 18 ms, which is acceptable for near real-time deployment on edge devices or in mobile applications. The integration of Gabor filters within ResNet and the use of a Prototypical few-shot learning framework significantly reduce the number of training epochs needed. GRCornShot typically converges within 25–30 epochs, whereas deeper models such as VGG16 require 50 or more epochs to reach comparable validation accuracy. This comparative analysis shows that GRCornShot is well suited for deployment in resource-limited settings, providing a good trade-off between speed, memory efficiency, and predictive performance. Table 7 summarizes the detailed comparison of training time, parameter count, and computational complexity (GFLOPs) across all models considered.
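Parameter counts and GFLOPs such as those in Table 7 are obtained by summing per-layer costs. The sketch below illustrates how such figures are typically counted. It does not reproduce the paper's measurement procedure or Table 7. It counts the parameters and multiply-accumulate operations (MACs) of a single 2-D convolution, using the standard 7×7, stride-2 stem convolution of ResNet-50 as the example. Profiling tools differ in convention: some report MACs as FLOPs, while others count one MAC as two FLOPs.

```python
def conv2d_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_params(c_in: int, c_out: int, kernel: int, bias: bool = True) -> int:
    """Weights (kernel x kernel x c_in per filter, c_out filters) plus optional biases."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def conv2d_macs(c_in: int, c_out: int, kernel: int, h_out: int, w_out: int) -> int:
    """One multiply-accumulate per weight per output position (bias ignored)."""
    return kernel * kernel * c_in * c_out * h_out * w_out


# ResNet-50 stem: 7x7 conv, 3 -> 64 channels, stride 2, padding 3, no bias, 224x224 input.
out = conv2d_output_size(224, kernel=7, stride=2, padding=3)      # 112
params = conv2d_params(3, 64, kernel=7, bias=False)               # 9,408
gmacs = conv2d_macs(3, 64, kernel=7, h_out=out, w_out=out) / 1e9  # about 0.118
```

Model-level totals come from summing terms like these over every layer, including the batch-normalization and fully connected layers.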

Although the GRCornShot model performs strongly on carefully curated and augmented datasets, real-world field conditions present additional challenges that may affect its generalization and robustness. These include variations in illumination, cluttered and noisy backgrounds, varying image resolutions, different disease stages, incomplete or partial symptoms, and environmental disturbances such as rain, dust, and occlusion. To evaluate how well the model handles such variations, we conducted external validation on a set of 80 field images. These images were sourced from publicly accessible platforms and captured during limited field visits. They cover a mix of real-world capture conditions, including different times of day, natural lighting, and complex backgrounds such as soil, weeds, and neighboring crops. The external dataset covers the same four classes targeted in this study (corn blight, corn common rust, gray leaf spot, and healthy corn leaves). Its images, however, were captured in uncontrolled field environments, with natural symptom variability and diverse camera angles and devices. On this external field dataset, GRCornShot achieved an overall accuracy of 92.75%, precision of 92.90%, recall of 92.60%, F1-score of 92.70%, Cohen’s Kappa of 0.89, and Matthews Correlation Coefficient (MCC) of 0.88. These results indicate that the model generalizes across different data acquisition conditions and symptom presentations. To further improve the model’s real-world deployment potential, we propose the following future enhancements:

- Fine-tuning the model with larger and more diverse field datasets collected from different geographical regions, climatic conditions, and cropping seasons.

- Employing domain adaptation techniques to minimize domain shift between curated datasets and field data.

- Implementing real-time, on-device augmentation techniques during inference to handle unpredictable environmental variations.

- Developing lightweight, mobile-friendly versions of the model for on-field, farmer-accessible diagnostics.

Future research will focus on expanding field validation across multiple geographic locations, diverse environmental conditions, and different seasonal phases, ensuring that the model remains robust and scalable for precision agriculture applications.

Discussion

Few-shot learning has many advantages over conventional deep learning methods, particularly in sectors such as agriculture, where large labeled datasets are difficult to obtain. Its primary advantage is that it greatly reduces reliance on extensive annotated datasets, which makes it practical in settings with limited data. It also allows faster model adaptation to new classes and supports generalization to unseen classes. In addition, few-shot learning is beneficial when data labeling is costly, time-consuming, or requires domain expertise. However, few-shot learning also has drawbacks. Its performance can still fall short of conventional supervised deep learning models when large amounts of data are available. The method is also sensitive to the quality and representativeness of the few examples given, and low-quality examples can lead to suboptimal performance. Further, designing effective episodic training strategies for meta-learning is tricky and must be handled carefully. Few-shot learning models may also struggle with high intra-class variability when few examples are available, which reduces classification accuracy in highly variable real-world scenarios.
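In episodic training, each training step is built from a small task. The sketch below samples one N-way K-shot episode: it picks N classes, then draws K support images and a few disjoint query images for each class. The dataset dictionary, the file names, and the number of query images per class are hypothetical, because the paper does not specify its exact sampler.

```python
import random
from typing import Dict, List, Sequence, Tuple

Example = Tuple[str, str]  # (image identifier, class label)


def sample_episode(
    data: Dict[str, Sequence[str]],
    n_way: int,
    k_shot: int,
    n_query: int,
    rng: random.Random,
) -> Tuple[List[Example], List[Example]]:
    """Draw one N-way K-shot episode with disjoint support and query sets."""
    classes = rng.sample(sorted(data), n_way)
    support: List[Example] = []
    query: List[Example] = []
    for label in classes:
        # random.sample raises ValueError if a class has fewer than k_shot + n_query images.
        images = rng.sample(list(data[label]), k_shot + n_query)
        support += [(img, label) for img in images[:k_shot]]
        query += [(img, label) for img in images[k_shot:]]
    return support, query


# Hypothetical dataset: 20 image identifiers for each of the four classes.
labels = ["blight", "common_rust", "gray_leaf_spot", "healthy"]
data = {c: [f"{c}_{i:03d}.jpg" for i in range(20)] for c in labels}
support, query = sample_episode(data, n_way=4, k_shot=5, n_query=3, rng=random.Random(0))
```

The support and query images are drawn together and then split, so no image appears in both sets within an episode. Passing an explicit `random.Random` instance makes episodes reproducible for a given seed.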

Although the proposed GRCornShot framework performs well, several limitations should be noted. First, the model is trained and tested on curated datasets. Factors such as changing lighting conditions, cluttered backgrounds, leaf occlusions, and unseen disease variations may affect its generalization when it is deployed in practical agricultural fields. Second, the framework currently takes static RGB images as input. It does not leverage temporal or multispectral data sources, such as thermal imagery or NDVI, that could improve the precision of disease detection. Third, the Prototypical Network assumes that novel disease classes are related to those seen during meta-training. Detecting truly new diseases with no prior representation would therefore require additional fine-tuning or retraining. In addition, real-world deployment on edge or mobile devices involves constraints such as memory usage, processing latency, and offline adaptability requirements, which have not yet been thoroughly investigated. Finally, the Gabor filter enhances texture feature extraction by capturing edge and frequency information, but it can also amplify noise in complex backgrounds. Moreover, Gabor parameters such as frequency and orientation, when tuned manually or heuristically, may fail to generalize across species or varying conditions, which reduces model robustness.
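The sketch below makes the frequency and orientation parameters concrete by building the real part of the standard 2-D Gabor kernel. This kernel is a Gaussian envelope multiplied by a cosine carrier along a rotated axis: g(x, y) = exp(-(x'² + γ²y'²) / (2σ²)) · cos(2πx'/λ + ψ), with x' = x cos θ + y sin θ and y' = -x sin θ + y cos θ. Here θ sets the orientation and the wavelength λ sets the frequency. The kernel size and the σ, λ, and γ values are illustrative assumptions, not the settings used in GRCornShot.

```python
import math
from typing import List

Kernel = List[List[float]]


def gabor_kernel(size: int, sigma: float, theta: float, lambd: float,
                 gamma: float = 0.5, psi: float = 0.0) -> Kernel:
    """Real part of a 2-D Gabor filter sampled on a size x size grid centered at 0."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    half = size // 2
    kernel: Kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the cosine carrier runs along orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / lambd + psi)  # lambd = wavelength
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank(size: int, sigma: float, lambd: float, n_orientations: int = 4) -> List[Kernel]:
    """Kernels at evenly spaced orientations 0, pi/n, 2*pi/n, ... in [0, pi)."""
    return [gabor_kernel(size, sigma, k * math.pi / n_orientations, lambd)
            for k in range(n_orientations)]


# Illustrative parameters (assumed): 7x7 kernels, sigma 2, wavelength 4 pixels, 4 orientations.
bank = gabor_bank(size=7, sigma=2.0, lambd=4.0)
```

Convolving an image with each kernel in the bank yields orientation-specific texture responses. A poorly chosen λ or σ shifts which textures respond strongly, which is the generalization risk noted above. This sketch does not show how GRCornShot integrates the responses into ResNet-50.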

Conclusion

The proposed work explores the use of Prototypical Networks for detecting corn diseases, with a Gabor filter in ResNet-50 serving as the backbone for feature extraction. The Gabor filter enriches the features by capturing textures and edges, but its sensitivity to background noise in real-world conditions must be acknowledged. ResNet-50, known for its deep architecture and strong performance in image classification, allows the model to achieve high accuracy with minimal training data through a few-shot learning approach. The Prototypical Network is evaluated in 4-way 2-shot, 3-shot, 4-shot, and 5-shot settings, that is, on four classes with 2, 3, 4, and 5 examples per class. It achieves accuracies of 96.19%, 96.54%, 96.90%, and 97.89%, respectively. Combining Prototypical Networks with ResNet-50 greatly reduces the need for extensive labeled datasets, which makes the approach practical for agricultural applications where data collection is challenging. This research shows that a few labeled examples can yield accurate, scalable, and useful early detection of corn diseases. Such early detection can help prevent disease from spreading across an entire farm and contribute to enhanced food security and sustainable agricultural practices. Challenges encountered during the research included class imbalance and optimizing model performance for varying environmental conditions. Future work should validate the model on larger, more diverse data and explore the integration of multimodal data, including images, text, audio, and video, for better predictions. The applicability of this method to other crops and plant diseases also needs to be studied. Further avenues include trying other backbone architectures, with or without transfer learning, and applying the model in different environmental conditions.

Data availability

This study used publicly available datasets, which can be found at https://universe.roboflow.com/final-enlye/corn-disease and https://www.kaggle.com/datasets/smaranjitghose/corn-or-maize-leaf-disease-dataset.

References

Rani, R., Sahoo, J., Bellamkonda, S., Kumar, S. & Pippal, S. K. Role of artificial intelligence in agriculture: An analysis and advancements with focus on plant diseases. IEEE Access 11, 137999–138019. https://doi.org/10.1109/ACCESS.2023.3339375 (2023).

Yu, H. et al. Corn leaf diseases diagnosis based on K-means clustering and deep learning. IEEE Access 9, 143824–143835. https://doi.org/10.1109/ACCESS.2021.3120379 (2021).

Kumar, S., Patil, R. R. & Rani, R. Smart IoT-based pesticides recommendation system for rice diseases. Lect. Notes Electr. Eng. 959, 17–25. https://doi.org/10.1007/978-981-19-6581-4_2 (2023).

Patil, R. R. & Kumar, S. Priority selection of agro-meteorological parameters for integrated plant diseases management through analytical hierarchy process. Int. J. Electr. Comput. Eng. 12, 649–659. https://doi.org/10.11591/ijece.v12i1.pp649-659 (2022).

Patil, R. R., Kumar, S., Rani, R., Agrawal, P. & Pippal, S. K. A bibliometric and word cloud analysis on the role of the internet of things in agricultural plant disease detection. Appl. Sys. Innovat. 6, 1–17. https://doi.org/10.3390/asi6010027 (2023).

Das, A., Pathan, F., Jim, J. R., Kabir, M. M. & Mridha, M. Deep learning-based classification, detection, and segmentation of tomato leaf diseases: A state-of-the-art review. Artif. Intell. Agricult. 15, 192–220. https://doi.org/10.1016/j.aiia.2025.02.006 (2025).

Patil, R. R. & Kumar, S. Predicting rice diseases across diverse agro-meteorological conditions using an artificial intelligence approach. PeerJ Comput. Sci. 7, 1–25. https://doi.org/10.7717/peerj-cs.687 (2021).

Patil, R. R., Kumar, S., Chiwhane, S., Rani, R. & Pippal, S. K. An artificial-intelligence-based novel rice grade model for severity estimation of rice diseases. Agriculture (Switzerland) 13, 1–19. https://doi.org/10.3390/agriculture13010047 (2023).

Patil, R. R., Kumar, S. & Rani, R. Comparison of artificial intelligence algorithms in plant disease prediction. Revue d’Intelligence Artificielle 36 (2022).

Jararweh, Y., Fatima, S., Jarrah, M. & AlZu’bi, S. Smart and sustainable agriculture: Fundamentals, enabling technologies, and future directions. Comput. Electr. Eng. 110, 108799. https://doi.org/10.1016/j.compeleceng.2023.108799 (2023).

Sunil, C., Jaidhar, C. & Patil, N. Cardamom plant disease detection approach using efficientnetv2. IEEE Access 10, 789–804 (2021).

Wspanialy, P. & Moussa, M. A detection and severity estimation system for generic diseases of tomato greenhouse plants. Comput. Electron. Agric. 178, 105701 (2020).

Pacal, I. Enhancing crop productivity and sustainability through disease identification in maize leaves: Exploiting a large dataset with an advanced vision transformer model. Expert Syst. Appl. 238, 122099. https://doi.org/10.1016/j.eswa.2023.122099 (2024).

Shafik, W., Tufail, A., De Silva, L. C. & Apong, R. A. A. H. M. A lightweight deep learning model for multi-plant biotic stress classification and detection for sustainable agriculture. Sci. Rep. 15, 12195. https://doi.org/10.1038/s41598-025-90487-1 (2025).

Bera, A., Bhattacharjee, D. & Krejcar, O. An attention-based deep network for plant disease classification. Mach. Graph. Vision 33, 47–67. https://doi.org/10.22630/MGV.2024.33.1.3 (2024).

Maruthai, S., Selvanarayanan, R., Thanarajan, T. & Rajendran, S. Hybrid vision Gnns based early detection and protection against pest diseases in coffee plants. Sci. Rep. 15, 11778. https://doi.org/10.1038/s41598-025-96523-4 (2025).

Bera, A., Krejcar, O. & Bhattacharjee, D. Rafa-net: Region attention network for food items and agricultural stress recognition. IEEE Trans. AgriFood Electr. 3, 121–133. https://doi.org/10.1109/TAFE.2024.3466561 (2025).

Liu, L. et al. A multi-scale feature fusion neural network for multi-class disease classification on the maize leaf images. Heliyon 10, e28264. https://doi.org/10.1016/j.heliyon.2024.e28264 (2024).

Khan, I., Sohail, S. S., Madsen, D. Ø. & Khare, B. K. Deep transfer learning for fine-grained maize leaf disease classification. J. Agricult. Food Res. 16, 101148. https://doi.org/10.1016/j.jafr.2024.101148 (2024).

Phan, H., Ahmad, A. & Saraswat, D. Identification of foliar disease regions on corn leaves using SLIC segmentation and deep learning under uniform background and field conditions. IEEE Access 10, 111985–111995. https://doi.org/10.1109/ACCESS.2022.3215497 (2022).

Paul, S. G. et al. A real-time application-based convolutional neural network approach for tomato leaf disease classification. Array 19, 100313. https://doi.org/10.1016/j.array.2023.100313 (2023).

Roy, K. et al. Detection of tomato leaf diseases for agro-based industries using novel PCA deepnet. IEEE Access 11, 14983–15001. https://doi.org/10.1109/ACCESS.2023.3244499 (2023).

Pacal, I. & Işık, G. Utilizing convolutional neural networks and vision transformers for precise corn leaf disease identification. Neural Comput. Appl. 37, 2479–2496. https://doi.org/10.1007/s00521-024-10769-z (2025).

Das, A. et al. Xltldisnet: A novel and lightweight approach to identify tomato leaf diseases with transparency. Heliyon 11, e42575. https://doi.org/10.1016/j.heliyon.2025.e42575 (2025).

Sangar, G. & Rajasekar, V. Optimized classification of potato leaf disease using efficientnet-lite and ke-svm in diverse environments. Front. Plant Sci. 16. https://doi.org/10.3389/fpls.2025.1499909 (2025).

Janarthan, S., Thuseethan, S., Rajasegarar, S. & Yearwood, J. P2op-plant pathology on palms: A deep learning-based mobile solution for in-field plant disease detection. Comput. Electron. Agric. 202, 107371. https://doi.org/10.1016/j.compag.2022.107371 (2022).

Keceli, A. S., Kaya, A., Catal, C. & Tekinerdogan, B. Deep learning-based multi-task prediction system for plant disease and species detection. Eco. Inform. 69, 101679. https://doi.org/10.1016/j.ecoinf.2022.101679 (2022).

Elfatimi, E., Eryigit, R. & Elfatimi, L. Beans leaf diseases classification using mobilenet models. IEEE Access 10, 9471–9482 (2022).

Snell, J., Swersky, K. & Zemel, R. S. Prototypical networks for few-shot learning (2017).

Rani, R., Sahoo, J., Bellamkonda, S. & Kumar, S. Attention-enhanced corn disease diagnosis using few-shot learning and vgg16. MethodsX 14, 103172. https://doi.org/10.1016/j.mex.2025.103172 (2025).

Rani, R., Sahoo, J. & Bellamkonda, S. Corn disease detection using few-shot learning prototypical network. in 2024 IEEE 16th International Conference on Computational Intelligence and Communication Networks (CICN), 1379–1383, https://doi.org/10.1109/CICN63059.2024.10847346 (2024).

Li, Y. & Yang, J. Meta-learning baselines and database for few-shot classification in agriculture. Comput. Electron. Agric. 182, 106055. https://doi.org/10.1016/j.compag.2021.106055 (2021).

Rezaei, M., Diepeveen, D., Laga, H., Jones, M. G. & Sohel, F. Plant disease recognition in a low data scenario using few-shot learning. Comput. Electron. Agric. 219, 108812. https://doi.org/10.1016/j.compag.2024.108812 (2024).

Tassis, L. M. & Krohling, R. A. Few-shot learning for biotic stress classification of coffee leaves. Artif. Intell. Agricult. 6, 55–67. https://doi.org/10.1016/j.aiia.2022.04.001 (2022).

Li, X., Yang, X., Ma, Z. & Xue, J. H. Deep metric learning for few-shot image classification: A review of recent developments. Patt. Recogn. https://doi.org/10.1016/j.patcog.2023.109381 (2023).

Egusquiza, I. et al. Analysis of few-shot techniques for fungal plant disease classification and evaluation of clustering capabilities over real datasets. Front. Plant Sci. https://doi.org/10.3389/fpls.2022.813237 (2022).

Chen, J., Zhang, D., Zeb, A. & Nanehkaran, Y. A. Identification of rice plant diseases using lightweight attention networks. Expert Syst. Appl. 169, 114514. https://doi.org/10.1016/j.eswa.2020.114514 (2021).

Xu, X. et al. Few-shot cow identification via meta-learning. Inform. Process. Agricult. https://doi.org/10.1016/j.inpa.2024.04.001 (2024).

de Andrade Porto, J. V., Dorsa, A. C., de Moraes Weber, V. A., de Andrade Porto, K. R. & Pistori, H. Usage of few-shot learning and meta-learning in agriculture: A literature review. Smart Agricult. Technol. 5, 100307. https://doi.org/10.1016/j.atech.2023.100307 (2023).

Puthumanaillam, G. & Verma, U. Texture based prototypical network for few-shot semantic segmentation of forest cover: Generalizing for different geographical regions. Neurocomputing 538, 126201. https://doi.org/10.1016/j.neucom.2023.03.062 (2023).

Huang, Y. et al. Few-shot learning based on attn-cutmix and task-adaptive transformer for the recognition of cotton growth state. Comput. Electron. Agric. 202, 107406. https://doi.org/10.1016/j.compag.2022.107406 (2022).

Liu, Y., Zou, Y., Li, R. & Li, Y. Spectral decomposition and transformation for cross-domain few-shot learning. Neural Netw. 179, 106536. https://doi.org/10.1016/j.neunet.2024.106536 (2024).

Shao, J. et al. Query-support semantic correlation mining for few-shot segmentation. Eng. Appl. Artif. Intell. 126, 106797. https://doi.org/10.1016/j.engappai.2023.106797 (2023).

Boulila, W. An approach based on performer-attention-guided few-shot learning model for plant disease classification. Earth Sci. Inf. 17, 3797–3809 (2024).

Pan, J. et al. Automatic strawberry leaf scorch severity estimation via faster r-cnn and few-shot learning. Eco. Inform. 70, 101706. https://doi.org/10.1016/j.ecoinf.2022.101706 (2022).

Argüeso, D. et al. Few-shot learning approach for plant disease classification using images taken in the field. Comput. Electron. Agric. 175, 105542. https://doi.org/10.1016/j.compag.2020.105542 (2020).

Cai, Z. et al. Identification of chrysanthemum using hyperspectral imaging based on few-shot class incremental learning. Comput. Electron. Agric. https://doi.org/10.1016/j.compag.2023.108371 (2023).

Zhong, F., Chen, Z., Zhang, Y. & Xia, F. Zero- and few-shot learning for diseases recognition of citrus aurantium l. using conditional adversarial autoencoders. Comput. Electron. Agric. 179, 105828. https://doi.org/10.1016/j.compag.2020.105828 (2020).

Afifi, A., Alhumam, A. & Abdelwahab, A. Convolutional neural network for automatic identification of plant diseases with limited data. Plants https://doi.org/10.3390/plants10010028 (2021).

Final. Corn disease dataset. https://universe.roboflow.com/final-enlye/corn-disease. Accessed 2024-05-24 (2022).

Ghose, S. Corn or maize leaf disease dataset. https://www.kaggle.com/datasets/smaranjitghose/corn-or-maize-leaf-disease-dataset. Accessed 2024-05-15 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition (2015).

Wang, L., Zhang, J., Liu, Y., Mi, J. & Zhang, J. Multimodal medical image fusion based on gabor representation combination of multi-CNN and fuzzy neural network. IEEE Access 9, 67634–67647. https://doi.org/10.1109/ACCESS.2021.3075953 (2021).

Lin, H., Tse, R., Tang, S.-K., Qiang, Z. & Pau, G. Few-shot learning for plant-disease recognition in the frequency domain. Plants https://doi.org/10.3390/plants11212814 (2022).

Author information

Contributions

R.R. Conceptualization of this study, Methodology, Software, Data curation, Writing—Original draft preparation. J.S. Conceptualization of this study, Editing, Supervision. S.B. Conceptualization of this study, Editing, Supervision. S.K. Funding, Editing, Supervision. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rani, R., Sahoo, J., Bellamkonda, S. et al. A novel framework GRCornShot for corn disease detection using few shot learning with prototypical network. Sci Rep 15, 26461 (2025). https://doi.org/10.1038/s41598-025-10870-w

DOI: https://doi.org/10.1038/s41598-025-10870-w