Abstract

Ensuring safe transportation requires a comprehensive understanding of driving behaviors and road safety to mitigate traffic crashes, reduce risks and enhance mobility. This study introduces an AI-driven machine learning (ML) framework for traffic crash severity prediction, utilizing a large-scale dataset of over 2.26 million records. By integrating human, crash-specific, and vehicle-related factors, the model improves predictive accuracy and reliability. The methodology incorporates feature engineering, clustering techniques such as K-Means and HDBSCAN, with oversampling methods such as RandomOverSampler, SMOTE, Borderline-SMOTE, and ADASYN to address class imbalance, along with Correlation-Based Feature Selection (CFS) and Recursive Feature Elimination (RFE) for optimal feature selection. Among the evaluated classifiers, the Extra Trees (ET Classifier) ensemble model demonstrated superior performance, achieving 96.19% accuracy and an F1-score (macro) of 95.28%, ensuring a well-balanced prediction system. The proposed framework provides a scalable, AI-powered solution for traffic safety, offering actionable insights for intelligent transportation systems (ITS) and accident prevention strategies. By leveraging advanced ML and feature selection techniques, this approach enhances traffic risk assessment and enables data-driven decision-making.

Similar content being viewed by others

Introduction

Traffic crashes continue as a significant worldwide problem which kills millions of people every year while creating extensive community strain. These traffic accidents produce effects that surpass the loss of human lives and injuries, creating economic and healthcare system strain and urban transportation issues. Road traffic crashes create substantial economic strain because they require healthcare expenses and property damage compensation while reducing workforce productivity, thus making traffic safety essential for governments and urban planners. The psychological strain experienced by accident victims, together with social effects on families and communities, demonstrates why strong crash prevention strategies need immediate implementation. Predicting traffic crashes represents a powerful solution for reducing road accidents because it enables organizations to deploy preventive measures ahead of time. Accurate prediction of traffic crashes reduces fatalities while enabling better traffic management and improved infrastructure development. Statistical models and rule-based approaches demonstrate limitations when processing big-scale, real-time crash data. The models struggle to capture complex traffic dynamics because they use simplified assumptions and restricted feature interactions. As presented in1, the authors conducted a study of roundabout crash severity in Jordan by evaluating multiple contributing elements, including weather conditions, lighting conditions, vehicle characteristics, road geometry, and driver demographics. The research team uses rule-based classifiers and RF models to discover important risk elements affecting crash severity and property damage-only incidents. The research uses evidence-based findings to help policymakers enhance roundabout traffic safety programs. The authors of2 studied fatal crash risks between highways, collector roads, and local roads in Thailand by applying DT, RF, XGBoost, and GB ML models. The research analyzes crash severity factors to create targeted safety measures that will benefit different road environments by addressing speeding alongside alcohol use and lighting conditions. The research in3 examines Indian expressway crash severity by studying 2747 recorded incidents from three major expressways. The research utilizes Multinomial Logit (MNL) and DT and RF models to identify critical factors that affect crash severity between fatal, severe, minor, and property damage only—PDO incidents. The goal aims to improve traffic safety by implementing enhanced speed enforcement alongside better road infrastructure design and improved lane discipline.

ML has proven itself as an advanced predictive tool for traffic crashes through its ability to process extensive datasets and complex patterns and execute real-time analysis. Analyzing traffic conditions alongside driver behavior, environmental factors, and accident-prone areas shows better results through ML models. The authors in4 studied various ANN models with activation functions and optimizers to enhance prediction accuracy. This led them to select the ADAM optimizer and SOFTMAX activation function as the optimal pairing. The Apriori algorithm serves to uncover important factors that contribute to fatal crashes. The ML-based method allows predictive crash analysis, leading to specific road safety measures. The authors in5 utilized Decision Trees and RF classifiers to analyze and predict road traffic accidents based on present traffic accident data. The study aims to solve the homogeneity issue that affects road safety surveys and accident analysis through accident data. The study implements elements from weather to lighting conditions to accident severity patterns across time to evaluate data mining approaches for enhancing prediction accuracy. Implementing ML-driven crash prediction faces multiple unresolved issues that restrict their complete potential utilization. The main obstacle in crash prediction arises from limited access to inconsistent traffic accident data, which is also challenging to obtain. The large number of dimensions in crash datasets requires advanced memory capacity and processing speed for real-time implementation to be feasible. The quality of real-time accident data suffers because incomplete or missing records cause information reduction and deteriorating model precision. The imbalance of data in crash datasets6 creates prediction bias because accident-related features rarely appear in the dataset, which trains models primarily on non-severe crashes, making them less effective at detecting severe or fatal crashes. The unbalanced distribution of classes in the data affects models to become more reactive to common crash types while underperforming when detecting important yet infrequent accident scenarios. The use of inconsistent data formats that include “NA” or “null” and empty strings creates misinterpretations that deteriorate model performance. The examination of crash types, together with severity levels, functions as an essential method to forecast and minimize fatal accidents. The classification of crashes into three severity levels by ML models enables the identification of dangerous areas and key accident patterns. Developing early-warning systems and targeted road safety measures becomes possible by analyzing impact force, vehicle speed, weather conditions, and driver behavior. Predictive models that integrate crash severity analysis enable traffic management authorities to make better decisions through safety intervention deployment, speed limit adjustments, and road infrastructure enhancement for fatal accident reduction.

To address these challenges, this study aims to analyze recent advancements in ML-based crash prediction, evaluate the effectiveness of feature selection techniques, and propose optimized modeling approaches for improving data quality, computational efficiency, and real-time crash prediction accuracy. By tackling issues related to low data availability, high dimensionality, and data inconsistency, this research contributes to developing more reliable and scalable traffic crash prediction models, paving the way for safer and more efficient transportation systems. The contribution of the paper is presented as follows:

-

Developing an enhanced Triple Merge Dataset by integrating multiple traffic datasets, resulting in large-scale dataset traffic records.

-

Provide a comprehensive analysis of recent crash severity prediction methods.

-

Applying advanced feature engineering, including temporal, environmental, and location-based attributes, to improve predictive performance.

-

Applying baseline experiments with recent ML classification models.

-

Implementing K-Means and HDBSCAN clustering to segment crash locations and enhance classification performance.

-

Applying oversampling techniques (RandomOverSampler, SMOTE, Borderline SMOTE, and ADASYN) to address class imbalance and improve classification fairness.

-

Utilizing CFS with RFE to optimize feature selection.

While several previous studies have individually applied data balancing techniques, clustering algorithms, or feature selection to crash prediction, our study introduces a comprehensive, multi-stage AI-driven framework that unifies these techniques into a cohesive pipeline applied to a large-scale, triple-merged dataset. This dataset combines human-related, crash-specific, and vehicle-specific features—an integration not commonly addressed together in the literature. Additionally, we evaluate the impact of clustering (K-Means, HDBSCAN) and oversampling methods (RandomOverSampler, SMOTE, Borderline-SMOTE, ADASYN) in tandem with advanced feature generation and hybrid feature selection using CFS with RFE. To our knowledge, this is among the few studies that offer an end-to-end performance comparison across all these dimensions using over 2 million records, yielding highly accurate and generalizable results for real-world crash severity prediction. To improve clarity, accessibility, and reader comprehension, Table 1 summarizes list of acronyms used in the paper.

This paper is structured as follows: Section "Literature review" presents the literature review, analyzing recent methods for crash severity prediction. Section "Proposed methodology" details the proposed methodology, including data preprocessing steps. Section "Baseline ML classification" introduces baseline ML classification. Section "Feature generation" focuses on feature generation to enhance model accuracy. Section "Crash severity prediction enhancement using clustering methods" applies clustering methods (K-Means and HDBSCAN) for further prediction improvements. Section "Oversampling" explores oversampling techniques to balance the dataset. Section "Feature selection" implements feature selection using CFS and RFE. Section "Discussion" discusses the results and comparisons with recent studies. Section "Conclusion and future works" concludes the study and outlines future research directions.

Literature review

Traffic accidents create worldwide difficulties, generating substantial human casualties and significant economic consequences. The analysis and prediction of traffic crashes serve to detect high-risk elements, which help activate safety measures and support the making of effective policy choices. Analyzing traffic severity using ML-based methods delivers essential findings that enhance traffic security while minimizing fatal accident numbers. Multiple Learning techniques have been broadly implemented to forecast crash intensity through evaluations of speed-related variables such as road condition elements and driving behaviors. This review examines contemporary methods to analyze and forecast worldwide traffic crashes and their severities.

The researchers in7 introduced an accurate prediction model that fixes the issues of traditional parametric safety performance functions (SPFs) by addressing both accuracy problems and non-generalization behavior. The study establishes a ML system to produce precise multi-area automobile crash predictions and identifies the most important characteristics to help traffic management departments solve crashes. A hybrid approach for feature selection-based ML classification was created by the researchers in8 to achieve accurate road traffic accident injury severity prediction. This research analyzes Pakistan National Highway N-5 traffic accident data while using the Boruta Algorithm to select important attributes that serve as inputs for ML classifiers. The authors of9 evaluated different feature selection techniques for their use in disease risk prediction through ML applications. An extensive review of feature selection techniques exists within this research, presenting their benefits and limitations for predicting disease risk from patient genetic information. The authors from10 enhanced ML algorithm performance for driver behavior classification through feature selection methods. The method seeks to identify essential driver behavior elements that enhance model precision while decreasing overfitting and reducing computational needs. The research findings demonstrate that RF and KNN achieve superior classification results under these evaluation conditions.

The authors in11 established predictive ML models for analyzing road car accident factors. The research examines traffic accident statistics to determine severity and count casualties and vehicles. The study utilizes Apache Spark big data analysis methods to process heterogeneous information while examining four main ML algorithms: DT and RF, multinomial LR, and naïve Bayes algorithms. The authors in12 presents an innovative approach to predicting driver injury severity, combining the strengths of DNNs for feature extraction and RF for classification. A predictive system for road crash detection was developed by13 by analyzing real-time data between road characteristics, land areas, vehicle telemetry, driver inputs, and weather conditions in low visibility conditions. The research fills a knowledge gap by combining ensemble models and imbalance learning algorithms to study real-time impacts on low-visibility crash prediction accuracy. The authors of14 created a real-time system for Collision Avoidance Systems (CAS) that combines driver inputs and vehicle dynamics with weather conditions alongside physiological signs and multiple real-time data sources to boost prediction accuracy. The system applies SMOTE for class imbalance treatment combined with RF and PCA as feature selection methods. As proposed in15, a prediction framework was developed to enhance road safety through lane change analysis for autonomous and connected mobility systems. A proposed framework utilizes feature learning to detect important lane change determinants while maintaining high prediction precision levels.

One of the recent methodologies in predicting crash severities was presented in16, where the authors proposed a framework that detects lane-changing dangers by integrating driver-specific behavioral patterns. The LGBM algorithm achieves superior accuracy in lane-changing risk prediction compared to other ML methods. Furthermore, the authors of17 established a new approach for validating and assessing the safety of perception-based CSP functions that serve as PCS activation triggers. The research develops an approach to build test scenarios through unsupervised ML, minimizing testing costs and maintaining CSP function reliability and accuracy. The authors of18 explored automobile price determinants using LASSO and stepwise selection regression algorithms. The research creates a predictive model through multiple linear regression, which utilizes selected features to help automobile manufacturers, consulting firms, and consumers better understand pricing patterns for informed decisions. In addition, the authors of19 investigated feature selection as an essential ML method that helps avoid overfitting while improving model performance. The study demonstrates that choosing the right features remains crucial for achieving better predictive models while minimizing computational complexity in ML applications.

As presented in20, highway dangerous lane change predictions are applied. A comprehensive analysis of vehicle trajectory data reveals the important elements that lead to dangerous driving behavior. Research results highlight the critical impact of single-vehicle behavior, the mutual effects between changing vehicles and their neighbors, and the specific acceleration dynamics between target lane vehicles when measuring lane change dangers. The authors of21 a real-time hybrid XGBoost model to overcome current secondary crash prediction model limitations. The model achieves better accuracy by merging estimates about primary crash, secondary crash initiation, and secondary crash occurrence probabilities. The real-time model leverages essential real-time traffic data, such as average traffic volume and occupancy, to make secondary crash predictions during short periods. As presented in22, the authors developed precise predictions regarding road accident severity within New Zealand. The research combines RF and XGBoost ML models to process recent accident data and identify important factors driving accident severity. The study uses explainable AI techniques to reveal how models predict outcomes and identify which factors most significantly affect results, including road conditions and vehicle participation. As presented in23, the authors applied ML methods to forecast the severity levels of highway crashes throughout Saudi Arabia, focusing on the Qassim Province. The research develops three ML methods to classify crash injury severity. The research uses Shapley additive explanations (SHAP) to interpret factors and establish their relative importance in causing crash severity. The research seeks to support policy development by creating safety mitigation approaches that minimize traffic accidents while reducing their impact. The authors of24, investigated the elements which determine the occurrence and intensity of RTC among the productive age group (15 to 44) in Al-Ahsa Saudi Arabia. The research reveals important road safety policy development insights by identifying driver behaviors and crash categories. The research aims to minimize RTC incidents among Saudi Arabia’s young, productive population through its findings to promote highway safety. The authors of25 applied ML models to identify factors contributing to driver-related crashes on Highway 15 in Saudi Arabia. The study aims to predict crash probabilities and develop strategies to improve road safety in Saudi Arabia and similar regions by analyzing road features, traffic flow, and driver behavior. One of the recent research methodologies was presented in26, where a Transformer-based architecture is applied to estimate traffic accident driver injury severity levels. The model processes textual accident descriptions and structured accident data to make accurate human injury severity predictions from incomplete information.

Using several ML models and SHAP analysis to identify important risk factors such alcohol use, road type, and rider characteristics, the study sought to predict motorcycle accident injury severity as presented in27 that offers a framework for focused injury prevention. To address imbalanced data for traffic crash severity prediction, the authors suggested a KT-Boost algorithm enriched with SMOTE variations in28, obtaining great performance and using SHAP to highlight influential aspects including gender, age, and road conditions. With LightGBM outperforming other models and important predictors identified to include collision type, vehicle type, and accident cause, the authors of29 concentrated on determining the best-performing machine learning model for predicting driver injury severity based on 693 crash cases in India. Particularly for motorcycle-involved crashes, the authors created CNN-based models in30 to assess intersection crash severity in Thailand and utilized SHAP to analyze the results, so exposing important parameters including intersection type, time of day, and highway classification. Using SHAP for interpretation and emphasizing on instance hardness and region of competence to improve crash severity prediction on unbalanced datasets, the authors of31 presented a Bayesian-optimized Dynamic Ensemble Selection framework incorporating classifiers like Catboost and LightGBM.

Although numerous studies have applied machine learning techniques to crash severity prediction, several limitations persist. Many works depend on single-source datasets, which might ignore the interaction of human, vehicle, and crash-specific elements. Others ignore data imbalance entirely or use oversampling without methodically assessing model performance. Few research have also looked at how integrated clustering or contextual feature creation could find latent crash trends. By means of a unified, multi-stage architecture combining three traffic-related datasets, extensive feature engineering, and hybrid clustering and targeted oversampling, this paper attempts to close these gaps. For traffic safety management, the proposed architecture and model not only raise predicted accuracy but also help interpretability and practical utility of model outputs. Table 2 presents a comprehensive review and comparison to explore significant research methodologies for analyzing and predicting crash severities.

Proposed methodology

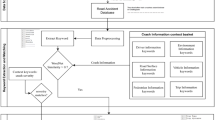

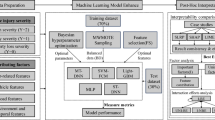

The proposed Traffic Data Analysis Framework is a highly sophisticated and systematic pipeline designed to integrate multi-source traffic datasets, optimize feature engineering, and leverage advanced ML and clustering techniques to predict crash severities accurately. By merging data from Traffic People, Traffic Crashes, and Traffic Vehicles datasets, the framework ensures a comprehensive and enriched feature space that captures intricate relationships between human behavior, accident characteristics, and vehicle attributes. As presented in Fig. 1, the methodology follows a multi-stage hierarchical approach, starting with data collection and preprocessing, where rigorous data cleaning, transformation, and encoding techniques are applied to ensure data integrity and consistency. The Dual Merge (Traffic People and Crashes) and Triple Merge (Traffic People, Crashes, and Vehicles) enable a progressive information fusion, enhancing predictive accuracy by integrating contextual insights. Feature generation and selection refine the dataset by extracting high-impact variables, reducing dimensionality, and mitigating redundant information. During the baseline phase, eight ML models (LR, RF, XGBoost, LGBM, GB, ET, CatBoost, and DT) are trained on each merged dataset without clustering or oversampling to benchmark performance under realistic, imbalanced conditions. Standard performance metrics, including accuracy, precision, recall, F1-score, and AUC-ROC, determine the evaluation of the models during training and testing. The effectiveness of each algorithm becomes clear during this phase, enabling better choices of models and additional optimizations in future stages.

The Triple Merge dataset constantly produced the best predictive accuracy when all baseline results were compared. Consequently, feature generation is done just on this dataset, producing new contextual features to improve crash severity prediction. Two clustering methods—K-Means and HDBSCAN—help to carry forward and improve the top three classifiers from the baseline phase (RF, ET, DT) to discover latent spatial patterns. Addition of cluster assignments as input elements enhances model performance. To address the issue of class imbalance, oversampling techniques such as RandomOverSampler, SMOTE, ADASYN, and Borderline SMOTE are applied in the final stage of the pipeline. However, this method is confined to the best-performing classifier—ET Classifier—which outperformed other models throughout all phases, including the clustering step, due to the high computational cost linked with oversampling vast datasets. This approach let the framework preserve methodological integrity while applying oversampling in a targeted, re-source-efficient way. With the top models chosen for deeper development, evaluating classifiers on imbalanced data in previous phases also helped determine their inherent discriminating capability. We employed RandomOverSampler as a baseline reference method that duplicates samples from the minority class without creating synthetic instances, providing a useful benchmark to evaluate the added benefit of more sophisticated algorithms. Including this simpler method allows us to compare improvements in classification performance across different resampling strategies more transparently. The framework systematically identifies the most effective predictive model for crash severity classification by integrating automated best model selection. This multi-layered ML approach, supported by comprehensive feature engineering and data fusion, offers unparalleled accuracy and generalizability in predicting crash outcomes. The framework’s modularity allows for scalability and adaptability, making it a robust decision-support tool for traffic safety agencies, urban planners, and policymakers aiming to implement proactive accident mitigation strategies and improve road safety intelligence. This advanced predictive framework contributes to enhanced traffic incident management and fosters a data-driven safety paradigm that can be integrated into innovative transportation systems and intelligent accident prevention mechanisms.

Dataset collection

The data collection process in this framework involves gathering traffic-related datasets from multiple sources to ensure a comprehensive representation of accident factors. The Traffic People Dataset32, Traffic Crashes Dataset33, and Traffic Vehicles Dataset34 are acquired from official transportation and accident reporting agencies of Illinois State in the USA. These datasets include demographic information, crash circumstances, and vehicle-specific attributes, providing a rich foundation for predictive modeling. Table 3 presents a complete description of the three traffic datasets, where the number of records and features in each dataset are identified.

As explained in Table 2, the first dataset (Traffic_People) provides detailed insights into individuals involved in traffic incidents, focusing on factors like driver behavior, safety equipment usage, injury outcomes, and demographic influences. It supports analysis of crash causes, effectiveness of safety measures (e.g., airbags, seatbelts), and emergency response efficiency. By capturing data on driver actions, physical conditions, BAC levels, and pedestrian-related factors, the dataset helps improve traffic safety policies, reduce injuries, and enhance road infrastructure planning. The second dataset (Traffic_Crashes) provides comprehensive details about traffic crashes, with nearly 900,000 entries. It includes crash identifiers (CRASH_RECORD_ID, CRASH_DATE) and environmental factors like WEATHER_CONDITION, and LIGHTING_CONDITION. In addition, geographic information (LATITUDE, LONGITUDE, LOCATION) enables spatial and temporal analysis. The third dataset (Traffic_Vehicles) provides detailed information about individual vehicles involved in traffic crashes with vehicle specifics (MAKE, MODEL, VEHICLE_YEAR) and operational data (TRAVEL_DIRECTION, MANEUVER). In addition, the dataset supports descriptions of crash causes, vehicle safety, and the role of specific vehicle types or configurations in traffic incidents, particularly for commercial and hazardous material transportation. A full description of the dataset feature types, whether they are numerical or categorical, is presented in Fig. 2.

Data preprocessing

Data cleaning

The large-scale and multi-source nature of 3,784,263 records with 148 features makes data cleaning a critical preprocessing step to ensure accuracy, consistency, and completeness. Data cleaning is applied to handle missing or incorrect entries, remove duplicate records, standardize data formats, and align feature distributions across the merged datasets. This step is essential to optimize the final dataset before proceeding to the feature engineering and model training phases, leading to more reliable and interpretable crash severity predictions. The following steps explore the main parameters for each dataset before and after the data-cleaning processes:

The data cleaning stage on the Traffic datasets consists of three key steps to enhance data quality, improve model efficiency, and ensure reliable predictions. Given the dataset’s large size (3,784,263 records and 148 features), removing inconsistencies and irrelevant information is essential. The following steps are applied:

-

1.

Remove null features: Certain features in the dataset may contain a high percentage of missing values (NULLs), making them unusable for effective predictive modeling. Features with an excessive proportion of missing data above 70% are removed, as their inclusion may introduce noise and degrade model performance. This step ensures that only informative and well-populated attributes are retained for analysis.

-

2.

Remove unimportant features to reduce dimensionality: High-dimensional datasets often contain redundant, irrelevant, or low-variance features that do not contribute significantly to predictive accuracy.

-

3.

Remove null records: After eliminating NULL features, individual records (rows) with missing values are identified. In cases where a record has critical missing information across key features, it is removed to maintain data integrity. This step helps to prevent inaccuracies in the ML model caused by incomplete or inconsistent data points.

Dual merge process

The main objective of the Dual Merge process is to create a more comprehensive and enriched dataset by integrating human-related attributes from the Traffic People Dataset with incident-specific details from the Traffic Crashes Dataset. This merging process is essential for capturing the interaction between individual characteristics and accident circumstances, which plays a crucial role in predicting crash severities. By combining both datasets, the framework aims to:

-

1.

Enhance data contextualization: The Traffic People Dataset contains demographic and behavioral information (e.g., age, gender, driver history), while the Traffic Crashes Dataset includes accident-related features (e.g., crash time, location, severity, and contributing factors).

-

2.

Improve predictive accuracy: By integrating people-related attributes with crash data, the model can identify hidden patterns and correlations that significantly impact crash severity, ultimately improving predictive performance.

-

3.

Establish a stronger feature representation: Merging datasets increases the number of available features, enabling a more robust feature selection and engineering process. This helps the ML models differentiate high-risk and low-risk cases more effectively.

Triple merge process

The main objective of the Triple Merge process is to create a fully integrated and enriched dataset by combining human-related attributes (Traffic People Dataset), accident details (Traffic Crashes Dataset), and vehicle-specific information (Traffic Vehicles Dataset). This step is critical for building a comprehensive data representation that enhances the model’s ability to predict crash severities with higher accuracy and reliability. By incorporating vehicle-related factors into the previously merged dataset, the framework achieves the following:

-

1.

Complete crash contextualization: When merged with human and crash-related data, the dataset fully represents the three critical dimensions influencing traffic accidents: driver behavior, crash dynamics, and vehicle characteristics.

-

2.

Improved feature engineering and representation: Integrating vehicle-related variables allows for more effective feature generation by identifying interactions between driver profiles, accident scenarios, and vehicle safety factors.

-

3.

Refinement of crash severity predictions: The combination of human, crash, and vehicle attributes allows ML models to capture more complex relationships that contribute to crash severity. This helps in distinguishing high-risk cases from minor accidents with greater precision.

The overall data preprocessing stage for Traffic datasets is explored in Table 4.

Based on the data presented in Table 4, the final data size for Traffic_People is (515,685 records and 16 features), while the dataset size for the dual merge of People and Crashes datasets is (195,573 records and 53 features). Finally, the dataset size for the triple merge dataset is (2,263,315 records and 44 features) as presented in Table 5.

Feature engineering and encoding

Feature engineering is a crucial step in the data preprocessing pipeline, aimed at enhancing predictive performance by refining, transforming, and selecting features most relevant to the target variable. For the Traffic_People dataset, the target feature is "INJURY_CLASSIFICATION," where only information about individuals is involved in accidents. After applying dual merge, additional accident-specific details are introduced, such as crash location, time, road conditions, and contributing factors, making the target feature changed to “CRASH_TYPE”. This aims to predict overall crash characteristics, incorporating human and crash-related factors. After applying the final stage of the triple dataset (People + Crashes + Vehicles), the dataset becomes fully enriched with vehicle-related attributes. Despite this additional information, the target feature remains “CRASH_TYPE”, as vehicle characteristics directly impact crash outcomes but do not change the fundamental objective of predicting accident types. Including vehicle data strengthens the model’s ability to identify patterns linking driver behavior, crash conditions, and vehicle involvement, leading to more precise and reliable crash severity predictions. By applying feature engineering, transformation, and encoding, the framework maximizes data quality and ensures that predictive models can accurately capture patterns and trends in crash severity analysis. The three datasets feature engineering and encoding process involves transforming raw features into more informative variables that enhance ML model performance. On the Traffic_People dataset, the “AGE” feature is categorized into age groups to reduce the dimensionality of the feature as follows:

The target feature “INJURY_CLASSIFICATION” has five classes: “NO INDICATION OF INJURY”, “NONINCAPACITATING INJURY”, “REPORTED, NOT EVIDENT”, “INCAPACITATING INJURY”, “FATAL”. These classes lead to class imbalance which is a critical issue in multi-class classification. We have grouped the 5-classes into binary classification as follows:

On the dual merge dataset; after merging both people and crashes datasets, a crash severity index is applied on the merged dataset which is computed using impact speed, road conditions, and visibility:

where:

-

\(Impac{t}_{Speed}\) represents the velocity at impact.

-

\(Roa{d}_{Hazar{d}_{Factor}}\) is a risk factor (0–1 scale) based on weather, road type, and obstacles.

-

\(Visibilit{y}_{Index}\) (0–1) represents clear vs. poor visibility conditions.

Data splitting

The dataset is split into 80% training data and 20% testing data across all experimental phases to ensure a robust and unbiased evaluation of the ML models. The training set trains the models, allowing them to learn patterns and relationships between features and the target variable. The testing set remains unseen during training and is an independent validation set to assess the model’s ability to generalize to new, unseen data. The selection of 80:20 is selected for better model learning and for reducing model variance.

Baseline ML classification

During the baseline experiments, all eight ML models: LR, RF, XGBoost, LGBM, CatBoost, GB, ET, and DT were trained under default hyperparameters in the baseline phase in order to evaluate their raw predictive capacity objectively.

Experiment 1: baseline results for traffic people dataset

A baseline analysis of the Traffic_People dataset included eight ML models: LR, RF, XGBoost, LGBM, CatBoost, GB, ET, and DT. The LR functions as an interpretable benchmark model that provides essential performance reference. The RF, ET, and DT represent tree-based models that effectively handle structured data while identifying non-linear relationships. XGBoost LGBM and GB represent boosting algorithms that excel at high-accuracy tasks, feature importance analysis, and work effectively with large datasets. The CatBoost model excels in dealing with unprocessed categorical features. The framework combines these models to achieve balanced simplicity and interpretability with high-performance predictive capabilities, which makes it appropriate for traffic crash injury classification. Based on the data description and distribution presented in Table 5 after data preprocessing, the target feature “INJURY_CLASSIFICATION” is presented in Table 6 as follows:

In highly imbalanced datasets such as35 and like the Traffic_People dataset, where the majority class (NO INDICATION OF INJURY) comprises 92.36% of the records, relying solely on accuracy can be misleading. A model that predicts the majority class most of the time would appear to have high accuracy but would fail to correctly classify the minority class (INJURY), which is critical in crash severity analysis. To ensure a balanced evaluation, the macro F1-score is used as it provides an equal-weighted average of the F1-scores of both classes, preventing the model from being biased toward the majority class. Unlike accuracy, the macro F1-score considers both precision and recall, making it particularly effective in addressing class imbalance by ensuring that the model correctly identifies non-injury cases and improves its ability to detect injury cases. A higher macro F1-score indicates that the model distinguishes between injury and non-injury cases more effectively. This leads to a more fair, reliable, and generalizable real-world traffic crash severity analysis model. By prioritizing the macro F1-score, the model ensures that both injury and non-injury cases are treated equally, enhancing the overall efficiency and practicality of the model for decision-making in traffic safety. As presented in Table 7, the overall results of experiment 1 on the Traffic_People dataset are explored.

Based on the results presented in Table 6, the highest three ML models based on F1-Score (Macro) are ET with 60.26%, RF with 59.99%, and XGBoost with 59.60%, indicating their relative effectiveness in handling the imbalanced Traffic People Dataset. However, despite these models achieving the highest F1 scores among the tested classifiers, the overall results remain low, suggesting that the models struggle to classify the minority class (INJURY cases) effectively. The ET classifier performs the best, likely due to its higher randomness in tree construction, which enhances generalization. The confusion matrix and ROC curve for the highest ML model (ET Classifier) are explained in Fig. 3.

As presented in Fig. 3, the Confusion Matrix for the ET classifier shows that the model correctly classifies 98.40% of injury cases (high recall for the minority class), but struggles with the majority class, misclassifying 83.21% of non-injury cases as injuries (high false positive rate), leading to poor precision. The ROC Curve with an AUC-ROC score of 0.72 indicates moderate predictive performance but still lacks intense discrimination between injury and non-injury cases. These results suggest that while the model effectively detects injuries, it lacks balance in handling class distributions.

Experiment 2: baseline results for dual marge dataset

Based on the conducted results presented in Experiment 1, the models struggled to effectively classify the minority class (INJURY cases) due to the imbalanced nature of the dataset. The dual merge process was implemented to address this limitation by combining the Traffic_People dataset with the Traffic_Crashes dataset to enhance feature representation and improve classification performance. The merging step allows for integrating human-related attributes with crash-specific factors, enhancing the dataset’s feature representation and improving classification performance. As a result of this merge, the target feature was changed from “INJURY_CLASSIFICATION” to “CRASH_TYPE”, now representing the overall crash severity rather than individual injury status. After performing data preprocessing as outlined in Section "Data preprocessing", the target feature “CRASH_TYPE” is presented in Table 8.

As presented in Table 9, the overall results of experiment 2 on the dual merge dataset are explored. The highest three ML models based on F1-Score (Macro) are ET with 93.27%, RF with 92.43%, and CatBoost with 89.78%, significantly improving over Experiment 1. The ET model achieved the highest F1-score, leveraging greater feature randomness, which improved generalization and class balance, resulting in a strong AUC-ROC of 92.076%. The RF model followed closely, with an exceptionally high recall of 97.82%, making it highly effective in detecting injury-related crashes, though slightly less precise than ET. The CatBoost ranked third, outperforming other boosting models due to its efficient handling of categorical data, ensuring a well-balanced precision-recall tradeoff. Additionally, overall accuracy improved across all models, with ET achieving 94.95%, RF 94.34%, and CatBoost 92.36%, demonstrating that including crash-related attributes alongside human factors provided better context for injury classification.

Analysis of the highest ML model (ET Classifier) through confusion matrix and ROC-curve is explained in Fig. 4. In the dual merge dataset, the Confusion Matrix generated better results than Experiment 1 by accurately classifying 98.09% of injury cases while correctly identifying 86.06% of non-injury cases, leading to improved performance across both classes. The false positive rate (13.94%) has improved, indicating superior precision in crash-type discrimination. The ROC Curve demonstrates a high discriminative ability for injury and non-injury case discrimination through its AUC-ROC score of 0.98. The dual merge approach strengthened classification precision and generalization ability, thus making ET a highly effective model for predicting crash severity.

Baseline results for triple marge dataset

In Experiment 3 the Triple Merge process integrates the Traffic People Dataset with the Traffic Crashes Dataset and Traffic Vehicles Dataset following the success of Dual Merge in Experiment 2. The final step of feature merging brings vehicle-specific elements into the model, such as safety features, mechanical conditions, and prior crash involvement, because these variables determine crash severity outcomes. The objective of Experiment 3 is to refine model accuracy, recall, and F1-score through enhanced data that describes the interrelationships between human elements and crash mechanics and vehicle characteristics. The model will achieve better generalization, enhance injury risk prediction, and generate more detailed traffic crash severity analysis by integrating vehicle-related information. After merging the Traffic_People, Traffic_Crashes, and Traffic_Vehicles datasets, the target feature remains “CRASH_TYPE”, as vehicle-related attributes continue to influence crash severity. However, the total number of records significantly increased to 2,263,315 after the final merge, providing a more extensive and comprehensive dataset for analysis, as shown in Table 10. The merge process uses “CRASH_RECORD_ID” as the standard key across all three datasets, ensuring proper alignment and integration of human, crash, and vehicle-related features. This expanded dataset enhances feature richness and predictive power, allowing models to understand better the relationships between driver behavior, crash dynamics, and vehicle safety factors.

To explore the overall pipeline of the Triple Merge dataset, Fig. 5 provides a step-by-step visualization of the methodological framework used for crash severity prediction. The Triple Merge dataset is applied to baseline experiments that employ eight ML models (LR, RF, XGBoost, LightGBM, CatBoost, GB, ET, and DT) to assess initial performance without balancing. This evaluation results in the top three classifiers chosen for more work. Then feature generation is used to improve the dataset using fresh, context-rich variables. The three classifiers (RF, ET, and DT) are combined with two clustering algorithms (K-Means and HDBSCAN) to organize data into meaningful patterns for enhancing learning. The best-performing model from this stage is then chosen for oversampling to address class imbalance. Finally, feature selection is performed to refine the model by retaining only the most relevant features for prediction.

As presented in Table 11, the highest three models based on F1-Score (Macro) for the Triple Merge dataset are ET with 93.697%, RF with 93.071%, and DT with 91.486%, demonstrating a substantial improvement over Experiment 2 in both classification accuracy and overall model performance. Including vehicle-related attributes in the Triple Merge dataset enhanced predictive capabilities by capturing complex interactions between driver characteristics, crash conditions, and vehicle safety features. The ET classifier achieved the highest F1-score and accuracy with 94.95%, excelling in generalization and class balance, making it the most effective classifier. The RF classifier followed closely with 94.42% accuracy, benefiting from strong recall at 97.70%, ensuring more injury-related crashes were correctly identified. The DT classifier also improved significantly, reaching an accuracy of 92.96%, with intense precision (95.19% and recall 0.94.85%. Compared to Experiment 2, where class balance had already improved, the Triple Merge dataset enhanced accuracy by providing a more balanced distribution between the majority and minority classes, reducing misclassification bias. These results confirm that integrating vehicle-related data significantly improves model reliability, with ensemble tree-based models proving the most effective for highly accurate crash severity classification.

As presented in Fig. 6, the Confusion Matrix for the ET classifier in Experiment 3 shows 98.42% of injury cases correctly classified (high recall) and 86.50% of non-injury cases correctly identified, indicating a strong balance between sensitivity and specificity. However, the false positive rate remains at 13.50%, suggesting that while the model effectively detects injuries, some non-injury cases are still misclassified. The ROC Curve, with an AUC-ROC score of 0.94, shows a slight reduction compared to Experiment 2 (AUC-ROC = 0.98). The possible reason for the reduction in AUC-ROC between Experiment 3 and Experiment 2 is that adding vehicle-related features in the Triple Merge dataset introduced more complexity and feature interactions, which may have added noise or redundancy that slightly impacted the classifier’s ability to separate the classes ideally. Additionally, while class balance improved, including more diverse features may have increased overlap in decision boundaries, leading to a marginal drop in overall discrimination capability. Despite this reduction, the model still maintains strong predictive performance, confirming the effectiveness of the Triple Merge dataset in improving overall classification accuracy.

Several evaluation metrics were computed over several runs using various random seeds in order to guarantee the statistical dependability and resilience of the baseline classification results. This let variance in model performance to be captured. Along with mean accuracy, precision, recall, F1-score, AUC-ROC, the reported results also show standard deviations across trials. Moreover, paired t-tests and other statistical significance tests were used to evaluate whether observed performance variations between top-performing models (ET, RF, and DT) were meaningful. This comparative framework supports the reproducibility of the framework and helps to increase the credibility of choices of models.

As explored in Table 12, the baseline experiments results show a consistent improvement in classification performance as datasets were merged, with the Triple Merge dataset achieving the highest accuracy and F1-score (macro) across all models. The ET classifier consistently recorded the best results in all three experiments, demonstrating its superior generalization and robustness in handling large datasets. The ET achieved 92.23% accuracy with F1-score: 60.263% in the Traffic_People dataset, significantly improved to 94.95% accuracy with F1-score: 93.265% in the Dual Merge dataset, and reached its highest performance in the Triple Merge dataset with 94.95% accuracy with F1-score: 93.697%. These results confirm that ET is the most effective classifier for crash severity prediction, benefiting from the progressive enrichment of features across all dataset configurations.

Feature generation

Handling a large-scale dataset with 2,263,315 records and 44 features presents significant challenges in computational complexity, model training efficiency, and feature selection36,37. With such vast data, ensuring optimal preprocessing, memory management, and algorithm scalability becomes critical. The dataset contains diverse attributes from three merged sources, requiring advanced feature engineering techniques to extract the most relevant patterns. Balancing model accuracy, training time, and generalization is essential, as overfitting, processing overhead, and feature redundancy can negatively impact predictive performance. In this step, the application of feature generation stands as a vital step because it produces new meaningful features that boost the predictive accuracy of ML models. The Triple Merge experiment benefits from engineered features that help the model better understand complex variable relationships despite its existing human and crash and vehicle-related attributes. When models undergo feature generation, they receive new features and transformed features and redundancy reduction, which helps them detect patterns better, reduce bias, and enhance generalization. This step plays a crucial role in achieving better classification accuracy and increasing F1-score and false positive and false negative minimization thus developing a more dependable crash severity prediction model that is easily interpretable.

To enhance the performance of the Triple Merge experiment, several new features were generated to provide richer contextual insights into crash severity predictions. From CRASH_DATE, additional time-based features such as HOUR, DAY, MONTH, DAY-OF-WEEK, SEASON, PEAK-HOUR, and WEEKEND were extracted to capture temporal patterns and traffic density variations. From VEHICLE_YEAR, a new feature VEHICLE_AGE was derived to assess the impact of vehicle condition on crash severity. Additionally, using CRASH_TYPE and DAMAGE, the DAMAGE_LEVEL feature was created to provide a more refined representation of vehicle impact severity. Furthermore, by utilizing LONGITUDE, LATITUDE, and CRASH_DATE, an external API (http://api.zippopotam.us) was used to fetch the ZIP_CODE of the crash location, while historical weather conditions at the time of the crash were predicted based on geolocation and time data. These newly generated features significantly enrich the dataset, allowing ML models to capture more complex interactions and improve overall prediction accuracy. The following Pseudocode explains the process of extracting the ZIP_CODE of the crash record and then predicting the WEATHER condition from the ZIP_CODE, LONGITUDE, LATITUDE, and CRASH_DATE.

Based on the previous Pseudocode for weather forecasting based on the CRASH_DATE and locations of traffic crashes, we have generated a simulated map for Illinois State in USA as presented in Fig. 7 where the position of crashes are identified by red and blue points such that:

-

Red points: indicate higher temperatures (warmer areas). The deeper the red color, the hotter the temperature at that location.

-

Blue points: These indicate lower temperatures (colder areas). The deeper the blue color, the colder the temperature at that location.

Including the ZIP_CODE and weather-related elements into the crash severity prediction system aims to improve the spatial and environmental contextualizing of crash events. The analysis of localized social and infrastructure vulnerabilities made possible by the ZIP code helps to better understand how injury and death outcomes in motor vehicle crashes are influenced38. Likewise, including weather conditions helps one to grasp external risk factors influencing crash intensity, especially when their accuracy is guaranteed by statistical and geospatial validation methods39. These characteristics improve the model’s capacity to more exactly represent real-world crash dynamics.

To summarize the feature generation step, we applied a thorough feature generating approach to improve the predictive capability of the ML models. Ten new features were created from pre-existing characteristics to provide deeper temporal, geographic, and contextual understanding, as Table 13 shows. By encoding elements including time-of-day, vehicle age, impact severity, and exact crash location, these built-in tools seek to expose latent trends connected to crash severity. More exact classification and strong model performance are made possible by this expanded feature space.

As explained in Table 14, after applying the feature generation step on the Triple Merge dataset, the number of features increased in the new generated dataset from 44 to 54, reflecting the newly generated features that enhance the dataset’s predictive capabilities.

In this phase, we comprehensively evaluate the impact of feature generation on model performance by applying the best winning three ML classifiers from Experiment 3—ET, RF, and DT—to the Triple Merge dataset after feature generation. These experiments aim to assess whether the enriched dataset improves model classification accuracy, enhances generalization, and reduces misclassification errors, thereby further refining the predictive capabilities of the selected classifiers in traffic crash severity analysis. As presented in Table 15, the results obtained after applying feature generation on the Triple Merge dataset show an overall improvement in model performance, particularly in accuracy, precision, and F1-score (macro). The top-performing model remains the ET classifier, achieving the highest accuracy, 95.17%, and F1-score, 93.97%, confirming its superior ability to handle large feature spaces and capture complex interactions within the dataset. The RF classifier follows closely with an accuracy of 94.53% and an F1-score of 93.21%, maintaining its strong performance, especially in recall with 97.76%, which indicates its effectiveness in correctly identifying injury-related crashes. While slightly lower in accuracy with 93.01% and F1-score 91.54%, the DT classifier still demonstrates a balanced precision-recall tradeoff, highlighting its ability to classify crash severity with reasonable effectiveness.

As explored in Fig. 8, the Confusion Matrix for the ET classifier after feature generation demonstrates a further improvement in classification performance, with 98.51% of injury cases correctly identified (high recall) and 87.00% of non-injury cases accurately classified, reflecting a strong balance between sensitivity and specificity. The false positive rate has slightly decreased to 13.00%, showing that the model has become more precise in distinguishing crash severity levels. The ROC Curve, with a score of 0.99, shows a highly improved performance compared to previous experiments. This enhancement is directly attributed to the newly generated features, which provided richer contextual information about time, vehicle condition, crash impact, and environmental factors. The significant increase in AUC-ROC indicates that the model’s ability to distinguish between injury and non-injury crashes has substantially improved, demonstrating the feature generation’s effectiveness in refining the ET classifier’s predictive capabilities.

Crash severity prediction enhancement using clustering methods

In this stage, two clustering methods, K-Means, and HDBSCAN, process the Triple Merge dataset to improve the performance of RF, ET, and DT classification models. The primary purpose of clustering involves organizing crash instances into groups according to their underlying patterns so models can identify similarities and variations between groups. HDBSCAN automatically determines clusters of different densities, making it suitable for working with data sets of various complexity levels. K-Means clustering divides the data into clusters that were defined before the analysis. Through clustering preprocessing, classification models receive better training conditions within homogeneous groups, producing cleaner boundaries and leading to improved prediction accuracy, better recall rates, and enhanced model generalization in crash severity classification.

As presented in Table 16, integrating K-Means and HDBSCAN clustering with the Triple Merge dataset further enhanced classification performance across all models. The ET classifier consistently achieved the highest accuracy and F1 score, reinforcing its effectiveness as the best-performing model. Under K-Means clustering, the ET recorded an accuracy of 95.46% and an F1-score of 94.34%, while HDBSCAN slightly improved ET’s performance to 95.49% accuracy and an F1-score of 94.37%, demonstrating the impact of density-based clustering on refining class separation. The RF classifier also showed strong results, achieving 94.81% accuracy with HDBSCAN and 94.74% with K-Means, indicating that clustering helped optimize feature distribution and improve classification boundaries. The DT exhibited a moderate increase in performance, with accuracy improving to 93.35% under HDBSCAN, highlighting that clustering helps reduce misclassification errors in more interpretable models. Overall, HDBSCAN outperformed K-Means in most cases, particularly in F1-score and recall, suggesting that its flexibility in detecting variable-density clusters contributed to better class separation. These results confirm that applying clustering before classification enhances model accuracy and generalization, particularly for ensemble-based classifiers like ET and RF classifiers.

As presented in Fig. 9, the confusion matrices for ET with K-Means and HDBSCAN clustering demonstrate an overall improvement in classification performance, with both methods significantly enhancing the model’s ability to distinguish between injury and non-injury crashes. In the K-Means clustering approach, the model achieved a true positive rate of 98.69%, correctly identifying most injury cases but misclassified 12.41% of non-injury cases as injuries, indicating a slight bias toward predicting injuries. On the other hand, HDBSCAN clustering yielded slightly better results, with a higher true positive rate (98.72%) and a lower false negative rate (1.28%), suggesting improved accuracy in classifying injury cases. Additionally, the false positive rate decreased slightly to 12.40%, indicating a better overall balance. The comparison between the two clustering methods shows that HDBSCAN marginally outperformed K-Means, likely due to its ability to detect variable-density clusters, making it more effective in capturing localized crash patterns. Despite these improvements, the false positive rate remains above 12%, suggesting that further refinements in feature selection or clustering parameters may be needed to enhance precision. HDBSCAN is the preferred clustering approach for improving the ET classifier’s predictive capability in crash severity classification.

Across the six clustering experiments, KMeans clustering was applied with a fixed number of clusters set to 3 in all three configurations involving RF, ET, and DT classifiers. In contrast, HDBSCAN was used with its inherent ability to automatically determine the optimal number of clusters based on data density, without specifying a fixed cluster count. In none of the experiments were internal clustering validation metrics—such as silhouette score explicitly computed or reported. Instead, the focus was on evaluating the added value of cluster-based features through standard classification performance metrics such as accuracy, precision, recall, F1-score, and AUC-ROC.

Integrating clustering methods in this paper was done to enrich the dataset with latent spatial patterns that might enhance crash severity prediction, not to get optimal unsupervised segmentation. The effectiveness of the clustering was indirectly confirmed by the performance of downstream machine learning models since it functioned as a feature engineering enhancement rather than a single analysis goal. Therefore, traditional internal validation measures were not used since its influence on classification results was the ultimate criteria for clustering usefulness.

Oversampling

To further enhance the classification performance of the Triple Merge dataset, oversampling techniques were applied to address class imbalance in the target feature “CRASH_TYPE” and improve the model’s ability to correctly classify injury-related crashes. Since the ET classifier was identified as the best-performing classifier across all experiments, it was selected as the base model for evaluating the impact of oversampling. Oversampling is a crucial technique in handling imbalanced datasets40 as it aims to balance the dataset by increasing the representation of the minority class (injury cases), reducing misclassification bias, and improving recall and F1-score. In this study, four different oversampling methods were implemented:

-

1.

RandomOverSampler: Generates duplicate instances of the minority class to balance the dataset.

-

2.

SMOTE (Synthetic Minority Over-sampling Technique): Creates synthetic data points by interpolating between existing minority class samples.

-

3.

BorderlineSMOTE: Focuses on generating synthetic samples near the decision boundary, improving the model’s ability to distinguish between classes.

-

4.

ADASYN (Adaptive Synthetic Sampling): Generates synthetic samples based on the density of the minority class, prioritizing areas where the class imbalance is more severe.

The ET classifier—identified as the best performer—was fine-tuned using Grid Search with fivefold cross-valuation to maximize important parameters including the number of estimators, maximum depth, and feature selection strategy in later phases including clustering and oversampling. To guarantee strong performance assessment across imbalanced classes, evaluation metrics comprised accuracy, precision, recall, F1-score, AUC-ROC, and confusion matrices.

The objective of the oversampling step is to increase the representation of injury-related crash cases. This allows the classification models to better generalize across both classes, reduce misclassification errors, and improve recall and F1 Scores, which are crucial for evaluating model performance in imbalanced settings. Before applying oversampling, a stratified k-fold cross-validation with k = 5 was conducted to ensure fair distribution of both classes in each training and validation fold. This stratification process ensures that the model evaluates performance across multiple training subsets, reducing the risk of overfitting and providing a more robust and reliable classification accuracy assessment. By integrating oversampling with stratified k-fold cross-validation, the approach improves class balance and enhances model generalization, leading to better predictive performance in crash severity classification.

Table 17 explores the results of applying four oversampling techniques on the best-performing ET classifier, demonstrating a significant improvement in classification performance, particularly in recall, F1-score (macro), and AUC-ROC, confirming the effectiveness of synthetic data generation in balancing the dataset. SMOTE achieved the highest F1-score with 94.57% and strong recall with 93.63%, making it the best-performing technique, as it generates synthetic samples by interpolating between existing minority class instances, improving model generalization. BorderlineSMOTE performed similarly with F1-score = 94.57%, demonstrating that placing synthetic samples near decision boundaries enhances classification effectiveness. ADASYN achieved the highest AUC-ROC score with 99.20%, indicating excellent discrimination between injury and non-injury cases, though its F1-score of 94.56% was slightly lower, suggesting that some synthetic samples might introduce minor noise. RandomOverSampler, while improving recall, performed the weakest among the four techniques with F1-score = 93.99%, as it duplicates existing samples rather than generating new patterns. Overall, oversampling significantly enhanced classification performance, with SMOTE and BorderlineSMOTE emerging as the most effective techniques for crash severity classification, ensuring better generalization and improved predictive capabilities when applied to the ET classifier.

Figure 10 explores the confusion matrixes for the four oversampling techniques a clear improvement in handling class imbalance, particularly by reducing false positives while maintaining high recall. These results confirm that oversampling successfully enhanced class balance, reducing bias toward the majority class and improving overall classification accuracy.

Feature selection

As the final step in optimizing the Triple Merge dataset, a Feature Selection process was applied to enhance model performance by removing redundant and less informative features, improving efficiency, and increasing classification accuracy. Feature selection aims to identify the most relevant features contributing to crash severity prediction while eliminating unnecessary attributes that may introduce noise or computational overhead. We employed a hybrid approach combining CFS with RFE to achieve this. The CFS evaluates feature dependencies, selecting attributes that maximize relevance to the target variable while minimizing redundancy among themselves. Meanwhile, RFE iteratively eliminates the least important features, ensuring that only the most significant predictors remain. This combined approach offers several advantages, including enhanced model interpretability, reduced overfitting, and improved computational efficiency. By eliminating irrelevant or highly correlated features, the classification models trained on the Triple Merge dataset experience a boost in accuracy, F1 score, and overall stability, making the crash severity prediction process more reliable and robust.

As explained in Fig. 11, a performance curve represents the relationship between the number of selected features and the ET classifier’s classification accuracy. The accuracy improves as the number of selected features increases, reaching peak performance between 35 and 40 features (above 0.96 accuracy). Beyond this point, accuracy slightly declines, indicating diminishing returns and potential redundancy. This highlights the importance of selecting an optimal feature set to balance model complexity and predictive performance.

Table 18 explores the Top-10 feature selection sets with their accuracies. The highest accuracy was achieved with 37 features 96.19%, indicating that this feature set provided the best balance of information and generalization. As the number of features decreased slightly, the accuracy remained high but fluctuated, with 36 and 35 features achieving 96.14% and 96.06% accuracy, respectively.

As presented in Table 19, the feature set 37 achieved the highest accuracy (0.96193) with strong performance across all metrics: precision 95.98%, recall 98.77%, and F1-score 95.29%. The AUC-ROC score 99.28% indicates excellent classification ability. This feature selection improved model efficiency by reducing redundancy while maintaining high accuracy and generalization.

Figure 12 explores the confusion matrix for the highest feature set 37. The confusion matrix demonstrates the effectiveness of the feature selection process in refining classification performance within the Triple Merge dataset. The model achieves a high true positive rate (98.77%), correctly identifying most instances of the positive class and maintaining a low false positive rate (10.08%). The false negative rate (1.23%) is minimal, indicating that the model rarely misclassifies positive instances as negatives. These results confirm that the dataset imbalance has been successfully addressed, leading to an optimal balance between the two classes and enhancing overall classification accuracy.

Table 20 summarizes the most important features selected by both CFS and RFE techniques. This comparison highlights the overlapping and unique contributions of each method in identifying the key variables influencing crash severity.

Discussion

The results obtained from this study demonstrate the effectiveness of the proposed ML framework for traffic crash severity prediction. The study systematically applied a multi-stage data integration approach, beginning with the Triple Merge dataset, which combines the Traffic People, Traffic Crashes, and Traffic Vehicles datasets. Integrating human, crash, and vehicle-related attributes enriched the dataset, providing a more holistic representation of accident scenarios. This comprehensive dataset facilitated improved predictive accuracy and robustness in crash severity classification. The study tackled data quality challenges by applying rigorous data cleaning, feature selection, and encoding techniques, ensuring consistency and completeness in the dataset. The progressive dataset merging process significantly enhanced predictive performance by integrating relevant attributes. At the same time, feature engineering introduced meaningful transformations such as crash severity index calculations, temporal features, and vehicle condition parameters. These feature enhancements gave the ML models richer contextual information, enabling better discrimination between crash types. The baseline ML experiments revealed that ensemble models, particularly ET, RF, and DT, consistently outperformed other classifiers. The Triple Merge dataset exhibited superior classification performance across all models, validating the hypothesis that integrating driver, crash, and vehicle-specific data enhances prediction accuracy. The best-performing model, the ET classifier, achieved an accuracy of 95.17% and an F1 score of 93.97% after feature generation, confirming its robustness in handling complex feature interactions.

Clustering techniques such as K-Means and HDBSCAN were applied to improve classification accuracy further. The results indicated that HDBSCAN outperformed K-Means in refining model performance, particularly in distinguishing between minor and severe crashes. Additionally, oversampling techniques were employed to mitigate class imbalance in the dataset. The SMOTE and Borderline-SMOTE techniques yielded the highest F1 scores, effectively improving model generalization while reducing misclassification biases. Feature selection improved model efficiency by reducing dimensionality while retaining high-impact variables. The best feature subset (Feature Set 37) achieved an accuracy of 96.19%, precision of 95.98%, recall of 98.77%, and an AUC-ROC of 99.28%, indicating optimal model performance. This step confirmed that selecting the most relevant features minimizes noise and redundancy, leading to better interpretability and computational efficiency.

An ablation study was carried out in order to better grasp the unique contributions of the fundamental elements of the proposed framework. We progressively combined clustering (KMeans—HDBSCAN), feature selection (CFS and RFE), and oversampling (RandomOverSampler) beginning from the baseline model trained on the original Triple Merge dataset. Every configuration’s performance was gauged by means of accuracy, F1-score, and AUC-ROC measures. By capturing latent patterns in crash sites, clustering notably improved model generalization; feature selection improved model interpretability and decreased overfitting. Applied to the best-performing classifier (ET), oversampling notably resolved class imbalance. All three methods taken together produced the best predictive performance, so verifying the additive value of every pipeline component.

A comparative analysis with recent studies, as presented in Table 21, highlights that the proposed framework outperforms existing models in terms of predictive accuracy, feature integration, and dataset scale. Unlike previous studies focusing on single-source datasets or limited feature representations, our approach integrates multiple datasets, incorporates feature engineering, and applies advanced clustering and oversampling techniques to enhance crash severity prediction. The model’s high recall and AUC-ROC scores validate its effectiveness in real-world crash prediction applications. In conclusion, this study presents a robust and scalable ML framework for accurate crash severity prediction. Integrating multi-source datasets, feature selection, clustering, and oversampling techniques significantly enhance classification performance. These results contribute to developing data-driven traffic safety policies and accident mitigation strategies, ensuring safer and more intelligent transportation systems.

As presented in Table 18, our model integrates multi-source traffic crash data for a broader scope. Additionally, we employ advanced feature selection (CFS-RFE), clustering (K-Means, HDBSCAN), and oversampling techniques (SMOTE, ADASYN), ensuring better balance and predictive power. While the authors of10 rely on traditional feature selection and RF/KNN, our ensemble approach (ET, RF, DT) achieves 96.19% accuracy on a much larger, diverse dataset, making it more robust and adaptable for real-world crash prediction. The results of this study can make a real difference for stakeholders to improve road safety. Traffic managers can use the model to spot where and when severe crashes are most likely to happen, helping them take targeted actions—like adjusting traffic signals, increasing patrols, or improving road design in high-risk areas. The results can help direct more successful public awareness efforts and better traffic policies by revealing how elements including driver conduct, vehicle type, and road conditions mix to raise crash severity. The modular foundation allows it to be included into smart transportation systems to provide improved planning choices and faster reaction to collisions. Basically, this work offers useful tools and ideas to enable communities to make their roadways safer and more sensitive to actual issues.

Conclusion and future works

This study presents a comprehensive and data-driven approach to traffic crash prediction, demonstrating significant advancements in classification accuracy, feature engineering, and dataset optimization. Unlike conventional studies that rely on limited datasets and basic feature selection techniques, our model leverages an extensive dataset of more than 2.26 million traffic records, ensuring a robust and generalizable framework. The research began by constructing the Triple Merge dataset, integrating multiple data sources to enhance predictive insights. Feature generation techniques were applied to extract critical temporal, spatial, and environmental attributes, such as weather conditions, peak-hour indicators, and vehicle age, enriching the dataset with context-aware information. Clustering methods (K-Means and HDBSCAN) were introduced to refine data representation further, enabling better crash pattern segmentation. Applying oversampling techniques (SMOTE, Borderline-SMOTE, ADASYN, and RandomOverSampler) effectively mitigated class imbalance, a standard limitation in real-world crash data. The feature selection phase, utilizing CFS and RFE, further optimized the dataset by removing redundant and less informative features. Among all applied classification models, the ET classifier emerged as the best-performing model, achieving an outstanding accuracy of 96.19%, surpassing recent state-of-the-art models. The superior performance of our model can be attributed to the combination of extensive feature engineering, robust dataset balancing, and advanced selection techniques, setting a new benchmark for traffic crash analysis. Although the proposed framework exhibits robust predictive capabilities, its generalizability may be restricted by the utilization of region-specific data. Real-time and multi-regional datasets should be included into future development. Due to computational restrictions, oversampling was done only to the top-performing model (ET); extending it to other models might provide more information. Another restriction is the lack of contextual elements including infrastructure, driver behavior, and weather; future datasets should thus contain them. Finally, including AutoML or dynamic ensemble techniques could help to improve model adaptability and efficiency even more.

Data availability

The experimental data supporting the findings of this study are available from https://data.cityofchicago.org/Transportation/Traffic-Crashes-People/u6pd-qa9d/about_data. https://data.cityofchicago.org/Transportation/Traffic-Crashes-Crashes/85ca-t3if/about_data. https://data.cityofchicago.org/Transportation/Traffic-Crashes-Vehicles/68nd-jvt3/about_data.

References

Ashqar, H. I., Alhadidi, T. I., Elhenawy, M. & Jaradat, S. Factors affecting crash severity in roundabouts: A comprehensive analysis in the Jordanian context. Transp. Eng. 17, 100261. https://doi.org/10.1016/j.treng.2024.100261 (2024).

Champahom, T. et al. Tree-based approaches to understanding factors influencing crash severity across roadway classes: A Thailand case study. IATSS Res. 48, 464–476. https://doi.org/10.1016/j.iatssr.2024.09.001 (2024).

Kumar, P., Jain, J. K. & Singh, G. Analysing crash severity on expressways in India: Statistical and machine learning models. Proc. Institut. Civil Eng. – Transp. https://doi.org/10.1680/jtran.24.00071 (2025).

Das, S., Das, C., Sarma, R., Talukdar, P., Barman, A. & Hubballi, R. Machine learning based approach for predicting the impact of time of day on traffic accidents. In Proceedings of the 2023 26th international conference on computer and information technology (ICCIT), 13–15 Dec. 2023, pp. 1–5 (2023).

Goswami, N.G., Sharma, P., Arora, A. & Singh, N. Traffic improvisation by identifying the accident locations using ML/Data mining approaches. In Proceedings of the 2022 4th international conference on advances in computing, communication control and networking (ICAC3N), 16–17 Dec. 2022, pp. 127–132 (2022).