Abstract

With the advancement of neural networks, end-to-end neural automatic speech recognition (ASR) systems have demonstrated significant improvements in identifying contextually biased words. However, the incorporation of bias layers introduces additional computational complexity, requires increased resources, and leads to redundant biases. In this paper, we propose a Context Bias Adaptive Model, which dynamically assesses the presence of biased words in the input and applies context biasing accordingly. Consequently, the bias layer is activated only for input audio containing biased words, rather than indiscriminately introducing contextual bias information for every input. Our findings indicate that the Context Bias Adaptive Model effectively mitigates the adverse effects of contextual bias while substantially reducing computational costs.


Introduction

With the rapid rise of deep learning technologies, significant breakthroughs have been achieved in the research of end-to-end Automatic Speech Recognition (E2E ASR) models. Prominent methods, such as Connectionist Temporal Classification (CTC)1,2,3, Recurrent Neural Network Transducer (RNN-T)4,5,6, and Attention-based Encoder-Decoder (AED)7,8,9, have been widely adopted on a large scale. However, as research delves deeper, the issue of low recognition accuracy for contextually biased words has become increasingly apparent. These words, infrequent during training, are often challenging for the model to recognize. Examples include personal names, geographic locations, and specialized terminology from various fields. Although these words appear infrequently, they often carry crucial information and significantly impact the semantic meaning of sentences.

Leveraging external information to guide Automatic Speech Recognition (ASR) models in accurately recognizing contextually biased words is an effective approach. Current research strategies can be broadly categorized into two types. The first type involves increasing the probability of biased words during inference. This can be done through external language models, shallow fusion, and on-the-fly rescoring. For instance, shallow fusion combines outputs from a neural network and an external language model during decoding10,11. On-the-fly rescoring re-evaluates the scores of generated hypotheses during decoding to boost biased words4. This method is straightforward and effective but lacks flexibility in adjusting the list of biased words.

The second approach injects contextual bias information during encoding. This method involves encoding contextual entities using a bias encoder along with a position-aware attention mechanism to direct the ASR model’s focus towards them. Studies such as12,13,14,15,16 have explored this strategy. Moreover, attention mechanisms have been employed to integrate contextual information into end-to-end ASR systems, including RNN-T and transformer transducers, as evidenced in12,17,18.

Integrating a bias encoder to incorporate contextual bias into the model is a potent strategy, enhancing the recognition accuracy of contextually relevant terms. However, this approach is not without its drawbacks. One significant issue is the introduction of unnecessary biasing information, which can disrupt the model’s ability to accurately recognize non-biased nouns, potentially leading to errors. This disruption is particularly problematic during audio recognition tasks, especially for phonetically similar words. In such cases, the superfluous biasing information might cause the model to misclassify non-biased nouns as proper nouns. This misclassification not only hampers the overall accuracy of the model but also impacts its reliability in diverse real-world applications. The problem is further exacerbated when dealing with a large number of biased words, where the negative effects of over-biasing can outweigh the benefits, leading to a noticeable degradation in performance. Consequently, while the integration of a bias encoder is advantageous for certain contexts, careful consideration and balance are required to avoid the pitfalls of over-biasing and ensure the robustness of the ASR system.

Therefore, in contexts where biasing information is redundant or inappropriate, disabling the biasing function can conserve substantial computational resources and improve the overall efficiency of the system. This adaptive approach ensures that the model only utilizes biasing when it is beneficial, avoiding unnecessary complexity and potential misclassifications. By dynamically managing the integration of contextual bias, the system can maintain optimal performance without the overhead associated with processing irrelevant or excessive bias information. This strategy not only enhances computational efficiency but also ensures the ASR system remains robust and accurate across a wide range of scenarios. This careful management of bias integration exemplifies a balanced approach to leveraging contextual information, providing significant improvements in both performance and resource utilization.

In this paper, we introduce the Context Bias Adaptive Model aimed at mitigating the negative impacts stemming from excessive contextual bias incorporation. Building on prior work, our approach extends the contextual bias adapter by integrating a Bias Detector. This detector evaluates the presence of biased words in the input, dynamically enabling or disabling the adapter to suit current encoding requirements. Our experimental results demonstrate that this adaptive approach, driven by real-time input analysis, effectively reduces the detrimental effects of over-biasing. Importantly, it achieves significant performance improvements across different datasets while conserving computational resources for ASR systems. These findings highlight the advantages of integrating detection mechanisms into bias adjustment strategies in speech recognition systems, offering a robust solution to enhance model accuracy and efficiency.

Methods

Background

Prior work proposed the Context Bias Model19, which we redesign and improve. The model (Fig. 1) consists of a backbone network using the Conformer encoder20 for robust global and local feature extraction, and a bias network containing a Biasing Module plus a newly added Bias Detector.

The backbone encodes audio \(x_t\) into embeddings \(f^{emb}\). The bias network encodes the contextual bias words into \(f^{Bemb}\), from which a bias vector \(b_t\) is formed and weighted by the Detector output \(\omega _t\). The final encoding \(f^{emb} + \omega _t \cdot b_t\) is decoded via CTC beam search.

The Biasing Module19 uses attention mechanisms21 to fuse audio with bias word embeddings. It consists of a Bias Encoding Module converting biased words \(C = [c_1, \ldots , c_{m+1}]\) into vectors \(F\), and a Context Fusion Module that integrates audio and bias features to improve recognition.

Integration employs the encoder output as queries \(Q\) and bias encoder outputs as keys \(K\) and values \(V\) in attention fusion. A filtering mechanism selects relevant bias words, updating the decoder to enhance sensitivity.
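As a minimal sketch of the attention fusion described above, the following assumes single-head scaled dot-product attention; the text does not state the exact scoring function, so this choice is an assumption. Encoder frames act as queries and the encoded bias words act as keys and values. The function name `cross_attention` and the toy vectors are illustrative only, and the filtering mechanism is not modelled.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention producing one bias vector per frame.

    queries: list of T audio-frame vectors (encoder output, Q)
    keys, values: one vector per bias word (bias encoder output, K and V)
    Returns (bias_vectors, attention_weights).
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this frame to every bias word.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w[j] * values[j][c] for j in range(len(values)))
               for c in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy example: 2 frames, 3 bias words, dimension 2 (all values hypothetical).
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
bias_vectors, attn = cross_attention(Q, K, V)
```

In this toy case the first frame attends mostly to the first bias word and the second frame to the second, which is the behaviour the fusion module relies on.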

Prior studies demonstrate that incorporating gating mechanisms22 and cross-attention23 offers valuable insights for our work by further improving selective contextual bias integration.

Bias detector

As shown in Fig. 1, we introduce a bias detector to optimize the context adapter. The detector determines whether the current audio segment requires context-specific keyword bias enhancement, using the feature representations from the encoder to make this decision. During inference, it regulates the context bias produced by a pre-trained keyword enhancement model, which simplifies training. Specifically, positional information is first added to the intermediate representation, which is then transformed into a non-linear space by a feedforward layer with a hyperbolic tangent activation to capture complex data patterns. The output of this layer is passed through a second feedforward layer that projects it to a scalar. This scalar is then mapped to the range [0, 1] by a sigmoid-type activation (the hardsigmoid described in the Detector subsection), yielding the final \(\omega _t\).

Detector

Position encoding is a mechanism used in models to introduce positional information of elements in a sequence. Since the model itself does not contain any recurrent or convolutional structures, it cannot directly capture the sequential information of the data. Position encoding enables the model to utilize this positional information by adding it to each element of the input sequence.

where \(\text {pos}\) represents the position index, i represents the dimension index, and \(d_{\text {model}}\) is the dimension of the embedding vectors in the model. This way, each dimension will have a different frequency with varying positions, allowing the model to identify the relative positions of elements in the sequence through position encoding.
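The definitions above match the standard sinusoidal position encoding of the Transformer. Assuming that this is the form intended here, it reads:

$$\begin{aligned} PE_{(\text {pos},\, 2i)}&= \sin \left( \frac{\text {pos}}{10000^{2i/d_{\text {model}}}} \right) , \\ PE_{(\text {pos},\, 2i+1)}&= \cos \left( \frac{\text {pos}}{10000^{2i/d_{\text {model}}}} \right) \end{aligned}$$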

After generating the position encodings, these encoding vectors are directly added to the embedding vectors of the input sequence. Specifically, if each element of the input sequence is transformed into a vector of the same dimension through an embedding layer, we can add the position encoding vector at the corresponding position to obtain a new vector. This new vector contains both the information of the original element and the positional information of the element in the sequence. This process can be expressed as:

This new vector will then serve as the input to the model, participating in the calculations of subsequent self-attention layers and feedforward neural networks. In this way, the model can consider not only the content of each element in the sequence but also their positions, thus better capturing the sequential information of the data. By learning the patterns of keyword occurrences, the model indirectly obtains the positional information of the keywords.
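The two steps described above (generating the encodings, then adding them to the embeddings) can be sketched as follows. The sketch assumes the standard sinusoidal encoding with base 10000; the function names are illustrative and not taken from the original implementation.

```python
import math

def positional_encoding(seq_len, d_model, base=10000.0):
    """Sinusoidal position encodings as a seq_len x d_model nested list."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for k in range(d_model):
            i = k // 2  # dimensions 2i and 2i+1 share one frequency
            angle = pos / (base ** (2 * i / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            pe[pos][k] = math.sin(angle) if k % 2 == 0 else math.cos(angle)
    return pe

def add_position(embeddings):
    """Element-wise sum of each embedding and its position encoding."""
    seq_len, d_model = len(embeddings), len(embeddings[0])
    pe = positional_encoding(seq_len, d_model)
    return [[e + p for e, p in zip(e_row, p_row)]
            for e_row, p_row in zip(embeddings, pe)]

# Zero embeddings make the added encoding directly visible.
emb = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)]
out = add_position(emb)
```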

In the Detector module, the intermediate representation \(f_{\text {emb}}\) is processed through two sequential feedforward layers. The first layer applies a hyperbolic tangent (\(\tanh\)) activation to project \(f_{\text {emb}}\) into a non-linear space, enabling the capture of complex patterns. The resulting vector is then passed through a second layer followed by a hardsigmoid activation, which scales the output to the [0, 1] range to produce the final weight \(\omega _t\).
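Using the parameter names \(W_1\), \(W_2\), \(b_1\), and \(b_2\) that appear later in this section, the two layers can be summarized as below. This is a sketch consistent with the description rather than a verbatim equation: \(f_t\) denotes the intermediate representation at frame t (with positional information added) and \(h_t\) is a symbol introduced here for the hidden activation.

$$\begin{aligned} h_t&= \tanh \left( W_1 f_t + b_1 \right) , \\ \omega _t&= \textrm{hardsigmoid}\left( W_2 h_t + b_2 \right) \end{aligned}$$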

Here, the hyperbolic tangent function is applied element-wise. For any scalar input x, the \(\tanh\) function is defined as:

$$\begin{aligned} \tanh (x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} \end{aligned}$$

The \(\textrm{hardsigmoid}\) function is a piecewise linear approximation of the sigmoid function, often used for its computational efficiency. For any scalar input x, it is defined as:

where \(\textrm{clip}(a, b, c)\) restricts the value of a to lie within the range [b, c]. This activation squashes the input to the [0, 1] interval and is applied element-wise.
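The slope and offset of the piecewise linear function are not stated in the surrounding text. One widely used definition, assumed here for illustration (it is the form implemented by PyTorch's `Hardsigmoid`), is:

$$\begin{aligned} \textrm{hardsigmoid}(x) = \textrm{clip}\left( \frac{x}{6} + \frac{1}{2},\, 0,\, 1 \right) \end{aligned}$$

Some libraries have used a slope of 0.2 instead of 1/6. Either variant saturates at 0 and 1, which is the property the detector depends on.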

Here, \(W_1\), \(W_2\), \(b_1\), and \(b_2\) are trainable weights.
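The detector's forward pass for a single frame can be sketched as follows. The hardsigmoid variant (slope 1/6, offset 1/2) is an assumption, the weights are hand-picked toy values rather than trained parameters, and `detector_weight` is a hypothetical name.

```python
import math

def hardsigmoid(x):
    """Assumed variant: clip(x / 6 + 1 / 2, 0, 1)."""
    return min(1.0, max(0.0, x / 6.0 + 0.5))

def detector_weight(f_t, W1, b1, W2, b2):
    """Map one frame vector to a gate value in [0, 1].

    f_t: frame vector of length d
    W1:  hidden x d weight matrix, b1: hidden-sized bias vector
    W2:  hidden-sized weight vector, b2: scalar bias
    """
    # First feedforward layer with tanh activation.
    h = [math.tanh(sum(w * x for w, x in zip(row, f_t)) + b)
         for row, b in zip(W1, b1)]
    # Second layer projects the hidden vector to a scalar.
    z = sum(w * hj for w, hj in zip(W2, h)) + b2
    return hardsigmoid(z)

# Toy parameters (hypothetical, not trained).
W1 = [[1.0, 0.0], [0.0, 1.0]]
b1 = [0.0, 0.0]
W2 = [3.0, 3.0]
b2 = 0.0

omega_hi = detector_weight([5.0, 5.0], W1, b1, W2, b2)
omega_lo = detector_weight([-5.0, -5.0], W1, b1, W2, b2)
omega_mid = detector_weight([0.0, 0.0], W1, b1, W2, b2)
```

Because hardsigmoid saturates, strongly positive or negative pre-activations give exactly 1 or 0, which suits a gate that is later thresholded.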

Training

During training, the weight \(\omega _t\) output by the gating layer is multiplied by the bias vector \(b_t\), and the intermediate encoder representation is updated through component-wise addition:

$$\begin{aligned} \hat{f}^{emb} = f^{emb} + \omega _t \cdot b_t \end{aligned}$$

The encoder and the context bias module are initialized from a pre-trained context model. The pre-trained parameters remain frozen, while the detection module is trained from scratch. The detector interacts with the bias layer solely through multiplication by the scalar \(\omega _t\), so the architecture of the pre-trained context ASR model is unchanged. Consequently, neural adapters can be integrated quickly and cheaply, requiring only a few epochs of training.

Loss function

The context adapter is trained using an augmented Connectionist Temporal Classification (CTC) loss24, which incorporates a regularization term. The CTC loss is designed to enable sequence-to-sequence learning without requiring explicit alignment between input and output sequences. In this context, we apply regularization to encourage the model to produce smaller gating weights when context bias is unnecessary. The total loss is defined as:

where:

- \(L_{\text {CTC}}\) is the standard CTC loss used for aligning input sequences with target labels, defined as:

  $$\begin{aligned} L_{\text {CTC}} = -\log \left( \sum _{\pi \in \mathcal {B}(\textbf{l})} \prod _{t=1}^T p(\pi _t | \textbf{x}) \right) \end{aligned}$$

  Here, \(\textbf{x}\) is the input sequence of length T, \(\textbf{l}\) is the target label sequence of length U, \(\pi\) is a valid alignment path of length T that maps to \(\textbf{l}\) after removing blanks and merging consecutive duplicates, \(\mathcal {B}(\textbf{l})\) is the set of all such valid paths, and \(p(\pi _t | \textbf{x})\) is the probability of the label at position t in path \(\pi\) given input \(\textbf{x}\).

- \(\lambda\) is a regularization coefficient controlling the influence of the auxiliary term.

- \(\omega _i\) is the output of the gating mechanism at time step i.

- T is the total number of time steps.

This regularization term encourages the weights \(\omega _i\) to be small, thereby minimizing the influence of the context bias when it is not necessary.
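The total-loss equation itself is not reproduced above. One form consistent with the listed symbols, assumed here purely for illustration, is \(L = L_{\text {CTC}} + \lambda \cdot \frac{1}{T}\sum _{i=1}^{T} \omega _i\); whether the original averages or sums over time steps cannot be recovered from the text. The sketch below takes a precomputed CTC loss value and adds this penalty. The names `gate_penalty` and `total_loss` are hypothetical.

```python
def gate_penalty(omegas):
    """Mean gate value over T time steps (assumed form of the regularizer)."""
    return sum(omegas) / len(omegas)

def total_loss(ctc_loss, omegas, lam):
    """CTC loss plus lambda times the gate penalty."""
    return ctc_loss + lam * gate_penalty(omegas)

# Same CTC loss, gates fully open versus fully closed (toy values).
loss_open = total_loss(2.0, [1.0, 1.0, 1.0, 1.0], lam=0.1)
loss_closed = total_loss(2.0, [0.0, 0.0, 0.0, 0.0], lam=0.1)
```

With equal CTC loss, the configuration with closed gates has the lower total loss, so the model is pushed to open the gate only when doing so reduces the CTC term by more than the penalty.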

Inference

During inference, the weights act as switches that activate or deactivate the biasing layer. A hard threshold is applied to the weights, and the intermediate state of the model is updated accordingly.

In Eq. (8), we define \(\hat{f}^{emb}\) based on the condition of \(\omega _t\) and \(\epsilon\). \(\epsilon\) can be generated by training or set manually.

When the weight falls below the threshold \(\epsilon\), the biasing layer can be fully deactivated. Because the attention-based biasing layer may operate over a large number of keys, such as entity words, skipping it can yield significant computational savings and thereby reduce latency. In our experiments, \(\epsilon\) is set to 0.5.
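The thresholding rule can be sketched as follows. The exact form of Eq. (8) is not reproduced in the text, so this assumes that the update \(f^{emb} + \omega _t \cdot b_t\) is applied when \(\omega _t \ge \epsilon\) and that the representation is left unchanged otherwise. The bias vector is passed as a callable (a hypothetical design choice) to make explicit that a closed gate skips the biasing computation entirely.

```python
def gated_update(f_emb, omega, compute_bias, eps=0.5):
    """Apply the hard threshold to one frame.

    compute_bias is only called when the gate is open, so a closed gate
    avoids running the attention-based biasing layer at all.
    """
    if omega < eps:
        return list(f_emb)  # biasing layer deactivated
    b = compute_bias()
    return [f + omega * bi for f, bi in zip(f_emb, b)]

calls = []

def fake_bias():
    """Stand-in for the biasing layer; records each invocation."""
    calls.append(1)
    return [1.0, -1.0]

kept = gated_update([0.2, 0.4], omega=0.1, compute_bias=fake_bias)
biased = gated_update([0.2, 0.4], omega=0.8, compute_bias=fake_bias)
```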

Experiments

Datasets

AISHELL

We use the AISHELL-125 dataset as the original dataset. AISHELL-1 is a large-scale, open-source Mandarin Chinese speech corpus, commonly used for training and evaluating automatic speech recognition (ASR) systems. The dataset contains approximately 178 h of speech data from 400 speakers, covering diverse accents and speaking styles. It includes recordings from various domains such as daily conversation, news, entertainment, and finance, providing a rich vocabulary and linguistic diversity. The audio is recorded in 16kHz, 16-bit PCM format and comes with accurate sentence-level transcriptions, making it an invaluable resource for ASR research and development.

Context-biased word dataset

In addition, we use AISHELL-1 to create a context-biased, domain-specific dataset. Because no public dataset is specifically tailored to context bias, we based our work on AISHELL and filtered out audio recordings containing only common words. We reorganized the data containing proper nouns, such as names of persons, geographic locations, and organizations, into a Context-biased Word Dataset. We used the publicly available NER tool roberta-base-finetuned-cluener2020-chinese26 to label entities in the data, and treated entities occurring fewer than five times as biased words. Audio recordings and labels corresponding to these biased words were selected as a specific class of data. The reorganized dataset contains a higher proportion of recordings with biased words, which allows a more effective evaluation of the experimental results. Detailed information about the dataset is presented in Table 1, including the number of instances, the types of instances, and the number of biased words for each instance type.
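The selection step can be sketched as follows, assuming the NER tool has already produced a list of entity strings for each utterance; the tagging call itself is omitted, and the data layout and function name are hypothetical. Entities seen fewer than five times across the corpus are treated as biased words, and only utterances containing at least one of them are kept.

```python
from collections import Counter

def select_biased_utterances(utt_entities, max_count=5):
    """Return (biased_words, selected_utterances).

    utt_entities maps an utterance id to the entity strings found in it.
    An entity is a biased word if its corpus frequency is below max_count.
    """
    counts = Counter(e for ents in utt_entities.values() for e in ents)
    biased = {e for e, c in counts.items() if c < max_count}
    selected = {
        utt: [e for e in ents if e in biased]
        for utt, ents in utt_entities.items()
        if any(e in biased for e in ents)
    }
    return biased, selected

# Toy corpus: one entity is frequent, two are rare.
corpus = {
    "utt1": ["广州", "北京"],
    "utt2": ["北京"],
    "utt3": ["北京", "林琳"],
    "utt4": ["北京"],
    "utt5": ["北京"],
    "utt6": [],
}
biased_words, kept = select_biased_utterances(corpus)
```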

Experimental setup

Baseline models

The baseline model, adopted directly from the SpeechBrain toolkit27, is a speech recognition system based on convolutional neural networks (CNN) and transformer architectures. Specifically, the learning rate of the Adam optimizer was set to 0.1. For audio processing, the sampling rate was 16,000 Hz, the window size for the short-time Fourier transform was 400, and the number of Mel-frequency cepstral coefficients was 80. The CNN frontend consisted of two blocks, each containing one layer with 256 output channels, using \(3 \times 3\) convolution kernels, a stride of 2, and no residual connections.

The transformer model included 12 encoder layers and 6 decoder layers, each with a model dimension of 256, a feed-forward network dimension of 2048, 4 attention heads, a dropout rate of 0.1, and the GELU activation function, applied before input normalization. The entire system had 5000 output neurons for generating the target vocabulary. During decoding, the minimum decoding ratio was set to 0.0 and the maximum decoding ratio to 1.0, with a validation search interval of 10. Both validation and testing used a beam size of 10, and the CTC decoding weight was set to 0.40. The model had a total of 37.6 million parameters.

The word embedding encoder we implemented is designed with two attention layers, each consisting of hidden layers that contain 512 units. To enhance model robustness and prevent overfitting, we applied a dropout rate of 0.1. Additionally, we incorporated residual connections, which facilitate the training of deep networks by allowing gradients to flow through the network more effectively. After these layers, we employed an activation function followed by a linear layer, which then leads into the attention mechanism fusion. This fusion mechanism is critical for integrating various sources of information into a cohesive representation.

To achieve more refined and granular biasing information, the biasing layer utilized an 8-head attention mechanism. This multi-head approach enables the model to attend to different parts of the input sequence simultaneously, capturing diverse aspects of the contextual information. The resultant biasing vector was then linearly projected to align with the intermediate representations generated within the encoder. This alignment ensures seamless integration of biasing information into the overall model framework.

During the training phase, we set the maximum directory size (the number of entities within the directory encoder) to 100. This parameter was chosen to balance the need for comprehensive contextual information against memory constraints, so that the model could operate efficiently without exceeding available computational resources.

Evaluation sets

The Character Error Rate (CER) is a fundamental metric for evaluating the accuracy of character-level recognition systems, such as speech-to-text or optical character recognition (OCR). It quantifies the minimum number of character edits (insertions, deletions, or substitutions) required to transform an automatically generated text into its corresponding reference text. This metric is particularly relevant for languages with discrete character units, such as Chinese (hanzi) and Japanese.

The CER is defined as:

$$\begin{aligned} \text {CER} = \frac{I + D + S}{N} \end{aligned}$$

where:

- I is the number of insertions,
- D is the number of deletions,
- S is the number of substitutions,
- N is the total number of characters in the reference text.

A lower CER indicates higher recognition accuracy, as fewer edits are needed to align the recognized text with the ground truth.

The Relative Character Error Reduction (CERR) measures the effectiveness of improvements in recognition systems by comparing the error reduction relative to a baseline model. It highlights the percentage decrease in character errors achieved through algorithmic optimizations or model enhancements. A higher CERR value signifies a more significant improvement, while a negative value implies the modified system underperforms the baseline.

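The CERR is calculated using the following formula:

$$\begin{aligned} \text {CERR} = \frac{\text {CER}_{\text {B}} - \text {CER}_{\text {A}}}{\text {CER}_{\text {B}}} \times 100\% \end{aligned}$$

where \(\text {CER}_{\text {A}}\) is the character error rate of the improved model (A) and \(\text {CER}_{\text {B}}\) is the character error rate of the baseline model (B).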

This metric emphasizes the relative improvement gained from modifications, ensuring fair evaluation of performance gains across different models and datasets.
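Both metrics can be computed directly from their definitions: CER is the character-level edit distance divided by the reference length, and CERR is the relative reduction with respect to the baseline. The sketch below is a plain dynamic-programming implementation, not the evaluation script used in the experiments. The CERR example reuses the Dev-set CERs reported in the ablation study (5.92 for the baseline, 5.62 with attention-based enhancement).

```python
def edit_distance(ref, hyp):
    """Minimum number of insertions, deletions, and substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,             # deletion of a reference character
                cur[j - 1] + 1,          # insertion of a hypothesis character
                prev[j - 1] + (r != h),  # substitution, or match at no cost
            ))
        prev = cur
    return prev[-1]

def cer(ref, hyp):
    """Character error rate as a fraction of the reference length."""
    return edit_distance(ref, hyp) / len(ref)

def cerr(cer_a, cer_b):
    """Relative character error reduction of model A over baseline B, in percent."""
    return (cer_b - cer_a) / cer_b * 100.0

# One substituted character out of four.
example_cer = cer("谌龙夺冠", "陈龙夺冠")
# Dev-set values from the ablation study: about 5.07% relative reduction.
example_cerr = cerr(cer_a=5.62, cer_b=5.92)
```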

Results

Results on AISHELL

Our approach is competitive with a range of mainstream models, as shown in Table 2. On the AISHELL dataset, the proposed method achieves a character error rate (CER) of 5.56 on the validation set (Dev) and 6.01 on the test set (Test), surpassing the specialized state-of-the-art model CopyNE. This result shows that our method enhances biased-word information without introducing excessive interference to general word recognition, and that it rivals the large pre-trained model Wav2vec2 in performance.

From the perspectives of model stability and generalization, the adaptive biased words enhancement method exhibits exceptional robustness across different datasets. The minimal performance variation between Dev (5.56) and Test (6.01)—a mere 0.45 change—demonstrates strong consistency in performance across data distributions. This indicates no significant overfitting or underfitting issues, suggesting the model maintains stable accuracy when handling heterogeneous data.

Ablation study on AISHELL

We conducted an ablation study on the AISHELL dataset to evaluate the contribution of each component. In the initial phase of this study, as shown in Table 3, S0—serving as the baseline defined in our experimental setup—was evaluated on both the development (Dev) and test (Test) sets. We observed unsatisfactory performance on the development set, which was consistent with trends observed on the test set. It is important to note that the test set was used exclusively for final evaluation and was not involved in model tuning. The character error rates (CERs) were 5.92 and 6.55 on the Dev and Test sets, respectively. These results highlight the baseline model’s limitations in addressing the task and underscore the need for further optimization.

To address these shortcomings, we introduced an attention-driven biased words enhancement method with feature fusion. This approach leverages attention mechanisms to strengthen the model’s focus on critical biased words, thereby improving character recognition accuracy. Experimental results demonstrated marked improvements: the Dev set CER decreased to 5.62, and the Test set CER dropped to 6.10. These advancements validate the proposed method’s effectiveness in overcoming the baseline’s performance bottlenecks.

Building on this progress, we integrated the attention-based biased words enhancement framework into a unified model, referred to as S2. This integration yielded superior performance across all experimental evaluations, establishing the integrated approach as a robust solution for enhancing character recognition accuracy.

Results on context-biased word dataset

In this study, we systematically investigated the impact of two strategic enhancements on character recognition models, as shown in Table 4, focusing on datasets containing specific biased words such as person names (Per) and place names (Geo). The experiments began with A0, the baseline model defined in our experimental setup, representing an unoptimized foundational configuration. To improve biased-word recognition, we introduced A1: Baseline+Attention, which incorporates an attention mechanism to prioritize critical biased words during processing. This mechanism explicitly directs the model’s focus toward biased words, thereby improving their detection accuracy. Subsequently, we extended A1 into A2: A1+Detector, which incorporates a specialized detector to dynamically adjust information injection. This detector first evaluates the input for the presence of biased words and selectively incorporates relevant information, avoiding interference with normal word content when no biased words are detected.

The experimental results demonstrated significant improvements with A1 compared to A0: On the Per dataset, CER decreased by 14.12, and on the Geo dataset, CER decreased by 29.09. These outcomes underscored the attention mechanism’s effectiveness in enhancing the model’s ability to prioritize biased words, thereby elevating overall recognition accuracy. Building on A1, A2 achieved further gains through detector-guided optimization, with CER reductions of 24.47 (Per) and 38.96 (Geo). This improvement not only reinforced the detector’s role in refining biased words detection but also demonstrated its capacity to mitigate interference from normal words information during recognition.

Notably, while A2 outperformed A1, the marginal gains were relatively modest. This limitation may stem from the substantial performance improvements already achieved by A1’s attention mechanism, which reduced the remaining optimization space for A2. Nonetheless, the results collectively validate the synergistic benefits of combining attention mechanisms with detector-based strategies in character recognition tasks, offering valuable insights for future research.

Further analysis

To deeply analyze how the proposed method enhances the model’s ability to process biased words information, this section carefully selects specific experimental instances and conducts a detailed analysis of these cases from the perspectives of attention distribution and detector output weights.

Attention distribution

First, as shown in Fig. 2, when processing the input hidden vectors corresponding to the biased words 广州 (“Guangzhou”) and 林琳 (“Lin Lin”), the model assigns significantly higher attention weights to these positions, reflected by darker colors in the visualization. This phenomenon indicates that the model prioritizes these biased words during recognition. Conversely, normal words such as 日报 (“Daily Report”) and 上周五 (“Last Friday”) exhibit lighter colors, suggesting lower attention allocation. Similarly, words like 讯 (“News”) and 记者 (“Reporter”) also appear with lighter hues, demonstrating reduced focus during processing.

Through further computational analysis, we confirmed that the hidden vectors most likely encode the phrases “Guangzhou” and “Lin Lin”. This result aligns with our expectation that the model would emphasize key biased words through enhanced attention mechanisms.

Semantic-aligned disambiguation

A comparative analysis of Fig. 3 shows that our proposed method has significant advantages in integrating biased-word information with hidden vectors. A key difference between the Baseline model and our approach is the improved accuracy in identifying proper nouns within sentences. For instance, while the Baseline model misrecognizes "谌龙" as "陈龙," our model correctly recognizes the target biased word "谌龙." The same principle applies to the remaining examples in the figure. Correct recognition also has a significant impact on certain downstream tasks. For instance, in a voice-command scenario, a user who wants to send a message to "谌龙" might, because of incorrect recognition, have the message sent to "陈龙" instead.

This enhancement stems from our optimized fusion mechanism, which not only strengthens biased word selection accuracy but also establishes a more reliable foundation for downstream tasks by refining semantic alignment between contextual embeddings and critical terms.

Suppression of biased words interference

Through analysis of error-prone biased-word enhancement cases, our detector-based mechanism demonstrates effective suppression of unnecessary biased-word interference, thereby reducing error rates caused by such disturbances. As Fig. 4 shows, in Case 8, Model A1 erroneously recognized "无疑同" (“Thai is undoubtedly most similar to”) as "吴怡同" (“Wu Yitong”), likely due to interference from the biased word "吴怡同". In contrast, Model A2 used the detector to deactivate biased-word enhancement here, preserving the integrity of the original expression. This has a significant impact on downstream tasks. For example, in machine translation, as shown by the content within the parentheses in the figure, the incorrect recognition leads to a completely wrong translation of the entire sentence, whereas the output of our model allows the original meaning to be translated correctly.

This validation confirms that our method dynamically disables biased word enhancement when irrelevant, thereby mitigating errors arising from contextual biased word ambiguities. By selectively avoiding unnecessary modifications, the proposed approach not only enhances model robustness but also improves overall accuracy in complex textual environments where latent biased word interference could compromise recognition reliability.

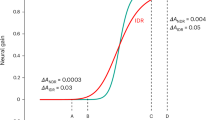

The variation of the \(\omega _t\) value

By analyzing the output weights of the detector, we find that our model’s recognition of biased words extends beyond mere attention allocation. It also involves the selective incorporation of biased-word information based on contextual relevance. This ensures that this information occupies an important position in the final recognition results, further strengthening the model’s ability to recognize biased words while reducing the interference that may be caused by information from normal words. Overall, this improves the accuracy and robustness of character recognition.

Specifically, considering the association between speech input and \(\omega _t\), Fig. 5 shows that when a sentence contains biased words, \(\omega _t\) remains at a relatively high value, so the biased-word enhancement function is activated. Conversely, if there are no biased words in the sentence, \(\omega _t\) is close to zero, which keeps the biased-word enhancement function switched off. In the figure, the X-axis represents biased words; on the Y-axis, “Bias” denotes sentences containing biased words and “Normal” denotes sentences without them; and the Z-axis represents the value of \(\omega _t\).

This method helps ensure the robustness of model performance. It effectively avoids over-interference of biased-word information with hidden vectors that do not contain biased words. When the model processes hidden vectors without biased words, the detector adjusts the processing strategy according to the actual input. Since biased-word information is not injected indiscriminately, hidden vectors without biased words can be analyzed in a relatively independent and clean setting. As a result, the model maintains a good balance during information processing.

Conclusions

We propose the Context Bias Adaptive Model, which incorporates a Bias Detector to adapt the model based on the presence of biased words. Experimental results show that the Bias Detector effectively mitigates the negative impacts of over-biasing, achieving varying degrees of performance improvement across different datasets while conserving computational resources in the ASR system. These findings underscore the advantages of incorporating detection mechanisms in bias adjustments for speech recognition systems.

Data availability

The datasets analysed during the current study are available in the AISHELL repository, https://www.openslr.org/33/.

References

Graves, A., Fernández, S., Gomez, F. & Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, ICML ’06, 369–376, https://doi.org/10.1145/1143844.1143891 (Association for Computing Machinery, New York, NY, USA, 2006).

Graves, A. & Jaitly, N. Towards end-to-end speech recognition with recurrent neural networks. in Proceedings of the 31st International Conference on Machine Learning, 1764–1772 (PMLR, 2014).

Li, K., Li, J., Ye, G., Zhao, R. & Gong, Y. Towards code-switching ASR for end-to-end CTC models. in ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6076–6080, https://doi.org/10.1109/ICASSP.2019.8683223 (2019).

He, Y. et al. Streaming end-to-end speech recognition for mobile devices. in ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6381–6385, https://doi.org/10.1109/ICASSP.2019.8682336 (2019).

Zhang, Y., Sun, S. & Ma, L. Tiny transducer: A highly-efficient speech recognition model on edge devices. in ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6024–6028, https://doi.org/10.1109/ICASSP39728.2021.9413854 (2021).

Wang, X., Sun, S., Xie, L. & Ma, L. Efficient conformer with Prob-Sparse attention mechanism for end-to-end speech recognition. https://doi.org/10.48550/arXiv.2106.09236 (2021). arxiv:2106.09236.

Chorowski, J. K., Bahdanau, D., Serdyuk, D., Cho, K. & Bengio, Y. Attention-based models for speech recognition. in Advances in Neural Information Processing Systems, vol. 28 (Curran Associates, Inc., 2015).

Cho, K. et al. Learning phrase representations using RNN encoder–decoder for statistical machine translation. https://doi.org/10.48550/arXiv.1406.1078 (2014). arxiv:1406.1078.

Chan, W., Jaitly, N., Le, Q. & Vinyals, O. Listen, attend and spell: A neural network for large vocabulary conversational speech recognition. in 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 4960–4964. https://doi.org/10.1109/ICASSP.2016.7472621 (2016).

Gourav, A. et al. Personalization strategies for end-to-end speech recognition systems. in ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 7348–7352. https://doi.org/10.1109/ICASSP39728.2021.9413962 (2021).

Zhao, D. et al. Shallow-fusion end-to-end contextual biasing. in Interspeech 2019, 1418–1422. https://doi.org/10.21437/Interspeech.2019-1209 (ISCA, 2019).

Chen, Z., Jain, M., Wang, Y., Seltzer, M. L. & Fuegen, C. Joint grapheme and phoneme embeddings for contextual end-to-end ASR. in Interspeech 2019, 3490–3494. https://doi.org/10.21437/Interspeech.2019-1434 (ISCA, 2019).

Han, M., Dong, L., Zhou, S. & Xu, B. CIF-based collaborative decoding for end-to-end contextual speech recognition. in ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6528–6532. https://doi.org/10.1109/ICASSP39728.2021.9415054 (2021).

Sun, G., Zhang, C. & Woodland, P. C. Tree-constrained pointer generator for end-to-end contextual speech recognition. in 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 780–787. https://doi.org/10.1109/ASRU51503.2021.9687915 (2021).

Le, D. et al. Contextualized streaming end-to-end speech recognition with Trie-based deep biasing and shallow fusion. https://doi.org/10.48550/arXiv.2104.02194 (2021). arxiv:2104.02194.

Dingliwal, S. et al. Personalization of CTC speech recognition models. in 2022 IEEE Spoken Language Technology Workshop (SLT), 302–309. https://doi.org/10.1109/SLT54892.2023.10022705 (2023).

Jain, M. et al. Contextual RNN-T for open domain ASR. https://doi.org/10.48550/arXiv.2006.03411 (2020). arxiv:2006.03411.

Chang, F.-J. et al. Context-aware transformer transducer for speech recognition. in 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 503–510, https://doi.org/10.1109/ASRU51503.2021.9687895 (2021).

Xu, Y. et al. CB-conformer: Contextual biasing conformer for biased word recognition. in ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1–5. https://doi.org/10.1109/ICASSP49357.2023.10095469 (2023).

Gulati, A. et al. Conformer: Convolution-augmented transformer for speech recognition. https://doi.org/10.48550/arXiv.2005.08100 (2020). arxiv:2005.08100.

Vaswani, A. et al. Attention is all you need. in Advances in Neural Information Processing Systems (NeurIPS), 5998–6008 (2017).

Wu, Y., Zhang, F., Lei, Z. & Liu, C. Gated convolutional neural networks for contextual speech recognition. in ICASSP, 6820–6824. https://doi.org/10.1109/ICASSP.2019.8683200 (2019).

Wang, Y. et al. Cross-attention mechanisms for context-aware speech recognition. in ICASSP, 8019–8023. https://doi.org/10.1109/ICASSP40776.2020.9054429 (2020).

Graves, A., Fernandez, S., Gomez, F. & Schmidhuber, J. Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. in Proceedings of the 23rd international conference on Machine learning, 369–376 (ACM, 2006).

Bu, H., Du, J., Na, X., Wu, B. & Zheng, H. AISHELL-1: An open-source Mandarin speech corpus and a speech recognition baseline. in 2017 20th Conference of the Oriental Chapter of the International Coordinating Committee on Speech Databases and Speech I/O Systems and Assessment (O-COCOSDA), 1–5, https://doi.org/10.1109/ICSDA.2017.8384449 (2017).

uer/roberta-base-finetuned-cluener2020-chinese · Hugging Face. https://huggingface.co/uer/roberta-base-finetuned-cluener2020-chinese.

Ravanelli, M. et al. SpeechBrain: A general-purpose speech toolkit. https://doi.org/10.48550/arXiv.2106.04624 (2021). arxiv:2106.04624.

Radford, A. et al. Robust speech recognition via large-scale weak supervision. in Proceedings of the 40th International Conference on Machine Learning, 28492–28518 (PMLR, 2023).

Baevski, A., Zhou, H., Mohamed, A. & Auli, M. Wav2vec 2.0: A framework for self-supervised learning of speech representations. https://doi.org/10.48550/arXiv.2006.11477 (2020). arxiv:2006.11477.

Zhou, S. et al. CopyNE: Better contextual ASR by copying named entities. https://doi.org/10.48550/arXiv.2305.12839 (2024). arxiv:2305.12839.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under the project “Research on Key Technologies of Speech Recognition of Chinese and Western Asian Languages under Resource Constraints” (Grant No. 62066043). It was also funded by the Key Research and Development Program of Xinjiang Urumqi Autonomous Region under Grant No. 2023B01005. We also acknowledge assistance from medical writers, proof-readers, and editors.

Author information

Contributions

N.Y. and Y.C. designed the main methods and experimental content; N.Y. and W.S. provided the experimental environment and equipment; L.S. and Y.L. offered guidance on experiments and coding; Q.D., Y.H., and Z.S. prepared the figures; J.P. and Y.W. prepared the experimental datasets; and Y.C. wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yolwas, N., Cai, Y., Sun, L. et al. Adaptive context biasing in transformer-based ASR systems. Sci Rep 15, 28779 (2025). https://doi.org/10.1038/s41598-025-12121-4
