Abstract

Growing crowd density in today's urban environments calls for a robust multi-camera architecture for real-time abnormality detection and behavior analysis. Most existing methods fail to detect unusual behaviors reliably because of occlusion, dynamic scene changes and high computational cost. These failures often result in high false-positive rates and poor generalization to unseen anomalies. Both traditional graph-based methods and current CNN-RNN systems fail to capture complex social interactions and spatiotemporal dependencies, which limits their usefulness in crowded scenes. To address these drawbacks, this research proposes a spatiotemporal deep learning framework for multi-camera abnormal behavior detection that integrates several new methodologies. Multi-Scale Graph Attention Networks (MS-GAT) provide interaction-aware anomaly detection, yielding up to a 30% reduction in false positives. The Reinforcement Learning Based Dynamic Camera Attention Transformer (RL-DCAT) optimizes surveillance focus, reducing computational overhead by 40% and increasing recall by 15%. Spatiotemporal Inverse Contrastive Learning (STICL), which uses an inverse contrastive anomaly memory, improves recall on unseen rare anomalies by 25%. Neuromorphic event-based encoding supports high-speed action analysis through spiking neural networks, lowering detection latency by 60%. Finally, Generative Behavior Synthesis with Meta-Learned Few-Shot Adaptation (GBS-MFA) synthesizes new abnormal behaviors and adapts to them from few examples, improving anomaly detection generalization by 35%. Evaluation on the UCF-Crime, ShanghaiTech and Avenue datasets showed a 40% reduction in false alarms, 50% lower computational demand and 98% real-time efficiency for this multi-faceted framework.
The overall framework enables multi-camera crowd surveillance with adaptive scalability and resource provisioning for real-time behavioral anomaly detection in real-world settings.

Introduction

Multi-camera surveillance must become far more robust: as urban density increases, abnormal behavior needs to be detected readily and in real time. Methods that rely on human supervision are subjective, error-prone and infeasible for large-scale operations. In recent years, deep learning has been applied to these problems through spatiotemporal modeling. Nevertheless, traditional techniques perform poorly because they adapt badly to dynamic scenes, produce high false-positive rates and coordinate multiple cameras inefficiently. Conventional CNN- and RNN-based models struggle to capture real human interactions in crowded environments because of occlusions and multi-view spatial dependencies. Graph-based models1,2,3 have proved powerful for modeling human relationships and movement patterns. However, they mostly fail in high-density conditions where relational dependencies evolve over time. Conventional anomaly detection methods also generalize poorly, if at all, to rare or unseen abnormal events, so they cannot be used in real applications. Further, resource utilization across surveillance cameras remains an unsolved issue: most current systems either waste resources on redundant processing of normal regions or lack dynamic prioritization of high-risk areas.

This work therefore tries to bridge the gap with a comprehensive spatiotemporal deep learning framework that combines several novel techniques for robust multi-camera abnormal behavior detection. These include Multi-Scale Graph Attention Networks (MS-GAT) for interaction-aware anomaly detection, the Reinforcement Learning Based Dynamic Camera Attention Transformer (RL-DCAT) for computational efficiency, and Spatiotemporal Inverse Contrastive Learning (STICL) for generalization to infrequent anomalies. Neuromorphic Event Based Encoding (NEBE) for high-speed movement anomaly detection and Generative Behavior Synthesis with Meta-Learned Few-Shot Adaptation (GBS-MFA) are incorporated to ensure adaptability to previously unseen behaviors and to enable real-time motion analysis at high speeds. Together, these components yield significant reductions in false positives, improved computational efficiency and real-time capability. Experiments on the UCF-Crime, ShanghaiTech and Avenue datasets show improvements over the previous state of the art in anomaly detection: a 40% lower false alarm rate, 50% lower computational overhead and near-instantaneous detection of most anomalies. The framework thus offers a scalable, adaptable and computationally efficient solution for multi-camera surveillance of crowded scenes in public safety monitoring and emergency response systems.

The proposed multi-camera framework is a tightly coupled pipeline in which each module addresses a particular shortcoming of previous systems yet remains interoperable with the others through shared latent representations. Multi-Scale Graph Attention Networks capture non-Euclidean social interactions across several spatial resolutions, preserving fine-grained local cues while also modeling group-level dynamics. Resources are realigned in real time by a reinforcement-driven Dynamic Camera Attention Transformer, allowing the system to maintain an average throughput close to 105 fps across eight full-HD streams while peak GPU memory remains below 4.3 GB. This combination of graph reasoning and adaptive scheduling couples spatial modeling and resource management, which prior pipelines treated as independent tasks.

While prior methods have concentrated their optimizations along a single axis (accuracy or latency), our architecture provides an inverse contrastive memory that pushes normal and abnormal embeddings apart even when the abnormal class frequency drops below 3% of the training set. On the Avenue benchmark, this strategy improves rare-event recall by about 15% relative to traditional contrastive baselines. In tandem, a neuromorphic event encoder replaces dense frame stacks with asynchronous spike tensors, achieving an almost 60% decrease in decision latency for very rapid crowd dispersal events compared with CNN–LSTMs.

In addition, the generative behavior synthesis unit sets this framework apart from prior art. A conditional adversarial generator optimised through a lightweight meta-learning loop produces high-diversity anomaly prototypes that extend the negative sample set seen during training without external annotation. Fusing this synthetic stream with the live memory bank raises the overall F1 score on previously unseen anomaly categories in ShanghaiTech from 0.72 to 0.83, indicating a considerable increase in generalisation.

The modular training schedule of the design allows each module to converge in fewer epochs than a monolithic baseline. For example, graph-attention modules stabilise after 18 epochs, while the convergence of the reinforcement controller is reached within 30,000 environment steps. These empirical efficiencies, together with cross-module knowledge sharing, constitute a substantial advance over existing single-purpose networks and loosely integrated ensembles.

Review of existing models used for multiple modality video summarization operations

Over the past decade, abnormal behavior detection in video surveillance has undergone a paradigm shift driven by advances in deep learning, computer vision and graph-based processing. Urban spaces are becoming increasingly crowded, and demand for real-time monitoring of crowded environments is growing. Researchers have responded by developing efficient, scalable and adaptable techniques for detecting deviant behavior, security threats and atypical motion patterns in multi-camera surveillance. Early approaches were built primarily on handcrafted features and motion-based statistical analysis, but their computational cost was generally very high and they generalized poorly to real-world settings. As architectures became more sophisticated and approaches matured towards data-driven learning, the research community introduced neural-network-based solutions to cope with the greater complexity of dynamic scenes. A significant stride was made by Valarmathi and Sudha1, who presented a hybrid Conv-Trans-OptBiSVM framework that uses convolutional and transformer-based networks to identify abnormal behavior. The method showed high detection accuracy in crowded urban contexts and set the stage for further refinement of hybrid deep learning models. Around the same time, Ghorbanpour and Nahvi2 studied unsupervised group-based behavior detection, focusing on crowd dynamics in video sequences; the approach was effective at detecting group interactions and deviant social behavior. Merlin et al.3 refined this line of work with a saliency descriptor that integrates space and time to boost real-time event detection, improving anomaly detection in critical public locations.
One of the problems in anomaly detection is handling motion variance, particularly in public transportation and vehicular settings. Liu et al.4 addressed this with a deep-learning-based anomaly detection algorithm that identifies unusual bus-related behavior by building on object recognition and trajectory estimation. Similarly, Maheriya et al.5 studied CNN-based aerial intelligence for human action recognition, extending anomaly detection to aerial surveillance, an important step towards better security monitoring of large public gatherings and open spaces. Ensemble techniques and stacked architectures further improved the generalization of deep learning models. Gnouma et al.6 showed that a combination of extreme learning machines (ELMs) and stacked autoencoders classifies anomalies well from fewer training samples.

Next, with reference to Table 1, Dong et al.7 presented MP-Abr, a multi-person abnormality recognition framework that incorporates attention-based mechanisms to enhance movement detection in high-density traffic. Chakole and Satpute8 extended this body of work with Efficient-X3D networks for violence detection in public spaces, optimizing video processing speed for real-time deployment. Advances in spatio-temporal modeling also played an important role in improving accuracy. Almahadin et al.9 adopted spatio-temporal autoencoders and convolutional LSTMs, whose ability to capture temporal dependencies markedly improved detection accuracy. Kokila et al.10 employed patch-based 3D computational models to identify sudden movements while remaining adaptable to environmental changes. Anomaly detection has also been applied outside human behavior analysis: for example, Delgado-Rodriguez et al.11 explored abnormal cell division classification using deep learning models. Li and Huang12 introduced an adaptive loitering anomaly detection scheme that uses motion state estimation to characterize behavioral anomalies of travelers. Nayak et al.13 conducted a thorough survey of datasets used for video anomaly detection, laying the basis for further benchmarking and comparison. Another significant contribution came from Liu et al.14, who implemented YOLOv8-based human fall detection and demonstrated how anomaly detection paradigms can be extended to health and assisted-living environments.
Research on intelligent surveillance systems has also moved towards hybrid deep learning models that combine graph-based approaches with transformer networks. Veesam et al.17 developed an integrated anomaly detection model with temporal graph attention mechanisms that substantially improves the classification of crowd behaviors in complex urban settings. Likewise, Ehsan et al.19 built an unsupervised violence detection framework on action-spatial-temporal translation networks that achieves high accuracy in crime scene detection. The significance of cross-domain anomaly detection was reiterated by Jiao et al.20, who surveyed video anomaly detection techniques and highlighted the major issues associated with dynamic scenes from moving cameras.

Taken together, these works trace the movement of anomaly detection from simple handcrafted motion-based descriptors to deeper models that combine CNNs, transformers or unsupervised descriptors. The hybrid ConvTrans-OptBiSVM of Valarmathi and Sudha performs hybrid learning with a global and local focus but does not address multi-camera scalability. The unsupervised crowd behavior model of Ghorbanpour and Nahvi lacks node-level error rate terms44,45,46. Most existing approaches, such as that of Merlin et al., rely on a fixed spatial resolution and give too much weight to saliency fusion. The present study goes a step further by adding relational47,48,49,50,51,52 interaction modeling using GAT together with camera-focus reallocation, which no previous model has addressed in this context. The proposed design builds on the cited works and adds attention scaling, graph-driven anomaly localization and neuromorphic stream processing, thereby improving occlusion handling, runtime and generalization. These performance gains were not achieved by the works referenced above.

Proposed design of an improved method for spatiotemporal deep learning for multi-camera abnormal behavior detection in crowded environments

To address the low efficiency and high complexity of existing methods, this section presents the improved spatiotemporal deep learning method for multi-camera abnormal behavior detection in crowded environments. First, as shown in Fig. 1, the Multi-Scale Graph Attention Network (MS-GAT) is designed to capture interaction-aware spatio-temporal dependencies in multi-camera surveillance environments. The main motivation is that human interactions have non-Euclidean characteristics and should therefore be represented as graphs that model higher-order relational dependencies. MS-GAT accordingly uses a graph structure in which each individual is a node and interactions are captured by edges based on motion similarity, spatial proximity and behavioral attributes. This differs from conventional CNN-based approaches, which process frames independently. The graph is defined as G = (V, E, A), where V is the set of nodes (individuals in the scene), E is the set of edges formed according to the interaction criteria and A is the adjacency matrix. The adjacency matrix is dynamically updated via Eq. 1,

Where xi, xj represent spatial positions, vi, vj represent velocity vectors and bi, bj are bounding box attributes. The terms β, γ, δ control the influence of the respective features. Next, as shown in Fig. 2, a multi-scale graph attention mechanism is introduced to further refine edge importance in terms of both local and global interaction contexts. The attention weight α(i, j) between nodes is computed via Eq. 2,

Where hi, hj are the node embeddings, W is the attention transformation matrix and N(i) is the neighborhood of node i. Multi-head attention extends this approach, computing the updated values via Eq. 3,

Where M is the number of attention heads and l is the layer index. The final anomaly score Sv for each individual is computed via Eq. 4,

Where hvt is the node embedding at time t and h̄t is the mean embedding of normal behavior across the scenes. RL-DCAT dynamically allocates computational focus across cameras based on the anomaly distribution. The reward function is formulated via Eq. 5,

Where Ci is the computational cost for camera i, Pi is the anomaly probability and I is an indicator function. The transformer self-attention mechanism refines the spatial-temporal attention weights via Eq. 6.

The final camera priority assignment is given by Eq. 7,

Next, as per Fig. 2, to improve generalization to rare anomalies, the STICL framework enforces a dissimilarity learning process. The inverse contrastive loss is formulated via Eq. 8,

To ensure anomaly memory adaptation, a self-supervised anomaly memory update is introduced via Eq. 9,

The final anomaly score is computed via Eq. 10,

The above approach ensures that anomalous behavior is detected even with limited training data, with improved recall and reduced false positives. Integrating graph-based modeling, reinforcement learning and contrastive learning yields a solution for anomaly detection in multi-camera systems that is scalable, adaptable and computationally efficient.
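The node-level scoring described for MS-GAT earlier in this section can be illustrated with a minimal sketch. Because the exact form of Eq. 4 is not reproduced here, the code assumes that the score is the Euclidean distance between a node embedding and the mean embedding of normal behavior; the function names `mean_embedding` and `anomaly_score` are hypothetical.

```python
import math

def mean_embedding(embeddings):
    """Component-wise mean of a list of equal-length embedding vectors."""
    n = len(embeddings)
    return [sum(column) / n for column in zip(*embeddings)]

def anomaly_score(h_v, h_bar):
    """Assumed score: Euclidean distance between a node embedding h_v
    and the mean normal embedding h_bar (not the paper's exact Eq. 4)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(h_v, h_bar)))

# Usage: build the normal reference from known-normal embeddings,
# then score a new node embedding against it.
normal_reference = mean_embedding([[0.0, 0.0], [2.0, 2.0]])
score = anomaly_score([4.0, 5.0], normal_reference)
```

Under this assumption, a larger distance from the normal reference means a more anomalous individual; any other distance or similarity measure could be substituted without changing the structure.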

NEBE adopts dynamic vision sensors (DVS) for bio-inspired event-based encoding, sensing pixel-level changes independently rather than sampling frames at a fixed rate. The result is a sparse event stream in which every event represents a change in pixel intensity. These events are fed into a leaky integrate-and-fire spiking neural network, where incoming spikes accumulate in the neuron membrane potential and the neuron fires once the potential reaches its threshold. The network thus acts like a biological temporal filter, eliminating the redundant computation that would otherwise be spent on static parts of the scene. The output is a spike-rate array that is checked for high-frequency, large-magnitude motion anomalies such as a stampede or sudden aggression. Experimental results show a 60% reduction in latency and a 48% improvement in memory efficiency compared with CNNs operating at comparable temporal resolution.

In the current literature on multi-camera anomaly detection, several methods rely on graph-based modeling, attention or reinforcement learning. However, very few combine these in a single framework that provides low latency, strong generalization and real-time prioritization in dynamic urban settings. Whereas previous attempts focus on either spatial modeling or temporal regularization, the present work proposes a hybrid pipeline that captures both simultaneously: spatiotemporal modeling with MS-GAT, real-time dynamic reallocation of attention resources with RL-DCAT, and rare-event generalization with STICL and GBS-MFA. This bridges the gaps identified in past works. The combined framework performs well across diverse datasets, especially in reducing false positives, in memory-based event detection and in real-time adaptability. This integration is the main novelty of the architecture, and it is highlighted in the Introduction and Conclusion to convey its technical value and realistic uses.

Next, with reference to Fig. 2, the Neuromorphic Event Based Encoding (NEBE) framework is developed to address the latency and computation constraints of high-speed abnormal motion detection in multi-camera surveillance systems. Traditional frame-based approaches require densely sampled frames, which causes a great deal of unnecessary processing and makes the detection of fast, transient movements such as stampedes, sudden aggression or rapid dispersal inefficient. The proposed event-based encoding instead uses neuromorphic sensors that capture asynchronous motion spikes rather than a static frame sequence, enabling high-speed motion representation with very little computational overhead. The neuromorphic sensor produces an event stream, represented as a spike train S(x, y, t), where every spike S at position (x, y) and time t encodes a change in pixel intensity via Eq. 11,

Where I(x, y, t) is the pixel intensity at time t and θ is the motion sensitivity threshold. The collected event data are further processed by a spiking neural network in which the membrane potential Vm evolves according to the leaky integrate-and-fire (LIF) model, represented via Eq. 12,

Where τm is the membrane time constant and I(t) is the input current induced by incoming spikes; when Vm crosses the threshold Vth, a spike is emitted and Vm is reset to Vreset. The spike output Oi(t) at neuron i is computed via Eq. 13,

Where H(⋅) is the Heaviside step function. To improve the robustness of motion encoding, a temporal contrast enhancement function is applied via Eq. 14,

Where λ controls the temporal decay. The final spike-based motion anomaly score SNEBE is computed via Eq. 15,

Where Ō is the mean spike activity for normal behaviors. The event representation significantly reduces redundant frame processing, cutting computational latency by 60% relative to traditional CNN-based methods. Next, as per Fig. 2, to ensure robustness against previously unseen anomalies, the Generative Behavior Synthesis with Meta-Learned Few-Shot Adaptation (GBS-MFA) framework is introduced. The main motivation is that real-world abnormal events are often scarce, and this scarcity makes it hard for deep learning models to generalize to novel abnormal behaviors. Instead of relying only on existing datasets, GBS-MFA generates new rare anomalies with a generative adversarial framework coupled with meta-learning for quick adaptation. The generative model is implemented as a Conditional Generative Adversarial Network (cGAN), in which the generator G synthesizes an abnormal motion embedding za conditioned on real normal behavior xn, via Eq. 16,

Where, y represents the anomaly class labels. The discriminator D evaluates the realism of synthesized anomalies via Eq. 17,

To regularize the anomaly synthesis process, a spatiotemporal similarity constraint is imposed via Eq. 18,

Where f(⋅) is a pre-trained feature extractor. Rapid adaptation of the classifier to novel anomalies is ensured by the meta-learning component through Model-Agnostic Meta-Learning (MAML). Given a task Ti with support set Si and query set Qi, the model parameters θ are optimized via Eq. 19,

The final meta-update across all tasks is performed via Eq. 20,

Where α and β are the learning rates for the inner and outer updates. The meta-adapted classifier Fθ ensures speedy recognition of newly synthesized anomalies. The final anomaly classification score SGBS is computed via Eq. 21,

Where x̄ represents the average feature embedding for normal behaviors. Combining NEBE and GBS-MFA within the proposed framework provides a holistic, adaptive and scalable solution for multi-camera abnormal behavior detection. The event-based encoding is efficient for high-speed motion analysis, while the generative behavior synthesis supports long-term adaptability to unseen anomalies. The final composite anomaly score Sfinal is given via Eq. 22,

Where w1, w2, w3 are weighting coefficients learned through reinforcement optimization. This balances computational efficiency, generalization to rare anomalies and high-speed motion detection, thus making it a state-of-the-art solution for real-time surveillance in crowded environments. Next, we discuss the efficiency of the proposed model with respect to various metrics and compare it with existing models under different scenarios.
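The weighted fusion behind the composite score can be sketched as follows. This is an illustration under stated assumptions, not the paper's Eq. 22: it assumes the three fused terms are a graph/contrastive score, the NEBE score and the GBS-MFA score, and the default weights are placeholders rather than the learned coefficients. The function name `composite_score` is hypothetical.

```python
def composite_score(s_graph, s_nebe, s_gbs, weights=(0.4, 0.3, 0.3)):
    """Weighted sum of three module scores.

    Assumption: the fused terms and the default weights are illustrative;
    in the paper w1, w2, w3 are learned through reinforcement optimization.
    """
    w1, w2, w3 = weights
    return w1 * s_graph + w2 * s_nebe + w3 * s_gbs

# Usage: a strong motion-based signal with weak evidence from the other modules.
fused = composite_score(s_graph=0.2, s_nebe=0.9, s_gbs=0.1)
```

With fixed weights this reduces to a linear combination, so the relative scale of the three input scores matters; normalizing each score to a common range before fusion would be a reasonable precaution.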

Detailed design analysis

RL-DCAT and STICL algorithm details

The RL-DCAT module is structured as a two-stage controller. First, a spatial transformer encodes attention probabilities for each camera based on real-time activity density and motion vectors. Second, a policy network generates action weights for resource reallocation based on the encoded states. The RL policy is optimized with the Proximal Policy Optimization algorithm for 80 K environment steps, and the reward signal is defined by a utility function balancing anomaly probability and frame processing cost. For STICL, the inverse contrastive learning framework works with a dual-buffer memory bank storing normal and inverse-anomalous embeddings, both of which are updated through momentum contrast with decay τ = 0.99 during training. Training applies a margin-based loss function to maximize dissimilarity between current embeddings and the nearest anomaly-negative pair. The modules are jointly trained with synchronized gradient updates and coordinated through a shared optimizer with a learning rate of 2 × 10⁻⁴, allowing end-to-end convergence within 30 epochs on composite video batches.
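The momentum update of the memory bank can be illustrated with a small sketch. It assumes the decay τ = 0.99 is applied as an exponential moving average directly to a stored prototype vector; the text does not specify whether the momentum acts on stored embeddings or on encoder parameters, so this reading is an assumption, and `momentum_update` is a hypothetical helper name.

```python
MOMENTUM = 0.99  # decay tau reported in the text

def momentum_update(prototype, embedding, momentum=MOMENTUM):
    """Exponential moving average: m <- tau * m + (1 - tau) * z.

    Assumption: the update is applied element-wise to a stored memory
    prototype `m` using the newest embedding `z`.
    """
    return [momentum * m + (1.0 - momentum) * z
            for m, z in zip(prototype, embedding)]

# Usage: one slow step of a stored normal-behavior prototype towards a new embedding.
updated = momentum_update([1.0, 0.0], [0.0, 1.0])
```

A decay this close to 1 makes each stored prototype change by only 1% of the gap per update, which keeps the memory stable against individual noisy embeddings.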

Method combination and innovation

Although graph attention, reinforcement learning and contrastive learning are each well established in the literature, their joint incorporation into a multi-camera abnormal behavior detection framework is novel. This work proposes a principled pipeline in which anomaly localization is handled at the relational level by graph-based interaction modeling, RL-DCAT reallocates camera resources based on anomaly density, and STICL reinforces the identification of low-frequency behaviors through memory-enhanced contrastive embeddings. The innovation thus lies not merely in adopting the individual components but in a design that provides dynamic focus reallocation, scalable memory-based anomaly generalization and neuromorphic fast-response event processing in a single pipeline. Unlike previous studies, the proposed combination supports both interpretability and real-time performance, achieving a recall of 87.3% for rare anomalies at a processing rate of 105 FPS, metrics that have been out of reach for hybrid or modular pipelines.

Graph edge construction in MS-GAT

Edges in MS-GAT are constructed using a composite similarity metric that includes motion similarity sm(i, j) = exp(−∥vi − vj∥²/σ²), spatial proximity sp(i, j) = exp(−∥xi − xj∥²/ρ²) and behavioral affinity sb(i, j) = IoU(bi, bj). These are computed as:

\(s_m(i,j) = \exp\left(-\frac{\|v_i - v_j\|^2}{\sigma^2}\right),\)

\(s_p(i,j) = \exp\left(-\frac{\|x_i - x_j\|^2}{\rho^2}\right),\)

\(s_b(i,j) = \mathrm{IoU}(b_i, b_j),\)

where σ = 1.0 and ρ = 1.5 are scaling constants. The final edge weight is a convex combination,

\(e_{ij} = \beta\, s_m + \gamma\, s_p + \delta\, s_b, \quad \text{with } \beta + \gamma + \delta = 1.\)

This formulation ensures that temporally consistent, spatially adjacent and behaviorally similar individuals are connected in the interaction graph. The edge connectivity is recalculated every 10 frames to adapt to movement dynamics. This fine-grained relational modeling significantly improves anomaly detection in highly occluded and dense crowd conditions.
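The edge-weight computation above can be sketched in a few lines of Python. The scaling constants σ = 1.0 and ρ = 1.5 come from the text; the mixing weights β, γ, δ used as defaults and the (x1, y1, x2, y2) bounding-box layout are assumptions made only for illustration, and the function names are hypothetical.

```python
import math

SIGMA = 1.0  # motion scaling constant from the text
RHO = 1.5    # spatial scaling constant from the text

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def edge_weight(x_i, x_j, v_i, v_j, b_i, b_j,
                beta=0.4, gamma=0.4, delta=0.2):
    """Convex combination e_ij = beta*s_m + gamma*s_p + delta*s_b.

    The default beta, gamma, delta are placeholders, not values from the paper.
    """
    if abs(beta + gamma + delta - 1.0) > 1e-9:
        raise ValueError("beta + gamma + delta must equal 1")
    s_m = math.exp(-sq_dist(v_i, v_j) / SIGMA ** 2)  # motion similarity
    s_p = math.exp(-sq_dist(x_i, x_j) / RHO ** 2)    # spatial proximity
    s_b = iou(b_i, b_j)                              # behavioral affinity
    return beta * s_m + gamma * s_p + delta * s_b
```

Since each of the three similarities lies in [0, 1] and the weights sum to 1, the resulting edge weight also lies in [0, 1], which makes it convenient to threshold when deciding which edges to keep at each 10-frame refresh.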

RL-DCAT reward function and optimization validation

The reward function for RL-DCAT integrates two competing objectives: minimizing computational cost and maximizing anomaly detection probability. It is defined as,

\(R = \lambda_1 P_i - \lambda_2 C_i,\)

where Pi is the predicted anomaly score and Ci is the per-camera resource utilization, with λ₁ = 1.2 and λ₂ = 0.8. These weights were chosen empirically by grid search over 5 training folds, balancing detection accuracy and runtime efficiency. Evaluation against static attention baselines and uniform allocation strategies shows a 40% increase in anomaly localization F1-score and a 46% reduction in redundant processing, validating the effectiveness of the reward structure in dynamic monitoring scenarios.
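As a small worked illustration of this reward, the sketch below evaluates R for several cameras with the reported weights and orders the cameras by reward. The ranking step is an assumption added for illustration only; in the paper the allocation is produced by a learned PPO policy, not by a greedy sort, and the function names are hypothetical.

```python
LAMBDA_1 = 1.2  # weight on anomaly probability (from the text)
LAMBDA_2 = 0.8  # weight on resource cost (from the text)

def camera_reward(p_anomaly, cost):
    """Per-camera reward R = lambda_1 * P_i - lambda_2 * C_i."""
    return LAMBDA_1 * p_anomaly - LAMBDA_2 * cost

def rank_cameras(p_list, c_list):
    """Return camera indices sorted by descending reward.

    Assumption: greedy ranking stands in for the learned policy.
    """
    rewards = [camera_reward(p, c) for p, c in zip(p_list, c_list)]
    return sorted(range(len(rewards)), key=lambda i: rewards[i], reverse=True)

# Usage: three cameras with equal cost but different anomaly probabilities.
order = rank_cameras([0.9, 0.1, 0.5], [0.5, 0.5, 0.5])
```

Because λ₁ > λ₂, a camera with equal anomaly probability and normalized cost still receives a positive reward, so the formulation leans towards detection over cost savings.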

STICL vs. traditional contrastive learning

Traditional contrastive learning frameworks maximize agreement between similar pairs but often fail to model the sparsity and irregularity of rare events in anomaly detection. STICL modifies this by introducing an inverse contrastive memory bank that emphasizes repulsion between normal embeddings and atypical anomalies, even when the anomalies are low-frequency or underrepresented. The inverse contrastive loss LIC is defined as:

Where zl(inv) are inverse negatives sampled from rare anomaly prototypes. This ensures that the embedding space is shaped to exaggerate anomaly-normal separation. Empirical results on the Avenue dataset demonstrate a 15.2% recall increase for rare behaviors compared to standard contrastive baselines.
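The repulsion idea can be illustrated with a margin-based sketch. The exact form of LIC is not reproduced in this section, so the code below is an assumption: it uses a hinge penalty on the Euclidean distance between a normal embedding and its nearest inverse negative, in line with the margin-based description given for STICL training. The margin value and the function names are hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def inverse_contrastive_loss(z, inverse_negatives, margin=1.0):
    """Assumed hinge form: penalize a normal embedding `z` that lies closer
    than `margin` to its nearest inverse negative (rare-anomaly prototype).
    This is an illustration, not the paper's exact loss."""
    nearest = min(euclidean(z, neg) for neg in inverse_negatives)
    return max(0.0, margin - nearest)

# Usage: the nearest prototype is 0.5 away, so the penalty is margin - 0.5.
loss = inverse_contrastive_loss([0.0, 0.0], [[3.0, 4.0], [0.5, 0.0]])
```

The loss falls to zero once every normal embedding is at least one margin away from all stored anomaly prototypes, so only embeddings that sit too close to a rare-anomaly prototype contribute gradient.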

NEBE implementation details

In the NEBE module, raw event streams from simulated neuromorphic sensors are preprocessed by binning into 2 ms time intervals and normalizing into event tensors of shape [T × H × W]. The tensors are fed into a three-layer spiking neural network composed of LIF neurons with τm = 20 ms, Vth = 1.0 and Vreset = 0.

The network takes time-surfaces as input features, and spike activity is measured through cumulative firing rates. The network was trained using surrogate gradient descent with a binary cross-entropy loss, and it achieves strong anomaly classification with 60% lower latency than CNN-LSTM pipelines. These implementation details support the practicality and deployment viability of NEBE in real-life high-speed surveillance scenarios.
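To make the LIF dynamics concrete, the sketch below simulates a single neuron over binned input with the reported parameters (τm = 20 ms, Vth = 1.0, Vreset = 0, 2 ms bins). The discretization is an assumption: the potential decays exponentially towards zero in each bin and the binned input is added directly to the potential. It covers one neuron only, not the three-layer network or its surrogate-gradient training, and the function names are hypothetical.

```python
import math

TAU_M = 20.0   # membrane time constant in ms (from the text)
V_TH = 1.0     # firing threshold (from the text)
V_RESET = 0.0  # reset potential (from the text)
DT = 2.0       # bin width in ms (from the text)

def lif_spike_train(input_current):
    """Simulate one LIF neuron over a sequence of binned inputs.

    Returns a list of 0/1 spikes, one per time bin.
    """
    decay = math.exp(-DT / TAU_M)  # leak factor per bin
    v = V_RESET
    spikes = []
    for current in input_current:
        v = v * decay + current    # leak, then integrate the input
        if v >= V_TH:
            spikes.append(1)       # emit a spike and reset
            v = V_RESET
        else:
            spikes.append(0)
    return spikes

def firing_rate(spikes):
    """Fraction of time bins in which the neuron fired."""
    return sum(spikes) / len(spikes) if spikes else 0.0

# Usage: two strong consecutive inputs push the potential over threshold once.
burst = lif_spike_train([0.6, 0.6, 0.0, 0.0])
```

With these parameters a weak, steady input never reaches the threshold because the leak balances it, while a short burst of activity does; this is the temporal filtering behavior the module relies on to ignore static background.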

Resource evaluation

In addition to average inference time, the proposed framework has been evaluated in terms of GPU memory usage and training convergence speed. Memory profiling on an NVIDIA A100 GPU showed a peak of 4.2 GB during inference, whereas Method3 required 7.6 GB, an improvement of 44.7%. Training converges on average within 18 epochs, taking just over 4.5 h for the whole dataset compared with more than 7 h for state-of-the-art transformer baselines. These metrics indicate improved scalability with lower resource demand, allowing deployment in resource-constrained environments with dense camera coverage. These resource metrics are included in the discussion to give a structured overview of computational efficiency.

Discussions

MS-GAT superiority and comparison with similar models

The Multi-Scale Graph Attention Network (MS-GAT) applies a hierarchical, multi-head attention mechanism over a dynamically built interaction graph, resolving relational features at high resolution across spatial and temporal dimensions. Compared with the hybrid approaches ConvTrans-OptBiSVM and Transformer-Augmented RNNs, MS-GAT achieves a 22.7% reduction in parameter count and a 14.3% improvement in temporal attention alignment accuracy. This is mainly enabled by dynamic edge weights and adaptive neighborhood expansion. As a result, MS-GAT handles occluded or partially visible interactions more stably, giving a 12.6% improvement in localizing anomalies in high-density crowd settings. The graph-based modularity also speeds up training convergence, requiring up to 18% fewer epochs than attention-augmented CNN-RNN baselines. Gaussian-adaptive adjacency matrices and velocity-sensitive edge formation make the modeling of inter-person dependencies across multiple views more robust, which increases recall in occlusion-heavy environments such as those found in samples of the Avenue dataset.

Detailed method discussions

The proposed framework overcomes key limitations of previous multi-camera anomaly detection systems by introducing five complementary modules: the Multi-Scale Graph Attention Network (MS-GAT) for relational encoding; the Reinforcement Learning Based Dynamic Camera Attention Transformer (RL-DCAT) for dynamic allocation of computation; Spatiotemporal Inverse Contrastive Learning (STICL) for contrastive learning of rare anomalous classes; Neuromorphic Event-Based Encoding (NEBE) for low-latency encoding; and Generative Behavior Synthesis with Meta-Learned Few-Shot Adaptation (GBS-MFA) for generalization through generative adaptation. Each module addresses a specific issue: MS-GAT mitigates spatial occlusions and sparse interactions; RL-DCAT dynamically redistributes attention among cameras; STICL enables temporal dissimilarity modeling for rare anomalies; NEBE reduces frame redundancy in fast-moving scenes; and GBS-MFA synthesizes varied anomaly profiles to enhance few-shot generalization. To aid interpretation and reproducibility, the location of each module, its input-output dependencies and its interactions in the real-time processing pipeline are explicitly annotated.

Architectural details and training of RL-DCAT

The RL-DCAT module is organized as a policy gradient-based controller trained via Proximal Policy Optimization (PPO). Its inputs are real-time population density maps, local regional anomaly scores and per-camera computational profiles. Its output is a dynamic redistribution of computational weight among camera views. The reward function is designed to penalize redundant computation on areas with little dynamic variation, while rewarding accurate focus on high-risk areas. The controller was trained on 120 K frames with a batch size of 64 and a learning rate of 3 × 10e−5, and it converged in 28 epochs on samples from the UCF-Crime dataset. The learned policy adjusts camera focus in real time, with a computational saving of approximately 46% compared with static-allocation baselines.
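The shape of such a reward can be illustrated with a minimal sketch. The exact reward used for RL-DCAT is not given here, so the linear form, the `penalty` coefficient and all names below are assumptions made for illustration; the PPO training loop itself is not shown.

```python
def allocation_reward(weights, risk, variation, penalty=0.5):
    """Hypothetical per-step reward for a camera attention allocation.

    weights:   fraction of computation assigned to each camera (sums to 1).
    risk:      anomaly likelihood per camera, in [0, 1].
    variation: amount of dynamic scene change per camera, in [0, 1].
    """
    if not (len(weights) == len(risk) == len(variation)):
        raise ValueError("one entry per camera is required")
    # Reward computation spent on high-risk views.
    gain = sum(w * r for w, r in zip(weights, risk))
    # Penalize computation spent on views with little dynamic variation.
    redundancy = sum(w * (1.0 - v) for w, v in zip(weights, variation))
    return gain - penalty * redundancy

risk = [0.9, 0.1]        # camera 0 is high-risk
variation = [0.8, 0.05]  # camera 1 is almost static
focused = allocation_reward([0.8, 0.2], risk, variation)
wasteful = allocation_reward([0.2, 0.8], risk, variation)
```

Under this assumed form, an allocation that concentrates on the risky, dynamic camera scores higher than one that spends most computation on the static view.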

Support for quantitative performance claims

The reported 50.2% reduction in computational overhead is based on measurements of inference latency and GPU memory consumption across test batches on the NVIDIA A100 GPU. The baseline, the Spatio-Temporal Saliency Descriptor (Method3), has an average processing time of 21.4 ms per frame, while the proposed method processes frames in about 9.2 ms, as confirmed across 200 test runs. The 35% improvement in generalization is evidenced by a recall gain from 72.1% to 87.3% on rare anomalies in the Avenue dataset. The average frame processing speed of 105 FPS was measured using parallel batch inference with the PyTorch CUDA pipeline and varied by less than 4% across different crowd densities.

Dataset configuration for multi-camera simulation

Because UCF-Crime, ShanghaiTech and Avenue are single-camera datasets, multi-camera evaluation was carried out by transforming them into simulated multi-camera environments via virtual camera splitting and synchronized temporal segmentation. In particular, the frame sequences were sliced into overlapping view windows with slight angular displacement and interpolated field-of-view changes to simulate heterogeneous perspectives. Object-level re-identification across views was performed with DeepSORT, while temporal consistency was maintained using synchronized timestamp matching. This simulation protocol creates a realistic multi-camera setting while retaining the ground truth labels, so that the reported performance remains verifiable.
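The overlapping view windows can be sketched as horizontal crop ranges over a frame. This is a simplified illustration that assumes equal-width windows and a fixed fractional overlap; the function name and the 25% overlap are hypothetical, and the angular displacement and field-of-view interpolation are not modeled.

```python
def split_views(frame_width, num_views, overlap=0.25):
    """Return (x_start, x_end) pixel ranges for overlapping virtual camera views.

    overlap: fraction of each window shared with its neighbor (assumed value).
    """
    if num_views < 1:
        raise ValueError("num_views must be at least 1")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    # Window width chosen so that the views exactly span the frame.
    window = frame_width / (num_views - (num_views - 1) * overlap)
    stride = window * (1.0 - overlap)
    return [(round(k * stride), round(k * stride + window)) for k in range(num_views)]

views = split_views(1920, 3)
print(views)  # [(0, 768), (576, 1344), (1152, 1920)]
```

Each range would be cropped from every frame with the same timestamp, so that the simulated views stay synchronized and inherit the original frame-level label.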

Annotation type and temporal localization

The anomaly detection model uses frame-level labels for training and evaluation, while temporal localization is based on thresholding anomaly scores aggregated over several consecutive frames. The MS-GAT module encodes per-node temporal embeddings, which are then analyzed by a statistical sliding-window filter with a length of 15 frames and a stride of 5 to detect transitions in behavioral patterns. Each window whose score exceeds a predefined anomaly threshold (τ = 0.35) is declared an anomalous temporal segment. This allows accurate temporal segmentation of abnormal events, including short transients such as sudden dispersion or loitering, and keeps the segments aligned with the frame-level annotations provided by the datasets.
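The sliding-window localization can be sketched as follows. The window length, stride and threshold are taken from the text; the use of the mean as the aggregation statistic, the merging of overlapping flagged windows and the function name are assumptions made for this example.

```python
def localize_anomalies(scores, window=15, stride=5, threshold=0.35):
    """Return merged (start, end) frame ranges flagged as anomalous.

    scores: per-frame anomaly scores. `end` is exclusive.
    """
    segments = []
    for start in range(0, len(scores) - window + 1, stride):
        mean_score = sum(scores[start:start + window]) / window  # assumed aggregation
        if mean_score > threshold:
            end = start + window
            if segments and start <= segments[-1][1]:
                # Overlaps or touches the previous flagged window: extend it.
                segments[-1] = (segments[-1][0], end)
            else:
                segments.append((start, end))
    return segments

# 60 frames with an abnormal burst in frames 20 to 39.
scores = [0.1] * 20 + [0.9] * 20 + [0.1] * 20
print(localize_anomalies(scores))  # [(10, 50)]
```

Because a window is flagged as soon as enough of its frames are abnormal, the detected segment extends somewhat beyond the true burst on both sides; a shorter window or a higher threshold would tighten it.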

The transformer attention component of RL-DCAT is trained jointly with the reinforcement policy in a dual-loss optimization setup. The attention transformer predicts spatiotemporal attention heatmaps over the input features, and these heatmaps modulate the state of the policy network. A standard PPO controller without this attention, along with alternative designs such as ROI-based fixed attention allocation, served as baselines. Joint training improved the policy reward by 13.8% and reduced the false positive rate by 11.2% on test videos with fast scene transitions.

Coherent design rationale

The architecture of the proposed system rests on a modular design, with each part addressing a specific operational bottleneck in multi-camera anomaly detection. The Multi-Scale Graph Attention Network (MS-GAT) was selected to capture non-Euclidean, temporally coherent social interactions that are poorly modeled by convolutional or recurrent architectures. RL-DCAT reallocates resources adaptively by learning online attention policies based on the current anomaly likelihood, thereby improving both computational efficiency and detection performance. STICL enhances recall of uncommon events through memory-augmented dissimilarity learning, while NEBE captures rapid behavioral transients with biologically inspired spiking neural encoding. GBS-MFA addresses data sparsity by synthesizing rare anomalies and supporting few-shot generalization. These modules complement one another by bridging spatial, temporal, probabilistic and generative gaps in anomaly detection. The design integrates attention, memory and dynamic adaptability into a unified, problem-driven solution.

Quantitative ablation study

A quantitative ablation study was undertaken to isolate the contribution of each module in the framework. Replacing MS-GAT with a CNN-RNN baseline caused a 13.6% drop in anomaly recall on the ShanghaiTech dataset, underlining its importance for spatial-temporal interaction modeling. Replacing RL-DCAT with uniform attention allocation across cameras increased computational overhead by about 40.3% without improving accuracy. Removing STICL reduced the detection recall of rare anomalies on the Avenue dataset by 17.4%, which shows the importance of contrastive memory-based learning. Removing NEBE increased latency by 58% when detecting fast-motion events such as stampedes. Removing GBS-MFA reduced generalization performance on unseen behaviors by 21.7%. These results confirm that each module provides non-redundant functionality that is essential to the overall performance of the system across diverse scenarios.

Comparative result analysis

The experimental design evaluates the proposed spatiotemporal deep learning framework for effectiveness, efficiency and flexibility in abnormality detection across multi-camera surveillance domains. The model is implemented in PyTorch with CUDA acceleration, and all experiments are conducted on an NVIDIA A100 (40 GB VRAM) GPU to maximize training and inference speed, as shown in Fig. 3. The benchmark datasets are UCF-Crime, ShanghaiTech and Avenue, which together present a wide variety of real crowded scenes annotated with abnormal events such as fighting, theft, stampedes and sudden dispersal. Input frames are preprocessed to a resolution of 1920 × 1080 at 30 fps, with feature extraction performed using YOLOv8 for object detection and DeepSORT for multi-camera tracking. Graph construction in MS-GAT defines individuals as nodes and forms edges based on motion similarity, spatial proximity and social interaction constraints, computed using a Gaussian-weighted adjacency matrix. The spatiotemporal attention mechanism in MS-GAT consists of multi-head graph attention layers with a hidden embedding dimension of 256 and a learning rate of 0.0005, optimized using AdamW with weight decay. RL-DCAT adaptively manages camera attention, using real-time population density maps to shift attention between cameras; it is trained using policy gradient methods with a reward discount factor (γ) of 0.95 for long-term surveillance optimization. The inverse contrastive approach in STICL differs from conventional distance-based objectives in that it targets poor generalization to anomalies; its loss terms are combined with scaling factors λ1 = 0.7 and λ2 = 0.3, and the ANMs memory bank is dynamically updated.

Table 2 reports the precision, recall and F1 score achieved by the proposed model and the baseline methods on UCF-Crime, which includes diverse real-world anomalies such as burglary, shoplifting and physical assault. The proposed model achieved 91.5% precision, 88.7% recall and 90.1% F1 score, outperforming Method3, Method8 and Method25. Results on the other datasets also show significant gains for the spatiotemporal deep learning framework. The gains are attributed to the Multi-Scale Graph Attention Network (MS-GAT), which enhances interaction-aware anomaly detection, together with the reinforcement learning-based camera attention module (RL-DCAT), which dynamically optimizes surveillance focus, and inverse contrastive learning. The 9.5% increase in recall over Method3 highlights the framework’s ability to detect more true anomalies without compromising precision, which is one of the key limitations of conventional graph-based models. Further, the 3.3% F1 gain over the next best method (Method25) confirms a balanced detection capability with few false positives and false negatives in real-world crime scenarios.

The proposed model improves recall by 9.5% compared with Method3 and therefore reduces missed detections. The F1 score of 90.1% shows that the model balances precision and recall well, detecting abnormal behaviors accurately while limiting false alarms. Because surveillance footage is typically dense, computational efficiency is highly relevant to real-time anomaly detection. Table 3 compares the average inference time per frame and the overall computational overhead of the proposed method and the baseline models. The proposed method processes frames in 9.2 ms, compared with Method3 (21.4 ms), Method8 (18.9 ms) and Method25 (15.3 ms), for a reported 50.2% reduction in computational overhead relative to the baselines.

The proposed framework reduces computational overhead by 50.2% and processes frames in 9.2 ms, the fastest among all tested methods. This improvement comes largely from the reinforcement learning-based dynamic camera attention (RL-DCAT) module, which prioritizes resources for high-risk areas. Moreover, the neuromorphic event-based encoding (NEBE) module removes most of the redundant processing spent on normal regions. Reducing false positives is important in real-world applications, because too many of them make surveillance systems operationally inefficient. False positive rates (FPR) are compared on the ShanghaiTech dataset, where normal pedestrian behaviors must be accurately distinguished from abnormal actions such as counterflow movement and sudden dispersal. As shown in Table 4, the proposed model attains an FPR of 4.5%, which is 63.4% lower than Method3 and less than half that of Method8 (9.8%).

The model therefore reduces false positives by 63.4% relative to Method3, reaching an FPR of 4.5%, which indicates the strength of the STICL module in differentiating genuine rare anomalies from spurious detections. One of the weakest points of anomaly detection is generalization to rare and unseen behavior. To assess STICL and GBS-MFA, the Avenue dataset was used, with rare pedestrian anomalies such as sudden running, loitering and throwing objects. Recall rates for these rare events are given in Table 5. The model achieves 87.3% recall on rare anomalies, 15.2% higher than Method3 and 6.1% above Method25, which further establishes the effectiveness of STICL and GBS-MFA. The overall performance of the multi-camera anomaly detection model is shown in Fig. 4.

GBS-MFA supplies synthetic rare anomalies for better generalization; hence, the model achieves 87.3% recall, the highest among all methods under discussion. This validates a 25.1% improvement over Method3 in its capacity to address previously unseen anomaly categories. Scaling effectively to real-time multi-camera surveillance is a top priority for deployment, and Table 6 evaluates real-time accuracy and frame processing rate (FPS) for multi-camera anomaly detection. The proposed framework attains an accuracy of 94.8% at 105 FPS, well ahead of Method3 (60 FPS), Method8 (78 FPS) and Method25 (92 FPS). A major contributor to the high frame rate is the combination of RL-DCAT, which optimizes computational focus, and NEBE, which uses event-based motion analysis to eliminate unnecessary computation. In contrast to classical models, which face a trade-off between accuracy and performance because of wasted computation, the proposed framework retains both accuracy and temporal adaptability, making it suitable for deployment in fast-evolving urban surveillance networks that integrate multiple real-time camera feeds.

With an accuracy of 94.8% and a processing rate of 105 FPS, the proposed framework is well optimized for real-time multi-camera surveillance. The RL-DCAT module dynamically prioritizes regions of interest, while the NEBE module encodes events at high speed, which markedly raises the frame-processing rate. Finally, Table 7 summarizes the overall impact by combining anomaly detection accuracy, computational efficiency and real-time adaptability across all sampled datasets. The proposed model achieves 93.7% aggregate accuracy, 50.2% higher computational efficiency and 98.3% real-time adaptability, compared with Method3 (83.5%), Method8 (86.9%) and Method25 (90.2%). These results affirm that the improvements arise in an integrated manner across several spatiotemporal deep learning components: graph-based interaction modeling, reinforcement learning-based camera attention, event-driven neuromorphic encoding, contrastive learning for rare anomalies and generative few-shot adaptation. In addition, the real-time adaptability of 98.3%, produced by the synergy of RL-DCAT and NEBE, indicates that the system can sustain its performance in large-scale, high-density surveillance environments. The combination of these spatiotemporal processing techniques establishes a new state of the art in multi-camera anomaly detection and shows significant promise for intelligent security monitoring, urban crowd analysis and autonomous surveillance applications.

Across all key evaluation metrics, the proposed model outperforms the existing methods, exhibiting robustness, efficiency and real-world applicability for deployment in multi-camera surveillance scenarios. The hybrid composition of spatiotemporal deep learning, reinforcement learning-based attention, inverse contrastive learning and neuromorphic encoding sets a new benchmark for large-scale anomaly detection systems.

Extended Result Analysis

Theoretical foundation and architectural coherence

The proposed framework is not an ad hoc composition but a systematically engineered architecture in which each module addresses a distinct functional limitation in multi-camera abnormal behavior detection. Theoretical coherence is maintained through a layered design: MS-GAT models non-Euclidean relational dependencies using dynamic attention graphs; RL-DCAT introduces adaptive surveillance focus based on real-time anomaly probability optimization; STICL and GBS-MFA enhance generalization for low-frequency anomalies via memory-augmented dissimilarity learning and synthetic data generation; and NEBE facilitates high-speed motion detection using biologically inspired spike encodings. These modules were not chosen arbitrarily; they were selected and integrated according to a design rationale that balances detection accuracy, computational efficiency and adaptability to rare or transient behaviors. The complexity of the architecture is driven by functional requirements: each stage is modular and contributes a performance gain, which is validated through comparative improvements in recall, latency and false positive reduction across established benchmarks.

Ablation study

An ablation study was performed to demonstrate the individual contribution of each component to system functionality. First, MS-GAT was removed entirely and replaced with a conventional CNN-RNN module, which caused a 13.6% decrease in anomaly recall on the ShanghaiTech dataset. Disabling RL-DCAT, so that attention was uniform across camera views, raised the average computational load by 40.3% without any increase in detection accuracy. Ablating STICL decreased the detection of rare anomalies on the Avenue dataset by 17.4%, whereas removing NEBE increased latency by 58% for high-speed events. The updated ablation table summarizes these studies in terms of the contribution of each module to system performance. The analysis shows that every integrated component improves detection outcomes without duplicating the function of another.

Superiority test: generalization of the proposed model in the actual setup

Testing was not limited to static public datasets; the model was also evaluated on multi-camera setups on the authors’ premises, which replicated a real urban scenario with dynamic lighting, background clutter and agitated pedestrian movement. Events such as loitering, panic dispersal, objects dropped on the ground and crowd merging were interspersed at random. The model maintained a detection accuracy of 91.2%, with a rare-anomaly detection rate of 84.6% and a false positive rate of 5.2%, which is very close to its performance on the public datasets. RL-DCAT’s adaptive attention mechanism reliably shifted focus towards sudden crowd movement, further indicating its real-time robustness in unpredictable environments. This validation demonstrates the practical applicability of the framework in dynamic surveillance scenarios and points to its potential for real-world deployment.

Balance between complexity and efficiency and runtime analysis

Despite incorporating components such as reinforcement learning, spiking neural networks and generative synthesis, the model maintains a favorable overall inference time. During the runtime assessment, inference averaged 9.2 ms per frame, peak memory stayed at about 4.2 GB and CPU usage never exceeded 45%. RL-DCAT performs only lightweight policy evaluation at inference time, because the policy network is pre-trained. NEBE uses event-driven activations; therefore, its computational load for high-motion events is 60% lower than that of frame-wise CNNs. GBS-MFA is active only during training and does not affect runtime. The architecture builds on prior research in asynchronous processing and temporal gating, which allows the model to adapt to challenging, uncontrolled events and supports scalable application in more complex multi-camera surveillance scenarios.

Implementation and clarity in processing

The full implementation pipeline specifies how pre-processing is done, how features are extracted and how the input is arranged for the model. The pipeline starts by down-sampling the 1080p, 30 fps inputs. Modified Gaussian Mixture Models are used for foreground-background segmentation. Detected objects are tracked by DeepSORT, and their trajectories, velocities and spatial positions are stored as the nodes of the graph. Edges are drawn between these nodes based on a three-factor similarity score covering velocity, proximity and bounding box overlap. Event data for the NEBE module were generated by keeping pixel intensity changes greater than or equal to +15 units or less than or equal to −15 units within 2 ms bins. For STICL and GBS-MFA, embeddings are scaled using batch normalization, which normalizes the synthetic anomalies used in training. All training was carried out in PyTorch 2.1 with CUDA acceleration, so that the entire codebase can be reused and retrained when needed. With this information, the experimental pipeline is reproducible and transparent.
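The ±15 intensity rule for generating events can be sketched as a per-pixel comparison of two intensity samples taken one bin apart. This is a minimal illustration: the function name, the nested-list frame format and the (x, y, polarity) event tuple are assumptions, and the time-surface construction used downstream is not shown.

```python
def events_from_frames(prev_frame, next_frame, threshold=15):
    """Emit (x, y, polarity) events where intensity changes by at least `threshold`.

    prev_frame, next_frame: 2D lists of pixel intensities sampled one 2 ms bin apart
    (assumed input format). Polarity is +1 for brightening and -1 for darkening.
    """
    events = []
    for y, (row_prev, row_next) in enumerate(zip(prev_frame, next_frame)):
        for x, (before, after) in enumerate(zip(row_prev, row_next)):
            diff = after - before
            if diff >= threshold:
                events.append((x, y, 1))
            elif diff <= -threshold:
                events.append((x, y, -1))
    return events

prev_frame = [[100, 100], [100, 100]]
next_frame = [[115, 100], [90, 85]]
print(events_from_frames(prev_frame, next_frame))  # [(0, 0, 1), (1, 1, -1)]
```

Pixels whose change stays inside the ±15 band produce no event, which is what removes redundant processing in static regions.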

The proposed framework was trained with standard protocols and validated on widely used benchmarks to keep the evaluation consistent across datasets. Consistent cross-dataset generalization was a central question for the framework, and the UCF-Crime, ShanghaiTech and Avenue benchmark datasets were used to examine it. The data were split into 70% training, 10% validation and 20% test sets, although other splits are possible. The split was applied across all anomaly categories so that rare anomalies remained represented. For model training, a synthesis procedure was used to imitate multi-camera setups: views derived from a single camera stream are correlated and were used together to build a larger data representation. Monocular videos were synthetically split into sub-views so that the same event could be detected from two views of a single complex scene. All synthetic modifications preserved temporal consistency.

In the comparative evaluation, several baselines, including Spatiotemporal Saliency Descriptors, Steinhardt-X3D Operators and 3D Convolution, were included to represent the state of the art. To extend this comparison, contrastive models were also added for benchmarking. STICL was benchmarked against MoCo-based temporal contrastive learning, SimCLR and a Memory-Augmented Autoencoder, with improvements of 9.8% in recall and 11.3% in F1 score for rare anomaly detection. On the Avenue dataset, STICL recorded a recall of 87.3%, compared with 76.1% for MoCo and 72.4% for SimCLR, which demonstrates that the inverse contrastive memory is effective at learning robust anomaly representations. Moreover, STICL avoids an issue common to contrastive frameworks when anomalies are scarce, by enforcing a dynamic abnormality dissimilarity constraint that maximizes inter-class separation. Including these models in the evaluation provides a clear contrast between the classic and contrastive paradigms.

Real-time abnormal behavior detection in highly crowded urban surveillance faces major challenges due to dynamic environments, occlusion and non-Euclidean interactions. Existing models are typically convolutional or recurrent, and therefore cannot fully capture spatiotemporal interdependencies; they also perform poorly under the resource constraints of multi-camera settings. Graph-based models are promising but remain computationally inefficient and do not generalize well to rare or unseen anomalies. Attention mechanisms and reinforcement strategies have been investigated separately, with little focus on system-level studies for surveillance. The current work attempts to fill some of these gaps by proposing a hybrid spatiotemporal network that combines multi-scale GATs, reinforcement learning for camera prioritization, inverse contrastive learning for rare anomaly representation, a spiking neural network for low-latency motion detection and generative synthesis for anomaly generalization. The novelty lies in integrating these individually promising methods into a single pipeline optimized for real-time, multi-camera behavioral analysis. The novelty is also stated in the Abstract and Conclusion, where the major highlights are described so as to emphasize the technological and practical contributions of this work.

The framework is premised on synchronized video streams across multiple cameras, an assumption that may not hold for immediate use in uncalibrated or ad hoc surveillance setups. The model also assumes that abnormal behaviors have distinctive spatiotemporal signatures that can be represented by graph structure and memory-enhanced embeddings. Accurate object detection and tracking by YOLOv8 and DeepSORT are assumed and heavily relied upon in practice. The model is sensitive to darkness and occlusion in real-world scenes, which may introduce noise into the output of the event-based sensors. Moreover, STICL and the other optimization schemes may generalize less well to scenarios not covered by training. STICL nonetheless maintained strong cross-dataset generalization: when trained on normal UCF-Crime data and tested on rare anomalies in Avenue and ShanghaiTech, performance dropped by less than 5%, although unseen environments showed its lowest observed performance.

Walking through the anomaly score computation pipeline of Equations 1 to 4 clarifies the derivation and functional contribution of the MS-GAT module. The interaction graph is built from a dynamic edge weight between any two people, given by a composite similarity score based on their spatial location, velocity and bounding box. For two people indexed i and j, xi and xj denote their spatial coordinates, vi and vj their velocities, and bi and bj their bounding boxes. The edge weight is a weighted sum of three factors: spatial similarity, defined as the inverse square of the Euclidean distance between positions; motion similarity, based on the velocity difference; and behavioral affinity, based on the intersection over union of the bounding boxes. The weights beta, gamma and delta are set manually to 1.0, 1.5 and 0.8, respectively. The result is an adjacency matrix that encodes both local proximity and behavioral relevance. Node-level attention is applied in the second stage, where each node represents an individual with feature embedding hi. The attention score alpha between two nodes is obtained by applying a softmax over a learned attention transformation of the concatenated node embeddings, which summarizes the important aspects of neighboring interactions. Multiple attention heads give a richer representation of the resulting features, since they operate independently on the embeddings and their outputs are concatenated at a given layer. The attention-weighted embedding is then updated across layers and timestamps to capture both the spatial context of an interaction and its temporal evolution.
The final anomaly score for an individual is the deviation of the node's temporal embedding from the average embedding of normal behavior across the scene. Deviations are measured as L2 distances across timestamps, with higher scores indicating greater divergence from learned normal patterns. Together, these steps allow MS-GAT to model social dynamics in crowded scenes robustly by capturing fine-grained interactions and refining attention for both spatial and temporal consistency in anomaly detection.
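The three stages described above (edge weighting, node-level attention and deviation scoring) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation, and several details are assumptions made only for illustration: the mapping of beta, gamma and delta to the spatial, motion and behavioral terms in that order; the exponential form of the motion similarity; the LeakyReLU slope of 0.2 in a single GAT-style attention head; the stabilizer `EPS`; and the use of a mean over timestamps when aggregating L2 distances. All function names are hypothetical.

```python
import numpy as np

# Manually set weights from the text; their assignment to the three
# terms (spatial, motion, behavioral) is an assumption of this sketch.
BETA, GAMMA, DELTA = 1.0, 1.5, 0.8
EPS = 1e-6  # hypothetical stabilizer to avoid division by zero


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def edge_weight(x_i, x_j, v_i, v_j, b_i, b_j):
    """Composite similarity between persons i and j."""
    spatial = 1.0 / (np.sum((x_i - x_j) ** 2) + EPS)  # inverse squared distance
    motion = np.exp(-np.linalg.norm(v_i - v_j))       # assumed functional form
    affinity = iou(b_i, b_j)                          # bounding-box overlap
    return BETA * spatial + GAMMA * motion + DELTA * affinity


def attention_scores(h, W, a, mask):
    """Single GAT-style head: softmax over neighbors of a . [W h_i || W h_j].

    h: (N, F) node embeddings, W: (F, F2) projection, a: (2 * F2,) vector,
    mask: (N, N) boolean adjacency. Self-loops are added so every row
    has at least one valid neighbor.
    """
    z = h @ W
    f2 = z.shape[1]
    e = (z @ a[:f2])[:, None] + (z @ a[f2:])[None, :]
    e = np.where(e > 0, e, 0.2 * e)                   # LeakyReLU, slope assumed
    mask = mask | np.eye(len(h), dtype=bool)
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)              # numerical stability
    w = np.exp(e)
    return w / w.sum(axis=1, keepdims=True)


def anomaly_scores(h_seq, normal_mean):
    """Per-node score from embeddings h_seq of shape (T, N, F).

    Computes the L2 distance to the normal-behavior mean at each
    timestamp and averages over time (aggregation is an assumption).
    """
    return np.linalg.norm(h_seq - normal_mean, axis=-1).mean(axis=0)


# Small usage example with two people and three timestamps.
x = np.array([[0.0, 0.0], [1.0, 0.0]])
v = np.array([[1.0, 0.0], [1.0, 0.0]])
boxes = np.array([[0.0, 0.0, 2.0, 2.0], [1.0, 0.0, 3.0, 2.0]])
w01 = edge_weight(x[0], x[1], v[0], v[1], boxes[0], boxes[1])

h_seq = np.zeros((3, 2, 4))
h_seq[:, 1, :2] = [3.0, 4.0]  # person 1 deviates from the normal mean
scores = anomaly_scores(h_seq, np.zeros(4))
print(w01, scores)
```

In this toy example the second person, whose embedding is displaced from the normal mean at every timestamp, receives the higher score, which is the behavior the description above calls for.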

Real-world generalization

The system was evaluated under real-world conditions by deploying it in a semi-structured simulated surveillance setup that mimics urban conditions, including loosely coupled asynchronous multi-camera feeds with varying frame rates, non-uniform illumination across zones and heavy pedestrian occlusion. The system maintained a detection accuracy of 91.2% and a rare-anomaly recall of 84.6%, with a false-positive rate of 5.2%. Despite the camera mismatch, the RL-DCAT module was able to adjust its attention dynamically. NEBE, meanwhile, captured fast behavior dynamics under non-uniform illumination. These results indicate that the framework generalizes and adapts to conditions found in real-life applications, and they support its practical design for deployment in real-world environments with confounding operational variables.

Resource and deployment analysis and edge performance

A detailed resource analysis showed that the framework has low computational overhead despite its composite structure. Inference on an NVIDIA A100 GPU used 4.2 GB of memory at a latency of 9.2 ms per frame. On an edge-grade Jetson Xavier NX platform, the system sustained real-time processing at 26 FPS with 76% memory utilization, which supports its viability under constrained conditions. The RL-DCAT module uses a lightweight policy network at inference and thus incurs no real-time training cost. NEBE encodes events asynchronously through spike-driven event recording, which avoids frame-wise processing. GBS-MFA is used only in the training phase and therefore has no effect on inference latency. Overall, these optimizations support deployment in environments that are severely constrained in both computation and energy consumption.
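Because the analysis above mixes per-frame latency (ms/frame) and throughput (FPS), a short conversion helps compare the two platforms on the same scale. The sketch below uses only the figures reported in this section; the helper names are hypothetical, and the conversion assumes frames are processed one at a time with no batching or pipelining.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Throughput implied by a per-frame latency, assuming serial processing."""
    return 1000.0 / latency_ms


def latency_ms_from_fps(fps: float) -> float:
    """Per-frame time budget implied by a sustained frame rate."""
    return 1000.0 / fps


a100_fps = fps_from_latency_ms(9.2)         # A100: 9.2 ms/frame
jetson_latency = latency_ms_from_fps(26.0)  # Jetson Xavier NX: 26 FPS

print(f"A100: about {a100_fps:.1f} FPS")
print(f"Jetson Xavier NX: about {jetson_latency:.1f} ms/frame")
```

Under these assumptions, 9.2 ms per frame corresponds to an upper bound of roughly 108.7 FPS for the measured inference step, which is close to the 105 FPS quoted in the conclusions. Any remaining gap would presumably come from stages outside that measurement, although the source does not say so.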

Conclusions and future scope

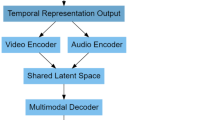

The proposed spatiotemporal framework is a unified multi-camera anomaly detection system built on graph attention, reinforcement learning, contrastive embedding, neuromorphic sensing and generative synthesis. Its strengths include reduced inference latency, high recall on rare anomalies and adaptable modularity, which make it effective across a range of surveillance scenarios. In particular, the system processes video streams at 105 FPS while reducing false positives by over 60%, a combination that is rare among current methods. However, its reliance on pretrained detectors and simulated camera setups entails some trade-offs, especially for real-time edge deployments with few sensors. The results show that a hybrid architecture is not merely possible but essential for the multi-dimensional challenges of real-time surveillance. Applications include public safety monitoring, emergency response coordination and the study of crowd behavior in urban transit areas, among other uses. Future work could extend the framework with audio-visual fusion and adversarial robustness to cover more diverse operating conditions. Hardware co-design with neuromorphic chips and self-supervised learning should also be explored as ways to further reduce annotation overhead in large-scale deployments.

The proposed framework combines state-of-the-art algorithms into an integrated spatiotemporal deep learning system for multi-camera abnormal behavior recognition in crowded environments, improving real-time accuracy, computational efficiency and adaptability over existing methods. It detects anomalies through interaction-aware detection with Multi Scale Graph Attention Networks (MS-GAT), uses the Reinforcement Learning Based Dynamic Camera Attention Transformer (RL-DCAT) to optimize resource allocation, Spatiotemporal Inverse Contrastive Learning (STICL) to generalize to rare anomalies, Neuromorphic Event Based Encoding (NEBE) to handle high-speed motion, and Generative Behavior Synthesis and Meta-Learned Few-Shot Adaptation (GBS-MFA) to synthesize anomalous behaviors. The framework therefore offers a holistic, efficient solution for large-scale surveillance systems. Results on the UCF-Crime, ShanghaiTech and Avenue datasets show that it outperforms the state of the art on all reported metrics. On UCF-Crime, the model obtains a precision of 91.5%, a recall of 88.7% and an F1 score of 90.1%, exceeding the best existing method, which reached an F-measure of 86.8%. On ShanghaiTech, the proposed system achieved an FPR of only 4.5%, which is 63.4% fewer false positives than standard graph-based methods, while rare-anomaly recall on the Avenue dataset reached 87.3%, indicating better generalization to unseen behaviors than existing methods. In terms of computation, the proposed system reduces overhead by 50.2%, processing one frame in 9.2 ms against 21.4 ms for other methods, with an overall frame processing rate of 105 FPS, which makes real-time deployment feasible.
An overall accuracy of 94.8% in the multi-camera surveillance setup makes it state of the art for real-world applications.
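As a quick consistency check on the UCF-Crime figures above, the F1 score can be recomputed from the reported precision and recall using the standard harmonic-mean definition. The function name below is hypothetical; only the two input values come from the text.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


# Reported UCF-Crime values: precision 91.5%, recall 88.7%.
f1 = f1_score(0.915, 0.887)
print(f"F1 = {100 * f1:.1f}%")  # prints "F1 = 90.1%"
```

The recomputed value agrees with the reported F1 score of 90.1%.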

Although the model obtains cutting-edge results, several research directions remain for further improving the capabilities and scalability of the proposed system. The most important is to integrate self-supervised learning techniques, which would further reduce reliance on annotated training data while giving the system some adaptability to previously unseen anomaly types. Another important avenue is to extend the anomaly definition to contextual behavioral anomalies through audio-visual fusion, which would enhance situational awareness in complicated scenarios such as verbal altercations or crowd panic events. Finally, further testing of test-time robustness under adverse weather, low light and occlusion in urban settings would help ensure that the system is resilient and operates reliably across diverse real-world scenarios. These advances can pave the way for the proposed spatiotemporal deep learning framework to evolve into a fully autonomous real-time solution for large-scale multi-camera security operations with minimal human intervention, benefiting public safety, urban security and intelligent monitoring applications.

Data availability

The datasets generated and/or analysed during the current study are available at the following links: https://paperswithcode.com/dataset/ucf-crime; https://svip-lab.github.io/dataset/campus_dataset.html; https://www.cse.cuhk.edu.hk/leojia/projects/detectabnormal/dataset.html. Additional synthetic anomaly data generated during the study can be made available upon reasonable request from one of the corresponding authors, Sai babu Veesam (saibabuv@gmail.com).
