Abstract

Gaze is an important indicator of human behavior, and estimating it can support human assistance applications. Recent gaze estimation methods are primarily based on convolutional neural networks (CNNs) or attention-based Transformers. However, CNNs capture only limited local context and lose important global information, whereas attention mechanisms make little use of multiscale hybrid features. To address these issues, we propose a novel nonlinear multi-head cross-attention network with programmable gradient information (MCA-PGI), which combines the advantages of CNNs and the Transformer. Programmable gradient information is used to achieve reliable gradient propagation: an auxiliary branch integrates the gradient information, thereby retaining more of the original information than CNNs. In addition, nonlinear multi-head cross-attention is employed to fuse the global visual and multiscale hybrid features for more accurate gaze estimation. Experimental results on three publicly available datasets demonstrate that MCA-PGI is highly competitive and outperforms most state-of-the-art methods, achieving performance improvements of 2.5% and 10.2% on the MPIIFaceGaze and EyeDiap datasets, respectively. The implementation code is available at https://github.com/Yuhang-Hong/MCA-PGI.


Introduction

Gaze is a fundamental nonverbal communication cue in human behavior, which aids in understanding human intentions and emotions. By estimating the gaze direction, we can gain insights into and analyze human visual attention and cognitive processes. Consequently, gaze estimation is significant in human-centered domains of science and technology1,2. Examples include clinical assessment and diagnosis3, human-computer interaction4, head-mounted devices5,6,7, autonomous driving8, and virtual reality9,10. In the medical realm, gaze tracking technology can be integrated into assistive devices, enabling patients with amyotrophic lateral sclerosis to use eye gaze to control computers and communication devices. This facilitates patient communication and interaction with their surroundings11, as shown in Figure 1.

At present, accurate gaze estimation12 remains a significant challenge. Its performance is affected by individual differences such as sex and race; by environmental factors, including lighting and occlusion; and by nonlinear changes in the image, facial features, and eye appearance caused by variations in head pose. Various deep learning-based gaze estimation methods have been proposed in recent years to improve generalizability. Based on the type of input image, they can be broadly divided into two groups: methods that use eye images combined with facial images, as shown in Figure 1 (a), and methods that use only facial images, as shown in Figure 1 (b).

Different gaze estimation methods for human assistance: (a) Using eye images and integrating facial images as inputs for gaze estimation. (b) Using facial images as inputs for gaze estimation. (c) Examples of human assistance. Note: The facial image was obtained from the MPIIFaceGaze13 dataset.

Using eye images or combining facial images for gaze estimation often involves the extraction of multiple features from different images. Subsequently, these features are fused for predicting the gaze direction, as in CA-Net14, AFF-Net15, and Dilated-Net16. Although these methods have achieved good results in gaze estimation, they are highly reliant on the effectiveness of the eye segmentation module. This module cannot obtain satisfactory results under challenging conditions such as extreme head poses, shadowing or exposure issues in the eye region, or occlusion by external objects, which affects the subsequent gaze estimation. Moreover, the eye segmentation and gaze estimation modules are trained independently and then integrated into a single system, which may not guarantee a globally optimal solution.

In contrast, methods based on full-face appearance do not have these limitations. Existing approaches that take facial images directly as inputs typically employ convolutional neural networks (CNNs) to extract deep facial features automatically for gaze estimation. However, CNNs have two limitations. First, some information in the original image is lost as more convolutional layers are stacked. Second, CNNs cannot effectively capture contextual information between local regions of the image, such as the correlation between the eyes and nose shown in Figure 2. This spatial arrangement matters: randomly rearranging the positions of facial components (such as the eyes, nose, and mouth) does not yield a recognizable face. Mi et al.17 addressed the challenge of eye center localization by introducing a deep voting mechanism. This approach is effective for its specific task, but gaze estimation performance is not determined by eye position alone; the nose, the mouth, and the face as a whole also influence gaze estimation2. Hence, fully utilizing the original image information and understanding the correlation between the eyes and other facial features18 are essential for accurate gaze estimation.

Correlation analysis of the location of facial sites. Note: The facial image was obtained from the MPIIFaceGaze13 dataset.

To exploit facial information more fully, Cheng et al.19 proposed a Transformer-based network20 for gaze estimation that efficiently learns the contextual information of facial images, achieving good performance on several datasets. The Transformer can learn nonlinear facial features while also modeling the contextual relationships between different facial features. However, Transformer-based approaches do not consider the multiscale features in facial images, and exploring such features helps the model fully comprehend the image content21,22. A neural network can therefore be designed to combine the advantages of CNNs and the Transformer23, using a CNN to extract multiscale features and a Transformer to integrate contextual information, which helps in understanding the content of high-dimensional facial images.

However, existing hybrid methods rely on sequential concatenation: a CNN and a Transformer both extract features from the original image, and the results are then combined by simple summation24,25. Such feature fusion does not exploit the multiscale features of the image. We are the first to fuse multiscale features extracted by a CNN with hybrid features extracted by a Transformer for gaze estimation. We propose a nonlinear multi-head cross-attention network with programmable gradient information (MCA-PGI) for gaze estimation. First, to accurately extract multiscale gaze-related features from facial images, we design the Programmable Gradient Information Feature Extraction Module (PGIFEM), which preserves key information from facial images through shorter gradient backpropagation paths. Second, we employ the efficient multiscale Transformer (EMST)26 to capture contextual information within the multilevel convolutional and global visual features, and these features are then fused into multiscale hybrid features by the Cross-Level Attention Module (CLAM)26. Finally, we propose an efficient nonlinear multi-head cross-attention module (NMCAM) to cross-fuse the global visual features and the multiscale hybrid features. The main contributions of this study are as follows:

- We propose MCA-PGI, a gaze estimation network that leverages the advantages of CNNs and the Transformer to fuse global visual features and multiscale hybrid features effectively. Experimental results show that MCA-PGI is effective for gaze estimation.
- Our PGIFEM uses an auxiliary branch to extract multilevel convolutional features and global visual features from facial inputs through shorter gradient backpropagation paths. Visualization results demonstrate its effectiveness in feature extraction.
- We propose the NMCAM to fuse the global visual and multiscale hybrid features effectively, and we demonstrate its effectiveness by comparing it with standard multi-head attention under two different feature extraction networks.

The remainder of this paper is organized as follows: Section 2 briefly reviews the existing gaze estimation approaches, including CNNs and attention-based methods. The details of the proposed method are presented in Section 3. Section 4 outlines the experimental results. Finally, Section 5 concludes the paper.

Related work

Early gaze estimation methods can be categorized into model-based27,28,29 and appearance-based2,30 methods. Model-based methods generally need to consider the geometric features of the eyes. Three-dimensional (3D) eye models are usually person-specific owing to the diversity of human eyes. Therefore, model-based methods typically require specialized equipment for individual calibration to facilitate the extraction of human eye geometric features. However, with the development of deep learning, high-level gaze features can be directly extracted from high-dimensional images. The gaze estimation performance can be improved significantly by learning a highly nonlinear mapping function from eye appearance to gaze direction. Compared with model-based methods, appearance-based gaze estimation is simpler to use, as it does not require additional specialized equipment. Thus, appearance-based gaze estimation has received increasing attention from researchers.

Gaze estimation based on CNN methods

Zhang et al.31 proposed the first LeNet-based deep learning model for gaze estimation. The model takes a face image as input and localizes the eyes using face detection and facial landmark detection. After data normalization, the eye images and head angle vectors are input into the CNN, which outputs the gaze angle vector \(g\). GazeNet, proposed by Zhang et al.32, was the first deep gaze estimation method based on the 16-layer VGG33 network. The first full-face appearance-based gaze estimation method (Full-Face) was also proposed by Zhang et al.13; it uses CNNs to encode facial images and applies spatial weights to the feature map to flexibly suppress or enhance information from different facial regions. Krafka et al.34 proposed using both eye and facial images for gaze estimation. Later, Cheng et al.14 proposed a coarse-to-fine adaptive network (CA-Net) that first estimates an approximate gaze angle from facial images, and then extracts fine-grained features from eye images and generates gaze residuals to refine the gaze direction. Bao et al.15 proposed a gaze tracking architecture (AFF-Net) based on the similarity between the two eyes and the eye–face relationship, which improves the extraction of binocular and facial appearance features. Chen et al.16 proposed an appearance-based gaze estimation method that extracts higher-resolution features from eye images using dilated convolutions. Cheng et al.35 used fused CNN features to augment the original feature set while processing images from two views, achieving a significant performance improvement in gaze estimation.

Gaze estimation based on attention mechanism

Cheng and Lu19 were the first to introduce the Vision Transformer (ViT)36 to gaze estimation, achieving significant performance improvements. Oh et al.37 combined convolution and deconvolution with the self-attention mechanism of the Transformer to model local context accurately, reducing computational cost while improving performance. Wang et al.38 represented facial attributes as different capsules and assigned facial weights via self-attention scores, achieving state-of-the-art results.

Methods in which CNNs are fused with attention mechanisms have achieved better performance than those using only CNNs. However, these methods primarily use global features for estimation and ignore multilevel information, which is crucial for understanding the contextual relationships in facial images19,37.

Methodology

Code availability

To support reproducibility, we provide the complete code, including where to obtain the datasets, the code for preprocessing them, and the code for running the model. The code is available at https://github.com/Yuhang-Hong/MCA-PGI.

Architecture of MCA-PGI

MCA-PGI architecture. The framework consists of three components: PGIFEM, CIFM, and NMCAM. Note: The facial image was obtained from the EyeDiap39 dataset.

The overall framework of MCA-PGI is shown in Figure 3. It consists of three components: the PGIFEM, the contextual information fusion module (CIFM), and the NMCAM. Given an input image, MCA-PGI first uses the PGIFEM to extract the multilevel convolutional features \(Block1\) and \(Block2\) and the global visual features \(fc\). The CIFM then produces the multiscale hybrid features \(ft\). Finally, the NMCAM fuses the global visual features \(fc\) with the multiscale hybrid features \(ft\) and outputs the final gaze estimate.

PGIFEM

Effective gradient information in deep neural networks is crucial for gaze estimation, particularly full-face appearance-based gaze estimation, because it significantly affects the accuracy of the converged results when handling high-dimensional inputs such as faces. Wang et al.40 attributed the root cause of this problem to information loss: the gradient originating from a very deep network has already lost much of the information required for the target task soon after it starts to propagate. Therefore, to ensure effective gradient propagation for high-dimensional facial information, we introduce an enhanced PGI structure, the PGIFEM, tailored to the requirements of gaze estimation. While retaining the reliable gradient propagation of PGI, we remove the reversible parts of the auxiliary branch and connect it directly to the output block, which yields a more streamlined structure. The structure of the PGIFEM is shown in Figure 4.

The PGIFEM consists of three parts: the main branch, auxiliary branch, and output.

The main branch consists of downsampling convolutional layers that extract information from the facial image at multiple levels. ADown performs convolutional downsampling, and RepNCSPELAN4 adjusts the number of channels; the detailed structures of both can be found in40. Given an input image \(x \in \mathbb {R}^{C \times H \times W}\), convolutional downsampling produces the feature maps \(x_1 \in \mathbb {R}^{C_1 \times H_1 \times W_1}\), \(x_2 \in \mathbb {R}^{C_2 \times H_2 \times W_2}\), and \(x_3 \in \mathbb {R}^{C_3 \times H_3 \times W_3}\). In this study, \(C_1 = C_2 = C_3 = 512\), \(H_1 = W_1 = 28\), \(H_2 = W_2 = 14\), and \(H_3 = W_3 = 7\). Each of \(x_1, x_2, x_3\) is then split along the channel dimension to provide the inputs of the auxiliary branch. This channel splitting module corresponds to CBLinear in Figure 4, and the channel splitting operation is expressed as follows:

\[ \text{outs} = \mathrm{Conv}(x) \tag{1} \]

\[ (\text{out}_1, \text{out}_2, \ldots, \text{out}_n) = \mathrm{Split}(\text{outs}, [c_1, c_2, \ldots, c_n]) \tag{2} \]

where \(x\) is a tensor of size \(C \times H \times W\), \(C\) is the number of channels, and \(H\) and \(W\) are the height and width of the tensor, respectively. A convolutional layer maps \(x\) to \(\text{outs}\), which is then split into \(n\) tensors according to a specified list of channel numbers \([c_1, \ldots, c_n]\), where \(n\) is the length of the list. For the input \(x_1\), the output has a shape of \((256, 28, 28)\). For the input \(x_2\) (\(n = 2\)), the outputs are \(\text{out}_1\) with shape \((256, 14, 14)\) and \(\text{out}_2\) with shape \((512, 14, 14)\). For the input \(x_3\) (\(n = 3\)), the outputs are \(\text{out}_1\) with shape \((256, 7, 7)\), \(\text{out}_2\) with shape \((512, 7, 7)\), and \(\text{out}_3\) with shape \((512, 7, 7)\).
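The channel splitting step can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions rather than the released implementation: the class name ChannelSplit is hypothetical, and the 1 \(\times\) 1 kernel is an assumption, since the text specifies only a convolutional layer followed by a split along a list of channel numbers.

```python
from typing import List, Tuple

import torch
import torch.nn as nn


class ChannelSplit(nn.Module):
    """Hypothetical sketch of the CBLinear channel-splitting step.

    A convolution maps the input to sum(split_channels) channels, and the
    result is split along the channel axis into len(split_channels) tensors.
    """

    def __init__(self, in_channels: int, split_channels: List[int]):
        super().__init__()
        self.split_channels = list(split_channels)
        # Assumption: a 1x1 kernel, so the spatial size (H, W) is preserved.
        self.conv = nn.Conv2d(in_channels, sum(self.split_channels), kernel_size=1)

    def forward(self, x: torch.Tensor) -> Tuple[torch.Tensor, ...]:
        outs = self.conv(x)                                   # (B, sum(c_j), H, W)
        return torch.split(outs, self.split_channels, dim=1)  # n tensors


# Example for x_2: a 512-channel 14x14 map split into 256 + 512 channels (n = 2).
x2 = torch.randn(1, 512, 14, 14)
outs2 = ChannelSplit(512, [256, 512])(x2)
print([tuple(t.shape) for t in outs2])  # [(1, 256, 14, 14), (1, 512, 14, 14)]
```

Because the split sizes must sum to the convolution's output channels, the same module covers all three cases (\(n = 1, 2, 3\)) simply by changing the list.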

The auxiliary branch consists of downsampling convolutional layers and channel concatenation operations. The channel concatenation operation, which corresponds to CBFuse in Figure 4, merges the split information of \(x_1, x_2, x_3\) from the main branch into the auxiliary branch, and the output section then produces feature maps at different scales. The channel concatenation is expressed as follows:

\[ \tilde{x}_i = interpolate(x[i][idx[i]],\ size,\ mode = nearest), \quad i = 1, \ldots, k \tag{3} \]

\[ out = \mathrm{Concat}(\tilde{x}_1, \ldots, \tilde{x}_k, x_{\text{last}}) \tag{4} \]

where \(idx\) is a list of indices, \(idx[i]\) selects a specific tensor from the input tensor set \(x\) for interpolation, and \(k\) is the number of tensors to be resized. In Equations 3 and 4, the channel concatenation first interpolates tensors from \(x\) in the order specified by \(i\) and \(idx[i]\): every tensor except the target tensor \(x_{\text{last}}\) is resized to the size of the target tensor by interpolation. The adjusted tensors are then concatenated with the target tensor. Here, \(interpolate\) denotes the interpolation operation, and \(size\) is the target size, that is, the height and width of \(x_{\text{last}}\), which is \((7, 7)\) in this case. \(mode = nearest\) indicates nearest-neighbor interpolation, and \(i\) indexes the tensors in the specified order.
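Following the description above, the channel concatenation can be sketched as below. The function name, the choice idx = [0, 1, 2], and the 512-channel target tensor x_last are hypothetical illustrations; which split outputs are routed to each auxiliary stage should be checked against the released code.

```python
from typing import List, Sequence

import torch
import torch.nn.functional as F


def channel_fuse(split_outputs: Sequence[Sequence[torch.Tensor]],
                 idx: List[int],
                 x_last: torch.Tensor) -> torch.Tensor:
    """Sketch of the CBFuse step as described in the text.

    split_outputs[i] holds the tensors produced by channel splitting of the
    i-th main-branch map; idx[i] picks one of them. Each picked tensor is
    resized to the height and width of x_last with nearest-neighbour
    interpolation, and all results are concatenated with x_last along the
    channel axis.
    """
    size = x_last.shape[-2:]  # target (H, W), (7, 7) in the text
    resized = [F.interpolate(split_outputs[i][idx[i]], size=size, mode="nearest")
               for i in range(len(idx))]
    return torch.cat(resized + [x_last], dim=1)


# Shapes follow the channel-splitting outputs listed above for x_1, x_2, x_3.
split_x1 = (torch.randn(1, 256, 28, 28),)
split_x2 = (torch.randn(1, 256, 14, 14), torch.randn(1, 512, 14, 14))
split_x3 = (torch.randn(1, 256, 7, 7), torch.randn(1, 512, 7, 7), torch.randn(1, 512, 7, 7))
x_last = torch.randn(1, 512, 7, 7)  # hypothetical auxiliary-branch map at 7x7

fused = channel_fuse([split_x1, split_x2, split_x3], idx=[0, 1, 2], x_last=x_last)
print(tuple(fused.shape))  # (1, 1792, 7, 7)
```

With nearest-neighbor resizing from 28 \(\times\) 28 to 7 \(\times\) 7, every fourth row and column is kept, so no new values are synthesized; the concatenated channel count is simply the sum of the selected tensors' channels plus those of \(x_{\text{last}}\).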

The output section obtains feature maps at different scales. \(Block1 \in \mathbb {R}^{128 \times 28 \times 28}\) represents a large-scale feature map that contains the overall contour information, basic structural features of the human face, and rough texture information. \(Block2 \in \mathbb {R}^{256 \times 14\times 14}\) represents the medium-scale feature map, which contains the local features of the face, finer texture information, and morphological features of the face. \(fc \in \mathbb {R}^{512 \times 7\times 7}\) represents the global visual feature map, which contains detailed features, fine texture information, and facial micro-expressions. \(Block1\), \(Block2\), and \(fc\) are used as features for the subsequent CIFM for aggregation.

CIFM

The CIFM, illustrated in Figure 3, extracts contextual information and fuses it into multiscale hybrid features. It consists of two components from EMTCAL26: the EMST and the CLAM. The EMST extracts long-range contextual information from facial feature maps, and the CLAM integrates the contextual information extracted by the EMST, capturing long-range correlations between features at different levels and fusing the multilevel features. These cross-level long-range correlations can be regarded as latent dependency information in the facial images, which helps to construct a more comprehensive feature representation. The detailed structures of the EMST and CLAM can be found in26. In this study, the CIFM extracts contextual information from \(Block1\), \(Block2\), and \(fc\) and fuses it into the multiscale hybrid features \(ft\), where \(ft \in \mathbb {R}^{512\times 7\times 7}\).
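The exact EMST and CLAM designs are given in26 and are not reproduced here. The sketch below only illustrates the CIFM's input and output contract with a deliberately simplified stand-in: one standard Transformer encoder layer over tokens from all three levels replaces the EMST, and averaging over levels replaces the CLAM. The class name, the 1 \(\times\) 1 projections, the pooling to a 7 \(\times\) 7 grid, and the level embeddings are all assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CIFMStandIn(nn.Module):
    """Simplified stand-in for the CIFM; NOT the EMST/CLAM design of ref. 26.

    Each level is projected to `dim` channels and pooled to a grid x grid map;
    the tokens of all levels are concatenated so that one standard Transformer
    encoder layer attends across positions and levels; the tokens are then
    averaged over levels and reshaped into a (dim, grid, grid) map.
    """

    def __init__(self, in_channels=(128, 256, 512), dim=512, grid=7, heads=8):
        super().__init__()
        self.grid = grid
        self.proj = nn.ModuleList([nn.Conv2d(c, dim, kernel_size=1) for c in in_channels])
        # Learned per-level embedding so the encoder can tell the levels apart.
        self.level_embed = nn.Parameter(torch.zeros(len(in_channels), 1, dim))
        self.encoder = nn.TransformerEncoderLayer(dim, heads, batch_first=True)

    def forward(self, block1, block2, fc):
        tokens = []
        for lvl, (proj, feat) in enumerate(zip(self.proj, (block1, block2, fc))):
            f = F.adaptive_avg_pool2d(proj(feat), self.grid)                     # (B, dim, 7, 7)
            tokens.append(f.flatten(2).transpose(1, 2) + self.level_embed[lvl])  # (B, 49, dim)
        x = self.encoder(torch.cat(tokens, dim=1))                               # (B, 3*49, dim)
        b, _, d = x.shape
        x = x.reshape(b, len(tokens), self.grid * self.grid, d).mean(dim=1)      # (B, 49, dim)
        return x.transpose(1, 2).reshape(b, d, self.grid, self.grid)             # ft: (B, dim, 7, 7)


cifm = CIFMStandIn().eval()
with torch.no_grad():
    ft = cifm(torch.randn(2, 128, 28, 28), torch.randn(2, 256, 14, 14), torch.randn(2, 512, 7, 7))
print(tuple(ft.shape))  # (2, 512, 7, 7)
```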

NMCAM

Inspired by ViT36, we propose the NMCAM to fuse the global visual features \(fc\) extracted by the PGIFEM with the multiscale hybrid features \(ft\) produced by the CIFM. Compared with the multi-head attention in the Transformer, the NMCAM adds a nonlinear processing module (NPM), and its multi-head attention also differs in the mapping operations of \(Q\), \(K\), and \(V\) and in the masking operation. The NMCAM is shown in Figure 3. The NPM applies nonlinear operations to its input feature map \(x \in \mathbb {R}^{H \times W \times C}\), where \(H\) and \(W\) denote its spatial resolution and \(C\) denotes the number of channels. After passing through the NPM, \(x\) is represented as:

\[ x_{nonlinear} = \mathrm{ReLU}(\mathrm{BatchNorm}(\mathrm{Conv}(x))) \tag{5} \]

\[ f = \mathrm{Flatten}(\mathrm{AdaptiveAvgPool}(x_{nonlinear})) \tag{6} \]

That is, after convolution, BatchNorm, and ReLU activation, a nonlinear feature map \(x_{nonlinear} \in \mathbb {R}^{H \times W \times C}\) is obtained, which then undergoes adaptive average pooling and is flattened to \(f \in \mathbb {R}^{1 \times C}\). The number of features in \(f\) equals the number of channels, to preserve as much facial information as possible.
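A minimal PyTorch sketch of the NPM follows. The 3 \(\times\) 3 kernel and the channel-preserving convolution are assumptions (the text specifies only convolution, BatchNorm, ReLU, adaptive average pooling, and flattening), and PyTorch's (B, C, H, W) layout is used instead of the \(H \times W \times C\) notation above.

```python
import torch
import torch.nn as nn


class NPM(nn.Module):
    """Sketch of the nonlinear processing module: Conv -> BatchNorm -> ReLU,
    then adaptive average pooling and flattening to one C-dim vector."""

    def __init__(self, channels: int = 512, kernel_size: int = 3):
        super().__init__()
        self.block = nn.Sequential(
            # Assumption: channel-preserving conv, so f keeps C features.
            nn.Conv2d(channels, channels, kernel_size, padding=kernel_size // 2, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
        )
        self.pool = nn.AdaptiveAvgPool2d(1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x_nonlinear = self.block(x)               # (B, C, H, W)
        return self.pool(x_nonlinear).flatten(1)  # (B, C)


npm = NPM(512).eval()
with torch.no_grad():
    f1 = npm(torch.randn(2, 512, 7, 7))  # e.g. fc (512 x 7 x 7) -> f1
print(tuple(f1.shape))  # (2, 512)
```

Because ReLU precedes the pooling, every entry of the resulting vector is non-negative.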

The features \(fc\) and \(ft\) pass through the NPM separately, yielding \(f_1 \in \mathbb {R}^{1 \times 512}\) and \(f_2 \in \mathbb {R}^{1 \times 512}\), respectively. Then, \(f_2\) undergoes \(l_2\) normalization and is fed, together with \(f_1\), into the multi-head attention. The detailed computational process of the NMCAM is described in Algorithm 1.

The fusion of the two extracted feature vectors \(f_{1}\) and \(f_{2}\) can be likened to machine translation between languages. In machine translation, the word currently being predicted depends on the previously predicted words, which is why the Transformer decoder masks future positions. Unlike in machine translation, the two input feature vectors of the NMCAM do not form a temporal sequence, so each feature can be treated as independent and no temporal ordering needs to be respected when they are fused. Therefore, the proposed NMCAM does not require a mask in the scaled dot-product attention, which further reduces its computational cost and improves its efficiency.
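The cross-attention step can be sketched with PyTorch's nn.MultiheadAttention, using the mapping described for the NMCAM (the \(l_2\)-normalized \(ft\) branch supplies the query and the \(fc\) branch the keys and values, as contrasted with NMCAM* in the ablation study) and no attention mask. The class name, the eight heads, and the two-angle output head are assumptions, and Algorithm 1 is not reproduced. In particular, this sketch treats each pooled vector as a single token; under that layout the softmax runs over one key, so every attention weight equals 1 and the output reduces to a learned projection of \(f_1\). Algorithm 1 may arrange the features into tokens differently.

```python
from typing import Tuple

import torch
import torch.nn as nn
import torch.nn.functional as F


class CrossAttentionFusion(nn.Module):
    """Hypothetical sketch of the NMCAM fusion step.

    f1: NPM output for the global visual features fc, shape (B, C)
    f2: NPM output for the multiscale hybrid features ft, shape (B, C)
    """

    def __init__(self, dim: int = 512, heads: int = 8, out_dim: int = 2):
        super().__init__()
        self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.head = nn.Linear(dim, out_dim)  # assumed gaze head, e.g. (pitch, yaw)

    def forward(self, f1: torch.Tensor, f2: torch.Tensor) -> Tuple[torch.Tensor, torch.Tensor]:
        q = F.normalize(f2, p=2, dim=-1).unsqueeze(1)  # l2-normalised ft branch as query: (B, 1, C)
        kv = f1.unsqueeze(1)                           # fc branch as keys/values: (B, 1, C)
        # No attn_mask: the two features are not a temporal sequence.
        fused, weights = self.attn(q, kv, kv)
        return self.head(fused.squeeze(1)), weights


fusion = CrossAttentionFusion().eval()
with torch.no_grad():
    gaze, weights = fusion(torch.randn(4, 512), torch.randn(4, 512))
print(tuple(gaze.shape), tuple(weights.shape))  # (4, 2) (4, 1, 1)
```

Swapping the roles of q and kv in forward gives the NMCAM* variant discussed in the ablation study.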

Experiments

Setup

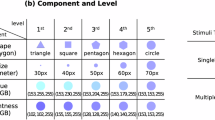

We pretrained MCA-PGI and evaluated its gaze estimation performance on three datasets. The experiments were conducted on a remote server running Ubuntu 20.04 with the PyTorch deep learning framework, Python 3.8, and an NVIDIA GeForce RTX 3090 GPU. The parameter settings for the different datasets are listed in Table 1.

Dataset used for pretraining. We used ETH-XGaze43 for pretraining. ETH-XGaze includes 110 participants of different ages, genders, and ethnicities, covers a wide range of head poses and gaze directions, and provides high-resolution images with consistent labeling quality. We pretrained MCA-PGI on the ETH-XGaze training set for 15 epochs with a batch size of 32, using AdamW44 with a learning rate of 0.00003 and a cosine learning-rate scheduler.
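The pretraining configuration stated above maps onto standard PyTorch components as sketched below. Only the batch size, epoch count, learning rate, AdamW, and the cosine schedule come from the text; the placeholder model, stepping the scheduler once per epoch, and the default AdamW hyperparameters are assumptions.

```python
import torch
import torch.nn as nn

# From the text: batch size 32, 15 epochs, learning rate 3e-5, AdamW, cosine schedule.
BATCH_SIZE = 32
EPOCHS = 15
LR = 3e-5

model = nn.Linear(512, 2)  # placeholder standing in for MCA-PGI (assumption)
optimizer = torch.optim.AdamW(model.parameters(), lr=LR)  # default betas / weight decay
# Assumption: the scheduler is stepped once per epoch over the 15 epochs.
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=EPOCHS)

lrs = []
for epoch in range(EPOCHS):
    # A real loop would iterate over ETH-XGaze batches of BATCH_SIZE here,
    # calling loss.backward() and optimizer.step() for each batch.
    optimizer.step()
    lrs.append(scheduler.get_last_lr()[0])
    scheduler.step()

print(f"first epoch lr = {lrs[0]:.1e}, last epoch lr = {lrs[-1]:.2e}")
```

If the scheduler were instead stepped per batch, T_max would be set to the total number of iterations rather than the number of epochs.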

Datasets used for evaluation. To evaluate the model comprehensively, we used three mainstream gaze estimation datasets: MPIIFaceGaze13, EyeDiap39, and Gaze36042.

MPIIFaceGaze contains 213,659 facial images from 15 participants. The dataset was collected in a real-world environment, providing rich illumination and head pose variations. This dataset is suitable for evaluating unconstrained gaze estimation methods.

EyeDiap contains 94 video clips from 16 participants, each of which is accompanied by a file that provides important information, such as the head pose and target position for each frame. The gaze annotations for this dataset are relatively accurate because a depth camera was used for data acquisition. However, as the data were collected only in a laboratory environment, which differs from a real-world environment, the conditions such as lighting do not vary significantly.

Gaze360 contains 129,000 training images, 17,000 validation images, and 26,000 test images with gaze annotations and has a wide distribution of participants in terms of age, race, and gender. The dataset combines 3D gaze annotations, numerous gaze and head poses, various indoor and outdoor capture environments, and subject diversity.

Partial sample images from the three publicly available datasets are shown in Figure 5.

Data processing methods. The preprocessing procedure proposed by Fischer et al.45 was used for MPIIFaceGaze and EyeDiap. From both datasets, 224 \(\times\) 224 color face images were cropped for gaze estimation, and evaluation used the leave-one-person-out protocol. For Gaze360, the processing method proposed by Cheng et al.2 was followed, and evaluation was performed on a held-out test set: the training set contained 84,000 images from 54 participants and the test set contained 16,000 images from 15 participants. Each image was 224 \(\times\) 224 pixels.
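Leave-one-person-out evaluation trains on all participants except one, tests on the held-out participant, and repeats this for every participant; results are then typically averaged over the folds. A minimal sketch of the fold generation follows, using hypothetical file names and the EyeDiap participant IDs listed in the ablation study.

```python
from typing import Dict, Iterator, List, Tuple


def leave_one_person_out(samples_by_person: Dict[str, List[str]]
                         ) -> Iterator[Tuple[str, List[str], List[str]]]:
    """Yield (held_out_person, train_samples, test_samples), one fold per person."""
    for held_out in sorted(samples_by_person):
        train = [s for person, items in samples_by_person.items()
                 if person != held_out for s in items]
        yield held_out, train, list(samples_by_person[held_out])


# EyeDiap IDs as used in the ablation study: p1 to p16 without p12 and p13.
ids = [f"p{i}" for i in range(1, 17) if i not in (12, 13)]
data = {p: [f"{p}/img_{k}.jpg" for k in range(3)] for p in ids}  # hypothetical file names
folds = list(leave_one_person_out(data))
print(len(folds))  # 14
```

The same function yields 15 folds for the 15 MPIIFaceGaze participants.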

Evaluation metrics. We used the average angular error as the performance evaluation metric. This widely used metric measures the accuracy of the estimated gaze direction as the angle between the estimated and true gaze directions; a lower angular error implies better model performance. The angular error is calculated as follows:

\[ \theta = \arccos\left(\frac{g \cdot \hat{g}}{\|g\| \, \|\hat{g}\|}\right) \tag{7} \]

where \(g\) is the true gaze direction, \(\hat{g}\) is the estimated gaze direction, \(\|g\|\) and \(\|\hat{g}\|\) denote the norms of the two vectors, and \(\arccos\) is the inverse cosine function.
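The angular error can be computed with NumPy as below. The conversion from (pitch, yaw) labels to 3D vectors follows one common convention and is an assumption; in practice the convention of the preprocessed dataset should be used for both \(g\) and \(\hat{g}\).

```python
import numpy as np


def pitchyaw_to_vector(pitch, yaw):
    """Convert gaze angles in radians to 3D unit vectors (one common convention; assumption)."""
    pitch, yaw = np.asarray(pitch, dtype=float), np.asarray(yaw, dtype=float)
    return np.stack([-np.cos(pitch) * np.sin(yaw),
                     -np.sin(pitch),
                     -np.cos(pitch) * np.cos(yaw)], axis=-1)


def angular_error_deg(g, g_hat):
    """Angle in degrees between true (g) and estimated (g_hat) gaze vectors, row-wise."""
    g = np.atleast_2d(np.asarray(g, dtype=float))
    g_hat = np.atleast_2d(np.asarray(g_hat, dtype=float))
    cos = np.sum(g * g_hat, axis=1) / (np.linalg.norm(g, axis=1) * np.linalg.norm(g_hat, axis=1))
    # Clip to [-1, 1] so rounding error cannot push arccos out of its domain.
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


g = pitchyaw_to_vector(np.radians([0.0, 5.0]), np.radians([0.0, 0.0]))
g_hat = pitchyaw_to_vector(np.radians([0.0, 5.0]), np.radians([10.0, 0.0]))
print(angular_error_deg(g, g_hat).mean())  # mean angular error over the two samples
```

Dividing by both norms means the estimated vector need not be unit length, which matches the formula above.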

Comparison with state-of-the-art methods

To evaluate our model, we compared it with several state-of-the-art methods. The results are shown in Table 2, and a visual comparison is presented in Figure 6. Among the existing methods, the capsule network-based GazeCaps38 achieved the best overall performance. Compared with GazeCaps, MCA-PGI achieved the best performance on the MPIIFaceGaze and EyeDiap datasets and remained competitive on Gaze360. On MPIIFaceGaze, MCA-PGI achieved a 2.5% (\(\frac{4.00-3.90}{4.00}\)) improvement over GazeTR-Hybrid, the best existing method on that dataset; on EyeDiap, it achieved a 10.2% (\(\frac{5.10-4.58}{5.10}\)) improvement over GazeCaps. The strong performance on all three datasets demonstrates the effectiveness of MCA-PGI, which uses a CNN for feature extraction and a Transformer for feature fusion.

Furthermore, we visualized the data distribution and angle error distribution for the three datasets to demonstrate the performance of the proposed model.

The data distributions of the three datasets are shown in Figure 7, using samples drawn from the corresponding training sets. The horizontal and vertical axes represent the yaw and pitch angles of the gaze direction, respectively, and deep blue indicates regions with few samples. Figure 7 shows that MPIIFaceGaze and EyeDiap are concentrated around the central gaze directions, whereas Gaze360 covers a wider range, with more samples at extreme gaze directions.

Figure 8 shows the angular error distribution of MCA-PGI on the test sets, where the horizontal and vertical axes represent the yaw and pitch angles, respectively. The color at each location encodes the angular error computed with Equation 7: colors close to blue indicate a small error between the estimated gaze and the ground truth, and hence better model performance. As shown in Figure 8, the distributions of MCA-PGI are close to blue on all three datasets, with larger errors confined to small regions at extreme gaze angles.
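An error map of this kind can be built by binning test samples by their ground-truth yaw and pitch and averaging the angular error in each bin. The sketch below does this with numpy.histogram2d; binning by ground-truth angles, the bin counts, and the angle ranges are hypothetical choices (a wide-range dataset such as Gaze360 would need wider limits), and empty bins are left as NaN so they can be drawn blank.

```python
import numpy as np


def mean_error_map(yaw_deg, pitch_deg, err_deg, bins=(36, 18),
                   limits=((-90.0, 90.0), (-45.0, 45.0))):
    """Mean angular error per (yaw, pitch) bin; NaN marks bins with no samples."""
    yaw, pitch, err = (np.asarray(a, dtype=float) for a in (yaw_deg, pitch_deg, err_deg))
    err_sum, yaw_edges, pitch_edges = np.histogram2d(yaw, pitch, bins=bins,
                                                     range=limits, weights=err)
    counts, _, _ = np.histogram2d(yaw, pitch, bins=bins, range=limits)
    with np.errstate(divide="ignore", invalid="ignore"):
        mean_err = err_sum / counts  # 0 / 0 -> NaN for empty bins
    return mean_err, yaw_edges, pitch_edges


# Two hypothetical test samples that fall into the same 5-degree bin.
m, yaw_edges, pitch_edges = mean_error_map([12.0, 12.5], [7.0, 7.2], [2.0, 4.0])
print(m.shape, np.nanmax(m))  # (36, 18) 3.0
```

The first axis of the result indexes yaw bins and the second pitch bins, so it should be transposed before display if pitch is to run vertically.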

In addition, we visualized the gaze estimation results on facial images from each dataset, as shown in Figure 9. The left columns show the original input images. In the right columns, the blue arrows denote the ground truth gaze direction, whereas the red arrows represent the gaze direction predicted by MCA-PGI. The red arrows generally overlap with the blue arrows, indicating that MCA-PGI could accurately estimate the gaze direction for various images across the different datasets.

Gaze estimation results on facial images from different datasets: (a) MPIIFaceGaze13, (b) EyeDiap39, and (c) Gaze36042. The left columns of each dataset show the original images, whereas the right columns show the visualization results. The blue arrows denote the ground truth and the red arrows represent the MCA-PGI prediction.

Ablation study

Ablation study of PGIFEM and NMCAM

We first conduct an ablation study on the overall modules and then discuss the contributions of specific module details to the gaze estimation task. To validate the effectiveness of the proposed model, we performed an ablation study of the PGIFEM and NMCAM on EyeDiap. This dataset includes 14 participants labeled p1 to p16; there are no participants p12 and p13. Four network structures were designed, with EMTCAL26 as the baseline:

- Net-0: EMTCAL.
- Net-1: Baseline + PGIFEM.
- Net-2: Baseline + NMCAM.
- Net-3: MCA-PGI.

The experimental setup for the four networks was based on Table 1. The average angular errors for the entire dataset in the ablation study are presented in Table 3. The results of the mean angular error evaluation for each participant are presented in Figure 10. For different participants \(p_i\), \(i\in [1,11]\bigcup [14,16]\), a smaller average angular error of \(p_i\) indicated better performance of the corresponding network model.

As shown in Table 3, both the PGIFEM and the NMCAM improve gaze estimation accuracy. According to Figure 10, Net-1, which adds the PGIFEM, outperformed Net-0 for most participants, with an overall improvement of 10.5% \((\frac{5.61-5.02}{5.61})\), demonstrating the efficacy of the PGIFEM. Introducing the NMCAM alone in Net-2 led to a smaller improvement of 4.8% \((\frac{5.61 - 5.34}{5.61})\). When the NMCAM is added on top of the PGIFEM (Net-1 to Net-3), however, the error drops by 8.8% \((\frac{5.02-4.58}{5.02})\), suggesting that the effectiveness of the feature fusion component depends strongly on the quality of the features extracted by the PGIFEM. Finally, Net-3, which includes both the PGIFEM and the NMCAM, achieved the best performance, with an overall improvement of 18.4% \((\frac{5.61-4.58}{5.61})\), showing that the two modules are highly effective in improving gaze estimation performance.
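The relative improvements in this ablation study follow directly from the mean angular errors. The short script below reproduces them and also computes the gain from adding the NMCAM on top of the PGIFEM (Net-1 to Net-3); the dictionary simply restates the EyeDiap errors quoted in the text.

```python
def relative_improvement(baseline_err: float, new_err: float) -> float:
    """Relative reduction in mean angular error, in percent."""
    return 100.0 * (baseline_err - new_err) / baseline_err


# Mean angular errors on EyeDiap (degrees) as quoted in the ablation text.
errors = {"Net-0": 5.61, "Net-1": 5.02, "Net-2": 5.34, "Net-3": 4.58}

for name in ("Net-1", "Net-2", "Net-3"):
    print(f"{name} vs. Net-0: {relative_improvement(errors['Net-0'], errors[name]):.1f}%")
# Gain from adding the NMCAM on top of the PGIFEM (Net-1 -> Net-3).
print(f"Net-3 vs. Net-1: {relative_improvement(errors['Net-1'], errors['Net-3']):.1f}%")
```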

Effectiveness of PGIFEM

We used ResNet3446 as the benchmark method to evaluate the effectiveness of the PGIFEM, with Grad-CAM47 visualizations showing the regions on which each network focuses. The Grad-CAM results were rendered with the COLORMAP_WINTER color scale provided in OpenCV48, which maps low values to blue and high values to green, so regions that interest the network appear green. We used Grad-CAM to visualize the feature maps of ResNet34 and the PGIFEM at \(Block1\) and compared their regions of interest. The results are shown in Figure 11.
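A minimal Grad-CAM sketch in PyTorch is given below: it weights a chosen layer's activations by the spatially averaged gradients of a scalar score, applies ReLU, upsamples to the input size, and rescales to [0, 1]. Because gaze estimation is a regression task, the score must be chosen; using the first predicted angle is an assumption, as are the function name and the toy network in the usage example. In the comparison above, the target layer would correspond to \(Block1\).

```python
from typing import Callable, Dict

import torch
import torch.nn as nn
import torch.nn.functional as F


def grad_cam(model: nn.Module, layer: nn.Module, image: torch.Tensor,
             score_fn: Callable[[torch.Tensor], torch.Tensor]) -> torch.Tensor:
    """Minimal Grad-CAM returning a (B, 1, H, W) map scaled to [0, 1]."""
    store: Dict[str, torch.Tensor] = {}

    def forward_hook(module, inputs, output):
        store["act"] = output
        # Capture the gradient flowing back into this layer's output.
        output.register_hook(lambda grad: store.__setitem__("grad", grad))

    handle = layer.register_forward_hook(forward_hook)
    try:
        model.zero_grad()
        score_fn(model(image)).backward()
    finally:
        handle.remove()

    weights = store["grad"].mean(dim=(2, 3), keepdim=True)           # (B, C, 1, 1)
    cam = F.relu((weights * store["act"]).sum(dim=1, keepdim=True))  # (B, 1, h, w)
    cam = F.interpolate(cam, size=image.shape[-2:], mode="bilinear", align_corners=False)
    cam = cam - cam.amin(dim=(2, 3), keepdim=True)
    return (cam / (cam.amax(dim=(2, 3), keepdim=True) + 1e-8)).detach()


# Toy regression network standing in for the real feature extractor (hypothetical).
torch.manual_seed(0)
net = nn.Sequential(
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.Conv2d(8, 8, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 2),
)
cam = grad_cam(net, net[2], torch.randn(1, 3, 32, 32), lambda out: out[:, 0].sum())
print(tuple(cam.shape))  # (1, 1, 32, 32)
```

The resulting map can then be colorized with OpenCV, for example cv2.applyColorMap(np.uint8(255 * cam[0, 0].numpy()), cv2.COLORMAP_WINTER), which gives the blue-to-green rendering described above.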

Grad-CAM feature maps visualization. (a) Input images from the EyeDiap39 dataset. (b) Feature maps from the ResNet34 method. (c) Feature maps from the PGIFEM method.

In the ResNet34 model, the green areas are concentrated mainly around the face border. This indicates that ResNet34 has a limited ability to extract facial features: most of its attention falls on the periphery of the face, and features inside the face are extracted relatively weakly. In contrast, the green areas generated by the PGIFEM are distributed mainly over the facial features, such as the eyes, nose, and mouth, and are more intensely colored, indicating that more attention is paid to these areas. Thus, the PGIFEM, with its stronger gradient propagation, is better at capturing the key features of the face.

We also conducted an ablation study on the auxiliary branches of the PGIFEM. Net-4 uses PGIFEM*, a variant of the PGIFEM with the auxiliary branches removed, as its feature extraction network; all other modules are unchanged. Table 4 lists the mean angular error for each participant in this ablation study. The auxiliary branch improved the performance for almost every participant, with an overall improvement of 5.0% \((\frac{4.82-4.58}{4.82})\). This indicates that the auxiliary branch provides reliable gradient information to the feature extraction network.
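The errors compared in this section, such as those in Table 4, are mean angular errors: the average angle between predicted and ground-truth 3D gaze directions. A minimal sketch of this metric follows. It assumes predictions are (pitch, yaw) pairs in radians and uses a pitch/yaw-to-vector convention common in appearance-based gaze estimation code; the convention actually used for MCA-PGI may differ.

```python
import math
from typing import Sequence, Tuple


def pitchyaw_to_vector(pitch: float, yaw: float) -> Tuple[float, float, float]:
    """Convert gaze angles (radians) to a 3D unit vector.

    Assumed convention (not confirmed for MCA-PGI):
    x = -cos(p) sin(y), y = -sin(p), z = -cos(p) cos(y).
    """
    return (-math.cos(pitch) * math.sin(yaw),
            -math.sin(pitch),
            -math.cos(pitch) * math.cos(yaw))


def angular_error_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two 3D gaze vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))


def mean_angular_error(preds, gts) -> float:
    """Mean angular error in degrees over paired (pitch, yaw) predictions."""
    errors = [angular_error_deg(pitchyaw_to_vector(*p), pitchyaw_to_vector(*g))
              for p, g in zip(preds, gts)]
    return sum(errors) / len(errors)
```

For example, a prediction whose yaw is off by 10 degrees at zero pitch contributes an angular error of 10 degrees.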

Effectiveness of NMCAM

This section analyzes the effectiveness of the NMCAM. Keeping the other modules unchanged, we designed four networks that use either ResNet34 or the PGIFEM as the feature extraction network:

- Net-5: ResNet34 + MA.
- Net-6: ResNet34 + NMCAM.
- Net-7: PGIFEM + MA.
- Net-8: PGIFEM + NMCAM*.

Here, MA denotes a feature fusion module based on the standard multi-head attention mechanism of the Transformer. NMCAM* in Net-8 is identical to the NMCAM except that the linear mappings of \(ft\) and \(fc\) are swapped: \(ft\) is linearly mapped to \(K\) and \(V\), and \(fc\) is linearly mapped to \(Q\). All four networks followed the experimental setup in Table 1. The results for the different attention mechanisms are shown in Table 5.
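To make the difference between the NMCAM and NMCAM* concrete, the following sketch implements plain single-head scaled dot-product cross-attention in pure Python, where one feature set supplies \(Q\) and the other supplies \(K\) and \(V\). It deliberately omits the multi-head split and the NPM nonlinear transformation of the NMCAM; the token counts, feature dimension, and identity projection weights are hypothetical and serve only to show which input plays which role.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention(query_src: Matrix, kv_src: Matrix,
                    w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, with Q from query_src and K, V from kv_src."""
    q = matmul(query_src, w_q)
    k = matmul(kv_src, w_k)
    v = matmul(kv_src, w_v)
    scale = 1.0 / math.sqrt(len(k[0]))
    scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


# Hypothetical toy features: 3 tokens of ft and 2 tokens of fc, dimension 2.
ft = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fc = [[0.5, 0.5], [1.0, -1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]  # identity projections, for illustration only

nmcam_style = cross_attention(ft, fc, eye, eye, eye)  # ft -> Q; fc -> K, V
swapped = cross_attention(fc, ft, eye, eye, eye)      # fc -> Q; ft -> K, V (as in NMCAM*)
print(len(nmcam_style), len(swapped))  # 3 2
```

The output has one row per query token, and each row is a convex combination of the value rows; swapping the roles therefore changes both which features are aggregated and which feature set determines the number of output tokens.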

As shown in Table 5, the NPM had a more pronounced effect when ResNet34 was used as the feature extraction network, improving performance by 2.7% \((\frac{5.49-5.34}{5.49})\). With the PGIFEM as the feature extraction network, the effect of the NPM was smaller, with an improvement of only 0.7% \((\frac{4.61-4.58}{4.61})\). This suggests that the nonlinear process can partly compensate for deficiencies in feature extraction and is most beneficial when the extracted features are coarser, as with ResNet34. In addition, linearly mapping \(fc\) to \(K\) and \(V\) improved performance by 8.2% \((\frac{4.99-4.58}{4.99})\) compared with mapping it to \(Q\). A possible reason is that this arrangement takes richer global information into account. By analogy with machine translation, the fused multiscale hybrid features \(ft\) after the NPM transformation can be regarded as the source language, and the global visual features \(fc\) after the NPM transformation as the corresponding target language. Because multiple versions of the target language exist, it contains richer information than the source language. Since \(fc\) carries richer global information, using \(ft\) as \(Q\) (with \(fc\) as \(K\) and \(V\)) is more effective than using \(ft\) as \(K\) and \(V\).

Discussion

The experimental results demonstrate the effectiveness of the proposed MCA-PGI for gaze estimation. The main findings are as follows:

1. The results in Table 2 and Figure 6 show that the proposed MCA-PGI achieved a 2.5% improvement on the MPIIFaceGaze dataset and a 10.2% improvement on the EyeDiap dataset compared with state-of-the-art methods. On the Gaze360 dataset, MCA-PGI achieved the second-best performance, after GazeCaps. This more limited performance on Gaze360 may be due to the low resolution of the original input images, which limits the ability of the model to capture details and extract information; increasing the input resolution may improve performance on this dataset. Overall, the model achieved competitive results across different datasets.

2. The results in Table 3 and Figure 10 show that both the proposed PGIFEM and NMCAM contributed positively to gaze estimation performance. Figure 11 shows that, compared with ResNet34, the PGIFEM exhibits stronger gradient propagation, enabling it to capture key facial features more effectively. The ablation results in Table 4 show that adding the auxiliary branch to the PGIFEM improved the model's performance, indicating that the branch effectively transmits gradient information.

3. According to the results in Table 5, the NPM in the NMCAM enhanced the nonlinear feature representation. In addition, linearly mapping the global visual features \(fc\) to \(K\) and \(V\) outperformed mapping them to \(Q\). This may be because the richer global information in \(fc\) is better exploited when it supplies the keys and values that the query attends to.

Conclusion

We have proposed an efficient gaze estimation network, MCA-PGI, which makes effective use of the information in the input facial image and fuses global visual features with multiscale hybrid features. MCA-PGI comprises three components: the PGIFEM, CIFM, and NMCAM. The PGIFEM propagates gradient information through auxiliary branches, which allows it to make effective use of the input information and accurately extract multilevel convolutional and global visual features from facial images. The CIFM extracts rich contextual information from the multilevel convolutional and global visual features and fuses them into multiscale hybrid features enriched with contextual information. The NMCAM fuses the global visual features with the multiscale hybrid features to derive the gaze direction. MCA-PGI thus combines the strengths of CNNs and Transformers. The experimental results demonstrated the strong competitiveness of MCA-PGI on three publicly available datasets. However, the experimental validation relies primarily on these three public datasets, and the model's robustness in complex real-world scenarios, such as extreme head poses or strong illumination variations, requires further verification. In future work, we will consider integrating a 3D head pose estimation module to improve the model's generalization under extreme head poses.

Data availability

The data used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Wang, Xinming, Zhang, Jianhua, Zhang, Hanlin, Zhao, Shuwen & Liu, Honghai. Vision-based gaze estimation: a review. IEEE Transactions on Cognitive and Developmental Systems 14(2), 316–332 (2021).

Cheng, Yihua, Wang, Haofei, Bao, Yiwei & Lu, Feng. Appearance-based gaze estimation with deep learning: A review and benchmark. IEEE Transactions on Pattern Analysis and Machine Intelligence 46(12), 7509–7528 (2024).

Zeng, Zheng et al. A robust gaze estimation approach via exploring relevant electrooculogram features and optimal electrodes placements. IEEE Journal of Translational Engineering in Health and Medicine 12, 56–65 (2023).

Dondi, Piercarlo & Porta, Marco. Gaze-based human-computer interaction for museums and exhibitions: Technologies, applications and future perspectives. Electronics 12(14), 3064 (2023).

Lei, Yaxiong, He, Shijing, Khamis, Mohamed & Ye, Juan. An end-to-end review of gaze estimation and its interactive applications on handheld mobile devices. ACM Computing Surveys 56(2), 1–38 (2023).

Zhang, Hanyuan, Wu, Shiqian, Chen, Wenbin, Gao, Zheng & Wan, Zhonghua. Self-calibrating gaze estimation with optical axes projection for head-mounted eye tracking. IEEE Transactions on Industrial Informatics 20(2), 1397–1407 (2023).

Velisar, Anca & Shanidze, Natela M. Noise estimation for head-mounted 3d binocular eye tracking using pupil core eye-tracking goggles. Behavior Research Methods 56(1), 53–79 (2024).

Sharma, Pavan Kumar & Chakraborty, Pranamesh. A review of driver gaze estimation and application in gaze behavior understanding. Engineering Applications of Artificial Intelligence 133, 108117 (2024).

Mania, Katerina, McNamara, Ann & Polychronakis, Andreas. Gaze-aware displays and interaction. In ACM SIGGRAPH 2021 Courses, pages 1–67. (2021).

Liu, Hongmei & Qin, Huabiao. Perceptual self-position estimation based on gaze tracking in virtual reality. Virtual Reality 26(1), 269–278 (2022).

Edughele, Hilary O. et al. Eye-tracking assistive technologies for individuals with amyotrophic lateral sclerosis. IEEE Access 10, 41952–41972 (2022).

Zhang, Ziheng, Lian, Dongze & Gao, Shenghua. Rgb-d-based gaze point estimation via multi-column cnns and facial landmarks global optimization. The Visual Computer 37(7), 1731–1741 (2021).

Zhang, Xucong, Sugano, Yusuke, Fritz, Mario & Bulling, Andreas. It’s written all over your face: Full-face appearance-based gaze estimation. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pages 51–60, (2017).

Cheng, Yihua, Huang, Shiyao, Wang, Fei, Qian, Chen & Lu, Feng. A coarse-to-fine adaptive network for appearance-based gaze estimation. In Proceedings of the AAAI Conference on Artificial Intelligence 34, 10623–10630 (2020).

Bao, Yiwei, Cheng, Yihua, Liu, Yunfei & Lu, Feng. Adaptive feature fusion network for gaze tracking in mobile tablets. In 2020 25th International Conference on Pattern Recognition (ICPR), pages 9936–9943. IEEE, (2021).

Chen, Zhaokang & Shi, Bertram E. Appearance-based gaze estimation using dilated-convolutions. In Asian Conference on Computer Vision, pages 309–324. Springer, (2018).

Mi, Jian-Xun, Gao, Yun, Yuan, Shiyao & Li, Weisheng. Accurate and robust eye center localization by deep voting. IEEE Transactions on Circuits and Systems for Video Technology 33(8), 4070–4082 (2023).

Li, Ping, Sheng, Bin & Philip Chen, C. L. Face sketch synthesis using regularized broad learning system. IEEE Transactions on Neural Networks and Learning Systems 33(10), 5346–5360 (2021).

Cheng, Yihua & Lu, Feng. Gaze estimation using transformer. In 2022 26th International Conference on Pattern Recognition (ICPR), pages 3341–3347. IEEE, (2022).

Vaswani, Ashish, Shazeer, Noam, Parmar, Niki, Uszkoreit, Jakob, Jones, Llion, Gomez, Aidan N, Kaiser, Łukasz & Polosukhin, Illia. Attention is all you need. Advances in neural information processing systems, 30, (2017).

Ghani, Muhammad Ahmad Nawaz Ul, She, Kun, Saeed, Muhammad Usman & Latif, Naila. Enhancing facial recognition accuracy through multi-scale feature fusion and spatial attention mechanisms. Electronic Research Archive 32(4), 2267–2285 (2024).

Eljialy, A. E. M., Uddin, Mohammed Yousuf & Ahmad, Sultan. Novel framework for an intrusion detection system using multiple feature selection methods based on deep learning. Tsinghua Science and Technology 29(4), 948–958 (2024).

Qin, Yang, Ding, Shuxue, Xie, Huiming, Tan, Benying & Li, Yujie. A fine-grained vision-language pretraining model with progressive freezing and feedback-controlled cropping. Tsinghua Science and Technology, (2025).

Zhao, Ruijie, Wang, Yuhuan, Luo, Sihui, Shou, Suyao & Tang, Pinyan. Gaze-swin: Enhancing gaze estimation with a hybrid cnn-transformer network and dropkey mechanism. Electronics 13(2), 328 (2024).

Cheng, Zhang & Wang, Yanxia. Lightweight gaze estimation model via fusion global information. In 2024 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE, (2024).

Tang, Xu et al. Emtcal: Efficient multiscale transformer and cross-level attention learning for remote sensing scene classification. IEEE Transactions on Geoscience and Remote Sensing 60, 1–15 (2022).

Zhu, Zhiwei & Ji, Qiang. Novel eye gaze tracking techniques under natural head movement. IEEE Transactions on biomedical engineering 54(12), 2246–2260 (2007).

Funes Mora, Kenneth Alberto & Odobez, Jean-Marc. Geometric generative gaze estimation (g3e) for remote rgb-d cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1773–1780, (2014).

Guestrin, Elias Daniel & Eizenman, Moshe. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Transactions on biomedical engineering 53(6), 1124–1133 (2006).

Yang, Aolei, Jin, Zhouding, Guo, Shuai, Wu, Dakui & Chen, Ling. Unconstrained human gaze estimation approach for medium-distance scene based on monocular vision. The Visual Computer 40(1), 73–85 (2024).

Zhang, Xucong, Sugano, Yusuke, Fritz, Mario & Bulling, Andreas. Appearance-based gaze estimation in the wild. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4511–4520, (2015).

Zhang, Xucong, Sugano, Yusuke, Fritz, Mario & Bulling, Andreas. Mpiigaze: Real-world dataset and deep appearance-based gaze estimation. IEEE transactions on pattern analysis and machine intelligence 41(1), 162–175 (2017).

Simonyan, Karen & Zisserman, Andrew. Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556, (2014).

Krafka, Kyle, Khosla, Aditya, Kellnhofer, Petr, Kannan, Harini, Bhandarkar, Suchendra, Matusik, Wojciech & Torralba, Antonio. Eye tracking for everyone. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2176–2184, (2016).

Cheng, Yihua & Lu, Feng. Dvgaze: Dual-view gaze estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 20632–20641, (2023).

Dosovitskiy, Alexey, Beyer, Lucas, Kolesnikov, Alexander, Weissenborn, Dirk, Zhai, Xiaohua, Unterthiner, Thomas, Dehghani, Mostafa, Minderer, Matthias, Heigold, Georg, Gelly, Sylvain, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv:2010.11929, (2020).

Oh, Jun O, Chang, Hyung Jin & Choi, Sang-Il. Self-attention with convolution and deconvolution for efficient eye gaze estimation from a full face image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4992–5000, (2022).

Wang, Hengfei, Oh, Jun O, Chang, Hyung Jin, Na, Jin Hee, Tae, Minwoo, Zhang, Zhongqun & Choi, Sang-Il. Gazecaps: Gaze estimation with self-attention-routed capsules. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 2668–2676, (2023).

Funes Mora, Kenneth Alberto, Monay, Florent & Odobez, Jean-Marc. Eyediap: A database for the development and evaluation of gaze estimation algorithms from rgb and rgb-d cameras. In Proceedings of the symposium on eye tracking research and applications, pages 255–258, (2014).

Wang, Chien-Yao, Yeh, I-Hau & Liao, Hong-Yuan Mark. Yolov9: Learning what you want to learn using programmable gradient information. arXiv:2402.13616, (2024).

Loshchilov, Ilya & Hutter, Frank. Fixing weight decay regularization in adam. arXiv:1711.05101, (2017).

Kellnhofer, Petr, Recasens, Adria, Stent, Simon, Matusik, Wojciech & Torralba, Antonio. Gaze360: Physically unconstrained gaze estimation in the wild. In Proceedings of the IEEE/CVF international conference on computer vision, pages 6912–6921, (2019).

Zhang, Xucong, Park, Seonwook, Beeler, Thabo, Bradley, Derek, Tang, Siyu & Hilliges, Otmar. Eth-xgaze: A large scale dataset for gaze estimation under extreme head pose and gaze variation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V 16, pages 365–381. Springer, (2020).

Loshchilov, Ilya & Hutter, Frank. Fixing weight decay regularization in adam. (2018).

Fischer, Tobias, Chang, Hyung Jin & Demiris, Yiannis. Rt-gene: Real-time eye gaze estimation in natural environments. In Proceedings of the European conference on computer vision (ECCV), pages 334–352, (2018).

He, Kaiming, Zhang, Xiangyu, Ren, Shaoqing & Sun, Jian. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, (2016).

Selvaraju, Ramprasaath R., Das, Abhishek, Vedantam, Ramakrishna, Cogswell, Michael, Parikh, Devi & Batra, Dhruv. Grad-cam: Why did you say that? arXiv:1611.07450, (2016).

Bradski, Gary, Kaehler, Adrian, et al. Opencv. Dr. Dobb’s journal of software tools, 3(2), (2000).

Acknowledgements

This work was supported in part by the Innovation and Entrepreneurship Training Program for College Students (S202310595344), the Guangxi Science and Technology Major Project (AA22068057), and the Guangxi Natural Science Foundation (2022GXNSFBA035644).

Author information

Contributions

Y.L. and Y.H. wrote the main manuscript text. Conceptualization, Y.L. and Y.H.; Methodology, Y.H. and J.C.; Software, Y.H., Z.W. and J.C.; Validation, Z.W. and R.L.; Writing – review and editing, Y.L., B.T. and S.D. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, Y., Hong, Y., Wang, Z. et al. Nonlinear multi-head cross-attention network and programmable gradient information for gaze estimation. Sci Rep 15, 27135 (2025). https://doi.org/10.1038/s41598-025-12466-w

DOI: https://doi.org/10.1038/s41598-025-12466-w