Abstract

Aging is a natural phenomenon characterized by the loss of normal morphology and physiological functioning of the body, causing wrinkles on the skin, loss of hair, and compromised immune systems. Peptide therapies have emerged as a promising approach in aging studies because of their excellent tolerability, low immunogenicity, and high specificity. Computational methods can significantly expedite wet lab-based anti-aging peptide discovery by predicting potential candidates with high specificity and efficacy. We propose AAGP, an anti-aging peptide predictor based on diverse physicochemical and compositional features. Two datasets were constructed, both shared anti-aging peptides as positives, with the first using antimicrobial peptides as negatives and the second using random peptides as negatives. Peptides were encoded using 4,305 features, followed by adaptive feature selection with a heuristic algorithm on both datasets. Nine machine learning models were used for cross-validation and independent tests. AAGP achieves reasonably accurate prediction performance, with MCCs of 0.692 and 0.580 and AUCs of 0.963 and 0.808 on the two independent test datasets, respectively. Our feature importance analysis shows that physicochemical features are more crucial for the first dataset, whereas compositional features hold greater importance for the second. The source code of AAGP is available at https://github.com/saptawtf/AAGP.

Similar content being viewed by others

Introduction

Since time immemorial, human beings have been in constant search of the “fountain of youth”. It would be best if you could send out 60 dollars and receive a sample of the medicine in a package1 rather than going on a long, arduous journey in search of an elusive mythical well. Even though a cure for the ailment with a 100% mortality rate has been romanticized for centuries and centuries, we are still a long distance away from it. Moreover, contrary to common misconception, it’s not only a search for a cure that can help one live longer (or forever) but rather a search for something that can keep you young and healthy2.

Aging is a natural phenomenon that happens to every living organism and is characterized by a loss of normal biological function and morphology, which finally leads to death. In humans, aging can cause several problems, including compromised immune systems, loss of hair, wrinkles in the skin, loss of normal physiological functions, and so on. Aging makes the body vulnerable to different diseases like neurodegenerative disorders, cancer, and cardiovascular diseases, and also makes it more prone to infections3,4. The pharmaceutical and nutraceutical companies have long spent a lot of money and effort to find cures and interventions that can help to slow down aging or deal with the phenotypical consequences of it5. Over the years, with the constant evolution of beauty standards, anti-aging research has also become very deeply intertwined with the cosmetics industry. The cosmetics industry has armed itself with a wide array of beauty products from sunscreens, moisturizers, and exfoliants to anti-aging and anti-wrinkle skincare creams. The market demand for anti-aging products has been expanding and growing over the years6,7,8. The need to look healthy and attractive has become as important as remaining healthy and living long, if not more. Hence, it is important to find and locate new avenues of drug discovery that can help us come up with important interventions.

There are a wide variety of interventions that have been actively researched. These interventions encompass diverse mechanisms, including the inhibition of cellular senescence, preservation of genomic stability, mitigation of mitochondrial dysfunction, prevention of telomere attrition, restoration of microbial homeostasis, reduction of chronic inflammatory processes, implementation of nutritional modifications, and application of targeted dermatological formulations9. Over the past decade, multiple peptide therapeutics have been designed and approved for usage in multiple countries for a wide array of diseases like diabetes, neurological disorders, hematological diseases10. Peptides are short strands of amino acids that are within the range of 500–5000 Da. They have been extensively investigated and used for the treatment of multiple diseases since the approval of insulin in the first half of the twentieth century. A lot of naturally occurring peptides have crucial functions in human physiology and are naturally expressed as hormones, neurotransmitters, growth factors, ion channel ligands, and anti-pathogenic agents. Over the years, peptide therapeutics have been observed to have excellent tolerability and safety profiles in human beings. Moreover, these biologics also have low immunogenicity, predictable metabolism, and high specificity, and the time from bench to market is much shorter than conventional therapeutics11,12. Anti-aging peptides represent a novel class of peptide-based therapeutics targeting aging. These peptides can address the problem of aging-related distress by modulating cell–cell communication. These peptides exhibit diverse functions, such as regulation of collagenase, inhibition of acetylcholine and other neurotransmitter production, enhancement of mitochondrial biogenesis and function, and attenuation of oxidative stress pathways6,7,13. In recent years, there has been a wide number of studies focusing on experiments to design novel anti-aging peptides. However, the design and discovery of such peptides are usually laborious and time-consuming, and hence, building computational machine learning (ML) models would be of great importance. There are studies utilizing various machine learning methods to predict bioactive peptides, such as anti-cancer, anti-microbial, anti-viral, and cell-penetrating peptides14,15,16,17,18,19. Machine learning classifiers can help rapidly identify leads for anti-aging peptides irrespective of the effect or mechanism. This can fast-track the discovery of novel peptides with anti-aging effects and facilitate the development of new peptide therapeutics.

Herein this study, we built multiple ML models that predict anti-aging peptides using sequence-based physicochemical and compositional features. We used sequences from the anti-aging peptide database (AagingBase)13, which contained natural and synthetic peptides selected based on specific assays and criteria. A vast majority of the peptides present in the database help combat skin aging, whereas the rest help mitigate effects like oxidative stress, macular degeneration, and cell aging. Two datasets (DS1 and DS2) were constructed, both sharing anti-aging peptides from AagingBase as positives, while DS1 used antimicrobial peptides as negatives, and DS2 used random peptides as negatives. A total of 4305 features across 57 feature types were considered, and customized feature subsets were then generated using a heuristic feature subset selection algorithm. It was demonstrated that different subsets of features were considered informative for DS1 and DS2. Nine ML models were then used for cross validation and independent tests based on the customized feature subsets. Our results show that AAGP achieves accuracies of 0.955 and 0.941, Matthew’s correlation coefficients (MCCs) of 0.692 and 0.580, and area under the ROC curves (AUCs) of 0.963 and 0.808 on the independent tests for DS1 and DS2, respectively. The prediction accuracy of our best models on dataset DS1 and DS2 shows a positive correlation with the ratio of hydrophobic, hydrophilic, and charged residues, while it shows a negative correlation with the ratio of uncharged residues. Our feature importance analysis reveals that the prediction for DS1 relies more on physicochemical features, such as charge, aliphaticity, hydrophilicity, and hydrophobicity, while the prediction for DS2 relies more on compositional features. The results demonstrate the robust capacity of our prediction pipeline to adaptively select highly informative features, providing reasonably accurate and reliable predictions.

Materials and methods

Workflow of AAGP

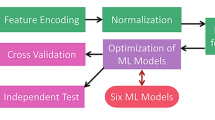

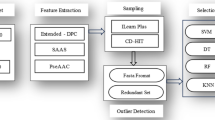

Figure 1 illustrates the workflow of AAGP. There are three major modules: dataset compilation, feature engineering, and hyperparameter optimization. Datasets DS1 and DS2 were constructed from three types of sequences: anti-aging peptides, antimicrobial peptides, and random peptides. The feature engineering module includes feature encoding, normalization, feature ranking, and feature subset selection. The selected feature subset was utilized for hyperparameter optimization, which leverages a Bayesian technique to identify optimal hyperparameters for each ML model. We considered 9 ML models, and the models with optimized hyperparameters were evaluated with cross validation and independent tests. Detailed descriptions of the modules are provided in the following sections.

Dataset compilation

We compiled two datasets with the same positives but different negatives for our study. A total of 282 anti-aging peptides were downloaded from the publicly available AagingBase database13. Peptides of no greater than 5 amino acids were removed. The remaining sequences were subjected to CD-HIT20 clustering to eliminate redundancy, retaining only those with less than 90% pairwise sequence identity. Antimicrobial peptides have often been reported to have cytotoxic effects and cause senescence in cells21,22. Moreover, they have also been linked to ROS (Reactive Oxygen Species) generation, which leads to oxidative stress and damage of cellular components such as DNA and proteins23. Antimicrobial peptides target pathogenic microorganisms through membrane disruption, whereas anti-aging peptides function through cellular repair, antioxidant activity, and metabolic regulation pathways. The two types of peptides may exhibit some functional overlap through shared immunomodulatory and anti-inflammatory properties, yet antimicrobial peptides lack the specific molecular characteristics required for direct anti-aging effects. Thus, antimicrobial peptides were taken as negative samples in this study. The use of functionally related but distinct peptide classes as negatives also provides a more stringent test of the model’s discriminatory power, ultimately leading to more robust and specific predictive algorithms.

The antimicrobial peptides were downloaded from the publicly available database DBAASP v324. CD-HIT-2D20 was then applied to remove redundant antimicrobial peptides, ensuring that the pairwise sequence identities between the positives (anti-aging peptides) and negatives (antimicrobial peptides) were below 90%. This resulted in 199 anti-aging peptides and 5186 antimicrobial peptides. Such an imbalanced dataset with many more negatives than positives can lead to a biased model that performs well on the majority class (negatives) but poorly on the minority class (positives). Thus, we applied random sampling and maintained a 1:10 positive-to-negative ratio (199 anti-aging peptides and 1990 antimicrobial peptides) within our dataset. The sampling process guaranteed that the distribution of peptide length for the positive sequences was identical to that for the negative sequences. The ratio decreased the extent of data imbalance, facilitating model training, and in the meantime, reflected the realistic situation that negative samples (non-anti-aging peptides) significantly outnumber positive samples (anti-aging peptides) in nature. This approach can lead to models with improved generalizability and applicability compared to those trained on balanced datasets. The complete dataset, termed DS1, was split into two subsets: DS1_Main for model development and cross-validation, containing 80% of the sequences (159 positive and 1590 negative peptides), and DS1_Indp for independent test, containing the remaining 20% (40 positive and 400 negative peptides).

It is common that studies on therapeutic peptides take randomly generated peptides from the Swiss-Prot database25 as negatives to facilitate the training of ML models26,27,28,29. Following the methodology, we constructed DS2 by substituting the negative sequences in DS1 with random peptide sequences from Swiss-Prot. To avoid bias in the dataset, the random peptides were carefully selected to ensure that (1) they have the same sequence length distribution as the positive peptides, and (2) the pairwise sequence identity between any positive and negative sequences is below 90%. Similarly, the 80−20 split was applied to DS2 to construct DS2_Main for model development and cross validation and DS2_Indp for an independent test. The sequence length distributions of DS1 and DS2 are shown in Supplementary Fig. S1. Using different types of negative samples helps evaluate model robustness and reduce bias, ensuring more reliable and generalizable findings. This approach also allows for performance comparison and deeper insights into feature importance.

Feature encoding

We transformed the amino acid sequences of the peptides into numerical representations based on various physicochemical and compositional features. The Pfeature30, modlAMP31, and iFeature32 packages were used to generate a feature vector of 4305 features based on 57 feature types for each sequence. These included various feature types such as amino acid composition (AAC), composition of k-spaced amino acid pairs (CKSAAP)33, dipeptide deviation from expected mean (DDE)34, dipeptide composition (DPC)35, conjoint triad (CTriad)36, the extended five-dimensional Z-scale for amino acids (z5)37, empirical residue-based potential (Ez)38, amphiphilic pseudo-amino acid composition (APAAC)33, pseudo amino acid composition (PAAC)33, and so on. Supplementary Table S1 lists all 57 feature types considered in this study.

Normalization

The feature values obtained after encoding were subject to robust normalization with the RobustScaler from the Scikit-learn package in Python. The scaler transforms a feature value from xi to yi using the equation below

in which Q3(X) and Q1(X) stand for the 3rd quartile and 1st quartile of feature X. For each feature, the scaled values have a median of zero and an interquartile range of one. The use of median and interquartile range, rather than mean and standard deviation, makes this method more resistant to outliers compared to standard and min–max normalization, as it reduces the impact of extreme values. This makes it ideal for our highly heterogeneous peptide datasets since the peptides originate from diverse sources and exhibit different biological mechanisms.

Machine learning methods

A total of nine ML models were considered in this study, including gradient boosting classifier (GBC)39,40, categorical boosting classifier (CB)40,41, light gradient boosting machine (LGBM)40,42, random forest (RF)43, extremely randomized trees (ET)44, linear discriminant analysis (LDA)45, quadratic discriminant analysis (QDA)45, k-nearest neighbors (KNN)46, and multi-layer perceptron (MLP) classifier47. LGBM, GBC, and CB are variants of gradient boosting algorithms that construct a series of weak learners that minimize the residual errors of the previous weak learners, and the final predictions are based on the weighted average of the output from the weak learners. RF consists of multiple decision trees built from randomly sampled subsets of the training data, with their predictions averaged to produce the final result. ET is similar, but instead of sampling with replacement, it uses the entire training dataset to train each tree. Moreover, the top-down node splitting is determined by a random process instead of a scoring function such as Gini impurity or information gain. LDA and QDA are based on linear and quadratic discriminant functions, respectively. LDA assumes equal covariance matrices across classes, leading to linear decision boundaries, while QDA allows different covariance matrices, resulting in quadratic decision boundaries. KNN is a non-parametric algorithm that uses distance metrics to separate classes. It classifies data points using a majority vote of their k-nearest neighbors. MLP is a feed-forward artificial neural network that consists of one input layer, one or more hidden layers, and one output layer. All our ML models are implemented with the Scikit-learn package of Python.

Heuristic algorithm for feature selection

Given n features, there are 2n possible feature subsets. We encoded a total of 4305 features for a peptide in the study, and hence, it is computationally impossible to evaluate all the subsets exhaustively. We proposed a heuristic feature selection method that consists of two main steps, namely, feature ranking and feature subset selection. In the first step, we utilized BORUTA48, a wrapper method based on an extreme gradient boosting algorithm for ranking all the features. The algorithm removes features that are statistically less significant than random features, thereby generating a list of features ranked by their importance. For a given dataset, the ranked features are sorted in descending order by feature importance as a list F = (F1, F2, F3,...F4305). Next, a forward selection method was applied to determine the best feature subset. The top N feature subset was defined as FSN = {F1, F2, F3,…, FN}. Iterative five-fold cross validation runs using FSN were performed using the nine ML models with their default hyperparameters from the Scikit-learn package. The experiments were carried out via PyCaret49 and the results of all the models were evaluated with MCC. Let MCCNi be the MCC for ML model i using FSN. After cross validation, we obtained a set of MCCs sorted in descending order, {MCCN1, MCCN2,… MCCN9}. The MCCN associated with FSN is defined as

where i corresponds to any of the nine ML models, namely, GBC, CB, LGBM, RF, ET, LDA, QDA, KNN, and MLP. The process was repeated iteratively, with N ranging from 10 to 290 in increments of 20. The selected feature subset (SFS) is defined as

The proposed heuristic feature selection algorithm has three advantages. First, using the default hyperparameters of ML models for cross validation avoids the lengthy process of model optimization. Second, employing ML models with different underlying principles reduces the risk of selecting feature subsets that are biased toward a specific model. Third, by considering the top five ML models for MCC evaluation, we prioritize feature subsets that are supported by the better-performing models. The heuristic feature selection method was applied to DS1_Main and DS2_Main, generating two feature subsets.

Optimization of machine learning models

After the best feature subset was determined, hyperparameters of the ML models were tuned on DS1 and DS2 using the automatic hyperparameter optimization package, Optuna50, which is based on sampling the search space and selectively cutting off unpromising trials using a Bayesian model. The tree-structured Parzen estimator51 algorithm was selected as the search algorithm as it converges more efficiently to an optimum compared to a randomized search. On the other hand, it takes much less time than the grid search algorithm. Optuna’s default pruner, MedianPruner, was employed to prune a trial (one specific set of hyperparameter values being evaluated) if its intermediate objective value is worse than the median of intermediate values of previous trials at the same step. This acts as an early stopping mechanism within each trial. In this study, the optimization was conducted with 50 trials using MCC as the objective function, and the key hyperparameter ranges for our ML models are detailed in Supplementary Table S2. The optimal hyperparameters for each ML model were obtained upon completion of the optimization process.Based on these hyperparameters, ten-fold cross validation was performed to evaluate each predictor.

To perform an independent test, we performed an additional training phase using the optimized hyperparameters of each model on the complete DS1_Main and DS2_Main dataset. These models, benefiting from a 10% increase in training data compared to the cross-validation models, were subsequently evaluated on the independent test sets, DS1_Indp and DS2_Indp.

Evaluation metrics

Prediction results were evaluated with various measures including accuracy, precision, recall (or sensitivity), F1-score, and MCC defined as follows.

where TN, TP, FN and FP correspond to the numbers of true negatives, true positives, false negatives and false positives, respectively. Accuracy, precision, recall, specificity and F1-Score range from 0 to 1, where a higher value signifies better predictive performance. MCC falls between − 1 and 1, showing absolute negative and positive correlations, respectively, with an MCC of 0 representing a random correlation. We also evaluate the models based on AUC52 (Area under the Receiver operating curve), a widely used metric that provides a comprehensive assessment of a classification model’s performance by capturing its ability to distinguish between the positive and negative classes across all possible decision thresholds. A value of 1 for AUC indicates a perfect model, and a value of 0.5 indicates a random model equivalent to random guessing.

Results and discussions

Amino acid composition and dipeptide composition

The AACs for DS1_Main, DS2_Main, DS1_Indp, and DS2_Indp are illustrated in Fig. 2A,B. In general, the AACs of the main dataset (Fig. 2A) and the independent test dataset (Fig. 2B) are consistent. It can be observed that anti-aging peptides are enriched in glycine residues compared to both random and antimicrobial peptides across the training and test datasets, consistent with previous literature findings13. The role of Gly in anti-aging has been reported and discussed in the literature; for example, glycine helps increase lifespans and improve health in both rodent and mammalian models by activating autophagy and mimicking methionine restriction53. In addition to Gly, there are higher compositions of Gln, Met, and Pro in the anti-aging peptides when compared to the random peptides and the antimicrobial peptides. These amino acids are associated with several discriminating patterns of dipeptides, as illustrated in Supplementary Fig. S2. For example, dipeptides of MQ, GP, and PG are more abundant in positive sequences compared to the two types of negative sequences.

The compositions of the branched-chain amino acids Leu and Ile are lower in the anti-aging peptides compared to both random and antimicrobial peptides, as shown in Fig. 2. This is consistent with the depletion of II, IL, LI, and LL dipeptides in positive sequences compared to the two types of negative sequences (Fig. S2). It is obvious from Fig. 2 that antimicrobial peptides and random peptides exhibit distinct compositions in certain amino acids, such as Asp, Glu, Lys, Gln, and Trp, indicating that the machine learning models trained on DS1 and DS2 may have different decision boundaries.

Sequence logo

We generated sequence logos for our datasets using the Two Sample Logo website54. In accordance with a previous study55, we took the 5 amino acids (the minimum peptide length) from the N and C termini of each peptide and concatenated them to form a fixed vector of 10 residues. The sequence logo shows the amino acids that are enriched or depleted at each position in the positive sequences relative to the negative ones. The sequence logos for DS1 and DS2 are illustrated in Supplementary Figs. S3A,B, respectively. For both DS1 and DS2, Gly, Gln, Met, and Pro are enriched in multiple positions. However, positively charged residues Lys and Arg are enriched only in DS2. It can be seen that Leu and Ile are depleted in both DS1 and DS2, whereas Lys, Arg, and Trp are depleted only in DS1, and Ser is depleted only in DS2. In general, the sequence logos are consistent with the amino acid composition presented in Fig. 2. The results also suggest that DS1 and DS2 share certain common amino acid propensities but exhibit some fundamental differences.

Selected feature subsets

Figure 3A and B show the average MCCs of the top 5 ML models across different feature numbers for DS1_Main and DS2_Main, respectively. The best feature numbers for DS1_Main and DS2_Main are 50, because it is associated with the highest average MCC. The top five models for DS1 are GBC, CB, RF, LGBM, and ET, whereas those for DS2 are ET, RF, LGBM, GBC, and CB, all of which are more advanced and complex ML models. A complete list of the 50 features for DS1_Main and DS2_Main is listed in Supplementary Tables S3 and S4. The selected features of DS1_Main and DS2_Main belong to 20 and 21 feature types, respectively, as shown in Supplementary Table S5. The selected feature subsets of DS1 and DS2 share 15 common feature types, including ABHPRK56, APAAC, CKSGAAGP57, CKSAAP58, CTDC59, CTDD59, CTriad, DDE, Ez, GTPC60, MSW61, NMBroto62, PAAC, QSO63, and Z5. Among the top 10 feature types for DS1_Main and DS2_Main in Table S5, eight of them belong to the common feature types, indicating a certain degree of similarity. Among the features selected for DS1_Main, the physicochemical feature type ABHPRK is the most prevalent, with 7 features selected, while only 3 ABHPRK features are selected for DS2_Main. Conversely, DDE is the most prevalent feature type for DS2_Main, with 7 features selected, and yet the number of selected DDE features for DS1_Main is 4. Similar situations are observed for the feature types of CKSAAGP (6 for DS1_Main and 2 for DS2_Main) and CTDC (3 for DS1_Main and 1 for DS2_Main), revealing inherent differences between the two selected feature subsets.

The selected features for DS1_Main are associated with important attributes, including hydrophobicity, hydrophilicity, charge, and aliphaticity (observed from the feature types of APAAC, CKSAAGP, CTDC, CTDD, and GTPC in Table S3), while the attributes for DS2_Main are only hydrophobicity and charge (Table S4). It is found that the selected features correspond to important amino acids mentioned in the previous sections, such as Gly (AAC_G for DS1_Main and APAAC for DS2_Main), Pro (DDE_PP for DS1_Main and APAAC for DS2_Main), and Ile (DDR_I for DS1_Main). The selected dipeptide-related features, such as AP and WT for DS1_Main and MQ, PG, RA, RP, RQ, TW, and GF for DS2_Main, are enriched in the positive sequences, as illustrated in Fig. S2. Similarly, dipeptides WC (for DS1_Main) and NT (for DS2_Main) are enriched in the negative sequences.

Benchmark results of cross validation

The benchmark results of cross validation on DS1_Main and DS2_Main are shown in Table 1. The top four models (ET, GBC, CB, and RF) for DS1_Main generate accuracies between 0.953 and 0.956, F1-scores between 0.698 and 0.726, AUCs between 0.953 and 0.962, and MCCs between 0.693 and 0.715, indicating fairly accurate overall predictions. ET demonstrates superior performance across multiple metrics, outperforming other models in accuracy, precision, AUC, and MCC. The top two models, ET and GBC, demonstrate somewhat comparable accuracy, specificity, and MCC. The major difference between the two is that ET yields 11.1% higher precision than GBC, and yet GBC yields 7.4% higher recall than ET. MLP and QDA are considered the worst-performing models, judging by accuracy, AUC, and MCC. MLP suffers from a recall of 0.301, the lowest among all the models, whereas QDA suffers from a precision of 0.525, also the lowest among all the models.

For DS2_Main, the top 5 models (ET, GBC, CB, RF, and LGBM) generate accuracies between 0.937 and 0.943, F1-scores between 0.500 and 0.579, AUCs between 0.847 and 0.893, and MCCs between 0.530 and 0.580. These values are lower than those from DS1_Main, indicating that differentiating anti-aging peptides from random peptides is more challenging. It can also be observed that the top 5 models excel in precision (above 0.8) while showing potential for improvement in recall (below 0.46), suggesting that enhancing sensitivity could further optimize their predictions. The bottom two models for DS2_Main, namely, QDA and LDA, generate recalls (0.424 and 0.344) comparable to the top 5 models (in between 0.352 and 0.456), while their precisions (0.550 and 0.547) are significantly lower than those from the top 5 models (in between 0.806 and 0.982). The clear weakness of QDA and LDA compared to the top models lies in their significantly lower precision.

Benchmark results of independent test

The benchmark results on DS1_Indp and DS2_Indp are shown in Table 2. The top 5 models on DS1_Indp (LGBM, CB, GBC, ET, and RF), which are more advanced ensemble ML methods, achieve reasonably accurate predictions, with accuracies ranging from 0.943 to 0.955, AUCs ranging from 0.959 to 0.963, and MCCs ranging from 0.660 to 0.692. Since the dataset is highly unbalanced (positive-to-negative ratio of 1:10), the large number of true negatives significantly contributes to the measures of accuracy, AUC, and specificity. LGBM is the top-performing model, yielding the highest accuracy (0.955), precision (0.885), and MCC (0.692) among all the models, and yet it suffers from a relatively lower recall of 0.575. In contrast, the second-best model, CB, achieves the highest recall of 0.775 with a relatively lower precision of 0.660. MLP’s poor performance, reflected in its low F1-score (0.320) and MCC (0.376) compared to other methods, is attributed to its particularly low recall of 0.200. The ROC curves of all the models on DS1_Indp are shown in Supplementary Fig. S4A.

Similarly, the top 5 models on DS2_Indp (ET, CB, RF, LGBM, and GBC) are more advanced ensemble ML methods, achieving accuracies ranging from 0.932 to 0.941, AUCs ranging from 0.788 to 0.829, and MCCs ranging from 0.500 to 0.580. The accuracy, AUC, and MCC values for DS2_Indp are in general lower than those for DS1_Indp, consistent with our previous observation that differentiating anti-aging peptides from random peptides is more challenging. Similar to the cross-validation results of DS2_Main, all the methods suffer from low recall (between 0.3 and 0.45), suggesting that improving the models’ sensitivity can significantly enhance the overall prediction performance. KNN and QDA models (for DS2_Indp) showed comparable recalls (0.350 and 0.450) to the top 5 models, but their much lower precision values (0.560 and 0.439) result in poor overall prediction performance. The ROC curves of all the models on DS2_Indp are shown in Supplementary Fig. S4B.

We analyzed the correlation between the prediction probability of a peptide and the true positive rate (TPR), which represents the likelihood that a peptide is an anti-aging peptide. Ideally, a higher prediction probability assigned by a model corresponds to a higher TPR if the model has good predictive capability. TPR was calculated by dividing the number of anti-aging peptides by the number of peptide sequences predicted within the range of prediction probability. It can be seen in Fig. 4A,B that our models in general show strong positive correlations between their prediction probability and TPR. The only curve that does not exhibit a monotonic increase in Fig. 4B is QDA, the worst-performing method for DS2_Indp.

The sequence number and true positive rate plotted with respect to the prediction probability for (A) DS1 and (B) DS2. Prediction probability is obtained from the best-performing model on both DS1_Indp (ET) and DS2_Indp (LGBM). The true positive rate is calculated by the number of AAGPs divided by the total number of sequences predicted within each range of prediction probabilities.

Prediction accuracy with respect to peptide properties

We further analyzed the prediction results of the best models on independent tests, namely, LGBM for DS1_Indp and ET for DS2_Indp, with respect to multiple peptide properties characterized by the ratios of hydrophobic, hydrophilic, aliphatic, aromatic, charged, and uncharged residues within peptides. The groupings of amino acids based on their properties are listed in Supplementary Table S6, and the analysis results are illustrated in Fig. 5. Prediction accuracy positively correlates with the ratios of both hydrophobic and hydrophilic residues in DS1_Indp and DS2_Indp (Fig. 5A,B,G,H). It can also be observed from Supplementary Tables S3 and S4 that multiple features regarding hydrophobicity and hydrophilicity are selected for both DS1_Main and DS2_Main. Despite the importance of hydrophobicity for skin permeability, many anti-aging peptides are hydrophilic and require chemical conjugation or carriers for effective permeation of the skin epidermis64.

Peptides’ aliphaticity and aromaticity positively correlate with prediction accuracy for DS1_Indp (Fig. 5C,D) but show opposite trends for DS2_Indp (Fig. 5I,J). This is in good agreement with the observation from Supplementary Tables S3 and S4 that more features regarding aliphatic property are selected for DS1 compared to DS2, and features regarding aromaticity are only selected for DS1. It can also be observed from Fig. 2A,B that the AACs of aromatic residues Phe, Trp, and Tyr demonstrate larger differences between the positive and negative sequences for DS1 (blue bars vs. green bars) than those for DS2 (red bars vs. green bars). Similar situations can also be observed for aliphatic amino acids Leu, Ile, and Pro. In both DS1 and DS2, prediction accuracy shows a positive correlation with the ratio of charged residues and a negative correlation with the ratio of uncharged residues, consistent with a previous report that many of the anti-aging peptides are charged7. Several features regarding charge are also selected for both DS1_Main and DS2_Main (Supplementary Tables S3 and S4).

Interpretation of the ML models using SHAP

SHAP (SHapley Additive exPlanations analysis) analysis is a robust tool based on game theory to explain how different features contribute towards the prediction output of a model. Positive SHAP values show that the features push the model toward predicting a positive outcome, whereas negative SHAP values show that the features push the model to make negative predictions. Here, we analyzed LGBM and ET, our best models on DS1_Indp and DS2_Indp, judging by MCC, respectively. Figure 6A,B illustrate the SHAP values for the top 20 features for DS1_Indp and DS_2_Indp, respectively. The two models share several common feature types, including compositional features DDE and CKSAAGP, and the physicochemical features CTDC and ABHPRK. Most of the features selected for DS1_Indp are physicochemical features, many of which are related to attributes of charge, aliphaticity, hydrophilicity, and hydrophobicity. A total of 7 of the top 20 features (2 for APAAC, 3 for CKSAAGP, and 2 for formula) for DS1_Indp are compositional features. On the other hand, the top 20 features for DS2_Indp include 12 compositional features (6 for DDE, 2 for CKSAAGP, 2 for CKSAAP, 1 for CTriad, and 1 for PAAC), much more than the compositional features for DS1_Indp.

The analysis suggests that the two models (LGBM and ET) learned to distinguish positive and negative sequences from the two datasets based on different characteristics. In DS2_Indp, the negative samples are random peptides selected from Swiss-Prot, with AAC values resembling the background sequence space. Intuitively, compositional features would be useful in distinguishing these random peptides from anti-aging peptides, which possess specific biological functions and distinct compositional patterns of amino acids. In contrast, the compositional features exhibit less pronounced differences between the positive samples (anti-aging peptides) and negative samples (anti-microbial peptides) from DS1_Indp, leading LGBM to rely more heavily on physicochemical features for classification. The results showcase the capability of our prediction pipeline in the adaptive selection of informative features, contributing to accurate predictions. It is reported that the composition of the training data, specifically the type of negative sequences, affects the decision boundary and final outcome of an ML model65. The experimental design based on the two datasets and two sets of trained ML models offers significant potential for advancing the field of anti-aging peptide identification.

Independent tests on datasets with varying positive-to-negative ratios

Independent tests on datasets with varying positive-to-negative (P/N) ratios facilitate evaluating model robustness and generalizability across different class imbalance scenarios that may be encountered in real-world applications. Random antimicrobial peptides and random peptides from the Swiss-Prot database were extracted to form new independent test datasets similar to DS1_Indp and DS2_Indp, respectively, at P/N ratios of 1:5, 1:8, 1:13, and 1:15. Our best trained models, namely, LGBM for DS1 and ET for DS2, were evaluated on these datasets, and the results are shown in Supplementary Table S7.

It can be seen for both DS1 and DS2, model performances on datasets with P/N ratios of 1:5, 1:8, and 1:13 remain comparable to that achieved on the original independent test datasets (P/N ratio of 1:10) across MCC, AUC, and F1-score, demonstrating robust generalizability. However, at the more extreme P/N ratio of 1:15, performance degradation becomes evident. LGBM on DS1 exhibits decreases of 5% and 8% in MCC and F1-score, respectively, relative to the performance on DS1_Indp. Similarly, ET on DS2 shows decreases of 7% and 11% in MCC and F1-score, respectively, compared to the prediction on DS2_Indp. The performance decline is attributed to significantly reduced sensitivity (> 10% for both DS1 and DS2), indicating that future improvement is needed for prediction on highly imbalanced anti-aging peptide datasets.

Computational efficiency of AAGP

The computational efficiency of AAGP was evaluated by measuring the execution time for its six core components: feature encoding, feature ranking, heuristic algorithm for feature selection, hyperparameter optimization, cross validation, and independent test. All experiments were conducted on a standard workstation equipped with an Intel® Core™ i7-8700 CPU (3.20 GHz base frequency), 32 GB RAM, and no GPU acceleration. The total processing time for complete experiments on DS1 and DS2 was less than 30 and 40 min, respectively. Detailed timing breakdowns for individual processing steps are provided in Supplementary Table S8.

Conclusion

This study introduces AAGP, a novel method for the prediction of anti-aging peptides. Two datasets were constructed, both using anti-aging peptides as positives, but with antimicrobial peptides as negatives in DS1 and random peptides as negatives in DS2. Peptide sequence encoding was performed using a comprehensive set of 4305 features, categorized into 57 feature types. Feature ranking was conducted on DS1_Main and DS2_Main using the Boruta algorithm, followed by feature subset selection based on a heuristic algorithm. Consequently, two optimal feature subsets were identified for DS1 and DS2, which were subsequently used to develop 9 machine learning models (LGBM, GBC, CB, ET, RF, KNN, MLP, QDA, and LDA). For each model, Bayesian optimization was employed for hyperparameter tuning, followed by cross-validation with the main datasets (DS1_Main and DS2_Main) and evaluation on independent test sets (DS1_Indp and DS2_Indp).

On the independent test set DS1_Indp, the best-performing model (LGBM) achieves 0.955, 0.963, and 0.692 for accuracy, AUC, and MCC, respectively. On the independent test set DS2_Indp, the best-performing model (ET) achieves 0.941, 0.808, and 0.580 for accuracy, AUC, and MCC, respectively. These values are consistent with the cross-validation results (using DS1_Main and DS2_Main), indicating robust generalizability and no evidence of overfitting. The evaluation results also indicate that the best-performing ML models exhibit lower recall and higher precision for both datasets. This observation is likely due to the diverse range of anti-aging peptides, encompassing a variety of molecular mechanisms and sequence properties. For both DS1_Indp and DS2_Indp, predictive accuracy demonstrates a positive correlation with the proportion of hydrophilic, hydrophobic, and charged residues within a peptide, while a negative correlation is observed with the proportion of uncharged residues. Furthermore, an increased ratio of aliphatic and aromatic residues within a peptide is associated with improved predictive accuracy for DS1_Indp but diminished predictive accuracy for DS2_Indp. SHAP analysis reveals that the prediction of DS1_Indp relies more heavily on physicochemical features, while the prediction of DS2_Indp is more strongly influenced by compositional features. These results highlight distinct underlying patterns and characteristics in the two datasets, influencing their respective prediction tasks. The distinct strengths of the models trained on each dataset suggest their suitability for different applications, which can accelerate progress in the study of anti-aging peptides.

The datasets were constructed with a 1:10 positive-to-negative ratio to mitigate potential model bias towards the majority class and to better approximate the real-world distribution where negative samples are considerably more abundant. Similar to most studies on therapeutic peptide prediction, the datasets presented herein represent only a small portion of the complete peptide space due to limited experimental data. This limitation means that our models, while demonstrating good generalizability on the current data, might not fully capture the breadth of anti-aging mechanisms beyond those predominantly related to skin aging, oxidative stress, macular degeneration, and cell aging, which constitute the majority of currently available experimental data. Consequently, the predictive power for novel anti-aging peptides targeting less-explored mechanisms may be constrained. Furthermore, a challenge in the practical application of our method lies in the experimental validation of predicted peptides. While our model can identify potential anti-aging candidates, the subsequent wet-lab synthesis and testing of these peptides remain a time-consuming and resource-intensive process, which can slow down the translation of computational predictions into tangible therapeutic agents. Despite this limitation, our experimental results demonstrate good model generalizability. Future expansion of experimentally verified anti-aging peptides, particularly those addressing a wider spectrum of aging-related conditions, is expected to further improve the predictive performance and facilitate the development of deep learning approaches for more accurate prediction.

Data availability

The datasets generated and/or analysed during the current study are available in the AAGP repository, https://github.com/saptawtf/AAGP.

References

Haber, C. & Anti-Aging, M. The history: life extension and history: the continual search for the fountain of youth. J. Gerontol. Ser. A. 59, B515–B522 (2004).

Hodgson, R. et al. Aging: therapeutics for a healthy future. Neurosci. Biobehav. Rev. 108, 453–458 (2020).

Guo, J. et al. Aging and aging-related diseases: from molecular mechanisms to interventions and treatments. Signal. Transduct. Target. Ther. 7, 1–40 (2022).

Gladyshev, V. N. et al. Molecular damage in aging. Nat. Aging. 1, 1096–1106 (2021).

Moskalev, A. et al. Targeting aging mechanisms: Pharmacological perspectives. Trends Endocrinol. Metab. 33, 266–280 (2022).

Ferreira, M. S., Magalhães, M. C., Sousa-Lobo, J. M. & Almeida, I. F. Trending anti-aging peptides. Cosmetics. 7, 91 (2020).

Veiga, E. et al. Anti-aging peptides for advanced skincare: focus on nanodelivery systems. J. Drug Deliv. Sci. Technol. 89, 105087 (2023).

Ahmed, I. A., Mikail, M. A., Zamakshshari, N. & Abdullah, A. S. H. Natural anti-aging skincare: role and potential. Biogerontology. 21, 293–310 (2020).

de Magalhães, J. P., Stevens, M. & Thornton, D. The business of anti-aging science. Trends Biotechnol. 35, 1062–1073 (2017).

Jain, S., Gupta, S., Patiyal, S. & Raghava, G. P. THPdb2: compilation of FDA approved therapeutic peptides and proteins. Drug Discov. Today. 29, 104047 (2024).

Wang, L. et al. Therapeutic peptides: current applications and future directions. Signal. Transduct. Target. Ther. 7, 1–27 (2022).

Fosgerau, K. & Hoffmann, T. Peptide therapeutics: current status and future directions. Drug Discov. Today. 20, 122–128 (2015).

R, K. et al. AagingBase: a comprehensive database of anti-aging peptides. Database. 2014, baae016 (2024).

Basith, S., Manavalan, B., Hwan Shin, T. & Lee, G. Machine intelligence in peptide therapeutics: A next-generation tool for rapid disease screening. Med. Res. Rev. 40, 1276–1314 (2020).

Malik, A. et al. APLpred: A machine learning-based tool for accurate prediction and characterization of asparagine peptide lyases using sequence-derived optimal features. Methods. 229, 133–146 (2024).

Guan, J. et al. A two-stage computational framework for identifying antiviral peptides and their functional types based on contrastive learning and multi-feature fusion strategy. Brief. Bioinform. 25, bbae208 (2024).

Yao, L. et al. AMPActiPred: A three-stage framework for predicting antibacterial peptides and activity levels with deep forest. Protein Sci. 33, e5006 (2024).

Yao, L. et al. ACP-CapsPred: an explainable computational framework for identification and functional prediction of anticancer peptides based on capsule network. Brief. Bioinform. 25, bbae460 (2024).

Zhang, X. et al. SiameseCPP: a sequence-based Siamese network to predict cell-penetrating peptides by contrastive learning. Brief. Bioinform. 24, bbac545 (2023).

Li, W. & Godzik, A. Cd-hit: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 22, 1658–1659 (2006).

Badinloo, M. et al. Overexpression of antimicrobial peptides contributes to aging through cytotoxic effects in Drosophila tissues. Arch. Insect Biochem. Physiol. 98, e21464 (2018).

Szymczak, P. & Szczurek, E. Artificial intelligence-driven antimicrobial peptide discovery. Curr. Opin. Struct. Biol. 83, 102733 (2023).

Zhang, Q. Y. et al. Antimicrobial peptides: mechanism of action, activity and clinical potential. Mil Med. Res. 8, 1–25 (2021).

Pirtskhalava, M. et al. DBAASP v3: database of antimicrobial/cytotoxic activity and structure of peptides as a resource for development of new therapeutics. Nucleic Acids Res. 49, D288–D297 (2021).

Boeckmann, B. et al. The SWISS-PROT protein knowledgebase and its supplement trembl in 2003. Nucleic Acids Res. 31, 365–370 (2003).

Lee, Y. C., Yu, J. C., Ni, K., Lin, Y. C. & Chen, C. T. Improved prediction of anti-angiogenic peptides based on machine learning models and comprehensive features from peptide sequences. Sci. Rep. 14, 1–10 (2024).

Agrawal, P., Bhagat, D., Mahalwal, M., Sharma, N. & Raghava, G. P. AntiCP 2.0: an updated model for predicting anticancer peptides. Brief. Bioinform. 22, bbaa153 (2021).

Naorem, L. D., Sharma, N. & Raghava, G. P. A web server for predicting and scanning of IL-5 inducing peptides using alignment-free and alignment-based method. Comput. Biol. Med. 158, 106864 (2023).

Olsen, T. H. et al. AnOxPePred: using deep learning for the prediction of antioxidative properties of peptides. Sci. Rep. 10, 21471 (2020).

Pande, A. et al. Pfeature: A tool for computing wide range of protein features and Building prediction models. J. Comput. Biol. 30, 204–222 (2023).

Müller, A. T., Gabernet, G., Hiss, J. A. & Schneider, G. ModlAMP: Python for antimicrobial peptides. Bioinformatics 33, 2753–2755 (2017).

Chen, Z. et al. iFeature: a Python package and web server for features extraction and selection from protein and peptide sequences. Bioinformatics. 34, 2499–2502 (2018).

Chou, K. C. Prediction of protein cellular attributes using pseudo-amino acid composition. Proteins Struct. Funct. Bioinform. 43, 246–255 (2001).

Garg, A. & Raghava, G. P. S. A machine learning based method for the prediction of secretory proteins using amino acid composition, their order and similarity-search. Silico Biol. 8, 129–140 (2008).

Garg, A., Bhasin, M. & Raghava, G. P. S. Support vector machine-based method for subcellular localization of human proteins using amino acid compositions, their order, and similarity Search *. J. Biol. Chem. 280, 14427–14432 (2005).

Shen, J. et al. Predicting protein–protein interactions based only on sequences information. Proc. Natl. Acad. Sci. 104, 4337–4341 (2007).

Sandberg, M., Eriksson, L., Jonsson, J., Sjöström, M. & Wold, S. New chemical descriptors relevant for the design of biologically active peptides. A multivariate characterization of 87 amino acids. J. Med. Chem. 41, 2481–2491 (1998).

Senes, A. et al. Ez, a Depth-dependent potential for assessing the energies of insertion of amino acid Side-chains into membranes: derivation and applications to determining the orientation of transmembrane and interfacial helices. J. Mol. Biol. 366, 436–448 (2007).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

Bentéjac, C., Csörgő, A. & Martínez-Muñoz, G. A comparative analysis of gradient boosting algorithms. Artif. Intell. Rev. 54, 1937–1967 (2021).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. CatBoost: unbiased boosting with categorical features. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, 6639–6649 (Curran Associates Inc., 2018).

Ke, G. et al. LightGBM: a highly efficient gradient boosting decision tree. in Proceedings of the 31st International Conference on Neural Information Processing Systems, 3149–3157 (Curran Associates Inc., 2017).

Parmar, A., Katariya, R. & Patel, V. A. Review on Random forest: an ensemble classifier. In International Conference on Intelligent Data Communication Technologies and Internet of Things (ICICI) 2018 (eds. Hemanth, J., Fernando, X., Lafata, P. & Baig, Z.) 758–763. https://doi.org/10.1007/978-3-030-03146-6_86 (Springer International Publishing, 2019).

Geurts, P., Ernst, D. & Wehenkel, L. Extremely randomized trees.

Tharwat, A. Linear vs. quadratic discriminant analysis classifier: a tutorial. Int. J. Appl. Pattern Recognit. 3, 145–180 (2016).

KNN Model-Based Approach. in Classification | SpringerLink. https://link.springer.com/chapter/10.1007/978-3-540-39964-3_62.

Delashmit, W., Missiles, L. & Manry, M. Recent developments in multilayer perceptron neural networks. (2005).

Kursa, M. B., Jankowski, A. & Rudnicki, W. R. Boruta—A system for feature selection. Fundam Informaticae. 101, 271–285 (2010).

pycaret. PyCaret—An open source, low-code machine learning library in Python.

Akiba, T. et al. A next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2623–2631. https://doi.org/10.1145/3292500.3330701 (Association for Computing Machinery, 2019).

Bergstra, J., Bardenet, R., Bengio, Y. & Kégl, B. Algorithms for hyper-parameter optimization. In Proceedings of the 25th International Conference on Neural Information Processing Systems, 2546–2554 (Curran Associates Inc., 2011).

Miller, C., Portlock, T., Nyaga, D. M. & O’Sullivan, J. M. A review of model evaluation metrics for machine learning in genetics and genomics. Front. Bioinform. 4, 1457619 (2024).

Johnson, A. A. & Cuellar, T. L. Glycine and aging: evidence and mechanisms. Ageing Res. Rev. 87, 101922 (2023).

Vacic, V., Iakoucheva, L. M. & Radivojac, P. Two sample logo: a graphical representation of the differences between two sets of sequence alignments. Bioinformatics. 22, 1536–1537 (2006).

Dhall, A. et al. TNFepitope: A webserver for the prediction of TNF-α inducing epitopes. Comput. Biol. Med. 160, 106929 (2023).

Timmons, P. B. & Hewage, C. M. ENNAACT is a novel tool which employs neural networks for anticancer activity classification for therapeutic peptides. Biomed. Pharmacother. 133, 111051 (2021).

Li, F. et al. Computational analysis and prediction of PE_PGRS proteins using machine learning. Comput. Struct. Biotechnol. J. 20, 662–674 (2022).

Chen, K., Kurgan, L. A. & Ruan, J. Prediction of protein structural class using novel evolutionary collocation-based sequence representation. J. Comput. Chem. 29, 1596–1604 (2008).

Dubchak, I., Muchnik, I., Holbrook, S. R. & Kim, S. H. Prediction of protein folding class using global description of amino acid sequence. Proc. Natl. Acad. Sci. U. S. A. 92, 8700–8704 (1995).

Basith, S., Lee, G. & Manavalan, B. STALLION: a stacking-based ensemble learning framework for prokaryotic lysine acetylation site prediction. Brief. Bioinform. 23, bbab376 (2022).

Zaliani, A. & Gancia, E. MS-WHIM scores for amino acids: A new 3D-Description for peptide QSAR and QSPR studies. J. Chem. Inf. Comput. Sci. 39, 525–533 (1999).

Horne, D. S. Prediction of protein helix content from an autocorrelation analysis of sequence hydrophobicities. Biopolymers. 27, 451–477 (1988).

Chou, K. C. Prediction of protein subcellular locations by incorporating Quasi-Sequence-Order effect. Biochem. Biophys. Res. Commun. 278, 477–483 (2000).

Mortazavi, S. M. & Moghimi, H. R. Skin permeability, a dismissed necessity for anti-wrinkle peptide performance. Int. J. Cosmet. Sci. 44, 232–248 (2022).

Ursu, E. et al. Training data composition determines machine learning generalization and biological rule discovery. 06.17.599333 (2024). https://doi.org/10.1101/2024.06.17.599333 (2024).

Acknowledgements

This work was supported by National Science and Technology Council, Taiwan under Grant NSTC 113-2221-E-468-006.

Author information

Authors and Affiliations

Contributions

S.D., J.C.Y., Y.H.L., and Y.C.C. developed the method. S.D. and J.C.Y. conducted experiments and analyzed the results. C.T.C. and S.D. conceived the work and edited the manuscript. C.T.C. supervised the study. All authors read and approved the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Datta, S., Yu, JC., Lin, YH. et al. AAGP integrates physicochemical and compositional features for machine learning-based prediction of anti-aging peptides. Sci Rep 15, 29036 (2025). https://doi.org/10.1038/s41598-025-12759-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-12759-0