Abstract

To address the shortcomings of the gravitational search algorithm (GSA), such as its tendency to fall into local optima, slow convergence, and low solution accuracy, this paper proposes a GSA based on multi-strategy cooperative optimization. The proposed algorithm balances global exploration and local exploitation. In the early iterations, particle positions are primarily updated using the original gravitational force, preserving the inherent characteristics of the GSA. In the later stages, particles with better fitness values are updated using a globally optimal Lévy random walk strategy to enhance local search capabilities, while particles with poorer fitness values are updated using the sparrow algorithm follower strategy. This approach increases the particles' exploration of unexplored local areas, further improving the local exploitation abilities of the algorithm. Finally, the lens-imaging opposition-based learning strategy generates opposite solutions for particles at different stages, increasing population diversity, expanding the search range, and enhancing the global search performance of the algorithm. An effectiveness analysis and algorithm comparison tests were carried out on 24 typical complex benchmark functions. The performance analysis results show that the multi-strategy collaborative optimization method effectively leverages both the global and local search abilities of the algorithm, improving its solution accuracy and stability. Compared with other GSA-based algorithms and advanced intelligent algorithms, the proposed algorithm exhibits superior solution accuracy, convergence speed, and stability, making it an efficient GSA-based algorithm. In addition, the proposed algorithm was applied to three engineering design optimization problems to verify its applicability to real-world scenarios.


Introduction

In scientific research and engineering, many problems can be reduced to a search for the optimal solution. Optimization involves identifying a set of parameter values that satisfy a given optimality metric while adhering to specific constraints, with the aim of determining the best solution among various alternatives. Optimization problems have been extensively studied, leading to the development of various techniques, such as Newton’s method, the conjugate gradient method, pattern search, the simplex method, Rosenbrock’s method, and Powell’s method. However, when dealing with large-scale problems, these techniques often require exploration of the entire search space, leading to a combinatorial explosion in search complexity and making polynomial-time completion unfeasible. Consequently, solving engineering optimization problems remains a challenging task. Inspired by human intelligence, the social behavior of biological groups, and the laws of natural phenomena, scientists have proposed intelligent optimization algorithms to solve them. These algorithms can be categorized into three groups based on their underlying principles: (1) intelligent algorithms based on physical principles, such as the simulated annealing algorithm [1], gravitational search algorithm (GSA) [2], energy valley optimization algorithm (EVO) [3], and Kepler optimization algorithm (KOA) [4]; (2) swarm intelligence-based algorithms, including particle swarm optimization (PSO) [5], Harris hawk optimization (HHO) [6] (either alone or combined with PSO), seahorse optimization (SHO) [7], and zebra optimization algorithm (ZOA) [8]; and (3) intelligent algorithms based on evolutionary mechanisms, such as the genetic algorithm (GA) [9], differential evolution (DE) [10], memetic algorithms (MA) [11], and the imperialist competitive algorithm (ICA) [12].
Intelligent optimization algorithms leverage the collective capabilities of groups to conduct parallel and distributed searches, eliminating the need for centralized control or a global model. They operate independently of the strict mathematical properties inherent to the problem and do not require an exact mathematical model, offering significant advantages in terms of computational complexity.

The GSA, proposed by Rashedi et al. in 2009 [2], emulates the law of universal gravitation to facilitate information exchange among particles, thereby identifying the optimal solution. This algorithm is characterized by its simple implementation and strong performance in global search and convergence. Since its introduction, it has garnered significant attention from the academic community, leading to numerous research developments over a relatively short span. The GSA has been successfully applied in various fields, including robot path planning [13, 14], parameter estimation and scheduling [15–21], image classification [22, 23], neural network optimization [24, 25], and data prediction [26–28]. However, like other intelligent algorithms, its precision and ability to escape local optima are limited. Overcoming these disadvantages, enhancing its optimization capabilities, and effectively applying it to practical problems are crucial challenges that must be addressed.

Scholars have proposed various strategies and methods to address these challenges and enhance the efficacy of GSA. The gravitational constant of the algorithm can be adaptively adjusted according to the number of iterations by employing an exponential decay model, as introduced by Yang et al. [29]. The population density index is used to dynamically adjust the distance between particles, and iterative evolution leads to changes in direction with respect to both position and velocity. Liu et al. [30] proposed an adaptive gravitational constant. In this method, each individual is assigned its own constant, which is updated based on a threshold and probability, to prevent particles from becoming trapped in local optima during the search process. Joshi [31] incorporated a chaotic component based on sine and cosine functions into the gravitational constant to address insufficient variations during certain stages of the search process. The populations in the methods of Wu et al. [32] and Wang et al. [33] are conceptualized as multi-layered structures, with hierarchical organizations guiding the evolutionary trajectory of individuals to address premature convergence and poor algorithm performance. Wang et al. [34] employ a distributed architecture, partitioning the population into multiple sub-populations that guide the evolutionary process through a hierarchical structure and facilitate the exchange of globally optimal values for information sharing. Shehadeh [35] presented a hybrid algorithm combining GSA with the sperm swarm algorithm, integrating their advantages to avoid becoming trapped in local extrema. Kumar and John [36] introduced a novel GSA incorporating Gaussian PSO. This method uses Gaussian parameters in the particle swarm velocity calculations, which are then integrated into the overall GSA. Özyön et al. [37] introduced an incremental GSA, adding a new particle at specified iteration intervals until the population reaches its maximum capacity.
Yu et al. [38] proposed a fuzzy system-based GSA. This approach incorporates 12 fuzzy rules to regulate the parameters, ensuring improved convergence and the ability to avoid becoming trapped in local extrema. Bala and Yadav [39] introduced an enhanced comprehensive learning strategy into GSA, enabling the effective acquisition of optimal solutions from particles with diverse dimensions within the population. Feng et al. [40] proposed a multi-strategy GSA incorporating the Lévy flight strategy to enhance local search for the globally optimal solution and a mutation strategy to improve population diversity. Pandey et al. [41] introduced a hyperbolic GSA, which dynamically adjusts the gravitational constant using a hyperbolic function, enhancing the attraction of the other particles to the best particle and effectively addressing slow and premature convergence. Acharya and Das [42] proposed a GSA incorporating opposition-based learning, using a randomly generated initial population to create an opposite population, and allowing the algorithm to explore both spaces during the iteration process.

Studies of swarm intelligence algorithms show that commonly used improvement strategies include mutation strategies (e.g., chaotic, Gaussian, Cauchy, and differential mutations), flight- and walk-based strategies (e.g., Lévy flight, random walk, spiral flight, and triangle walk), and other strategies such as crisscross optimization, lens-imaging opposition-based learning, dynamic opposition-based learning, the sine cosine algorithm, and adaptive convergence factors. These strategies have been widely used in various combinations within swarm intelligence algorithms, yielding substantial improvements in performance. To overcome the inherent defects of the GSA, this paper proposes a new GSA based on multi-strategy cooperative optimization, referred to hereafter as the IMSGSA, which incorporates several ideas from swarm intelligence algorithms. The proposed algorithm integrates multiple strategies into the GSA for collaborative optimization, which not only enhances local search capabilities but also increases population diversity, avoids entrapment in local optima, and improves global search performance.

The main contributions of this paper are as follows:

(1) The globally optimal Lévy random walk strategy is designed to enhance the local search capabilities of the algorithm, and the sparrow algorithm follower strategy is used to further improve the exploration of local regions.

(2) A lens-imaging opposition-based learning strategy is proposed to increase population diversity and improve the global search capabilities of the algorithm.

(3) The time and space complexity of the IMSGSA is analyzed, and the efficiency and feasibility of the improved algorithm are verified through experiments.

The rest of the paper is organized as follows: Sect. 2 describes the basic principles of the standard GSA. Section 3 introduces four improvement strategies. Section 4 describes the framework and implementation of the GSA based on multi-strategy cooperative optimization and analyzes its time and space complexity. In Sect. 5, the performance of the improved GSA is verified through experiments. Section 6 applies the modified algorithm to engineering design problems, and Sect. 7 concludes the paper.

Standard GSA

The design of the standard GSA is inspired by the law of universal gravitation. Each particle in the population moves according to the principles of gravitational dynamics, with the particles guiding the swarm during intelligent optimization searches through gravitational interactions. In this context, the position of each particle corresponds to a potential solution to the optimization problem, and each particle is treated as an individual with a corresponding mass. The better the position, the greater the particle’s mass. Under the influence of gravity, particles attract one another and move toward individuals with a larger mass. As this movement continues, the entire swarm eventually converges around the individual with the largest mass, thereby identifying the optimal solution to the problem.

Suppose there are N particles in a D-dimensional search space. The position and velocity of the i-th particle are denoted by \({X_i}=(x_{i}^{1},\ldots,x_{i}^{k},\ldots,x_{i}^{D})\) and \({V_i}=(v_{i}^{1},\ldots,v_{i}^{k},\ldots,v_{i}^{D})\), respectively, where \(x_{i}^{k}\) and \(v_{i}^{k}\) denote the position and velocity components of particle i in dimension k. The standard GSA consists of the following main steps: evaluating the fitness value of each particle, determining the inertial mass and gravitational force of each particle, calculating the acceleration of the particles, and updating the velocity and position of the particles.

Inertial mass calculation

The inertial mass of particle \({X_i}\) is directly related to the fitness of the particle’s position. At iteration t, the inertial mass of particle \({X_i}\) is denoted by \({M_i}(t)\), and it is calculated as follows:
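
In the standard GSA of Rashedi et al., this calculation is commonly written as follows. The intermediate quantity \(m_i(t)\) and the equation number, inferred from the later reference to Eq. (1), are assumptions of this reconstruction:

```latex
\[
m_i(t)=\frac{fit_i(t)-worst(t)}{best(t)-worst(t)},\qquad
M_i(t)=\frac{m_i(t)}{\sum_{j=1}^{N} m_j(t)} \tag{1}
\]
```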

where \(fi{t_i}(t)\) represents the fitness of particle \({X_i}\) at iteration t, while \(best(t)\) and \(worst(t)\) respectively represent the best and worst fitness values of all particles at iteration t.

Calculation of the gravitational dynamics

At iteration t, the gravitational force exerted on particle i by particle j in the k-th dimension is defined as follows:
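
In the standard formulation of Rashedi et al., which is assumed here, this force takes the following form (the equation number is inferred from the surrounding numbering):

```latex
\[
F_{ij}^{k}(t)=G(t)\,\frac{M_i(t)\,M_j(t)}{R_{ij}(t)+\varepsilon}\,\bigl(x_{j}^{k}(t)-x_{i}^{k}(t)\bigr) \tag{2}
\]
```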

where \(\varepsilon\) represents a small constant to prevent the denominator from becoming zero, and \({M_i}(t)\) and \({M_{\text{j}}}(t)\) denote the inertial masses of particles i and j, respectively.

The gravitational constant \(G(t)\) at iteration t is typically determined based on the “true age of the universe.” As the age of the universe increases, the gravitational constant decreases as follows:
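
The usual choice in the original GSA is an exponential decay. It is assumed here because it uses exactly the parameters \({G_0}\), \(\alpha\), and T defined below:

```latex
\[
G(t)=G_0\,e^{-\alpha t/T} \tag{3}
\]
```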

Usually, \({G_0}=100\), \(\alpha =20\), and T is the maximum number of iterations.

The Euclidean distance \({R_{{\text{ij}}}}(t)\) between particle i and particle j is given by:
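
Written out in the notation of this section (the equation number is inferred from the surrounding numbering):

```latex
\[
R_{ij}(t)=\bigl\lVert X_i(t)-X_j(t)\bigr\rVert_2
=\sqrt{\sum_{k=1}^{D}\bigl(x_{i}^{k}(t)-x_{j}^{k}(t)\bigr)^{2}} \tag{4}
\]
```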

The sum of the forces acting on particle \({X_i}\) in the k-th dimension at iteration t is the cumulative effect exerted on it by the other particles, and is given by:
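
Assuming the standard formulation of Rashedi et al., in which only the Kbest particles exert force, the sum reads as follows:

```latex
\[
F_{i}^{k}(t)=\sum_{j\in Kbest,\; j\neq i} rand_j\,F_{ij}^{k}(t) \tag{5}
\]
```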

where \(rand_j\) is a random variable uniformly distributed in [0, 1], which is used to increase the randomness of the net force on the particle. Kbest denotes the set of the K particles with the largest inertial masses, where K decreases linearly with the number of iterations from an initial value of N to a final value of 1.

Speed and position updates

According to Newton’s second law of motion, an object will experience acceleration when subjected to external forces. Similarly, particles will accelerate because of the gravitational forces exerted by other particles. The acceleration of particle i in the k-th dimension is given by the ratio of the applied force to its inertial mass as follows:
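
In symbols, using the net force and inertial mass defined above (the equation number is inferred from the later reference to Eq. (6)):

```latex
\[
a_{i}^{k}(t)=\frac{F_{i}^{k}(t)}{M_i(t)} \tag{6}
\]
```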

During each iteration, the velocity and position of the particle are updated according to the obtained acceleration. The update equations are as follows:
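
In the standard GSA of Rashedi et al., which is assumed here, the two updates are written as follows (the equation numbers are inferred from the later references to Eqs. (7) and (8)):

```latex
\begin{align}
v_{i}^{k}(t+1) &= rand_i\,v_{i}^{k}(t)+a_{i}^{k}(t) \tag{7}\\
x_{i}^{k}(t+1) &= x_{i}^{k}(t)+v_{i}^{k}(t+1) \tag{8}
\end{align}
```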

where \(rand_i\) is a random variable uniformly distributed between 0 and 1 that introduces randomness into the velocity update process.
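
The steps of this section can be sketched in Python as follows. This is a minimal sketch under stated assumptions rather than the authors' implementation: it assumes the standard formulation of Rashedi et al. for a minimization problem, draws the random factor in the velocity update per component, and rounds the linearly decreasing K to the nearest integer. The names `gsa_step`, `eps`, and `rng` are illustrative.

```python
import math
import random


def gsa_step(X, V, fitness, t, T, G0=100.0, alpha=20.0, eps=1e-12, rng=random):
    """Advance positions X and velocities V (lists of lists) by one GSA iteration."""
    N, D = len(X), len(X[0])
    fit = [fitness(x) for x in X]
    best, worst = min(fit), max(fit)  # minimization: smaller fitness is better
    if best == worst:
        M = [1.0 / N] * N  # degenerate case: all particles are equally fit
    else:
        m = [(f - worst) / (best - worst) for f in fit]
        total = sum(m)
        M = [mi / total for mi in m]
    G = G0 * math.exp(-alpha * t / T)  # exponentially decaying gravitational constant
    K = max(1, round(N - (N - 1) * t / T))  # linear decrease from N to 1 (assumed rounding)
    kbest = sorted(range(N), key=lambda i: M[i], reverse=True)[:K]

    # Compute all accelerations from the old positions before moving any particle.
    acc = []
    for i in range(N):
        a = [0.0] * D
        for j in kbest:
            if j == i:
                continue
            R = math.dist(X[i], X[j])
            # a_i = F_i / M_i: the mass M_i cancels, which also avoids dividing by a zero mass.
            coef = rng.random() * G * M[j] / (R + eps)
            for k in range(D):
                a[k] += coef * (X[j][k] - X[i][k])
        acc.append(a)

    for i in range(N):
        for k in range(D):
            V[i][k] = rng.random() * V[i][k] + acc[i][k]
            X[i][k] += V[i][k]
    return X, V
```

Cancelling \({M_i}(t)\) analytically is a design choice of this sketch: the worst particle has zero mass under the normalization above, so dividing the net force by it literally would fail.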

Improvement strategies

Lévy random walk strategy

Lévy flight is a stochastic model of a random walk that follows the Lévy distribution and was proposed by the French mathematician Paul Lévy in the 1930s. Lévy flight is essentially a random step process characterized by step lengths that follow a heavy-tailed Lévy distribution. In most cases, the step lengths in the flight process are small, but occasionally, there are large jumps with sharp directional changes. The foraging trajectories of many animals and insects in nature conform to the Lévy distribution. The random search path calculation for Lévy flight is expressed as follows:
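
A widely used way to generate such steps is Mantegna's algorithm. It is assumed here because it matches the variables µ and \(\nu\) defined below:

```latex
\[
Levy(\beta)\sim\frac{\mu}{\lvert\nu\rvert^{1/\beta}}
\]
```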

where \(Levy(\beta )\) is a Lévy distribution with parameter β, with \(0<\beta <2\), and β is usually set to 1.5. The variable µ follows an \(N(0,\sigma _{\mu }^{2})\) distribution, and \(\nu\) follows an \(N(0,\sigma _{\nu }^{2})\) distribution. Here \({\sigma _\nu }=1\), and \({\sigma _\mu }\) is calculated as follows:
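
In Mantegna's algorithm, which is assumed here, the standard deviation of µ is:

```latex
\[
\sigma_{\mu}=\left[\frac{\Gamma(1+\beta)\,\sin(\pi\beta/2)}
{\Gamma\!\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}}\right]^{1/\beta}
\]
```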

where \(\Gamma\) represents the gamma function.
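
A Lévy step generator of this kind can be sketched with the Python standard library. The sketch assumes Mantegna's algorithm with unit standard deviation for \(\nu\). The function names `levy_sigma_mu` and `levy_step` are illustrative and do not come from the paper.

```python
import math
import random


def levy_sigma_mu(beta=1.5):
    """Standard deviation of mu in Mantegna's algorithm for a given beta in (0, 2)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step: mostly small values, occasionally a large jump."""
    mu = rng.gauss(0.0, levy_sigma_mu(beta))
    nu = rng.gauss(0.0, 1.0)
    while nu == 0.0:  # guard against a zero denominator
        nu = rng.gauss(0.0, 1.0)
    return mu / abs(nu) ** (1 / beta)
```

For β = 1.5, `levy_sigma_mu` evaluates to roughly 0.697.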

The particle position is updated using the Lévy distribution, and is calculated as follows:

where \(\oplus\) is element-wise multiplication and \({X}_{best}^{d}\) is the globally optimal position. Lévy flight provides an effective random step generator that interleaves long and short steps. In the Lévy random walk strategy, individual particles are encouraged to move randomly toward the globally optimal particle, which enhances both the randomness of the algorithm and its local search performance near the optimal value. This approach improves solution accuracy and accelerates the convergence of the algorithm.

Globally optimal solution strategy

The globally optimal solution strategy uses the position information of the best solution within the population to guide individuals toward the optimal solution. In this strategy, a random value is first generated to determine how the update mechanism will operate. If the random value is greater than 0.5, a dimension is randomly selected, and the value of the current individual in that dimension is replaced with the corresponding value of the current optimal solution in that dimension. Otherwise, the position is updated using the Lévy random walk strategy. The mathematical model of the globally optimal solution strategy is as follows:

where \({X}_{i}^{d}(t)\) is obtained using either the Lévy random walk strategy or the new mechanism, and \(\eta\) is the index for the randomly selected dimension. Although the globally optimal solution strategy has a limited global search ability, it significantly enhances local search performance. In this paper, the globally optimal solution strategy is embedded into the position update stage of the GSA to further enhance the local search ability of the algorithm and accelerate its convergence.
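
The branching rule described in this subsection can be sketched as follows. Because the exact Lévy position update is not reproduced here, it is passed in as a hypothetical callable `levy_update(x, x_best)`. The names `global_best_update` and `rng` are likewise illustrative.

```python
import random


def global_best_update(x, x_best, levy_update, rng=random):
    """Return a new position for x using the globally optimal solution strategy."""
    if rng.random() > 0.5:
        # Copy one randomly selected dimension (eta) from the current best solution.
        eta = rng.randrange(len(x))
        new_x = list(x)
        new_x[eta] = x_best[eta]
        return new_x
    # Otherwise fall back to the Levy random walk update supplied by the caller.
    return levy_update(x, x_best)
```

Replacing a single coordinate keeps the move local, which is in line with the observation above that the strategy mainly strengthens local search.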

Sparrow algorithm follower strategy

In the sparrow algorithm, the population is divided into producers and followers according to fitness values. Producers provide the foraging direction for the entire group, lead other sparrows to avoid threats, and provide new search directions for the group. Followers exhibit two main behaviors: those with better fitness values follow and compete for resources with the producers, while others forage in different areas. The position update formula for followers foraging in other areas in the sparrow algorithm is as follows:

where Q is a random number following a normal distribution, \({X}_{w}^{d}(t)\) is the position of the particle with the worst fitness in the population at iteration t, \({X}_{i}^{d}(t)\) is the position of the i-th particle at iteration t, and P is a threshold set such that 1 < P < N. The follower strategy from the sparrow algorithm is introduced into the GSA, where each particle with a fitness rank less than P moves toward the center, while all particles with a rank greater than P move toward the particle with the worst fitness in the current iteration. This approach increases the chance that the particles will explore unknown areas, thereby enhancing the local exploitation ability of the algorithm.

Lens-imaging opposition-based learning strategy

Opposition-based learning is a mechanism that expands the search range of an algorithm by calculating the opposite position of the current position. The main idea of lens-imaging opposition-based learning is to further expand the search range by generating an opposite position based on the current coordinates and the principle of convex lens imaging. In a D-dimensional optimization problem, the opposite solution based on the lens-imaging principle is calculated as follows:
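
The form commonly used in the lens-imaging opposition-based learning literature is given below. It is assumed here because it uses exactly the symbols defined in the following paragraph, and it reduces to ordinary opposition-based learning, \(X_i^{*}=a_i+b_i-X_i\), when k = 1:

```latex
\[
X_{i}^{*}=\frac{a_i+b_i}{2}+\frac{a_i+b_i}{2k}-\frac{X_i}{k}
\]
```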

where \({X}_{i}\) is the component of the current particle in the i-th dimension; \({a}_{i}\) and \({b}_{i}\) are the minimum and maximum values in the i-th dimension of the search space, respectively; \({X}_{i}^{*}\) is the opposite solution of \({X}_{i}\), calculated using the lens; and k is the conditioning factor, which is an important performance parameter. A smaller value of k generates a larger range of opposite solutions, whereas a larger value of k generates a smaller range of opposite solutions. Considering that the GSA requires large-scale exploration in the early iterations and fine-tuning in the later iterations, the approach proposed in this paper uses a dynamic regulation factor that changes with respect to the number of iterations as follows:

where t is the current iteration number and T is the maximum number of iterations of the algorithm. As the iterations of the GSA progress, the diversity of the population decreases, and the population tends to converge near the optimal particle’s position. The lens-imaging opposition-based learning strategy is used to perturb the gravitational search population, enhancing the diversity and increasing the possibility that the algorithm will be able to jump out of local optima.
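
The perturbation can be sketched as follows. Three things are assumed: the common lens-imaging formula \(X^{*}=(a+b)/2+(a+b)/(2k)-X/k\), clipping of the opposite point to the search bounds, and a greedy comparison that keeps whichever point has the smaller fitness. The conditioning factor `k` is passed in by the caller, so the dynamic schedule is not reproduced. The function names are illustrative.

```python
def lens_opposite(x, lower, upper, k):
    """Opposite point of x under the (assumed) lens-imaging formula with factor k."""
    return [(a + b) / 2 + (a + b) / (2 * k) - xi / k
            for xi, a, b in zip(x, lower, upper)]


def lens_obl_step(x, lower, upper, k, fitness):
    """Keep the better of x and its clipped opposite point (minimization)."""
    cand = lens_opposite(x, lower, upper, k)
    cand = [min(max(c, a), b) for c, a, b in zip(cand, lower, upper)]
    return cand if fitness(cand) < fitness(x) else list(x)
```

With symmetric bounds the opposite point is simply \(-X/k\), so a small k throws the candidate far from the current position and a large k keeps it near the center, which matches the behavior described above.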

Improved GSA

Algorithm framework and implementation

The standard GSA uses the K-best particles to guide the movement of all particles. The K-best particles themselves, however, move solely under the gravitational interactions among themselves. If the K-best particles fall into a local optimum, the entire search is likely to converge to that local optimum, resulting in slow convergence, low solution accuracy, and failure to reach the global optimum. To solve these problems, the four strategies described above are introduced, and a GSA based on multi-strategy cooperative optimization is proposed. This algorithm strategically disturbs the population during the optimization process, which not only increases population diversity but also enhances the algorithm’s local search performance, effectively balancing the exploration and exploitation abilities of the algorithm.

The structure of the algorithm is given in Algorithm 1. The core part of the algorithm is the particle position update, which includes the original position update operator of the GSA, the position update using the sparrow algorithm follower strategy, the position update using the globally optimal Lévy random walk strategy, and the position update using the lens-imaging opposition-based learning strategy. When updating the position of the particles, the update strategy is first selected according to the adaptive switching function. In the early stages, the value of the adaptive switching function is relatively small, and hence the algorithm selects the original gravitational position update along with a new strategy to maintain the inherent characteristics of the algorithm. In the later stages, the particles with the top fitness rankings are updated with the globally optimal Lévy random walk strategy operator, which accelerates convergence and strengthens local search performance. Particles with the lowest fitness rankings use the sparrow algorithm follower strategy to update their positions, increasing the opportunities to explore new areas and enhancing local exploitation. Finally, the lens-imaging opposition-based learning strategy is used to perturb and mutate the particle positions, enriching population diversity and improving global search performance. Below, we detail the implementation and structural flow of the algorithm.

As shown in Algorithm 1, the core process of the IMSGSA consists of the following steps:

(1) Set the parameters.

(2) Randomly initialize the population positions and initialize the particle velocity values.

(3) Perform out-of-bounds processing for each particle.

(4) Calculate particle fitness values according to the objective function.

(5) Identify the best and worst fitness values in the population, and calculate the inertial mass of each particle according to Eq. (1).

(6) Calculate the gravitational constant according to Eq. (3).

(7) Calculate particle acceleration according to Eq. (6).

(8) Select a new strategy for each particle according to the adaptive switching function, and update the velocity and position of the particle using Eqs. (7), (8), and (10)–(12).

(9) Update the positions according to the lens-imaging opposition-based learning strategy.

(10) Repeat Steps 3–9 until the termination condition is met.

The model used by the algorithm is relatively simple, and the operators can be modified to a certain extent, making the overall framework of the algorithm easy to implement.

Time and space complexity analyses of the proposed algorithm

Assume that the population size of the IMSGSA is N and the maximum number of iterations is T. The initialization of the population in step 2 requires N operations and the initialization of other parameters requires a constant number of operations. Hence, the time complexity of step 2 is O(N). The time complexity of step 3 is O(N) because N operations are needed to handle particles that cross the boundary. In step 4, N operations are needed to calculate the fitness of the particles, and hence the time complexity of step 4 is O(N). In step 5, it takes one operation to calculate the best fitness value, one operation to calculate the worst fitness value, and 2N operations to calculate the inertial masses. Therefore, the time complexity of step 5 is O(1) + O(1) + O(2N). Calculating the gravitational constant in step 6 takes one operation, and hence the time complexity of step 6 is O(1). In step 7, it takes K operations (with K ≤ N) to calculate the resultant force on each particle and one operation to calculate its acceleration, and hence the time complexity of step 7 is at most O(N*N) + O(N). In step 8, it is assumed that the original gravitational update and the new strategy are calculated for each particle in each iteration, and hence the number of operations is N. Suppose that either the sparrow algorithm follower strategy or the globally optimal Lévy random walk strategy is used to compute the new position in each iteration. The number of operations required to update positions with the sparrow algorithm follower strategy is (N − pNum)*4, and the number of operations required to update positions with the globally optimal Lévy random walk strategy is pNum*6. Therefore, the time complexity of step 8 is O(pNum*6). In step 9, for each particle, one operation is needed to perform the lens imaging transformation, one operation is needed to calculate the fitness value of the new particle, and one operation is needed to compare the fitness values. Hence the time complexity of step 9 is O(N) + O(N) + O(N).
After the above steps, the time complexity of the IMSGSA after T iterations is O(T(9N + N*pNum*6 + N*N)). Because pNum ≤ N, this bound is of order O(T*N*N).

Suppose that the population size of the IMSGSA is N, the maximum number of iterations is T, and the dimensionality of the solution space is D. In the IMSGSA, X[N][D] stores the initial position values of the population, V[N][D] stores the initial velocity values of the population, and BestFit[1][T] stores the globally optimal value in each iteration. Fitness[1][N] in step 4 stores the particle fitness values. In step 5, Worst[1][1] stores the worst fitness value in each iteration, Best[1][1] stores the best fitness value in each iteration, and M[1][N] stores the inertial mass of each particle. In step 6, G[1][1] stores the gravitational constant value; in step 7, a[N][D] stores the particle acceleration values; in step 8, NewX[1][D] stores the updated position of the particle, and NewFit[1][1] stores the updated fitness value of the particle; in step 9, NewBX[N][D] stores the positions of the particles after the lens imaging transformation, and NewBFit[1][N] stores the fitness values of the updated particles. Consequently, the space complexity of the whole algorithm is 4O(ND) + O(T) + 3O(N).

Experiments on benchmark functions

Benchmark functions

In this study, 24 typical complex benchmark functions were selected to evaluate the global search ability of the improved algorithm. Functions F1–F4 and F6–F14 are from the CEC2005 benchmark set, functions F5, F17, F19, and F22 are from the CEC2017 benchmark set, and functions F15, F16, F20, F21, F23, and F24 are from test sets commonly used for intelligent algorithms. The benchmark functions include eight multidimensional unimodal functions and 16 multidimensional multimodal functions. Functions F1–F8 are multidimensional unimodal functions, which have a single global optimum and no local extrema, and are often used to test the convergence speed and solution accuracy of algorithms. In contrast, F9–F24 are multimodal functions that contain multiple local extrema located far from the globally optimal point. Finding the globally optimal solution of a multimodal function is more challenging than it is for a unimodal function. If an algorithm has poor population diversity and insufficient global optimization ability, it is prone to falling into local extrema, leading to premature convergence. Table 1 lists the names, expressions, search space ranges, and globally optimal fitness values (\(f({X}^{*})\)) of these test functions.

Effectiveness analysis of multi-strategy collaborative optimization

To verify the effectiveness of the multi-strategy collaborative optimization in the IMSGSA, an ablation test was carried out. For brevity, we refer to the GSA that uses only the Lévy random walk strategy as LeGSA, the GSA that uses only the sparrow algorithm follower strategy as SSAGSA, the GSA that uses only the lens-imaging opposition-based learning strategy as LoGSA, and the GSA that uses only the globally optimal solution strategy as GbGSA. In the experiment, we tested the convergence of the standard GSA, these four single-strategy variants, and the IMSGSA on the 24 benchmark functions in 30 dimensions. The population size N was set to 50, and the maximum number of iterations was \(\text{Dim} \times 10000/N\), that is, 6000 iterations for 30 dimensions. Each algorithm was tested independently on each function 30 times, and the best fitness value obtained from each test was recorded. The mean (Mean) and the standard deviation (Std) of the best fitness values were used as the performance metrics for each improvement strategy. Rank represents the algorithm ranking: the smaller the mean, the higher the ranking. If two means are the same, the algorithm with the smaller standard deviation ranks higher. If both the mean and the standard deviation are the same, the algorithms share the same ranking. Count represents the total number of times the algorithm ranks first on the test set, avg.Rank represents the average ranking of the algorithm on the test set, and totalRank represents the final ranking of the algorithm. The smaller the average ranking value, the higher the final ranking of the algorithm. Table 2 shows the results of the six algorithms on the benchmark functions, where the top results for each test function are displayed in bold.
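
The ranking rule described above can be sketched in a few lines. This is an illustration rather than the authors' script: the function name `rank_algorithms` is hypothetical, and the choice that tied algorithms share a rank and the next rank is skipped (1, 1, 3, ...) is an assumption, since the text only states that ties receive the same ranking.

```python
def rank_algorithms(stats):
    """Map {name: (mean, std)} to {name: rank}; smaller mean wins, then smaller std."""
    ordered = sorted(stats.items(), key=lambda kv: kv[1])
    ranks, prev, rank = {}, None, 0
    for pos, (name, mean_std) in enumerate(ordered, start=1):
        if mean_std != prev:  # a new (mean, std) pair starts a new rank
            rank, prev = pos, mean_std
        ranks[name] = rank
    return ranks


# Iteration budget used in the ablation test: Dim * 10000 / N.
max_iter = 30 * 10000 // 50
```

For example, two algorithms that both reach a mean and standard deviation of zero on a function share rank 1 under this rule, and both add to their Count.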

The results in Table 2 reveal that for unimodal functions, the various strategies improve the optimization ability of the standard GSA. For functions F1–F6, all algorithms except for the SSAGSA significantly improve solution accuracy, and the IMSGSA converges to the theoretically optimal value. For function F7, although the LeGSA shows limited improvement, the other strategies notably improve the solution accuracy, with the LoGSA and IMSGSA performing similarly. For function F8, the SSAGSA substantially improves solution accuracy, while the other single-strategy algorithms fall into local extrema. However, using the combined strategies, the IMSGSA successfully escapes local extrema and achieves better convergence accuracy and stability, reaching an accuracy of \(10^{-29}\). On complex multimodal functions, the IMSGSA not only finds the theoretically optimal value but also achieves a standard deviation of 0 when solving functions F9, F11, F15–F18, and F20–F24, demonstrating its superiority and stability. For functions F10, F12–F14, and F19, although the IMSGSA does not attain the theoretically optimal value, its optimal value and standard deviation are still better than those of the other algorithms. The other algorithms rank as follows on complex multimodal functions: LoGSA, GbGSA, SSAGSA, LeGSA, and GSA.

The above experiments verify that the strategies proposed in this paper improve the optimization performance of the standard GSA. The multi-strategy collaborative optimization approach effectively enhances population diversity, leverages the strengths of both global and local search, and ensures that the algorithm achieves better solution accuracy and stability.

Comparative analysis with GSA-based algorithms

To comprehensively evaluate the performance of the IMSGSA, this paper compares the proposed algorithm with six GSA-based algorithms. These algorithms encompass a variety of enhancement methods, including adaptive parameter improvements, such as the MACGSA; improvements based on other strategies, such as the LCGSA and SCGSA; combinations with other algorithms, such as the HSSOGSA and GPSOGSA; and improvements based on topological structure, such as the HGSA. These methods are considered to be efficient classic GSA-based algorithms. The parameters of each algorithm in the experiment were set to the default values from the literature, as listed in Table 3. The significance of the experimental results was statistically analyzed using the two-tailed Wilcoxon rank sum test, with a significance level of α = 0.05. The experimental results are marked with the symbols “+,” “−,” and “=” to indicate that the results of the IMSGSA are significantly better than, significantly worse than, or not significantly different from those of the comparison algorithm, respectively. Moreover, “h” indicates the statistical significance of the result. The statistical significance results of the IMSGSA are also summarized in Table 3, where “w” is the total number of wins, “t” is the total number of ties, and “l” is the total number of losses.
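
The marking scheme can be illustrated with a small standard-library sketch of the two-sided rank sum test. It uses the normal approximation without a tie correction, which is an assumption of this sketch; SciPy's `scipy.stats.ranksums` provides the same test. The function names `rank_sum_test` and `mark` are hypothetical, and smaller fitness is taken to be better.

```python
from statistics import NormalDist


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum test via the normal approximation; returns (z, p)."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    n, i, rank_sum_x = len(pooled), 0, 0.0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j (ties share it)
        rank_sum_x += avg_rank * sum(1 for _, g in pooled[i:j] if g == 0)
        i = j
    n1, n2 = len(x), len(y)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    z = (rank_sum_x - mean) / sd
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


def mark(proposed, other, alpha=0.05):
    """Return '+', '-', or '=' for the proposed algorithm versus a competitor."""
    z, p = rank_sum_test(proposed, other)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"  # lower ranks mean smaller (better) fitness values
```

Applied to the 30 best-fitness values of two algorithms on one function, `mark` yields the symbol entered in the comparison tables, and counting the symbols over all functions gives the w/t/l summary.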

To ensure fairness in the experiment, the maximum number of iterations for each algorithm was set to \(\text{maxIter} = 10000 \times \text{dim}/N\), the population size was set to 50, and all algorithms were independently repeated 30 times. The average value of the best fitness and its standard deviation for each algorithm were used as evaluation indices. Tables 4 and 5 present the experimental results for each algorithm for 50 and 100 dimensions, respectively. On the multidimensional unimodal functions, the overall convergence of the IMSGSA is significantly better than that of the other seven algorithms, demonstrating outstanding performance. In particular, for functions F1–F6, the average value and variance of the optimization results match the theoretically optimal values, revealing superior, stable solutions. The optimization performance of the other algorithms on multidimensional unimodal functions is ranked as follows: MACGSA, HGSA, GPSOGSA, SCGSA, HSSOGSA, LCGSA, and GSA. On the multidimensional complex optimization functions, the IMSGSA continues to exhibit excellent overall performance. For multimodal functions F9, F11, F15–F18, and F20–F24, the IMSGSA outperforms the other seven algorithms in terms of mean and variance, achieving the theoretically optimal value on all of these functions and thereby demonstrating exceptional solution capability. For the multimodal functions F10, F12–F14, and F19, although the average fitness and variance of the IMSGSA do not reach the theoretically optimal values, these values remain better than those of the other seven algorithms. In addition, the statistical significance results show that the IMSGSA performs well overall, achieving the best performance across all benchmark functions. Therefore, it can be concluded that the IMSGSA effectively uses multi-strategy collaborative optimization to improve population diversity, reducing the probability of falling into local extrema while ensuring highly accurate solutions.
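As a small worked illustration of the experimental budget, the sketch below evaluates the iteration limit and the two evaluation indices. It assumes that N in the formula denotes the population size and that the standard deviation is the sample standard deviation; neither is stated explicitly here, and the function names are hypothetical.

```python
import statistics


def max_iterations(dim, pop_size, evals_per_dim=10000):
    """Iteration limit maxIter = 10000 * dim / N (N assumed to be the population size)."""
    return evals_per_dim * dim // pop_size


def summarize(best_fitness_per_run):
    """Mean and sample standard deviation of the best fitness over independent runs."""
    return (statistics.mean(best_fitness_per_run),
            statistics.stdev(best_fitness_per_run))
```

With a population of 50, this gives 10000 iterations for 50 dimensions and 20000 iterations for 100 dimensions.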

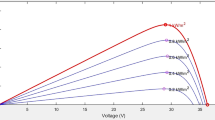

Figure 1 compares the average convergence curves of the eight algorithms on the representative complex benchmark functions when D = 100. Changes in the optimal fitness throughout the iterative process are shown. When solving complex unimodal functions, the curves of the GSA, HGSA, HSSOGSA, LCGSA, GPSOGSA, and SCGSA exhibit a very slow descent from the beginning, and convergence generally stagnates. In contrast, the convergence curves of the IMSGSA and MACGSA maintain a consistent downward trend throughout the process. The IMSGSA converges more quickly and accurately, ultimately reaching the theoretically optimal value.
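For readers who want to reproduce curves of this kind, the following sketch shows one plausible way to build an average convergence curve: take the best-so-far fitness of each run and average it across runs at every iteration. How the authors computed their curves is not specified here, so this procedure and the function names are assumptions.

```python
def best_so_far(fitness_per_iteration):
    """Running minimum of a fitness trace (minimization)."""
    curve, best = [], float("inf")
    for f in fitness_per_iteration:
        best = min(best, f)
        curve.append(best)
    return curve


def average_curve(runs):
    """Average the best-so-far curves of several equally long runs."""
    curves = [best_so_far(r) for r in runs]
    return [sum(vals) / len(vals) for vals in zip(*curves)]
```

Such curves are usually plotted with a logarithmic fitness axis so that late-stage improvements remain visible.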

On function F9, which is a high-dimensional complex function, the IMSGSA quickly converges to the theoretically optimal value in the early stages of optimization. The MACGSA falls into a local optimum, but as the iterations continue, it successfully escapes the local extremum and quickly converges to the theoretically optimal value. The convergence curves of the other algorithms tend to decrease slowly, and the accuracy of their solutions is relatively poor.

For function F11, the IMSGSA converges rapidly to the theoretically optimal value in the early stages. The GSA, HSSOGSA, LCGSA, GPSOGSA, and SCGSA achieve convergence more quickly, and the optimal value is small. The HGSA and MACGSA fall into local optima in the early stages of iteration, and inflection points appear successively in the convergence curves in the later stages. The algorithms avoid local optima and converge to the global optimum.

For function F12, the convergence of the IMSGSA is excellent. The algorithm quickly converges in the beginning and middle stages of the iterations, while the convergence curves of the other seven algorithms briefly decrease in the early stages and then stabilize. These algorithms fall into local extrema and cannot escape them.

For function F13, the convergence performance of the IMSGSA remains excellent, and it quickly converges to the optimal value during the early and middle iterations. The convergence speed and accuracy of the GSA, GPSOGSA, and HSSOGSA are relatively poor, and the remaining algorithms fall into local extrema and converge in the early stages of calculation. Moreover, they do not jump out of these local extrema in the later stages, and their solution accuracy is the lowest.

For function F15, the IMSGSA converges to the theoretically optimal value quickly in the early stages. The MACGSA becomes trapped in a local optimum in the early stages until the convergence curve has an inflection point near the end of the iterative calculations, improving solution accuracy. The other algorithms become stuck in local optima, and the convergence curves remain stable until the end of the iterations.

For function F19, the IMSGSA converges to the globally optimal value in the middle of the iterations, and its convergence speed and accuracy are better than those of the other algorithms. The GSA and SCGSA results decrease throughout the iterations. However, because of their slow speed, they fail to obtain a high solution accuracy within the given number of iterations. The other algorithms fall into local optima in the middle of the iterations.

For function F21, in the early part of the iterations, each algorithm converges at a certain speed. As the iterations continue, the MACGSA, HGSA, HSSOGSA, and GPSOGSA fall into local optima. The convergence speeds of the LCGSA, GSA, and SCGSA are slower than that of the IMSGSA, which converges to the theoretically optimal value at the fastest speed.

Figure 2 shows the convergence of the IMSGSA on the general complex benchmark functions with 100 dimensions. Overall, the convergence speed and accuracy of the IMSGSA remain the best.

Table 6 shows the time consumed by the IMSGSA and the GSA-based algorithms on the 100-dimensional complex benchmark functions. The IMSGSA includes four proposed strategies, which may affect the time consumed during algorithm execution. In contrast to its improvement in performance, the IMSGSA consumes more time and has lower execution efficiency than the other GSA-based algorithms. The time unit in the table is seconds.

The experimental results and analysis reveal that the IMSGSA outperforms the other six GSA-based algorithms in terms of solution accuracy, convergence speed, and optimization stability. However, it takes longer to execute.

Comparison with other intelligent algorithms

To further evaluate the overall performance of the IMSGSA, this study compared the proposed algorithm with nine advanced intelligent algorithms. These algorithms fall into two main categories: swarm intelligence algorithms, which include the HHO, SMA, SO, WSO, ROA, CSA, GJO, and JS, and intelligent algorithms based on physical principles, which are represented by the KOA. Table 7 lists the experimental parameter settings used for each algorithm.

The comparison algorithms based on swarm intelligence are recent optimization algorithms designed to simulate the hunting and foraging behavior of animals. The KOA, proposed in 2023, is a new meta-heuristic algorithm based on physical principles that simulates Kepler’s laws of planetary motion. These algorithms are characterized by strong global search ability, fast convergence speed, and high solution accuracy, making them widely cited and studied, and hence good comparison methods. The maximum number of iterations, population size, number of repetitions, and evaluation indices of each algorithm are consistent with the experimental settings in the previous section. Tables 8 and 9 list the experimental results of each algorithm at 50 and 100 dimensions, respectively. On the multidimensional unimodal test functions, the performance of the IMSGSA is significantly stronger than that of the other nine algorithms with respect to both mean and variance. The optimization performance of the other algorithms on unimodal functions decreases in the following order: HHO, SMA, SO, GJO, JS, ROA, KOA, CSA, and WSO. The unimodal function F8 is the Rosenbrock function, in which the globally optimal point lies at the bottom of a long and narrow parabolic valley. While most optimization algorithms can locate the valley, converging precisely to the optimal point within it is challenging. The IMSGSA demonstrates good convergence accuracy and stable performance on this function. On the complex multimodal functions, the IMSGSA also performs exceptionally well. The mean and variance for some multimodal functions reach the theoretically optimal value, showing that its solution accuracy is less affected by changes in dimensionality, and its ability to find a solution remains robust. The performance of the other algorithms on complex multimodal functions is ranked as follows: HHO, SMA, ROA, SO, GJO, JS, KOA, WSO, and CSA. In addition, the Wilcoxon rank sum test results demonstrate that the IMSGSA generally exhibits superior performance, achieving better optimization results on most functions.
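The valley structure mentioned for F8 can be made concrete with a short sketch. It uses the standard textbook definition of the Rosenbrock function, which is assumed (not confirmed here) to be the form used for F8 in the benchmark set.

```python
def rosenbrock(x):
    """Standard Rosenbrock function; global minimum 0 at x = (1, ..., 1)."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
        for i in range(len(x) - 1)
    )


# A point on the valley floor (x2 = x1^2) has a small value even far from the optimum,
on_valley = rosenbrock([0.5, 0.25])
# while stepping off the valley by one unit in x2 is penalised by a factor of 100.
off_valley = rosenbrock([0.5, 1.25])
```

Because the valley floor is almost flat, an optimizer can reach it easily and then make very slow progress along it toward (1, ..., 1), which is the difficulty described above.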

Figure 3 compares the average convergence curves of the 10 algorithms on the representative complex benchmark functions when D = 100. For the unimodal functions F1 and F2, the GJO and JS algorithms converge to the theoretically optimal value within very few iterations. The SO, HHO, and SMA converge to the theoretically optimal value in the middle to late iterations, while the IMSGSA converges to the theoretically optimal value in approximately 8000 iterations. The KOA, ROA, WSO, and CSA show the worst convergence performance. For the unimodal function F4, although the JS algorithm converges relatively quickly in the early stages, it falls into a local optimum in the middle and late stages. The IMSGSA converges slowly in the early iterations, but its convergence rate increases significantly near the middle stages, eventually converging to the globally optimal value. The HHO, SO, and SMA converge to the theoretically optimal value in the later stages, whereas the other six algorithms fall into local extrema in the later stages, resulting in relatively low convergence accuracy. For the unimodal function F8, although the HHO, ROA, JS, and SMA converge relatively quickly in the early stages, they fall into local optima after 5000 iterations. The IMSGSA converges slowly during the initial iterations but accelerates rapidly after 5000 iterations, ultimately converging to the globally optimal value. The other five algorithms fall into local extrema early in the iterations, with significantly lower convergence accuracy. For the multimodal complex functions F9–F11, the GJO and JS algorithms converge to the theoretically optimal value within a few iterations. The IMSGSA converges to the theoretically optimal value in approximately 2000 iterations. The optimization performance of the other algorithms on these functions follows the order: ROA, HHO, SMA, SO, WSO, KOA, and CSA. For the multimodal complex functions F12, F13, F15, F19, and F21, the IMSGSA converges relatively quickly, reaching the globally optimal value around the middle of the iterations. The other nine algorithms fall into local optima early in the iterations and cannot escape. The convergence curves for the unimodal and multimodal functions show that the convergence speed of the IMSGSA is not always the highest. However, it generally converges to the globally optimal value around the middle of the iterative process.

Figure 4 shows the convergence of the IMSGSA on the general complex benchmark functions in 100 dimensions. The convergence accuracy of the IMSGSA on functions F16, F17, F18, F22, and F24 is tied for first place with that of the GJO, JS, and ROA, and its convergence speed ranks third. On the remaining functions, its convergence speed and accuracy remain relatively good.

Table 10 lists the time consumed by the IMSGSA and other advanced intelligent algorithms on the 100-dimensional complex benchmark functions. The internal structures of the advanced intelligent algorithms are simple, whereas that of the IMSGSA is complex. The IMSGSA performs the worst in terms of execution time. The time unit in the table is seconds.

The experimental results and analysis demonstrate that compared with the other nine advanced intelligent algorithms, the proposed IMSGSA is an effective, robust, and reasonably fast GSA-based algorithm.

Engineering application problems

Benchmark engineering design problems

Three engineering problems, namely the design of multi-disc clutch braking, the minimization of reducer weight, and gear design, were selected for this study to evaluate the proposed method on practical applications. The problem of minimizing the weight of a reducer is a classical engineering design problem. The optimization model comprises a fitness function, seven design decision variables, and eleven inequality constraints. The main goal is to minimize the weight of the reducer while adhering to the specified constraints. The structure and mathematical model can be found in ref. 50. The gear design problem is an unconstrained engineering optimization problem. The optimization model comprises a fitness function and four integer design decision variables. The main goal is to make the gear ratio as close as possible to 1/6.931. The structure and mathematical model are also detailed in ref. 50. The multi-disc clutch braking design problem is a typical engineering design problem. The optimization model consists of a fitness function, five design decision variables, and nine nonlinear inequality constraints. The main goal is to minimize the mass of the multi-disc clutch while satisfying the constraints. The structure and mathematical model are given by Heidari et al. in ref. 54. The mean, variance, optimal value, algorithm ranking, and Wilcoxon rank sum test p-values are used as the performance indicators. Each algorithm was run for 5000 iterations, and the other settings were the same as before.
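To make the gear design objective concrete, the sketch below uses the formulation commonly found in the literature: the squared deviation of the gear ratio from 1/6.931, with four integer tooth counts. The squared-error form, the variable ordering, and the bounds of 12 to 60 teeth are assumptions taken from that common formulation, not from this section, and the helper names are hypothetical.

```python
TARGET_RATIO = 1.0 / 6.931


def gear_ratio_error(teeth):
    """Squared deviation of the gear ratio (x2*x3)/(x1*x4) from 1/6.931."""
    x1, x2, x3, x4 = teeth
    return (TARGET_RATIO - (x2 * x3) / (x1 * x4)) ** 2


def round_to_teeth(x, low=12, high=60):
    """Map a continuous particle position to integer tooth counts in [low, high]."""
    return [min(high, max(low, int(round(v)))) for v in x]


# A design frequently reported for this formulation gives an error near 2.7e-12.
error = gear_ratio_error((49, 16, 19, 43))
```

Rounding the continuous position before evaluation is one simple way to let a continuous optimizer such as the GSA handle the integer variables; other encodings are possible.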

Effectiveness analysis of the strategies

The improved algorithm was subjected to ablation experiments on the three engineering problems. Table 11 compares the results of the IMSGSA and the single-strategy algorithms. The IMSGSA achieved the best results among the compared algorithms on all three engineering problems. Additionally, the average results of the single-strategy algorithms were also superior to those of the GSA. Figure 5 presents the convergence curves of the algorithms. The IMSGSA converges slightly more slowly than the LeGSA on the gear design problem, but it has the fastest convergence speed and the highest accuracy on the other two engineering problems. Although the GSA has a better convergence speed and accuracy than the GbGSA on the gear design problem and outperforms the SSAGSA on the multi-disc clutch braking design problem, its overall convergence speed and accuracy are the worst.

Comparison with other intelligent algorithms

The IMSGSA was also compared with other intelligent algorithms on the three engineering problems. Table 12 lists the results of the IMSGSA alongside those of the 13 other intelligent optimization algorithms. When minimizing the weight of the reducer, the IMSGSA outperforms the others in terms of the best value, average value, variance, and ranking. Fig. 6 shows the convergence curves of each algorithm on this problem, including the overall graph and an enlarged region. The convergence speed of the IMSGSA is the highest. On the gear design problem, the IMSGSA and HGSA achieve the globally optimal values in terms of the best value, average value, and variance, outperforming the other 12 algorithms.

Conclusion

In this study, a new GSA-based algorithm was proposed. The algorithm uses the progression of the iterations to dynamically switch between the original gravitational search strategy, a new strategy, and a super-local search strategy through a probability-based mechanism, allowing it to maintain high search activity throughout the optimization process and thereby improving search accuracy near the globally optimal position. Additionally, the lens-imaging opposition-based learning strategy was used to generate opposite solutions, expanding the search range of the algorithm at different stages, balancing global search and local exploitation capabilities, and comprehensively enhancing the performance of the algorithm. The simulation results on benchmark test functions showed that the improved algorithm had superior solution accuracy, convergence speed, and optimization stability, demonstrating that it is an effective and robust modification of the GSA. The proposed algorithm was then applied to three engineering design optimization problems, further verifying its superiority and feasibility.
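The opposite-solution step can be illustrated with a minimal sketch of lens-imaging opposition-based learning in the form commonly used in the literature, x* = (a + b)/2 + (a + b)/(2k) − x/k, where a and b are the search bounds and k is a scaling factor. The exact formula, the choice of k, and the greedy selection shown below are assumptions, since they are not restated in this section; the function names are hypothetical.

```python
def lens_opposite(x, lower, upper, k=1.0):
    """Lens-imaging opposite of position x within [lower, upper].

    Equivalent to reflecting x about the centre of the interval and
    shrinking the offset by 1/k; k = 1 gives ordinary opposition a + b - x.
    """
    centre = (lower + upper) / 2.0
    return [centre + centre / k - xi / k for xi in x]


def keep_better(x, fitness, lower, upper, k=1.0):
    """Greedy selection (an assumption): keep the opposite only if it is fitter."""
    candidate = lens_opposite(x, lower, upper, k)
    return candidate if fitness(candidate) < fitness(x) else x
```

For example, with bounds 0 and 10 and k = 1, the position 9 maps to 1, which a sphere-type objective would prefer.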

Overall, the main contributions of this paper are as follows:

(1) The proposed IMSGSA integrates the Lévy random walk strategy, globally optimal solution strategy, sparrow algorithm follower strategy, and lens-imaging opposition-based learning strategy into the GSA to improve performance.

(2) Numerical experiments were conducted to compare the IMSGSA with GSA-based algorithms and other advanced intelligent algorithms on multi-dimensional benchmark function sets, revealing the advantages of the IMSGSA in handling optimization problems.

(3) The IMSGSA was applied to three classic engineering problems: multi-disc clutch brake design, reducer weight minimization, and gear design. These results verified the effectiveness and practicality of the IMSGSA in solving real-world optimization problems.
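As an illustration of the Lévy random walk listed among the contributions, the sketch below draws heavy-tailed step lengths with Mantegna's algorithm, a widely used way of generating Lévy-like steps. Whether the IMSGSA uses Mantegna's scheme, the value β = 1.5, the step scale, and the update rule in `levy_walk_around_best` are all assumptions made for this sketch.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """One Lévy-distributed step length: u / |v|^(1/beta)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_walk_around_best(x, g_best, scale=0.01, beta=1.5, rng=random):
    """Hypothetical update: perturb around the global best along (x - g_best)."""
    return [gi + scale * levy_step(beta, rng) * (xi - gi)
            for xi, gi in zip(x, g_best)]
```

Most steps drawn this way are short, which supports fine local search near the best solution, while occasional long jumps help a particle leave a local optimum.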

Although the IMSGSA has strong optimization performance, it also has some limitations, such as a long execution time and low efficiency. Moreover, its performance is affected by the characteristics of the problem, and it is not well suited to every optimization problem. In future work, theoretical research on the algorithm will be strengthened, and more advanced strategies will be considered to further enhance the optimization capability of the IMSGSA, enabling it to handle a variety of optimization problems. In addition, expanding the application of the IMSGSA to more fields will increase our understanding of how the improved algorithm behaves in practical problems such as multi-objective optimization, logistics vehicle scheduling, image segmentation, neural networks, pattern recognition, and path planning.

Data availability

All the data produced or examined during the research are included in this article. The source code for the algorithm described in this manuscript is publicly available on GitHub (https://github.com/yzh2020/IMSGSA).

References

Kirkpatrick, S., Gelatt, C. & Vecchi, M. Optimization by simulated annealing. Science 220, 671–680. https://doi.org/10.1126/science.220.4598.671 (1983).

Rashedi, E., Nezamabadi-Pour, H. & Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 179, 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004 (2009).

Azizi, M., Aickelin, U., Khorshidi, H. A. & Shishehgarkhaneh, M. B. Energy Valley optimizer: A novel metaheuristic algorithm for global and engineering optimization. Sci. Rep. 13, 226. https://doi.org/10.1016/j.cma.2021.114194 (2023).

Abdel-Basset, M., Mohamed, R., Azeem, S. A. A., Jameel, M. & Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 268, 110454. https://doi.org/10.1016/j.knosys.2023.110454 (2023).

Shami, T. M. et al. Particle swarm optimization: A comprehensive survey. IEEE Access. 10, 10031–10061. https://doi.org/10.1109/ACCESS.2022.3142859 (2022).

Heidari, A. A. et al. Harris Hawks optimization: algorithm and applications. Future Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Zhao, S., Zhang, T., Ma, S. & Wang, M. Sea-horse optimizer: A novel nature-inspired meta-heuristic for global optimization problems. Appl. Intell. 53, 11833–11860. https://doi.org/10.1007/s10489-022-03994-3 (2023).

Trojovská, E., Dehghani, M. & Trojovský, P. Zebra optimization algorithm: A new bio-inspired optimization algorithm for solving optimization algorithm. IEEE Access. 10, 49445–49473. https://doi.org/10.1109/ACCESS.2022.3172789 (2022).

Liu, X. et al. Genetic algorithm-based trajectory optimization for digital twin robots. Front. Bioeng. Biotechnol. 9, 793782. https://doi.org/10.3389/fbioe.2021.793782 (2022).

Deng, W., Liu, H., Xu, J., Zhao, H. & Song, Y. An improved quantum-inspired differential evolution algorithm for deep belief network. IEEE Trans. Instrum. Meas. 69, 7319–7327. https://doi.org/10.1109/TIM.2020.2983233 (2020).

Wang, J. J. & Wang, L. A cooperative memetic algorithm with learning-based agent for energy-aware distributed hybrid flow-shop scheduling. IEEE T Evolut Comput. 26, 461–475. https://doi.org/10.1109/TEVC.2021.3106168 (2022).

Atashpaz-Gargari, E. & Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In IEEE Congress on Evolutionary Computation (pp. 4661–4667) https://doi.org/10.1109/CEC.2007.4425083 (IEEE, 2007).

Das, P. K., Behera, H. S., Jena, P. K. & Panigrahi, B. K. An intelligent multirobot path planning in a dynamic environment using improved gravitational search algorithm. Int. J. Autom. Comput. 18, 1032–1044. https://doi.org/10.1007/s11633-016-1019-x (2021).

Xu, H., Jiang, S. & Zhang, A. Path planning for unmanned aerial vehicle using a mix-strategy-based gravitational search algorithm. IEEE Access. 9, 57033–57045. https://doi.org/10.1109/ACCESS.2021.3072796 (2021).

Younes, Z., Alhamrouni, I., Mekhilef, S. & Reyasudin, M. A memory-based gravitational search algorithm for solving economic dispatch problem in micro-grid. Ain Shams Eng. J. 12, 1985–1994. https://doi.org/10.1016/j.asej.2020.10.021 (2021).

Cao, C., Zhang, Y., Gu, X., Li, D. & Li, J. An improved gravitational search algorithm to the hybrid flowshop with unrelated parallel machines scheduling problem. Int. J. Prod. Res. 59, 5592–5608. https://doi.org/10.1080/00207543.2020.1788732 (2021).

Habibi, M., Broumandnia, A. & Harounabadi, A. An intelligent traffic light scheduling algorithm by using fuzzy logic and gravitational search algorithm considering emergency vehicles. Int. J. Nonlinear Anal. Appl. 11, 475–482. https://doi.org/10.22075/IJNAA.2020.4706 (2020).

Thakur, A. S., Biswas, T. & Kuila, P. Binary quantum-inspired gravitational search algorithm-based multi-criteria scheduling for multi-processor computing systems. J. Supercomput. 77, 796–817. https://doi.org/10.1007/s11227-020-03292-0 (2021).

He, J., Wang, T., Li, Y., Deng, Y. & Wang, S. Dynamic chaotic gravitational search algorithm-based kinetic parameter estimation of hepatocellular carcinoma on F-FDG PET/CT. BMC Med. Imaging. 22, 796–817. https://doi.org/10.1186/s12880-022-00742-4 (2022).

Enikeeva, L. V. et al. Gravitational search algorithm for determining the optimal kinetic parameters of propane pre-reforming reaction. React. Kinet Mech. Cat. 132, 111–122. https://doi.org/10.1007/s11144-021-01927-8 (2021).

Ismail, A. M. et al. An improved hybrid of particle swarm optimization and the gravitational search algorithm to produce a kinetic parameter estimation of aspartate biochemical pathways. Biosystems 162, 81–89. https://doi.org/10.1016/j.biosystems.2017.09.013 (2017).

Wang, Y., Tan, Z. & Chen, Y. C. An adaptive gravitational search algorithm for multilevel image thresholding. J. Supercomput. 77, 10590–10607. https://doi.org/10.1007/s11227-021-03706-7 (2021).

Alenazy, W. M. & Alqahtani, A. S. An automatic facial expression recognition system employing convolutional neural network with multi-strategy gravitational search algorithm. IETE Tech. Rev. 39, 72–85. https://doi.org/10.1080/02564602.2020.1825125 (2022).

Garcia-Rodenas, R., Jimenez Linares, L. & Lopez-Gomez, J. A. Memetic algorithms for training feedforward neural networks: an approach based on gravitational search algorithm. Neural Comput. Appl. 33, 2561–2588. https://doi.org/10.1007/s00521-020-05131-y (2021).

Nagra, A. A., Alyas, T., Hamid, M., Tabassum, N. & Ahmad, A. Training a feedforward neural network using hybrid gravitational search algorithm with dynamic multiswarm particle swarm optimization (retracted article). BioMed. Res. Int. 2022, 2636515. https://doi.org/10.1155/2022/2636515 (2022).

Nobahari, H., Alizad, M. & Nasrollahi, S. A nonlinear model predictive controller based on the gravitational search algorithm. Control Appl. Meth. 42, 1734–1761. https://doi.org/10.1002/oca.2757 (2021).

Zhang, X., Wu, X., Zhu, G., Lu, X. & Wang, K. A seasonal ARIMA model based on the gravitational search algorithm (GSA) for runoff prediction. Water Supply. 22, 6959–6977. https://doi.org/10.2166/ws.2022.263 (2022).

Singh, A. & Singh, N. Gravitational search algorithm-driven missing links prediction in social networks. Concurr Comput. Pract. Exp. 34, e6901. https://doi.org/10.1002/cpe.6901 (2022).

Yang, Z., Cai, Y. & Li, G. Improved gravitational search algorithm based on adaptive strategies. Entropy 24, 1826–1857. https://doi.org/10.3390/e24121826 (2022).

Lei, Z., Gao, S., Gupta, S., Cheng, J. & Yang, G. An aggregative learning gravitational search algorithm with self-adaptive gravitational constants. Expert Syst. Appl. 152, 113396. https://doi.org/10.1016/j.eswa.2020.113396 (2020).

Joshi, S. K. Chaos embedded opposition based learning for gravitational search algorithm. Appl. Intell. 53, 5567–5586. https://doi.org/10.1007/s10489-022-03786-9 (2023).

Wang, Y., Yu, Y., Gao, S., Pan, H. & Yang, G. A hierarchical gravitational search algorithm with an effective gravitational constant. Swarm Evol. Comput. 46, 118–139. https://doi.org/10.1016/j.swevo.2019.02.004 (2019).

Wang, Y., Gao, S., Zhou, M. & Yu, Y. A multi-layered gravitational search algorithm for function optimization and real-world problems. IEEE-CAA J. Automatic. 8, 94–109. https://doi.org/10.1109/JAS.2020.1003462 (2021).

Wang, Y., Gao, S., Yu, Y., Cai, Z. & Wang, Z. A gravitational search algorithm with hierarchy and distributed framework. Knowl.-Based Syst. 218, 106877. https://doi.org/10.1016/j.knosys.2021.106877 (2021).

Shehadeh, H. A. A hybrid sperm swarm optimization and gravitational search algorithm (HSSOGSA) for global optimization. Neural Comput. Appl. 33, 11739–11752. https://doi.org/10.1007/s00521-021-05880-4 (2021).

Kumar, S. & John, B. A novel Gaussian based particle swarm optimization gravitational search algorithm for feature selection and classification. Neural Comput. Appl. 33, 12301–12315. https://doi.org/10.1007/s00521-021-05830-0 (2021).

Ozyon, S., Yasar, C. & Temurtas, H. Incremental gravitational search algorithm for high-dimensional benchmark functions. Neural Comput. Appl. 31, 3779–3803. https://doi.org/10.1007/s00521-017-3334-8 (2019).

Xianrui, Y., Xiaobing, Y., Chenliang, L. & Hong, C. An improved parameter control based on a fuzzy system for gravitational search algorithm. Int. J. Comput. Intell. S. 13, 893–903. https://doi.org/10.2991/ijcis.d.200615.001 (2020).

Bala, I. & Yadav, A. Comprehensive learning gravitational search algorithm for global optimization of multimodal functions. Neural Comput. Appl. 33, 7347–7382. https://doi.org/10.1007/s00521-019-04250-5 (2020).

Feng, Z. K. et al. Ecological operation of cascade hydropower reservoirs by elite-guide gravitational search algorithm with lévy flight local search and mutation. J. Hydrol. 581, 124425. https://doi.org/10.1016/j.jhydrol.2019.124425 (2020).

Pelusi, D. et al. Improving exploration and exploitation via a hyperbolic gravitational search algorithm. Knowl.-Based Syst. 193, 105404. https://doi.org/10.1016/j.knosys.2019.105404 (2020).

Acharya, D. & Das, D. K. Optimal coordination of over current relay using opposition learning-based gravitational search algorithm. J. Supercomput. 77, 10721–10741. https://doi.org/10.1007/s11227-021-03705-8 (2021).

Rather, S. A. & Bala, P. S. Lévy flight and chaos theory based gravitational search algorithm for multilayer perceptron training. Evol. Syst. 14, 365–392. https://doi.org/10.1007/s12530-022-09456-y (2023).

Liu, J., Xing, Y., Ma, Y. & Li, Y. Gravitational search algorithm based on multiple adaptive constraint strategy. Computing 102, 2117–2157. https://doi.org/10.1007/s00607-020-00828-3 (2020).

Jiang, J., Jiang, R., Meng, X. & Li, K. SCGSA: A sine chaotic gravitational search algorithm for continuous optimization problems. Expert Syst. Appl. 144, 113118. https://doi.org/10.1016/j.eswa.2019.113118 (2020).

Hashim, F. A. & Hussien, A. G. Snake optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 242, 108320. https://doi.org/10.1016/j.knosys.2022.108320 (2022).

Braik, M., Hammouri, A., Atwan, J., Al-Betar, M. A. A. & Awadallah, M. A. White shark optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl.-Based Syst. 243, 108457. https://doi.org/10.1016/j.knosys.2022.108457 (2022).

Braik, M. S. Chameleon swarm algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Syst. Appl. 174, 114685. https://doi.org/10.1016/j.eswa.2021.114685 (2021).

Chou, J. S. & Truong, D. N. A novel metaheuristic optimizer inspired by behavior of jellyfish in ocean. Appl. Math. Comput. 389, 125535. https://doi.org/10.1016/j.amc.2020.125535 (2021).

Bayzidi, H., Talatahari, S., Saraee, M. & Lamarche, C. P. Social network search for solving engineering optimization problems. Comput. Intell. Neurosci. 8548639 https://doi.org/10.1155/2021/8548639 (2021).

Ahmadianfar, I., Bozorg-Haddad, O. & Chu, X. Gradient-based optimizer: A new metaheuristic optimization algorithm. Inf. Sci. 540, 131–159. https://doi.org/10.1016/j.ins.2020.06.037 (2020).

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 191, 105190. https://doi.org/10.1016/j.knosys.2019.105190 (2020).

Ahmadianfar, I., Heidari, A. A., Gandomi, A. H., Chu, X. & Chen, H. Run beyond the metaphor: an efficient optimization algorithm based on runge Kutta method. Expert Syst. Appl. 181, 115079. https://doi.org/10.1016/j.eswa.2021.115079 (2021).

Heidari, A. A. et al. Harris Hawks optimization: algorithm and applications. Future Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant Nos. 72071207 and 62103425).

Author information

Contributions

Zhanghua Yang: Methodology, Conceptualization, Investigation, Writing—Review & Editing. Yuanli Cai: Methodology, Conceptualization, Software, Writing—Original Draft. Ge Li: Supervision. Peng Wang: Software, Validation. Yu Chen: Writing—Reviewing and Editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, Z., Cai, Y., Li, G. et al. Multi-strategy collaborative optimization of gravitational search algorithm. Sci Rep 15, 29533 (2025). https://doi.org/10.1038/s41598-025-13215-9

DOI: https://doi.org/10.1038/s41598-025-13215-9