Abstract

This study aims to enhance the accuracy and efficiency of MRI-based brain tumor diagnosis by leveraging deep learning (DL) techniques applied to multichannel MRI inputs. MRI data were collected from 203 subjects, including 100 normal cases and 103 cases with 13 distinct brain tumor types. Non-contrast T1-weighted (T1w) and T2-weighted (T2w) images were combined with their average to form RGB three-channel inputs, enriching the representation for model training. Several convolutional neural network (CNN) architectures were evaluated for tumor classification, while fully convolutional networks (FCNs) were employed for tumor segmentation. Standard preprocessing, normalization, and training procedures were rigorously followed. The RGB fusion of T1w, T2w, and their average significantly enhanced model performance. The classification task achieved a top accuracy of 98.3% using the Darknet53 model, and segmentation attained a mean Dice score of 0.937 with ResNet50. These results demonstrate the effectiveness of multichannel input fusion and model selection in improving brain tumor analysis. While not yet integrated into clinical workflows, this approach holds promise for future development of DL-assisted decision-support tools in radiological practice.

Introduction

Magnetic Resonance Imaging (MRI) remains a cornerstone in the diagnosis and management of brain tumors due to its superior soft tissue contrast and non-invasive nature. However, traditional diagnostic workflows rely heavily on radiologists’ expertise, making the process labor-intensive and subject to inter-observer variability. While contrast-enhanced MRI is commonly used to improve lesion visibility, it may be contraindicated in certain patient populations—such as elderly individuals, those with renal impairment, or patients allergic to contrast agents. These limitations highlight the need for accurate and reliable diagnostic approaches based solely on non-contrast MRI sequences, particularly T1-weighted (T1w) and T2-weighted (T2w) imaging.

In recent years, artificial intelligence (AI), especially deep learning (DL), has significantly advanced medical image analysis by improving diagnostic accuracy and operational efficiency. Convolutional Neural Networks (CNNs) have demonstrated excellent performance in tumor classification, while Fully Convolutional Networks (FCNs) have proven effective for tumor boundary segmentation. These AI-based methods enable automated interpretation of complex medical images and offer substantial potential to assist radiologists in clinical decision-making while reducing diagnostic workload.

Despite these technological advances, key challenges persist. Non-contrast MRI inherently provides lower lesion-to-background contrast, complicating precise tumor boundary delineation. Additionally, deep learning models typically require large, well-annotated datasets and often lack interpretability—factors that are crucial for clinical adoption. Overcoming these barriers calls for the development of robust, generalizable AI frameworks capable of extracting meaningful features from non-contrast images while maintaining clinical relevance and transparency.

To address these challenges, this study proposes a deep learning-based approach that fuses T1w, T2w, and their linear average—(T1w + T2w)/2—into a three-channel RGB format to enrich image representation. This multichannel fusion supports the development of a unified DL pipeline for simultaneous tumor classification and segmentation. CNN architectures are applied for classification tasks, while FCN models are used for precise boundary delineation. The proposed framework presents a non-invasive, efficient, and clinically viable solution for patients who cannot undergo contrast-enhanced imaging. The main contributions of this study are as follows:

- A comparative evaluation of multiple CNN and FCN architectures using both internal hospital datasets and external benchmarks (BraTS) under a unified multichannel MRI fusion strategy.
- Integration of comprehensive performance metrics, including Dice coefficient, Intersection over Union (IoU), boundary F1-score, and Kappa index, to rigorously assess model performance in the presence of class imbalance.
- Delivery of practical insights into the impact of RGB fusion schemes and the comparative effectiveness of lightweight versus deep model architectures, an area that remains underexplored in previous literature.

Related works

In recent years, transfer learning has been widely adopted in medical imaging, allowing models pre-trained on large, diverse datasets to be fine-tuned for specific tasks with limited data. This approach has shown promise in improving diagnostic accuracy across various medical conditions, including brain tumors1,2. Additionally, federated learning, which enables model training on distributed datasets without sharing patient data, has emerged as a solution to privacy concerns in medical imaging. This technique not only enhances data security but also improves model generalizability across diverse clinical settings3,4. These advancements align with the goals of our study, which seek to enhance the reliability and applicability of DL in brain tumor diagnostics.

Tumor detection using MRI and deep learning

Magnetic Resonance Imaging (MRI) plays a pivotal role in the diagnosis of brain diseases, particularly tumors, due to its high-resolution anatomical detail and non-invasive nature. Recent studies have demonstrated that integrating machine learning, particularly deep learning (DL), into MRI analysis significantly improves diagnostic efficiency and accuracy5,6. However, reliance solely on automated systems may risk diminishing the value of clinical judgment. Accurate diagnosis not only depends on image interpretation but also on the integration of patient-specific clinical context, which may be overlooked if technology is overly prioritized.

A range of recent works has explored advanced imaging and machine learning methods to enhance brain tumor detection, highlighting both technical advancements and the practical challenges of clinical implementation7,8,9,10. The use of computer-aided diagnosis (CAD) and DL techniques in MRI has been shown to improve early tumor detection, classification, and overall treatment outcomes11,12,13,14,15,16. These approaches enhance precision and speed, but extremely high reported accuracies—approaching 100%—raise concerns about potential overfitting and limited generalizability in real-world settings.

Several studies have reported the effectiveness of 2D CNN models in brain tumor classification using MRI, reflecting substantial progress in neuro-oncology diagnostics17,18,19. Nonetheless, challenges related to generalizing these models across heterogeneous clinical environments and patient populations remain significant. Further, emerging machine learning techniques using MRI and fMRI have enabled tumor detection and disease progression prediction without labeled data, supporting semi-supervised and unsupervised learning frameworks.

CAD systems also demonstrate the ability to detect subtle brain metastases that are frequently missed during routine assessments by leveraging CNNs trained on unique and diverse datasets. To improve multiclass classification performance, many studies have adopted transfer learning strategies, which enhance diagnostic reliability and accelerate model convergence. However, achieving consistent performance across diverse healthcare environments with minimal recalibration remains a critical hurdle20,21,22,23.

CNN-based classification of brain tumors

Convolutional Neural Networks (CNNs), as a subset of deep learning (DL), have demonstrated exceptional performance in brain tumor classification using MRI. A dual CNN architecture based on VGG-16 has been reported to classify three major tumor types—meningioma, glioma, and pituitary—with near-perfect accuracy24. Similarly, a weighted ensemble model that integrates features from VGG19 and other CNN variants has shown superior performance on the Cancer Genome Atlas dataset25. In parallel, 3D residual networks have been developed to effectively identify primary brain metastasis sites, leveraging volumetric data to improve spatial context26.

Advanced CNN-based models increasingly integrate complementary technologies to enhance diagnostic precision. Examples include visual feature extraction pipelines, the use of blockchain for data security, and stacked ensemble frameworks that combine multiple network outputs27,28,29,30,31. While these innovations have shown strong potential, integrating such systems into real-world clinical workflows remains a complex challenge due to issues such as interoperability, computational demands, and clinician acceptance.

Transfer learning techniques continue to play a crucial role in improving CNN performance on limited medical datasets. Pretrained networks fine-tuned for brain tumor classification have shown high diagnostic accuracy32,33. Furthermore, optimized residual learning frameworks incorporating metaheuristic algorithms have further enhanced classification performance34,35. Nevertheless, ensuring consistent effectiveness across diverse imaging protocols and patient demographics remains an ongoing challenge.

Beyond classification, DL models are now being employed for segmentation and tumor grading. Hybrid models combining U-Net and DenseNet architectures have demonstrated improved accuracy in segmenting tumor boundaries36. Federated learning approaches, which preserve data privacy while enabling cross-institutional training, have also shown promise in enhancing model generalizability for automated segmentation tasks26,37. Additionally, ensemble DL systems have proven effective in non-invasive glioma grading using FLAIR MRI sequences38,39,40.

Recent developments also include fully integrated diagnostic pipelines capable of classifying diffuse gliomas from whole-slide images, thus potentially eliminating the need for histological examination41,42. Despite these advancements, widespread clinical adoption still requires rigorous validation to ensure the reliability, robustness, and interpretability of these models across varied clinical settings43,44,45,46.

The current state-of-the-art research

Recent advancements in artificial intelligence (AI) and deep learning (DL) have led to innovative approaches in both medical and industrial domains. Gangopadhyay et al. (2025) introduced a lightweight self-attention-based multi-task deep learning model that demonstrates high efficiency for industrial solar panel monitoring and environmental surveillance, showcasing the broader applicability of multi-task DL frameworks beyond healthcare47.

In the realm of medical AI, Roy et al. emphasized the importance of explainable AI (XAI) for enhancing transparency and trust in healthcare ecosystems, advocating for models that provide interpretable outputs suitable for clinical integration43. Extending this theme, Kabiraj et al. developed a weakly supervised model capable of multi-disease detection and localization in thoracic X-rays, offering both diagnostic accuracy and interpretability without requiring dense annotations31.

Roy et al. (2024) also proposed a forward attention-based deep network tailored for breast histopathology image classification, demonstrating how attention mechanisms can improve performance in high-resolution medical imaging tasks30. Earlier, Roy and Shoghi (2019) presented a pioneering computer-aided tumor segmentation technique using T2-weighted MR images from patient-derived tumor xenografts, laying the groundwork for precision tumor delineation in MRI15.

Together, these studies highlight key trends in state-of-the-art research: the integration of attention mechanisms, the move toward explainable and weakly supervised models, and the adoption of multi-task architectures—all aiming to enhance performance, interpretability, and clinical relevance. These innovations provide critical insights and inspiration for the present study’s focus on RGB-fused MRI-based brain tumor classification and segmentation using CNN and FCN models.

Methods and materials

Ethics approval

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of E-DA Hospital, Kaohsiung, Taiwan (Approval Number: EMRP-110-084, Date of Approval: 5 August 2021). Verbal and written information detailing all experimental procedures was provided to all participants, and written informed consent was obtained prior to their participation and the collection of experimental data.

MR imaging and protocols

The MRI scanners used in this study include a range of GE Signa Excite models operating at 1.5 Tesla (Table 1). The acquired images have a resolution of 512 × 512 pixels and are stored in DICOM format. The imaging parameters include a 90-degree flip angle, a specific absorption rate (SAR) ranging from 0.73 to 1.33 W/kg, and a slice thickness of 5 mm. The repetition time (TR) varies between 380 and 600 milliseconds, while the echo time (TE) ranges from 9 to 23 milliseconds. The scans are obtained with 6.5 mm slice spacing, with series descriptions for Axial T1w and non-contrast sequences, provided by the GE Signa Excite GEMR1 1.5T scanner.

Tumor volume was calculated by manually delineating the tumor boundaries on each 2D MRI slice, a process performed by expert radiologists to ensure accuracy. The total pixel count within the tumor boundary across all slices was then accumulated to represent the tumor’s total size in voxels. To convert this pixel-based count into physical units, specific imaging parameters were applied. The slice thickness was 5 mm, and the pixel spacing in the X and Y dimensions was 0.4688 mm × 0.4688 mm. The voxel volume was determined as the product of the slice thickness and the two in-plane pixel spacings: 5 mm × 0.4688 mm × 0.4688 mm ≈ 1.0989 mm³ per voxel (about 0.0011 mL, since 1 mL = 1000 mm³). The tumor volume in mm³ was obtained by multiplying the total number of tumor pixels by the voxel volume; dividing by 1000 gives the volume in milliliters (mL). This approach estimates tumor size while accounting for the slice thickness and pixel resolution of the MRI images.
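The conversion from pixel counts to physical volume can be sketched as follows. This is a minimal illustration, not the authors' code: the spacing and slice thickness are the values quoted above, while the function names and the per-slice pixel counts are hypothetical.

```python
# Sketch: convert delineated tumor pixel counts into a physical volume.
# Spacing and thickness come from the text; everything else is illustrative.

PIXEL_SPACING_X_MM = 0.4688
PIXEL_SPACING_Y_MM = 0.4688
SLICE_THICKNESS_MM = 5.0
MM3_PER_ML = 1000.0  # 1 mL = 1 cm^3 = 1000 mm^3


def voxel_volume_mm3():
    """Volume of one voxel: in-plane pixel area times slice thickness."""
    return PIXEL_SPACING_X_MM * PIXEL_SPACING_Y_MM * SLICE_THICKNESS_MM


def tumor_volume(pixels_per_slice):
    """Return (volume_mm3, volume_ml) for a list of per-slice tumor pixel counts."""
    total_pixels = sum(pixels_per_slice)
    volume_mm3 = total_pixels * voxel_volume_mm3()
    return volume_mm3, volume_mm3 / MM3_PER_ML


if __name__ == "__main__":
    counts = [1200, 1850, 2100, 1400]  # hypothetical pixel counts per slice
    mm3, ml = tumor_volume(counts)
    print(f"voxel volume: {voxel_volume_mm3():.4f} mm^3")
    print(f"tumor volume: {mm3:.1f} mm^3 = {ml:.2f} mL")
```

Note that the sketch uses the slice thickness (5 mm), as the text does, rather than the 6.5 mm slice spacing; any gap between slices is therefore not counted.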

The enrolled samples

Table 2 presents a summary of brain tumor case demographics, categorizing participants by gender, case counts, and average age. The study includes 203 individuals who underwent MRI brain imaging using T1-weighted (T1w) and T2-weighted (T2w) sequences, with 100 individuals having no tumors and 103 diagnosed with brain tumors. The dataset used in this study consists of 3,038 2D MRI images for each T1w and T2w sequence, comprising 2,238 images without brain tumors and 800 images with tumors for each modality.

The average age of individuals without tumors was 53.2 years, with females averaging 54.9 years and males 52.3 years. In contrast, the average age of individuals with tumors was 57.7 years, with females averaging 55.9 years and males 59.5 years. Among the 13 different tumor types, meningioma (31 cases, avg. age 57.4 years) and metastasis (49 cases, avg. age 63.2 years) were the most common, with a higher prevalence among females. Glioblastoma and astrocytoma, on the other hand, were more frequently observed in males. Some rare tumor types, such as central neurocytoma and neurofibroma, had very few reported cases. Additionally, the age distribution varied among tumor types: glioblastoma cases had an overall average age of 65.5 years, with females averaging 61.0 years and males 79.0 years, while metastasis cases averaged 63.2 years. Table 2 thus provides a demographic overview of the distribution of brain tumors across age groups and genders, which supports further analysis in this study.

This data design provides a comprehensive understanding of the landscape of brain tumors diagnosed through MRI, emphasizing not only the prevalence of tumors but also the diversity of tumor types, their demographic distribution, and the implications for advancing medical imaging and diagnostic technologies. The rarity of certain tumors poses unique challenges, as the limited number of cases can hinder the ability of AI models to learn effectively, potentially affecting diagnostic accuracy. The variability in the frequency of different brain tumor types underscores the need to carefully account for this disparity when developing and training robust AI models for diagnostic imaging.

The fusion of T1w and T2w

Both T1w and T2w images are non-contrast. The images are normalized to a 0–1 double format and then fused by assigning T1w to the red channel, T2w to the green channel, and either their average or repetition to the blue channel (Fig. 1). Additionally, the image resolution for training was set to 300 × 300 pixels, despite the original dataset having 512 × 512 pixels, to optimize computational efficiency.
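The channel assignment described above can be sketched in a few lines. This is a hedged illustration under stated assumptions: min-max scaling stands in for the unspecified normalization to the 0–1 range, plain nested lists are used to keep the example dependency-free, the resize to 300 × 300 is omitted, and the names `normalize01` and `fuse_rgb` are hypothetical.

```python
# Sketch: fuse co-registered T1w and T2w slices into one three-channel image.
# Assumption: min-max scaling to [0, 1]; the paper does not state the exact method.

def normalize01(image):
    """Min-max scale a 2D list of intensities to the range [0, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for constant images
    return [[(v - lo) / span for v in row] for row in image]


def fuse_rgb(t1w, t2w):
    """Return an H x W x 3 nested list with R = T1w, G = T2w, B = (T1w + T2w) / 2."""
    t1n, t2n = normalize01(t1w), normalize01(t2w)
    return [
        [[a, b, (a + b) / 2.0] for a, b in zip(row1, row2)]
        for row1, row2 in zip(t1n, t2n)
    ]


if __name__ == "__main__":
    t1 = [[0, 100], [200, 400]]  # toy 2 x 2 "slices", not real MRI data
    t2 = [[10, 10], [30, 50]]
    for row in fuse_rgb(t1, t2):
        print(row)
```

Other channel orderings, or repeating one modality in the blue channel, only change the list built inside `fuse_rgb`. In practice an array library would replace the nested lists.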

Table 3 summarizes the eight different combinations used to generate 2D three-channel RGB images from T1-weighted (T1w), T2-weighted (T2w), and their linear average (T1w + T2w)/2. Each combination assigns T1w, T2w, or their average to the red, green, and blue channels, respectively, creating diverse RGB configurations for comparative analysis.

Figure 2 visualizes these combinations, demonstrating how variations in channel assignments influence the appearance of fused RGB images. This provides insight into the spatial and textural information encoded in each combination.

Fig. 2. Example visualizations of T1w, T2w, and eight RGB combinations based on T1w, T2w, or their linear average (refer to Table 3).

The CNN models for classification of MRI images

This study employs Convolutional Neural Networks (CNNs) for the classification of MRI brain images using non-contrast T1-weighted (T1w) and T2-weighted (T2w) sequences. The dataset consists of MRI scans categorized into two groups: Tumor and No Tumor. To enhance feature extraction, the images undergo preprocessing steps, including resizing to 300 × 300 pixels, intensity normalization to the (0,1) range, and fusion of T1w, T2w, and (T1w + T2w)/2 into an RGB channel representation.

Several CNN architectures are utilized for classification, including VGG19, VGG16, Darknet19, Darknet53, ResNet50, and ResNet101. These models are trained to distinguish between tumor and non-tumor images, leveraging deep learning techniques for automated feature extraction and decision-making. The training process follows a well-defined set of hyperparameters. The CNN models are trained for 30 epochs with a batch size of 30, using a learning rate of 0.001. To ensure robust validation, a 5-fold cross-validation strategy is implemented. The ADAM optimizer is employed for model optimization, while cross-entropy loss is used as the loss function to enhance classification accuracy. The dataset was split into training, validation, and testing sets with a ratio of 70%, 10%, and 20%, respectively. The performance of the CNN models is evaluated using key classification metrics, including accuracy, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV).
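A 70%/10%/20% partition of the kind described above might be produced as in the sketch below. It is illustrative only: the text does not say whether the split was made per image or per subject, nor how it interacts with the 5-fold cross-validation, so the image-level shuffle, the fixed seed, and the name `split_indices` are all assumptions.

```python
# Sketch: shuffle item indices and cut them into train/validation/test subsets.
import random


def split_indices(n_items, fractions=(0.7, 0.1, 0.2), seed=0):
    """Return (train, val, test) index lists; the test set takes the remainder."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_train = int(round(fractions[0] * n_items))
    n_val = int(round(fractions[1] * n_items))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_indices(3038)  # 3,038 images per modality
    print(len(train), len(val), len(test))
```

If slices from the same subject can land in different subsets, test performance may be optimistic; splitting on subject identifiers instead would avoid that, at the cost of slightly uneven subset sizes.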

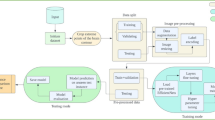

The final classification results are based on the CNN models’ predictive performance, providing insights into their effectiveness in distinguishing brain tumor presence from non-tumor cases. The workflow for MRI brain image classification using CNN models is summarized in Fig. 3, detailing the step-by-step process from image loading and preprocessing to training, evaluation, and result interpretation.

The FCN models for MRI image segmentation

This study employs Fully Convolutional Networks (FCNs) for MRI brain tumor segmentation using non-contrast T1-weighted (T1w) and T2-weighted (T2w) sequences. The dataset includes MRI scans with boundary-labeled tumor regions, covering 13 different brain tumor types. The preprocessing steps involve resizing images to 300 × 300 pixels, intensity normalization to the (0,1) range, and fusing T1w and T2w images into an RGB representation. To optimize performance, hyperparameters are set with a batch size of 20, 100 epochs, and a learning rate of 0.001, utilizing ADAM as the optimizer. The dataset was split into training, validation, and testing sets with a ratio of 70%, 10%, and 20%, respectively. The FCN models are trained using multiple backbone architectures, including Xception, ResNet50, ResNet18, MobileNetV2, and InceptionResNetV2, to achieve precise tumor boundary segmentation.

The segmentation performance is evaluated using key metrics such as global accuracy, mean accuracy, mean intersection over union (Mean IoU), weighted IoU, boundary F1-score (BF), and Dice score. The results obtained from the FCN models provide insights into their effectiveness in segmenting brain tumors from MRI scans. The overall workflow for MRI brain tumor segmentation using FCN models is summarized in Fig. 4, illustrating the step-by-step process from image loading and preprocessing to model training, evaluation, and final segmentation results.

A detailed summary of the selected CNN and FCN architectures is presented in Table 4. These architectures were deliberately chosen to represent a diverse set of network designs, including:

- Lightweight architectures (e.g., MobileNetV2, DarkNet19) optimized for fast inference and low-resource environments,
- Deep residual networks (e.g., ResNet18, ResNet50, ResNet101) known for their strong feature extraction and gradient stability,
- Hybrid or advanced architectures (e.g., InceptionResNetV2, Xception) that leverage inception modules and depthwise separable convolutions for enhanced representational power.

This architectural diversity enables a comprehensive evaluation of how different model complexities and design philosophies impact classification and segmentation performance in brain MRI analysis.

Evaluated performance of CNN and FCN models

The performance of the classification model is evaluated using a confusion matrix, which provides metrics such as accuracy, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV) as defined in Eqs. (1)–(5). These metrics are derived based on true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) (Fig. 5). The Kappa consistency statistic is defined in Eq. (6), where Po represents the observed agreement, and Pe denotes the expected agreement by chance. Additionally, an ANOVA (Analysis of Variance) test was conducted to examine significant differences in performance metrics across image (RGB) types and CNN models. To visually analyze model performance, a radar plot and bar chart were employed to illustrate the distribution of classification accuracy across CNN models. Collectively, these metrics provide a comprehensive assessment of the classification precision and reliability of the presented models for MRI image analysis. A P-value smaller than 0.05 was considered statistically significant. All experiments were conducted on a workstation with an NVIDIA RTX A6000 GPU (48 GB VRAM), 256 GB RAM, running Windows 11 64-bit, using MATLAB R2023b with the Deep Learning Toolbox. To further assess the generalizability of the trained models, external validation was conducted using the BraTS benchmark dataset, as described in Sect. 4.3.
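The confusion-matrix metrics named above follow standard definitions, sketched here for a binary task. The equations themselves are not reproduced in this text, so the code uses the textbook forms; treating "tumor" as the positive class and the example counts are assumptions for illustration.

```python
# Sketch: binary classification metrics and Cohen's kappa from TP, TN, FP, FN.

def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, sensitivity, specificity, PPV, NPV, and kappa as a dict."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # Observed agreement Po equals accuracy; Pe is the agreement expected by
    # chance, computed from the row and column totals of the confusion matrix.
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total ** 2
    return {
        "accuracy": accuracy,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "kappa": (p_o - p_e) / (1 - p_e),
    }


if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper's results.
    for name, value in classification_metrics(tp=90, tn=80, fp=20, fn=10).items():
        print(f"{name}: {value:.3f}")
```

Because kappa discounts chance agreement, it can be noticeably lower than accuracy when the classes are imbalanced, which is one reason to report it alongside accuracy.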

Segmentation performance was evaluated using a comprehensive set of metrics, including global accuracy, mean accuracy, mean intersection over union (Mean IoU), weighted IoU, boundary F1-score (BF), and the Dice score, as defined in Eqs. (7–14). Additionally, the mean absolute error (MAE) between the true tumor volume (mm³) and the volume estimated by the FCN models was calculated to assess segmentation accuracy, as shown in Eq. (15). These metrics evaluate both the overall segmentation precision of the models and their delineation of tumor boundaries.
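Two of the overlap metrics and the volume error can be sketched with their standard definitions. This is an illustration under assumptions: masks are binary nested lists, the scores are computed for the tumor class only (a mean IoU would also average in the background class), and the function names and toy values are hypothetical.

```python
# Sketch: Dice and IoU for one pair of binary masks, plus volume MAE.

def dice_and_iou(pred_mask, true_mask):
    """Return (dice, iou) for two equally sized binary masks (2D lists of 0/1)."""
    inter = pred_sum = true_sum = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            inter += 1 if (p and t) else 0
            pred_sum += 1 if p else 0
            true_sum += 1 if t else 0
    union = pred_sum + true_sum - inter
    # Two empty masks agree perfectly, so both scores default to 1.0.
    dice = 2 * inter / (pred_sum + true_sum) if (pred_sum + true_sum) else 1.0
    iou = inter / union if union else 1.0
    return dice, iou


def volume_mae(true_volumes, estimated_volumes):
    """Mean absolute error between paired true and estimated volumes."""
    errors = [abs(t - e) for t, e in zip(true_volumes, estimated_volumes)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    pred = [[1, 1, 0], [0, 1, 0]]  # toy masks, not real segmentations
    true = [[1, 0, 0], [0, 1, 1]]
    print(dice_and_iou(pred, true))
    print(volume_mae([100.0, 200.0], [110.0, 180.0]))
```

For a single pair of masks the two overlap scores are linked by Dice = 2·IoU / (1 + IoU), so they rank individual segmentations identically; averages over many cases can still differ in ordering.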

Results

Evaluation of RGB fusion and CNN models for tumor classification

Table 5 presents the results of an ANOVA test conducted to evaluate the influence of six CNN architectures and ten image types (T1w, T2w, and eight RGB channel combinations) on various performance metrics, including Sensitivity, Specificity, NPV, PPV, Accuracy, and Kappa. Both RGB fusion and CNN models exhibited statistically significant effects on performance, as indicated by the F-values and P-values. Specifically, the CNN models had the greater impact, with significant effects on Sensitivity (F = 7.91, P < 0.001), Specificity (F = 4.95, P < 0.05), NPV (F = 8.71, P < 0.001), PPV (F = 5.69, P < 0.05), Accuracy (F = 28.74, P < 0.001), and Kappa (F = 29.26, P < 0.001), reflecting their critical role in classification performance. The RGB combinations significantly influenced Specificity (F = 2.11, P = 0.049) and PPV (F = 2.38, P = 0.027), demonstrating the importance of appropriate channel selection in enhancing model performance. These findings, as detailed in the supplementary material, underscore the combined impact of CNN architecture and RGB fusion strategies on tumor classification outcomes.

A comprehensive overview of the performance metrics for single-modality inputs (T1 and T2) and eight RGB channel combinations derived from T1, T2, and their linear average ((T1 + T2)/2) is provided in the supplementary material. Figure 6 presents a radar plot illustrating the performance metrics (Accuracy, Sensitivity, Specificity, Positive Predictive Value (PPV), Negative Predictive Value (NPV), and Kappa index) for 60 different model configurations (i.e., 6 CNN models × 10 image types), combining the two single-modality inputs and eight RGB channel setups with six CNN architectures. Among the models, two standout configurations, M30 (ResNet101 with RGB channels (T1 + T2)/2, T2, T1) and M46 (Darknet53 with RGB channels T2, T1, (T1 + T2)/2), achieved exceptional performance, with all metrics exceeding 0.95. These results highlight the ability of these specific RGB configurations and CNN architectures to leverage multi-modality imaging features effectively. The radar plot visually emphasizes the high performance of these models in comparison to other configurations, demonstrating the critical role of appropriate architecture and channel selection in achieving robust classification results for tumor detection.

Figure 7 presents the Kappa index performance across the T1w, T2w, and eight RGB combinations evaluated using six CNN architectures. The bar chart highlights that certain configurations, such as the sixth combination (average in red, T2w in green, and T1w in blue), achieved higher Kappa scores (e.g., 0.960 with Darknet53), indicating that they retain features relevant for classification. This comparative analysis underscores the importance of selecting appropriate channel assignments to maximize the model’s performance, showcasing the potential of RGB fusion for enhancing multi-modality image analysis in brain tumor detection.

The performance of individual MRI modalities (T1w and T2w) and eight RGB channel combinations derived from these modalities, evaluated across six CNN architectures (VGG19, VGG16, Darknet19, Darknet53, ResNet50, and ResNet101), is detailed in supplementary material. Metrics reported include Accuracy, Sensitivity, Specificity, Positive Predictive Value (PPV), Negative Predictive Value (NPV), and the Kappa index. Among all configurations, Darknet53 achieved the highest Kappa index (0.960) using the sixth RGB combination, which assigns the linear average of T1w and T2w to the red channel, T2w to the green channel, and T1w to the blue channel. This combination also demonstrated outstanding performance, with an Accuracy of 0.983, Sensitivity of 0.992, Specificity of 0.963, PPV of 0.984, and NPV of 0.981, showcasing its superior capability to extract and leverage complementary information from the fused modalities.

Notably, while T1w and T2w performed well individually, achieving Kappa indices of 0.905 and 0.906 with ResNet101 and ResNet50, respectively, the RGB combinations consistently outperformed single modalities across most models. The findings underscore the advantage of fusing T1w and T2w into RGB formats, particularly the sixth combination, which maximizes diagnostic performance by enhancing feature representation. These results support the value of exploring RGB fusion strategies for improving CNN-based brain tumor classification.

Table 6 presents the performance metrics for ten image types, including single-modality images (T1w and T2w) and eight RGB fusion combinations, evaluated using CNN models selected for their maximum Kappa index. Among the single-modality inputs, ResNet101 achieved the highest Kappa (0.906) for T1w (Model M6), and ResNet50 achieved the same Kappa (0.906) for T2w (Model M11). For the RGB fusion combinations, Darknet53 consistently demonstrated superior performance across multiple configurations. Notably, the sixth RGB combination (T2w as red, T1w as green, and T2w as blue) yielded the highest Kappa index of 0.960 with Darknet53 (Model M46), along with outstanding accuracy (0.983), sensitivity (0.992), and specificity (0.963). Similarly, ResNet101 with the third RGB combination achieved a high Kappa index of 0.959 (Model M30). These findings emphasize the effectiveness of RGB fusion in enhancing classification performance compared to single-modality images, and the importance of testing various CNN architectures and RGB configurations to identify setups that maximize diagnostic performance in brain tumor classification.

The ResNet101 and Darknet53 models, trained with the ADAM optimizer, displayed strong and consistent performance across all metrics, with Kappa indices above 0.95. These results support the models’ robustness and accuracy in detecting the presence of tumors on MRI, suggesting their potential as a diagnostic aid. The highest Kappa value of 0.96 indicates an excellent level of agreement. The Darknet53 model, using the sixth RGB combination, proves particularly effective for the following reasons.

1. Enhanced Feature Representation with RGB Channels. The sixth combination assigns the linear average of T1w and T2w to the red channel, which helps to balance the intensity differences and retain complementary spatial information present in both modalities. This fusion enhances the model’s ability to capture subtle differences that may not be evident when using individual modalities.

2. Preservation of Modality-Specific Strengths. Assigning T2w to the green channel leverages its higher sensitivity for detecting water content and fluid-related abnormalities, while assigning T1w to the blue channel highlights anatomical details. This channel distribution preserves the strengths of each modality, making them more accessible for the model to process.

3. Model Compatibility with RGB Input. ResNet101 and Darknet53, as deeper CNN architectures, are designed to efficiently process RGB inputs. The sixth combination aligns well with these architectures’ capacity to learn complex patterns from multi-channel data, leading to improved classification performance.

4. Complementary Information Fusion. By integrating T1w, T2w, and their linear average, the sixth combination provides a richer input space, allowing the model to extract and leverage complementary information. This reduces ambiguity in tumor classification and improves overall diagnostic accuracy.

These factors collectively explain why ResNet101 and Darknet53, when paired with the sixth RGB combination, achieve the highest Kappa indices and excellent performance across other metrics. This combination effectively maximizes the synergy between imaging modalities and the model architecture.
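The channel assignment discussed above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the study's preprocessing code: the function names are hypothetical, min-max scaling stands in for whichever normalization the authors applied, and the default channel order (average, T2w, T1w) follows the description of the sixth combination given in the list above.

```python
import numpy as np


def min_max_normalize(image):
    """Scale an image to [0, 1]; a constant image maps to all zeros."""
    image = np.asarray(image, dtype=np.float64)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)


def fuse_rgb(t1w, t2w, order=("avg", "t2w", "t1w")):
    """Stack T1w, T2w and their average into an H x W x 3 array.

    `order` names the source used for the red, green and blue channel,
    so other fusion types are obtained by permuting or repeating names.
    """
    t1 = min_max_normalize(t1w)
    t2 = min_max_normalize(t2w)
    if t1.shape != t2.shape:
        raise ValueError("T1w and T2w slices must have the same shape")
    sources = {"t1w": t1, "t2w": t2, "avg": (t1 + t2) / 2.0}
    return np.stack([sources[name] for name in order], axis=-1)


if __name__ == "__main__":
    # Tiny synthetic slices, for illustration only.
    t1 = [[0, 2], [4, 8]]
    t2 = [[10, 10], [20, 30]]
    fused = fuse_rgb(t1, t2)
    print(fused.shape)  # (2, 2, 3)
```

Passing a different `order` tuple, for example `("t1w", "t2w", "avg")`, produces another fusion type without changing the rest of the pipeline, which is what makes a side-by-side comparison of the eight combinations straightforward.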

Analysis of segmentation performance across brain tumor types and FCN models

Table 7 presents the results of an ANOVA test evaluating the effects of tumor type and Fully Convolutional Networks (FCNs) on various segmentation performance metrics. Five FCN architectures were assessed for segmenting 13 brain tumor types. The metrics include Global Accuracy, Mean Accuracy, Mean Intersection over Union (IoU), Weighted IoU, Mean Boundary F1 (BF) Score, Dice coefficient, True Volume, Estimated Volume, and MAE. The ANOVA results reveal that certain performance metrics did not show significant differences across brain tumor types. Specifically, Global Accuracy (P = 0.856), Mean Accuracy (P = 0.055), Weighted IoU (P = 0.660), and Estimated Volume (P = 0.091) were not significantly influenced by the type of tumor. These findings suggest that these metrics are less sensitive to tumor-specific variations and are more likely to reflect the general performance of the segmentation models across different tumor categories. This highlights the robustness of these indices in maintaining consistent performance irrespective of tumor type.

The results indicate that the type of tumor had a statistically significant impact on several metrics, including Mean IoU (F = 3.323, P < 0.001), BF Score (F = 2.455, P = 0.005), and Dice (F = 3.073, P < 0.001). In contrast, FCN models demonstrated a highly significant influence on all metrics, including Global Accuracy (F = 88.377, P < 0.001), Mean Accuracy (F = 8.059, P < 0.001), Mean IoU (F = 71.049, P < 0.001), Weighted IoU (F = 71.227, P < 0.001), BF Score (F = 212.725, P < 0.001), and Dice (F = 65.755, P < 0.001). Additionally, FCN models significantly affected volume-related metrics, such as Estimated Volume (F = 5.469, P < 0.001) and MAE (F = 59.281, P < 0.001). These findings highlight the critical role of FCN architecture in determining segmentation performance while also acknowledging the influence of tumor type on certain metrics. This underscores the importance of model selection and tumor-specific considerations in achieving accurate segmentation outcomes.
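To make the F statistics above more concrete, the sketch below computes a one-way ANOVA F value in plain Python. It is a deliberate simplification: the analysis in Table 7 considers two factors (tumor type and FCN model), whereas this example handles a single factor and does not compute a P value. The function name and the sample scores are hypothetical.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of sample groups.

    Each group is a list of metric values (e.g. Dice scores) obtained
    under one level of the factor (e.g. one FCN model).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of the observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


if __name__ == "__main__":
    # Hypothetical scores for three models, for illustration only.
    scores = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
    print(one_way_anova_f(scores))
```

A large F value means that the differences between group means are large relative to the spread within each group, which is the pattern reported for the FCN model factor across all metrics.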

Table 8 presents the performance metrics of five Fully Convolutional Network (FCN) architectures for brain tumor segmentation tasks. Metrics evaluated include global accuracy, mean accuracy, weighted IoU, boundary F1 (BF) score, mean IoU, Dice coefficient, and mean absolute error (MAE). ResNet50 demonstrated the highest global accuracy (0.996), mean accuracy (0.936), weighted IoU (0.882), BF score (0.992), and mean IoU (0.902), with an impressive Dice score of 0.937 and a relatively low MAE of 3.691. MobileNetV2 and ResNet18 also achieved competitive results, particularly excelling in Dice scores (0.928 and 0.932, respectively) and maintaining low MAE values (3.592 and 4.504, respectively). In contrast, InceptionResNetV2 displayed the highest MAE of 26.597, indicating challenges in volume estimation despite relatively strong global accuracy (0.986). Xception performed well in terms of Dice score (0.917) but exhibited the lowest weighted IoU (0.849) and relatively higher MAE (9.513). These findings highlight ResNet50 as the most robust architecture overall, with consistent performance across all metrics, while the other architectures showed varying strengths and weaknesses in segmentation tasks.
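For reference, the two overlap metrics in Table 8 can be sketched as follows for a single binary tumor mask. This is an illustrative implementation only: the function name is hypothetical, masks are given as nested lists of 0/1 values, and the mean IoU in the table is presumably averaged over classes, whereas this sketch scores the tumor class alone.

```python
def overlap_scores(true_mask, pred_mask):
    """Return (dice, iou) for two binary 2D masks of equal size."""
    true_flat = [bool(v) for row in true_mask for v in row]
    pred_flat = [bool(v) for row in pred_mask for v in row]
    if len(true_flat) != len(pred_flat):
        raise ValueError("masks must contain the same number of pixels")

    intersection = sum(t and p for t, p in zip(true_flat, pred_flat))
    true_area = sum(true_flat)
    pred_area = sum(pred_flat)
    union = true_area + pred_area - intersection

    # Two empty masks agree perfectly, so both scores default to 1.0.
    dice = 2 * intersection / (true_area + pred_area) if union else 1.0
    iou = intersection / union if union else 1.0
    return dice, iou


if __name__ == "__main__":
    # Hypothetical masks, for illustration only.
    truth = [[1, 1, 0], [0, 1, 0]]
    prediction = [[1, 0, 0], [0, 1, 1]]
    print(overlap_scores(truth, prediction))
```

For the same pair of masks Dice is never lower than IoU (Dice = 2·IoU / (1 + IoU)), which is consistent with the Dice values in Table 8 exceeding the corresponding mean IoU values.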

The analysis highlights ResNet50’s effectiveness in segmenting brain tumors, achieving exceptional performance in most metrics across tumor types (Table 9). However, the findings also emphasize the need for further refinement in handling rare tumor types and morphologically complex cases, such as metastasis. The inclusion of sample sizes strengthens the reliability of these evaluations, facilitating a deeper understanding of the model’s clinical applicability and areas for improvement.

Table 10 provides a detailed analysis of estimated tumor volumes and the associated MAE for various FCN models, including InceptionResNetV2, MobileNetV2, ResNet18, ResNet50, and Xception, across 13 tumor types. The true tumor volume serves as the baseline for comparison. ResNet50 consistently demonstrated the lowest MAE across most tumor types, indicating its superior accuracy in volume estimation. For instance, ResNet50 achieved an MAE of 1.4 for astrocytoma, 0.3 for central neurocytoma, and 1.0 for glioblastoma. In contrast, InceptionResNetV2 exhibited higher MAE values, such as 28.5 for astrocytoma and 54.1 for ependymoma, suggesting challenges in precise volume estimation. MobileNetV2 and ResNet18 also performed well, with moderate MAE values for various tumor types. Xception, while achieving competitive performance for some tumor types like gangliocytoma (MAE = 0.1), displayed higher errors for larger and more heterogeneous tumors, such as ependymoma (MAE = 15.2). These findings highlight ResNet50 as the most robust model for accurate tumor volume estimation, with other models showing varying degrees of effectiveness depending on tumor type and complexity.
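The volume comparison in Table 10 can be illustrated with a short sketch that converts a stack of binary masks into a volume and then computes the MAE between true and estimated volumes. The function names, the `voxel_volume` parameter, and the example numbers are assumptions made for illustration; the volume unit used in the study is not restated here.

```python
def mask_volume(mask_slices, voxel_volume=1.0):
    """Volume of a segmented region given as a stack of binary 2D masks.

    `voxel_volume` is the physical volume of one voxel (hypothetical
    parameter; 1.0 simply counts voxels).
    """
    voxel_count = sum(
        1 for mask in mask_slices for row in mask for value in row if value
    )
    return voxel_count * voxel_volume


def mean_absolute_error(true_volumes, estimated_volumes):
    """Average absolute difference between paired volume measurements."""
    if len(true_volumes) != len(estimated_volumes):
        raise ValueError("volume lists must have the same length")
    if not true_volumes:
        raise ValueError("at least one case is required")
    errors = [abs(t - e) for t, e in zip(true_volumes, estimated_volumes)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical two-slice mask and volumes, for illustration only.
    stack = [[[1, 0], [1, 1]], [[0, 0], [1, 0]]]
    print(mask_volume(stack, voxel_volume=0.5))
    print(mean_absolute_error([10.0, 20.0, 30.0], [12.0, 17.0, 30.0]))
```

Because MAE is expressed in the same unit as the volumes, a model can reach a high Dice score and still show a large MAE on big lesions, which helps explain why the two metrics rank the models differently for some tumor types.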

This study demonstrates the effectiveness of five FCN models (InceptionResNetV2, MobileNetV2, ResNet18, ResNet50, and Xception) in brain tumor segmentation using a diverse dataset of 13 tumor types. ANOVA results reveal that the FCN model architecture significantly impacts all performance metrics, including global accuracy, mean IoU, weighted IoU, boundary F1 score, and Dice coefficient, while tumor type influences only select metrics such as Dice and mean IoU. ResNet50 stands out as the most robust model, achieving the highest Dice score and demonstrating consistent performance across all metrics. Furthermore, the model demonstrates superior volume estimation capabilities, with the lowest MAE values across most tumor types. In this study, ResNet50 outperformed other FCN architectures in segmentation tasks due to several key factors.

1. Deep Architecture with Residual Connections: ResNet50’s deep architecture incorporates residual connections, which help mitigate the vanishing gradient problem in deep networks. This allows the model to learn more complex patterns and capture finer details in tumor boundaries during segmentation.

2. Balanced Depth and Complexity: The 50-layer architecture strikes a balance between depth and computational efficiency, providing sufficient capacity for feature extraction without overfitting or excessive computational demands.

3. Effective Feature Representation: ResNet50 is particularly adept at extracting hierarchical features, making it well-suited for segmentation tasks that require distinguishing between subtle differences in intensity and texture, such as those in T1w and T2w MRI sequences.

4. Optimization and Regularization: The training process, which included advanced optimization techniques like ADAM, coupled with data augmentation (rotation, flipping, intensity adjustments), helped ResNet50 generalize better to the test data.

These factors collectively contributed to ResNet50’s superior performance in FCN-based segmentation tasks in this study. These findings underline the importance of model selection and the need for tailored approaches to tackle tumor-specific challenges, particularly for heterogeneous and rare tumor types. This research provides a strong foundation for advancing AI-based segmentation in clinical applications, offering insights for further model optimization and data integration.

External validation on the BraTS benchmark dataset

External validation was conducted using the publicly available BraTS dataset (https://www.kaggle.com/datasets/awsaf49/brats20-dataset-training-validation, accessed on 09/06/2025). Given the large sample size, this evaluation demonstrates the model’s robust generalization capability and aligns with competitive benchmarks reported in the BraTS challenge literature. The dataset consisted of 24,422 MRI slices with tumor presence and 26,425 slices without tumors, used for segmentation and classification tasks. To enhance tumor visualization, eight RGB fusion types (Type 1–Type 8) were generated by combining T1-weighted (T1w), T2-weighted (T2w), and the average of T1w and T2w images (Fig. 8). The leftmost image in the figure illustrates the ground truth label of the edema region, which served as the reference for both CNN-based classification and FCN-based segmentation. Each RGB type applies a unique channel assignment strategy, enabling a comparative assessment of how different image combinations influence model performance and feature representation.

Table 11 compares the classification performance of eight different RGB fusion types combining T1-weighted (T1w), T2-weighted (T2w), and their average. Each fusion type was paired with a specific CNN model and optimizer. The results show that Type 7 (Avg, T1w, T2w) using VGG19 + SGDM achieved the highest classification accuracy (0.979) and Kappa (0.956), indicating the most reliable and consistent performance. Models using DarkNet19 and ResNet101 also demonstrated strong performance in selected configurations.

Table 12 presents the Dice coefficients of five FCN models evaluated across eight RGB fusion types. ResNet18 and ResNet50 consistently achieved high Dice scores, with ResNet18 scoring 0.959 on Type 8 and 0.948 on Type 3, indicating robust tumor segmentation performance. Xception performed strongly on Types 2, 3, and 4 (Dice > 0.90), while InceptionResNetV2 showed the most stable performance across all types, albeit at a moderate level. MobileNetV2 showed good results for Types 2–4, but lower performance on Types 5–8. The findings highlight that both model architecture and RGB channel composition significantly influence segmentation accuracy. The fully convolutional network (FCN) model achieved a Dice coefficient of 0.839 for the Whole Tumor (WT) region, indicating strong segmentation performance.

Figure 9 compares five Fully Convolutional Networks (FCNs), namely InceptionResNetV2 (FCN 1), MobileNetV2 (FCN 2), ResNet18 (FCN 3), ResNet50 (FCN 4), and Xception (FCN 5), applied to segmentation tasks across eight RGB types from the BraTS2020 dataset. Each row represents a specific RGB type (Type 1 to Type 8), and each column corresponds to the output from one of the FCNs. The results indicate that ResNet50 performs well for Type 3, and both ResNet18 and ResNet50 perform well for Type 8.

Discussion

Misclassification using CNN models

Table 13 presents a detailed summary of misclassification counts across various brain tumor categories and normal cases, using six CNN architectures: VGG19, VGG16, ResNet50, ResNet101, Darknet19, and Darknet53. Among all categories, meningioma and metastasis exhibited the highest number of misclassifications, with 109 and 144 errors respectively. This suggests that these tumor types pose particular challenges for CNN-based classification, likely due to their heterogeneous appearance and overlapping features with other classes.

A total of 43 false positives were recorded for the “absence of tumor” category, highlighting the difficulty models face in confidently distinguishing non-tumorous cases, which could lead to over-diagnosis in clinical settings. Model-wise, VGG16 demonstrated notably high misclassification counts, especially for meningioma (59) and metastasis (61), which may indicate issues with model sensitivity or a tendency to overfit. In contrast, ResNet50 and ResNet101 achieved more balanced results, though they too struggled with the most challenging tumor types.

Darknet53 showed the lowest overall misclassification count (16) and particularly strong performance on metastasis classification (only 10 misclassified out of 413). Its deeper 53-layer architecture likely enables superior feature extraction, especially when trained on RGB-fused inputs derived from T1w and T2w images. This highlights its suitability for handling complex tumor classification tasks.

Misclassification rates were lower for rare tumor types such as neurofibroma, neuroganglioblastoma, and medulloblastoma, likely due to the limited number of cases, although this also limits statistical conclusions for those classes. Although some CNN architectures, particularly Darknet53, performed well, the results emphasize the need for further model optimization. Incorporating additional MRI modalities such as FLAIR, DWI, or proton density imaging may enhance model sensitivity, especially for challenging tumor subtypes. Future work may also benefit from attention-based mechanisms or ensemble learning strategies to further reduce misclassification rates.
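A per-class tally of the kind summarized in Table 13 can be produced with a few lines of Python. The sketch below is illustrative: the function name and the label strings are hypothetical, and an error is attributed to the true class of the misclassified image, which is one of several possible conventions.

```python
from collections import Counter


def misclassification_counts(true_labels, predicted_labels):
    """Count misclassified samples per true class."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    errors = Counter()
    for truth, prediction in zip(true_labels, predicted_labels):
        if truth != prediction:
            errors[truth] += 1
    return errors


if __name__ == "__main__":
    # Hypothetical labels, for illustration only.
    truth = ["meningioma", "metastasis", "normal", "metastasis", "metastasis"]
    predicted = ["metastasis", "metastasis", "normal", "normal", "meningioma"]
    for tumor_type, count in misclassification_counts(truth, predicted).most_common():
        print(tumor_type, count)
```

Raw counts of this kind depend on class size, so dividing each count by the number of samples in that class gives a rate that is easier to compare between frequent and rare tumor types.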

Figure 10 illustrates representative misclassification cases for four brain tumor types—Neurofibroma, Gangliocytoma, Meningioma, and Metastasis—using T1-weighted (T1w), T2-weighted (T2w), and fused RGB modalities. Each row displays the original T1w and T2w images along with the corresponding RGB fusion image for a specific tumor type or normal case.

Each tumor exhibits unique imaging characteristics that contribute to misclassification:

- Neurofibroma: Low contrast and subtle signal differences reduce tumor conspicuity, making detection difficult across modalities.

- Gangliocytoma: Exhibits heterogeneous texture and poorly defined boundaries, especially in T2w and fused images.

- Meningioma: Often misclassified due to intensity overlap between tumor and adjacent hard/soft tissues, particularly near the skull base.

- Metastasis: Presents as small, multifocal lesions with indistinct borders, leading to confusion in both single and fused modalities.

Although RGB fusion enhances feature representation by combining modality-specific contrasts, it may also introduce visual artifacts, texture homogenization, or signal dilution, which can obscure critical diagnostic cues. These findings underscore the importance of tailored preprocessing, robust model design, and modality-aware learning strategies to improve diagnostic performance, particularly in challenging tumor types.

Table 14 presents a summary of misclassification factors for five categories—Neurofibroma, Gangliocytoma, Meningioma, Metastasis, and Absence of Tumor—across three imaging modalities: T1-weighted (T1w), T2-weighted (T2w), and Fused RGB images. T1w images commonly struggled with low contrast, poor lesion visibility, and subtle anatomical features, particularly in rare or small tumor types. T2w images offered improved soft tissue contrast and better fluid sensitivity, but often suffered from blurry boundaries, overestimation of lesion size, or misinterpretation of normal hyperintensities.

Although the RGB fusion modality aims to combine complementary features from both T1w and T2w, it sometimes resulted in texture homogenization, loss of boundary sharpness, or overemphasis on irrelevant features, which contributed to misclassification. For example, neurofibroma misclassification was attributed to subtle signals being masked by fusion blending, while gangliocytoma exhibited reduced clarity due to heterogeneous textures. Meningioma was affected by overlapping signals in skull base regions, and metastasis by poorly defined, multifocal lesions. In non-tumor cases, normal anatomical structures were sometimes mistaken for lesions due to blended textures in the fused image. These observations highlight the need for modality-aware model design, adaptive preprocessing, and enhanced feature extraction techniques that account for the inherent limitations and artifacts specific to each modality.

Table 15 presents a comparative analysis of recent studies (2022–2024) focused on brain tumor classification using deep learning (DL) techniques. The reviewed studies address different classification tasks, including multi-class differentiation among glioma, meningioma, and pituitary tumors, as well as binary classification between tumor and non-tumor cases. Sample sizes vary across studies, ranging from 3,038 to 5,712 MRI images. A variety of deep learning approaches have been employed, including transfer learning-based CNNs, vision transformer architectures, and hybrid models combining convolutional features. Reported classification accuracies are consistently high across studies. Notably, B. Vimala et al. achieved the highest reported accuracy of 0.991 using a transfer learning-based model. The current study also demonstrates competitive performance, achieving an accuracy of 0.983 using a transfer learning-based DL approach for binary classification between tumor and non-tumor cases.

The results highlight the effectiveness of deep learning—particularly transfer learning methods—in brain tumor classification. Minor differences in accuracy across studies can be attributed to factors such as dataset composition, task complexity, model architecture, and modality inclusion. This comparison reinforces the relevance of the proposed method in line with state-of-the-art research.

Segmentation for brain tumors

This study utilized non-contrast T1-weighted (T1w) and T2-weighted (T2w) MRI scans to segment brain tumors using fully convolutional network (FCN) models. However, both modalities exhibit intrinsic limitations in tumor boundary delineation. T1w images often lack sufficient contrast to clearly separate tumors from surrounding brain tissues—unless specific features like hemorrhage are present—while T2w images may struggle to differentiate tumors from peritumoral edema, as both appear similarly hyperintense. Among the evaluated models, ResNet50, ResNet18, and MobileNetV2 demonstrated strong performance, though subtle differences were observed in accuracy and error magnitude. Notably, MobileNetV2 produced the most accurate results in several cases, showing both high Dice similarity and low Mean Absolute Error (MAE). On the other hand, ResNet50 performed well but exhibited slightly higher MAE in some cases.

Tumor types such as ependymoma and oligodendroglioma consistently showed high segmentation errors across all models, likely due to their irregular shapes, diffuse margins, or limited training data. In contrast, astrocytoma and medulloblastoma yielded relatively lower errors, suggesting that some tumor types with more well-defined features are more amenable to accurate segmentation.

These findings offer valuable insights into the effectiveness of various FCN models for estimating tumor volumes—a critical factor in treatment planning, surgical guidance, and follow-up monitoring. In particular, MobileNetV2 appears to be a promising candidate for clinical deployment where precision in volumetric estimation is essential. However, the performance variability across tumor types underscores the need for tumor-specific model optimization and adaptive learning strategies.

Figure 11 presents a comparative visual analysis of segmentation accuracy and MAE for five FCN models—InceptionResNetV2, MobileNetV2, ResNet18, ResNet50, and Xception—applied to meningioma segmentation. Manual tumor boundaries (white contours) are compared with model-predicted segmentations (yellow contours). The Dice coefficient reflects the spatial overlap between these boundaries, while the MAE quantifies the difference in volumetric estimation.

The models MobileNetV2 (Dice = 0.948, MAE = 0.8), ResNet18 (Dice = 0.939, MAE = 2.6), and ResNet50 (Dice = 0.932, MAE = 3.1) achieved the most accurate and consistent results. These scores indicate high segmentation fidelity, particularly in capturing the true extent and shape of meningiomas. In contrast, InceptionResNetV2 and Xception exhibited lower performance, suggesting reduced reliability in boundary localization or volume estimation for this tumor type.

Figure 12 presents a visual comparison of segmentation results from five FCN models—InceptionResNetV2, MobileNetV2, ResNet18, ResNet50, and Xception—on a challenging axial MRI slice containing multiple small metastatic tumors. Ground truth annotations are shown in white, and model-predicted boundaries are overlaid in yellow. Among the models, ResNet18 and ResNet50 demonstrate the most accurate boundary alignment with the ground truth, effectively capturing the small lesion contours with minimal false positives. In contrast, InceptionResNetV2 and Xception tend to produce over-segmented, fragmented, or diffuse boundaries, indicating reduced localization precision. MobileNetV2 offers balanced performance, with reasonable coverage and minimal boundary distortion.

This comparative visualization underscores the challenges of segmenting small and scattered metastatic lesions and highlights significant variability in model performance. While Dice scores provide a general measure of overlap, these results emphasize the importance of incorporating boundary-specific metrics—such as Mean Boundary F1-score or Hausdorff Distance—to better assess segmentation quality, especially in clinically critical cases involving subtle or multifocal tumor presentations.
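As an illustration of a boundary-specific metric, the following sketch computes the symmetric Hausdorff distance between two contours represented as lists of (x, y) points. It is a brute-force version intended for small contours, with a hypothetical function interface; it is not the evaluation code used in this study.

```python
import math


def directed_hausdorff(points_a, points_b):
    """Largest distance from any point in A to its nearest point in B."""
    return max(min(math.dist(p, q) for q in points_b) for p in points_a)


def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    if not points_a or not points_b:
        raise ValueError("both contours must contain at least one point")
    return max(
        directed_hausdorff(points_a, points_b),
        directed_hausdorff(points_b, points_a),
    )


if __name__ == "__main__":
    # Hypothetical contour points, for illustration only.
    manual = [(0, 0), (0, 1)]
    predicted = [(0, 0), (3, 4)]
    print(hausdorff_distance(manual, predicted))
```

Unlike Dice, which averages over the whole region, the Hausdorff distance is driven by the single worst boundary deviation, so one stray predicted fragment far from a small metastatic lesion is penalized heavily.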

Table 16 presents a comparative analysis of recent studies on brain tumor segmentation performance using deep learning (DL) models, with Dice score serving as the primary evaluation metric. The studies vary in terms of dataset size, model architecture, and segmentation methodology. For instance, S. Rohilla and S. Jain (2023) utilized ResNet50 on a dataset of 110 samples and achieved a Dice score of 0.893. Y. Chen et al. (2023) applied an ensemble-based deep learning approach, resulting in a Dice score of 0.854 on 369 images. R. Li et al. (2023), using a general deep network, reported a lower Dice score of 0.810 with 649 samples.

Among all studies, X. Yan et al. (2023) achieved the highest Dice score of 0.951, though it was based on a relatively small dataset of 133 images, which may limit generalizability. In contrast, J. Yuan (2024) used a more advanced segmentation framework—Mask R-CNN with a channel attention (CA) module—achieving a Dice score of 0.897 across a larger dataset of 1,066 samples. In comparison, the current study, using ResNet50 on 800 samples, achieved a high Dice score of 0.937, demonstrating both strong segmentation accuracy and scalability. These results highlight the effectiveness of ResNet50-based architectures and support the model’s applicability in real-world clinical segmentation tasks.

Generalization assessment of CNN and FCN models using BraTS data

To validate model generalizability, both the trained CNN classification models and FCN segmentation models were applied to the external BraTS2020 dataset, which contains a large number of diverse brain tumor cases. For classification, eight RGB fusion types—created by combining T1w, T2w, and their average—were used as input across multiple CNN architectures. DarkNet19, ResNet101, and VGG19 models demonstrated high accuracy, with the highest recorded accuracy of 0.958 and Kappa value of 0.913 (Type 1 using DarkNet19 + ADAM). This confirms that CNNs trained on hospital datasets can still effectively distinguish tumor from non-tumor cases in external benchmark data.

For segmentation, five FCN models (InceptionResNetV2, MobileNetV2, ResNet18, ResNet50, and Xception) were evaluated using Dice coefficients across the same eight RGB fusion types. ResNet50 and ResNet18 achieved the best overall performance, with Dice scores up to 0.959 on Type 8 fusion (ResNet50) and 0.948 on Type 3 (ResNet18), highlighting their strong tumor localization capabilities. Xception also performed well on selected types, though with more variability across fusion schemes. Notably, fusion type and model architecture both significantly impacted performance.

Collectively, these results demonstrate that the proposed deep learning models maintain strong classification and segmentation performance when applied to a large, independent dataset, further supporting their robustness and clinical applicability.

Conclusions

This study presents a unified deep learning framework for brain tumor classification and segmentation using non-contrast MRI, specifically T1-weighted (T1w), T2-weighted (T2w), and their linear average fused into a three-channel RGB format. By applying CNN architectures for classification and FCN models for segmentation, the proposed approach addresses key clinical challenges—particularly in patients who cannot undergo contrast-enhanced imaging.

Through a comprehensive comparative analysis involving both internal hospital datasets and the external BraTS benchmark (as detailed in Sect. 4.3 and 5.3), the study achieved high classification accuracy (98.3%) and a Dice score of 0.937 for tumor boundary delineation. These results confirm the generalizability and robustness of the models across datasets of varying characteristics.

Limitations and future works

While the proposed deep learning (DL) framework demonstrates strong performance for brain tumor classification and segmentation using non-contrast MRI, several limitations remain that inform future directions.

First, although external validation using the BraTS dataset has been conducted, further evaluation on larger, multi-institutional datasets, particularly those encompassing diverse scanner types, protocols, and patient demographics, is needed to robustly assess generalizability and clinical applicability.

Second, this study focuses on established CNN and FCN architectures. While these models provide a stable and interpretable foundation, emerging approaches such as Vision Transformers, UNet++, attention-based networks, and 3D UNet architectures offer improved feature learning and spatial modeling capabilities. These modern architectures were not included in the current study to maintain architectural consistency, but will be explored in future work to enhance performance and robustness.

Third, the current model outputs are primarily quantitative (e.g., class labels, segmentation masks), with limited direct integration into clinical workflows. To bridge this gap, future versions of the framework will incorporate confidence maps, gradient-weighted class activation maps (Grad-CAM), and other explainable AI techniques to assist clinicians in understanding the rationale behind predictions. These visual tools will allow experts to assess the spatial relevance and reliability of DL decisions. Moreover, we plan to collect expert radiologist feedback to refine model outputs and user interfaces, ensuring practical usability in clinical decision support systems (CDSS).

Fourth, while the study addresses class imbalance using techniques such as SMOTE, future work will evaluate the use of cost-sensitive learning and advanced sampling strategies to minimize synthetic noise and enhance learning in rare tumor classes.

Planned future enhancements include:

- Fusion Strategy Analysis: Comparison of performance across single-modality input, RGB-based fusion, and full multi-modality fusion.

- Hyperparameter Optimization: A comprehensive ablation study to assess the effects of learning rate, batch size, resolution, and regularization techniques.

- Interpretability Tools: Deployment of visualization tools (e.g., CAMs, saliency maps) for enhanced model transparency and radiologist trust.

- Ethical AI Practices: Implementation of federated learning to protect patient privacy and bias auditing across demographic subgroups to ensure fairness.

- Clinical Integration: Designing an interactive dashboard or viewer interface that integrates model outputs, confidence scores, and visual cues to support real-time radiological decision-making.

In summary, these directions aim to build a more accurate, explainable, and ethically sound AI system that supports clinician interpretation, enhances diagnostic workflows, and facilitates safe deployment in real-world neuroimaging applications.

Data availability

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to restrictions, e.g., privacy and ethical concerns.

References

Rashidi, G. et al. The potential of federated learning for self-configuring medical object detection in heterogeneous data distributions. Sci. Rep. 14 (1), 23844. https://doi.org/10.1038/s41598-024-74577-0 (2024).

Mu, J. et al. Explainable federated medical image analysis through causal learning and blockchain. IEEE J. Biomed. Health Inform. 28(6), 3206–3218. https://doi.org/10.1109/JBHI.2024.3375894 (2024).

Manthe, M., Duffner, S. & Lartizien, C. Federated brain tumor segmentation: an extensive benchmark. Med. Image Anal. 97, 103270. https://doi.org/10.1016/j.media.2024.103270 (2024).

Ahamed, M. F. et al. A review on brain tumor segmentation based on deep learning methods with federated learning techniques. Comput. Med. Imaging Graph. 110, 102313. https://doi.org/10.1016/j.compmedimag.2023.102313 (2023).

Soomro, T. A. et al. Image segmentation for MR brain tumor detection using machine learning: A review. IEEE Rev. Biomed. Eng. 16, 70–90. https://doi.org/10.1109/RBME.2022.3185292 (2023).

Sahoo, D. K., Mishra, S., Mohanty, M. N., Behera, R. K. & Dhar, S. K. Brain tumor detection using DL approach. Neurol. India 71(4), 647–654. https://doi.org/10.4103/0028-3886.383858 (2023).

Vimala, B. et al. Detection and classification of brain tumor using hybrid deep learning models. Sci. Rep. 13 (1), 23029. https://doi.org/10.1038/s41598-023-50505-6 (2023).

Robinet, L., Siegfried, A., Roques, M., Berjaoui, A. & Cohen-Jonathan Moyal, E. MRI-based DL tools for MGMT promoter methylation detection: A thorough evaluation. Cancers (Basel) 15(8), 2253. https://doi.org/10.3390/cancers15082253 (2023).

Rohilla, S. & Jain, S. Detection of brain tumor employing residual network-based optimized deep learning. Curr. Comput. Aided Drug Des. https://doi.org/10.2174/1573409920666230816090626 (2023).

Roy, S., Bhattacharyya, D., Bandyopadhyay, S. K. & Das, A. K. An improved brain MR image binarization method as a preprocessing for abnormality detection and features extraction. Front. Comput. Sci. 11 (4), 717–727. https://doi.org/10.1007/s11704-016-5129-y (2017).

Asiri, A. A. et al. Exploring the power of DL: Fine-tuned vision transformer for accurate and efficient brain tumor detection in MRI scans. Diagnostics (Basel) 13(12), 2094. https://doi.org/10.3390/diagnostics13122094 (2023).

Ullah, N. et al. TumorDetNet: A unified DL model for brain tumor detection and classification. PLoS One 18(9), e0291200. https://doi.org/10.1371/journal.pone.0291200 (2023).

Mahajan, A. et al. DL based clinico-radiological model for paediatric brain tumor detection and subtype prediction. Explor. Target Antitumor Ther. 4(4), 669–684. https://doi.org/10.37349/etat.2023.00159 (2023).

Roy, S., Bhattacharyya, D., Bandyopadhyay, S. K. & Kim, T. H. An effective method for computerized prediction and segmentation of multiple sclerosis lesions in brain MRI. Comput. Methods Programs Biomed. 140, 307–320. https://doi.org/10.1016/j.cmpb.2017.01.003 (2017).

Roy, S. & Shoghi, K. I. Computer-aided tumor segmentation from T2-weighted MR images of patient-derived tumor xenografts. In Image Analysis and Recognition. ICIAR 2019, (eds Karray, F., Campilho, A. & Yu, A.) Lecture Notes in Computer Science, vol. 11663, Cham: Springer, 125–132. https://doi.org/10.1007/978-3-030-27272-2_14 (2019).

Pal, D., Meena, T., Mahapaatra, D. & Roy, S. SAC UW-Net: A self-attention-based network for multimodal medical image segmentation. In Proc. 2024 IEEE Int. Symp. Biomed. Imaging (ISBI), Athens, Greece, pp. 1–5. https://doi.org/10.1109/ISBI56570.2024.10635611 (2024).

Samee, N. A. et al. Classification framework for medical diagnosis of brain tumor with an effective hybrid transfer learning model. Diagnostics (Basel) 12(10), 2541. https://doi.org/10.3390/diagnostics12102541 (2022).

Li, R. et al. MRI-based two-stage DL model for automatic detection and segmentation of brain metastases. Eur. Radiol. 33 (5), 3521–3531. https://doi.org/10.1007/s00330-023-09420-7 (2023).

Saeedi, S., Rezayi, S., Keshavarz, H. & Niakan Kalhori, S. R. MRI-based brain tumor detection using convolutional DL methods and chosen machine learning techniques. BMC Med. Inform. Decis. Mak. 23(1), 16. https://doi.org/10.1186/s12911-023-02114-6 (2023).

Wang, X. et al. Unsupervised contrastive graph learning for resting-state functional MRI analysis and brain disorder detection. Hum. Brain Mapp. 44(17), 5672–5692. https://doi.org/10.1002/hbm.26469 (2023).

Hossain, R., Ibrahim, R. B. & Hashim, H. B. Automated brain tumor detection using machine learning: A bibliometric review. World Neurosurg. 175, 57–68. https://doi.org/10.1016/j.wneu.2023.03.115 (2023).

Fairchild, A. T. et al. A DL-based computer-aided detection (CAD) system for difficult-to-detect brain metastases. Int. J. Radiat. Oncol. Biol. Phys. 115(3), 779–793. https://doi.org/10.1016/j.ijrobp.2022.09.068 (2023).

Hossain, S., Chakrabarty, A., Gadekallu, T. R., Alazab, M. & Piran, M. J. Vision transformers, ensemble model, and transfer learning leveraging explainable AI for brain tumor detection and classification. IEEE J. Biomed. Health Inform. 28(3), 1261–1272. https://doi.org/10.1109/JBHI.2023.3266614 (2024).

Al-Zoghby, A. M., Al-Awadly, E. M. K., Moawad, A., Yehia, N. & Ebada, A. I. Dual deep CNN for tumor brain classification. Diagnostics (Basel) 13(12), 2050. https://doi.org/10.3390/diagnostics13122050 (2023).

Anand, V. et al. Weighted average ensemble DL model for stratification of brain tumor in MRI images. Diagnostics (Basel) 13(7), 1320. https://doi.org/10.3390/diagnostics13071320 (2023).

Jiao, T. et al. DL with an attention mechanism for differentiating the origin of brain metastasis using MR images. J. Magn. Reson. Imaging 58(5), 1624–1635. https://doi.org/10.1002/jmri.28695 (2023).

Khan, F. et al. MRI-based effective ensemble frameworks for predicting human brain tumor. J. Imaging. 9, 163. https://doi.org/10.3390/jimaging9080163 (2023).

Mohammad, F., Al Ahmadi, S. & Al Muhtadi, J. Blockchain-based deep CNN for brain tumor prediction using MRI scans. Diagnostics (Basel) 13(7), 1229. https://doi.org/10.3390/diagnostics13071229 (2023).

Al-Azzwi, Z. H. N. & Nazarov, A. N. Brain tumor classification based on improved stacked ensemble DL methods. Asian Pac. J. Cancer Prev. 24(6), 2141–2148. https://doi.org/10.31557/APJCP.2023.24.6.2141 (2023).

Roy, S. et al. Forward attention-based deep network for classification of breast histopathology image. Multimed Tools Appl. 83 (62), 88039–88068. https://doi.org/10.1007/s11042-024-18947-w (2024).

Kabiraj, A., Meena, T., Tadepalli, K. & Roy, S. An explainable weakly supervised model for multi-disease detection and localization from thoracic X-rays. Appl. Soft Comput. 166, 112139. https://doi.org/10.1016/j.asoc.2024.112139 (2024).

Kumar, S. et al. Brain tumor classification using deep neural network and transfer learning. Brain Topogr. 36(3), 305–318. https://doi.org/10.1007/s10548-023-00953-0 (2023).

van der Voort, S. R. et al. Combined molecular subtyping, grading, and segmentation of glioma using multi-task DL. Neuro Oncol. 25 (2), 279–289. https://doi.org/10.1093/neuonc/noac166 (2023).

Emam, M. M., Samee, N. A., Jamjoom, M. M. & Houssein, E. H. Optimized DL architecture for brain tumor classification using improved hunger games search algorithm. Comput. Biol. Med. 160, 106966. https://doi.org/10.1016/j.compbiomed.2023.106966 (2023).

Chen, Y. et al. A radiomics-incorporated deep ensemble learning model for multi-parametric MRI-based glioma segmentation. Phys. Med. Biol. 68(18). https://doi.org/10.1088/1361-6560/acf10d (2023).

Raju, S. & Veera, V. R. P. Classification of brain tumors from MRI images using DL-enabled hybrid optimization algorithm. Network 34(4), 408–437. https://doi.org/10.1080/0954898X.2023.2275045 (2023).

Ahamed, M. F. et al. A review on brain tumor segmentation based on DL methods with federated learning techniques. Comput. Med. Imaging Graph. 110, 102313. https://doi.org/10.1016/j.compmedimag.2023.102313 (2023).

Tandel, G. S. et al. Role of ensemble DL for brain tumor classification in multiple magnetic resonance imaging sequence data. Diagnostics (Basel) 13(3), 481. https://doi.org/10.3390/diagnostics13030481 (2023).

Tajima, T. et al. Usefulness of DL-based noise reduction for 1.5 T MRI brain images. Clin. Radiol. 78(1), e13–e21. https://doi.org/10.1016/j.crad.2022.08.127 (2023).

Yan, X. et al. Deep-learning-based automatic segmentation and classification for craniopharyngiomas. Front. Oncol. 13, 1048841. https://doi.org/10.3389/fonc.2023.1048841 (2023).

Wang, W. et al. Neuropathologist-level integrated classification of adult-type diffuse gliomas using DL from whole-slide pathological images. Nat. Commun. 14, 6359. https://doi.org/10.1038/s41467-023-41195-9 (2023).

Jalalifar, S. A., Soliman, H., Sahgal, A. & Sadeghi-Naini, A. A self-attention-guided 3D deep residual network with big transfer to predict local failure in brain metastasis after radiotherapy using multi-channel MRI. IEEE J. Transl. Eng. Health Med. 11, 13–22. https://doi.org/10.1109/JTEHM.2022.3219625 (2022).

Roy, S., Pal, D. & Meena, T. Explainable artificial intelligence to increase transparency for revolutionizing healthcare ecosystem and the road ahead. Netw. Model. Anal. Health Inf. Bioinform. 13(1), 4. https://doi.org/10.1007/s13721-023-00437-y (2024).

Näslund, O. et al. Incidence, management, and outcome of incidental meningioma: what has happened in 10 years? J. Neurooncol. 165(2), 291–299. https://doi.org/10.1007/s11060-023-04482-5 (2023).

Jiang, K. et al. Epidemiology and survival outcomes of synchronous and metachronous brain metastases: a retrospective population-based study. Neurosurg. Focus. 55(2). https://doi.org/10.3171/2023.5.FOCUS23212 (2023).

Achrol, A. S. et al. Brain metastases. Nat. Rev. Dis. Primers. 5, 5. https://doi.org/10.1038/s41572-018-0055-y (2019).

Gangopadhyay, T., Meena, T., Pal, D. & Roy, S. A lightweight self-attention based multi-task deep learning model for industrial solar panel and environmental monitoring. IEEE Trans. Emerg. Top. Comput. Intell. 9(2), 1502–1513. https://doi.org/10.1109/TETCI.2024.3444590 (2025).

Yuan, J. Brain tumor image segmentation method using hybrid attention module and improved mask RCNN. Sci. Rep. 14(1), 20615. https://doi.org/10.1038/s41598-024-71250-4 (2024).

Acknowledgements

This research was partially supported by grants from E-DA Hospital and the National Science and Technology Council, Taiwan (EDCHP113010 and NSTC 113-2221-E-214-007).

Funding

The authors would like to express their gratitude to E-DA Hospital and the National Science and Technology Council, Taiwan, for their partial financial support under contract Nos. EDCHP113010 and NSTC 113-2221-E-214-007.

Author information

Contributions

Conceptualization, N.-H.L. and T.-B.C.; methodology, N.-H.L. and T.-B.C.; software, T.-B.C. and K.-Y.L.; validation, Y.-H.H.; formal analysis, T.-B.C. and K.-Y.L.; investigation, N.-H.L.; resources, N.-H.L.; data curation, Y.-H.H.; writing—original draft preparation, N.-H.L.; writing—review and editing, Y.-H.H., N.-H.L. and T.-B.C.; visualization, T.-B.C.; supervision, T.-B.C.; project administration, N.-H.L.; funding acquisition, N.-H.L. and Y.-H.H.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate & consent for publication

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of E-DA Hospital, Kaohsiung, Taiwan (Approval Number: EMRP-110-084, Date of Approval: 5 August 2021). Verbal and written information detailing all experimental procedures was provided to all participants, and written informed consent was obtained prior to their participation and the collection of experimental data.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lu, NH., Huang, YH., Liu, KY. et al. Deep learning-driven brain tumor classification and segmentation using non-contrast MRI. Sci Rep 15, 27831 (2025). https://doi.org/10.1038/s41598-025-13591-2

DOI: https://doi.org/10.1038/s41598-025-13591-2