Abstract

By carefully analyzing latent image properties, content-based image retrieval (CBIR) systems can retrieve pertinent images without relying on text descriptions, natural-language tags, or keywords associated with the image. This search procedure makes it easy to retrieve images automatically from huge, well-balanced datasets. In the medical field, however, such datasets are usually not available. This study proposes an advanced deep learning (DL) technique to enhance the accuracy of image retrieval in complex medical datasets. The proposed model comprises five stages: pre-processing, image decomposition, feature extraction, dimensionality reduction, and classification with an image retrieval mechanism. The hybridized Wavelet-Hadamard Transform (HWHT) is used to obtain both low- and high-frequency detail for analysis. The Gray Level Co-occurrence Matrix (GLCM) is employed to extract the main texture characteristics. To minimize feature complexity, Sine chaos-based Artificial Rabbit Optimization (SCARO) is utilized. Finally, the Bhattacharya Context performance aware global attention-based Transformer (BCGAT) improves classification accuracy by employing the Bhattacharyya Coefficient for improved similarity matching. On the COVID-19 Chest X-ray image dataset, the experimental results show an accuracy, precision, recall, and F1-score of 99.5%, 97.1%, 97.1%, and 97.1%, respectively. On the chest X-ray image (pneumonia) dataset, the model attains accuracy, precision, recall, and F1-score values of 98.60%, 98.49%, 97.40%, and 98.50%, respectively. For the NIH chest X-ray dataset, the accuracy is 99.67%.


Introduction

The use of digital technologies for medical imaging, including X-rays, Computed Tomography (CT), and Magnetic Resonance Imaging (MRI), has increased in the current generation due to the Internet’s rapid expansion. These modalities provide essential anatomical and functional details of various body parts that support classification, monitoring, diagnosis, and treatment planning1,2. The CBIR field is characterized by the abundance of images produced by different image-capturing devices3. In medical image processing, content-based medical image retrieval (CBMIR) has been instrumental because of its extensive image collections and powerful retrieval capabilities4. Every CBMIR technique includes two fundamental steps: feature extraction and similarity measurement5. In CBIR, features such as intensity, texture, color, and shape are extracted from query images and matched against large image collections.

After feature extraction, a feature vector is created for each image. During similarity measurement, these vectors are used to compare the query with the images in the corresponding medical database and to display the most relevant images to the user6,7. Traditional text-based image retrieval (TBIR) suffers from imprecision, subjective and ambiguous interpretation, and the need for extensive annotation8. Medical imaging technologies such as MRI also produce high-dimensional data9. Retrieving images with TBIR requires more time and effort than other methods, and its retrieval accuracy is limited10,11. Radiologists analyze medical images such as CT scans to diagnose conditions like broken bones, but these scans do not show the details of muscle infections12. Convolutional Neural Networks (CNNs) play an important role in CBIR for encrypted image analysis13.

The Picture Archiving and Communication System (PACS) aids medical image retrieval, but retrieval with PACS is time-consuming and depends on manual effort14. Computer-aided diagnosis (CAD) is a medical imaging tool that efficiently analyzes medical images to support patient diagnosis15. Traditional image storage systems have several issues: they are less effective, they require more human intervention, and they offer limited feature extraction for image retrieval16. Deep learning-based CBMIR also faces issues related to image transparency17. CBMIR uses latent image properties to retrieve relevant images, creating a feature vector that preserves high-level representations of the query image18. Despite breakthroughs in content-based image retrieval (CBIR), clinical image retrieval algorithms have made little progress. Few such algorithms can access large-scale stores of landscape photos and medical images through image content instead of tags or metadata19,20.

Motivation

This research presents an effective content-based image retrieval system for X-ray images that uses sophisticated feature extraction, classification, and similarity matching approaches. Existing techniques such as CNN, AOADL-CBIRH, DLECNN, and others have certain drawbacks. Some rely on only a small visualization dataset21, and some do not supply patient details such as name, gender, and age20. Others suffer from low image retrieval accuracy22, an inability to tune hyperparameters correctly23, and an inability to recognize shape in image retrieval24,25. Moreover, some methods do not use optimization-based feature selection, which degrades CBIR results26. Others are tested only on small datasets27, are too complex for real-time applications28, extract features inefficiently29, or introduce more noise into retrieval30. To overcome these limitations, this study introduces a novel Bhattacharya context performance aware global attention-based transformer (BCGAT). HWHT captures both low- and high-frequency components, which supports detailed content analysis. The Bhattacharyya coefficient (BC) measures the closeness of feature distributions, ensuring that more relevant images are retrieved. Finally, the context performance aware global attention-based transformer provides high precision. The main objectives are as follows:

- To collect input images from the COVID-19 Chest X-ray image dataset and the chest X-ray image (pneumonia) dataset and pass them to pre-processing.
- To pre-process the collected X-ray images through contrast enhancement, noise reduction, and normalization, and to decompose them with the hybrid wavelet-Hadamard transform (HWHT) to support detailed content analysis.
- To extract important textural features such as contrast, correlation, energy, and homogeneity using the Gray Level Co-occurrence Matrix (GLCM).
- To optimize performance by reducing dimensionality with Sine chaos-based Artificial Rabbit Optimization (SCARO).
- To perform effective medical image retrieval by integrating Bhattacharya Context performance aware global attention-based Transformer (BCGAT) classification.

The rest of the paper is organized as follows. Section “Related work” reviews recent research on CBMIR. Section “Proposed methodology” describes the proposed CBMIR methodology for X-ray images. Section “Results and discussion” presents the performance analysis of the existing and proposed models, and Section “Conclusion” concludes the paper.

Related work

Li et al.21 introduced a 3D volume visualization method for CBIR systems. The method was applied to a positron emission tomography and computed tomography (PET-CT) non-small cell lung cancer (NSCLC) dataset to improve the visualization of retrieved volumetric images. It can also be applied to volumetric images in other fields. Rendering parameters are designed to make key structures as visible as possible, and the retrieval process identifies these structures first and gives them priority. A medical volume graph represents the full PET-CT scan data. However, this method relies on a small visualization dataset.

Tuyet et al.22 proposed a two-level content-based medical image retrieval approach that combines key-region optimization with deep learning. At the first level, local object attributes such as shape and texture are extracted from medical images. The second level consists of an offline task and an online task for content-based image retrieval from the database. Local feature extraction represents the user's query image with code words. Comparing these with the code words obtained in the initial phase retrieves the n most similar images. The method achieves an accuracy of 91.61%, which is still low for image retrieval.

The scarcity of extensive and reliable datasets has constrained the application of intelligent systems to medical image management. Karthik et al.24 proposed a multi-view classification deep neural network model for content-based retrieval of medical images. This strategy addresses the inadequate capture of intrinsic image features and the limited accuracy associated with medical imaging. It uses body-part orientation labels for visual classification to reduce variability across scan types. This matters for X-ray images, where similar retrieved images showed different body orientations. However, this method cannot identify shape in image retrieval.

Dubey et al.25 surveyed deep learning methodologies for content-based image retrieval. The survey examines the retrieval types, networks, descriptor types, and supervision methods used to build CBIR systems. It uses techniques such as chronological summarization to evaluate retrieval efficacy and covers class-specific features and recent trends in image reconstruction. It also investigates objective functions based on data augmentation, layer manipulation, and feature normalization for maintaining robust properties in future analysis. However, this approach cannot properly tune hyperparameters.

Sivakumar et al.23 proposed a deep learning-based enhanced convolutional neural network (DLECNN) to improve image retrieval performance. The method uses histogram equalization to remove irrelevant image features from the noisy Corel database. For a user-supplied query image, the HFCM (fuzzy c-means) algorithm computes the similarity index between the query feature vector and the database images. The method achieves an accuracy of 90.23%. However, it does not use an optimization-based feature selection algorithm, which degrades the CBIR results.

Agrawa et al.26 established a deep neural model-based, content-based clinical image retrieval system for the early diagnosis and categorization of lung illnesses. Transfer learning-based models are trained for specific disease features on a standard COVID-19 Chest X-ray image dataset. This approach minimizes the computing burden and enhances the accuracy of the CBMIR system on COVID-19 chest X-ray images. The study evaluates the influence of classification on retrieval performance with several distance metrics, including chi-square, cosine, and Euclidean distance. The trained model achieved an accuracy of 81% in fivefold cross-validation. However, the method was tested on a small dataset.

Rashad et al.27 described an automated method for expanding user queries in medical image retrieval using an effective RbQE methodology. They propose a CBMIR method for the precise retrieval of computed tomography (CT) and magnetic resonance (MR) images, using the AlexNet and VGG-19 models to extract high-level features. Retrieval uses two search procedures: a rapid search and a final search. In the rapid search, the original query for each class is expanded to obtain the highest-ranked images. In the final search, a query identical to the original is used to retrieve data from the database. However, this method is too complex for real-time applications.

CBIR systems use CNNs and other advanced deep learning algorithms to automatically extract complex information from medical images. Issaoui et al.28 proposed the Archimedes Optimization Algorithm with Deep Learning-Assisted Content-Based Image Retrieval (AOADL-CBIRH) approach to enable accurate retrieval of pertinent patient and diagnostic notes. Image quality is first enhanced using adaptive bilateral filtering. The AOA then tunes the hyperparameters of the ECSM-ResNet50 model to improve retrieval performance. The Manhattan distance metric measures the similarity between images for retrieval. However, the feature extraction efficiency of this method is low.

Ahmed et al.29 proposed a query expansion method for a content-based medical image retrieval framework based on top-ranked images. Because the process is fully automatic, it requires no user interaction. The method improves the retrieval of related medical images and enhances the retrieval model’s precision in two ways. First, only the most important features of the top-ranked images are selected. Second, the expanded query is rebuilt from these top-ranked image features, using their average values as the foundation. The method achieves a precision of 95.8%. However, it introduces more noise into retrieval.

The widespread use of medical imaging in clinical diagnostics has produced an enormous amount of image data, making it difficult to organize, manage, and retrieve images effectively. Cui et al.30 proposed a deep hash coding-based CBMIR framework that combines CNN layers with hash encoding for efficient and accurate retrieval. To improve feature extraction specific to medical imaging, the model uses a feature learning network built from a spatial attention block, a dense block, and a hash learning block. Evaluated on the TCIA-CT dataset, the model achieved an accuracy of 91.2%. It significantly improves medical image retrieval for clinical decision making.

Padate et al.31 proposed a content-based image retrieval (CBIR) system that leverages the Harris hawks optimization (HHO) algorithm to improve feature selection and retrieval accuracy. HHO effectively balances exploration and exploitation, optimizing the feature extraction process to improve retrieval results. The system was evaluated on various datasets and compared with traditional optimization algorithms such as particle swarm optimization (PSO) and the genetic algorithm (GA). HHO outperformed these algorithms, achieving precision, recall, and F1-score values of 90%, 88%, and 88%, respectively. The experimental results indicate that combining HHO optimization with image captioning improves both retrieval performance and user satisfaction, making it a powerful solution for large-scale image retrieval.

CBMIR is a popular method for finding similar images by comparing the inherent features of the input image with those in the database. However, the lack of extensive research in this field is the fundamental cause of the significant difficulties facing multiclass CBMIR. Suresh Kumar and Celestin Vigila et al.32 proposed a CNN-based autoencoder that enhances the accuracy of feature extraction and improves retrieval results. Evaluated on the ImageNet dataset, the model achieved an accuracy of 95.87%, highlighting its potential as a transformative tool for advancing medical image retrieval. Table 1 summarizes the analysis of the existing models.

Research gap and novelty

Several existing models show limitations, including:

- handling only small datasets21,26
- low image retrieval accuracy22
- inability to extract or use shape information24
- poor hyperparameter tuning25
- lack of optimization-based feature selection23
- low feature extraction efficiency28

Other models incur high computational cost30, suffer from overfitting31, or capture only limited global context32. The surveyed models also have limited generalization, which reduces their robustness. Moreover, although these approaches apply various DL models, they often fail to balance computational efficiency, accurate feature representation, and retrieval relevance in real-world clinical settings. To overcome these issues, the proposed model integrates several well-established methods. HWHT captures multi-resolution frequency content, and GLCM extracts an essential set of texture features. SCARO performs intelligent dimensionality reduction by combining chaotic dynamics with rabbit-inspired behavioural optimization. Finally, BCGAT integrates global attention into a transformer for contextual performance awareness, and the BC is used for similarity computation. The Bhattacharyya Coefficient compares feature distributions, so it provides a more accurate retrieval mechanism. It is particularly suited to distinguishing clinically similar but diagnostically different cases. Together, these components yield a highly accurate X-ray image retrieval model. The proposed model has been tested on multiple datasets, demonstrating better generalization.

Proposed methodology

In order to effectively retrieve X-ray pictures of the head, chest, palm, and foot, this work proposes a CBIR framework that employs advanced feature extraction, classification, and similarity matching. Images are pre-processed by the system using contrast enhancement, scaling, normalization, and noise reduction. Images are broken down into subbands for detailed analysis by employing an HWHT, and significant textural characteristics are extracted using a GLCM.

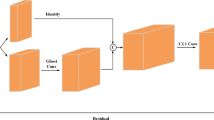

To maximize efficiency, parallel processing streamlines retrieval, and SCARO minimizes feature complexity. By incorporating BCGAT and using the BC, the framework improves similarity computation and classification accuracy, strengthening support for medical diagnosis. The block diagram of the proposed methodology is shown in Fig. 1. The major novelty of this research lies in the combination of image decomposition, feature optimization, and advanced classification techniques. HWHT plays a crucial role in decomposing images into multiple subbands, capturing both low- and high-frequency components for more detailed content analysis. To further optimize performance, SCARO is employed for dimensionality reduction. It minimizes feature complexity while preserving essential information, leading to faster and more accurate computation. The use of BCGAT for classification is a significant improvement in this approach.

These operations are performed for both the input images and the query image. For the input images, the feature vectors and image labels are stored in a database. The same process is applied to the user's image. The resulting feature vector and class label are compared with the database entries using the BC mechanism. By reducing false positives and false negatives, the attention mechanism in BCGAT ensures accurate emphasis on pertinent characteristics, greatly increasing classification accuracy. The input image refers to the new image presented by a user for classification. The query image is essentially the same as the input image and represents the problem to be solved. The CBIR system compares this query image with a database of stored images (cases) to retrieve similar cases. Based on the retrieved cases, the system suggests solutions or insights for the query image.
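The BC-based matching step can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes that each image is represented by a non-negative feature vector, for example GLCM features min-max scaled to [0, 1], and that this vector is normalized to sum to one. The helper names `bhattacharyya_coefficient` and `retrieve` are hypothetical. For two discrete distributions p and q, BC = Σ sqrt(p_k q_k). The BC equals 1 for identical distributions and 0 for distributions with disjoint support.

```python
import numpy as np


def bhattacharyya_coefficient(p, q):
    """BC(p, q) = sum_k sqrt(p_k * q_k) after normalising both vectors to sum to 1."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    # Assumption: features are non-negative and each vector has a non-zero sum.
    p = p / p.sum()
    q = q / q.sum()
    return float(np.sum(np.sqrt(p * q)))


def retrieve(query_vec, db_vecs, db_labels, k=3):
    """Rank stored feature vectors by BC similarity to the query (hypothetical helper)."""
    scores = np.array([bhattacharyya_coefficient(query_vec, v) for v in db_vecs])
    top = np.argsort(-scores)[:k]  # highest similarity first
    return [(int(i), db_labels[i], float(scores[i])) for i in top]


# Toy database (made-up values): three stored feature vectors with class labels.
db = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]]
labels = ["COVID-19", "COVID-19", "Normal"]
results = retrieve([1.0, 0.0, 0.0], db, labels, k=3)
for idx, label, score in results:
    print(idx, label, round(score, 3))
```

Here the BC serves directly as a similarity score, so larger is better. The Bhattacharyya distance, -ln(BC), would produce the same ranking in reverse order.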

Pre-processing

Noise reduction

Noise degrades image quality and can negatively affect the accuracy of medical diagnosis. Several algorithms have been used to enhance the quality of medical images. Here, a hybrid technique that uses parallel computing is proposed to reduce speckle noise in medical images. It exploits both local and non-local information to combine the benefits of several denoising filters.
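The text does not name the filters that are combined, so the following is only one hypothetical instance of a local/non-local hybrid, not the proposed technique. It blends a 3x3 median filter (local information) with a naive non-local means filter (non-local information) using an assumed equal weighting. Parallelization is omitted. The parameters `patch`, `search`, `h`, and `alpha` are illustrative choices.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median3x3(img):
    """Local filter: 3x3 median with reflective borders."""
    padded = np.pad(img, 1, mode="reflect")
    windows = sliding_window_view(padded, (3, 3))
    return np.median(windows, axis=(-2, -1))


def nl_means(img, patch=1, search=3, h=0.2):
    """Non-local filter: naive non-local means (patch radius `patch`, search radius `search`)."""
    img = np.asarray(img, dtype=float)
    pad = patch + search
    p = np.pad(img, pad, mode="reflect")
    out = np.empty_like(img)
    rows, cols = img.shape
    for y in range(rows):
        for x in range(cols):
            cy, cx = y + pad, x + pad
            ref = p[cy - patch:cy + patch + 1, cx - patch:cx + patch + 1]
            weights, values = [], []
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    ny, nx = cy + dy, cx + dx
                    cand = p[ny - patch:ny + patch + 1, nx - patch:nx + patch + 1]
                    # Similar patches get weights near 1, dissimilar patches near 0.
                    weights.append(np.exp(-np.mean((ref - cand) ** 2) / h ** 2))
                    values.append(p[ny, nx])
            w = np.array(weights)
            out[y, x] = np.dot(w, values) / w.sum()
    return out


def hybrid_denoise(img, alpha=0.5):
    """Blend local (median) and non-local (NLM) estimates; alpha is a hypothetical weight."""
    img = np.asarray(img, dtype=float)
    return alpha * median3x3(img) + (1 - alpha) * nl_means(img)


rng = np.random.default_rng(0)
clean = np.full((16, 16), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
denoised = hybrid_denoise(noisy)
```

The naive non-local means loop is quadratic in the search window, which is why practical implementations parallelize or vectorize it.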

Normalization

Image normalization adjusts an image’s pixel values to improve the performance of a machine learning (ML) model. It is achieved either by rescaling pixel values to a common range or by standardizing them through mean subtraction and division by the standard deviation.
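Both normalization options can be written in a few lines of NumPy. In this minimal sketch, the small `eps` term is an added assumption that guards against division by zero on constant images.

```python
import numpy as np


def min_max_normalize(img, eps=1e-12):
    """Rescale pixel values to the common range [0, 1]."""
    img = np.asarray(img, dtype=float)
    return (img - img.min()) / (img.max() - img.min() + eps)


def z_score_normalize(img, eps=1e-12):
    """Standardise: subtract the mean and divide by the standard deviation."""
    img = np.asarray(img, dtype=float)
    return (img - img.mean()) / (img.std() + eps)


xray = np.arange(16, dtype=np.uint8).reshape(4, 4) * 17  # toy 8-bit image, values 0..255
scaled = min_max_normalize(xray)
standardized = z_score_normalize(xray)
```

Min-max scaling keeps all values non-negative, which suits distribution-based similarity measures. Z-score standardization centres the data, which is often preferred as neural-network input.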

Resizing and contrast enhancement

Resizing changes an image’s original dimensions. Contrast enhancement improves object visibility by increasing the brightness difference between objects and their backgrounds.
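The text does not state which interpolation or contrast enhancement method is used. The sketch below therefore assumes nearest-neighbour resizing and global histogram equalization as simple stand-ins. The 224 x 224 target size in the example is an illustrative assumption.

```python
import numpy as np


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D image to (out_h, out_w)."""
    img = np.asarray(img)
    h, w = img.shape
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def equalize_histogram(img, levels=256):
    """Global histogram equalisation for integer images with values in [0, levels - 1]."""
    img = np.asarray(img, dtype=np.int64)
    hist = np.bincount(img.ravel(), minlength=levels)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the darkest occupied level
    denom = max(int(cdf[-1] - cdf_min), 1)  # guard against constant images
    lut = np.round((cdf - cdf_min) / denom * (levels - 1))
    lut = np.clip(lut, 0, levels - 1).astype(np.int64)
    return lut[img]


low_contrast = np.array([[100, 110], [120, 150]])
stretched = equalize_histogram(low_contrast)  # spreads the occupied levels over 0..255
resized = resize_nearest(np.ones((3, 5)), 224, 224)
```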

Decompose the images by using hybridized Wavelet-Hadamard transform (HWHT)

HWHT decomposes images into multiple subbands, capturing both high- and low-frequency components to support deep content analysis33. The discrete wavelet transform (DWT) is a multi-resolution, spatial-frequency decomposition technique that divides an image into adjacent wavelet sub-bands. A single-level DWT splits an image into four sub-bands: low-low (LL), low-high (LH), high-low (HL), and high-high (HH). Because the DWT provides a hierarchical representation of the decomposed image, it also enables less computationally intensive watermarking approaches. When DWT is employed for data hiding, three aspects affect the reliability of the watermarked image. First, several filter banks, including Symlet-8, Daubechies, and Haar functions, can be employed to hide data. Second, watermarking techniques employ different numbers of decomposition levels, ranging from one to five. Third, to avoid quality deterioration, the watermark can be embedded in any one of the four available sub-bands using various depth factors.
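A single-level 2-D DWT with the Haar filter bank, one of the filter banks listed above, can be sketched directly in NumPy. This is an illustration under stated assumptions. It assumes even image dimensions and uses orthonormal Haar filters. Sub-band naming conventions differ between libraries; here the first letter refers to horizontal filtering and the second to vertical filtering.

```python
import numpy as np

S = np.sqrt(2.0)


def haar_dwt2(img):
    """One-level 2-D Haar DWT; assumes even height and width."""
    x = np.asarray(img, dtype=float)
    lo = (x[:, 0::2] + x[:, 1::2]) / S  # horizontal low-pass
    hi = (x[:, 0::2] - x[:, 1::2]) / S  # horizontal high-pass
    LL = (lo[0::2] + lo[1::2]) / S      # low horizontal, low vertical
    LH = (lo[0::2] - lo[1::2]) / S      # low horizontal, high vertical
    HL = (hi[0::2] + hi[1::2]) / S      # high horizontal, low vertical
    HH = (hi[0::2] - hi[1::2]) / S      # high horizontal, high vertical
    return LL, LH, HL, HH


def haar_idwt2(LL, LH, HL, HH):
    """Inverse of haar_dwt2 (perfect reconstruction)."""
    h2, w2 = LL.shape
    lo = np.empty((2 * h2, w2))
    hi = np.empty((2 * h2, w2))
    lo[0::2], lo[1::2] = (LL + LH) / S, (LL - LH) / S
    hi[0::2], hi[1::2] = (HL + HH) / S, (HL - HH) / S
    x = np.empty((2 * h2, 2 * w2))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / S, (lo - hi) / S
    return x


img = np.arange(64, dtype=float).reshape(8, 8)
bands = haar_dwt2(img)  # four 4x4 sub-bands
```

Each sub-band has half the height and width of the input. Applying `haar_dwt2` again to LL gives the next decomposition level.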

Similarly, the HT is employed in image decomposition because of its simplicity and efficiency, which are particularly helpful for applications such as denoising, pattern identification, and image compression. The transform operates on image blocks whose dimensions are powers of two, such as \(2 \times 2,\,4 \times 4,\,8 \times 8,\,\ldots,\,256 \times 256\). This restriction arises because the recursive (Sylvester) construction produces Hadamard matrices of order \(2^{M}\). These matrices are orthogonal (up to a scale factor) and consist of +1 and −1 elements. Applying them therefore requires only additions and subtractions, which makes them computationally efficient. In the forward (decomposition) process, the image is split into non-overlapping blocks, and each block is transformed using the Hadamard matrix. This converts the spatial-domain data to the transform domain and compacts most of the energy into a small number of coefficients.

The transformed coefficients express the image in a new basis that reduces redundancy and highlights significant features. In reconstruction, the inverse Hadamard transform is applied to the compressed or modified coefficients of each block to return the image to the spatial domain. Because the normalized Hadamard matrix is orthogonal, its inverse is simply its transpose. The original image can therefore be reconstructed exactly or approximately, depending on how much information is retained during decomposition.

The Walsh-Hadamard transform (WHT) is a linear orthogonal transformation and a generalized variant of the Fourier transform. The row and column ordering of the Hadamard matrix is not usually interpreted in terms of frequency. The Hadamard transform of an image involves only real additions and subtractions. The elementary WHT matrix is formed from the entries 1 and −1, as illustrated in Eq. (1).

Here, the Hadamard transform was denoted as \(P\).

The standard formulation of the Hadamard transform is recursive, and the higher-order Hadamard matrices of order \(2^{M}\) are given by

Here, the block specific weight matrices are denoted as \(W_{1}\), \(W_{2}\), \(W_{3}\) and \(W_{4}\).
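The recursive construction and the block-wise forward and inverse transforms described above can be sketched as follows. This sketch uses the standard Sylvester recursion H_{2m} = [[H_m, H_m], [H_m, -H_m]] with a 1/sqrt(b) normalization. It does not attempt to reproduce the block-specific weight matrices W1 to W4, which the text does not specify. The block size b = 8 is an illustrative default.

```python
import numpy as np


def hadamard(n):
    """Sylvester construction: H_{2m} = [[H_m, H_m], [H_m, -H_m]]; n must be a power of two."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    H = np.array([[1]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H


def block_wht(img, b=8):
    """Forward 2-D WHT on non-overlapping b x b blocks (image sides must be multiples of b)."""
    x = np.asarray(img, dtype=float)
    Hn = hadamard(b) / np.sqrt(b)  # orthonormal: Hn @ Hn.T == I
    out = np.empty_like(x)
    for r in range(0, x.shape[0], b):
        for c in range(0, x.shape[1], b):
            out[r:r + b, c:c + b] = Hn @ x[r:r + b, c:c + b] @ Hn.T
    return out


def inverse_block_wht(coeffs, b=8):
    """Inverse transform: because Hn is orthogonal, its inverse is its transpose."""
    Hn = hadamard(b) / np.sqrt(b)
    out = np.empty_like(coeffs, dtype=float)
    for r in range(0, coeffs.shape[0], b):
        for c in range(0, coeffs.shape[1], b):
            out[r:r + b, c:c + b] = Hn.T @ coeffs[r:r + b, c:c + b] @ Hn
    return out


rng = np.random.default_rng(1)
image = rng.random((16, 16))
coeffs = block_wht(image)
```

For a constant block, all the energy lands in the single DC coefficient. This illustrates the energy compaction property mentioned above. In a hybrid scheme, the same block transform could be applied to the DWT sub-bands.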

Embedding a watermark in the Hadamard domain changes less image information than embedding it in other well-known transform domains. Combining the HT with the WT improves noise reduction, because the HT’s simple operations minimize noise amplification during transformation.

Feature extraction by gray level co-occurrence matrix

Features are pieces of information that capture the key parts of an image and are needed to solve specific problems. Feature extraction collects an image’s visual components in compact form to reduce the resources needed for later processing. In this study, the GLCM was used to extract statistical texture properties34. Texture features are important low-level properties used to describe and measure the apparent texture of an image. The GLCM method extracts texture features by analyzing the spatial relationship between pixel intensities in a grayscale image. Each GLCM element \(\left( {i,j} \right)\) counts how often a pixel with gray level \(i\) occurs adjacent to a pixel with gray level \(j\) at a specified direction and distance. A high GLCM value at \(\left( {i,j} \right)\) means that the intensity pair occurs frequently, indicating a recurring texture or strong pattern. A low value indicates that the pair is uncommon at that distance and direction. The GLCM is computed by choosing the sliding window, direction, and distance. The most common GLCM features are contrast, homogeneity, energy, and correlation. Because it uses the statistical distribution of intensity combinations at specified relative positions, this method is commonly used to extract second-order statistical texture properties from images. Texture statistics are classified as first-order, second-order, or higher-order according to the number of pixels considered jointly. Higher-order statistics are rarely implemented because of their computational complexity. The resulting texture properties include inverse difference moment (IDM), energy, correlation, entropy, sum variance, homogeneity, normalized IDM, contrast, maximum probability, dissimilarity, autocorrelation, and others. Several of these are defined as follows:

Energy

Energy is the square root of the angular second moment, that is, of the sum of the squared GLCM entries. It is high for homogeneous regions and decreases as the texture becomes less homogeneous, as given in Eq. (4).

Here, \(P_{ab}\) denotes the normalized probability of co-occurrence of grey levels \(a\) and \(b\), and \(M\) is the number of distinct grey levels in the image.

Contrast

Contrast measures the intensity difference between a pixel and its neighbour over the entire image, as given in Eq. (5).

Here, \(a\) and \(b\) denote grey levels present in the image.

Correlation

Correlation measures the linear relationship between the grey tones of a pixel and those of its neighbours, as given in Eq. (6).

Here, \(\mu\) denotes the mean of the GLCM and \(\sigma^{2}\) the variance of the grey levels, with \(\sigma\) the corresponding standard deviation.

Homogeneity

Homogeneity measures how similar neighbouring pixels are to one another, as given in Eq. (7).

Here, \(P_{ab}\) denotes the normalized GLCM. GLCM features such as energy, entropy, contrast, and correlation are robust descriptors that help distinguish healthy from diseased tissue, thereby increasing the accuracy of image retrieval systems.
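The four features above can be computed from a normalized GLCM as in the sketch below. This is a minimal, non-symmetric, single-offset GLCM written in plain NumPy, not the authors' exact configuration. The default offset (0, 1) means "one pixel to the right", and the 8 grey levels are assumptions. Homogeneity uses the 1 / (1 + (a − b)^2) weighting, one of several variants in the literature. Correlation is set to 1.0 by convention when a variance is zero.

```python
import numpy as np


def glcm(img, dy=0, dx=1, levels=8):
    """Normalised, non-symmetric GLCM for a non-negative pixel offset (dy, dx)."""
    img = np.asarray(img, dtype=int)
    h, w = img.shape
    ref = img[0:h - dy, 0:w - dx]  # grey level a (reference pixel)
    nbr = img[dy:h, dx:w]          # grey level b (neighbour at the offset)
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)
    return P / P.sum()


def glcm_features(P):
    """Contrast, energy, homogeneity, and correlation of a normalised GLCM P."""
    a, b = np.indices(P.shape)
    contrast = np.sum(P * (a - b) ** 2)
    energy = np.sqrt(np.sum(P ** 2))  # square root of the angular second moment
    homogeneity = np.sum(P / (1.0 + (a - b) ** 2))
    mu_a, mu_b = np.sum(a * P), np.sum(b * P)
    sd_a = np.sqrt(np.sum(P * (a - mu_a) ** 2))
    sd_b = np.sqrt(np.sum(P * (b - mu_b) ** 2))
    cov = np.sum(P * (a - mu_a) * (b - mu_b))
    correlation = cov / (sd_a * sd_b) if sd_a > 0 and sd_b > 0 else 1.0
    return {"contrast": contrast, "energy": energy,
            "homogeneity": homogeneity, "correlation": correlation}


# Vertical stripes 0,1,0,1: every horizontal neighbour pair differs by one grey level.
stripes = glcm_features(glcm(np.tile([0, 1, 0, 1], (4, 1)), levels=2))
print(stripes)
```

For the striped toy image, contrast is 1, homogeneity is 0.5, and correlation is −1, because the grey level always flips between horizontal neighbours.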

Dimensionality reduction by employing sine chaos based artificial rabbit optimization

SCARO was selected over traditional optimizers because of its higher success on complicated, high-dimensional search spaces, which are typical in medical image retrieval. Unlike PSO and GA, SCARO combines rabbit behaviours (detour foraging and random hiding) with chaos introduced by a sine map. This reduces the chance of premature convergence, a common drawback of PSO and GA when searching for multiple solutions. SCARO also adapts its search through an energy factor, which helps it choose the best set of features and achieve a higher rate of correct classification. ARO is an efficient optimization model that mathematically represents the survival strategies used by rabbits (search agents)35. It imitates two strategies: detour foraging and random hiding. In detour foraging, a search agent eats grass around neighbouring nests rather than its own, which helps it avoid attackers. In random hiding, the agent hides in a randomly chosen burrow, which reduces the probability of being caught by predators. Finally, as rabbits lose energy, they switch from detour foraging to hiding more frequently.

The features extracted in this work are primarily GLCM-derived texture attributes such as energy, correlation, contrast, maximum probability, autocorrelation, dissimilarity, sum variance, and homogeneity. These attributes represent second-order statistical properties essential for differentiating medical image patterns. SCARO selects the best subset of these features, balancing exploration and exploitation by simulating rabbit foraging behaviour and chaotic dynamics. It evaluates candidate feature subsets by their contribution to classification accuracy, minimizing redundancy while preserving discriminatory power. The result is a compact, optimal feature set that enhances retrieval performance and reduces computational overhead. In each iteration, the positions of the search agents are updated according to the method’s rules and then evaluated by the objective function.

In ARO, searching for new food sources while escaping threats simulates exploration, which promotes a global search for solutions and delays premature convergence. Rabbits hiding at random in nearby burrows concentrates the search around their current positions, which simulates exploitation. The algorithm balances these two stages using an energy factor that depends on the number of iterations performed. The algorithm stops after 300 iterations. The objective function (classification accuracy) is then checked for stability and for signs of fitness improvement from one generation to the next. Each agent in the initial population is assigned a random position within the search space, as expressed in Eq. (8).

Here, \(Rs_{i}\) denotes the position of the ith search agent. \(UB\) and \(LB\) are the upper and lower limits of the design variables, and \(\dim\) and \(M_{Rs}\) are the number of variables and the number of search agents, respectively. Under ARO’s detour foraging strategy, each search agent moves to the area of another agent chosen at random from the swarm, which distracts predators. Equations (9)–(12) give the mathematical representation of detour foraging.

Here, \(iter\) denotes the current iteration, and \(Rs_{k}\) and \(MRs_{k}\) are the current and new positions of the kth search agent. \(r_{1}\), \(r_{2}\), and \(r_{3}\) are random numbers in \(\left[ {0,1} \right]\), and \(Iter_{\max }\) is the maximum number of iterations.

To avoid predators, a rabbit digs several burrows around its nest for protection. This behaviour is described in Eqs. (13)–(16).

Here, \(H\) is the hiding parameter and \(r_{k,j}\) is the jth burrow of the kth search agent. The randomized hiding strategy is expressed in Eq. (17).
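As with detour foraging, the burrow and random-hiding equations are not reproduced here, so the following sketch assumes the original ARO forms: the hiding parameter shrinks linearly with the iteration count and is scaled by a standard normal value, burrow j of an agent perturbs only dimension j, and random hiding moves the agent towards one randomly chosen burrow. The running operator `R` is the same quantity used in detour foraging and is passed in as an argument; all names are illustrative.

```python
import numpy as np

def hiding_parameter(it, max_iter, rng):
    # H shrinks linearly from about 1 to 1/max_iter, scaled by n2 ~ N(0, 1).
    return (max_iter - it + 1) / max_iter * rng.standard_normal()

def burrows(x, it, max_iter, rng):
    """All d burrows of one agent at position x: row j perturbs only dimension j."""
    H = hiding_parameter(it, max_iter, rng)
    # np.eye(d) * x has x[j] at (j, j); adding x row-wise gives x + H * g_j * x.
    return x + H * np.eye(x.size) * x

def random_hiding(x, R, it, max_iter, rng):
    """ARO random hiding: move towards one randomly selected burrow."""
    dim = x.size
    # Burrow dimension ceil(r5 * d) (1-based), converted to a valid 0-based index.
    r = min(dim, max(1, int(np.ceil(rng.random() * dim)))) - 1
    g_r = np.zeros(dim)
    g_r[r] = 1.0
    b_r = x + hiding_parameter(it, max_iter, rng) * g_r * x
    r4 = rng.random()
    return x + R * (r4 * b_r - x)
```

With `R` set to zero the agent stays in place, which is a quick way to check that the update only moves agents through the running operator.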

The transition from the exploration phase (detour foraging) to the exploitation phase (randomized hiding) is controlled by an energy factor, given in Eq. (18).

Here, \(AF\) denotes the energy factor and \(r\) is a parameter of the original equation. The sine map used to generate the chaotic sequence is \(x_{m + 1} = r\sin \left( {\pi x_{m} } \right)\).
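The sketch below illustrates the phase switch under stated assumptions. It uses the energy factor of the original ARO, \(A = 4(1 - t/T)\ln(1/r)\) with \(r \in (0,1)\), and assumes that in SCARO the value \(r\) is supplied by the sine map instead of a uniform random draw; the paper's exact coupling is not shown in this text. The map parameter is called `mu` in code to keep it distinct from the energy-factor input, and all names are hypothetical.

```python
import math

def sine_map(x0, mu, n):
    """Chaotic sequence x_{m+1} = mu * sin(pi * x_m); stays in [0, mu] for x0 in [0, 1]."""
    xs, x = [], x0
    for _ in range(n):
        x = mu * math.sin(math.pi * x)
        xs.append(x)
    return xs

def energy_factor(it, max_iter, r):
    """ARO energy factor A = 4 * (1 - t / T) * ln(1 / r), with r in (0, 1)."""
    r = min(max(r, 1e-12), 1 - 1e-12)  # keep ln(1/r) finite and positive
    return 4.0 * (1.0 - it / max_iter) * math.log(1.0 / r)

def choose_phase(it, max_iter, r):
    # A > 1 favours exploration (detour foraging); otherwise exploit (random hiding).
    return "detour_foraging" if energy_factor(it, max_iter, r) > 1.0 else "random_hiding"

# Example: feed successive chaotic values into the phase decision.
chaos = sine_map(0.3, 0.99, 5)
phases = [choose_phase(t, 300, r) for t, r in enumerate(chaos, start=1)]
```

Because \(1 - t/T\) falls to zero at the last iteration, exploitation dominates towards the end of the run regardless of the chaotic value.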

The dynamic perturbations introduced by the sine chaos allow SCARO to tackle high-dimensional, complex, and non-linear optimization problems effectively. The SCARO algorithm is given in Table 2.

Chaotic updates introduce irregular but bounded fluctuations into the search process, preventing the population from becoming trapped in local optima. The sine chaotic map is incorporated into the population initialization to enhance exploration of the binary search space. It provides non-repetitive, ergodic, and highly diverse starting solutions, which helps to avoid premature convergence, a common issue in binary optimization problems. The chaotic sequence gives wide and uniform coverage of the search space, improving the algorithm's ability to explore feature subsets thoroughly and helping to maintain a balance between intensification and diversification. This increases the chance of finding globally optimal or near-optimal feature subsets in complex binary domains.
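A minimal sketch of sine-chaotic initialisation for binary feature selection follows. Each chaotic value decides whether one feature is selected (value above a 0.5 threshold), so successive masks are drawn from one continuous chaotic trajectory rather than independent uniform draws. The threshold, seed value `x0`, and the size-penalised `fitness` wrapper are hypothetical illustrations; the text above states only that classification accuracy is the objective.

```python
import math

def chaotic_binary_population(n_agents, n_features, x0=0.7, mu=1.0, threshold=0.5):
    """Sine-map initialisation of binary feature masks (1 = feature selected)."""
    x, population = x0, []
    for _ in range(n_agents):
        mask = []
        for _ in range(n_features):
            x = mu * math.sin(math.pi * x)  # next chaotic value in [0, mu]
            mask.append(1 if x > threshold else 0)
        population.append(mask)
    return population

def fitness(mask, accuracy, alpha=0.99):
    """Hypothetical wrapper fitness: reward accuracy, lightly penalise subset size."""
    ratio = sum(mask) / len(mask)
    return alpha * accuracy + (1 - alpha) * (1 - ratio)
```

Setting `alpha=1.0` reduces the fitness to pure classification accuracy; values slightly below 1 break ties in favour of smaller feature subsets.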

Classification using the Bhattacharya context performance-aware global attention-based transformer

Originally designed to extract global sequence information, local convolutional features, and spectral-dimension features, the GAT module consists of two token embedding blocks and an attention transformer. Similar token embedding blocks and encoders are used in the global–local spatial convolutional transformer (GACT) module to improve its capacity to extract global–local spatial characteristics. In the spatial dimension, transformers capture long-range dependencies between pixels, while convolution kernels capture local spatial relationships. The spectral and spatial branches determine the global–local spectral and spatial properties, which are then combined adaptively during model training. The merging is performed by multiplying the features, after they have been convolved by different kernels, with a set of learnable weights, producing multiscale features. The structural diagram of BCGAT is shown in Fig. 2.

In 3-dimensional (3D) space, BCGAT additionally reduces information loss and computation by avoiding pooling and linear flattening operations. For an input feature map \(F_{input} \in R^{A \times B \times C}\), where \(A\) and \(B\) are the spatial dimensions and \(C\) is the spectral dimension, the intermediate feature map \(F_{inter}\) and the output feature map \(F_{output}\) are given by Eqs. (19)–(20).

Here, \(P_{spa}\) and \(P_{spe}\) are the spatial and spectral feature maps, and \(\otimes\) denotes element-wise multiplication.
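Since Eqs. (19)–(20) are not reproduced here, the following NumPy sketch shows one plausible reading, which is an assumption: the input is re-weighted element-wise by the spatial map to give \(F_{inter}\), and \(F_{inter}\) is then re-weighted by the spectral map to give \(F_{output}\), with broadcasting over the missing axes. A second helper illustrates the learnable-weight merge of multiscale features described earlier, assuming softmax-normalised weights; both functions are hypothetical.

```python
import numpy as np

def attention_fusion(f_input, p_spa, p_spe):
    """Assumed form of Eqs. (19)-(20): sequential element-wise re-weighting.

    f_input: (A, B, C) feature map; p_spa: (A, B, 1) spatial map; p_spe: (1, 1, C) spectral map.
    """
    f_inter = f_input * p_spa  # F_inter = P_spa ⊗ F_input (broadcast over C)
    f_output = f_inter * p_spe  # F_output = P_spe ⊗ F_inter (broadcast over A, B)
    return f_inter, f_output

def weighted_merge(features, logits):
    """Merge same-shaped multiscale features with softmax-normalised learnable weights."""
    w = np.exp(logits - np.max(logits))
    w = w / w.sum()
    return sum(wi * f for wi, f in zip(w, features))
```

In a trained network the maps and logits would be produced by learnable layers; here they are plain arrays so the arithmetic can be inspected directly.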

The vanilla ViT focuses on long-range dependencies across all spectral bands and cannot extract local spectral characteristics. In contrast, convolution kernels, with their inductive bias, attend to the local information contained in neighbouring bands. GAT therefore combines a transformer and convolution to obtain both local and global HSI information.

The value at position \(\left( {a,b,c} \right)\) of the pth feature map in the qth 3D convolutional layer is given by Eq. (21).

Here, \(f\left( . \right)\) is the activation function, and \(R_{p}\) and \(H_{p}\) are the width and height of the 3D convolution kernel.
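Eq. (21) is not reproduced in this text; the sketch below follows the standard 3D convolution formula, in which the output at \((a,b,c)\) is the activation of a bias plus the sum, over all input maps and all kernel offsets, of kernel weights times the input values in the window starting at \((a,b,c)\). The kernel depth axis, the axis order, and the ReLU default are assumptions, and only "valid" positions (window fully inside the input) are supported.

```python
import numpy as np

def conv3d_at(prev_maps, kernel, bias, a, b, c, activation=lambda z: np.maximum(z, 0.0)):
    """Value at (a, b, c) of one output feature map of a 3D convolutional layer.

    prev_maps: (M, A, B, C) feature maps of layer q - 1
    kernel:    (M, H, R, D) weights for this output map (height H_p, width R_p, depth D)
    Computes f(bias + sum over m, h, r, d of w[m, h, r, d] * v[m, a + h, b + r, c + d]).
    """
    _, H, R, D = kernel.shape
    window = prev_maps[:, a:a + H, b:b + R, c:c + D]
    return activation(bias + np.sum(kernel * window))
```

Sliding this computation over every valid \((a,b,c)\) yields the full output map; deep learning frameworks perform the same operation in a vectorised form.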

The convolutional token embedding block in the spectral dimension consists of three 3D convolutional layers and one residual connection; the convolution tokens \(P_{spe}\) are expressed in Eq. (22).

Here, \(k_{1}\), \(k_{2}\), and \(k_{3}\) denote the channel sizes of the convolution kernels.

The Bhattacharyya coefficient (BC)35 is a statistical measure of the overlap between two probability distributions \(P\) and \(Q\). It is expressed in Eq. (23).

Here, \(m\) is the number of bins in the distributions.
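For discrete distributions the Bhattacharyya coefficient is \(BC(P,Q) = \sum_{i=1}^{m} \sqrt{P_i Q_i}\), and the related Bhattacharyya distance is \(D_B = -\ln BC\). A minimal sketch in plain Python, assuming non-negative histograms that are normalised inside the function:

```python
import math

def bhattacharyya_coefficient(p, q):
    """BC(P, Q) = sum_i sqrt(P_i * Q_i) over m bins; 1 = identical, 0 = no overlap."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of bins")
    sp, sq = sum(p), sum(q)
    # Normalise both histograms so that each sums to 1.
    return sum(math.sqrt((pi / sp) * (qi / sq)) for pi, qi in zip(p, q))

def bhattacharyya_distance(p, q):
    """D_B = -ln(BC); infinite when the distributions do not overlap."""
    bc = bhattacharyya_coefficient(p, q)
    return math.inf if bc == 0 else -math.log(bc)
```

BC is bounded in \([0, 1]\), which makes it convenient as a similarity score: identical histograms give 1, and histograms with disjoint support give 0.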

BC and performance-aware global attention allow the model to capture global patterns as well as the local characteristics needed to identify small abnormalities. BC is therefore essential in medical diagnosis for accurately classifying and retrieving images that look similar but are clinically distinct. By increasing retrieval accuracy and robustness, BCGAT can help radiologists and doctors find pertinent cases more rapidly, improving diagnostic efficiency and decision-making.

Results and discussion

In this study, a CBIR framework for X-ray images is proposed, and the images are classified using the techniques described above. The proposed method is evaluated on three public chest X-ray datasets, described below. The hyperparameters of the proposed model are listed in Table 3.

Dataset description

COVID-19 Chest X-ray image dataset description36

The dataset is organized into two folders (train and test), each containing three subfolders (COVID19, PNEUMONIA, and NORMAL). It contains 6432 X-ray images in total, of which 20% are used for testing. The test set contains 116 COVID-19, 317 normal, and 855 pneumonia samples, and the training set contains 460 COVID-19, 1266 normal, and 3418 pneumonia samples. Table 4 lists the number of training and testing samples per class. Sample images are shown in Fig. 3.

Visualization of sample images from the COVID-19 Chest X-ray image dataset (https://www.kaggle.com/datasets/prashant268/chest-xray-covid19-pneumonia).
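The per-class counts reported for the COVID-19 Chest X-ray dataset can be checked against the stated total and the 20% test share with a few lines of arithmetic; the dictionary names are illustrative.

```python
# Per-class sample counts reported for the COVID-19 Chest X-ray dataset.
train = {"COVID19": 460, "NORMAL": 1266, "PNEUMONIA": 3418}
test = {"COVID19": 116, "NORMAL": 317, "PNEUMONIA": 855}

n_train, n_test = sum(train.values()), sum(test.values())
total = n_train + n_test
test_fraction = n_test / total

print(n_train, n_test, total, round(100 * test_fraction, 2))  # 5144 1288 6432 20.02
```

The 5144 training and 1288 test images add up to the stated 6432, and the test share is about 20.0%, consistent with the description.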

Chest X-ray image (pneumonia) dataset37

The Chest X-ray (pneumonia) dataset is organized into three splits: training, testing, and validation. Each split contains two subfolders, pneumonia and normal, and the dataset contains 5,863 images in total. All chest radiographs were initially screened for quality control by removing unreadable or low-quality scans, and the diagnoses were verified by two expert physicians before the images were cleared for use. Sample images are shown in Fig. 4, and the number of training and testing samples for this dataset is given in Table 5.

Visualization of sample images from the Chest X-ray (Pneumonia) dataset. (https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia/data).

National Institutes of Health (NIH Chest X-ray-8) dataset39

The National Institutes of Health (NIH Chest X-ray-8) dataset consists of about 112,000 frontal-view X-ray images from over 30,000 patients, acquired between 1992 and 2015. Each image may carry several positive disease labels. The collection includes 44,810 AP-view and 67,310 PA-view images from 16,630 male and 14,175 female patients. Sample images from the dataset are shown in Fig. 5.

Visualization of sample images from the NIH Chest X-ray-8 dataset (https://www.kaggle.com/datasets/nih-chest-xrays/data).

Performance evaluation for the COVID-19 chest X-ray image dataset

To classify the X-ray images, the performance of the proposed model is evaluated and compared with existing models, namely Bi-LSTM, RNN, GRU, DenseNet 169, and MobileNet. Accuracy, precision, recall, F1-score, MAE, MSE, and RMSE are compared in Figs. 6–9.

Figure 6a–b presents a quantitative comparison of accuracy and precision. The proposed model achieved the highest accuracy and precision, 99.54% and 97.13%, respectively, whereas the existing models attained lower values. The existing models also have limitations: Bi-LSTM showed an unstable and time-consuming training process, and GRU showed limited scalability and lacks interpretability. In contrast, the proposed model required less training time and fewer computational resources.

Figure 7a–b compares recall and F1-score. The proposed model achieved a recall and an F1-score of 97.13% each, whereas the existing models attained lower values. Among the existing models, the RNN is poorly suited to high-dimensional data and suffers from the vanishing gradient problem, which hinders parameter updates and makes training difficult. The proposed system can quickly classify and retrieve relevant, high-quality images with reduced complexity.
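The text does not state how precision, recall, and F1-score are averaged over the three classes. The sketch below assumes macro averaging and computes all four metrics from a confusion matrix in which `cm[i][j]` counts samples of true class `i` predicted as class `j`; the function name is illustrative.

```python
def classification_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1 from a square confusion matrix."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(k)) / total
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(k))  # column sum: predicted as class c
        actual = sum(cm[c])  # row sum: truly class c
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return accuracy, sum(precisions) / k, sum(recalls) / k, sum(f1s) / k
```

With macro averaging, F1 is averaged over the per-class F1 scores, so it is in general not the harmonic mean of the macro precision and macro recall.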

Figure 8a–b shows MAE and MSE. MAE gives a straightforward interpretation of the average error, whereas MSE heavily penalizes large errors. The proposed model achieved an MAE of 0.0046 and an MSE of 0.0093, both lower than the values obtained by the existing models. The higher MAE and MSE of the existing models indicate a poorer fit to the data.

Figure 9 shows RMSE, which represents the typical magnitude of the prediction errors. The existing models attained higher RMSE values, whereas the proposed model attained an RMSE of 0.09635, indicating that it effectively minimizes large errors.
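The three error metrics are related: RMSE is the square root of MSE computed on the same predictions, and MAE never exceeds RMSE. A minimal sketch, with an illustrative function name:

```python
import math

def error_metrics(y_true, y_pred):
    """Return (MAE, MSE, RMSE) for paired numeric targets and predictions."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    return mae, mse, math.sqrt(mse)

# RMSE is the square root of MSE on the same predictions:
print(round(math.sqrt(0.0093), 4))  # 0.0964
```

For the COVID-19 dataset, the square root of the reported MSE (0.0093) is about 0.0964, which agrees with the reported RMSE of 0.09635 up to the rounding of the MSE.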

The ROC curve in Fig. 10 provides a visual and quantitative measure of how well the model distinguishes between the classes of the X-ray image data. It plots the true positive rate (TPR) against the false positive rate (FPR) of the classifier at each threshold setting.
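The construction of an ROC curve can be sketched for a single binary (one-vs-rest) problem: sweep the decision threshold over the distinct scores, record (FPR, TPR) at each threshold, and integrate with the trapezoidal rule to obtain the AUC. For the three-class datasets, one such curve per class is the usual assumption; the function names are illustrative.

```python
def roc_curve(labels, scores):
    """(FPR, TPR) points from sweeping the threshold over the distinct scores (labels are 0/1)."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For labels `[0, 0, 1, 1]` and scores `[0.1, 0.4, 0.35, 0.8]`, the curve passes through (0, 0.5), (0.5, 0.5), and (0.5, 1) and the AUC is 0.75.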

Figure 11a–b shows the training accuracy and loss. The accuracy curve tracks the proportion of correctly predicted cases over the training epochs. As shown in Fig. 11a, the training accuracy of the proposed model rises from 0.65 to 0.90 over epochs 0 to 300, whereas the existing models attain lower values over the same epochs. As shown in Fig. 11b, the training loss of the proposed model decreases from 0.38 to 0.15 over epochs 0 to 300, whereas the existing models attain higher loss values.

Figure 12a–b shows the validation accuracy and loss of the existing and proposed models across epochs. As shown in Fig. 12a, the validation accuracy of the proposed model rises from 0.65 to 0.95 over epochs 0 to 300, whereas the existing models attain lower values over the same epochs. As shown in Fig. 12b, the validation loss of the proposed model decreases from 0.39 to 0.05 over epochs 0 to 300, whereas the existing models attain higher loss values.

Performance evaluation for the chest X-ray image (pneumonia) dataset

Figure 13a–b compares accuracy and precision, which reflect the overall correctness of the classification and the quality of its positive predictions. The proposed model achieved an accuracy of 98.60% and a precision of 98.49%, whereas the existing models obtained lower values. Among the existing models, DenseNet169 requires heavy computational resources and more memory, and MobileNet is complex and difficult to interpret.

Figure 14a–b compares recall and F1-score; the proposed model improves overall performance over the existing models, achieving a recall of 97.40% and an F1-score of 98.50%. The existing RNN and BiLSTM models have scalability issues and complex parameter tuning, and they may overfit large datasets.

The comparison of MAE and MSE in Fig. 15a–b highlights the robustness of the proposed model and its reduced sensitivity to large errors. The proposed model achieved MAE and MSE values of 0.9153 and 0.9035, respectively, whereas the existing models obtained higher values. The higher errors of the existing models reflect lower confidence in the classification, and class imbalance makes the data harder for these models to handle.

Figure 16 compares the RMSE of the existing and proposed models; this metric indicates the typical size of the prediction errors and the sensitivity of a model to outliers. The proposed model attains a low RMSE of 1.1223, whereas the existing models have higher error values and lack interpretability and scalability. The proposed model handles large errors better, and its optimization yields a stable training process in less time.

Figure 17 shows the ROC curves of the proposed and existing models for the chest X-ray image retrieval system. Each curve plots the true positive rate (TPR) against the false positive rate (FPR) at each threshold setting.

Figure 18a–b shows the training accuracy and loss curves of the existing and proposed models over 300 epochs, representing their learning progression.

The training accuracy of the proposed model rises from 0.63 to 0.93 over epochs 0 to 300, whereas the existing models have lower values over the same epochs (Fig. 18a). The training loss of the proposed model decreases from 0.39 to 0.07 over the same epochs, whereas the existing models have higher loss values (Fig. 18b).

Figure 19a–b shows the validation accuracy and loss curves of the existing and proposed models over 300 epochs. The validation accuracy of the proposed model rises from 0.6 to 0.95, whereas the existing models attain much lower values at the corresponding epochs (Fig. 19a). The validation loss of the proposed model decreases from 0.4 to 0.05 over epochs 0 to 300, whereas the existing models have higher values (Fig. 19b).

Performance analysis of the NIH Chest X-ray-8 dataset

The performance of the existing and proposed models on the NIH Chest X-ray-8 dataset is shown in Table 6.

Comparative analysis

Table 7 compares the existing and proposed models, and Table 8 compares them on the COVID-19 chest X-ray image dataset38. Table 9 gives the class-wise mAP with various measures26, and Table 10 presents the ablation study of the proposed model. Table 11 presents a statistical comparison of the existing and proposed models using the Wilcoxon test, a non-parametric test. The Wilcoxon rank-sum test compares two independent groups, whereas the Wilcoxon signed-rank test compares two paired samples. The mean, standard deviation, test statistic, and p-value obtained from the test are reported in Table 11.
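The text does not specify which Wilcoxon variant produced Table 11. As an illustration only, the sketch below implements the paired signed-rank test, which fits a comparison of two models evaluated on the same runs or folds: zero differences are dropped, absolute differences are ranked with average ranks for ties, and a two-sided p-value is obtained from the normal approximation without tie correction. For real analyses, SciPy's `scipy.stats.wilcoxon` provides exact and corrected variants.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Paired Wilcoxon signed-rank test, normal approximation, two-sided.

    Returns (W, p) with W = min(W+, W-). Zero differences are discarded and tied
    absolute differences get their average rank; no tie correction is applied to
    the variance, so p is approximate for small or heavily tied samples.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg = (i + j + 2) / 2  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    return w, math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
```

For example, if one model beats another on all four non-tied folds, W is 0 and the approximate two-sided p-value is about 0.07, illustrating how few paired runs limit the attainable significance.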

Qualitative analysis for the retrieval of images from the proposed model

Table 12 shows a visual comparison of query images and the top-2 ranked images retrieved by the system. The retrieved images are selected by comparing the query features with the image feature database using the BC.
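A minimal sketch of BC-based top-k retrieval follows, assuming each stored image is represented by a non-negative feature histogram kept in an in-memory dictionary keyed by a hypothetical image identifier. Database entries are scored by their Bhattacharyya coefficient with the query and the k highest-scoring entries are returned; a production system would use an indexed feature store instead.

```python
import math

def bhattacharyya(p, q):
    # Bhattacharyya coefficient of two histograms, each normalised to sum to 1.
    sp, sq = sum(p), sum(q)
    return sum(math.sqrt((a / sp) * (b / sq)) for a, b in zip(p, q))

def retrieve_top_k(query, database, k=2):
    """Rank database entries by BC similarity to the query (highest first)."""
    scored = [(bhattacharyya(query, feats), image_id) for image_id, feats in database.items()]
    scored.sort(key=lambda t: t[0], reverse=True)
    return [image_id for _, image_id in scored[:k]]

# Usage with three hypothetical stored feature histograms.
db = {"img_a": [4, 4, 2], "img_b": [1, 1, 8], "img_c": [5, 4, 1]}
top2 = retrieve_top_k([5, 4, 1], db, k=2)
```

Here `img_c` is identical to the query (BC = 1) and `img_a` is the next closest (BC ≈ 0.99), so both are returned ahead of `img_b` (BC ≈ 0.71).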

Computational efficiency of the proposed model

Table 13 presents the computational efficiency of the models in terms of accuracy, training time, inference time, model size, and scalability.

The proposed model outperforms all existing models on the three datasets, achieving the highest accuracies of 99.54%, 98.60%, and 99.67%. It demonstrates strong scalability and significantly reduces training and inference time, which makes it well suited to real-time clinical applications. Additionally, its small model size (20 MB) and the lowest FLOPs (0.7) offer an efficient balance between performance and computational demand. The proposed model requires fewer floating-point operations (FLOPs) and less memory than deep models such as DenseNet 201 and MobileNetV2, resulting in lower energy consumption and faster deployment on edge devices.

Scalability of the proposed model

The proposed model demonstrates strong scalability and robustness to noise, making it well suited to real-world medical imaging environments. Scalability is achieved by integrating HWHT and SCARO, which efficiently decompose the images and reduce the feature dimensionality, so that large amounts of data can be handled with minimal computational overhead. This parallel, optimized architecture maintains fast and consistent performance as the dataset size increases.

Robustness against noisy and low quality inputs

To assess the robustness of the proposed model under realistic imaging challenges, it was evaluated on modified datasets containing artificially induced noise, low resolution, and spatial misalignment. To explicitly evaluate robustness when pre-processing fails, controlled experiments were carried out in which the standard enhancement procedures, such as normalization, denoising, and contrast adjustment, were bypassed.

Three test scenarios were created from the COVID-19 chest X-ray dataset: raw grayscale images without any normalization, images corrupted with additive Gaussian noise (\(\sigma = 0.03\)), and images with reduced resolution (downscaled by 50% and upscaled back using bilinear interpolation). The proposed model was tested without altering any internal hyperparameters. It retained high retrieval accuracy, achieving 97.46% on unnormalized images, 96.85% under noisy conditions, and 96.12% on resolution-degraded inputs. These results suggest that the hybrid HWHT–GLCM feature pipeline, combined with the attention-enhanced BCGAT classifier, preserves essential semantic and spatial relationships even when typical pre-processing pipelines are absent. Such robustness is extremely important in real-world clinical situations, where image quality and consistency cannot always be guaranteed.
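The two synthetic degradations can be reproduced with a short NumPy sketch, assuming grayscale images scaled to \([0, 1]\). The noise is added with the stated \(\sigma = 0.03\) and clipped back to the valid range, and the resolution test downscales by 50% and upscales back with bilinear interpolation. The resize uses an align-corners sampling grid, which is an assumption; libraries such as OpenCV or Pillow use a half-pixel convention and give slightly different values. Function names are illustrative.

```python
import numpy as np

def add_gaussian_noise(img, sigma=0.03, rng=None):
    """Additive Gaussian noise on an image in [0, 1], clipped back to that range."""
    rng = np.random.default_rng() if rng is None else rng
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def bilinear_resize(img, out_h, out_w):
    """Bilinear interpolation of a 2D array on an align-corners sampling grid."""
    in_h, in_w = img.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy

def degrade_resolution(img, factor=0.5):
    """Downscale by `factor`, then upscale back to the original size."""
    h, w = img.shape
    small = bilinear_resize(img, max(1, round(h * factor)), max(1, round(w * factor)))
    return bilinear_resize(small, h, w)
```

Fine texture such as thin rib edges is lost in the down-and-up round trip, which is what makes this scenario a meaningful test for GLCM-based features.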

Clinical significance of accurate image retrieval in diagnostic scenarios

Accurate medical image retrieval plays a critical role in clinical diagnostics, especially in high-stakes scenarios such as differentiating between viral and bacterial pneumonia or between early-stage and advanced COVID-19. By retrieving clinically relevant, similar historical cases, radiologists can compare disease progression patterns, validate ambiguous findings, and make better-informed decisions. This is particularly valuable when access to expert opinion is limited or when rare pathologies are involved. Because the proposed system retrieves diagnostically meaningful images based on content rather than metadata, it can improve diagnostic precision, reduce inter-observer variability, and support faster evidence-based treatment planning, thereby enhancing the quality and efficiency of clinical workflows.

Real-world challenges

The proposed system is designed with scalability and modular integration in mind, making it well suited to deployment within hospital PACS environments. Its lightweight architecture, with a compact model size of 20 MB and low computational demand, enables smooth integration without overburdening existing infrastructure. The retrieval and classification components can be containerized and deployed as microservices that run alongside existing DICOM infrastructure. The transformer-based retrieval mechanism, integrated through a standard API, enables real-time case-based comparison during radiological review. Future work will extend DICOM compatibility to ensure seamless integration with electronic health records. Patient privacy and data security are paramount in medical image analysis systems. The proposed system can be deployed on premises within hospital firewalls, eliminating the need to transmit sensitive patient images to external servers. All images will be anonymized before retrieval processing, and secure encryption protocols will be applied to protect data at rest and in transit. Future enhancements will consider federated learning for secure collaborative training across institutions without sharing raw data, further strengthening privacy and reducing the risks of data centralization.

To assess the feasibility of implementing the proposed CBIR framework in a real-world clinical context, the model was benchmarked on a standard workstation (Intel Core i7 CPU, 16 GB RAM, NVIDIA RTX 3060 GPU) and key operational metrics were evaluated. The quantized model occupies only 20 MB, and its peak run-time memory footprint is below 220 MB, so it can be integrated into hospital PACS. The average inference time for a single image was 43 ms, and the total retrieval time per query, including BC-based feature comparison, was 97 ms. Under batch-processing load, the system achieved a throughput of 10–12 queries per second. These deployment results indicate that the proposed model combines high diagnostic accuracy with practical applicability in real-time conditions. Its lightweight design does not require substantial computing resources, which is important in resource-limited healthcare facilities where rapid and precise decision support is needed.
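The reported latency and throughput figures can be related with simple arithmetic. The sketch assumes that the 97 ms per-query retrieval time includes the 43 ms inference step, which the text implies but does not state explicitly.

```python
per_query_ms = 97  # reported end-to-end retrieval time per query
inference_ms = 43  # reported single-image inference time

sequential_qps = 1000 / per_query_ms  # queries per second if handled one at a time
comparison_ms = per_query_ms - inference_ms  # time left for BC matching (assumed)

print(round(sequential_qps, 1), comparison_ms)  # 10.3 54
```

Strictly sequential processing yields about 10.3 queries per second, matching the lower end of the reported 10–12 queries per second; batching is what allows the higher end.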

Additional comparative analysis

Table 14 shows the comparative analysis for the COVID-19 chest X-ray image dataset40. Table 15 presents a comparison on the National Institutes of Health (NIH Chest X-ray-8) dataset41. Table 16 compares BC with various existing similarity measures. Table 17 compares the proposed approach with existing advanced transformer models.

Discussion

In this section, the efficiency of the classification model in the CBIR framework for X-ray images is discussed, and the existing models are compared with the proposed model. Existing global attention models are complex, which makes their parameters difficult to tune; they may overfit or underfit, and they scale poorly to larger datasets. Their limited flexibility degrades classification performance. The comparison of the metrics highlights the limitations of the existing models: for example, DenseNet attains lower accuracy owing to inefficient memory use and the vanishing gradient problem, and its training and optimization can be difficult.

Furthermore, as the number of layers increases, so does the number of feature maps, leading to high computational cost. The lower accuracy and recall of the existing MobileNet model are attributed to the dataset size and its deep convolutional layers, which also incur high computational cost; the model is unable to extract high-level features and is not suitable for real-time environments. The existing Bi-LSTM, GRU, and RNN models also obtained lower accuracy and precision and higher error values. The GRU model provides reduced accuracy owing to noisy data sequences and fitting problems during optimization, and its poor interpretability further limits image categorization performance. The proposed model, by contrast, improves accuracy and efficacy by attending to contextual information. The deep architectures of conventional models may consume more memory, which lowers accuracy and hinders real-time retrieval, whereas the proposed approach effectively enhances performance. As a result, the conventional DL models show considerably worse results than the proposed model. Although BiLSTM, RNN, GRU, and DenseNet-169 were used as comparison models for spatial feature extraction, extracting spatial information from X-ray images remains challenging for them.

In contrast, the classic DenseNet-169 model performs well in feature extraction, but its dense connections may be burdensome and cause performance degradation. Similarly, the lightweight design of MobileNet may hamper its ability to extract subtle and discriminative medical information from X-rays, which may impair retrieval accuracy. BiLSTM, RNN, and GRU suffer from vanishing and exploding gradient problems and are not designed for spatial feature extraction. Furthermore, these models lose their capacity to model long-term dependencies, which makes them less effective for the CBMIR task. By combining context awareness, statistical distance measurement, and attention mechanisms, the proposed BCGAT model increases classification accuracy. The Bhattacharyya distance has been incorporated to improve class separability and reduce overlap. The context performance-aware method allows the model to focus on the most discriminative features by adjusting the attention weights according to the relevance of contextual information and performance.

Additionally, the global attention module captures long-range dependencies and relationships across the image. Consequently, the combination of these techniques enables more reliable and precise classification. Table 18 compares the performance with current methods.

Application of image retrieval based on X-ray images

Chest X-ray (CXR) images are acquired on various hardware platforms, and CXR applications must adapt to variations ranging from adult to pediatric CXRs and to digitally reconstructed radiographs. Commercial medical imaging products are available for CXR; however, image-based commercial products address multiple abnormalities, with different labels and many reported findings. Two further CXR products have been designed, one for identifying and reporting healthy images and another for visualizing interval changes. In CXR, image registration determines the geometric transformation that spatially aligns one image with another. Depending on the clinical objective, image registration can highlight changes between retrieved images. Table 19 summarizes the key findings of the proposed framework.

Conclusion

In this paper, a novel Bhattacharya context performance-aware global attention-based transformer (BCGAT) is presented for more accurate similarity computation. The input images were collected from the COVID-19 Chest X-ray image dataset and the Chest X-ray (pneumonia) dataset. The collected X-ray images are pre-processed before being stored in a medical database for analysis and retrieval. The hybridized Wavelet-Hadamard Transform (HWHT) was used to obtain both low- and high-frequency details, the Gray Level Co-occurrence Matrix was used to extract important features, and Sine chaos-based artificial rabbit optimization (SCARO) was used to minimize feature complexity. BC is used to compute the similarity between the query image and the stored images. On the COVID-19 chest X-ray image dataset, the proposed method achieved an accuracy of 99.5%, a precision of 97.1%, a recall of 97.1%, an F1-score of 97.1%, and an RMSE of 0.0963. On the chest X-ray image (pneumonia) dataset, it achieved an accuracy of 98.60%, a precision of 98.49%, a recall of 97.40%, and an F1-score of 98.50%. On the NIH chest X-ray dataset, the accuracy is 99.67%.

Limitations and future work

The proposed system is efficient and accurate for image retrieval tasks. However, it is designed only for 2D radiographic images and does not handle modalities such as CT and MRI, which contain volumetric, multi-slice data. Additionally, the model relies on hand-crafted texture features and does not fully exploit multimodal data sources such as clinical notes. Future work will focus on extending the framework to 3D imaging modalities and integrating AI models that combine vision transformers with natural language processing (NLP) for multimodal diagnosis. Enhancements such as federated learning and secure edge deployment will also be explored to ensure patient privacy. Moreover, real-time integration with hospital PACS and support for the DICOM standard will be prioritized for clinical translation.

Data availability

Data supporting this study are publicly available on the following sites. https://www.kaggle.com/datasets/prashant268/chest-xray-covid19-pneumonia; https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia/data.

References

Mohammed, M. A., Oraibi, Z. A. & Hussain, M. A. Content-based image retrieval using hard voting ensemble method of Inception, Xception, and Mobilenet architectures. Iraqi J. Electr. Electron. Eng. 19(2) (2023).

Sweeta, J. A. & Sivagami, B. Review on topical content-based image retrieval systems in the medical realm. In 7th International Conference on Recent Innovations in Computer and Communication (ICRICC 23). https://doi.org/10.59544/ODBB5333/ICRICC23P16 (2023).

Shetty, R., Bhat, V. S. & Pujari, J. Content-based medical image retrieval using deep learning-based features and hybrid meta-heuristic optimization. Biomed. Signal Process. Control 92, 106069 (2024).

Kapadia, M. R. & Paunwala, C. N. Content-based image retrieval techniques and their applications in medical science. In Biomedical Signal and Image Processing with Artificial Intelligence 123–151 (2022).

Srivastava, D. et al. Content-based image retrieval: A survey on local and global features selection, extraction, representation and evaluation parameters. IEEE Access 11, 95410–95431. https://doi.org/10.1109/ACCESS.2023.3308911 (2023).

Rout, N. K., Ahirwal, M. K. & Atulkar, M. Content-based medical image retrieval system for skin melanoma diagnosis based on optimized pair-wise comparison approach. J. Digit. Imaging 36(1), 45–58 (2023).

Tabatabaei, Z. et al. WWFedCBMIR: World-wide federated content-based medical image retrieval. Bioengineering 10(10), 1144 (2023).

Muraki, H., Nishimaki, K., Tobari, S., Oishi, K. & Iyatomi, H. Isometric feature embedding for content-based image retrieval. In 2024 58th Annual Conference on Information Sciences and Systems (CISS) Vol. 13, 1–6 (2024).

Arora, N., Kakde, A. & Sharma, S. C. An optimal approach for content-based image retrieval using deep learning on COVID-19 and pneumonia X-ray Images. Int. J. Syst. Assur. Eng. Manag. 14, 246–255 (2023).

Tizhoosh, H. R. On validation of search & retrieval of tissue images in digital pathology. https://doi.org/10.48550/arXiv.2408.01570 (2024).

Chen, Y. et al. Performance evaluation of attention-deep hashing based medical image retrieval in brain MRI datasets. J. Radiat. Res. Appl. Sci. 17(3), 100968 (2024).

Sadik, M. J., Samsudin, N. A. & Bin Ahmad, E. F. Balancing privacy and performance: Exploring encryption and quantization in content-based image retrieval systems. Int. J. Adv. Comput. Sci. Appl. 15(10), 093 (2024).

Tian, M., Zhang, Y., Zhang, Y., Xiao, X. & Wen, W. A privacy-preserving image retrieval scheme with access control based on searchable encryption in media cloud. Cybersecurity 7(1), 22 (2024).

Mahbod, A., Saeidi, N., Hatamikia, S. & Woitek, R. Evaluating pre-trained convolutional neural networks and foundation models as feature extractors for content-based medical image retrieval. Eng. Appl. Artif. Intell. 150, 110571 (2025).

Ali, M. Content based medical image retrieval: A deep learning approach. Doctoral dissertation, St. Mary’s University.

Battur, R. & Narayana, J. Classification of medical X-ray images using supervised and unsupervised learning approaches. Indones. J. Electr. Eng. Comput. Sci. 30(3), 1713–1721 (2023).

Wickstrøm, K. K. et al. A clinically motivated self-supervised approach for content-based image retrieval of CT liver images. Comput. Med. Imaging Graph. 107, 102239 (2023).

Manna, A., Sista, R. & Sheet, D. Deep neural hashing for content-based medical image retrieval: A survey. Comput. Biol. Med. 196, 110547. https://doi.org/10.1016/j.compbiomed.2025.110547 (2025).

Lee, H. H. et al. Region-based contrastive pretraining for medical image retrieval with anatomic query. arXiv preprint arXiv:2305.05598 (2023).

Franken, G. Applications of content-based image retrieval in financial trading. Master’s thesis, Eindhoven University of Technology (2023).

Li, M., Jung, Y., Fulham, M. & Kim, J. Importance-aware 3D volume visualization for medical content-based image retrieval: a preliminary study. Virtual Real. Intell. Hardw. 6(1), 71–81 (2024).

Tuyet, V. T., Binh, N. T., Quoc, N. K. & Khare, A. Content based medical image retrieval based on salient regions combined with deep learning. Mob. Netw. Appl. 26(3), 1300–1310 (2021).

Sivakumar, M., Kumar, N. M. & Karthikeyan, N. An efficient deep learning-based content-based image retrieval framework. Comput. Syst. Sci. Eng. 43(2), 683–700 (2022).

Karthik, K. & Kamath, S. S. A deep neural network model for content-based medical image retrieval with multi-view classification. Vis. Comput. 37(7), 1837–1850 (2021).

Dubey, S. R. A decade survey of content based image retrieval using deep learning. IEEE Trans. Circuits Syst. Video Technol. 32(5), 2687–2704 (2021).

Agrawal, S., Chowdhary, A., Agarwala, S., Mayya, V. & Kamath, S. S. Content-based medical image retrieval system for lung diseases using deep CNNs. Int. J. Inf. Technol. 14(7), 3619–3627 (2022).

Rashad, M., Afifi, I. & Abdelfatah, M. RbQE: An efficient method for content-based medical image retrieval based on query expansion. J. Digit. Imaging 36(3), 1248–1261 (2023).

Issaoui, I. et al. Archimedes optimization algorithm with deep learning assisted content-based image retrieval in healthcare sector. IEEE Access 12, 29768–29777 (2024).

Ahmed, A. & Malebary, S. J. Query expansion based on top-ranked images for content-based medical image retrieval. IEEE Access 8, 194541–194550 (2020).

Cui, L. & Liu, M. An intelligent deep hash coding network for content-based medical image retrieval for healthcare applications. Egypt. Inform. J. 27, 100499 (2024).

Padate, R., Gupta, A., Chakrabarti, P. & Sharma, A. An enhanced approach to content-based image retrieval using Harris Hawks optimization and automated image captioning. In 2024 8th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC) 1816–1824 (IEEE, 2024).

Suresh Kumar, J. S. & Celestin Vigila, S. M. Autoencoder and CNN for content-based retrieval of multimodal medical images. Int. J. Adv. Comput. Sci. Appl. 15(4), 281 (2024).

Helal, S. & Salem, N. A hybrid watermarking scheme using Walsh-Hadamard transform and SVD. Procedia Comput. Sci. 194, 246–254 (2021).

Kumar, D. Feature extraction and selection of kidney ultrasound images using GLCM and PCA. Procedia Comput. Sci. 167, 1722–1731 (2020).

Wang, L., Cao, Q., Zhang, Z., Mirjalili, S. & Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 114, 105082 (2022).

https://www.kaggle.com/datasets/prashant268/chest-xray-covid19-pneumonia.

https://www.kaggle.com/datasets/paultimothymooney/chest-xray-pneumonia/data.

AlMohimeed, A. et al. Diagnosis of COVID-19 using chest X-ray images and disease symptoms based on stacking ensemble deep learning. Diagnostics 13(11), 1968 (2023).

Dhiman, G., Vinoth Kumar, V., Kaur, A. & Sharma, A. DON: Deep learning and optimization-based framework for detection of novel coronavirus disease using X-ray images. Interdiscip. Sci. Comput. Life Sci. 13, 260–272 (2021).

Iqbal, S. et al. Fusion of textural and visual information for medical image modality retrieval using deep learning-based feature engineering. IEEE Access 11, 93238–93253 (2023).

Chowdary, G. J. & Yin, Z. Med-Former: A transformer based architecture for medical image classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention 448–457 (Springer, 2024).

Shao, Z. et al. TransMIL: Transformer based correlated multiple instance learning for whole slide image classification. Adv. Neural Inf. Process. Syst. 34, 2136–2147 (2021).

Author information


Contributions

All authors contributed to the study conception and design. All authors commented on the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Saranya, E., Chinnadurai, M. Medical application driven content based medical image retrieval system for enhanced analysis of X-ray images. Sci Rep 15, 29115 (2025). https://doi.org/10.1038/s41598-025-14282-8


DOI: https://doi.org/10.1038/s41598-025-14282-8

