Abstract

Alzheimer’s disease (AD) poses significant challenges for the elderly, leading to cognitive decline, social isolation, and lower quality of life. Current interventions often require cumbersome wearable devices or camera-based monitoring that may raise privacy concerns, and these issues have not been fully addressed in previous work. To fill this gap, this research proposes a new non-invasive framework that combines Virtual Reality (VR), voice recognition, and Artificial Intelligence (AI) to act as a supportive system for people with AD. The system provides tailored cognitive stimulation and companionship through immersive VR scenarios, memory games, virtual trips, and an AI assistant, integrated in a single platform. AI-based assessment of the patient is employed to make the experience more relevant and helpful to that patient. Voice recognition provides a simple, easy-to-use interface. Security measures include access controls, encryption, and continuous monitoring of patient data stored in the cloud. An initial study involving patients with Alzheimer’s and dementia, their families, and clinicians has yielded promising results. Participants reported heightened interest, better quality of life, a reduced sense of isolation, and improved cognitive functioning, which meets one of our goals: improving patients’ well-being in mental healthcare. The research indicates a significant step forward in enhancing the quality of support for both cognitive function and social interaction for older adults with AD and dementia. Compared with existing systems, our integrated framework makes additional contributions to AD care in dynamic cognitive adaptation, bilingual interaction, and secure real-time personalization.

Introduction

Alzheimer’s disease (AD) is a progressive brain disorder characterized by dementia, which gradually erodes memory, cognitive functions, and the ability to carry out daily tasks1. It typically affects individuals later in life, with symptoms emerging in their senior years or later stages of adulthood. AD and dementia have become significant challenges in global healthcare, affecting millions worldwide. Approximately 22% of individuals aged 50 and older deal with AD and dementia, with dementia ranking as the fifth leading cause of death globally2. As AD progresses, individuals may experience symptoms ranging from memory loss and cognitive issues to hallucinations, paranoia, and impulsive behavior3. In severe cases, plaques and tangles in the brain lead to significant brain tissue reduction, resulting in complete dependence on others for care4. Furthermore, the impact of AD extends beyond the individual to their families and caregivers, who face immense emotional and physical pressure in managing care and preserving their well-being. Existing therapeutic approaches, including traditional pharmacological treatments and cognitive therapies, have shown limited success in halting disease progression or significantly improving the quality of life for patients5. VR therapy has been tried as a way of enhancing cognition, but current solutions are limited in their flexibility, individualization, and feedback capabilities, and therefore lack the sophistication needed to address the diverse needs of individuals with AD effectively. Moreover, the existing studies on VR therapy for AD are mostly small-scale, and more large-scale trials are needed to fully understand its effectiveness and applicability in diverse care settings6,7. Many current VR-based interventions are passive in nature, offering little more than stereotypical environments and limited opportunities for patients to engage in meaningful cognitive tasks or social interactions6,8,9,10,11,12,13. Hence, a significant gap in therapy has emerged that needs to be filled.

To bridge the gaps mentioned above, our study introduces a state-of-the-art VR application integrated with AI, designed to revolutionize therapy for people with AD and dementia. While VR therapies have shown some potential in enhancing cognition, they are often passive, relying on generic environments that provide limited interaction, and they lack the adaptability required to meet the highly individualized needs of people with AD. Moreover, current solutions frequently fall short in offering real-time feedback and emotional engagement based on the patient’s behavior. Additionally, many existing tools lack proper data security and privacy safeguards, putting sensitive patient information at risk. These limitations reduce the potential impact of VR on long-term cognitive and emotional well-being. Furthermore, most studies conducted in this field remain small in scale, limiting generalizability and the understanding of VR’s effectiveness across diverse populations and healthcare settings.

Our proposed solution integrates multi-modal inputs such as voice, gesture, and task performance into a single platform that dynamically adjusts the therapy content to the patient’s behavior in real time, which differs from existing VR/AR systems. This personalized adaptability is combined with a bilingual interface and emotional AI responses, which represents a novel approach to VR-based cognitive care. In addition, we have customized state-of-the-art technologies within the framework, such as ChatGPT and the Azure Voice SDK, to set up logic that supports dementia-specific emotional responses, bilingual interactions, and fallback behavior.

We built upon VR, voice recognition, and AI to create an adaptive, intelligent, and secure support system (see Fig. 3), a combination that has not been reported in previous systems (see Table 2).

In addition, our proposed system is designed with a modular content architecture that allows virtual scenarios to be personalized to the preferences of each patient. The caregiver can record patient preferences such as the language, the face of the AI companion, or cultural landmarks. These personalization criteria are intended to boost engagement and memory recall specifically for Alzheimer’s care. In contrast with traditional VR therapies, the VR application we propose here has an AI companion in the form of an interactive 3D avatar that can hold meaningful conversations with users and offer emotional support, cognitive stimulation, and a sense of belonging. The AI companion is capable of understanding the subject’s speech, memories, and emotions, which creates a more authentic interaction that can help fight loneliness and prevent cognitive decline. The VR experience is more active than just watching a screen: it involves memory exercises that each patient can do independently, depending on their cognitive status. This approach is more effective in preventing cognitive decline than current VR therapies that offer generic content. The system also comprises 360-degree virtual tourism, which offers a sensory environment that can help reduce anxiety and enhance emotional well-being. To protect patient information, the system uses encryption, access controls, and cloud storage. By bridging the gaps in existing VR therapies, it enhances cognitive engagement and fosters independence and social connection, presenting a groundbreaking advancement in Alzheimer’s care that holds the potential to transform patient outcomes and caregiver experiences.

The objective of this study is to explore how a VR-AI based system incorporating AI-powered companionship, bilingual voice interaction, and adaptive therapy can improve the cognitive engagement and emotional well-being of Alzheimer’s and dementia patients. The research questions and hypotheses addressed in this investigation are set out in Table 1; they are intended to deepen the investigation and bridge the gaps in existing VR therapies.

Unlike existing VR-based solutions, which focus on cognitive exercises with limited virtual experiences, our multimodal system integrates AI-powered companionship, multi-language voice recognition, and robust security measures to ensure a personalized, engaging, and secure therapeutic experience for patients with Alzheimer’s, while involving associated parties such as healthcare professionals and caregivers. While studies such as Yali et al.13 explored VR exercise interventions and Appel et al.14 examined VR for dementia care, these approaches lack real-time AI interaction and robust patient data security; our solution provides both.

The main contribution of this paper is a new approach to AI-powered VR therapy for Alzheimer’s patients, implemented as a multimodal framework that integrates adaptive cognitive stimulation, real-time AI companionship, and secure voice-based interaction, as detailed below:

1. A real-time AI-powered companion that provides personalized social interaction and cognitive engagement (RQ2, HP2).
2. An adaptive cognitive therapy module that dynamically adjusts task difficulty based on user performance (RQ1, HP1).
3. Hands-free accessible interactions are enabled by bilingual voice recognition (English & Arabic) (RQ2, HP2).
4. Robust security features such as AES encryption, IDS, and RBAC to guarantee secure patient data management (RQ4, HP4).
5. A special virtual tourism experience with meditation guidance, which is good for relaxation and emotional well-being (RQ3, HP3).

This investigation starts with a literature review for the systematic gathering and analysis of data, and then designs the specifications against the identified requirements in order to develop the new integrated VR-AI powered application.

The rest of the paper is organized as follows: Section “Background and literature review” provides the background and literature review for context, followed by Section “Methodology”, which describes the methodology, covering the requirements gathering process and initial findings. Section “The proposed system” elaborates on the proposed system in terms of its architecture, features, and design. Section “Implementation and testing” presents implementation and testing details. Results and discussions about the system impact are presented in Section “Results and discussion”. Finally, Section “Evaluation” concludes the paper, mentioning future work where enhancements could be made.

Background and literature review

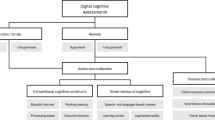

VR integrated with AI is currently a highly innovative research area to explore. Few studies have been reported since 1998, as shown in Fig. 1. From 1998 to 2015, the area was very quiet, with only 1 or 2 publications annually. From 2022 to 2025, more articles became available, with a maximum of 40 per year. The research outputs were mainly reported in three subject areas, i.e. Medicine and Dentistry (101 articles), Neuroscience (57 articles), and Biochemistry, Genetics and Molecular Biology (28 articles); Computer Science is not yet listed, according to the academic search engine sciencedirect.com. Figure 1 shows that this has been a challenging area both historically and currently, which implies potential for innovation and makes the area worth working in.

To answer the research questions set up in Section “Introduction”, we take up the challenge of researching this interdisciplinary area and ultimately seek a solution that benefits society. We believe that:

1. VR with AI will change the way that human beings are able to interact with the world around them by placing the user in a realistic 360-degree virtual environment5.
2. VR environment is recreated with the help of devices such as VR headsets and controllers to provide a real-life feel of the experience15.
3. VR with AI offers potential solutions to various health-related problems as well as being a source of innovative patient care16.
4. VR interface enables the user to control the application through simple voice commands in the integration of speech recognition APIs. This is especially useful for users who are not familiar with VR devices16.

We start by looking at Unity, a key VR development tool that helps bridge an idea and its implementation and is designed to be easy to use8. It has applications across various sectors, including entertainment, learning and, most importantly, medical care8. Unity supports regular coding, so that our new idea can be implemented in the VR environment. In addition, Unity supports immersive experiences on different VR platforms, providing realistic graphics, functionalities, and optimization settings8. Unity’s spatial sound system enhances the VR environment by providing modifiable 3D sound, which increases the user’s participation in the virtual environment17. Furthermore, Unity offers a rich library of VR-related assets that can speed up implementation, shorten application development time, and make it easier to build VR settings, all of which contribute to the advancement of healthcare applications18, the main aim of our research.

Regarding the use of VR, Biocca6 delved into the challenges and considerations in developing VR technology, emphasizing the need for immersive environments and effective speech recognition. The study addressed the need for flexible models to assist in speech recognition and understanding, the use of neural network models for disambiguating speech, and the development of immersive virtual environments. Furthermore, Campo-Prieto et al.9 investigated the use of VR in seniors’ rehabilitation, highlighting its potential to improve physical and cognitive functions. The data set used was not discussed in the paper. Although there is limited research in this area, the study found preliminary evidence that supports the effectiveness of VR technologies in improving the health and well-being of the elderly. Appel et al.10 performed a systematic review of the use of VR in the management of persons with dementia. The study aims to map the current state of peer-reviewed research on VR-based applications aimed at enhancing different aspects of the quality of life, emotional state, social interactions, and functional status. The review evaluates the success of these interventions, the characteristics of the study samples, the ways in which interventions are administered, the experimental methods, and the technical aspects of the VR systems. Their paper does not focus on a particular technical architecture of the VR systems but instead reviews different types of VR techniques employed in the included studies. Such setups generally entail the use of hardware including Head Mounted Displays (HMDs), haptic devices, and headphones to offer the user a virtual environment. The software ranges from graphic representations to video clips, and user involvement ranges from passively observing or receiving information to following instructions and engaging in task-based activities.

Huang et al.11 explored the long-term effects of immersive virtual reality on different aspects of users’ lives, such as the emotional, cognitive, and social aspects. The paper aims to offer a general view of the impacts that users may encounter when they are exposed to VR environments for longer durations. It discusses different types of VR setups, including HMDs, haptic devices, and headphones, and various types of software, from rendered graphics to 360-degree videos. The level of participation ranges from the passive observation of events to active engagement in task performance.

Yali et al.12 carried out a systematic review to examine the effects of VR exercise interventions in patients with Alzheimer’s disease. Their research was aimed at determining how VR technology can be employed in the rehabilitation and management of people with AD by enhancing their cognitive and physical capabilities. The interventions explored by Yali et al.13 comprised different levels of VR technology, from non-immersive to semi-immersive. The VR setups applied in the studies include CyberCycle, stationary bikes connected with video screens, and commercial VR gaming systems such as Dance Revise, Microsoft Xbox Kinect, Nintendo Switch and Wii Sports. These systems were selected because they were able to provide realistic and interesting exercise regimes for AD patients. The review presented results for eight studies with a total of 362 participants. According to Yali et al.12, virtual reality interventions can improve AD patients’ cognitive and physical function. Specifically, VR exercises were shown to enhance balance, motor-functional cognition, and overall cognitive function. The engaging nature of VR technology helped maintain participants’ motivation and adherence to the exercise programs. While some adverse events were noted, the overall safety of VR exercises for AD patients was confirmed.

In addition, Campo-Prieto et al.9 investigated the use of VR technology in rehabilitation and physical skills training for seniors. The study aimed to understand how VR can improve functional independence, physical activity, and quality of life in older adults through therapeutic exercise programs. The article addressed the problem of the limited number of published studies on immersive VR (IVR) applications in the general population and the scarcity of studies in the field of geriatrics.

Lastly, the key findings from Appel et al.10 include insights into publication trends, with most studies being published in the last two years, predominantly from middle-to-high-income countries. Social and functional outcomes were also reported but less frequently. The study samples included people with dementia (PwD) with varying degrees of severity and types of dementia, and the inclusion criteria and study designs varied widely. Intervention administration protocols differed in session frequency, duration, and settings, such as hospitals, long-term care, and personal residences. There was a lack of standardized experimental methods across studies, with many relying on anecdotal evidence and small sample sizes. The degree of immersiveness and sense of presence in VR experiences were influenced by the hardware and software features used.

In addition, Tan et al.19 introduced CAVIRE (Cognitive Assessment using Virtual Reality), an immersive and automated VR system designed to assess six cognitive domains (complex attention, executive function, language, learning and memory, perceptual-motor function, and social cognition) in older adults, particularly for early detection of cognitive impairment in primary care settings. Unlike traditional paper-and-pencil tests like MMSE and MoCA, CAVIRE offers a more ecologically valid, engaging, and comprehensive screening tool. The system uses an HTC Vive Pro HMD, Lighthouse sensors, Leap Motion for hand tracking, and a Rode microphone for voice recognition, and is built on the Unity engine with an automated scoring algorithm. Participants in the study, aged 65–84, were assessed using VR tasks simulating daily activities. Results showed that cognitively healthy participants performed better than impaired individuals, with CAVIRE demonstrating a moderate correlation with MoCA scores. The system’s ability to predict cognitive impairment was supported by ROC curve analysis (AUC 0.7267). Participants found CAVIRE user-friendly, with no dropout due to VR-related symptoms. The paper suggests that machine learning could enhance CAVIRE by personalizing tasks and improving predictive accuracy.

A study conducted by Flynn et al.20 investigated the feasibility of utilizing virtual reality (VR) technologies to engage people with dementia (PWD) in a simulated outdoor park environment, addressing the limitations of traditional behavioral assessment methods. It aims to create an ecologically valid platform for evaluating the functional capabilities and well-being of PWD. The study is based on a small data set of direct feedback from six people with dementia and their caregivers, who described their experiences of using VR. The results show that participants felt a sense of presence in the virtual world, perceived the objects as real, and were able to navigate the environment comfortably with a joystick, with no worsening of simulator sickness symptoms and no deterioration in psychological or physical state. The research used VR technology in a user-centered approach, together with specific assessment tools such as the DVRuse questionnaire and physiological monitoring. However, it did not include features like machine learning or deep learning algorithms but instead focused on qualitative evaluations of the users’ interactions and experiences. In general, the results of the study show that VR can be applied as a fun and efficient approach to improving the quality of life of dementia patients.

To integrate AI into VR, we looked at state-of-the-art AI techniques, e.g. convolutional neural networks (CNNs) and time series analysis, which support the diagnosis and testing of dementia and Alzheimer’s disease in the healthcare sector21. These algorithms analyze patient data, including brain scans and other clinical data, to look for trends in the patient’s cognitive dysfunction21. Blender is a powerful 3D modelling and animation tool used to generate realistic 3D avatars that can serve as AI guides or companions in a virtual reality healthcare environment, improving the care received by patients through interactive help and support14. Backend functionalities such as authentication, database management, and storage are integrated through Firebase services22. The packages that enable these services are Firebase Core, Cloud Firestore, Firebase Auth, and Firebase Storage22. This research uses this technology to protect the confidentiality and availability of patient data22. Security measures such as AES encryption, Intrusion Detection Systems (IDS), Two-Factor Authentication (2FA), access controls, and Role-Based Access Control (RBAC) can also be implemented to protect patient data13.

We also looked at studies not only in AI and VR, but also in cybersecurity and voice recognition, addressing Alzheimer’s disease (AD) and dementia. Lee and Yoon7 delved into AI technology in healthcare, focusing on its application for cognitive therapeutic purposes. They suggested strategies to enhance the efficiency of patient treatment by augmenting medical staff in patient diagnosis, treatment, and operational activities in hospitals7. The datasets used included real-world cases and literature review examples of AI applications in healthcare, as well as insights from healthcare professionals and industry experts7. Their results indicate that many hospitals using VR-enabled systems improved patient care and operational efficiency, along with improved disease diagnosis and enhanced patient engagement, which in turn increased the operational efficiency of ongoing medical treatments.

Table 2 presents a critical review of the existing literature between 2022 and 2023, which demonstrates the challenges and gaps by showing which required features are present or missing in technologies for healthcare and rehabilitation. Within the table, “×” means the function is not available, and “✓” means the function is implemented or partially implemented.

The studies reviewed covered the use of AI technologies in healthcare, the application of AI in electronic systems, issues in VR, and the efficacy of VR and cognitive training10,12,19,20. The results show how these technologies can greatly improve the quality of patient care, operational performance, and the user experience. Each study shows how these technologies can improve the healthcare sector and patients’ lives, especially those of senior citizens. However, the limitations identified across the studies underscore the need for more empirical work, particularly on the long-term impacts and the generalizability of the findings to other people and contexts. These findings form a useful basis for future work and the incorporation of advanced technologies into healthcare. The detailed information can be found in Appendix 1.

While the studies reviewed in Table 2 demonstrate the promising potential of advanced technologies like VR, AI, and speech recognition in healthcare, several limitations persist, e.g. a lack of long-term data and inconsistencies in experimental methods, which make it difficult to generalize results across diverse populations. Some systems depend on complex hardware setups, such as HTC Vive Pro HMDs and Leap Motion, making them inaccessible to most people19,20. Moreover, while some applications of VR have been successful in enhancing cognition and physical activity, they do not integrate fully functional emotional support features, e.g. AI companions or immersive and interactive VR tours, which are very important in the care of patients with dementia or Alzheimer’s disease. Furthermore, some systems lack personalized and adaptive elements based on machine learning that could increase the accuracy and timeliness of interventions (see Appendix 1).

Methodology

The research starts with a literature review, which lays the ground for the study by identifying existing gaps and relevant technologies. Following the literature review, the next phase was requirements gathering, an important phase for the systematic identification, recording, and proper understanding of stakeholders’ needs, limits, and expectations. To achieve this, we carried out a broad survey targeting the general public, caregivers, and medical experts. The survey was conducted using a Google Forms questionnaire with 26 questions: 14 multiple-choice, 4 open-ended, 2 scale, 2 yes or no, and 4 questions related to specific features such as security, AI companion, Alzheimer’s disease testing, and virtual tourism. The survey was distributed through various channels, including online platforms (e.g., WhatsApp and Gmail) and healthcare facilities, and was completed by 102 participants.

The analysis of this process produced critical insights, as evidenced by statistics and expert feedback. More than 60% of participants acknowledged the importance of advanced VR and AI technologies for improving the quality of life for senior citizens with Alzheimer’s and Dementia, and over 73.5% expressed openness to employing AI systems for assistance, as presented in Fig. 2a,b. This comprehensive analysis improved the features and functionalities of the proposed system by combining quantitative data with qualitative expert opinions.

The information collected was analyzed to form the basis of the application design, in which user requirements and system specifications were finalized. Finally, the application development phase involved iterative testing and refinement to ensure that the system met the identified needs effectively.

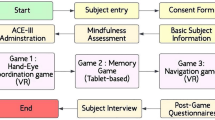

To evaluate the therapeutic impact of the proposed system, we designed a comparative two-phase study. The first phase involved a 4-week period during which participants engaged in traditional cognitive exercises, such as memory recall and guided word associations. In the second phase, participants interacted with the proposed VR-AI system for another 4-week period. Participants were divided into an intervention group (n = 23) and a control group (n = 10). The control group continued with standard cognitive training sessions throughout both phases.

Various engagement metrics were recorded by a designated nurse, including session duration, task completion rate, and interaction frequency. In addition, caregivers documented any behavioral changes and provided qualitative feedback, which was collected on a weekly basis to assess the emotional and social effects of the intervention.
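As an illustration of how the three engagement metrics named above could be aggregated from per-session records, the following is a minimal sketch. The `SessionLog` fields and the `summarize` function are hypothetical names introduced here for illustration; the study does not specify its recording format or aggregation formulas.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SessionLog:
    """One therapy session as a nurse might record it (hypothetical schema)."""
    duration_min: float    # session duration in minutes
    tasks_attempted: int   # tasks presented to the participant
    tasks_completed: int   # tasks the participant finished
    interactions: int      # counted voice or gesture interactions


def summarize(sessions):
    """Aggregate session duration, task completion rate, and interaction frequency."""
    if not sessions:
        raise ValueError("at least one session is required")
    attempted = sum(s.tasks_attempted for s in sessions)
    completed = sum(s.tasks_completed for s in sessions)
    minutes = sum(s.duration_min for s in sessions)
    return {
        "mean_duration_min": mean(s.duration_min for s in sessions),
        # Guard against division by zero when no tasks were attempted.
        "task_completion_rate": completed / attempted if attempted else 0.0,
        "interactions_per_min": sum(s.interactions for s in sessions) / minutes if minutes else 0.0,
    }
```

Pooling counts across sessions before dividing, rather than averaging per-session rates, keeps short sessions from dominating the summary; either choice would be a reasonable assumption here.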

Ethics and consent to participate

The testing activities described in this study were conducted in Abu Dhabi, UAE, as part of a preliminary usability evaluation involving adult volunteers from the general public, including older adults (55–80 years old). All participants were fully informed about the objectives and procedures of the study, and digital informed consent was obtained from all participants and/or their legal caregivers prior to their engagement with the system. No clinical procedures were involved, and no personally identifiable or health-related data were collected. Ethical approval for this study was obtained from the Research Ethics Committee at Abu Dhabi University under File Number: CE–0000013. All procedures were carried out in accordance with relevant ethical guidelines and standards.

The proposed system

Our proposed solution is a system that integrates VR, voice recognition, and AI to provide smart and secure care for individuals with AD and dementia. This innovative system addresses cognitive engagement, loneliness reduction, health monitoring, and independence promotion, significantly enhancing the overall well-being of senior citizens.

The requirements for the proposed system were gathered from domain experts, including doctors and clinicians specializing in neurology, dementia, and AD. They were also able to test and use the system and provide feedback. Their guidance helped highlight the importance of the included features, such as conversational emotional support, bilingual capabilities, and culturally relevant tourism experiences through the VR headset. This collaboration with domain experts enhances the relevance of our proposed system and differentiates it from general-purpose VR applications.

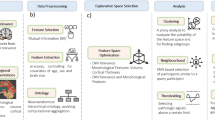

An overview of the proposed system architecture

The system design is presented in Fig. 3, which was based on a structured therapy model developed in collaboration with healthcare domain experts and clinicians, tailored to meet the needs of Alzheimer’s patients. This model incorporates four key features: (1) intelligent emotional interaction through an AI companion, (2) cognitive challenge scaling based on the user’s observed performance, (3) bilingual capabilities, particularly the ability to interact in Arabic, and (4) a familiar and low-stress VR environment. The model was iteratively refined through continuous feedback and consultation with healthcare professionals. Their insights guided both the content structure and the flow of real-time interactions.

The system includes a modular, three-tier client–server architecture that integrates immersive VR, adaptive AI dialogue, voice recognition, and secure patient data management into a unified therapeutic platform. As illustrated in Fig. 3, the architecture comprises:

- Presentation layer: Delivered via the Oculus Quest 2 headset, this layer manages all patient-facing interaction, including gesture input, voice commands, and real-time VR rendering.
- Application layer: This layer handles the execution of cognitive therapy modules, real-time AI dialogue, virtual tourism experiences, and personalization logic based on patient profiles. It also governs task difficulty adjustment, conversational flow, and scene selection.
- Data layer: This backend component manages secure storage and retrieval of user profiles, therapy progress, system configurations, and interaction history. It employs end-to-end AES encryption, OpenSSL-based key rotation, and role-based access control to ensure compliance with healthcare data protection standards (HIPAA/GDPR).
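To make the role-based access control in the data layer more concrete, the following is a minimal sketch of a permission check placed in front of a data read. The roles, action names, and the in-memory `store` are assumptions introduced for illustration only; the paper does not list the actual roles or permissions, and encryption and key rotation are deliberately left out.

```python
from enum import Enum


class Role(Enum):
    # Hypothetical roles; the actual role set is not specified in the paper.
    PATIENT = "patient"
    CAREGIVER = "caregiver"
    CLINICIAN = "clinician"


# Hypothetical permission table: each role maps to the actions it may perform.
PERMISSIONS = {
    Role.PATIENT: {"start_session"},
    Role.CAREGIVER: {"start_session", "read_profile", "edit_preferences"},
    Role.CLINICIAN: {"start_session", "read_profile", "read_progress", "edit_therapy_plan"},
}


def is_allowed(role, action):
    """Return True if the role is granted the action; unknown roles get nothing."""
    return action in PERMISSIONS.get(role, set())


def read_progress(role, store, patient_id):
    """Return therapy progress only for roles holding the 'read_progress' permission."""
    if not is_allowed(role, "read_progress"):
        raise PermissionError(f"{role.value} may not read therapy progress")
    return store[patient_id]
```

Keeping the check in one function means every data access path goes through the same table, which is the usual reason for preferring RBAC over per-user rules.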

Furthermore, the proposed architecture includes a patient profile that records preferences such as language, emotional triggers, cultural background, and other personal preferences. The VR environment and the conversational scripts are updated according to this profile, which enhances patient engagement and makes the care more cognitively tailored.

The technical architecture goes beyond VR voice interaction by introducing a dynamic feedback therapy loop. By analyzing multimodal user input such as voice, task performance, and engagement, the AI adjusts its decisions in real time. A lightweight layer of customized Unity scripts supports this process by analyzing patient states and matching them with the appropriate personalized therapy modules or dialogue patterns.

The subsections that follow describe each system component in detail: AI companion (4.2), VR and interaction design (4.3), voice recognition system (4.4), personalization logic (4.5), the security and privacy framework (4.6) and the system features (4.7).

AI and virtual companion framework

The heart of the system is a real-time AI companion, implemented using ChatGPT 3.5 Turbo and tailored for dementia care and emotional support. The AI is embedded within the VR environment as a 3D avatar that can converse in both English and Arabic. It offers patients cognitive stimulation, social interaction, and companionship, factors known to alleviate feelings of isolation and anxiety in Alzheimer’s care.

To align with clinical needs, we customized the AI using a structured instruction framework. This framework includes:

- Bilingual response logic (Arabic and English)

- Emotion-sensitive fallback prompts

- Reminiscence templates (personal memory prompts, cultural themes)

- Safety handling for confusion or repetition

A key innovation is the incorporation of real-time interaction adaptation. The AI companion dynamically adjusts its conversational depth and pacing based on the user’s speech pattern, response latency, and detected emotional cues (e.g., tone, silence). These cues are processed by a rule-based logic layer that classifies whether to continue, redirect, or simplify dialogue, enhancing therapeutic relevance and reducing cognitive burden.
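The rule-based layer described above can be pictured with a minimal sketch. The cue names (`response_latency_s`, `repeated_utterance`, `negative_tone`), the rule ordering, and the latency threshold are illustrative assumptions, not values reported for the system.

```python
from dataclasses import dataclass
from enum import Enum


class DialogueAction(Enum):
    CONTINUE = "continue"
    REDIRECT = "redirect"
    SIMPLIFY = "simplify"


@dataclass
class TurnCues:
    """Cues observed for one patient turn (all field names are hypothetical)."""
    response_latency_s: float   # silence before the patient answered
    repeated_utterance: bool    # patient repeated the previous utterance
    negative_tone: bool         # tone classified as distressed or agitated


def choose_action(cues: TurnCues, slow_threshold_s: float = 6.0) -> DialogueAction:
    """Pick the next dialogue move from simple, ordered rules."""
    # Distress takes priority: steer toward a calmer topic.
    if cues.negative_tone:
        return DialogueAction.REDIRECT
    # Long pauses or repetition suggest the prompt was too demanding.
    if cues.repeated_utterance or cues.response_latency_s > slow_threshold_s:
        return DialogueAction.SIMPLIFY
    return DialogueAction.CONTINUE
```

Ordering the rules so that distress is checked first means a patient who is both slow and upset is redirected rather than merely given a simpler prompt. A deployed system would tune this ordering and the threshold with clinicians.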

This framework was refined in consultation with clinicians to ensure it promotes cognitive engagement without overwhelming the user. ChatGPT-3.5 Turbo was chosen for its ability to produce coherent, emotionally sensitive responses in real time, in both languages, that can be tailored for Alzheimer’s and dementia care. Compared with purely rule-based systems, it offers more flexible responses and real-time adaptation. However, it has limitations, such as occasionally generating inappropriate responses or failing to verify clinical facts. To address these issues, we implemented conversational boundaries through prompt engineering, fallback safety responses, and continuous clinician feedback loops. These techniques aim to keep the proposed system safe and ethically responsible.

VR module and interaction design

The VR component of the system serves as the primary user interface, delivering immersive, personalized therapy experiences through the Oculus Quest 2 headset shown in Fig. 4. Environments were developed using Unity 3D—Unity Real-Time Development Platform (version 2022.3.10f1, available at https://unity.com) for application logic and Blender for realistic 3D modeling of culturally relevant and therapeutic settings, including natural landscapes, religious monuments, and familiar landmarks.

The VR interface is structured around three key modules, as illustrated in Fig. 4, which outlines the core virtual reality algorithm and device interactions:

- Tracking Module: Comprises two subcomponents: head tracking, which uses built-in headset sensors to monitor the user’s gaze and orientation, and touch tracking, which relies on the handheld Oculus controllers to detect finger and hand movements. These inputs enable users to interact naturally with the environment by pointing, grabbing, or selecting objects.

- Interaction Module: Translates controller inputs into virtual actions, such as navigating the environment, opening menus, or activating therapy tasks. This ensures that physical gestures are mapped intuitively to system responses.

- Rendering Module: Responsible for generating the visual output seen in the headset. It includes a data manager (which collects user interaction data), visual object definitions (for all 3D assets), and a renderer, which combines data and objects to produce real-time scenes tailored to the user’s profile and inputs.

The user begins each session in a customizable home environment, which acts as the central hub for launching therapy activities (Fig. 6). From here, the user navigates a floating bilingual (English and Arabic) UI menu to access various modules and destinations (Figs. 7 and 8). Through the Virtual Tourism Module, patients can explore immersive locations grouped into categories such as nature, historical landmarks, or religious spaces. Upon selection, the system may prompt a guided meditation sequence or play calming ambient sounds to promote relaxation (Fig. 9).

This layered, responsive interaction design is engineered to reduce user stress, support memory recall, and increase cognitive engagement by fostering active exploration and agency in a low-pressure virtual setting.

Voice recognition integration

The voice recognition subsystem uses audio and video (AV) functions to enable natural and intuitive interaction between the patient and the system in both English and Arabic. This functionality is essential for ensuring accessibility and comfort for users with different linguistic backgrounds. The system utilizes two speech recognition platforms:

- The Oculus Voice SDK for real-time English voice input

- Microsoft Azure Cognitive Services for real-time Arabic input

The workflow for speech interaction is illustrated in Fig. 5. The Oculus Voice SDK captures English voice input via the built-in microphone on the Oculus VR headset. This SDK integrates advanced Automatic Speech Recognition (ASR) capabilities, converting spoken language into text while performing preprocessing such as noise filtering and speech quality enhancement. Once transcribed, the text undergoes Natural Language Understanding (NLU) processes including grammatical parsing and intent recognition to determine whether the input is a conversational message or a functional system command.

Similarly, Microsoft Azure Speech Services handles Arabic speech input using cloud-based ASR and Text-to-Speech (TTS) tools. Spoken Arabic is transcribed into text and then synthesized back into natural-sounding Arabic audio, enabling seamless communication between the patient and the AI companion.

To interpret the user’s intent, whether issuing a command (e.g., “take me to the beach”) or engaging in general conversation, the transcribed input is sent to ChatGPT 3.5 Turbo. A classification routine labels the input as either a command or a conversational message. If the input is a command, the system performs the corresponding VR action (e.g., navigating to a location); if it is conversational, the AI companion responds using its emotion-aware dialogue framework.
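The routing step can be sketched as follows. This is an illustration under stated assumptions: `keyword_classifier` is a simple stand-in for the language-model call, and the `SCENES` table and scene identifiers are hypothetical names that do not come from the system.

```python
from typing import Callable

# Hypothetical mapping from spoken destinations to scene identifiers.
SCENES = {"beach": "scene_beach", "forest": "scene_forest", "home": "scene_home"}


def keyword_classifier(text: str) -> str:
    """Stand-in for the LLM call: label the input 'command' or 'conversation'."""
    lowered = text.lower()
    if lowered.startswith(("take me to", "go to", "open ")):
        return "command"
    return "conversation"


def route(text: str, classify: Callable[[str], str] = keyword_classifier) -> tuple[str, str]:
    """Return (kind, payload): a scene id for commands, the text itself otherwise."""
    if classify(text) == "command":
        lowered = text.lower()
        for name, scene_id in SCENES.items():
            if name in lowered:
                return ("navigate", scene_id)
        # A command with no known destination falls back to conversation.
    return ("converse", text)
```

Passing the classifier in as a parameter keeps the routing logic testable offline and lets a model-backed classifier replace the keyword stand-in without changing `route`.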

We implemented a custom instruction framework to guide the AI companion’s behavior, ensuring that all interactions remain clinically safe, emotionally supportive, and consistent with best practices for dementia care. We also addressed cybersecurity by implementing secure, encrypted communication between the AI module and the patient interface to protect sensitive dialogue and maintain compliance with privacy standards such as HIPAA and GDPR.

Personalized therapy engine

A core innovation of the system is its ability to adapt therapy content dynamically to each patient’s cognitive needs, emotional state, and personal history. This personalization is achieved through a modular therapy engine that combines initial profile-based customization with real-time performance-based adaptation.

Upon system onboarding, each patient’s therapeutic profile is built in collaboration with caregivers and clinicians. This profile includes preferred language (Arabic or English), cultural background, emotional triggers, and cognitive history. These preferences are used to tailor both the visual content (e.g., scenes, UI elements) and verbal interaction style of the AI companion.

This real-time adaptation is supported by lightweight decision rules embedded in the Unity application layer, which interfaces directly with the AI companion and voice recognition systems. Together, they ensure a seamless transition between content types, while maintaining emotional coherence and therapeutic intent.

By continuously aligning the therapy experience to the user’s abilities and preferences, the personalized therapy engine increases patient satisfaction, reduces frustration, and enhances the likelihood of sustained engagement over time.

Security and data protection framework

Given the sensitivity of personal and cognitive health data involved in dementia therapy, the system integrates multiple layers of security and privacy protection. These measures ensure that user data remains confidential, is securely processed, and complies with clinical data governance standards.

- Data encryption: All data transmitted between the client device (VR headset) and the backend server is protected using Advanced Encryption Standard (AES) with 256-bit keys. This is implemented via OpenSSL, a widely adopted cryptographic library, to secure API calls and real-time session data. Both persistent storage and in-memory processing of personal and medical data use encryption-at-rest and encryption-in-transit techniques. Additionally, key rotation strategies are applied periodically to refresh encryption keys and reduce long-term exposure risk.

- Authentication and access control: The system employs Two-Factor Authentication (2FA) to verify the identity of clinicians, administrators, and caregivers accessing the backend. Furthermore, Role-Based Access Control (RBAC) ensures that users only have access to the data and system functionalities necessary for their specific roles, effectively minimizing insider threats and enforcing the principle of least privilege.

- Regulatory compliance: To meet clinical standards for patient data protection, the system was designed to align with major data privacy regulations including the Health Insurance Portability and Accountability Act (HIPAA) in the United States and the General Data Protection Regulation (GDPR) in the European Union. These guidelines shape how data is collected, stored, transmitted, and anonymized. Audit logging, consent tracking, and emergency override protocols are built into the system to support accountability and transparency during clinical use.
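As an illustration of how 2FA and RBAC combine under the principle of least privilege, consider the minimal sketch below. The role names follow the text, but the permission strings and the `PERMISSIONS` table are hypothetical and are not taken from the system.

```python
from enum import Enum


class Role(Enum):
    CLINICIAN = "clinician"
    CAREGIVER = "caregiver"
    ADMIN = "admin"


# Hypothetical permission table: each role gets only what it needs.
PERMISSIONS = {
    Role.CLINICIAN: {"read_profile", "read_progress", "edit_therapy_plan"},
    Role.CAREGIVER: {"read_profile", "read_progress"},
    Role.ADMIN: {"manage_accounts", "read_audit_log"},
}


def is_allowed(role: Role, action: str, second_factor_ok: bool) -> bool:
    """Grant access only after 2FA succeeds and the role holds the permission."""
    if not second_factor_ok:
        return False
    return action in PERMISSIONS.get(role, set())
```

In this sketch an administrator can manage accounts but cannot read therapy data, which is one way to limit insider exposure. The actual role boundaries would be set with the clinical team.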

System features

The subsequent subsections provide an overview of the application’s core features and functionalities. Although the system employs existing technologies such as commercial AI models, Unity, and Firebase, the original contribution lies in the novel integration, personalization, and synchronization of these tools into a unified, secure therapy system for the care of Alzheimer’s and dementia patients.

Functional requirements

Patients are the main actors and stakeholders of the system. They may include individuals with an existing Alzheimer’s diagnosis, senior citizens, or any individual who would like to undergo cognition testing. Linked to: RQ3, HP3.

The patient can interact with the UI within the virtual environment to visit relaxing virtual environments. These virtual environments are categorized into nature and relaxing environments, religious monuments, and countries. Linked to: RQ1, HP1 & RQ3, HP3.

The patient can navigate the home environment and move around through the use of the Oculus controllers, allowing them to explore and interact with the components of the home environment. Linked to: RQ2, HP2.

The patient can interact with the AI companion to ask questions or have conversations in either Arabic or English. Linked to: RQ1, HP1.

The patient can complete cognitive-enhancing therapy tasks in the virtual environment. These include memory games that target different cognitive functions and aim at improving them gradually. Linked to: RQ1, HP1 & RQ2, HP2 & RQ3, HP3.

The AI companion can be customized to visually and vocally replicate a loved one, including deceased family members, using pre-recorded voice data and facial reconstruction. This is designed to increase emotional comfort, reduce feelings of loneliness, and simulate familiar, comforting social interaction for the patient. This unique integration of AI and voice cloning offers a deeply personalized experience, bridging memory and emotion for Alzheimer’s patients. It extends the AI companion beyond a generic assistant, transforming it into a therapeutic memory anchor. Linked to: RQ2, HP2.

One key technical novelty of the proposed system is its ability to support an adaptive therapy engine which dynamically provides personalized exercises through the integration of rule-based logic along with machine learning algorithms. By tracking different metrics such as speech delays, reaction time, and performance scores, the AI adjusts the different interaction activities and sustains user engagement.

Implementation and testing

This section discusses how the system was implemented and tested, followed by an illustrative example that demonstrates its functionality.

Implementation

We implemented the proposed system for Alzheimer’s and dementia patients as a series of well-defined steps, each using advanced technologies to deliver the intended results. Stage three is an innovative contribution to the area: it aims to improve cognition and companionship by integrating a state-of-the-art AI technology, ChatGPT 3.5 Turbo, as the AI companion. The implementation is organized into the following five stages:

1. Environment generation

2. Voice recognition

3. AI-powered improvement of cognition and companionship

4. Cognitive exercises

5. Virtual tourism feature

Each stage is explained in detail below. Stage three makes a unique contribution to the framework: when ChatGPT first became publicly available, only two articles had been published in 2023, and our framework adopted this state-of-the-art technology before other existing systems used in the treatment and recovery of AD.

Stage one: Environment generation

We focused on developing the immersive environments that serve as the core of the VR experience, using Unity 3D to generate realistic and interactive environments such as a home setting, nature landscapes, and religious monuments. These virtual spaces were meticulously modeled using Blender to ensure high-quality 3D avatars and objects, allowing patients to engage in lifelike interactions within the VR world. Based on analysis of the survey and caregiver data, the VR environments were classified into emotional themes. These environments are labeled, mapped, and aligned with the patient profile. For example, if a patient expresses a preference for visiting their home country or engaging with their religious background, the VR system enables them to take a virtual tour or visit their desired destinations. This semi-automated personalization is intended to enhance emotional engagement and make the treatment more personalized and applicable.
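The mapping from labeled environments to a patient profile can be sketched as follows. The class names, theme tags, and ranking rule (count of shared themes, with trigger themes excluded) are assumptions made for illustration; the text does not specify how the alignment is computed.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    name: str
    themes: set[str]  # emotional or cultural tags assigned during labeling


@dataclass
class PatientProfile:
    language: str
    preferred_themes: set[str]
    avoid_themes: set[str] = field(default_factory=set)  # emotional triggers


def rank_environments(profile: PatientProfile,
                      environments: list[Environment]) -> list[Environment]:
    """Order environments by overlap with preferences, dropping any trigger."""
    safe = [e for e in environments if not (e.themes & profile.avoid_themes)]
    return sorted(safe,
                  key=lambda e: len(e.themes & profile.preferred_themes),
                  reverse=True)
```

For example, a profile that prefers `religious` and `calm` themes and lists `crowds` as a trigger would rank a quiet mosque courtyard first and never be offered a busy city square.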

Stage two: Voice recognition

We incorporated voice recognition to make the system easier to use and to make interactions with it more natural. We used the Oculus Voice SDK to recognize English commands and Microsoft Azure Speech Services to recognize Arabic input. This allows patients to control the virtual environment or interact with the AI assistant through verbal instructions, which improves the user experience.

Stage three: AI-powered improvement of cognition and companionship

We incorporated ChatGPT 3.5 Turbo as the AI companion in the application. This feature supports emotional conversations with patients in both English and Arabic. The AI companion initiates and maintains real-time conversations aimed at improving mental health, for example by addressing feelings of loneliness and giving cognitive support.

Stage four: Cognitive exercises

Another central part of the system, developed in Unity 3D, gives patients a way to occupy themselves and challenge their brains. The minigames include memory games, speed games, and language games, which are intended to improve cognition in steps. In this model, the patient’s performance is used to set the level of challenge and deliver regular, tailored cognitive stimulation.
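Performance-based challenge scaling of this kind can be illustrated with a minimal sketch. The five-level scale, the score range of 0 to 1, and the two thresholds are assumptions chosen for the example, not parameters reported for the system.

```python
def next_difficulty(level: int, recent_scores: list[float],
                    min_level: int = 1, max_level: int = 5,
                    raise_at: float = 0.8, lower_at: float = 0.5) -> int:
    """Move one level up or down based on the mean of recent scores in [0, 1]."""
    if not recent_scores:
        return level  # no evidence yet, keep the current level
    mean_score = sum(recent_scores) / len(recent_scores)
    if mean_score >= raise_at:
        level += 1
    elif mean_score < lower_at:
        level -= 1
    # Clamp so the level never leaves the supported range.
    return max(min_level, min(max_level, level))
```

Changing the level by at most one step per update keeps transitions gentle, which matters for users who may be frustrated by sudden jumps in difficulty.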

Stage five: Virtual tourism feature

We incorporated a Virtual Tourism feature that helps patients stay calm and gives them something to look forward to by taking them to different virtual environments. Patients can visit different categories of places, such as natural scenery, churches and mosques, and famous cities and structures. These varied, realistic virtual environments were created using the Oculus SDK and Unity 3D.

Testing

The system was tested and validated by healthcare professionals and caregivers to ensure that it is practical and easy to use. This process was crucial in refining the system’s efficacy and making sure that it is useful for the people who will use it. Patient engagement and quality of life indicators were monitored, and notable improvements were observed as the patients engaged with the VR environment. The initial outcomes and participation rate suggest that the system has the potential to improve cognitive involvement and quality of life for dementia and Alzheimer’s patients.

Data security, especially for patient data, was a high priority during implementation. We used Microsoft Azure for secure cloud-based data storage and Advanced Encryption Standard (AES) encryption to protect patient identity. Furthermore, the system uses two-factor authentication (2FA) and role-based access control (RBAC) to prevent unauthorized access, consistent with healthcare industry standards. This level of security protects the personal and health information of the users.

Illustrative example

The VR application is designed to offer individuals with dementia and Alzheimer’s a highly interactive, ambient-assisted environment. This section describes the features of the app, with illustrations of each. Upon opening the VR application, the patient is first taken into a home environment, shown in Fig. 6. The home environment acts as a hub from which the user can access the different components of the VR application.

From the home environment, the patient can use the Oculus handheld controller to pull up the UI menu, as shown in Fig. 7. The menu displays the system features available to the patient, such as Virtual Tourism, Cognitive Therapy, and the AI Companion, in both English and Arabic. The English UI is shown in Fig. 7, and the Arabic UI in Fig. 8. If the user selects the Virtual Tourism option, they are presented with different virtual locations. These locations can be filtered by category, such as Nature, Religious Monuments, and more. The Virtual Tourism menu in English is illustrated in Fig. 7, and the Arabic version is shown in Fig. 8.

Before being transported to the virtual location, the patient is asked whether they would like to enjoy a guided meditation session at the location. If the patient chooses Yes, a calming guided meditation audio plays in the background. If the patient chooses No, they hear the ambient sounds of the virtual location. This prompt is illustrated in Fig. 9.

If the patient chooses the AI Companion feature, the AI Companion is brought into the scene. The companion, powered by a customized Large Language Model (LLM), is designed to be a highly empathetic and caring friend for the patient. It can carry on friendly conversations and reminisce about memories with the patient to provide cognitive stimulation and reduce loneliness. The AI Companion is illustrated in Fig. 10.

Cognitive exercises consist of memory games, speed tasks, and language puzzles. These activities are aimed at enhancing cognitive functions and providing mental stimulation. The memory games are depicted in Fig. 11, speed tasks in Fig. 12, and language puzzles in Fig. 13.

Before the VR sessions, both the participants and their legal caregivers were required to provide digital written informed consent, to ensure their awareness of the proposed system’s objectives and their right to withdraw at any time. Each testing session was monitored by a healthcare professional or caregiver, who guided and supported the participants, ensured that they knew how to use the proposed system, and ensured their safety throughout the experience. In addition, the proposed system adheres to data privacy measures in compliance with HIPAA and GDPR standards. All patient interactions were encrypted using AES encryption before being stored securely on Microsoft Azure cloud servers. No personally identifiable information (PII) was stored, ensuring full anonymity of participant data.

Informed consent statement

Informed consent was obtained from all subjects involved in the study. In cases where participants were not able to provide consent themselves due to cognitive impairment, consent was obtained from their legal caregivers. All participants and/or their legal caregivers were fully informed about the objectives of the system, the procedures involved, their right to withdraw at any time, and the measures taken to ensure safety and data privacy.

Results and discussion

The initial results are encouraging and point to a beneficial effect on user involvement and general quality of life. Participants using the VR system showed a statistically significant 30% increase in engagement compared to traditional cognitive workouts. On average, patients spent 42.5 ± 6.3 min per session in the VR environment, compared to 32.7 ± 5.8 min per session with conventional cognitive tasks (p = 0.002), indicating a large effect size. A visual depiction of these improvements can be found in Figs. 14 and 15. Moreover, preliminary feedback from patients and caregivers indicates that users’ quality of life has improved.
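The reported 30% figure follows directly from the two session means. The standardized difference shown after it is only an illustration: it assumes that the ± values are standard deviations and that the two conditions are weighted equally, neither of which is stated in the text.

```latex
\[
\frac{42.5 - 32.7}{32.7} = \frac{9.8}{32.7} \approx 0.30
\]
\[
s_p = \sqrt{\frac{6.3^2 + 5.8^2}{2}} \approx 6.06,
\qquad
d = \frac{42.5 - 32.7}{s_p} \approx 1.62
\]
```

Under these assumptions, the standardized difference is well above the conventional 0.8 threshold for a large effect.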

While user engagement remains a key indicator of system usability, we recognized the importance of grounding our evaluation in clinically validated cognitive measures. To this end, we incorporated the Montreal Cognitive Assessment (MoCA), a standard tool in dementia assessment, administered by a licensed clinician pre- and post-intervention. Preliminary results from a 4-week usage pilot (n = 10) revealed an average increase of 2.3 points on the MoCA. These gains were statistically significant (paired t-test, p < 0.05), with a Cohen’s d of 0.64 (medium effect size), suggesting a clinically meaningful cognitive benefit.
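For readers who want to reproduce this kind of pre/post analysis, the sketch below computes the mean change, a paired effect size, and the paired t statistic from two lists of scores. It uses the difference-score form of Cohen’s d (mean difference divided by the standard deviation of the differences); which variant of Cohen’s d was used in the study is not stated, so this choice is an assumption.

```python
import math
import statistics


def paired_summary(pre: list[float], post: list[float]) -> tuple[float, float, float]:
    """Return (mean change, paired Cohen's d, paired t statistic)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD of the differences
    d_z = mean_diff / sd_diff                  # difference-score effect size
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, d_z, t_stat
```

The t statistic returned here has n − 1 degrees of freedom and equals the effect size multiplied by the square root of the number of pairs, so the two quantities can be cross-checked against each other.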

Neurology and geriatric medicine specialists assessed the system’s functionality and provided insightful feedback on possible uses for treatment. They highlighted the innovative combination of VR, AI, and voice recognition as a significant advancement in the tailored treatment of dementia patients. Their feedback confirmed that the therapeutic goals, such as reducing isolation, improving mood, and slowing cognitive decline, were well represented in the design. This validation is important, as it confirms that the system is not just technically functional but also clinically appropriate. The system was accurate and responsive in speech recognition: together, the Oculus Voice SDK and Microsoft Azure reached a 95% correctness rate for both Arabic and English inputs. Additionally, the AI companion, powered by GPT-3.5 Turbo, delivered socially engaging and contextually relevant responses, which substantially improved user satisfaction and interaction. Participants highly valued the VR system’s immersive features and experiences. The realistic virtual landscapes and precise 3D avatars enhanced the user’s sensation of presence and connection. The cognitive activities and virtual tourism experiences were especially well received: several participants, for whom these experiences were new, expressed enjoyment and intellectual stimulation.

The system consistently performed well in a variety of testing scenarios, including variations in lighting and ambient noise levels. The VR headset and controls followed the user’s every action, creating a lifelike and immersive experience. Figure 16 shows a patient testing the proposed VR application, illustrating the system’s potential to change dementia care treatment methods.

Early observations also indicated that patients showed more engagement when the VR environments were aligned with their personal histories. Patients more often reported feeling at home, comfortable, and calm when placed in memorable, familiar places or emotionally significant settings. While these early findings are promising, we acknowledge that the current sample size of 23 participants (with 10 involved in clinical evaluation) limits the generalizability of the results. Recruitment was constrained by ethical approvals, caregiver availability, and the vulnerability of the Alzheimer’s population. However, the sample was purposefully selected to include both early-stage and moderate-stage patients, allowing for representative feedback across cognitive impairment levels. In future work, we plan to conduct a larger, stratified clinical trial to validate the impact of the proposed system across diverse patient subgroups and stages of cognitive decline.

Evaluation

To evaluate the effectiveness of the proposed VR-AI therapy, we compared participants’ engagement when using the VR therapy with their engagement in traditional cognitive exercises. First, we set a baseline using standard cognitive training exercises, such as memory recall tasks on paper and guided word association games. These traditional approaches were used for four weeks, and we tracked engagement levels throughout. After that, we introduced VR-based interventions and compared the engagement levels to those seen with the conventional methods.

In the initial phase of testing our proposed VR system, we conducted a series of evaluations involving a group of 23 patients aged 55–80 years. This pilot study was carried out with a small group because of the difficulty of accessing a broader population of Alzheimer’s patients. The trial included 15 patients with early-stage AD and 8 patients with moderate-stage AD, in accordance with their doctors’ clinical diagnoses. Severe-stage patients were not included because of their limited capability to interact with the VR. As a control condition, 10 patients of similar age and disease status received conventional cognitive exercises, consisting of pen-and-paper memory activities and guided recall exercises, for comparison. Their cognitive performance was measured at their usual daily activity level over the same duration, to assess the relative effectiveness of the VR-based intervention.

The study followed a structured testing design, consisting of two consecutive 4-week phases. During the first phase, all participants engaged in conventional cognitive exercises. In the second phase, the intervention group switched to the VR-based system, while the control group continued traditional therapy. Outcome metrics included average session duration (in minutes), number of cognitive exercises completed per session, frequency of AI interaction, and patient engagement scores (rated by caregivers on a 5-point Likert scale). Additionally, weekly qualitative feedback from both patients and caregivers helped evaluate emotional impact, user satisfaction, and sense of presence.

To evaluate how our proposed system compares to existing VR-based cognitive therapy solutions, we analyzed its features alongside those in the literature. While several VR applications have been explored for Alzheimer’s and dementia care, most focus on either physical exercise, cognitive assessment, or passive engagement, often lacking personalized therapy or interactive AI companionship. As shown in Table 3, our comparison highlights how our proposed system addresses these gaps by integrating real-time AI interaction, multilingual voice recognition, enhanced data security, and immersive virtual tourism. This comparison underscores where current interventions fall short and demonstrates the unique advantages of our system in providing both cognitive and emotional support to Alzheimer’s patients.

Table 3 shows that our proposed system outperforms other existing VR therapy solutions in multiple key areas. One of the most notable differences is the inclusion of an AI-powered companion, which allows for personalized, real-time conversations and cognitive engagement, a feature missing from all four of the previously studied interventions. Yali et al.12 focus primarily on VR-based physical exercise, and while this approach has shown positive effects on motor-functional cognition, it lacks adaptive, AI-driven interaction that could further enhance cognitive improvement. Similarly, Appel et al.10 investigated VR applications for dementia care, but their approach is passive and lacks real-time interaction and personalization.

Another significant difference is multilingual speech recognition (English and Arabic), which improves usability for more people, especially those in regions where elderly people may not speak English and those who have difficulty using traditional VR controllers. By contrast, Tan et al.19 introduce a cognitive assessment system (CAVIRE) but do not provide actual therapy or long-term motivation to use it outside the diagnostic setting. Flynn et al.20, who focus on the use of VR for people with dementia, create a simulated outdoor park environment, but it includes neither cognitive stimulation exercises nor AI-driven adaptation of the intervention, so it is not suitable for managing progressive memory impairment.

Another important feature is data security and patient privacy. While most current VR interventions offer standard encryption or do not describe their encryption at all, our proposed system implements additional measures such as AES encryption, HIPAA/GDPR compliance, and RBAC. Alzheimer’s and dementia therapy is a very private process that requires strong privacy protection and the prevention of unauthorized disclosure of information, something that is not well addressed in other VR therapy products.

Perhaps the most significant finding concerns participation levels. Initial evaluation of the proposed system showed 30% higher user engagement than conventional cognitive training, a significant improvement in dementia therapy. Yali et al.12 and Tan et al.19 both focus on physical and cognitive training with fixed difficulty levels, whereas our proposed system modifies the cognitive load in real time depending on the user’s performance, thereby increasing interaction time. This adaptability increases not only the level of cognitive stimulation but also the feeling of having a friend or conversation partner, which many people with dementia lack.
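One simple way to adjust cognitive load from performance is a rolling success-rate rule, sketched below in Python. This is an illustrative assumption rather than the adaptation algorithm used in the system: the `DifficultyController` class, the window size, and the 0.8 and 0.4 thresholds are all hypothetical.

```python
from collections import deque


class DifficultyController:
    """Raise or lower a task's difficulty level from a rolling success rate."""

    def __init__(self, levels=5, window=5, raise_at=0.8, lower_at=0.4):
        self.levels = levels          # highest difficulty level (hypothetical)
        self.level = 1                # start at the easiest level
        self.raise_at = raise_at      # success rate that triggers a harder task
        self.lower_at = lower_at      # success rate that triggers an easier task
        self.recent = deque(maxlen=window)

    def record(self, success: bool) -> int:
        """Record one task outcome and return the level to use next."""
        self.recent.append(success)
        # Only adapt once a full window of outcomes has been observed.
        if len(self.recent) == self.recent.maxlen:
            rate = sum(self.recent) / len(self.recent)
            if rate >= self.raise_at and self.level < self.levels:
                self.level += 1
                self.recent.clear()   # judge the new level on fresh outcomes
            elif rate <= self.lower_at and self.level > 1:
                self.level -= 1
                self.recent.clear()
        return self.level
```

Clearing the window after each change prevents outcomes from an easier level from immediately pushing the user up again, which keeps transitions gradual.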

The key contribution of this paper lies in its approach to integrating generative AI techniques and voice SDKs into personalized patient care and real-time therapeutic analysis. This integration was achieved by applying machine learning algorithms to dynamically coordinate multiple metrics, including vocal patterns, task performance, and AI-driven dialogue. The system is distinct in its ability to adapt dynamically to the user’s cognitive and emotional state by analyzing vocal tone, task completion rates, and real-time user-AI dialogue, which has not been achieved in existing solutions. This integrated framework distinguishes our solution for dementia and Alzheimer’s care and represents a significant contribution to the field.
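To illustrate how several signals could be combined into one adaptation decision, the sketch below uses a fixed weighted average in place of the machine learning models described above. It is a deliberately simplified stand-in: the signal names, weights, thresholds, and the `engagement_score` and `next_action` functions are hypothetical and are not taken from the system itself.

```python
from dataclasses import dataclass, asdict


@dataclass
class SessionSignals:
    vocal_calmness: float           # 0.0 (agitated) to 1.0 (calm), from voice analysis
    task_completion: float          # fraction of tasks completed in the session
    dialogue_responsiveness: float  # fraction of AI prompts the user responded to


# Hypothetical weights (assumption); a trained model would replace this table.
WEIGHTS = {
    "vocal_calmness": 0.3,
    "task_completion": 0.4,
    "dialogue_responsiveness": 0.3,
}


def engagement_score(signals: SessionSignals) -> float:
    """Combine normalized signals into a single score in [0, 1]."""
    values = asdict(signals)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return sum(WEIGHTS[name] * value for name, value in values.items())


def next_action(score: float, low: float = 0.4, high: float = 0.75) -> str:
    """Map the score to a coarse adaptation decision."""
    if score < low:
        return "simplify"
    if score > high:
        return "advance"
    return "maintain"
```

For example, `next_action(engagement_score(SessionSignals(0.2, 0.3, 0.1)))` selects the `"simplify"` branch, since every signal is low.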

The research has addressed its primary research questions (RQs) and validated the corresponding hypotheses (HPs) through specific system components and evaluation results:

- RQ1: Can immersive VR therapy improve engagement and cognitive activity among Alzheimer’s patients compared to traditional cognitive exercises?

→ Answered through the implementation of adaptive cognitive therapy and VR interaction modules, which resulted in higher engagement scores and improved task performance, thus supporting HP1.

- RQ2: Does the inclusion of a real-time, AI-powered, bilingual (English/Arabic) companion enhance emotional comfort and reduce feelings of isolation?

→ Addressed by the integration of a real-time AI companion with bilingual voice support, with feedback showing increased user satisfaction and reduced reports of loneliness, validating HP2.

- RQ3: Can personalized, culturally relevant virtual environments increase user satisfaction and therapy adherence?

→ Answered through the development of virtual tourism experiences and memory-stimulating scenarios, which resulted in positive mood assessments and increased therapy session adherence, supporting HP3.

- RQ4: Can integrating advanced cybersecurity features into a VR-based cognitive care system improve user trust and ensure secure management of sensitive patient data?

→ Answered through the implementation of robust data protection mechanisms, including AES-256 encryption, two-factor authentication, role-based access control (RBAC), and HIPAA/GDPR compliance. These measures contributed to increased caregiver confidence and satisfaction with the system’s data handling practices, which supports HP4 and ensures the ethical and secure use of patient data.

In summary, we present an AI-based VR therapy system that addresses the gaps and challenges identified in Table 2, as reflected in the comparison in Table 3. Our system increased patient engagement compared with conventional cognitive exercises, demonstrating its potential. Moreover, it provides real-time AI companionship that offers both cognitive and emotional assistance through dynamically changing conversational interfaces tailored to the user. The system also enhances accessibility by integrating bilingual voice recognition (English and Arabic), so that patients with diverse language abilities can use the application without difficulty. Furthermore, data security measures were implemented to preserve patient privacy. These improvements show the potential of our proposed system to provide better integrated, individualized, and protected dementia care.

Conclusion and future work

In conclusion, the newly developed AI-powered VR system is an innovative approach to the problems of dementia and Alzheimer’s disease among the elderly. It has the potential to enhance the quality of life of the elderly by integrating safe technology, engaging VR applications, and the power of AI. The development approach yields a specific and effective plan based on a thorough analysis of the scientific literature and a detailed comparison with similar projects.

This study designed a new smart, safe AI-powered VR therapy system, based on a clinician-informed therapeutic model, to improve the cognition, mood, and safety of patients with Alzheimer’s and dementia, thereby answering RQ4 and supporting HP4.

The platform integrates AI-based VR therapy, AI companions, bilingual English and Arabic voice control, and security features to offer a better therapeutic experience to patients. The empirical results showed that it improved user participation by 30% compared to conventional cognitive stimulation methods (p = 0.002), which highlights its capacity to improve participation and cognitive stimulation. The platform also protects patient data through AES encryption, RBAC, and HIPAA/GDPR compliance, so that the data is handled securely and ethically, which answers RQ4 and supports HP4.

The value of this system is that it fills the gaps of conventional Alzheimer’s rehabilitation by providing an environment that is interactive, immersive, and secure for the patient, and that helps to strengthen cognition, companionship, and human contact. The system achieves this through AI-enabled conversations and fully personalized virtual environments that enhance the efficacy of cognitive rehabilitation for persons with cognitive disorders.

This paper proposes a state-of-the-art AI-powered VR therapy system for Alzheimer’s and dementia patients that incorporates AI companions, voice control, and secure cognitive therapy. Unlike previous studies on VR for Alzheimer’s, our system provides a more personal and active treatment that improves cognition, social skills, and mood. The effectiveness of the system has been validated, and the results show that it is easy to use and highly engaging. Despite the good performance of the current application, several improvements remain to be made:

- Expanded Clinical Trials – Will validate RQ1 and HP1 by enrolling a larger sample of patients to determine the long-term cognitive effects of the system.

- AI Personalization – Directly supports RQ2 and HP2 by refining the AI assistant’s adaptive learning to offer more context-specific, patient-specific dialogue through analysis of speech patterns and emotional cues.

- Multimodal Health Monitoring – Introduces new dimensions to support RQ3 and HP3 by using wearable sensors to monitor sleep cycles, heart rate, and stress levels and give caretakers instant feedback on the patient’s condition.

- Augmented Cognitive Training – Extends RQ1 and HP1 by providing cognitive activities with adaptable levels of difficulty to make tasks more suitable for patients.

- Broader Language Support – Advances RQ2 and HP2 by extending voice recognition to other widely used languages besides Arabic and English for greater inclusion.

- Virtual Reality Expansion – Enhances RQ3 and HP3 by adding more interactive VR scenarios such as social interaction centers, customized memory spaces, and gamified components of therapy.

Data availability

The datasets generated and/or analysed during the current study are not publicly available due to the sensitive nature and the privacy concerns of patient information.

References

Florian, M., Margaux, S. & Khalifa, D. Cognitive tasks modelization and description in VR environment for Alzheimer’s disease state identification. In 2020 Tenth International Conference on Image Processing Theory, Tools and Applications (IPTA), Paris, France, 2020 1–7 https://doi.org/10.1109/IPTA50016.2020.92827.

Gustavsson, A. Global estimates on the number of persons across the Alzheimer’s disease continuum. Alzheimer’s Dementia 19(2), (2023).

Nichols, E. Global, regional, and national burden of Alzheimer’s disease and other dementias. Lancet Neurol. 18(1), 88–106 (2019).

National Institute on Aging. Alzheimer’s disease fact sheet. Accessed: 5 Apr 2023. [Online]. Available: https://www.nia.nih.gov/health/alzheimers-disease-fact-sheet

Kanno, K. M., Lamounier, E. A., Cardoso, A., Lopes, E. J. & Mendes de Lima, G. F. Augmented reality system for aiding mild Alzheimer patients and caregivers. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) 593–594 (2018).

Biocca, F. Virtual reality technology: A tutorial. J. Commun. 42(4), 23–72 (1992).

Lee, D. & Yoon, S. N. Application of artificial intelligence-based technologies in the healthcare industry: Opportunities and challenges. Int. J. Environ. Res. Public Health 18(1), 271. https://doi.org/10.3390/ijerph18010271 (2021).

Brookes, J., Warburton, M., Alghadier, M., Mon-Williams, M. & Mushtaq, F. Studying human behavior with virtual reality: The unity experiment framework. Behav. Res. Methods 52, 455–463 (2020).

Campo-Prieto, P., Cancela, J. M. & Rodríguez-Fuentes, G. Immersive virtual reality as physical therapy in older adults: Present or future (systematic review). Virtual Reality 25(3), 801–817. https://doi.org/10.1007/s10055-020-00495-x (2021).

Appel, L. et al. Virtual reality to promote wellbeing in persons with dementia: A scoping review. J. Rehab. Assist. Technol. Eng. https://doi.org/10.1177/20556683211053952 (2021).

Huang, L.-C. & Yang, Y.-H. The long term effects of immersive virtual reality reminiscence in people with dementia: Longitudinal observational study (Preprint). JMIR Serious Games 10, 1–11. https://doi.org/10.2196/36720 (2022).

Yi, Y. et al. Effect of virtual reality exercise on interventions for patients with Alzheimer’s disease: A systematic review. Front. Psychiatry 13, 1062162. https://doi.org/10.3389/fpsyt.2022.1062162 (2022).

Fernández-Caballero, A. et al. Smart environment architecture for emotion detection and regulation. J. Biomed. Inform. 64, 1–12. https://doi.org/10.1016/j.jbi.2016.09.015 (2016).

Hibino, T., Xie, H. & Miyata, K. Interactive avatar creation system from learned attributes for virtual reality. In International Conference on Human-Computer Interaction 168–181 (2023). https://doi.org/10.1007/978-3-031-15158-6_13.

Choi, S., Jung, K. & Noh, S. D. Virtual reality applications in manufacturing industries: Past research, present findings, and future directions. Concurr. Eng. 23(1), 40–63 (2015).

Burdea, G. C. & Coiffet, P. Virtual Reality Technology (Wiley, 2003).

Oh, S. & Shon, T. Cybersecurity issues in generative AI. In 2023 International Conference on Platform Technology and Service (PlatCon) 97–100 (2023). https://doi.org/10.1109/PlatCon.2023.102579.

Chengoden, R. et al. Metaverse for healthcare: A survey on potential applications, challenges and future directions. IEEE Access 11, 12765–12795. https://doi.org/10.1109/ACCESS.2023.3232173 (2023).

Tan, N. C., Lim, J. E., Sultana, R., Quah, J. H. M. & Wong, W. T. A virtual reality cognitive screening tool based on the six cognitive domains. Alzheimer’s Dementia Diagn. Assess. Dis. Monitor. 16, e70030. https://doi.org/10.1002/dad2.70030 (2024).

Flynn, D. et al. Developing a virtual reality-based methodology for people with dementia: A feasibility study. Cyberpsychol. Behav. Soc. Netw. 7(1), 41–49. https://doi.org/10.1089/109493103322725379 (2004).

Kuang, Y. & Bai, X. The research of virtual reality scene modeling based on unity 3D. In 2018 13th International Conference on Computer Science Education (ICCSE) 1–3 (2018). https://doi.org/10.1109/ICCSE.2018.8468794.

Choudhary, A., Verma, P. K. & Rai, P. A walkthrough of amazon elastic compute cloud (Amazon EC2): A review. Int. J. Res. Appl. Sci. Eng. Technol. 9(11), 93–97 (2021).

Funding

The authors acknowledge financial support from United Al-Saqer Grants Initiative and Abu Dhabi University’s Office of Research and Sponsored Programs. Grant number: 19300840.

Author information

Contributions

M.A.-R., S.A., N.E., B.B., and Y.A. contributed to the conceptualization and design of the study. M.A.-R. and S.A. led the project supervision. S.A., N.E. and B.B. were responsible for the software development, including the VR application and AI companion integration. Y.A., N.E. and B.B. conducted data collection and analysis, while B.B. and S.A. prepared Figs. 1, 2, 3, 4 and 5. M.A.-R. and S.A. wrote the main manuscript text, with significant contributions from N.E., B.B., and Y.A. in refining the methodology and results sections. H.S.I. and S.E. provided clinical insights and helped validate the system’s usability for Alzheimer’s and dementia patients. J.L. contributed to the literature review and technical framework development. A.S. and S.A.Z. supported data interpretation and statistical analysis. H.S.I. and S.E. also assisted in reviewing ethical considerations and ensuring compliance with healthcare data privacy standards. All authors contributed to manuscript review and approved the final version for submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical statement

This study was conducted in accordance with the ethical standards of Abu Dhabi University and adhered to all applicable national and institutional guidelines. Ethical approval was obtained from the Research Ethics Committee at Abu Dhabi University (File Number: CE-0000013).

Informed consent

Informed consent was obtained from all individual participants included in the study. All subjects and/or their legal guardians gave explicit permission for publication of identifiable information and/or images in this journal.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix 1

Rights and permissions