Abstract

Coral reefs are one of the most biodiverse ecosystems on Earth and are extremely important for marine ecosystems. However, coral reefs are rapidly degrading globally, and for this reason, in-situ online monitoring systems are being used to monitor coral reef ecosystems in real time. At the same time, artificial intelligence technology, particularly deep learning technology, is playing an increasingly important role in the study of coral reef ecology, especially in the automatic detection and identification of coral reef fish. However, deep learning is essentially a data-driven technique that relies on high-quality datasets for training, while existing fish identification datasets suffer from low resolution and inaccurate labeling, which limits the application of deep learning techniques to coral reef fish identification. To better utilize deep learning techniques for real-time automatic detection and identification of coral reef fish from the data collected by the in-situ online monitoring system, this paper proposes a high-resolution, fish species-rich, and well-labeled coral reef fish dataset SCSFish2025, which is the first publicly available coral reef fish dataset in the waters of China’s Nansha Islands. SCSFish2025 contains 11,956 high-resolution underwater surveillance images and over 120,000 bounding boxes covering 30 species of fish that have been manually labelled by experienced fish identification experts, with sub-category labels for blurring, occlusion, and altered pose. Furthermore, this paper establishes a benchmark for the dataset by analyzing the detection performance of deep learning object detection techniques on this dataset using four state-of-the-art or typical object detection models as baseline models. The best baseline model RT-DETRv2 achieves mAP@50 performance of 0.9960 and 0.7486 respectively on the five-fold cross-validation of the training set and the independent test set. The release of this dataset will help promote the development of AI technology in the study of automatic detection and identification of coral reef fish, and provide strong support for the study of marine biodiversity and ecosystems. The project code and dataset are available at https://github.com/FudanZhengSYSU/SCSFish2025.

Similar content being viewed by others

Introduction

Coral reefs are one of the most biodiverse ecosystems on Earth, providing habitat for a large number of marine organisms. These organisms include fish, crustaceans, sponges, algae and many other species, 25% of which depend on coral reefs for their livelihoods1. The complex structure and rich biodiversity of coral reefs make them vital to marine ecosystems, and even to global ecosystems2. Coral reefs also play an important role in protecting coastlines, storing carbon, regulating climate and maintaining the energy cycle of marine ecosystems. However, global coral communities have been shown to have declined by nearly 50% in recent decades3 and coral reefs are rapidly degrading. Global mass coral bleaching events continue to occur with great severity4. To better understand coral reef ecosystems and their changing status so as to cope with the global degradation of coral reefs, coral reef in-situ online monitoring systems have been used in recent years in research or projects for real-time monitoring, surveillance and early warning of coral reef ecosystems. The in-situ online monitoring system can integrate water quality sensors, underwater imaging technology, acoustic equipment and online transmission capabilities, etc. It can conduct continuous, real-time and long-term monitoring of coral reef ecosystems, which not only greatly reduces the workload of fieldwork and the risk of diving operations by researchers or investigators, but also improves the speed of underwater monitoring and data collection. However, this highly efficient method of underwater data acquisition has led to an explosion in the amount of data, which has greatly increased the workload of data analysis5, especially for underwater images/videos of coral reef fish and benthic organisms, etc. Traditional manual identification and classification processing methods cannot meet the need for rapid analysis and processing of large amounts of data, and therefore cannot provide timely monitoring data and regulatory reports for effective management of coral reef ecosystems. Therefore, automatic image analysis methods that speed up and standardize information processing are needed, and artificial intelligence technology provides a new way to solve this problem. In recent years, artificial intelligence has been applied to several areas of coral reef ecology research, such as automatic detection6,12,13,14,15,17 and identification6,7,8,9,10,11,12,16,18 of coral reef fish, automatic detection of crown-of-thorns starfish19,20,21,22, coral species identification24, analysis of the 3D characteristics of coral skeletons26, benthic classification of coral reefs5,23,24,25, sustainable monitoring27 and management28 of coral reef ecological restoration/recovery, distribution prediction of thermally tolerant corals29, assessment of coral reef bleaching30, and the effects of climate change on large-scale coral reefs31, etc.

Among these studies of coral reef ecology, the identification of coral reef fish has far-reaching significance for the conservation of coral reef ecosystems. As the main reservoir of consumer biomass in coral reef ecosystems32, fish play a key role in reef resilience and nutrient cycling33,34,35, and are an indispensable component in maintaining coral reef health36,37). Therefore, establishing accurate knowledge of the identity and geographic distribution of coral reef fish38 is essential for the conservation of marine biodiversity and the sustainable human use of marine fishery resources. Monitoring fish in their natural habitat allows for more effective knowledge and management of fish living conditions and fishery resources39. Therefore, monitoring fish using in-situ online monitoring systems has become a common practice in ecological conservation and stock assessment surveys40,41,42. The in-situ online monitoring system can provide massive, rich and comprehensive coral reef fish data resources. However, to automatically detect and identify coral reef fish using AI technology, the data need to be identified and labeled by trained fish experts and form a dataset that can be used for learning, training and validation of AI models. There have been many studies on the automatic detection and identification of coral reef fish6,7,8,9,10,11,12,13,14,15,16,17,18, and some coral reef fish identification datasets have been made publicly available. The Fish4Knowledge project is the world’s first image- and video-based automatic fish species identification program, covering FishCLEF201443, FishCLEF201538 SeaCLEF201644 and SeaCLEF201745 as well as F4K with Complex Scenes46 and other datasets. These datasets are valuable data resources for the automatic fish identification, but they are generally of low resolution (most are 320 pixels * 240 pixels, and only a few reach 640 pixels * 480 pixels), and some images suffer from corruption and label duplication. A Large Scale Fish Dataset47 covered 9 fish species, each with 1000 enhanced images. The QUTFish48 dataset contains 3960 labeled images of 468 fish species. However, these images were taken in controlled environments with carefully adjusted lighting conditions and backgrounds maintained in a single color tone, which differs significantly from the natural field environment. In addition, F4K with recognition49, WildFish50, and Croation Fish51 were obtained from the natural marine environment, but the images were cropped to show only a single fish in the center, limiting their use to classification tasks and providing limited help to the model in learning to identify fish in the field. The OzFish52 and DeepFish53 datasets provide a large number of bounding box annotations, but these annotations are limited to the binary classification of fish/non-fish and cannot be used to subdivide individual species. The SEAMAPD2154 dataset, although containing 130 fish species and 90000 labeled images, poses some obstacles to the detection task due to occlusion in front of the shooting equipment. In summary, these datasets are unsatisfactory either in terms of video/image quality and labeling quality, or in terms of data completeness and diversity.

In order to provide better data support for the automatic detection and identification of coral reef fish, in this paper we propose a high-resolution, species-rich, and well-labeled coral reef fish dataset called SCSFish2025. The dataset is the first publicly available coral reef fish dataset in the waters of China’s Nansha Islands, and was labeled by two senior Chinese fish experts based on video data from the in-situ online monitoring system for up to 333.6 h, resulting in 120,084 bounding boxes and species labels. The brief comparison of SCSFish2025 with other relevant datasets mentioned above is shown in Table 1. We believe that the dataset will contribute to the development and comparison of data-driven multimedia identification technologies, and promote the development of artificial intelligence technology in the automatic detection and identification of coral reef fish, thus better assisting marine biologists in the in-depth study of fish biodiversity and marine ecosystems.

DataSet

Data sources

The dataset is derived from the video captured by the underwater cameras of a set of coral reef in-situ online monitoring systems deployed by the South China Sea Ecological Center of China’s Ministry of Natural Resources at Subi Reef in Nansha, China. The video image data acquisition device is a self-developed device with the following parameters: resolution of 1080P (1920*1080), video frame rate of 30 frames, field of view of 80° diagonal, video format of “flv”, and pressure-resistant depth of 3000 m.

We select videos based on fish species and video information to form the dataset. First, in terms of fish species, the selected videos must include species that are commonly found in the sea (representative); include as many families and genera as possible (species-rich); include as many colors and morphological features as possible (morphologically-rich); and cover important indicator species of reef health as possible (indicative). Second, in terms of video information, considering the difficulty of AI model learning, we selected the videos according to the following principles: (1) sufficient light; (2) clearer water, less suspended matter in the water; (3) smaller water currents, the fish are basically in a natural swimming state, and don’t follow the rapid swaying of the water currents; (4) the lens protector does not have any obvious foreign matter that affects the video quality, such as algae, suspended matter, etc.; (5) coverage of different seasons; (6) coverage of different time periods during the day (morning, noon and evening); (7) higher concentration of fish species.

After the above screening, the final video dataset consists of 23 videos from March 2–4, September 19–21 and October 1, 2017, with a total of 11,956 frames and a total duration of 487 s. The statistical information of video number, date (year_month_day), start time, duration and number of frames is detailed in Table 2.

Labeling

Labeling basis and rules

The dataset was annotated by two experienced fish identification experts (one with 23 years of professional experience and the other with 9 years) with reference to the global fish dataset FishBase55, the book “Reef fish identification of Nansha islands“56 and the book “The Fishes of the Islands in the South China Sea“57, combined with their own rich experience in fish identification. The two experts completed their annotations separately and independently. Once completed, they reviewed the labels together and, for controversial labels, carefully reviewed the relevant literature and consulted to decide on the final labels.

The experts followed the following labeling rules: (1) the labeling was refined to “species” according to the criteria of the “class-order-family-genus-species” of organisms; (2) the labeling was based only on the current frame, i.e., it was carried out without the help of information from the previous and subsequent frames; (3) tiny fish with inconspicuous features or similar species in the same family and genus were uniformly labeled as “fish”; (4) for the three special cases of blur, occlusion, and poor identification due to the angle of the fish, they were labeled as “category_suffix” with the suffixes ‘_m’, ‘_z’, and ‘_a’, respectively.

Labeling tools and process

The labeling uses DarkLabel (https://github.com/darkpgmr/DarkLabel), a video/image labeling and annotation tool that can label object bounding boxes with IDs and names in video. There are two main labeling processes: initial labeling and verification, as shown in Fig. 1.

Initial labeling The initial labeling is interwoven between manual labeling and DarkLabel’s automatic labeling. The specific steps are as follows:

-

(1)

The experts manually labeled the first frame of the video using DarkLabel’s interface, including framing out the position of each distinguishable fish to a generate bounding box and specifying the category of the fish.

-

(2)

DarkLabel’s automatic labeling was enabled to automatically generate the labels for the next frame.

-

(3)

The experts fine-tuned the labels given by DarkLabel and manually labeled those missed by DarkLabel (such fine-tuning and additional annotation was necessary because the small fish can swim very fast, resulting in variable postures in a short adjacent frame, and the results of the automatic labeling may be biased).

-

(4)

Let DarkLabel do the automatic labeling again for the next frame.

-

(5)

Repeat steps (3) and (4) above until the last frame of the video.

Verification The verification is done by both expert manual inspection and the YOLOv5 (https://github.com/ultralytics/yolov5) object detection model. The specific steps are as follows:

-

(1)

Crop the bounding box generated by the initial labeling and sort it into categories according to the labeled categories to check if there are fish that obviously do not belong to a particular category. This step was done manually by the experts.

-

(2)

Use a YOLOv5 object detection model to train on 80% of the frames of the dataset obtained from the initial labeling to obtain a preliminary detection model, which is used to detect the whole dataset, and the false positives in the detection results are again checked manually by the experts.

After the above process, the final total number of labels for the 23 videos is 120,084, with an average of 3.7–19.6 labels per frame for each video, and an average of about 10.0 labels per frame. It took about 10 s to complete a single label (including adding, deleting, and modifying the label, as well as adjusting the size of the bounding box, etc.), and the total cumulative time spent is about 333.6 h. The labeling statistics for each video are shown in Table 2. There were 29 fish species labeled. As the full names of the fishes are too long, we used an abbreviated naming method, taking the first three letters of the genus name (Latin, same below) and the first two letters of the species name to form the abbreviated category name. The statistics of the normal, blurred (_m), occluded (_z), posture-altered (_a), and total (Sum) bounding boxes for each fish species are shown in Table 3. Examples of labeled video frames are shown in Fig. 2.

Labeling description

After labeling with DarkLabel, we exported the labels to .txt files in the format [fn, cname, id, x1, y1, w, h]. Each video corresponds to a .txt file, and one line in the file represents the labeling information of a single fish, where ‘fn’ represents the frame number, ‘cname’ the category abbreviation of the fish, and ‘id’ the fish number, all of which are − 1 as we do not distinguish the number of each fish. (x1, y1) and (w, h) represent the upper left coordinate and the width and height of the fish’s bounding box, respectively. For example, [0, chrma, -1, 374, 757, 56, 30] indicates that in frame 0 of the video there is a fish of category “chrma”, the upper left coordinate of its bounding box is [374,757], and the width and height of its bounding box are 56 and 30 pixels, respectively.

Dataset description

Dataset split

We split the training and test sets based on the videos. Due to the significant advantage in the number of dominant fish species, the distribution of the number of fish is extremely uneven. Therefore, the split ensures that all species are present in the training set, while the test set covers as many species as possible (so if a particular fish species only appears in one video, that video is included in the training set). We ended up with a training set of 16 videos and a test set of 7 videos. The bounding box statistics of each fish species included in the training and test sets are shown in Table 3. Among them, the categories 0,1,8,17,26 appeared in only one video, and thus all of them existed only in the training set.

We consider the samples with the suffixes ‘_m’, ‘_z’, and ‘_a’ in their labels to be hard samples. There are 28,987 hard samples in total, which is 24.1% of the total number of samples (instances) in the whole dataset. The training set and the test set have 20,160 and 8827 hard samples, respectively.

Dataset statistical analysis

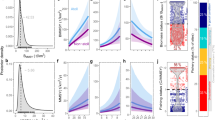

We analyzed the attributes of the proposed dataset SCSFish2025 and compared it with other datasets for fish detection such as FishCLEF201538 and SEAMAPD2154. Statistically, the average number of categories contained per image frame is significantly higher in SCSFish2025 than in FishCLEF2015 (5.3 vs. 1.4), as shown in Fig. 3(a). It is worth noting that FishCLEF2015 has a maximum of 5 categories in a frame (0.1% of the images), whereas 64.3% of the images in SCSFish2025 have more than 5 categories; only 0.2% of the images in SCSFish2025 have a single category, whereas 64.5% of the images in FishCLEF2015 do. Furthermore, the number of categories in each image frame of SCSFish2025 shows a normal distribution. Similarly, the average number of instances per frame is normally distributed and much higher than in FishCLEF2015 (10.0 vs. 1.4), as shown in Fig. 3(b). The most significant difference is that FishCLEF2015 has a maximum of 7 instances per frame, while SCSFish2025 has a maximum of 29 instances per frame, with 80.1% of the images having more than 7 instances. Less than 2% of the images in SCSFish2025 have only one instance, compared to 54.7% in FishCLEF2015.

Figure 3(c) shows the size distribution of the instances in the dataset. It can be seen that, overall, the instances in SCSFish2025 represent a smaller percentage of the total image area than in FishCLEF2015. The maximum percentage of instances in SCSFish2025 is 31%, while in FishCLEF2015 it is 99%. Furthermore, we examined the average size of the instances with less than 5% and 0.1% of the area in both datasets and found that FishCLEF2015 has only 88.10% of the instances with less than 5%, while SCSFish2025 has 99.08%. More notably, FishCLEF2015 has no instances with less than 0.1% of the area, while SCSFish2025 has 31.10%, i.e., the vast majority of the objects in SCSFish2025 are small or even tiny objects.

Figure 3(d) Shows an overview of the three fish detection datasets, SCSFish2025, FishCLEF2015 and SEAMAPD21, including the number of categories in the datasets and the average number of instances per category. Although SCSFish2025 has fewer categories than SEAMAPD21, it has more instances per category on average. SCSFish2025 has more categories and more instances than FishCLEF2015.

Statistical analysis of the datasets. (a) The average number of categories contained per image frame is significantly higher in SCSFish2025 than in FishCLEF2015. (b) The average number of instances per frame is normally distributed and much higher than in FishCLEF2015. (c) The instances in SCSFish2025 represent a smaller percentage of the total image area than in FishCLEF2015. (d) SCSFish2025 has more instances per category on average than SEAMAPD21and more categories and instances than FishCLEF2015.

Dataset characteristics

As can be seen from the dataset description and statistics above, the SCSFish2025 dataset contains a large number of fish instances, which can help the model to better understand and identify diverse objects in the video. The objects in the dataset cover a wide range of fish species, sizes, poses, and difficult cases such as occlusion, blur, and pose differences. In addition, the data has high-quality labels with accurate bounding boxes and category for each fish instance, which is suitable for training and evaluation in object detection tasks.

Furthermore, a variety of different fish species are included, making the dataset rich in fish morphology. For example, as shown in Fig. 4, the dataset includes: Fishes of the families Chaetodontidae and Zanclidae, which are richly colored and have obvious morphological features; Fishes of the family Mullidae, Halichoeres hortulanus and Scolopsis bilineata, which have no obvious morphological features but are richly colored; Chromis margaritifer and Hemigymnus melapterus, which have strongly contrasting coloration; and Dascyllus trimaculatus, Ctenochaetus striatus and Chromis weberi, which are darkly colored and also have no obvious morphological features. Moreover, the dataset contains a variety of important indicator species of coral reef health, such as some species of Pomacentridae, Chaetodontidae and Acanthuridae55,56,57,58,59,60. Among them, fishes of the family Pomacentridae are very sensitive to changes in water quality and can be considered as indicators of water quality in coral reef waters59. In addition, Pomacentridae often use healthy live corals as spawning grounds58,61, while some species of Chaetodontidae feed mainly on coral polyps. Therefore, a decline or extinction of these two fish families indicates that the coverage of living corals in the sea area has decreased and the coral reef ecosystem is degrading62. The herbivorous family Acanthuridae55,63 plays a vital role in controlling algal growth on coral reefs and removing sediment58,64,65,66, and reefs with diverse and abundant populations of herbivorous fish are more likely to maintain their resilience33,60,65. The inclusion of these indicator species will facilitate the subsequent tracking and alerting of indicator species, and the monitoring and assessment of marine ecosystem quality based on indicator species.

Algorithmic analysis

Baseline

We chose RT-DETRv267, YOLOv1068, SDD69 and Faster R-CNN70, four of the most advanced and representative object detection models available, as the baseline models to establish specific benchmarks for the dataset. Among them, YOLOv10, SDD and Faster R-CNN are typical representatives of CNN-based object detection models while RT-DETRv2 is a typical representative of Transformer-based object detection models. For both RT-DETRv2 and YOLOv10, we adopted their SMALL versions (RT-DETRv2-S and YOLOv10-S). For SDD, we adopted a pytorch version consistent with the original network, while for Faster R-CNN, we used a pytorch version with the backbone replaced with VGG16. The specific model architectures and parameters of the four models can be found in their official open source projects (https://github.com/THU-MIG/yolov10, https://github.com/lyuwenyu/RT-DETR ) or other related open source (https://github.com/bubbliiiing/ssd-pytorch, https://github.com/bubbliiiing/faster-rcnn-pytorch ).

Implementation details

As described in subsection 2.3.1, we split the 23 videos of the dataset into a training set and a test set, where the training set consisted of 16 videos with 8177 frames and the test set consisted of 7 videos with 3779 frames. To more accurately evaluate the performance and generalization ability of the AI models, we adopted a five-fold cross-validation approach for the training set. That is, the training set is randomly divided into five equal parts, and one of the subsets is sequentially selected as the validation set for evaluation, while the other four subsets are used for training. The above operation is repeated five times to ensure that each subset is used once as the validation set, and the performance measures of the five evaluations are averaged to obtain the final performance evaluation of the model.

We used the weights pre-trained on the COCO dataset to initialize the four baseline models. We employed a variety of data enhancement strategies, such as random cropping, random horizontal/vertical mirror flipping, multi-scale scaling, mosaic enhancement, etc., to improve the generalization ability of the model. Different optimizers such as AdamW71, SGD72 and Adam73 and learning rates were applied to different models. We employed mixed precision training throughout the training process of all models to enhance training efficiency, and adopted gradient clipping (with a maximum value set to 10) to stabilize the training process. The input images were uniformly adjusted to a resolution of 1920 × 1920. The batch size for each GPU was set to 16, and the total number of training epochs was 100. The experimental platform was equipped with two NVIDIA A800 GPUs. We adopted the PyTorch framework and configured CUDA 12.0 and Anaconda 2023.09 to achieve efficient computing and a stable operating environment. The specific training details of each model are shown in Table 4.

Results and analysis

We use mAP (mean Average Precision), which is commonly used in the field of object detection, as the evaluation metric. mAP is used to measure the average precision of the model under different categories and different IoU (Intersection over Union) thresholds, and to quantify the predictive power of the model through precision and recall to comprehensively measure the performance of the model. Specifically, mAP@50 calculates the average of AP (Average Precision) for all categories at an IoU threshold of 0.5, whereas mAP@[50: 95] considers a wider range of IoUs and calculates the average of AP for all categories at each 0.05 step of the IoU threshold from 0.50 to 0.95, which is a more comprehensive and rigorous evaluation metric. In addition to the performance of the full sample dataset, we are also concerned with the detection performance of objects of different sizes and hard samples.

Overall performance

As described in subsection 3.2, we perform a five-fold cross-validation on the training set to better evaluate the performance of the models. Table 5 shows the overall performance of the models, and it can be seen that overall, RT-DETRv2 performs the best of the four models, which may be due to the fact that the RT-DETRv2 is based on the Transformer architecture, which introduces a deformable attention mechanism and sets different numbers of sampling points for features of different scales to achieve selective multi-scale feature extraction by the decoder. The performance of YOLOv10 is slightly inferior, but it is still very satisfactory. This might be due to the fact that YOLOv10 integrates the optimized CSPNet74 backbone network and the neck structure of PANet75, thus possessing excellent feature extraction and multi-scale fusion capabilities. In contrast, although very efficient in processing local features, the SDD and Faster R-CNN model are relatively limited in capturing global dependencies, especially when faced with complex backgrounds or small objects, which may lead to false or missed detection. In addition, the architectures of SSD and Faster R-CNN are computationally inefficient and the Faster R-CNN converges slowly and may not fully converge even after 100 epochs of training. These factors together cause SSD and Faster R-CNN to be less effective than YOLOv10 and RT-DETRv2.

RT-DETRv2 and YOLOv10 show very satisfactory performance on the training set with five-fold cross-validation, but the performance on the independent test set is relatively inferior. Taking the detection results of the RT-DETRv2 model as an example, we further investigated the detection results of each fish species on the test set, as shown in Table 6; Fig. 5. It can be seen that the detection performance of bodax (Bodianus axillaris), cepar (Cephalopholis argus), chakl (Chaetodon kleinii), ctecy (Ctenochaetus cyanocheilus) and ctest (Ctenochaetus striatus) are all very low, which seriously affects the overall performance. By observing the confusion matrix in Fig. 5, we can see that in many cases these fish species were misclassified as fish or simply not recognized. We further analyzed the unrecognized samples of these fish species and found that most of these samples contained postural changes (bodax, chakl, ctecy, ctest), occlusion (cepar, ctest) and blur (chakl, ctest). It can be concluded that these hard samples do present difficulties for model learning. The performance of hard samples is further discussed in subsection 3.3.2.

Confusion matrix of identification results for each fish species on the test set (RT-DETRv2 model). The detection performance of bodax (Bodianus axillaris), cepar (Cephalopholis argus), chakl (Chaetodon kleinii), ctecy (Ctenochaetus cyanocheilus) and ctest (Ctenochaetus striatus) are all very low, which seriously affects the overall performance.

Performance on different subsets

-

(1)

On subsets of different sizes.

We divide the sample subsets of different sizes according to the area ratio of fish objects occupying the entire image frame. Specifically, samples with area ratios greater than 5% form the large object subset LargeSet; samples with area ratios between (0.1%, 5%] form the medium object subset MediumSet; samples with area ratios between (0.05%, 0.1%] form the small object subset SmallSet; and samples with area ratios less than or equal to 0.05% form the tiny object subset TinySet. After such a division, the number of objects in subsets of different sizes and their proportion in the whole dataset are shown in Table 7. Examples of samples of different sizes are shown in Fig. 6, where the blue bounding boxes denote large objects, the fuchsia bounding boxes denote medium objects, the lime green bounding boxes denote small objects, and the red bounding boxes denote tiny objects.

We investigated the detection performance of the models on the above subsets of different object sizes, as shown in Fig. 7. It can be observed that RT-DETRv2 and YOLOv10 still achieve significant advantages in the detection of samples of various different sizes, with RT-DETRv2 being the best. On the five-fold cross-validation of the training set, as the object size gradually increases, the recall of both models increases accordingly, which is in line with intuition. However, this conclusion does not fully hold for the test set. It can be seen that large objects are poorly detected on the test set, which we believe is mainly due to the fact that there is a serious imbalance in data distribution, and the proportion of all large object samples in the whole data set is only 0.9%, which leads to insufficient feature learning of large objects by the models, thus affecting their detection performance on the test set.

We can also observe that the SSD model performs poorly on both the test set and the five-fold cross-validation on large object detection, which may be mainly due to the fact that the size of anchor boxes of SSD has a direct impact on the detection accuracy. The size of the preset box used by the SSD model in this training is relatively small, which makes it difficult to effectively match and detect large objects, thus resulting in poor detection performance on these objects.

Faster R-CNN shows obvious shortcomings in the detection of tiny objects, which may be due to the fact that the deep network structure of Faster R-CNN makes the tiny objects occupy only a limited number of pixels or even disappear after multiple downsampling. Moreover, the anchor scale of Faster R-CNN cannot take into account too small targets, which results in insufficient number of positive samples for tiny targets and thus affects the training effect. In addition, the NMS post-processing may also mistakenly delete the real bounding boxes due to the small target spacing.

-

(2)

On hard sample subsets.

Regarding the hard samples, as described in subsections 2.2.1 and 2.3.1, we have added the suffixes ‘_m’, ‘_z’ and ‘_a’ to the labels of the three special cases that are difficult to identify due to blur, occlusion and fish body angle, respectively, and these samples directly form the subset of hard samples HardSet. The number of objects in three different hard sample subsets and their proportion in the whole dataset are shown in Table 8.

We also examined the detection performance of the models on three subsets of hard samples, and the recalls are shown in Fig. 8. It can be seen that, overall, RT-DETRv2 and YOLO still perform better than the other two models, with RT-DETRv2 being the best, but both of their performance on the three test subsets of hard samples is still far from satisfactory. We have analyzed some of the misidentified hard samples, and found that these samples themselves are indeed very difficult to identify, as shown in Fig. 9. These particular samples may require the design of specific modules or strategies to learn from them. Occlusion samples (_z) seem to be more challenging for model recognition than pose changes (_a) and blur (_m), which we believe is mainly due to the fact that many fish differ from each other only in local areas, and occlusions occurring in these local areas can greatly affect the recognition results. Therefore, it is necessary to design learning modules or learning strategies specifically for hard samples in further research. On the test set, the SSD model performs best in the recognition of blurred objects, which may be due to the fact that many blurred objects in this dataset are small in size, and the small-size preset box of SSD happens to be able to match these small objects well. Faster R-CNN has poor performance on both the test set and the five-fold cross-validation on blurred object detection. This can also be attributed to the convergence problem of Faster R-CNN.

Comparison of resource efficiency of model training

To further compare the resource efficiency of model training, we have calculated the number of parameters, GFLOPs and FPS of the four models, as shown in Table 9. From the table, we can see that YOLOv10 has the least number of parameters and GFLOPs while having the highest FPS, which shows that YOLOv10 is the most resource-efficient model. RT-DETRv2 comes in the second place. SSD and Faster R-CNN, on the other hand, have larger number of parameters and computation. Therefore, if coral reef fish detection models are to be deployed in real underwater environments in future, YOLOv10 or RT-DETRv2 should be prioritized in order to reduce the demand for training resources at the edge and improve detection efficiency.

Discussion

We propose a new large-scale dataset for coral reef fish detection to advance the object detection algorithm, which covers a rich diversity of coral reef fish and contains labels for a large number of fish-specific instances (labeling took approximately 333.6 h). The dataset has the following obvious advantages: First, the dataset contains more coral reef fish species than existing datasets of the same type, and the number of categories and instances per frame is significantly increased, which can help AI models to better understand and identify the diversity objects in the video; Secondly, there are many hard samples in the dataset, such as occlusion, blur, posture difference, etc., and learning of these hard samples is conducive to improving the generalization ability of the model; Thirdly, the dataset has high-quality labels, with accurate bounding boxes and category labels for each fish instance, which is suitable for the training and evaluation of the object detection task; Finally, the dataset covers important indicator species, and by detecting, tracking, and alerting of indicator species, it can be used to monitor and evaluate the marine ecological environment in a timely manner. Therefore, the proposed dataset has far-reaching significance for the conservation of the marine ecological environment.

However, there are some limitations to this work. First of all, the data in this dataset is only selected from a single camera view. The camera is fixed and cannot be moved or rotated, so the field of view is fixed, resulting in an overly monotonous background; Secondly, the dataset does not cover the evening hours or all seasons, so it does not reflect the situation at all real times; Thirdly, the currently selected videos are those with an unobstructed lens, clear water and few suspended objects, which cannot fully reflect all possible underwater situations, such as the lens protective cover being occluded by attachments, the water body being filled with a large amount of suspended matter due to strong currents, or the water body being turbid due to the aggregation of zooplankton and phytoplankton. These issues may lead to the fact that models trained based on this dataset may not generalize well to other coral reef fish detection scenarios. Therefore, we will continue to collect and propose datasets that cover more camera views, more time periods (including night), more seasons, more lens occlusions, and more water turbidity situations, aiming at training models with more generalization ability to adapt to the coral reef fish detection task in any situation.

Conclusion

In this paper, we present SCSFish2025, a large coral reef fish identification dataset with more than 120,000 accurate bounding boxes and category labels, which allows the comparison of computer vision identification techniques and encourages computer vision researchers to tackle interdisciplinary challenges involving ecological and environmental data. The computer vision recognition models developed from this dataset will help marine biologists to thoroughly study marine ecosystems and fish biodiversity in the future, thereby ultimately contributing to the conservation of diversity and sustainable development of marine ecosystems. In subsequent studies, we will endeavor to collect datasets with wider spatial and temporal coverage (multiple camera views, multiple time periods, different water quality conditions, etc.), with the aim of assisting researchers in implementing real-time marine ecosystem conservation that is more applicable to real marine environments.

Data availability

All relevant data supporting the findings of this study are available in the Github Repository: https://github.com/FudanZhengSYSU/SCSFish2025.

References

Chen, P. Y., Chen, C. C., Chu, L. F. & McCarl, B. Evaluating the economic damage of climate change on global coral reefs. Glob. Environ. Change. 30, 12–20 (2015).

Furtado, D. P. et al. # deolhonoscorais: a polygonal annotated dataset to optimize coral monitoring. PeerJ 11, e16219 (2023).

Bruno, J. F. & Selig, E. R. Regional decline of coral cover in the Indo-Pacific: timing, extent, and subregional comparisons. PLoS One 2(8), e711 (2007).

Hughes, T. P. et al. Spatial and Temporal patterns of mass bleaching of corals in the anthropocene. Science 359 (6371), 80–83 (2018).

Gonzalez-Rivero, M. et al. Monitoring of coral reefs using artificial intelligence: a feasible and cost-effective approach. Remote Sens. 12 (3), 489 (2020).

Villon, S. et al. Coral reef fish detection and recognition in underwater videos by supervised machine learning: Comparison between Deep Learning and HOG + SVM methods. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems 160–171 (2016).

Chatfield, K. Return of the devil in the details: delving deep into convolutional nets. arXiv preprint arXiv:1405.3531. (2014).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. (2014).

Zeiler, M. (ed Fergus, R.) Visualizing and Understanding convolutional networks. Proc. Eur. Conf. Comput. Vis. I 13–818 (2014).

Becken, S. et al. Monitoring Aesthetic Value of the Great Barrier Reef by Using Innovative Technologies and Artificial Intelligence (Griffith Institute for Tourism, Griffith University, 2018).

Villon, S. et al. A Deep Learning algorithm for accurate and fast identification of coral reef fishes in underwater videos. PeerJ Preprints 6, e26818v26811 (2018).

Shi, C. Coral fish detection and recognition based on deep learning. Master Dissertation. Beijing Jiaotong University. (2019).

Shi, C., Jia, C. & Chen, Z. FFDet: A fully convolutional network for coral reef fish detection by layer fusion. In Proceedings of the IEEE Visual Communications and Image Processing 1–4 (2018).

Wang, H. Super-resolution reconstruction and detection of coral reef fish images based on deep learning. Master Dissertation. Beijing Jiaotong University (2019).

Salman, A. et al. Automatic fish detection in underwater videos by a deep neural network-based hybrid motion learning system. ICES J. Mar. Sci. 77 (4), 1295–1307 (2020).

Yusup, I., Iqbal, M. & Jaya, I. Real-time reef fishes identification using deep learning. IOP Conf. Series: Earth Environ. Sci. 429 (1), 012046 (2020).

Alshdaifat, N. F. F., Talib, A. Z. & Osman, M. A. Improved deep learning framework for fish segmentation in underwater videos. Ecol. Inf. 59, 101121 (2020).

Zhang, S. et al. Research on fish identification in tropical waters under unconstrained environment based on transfer learning. Earth Sci. Inf. 15 (2), 1155–1166 (2022).

Li, Y. et al. A real-time edge-AI system for reef surveys. In Proceedings of the 28th Annual International Conference on Mobile Computing and Networking 903–906 (2022).

Truong, P. Crown-of-Thorns starfish detection by state-of-the-art YOLOv5. Master Dissertation. Aalto University. (2022).

Nguyen, Q. T. Detrimental starfish detection on embedded system: a case study of YOLOv5 deep learning algorithm and tensorflow lite framework. J. Comput. Sci. Inst. 23, 105–111 (2022).

Heenaye-Mamode Khan, M., Makoonlall, A., Nazurally, N. & Mungloo-Dilmohamud, Z. Identification of crown of Thorns starfish (COTS) using convolutional neural network (CNN) and attention model. PLoS One 18(4), e0283121 (2023).

Runyan, H. et al. Automated 2D, 2.5D, and 3D segmentation of coral reef pointclouds and orthoprojections. Front. Rob. AI. 9, 884317 (2022).

Sharan, S., Kininmonth, S. & Mehta, U. V. Automated CNN based coral reef classification using image augmentation and deep learning. Int. J. Eng. Intell. Syst. 29 (4), 253–261 (2021).

Pavoni, G. et al. TagLab: AI-assisted annotation for the fast and accurate semantic segmentation of coral reef orthoimages. J. Field Robot. 39 (3), 246–262 (2022).

Scucchia, F., Sauer, K., Zaslansky, P. & Mass, T. Artificial intelligence as a tool to study the 3D skeletal architecture in newly settled coral recruits: insights into the effects of ocean acidification on coral biomineralization. J. Mar. Sci. Eng. 10 (3), 391 (2022).

Williams, B. et al. Enhancing automated analysis of marine soundscapes using ecoacoustic indices and machine learning. Ecol. Ind. 140, 108986 (2022).

Rodriguez-Ramirez, A. et al. A contemporary baseline record of the world’s coral reefs. Sci. Data. 7 (1), 355 (2020).

Quigley, K. M. & van Oppen, M. J. H. Predictive models for the selection of thermally tolerant corals based on offspring survival. Nat. Commun. 13 (1), 1543 (2022).

Giles, A. B., Ren, K., Davies, J. E., Abrego, D. & Kelaher, B. Combining drones and deep learning to automate coral reef assessment with RGB imagery. Remote Sens. 15 (9), 2238 (2023).

Nunes, J. A. C., Cruz, I. C., Nunes, A. & Pinheiro, H. T. Speeding up coral reef conservation with AI-aided automated image analysis. Nat. Mach. Intell. 2 (6), 292–292 (2020).

Allgeier, J. E., Burkepile, D. E. & Layman, C. A. Animal pee in the sea: consumer-mediated nutrient dynamics in the world’s changing oceans. Glob. Change Biol. 23 (6), 2166–2178 (2017).

Bellwood, D. R., Hughes, T. P., Folke, C. & Nyström, M. Confronting the coral reef crisis. Nature 429 (6994), 827–833 (2004).

Brandl, S. J. et al. Demographic dynamics of the smallest marine vertebrates fuel coral reef ecosystem functioning. Science 364 (6446), 1189–1192 (2019).

Steneck, R. S. et al. Managing recovery resilience in coral reefs against climate-induced bleaching and hurricanes: a 15 year case study from bonaire, Dutch Caribbean. Front. Mar. Sci. 6, 265 (2019).

Niu, W., Liu, Y. & Lin, R. Research progress of the health assessment method of coral reef ecosystem. J. Mar. Sci. 27 (4), 77–85 (2009).

Yingying, W., Xinming, L., Hui, H., Yuyang, Z. & Dewen, D. Study on the health assessment method of typical coral reef ecosystem in the South China sea. J. Trop. Oceanogr. 40 (4), 84–97 (2021).

Joly, A. et al. LifeCLEF 2015: multimedia life species identification challenges. In Experimental IR. Meets Multilinguality Multimodality Interaction: 6th Int. Conf. CLEF Association CLEF’15 Toulouse France September 8–11 2015, vol. 9283 462–483 (2015).

Ditria, E. M., Connolly, R. M., Jinks, E. L. & Lopez-Marcano, S. Annotated video footage for automated identification and counting of fish in unconstrained seagrass habitats. Front. Mar. Sci. 8, 629485 (2021).

Gilby, B. L. et al. Umbrellas can work under water: using threatened species as indicator and management surrogates can improve coastal conservation. Estuar. Coast. Shelf Sci. 199, 132–140 (2017).

Langlois, T. et al. A field and video annotation guide for baited remote underwater stereo-video surveys of demersal fish assemblages. Methods Ecol. Evol. 11 (11), 1401–1409 (2020).

Whitmarsh, S. K., Fairweather, P. G. & Huveneers, C. What is big BRUVver up to? Methods and uses of baited underwater video. Rev. Fish Biol. Fish. 27, 53–73 (2017).

Joly, A. et al. R., Lifeclef 2014: multimedia life species identification challenges. In Information Access Evaluation. Multilinguality, Multimodality, and Interaction: 5th International Conference of the CLEF Initiative, CLEF 2014, Sheffield, UK, September 15–18, 2014, vol. 8685 229–249 (2014).

Joly, A. et al. LifeCLEF 2016: multimedia life species identification challenges. In Experimental IR. Meets Multilinguality Multimodality Interaction: 7th Int. Conf. CLEF Association CLEF 2016 Évora Portugal September 5–8 2016, vol. 9822 286–310 (2016).

Joly, A. et al. Lifeclef 2017 lab overview: multimedia species identification challenges. Experimental IR. Meets Multilinguality Multimodality Interaction: 8th Int. Conf. CLEF Association CLEF 2017 Dublin Irel. September 11–14 2017, vol. 10456 255–274 (2017).

Spampinato, C., Palazzo, S. & Kavasidis, I. A texton-based kernel density Estimation approach for background modeling under extreme conditions. Comput. Vis. Image Underst. 122, 74–83 (2014).

Ulucan, O., Karakaya, D. & Turkan, M. A large-scale dataset for fish segmentation and classification. In 2020 Innovations in Intelligent Systems and Applications Conference (2020).

Anantharajah, K. et al. Local inter-session variability modelling for object classification. In IEEE Winter Conference on Applications of Computer Vision 309–316 (2014).

Fisher, R. B., Chen-Burger, Y. H., Giordano, D., Hardman, L. & Lin, F. P. Fish4Knowledge: collecting and analyzing massive coral reef fish video data (Vol. 104). Springer (2016).

Zhuang, P., Wang, Y. & Qiao, Y. Wildfish: a large benchmark for fish recognition in the wild. In Proceedings of the 26th ACM international conference on Multimedia 1301–1309 (2018).

Jäger, J. et al. Croatian fish dataset: Fine-grained classification of fish species in their natural habitat. Swansea: Bmvc, 2. In Procedings of the Machine Vision of Animals and their Behaviour Workshop 2015. Swansea: British Machine Vision Association (2015).

Australian Institute of Marine Science (AIMS), U. o. W. A. U. a. C. U. OzFish Dataset - Machine learning dataset for Baited Remote Underwater Video Stations (2019). https://doi.org/10.25845/5e28f062c5097.

Saleh, A. et al. A realistic fish-habitat dataset to evaluate algorithms for underwater visual analysis. Sci. Rep. 10 (1), 14671 (2020).

Boulais, O. et al. SEAMAPD21: a large-scale reef fish dataset for fine-grained categorization. In Proceedings of the FGVC8: The Eight Workshop on Fine-Grained Visual Categorization (2021).

Froese, R. & Pauly, D. (eds). FishBase. World Wide Web electronic publication. www.fishbase.org, version (10/2024) (2024).

Fang, H. & Lv, X. Reef Fish Identification of Nansha Islands (China Ocean University, 2019).

South China Sea Fisheries Institute, C. N. B. o. A. P. & et al. The Fishes of the Islands in the South China Sea (Science, 1979). Xiamen Fisheries College, Institute of Oceanology, Academia Sinica.

Sale, P. F. The ecology of fishes on coral reefs. Elsevier (2013).

Reese, E. S. Reef fishes as indicators of conditions on coral reefs. In Proceedings of the Colloquium on Global Aspects of Coral Reefs: Health, Hazards, and History. (1993).

Cheal, A. et al. Coral–macroalgal phase shifts or reef resilience: links with diversity and functional roles of herbivorous fishes on the great barrier reef. Coral Reefs. 29, 1005–1015 (2010).

Coker, D. J., Wilson, S. K. & Pratchett, M. S. Importance of live coral habitat for reef fishes. Rev. Fish Biol. Fish. 24 (1), 89–126 (2014).

Wang, T. et al. Species composition characteristics analysis of Qilianyu reef fishes of Xisha Islands. J. Fisheries China. 29 (1), 102–117 (2022).

Edwards, C. B. et al. Global assessment of the status of coral reef herbivorous fishes: evidence for fishing effects. Proc. R. Soc. B: Biol. Sci. 281 (1774), 20131835 (2014).

Hughes, T. P. et al. Phase shifts, herbivory, and the resilience of coral reefs to climate change. Curr. Biol. 17 (4), 360–365 (2007).

Goatley, C. H., Bonaldo, R. M., Fox, R. J. & Bellwood, D. R. Sediments and herbivory as sensitive indicators of coral reef degradation. Ecol. Soc. 21 (1), 29 (2016).

Hughes, T. P., Graham, N. A., Jackson, J. B., Mumby, P. J. & Steneck, R. S. Rising to the challenge of sustaining coral reef resilience. Trends Ecol. Evol. 25 (11), 633–642 (2010).

Lv, W. et al. RT-DETRv2: Improved baseline with bag-of-freebies for real-time detection transformer. arXiv preprint arXiv: 2407.17140 (2024).

Wang, A. et al. YOLOv10: Real-time end-to-end object detection. in Proceedings of the Conference on Neural Information Processing Systems (2024).

Liu, W. et al. SSD: Single shot multiBox detector. In Proceedings of the European Conference on Computer Vision (2016).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39 (6), 1137–1149 (2017).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. In Proceedings of the International Conference on Learning Representations (2019).

LeCun, Y. et al. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1 (4), 541–551 (1989).

Kingma, D. P. & Ba, J. L. Adam: a method for stochastic optimization. In Proceedings of the International Conference for Learning Representations. (2015).

Wang, C. et al. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (2020).

Liu, S., Qi, L., Qin, H., Shi, J. & Jia, J. Path aggregation network for instance segmentation. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (2018).

Acknowledgements

This work is supported by the Open Fund of Nansha Islands Coral Reef Ecosystem National Observation and Research Station (NO. NSICR23102), and the Science and Technology Development Foundation of South China Sea Bureau, Ministry of Natural Resources (No. 230105).

Author information

Authors and Affiliations

Contributions

M.W.: Data curation, Investigation, Writing, Review & editing.W.X.: Data curation, Investigation, Validation, Visualization. Y.W.: Data curation, Validation, Visualization. H.J.: Funding acquisition, Project administration, Resources.Y.G.: Data curation, Investigation, Conceptualization.Z.C.: Conceptualization, Funding acquisition, Project administration, Resources.F.Z.: Conceptualization, Formal analysis, Methodology, Writing, Review & editing.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, M., Xiao, W., Wang, Y. et al. SCSFish2025: a large dataset from South China sea for coral reef fish identification. Sci Rep 15, 30091 (2025). https://doi.org/10.1038/s41598-025-14785-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-14785-4