Abstract

Accurate athlete pose estimation in basketball is crucial for game analysis, player training, and tactical decision-making. However, existing pose estimation methods struggle to effectively address common challenges in basketball, such as motion blur, occlusions, and complex backgrounds. To tackle these issues, this paper proposes a basketball action pose estimation framework, which first leverages a multi-dimensional data stream network to extract spatial, temporal, and contextual information separately. Specifically, the spatial stream branch aims to extract multi-scale features and captures the spatial pose information of players in single-frame images through feature fusion and spatial attention mechanisms. The temporal stream branch merges feature maps with adjacent frames, effectively capturing player motion information across consecutive frames. The context stream branch generates a global context feature vector that encodes the entire image, offering a holistic perspective for pose estimation. Subsequently, we designed a feature fusion module that integrates early fusion, late fusion, and hybrid fusion strategies to fully utilize multi-modal information. Finally, we introduced a stage-wise streaming training module that progressively enhances the model’s accuracy and generalization ability through three stages. Experimental results demonstrate that the proposed framework significantly improves the accuracy and robustness of basketball action pose estimation, particularly excelling in scenarios with high dynamics and complex backgrounds.

Similar content being viewed by others

Introduction

The dynamic and complex nature of basketball necessitates high-precision athlete pose estimation to support applications such as motion analysis, athlete training improvement, and game strategy formulation1,2. Accurate pose estimation not only enhances the viewing experience of the game but also provides critical performance feedback for coaches and players3,4. With the advancement of computer vision technology, the automatic detection and tracking of human poses in videos using deep learning methods5,6,7 have become a research hotspot.

Despite the significant progress made in pose estimation within the field of computer vision, current models still face several challenges in specific high-dynamic sports activities like basketball8,9. Firstly, (1) the high speed and frequent interactions between players in basketball lead to prevalent motion blur and occlusion, resulting in the loss of crucial motion information in key frames, which in turn affects the accuracy of subsequent action recognition. Existing frame-by-frame pose estimation methods, such as OpenPose10 and AlphaPose11, often struggle to effectively utilize complementary information within the time series when handling continuous actions, thereby failing to fully reconstruct the occluded or blurred action details. This limitation arises because these methods primarily rely on single-frame image information, lacking effective utilization of temporal continuity. Moreover, these methods are deficient in their ability to leverage complementary information from historical and future frames within a time series to supplement missing features in the current frame, limiting their performance in dynamic environments. Secondly, (2) the varying camera angles and complex background environments in basketball games lead to a high diversity in the extracted human body shapes, further complicating pose estimation. For instance, JointFlow12 performs well under fixed angles but its performance significantly degrades in cross-scene applications because it lacks the adaptability to geometric variations of the human body under different viewing angles. DeepCut13 often underperforms when dealing with viewpoint differences and complex backgrounds due to its failure to effectively integrate long-term viewpoint changes and background information, leading to a decrease in accuracy in varied environments.

To address the aforementioned challenges, an effective basketball action pose estimation model must possess the following capabilities: (1) the ability to effectively extract and utilize spatiotemporal information to compensate for information loss caused by motion blur and occlusion; (2) the ability to adapt to different camera angles and complex background environments to improve the model’s robustness and generalization capabilities. Therefore, this paper proposes a basketball action pose estimation framework based on multi-stream feature fusion and stage-wise training. The framework first utilizes a multi-dimensional data stream network module to separately extract spatial, temporal, and contextual information. Specifically, to overcome the challenges of motion blur and occlusion, we designed the spatial and temporal stream branches. The spatial stream branch utilizes the ResNet deep network to extract multi-scale features and focuses on extracting the spatial pose information of players in single-frame images through multi-scale feature fusion and spatial attention mechanisms. The temporal stream branch integrates the feature maps extracted by the ResNet network with differential images of adjacent frames and models the dynamic changes along the temporal dimension through a temporal convolution module, focusing on capturing the motion information of players across consecutive frames. To tackle the challenges posed by varying viewpoints and backgrounds, we introduced the context stream branch, which leverages the global features extracted by ResNet and generates a global context feature vector encoding the entire image’s information through global average pooling and fully connected layers. To effectively integrate multimodal information, we introduced a basketball action pose feature fusion module that combines early fusion, late fusion, and hybrid fusion strategies to enhance the model’s robustness and its ability to capture different types of information. Finally, to optimize the model training process, we proposed a stage-wise streaming training module consisting of three stages: single-stream pre-training, freezing and fine-tuning, and joint optimization, progressively improving the model’s accuracy and generalization capability.

The main contributions of this paper are as follows:

-

We propose a multi-dimensional data stream network module for basketball action pose estimation that effectively captures spatial, temporal, and contextual information, laying the foundation for subsequent fusion and training.

-

We introduce a basketball action pose feature fusion module that incorporates early fusion, late fusion, and hybrid fusion strategies to enhance the model’s robustness and its ability to capture different types of information.

-

We construct a stage-wise streaming training module, which, through fine-tuned adjustments to the training process, enhances the model’s ability to capture action continuity and adapt to viewpoint variations.

-

Extensive experimental validation on the PoseTrack 2017 and PoseTrack 2018 benchmark datasets demonstrates the superior performance of the proposed model, particularly in scenarios involving high dynamics and complex backgrounds.

The remainder of this paper is structured as follows: “Related work” reviews related work in pose estimation and video understanding. “Method” details our proposed multi-stream network, feature fusion module, and staged training strategy. “Experiments” presents our extensive experimental validation, including comparisons, ablation studies, and robustness analysis. Finally, “Conclusion” concludes the paper and discusses future directions.

Related work

Human pose estimation

Human pose estimation aims to identify and locate key points of the human body (such as the head, shoulders, elbows, wrists, hips, knees, and ankles) from images or videos, thereby constructing a structured representation of the human pose. Human pose estimation is a highly challenging problem as it involves solving various issues, including the variability of human poses, occlusions, and image quality. Early pose estimation methods primarily relied on hand-crafted features and graphical models. For example, Deformable Part Models (DPM)14 use deformable part models to represent the human body and employ HOG (Histogram of Oriented Gradients) features and SVM (Support Vector Machine) classifiers to detect body parts. These traditional methods are often computationally intensive and have limited effectiveness in handling complex scenes and occlusions. In recent years, deep learning technologies have revolutionized the field of human pose estimation, significantly improving the accuracy and efficiency of pose estimation. Among these, OpenPose10 is a multi-person pose estimation method based on Part Affinity Fields (PAFs). This method uses a CNN network to simultaneously predict human key points and the PAFs that connect these key points, and then connects the key points into a complete human pose through a greedy inference algorithm. AlphaPose11 is a region-based multi-person pose estimation framework that first uses an object detector to locate the human regions in an image and then performs single-person pose estimation for each region. This method effectively addresses the occlusion problem in multi-person pose estimation. HRNet (High-Resolution Network)15 is a high-resolution network for human pose estimation that maintains high-resolution feature representations throughout the network, thereby improving the accuracy of key point localization.

Recently, significant progress has also been made in 2D-to-3D human pose estimation, often leveraging sophisticated Transformer architectures. For example, MHAFormer16 employs a diffusion model to generate multiple 3D pose hypotheses to address depth ambiguity. Similarly, MLTFFPN17 uses a multi-level Transformer and a feature frame padding network to better handle temporal dependencies and edge-frame information. While these methods demonstrate the power of Transformers for temporal modeling, their primary focus is on the 3D lifting task. In contrast, our work tackles the different but related challenge of robust 2D pose estimation in dynamic, multi-person videos. Our key novelty lies not in generating multiple geometric hypotheses, but in introducing a dedicated Contextual Stream that leverages global scene semantics to resolve ambiguities-a fundamentally different approach to improving pose estimation robustness.

Although deep learning-based human pose estimation methods have made significant progress, they still have some limitations when dealing with basketball action pose estimation: rapid movement (basketball players’ actions are very fast, leading to motion blur and drastic pose changes, which challenge pose estimation), occlusion (basketball players frequently engage in physical contact and occlusion, making it difficult to accurately identify and locate key points), and complex backgrounds (the background in basketball games is usually complex, such as spectators, billboards, and lighting, which can interfere with pose estimation). While powerful, these methods often rely on single-frame information or generic temporal models, and their attention mechanisms are not explicitly designed to interpret the high-level, global game state crucial for resolving ambiguities in sports scenarios. To address these issues, this paper proposes a basketball action pose estimation framework based on multi-stream feature fusion and stage-wise training, which effectively captures spatial, temporal, and contextual information and improves the model’s robustness in handling complex scenes and high-dynamic actions.

Video action recognition

Video action recognition aims to identify and classify ongoing actions from video sequences, such as running, jumping, and shooting. This technology has broad applications in video understanding, sports analysis, security surveillance, and human-computer interaction. Video action recognition is a challenging problem as it requires solving the following difficulties: temporal variations, spatial variations, and category differences. Early video action recognition methods were primarily based on two-dimensional convolutional neural networks (2D CNN). For example, Two-Stream Networks18 is a dual-stream network structure that separately processes static image frames and optical flow information of videos to capture the spatial and temporal features of actions. Our framework extends this paradigm by introducing a third, dedicated contextual stream, designed to move beyond local motion and capture global scene semantics essential for team sports analysis. C3D (Convolutional 3D)19 uses three-dimensional convolutional kernels to extract spatiotemporal features of videos, allowing for better capture of the dynamic process of actions. To better capture the spatiotemporal features of videos, researchers have proposed methods based on three-dimensional convolutional neural networks (3D CNN). I3D (Inflated 3D ConvNet)20 extends 2D convolutional neural networks (2D CNN) to three dimensions and initializes the model using a pre-trained model on the ImageNet dataset, effectively improving the model’s performance. SlowFast Networks21 is a dual-pathway network structure where one pathway processes videos at a slower frame rate to capture spatial semantic information, while the other pathway processes videos at a faster frame rate to capture rapid motion information. Recently, Transformer models have achieved tremendous success in the field of natural language processing, and researchers have begun applying them to video action recognition. TimeSformer22 is a video action recognition model based on the Transformer architecture, treating video frame sequences as a spatiotemporal sequence and using the self-attention mechanism to capture the global spatiotemporal dependencies of the video. ViT for Videos23 extends the Vision Transformer (ViT) model to the video domain, using three-dimensional patches to represent video frames and employing the Transformer model to learn spatiotemporal feature representations of videos. In contrast to using a monolithic Transformer as the backbone, our approach strategically integrates a Transformer within the contextual stream, leveraging its power for global context modeling while retaining specialized convolutional streams for efficient spatial and temporal feature extraction in a hybrid architecture.

Beyond action recognition, advancements in related video understanding tasks like video object detection (VOD) and visual tracking also provide valuable context. In VOD, HyMATOD24 is a Transformer-based framework with a hybrid multi-attention module to enhance feature aggregation across frames. In the domain of visual tracking, methods based on discriminative correlation filters (DCF) have shown great success. For instance, ASTABSCF25 and DSRVMRT26 introduce advanced regularization and background suppression schemes to improve tracking robustness. While these works address similar high-level challenges like temporal consistency and background clutter, their underlying techniques and specific tasks differ significantly from ours. Our framework is not based on correlation filters and is designed for the specific problem of multi-person pose estimation, which requires detecting and associating numerous key points rather than tracking a single object bounding box. Our multi-stream design is tailored to decompose the pose estimation problem, a specialization does not present in these more generic tracking or detection architectures. The concept of fusing information from multiple sources is also central to other domains, such as multimodal emotion recognition. The HMATN27 framework proposes a hybrid multi-attention network to effectively fuse audio and visual data. This highlights the general trend of using sophisticated fusion mechanisms to handle complementary and sometimes inconsistent information from different streams. However, a key distinction of our work is the nature of the streams being fused. While HMATN fuses heterogeneous modalities , our framework fuses three homogeneous but functionally distinct feature streams: the local spatial pose, the temporal motion, and the global scene context. This unique decomposition of visual information and its subsequent fusion are specifically tailored to the nuances of human action pose estimation in complex sports environments.

Although existing video action recognition methods have made significant progress, they still have some limitations in handling basketball action recognition, particularly in fine-grained action recognition and player interaction modeling. In fine-grained action recognition, basketball games involve many fine-grained actions, such as different techniques in dribbling, passing, and shooting, which current methods struggle to accurately recognize. In player interaction modeling, basketball is a team sport, and player interactions are crucial for understanding the game; however, existing methods struggle to effectively model these interactions. To address these issues, the basketball action pose estimation framework proposed in this study can serve as a foundation for providing richer pose information for recognizing and analyzing finer-grained basketball actions and supporting more in-depth analysis of player interactions.

To provide a clearer perspective on the landscape of related research and to better situate our contributions, Table 1 offers a comprehensive comparison between our framework and key state-of-the-art methods across various video and image understanding tasks.

Method

Overview

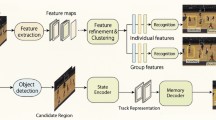

Overview of our model. The model has three module: basketball action pose multi-dimensional data stream network module(BAP-MDSNM), feature fusion module for basketball action pose estimation(FFM) and staged stream training module(SST). FFM contains three strategies are early fusion, late fusion and hybrid fusion. SST consists of three training options: single-stream pre-training, freezing and fine-tuning, and co-optimization.

Basketball action pose multi-dimensional data stream network module (BAP-MDSNM)

Accurately capturing complex actions and high-speed movements in basketball is essential for pose estimation. To address this, we propose the Basketball Action Pose Multi-Dimensional Data Stream Network Module (BAP-MDSNM), which is shown in Fig. 1. Our design decomposes this complex problem into three complementary sub-problems, each handled by a specialized stream: the Spatial Stream for static pose, the Temporal Stream for motion dynamics, and the novel Contextual Stream for global scene context. This systematic decomposition optimizes separate network streams to learn specialized and non-redundant features, which are then optimally integrated, thereby enhancing overall accuracy and efficiency in pose estimation.

We use ResNet28 as the backbone network, and different branches generate independent network streams to process spatial, temporal, and contextual information. Given an input image frame sequence \((I_t, T_{t+1}, \dots )\), the formula is as follows:

where \(I_t \in {\mathbb {R}}^{W \times H \times 3}\) represents a single image frame at time \(t\), with \(W\) and \(H\) being the width and height of the image, respectively. The output consists of multi-level feature maps \((F_1, F_2, \dots , F_L)\), where \(F_i \in {\mathbb {R}}^{W_i \times H_i \times C_i}\) represents the feature map at the \(i\)th layer, \(W_i\) and \(H_i\) are the width and height of the feature map, \(C_i\) is the number of channels, and \(L\) is the number of layers.

Spatial stream

To analyze the static pose information of basketball players, including body joint positions and orientation within single frames, we designed a spatial stream. This stream extracts spatial features to identify players’ static poses and relative positions on the basketball court. To better capture spatial information at different scales, we upsample and concatenate multi-level feature maps from intermediate ResNet layers (e.g., \(F_{L-1}, F_{L-2}\)) with \(F_L\) to obtain richer spatial features. To explicitly model spatial relationships between body joints and improve key point localization accuracy, we integrate a Spatial Attention Mechanism (SAM), which learns dependencies between different spatial positions by focusing on task-relevant regions in the feature map. The formula is:

where \(F_L \in {\mathbb {R}}^{W_L \times H_L \times C_L}\) is the last feature map from ResNet, \(W\) and \(H\) represent the width and height of the image, \(\phi _{SAM}(\cdot ; \theta _{SAM})\) denotes the spatial attention mechanism with parameters \(\theta _{SAM}\), \(Concat\) denotes the concatenation operation, and \(Upsample\) denotes the upsampling operation. \({\mathscr {H}}_s^{t} \in {\mathbb {R}}^{W' \times H' \times K}\) is the heatmap of player key points output by the spatial stream.

The goal of the Spatial Attention Mechanism is to make the model focus more on task-relevant regions in the feature map and learn dependencies between different spatial positions, thereby more accurately locating key points. The formula is as follows:

where the feature map is \(F\), and the new feature maps are \(F_{11}, F_{12}, F_{21},\) and \(F_{22}\). The spatial attention maps are \(A_3, A_4,\) and \(A_5\), and the final output feature map is \(F'\), as shown in Fig. 2.

Temporal stream

To address the challenges of motion blur and occlusion inherent in high-speed sports, we designed a temporal stream to capture the motion information of basketball players. Temporal information, such as players’ trajectories and velocities, is crucial for understanding player interactions and predicting future actions. For two consecutive image frames \((I_t, T_{t+1})\) and a specific feature map \(F_{L-1}\) from ResNet, the motion information is first extracted and then spatiotemporal feature fusion and temporal modeling are performed. The formula is:

where \(D_t = I_{t+1} - I_t\) is the pixel difference between consecutive frames, which approximates the optical flow information. Spatiotemporal feature fusion is the concatenation of motion information \(D_t\) with ResNet’s feature map \(F_{L-1}\). Temporal modeling is used to better capture dynamic changes in the temporal dimension using Temporal Convolutional Modules (TCM), which learn feature representations in the temporal dimension.

The goal of the temporal convolutional module (TCM) is to capture dynamic changes in feature map sequences in the temporal dimension, such as object movement in video frames. It extracts feature representations in the temporal dimension by learning the relationships between adjacent time-step features. A temporal convolution kernel is first constructed, defined as a kernel of size \((t, k, k)\), where \(t\) is the kernel size in the temporal dimension, and \(k\) is the kernel size in the spatial dimension. The kernel is slid across the temporal dimension, performing convolution operations on the feature map at each time step. The formula is:

where \(F = \{F_1, \dots , F_T\}\) is the sequence of feature maps, \(Conv3D\) represents the 3D convolution operation, and \(W\) is the convolution kernel’s parameters.

Contextual stream

To move beyond individual player analysis and understand poses within the broader game situation, we designed a contextual stream to analyze the context of an entire game. We use global average pooling to convert \(F_L\) into a feature vector and then use a fully connected layer to map the feature vector to the target dimension. The formula is:

where \(FC\) represents the fully connected layer, \(AVG\) represents the global average pooling operation, and \(F_L\) is the last feature map from ResNet.

Having extracted distinct spatial, temporal, and contextual features, the next critical step is to effectively integrate them to achieve precise basketball player pose estimation. This is handled by our feature fusion module.

Feature fusion module for basketball action pose estimation

Having extracted features from three specialized streams, the next critical step is to effectively integrate them. The Feature Fusion Module (FFM) is designed for this purpose. The central question in fusion is when and how to combine the information. We explore three canonical strategies: early, late, and hybrid fusion. Early fusion allows for learning deep correlations from the start, late fusion preserves the specialized knowledge of each stream, and hybrid fusion aims to achieve the best of both worlds. We will show empirically that a well-designed hybrid strategy yields the best performance.

Early fusion

We concatenate the input features of the spatial, temporal, and contextual streams. Specifically, we concatenate the player keypoint heatmaps output by the spatial stream with the keypoint heatmaps output by the temporal stream and the global features output by the contextual stream along the channel dimension. The concatenated feature maps are then fed into a convolutional network to learn the

fused multimodal feature representation. The formula is:

where \([\cdot ; \cdot ; \cdot ]\) denotes the concatenation operation along the channel dimension, \(\phi _{fuse}(\cdot ; \theta _{fuse})\) denotes the convolutional neural network used for fusing multimodal features, with parameters \(\theta _{fuse}\), and \({\bf{F}}_{early}^{t}\) is the feature representation after early fusion.

Late fusion

To allow each network stream to independently learn its respective modal features and fuse them at the final stage to preserve the unique information of each modality, we independently train the spatial, temporal, and contextual streams and obtain their respective player pose estimation results \({\hat{Y}}_s^{t}, {\hat{Y}}_t^{t}, {\hat{Y}}_c^{t}\). Then, we use a weighted average method to fuse the predictions from the three streams, with the weights obtained through grid search on the validation set. The formula is:

where \(w_s\), \(w_t\), and \(w_c\) are the weights for the three streams, and \({\hat{Y}}_{late}^{t}\) is the final pose estimation result after late fusion.

Hybrid fusion

To combine the advantages of both early and late fusion, we propose a multi-stage Hybrid Fusion approach. This strategy aims to obtain more comprehensive and robust feature representations by learning correlations between streams at multiple levels of semantic abstraction. We first apply the early fusion strategy, concatenating the input features of the spatial, temporal, and contextual streams and feeding them into a lightweight convolutional network \(\phi _{light}\). Then, we concatenate the intermediate layer features of this network with the independent predictions of the spatial and temporal streams and input them into another convolutional network \(\phi _{light}\) for further feature fusion. Finally, we use the fused features for the final pose estimation. The formula is:

where \(\phi _{light}(x) = ReLU(Conv(ReLU(Conv(Conv(x)))))\) represents a “3-convolution and 2-ReLU” structure, containing 3 convolution layers and 2 ReLU activation functions. \(\phi _{head}(\cdot ; \theta _{head})\) is the fully connected layer used to predict the final pose estimation result, with parameters \(\theta _{head}\), and \({\hat{Y}}_{hybrid}^{t}\) is the final pose estimation result after hybrid fusion.

By applying these feature fusion strategies, the basketball action pose estimation model can more effectively process and analyze the complex data collected from different dimensions, thereby improving the accuracy of recognition and the robustness of the system.

Staged stream training module (SST)

Training a complex, multi-stream network like ours presents a significant optimization challenge. A naive end-to-end training approach can lead to unstable learning and suboptimal results. To address this, we propose the Staged Stream Training (SST) module, a systematic, three-stage training methodology. The core idea is to progressively build and refine the model: first, each stream is individually pre-trained to become an expert (Stage 1); next, the fusion layers are carefully fine-tuned while keeping the expert backbones frozen (Stage 2); finally, the entire network is jointly optimized to achieve perfect harmony (Stage 3). This structured approach is a key contributor to our model’s high performance and robustness.

Stage 1: single stream pre-training

In the initial stage, each data stream is independently pre-trained, focusing on learning its respective modal feature representations to lay the foundation for subsequent feature fusion. We use large-scale publicly available human pose estimation datasets to pre-train the spatial and temporal streams. Specifically, for the spatial stream, we pre-train the ResNet and FPN backbone on the COCO (Common Objects in Context) dataset, which is a standard practice for pose estimation tasks. For the contextual stream, we curated a dataset of unannotated video clips from various broadcast basketball games to train the Transformer model in a self-supervised manner. For the spatial stream, we use annotated data for human pose estimation to train the ResNet28 and FPN29 networks to predict player keypoint heatmaps. The formula is:

where \(\theta _{\text {ResNet-FPN}}^{*}\) represents the optimal parameters obtained from training, \(N_s\) represents the number of training samples, \({\mathscr {L}}_{MSE}\) represents the mean square error loss function, \(I_i\) represents the \(i\)th training sample, and \({\mathscr {H}}_{gt, i}\) represents the ground truth keypoint heatmap for the \(i\)th sample.

For the temporal stream, we use optical flow information between consecutive frames as the supervision signal to train the TCN network30 to predict player keypoint heatmaps. The formula is:

where \(\theta _{TCN}^{*}\) represents the optimal parameters obtained from training, \(N_t\) represents the number of training samples, and \({\bf{O}}_j\) represents the optical flow sequence of the \(j\)th sample.

For the contextual stream, we use basketball game video data to train a Transformer model to learn global contextual features, pre-training it in a self-supervised manner using masked language modeling (MLM). The formula is:

where \(\theta _{Transformer}^{*}\) represents the parameters obtained from training, \(N_c\) represents the number of samples, \({\bf{S}}_k\) represents the video sequence of the \(k\)th sample, and \({\mathscr {L}}_{MLM}\) represents the masked language modeling loss function.

Stage 2: freezing and fine-tuning

To freeze the base layers while fine-tuning the subsequent layers, we adjust and refine the model’s handling of fused features, enhancing its adaptability and accuracy when dealing with complex real-world data. Specifically, we select a feature fusion strategy to integrate the pre-trained spatial, temporal, and contextual streams. We then freeze the backbone parameters of the ResNet-FPN and TCN networks30 in the spatial and temporal streams, only fine-tuning their head networks and the Transformer model parameters in the contextual stream. The training objective function in this stage is the difference between the fused model’s predictions and the ground truth labels. The formula is:

where \({\Theta } = (\Theta _{fuse}, \Theta _{head}^{'}, \Theta _{Transformer}^{'})\), \({\mathscr {F}}(\cdot )\) denotes the feature fusion function, \(\Theta _{head}^{'}\) represents the parameters to be fine-tuned in \(\Theta _{head}\), and \(\Theta _{Transformer}^{'}\) represents the parameters to be fine-tuned in \(\Theta _{Transformer}\).

Stage 3: joint optimization

To comprehensively optimize the entire network, all parameters are adjusted to accommodate the complexity of real-world data. Through meticulous global adjustments, the model is ensured to perform optimally when predicting new, unseen basketball actions. The formula is:

where \({\Theta } = (\Theta _{ResNet-FPN}, \Theta _{TCN}, \Theta _{Transformer}, \Theta _{fuse}, \Theta _{head})\) represents all the parameters to be optimized, and \({\mathscr {F}}(\cdot )\) denotes the feature fusion function.

The Staged Stream Training Module, through its structured multi-stage training approach, ensures that the model fully utilizes the specific data characteristics at each training stage. Moreover, through effective feature fusion and comprehensive optimization, the module significantly improves the model’s accuracy, efficiency, and adaptability. This method provides an efficient and robust solution for basketball pose estimation, meeting the high dynamics and variability demands in sports data analysis.

To enhance the clarity and accessibility of our methodology, especially the intricate interactions between multi-stream feature extraction, multi-strategy fusion, and staged training, we provide a summarized pseudocode for the overall training pipeline in Algorithm 1. This algorithm encapsulates the high-level steps involved in optimizing our Basketball Action Pose Estimation framework.

Experiments

Datasets

To validate the effectiveness and robustness of our proposed framework, we conducted extensive experiments on the challenging PoseTrack201731 and PoseTrack201815 benchmarks. These datasets are crucial for basketball action pose estimation research, providing a large amount of annotated data for human pose estimation and action tracking tasks. They are specifically chosen for their richness in the very challenges our model is designed to overcome, such as severe occlusion, motion blur, and varying viewpoints in dynamic multi-person scenes. The PoseTrack 2017 dataset is a publicly available benchmark for multi-person pose estimation and action tracking in videos, comprising 66,374 annotated frames across 300 training and 50 validation video sequences. Each frame is annotated with 15 human body keypoints (head, shoulders, elbows, wrists, hips, knees, and ankles). This dataset features a variety of complex scenarios, including multi-person interactions, occlusions, and motion blur. PoseTrack 2018 is an extended version of PoseTrack 2017, offering increased data volume (153,615 annotated human data points across 593 training and 170 validation video sequences) and improved annotation accuracy. Similar to its predecessor, it provides a comprehensive resource for developing and evaluating pose estimation algorithms in complex dynamic environments. The inherent challenges within these benchmarks-multi-person interaction, frequent occlusion, motion blur, and varying viewpoints-are directly analogous to conditions found in typical basketball games, making them strong indicators of our model’s robustness and applicability in sports analytics.

Settings and metrics

Data preprocessing involved uniformly scaling all images to \(256 \times 256\) pixels and applying data augmentation techniques (random horizontal flipping, rotation, cropping) to increase diversity and enhance robustness. For the temporal stream, optical flow maps or frame differences between adjacent frames were calculated to capture dynamic changes. Our Staged Stream Training Module (SST) utilizes specific parameter settings for each stage. For single-stream pre-training, the spatial stream uses a pre-trained ResNet-50. The temporal stream employs a 3D convolutional neural network (3D CNN). The contextual stream uses a pre-trained LSTM model. All streams are optimized using the Adam optimizer, with initial learning rates of 0.001 for spatial/temporal streams and 0.0005 for the contextual stream. During the freezing and fine-tuning stage, the first four layers of each stream’s pre-trained backbone were frozen to retain basic feature extraction capabilities, while subsequent layers and fusion layers were fine-tuned with a learning rate of 0.0001. For joint optimization, the entire model is optimized using the cross-entropy loss function and Adam optimizer with an initial learning rate of 0.0001. Dropout regularization (ratio of 0.5) is applied to each layer to prevent overfitting. The model is trained for 300 epochs on both datasets with a batch size of 32. Model performance is evaluated using the standard pose estimation metric, Average Precision (AP). The accuracy of each keypoint is calculated, and the Mean Average Precision (MAP) of all keypoints is then computed for overall performance assessment.

Comparisons

To validate the overall effectiveness of our proposed framework, we first compare it against several state-of-the-art methods on two public benchmarks. Table 2 presents the performance comparisons on the PoseTrack 2017 validation dataset, including several models such as PoseFlow, JointFlow, FastPost, STEmbedding, HRNet, MSTCFL, and our proposed model (Ours). Our model achieved an accuracy of 84.5% for head keypoint detection, outperforming other models like HRNet (82.1%) and STEmbedding (83.8%), demonstrating its excellent capability in capturing head features. In shoulder detection, our model also performed outstandingly with an accuracy of 83.8%. Similarly, our model achieved an elbow keypoint detection accuracy of 82.3%, which is mainly attributed to its effective learning of dynamic joint movements. Wrist keypoint detection is challenging due to the rapid movement and frequent occlusion of the wrists, typically resulting in lower accuracy. Despite this, our model achieved an accuracy of 75.7% in this category, reflecting its robustness in handling fast movements and partial occlusions. Our model also showed strong performance in the hip, knee, and ankle regions, particularly in ankle detection, where it achieved an accuracy of 71.3%. This success is due to our model’s ability to accurately track lower limb dynamic changes, maintaining high accuracy even in complex movements.

In terms of overall mean average precision (MAP), our model outperformed all the compared models with a score of 79.7%. This significant performance improvement is mainly attributed to: (1) our Staged Stream Training Module (SST), which effectively enhances feature representation and model generalization by optimizing different types of features at different stages; (2) the use of a hybrid fusion strategy, which allows the model to capture and integrate critical information at various stages, resulting in more accurate predictions when dealing with complex dynamic scenes.

Table 3 shows that our proposed model excels on the PoseTrack 2018 dataset, particularly in the overall MAP, reaching 80.2%, a significant improvement over other models. This is because our model effectively integrates spatial, temporal, and contextual information by combining a multi-dimensional data stream network architecture with the Staged Stream Training Module. Notably, by gradually refining and optimizing the feature extraction and fusion capabilities of each stream at different stages, our model performs exceptionally well in handling complex dynamic scenes and fine details.

Ablation study

To understand the contribution of each individual component of our proposed framework, we conducted a series of ablation studies. These experiments focus on evaluating the contribution of each major module to the overall performance of the model.

Table 4 presents the results of the ablation study. The Baseline (Only BAP-MDSNA) demonstrates the basic performance using only the Data Stream Network Module, showing the initial capability of this architecture in handling dynamic information. Configuration A (Only Feature Fusion Module) failed to achieve a performance improvement, highlighting the limitations of the Feature Fusion Module without the support of the Data Stream input. Configuration B (Only SST) performed slightly better than using only Feature Fusion, indicating that SST can have a positive impact on model performance even when used independently. Configuration C (BAP-MDSNA + Feature Fusion) showed better performance than using a single module, indicating that Feature Fusion can effectively improve performance when supported by Data Streams. Configuration D (BAP-MDSNA + SST) demonstrates that SST can further enhance performance by reinforcing the Data Stream Module. Configuration E (Feature Fusion + SST) showed an improvement compared to using a single module but did not reach the performance of configurations including the Data Stream Module, underscoring the importance of the Data Stream Module. The Full Model achieved the best performance, demonstrating the overall advantage when the three modules work together, proving that integrating BAP-MDSNA, the Feature Fusion Module, and SST maximizes performance improvement.

Sensitivity analysis

Sensitivity analysis of BAP-MDSNA module parameters

To further analyze the impact of BAP-MDSNA module parameters on basketball action pose estimation performance, we designed several sensitivity experiments. Table 5 shows the model’s performance under different configuration conditions in the basketball action pose estimation task. The Baseline uses ResNet-50 as the feature extractor for the spatial stream, + VGG-16 replaces the spatial stream’s feature extractor with VGG-16, ResNet-101 replaces ResNet-50 with ResNet-101 to increase model depth, EfficientNet-B3 tests the use of EfficientNet-B3 as a more modern feature extraction network, Higher Resolution increases the input image resolution to \(512 \times 512\), and Lower Resolution decreases the input image resolution to \(128 \times 128\).

In terms of feature extractors, performance slightly decreased after using VGG-16, possibly because VGG-16 has fewer residual connections compared to ResNet-50, making it less effective in capturing complex features. Switching to ResNet-101 improved performance, indicating that a deeper network can better capture details, especially in scenarios involving complex actions. EfficientNet-B3 did not show significant improvement over ResNet-50, suggesting that a more modern but similarly complex network structure may not bring significant benefits for this task. Regarding input resolution changes, increasing the resolution to \(512 \times 512\) significantly improved the accuracy of all keypoints, indicating that higher resolution input provides more detailed information, which helps improve the accuracy of keypoint localization. On the other hand, reducing the resolution to \(128 \times 128\) led to a significant performance decline, as the lower resolution image lost a lot of detail, which is detrimental to accurate pose estimation.

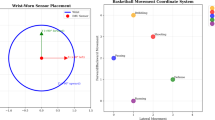

Sensitivity analysis of different stream weights

Figure 3 analyzes the impact of the weights of the spatial stream, temporal stream, and contextual stream on final performance in feature fusion. The left figure shows the impact of spatial stream weight (x-axis) and temporal stream weight (y-axis) on model performance (MAP, z-axis). The middle figure shows how spatial stream weight (x-axis) and contextual stream weight (y-axis) jointly affect the model’s MAP (z-axis), and the right figure shows the combined effect of temporal stream weight (x-axis) and contextual stream weight (y-axis) on MAP (z-axis).

In the left figure of Fig. 3, we can see greater overall fluctuation, with the temporal stream having a slightly larger impact on performance. This is because capturing dynamic information (e.g., speed and trajectory) of players is usually more critical than static spatial information in basketball action pose estimation. The middle figure shows that spatial information is crucial in basketball action pose estimation, as it directly affects the accuracy of player position and pose recognition. Although contextual information provides deeper insights into the game context, it is slightly less influential in directly affecting pose estimation than spatial features. The right figure indicates that contextual stream information has a greater impact than temporal stream information. The temporal stream provides continuity and fluidity analysis of actions, while the contextual stream enhances understanding of the game context.

Sensitivity analysis for spatial stream parameters

To assess the impact of convolutional layer parameters in the spatial stream on basketball action pose estimation performance, we conducted a series of experiments, varying parameters such as the number of convolutional layers, filter size, and the number of filters per layer. Table 6 lists different combinations of the number of convolutional layers, filter size, and the number of filters per layer and shows the performance of each configuration in terms of the model’s overall mean average precision (MAP).

As observed in Table 6, increasing the number of convolutional layers generally enhances the network’s learning ability, as more layers mean more complex features can be captured. As the number of layers increases (from 3 to 7), the AP for head and shoulder and the overall MAP gradually increase, indicating that a deeper network can better understand the spatial features of basketball players. Changes in filter size also have different impacts, with larger filters (5x5 compared to 3x3) covering a broader receptive field, capturing more spatial contextual information. This is particularly important for recognizing actions in basketball, where actions often involve the coordination of multiple joints and limbs. This explains why the performance of 5x5 filters is slightly better than that of 3x3 filters. Increasing the number of filters (from 64 to 128) allows the network to learn more feature representations, enhancing its recognition capability. The results show that increasing the number of filters positively impacts model performance, especially when dealing with complex backgrounds and multiple actions.

Sensitivity analysis for temporal stream parameters

To delve into the impact of parameters in the temporal stream on basketball action pose estimation performance, Table 7 analyzes the effect of time window size, frame interval, and convolution type (3D convolution vs. 2D convolution combined with temporal filters) on model performance. Table 7 shows the average precision (AP) for knee and ankle keypoints and the overall mean average precision (MAP) under different combinations of time window size, frame interval, and convolution type.

As observed in Table 7, increasing the time window from 3 frames to 7 frames improves the AP for knee and ankle keypoints and the overall MAP. This is because a larger time window can capture more extended periods of action information, helping the model more accurately understand and predict changes in players’ actions, especially in terms of action continuity and fluidity. We also see a slight performance drop when the frame interval increases. This is because a larger frame interval may result in the loss of important action information, especially in fast-action scenarios. Therefore, a smaller frame interval helps track actions more coherently, though it may come with higher computational costs. We also observe that 3D convolution performs better than 2D convolution combined with temporal filters. This is because 3D convolution directly integrates information in both spatial and temporal dimensions, more effectively capturing temporal continuity and spatial details. In contrast, 2D convolution with temporal filters, while able to process temporal information, may not be as efficient and coherent in its integration as 3D convolution.

Sensitivity analysis for contextual stream parameters

In basketball action pose estimation tasks, the design of the contextual stream is crucial for understanding the tactical layout of the game and player behavior patterns. We analyzed the impact of different model types, sequence lengths, and hidden layer sizes in the contextual stream on model performance.

As shown in Table 8, the transformer model generally outperforms the LSTM model, as the Transformer has stronger parallel processing capabilities and more efficiently captures long-distance dependencies, making it better suited for handling complex basketball game scenarios. As the sequence length increases, the model’s performance gradually improves, indicating that longer sequences provide more contextual information, helping the model better understand the progression of the game and player interactions. This is crucial for predicting future actions and reactions. Increasing the number of hidden units significantly improves performance. This indicates that larger hidden layers provide stronger data processing capabilities, allowing the model to capture more complex features and relationships, thereby improving overall prediction accuracy and robustness.

Sensitivity analysis of feature fusion module

To evaluate the impact of the Feature Fusion Module on the performance of the basketball action pose estimation model, we conducted a series of sensitivity analysis experiments. Table 9 presents the comparison results of the experiments, focusing on the average precision (AP) of four major keypoints (head, shoulder, elbow, and wrist) and the overall mean average precision (overall MAP).

In Table 9, Early Fusion refers to fusing the data from the spatial, temporal, and contextual streams during the feature extraction stage. Late Fusion refers to fusing the data at the decision layer after each stream has processed independently. Hybrid Fusion refers to combining early and late fusion strategies to perform fusion at multiple stages. No Fusion refers to each stream working independently without any fusion, with results only combined at the final output stage. Among these, Early Fusion performed well because it enables the network to utilize all available feature information at an earlier stage, helping to better understand complex actions and interactions. Early fusion helps the model learn dependencies between different streams more deeply. Late Fusion performed slightly worse than Early Fusion, possibly because, while Late Fusion allows each stream to optimize its feature representation, some timely contextual information may be lost before fusion. Hybrid Fusion performed the best, showing the potential to combine the advantages of Early and Late Fusion at different stages. This strategy leverages the comprehensiveness of Early Fusion and the specialization of Late Fusion without sacrificing the independence of each stream. No Fusion performed the worst, indicating that independent work in each stream fails to effectively utilize information from other streams, highlighting the importance of fusion strategies in integrating multi-dimensional information.

Sensitivity analysis of SST module parameters

To explore the impact of different training stage parameters in the staged stream training module (SST), we designed a series of sensitivity experiments, focusing on the impact of different training strategies such as the number of frozen layers, learning rate, and batch size on the performance of basketball action pose estimation. Table 10 presents the comparison of model performance in basketball action pose estimation under different staged stream training settings, with a focus on the average precision (AP) of head (Head), shoulder (Shou), and wrist (Wri) keypoints, as well as the overall mean average precision (Overall MAP).

In Table 10, Baseline refers to no frozen layers, High Learning Rate and Low Learning Rate refer to using a higher learning rate of 0.01 and a lower learning rate of 0.0001, respectively. Small Batch Size and Large Batch Size refer to using smaller and larger batches, respectively. In terms of frozen layers, freezing fewer layers slightly improves performance, indicating that the basic visual features captured by the early layers benefit the model, while the subsequent layers need more adjustment to adapt to the specific task. However, freezing too many layers leads to a performance decline, possibly because it limits the model’s ability to adjust higher-level features. From the learning rate perspective, a high learning rate slightly reduces performance, possibly because the fast learning causes the model to oscillate near local minima, failing to converge effectively. A low learning rate improves performance, indicating that slower weight updates help fine-tune the model parameters, leading to better results. From the batch size perspective, a small batch size slightly reduces performance, possibly because the smaller batch increases gradient noise, making the training process more unstable. A large batch size improves performance, indicating that a larger batch provides a more stable gradient estimate, helping stabilize the training process.

Visualization

Visualization on datasets

Figure 4 shows the pose estimation results of our model in various environments and action scenes. These scenes include outdoor sports, indoor exercises, urban streets, etc., covering a wide range of applications from sports competitions to daily activities.

From indoor gyms to outdoor streets and sports fields, the keypoints in the images (represented by colored lines) clearly mark the head, shoulders, arms, waist, legs, and feet of the human body. These keypoints not only capture static poses but also dynamically track human movements such as walking, running, and stretching. Moreover, even in crowded scenes or against complex backgrounds, such as the construction site and sports scenes with multiple athletes in the images, the model demonstrates excellent distinguishing and recognition capabilities.

Figure 5 shows the recognition of occlusion, blur, and perspective changes in multi-player basketball movements. In these images, the waist, legs, shoulders, arms, etc., of the athletes are all recognized, especially under high blur conditions during shooting. These results indicate that our model not only has high accuracy and robustness but is also suitable for various environments, effectively handling different visual interferences and complex dynamic scenes.

Visualization of feature similarity on time slices at different intervals

To analyze the differences in feature similarity extracted by the spatial stream, temporal stream, and contextual stream at different time intervals and to distinguish the characteristics of the three types of information, Fig. 6 shows the similarity of features at different time intervals, verifying the model’s ability to capture changes in temporal dimension features.

From Fig. 6, we can observe: (1) The similarity of temporal stream features gradually decreases with increasing time intervals, indicating that the temporal stream can capture changes in features over time. This is because the temporal stream focuses on motion information between consecutive frames, and as the time interval increases, the changes in motion information become more significant. (2) The similarity of spatial stream features is relatively stable and does not change drastically with increasing time intervals. This is because the spatial stream mainly focuses on player pose information in a single frame, and this information remains relatively stable over short periods. (3) The similarity of contextual stream features remains at a high level with relatively minor changes. This is because the contextual stream focuses on global game context information, such as interactions between players, game rules, etc., which remain relatively stable throughout the game.

Generalization and robustness analysis on real-world basketball dataset

To explicitly assess the generalization capability and robustness of our model, we conducted an additional experiment on a challenging, newly curated dataset named the Broadcast-view Basketball Video Dataset (BTVD), which was not seen during the training phase. The BTVD was built to reflect real-world basketball viewing conditions. It comprises 100 video clips, each 10-15 seconds long (totaling approximately 35,000 frames), sampled from over 20 different professional NBA games from the 2021–2022 season, publicly available on YouTube. The BTVD is strictly independent of the PoseTrack datasets, featuring distinct environments (professional indoor arenas), subjects, and capture conditions. This makes it an ideal benchmark for evaluating cross-domain generalization. The key challenges within BTVD include frequent player occlusions during team plays, motion blur from rapid movements, and varied professional broadcast perspectives. For our analysis, we categorized the clips into three distinct camera angle groups: Sideline View (standard side-on tracking shots, 40% of clips), Baseline View (shots from behind the basket, featuring severe player overlap, 30%), and High-Angle View (overhead shots providing a tactical overview, 30%). Due to the absence of public annotations, we generated high-quality evaluation labels through a meticulous manual pseudo-labeling process on sampled frames.

We performed a zero-shot evaluation by applying our final model, trained on PoseTrack, directly to the BTVD. The results are presented in Table 11. Our model demonstrates excellent generalization, achieving an overall MAP of 76.0%, significantly surpassing established baselines. This indicates that the features learned on the general-purpose PoseTrack dataset can be effectively transferred to the specific domain of basketball. Furthermore, the model exhibits strong robustness to varying camera angles. It consistently outperforms the baselines across all views and shows particular strength in the difficult baseline view, which often contains heavy player occlusion. This robustness can be attributed to our holistic model design, where the contextual stream provides global information that mitigates ambiguities arising from challenging viewpoints.

Complexity and efficiency analysis

To evaluate the practical applicability of our framework, we analyzed its computational complexity and inference speed. We measured the number of parameters (Params), Giga Floating-point Operations per second (GFLOPs), and inference speed in Frames Per Second (FPS). We evaluated using a uniform input resolution (\(256 \times 256\) pixels) and batch size (batch size = 1) to simulate real-world application scenarios with single-frame video streaming.

As shown in Table 12, our model achieves an inference speed of about 25.1 FPS on the NVIDIA V100 GPU. This means that it can process more than 25 frames of images per second, which is sufficient for near real-time or even real-time requirements for most sports analytics applications. Although slightly lower than HRNet and MSTCFL (which are typically optimized for single-frame pose estimation), this speed performance is very efficient and acceptable considering that our model needs to process multidimensional information streams to capture more complex spatio-temporal contexts. Our model possesses about 45.6 GFLOPs of computation and 60.1M parameters. It is worth noting that although our parameter count is relatively large , the GFLOPs are lower than HRNet, which suggests that our design, especially the feature fusion module and the optimizations within each stream, achieves a high computational efficiency and does not impose a disproportionate computational burden while obtaining more comprehensive information. Compared to models that focus only on a single frame or a single stream of information, our framework balances the computational cost while ensuring high accuracy and robustness. Considering our model’s excellent performance in handling highly dynamic motion, occlusion, and complex backgrounds, as well as its excellent generalization ability on real-world basketball videos, the inference speed of 25.1 FPS gives it a strong potential for application in scenarios such as live game analysis, player training feedback systems, and tactical decision support.

Limitations

While our proposed framework demonstrates state-of-the-art performance and robustness in many challenging scenarios, we acknowledge several limitations that highlight opportunities for future research. These challenges are often shared by current leading methods in video-based pose estimation. Our model is trained on a vast corpus of common human actions and basketball-specific movements. Its performance may be less reliable for highly unconventional or out-of-distribution poses, such as those resulting from serious injuries or unorthodox celebratory actions, which are sparsely represented in the training data. Enhancing the diversity of the training set with more edge cases or developing an uncertainty-aware prediction head could help the model to recognize and handle such novel situations more gracefully.

Conclusion

This paper addresses two major challenges in basketball action pose estimation: the loss of motion information due to motion blur and mutual occlusion, and the complexity of human body shapes caused by changes in camera angles. We propose a comprehensive solution that includes three core modules: the Basketball Action Pose Multi-Dimensional Data Stream Network Architecture, the Feature Fusion Module for Basketball Action Pose Estimation, and the Staged Stream Training Module. These modules work together to significantly improve the model’s performance in handling complex dynamic scenes, particularly in addressing the continuity of motion information and accurately modeling spatial features of the human body. Experimental results demonstrate that this framework outperforms existing mainstream methods on the PoseTrack 2017 and PoseTrack 2018 datasets, especially in challenging environments with severe occlusions and varying camera angles, where the model exhibits excellent accuracy and robustness. Future work will explore the model’s performance in practical applications, such as real-time game analysis and athlete training assistance, to validate its practicality and optimize it based on actual needs. This will help advance research in the field of sports science, providing technological support for athlete training and game strategy development.

Data availability

All data generated or analysed during this study are available to readers upon request to the author ZH-Z.

References

Fan, J., Bi, S., Xu, R., Wang, L. & Zhang, L. Hybrid lightweight deep-learning model for sensor-fusion basketball shooting-posture recognition. Measurement 189, 110595 (2022).

Zhou, C., Ren, Z. & Hua, G. Temporal keypoint matching and refinement network for pose estimation and tracking. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXII 16. 680–695 (Springer, 2020).

Zhao, L. & Chen, W. Detection and recognition of human body posture in motion based on sensor technology. IEEJ Trans. Electr. Electron. Eng. 15, 766–770 (2020).

Bao, Q., Liu, W., Cheng, Y., Zhou, B. & Mei, T. Pose-guided tracking-by-detection: Robust multi-person pose tracking. IEEE Trans. Multimed. 23, 161–175 (2020).

Jiang, L. & Zhang, D. Deep learning algorithm based wearable device for basketball stance recognition in basketball. Int. J. Adv. Comput. Sci. Appl. 14 (2023).

Ji, R. Research on basketball shooting action based on image feature extraction and machine learning. IEEE Access 8, 138743–138751 (2020).

Zuo, K. & Su, X. Three-dimensional action recognition for basketball teaching coupled with deep neural network. Electronics 11, 3797 (2022).

Cai, X. Wsn-driven posture recognition and correction towards basketball exercise. Int. J. Inf. Syst. Model. Des. (IJISMD) 13, 1–14 (2022).

Pan, Z. & Li, C. Robust basketball sports recognition by leveraging motion block estimation. Signal Process. Image Commun. 83, 115784 (2020).

Cao, Z., Simon, T., Wei, S.-E. & Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 7291–7299 (2017).

Fang, H.-S., Xie, S., Tai, Y.-W. & Lu, C. RMPE: Regional multi-person pose estimation. In Proceedings of the IEEE International Conference on Computer Vision. 2334–2343 (2017).

Xiu, Y., Li, J., Wang, H., Fang, Y. & Lu, C. Pose flow: Efficient online pose tracking. arXiv preprint arXiv:1802.00977 (2018).

Liang, X., Shen, X., Feng, J., Lin, L. & Yan, S. Semantic object parsing with graph LSTM. CoRR arXiv:abs/1603.07063 (2016).

Felzenszwalb, P. F., Girshick, R. B., McAllester, D. & Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32, 1627–1645 (2009).

Sun, K., Xiao, B., Liu, D. & Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5693–5703 (2019).

Arthanari, S., Jeong, J. H. & Joo, Y. H. Exploiting multi-transformer encoder with multiple-hypothesis aggregation via diffusion model for 3D human pose estimation. Multimed. Tools Appl. 1–29 (2024).

Arthanari, S., Jeong, J. H. & Joo, Y. H. Exploring multi-level transformers with feature frame padding network for 3d human pose estimation. Multimed. Syst. 30, 243 (2024).

Simonyan, K. & Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 27 (2014).

Tran, D., Bourdev, L., Fergus, R., Torresani, L. & Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision. 4489–4497 (2015).

Carreira, J. & Zisserman, A. Quo vadis, action recognition? a new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 6299–6308 (2017).

Feichtenhofer, C., Fan, H., Malik, J. & He, K. Slowfast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 6202–6211 (2019).

Bertasius, G., Wang, H. & Torresani, L. Is space-time attention all you need for video understanding?. ICML 2, 4 (2021).

Arnab, A. et al. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 6836–6846 (2021).

Moorthy, S., KS, S. S., Arthanari, S., Jeong, J. H. & Joo, Y. H. Hybrid multi-attention transformer for robust video object detection. Eng. Appl. Artif. Intell. 139, 109606 (2025).

Arthanari, S., Moorthy, S., Jeong, J. H. & Joo, Y. H. Adaptive spatially regularized target attribute-aware background suppressed deep correlation filter for object tracking. Signal Process. Image Commun. 136, 117305 (2025).

Moorthy, S., KS, S. S., Arthanari, S., Jeong, J. H. & Joo, Y. H. Learning disruptor-suppressed response variation-aware multi-regularized correlation filter for visual tracking. J. Vis. Commun. Image Represent. 104458 (2025).

Moorthy, S. & Moon, Y.-K. Hybrid multi-attention network for audio-visual emotion recognition through multimodal feature fusion. Mathematics 13, 1100 (2025).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 770–778 (2016).

Lin, T.-Y. et al. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2117–2125 (2017).

Bai, S., Kolter, J. Z. & Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271 (2018).

Yang, Y. et al. Learning dynamics via graph neural networks for human pose estimation and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8074–8084 (2021).

Doering, A., Iqbal, U. & Gall, J. Joint flow: Temporal flow fields for multi person tracking. arXiv preprint arXiv:1805.04596 (2018).

Zhang, J. et al. Fastpose: Towards real-time pose estimation and tracking via scale-normalized multi-task networks. arXiv preprint arXiv:1908.05593 (2019).

Jin, S., Liu, W., Ouyang, W. & Qian, C. Multi-person articulated tracking with spatial and temporal embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5664–5673 (2019).

Ma, Y. & Yan, Y. Multiscale spatio-temporal correlation feature learning for human pose estimation. J. South-Central Minzu Univ. (Nat. Sci. Ed.) 42, 95–102. https://doi.org/10.20056/j.cnki.ZNMDZK.20230114 (2023).

Guo, H. et al. Multi-domain pose network for multi-person pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops (2018).

Acknowledgements

We sincerely thank all the personnel involved in this research. It is your support and collaboration that have enabled this research to be successfully completed. We would like to thank LZ-S for his leadership and guidance throughout this project. He participated in the research design, and his technical support greatly enhanced the quality and reliability of the research. The contribution of WY-L should not be ignored either. She provided significant assistance in the process of literature retrieval and screening, and participated in data extraction, organization, as well as the writing and revision of the manuscript. Thanks to Y-Z and LK-D for providing technical support for this research.

Author information

Authors and Affiliations

Contributions

ZH-Z conducted the overall design of this study, was responsible for the literature search and screening process, data extraction and statistical analysis, and wrote the first draft. LZ-S participated in the study design and provided corresponding technical support for this study to ensure the accuracy of the data and the rationality of the analysis. WY-L carried out the literature search and screening process, assisted in data extraction and collation, and participated in the writing and revision of the manuscript. Y-Z and LK-D also provided technical support for the charts and illustrations of this study to ensure the accuracy of the article content.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, Z., Liu, W., Zheng, Y. et al. Learning spatio-temporal context for basketball action pose estimation with a multi-stream network. Sci Rep 15, 29173 (2025). https://doi.org/10.1038/s41598-025-14985-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-14985-y