Abstract

Natural disasters such as floods, tsunamis, and earthquakes significantly impact lives and infrastructure, highlighting the urgent need for accurate and real-time prediction systems. Current methods often suffer from limitations in scalability, privacy, and real-time data integration, particularly in large-scale disaster scenarios. This study introduces the mTCN-FChain framework, a novel solution that combines Massive Machine-Type Communications (mMTC) and Temporal Convolutional Networks (TCNs) with federated learning and blockchain technology. The objective is to develop a scalable, secure, and efficient system for real-time disaster prediction using IoT data streams. Lightweight edge-based TCNs enable localized anomaly detection, while federated learning ensures privacy-preserving collaborative model training across edge devices. Blockchain integration secures model updates and provides traceability. Using datasets for earthquakes, floods, and tsunamis, the framework was implemented in Python and validated using metrics such as MAE, MSE, and RMSE. The results show significant performance improvements over existing methods, with higher accuracy, reduced latency, and robust scalability in disaster prediction tasks. Tools like TensorFlow, PyTorch, and Hyperledger Fabric were employed for implementation. The study concludes that mTCN-FChain offers a transformative approach to disaster resilience, though future work will focus on optimizing blockchain integration and expanding real-world applicability to enhance its robustness and adaptability.

Similar content being viewed by others

Introduction

Natural disasters, especially tsunamis, floods, and earthquakes, are perhaps the most disastrous phenomena that impact human populations, infrastructure, and ecosystems. Preparedness and prompt intervention are paramount importance in reducing damage and loss of human life. Conventional disaster prediction systems, mostly based on history and fixed sensor networks1,2. The problem occurs in the disasters themselves, mainly because of variability and dynamic involvement in their causing factors. Therefore, a diverse array of interactions such as rain conditions, seismic oscillations, ocean behavior, commences. Also, the scale of the data is considerably huge to successfully predict disasters-especially considering deployment of more environmental sensors worldwide lately. The potential for using real-time data from these sensors, in combination with the most advanced machine learning techniques, increases with the development of technology3,4. Data processing and analysis in real time, with a guarantee of privacy and security, are therefore of utmost importance. This makes integrating technologies such as IoT, AI, and decentralized learning frameworks promising avenues for revolutionizing disaster prediction systems and response strategies.

The existing disaster forecasting models have also evolved from statistical techniques to complex machine learning methods and deep learning. Classical approaches to regression models or time-series models are limited and cannot capture dynamic, non-linear data associated with disasters. There are more machine learning algorithms related to ANNs, SVMs, and RFs applied lately for the disaster prediction5,6,7. While these methods are promising, they often present problems like overfitting, scalability, and dependency on large, centralized datasets. Deep learning techniques, CNNs, and RNNs have been used to solve many of the above issues with a better accuracy and robustness for the task. However, these models still present problems with regards to data privacy issues, high computationally demanding steps and demands for large datasets8,9,10. Furthermore, centralized models often require significant data transmission, which can be slow and inefficient, especially in areas with limited connectivity. Federated learning has been suggested as a way to overcome the problem of data privacy, but existing models are still not robust enough to deal with multi-modal time-series data from various disaster types simultaneously.

To overcome the limitations of existing techniques, the innovative framework proposed in this study is mTCN-FChain which integrates mMTC with TCNs in a federated learning setting. This is capable of handling large scale, multi-modal time-series data from the tsunami, flood, and earthquake datasets in real-time. By taking advantage of the edge devices to process data locally, the model reduces the number of data transmission, thereby achieving efficient bandwidth use and preserving the data privacy. Light TCNs deployed at the nodes of the network enable real-time anomaly detection and disaster prediction without relying on a centralized server. Federated learning allows training the local models at the site by sharing only model updates and ensures data privacy while enhancing the model’s predictability. Furthermore, the use of blockchain technology will ensure that the updates of models are secure, traceable, and accountable to prevent tampering and verify the authenticity of the shared information. The decentralized approach not only improves the efficiency of the system but also promotes the robustness and adaptability of disaster prediction models for timely response to a disaster and continuous learning from local predictions. The model proposed overcomes the challenges of above-mentioned approaches by innovating a scalable, secure, and efficient solution toward disaster prediction using cutting-edge technology to provide prompt and accurate disaster predictions in high-risk areas.

Research motivation

Natural disasters, including earthquakes, floods, and tsunamis, result in devastating damage to human lives, infrastructure, and ecosystems. The existing prediction systems cannot handle the dynamic, nonlinear data from real-time, large-scale sources efficiently. Motivated by the need to enhance disaster preparedness and reduce damage, this study is aimed at developing a scalable and secure disaster prediction framework using leading-edge technologies to ensure accurate predictions and proactive interventions in high-risk areas.

Significance of study

The proposed mTCN-FChain framework integrates some of the state-of-the-art technologies, namely mMTC, TCNs, federated learning, and blockchain, to alleviate critical challenges involved in disaster prediction. This study is significant since it can handle real-time time-series data from the IoT sensors to predict disasters accurately and efficiently. Combining scalable machine learning methods with decentralized and privacy-preserving techniques, the framework provides a reliable prediction system for disasters, such as tsunamis, floods, and earthquakes.

Problem statement

Most disaster prediction studies remain limited by issues of computational efficiency, scalability, and real-time data integration. A study11 mentioned that their model for disaster prediction is not very efficient at the large scale; it is needed to improve its computational efficiency. Another study12 point out that the limitations of flood management models in regions with unreliable data could be mitigated by introducing real-time, data-driven systems. Similarly13 discuss the challenges involved in the fusion of real-time data for disaster prediction, which needs to be done in a much better way for the integration of multiple data sources. The proposed method overcomes the limitation by incorporating Massive Machine-Type Communications (mMTC) along with TCN, federated learning, and blockchain-based mechanisms that lead to scalable, efficient, and real-time disaster prediction while allowing the model’s applicability to large-scale disaster scenarios in real life.

Recent inventions and challenges

Recent developments in the form of AI-driven disaster resilience models, federated learning for privacy-preserving disaster prediction, and blockchain-based secure data aggregation have revolutionized disaster management systems. Yet these approaches also raise challenges in computational inefficiency, the necessity for robust multi-modal data integration, and the ability to scale well in different types of disasters.

Key contributions of the study

-

Innovative framework Design: mTCN-FChain framework that combines federated learning and blockchain with TCNs and mMTC to achieve secure, scalable, and real-time-based disaster prediction.

-

Privacy-preserving collaborative learning: Propose federated learning for decentralized model training, thereby keeping privacy and data on sensitive raw material while enabling edge devices to collaboratively enhance disaster prediction models without sharing their sensitive raw data.

-

The study utilized blockchain technology to improve the security and traceability of model updates while proving the authenticity of updates in a federated learning environment.

-

The study exhibited better accuracy, reduced latency, and scalability than other methods and was validated using multi-modal datasets, including earthquakes, floods, and tsunamis, with metrics such as MAE, MSE, and RMSE, demonstrating strong real-time disaster prediction capabilities.

Rest of section of the study

Section 2: Review of existing models in disaster prediction, federated learning, blockchain, and IoT systems.

Section 3: Explanation of the mTCN-FChain framework using TCNs, federated learning, and blockchain.

Section 4: Performance evaluation of the framework with a discussion on strengths and challenges.

Section 5: Summary of contributions, challenges, and future research directions.

Related works

A recent study11 proposed a disaster prediction knowledge graph utilizing remote sensing data, geographic information, and knowledge from specialist experts for disaster research. The method constructs a semantic ontology of disasters and operates in a single unambiguous space-time. Applying the proposed model for disaster prediction, studies on forest fires and geological landslides establish the efficiency of the proposed model in acquiring multi-source spatio-temporal information from experiments and case studies. However, the study does not consider the computational efficiency or scalability of the paradigm when extended to larger scale disaster situations. Another approach12 presented a target-specific real-time Decision Support System (DSS) for flood disaster management for Iran that considers its various climates. This paper applied the principal component analysis via KMeans Clustering, Nearest Neighbours Classification, Gaussian process Classifier and Neural Network and proved a positive correlation (mean r = 0.7) between rainfall and flood disasters. Thirdly, the TOPSIS method also classified flood prevention strategies according to social, environmental and economic criterion. The model used confirmed precipitation data and estimates; however, it is only feasible where credible data is available, particularly in the third world. Additionally, a study14 developed an artificial neural network to predict of landslide risk for open-pit mine dumps. The study used geo studio software (GeoStudio 2012 8.15.6.13446, https://geostudio-2012.software.informer.com/8.0/)15 to evaluate the slope factors of safety under different rainfall intensity levels where the accuracy gains by GBRT was established to be at its highest (for instance FOS = 1.283 under q = 87 mm/day). Consequently, the results reveal that the proposed model is appropriate for early landslide risk warning. However, the research mainly bases its analysis on rainfall only and does not take into account other geographical or human structures.

Communications are critical in disaster-stricken areas where conventional modes of communication may not be functional, and for this reason, a user-centric communication model have been developed in this study16. The approach forms ad hoc cluster to connect end-users to the core network, for and aims to maximize through put in accordance with convex optimization. An example of a disaster-sensitive scheme that uses social media data to categorize regions such flooded areas is a machine learning scheme. As the results showed that, clustering and classification could effectively help restore the connection; however, the extensiveness of the model, when applied in large-scale real-world disasters, and real-time data was not tested. The multi-sensor information fusion algorithm for natural disaster protection was presented in this study13 the system improves the accuracy of distinct predictions and the level of tolerance to faults made during the process by combining Kalman joint filters, DS evidence theory, BP neural networks, as well as support vector machines. The application of the developed comprehensive diagnosis model pointed out the increased prediction accuracy of the working results, the integration of the results of several models, and a real-time decision and management approach. The results obtained from the simulation included better classification accuracy of disasters. But the authors did not look super closely at realistic issues surrounding the fusion of real-time data, which could be a problem for the real application in different types of disasters. Based on hydraulic theory and the machine learning method, the classification-based real-time flood prediction model was presented in this study17 utilizes flood database from EPA-SWMM and a two-dimensional inundation model; data categorization is done using the Latin Hypercube Sampling (LHS) method, coupled with a Probabilistic Neural Network (PNN) classifier for felicitous flood range analysis. The model gives real time flood mapping through converting predicted cumulative volumes into grid areas with a fitting of 85% goodness-of-fit and with a runtime of 1 min 12 s. It also improves the efficiency of flood prediction on a hybrid conceptual platform that can be applied in assessing loss mitigation and operational disaster risk management. However, additional data exploring model scalability for various flood cases and types might improve the model’s applicability in practice.

A study18 focus on the application of the machine learning approach for improving the flood forecasting system. This study emphasizes the use of AI and machine learning to forecast floods especially for nonlinear problems and to ease the computational effort of diffident hydrologic and hydraulic models. The work considers the applicability of machine learning techniques including Support Vector Machines SVM and fine tree classifiers for flood identification while developing a machine learning model for SIFT extracting. But this increases accuracy and at the same time reduces computation costs The use of real time data input could additionally enhance predictive abilities for disaster response. Another study19 explored the mapping of the drought forecast in the Free State Province of South Africa to analyze and forecast the dried-up patterns in arid zones by prompting machine-learning. The study shows that AI and ML are valuable tools for mapping the dry areas; nevertheless, more detailed studies on climatic and anthropogenic characteristics of sub-Saharan Africa may extend the reliability of the drought models. The ML-based model for farmland waterlogging prediction was recently proposed, which includes weather data forecasts and main predictors like rainfall and node water levels.

A study20 integrates several categories of machine learning models such as Random Forest and Multilayer Perceptron in order to forecast the occurrence of the result of disasters and economic losses from waterlogging. The outcome demonstrated that the established model was useful in predicting disaster losses and important deviations in yields were noticed to occur in different rainfall situations. But it could be more effective if geographic and temporal data were more specific in order to make locality estimates. Another study21 explored the role of AI in enhancing disaster resilience, particularly in response to emergencies, crises, acute diseases and disasters (ECDs) including the COVID-19, natural calamities and cyber threats. Thus, stressing an ability of AI to go from reactive to proactive, the paper provides a systematic review of AI-driven disaster resilience (AISDR). This highlights the importance of intelligent resilience for various ECD ecosystems and presents an overview of the research area on using AI to support. disaster management. Enhancements in the future of AI for ECDs can enhance the levels of system integration and its global scalability in operating in real-time disaster response scenarios.

The Table 1 summarizes various disaster prediction and management studies, indicating the methods applied, such as machine learning, decision support systems, and multi-sensor fusion algorithms. It points out the benefits of each approach, such as increased accuracy, real-time predictions, and scalable solutions. However, it also shows disadvantages in terms of availability of data, computational complexity, scalability, and applicability of the model to large scale or real-time application. In such cases, the advanced scalable models and efficient disaster prediction and management will be a must.

Disaster prediction using mTCN-FChain

This study presents a novel framework of disaster prediction, integrating federated learning and blockchain technology, called mTCN-FChain, shown in Fig. 1. This methodology models sequential data from diverse sources, including earthquake, tsunami, and flood datasets, with TCN. Federated learning allows decentralized training across edge devices while preserving data privacy and enabling collaborative learning. The process is made secure through model updates encryption and authenticity verification by digital signatures along with an immutable ledger for traceability and accountability purposes. The proposed framework also embeds WNLs for data transmission and processing. The aggregate global model that validated on the level of blockchain would be deployed onto edge devices then conduct real-time disaster prediction based on greater precision. This encompassing methodology supports secure, scaled, and privacies-preserving disaster monitoring with prediction for hazardous regions.

Data collection

This study uses real-time data from the IoT sensors; three datasets were used to make predictions. Earthquake Dataset spans from 2001 to 2023 which provides a magnitude and location for forecasting purposes. Flood Dataset comprises 50,000 records with details of the parameters from the hydrological and meteorological sensors. These parameters will be analyzed in predicting flood risk. The Tsunami Events Dataset, which contains more than 2000 records, collects wave height and source event data from oceanographic sensors and together supports accurate and real-time disaster prediction models.

Earthquake dataset

The earthquake dataset22 comprises 782 cases and seismic events from January 1, 2001, to January 1, 2023; earthquakes’ attributes include the magnitude, date, time, latitude, longitude, depth, and CDI, MMI, and SIG indicators. Event risk categorization is done based on its alert levels (“green,” “yellow,” “orange,” “red”) while a tsunami flag indicates ocean events. Other parameters like NST, GAP, MAGTYPE also help in making a result more reliable and geographical information gives more background information. Due to its highly featured and real-time nature, this dataset is used in the study for pattern recognition and earthquake prediction using TCNs and for localized training in federated learning. They include scalability, accuracy and privacy and therefore, it is appropriate to be used in efficient disaster prediction. Summary of earthquake dataset parameters were given in Table 2.

Flood prediction dataset

There are 50,000 rows in the flood prediction dataset23 and 21 numeric features that include variables like Monsoon Intensity, Deforestation, Urbanization, Drainage Systems, and Flood Probability as the target variable. With no missing value and all the data being of integer type, this dataset will prove ideal for use with regression models without much data preprocessing. This work provides a detailed overview of flood causes, which include climate change, human activities, and infrastructural issues. Utilizing this dataset will enable the proposed mTCN-based model to analyze complex interactions accurately, enhancing the precision of flood prediction and supporting proactive strategies for disaster management. Summary of flood prediction dataset parameters were given in Table 3.

Tsunami events dataset (1900–Present)

The Tsunami Events Dataset (1900–Present)24 includes comprehensive information on more than 2000 tsunamis which occurred in the whole world, as well as their feature, based on which the events have occurred, their date and time, location, magnitude, as well as death and injuries count. It is further supplemented with bathymetry data, which facilitate the depiction of the tsunami wave movement prognosis. Tsunami data is important for identifying causes, impacts and temporal distribution, making this dataset particularly useful for tsunami studies, planning and intervention. The broad scope and open access strengthen the potential for constructing better predictive models, which substantively enriches disaster risk analysis and decision-making in this investigation. Summary of Tsunami events dataset parameters were given in Table 4.

Data pre-processing

This study utilizes normalization to standardize numerical features within datasets, and mean imputation is applied in missing value imputation to replace missing data with mean value of observed data. These pre-processing steps help to check the quality and completeness of the data fed into the system. They are specifically required when training the TCNs. The workflow of data pre-processing in shown in Fig. 2.

Normalization

Normalization (or standardization) is a technique applied to scale numerical data into a constant range, hence making it easier for machine learning models to learn and perform well. Normalization usually scales the data to a [0, 1] range, while standardization transforms the data so that it has mean 0 and a standard deviation of 125. When features such as magnitude due to an earthquake, flood probability, and tsunami impact have varying numerical ranges, the appropriate application of this technique is important in TCNs. For instance, the magnitude of an earthquake can be between 1 and 9, while the probability of a flood is between 0 and 100. This would make the model place greater emphasis on the probability of a flood since it is the larger scale. Standardization is done using the following Eq. (1).

where \(\:X\) is the original value, \(\:\mu\:\) is the mean of the feature, and \(\:\sigma\:\) is the standard deviation of the feature. This process makes it possible for all features to get into a similar scale which makes the model more efficient and not to be biased toward large-value features.

Missing value imputation by mean imputation

Missing values in a dataset may result in incorrect predictions or incomplete models. Mean imputation is one of the most common techniques for dealing with missing data, where missing values are replaced by the mean of the observed values in the feature26. This is quite valuable in the case of numerical features such as the probability of a flood or the magnitude of a tsunami, where the data may be missing due to reporting errors or other reasons. Mean imputation is simple and helps retain the overall distribution of the data. The formula for mean imputation is given in Eq. (2).

where, \(\:{X}_{imputed}\) is the value used to replace the missing data, \(\:\sum\:{X}_{observed}\) is the sum of all the data points that are not missing; n is the number of data points that are not missing.

Wireless-aware neural layer (WNLs)

WNLs are layers that improve wireless data transmission by using neural network models to dynamically adapt to network conditions such as signal strength, bandwidth, and congestion. In this work, WNLs are applied after data collection, optimizing the transmission process before the data reaches edge nodes. WNLs reduce packet loss through error-correcting codes and adaptive retransmission mechanisms, ensuring data integrity even in noisy environments. They minimize latency through dynamic transmission rates and critical data prioritization in order to prevent network congestion. Intelligent routing is implemented so that WNLs predict optimal paths and make selections based on real-time network metrics for robust data delivery. Once data reaches the edge nodes, it is subjected to lightweight preprocessing and initial analysis using the Lightweight TCN. The working of WNLs is given in Fig. 3.

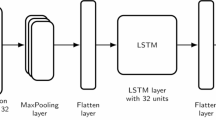

Temporal convolutional networks (TCNs)

Lightweight TCNs mechanism is intended to efficiently process time-series data, especially in resource-constrained environments like those with limited computational resources and network bandwidth27. Lightweight TCNs have several layers with unique contributions toward the overall functionality of the model. This section expands on the role of each layer, with special emphasis on how they work together to process time-series data for real-time disaster prediction. The architecture of TCN is shown in Fig. 4. The Input Layer is the first contact point with the model. The time-series data is received and usually pre-processed by WNLs. It plays a critical role in this step as they optimize the data transmission from the IoT devices to the edge nodes by reducing noise and only transmitting relevant information. This pre-processing step improves the quality of the input data, which is very important for proper predictions. The convolutional layers are the core component of TCNs, and they are designed to extract features from the input time-series data.

First layer: This layer uses causal convolutions, with specified kernel size. The causal convolutions do ensure that the predictions produced for time \(\:t\) depend only on inputs up to previous time steps while keeping their temporal order necessary for predictions. Mathematically this can be expressed as Eq. (3).

where \(\:{y}_{t}\) is the output at time \(\:t\), \(\:{w}_{i}\) are the weights of the convolution kernel, and \(\:x\) represents the input sequence.

Dilated convolutional layers: Following layers apply dilated convolutions with increasing dilation rates. Dilation allows the network to expand its receptive field without significantly increasing the number of parameters. For example, setting dilation rates \(\:d=\text{1,2},4,\) each layer processes inputs with different receptive fields given in Eq. (4).

This way, it can understand dependencies across longer time spans effectively without losing computational efficiency. After feature extraction, TCN computes an anomaly score to determine whether the current observation significantly deviates from the expected behavior. The TCN utilizes a 96-hour receptive field, which is intended to capture long temporal dependencies typical of disaster events such as slow pressure declines ahead of a flood or seismic precursors in earthquakes, which improve early prediction capabilities and false alarm rates.

Localized anomaly detection

Edge-based TCNs can perform local anomaly detection, where it able to identify any irregular behavior or pattern within time-series data coming from a sensor or other IoT device. It processes the incoming streams of data and looks for patterns that may hint at a possible disaster, it can be a temperature rise, uncharacteristic seismic activity, or unusual levels of rainfall. This model uses causal convolutions in order to have predictions based on past data therefore, maintains the necessary temporal integrity required to be correct for any kind of prediction. Mathematical representation of an anomaly score can be computed as Eq. (5).

Here, \(\:{y}_{t}^{predicted}\) is the predicted value from the TCN model, and \(\:{y}_{t}^{actual}\:\)is the actual observed value. If this score exceeds a predefined threshold \(\:\tau\:\), an alert can be triggered for further investigation. For every convolutional layer, the non-linear activation functions such as ReLU are applied. Such functions allow a model to introduce non-linearity and therefore capture complex patterns from the data. The definition of the ReLU function can be given by Eq. (6).

This non-linearity is crucial for enabling TCNs to model intricate relationships within time-series data. Residual Blocks are introduced after some of the convolutional layers to further improve learning within deeper networks. The residual block of TCN is shown in Fig. 5.

A residual block adds outputs of convolutional layers with their corresponding inputs which is given in Eq. (7).

where, \(\:F\left({x}_{t}\right)\) is the output of a set of convolution operations applied to the input \(\:{x}_{t}\). This design helps reduce problems of vanishing gradients, hence training better and deeper networks without performance degradation. The Output Layer generates predictions given the features which have been passed through previous layers. For a disaster prediction problem, this output is the probabilities of certain events (such as floods or earthquakes) happening in a given timeframe. The output can be written as Eq. (8).

where \(\:W\) are the weights learned during training, \(\:y\) represents the output from the last hidden layer, and \(\:b\) denotes a bias term.

Federated learning workflow for disaster prediction

In this study, Federated Learning (FL) is applied to develop a disaster prediction model using multiple edge devices with different disaster datasets, for instance, handling tsunami, flood, and earthquake disasters. This will ensure the development of a robust global model, capable of making predictions of all types of disaster, and thus ensuring scalability, preservation of privacy, and adaptability in different disaster scenarios.

Local mTCN models: Each edge node trains a lightweight TCN, mTCN is trained on its assigned disaster dataset. This localized scheme allows each of the models to specialize in disaster type, meaning it can then better predict under certain conditions in an earthquake event, flood or tsunami. Due to the naturally non-IID nature of data associated with disasters between nodes (i.e., coastal nodes handling tsunami data versus inland nodes handling flood data), the challenges related to model convergence can be addressed through normalization of data sets, as well as balancing the weights that are aggregated during model fusion. This type of heterogeneity is part of the real-life deployment, as well as a key aspect when creating a robust and generalized global model. Local models perform short-term trend analysis to detect immediate disaster signals like seismic or flood indicators, enabling timely alerts. They also capture long-term patterns such as seasonal or oceanographic trends to improve disaster forecasting and preparedness.

Federated learning workflow

Federated learning is used to enable the models on edge devices to collaboratively train a global model in a distributed manner without transferring sensitive local data. The core components of the federated learning are as follows. Each edge node trains its local mTCN model using solely the data stored locally28. The training is done by focusing on disaster-specific features, which enables the model to learn from the local data without being required to access other nodes’ data. Unlike simple raw data communication, the devices communicate model updates such as weights or gradients back to the central server. Such an approach assures privacy since any sensitive information from the edge does not leave; instead, model parameters are the only information transmitted and shared in a collaborative framework among devices for collaboration. Thereby, throughout the federated learning process, privacy of disaster data is maintained. Only model updates would be communicated as not to mention the exact details of the whereabouts of disasters, or socio-economic factors. Partial participation is allowed each communication round to realistically simulate the real-world deployment conditions. To simulate devices that fail, lose connectivity for a period of time, or just are unavailable temporarily, an average of 60–80% of the nodes are expected to provide updates each round. This helps improve the resiliency and scalability of the system in a dynamic or unpredictable environment. The framework of federated learning is shown in Fig. 6.

Global model aggregation

Finally, after training the local models, the edge models need to aggregate those models towards forming a single global model, which is typically performed by the central server. The workflow of model aggregation is shown in Fig. 7.

Federated aggregation: The global server aggregates the model updates that come from each edge node by using a process called Federated Averaging, or FedAvg. It combines the model parameters (weights or gradients) coming from each of the edge nodes into a single unified model. This process can be represented mathematically as Eq. (9).

where, \(\:{w}_{global}\) denotes the aggregated global weight, \(\:{w}_{i}\) represents the weight from the \(\:{i}^{th}\) local model, \(\:N\) indicate the number of edge nodes. Because of non-synchronous device performance, straggler nodes - nodes that do not respond within a 30-s window, will not be included in the current round to prevent bottlenecks during training. Additionally, in order to reduce communication and synchronization overhead due to frequent updates being transmitted, model updates have been quantized, and devices are allowed to communicate every 5 local epoch instead of after each epoch. This reduces the bandwidth usage and increases the usable resource lifetime of edge devices, which is crucial in disaster-prone scenarios when deployed to the real world.

The aggregated model incorporates the knowledge learned from each disaster-specific dataset, making it robust across multiple disaster types. This enables the model now to predict broader sets of information, thus upgrading its ability toward predicting different forms of disasters and catastrophes, such as earthquakes, floods, and tsunamis.

Blockchain integration in federated learning for disaster prediction: working mechanism

The blockchain component of the mTCN-FChain framework is fundamental to securing federated learning operations by providing encrypted update transmission, tamper-proof validations, traceability, and resilient fault tolerance. For securing model updates sent from edge nodes, symmetric encryption using the AES-256 algorithm is utilized. Each model update is encrypted individually, instead of encrypting all updates in a single batch, before being sent to the blockchain so that confidentiality can be ensured for any gradient or weight vector shared from the local mTCN models.

Let \(\:M\in\:{\mathbb{R}}^{n}\) be the model update vector of an edge node, with nnn being the number of learnable parameters. The encrypted version \(\:C\) is represented as Eq. (10).

where \(\:{AES}_{K}\) is the AES encryption function with a 256-bit key \(\:K\). The size of each update vector prior to encryption is between 320 KB and 512 KB based on the depth of the local mTCN model, whereas the encrypted output has an almost negligible overhead (~ 3–5%) attributed to AES block padding. The updates are pushed to the blockchain ledger every 5 local epochs, which coincides with the federated communication round interval to limit bandwidth consumption and power expenditure on resource-constrained edge devices.

Digital signatures are created with the ECDSA algorithm (Elliptic Curve Digital Signature Algorithm) using 256-bit keys. Let \(\:S\) be the digital signature of an update \(\:M\) created with the private key \(\:{K}_{priv}\), given in Eq. (11).

where \(\:H\left(M\right)\) represents the SHA-256 hash of the model update. After getting to the central aggregator, the signature \(\:S\) is verified with the sender public key \(\:{K}_{pub}\) to verify the source and integrity of the update. If the verification does not pass, the update is rejected and set to audit status. All verified updates are tracked on a permissioned blockchain based on Hyperledger Fabric. The ledger has nearly 1000 TPS of transaction throughput and utilizes a Proof-of-Stake (PoS) consensus with a ten second block time and one-megabyte block size limit. Each update stored includes a timestamp, node ID, update type (flood, earthquake, and tsunami) and cryptographic metadata. This immutable log provides complete traceability of contributions and allows for forensic analysis in real-time.

The framework is resistant to various types of attacks, e.g. tampering, model poisoning and spoofing. Hashed updates are automatically rejected at verification stage through hash mismatch. Attackers found to frequently fail in validating their gradients across rounds are blacklisted such that each malicious node trying to inject adversarial gradients is temporarily blocked from participating in the aggregation. In case of node compromise, the system can simply reinitialize the local model with the most recent global model and re-verify through a quorum of trusted validators before being allowed back into the network. This design guarantees the data Privacy, provenance and system robustness with reduced communication overhead. Security and integrity of disaster prediction as maintained within the federated environment are jointly secured by encryptions, digital signatures, as well as integrity backed by ledgers, coupled with traceability. The technical design of the system has been abided by most recent developments of safe federated learning29, making it accurate in case of high-risk real-time conditions.

Deployment and feedback

An updated model of global mTCN is deployed along with a feedback mechanism to effectively deploy real-time predictions of a disaster. The workflow of deployment and feedback is shown in Fig. 8.

Model deployment

Once the global mTCN model is updated through aggregation of the local model parameters, it gets deployed on all participating edge nodes. This enables every node to receive the most recent and updated version of the model for a real-time accurate prediction in a disaster situation. Disasters such as floods or earthquakes can be evaluated according to the latest known information. This allows the system to adapt to changing conditions and growing data sources.

Feedback loop

The feedback loop is critical for the continuous improvement and adaptability of the disaster prediction system. It has two main components:

Continuous learning

Once the global model has been deployed, each edge node generates predictions from real-time data. These predictions are fed back into the system for analysis. Feedback from local predictions allows for an iterative refinement of the global model. Mathematically, this may be represented as following Eq. (12).

where, \(\:\varDelta\:w\) represents the change in model weights, \(\:\eta\:\) is the learning rate, \(\:{y}_{actual}\) denotes the actual observed outcome, \(\:{y}_{prediction}\:\)is the predicted outcome, \(\:x\) represents input features.

Adaptability

The feedback loop thus ensures that the mTCN model evolves with time as the pattern of disasters change. For example, in the case of an emerging type of flood event or an increase in seismic activity within a region once stable, the model can evolve from new data input. More information from the constant monitoring and predicting processes will be used for further training, which can constantly adapt to newly emerging trends and anomalies. Overall, the dynamic framework should enhance disaster preparedness and response capabilities well. This dynamic framework enhances better outcomes under emergency situations.

Algorithm of mTCN-FChain for disaster prediction

Input: Multi-modal disaster datasets (earthquake, flood, tsunami). |

Output: Global disaster prediction model with real-time deployment. |

1. datasets ← collect_datasets([“earthquake”, “flood”, “tsunami”]) |

2. preprocessed_data ← preprocess(datasets). |

3. edge_nodes ← initialize_edge_nodes(). |

4. for each node in edge_nodes: |

5. local_data ← get_data(node). |

6. local_model[node] ← train_TCN(local_data). |

7. for each node in local_model: |

8. updates ← get_model_updates(local_model[node]) |

9. encrypted_update[node] ← encrypt(updates). |

10. blockchain ← initialize_blockchain(). |

11. for each node in encrypted_update: |

12. if verify_signature(encrypted_update[node], node.key) |

13. store_on_blockchain(encrypted_update[node], node.signature) |

14. validated_updates ← blockchain.get_validated_updates() |

15. global_model ← aggregate_updates(validated_updates) |

16. for each node in edge_nodes |

17. distribute_model(global_model, node) |

18. for each node in edge_nodes |

19. predictions[node] ← predict(global_model, node.test_data) |

20. repeat process with new data for continual learning |

The mTCN-FChain framework is a novel framework that brings together Temporal Convolutional Networks, Federated Learning, and blockchain technology to enable disaster prediction in a secure, decentralized, and privacy-preserving manner. The framework makes use of a TCN model to model sequential data from multiple hazards; federated learning allows collaborative training by the edge devices without sharing or gathering data. The employment of blockchain ensures model trust and provenance. The complete operational flow of the proposed framework is given in Fig. 9.

Results and discussion

The result of this study shows the efficiency of mTCN-FChain in integrating federated learning with blockchain for secure and accurate disaster prediction. The mTCN-FChain is implemented in Python to keep data private while training models on decentralized devices without transferring sensitive data. Blockchain adds an extra layer of security and traceability for model updates. TCN has outperformed both short-term and long-term predictions in disasters. Superior Scalability and Flexibility: the mTCN-FChain solution outperformed traditional methods, as well as baseline models on performance metrics by validating its strong robustness of real-time disaster monitoring across many scenarios. This simulation and hardware setup for the experiment is given in Tables 5, 6, 7 and 8.

Dataset statistics and preprocessing

Figure 10 is a histogram with a density curve used to represent the distribution of tsunami magnitudes. The x-axis represents magnitude values, and the y-axis represents occurrence counts. It can be inferred that the most frequent value in the dataset is about a magnitude of 7 because of the peak around this point. The smooth density curve, which closely follows the histogram, reveals a roughly normal distribution with a slight right skew. It depicts the frequency and distribution of magnitudes of tsunami. The findings thus indicate a common range for the magnitudes of tsunamis, with implications on enhancing the predictability of the tsunami models.

Figure 11 compares the frequency of two categories: 0 (no tsunami) and 1 (tsunami present). The larger bar for category 0, which just clears 450, demonstrates that events with no tsunami are more frequent. The smaller bar for category 1, which tops out at around 300, demonstrates tsunami events are much less common. It also shows that compared to its opposite, the tsunamis are less frequent events. The results emphasize the need to target rare events such as tsunamis with more effort in disaster forecasting models.

Figure 12 is the bar chart shows the count of events with various magnitudes ranging from 6.5 to 9.1. The maximum count is seen at a magnitude of 6.5, with an approximate count of 120, and it decreases with an increase in the magnitude. The color gradient from pink to purple indicates that there is a transition from smaller to larger magnitudes. This shows that lower magnitudes occur more frequently than higher magnitudes. The results highlight the significance of knowing magnitude distributions in better earthquake prediction and risk management in disaster scenarios.

The histogram of flood probabilities shows the frequency distribution in Fig. 13. The x-axis is shown here as probabilities from 0.30 to 0.70, and the y-axis is frequency. The bell-shaped curve indicates a peak probability of about 0.50, which represents the most frequent event. This would mean that the flood events are most likely to occur at a probability near 0.50. The flood distribution provides important information related to risk assessment through identification of areas at susceptibility to floods and aiding flood prediction planning. It concludes that understanding probability distributions helps in enhancing disaster preparedness and mitigation.

Figure 14 visualizes the influence of several numerical features on flood probability using a number of scatter plots. In each subplot, it illustrates the relationship between a particular feature, for instance, “Monsoon Intensity,” “Urbanization,” “Climate Change” and flood probability. The x-axis in each plot represents the feature value and the y-axis represents corresponding flood probability. The scatter patterns may indicate possible correlations or trends: for example, higher values of some features, such as “Urbanization” or “Deforestation,” might be related to increased flood probability. The plots together suggest a complex interplay of environmental, infrastructural, and socio-political factors in determining flood risks.

Federated learning performance

Figure 15 compares the four models: Model 1, Model 2, Model 3, and the Federated Model in terms of the MAE across training epochs. As shown above, the increase in epochs leads to a direct relation in terms of reducing the MAE with each model, indicates better performance is achieved over time. Generally, the lowest MAE is consistently recorded with the Federated Model, and other models are at the low end. This suggests that this framework at the developed mTCN-FChain, implemented with federated learning, will give better results. The results show the decrease in error rates with federated learning that increases the model’s performance at the time of training.

Blockchain security metrics

Table 9 illustrates the contribution of edge nodes in the mTCN-FChain framework, which includes the dataset used and the percentage of model contribution from each node. The model is contributed 30% from Node 1 using tsunami data, 25% from Node 2 by using flood data, and 45% from Node 3 by using the earthquake data of November 11, 2024. Thus, this scenario depicts the federated learning architecture, where the model is formed using many edge nodes without sharing any private data among nodes. The experimental results show successful collaboration among these edge nodes toward secure and diversified disaster prediction.

Figure 16 shows the number of updates, distributed over four weeks, shows continuous increase: 20 in Week 1 to 70 in Week 4. Such growth represents a mainstream contribution to blockchain technology, featuring the parameter in traceability. In fact, every update with blockchain is trackable and verifiable so that transparency and accountability are enhanced. The results put importance on the aspect of maintaining model update integrity via blockchain. It simply highlights that through blockchain, secure and verifiable contributions will be guaranteed toward the disaster prediction model.

Figure 17 depicts the security level for different types of model updates. These are the “Encrypted Updates,” the “Verified Updates,” and “Unverified Updates.” Both “Encrypted Updates” and “Verified Updates” depict levels of security approaching 100%, meaning encryption and verification offer strong protection on model updates. On the other hand, “Unverified Updates” are at a security level of around 10%. This clearly explains why encryption and verification are very important for model updates. Results indicated that the usage of unverified updates raises security risk, hence validating the use of secure update mechanisms in the disaster prediction model.

Disaster prediction results

Figure 18 gives a comparison between the observed and predicted wave heights for the tsunami with the x-axis as the number of simulations runs and the y-axis as wave height. Blue dots and lines are used to represent the observed values, while green dots and lines represent the predicted values. Close alignment between the green and blue lines is shown, which reflects the accuracy in the wave heights predicted by the mTCN-FChain model. This proves that the model is efficient in predicting tsunami events, which is essential for early warning systems. The results prove the reliability of the model in mitigating the effects of tsunamis on coastal communities.

Figure 19 compares the true and predicted magnitudes of the earthquake. Here, the x-axis is used to represent sample points and the y-axis is used to represent magnitudes. The closeness of the red dashed line to the green solid line indicates the accuracy of the model mTCN-FChain. This suggests that the model is able to predict the magnitude of the earthquake and thus provide accurate information regarding earthquake prediction. It is critical in disaster preparedness and mitigation.

Figure 20 depicts the strong correlation between actual and predicted values for flood prediction, with the x-axis representing actual values and the y-axis representing predicted values. The closeness of the blue data points to the black dash line, which is corresponding to a perfect prediction, shows that there is a very high accuracy in the model mTCN-FChain. Therefore, the above result confirms that the model predicts flood events with less deviation from the actual values. Overall, this verifies the success of the model in producing reliable and accurate flood predictions.

Qualitative results

Figure 21 is a heatmap displays a global distribution of earthquake events and their corresponding tsunamis-occurring areas. It points out high-risk areas for disaster occurrences, with the intensity shown in the colors where more intensified earthquakes in warmer colors, such as red and yellow, appear. These locations are critical inputs into the mTCN-FChain framework to provide accurate disaster prediction and risk assessment. Visualization has brought to importance of identifying high-risk areas for effective disaster mitigation strategies.

The correlation heatmap in Fig. 22 represents relationships among several environmental and infrastructural variables affecting floods. For example, a positive relationship exists between “Deforestation” and “Urbanization” with a correlation coefficient of 0.47. Such factors generally increase together, whereas negative relationships, such as between “TopographyDrainage” and “FloodProbability” (− 0.25), imply that an improved drainage will decrease the possibility of floods. The heatmap allows for the better understanding of complex environmental factors, and key interactions emerge. It shows the strongly related variables that can be found to make informed decisions on policy and planning. The study emphasizes deforestation, urbanization, and drainage system as targets in mitigating flood risks.

The “Correlation Heatmap” in Fig. 23 has been depicted for the interrelations between variables concerning earthquakes, namely magnitude, depth, cdi, mmi, and sig. Color gradations range from yellow to purple, and each color reflects the strength and direction of correlations; strong positive are indicated by yellow and strong negative by purple. All the diagonal elements reflect perfect self-correlation at 1.00. Off-diagonal values are from − 0.21 to 0.19, and they reflect that there is very weak correlation among these variables. An example is the association between magnitude and mmi is − 0.21, denote a relatively weak negative link.

Scalability evaluation

The results in Table 10 indicate that training time increases linearly from 12 to 14 h depending on the disaster types due to the overhead of distributed training. However, MAE is still consistently low (0.12–0.18), suggesting that while edge nodes participation increased, the mTCN-FChain framework provided high accuracy in predictions, providing further evidence of scalability and robustness.

Statistical analysis

In Table 11, the results of the paired t-test confirm the statistical significance of improvements in accuracy by the proposed mTCN model over PTCN, LSTM, and GRU. The p-values are less than 0.05, which demonstrates that the better performance of mTCN is not random variation, but is statistically significant across multiple disaster prediction scenarios.

Performance evaluation

Table 12 shows the performance metrics concerning the mTCN-FChain for flood, earthquake, and tsunamis predictions. This shows low levels of RMSE, MAE, and MSE in the consistent results, all pointing to proper accuracy in disasters prediction. Although the inference is somewhat slow taking about 25–30 ms, this will still ensure in real-time operation for the process. The training hours that took between 12 and 14 h reflects the model’s learning efficiency and ensures mTCN-FChain also effectively predicts each of the disaster varieties under consideration in different scenarios. The performance graph is shown in Fig. 24.

Table 13 shows the improvement in model performance after federated learning is integrated with blockchain in the mTCN-FChain framework. After integration, model update latency reduces from 200 ms to 150 ms, meaning faster updates. Prediction accuracy improves significantly from 75 to 98%, which indicates better model performance. In addition, the number of successful updates increases from 80 to 95%, which shows better reliability and effectiveness in the system’s real-time disaster prediction capabilities.

Table 14 provides a comparison of the mTCN algorithm against the PTCN, LSTM and GRU implementations as measured by accuracy, RMSE, inference time and memory usage. The proposed mTCN achieves high accuracy of 92.16%, with the lowest RMSE of just 0.10 and the lowest memory consumption of 9 MB, indicating the mTCN’s efficiency and suitability for high-value disaster prediction in real-time edge environments. The mTCN’s larger receptive field of 96 h enables it to effectively capture long-term temporal dependencies critical for early disaster detection, outperforming other models in both precision and efficiency.

Table 15 represents a performance comparison of five machine learning models: Random Forest30, Gradient Boosting31, Linear Regression, LGBM Regressor, and the proposed model mTCN-FChain for MAE, MSE, RMSE, and R2 metrics. The results suggest that mTCN-FChain outperformed all other models with significantly smaller error values: MAE, MSE, and RMSE values, and R2 score being higher, with better predictive accuracy. This indicates that the proposed model is superior when it comes to disaster prediction tasks. The model has shown more consistent, reliable, and accurate predictions makes its performance evident. Altogether, mTCN-FChain is a more robust and accurate real-time disaster monitoring method. The comparison graph is shown in Fig. 25.

Discussion

The mTCN-FChain framework holds huge potential for real-time disaster prediction through the integration of cutting-edge technologies such as mMTC, TCNs, federated learning, and blockchain. Its capability to process multi-modal time-series data efficiently obtained from IoT sensors leads to precise and low-latency predictions even in resource-restricted environments. Edge-based TCNs, in combination with WNLs, ensures high predictive accuracy while minimizing the computational load on IoT devices. This makes the system suitable for deployment in remote or disaster-prone areas. Federated learning ensures data privacy by enabling decentralized training, and blockchain guarantees data security and traceability, which is critical in emergency situations. However, challenges are there to the framework. The computational overhead of federated learning and blockchain integration may also slow down the training and updating times, hindering real-time performance in some disaster scenarios. Real-world deployment may be affected by inconsistent sensor data, device failures, and environmental issues that may negatively impact data collection or prediction accuracy. These limitations underscore the need to optimize within system architecture and the mechanisms of handling data. Future work in this regard has to reduce the latency, compute efficiency of the framework, and make the framework robust for its large-scale deployments, which are likely to find different real-world conditions.

Conclusion and future work

This framework, mTCN-FChain, is represents a significant advancement for the real-time disaster prediction field that leverages the collective power of IoT, AI, and blockchain technologies. The system utilizes TCNs together with federated learning and blockchain to provide the best prediction accuracy with scalability and robust data privacy and security. The proposed model results in better existing approaches in disaster prediction in terms of fewer error rates and faster updates, which makes it suitable for any practical application in disaster resilience. The framework is promising, although computational complexity and resource constraints make it unfeasible at the moment to achieve its functionality on a larger scale.

Future research will focus on optimizing key components of the system, particularly the blockchain architecture, to reduce latency and enhance computational efficiency. Including more types of disasters and real-time data sources can expand the framework, making it more usable in a variety of regions and scenarios. Real-world deployment will help determine its robustness to various regions and scenarios and also give insight into operational challenges and environmental factors. Instead, by integrating such sophisticated techniques as transfer learning and federated learning into the model, one can improve on its performance and scalability. These future improvements would open up paths for more effective, scalable, and reliable systems of disaster prediction that could lead to supporting a global level of disaster resilience.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Akhyar, A. et al. Deep artificial intelligence applications for natural disaster management systems: A methodological review. Ecol. Ind. 163, 112067 (2024).

Díaz, G. et al. ITS4Tsunamis: an intelligent transportation system for tsunami emergencies. J. Comput. Sci. 81, 102360 (2024).

Purnama, M. R. et al. Improving indonesia’s tsunami early warning: part I: developing synthetic tsunami scenarios and initial deployment. Ocean Eng. 316, 119892 (2025).

Abdalzaher, M. S., Krichen, M. & Falcone, F. Leveraging internet of things and emerging technologies for earthquake disaster management: challenges and future directions. Progr. Disaster Sci. 100347 (2024).

Cesario, E. et al. Machine learning for tsunami waves forecasting using regression trees. Big Data Res. 36, 100452 (2024).

Albahri, A. et al. A systematic review of trustworthy artificial intelligence applications in natural disasters. Comput. Electr. Eng. 118, 109409 (2024).

Kumar, R. P., Prakash, A., Singh, R. & Kumar, P. Machine learning-based prediction of hazards fine PM2. 5 concentrations: a case study of delhi, India. Discover Geosci.. 2 (1), 34 (2024).

Chen, C. et al. A short-term flood prediction based on spatial deep learning network: A case study for Xi county, China. J. Hydrol. 607, 127535 (2022).

Joshi, A., Raman, B., Mohan, C. K. & Cenkeramaddi, L. R. Application of a new machine learning model to improve earthquake ground motion predictions. Nat. Hazards. 120 (1), 729–753 (2024).

Abraham, K., Abdelwahab, M. & Abo-Zahhad, M. Classification and detection of natural disasters using machine learning and deep learning techniques: A review. Earth Sci. Inf. 17 (2), 869–891 (2024).

Ge, X. et al. Disaster prediction knowledge graph based on multi-source spatio-temporal information. Remote Sens. 14 (5), 1214 (2022).

Akbarian, H., Gheibi, M., Hajiaghaei-Keshteli, M. & Rahmani, M. A hybrid novel framework for flood disaster risk control in developing countries based on smart prediction systems and prioritized scenarios. J. Environ. Manag. 312, 114939 (2022).

Luo, X., Ye, J., Zhou, G. & Yi, T. Reliability analysis of multisensor fusion technology in disaster nursing application. J. Sens. 2022, 1–11. https://doi.org/10.1155/2022/6564235 (2022).

Jiang, S. et al. Landslide risk prediction by using GBRT algorithm: application of artificial intelligence in disaster prevention of energy mining. Process Saf. Environ. Prot. 166, 384–392 (2022).

GeoStudio Get the software safely and easily., Software Informer. Accessed: Jul. 21, 2025. (2012). https://geostudio-2012.software.informer.com/8.0/

Raza, M. et al. Establishing effective communications in disaster affected areas and artificial intelligence based detection using social media platform, Future Gener. Comput. Syst. 112, 1057–1069. https://doi.org/10.1016/j.future.2020.06.040 (2020).

Keum, H. J., Han, K. Y. & Kim, H. I. Real-time flood disaster prediction system by applying machine learning technique. KSCE J. Civ. Eng. 24 (9), 2835–2848 (2020).

Kumar, V. S. et al. Extraction of sift features for identifying disaster hit areas using machine learning techniques. In International Conference on Knowledge Engineering and Communication Systems (ICKES), 1–5 (IEEE, 2022).

Orimoloye, I. R., Olusola, A. O., Belle, J. A., Pande, C. B. & Ololade, O. O. Drought disaster monitoring and land use dynamics: identification of drought drivers using regression-based algorithms. Nat. Hazards. 112 (2), 1085–1106 (2022).

Jiang, Z. et al. Coupling machine learning and weather forecast to predict farmland flood disaster: A case study in Yangtze river basin. Environ. Model. Softw. 155, 105436 (2022).

Cao, L. AI and data science for smart emergency, crisis and disaster resilience. Int. J. Data Sci. Anal. 15 (3), 231–246 (2023).

Chauhan, C. Earthquake dataset. Accessed: Jan. 09, 2025. [Online]. https://www.kaggle.com/datasets/warcoder/earthquake-dataset

Khalid, N. Flood Prediction Dataset. Accessed: Jan. 09, 2025. [Online]. https://www.kaggle.com/datasets/naiyakhalid/flood-prediction-dataset

Harshal, H. & Tsunami Events Dataset. - Present). Accessed: Jan. 09, 2025. [Online]. (1900). https://www.kaggle.com/datasets/harshalhonde/tsunami-events-dataset-1900-present

Huang, L. et al. Normalization techniques in training dnns: methodology, analysis and application. IEEE Trans. Pattern Anal. Mach. Intell. 45 (8), 10173–10196 (2023).

Pham, T. M., Pandis, N. & White, I. R. Missing data: issues, concepts, methods. In Seminars in Orthodontics (Elsevier, 2024).

Lin, Y., Koprinska, I. & Rana, M. Temporal convolutional attention neural networks for time series forecasting. In International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2021).

Sameera, K. et al. Privacy-preserving in Blockchain-based federated learning systems. Comput. Commun. (2024).

Asif, M. et al. Intelligent two-phase dual authentication framework for internet of medical things. Sci. Rep. 15 (1), 1760 (2025).

Kanimozhi, T., Belina, S. & Sara, V. J. Classification of tweet on disaster management using random forest. In Advancements in Smart Computing and Information Security in Communications in Computer and Information Science, vol. 1759, (eds. Rajagopal, S.) 180–193 (Springer Nature Switzerland, 2022). https://doi.org/10.1007/978-3-031-23092-9_15

Xu, K., Han, Z., Xu, H. & Bin, L. Rapid prediction model for urban floods based on a light gradient boosting machine approach and hydrological–hydraulic model. Int. J. Disaster Risk Sci. https://doi.org/10.1007/s13753-023-00465-2 (2023).

Ethics declarations

Competing interests

The authors declare no competing interests.

Consent to publish

All the authors gave permission to Consent to publish.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Umadevi, M., Kumar, J.A., Priyan, S.V. et al. Design of mTCN framework for disaster prediction a fusion of massive machine type communications and temporal convolutional networks. Sci Rep 15, 29280 (2025). https://doi.org/10.1038/s41598-025-15397-8

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-15397-8