Abstract

The unconfined compressive strength of organic-rich clay shale is a fundamental parameter in geotechnical and energy applications, influencing drilling efficiency, wellbore stability, and excavation design. This study presents machine learning-based predictive models for unconfined compressive strength estimation, trained on a comprehensive dataset of 1217 samples that integrate non-destructive indicators such as ultrasonic pulse velocity, shale fabric metrics, wettability potential and destructive field-derived parameters. A dual-model framework was implemented using Support Vector Machine, Decision Tree, K-Nearest Neighbor, and Extreme Gradient Boosting (XGBoost) algorithms. Among these, the composite XGBoost model exhibited the highest accuracy (R2 = 0.981; RMSE = 0.02; MAE = 0.02), and maintained strong generalization (R2 = 0.91) on an independent validation set of 959 samples. Taylor diagram analysis and sensitivity evaluation identified ultrasonic velocity, void ratio, bedding angle, and temperature as critical predictors. This study offers a scalable, data-driven alternative to conventional unconfined compressive strength testing and enables rapid, reliable geo-mechanical characterization for complex shale formations.

Similar content being viewed by others

Introduction

Accurate prediction of shale strength is essential for the safe and cost-effective design of structures interacting with shale formations, such as boreholes, tunnels, slopes, and foundations. Organic-rich shale is widely distributed across the globe and has become a major focus of recent research due to its complex behavior whereas the strength of shale is a critical parameter in the analysis and design of open-pit excavations1,2,3. The presence of variable organic matter content in such shales makes it a challenge to predict the behavior due to water interaction4,5,6,7,8 as the shale strength is closely related to the stability of well-bores9 and the design of support system for the excavations in soft shale strata10,11. Existing models often rely solely on conventional geo-mechanical and drilling parameters, overlooking critical shale-specific characteristics like fabric anisotropy and wettability, which significantly influence strength behavior. The purpose of this study is the development of an advanced ML- model that accurately predicts shale strength by incorporating shale-specific factors improving upon previous models through enhanced algorithmic design, large-scale validation, and detailed sensitivity analysis to support safer and more reliable and optimized foundation designs, and reduction in uncertainty in shale-dominated geotechnical environments.

Unconfined compressive strength (UCS) is widely adopted as the primary response for shale behavior for foundation systems, and in-situ stress evaluations12,13,14 as it directly influences the strength and stiffness of materials used beneath foundations of infrastructures15,16,17,18,19,20,21,22,23,24. Hence the ML-based prediction model of the UCS of shale presents a vital importance design of structures on shale strata. Incorporating geotechnical field and laboratory variables into ML models, enhances the predictive robustness and geotechnical reliability of UCS estimations for shale rocks especially non-destructive parameters like increase in UPV and dry density shows higher strength25,26 and the void ratio (VR), porosity and moisture content (MC) adversely affect the UCS of shale27,28,29. The higher clay content (CC), organic matter (OM), and plasticity index (PI) lower the shale strength30,31,32. Environmental and field-derived parameters such as rainfall duration (RFD), rainfall intensity (RFI), temperature (T), rock quality designation (RQD), recovery ratio (RR), and bedding angle (BA) also exert critical influence on the UCS of shale formations33,34,35,36,37,38,39,40. UCS of organic-rich shale is governed by a wide spectrum of variables which can be handled by ML methods as the recent advances in ML and AI offer powerful alternatives capable of modeling complex geo-mechanical behavior with high accuracy and generalizability41 using efficacy of ML models in predicting UCS using geotechnical and well-logging data42,43,44. A comparative analysis of recent literature, summarized in Table 1, highlights a range of ML-based UCS models employing input features such as drilling parameters (e.g., weight on bit, RPM, ROP), physical properties (e.g., porosity, density, shear wave velocity), and mineralogical indices. Prior models (Table 1) have limited predictor scope, ignoring shale-specific features like fabric anisotropy and wettability; whereas, the significant drilling parameters like RQD and recovery ratio are also missing in these studies.

The present research aimed to develop machine learning-based predictive models for estimating the unconfined compressive strength (UCS) of organic-rich clay shale, providing a scalable, data-driven alternative to conventional destructive testing. The methodology utilized a large, comprehensive dataset (1217 samples) integrating novel non-destructive indicators, specifically wettability potential and quantitative shale fabric metrics—alongside standard destructive and ultrasonic pulse velocity. Four supervised ML algorithms—Support Vector Machine (SVM), Decision Tree (DT), K-Nearest Neighbor (KNN), and Extreme Gradient Boosting (XGBoost) were employed using a dual-model strategy. The simple model utilizes core physical parameters, while the composite model incorporates the full suite of predictors. The composite XGBoost model achieved exceptional predictive accuracy (R2 = 0.981; RMSE = 0.02; MAE = 0.02) and demonstrated robust generalization on an external validation dataset (R2 = 0.91), supported by Taylor diagram and sensitivity analysis. The novelty lies in: (1) the first integration of wettability potential and fabric anisotropy as critical predictors for shale UCS; (2) the composite XGBoost model achieving unprecedented accuracy (R2 = 0.981) and exceptional generalization (R2 = 0.91); and (3) sensitivity analysis revealing the non-linear influence of these novel parameters, establishing a paradigm-shifting approach capable of replacing conventional UCS testing for rapid geo-mechanical characterization in geotechnical applications.

Methodology

The approach adopted to develop and validate the ML-based models and the conceptual framework are presented in Figs. 1 and 2, respectively. The methodology is based on input variables which plays a pivotal role in the development of AI-based predictive models. The selection was based on the physical and statistical significance regarding three different aspects like non-destructive test (i.e., UPV), index and field parameters. The high-quality sample preparation from cores is tedious task which is expensive and time consuming and the samples are likely to be disturbed due to stress-unloading effects due thinly bedded shale (Hu et al.49). The sampling in rock is preferably performed by drilling of core from the shale strata. The core samples are tested in the laboratory for different index tests and UCS correlations are developed which lack the field and environmental influencing factors; hence, generating the imprecise predictive models being used in the geotechnical field. 1217 datasets of significant and effective parameters i.e., Unconfined Compressive Strength (UCS) and Moisture Content, (MC) 50, Ultrasonic Pulse Velocity (UPV) 51, Void Ratio (VR) and Porosity (P) 52, Dry Density (DD) 53, Clay Content (CC)54, Plasticity Index (PI) 55, Rock Quality Designation (RQD) and Recovery Ratio (RR) 56, Bedding Angle (BA) and Regional Temperature, (T) 57 were performed in field and laboratory. These input parameters were used for the model development, encompassing the minimum and maximum range for different types of shale materials. The extensive datasets were arranged in categories of different ranges. A systematic comprehensive repository was meticulously assembled for the development of an AI-driven predictive model for the UCS response parameter. The important segments of input parameters are taken as non-destructive (i.e., UPV, wettability (MC, RFD, and RFI), fabric (VR, OM, DD, CC, PI, and P), and drilling parameters (RQD, RR, BA, and T) are the input parameters incorporated in the development of predictive model for the UCS.

Presented in the Table 2, a total of 1217 data points were obtained from an extensive experimental program carried out at the Rock Mechanics and Geotechnical Testing Laboratories, University of Engineering and Technology, Lahore, Pakistan. This dataset was compiled over several years as part of ongoing research activities on the characterization of organic-rich clay shale. The testing program was designed to capture a wide spectrum of shale properties through systematic laboratory measurements of unconfined compressive strength (UCS), ultrasonic wave velocities, mineral fabric, wettability, and drilling-related parameters. All tests were performed under standardized and controlled laboratory conditions to ensure consistency, accuracy, and repeatability. Data were carefully recorded, reviewed, and integrated into a centralized laboratory database. The large number of testing points was deliberately selected to reflect the inherent variability and anisotropy typical of shale formations. This robust, internally generated dataset serves as a strong foundation for the development and validation of the XGBoost model proposed in this study to predict UCS based on integrated geotechnical and geophysical parameters.

This study encompasses a comprehensive testing data which are critical for modeling the strength behavior of shale rock. UCS serves as a primary indicator of material strength, while UPV reflects material density and elasticity. VR and MC provide insights into natural structural void capacity and compaction characteristic. Likewise, DD and CC are critical for soil and rock stability regarding strength perspective. OM and PI capture the organic behavior and plasticity characteristics of the shale. All these factors describe the shale fabric characteristics which as a result affect the UCS response. The wettability of the shale is vulnerable to its strength and P and MC can describe the potential of void spaces along with water present in these voids whereas RFD and RFI demonstrate the parameters related to the wetting potential at site. The site drilling conditions and field parameters such as RQD and RR are used to assess the quality and integrity of rock cores. The inclusion of BAand T affect the surface moisture of the extracted core samples. Collectively, UPV, fabric characteristics, wettability factors and field drilling parameters enable robust modeling and precise prediction of mechanical properties in complex geological environments. An ML-based predictive model demonstrating the most efficient and favorable KPIs emerged in this study. The efficacy of the proposed model was validated by a comprehensive analysis regarding comparison of the tested models. A rigorous evaluation was conducted with existing models using a large independent dataset. The sensitivity and parametric analyses were conducted for assessment of the model’s behavior in different conditions. Simple and composite models are presented for the response and predictors.

Simple model UCS = f (UPV, VR, OMC, DD, CC, OM, PI).

Composite model UCS = f (UPV, VR, OMC, DD, CC, OM, PI, P, MC, RQD, RR, RFD, RFI, BA, T).

Data analysis

Normalization of variables

The lab and field values of the data obtained were normalized for model development as it is necessary for improving the performance and stability of machine learning models. It ensures that features with different ranges and units contribute equally to the model, preventing those with larger magnitudes from dominating. This is particularly important for algorithms like Support Vector Machines and k-nearest neighbors; where feature scale impacts model performance. Normalization also accelerates convergence in optimization processes, reduces bias, and allows for more balanced learning. Additionally, it enhances the interpretability of results by putting all features on the same scale, making it easier to compare their relative importance and ensure consistent distance calculations in distance-based algorithms.

Table 3 shows a single-factor ANOVA analysis conducted on 14 groups, each containing 1217 samples, to compare variations in key geotechnical parameters. The summary statistics for each group include the count, sum, average, and variance, with averages ranging between 0.47 and 0.52 and variances spanning from 0.077 to 0.089. The ANOVA results show a statistically significant difference between groups, with an F-value of 2.271 exceeding the critical F-value of 1.720 (p-value = 0.0055). The between-group sum of squares (SS) is 2.47, with a mean square (MS) of 0.190, while the within-group variability accounts for an SS of 1163.46 and an MS of 0.083. These findings highlight meaningful variability among the parameters, underscoring their importance in the study’s context.

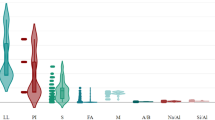

Density of the data

Figure 3 illustrates the density distributions of various geotechnical parameters. Each subplot combines a kernel density estimate (red line) and a histogram (blue area), offering a detailed visualization of the data’s spread and central tendencies. The red density curves highlight the underlying probability distributions, revealing the skewness, modality, and variance of each parameter. Most parameters exhibit unimodal distributions with slight variations in spread, indicating relatively consistent patterns within the dataset. Notably, UCS and a few other parameters show a wider spread, suggesting greater variability, which could influence their predictive capability in machine learning models. These plots provide insights into the statistical behavior and potential interrelationships of the input variables, serving as a foundational basis for model development and parameter optimization in geotechnical applications.

Pearson’s correlation matrix

Figure 4 represents the Pearson correlation matrix of various geotechnical parameters, emphasizing the response of UCS to other variables. UCS exhibits a strong positive correlation with Ultrasonic Pulse Velocity (UPV, r = 0.9939), Bulk Density (BA, r = 0.9598), and Void Ratio (VR, r = 0.9933), indicating that higher material density and reduced voids enhance compressive strength. Additionally, UCS is moderately positively correlated with Moisture Content (MC, r = 0.6627) and Optimum Moisture Content (OMC, r = 0.5653), suggesting that proper moisture levels during compaction improve material strength. Conversely, UCS demonstrates a negative correlation with Clay Content (CC, r = − 0.6627) and Dry Density (DD, r = − 0.6914), reflecting the weakening effects of clay’s deformability and inadequate compaction in drier conditions. A significant positive relationship between UCS and Tensile Strength (T, r = 0.9397) underscores the interplay between compressive and tensile properties in material behavior. These findings highlight UCS’s dependency on physical and compaction-related parameters, reinforcing its critical role in evaluating material performance in geotechnical applications.

Modelling framework

Following both physical and statistical significance, ten independent variables were meticulously examined as predictors for predicting UCS. This study focuses on predictive modelling as it lessens dependency on laborious and time-consuming testing procedures and gives predictions amidst the considerable variability inherent in established geotechnical data. The data was split into training data (80%) and testing data (20%) while separate independent data points were reserved for the validation of the models. Furthermore, the proposed model was validated alongside the existing models in the literature to demonstrate its effectiveness in predicting the UCS using Taylor’s diagram. Additionally, the parametric and sensitivity analysis was done to delineate the mechanism of modelling and emphasize the importance of geotechnical parameters (predictors) in the development of AI models for the prediction of UCS of shale rock.

Modelling techniques

-

(a)

Extreme Gradient Boosting (XGBoost)

XGBoost is a significant ensemble learning ML-algorithm. It comprises classification and regression trees along with analytical methods for boosting. The framework assessment is boosted by different tree construction in place of addressed tree. Then these trees are connected to establish a predictive algorithm. XGBoost’s objective function combines a loss function (measuring how well the model fits the training data) and a regularization term (to prevent overfitting). For a dataset with nnn examples, the objective function at iteration, t is shown in Eq. (1).

ℓ is the loss function (e.g., mean squared error), ft is the newly added tree at iteration t.

The regularization term Ω(ft)) controls the complexity of the model. For a tree ft with T leaves and weights w for each leaf, it’s defined as in Eq. (2)

γ is the penalty for the number of leaves, λ controls the L2 regularization on leaf weights.

The predicted value at each iteration, t is updated as Eq. (3)

To optimize the objective function, XGBoost uses a second-order Taylor expansion of the loss function around the current predictions as presented in Eq. (4)

For a given leaf jjj, the optimal weight wjw_jwj that minimizes the objective function can be computed as in Eq. (5)

The optimal value of the objective function after adding a tree is given by Eq. (6)

The final prediction boosting rounds is shown in Eq. (7)

where each ft is a tree added in iteration, t.

-

(b)

Support Vector Machine (SVM)

SVM makes use of machine learning to solve complex regression, classification and outlier detection problems by conducting optimal data changes that determine boundaries between data points based on predefined classes. Adopted in a wide range of disciplines; SVM has applications in healthcare, natural language processing and image recognition. The support Vector Machine model is chosen in this study because of its ability to perform complex and robust predictions. The limitations of this model include careful parameter tuning and the chances of overfitting. The goal of SVM is to maximize the margin between classes, which is equivalent to minimizing the norm of the weight vector was presented in Eqs. (viii and ix)

w is the weight vector, b is the bias term, yi are the class labels (+ 1 or -1), xi are the data points.

For non-linearly separable data, we introduce slack variables ξi\xi_iξi to allow some misclassifications as shown in Eqs. (x and xi):

where C is a penalty parameter controlling the trade-off between margin width and misclassification. To solve the optimization problem efficiently, SVM uses the dual formulation as presented in Eqs. (12 and 13)

where αi\alpha_iαi are the Lagrange multipliers.

Once the optimal α\alphaα values are found, the decision function for a new point x is in Eq. (14)

For non-linearly separable data, a kernel function K(xi,xj) is used in Eq. (15).

where K(xi,xj) = ϕ(xi)⋅ϕ(xj) maps data into a higher-dimensional space for linear separation.

-

(c)

Decision Tree

A decision tree algorithm works by partitioning input data recursively based on feature values, resulting in the prediction of a target variable. It starts with the entire dataset at the root node while selecting the best attribute to split the data into subsets for maximizing the information gain, and reducing impurity or variance as required. The process continues iteratively making a tree-like structure with internal nodes representing decision points. On the other hand, feature values and leaf nodes represent predicted outcomes. The Gini impurity for a node is calculated as in Eq. (16).

where pk is the proportion of samples belonging to class k in the node.

The best split minimizes the impurity of child nodes as in Eq. (17).

where Impurityleft and Impurityright are the impurity measures (e.g., Gini or Entropy) of the left and right child nodes, respectively.

-

(d)

K-Nearest Neighbor

The k-nearest works by identifying the k-closest data points in the feature space to a given query instance; making predictions based on their labels or values. In classification, the predicted class is usually selected by a majority vote of the k-nearest neighbors, while in regression, the predicted value is frequently the average of the k-nearest neighbors’ values. The number of neighbors to consider (k) is an important hyperparameter that can have a significant influence on the algorithm’s performance. The kNN algorithm has applications in a variety of machine learning tasks due to its simplicity and effectiveness. The primary operation in KNN is finding the distance between a query point x\mathbf{x}x and each point xi in the dataset. The most commonly used distance metric is Euclidean distance, defined as in Eq. (18).

where: m is the number of features, xj and xi are the feature values of the query point and the i-th data point, respectively.

For classification, KNN assigns the class yyy based on the majority vote among the kkk nearest neighbors (Eq. 19)

where yi1, yi2,…, yik are the classes of the k nearest neighbors.

For regression, KNN predicts the output by averaging the values of the k nearest neighbors as shown in (Eq. 20)

where yi are the target values of the k nearest neighbors.

Evaluation metrics

The proposed models were validated against existing models and independent datasets for predicting UCS of rock. The performance of the proposed model was validated based on various evaluation metrics. MAE (Mean Absolute Error) is the difference between the original and predicted values extracted by averaging the absolute difference over the data set (Eq.xxi)

MSE (Mean Squared Error) is the difference between the original and predicted values extracted by squared the average difference over the data set (Eq. 22)

RMSE (Root Mean Squared Error) is the error rate by the square root of MSE (Eq. 23)

R-squared (Coefficient of determination) represents the coefficient of how well the values fit compared to the original values (Eq. 24).

where y* is the predicted value of y and y’ is the mean value of y.

Parametric study and sensitivity analysis

Parametric analysis involved systematically varying the model parameters to evaluate their individual and combined effects on performance. Additionally, a sensitivity analysis was performed to determine the influence of each input parameter within the model framework, using a methodology aligned with the established approach. The following equations were utilized to compute the sensitivity of each input parameter (Eq. 25)

where fmax is the maximum and fmin is the minimum predicted value.

External validation

K-fold validation was used as Level-1 validation. Level-2 validation is done using independent data sets which were not used in the model development. The proposed model was further evaluated using a Taylor diagram analysis in comparison with existing models from the literature, leveraging an independent validation dataset as shown in Fig. 5.

Results and discussion

Figure 6 shows results showcase the performance of four machine learning models (Decision Tree, SVM, KNN, and XGBoost) in predicting UCS. Each figure compares the actual and predicted values for both training and testing datasets. The actual vs. predicted values show reasonable alignment but with noticeable scatter, particularly for the testing data.

The model struggles with overfitting, as evident from higher variance in predictions for testing data compared to training data indicating limited generalization capability and lower reliability in real-world applications. Similarly, the SVM model shows a clear underestimation for higher values of UCS, resulting in deviations from the ideal diagonal line. The testing data demonstrates moderate alignment, but the model’s predictive capability diminishes for extreme values suggesting the model lacks robustness, especially for datasets with diverse environmental, mechanical, and drilling predictors. Furthermore, KNN exhibits improved alignment compared to DT and SVM but still suffers from moderate scatter in the testing data. Its performance is more consistent but does not achieve the level of accuracy seen in XGBoost as it delivers an almost perfect alignment between actual and predicted values, with minimal scatter for both training and testing datasets. The strong performance is evident from the model’s ability to predict across a wide range of UCS values, capturing complex relationships between predictors (mechanical, drilling, and environmental factors). The model’s superior performance, demonstrated through high R-squared values and low error metrics, highlights its robustness and adaptability. The composite strategy (using all predictor categories) enables XGBoost to outperform other models significantly by leveraging the comprehensive dataset effectively. Hence, the composite XGBoost model excels due to its superior predictive accuracy, robust validation, and practical utility, making it the optimal choice for modelling UCS in complex shale lithologies. Figure 7 shows the comparison of the actual versus predicted plots across the models—Decision Tree (DT), Support Vector Machine (SVM), K-Nearest Neighbor (KNN), and XGBoost—clearly illustrates the superior performance of XGBoost.

While all models demonstrate a general alignment along the ideal y = x line, simple XGBoost achieves the tightest clustering of points, indicating the highest accuracy and minimal error for both training and testing datasets. In contrast, the DT model shows significant scatter, particularly in the testing data, suggesting poor generalization and a tendency to overfit. SVM performs better than DT but struggles with extreme values, showing deviations from the ideal line. KNN produces more consistent results than DT and SVM, but its predictions exhibit a wider spread around the ideal line, especially for the testing data. In comparison, simple XGBoost demonstrates excellent generalization, with minimal scatter and high predictive precision, reinforcing its robustness and suitability.

Model comparison

Figure 8 shows the performance metrics that reveal the superior accuracy and robustness of the Composite Model compared to other machine learning models, including Decision Tree, Cubic SVM, K-Nearest Neighbors (KNN), and XGBoost, as well as a simpler XGBoost model.

During training, the Composite Model achieves the lowest MSE (0.011), RMSE (0.011), and MAE (0.011), alongside the highest R-squared (0.991), demonstrating exceptional precision and its ability to explain nearly all variance in the training data. In testing, the Composite Model continues to outperform, achieving the best R-squared (0.981), while maintaining low MSE (0.011), RMSE (0.021), and MAE (0.021), reflecting its ability to generalize effectively to unseen data. When compared to the Simple Model, the Composite Model’s broader inclusion of predictors—integrating shale-water interaction, drilling, and fabric parameters—enables it to better capture complex relationships and dependencies, which the simpler model cannot fully address. This comprehensive approach results in superior performance metrics across all evaluation phases, making the composite models the most reliable and accurate tool for predicting UCS and ensuring its robustness for real-world applications.

Model validation

-

(a)

Level 1 Validation (K-Fold Cross Validation)

It is a widely used technique in machine learning to assess a model’s generalizability and prevent overfitting. The core idea is to split the data into K subsets and train the model on K-1 folds while testing it on the remaining fold. This process is repeated K times, with each fold serving as the test set exactly once. The results from each fold are then averaged to produce an overall performance metric. Unlike a simple train-test split, K-Fold ensures that each data point is used for both training and validation, reducing selection bias. Also, by averaging the performance across K iterations, K-Fold provides a more robust estimate of the model’s performance. The model development in this paper used K = 5.

-

(b)

Level 2 Validation (Using independent datasets for enhanced generalization)

Although the models demonstrated strong performance based on standard evaluation metrics, a comprehensive assessment of their effectiveness requires testing against data not used during the model development phase. To improve the generalizability of the proposed predictive models, validation with independent datasets from different geographic regions is crucial. While the current study shows high predictive accuracy within the primary study area, incorporating data from other parts of the country allows for a more robust evaluation of model stability and transferability. Validating against independent data helps assess the model’s performance under unfamiliar conditions, revealing any tendency toward overfitting to local patterns and supporting broader applicability58,59. Accordingly, to test the proposed models and assess their predictive capability, an independent dataset comprising 959 samples from a distinct region of the country was used for validation.

Figure 9 represents the performance of composite and simple XGBoost model showing distinctive trends. Both models show consistent trends across the sample population. The composite model showed even better prediction accuracy as the predictions generated by these models fall within an error margin of ± 1.51% as compared to a margin error of ± 1.86% for the Simple XGBoost model.

Figure 10 compares the validation performance of the simple and composite XGBoost models highlights the superiority of the composite approach in achieving more reliable and robust predictions.

For the composite model, the training phase yielded a Mean Squared Error (MSE) of 0.16, Root Mean Squared Error (RMSE) of 0.01, Mean Absolute Error (MAE) of 0.01, and an R-squared value of 0.98, while the testing phase achieved an MSE of 0.14, RMSE of 0.02, MAE of 0.02, and R-squared of 0.92. In contrast, the simple model showed comparable performance during training, with an MSE of 0.03, RMSE of 0.03, MAE of 0.03, and R-squared of 0.98, but experienced a significant drop in testing accuracy, yielding an MSE of 0.16, RMSE of 0.06, MAE of 0.05, and R-squared of 0.67. The improved generalization of the composite model, as reflected in its higher R-squared and lower error metrics during testing, underscores its efficacy in capturing complex relationships within the dataset while mitigating overfitting. These findings emphasize the composite model’s suitability for real-world applications requiring both precision and reliability.

Sensitivity analysis

Figure 11 highlights the sensitivity (S%) of various predictors in the Simple XGBoost and Composite XGBoost models, showcasing the superior performance of the composite model across all parameters.

The blue bars, representing the Composite XGBoost, consistently achieve higher sensitivity values compared to the orange bars for the Simple XGBoost. Predictors such as UPV, VR, PI, BA, and T exhibit particularly high sensitivity in the composite model, often nearing or exceeding 90%, indicating their strong contribution to the model’s predictive accuracy. In contrast, the Simple XGBoost model shows lower sensitivity for many predictors, including OMC, MC, and PI, suggesting that it captures these relationships less effectively. The enhanced sensitivity of the composite model is attributed to its inclusion of a broader range of variables, which allows it to better account for the complex interactions between predictors. This demonstrates that the composite model not only utilizes the predictors more effectively but also offers a more reliable framework for capturing the nuances of geotechnical behavior. This sensitivity-driven comparison underscores the importance of comprehensive predictor inclusion in achieving high prediction accuracy. Figure 12 highlights the superior performance of the composite XGBoost model (A) in predicting Unconfined Compressive Strength (UCS) compared to other models, within an absolute error threshold of ± 5%. Model A demonstrates the closest alignment with experimental values across all data points, consistently maintaining minimal deviations and accurately tracking observed trends. Unlike other models, such as C and D, which exhibit noticeable fluctuations and deviations from experimental values, the composite XGBoost model provides stable and precise predictions. Furthermore, its predictions remain tightly bound within the error bars, signifying high reliability and precision. Even across significant variations in UCS, such as peaks and troughs observed around counts 5, 15, and 25, the composite model captures these trends effectively, outperforming its counterparts. These results confirm the robustness and accuracy of the composite XGBoost model, establishing it as the most reliable tool for UCS prediction in geotechnical applications.

Figure 13 shows the Taylor diagram highlighting the superior performance of the composite XGBoost model (A) compared to other models (B, C, D, E) in predicting UCS. The composite XGBoost model achieves a correlation coefficient near 0.99, indicating an almost perfect linear relationship between the predicted and experimental values, surpassing the accuracy of all other models. Additionally, the composite model aligns closely with the reference standard deviation, reflecting its ability to replicate the variability of the experimental data accurately. Its proximity to the reference point further demonstrates that it has the lowest root mean square difference (RMSD), indicating minimal prediction error. Compared to the simple XGBoost model (B), the composite model benefits from a wider range of input variables, enabling more accurate and robust predictions. In contrast, models from previous studies (C, D, E) exhibit lower correlation coefficients, greater standard deviation mismatches, and higher prediction errors. Overall, the composite XGBoost model demonstrates superior accuracy, reliability, and consistency, making it the most effective tool for UCS prediction in geotechnical applications.

Model comparison with existing models in Taylor’s diagram illustrating the comparative performance of various models in predicting unconfined compressive strength (UCS). Model’s proximity to the reference point highlights its effectiveness in replicating both the strength and variability of experimental data. A: Composite XGBoost, B: Simple XGBoost, C: Davoodi et al.45, D: Kolawole et al.46, E: Mollaei et al.47.

Field implications

The innovative methodology employed in this study holds significant importance. The systematic and comparative assessments of diverse ML-based based models and the proposed hybrid model offer a nuanced approach that enables end users to make informed decisions for accurately predicting UCS using a wide range of critical input factors. The proposed models demonstrate high utility due to their minimal errors, both in relative and statistical analyses. Traditional UCS tests, which rely on costly procedures such as rock drilling, sample preservation, core cutting, finishing, testing, analysis, and evaluation, often encounter laboratory errors and complexities, particularly with shale rock samples. Replacing these tedious and error-prone tests with an ML model that incorporates non-destructive UPV tests, shale index characteristics, and field drilling parameters would significantly enhance efficiency and reliability. Furthermore, conventional UCS values often fail to represent actual strata conditions, as weaker samples, typically in broken form, are excluded from strength tests, leaving only the strongest core samples. The proposed models address this limitation by offering a more comprehensive and practical alternative for UCS prediction.

Conclusions

The study highlights the critical importance of shale strength in geotechnical applications, particularly for shale gas drilling, fracturing, rock excavation, and tunneling projects in organic-rich strata. By leveraging a comprehensive dataset of 1217 entries, encompassing both non-destructive parameters such as ultrasonic pulse velocity and shale fabric characteristics, wettability parameters alongside destructive drilling parameters, the research successfully developed machine learning models for unconfined compressive strength prediction. The incorporation of a wide range of variables and ML algorithms such as SVM, DT, KNN, and XGBoost allowed for a robust evaluation of prediction accuracy having R2 values of 0.60, 0.61, 0.63 and 0.67 for the simplified models and 0.76, 0.81, 0.89 and 0.92 for the composite models respectively.

Among the machine learning models tested, the composite XGBoost model demonstrated the highest accuracy with an R-squared of 0.92, MAE of 0.02, and RMSE of 0.02, significantly outperforming simpler models and traditional approaches. Likewise, the validation through metrics such as R-squared, MAE, RMSE, and Taylor diagram analysis confirmed the accuracy and reliability of the model. Sensitivity analysis further revealed the complex interplay of predictors influencing UCS, underscoring the model’s robustness. It is anticipated that the ML-based framework presented in this study offers a rapid, cost-effective, and accurate tool for geotechnical planning and shale-related engineering projects, paving the way for improved wellbore efficiency and resource extraction strategies.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Li, H., Chapman, D. N., Faramarzi, A. & Metje, N. The analysis of the fracturing mechanism and brittleness characteristics of anisotropic shale based on finite-discrete element method. Rock Mech. Rock Eng. 57(4), 2385–2405 (2024).

Alanazi, A. et al. Hydrogen adsorption kinetics in organic-Rich shale reservoir rocks for seasonal geological storage. Fuel 379, 132964 (2025).

Nie, H. Evaluation of gas content in organic-rich shale: A review of the history, current status, and future directions. Geoscience Frontiers, 101921. (2024).

Hu, T. et al. Movable oil content evaluation of lacustrine organic-rich shales: Methods and a novel quantitative evaluation model. Earth Sci. Rev. 214, 103545 (2021).

Wang, J. et al. Evolution of mechanical properties of organic-rich shale during thermal maturation. Sci. Rep. 14(1), 24327 (2024).

Cheng, G. et al. Pore structure evolution of organic-rich shale induced by structural deformation based on shale deformation experiments. Energy 306, 132463 (2024).

Meng, S. et al. CO2 utilization and sequestration in organic-rich shale from the nanoscale perspective. Appl. Energy 361, 122907 (2024).

Yasin, Q. et al. Automatic pore structure analysis in organic-rich shale using FIB-SEM and attention U-Net. Fuel 358, 130161 (2024).

Zhuang, Y. et al. Investigation on effects of water-shale interaction on acoustic characteristics of organic-rich shale in Ordos Basin, China. J. Pet. Explor. Prod. Technol. 14, 1–15 (2024).

Xie, W. Q., Zhang, X. P., Liu, Q. S., Tang, S. H. & Li, W. W. Experimental investigation of rock strength using indentation test and point load test. Int. J. Rock Mech. Min. Sci. 139, 104647 (2021).

Xie, W. Q., Liu, X. L., Zhang, X. P., Liu, Q. S. & Wang, E. Z. A review of test methods for uniaxial compressive strength of rocks: Theory, apparatus and data processing. J. Rock Mech. Geotech. Eng. 17(3), 1889–1905 (2025).

Ibrahim, A. F., Hiba, M., Elkatatny, S. & Ali, A. Estimation of tensile and uniaxial compressive strength of carbonate rocks from well-logging data: artificial intelligence approach. J. Pet. Explor. Prod. Technol. 14(1), 317–329 (2024).

Gao, M. et al. Mechanical characterization of uniaxial compression associated with lamination angles in shale. Adv. Geo-Energy Res. 13(1), 56–68 (2024).

Gao, F. et al. Coupled effects of chemical environments and freeze–thaw cycles on damage characteristics of red sandstone. Bull. Eng. Geol. Env. 76, 1481–1490 (2017).

Masoud, Z., Akbar, A. & Khan, A. H. High quality and cost-effective drilling system for prebored pressuremeter testing. Soils Found. 53(6), 903–909 (2013).

Masoud, Z. & Khan, A. H. An improved technique for prebored pressuremeter tests. KSCE J. Civ. Eng. 23(7), 2839–2846 (2019).

Masoud, Z. & Khan, A. H. Prediction of unloading failure strain and undrained shear strength of saturated clays by limit pressure from prebored pressuremeter. Geotech. Test. J. 44(5), 1426–1447 (2021).

Rizvi, M. A., Khan, A. H., Rehman, Z. U., Masoud, Z. & Inam, A. Effect of fractured aggregate particles on linear stress ratio of aggregate and resilience properties of asphalt mixes—a way forward for sustainable pavements. Sustainability 13(15), 8630 (2021).

Rizvi, M. A., Khan, A. H., Rehman, Z. U., Inam, A. & Masoud, Z. Evaluation of linear deformation and unloading stiffness characteristics of asphalt mixtures incorporating various aggregate gradations. Sustainability 13(16), 8865 (2021).

Khan, A. H. et al. Prediction of post-yield strain from loading and unloading phases of pressuremeter, triaxial, and consolidation test curves for sustainable embankment design. Sustainability 14(5), 2535 (2022).

Qamar, W., Khan, A. H., Rehman, Z. U. & Masoud, Z. Sustainable application of wool-banana bio-composite waste material in geotechnical engineering for enhancement of elastoplastic strain and resilience of subgrade expansive clays. Sustainability 14(20), 13215 (2022).

Zubair, A. et al. Prediction of compression index from secant elastic modulus and peak strength of high plastic clay ameliorated by agro-synthetic waste fibers for green subgrade. Sustainability 15(22), 15871 (2023).

Farooq, Z., Zubair, A., Farooq, K., Masoud, Z. & Mujtaba, H. Enhancement of resilient modulus and strength of expansive clay stabilized by wool and agricultural waste. Arab. J. Sci. Eng. 14, 1–18. https://doi.org/10.1007/s13369-024-09760-6 (2024).

Ali, M., Zubair, A., Farooq, Z., Farooq, K. & Masoud, Z. Prediction of small-strain elastic stiffness of natural and artificial soft rocks subjected to freeze-thaw cycles. J. Rock Mech. Geotech. Eng. 17, 3546 (2025).

Su, Z. et al. A combined non-destructive prediction method for evaluating the uniaxial compressive strength of rocks under freeze–thaw cycles. Arab. J. Sci. Eng. 47(10), 13365–13379 (2022).

Dai, J., Liu, J., Zhou, L. & He, X. Real-time ultrasonic features and damage characterization of deep shale. Rock Mech. Rock Eng. 56(4), 2535–2550 (2023).

Xu, X., Li, Q. & Xu, G. Investigation on the behavior of frozen silty clay subjected to monotonic and cyclic triaxial loading. Acta Geotech. 15, 1289–1302 (2019).

Jia, H., Ding, S., Zi, F., Dong, Y. & Shen, Y. Evolution in sandstone pore structures with freeze-thaw cycling and interpretation of damage mechanisms in saturated porous rocks. CATENA 195, 104915 (2020).

Xian-yin, Q., Dian-dong, G., Ming-zhe, X. & Ting, K. Experimental and damage model study of layered shale under different moisture contents. Int. J. Damage Mech. 33, 10567895241245752 (2024).

Nnamani, C. H. The chemical and mineralogical composition and their effects on strength parameters of cohesive soil developed over Enugu shale. Eur. J. Environ. Earth Sci. 3(1), 28–35 (2022).

Fan, L. F., Wang, H. & Zhong, W. L. Development of lightweight aggregate geopolymer concrete with shale ceramsite. Ceram. Int. 49(10), 15422–15433 (2023).

Li, X. et al. Rheological mechanical properties and its constitutive relation of soft rock considering influence of clay mineral composition and content. Int. J. Coal Sci. Technol. 10(1), 48 (2023).

Li, Q., Wang, Y. & Zhang, K. Failure mechanism of weak rock slopes considering hydrological conditions. KSCE J. Civ. Eng. 26, 1–18 (2022).

Shen, W. et al. Estimating RQD for rock masses based on a comprehensive approach. Appl. Sci. 13(23), 12855 (2023).

Hasan, M., Shang, Y., Yi, X., Shao, P. & Meng, H. Determination of rock quality designation (RQD) using a novel geophysical approach: a case study. Bull. Eng. Geol. Env. 82(3), 86 (2023).

Ge, Y., Liu, J., Zhang, X., Tang, H. & Xia, X. Automated detection and characterization of cracks on concrete using laser scanning. J. Infrastruct. Syst. 29(2), 04023005 (2023).

Zhang, Y., Zhao, Y., Long, A., Wang, C. & Bi, J. Combined effects of bedding anisotropy and matrix heterogeneity on hydraulic fracturing of shales from Changning and Lushan, South China: An experimental investigation. J. Asian Earth Sci. 259, 105908 (2024).

Zhang, J., Du, R., Chen, Y. & Huang, Z. Experimental investigation of the mechanical properties and energy evolution of layered phyllite under uniaxial multilevel cyclic loading. Rock Mech. Rock Eng. 56(6), 4153–4168 (2023).

Zhang, J., Zhang, X., Chen, W., Huang, Z. & Du, R. A constitutive model of freeze–thaw damage to transversely isotropic rock masses and its preliminary application. Comput. Geotech. 152, 105056 (2022).

Wang, M. et al. Summary of the transformational relationship between point load strength index and uniaxial compressive strength of rocks. Sustainability 14(19), 12456 (2022).

Aziz, M. et al. Deep learning-based prediction of particle breakage and friction angle of water-degradable geomaterials. Powder Technol. 444, 120049 (2024).

Lee, H. Engineering in vitro models: Bioprinting of organoids with artificial intelligence. Cyborg Bionic Syst. 4, 18 (2023).

Othman, K. & Abdelwahab, H. The application of deep neural networks for the prediction of California Bearing Ratio of road subgrade soil. Ain Shams Eng. J. 14, 101988 (2023).

Liu, X. F. et al. Experimental study on the small strain stiffness-strength of a fully weathered red mudstone. Constr. Build. Mater. 438, 137058 (2024).

Davoodi, S., Mehrad, M., Wood, D. A., Rukavishnikov, V. S. & Bajolvand, M. Predicting uniaxial compressive strength from drilling variables aided by hybrid machine learning. Int. J. Rock Mech. Min. Sci. 170, 105546 (2023).

Kolawole, O. & Assaad, R. H. Modeling and prediction of temporal biogeomechanical properties using novel machine learning approach. Rock Mech. Rock Eng. 56(8), 5635–5655 (2023).

Mollaei, F., Moradzadeh, A. & Mohebian, R. Novel approaches in geomechanical parameter estimation using machine learning methods and conventional well logs. Geosyst. Eng. 27(5), 252–277 (2024).

Miah, M. I., Ahmed, S., Zendehboudi, S. & Butt, S. Machine learning approach to model rock strength: Prediction and variable selection with aid of log data. Rock Mech. Rock Eng. 53, 4691–4715 (2020).

Hu, Z., Mei, H. & Yu, L. An intelligent prediction method for rock core integrity based on deep learning. Sci. Rep. 15(1), 6456 (2025).

ASTM D2938. Standard test method for unconfined compressive strength of intact rock core specimens. American Society for Testing of Materials: West Conshohocken, PA, USA.

ASTM C597. Standard test method for ultrasonic pulse velocity for concrete. American Society for Testing of Materials: West Conshohocken, PA, USA (2022).

ASTM D4404. Standard test methods for determination of pore volume and pore volume distribution of soil and rock mercury intrusion porosimetry. American Society for Testing of Materials: West Conshohocken, PA, USA.

ASTM D7263. Standard test methods for laboratory determination of density and unit weight of soil specimens. American Society for Testing of Materials: West Conshohocken, PA, USA (2018).

ASTM D7928-17. Standard test methods for particle size distribution (gradation) of fine-grained soils using the (sedimentation) hydrometer analysis. American Society for Testing of Materials: West Conshohocken, PA, USA (2021).

ASTM D4318. Standard test methods for liquid limit, plastic limit, and plasticity index of soils, American Society for Testing and Materials, Vol. 4, USA (2010).

ASTM D6032-17. Standard test methods for determining rock quality designation (RQD) of rock core. American Society for Testing of Materials: West Conshohocken, PA, USA (2017).

ASTM D5312/D5312M. Standard Test Method for Evaluation of Durability of Rock for Erosion Control under Freezing and Thawing Conditions; American Society for Testing of Materials: West Conshohocken, PA, USA (2013).

Nasrnia, B. & Falahat, R. Studying the accuracy and generalizability of different estimation methods of shear wave velocity. Arab. J. Sci. Eng. https://doi.org/10.1007/s13369-024-09809-6 (2024).

Nasrnia, B., Falahat, R., Kadkhodaie, A. & Vijouyeh, A. G. A committee machine-based estimation of shear velocity log by combining intelligent systems and rock-physics model using metaheuristic algorithms. Eng. Appl. Artif. Intell. 126, 106821 (2023).

Acknowledgements

The authors are grateful for the support provided by King Fahd University of Petroleum and Minerals (KFUPM), Dhahran, Saudi Arabia. Interdisciplinary Research Center for Construction and Building Materials (IRC-CBM) and Interdisciplinary Research Center for Advanced Materials (IRC-AM) at KFUPM are also acknowledged for the support and facilities provided.

Funding

The authors received no financial support for this research.

Author information

Authors and Affiliations

Contributions

Muhmmad Ali (MAF) conceptualized the study and developed the methodology. MAF and Usman Ali (UA) conducted the data curation and performed the machine learning analyses. Mubashir Aziz (MA) and Aaqib Ali (AA) provided domain-specific insights and interpretation of the geotechnical data. MAF and MA wrote the main manuscript text. AA and UA contributed to the revision of the manuscript, enhanced the discussion of results, and improved the figures. All authors reviewed and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ali, M., Aziz, M., Ali, A. et al. Machine learning-based prediction of unconfined compressive strength of organic-rich clay shales using hybrid destructive and non-destructive inputs. Sci Rep 15, 31855 (2025). https://doi.org/10.1038/s41598-025-15572-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-15572-x