Abstract

Brain metastases (BMs) are the most common intracranial tumors. Stereotactic radiotherapy has improved the quality of life of patients with BMs, but delineating BMs precisely takes oncologists considerable time and experience. Deep learning techniques have shown promising applications in radiation oncology. In this work, we therefore propose a deep learning-based method for automatic segmentation of the primary tumor volumes of BMs. Magnetic resonance imaging (MRI) scans of 158 eligible patients with BMs were retrospectively collected. An automatic segmentation model, BUC-Net, built on U-Net with a cascade strategy and a bottleneck module, was proposed for auto-segmentation of BMs. The model was evaluated with geometric metrics (Dice similarity coefficient (DSC), 95% Hausdorff distance (HD95) and average surface distance (ASD)) for automatic segmentation, with precision-recall (PR) and receiver operating characteristic (ROC) curves for automatic detection, and with the relative volume difference (RVD) for clinical evaluation. Compared with U-Net and U-Net Cascade, BUC-Net achieved an average DSC of 0.912 and 0.797, HD95 of 0.901 mm and 0.922 mm, and ASD of 0.332 mm and 0.210 mm for automatic segmentation in binary and multi-class settings, respectively. For tumor detection, it achieved average areas under the curve (AUC) of 0.934 and 0.835 for the PR and ROC curves, respectively. It also yielded the smallest RVD across diameter ranges in the clinical evaluation. With BUC-Net, segmentation and manual revision of the BMs of one patient can be completed within 10 min, instead of 3–6 h with conventional manual delineation, which markedly improves the efficiency and accuracy of radiation therapy.


Introduction

Brain metastases (BMs) are the most common intracranial tumors and substantially affect the prognosis and quality of life of patients. It is estimated that 20–45% of advanced tumors will metastasize to the brain1. Although the precise incidence of BMs is unknown, more than 100,000 people are diagnosed every year2. Lung cancer is reported to be the tumor most prone to brain metastasis: BMs occur in approximately 50% of patients with lung cancer, 13%–30% of patients with breast cancer, 6%–11% of patients with malignant melanoma, and 6%–9% of patients with gastrointestinal cancer3,4. Since the 1950s, whole brain radiation therapy (WBRT) has been the most widely used treatment for patients with multiple brain metastases. After WBRT, the disease control rate can reach 24%–55%, and the median survival time is extended to 3–6 months5. With advances in systemic cancer treatment, patients with brain metastases now survive longer, and the toxicities of WBRT, such as cognitive deterioration, have received increasing attention. Since Lindquist6 reported the successful treatment of a patient with a brain metastasis using stereotactic radiotherapy, evidence of the effectiveness of this treatment has been accumulating. The dominance of WBRT has been challenged by stereotactic radiotherapy, which has become a standard treatment for patients with newly diagnosed or recurrent brain metastases. Many expert panels, including the American Society for Radiation Oncology (ASTRO), the National Comprehensive Cancer Network (NCCN), and the European Association of Neuro-Oncology (EANO), recommend stereotactic radiotherapy for BMs.

Stereotactic radiotherapy has indeed improved patients' quality of life, but it also requires doctors to spend more time delineating BMs precisely. Because BMs are usually multiple and vary widely in morphology and location, manual identification is limited by the subjective experience of individual doctors. Small metastases in particular are easily overlooked in manual detection, as they appear in only a few image slices and typically have low contrast. In addition, some anatomical structures, such as blood vessels, look very similar to BMs in 2D cross-sections, which makes manual identification challenging7. The time needed to delineate BMs depends on their number, size, boundary and location. According to Ying Mao's study, manual segmentation took a median of 2.8 min per lesion in clinical practice7. It is therefore important to develop technology that assists the automated identification of BMs.

Deep learning is a branch of artificial intelligence in which an algorithm learns by inference from a dataset8. Over the last decades, deep learning approaches have been applied to the automatic detection and/or segmentation of brain metastases and have shown promising applications in radiation oncology. Ying Mao et al.9 proposed an automatic segmentation method for BMs based on three-dimensional T1-weighted magnetization-prepared rapid gradient-echo (3D-T1-MPRAGE) imaging, in which a symmetrically driven fully convolutional network (FCN) performed the segmentation. Yixing Huang10 proposed a new segmentation framework based on volume-level sensitivity–specificity (VSS), which increased the sensitivity from 86.7% to 95.5% and the precision from 68.8% to 97.8%. Bousabarah11 proposed a soft Dice loss computed at three spatial scales, namely the outputs of three down-sampled layers of a 3D U-Net, which improved BM segmentation performance. Pierpaolo Alongi12 presented a segmentation network and demonstrated radiomics analysis combined with segmentation of FDG-PET/CT images. Sang Kyun Yoo13 demonstrated that a deep learning model enhanced with effective training techniques can accurately detect and segment even small brain metastases (less than 0.04 cc) on contrast-enhanced MRI. Jie Xue14 presented BMDS net, a deep learning-based model that achieves highly accurate automatic detection and segmentation of brain metastases on 3D-T1-MPRAGE MRI images. Muhammad Ali15 highlighted how advances in artificial intelligence and deep learning have substantially improved automated cell detection and segmentation in microscopy images.

This study aims to explore the application of deep learning to radiation oncology for BMs and to develop a deep learning-based model for automatic segmentation of BMs on MRI. We propose 3D BUC-Net, an automatic segmentation model that builds on 3D U-Net and combines a cascade architecture with a bottleneck module, to segment BMs automatically, especially smaller ones. MRI sequences of 62 eligible patients, selected from 86 patients with histologically proven BMs, were retrospectively collected to develop the model. MRI sequences of 96 additional patients with BMs from the same institution were used to evaluate its performance through geometric and clinical evaluation. The geometric evaluation used the Dice similarity coefficient (DSC), 95% Hausdorff distance (HD95) and average surface distance (ASD) for automatic segmentation, and precision-recall (PR) and receiver operating characteristic (ROC) curves for automatic detection. In addition, the automatically segmented contours were manually reviewed and revised by oncologists, and the relative volume difference (RVD) between the automatic and the manually revised contours was calculated to evaluate the model's clinical value.

Materials and methods

The ethics committee of The First Hospital of Tsinghua University (TUFH) approved the study with the requirement to obtain written informed consent (Approval No. Clinical Research 2022–44). The study was performed in compliance with the Declaration of Helsinki.

Study design

We retrospectively collected MRI sequences of 86 patients with histologically proven brain metastases from the Department of Radiation Oncology of The First Hospital of Tsinghua University (TUFH). Patients were excluded if their MRI did not meet the imaging requirements, if they had undergone surgery, or if they had a primary intracranial tumor. The remaining 62 eligible patients, with delineated gross tumor volumes (GTVs) of brain metastases, were randomly split into two cohorts to develop the auto-segmentation model: 49 patients formed the training set and 13 patients formed the test set used for hyperparameter optimization during training. We further collected 96 eligible patients with BMs from TUFH, segmented them automatically with the trained model, and evaluated its segmentation performance with geometric metrics16 and its detection performance against manual contouring17. Finally, the automatically segmented contours were manually reviewed and revised by oncologists, and the RVD between the automatic and the manually revised contours was calculated to evaluate clinical value18. The study design is shown in Fig. 1.

Study design and workflow for the development and evaluation of the BUC-Net model for automatic segmentation of BMs using MRI data. The process includes patient selection, dataset splitting into training, validation, and test sets, manual contouring by radiation oncologists, and performance evaluation using geometric and clinical metrics.

Patient characteristics

All patients were scanned with T1-weighted mDIXON (T1W-mDIXON) and T1-weighted turbo field echo (T1W-TFE) sequences, with an image size of \(\left(299\sim 364\right)\times 640\times 640\) voxels and a voxel spacing of \(\left[0.5, 0.36, 0.36\right]\) mm. For the 62 patients used for model development, two radiation oncologists (C. F. and D. L., both with more than 10 years of experience in radiotherapy) manually delineated the ground-truth GTVs by consensus according to RTOG guidelines; a third radiologist (Z. D., with about 15 years of experience in the radiotherapy of head and neck tumors) reviewed the manual contours and was consulted in cases of disagreement. The automatic contours of the 96 evaluation patients were reviewed and revised by an expert oncologist (R. Z., with more than 20 years of experience) specializing in radiation oncology of head and neck tumors. Patient characteristics are reported in Fig. 2.

Manual contours of BMs in a T1W-TFE sequence: axial slice (A), sagittal slice (B), coronal slice (C) and 3D rendering of the BM contours (D). Distribution of the number of BMs per patient (E), and of BM diameter (F) and volume (G) in the MRI of the 62 patients.

Automatic segmentation framework

3D U-Net-based networks have shown good performance in medical image segmentation, but they are not suitable for every segmentation task. In this work, a cascade architecture based on 3D U-Net (3D U-Net Cascade) was adopted to improve the segmentation accuracy for small objects19; in addition, a bottleneck module was used in our modified network to reduce the number of model parameters and improve segmentation efficiency for 3D medical images. The resulting 3D BUC-Net (Fig. 3), which combines U-Net Cascade with the bottleneck module, was designed to delineate the GTVs of BMs efficiently and accurately20,21,22.

Schematic overview of the BUC-Net architecture for automatic segmentation of brain metastases. The network employs a two-stage cascade strategy: the first stage generates a coarse segmentation map using a Bottle U-Net, and the second stage refines the segmentation using both the raw MRI and the coarse segmentation map as inputs.

In BUC-Net, patches of \(32\times 320\times 320\) voxels are cropped with a sliding window from the skull-stripped MRI. In the first stage, each patch is passed through a 3D Bottle U-Net, which predicts a coarse segmentation map. In the second stage, this coarse map is fed together with the raw image into a second 3D Bottle U-Net, which has more network parameters and produces a more accurate segmentation map. The two-stage cascaded network is trained end to end.
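The data flow of the two-stage cascade can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the authors' implementation: `toy_stage1` and `toy_stage2` are hypothetical stand-ins for the two 3D Bottle U-Nets, and the coarse map is passed to the second stage as class probabilities (an assumption; unlike a hard argmax label map, probabilities keep the pipeline differentiable for end-to-end training).

```python
import numpy as np


def cascade_forward(image, stage1, stage2):
    """Two-stage cascade: stage 2 sees the raw patch plus stage 1's coarse map."""
    x1 = image[np.newaxis]                     # (1, D, H, W): raw image channel
    coarse = stage1(x1)                        # (C, D, H, W): coarse class probabilities
    x2 = np.concatenate([x1, coarse], axis=0)  # (1 + C, D, H, W): image + coarse map
    refined = stage2(x2)                       # (C, D, H, W): refined class probabilities
    return coarse, refined


# Hypothetical toy stand-ins for the two networks (thresholding, not learned models).
def toy_stage1(x):
    fg = (x[0] > 0.5).astype(float)
    return np.stack([1.0 - fg, fg])


def toy_stage2(x):
    assert x.shape[0] == 3, "expected 1 image channel + 2 coarse-map channels"
    return x[1:]  # simply passes the coarse map through


patch = np.random.default_rng(0).random((4, 8, 8))  # toy patch instead of 32 x 320 x 320
coarse, refined = cascade_forward(patch, toy_stage1, toy_stage2)
```

In a real model, the only structural requirement this illustrates is that the second network's first convolution accepts 1 + C input channels instead of 1.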

The 3D Bottle U-Net (Fig. 4) in BUC-Net consists of 5 encoders and 5 decoders, each containing a bottleneck module. The encoder path has [16, 32, 64, 128, 256] channels across five down-sampling levels, and the decoder path has [256, 128, 64, 32, 16] channels across five up-sampling levels. Convolutional kernels are 3 × 3 × 3. In each encoder, 2 × 2 × 2 max pooling generates the down-sampled feature map23; conversely, in each decoder, a 2 × 2 × 2 strided transposed convolution (deconvolution) kernel generates the up-sampled feature map. In each encoder and decoder, we replaced the ReLU activation and batch normalization common in popular deep learning models with the LeakyReLU activation (negative slope \(1\times {10}^{-2}\)) and instance normalization, with a dropout of 0.5. The decoder layers are concatenated with the corresponding encoder layers through skip connections so that the decoders can use the fine-grained details learned in the encoders. The bottleneck module consists of three convolutional layers. The first applies a 1 × 1 × 1 convolution to reduce the number of feature-map channels; the second performs a spatial convolution with the same kernel size as a conventional convolutional layer; and the third applies a 1 × 1 × 1 convolution to restore the number of channels to its original size. This design substantially reduces the number of trainable parameters while preserving segmentation performance.
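A quick parameter count illustrates where the bottleneck module's savings come from. The sketch below assumes a channel-reduction factor of 4 and bias-free convolutions (neither is stated in the text) and ignores normalization parameters; the function names are hypothetical.

```python
def conv3d_params(c_in, c_out, k, bias=False):
    """Weights of a 3D convolution with a k x k x k kernel."""
    return c_in * c_out * k ** 3 + (c_out if bias else 0)


def bottleneck_params(channels, reduction):
    """1x1x1 reduce -> 3x3x3 spatial -> 1x1x1 restore (reduction factor is an assumption)."""
    mid = channels // reduction
    return (conv3d_params(channels, mid, 1)
            + conv3d_params(mid, mid, 3)
            + conv3d_params(mid, channels, 1))


plain = conv3d_params(256, 256, 3)   # one conventional 3x3x3 conv at 256 channels
bottle = bottleneck_params(256, 4)   # bottleneck block at the same width
print(plain, bottle, round(plain / bottle, 1))  # 1769472 143360 12.3
```

Under these assumptions, the bottleneck needs roughly 12 times fewer weights than a single plain 3 × 3 × 3 convolution at 256 channels; the actual saving in BUC-Net depends on its reduction factor.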

Detailed structure of the 3D Bottle U-Net used within BUC-Net. Each encoder block includes a bottleneck module with a 1 × 1 × 1 convolution for channel reduction, a 3 × 3 × 3 convolution for spatial feature extraction, and a 1 × 1 × 1 convolution for channel restoration. Instance normalization and LeakyReLU activation are applied after each convolutional layer.

Image processing

The skull was stripped from all MRI scans using a physician workstation (uPWS, United Imaging Healthcare Co., Ltd., UIH, Shanghai, China). To optimize the network input and reduce the computational load, all MRI images were cropped to the region with nonzero values. To help the network learn spatial semantics, all MRI sequences were resampled to the median voxel spacing of the dataset, using third-order spline interpolation for the images and nearest-neighbor interpolation for the corresponding contours24. All images were then normalized with per-patient Z-score normalization25. Data augmentation, including random flipping, random zooming, random elastic deformation, gamma adjustment and mirroring, was applied on the fly during training to increase data diversity and counter the overfitting caused by the limited data26.
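The cropping and normalization steps can be sketched with NumPy alone. This is a minimal sketch under assumptions: the volume is already skull-stripped (background exactly zero), and the Z-score statistics are taken over all voxels of the cropped volume, which is one reading of "simple Z-score normalization". Resampling is omitted; one option would be `scipy.ndimage.zoom` with `order=3` for images and `order=0` for contours.

```python
import numpy as np


def crop_to_nonzero(image, label=None):
    """Crop a skull-stripped volume (and optional label map) to its nonzero bounding box."""
    nz = np.argwhere(image != 0)
    if nz.size == 0:
        raise ValueError("volume contains no nonzero voxels")
    lo, hi = nz.min(axis=0), nz.max(axis=0) + 1
    box = tuple(slice(a, b) for a, b in zip(lo, hi))
    return image[box], (None if label is None else label[box]), box


def zscore_normalize(image, eps=1e-8):
    """Per-patient Z-score normalization: zero mean, unit standard deviation."""
    image = image.astype(np.float64)
    return (image - image.mean()) / (image.std() + eps)


# Toy example: a 10 x 12 x 14 volume whose nonzero "brain" fills a 4 x 5 x 6 box.
rng = np.random.default_rng(0)
volume = np.zeros((10, 12, 14))
volume[3:7, 2:7, 5:11] = rng.uniform(100.0, 500.0, size=(4, 5, 6))
cropped, _, box = crop_to_nonzero(volume)
normalized = zscore_normalize(cropped)
```

The returned `box` can be kept so that predictions on the cropped volume are pasted back into the original image grid.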

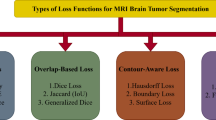

Loss function

In the first stage, the segmentation targets occupy only a small fraction of each image, so the ratio of target to non-target voxels in the sampled training patches is highly unbalanced. To improve the detection of small targets, we combined the Dice loss \({L}_{dice}\) with the cross-entropy loss \({L}_{CE}\) as the first-stage loss function \({L}_{1}\)24:

\[{L}_{1}=\alpha {L}_{dice}+\beta {L}_{CE}\]

where the weights \(\alpha\) and \(\beta\) were set to 0.4 and 0.6, respectively. The two terms are

\[{L}_{dice}=1-\frac{2}{C}\sum_{k=1}^{C}\frac{\sum_{i=1}^{N}{G}_{i}^{k}{P}_{i}^{k}}{\sum_{i=1}^{N}{G}_{i}^{k}+\sum_{i=1}^{N}{P}_{i}^{k}},\qquad {L}_{CE}=-\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{C}{G}_{i}^{k}\log {P}_{i}^{k}\]

where \(C\) is the number of classes, \(N\) is the number of voxels in each training patch, and \({G}_{i}^{k}\) and \({P}_{i}^{k}\) are the ground-truth label and the predicted probability of the \({i}^{th}\) voxel for the \({k}^{th}\) class, respectively.
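The first-stage loss can be written out in NumPy as a minimal sketch. It assumes that \(\alpha\) weights the Dice term and \(\beta\) the cross-entropy term (the text gives only the values 0.4 and 0.6), that predictions and one-hot labels are flattened to arrays of shape (C, N), and it adds small `eps` constants for numerical stability. For training, the same expressions would be written in a framework with automatic differentiation.

```python
import numpy as np


def soft_dice_loss(probs, onehot, eps=1e-6):
    """L_dice = 1 - (2/C) * sum_k [sum_i G*P / (sum_i G + sum_i P)]; arrays are (C, N)."""
    inter = (probs * onehot).sum(axis=1)
    denom = probs.sum(axis=1) + onehot.sum(axis=1)
    return float(1.0 - ((2.0 * inter + eps) / (denom + eps)).mean())


def cross_entropy_loss(probs, onehot, eps=1e-7):
    """L_CE = -(1/N) * sum_i sum_k G * log P, with P clipped to avoid log(0)."""
    n_voxels = probs.shape[1]
    return float(-(onehot * np.log(np.clip(probs, eps, 1.0))).sum() / n_voxels)


def stage1_loss(probs, onehot, alpha=0.4, beta=0.6):
    """L_1 = alpha * L_dice + beta * L_CE (assignment of alpha/beta is an assumption)."""
    return alpha * soft_dice_loss(probs, onehot) + beta * cross_entropy_loss(probs, onehot)


# Toy check: 2 classes x 4 voxels, two voxels per class, uninformative prediction.
onehot = np.array([[1, 1, 0, 0], [0, 0, 1, 1]], dtype=float)
uniform = np.full_like(onehot, 0.5)
print(round(stage1_loss(uniform, onehot), 4))  # 0.4 * 0.5 + 0.6 * ln 2 = 0.6159
```

A perfect prediction (`probs` equal to `onehot`) drives both terms, and therefore \({L}_{1}\), to zero.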

In the second stage, the loss function \({L}_{2}\) is the weighted sum of the Dice loss \({L}_{dice}\) and the focal loss \({L}_{focal}\):

\[{L}_{2}={L}_{dice}+{L}_{focal},\qquad {L}_{focal}=-\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{C}{\mu }^{k}{G}_{i}^{k}{\left(1-{P}_{i}^{k}\right)}^{\gamma }\log {P}_{i}^{k}\]

where \({\mu }^{k}\) is the weight of the \({k}^{th}\) class, which is inversely proportional to the average size of that class. \({\left(1-{P}_{i}^{k}\right)}^{\gamma }\) is the modulating factor, which down-weights easy samples (voxels) whose prediction confidence \({P}_{i}^{k}\) is close to 1. In our experiments, \(\gamma\) was set to 2. The combination of \({L}_{1}\) and \({L}_{2}\) is used as the total loss for training the whole two-stage network.
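The second-stage loss can be sketched the same way. Assumptions, none of which the text fixes: the Dice and focal terms are simply added, the class weights \({\mu }^{k}\) are estimated from voxel counts in the given batch and normalized to sum to 1, and `inverse_size_weights` is a hypothetical helper.

```python
import numpy as np


def soft_dice_loss(probs, onehot, eps=1e-6):
    """Multi-class soft Dice loss on (C, N) arrays."""
    inter = (probs * onehot).sum(axis=1)
    denom = probs.sum(axis=1) + onehot.sum(axis=1)
    return float(1.0 - ((2.0 * inter + eps) / (denom + eps)).mean())


def inverse_size_weights(onehot):
    """Hypothetical mu^k: proportional to 1 / (voxel count of class k), normalized to sum to 1."""
    sizes = np.maximum(onehot.sum(axis=1).astype(float), 1.0)
    w = 1.0 / sizes
    return w / w.sum()


def focal_loss(probs, onehot, mu, gamma=2.0, eps=1e-7):
    """L_focal = -(1/N) * sum_i sum_k mu^k * G * (1 - P)^gamma * log P."""
    p = np.clip(probs, eps, 1.0)
    modulating = (1.0 - p) ** gamma  # down-weights confident (easy) voxels
    weights = np.asarray(mu, dtype=float)[:, None]
    return float(-(weights * onehot * modulating * np.log(p)).sum() / probs.shape[1])


def stage2_loss(probs, onehot, mu, gamma=2.0):
    """L_2 = L_dice + L_focal (unweighted sum is an assumption)."""
    return soft_dice_loss(probs, onehot) + focal_loss(probs, onehot, mu, gamma)


onehot = np.array([[1, 1, 1, 0], [0, 0, 0, 1]], dtype=float)  # class 1 is rare
uniform = np.full_like(onehot, 0.5)
mu = inverse_size_weights(onehot)  # the rare class gets the larger weight
```

With \(\gamma = 0\) and unit weights, the focal term reduces to ordinary cross-entropy; with \(\gamma = 2\), a voxel predicted at 0.5 contributes only a quarter of its cross-entropy.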

Experiment and evaluation

Model implementation

Because of the limited GPU memory, we cropped image patches of \(32\times 320\times 320\) voxels from the original MRI with a sliding window as the model input. The models were trained on an NVIDIA Tesla A100 with 40 GB of memory, with a batch size of 2, the RMSprop optimizer, an initial learning rate of 0.001 and a maximum of 150 training epochs. The patch-wise segmentation results were fused into full-resolution results by Gaussian weighting, and the full-resolution result of each class was post-processed by keeping the largest connected component.
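Sliding-window inference with Gaussian fusion can be sketched as follows: each patch prediction is multiplied by a Gaussian that peaks at the patch centre, accumulated, and divided by the accumulated weights, so voxels near patch borders (where context is poorest) count less. Assumptions: 50% overlap and a Gaussian with \(\sigma\) equal to 1/8 of each patch edge (a common choice, not stated in the text); `toy_predictor` is a hypothetical stand-in for the trained network. Largest-connected-component post-processing is omitted.

```python
import itertools

import numpy as np


def gaussian_importance_map(patch_size, sigma_scale=0.125):
    """Separable Gaussian weight peaking at the patch centre (sigma = sigma_scale * edge)."""
    weight = np.ones(patch_size)
    for axis, size in enumerate(patch_size):
        coords = np.arange(size) - (size - 1) / 2.0
        g1d = np.exp(-0.5 * (coords / (sigma_scale * size)) ** 2)
        shape = [1] * len(patch_size)
        shape[axis] = size
        weight = weight * g1d.reshape(shape)
    return np.maximum(weight / weight.max(), 1e-6)  # keep border weights strictly positive


def window_starts(dim, patch, step):
    starts = list(range(0, dim - patch + 1, step))
    if starts[-1] != dim - patch:
        starts.append(dim - patch)  # make the last window touch the border
    return starts


def sliding_window_predict(volume, predict_fn, patch_size, num_classes, overlap=0.5):
    if any(v < p for v, p in zip(volume.shape, patch_size)):
        raise ValueError("pad the volume to at least the patch size first")
    weight = gaussian_importance_map(patch_size)
    steps = [max(1, int(p * (1.0 - overlap))) for p in patch_size]
    starts = [window_starts(v, p, s) for v, p, s in zip(volume.shape, patch_size, steps)]
    acc = np.zeros((num_classes,) + volume.shape)
    norm = np.zeros(volume.shape)
    for corner in itertools.product(*starts):
        box = tuple(slice(c, c + p) for c, p in zip(corner, patch_size))
        acc[(slice(None),) + box] += predict_fn(volume[box]) * weight
        norm[box] += weight
    return acc / norm  # Gaussian-weighted average of overlapping patch predictions


def toy_predictor(patch):
    fg = (patch > 0).astype(float)
    return np.stack([1.0 - fg, fg])


vol = np.random.default_rng(1).uniform(-1.0, 1.0, size=(8, 20, 20))
fused = sliding_window_predict(vol, toy_predictor, patch_size=(4, 10, 10), num_classes=2)
```

In the paper's setting, `patch_size` would be `(32, 320, 320)` and `predict_fn` the trained BUC-Net.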

Evaluation metrics

We calculated the DSC, HD95 and ASD for the GTV contours of BMs. The DSC is defined as follows:

\[DSC=\frac{2\left|P\cap G\right|}{\left|P\right|+\left|G\right|}\]

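where \(P\) is the automatically segmented contour and \(G\) is the ground-truth contour. DSC measures the spatial overlap between the automatically segmented contour and the ground-truth contour and thus indicates their degree of agreement. The HD is defined as follows:

\[HD\left(P,G\right)=\max\left\{\max_{p\in P}\min_{g\in G}{\Vert p-g\Vert }_{2},\ \max_{g\in G}\min_{p\in P}{\Vert g-p\Vert }_{2}\right\}\]

where the distances are computed between the boundary points of \(P\) and \(G\).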

DSC is more sensitive to the interior filling of the segmented contour, whereas HD is more sensitive to its boundary. HD95 is similar to the maximum HD but uses the 95th percentile of the distances between the boundary points of \(P\) and \(G\), which eliminates the influence of a very small subset of outliers. The ASD is defined as follows:

\[ASD\left(P,G\right)=\frac{1}{\left|S\left(P\right)\right|+\left|S\left(G\right)\right|}\left(\sum_{p\in S\left(P\right)}\min_{g\in S\left(G\right)}{\Vert p-g\Vert }_{2}+\sum_{g\in S\left(G\right)}\min_{p\in S\left(P\right)}{\Vert g-p\Vert }_{2}\right)\]

where \(S\left(P\right)\) and \(S\left(G\right)\) denote the sets of surface voxels of the automatic segmentation and the ground truth, respectively. An ASD of 0 indicates perfect agreement between the two segmentations.

The RVD measures the relative volume difference between the automatic contours and the manually revised contours and is defined as follows:

\[RVD=\frac{\left|V\left(P\right)-V\left(G\right)\right|}{V\left(G\right)}\]

where \(V(P)\) is the volume of the automatic contour and \(V(G)\) is the volume of the manually revised contour.
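The four metrics can be computed from binary masks with a short NumPy sketch. Assumptions: surface voxels are foreground voxels with at least one background 6-neighbour; HD95 is the larger of the two directed 95th percentiles (one common convention; the text does not pin it down); RVD uses the absolute volume difference. Distances are brute-force pairwise, which is fine for small masks; full 640 × 640 volumes would call for distance transforms such as `scipy.ndimage.distance_transform_edt`.

```python
import numpy as np


def surface_voxels(mask):
    """Foreground voxels that have at least one background 6-neighbour."""
    m = np.pad(mask.astype(bool), 1)
    core = m[1:-1, 1:-1, 1:-1]
    interior = core.copy()
    for axis in range(3):
        for shift in (-1, 1):
            interior &= np.roll(m, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return core & ~interior


def _surface_distances(p, g, spacing):
    """Directed nearest-surface distances P->G and G->P in physical units."""
    if not p.any() or not g.any():
        raise ValueError("both masks must be non-empty")
    sp = np.asarray(spacing, dtype=float)
    pts_p = np.argwhere(surface_voxels(p)) * sp
    pts_g = np.argwhere(surface_voxels(g)) * sp
    dist = np.sqrt(((pts_p[:, None, :] - pts_g[None, :, :]) ** 2).sum(axis=-1))
    return dist.min(axis=1), dist.min(axis=0)


def dsc(p, g):
    p, g = p.astype(bool), g.astype(bool)
    return 2.0 * np.logical_and(p, g).sum() / (p.sum() + g.sum())


def hd95(p, g, spacing=(1.0, 1.0, 1.0)):
    d_pg, d_gp = _surface_distances(p, g, spacing)
    return max(np.percentile(d_pg, 95), np.percentile(d_gp, 95))


def asd(p, g, spacing=(1.0, 1.0, 1.0)):
    d_pg, d_gp = _surface_distances(p, g, spacing)
    return (d_pg.sum() + d_gp.sum()) / (d_pg.size + d_gp.size)


def rvd(p, g):
    vp, vg = p.astype(bool).sum(), g.astype(bool).sum()
    return abs(vp - vg) / vg  # voxel volume cancels in the ratio


# Toy example: two 4x4x4 cubes offset by one voxel along the first axis.
P = np.zeros((10, 10, 10), dtype=bool)
P[2:6, 2:6, 2:6] = True
G = np.zeros_like(P)
G[3:7, 2:6, 2:6] = True
```

For this pair, the overlap is 48 of 64 voxels per cube (DSC 0.75), every surface distance is 0 or 1 voxel (HD95 of 1), and the volumes are equal (RVD 0). Passing the actual voxel spacing, e.g. `(0.5, 0.36, 0.36)`, turns HD95 and ASD into millimetres.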

For BMs, identifying small tumors is crucial. We therefore assess not only the whole GTV volume but also the segmentation performance for each individual tumor. Because small tumors are easily overlooked or misidentified, we use PR and ROC curves and their area under the curve (AUC) to evaluate the model's tumor detection performance.

The ROC curve measures the performance of a binary classifier across threshold settings: it plots the true positive rate (TPR) against the false positive rate (FPR) as the probability threshold varies. The area under the ROC curve ranges from 0 to 1, with 0.5 indicating performance no better than random guessing and 1 indicating perfect performance. The PR curve illustrates the trade-off between precision and recall of a binary classifier across threshold settings. Precision is the ratio of true positive predictions to all positive predictions, and recall (also known as sensitivity) is the ratio of true positive predictions to all actual positive cases in the dataset. The PR curve is obtained by varying the decision threshold and computing the precision and recall at each threshold, giving a visual summary of classifier performance across all thresholds. For both curves, an AUC closer to 1 indicates a better-performing model.
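Both curve areas can be computed from lesion-level scores with a short NumPy sketch. Assumptions: each candidate lesion has a confidence score (how the paper derives lesion scores is not stated), tied scores form a single threshold, the ROC area uses the trapezoidal rule, and the PR area is computed as average precision (the step-wise sum used by scikit-learn), since the text does not say which PR integration was used.

```python
import numpy as np


def _counts_at_thresholds(scores, labels):
    """Cumulative TP/FP counts when thresholding at each distinct score, highest first."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    order = np.argsort(-scores, kind="mergesort")
    s, y = scores[order], labels[order]
    last = np.r_[np.nonzero(np.diff(s))[0], s.size - 1]  # last index of each tied group
    tps = np.cumsum(y)[last]
    fps = (last + 1) - tps
    if tps[-1] == 0 or fps[-1] == 0:
        raise ValueError("need at least one positive and one negative lesion")
    return tps, fps


def roc_auc(scores, labels):
    tps, fps = _counts_at_thresholds(scores, labels)
    tpr = np.r_[0.0, tps / tps[-1]]
    fpr = np.r_[0.0, fps / fps[-1]]
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))  # trapezoidal rule


def average_precision(scores, labels):
    tps, fps = _counts_at_thresholds(scores, labels)
    precision = tps / (tps + fps)
    recall = np.r_[0.0, tps / tps[-1]]
    return float(np.sum(np.diff(recall) * precision))  # step-wise PR area


# Toy lesion scores (illustrative, not study data): 1 = true metastasis, 0 = false candidate.
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_labels = [0, 0, 1, 1]
```

For the toy lesions, the ROC area is 0.75 and the average precision is about 0.833; a perfectly separating score gives 1.0 for both.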

Statistical analysis

Results are reported as mean ± standard deviation. Paired two-sided t-tests were used to compare the mean DSC, HD95 and ASD between the models (U-Net, U-Net Cascade and BUC-Net), with \(P<0.05\) considered statistically significant. All analyses were performed with SPSS statistical software (IBM SPSS, version 26.0; New York, USA).
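The statistic behind the paired t-test can be illustrated with the Python standard library: it is the mean of the per-patient differences divided by their standard error. This sketch returns only the t statistic and degrees of freedom; the two-sided p-value then follows from the t distribution with n − 1 degrees of freedom (for example via `scipy.stats.ttest_rel`, which reports the statistic for `a - b` and therefore has the opposite sign to this function). The input values are toy numbers, not study data.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Paired t statistic for per-patient metric values of two models; returns (t, df)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [y - x for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Toy per-patient DSC values for two hypothetical models (illustrative only).
model_a = [0.80, 0.85, 0.90]
model_b = [0.82, 0.88, 0.91]
t, df = paired_t_statistic(model_a, model_b)
```

Here the differences are 0.02, 0.03 and 0.01, so t = 0.02 / (0.01 / √3) ≈ 3.46 with 2 degrees of freedom.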

Results

The proposed model was evaluated on 96 new patients, using both geometric evaluation of automatic segmentation and detection and clinical evaluation.

The performance of automatic segmentation

Table 1 summarizes the test results of the three models (U-Net, U-Net Cascade and BUC-Net) for automatic segmentation of multiple GTVs in the 96 patients. The table has three sections: DSC, HD95 and ASD. Each section reports the metric for segmenting all brain metastases as one target (Binary-class) and as multiple separate targets (Multi-class), as well as the metric for BMs in different diameter ranges.

In the DSC section, the Binary-class DSC values were 0.891 ± 0.021 for U-Net, 0.894 ± 0.012 for U-Net Cascade and 0.912 ± 0.022 for BUC-Net. The Multi-class DSC values were 0.757 ± 0.016 for U-Net, 0.782 ± 0.011 for U-Net Cascade and 0.797 ± 0.015 for BUC-Net. BUC-Net was significantly better than U-Net and U-Net Cascade. The DSC varied across diameter ranges; in general, BUC-Net was significantly superior to U-Net and U-Net Cascade except for BMs with diameters of 12 mm to 15 mm.

In the HD95 section, the Binary-class values were 3.812 ± 1.070 mm for U-Net, 3.441 ± 1.378 mm for U-Net Cascade and 0.901 ± 0.110 mm for BUC-Net. The Multi-class HD95 values were 1.348 ± 0.091 mm for U-Net, 1.171 ± 0.072 mm for U-Net Cascade and 0.922 ± 0.041 mm for BUC-Net. BUC-Net was significantly better than U-Net and U-Net Cascade. Across GTV diameter ranges, BUC-Net generally achieved the best results for most ranges.

In the ASD section, the Binary-class ASD values were 0.918 ± 0.207 mm for U-Net, 0.964 ± 0.417 mm for U-Net Cascade and 0.332 ± 0.142 mm for BUC-Net; the Multi-class ASD values were 0.326 ± 0.021 mm for U-Net, 0.289 ± 0.015 mm for U-Net Cascade and 0.210 ± 0.017 mm for BUC-Net. BUC-Net was significantly superior to U-Net and U-Net Cascade, except for BMs with diameters of 15 mm to 18 mm.

Overall, on the independent evaluation dataset, BUC-Net outperformed U-Net and U-Net Cascade to varying degrees in DSC, HD95 and ASD for both Binary-class and Multi-class segmentation.

The performance of automatic detection

Lesion-based PR and ROC curves were used to assess BM detection performance, and we compared the AUC of each curve across the three models. As shown in Fig. 5, BUC-Net obtained the best AUC for both the PR curve (0.975) and the ROC curve (0.888). Notably, BUC-Net also outperformed the other methods in sensitivity and specificity, two key aspects of detection performance: the FPR of U-Net, U-Net Cascade and BUC-Net was 0.177, 0.068 and 0.054, and the false negative rate (FNR) was 0.056, 0.016 and 0.015, respectively.

The clinical evaluation of automatic segmentation

The BMs of the 96 patients segmented automatically by the proposed model were manually reviewed and revised by oncologists according to clinical requirements. The RVD between the automatic contours and the manually revised contours was calculated to evaluate performance in clinical practice; the results are shown in Fig. 6. The RVD of BMs segmented by BUC-Net was significantly smaller than that of U-Net across diameter ranges, and smaller to varying degrees than that of U-Net Cascade for the smaller BMs. Compared with 3 h to 6 h for conventional manual segmentation, BUC-Net segmented the BMs of one patient automatically in about 0.5 min, and the oncologist revised them within 10 min, which substantially reduces the workload of oncologists and improves the efficiency of radiation therapy.

Visualization of automatic segmentation

Fig. 7 compares the automatic and manual segmentations for an example BM patient. Most contours segmented by BUC-Net agree well with the manual segmentation, and they match the BMs in the MRI better than the manual segmentation does. For some regions in this patient, it is difficult to tell whether they are small BMs or blood vessels (Fig. 7C,F,G).

Examples of automatic segmentation of BMs by BUC-Net: comparison of BM contours (A–L) between the manual segmentation, GTV_gt (red), and the automatic segmentation, GTV_pred (blue); 3D visualization of BM contours from the manual segmentation (M) and the automatic segmentation (N). GTVs_pred_group1, 2, 3, 4, 5, 6, 7, 8 are the contours of BMs with diameter ranges of < 3 mm, 3–6 mm, 6–9 mm, 9–12 mm, 12–15 mm, 15–18 mm, 18–21 mm and > 21 mm, respectively.

Discussion

This paper presents the development and evaluation of a deep learning-based method for automatic segmentation of BMs. BMs are the most common intracranial tumors and substantially affect the prognosis and quality of life of patients27. Although stereotactic radiotherapy is an effective treatment for BMs6, it requires oncologists to identify BMs manually, which is time-consuming and limited by the subjective experience of individual oncologists. An automated method to segment BMs is therefore important. Deep learning approaches have been applied to automatic segmentation of BMs and have shown promising applications in radiation oncology28.

The results showed that the proposed BUC-Net achieved an average DSC of 0.912 ± 0.022, HD95 of 0.901 ± 0.110 mm and ASD of 0.332 ± 0.142 mm for BM segmentation in binary classification, and an average DSC of 0.797 ± 0.015, HD95 of 0.922 ± 0.041 mm and ASD of 0.210 ± 0.017 mm in multiple classification. The cascade strategy can improve segmentation performance, especially for small tumors, and the bottleneck module can reduce the number of model parameters and accelerate the algorithm. Moreover, the proposed model achieved an average AUC of 0.934 for the PR curve and 0.835 for the ROC curve in the evaluation of automatic detection, and showed high sensitivity and specificity in identifying BMs. The FPR was higher than the FNR for all methods because deep learning methods tend to produce scattered false-positive points. However, post-processing such as keeping only the maximum connected component could not be used to reduce the FPR. Finally, BUC-Net yielded the smallest RVD for BMs across diameter ranges in the clinical evaluation and therefore required the least revision time by oncologists in clinical practice.

The clinical evaluation showed that, with BUC-Net, segmentation and revision of the BMs of one patient took less than 10 min instead of 3–6 h with conventional manual segmentation, which substantially reduces oncologists' workload and improves the efficiency and accuracy of radiation therapy for patients with brain metastases. Our work shows that deep learning is promising for automatic segmentation of brain metastases on MRI, and the proposed model can be integrated into a radiotherapy workflow to substantially shorten BM segmentation time. Although the proposed U-Net-based method already achieved a DSC of 0.912 for binary and 0.797 for multi-class automatic segmentation on the 96 test patients, we believe these results can still be improved significantly.

Limitations

Our approach has three major limitations: the small size of the dataset29,30, the use of a model developed for brain lesions other than brain metastases31, and the lack of evaluation of clinical outcomes for treatment planning32. In addition, brain MRI (T1-weighted sequences) contains many blood vessels whose morphological characteristics are very similar to those of small BMs; consequently, some blood vessels were segmented as BMs by our model, which remains a challenging problem for automatic BM segmentation33. Finally, the study was retrospective: although the automatically segmented contours were manually reviewed and revised by oncologists, the impact of automatic segmentation on clinical outcomes or treatment planning was not assessed. We plan to address these limitations in future work, for example by using larger MRI datasets and multi-modal MRI to improve segmentation accuracy and generalizability, evaluating clinical outcomes for treatment planning, and, in particular, developing a deep learning model tailored to the specific problem of brain metastases34.

Data availability

The datasets generated and analyzed during the current study are not publicly available due to privacy of patients, but are available from the corresponding author on reasonable request.

References

Achrol, A. S. et al. Brain metastases. Nat. Rev. Dis. Primers 5(1), 5. https://doi.org/10.1038/s41572-018-0055-y (2019).

Sacks, P. & Rahman, M. Epidemiology of brain metastases. Neurosurg. Clin. N. Am. 31(4), 481–488. https://doi.org/10.1016/j.nec.2020.06.001 (2020).

Sinha, R., Sage, W. & Watts, C. The evolving clinical management of cerebral metastases. Eur. J. Surg. Oncol. 43(7), 1173–1185. https://doi.org/10.1016/j.ejso.2016.10.006 (2017).

Chamberlain, M. C., Baik, C. S., Gadi, V. K., Bhatia, S. & Chow, L. Q. Systemic therapy of brain metastases: Non-small cell lung cancer, breast cancer, and melanoma. Neuro Oncol. 19(1), i1–i24. https://doi.org/10.1093/neuonc/now197 (2017).

Nieder, C., Norum, J., Dalhaug, A., Aandahl, G. & Engljähringer, K. Radiotherapy versus best supportive care in patients with brain metastases and adverse prognostic factors. Clin. Exp. Metastasis 30, 723–729 (2013).

Lindquist, C. Gamma knife surgery for recurrent solitary metastasis of a cerebral hypernephroma: Case report. Neurosurgery 25(5), 802–804. https://doi.org/10.1097/00006123-198911000-00018 (1989).

Kocher, M., Ruge, M. I., Galldiks, N. & Lohmann, P. Applications of radiomics and machine learning for radiotherapy of malignant brain tumors. Strahlenther Onkol. 196(10), 856–867. https://doi.org/10.1007/s00066-020-01626-8 (2020).

Xue, J. et al. Deep learning-based detection and segmentation-assisted management of brain metastases. Neuro Oncol. 22(4), 505–514. https://doi.org/10.1093/neuonc/noz234 (2020).

Li, Z. et al. Low-grade glioma segmentation based on CNN with fully connected CRF. J. Healthc. Eng. 2017, 9283480. https://doi.org/10.1155/2017/9283480 (2017).

Huang, Y. et al. Deep learning for brain metastasis detection and segmentation in longitudinal MRI data. Med. Phys. 49(9), 5773–5786. https://doi.org/10.1002/mp.15863 (2022).

Bousabarah, K. et al. Deep convolutional neural networks for automated segmentation of brain metastases trained on clinical data. Radiat. Oncol. 15(1), 87. https://doi.org/10.1186/s13014-020-01514-6 (2020).

Alongi, P. et al. Radiomics analysis of brain [18F] FDG PET/CT to predict Alzheimer’s disease in patients with amyloid PET positivity: A preliminary report on the application of SPM cortical segmentation, pyradiomics and machine-learning analysis. Diagnostics 12(4), 933. https://doi.org/10.3390/diagnostics12040933 (2022).

Yoo, S. K. et al. Deep-learning-based automatic detection and segmentation of brain metastases with small volume for stereotactic ablative radiotherapy. Cancers 14(10), 2555. https://doi.org/10.3390/cancers14102555 (2022).

Xue, J. et al. Deep learning–based detection and segmentation-assisted management of brain metastases. Neuro Oncol. 22(4), 505–514. https://doi.org/10.1093/neuonc/noz234 (2020).

Ali, M. et al. Applications of artificial intelligence, deep learning, and machine learning to support the analysis of microscopic images of cells and tissues. J. Imaging. 11(2), 59. https://doi.org/10.3390/jimaging11020059 (2025).

Zhang, R. et al. Auto-segmentation of the hippocampus in multimodal image using artificial intelligence. Chin. J. Med. Phys. 39(3), 390–396. https://doi.org/10.3969/j.issn.1005-202X.2022.03.021 (2022).

Huang, Y. et al. Deep learning for brain metastasis detection and segmentation in longitudinal MRI data. Med. Phys. 49(9), 5773–5786. https://doi.org/10.1002/mp.15863 (2022).

Tian, S. et al. Transfer learning-based autosegmentation of primary tumor volumes of glioblastomas using preoperative MRI for radiotherapy treatment. Front. Oncol. 12, 856346. https://doi.org/10.3389/fonc.2022.856346 (2022). PMID: 35494067; PMCID: PMC9047780.

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional networks for biomedical image segmentation. in Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015. Lecture Notes in Computer Science, vol 9351, 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28.

Milletari, F., Navab, N. & Ahmadi, S.-A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. in International Conference on 3D Vision (3DV). IEEE, 2016, pp. 565–571.

Jiang, Z., Ding, C., Liu, M. & Tao, D. Two-stage cascaded U-Net: 1st place solution to BraTS challenge 2019 segmentation task. In: Crimi, A., Bakas, S. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2019. Lecture Notes in Computer Science, vol 11992. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-46640-4_22.

Wang, G., Li, W., Ourselin, S. & Vercauteren, T. Automatic brain tumor segmentation using cascaded anisotropic convolutional neural networks. in International MICCAI Brainlesion Workshop. Springer, 2017, pp. 178–190.

Ulyanov, D., Vedaldi, A. & Lempitsky, V. Instance normalization: The missing ingredient for fast stylization. arXiv:1607.08022 (2016).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods. 18(2), 203–211 (2021).

Gutman, D. A. et al. Somatic mutations associated with MRI-derived volumetric features in glioblastoma. Neuroradiology 57, 1227–1237 (2015).

Zhao, Y.-X., Zhang, Y.-M. & Liu, C.-L. Bag of tricks for 3D MRI brain tumor segmentation. in International MICCAI Brainlesion Workshop. Springer, 2019, pp. 210–220.

Kniep, H. C. et al. Radiomics of brain MRI: Utility in prediction of metastatic tumor type. Radiology 290(2), 479–487. https://doi.org/10.1148/radiol.2018180946 (2019).

Meyer, P., Noblet, V., Mazzara, C. & Lallement, A. Survey on deep learning for radiotherapy. Comput. Biol. Med. 98, 126–146 (2018).

Xue, J. et al. Deep learning-based detection and segmentation-assisted management of brain metastases. Neuro Oncol. 22(4), 505–514. https://doi.org/10.1093/neuonc/noz234 (2020).

Takao, H. et al. Deep-learning single-shot detector for automatic detection of brain metastases with the combined use of contrast-enhanced and non-enhanced computed tomography images. Eur. J. Radiol. 144, 110015. https://doi.org/10.1016/j.ejrad.2021.110015 (2021).

Charron, O. et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput. Biol. Med. 95, 43–54. https://doi.org/10.1016/j.compbiomed.2018.02.004 (2018).

Ye, X. et al. Comprehensive and clinically accurate head and neck cancer organs-at-risk delineation on a multi-institutional study. Nat. Commun. 13(1), 6137. https://doi.org/10.1038/s41467-022-33178-z (2022).

Hesamian, M. H., Jia, W., He, X. & Kennedy, P. Deep learning techniques for medical image segmentation: Achievements and challenges. J Digit Imaging. 32(4), 582–596. https://doi.org/10.1007/s10278-019-00227-x (2019).

Shi, F. et al. Deep learning empowered volume delineation of whole-body organs-at-risk for accelerated radiotherapy. Nat. Commun. 13(1), 6566. https://doi.org/10.1038/s41467-022-34257-x (2022).

Funding

This work was supported in part by Leadership Foundation of The First Hospital of Tsinghua University (No. 2022-LH-03).

Author information

Contributions

Conception and design, acquisition of data: R.Z., P.G., A.J., C.C., Z.P. Implementation: R.Z., Y.L., P.P. Analysis and interpretation of the data: Y.L., R.Z., P.P. Drafting the article or critical revision: R.Z., Y.L., P.P. Final approval of the manuscript: R.Z., W.Z., C.C.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, R., Liu, Y., Li, M. et al. Automated segmentation of brain metastases in magnetic resonance imaging using deep learning in radiotherapy. Sci Rep 15, 32893 (2025). https://doi.org/10.1038/s41598-025-15630-4

DOI: https://doi.org/10.1038/s41598-025-15630-4