Abstract

AI has advanced the potential for personalized health and early prediction of diseases. Unfortunately, a significant limitation of many deep learning models is that they are not interpretable, which restricts their clinical utility and undermines clinicians' trust. Most existing methods report only generic or post-hoc explanations, and few support accurate, patient-specific explanations. Furthermore, existing approaches are often restricted to static, limited-domain datasets and do not generalize across healthcare scenarios. To tackle these problems, we propose PersonalCareNet, a new deep learning approach for personalized health monitoring based on the MIMIC-III clinical dataset. Our system combines convolutional neural networks with attention (CHARMS) and employs SHAP (Shapley Additive exPlanations) to obtain global and patient-specific model interpretability. We believe the model, by leveraging many clinical features, offers clinically interpretable insights into the contribution of each feature while supporting real-time risk prediction, thus increasing transparency and fostering clinical trust in the model. An extensive evaluation shows that PersonalCareNet achieves 97.86% accuracy, exceeding multiple notable state-of-the-art (SoTA) healthcare risk prediction models. The framework offers explainability at the local level (using diagnostic visualizations such as force plots, SHAP summary plots, and confusion matrix-based diagnostics) and at the global level (through feature importance plots and Top-N list visualizations). Our quantitative results demonstrate that much of the improvement can be achieved without paying a high price for interpretability.
We have proposed a cost-effective and systematic approach as an AI-based platform that is scalable, accurate, transparent, and interpretable for critical care and personalized diagnostics. PersonalCareNet, by filling the void between performance and interpretability, promises a significant advancement in the field of reliable and clinically validated predictive healthcare AI. The design allows for additional extension to multiple data types and real-time deployment at the edge, creating a broader impact and adaptability.

Introduction

Artificial intelligence (AI) in healthcare is changing clinical decision-making through predictive analytics, personalized diagnostics, and real-time monitoring. One of the most notable innovations is early diagnosis and risk stratification of diseases through deep learning on electronic health records (EHR). However, many of these models remain black boxes despite being accurate, which raises concerns about trust, accountability, and clinical adoption. In recent years, explainable AI (XAI) has shown great potential for improving transparency, enabling healthcare professionals to understand and validate the rationale behind predictive models1,2. Studies have combined XAI with machine learning for disease classification, risk prediction, and patient triage3,4,5. However, several existing solutions are restricted to particular conditions, do not generalize across datasets, or do not give individual explanations for clinical decisions.

To overcome these limitations, we present PersonalCareNet, an explainable artificial intelligence (XAI)-based personalized health monitoring framework built on a novel deep learning architecture. PersonalCareNet integrates CNNs with an attention mechanism and SHAP-based interpretability, and is built on the MIMIC-III dataset for high prediction accuracy and deep clinical insight. Although significant strides have been made in predictive healthcare, a large gap remains between the predictive ability of black-box models and the interpretability needed for clinical acceptance. In a realistic healthcare environment, it is crucial to provide not only a forecast of the patient outcome but also the reasons for that forecast, which in turn support clinical trust and decision-making. Patient-level risk assessment (PLRA) is an integral part of predictive healthcare and comprises two tasks: (i) predicting the probability of a clinical event and (ii) characterizing the significant factors that explain the predicted risk. Closing the gap between predictive performance and clinical applicability is at the heart of the proposed work.

The primary objective is to develop a deep learning model that ensures personalized health prediction while offering robust interpretability at both global and local levels. Key novelties include the integration of attention-enhanced CNNs with SHAP force plots and feature dependence visualization, providing end-to-end clinical interpretability. The significant contributions of this paper include: (i) a high-performing predictive model achieving 97.86% accuracy, (ii) explainability at both population and individual patient levels, and (iii) comprehensive experimental validation using multiple XAI techniques and comparative baselines.

The remainder of this paper is organized as follows: Section 2 presents a detailed review of related literature and highlights existing gaps. Section 3 describes the proposed methodology, including system architecture, dataset preprocessing, model design, mathematical modeling, and algorithmic workflow. Section 4 covers the experimental setup, evaluation metrics, exploratory analysis, performance results, and ablation studies. Section 5 discusses the significance of findings, research implications, and study limitations. Section 6 concludes the paper with future directions to enhance clinical integration, cross-dataset validation, and real-time deployment of the proposed framework.

Related work

XAI has become a crucial part of healthcare AI systems, promoting interpretability, trust, and clinical adoption. In this section, we review 80 key studies across five thematic areas, synthesizing advances in predictive modeling, edge-based health monitoring, clinical decision support, ethical considerations, and system scalability. Through this analysis, we identify research gaps that the proposed framework addresses.

Explainable AI methods for predictive healthcare

Explainable Artificial Intelligence (XAI) techniques have substantially improved model transparency, interpretability, and clinical trust within the predictive healthcare paradigm. Several machine learning and deep learning methods have been developed for risk stratification, diagnosis, and prognosis prediction, with interpretable components to enable clinical integration.

For instance, Niu et al.1 proposed a non-parametric clustering model with attention and Dirichlet Process Mixture Models for EHR-based illness risk prediction, maintaining high predictive accuracy while improving interpretability. In another study, Pedro6 used an explainable XGBoost model for the detection of chronic kidney disease and identified critical clinical features that contribute to the prediction, including hypertension and hemoglobin; however, the model was not validated on broader populations. Martino et al.7 presented an explainable malnutrition prediction model that uses heterogeneous m-health data and interprets the derived malnutrition risk scores in terms of contextual information, and argued for the importance of longitudinal data in personalized healthcare.

Deep learning-based models are also adopting XAI tools. Giovanni et al. employed a deep neural network8 for predicting postprandial glucose levels in Type 1 Diabetes, integrating SHAP9 to identify glycemic predictors; however, validation within a clinical setting is still warranted. Loh et al. conducted a systematic survey10 and found that SHAP and Grad-CAM are the methods mainly used to improve the interpretability of healthcare models, while work on interpreting anomaly detection in biosignals is still lacking.

Various works on stress prediction and mental health applications have been reported. Jaber et al. used a combination of wearables, biomarkers, and interpretable ML models for stress monitoring, while Yang et al.11 advocated fused (multi-modality) data for explainable clinical AI. Senthilkumar et al.12 proposed IoT-oriented explainable models for resource-constrained healthcare devices, and Wan et al.13 developed an XAI tool to diagnose Prolonged Grief Disorder using Random Forest explanations.

Works such as those of Julie et al.14, aimed at supporting clinical trust and adoption, and Khansa et al.15 underscore the importance of multidisciplinary collaboration and reliability assessments. Thanh et al.9 employed explainable models to predict drug–drug interactions, whereas Zheng et al.16 reached high AUC in ischemic stroke prediction using SHAP and LIME, noting that real-world primary care validation is warranted. More recently, Elham et al.17 proposed an innovative home-based cognitive decline detection framework based on behavioral features, but the monitoring durations were short.

Zhang et al.18 and Jacobs et al.19 illustrated, in a clinical context, the trade-off between model fidelity and interpretability. Peng et al.20 proposed a hepatitis detection model based on Random Forest (RF) combined with SHAP and LIME to improve interpretability, reaching an accuracy of 91.9%; validation on wider real-world clinical datasets is lacking. Yang21 highlighted that trust is an essential requirement for clinicians to justify applying complex ML algorithms, given their real-life clinical consequences. One way to address this requirement is to develop collaborative frameworks between clinicians and directly interpretable ML algorithms that (1) support better decision making and (2) improve the reliability of the algorithms, closing the gap between algorithmic logic and clinician decisions. Carlo et al.22 proposed frameworks combining elements of symbolic and sub-symbolic AI for user-oriented XAI systems. Moreno23 employed the XGBoost algorithm for heart failure survival prediction and reported serum creatinine and anemia as relevant features, while highlighting the importance of balancing predictive fidelity and clinical interpretability.

Samaneh and Mohammad24 proposed the ISAF framework for cardiac event prediction, obtaining good sensitivity for detecting sepsis-induced cardiac arrest, but the evaluation was limited to the MIMIC-III dataset. Vajira et al.25 designed DeepSynthBody, a GAN-based model that generates synthetic medical data to address privacy issues, but it does not solve problems beyond data privacy.

Davagdorj et al.26 enhanced global and local interpretability by applying DeepSHAP to a deep neural network for non-communicable disease (NCD) prediction. Finally, Isabella et al.27 outlined the need for machine learning to be part of the clinical workflow, highlighting that model transparency and data harmonization are pivotal, while small datasets are an emerging challenge.

Taken together, these studies highlight the increasing significance of explainable predictive models in healthcare. Although multiple models achieve both accuracy and transparency, challenges remain in generalizability, real-life validation, and seamless integration into the clinical workflow, which motivates open frameworks such as the one proposed in this work.

Edge and IoT-Centric health monitoring with explainability

Bringing XAI, IoT, and edge computing together enables new opportunities for real-time and resource-efficient health monitoring, especially in distributed and bandwidth-constrained environments. Novel lightweight and interpretable models support on-device intelligence, thereby improving the transparency and clinical trustworthiness of IoT-based healthcare deployments.

Arthi and Krishnaveni2 proposed a compact, mLZW-compressed, fog-enabled TinyML binary classifier for energy-efficient disease prediction, with an F1-score of 0.93. As an alternative, He et al.28 proposed a semi-supervised learning framework that learns better mutable representations based on interpretable nonlinear transformations and reported superior interpretability and performance; still, it does not address general security problems such as network attack analysis. Biplov et al.3 proposed an ML-based IoT health monitoring system for vital sign feature extraction (SpO₂, pulse, etc.) with SVC and SHAP explainability, reaching over 98% accuracy while considering hardware and connectivity constraints. Similar explainability work on IoT-based time-series data includes Martino and Delmastro29, who examined the trade-off between transparency and model performance for healthcare applications.

For epilepsy detection in IoMT systems, Khan et al.5 employed Random Forest with LIME and SHAP on EEG data and attained an accuracy close to 99.89%, but their method still needs to be applied to more diverse datasets and more complex sequential models. Joy Dutta et al.28 introduced an IoT–Edge–Cloud cardiac arrest prediction system that integrates LIME and SHAP to make its predictions interpretable; still, the two-layer cloud-edge coordination could not offer seamless performance.

The works in19,30 present federated learning frameworks for privacy-preserving healthcare analytics. Deepti et al.31 implemented explainable federated learning on ECG and CT images for transparent and privacy-preserving solutions in Healthcare 5.0; however, the deployment is elaborate. Ali et al.32 integrated SHAP-based explanations into the training process of a CNN model for arrhythmia detection in a federated setting, overcoming classic barriers such as device heterogeneity and adversarial attacks, and achieved an accuracy of 98.9%.

Mazin33 conducted a comprehensive review of AI-IoT-based health monitoring systems for smart cities, focusing on standardization issues and proposing improved unification among platforms. Hossain et al.34 presented a scalable, 5G-enabled surveillance architecture for pandemic response based on AI and blockchain, which is limited by ethical concerns and by the massive volume of data it requires.

Meanwhile, several other studies examined different aspects of scalable, explainable IoT-supported health systems. For instance, vital sign monitoring and distributed ML model optimization at the network edge are handled in35,36,37,38,39. Shin40 and Morley et al.41 highlighted the ethical issues and user trust in AI-oriented IoT, emphasizing the need for transparency and fairness in health data processing. Firouzi et al.42 proposed a stress monitoring system based on an edge-fog-cloud co-architecture, and43,44,45,46 work on modular AI models for edge-based health analytics.

Further implementations in haptic feedback for COVID-19 applications47 and real-time patient monitoring48 reported successful performance but require further application in clinical settings47,48. To improve interpretability in real-time management, Rahman et al.49 developed a haptic edge learning architecture for pandemic response that unifies decentralized learning and cloud coordination.

Together, these contributions demonstrate the potential of incorporating explainable AI in IoT and edge-based healthcare systems, enabling improved interpretability, privacy, and scalability. Nevertheless, there are hurdles to using generalized models, efficient on-device inference, and ethical data management in real-world health monitoring scenarios.

XAI-Enabled clinical decision support and imaging

The emergence of Explainable AI (XAI) has been a crucial development for clinical decision support systems (CDSS) and medical imaging analysis, increasing the transparency and interpretability of model predictions for clinicians. These works have focused on visual interpretability and feature attribution to move models from machine learning research toward clinical utility14,18.

Giovanni et al.8 developed a DNN to forecast postprandial blood glucose in Type 1 Diabetes Mellitus and used SHAP interpretations to highlight the most influential glycemic drivers. Although the model had clinical applicability, it needed to be validated in a larger patient cohort. Loh et al.10 published a comprehensive review of 99 Q1 healthcare publications on interpretable ML. They found that SHAP and Grad-CAM have become popular methods for feature-level and visual interpretability, respectively, in clinical applications. However, gaps remain in biosignal anomaly detection and clinical text analysis.

Jacobs et al.30 advocated for co-designed clinical decision tools, highlighting that most existing XAI solutions fail to integrate with clinical workflows. Similarly, Matthias et al.50 studied AI-based self-diagnosis apps and raised issues that affect clinical implementation, such as privacy and reliability. In light of these findings, XAI frameworks must be designed to be both technically strong and compatible with clinical workflows.

In the domain of image interpretation, Chen and Lee51 developed a CNN-based classifier for detecting bearing failure and used Grad-CAM visualizations to improve localization via class activation mapping. Despite being technically sound, the approach was sequential and not embedded into end-to-end feature extraction pipelines. Julian et al. presented Ada-WHIPS53, a rule-based model explanation tool with broad coverage but limited feature attribution capability. Daniel et al.52 discussed the opacity of ML-based smart city monitoring systems and suggested general-purpose XAI methodologies, which would need to be specialized for the clinical field.

Further work addressed predictive maintenance frameworks that are only indirectly related to healthcare. Andreas et al.53 reviewed predictive maintenance models that use AI techniques and highlighted the absence of evaluation standardization across studies, a concern that arguably applies to healthcare CDSS research as well, although these models are otherwise less relevant to the health domain. Vikram et al.54 proposed a semi-hybrid predictive maintenance model using Bayesian inference and imaging sensors, which offers high accuracy but requires human confirmation for final decision-making. While not directly related to medical imaging, Sameer et al.55 investigated AI-based remaining useful life (RUL) estimation of milling tools and suggested ANN and LSTM as promising techniques.

Regarding medical data privacy, Vajira et al.56 developed DeepSynthBody25, a deep generative GAN-based system for synthetic data generation that targets privacy and data shortage in clinical imaging, but its current field of application is limited. Zhang et al.57 described the cross-institutional scalability issues of clinical XAI systems, underscoring the need to harmonize disparate healthcare datasets and for methods that guarantee explainability mechanisms are resilient to heterogeneous data sources. As Isabella et al.27 pointed out, integrating machine learning with clinical workflows can enhance transparency and data harmonization, making it easier for institutions and organizations to implement and adopt.

Additionally,58,59, and60 studied explainable medical imaging pipelines in terms of multimodal imaging fusion, feature attribution in radiomics, and explainable segmentation algorithms, respectively. Although these studies showed increased levels of transparency, they also identified significant issues with clinical validation and privileging4.

Together, these contributions demonstrate that explainable AI improves the transparency and interpretability of models for clinical decision support (CDS) and diagnostic imaging. However, these methods need more research to integrate them better with clinical workflows, achieve cross-modality generalizability, and address usability gaps in real-world healthcare settings.

Ethical, social, and clinical trust dimensions of XAI

For explainable AI (XAI) systems to be adopted meaningfully in healthcare, they need to address not only technical performance but also ethical, social, and trust issues. Recent literature suggests that transparency is not enough: models must also be value-sensitive for stakeholders (including patients, clinicians, and hospital administrators) to trust them, and their designs must fit into existing clinical workflows and local institutional frameworks for accountability.

Mark and Jacob56 suggested that, instead of focusing on algorithm-specific outputs, deploying medical AI may require institutional explanations that foster reliability via calibration among human and computational agents and allow for evaluation in context. Because of the imbalanced trade-off between performance and interpretability, Aniek et al.37 proposed a set of principles to calibrate model fidelity against local and global interpretability within a unified framework, but also noted that further work is needed to establish standard evaluation criteria that cut across healthcare applications.

Zhang et al.18 observed that local explanations, while insightful, can paradoxically reduce trust when the explanations perform poorly even though the underlying model performs well; a transparency strategy therefore needs to be undertaken with caution18. Jacobs et al.19 argued that the inadequacies of existing XAI methods further highlight the need for co-designed decision support tools that integrate into clinical workflows.

Matthias et al.30 found that healthcare professionals’ perspectives on AI-driven self-diagnosis apps are influenced by privacy risks and the integration of these apps with clinical care, which affect trust. In a study of public perceptions of AI-enabled medical devices conducted by Pouyan50, concerns about unclear technical reliability and other ethical compliance issues prevented widespread acceptance.

Shin40 discussed the claim, from the user-centered computing field, that explainability and causability enhance user trust, and proposed that model design must incorporate fairness and accountability. Morley et al.41 depicted the ethical spectrum of AI in healthcare from the individual level to societal aspects and called for clear regulations to guide the use of AI in clinical practice.

Rachel et al.61 explored the role of trust in AI-driven cardiotocography in a separate qualitative study, which found that transparency, reliability of outcomes, and organisational culture were key factors in determining clinician trust in AI tools. They elaborated on the importance of empirical testing and cross-disciplinary cooperation for ‘safe’ advancements in applied artificial intelligence.

Other works62,63,64,65, and66 addressed socio-technical factors impacting the clinical adoption of explainable healthcare AI systems in the context of fairness, privacy, and accountability. Together, these studies reinforce that for XAI to work in healthcare, technical solutions must be bolstered by ethical safeguards, patient-centered design, and institutional supports.

Takeaway message: Effective deployment of explainable AI in healthcare settings necessitates attention to socio-technical issues such as fairness, privacy, usability, and accountability, which are critical to building trust.

Scalability, generalization, and future directions in XAI systems

Although progress has been made, scalable and generalizable explainable AI (XAI) systems for healthcare remain an elusive goal due to the ongoing trade-offs in transparency and model accuracy. We need to narrow these gaps before XAI solutions can be deployed in the fast-moving, heterogeneous, and clinically diverse environments in which they will be used.

Zahra et al.4 surveyed explainability techniques focused on AI in diagnosis and surgery and concluded that more attention should be paid to inherently interpretable models and to collaboration between clinicians and data scientists, as both could improve the trust in and usability of AI. Martino et al.29 discussed scalability issues in time-series and tabular data explainability and the trade-off between interpretability and model performance.

As Martino et al.7 emphasized, cross-sectional analyses, although important as starting points, have limited interpretability when the goal of a detection model is to trigger personalized interventions. Jaber et al.67 validated their wearable-based stress prediction model but suggested that emotional variables should be integrated into the model to improve its generalizability. Yang et al.11 likewise championed data fusion across modalities and centers in clinical XAI use cases and acknowledged the need for explainability that adheres more closely to adoption and regulatory standards.

Senthilkumar et al.12 highlighted open research challenges for resource-constrained healthcare devices and edge-centric XAI solutions, and discussed how future 6G architectures could support these concepts. Wan et al.13 developed a web application around a Random Forest model with XAI to diagnose Prolonged Grief Disorder. This application provided individual patient explanations, but it encountered issues including cultural bias and a small validation dataset.

Julie et al. discussed the different contexts and user communities within the healthcare ecosystem that may rely on AI and identified ‘explainability needs’ that change with the healthcare context, indicating the relevance of multidisciplinary collaboration and of generalized explanation needs for AI systems moving forward. Khansa et al.56 stressed the importance of the ethical use of explainable ML systems and of respecting their design principles for reliable and transparent clinical practice. Thanh et al.9 discussed explainability in drug–drug interaction prediction and its importance for trust in clinical pharmacology.

Other papers focused on scalability and deployment. Specifically,68,69,70, and71 developed modular architectures and scalable frameworks for explainable clinical models, while72,73, and74 studied dynamic explainability methods for evolving healthcare systems. These contributions highlighted the importance of adaptive systems whose properties can evolve as new clinical data or deployment contexts arise.

Khaira et al.75 highlighted open-source explainable AI frameworks and their scaling limitations, whereas76,77,78, and79 explored real-world deployments, provided insights into privacy-preserving explainability, and incorporated regulatory-compliant explanations into clinical decision-making pipelines. These papers offer several recommendations for XAI systems, the most urgent of which is moving away from one-shot, limited-dataset validations toward dynamic scalability, longitudinal data integration, and stakeholder-driven design. It is imperative to tackle these aspects to ensure that explainable AI models can be ethically deployed, clinically relevant, and tractable across a diverse set of healthcare systems. Theissler et al.53 discuss machine learning-driven predictive maintenance in the automotive industry, highlighting practical use cases and implementation challenges. Hatwell et al.81 introduce Ada-WHIPS for explaining AdaBoost models, improving interpretability in health sciences applications and predictive analysis.

Methodology

This section describes the proposed PersonalCareNet framework for personalized health monitoring. It presents an overview of our system, the data preparation, the architecture of the deep learning model, the mathematical formulation, and the algorithmic workflow. In particular, the predictive and interpretability-oriented modeling strategy, with integrated SHAP-based interpretations and attention, is intended to ensure the trustworthiness and applicability of the model output in an actual clinical pathway.

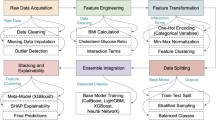

System overview

We present PersonalCareNet, a framework that integrates predictive modeling with explainable artificial intelligence techniques to enable personalized health monitoring. Our system consists of a modular pipeline that involves data acquisition from electronic health records (EHRs), data preprocessing, deep learning-based prediction, and explanation of model outputs. The input to the system consists of structured patient data, including observation records such as vital signs, laboratory readings, and demographics, which go through several preprocessing steps before being transformed into a format suitable for ingestion by the model.

The preprocessed data are fed into the core piece, PersonalCareNet, a deep learning architecture that combines health risk prediction and interpretability. The model progressively transforms clinical data across neural layers to extract increasing levels of pattern abstraction. The system provides prediction and generates reasons for every output by using a post hoc explainability tool such as SHAP (Shapley Additive exPlanations). This helps clinicians interpret the model by learning about the features that contributed to the prediction for any particular patient.
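For reference, the attribution that SHAP assigns to a feature is its Shapley value. The standard definition is reproduced below as a reading aid; the notation ($F$ for the set of input features, $f_x(S)$ for the expected model output when only the features in $S$ are known for patient $x$, and $\phi_0 = f_x(\emptyset)$ for the baseline output) is an assumption made here and is not taken from the paper's own formulation.

```latex
\phi_i(f, x) = \sum_{S \subseteq F \setminus \{i\}}
  \frac{|S|!\,\bigl(|F| - |S| - 1\bigr)!}{|F|!}\,
  \bigl[ f_x(S \cup \{i\}) - f_x(S) \bigr],
\qquad
f(x) = \phi_0 + \sum_{i \in F} \phi_i(f, x)
```

The second identity is the additivity property: the per-feature attributions for one patient sum to the difference between that patient's prediction and the baseline, which is what makes a ranked list of contributions meaningful at the individual level.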

The system’s output consists of the predicted clinical outcome (such as risk level or disease status) and a ranked list of contributing features, which helps enhance trust and transparency in the decision-making process. This dual-output mechanism ensures the system is accurate, interpretable, and clinically actionable. Overall, PersonalCareNet is positioned as a trust-aware AI solution that facilitates personalized and explainable decision support in modern healthcare environments.

PersonalCareNet workflow overview: Fig. 1 shows the end-to-end workflow of PersonalCareNet, which supports trustworthy and interpretable clinical decision-making. The framework begins by ingesting structured patient data (for example, vital signs, laboratory test results, and demographic information) extracted from electronic health records. A series of preprocessing steps (e.g., data cleaning, normalization, and dimensionality reduction) is applied to these inputs to ensure data quality and consistency.

This normalized data is passed into PersonalCareNet, a neural network for personalized risk prediction. Here, multiple hidden layers with different levels of abstraction extract relevant clinical patterns. Upon creating a prediction (e.g., predicting disease risk or classifying health status), the system calls a model-agnostic explainability module (e.g., SHAP) that generates feature attributions that highlight which input variables most contributed to the model’s prediction.

The output is a prediction alongside a feature attribution (either visual or tabular) for the prediction. Providing this dual output means users, whether clinicians or other healthcare professionals, can not only trust the decision made by the model but also understand the rationale behind it, thus promoting transparency, interpretability, and patient-specific reasoning in healthcare applications.

Dataset description and preprocessing

The MIMIC-III80 (Medical Information Mart for Intensive Care III) database is a public, de-identified critical care database with detailed clinical information on more than 40,000 ICU patients. It includes patient demographics, vital signs, lab tests, medical records, and discharge summaries. For this work, age, gender, heart rate, respiratory rate, systolic and diastolic blood pressure, oxygen saturation, glucose level, and selected laboratory test indicators were extracted as structured features because of their clinical significance for risk assessment and monitoring.

We used a subset of the MIMIC-III database composed of ICU patients with complete information on the essential clinical variables for risk prediction. The subgroup was chosen because the required physiological and laboratory characteristics (such as age, gender, heart rate, respiratory rate, blood pressure, oxygen saturation, glucose, and selected lab results) were available. Records with more than 30% missing values or with inconsistent values were removed to ensure data quality. After preprocessing, the resulting dataset contained 10,432 patient samples, stratified to achieve a balanced distribution between the outcome classes. The dataset was then partitioned into training, validation, and testing sets.

The raw dataset went through a strict preprocessing pipeline to ensure quality and consistency. First, records with excessive missingness or incorrect entries were removed. For numerical variables, we used mean imputation when the distribution was approximately normal and median imputation otherwise. We used mode imputation for categorical variables and one-hot encoding where relevant. Subsequently, we normalized the features with z-score standardization, which scales each feature to zero mean and unit variance and favors faster convergence during model training.
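The imputation and standardization steps can be sketched as follows. This is a minimal illustration, assuming NumPy arrays in which NaN marks a missing value. The function names and the skewness threshold used to decide between mean and median are hypothetical stand-ins, since the text does not specify how normality was checked.

```python
import numpy as np

def impute_numeric(col, skew_threshold=1.0):
    """Fill NaNs with the mean if the column looks roughly symmetric, else the median.

    skew_threshold is an illustrative assumption, not a value from the paper.
    """
    col = np.asarray(col, dtype=float)
    observed = col[~np.isnan(col)]
    std = observed.std()
    # Sample skewness; treated as 0 for a constant column.
    skew = 0.0 if std == 0 else np.mean(((observed - observed.mean()) / std) ** 3)
    fill = observed.mean() if abs(skew) < skew_threshold else np.median(observed)
    return np.where(np.isnan(col), fill, col)

def zscore(X):
    """Standardize each column to zero mean and unit variance."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # avoid division by zero for constant features
    return (X - mu) / sigma

# Toy heart-rate column with one missing entry (values are made up).
heart_rate = [72.0, 80.0, np.nan, 76.0]
filled = impute_numeric(heart_rate)
standardized = zscore(np.column_stack([filled, [1.0, 3.0, 5.0, 7.0]]))
```

In practice the fill values, means, and standard deviations would be estimated on the training split and reused for validation and test data.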

To avoid redundancy and reduce computational effort, we applied dimensionality reduction using Principal Component Analysis (PCA), preserving the most informative components. Finally, the dataset was split into training, validation, and testing sets using stratified sampling to keep the class distribution balanced across splits. This preprocessing pipeline ensures that the input to the PersonalCareNet model is clean and consistent, which helps the model learn reliable patterns and produce accurate predictions.
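A minimal sketch of the PCA step, assuming it is implemented as an eigendecomposition of the covariance matrix and that components are kept until a target share of variance is reached. The 95% default mirrors the figure given in the experimental setup; the function names and the synthetic data are illustrative only.

```python
import numpy as np

def pca_fit(X, variance_to_keep=0.95):
    """Return (mean, W), where W holds the top-k eigenvectors of the covariance of X."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    cov = np.cov(X - mean, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]               # re-sort to descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    explained = np.cumsum(eigvals) / eigvals.sum()
    # Smallest k whose cumulative explained variance reaches the target.
    k = min(int(np.searchsorted(explained, variance_to_keep)) + 1, X.shape[1])
    return mean, eigvecs[:, :k]

def pca_transform(X, mean, W):
    """Project centered data onto the retained components."""
    return (np.asarray(X, dtype=float) - mean) @ W

# Synthetic data: the third column nearly duplicates the first, so two components suffice.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, b, a + 0.01 * rng.normal(size=200)])
mean, W = pca_fit(X)
Z = pca_transform(X, mean, W)
```

Fitting `mean` and `W` on the training split and reusing them for the other splits keeps information from the held-out data out of the projection.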

Model architecture: PersonalCareNet

At the center of the predictive part of the framework is a deep neural network model, PersonalCareNet, that we designed for structured healthcare data. The model aims to capture complex, non-linear mappings from clinical features to outcomes while including explainability methods to promote transparency. It operates on a preprocessed input vector that represents an individual patient profile, and each profile includes a selection of physiological, laboratory, and demographic variables.

The architecture of the PersonalCareNet model is illustrated in Fig. 2. It includes dense layers with regularization components for structured clinical data and an optional attention mechanism over the features before the risk prediction output. The final output layer calculates probabilities, and the built-in explainability modules make it possible to interpret the contribution of each feature to the final score, which supports transparent clinical decision-making.

At a high level, the architecture starts with an input layer that accepts fixed-length feature vectors. It is followed by one or more dense (fully connected) layers with non-linear activation functions such as ReLU. These layers learn high-level abstractions of the input data. Dropout layers and L2 regularization are applied between layers to avoid overfitting and improve generalization. Batch normalization may also be included to stabilize training and speed up convergence.

As shown in Fig. 2, an attention mechanism is optionally added in the later layers to aid interpretability by directing the model toward key clinical cues. With this component, the model can learn to weight features dynamically based on the prediction task. For binary classification problems, a sigmoid activation function is used in the final output layer to yield a probability score for a clinical event or associated risk.
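The dense part of this architecture can be sketched as a plain NumPy forward pass. This is an illustration under stated assumptions, not the authors' implementation: the hidden sizes (128, 64, 32) and Xavier initialization follow the experimental setup reported later, the input width of 20 is arbitrary, and dropout, batch normalization, and the optional attention layer are omitted because their exact form is not specified.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(layer_sizes, seed=0):
    """Xavier (Glorot) uniform weights and zero biases for consecutive layer sizes."""
    rng = np.random.default_rng(seed)
    params = []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        limit = np.sqrt(6.0 / (fan_in + fan_out))
        W = rng.uniform(-limit, limit, size=(fan_in, fan_out))
        params.append((W, np.zeros(fan_out)))
    return params

def forward(X, params):
    """ReLU hidden layers followed by a single sigmoid output unit."""
    h = np.asarray(X, dtype=float)
    for W, b in params[:-1]:
        h = relu(h @ W + b)          # rows are patients, so weights multiply on the right
    W_out, b_out = params[-1]
    return sigmoid(h @ W_out + b_out).ravel()

# 20 input components is an assumption; 128-64-32 follows the reported setup.
params = init_params([20, 128, 64, 32, 1])
probs = forward(np.zeros((4, 20)), params)   # all-zero inputs give probability 0.5
```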

The model is trained with backpropagation and an optimization algorithm such as Adam or SGD (stochastic gradient descent), minimizing cross-entropy loss. The trained model remains compatible with explanation methods such as SHAP, which interpret the learned feature importance post hoc. PersonalCareNet therefore combines predictive power with interpretability and is well suited for integration into clinical decision-support systems. Table 1 summarizes the most important notation used in the methodology and equations of the PersonalCareNet framework.

Mathematical perspective

For data preprocessing, we normalize each feature value \(x_i^j\) (where \(j\) denotes the feature and \(i\) denotes the patient record) to have zero mean and unit variance. The normalized feature is calculated as in Eq. 1.

\[ \tilde{x}_i^j = \frac{x_i^j - \mu_j}{\sigma_j} \qquad (1) \]

Here, \(\mu_j\) and \(\sigma_j\) are the mean and standard deviation, respectively, of the \(j\)-th feature over all records. Afterward, feature selection or extraction is performed to improve model interpretability and reduce dimensionality. We use Principal Component Analysis (PCA) to project the data matrix \(X\), which has \(m\) features, into a low-dimensional space \(Z \in \mathbb{R}^{n \times k}\), where \(k < m\). To do this, we project \(X\) onto the eigenvectors of its covariance matrix as in Eq. 2.

\[ Z = XW \qquad (2) \]

Here, \(W\) contains the eigenvectors corresponding to the \(k\) largest eigenvalues. At the heart of PersonalCareNet is a neural network model with an explainability layer. The neural network consists of an input layer of size \(k\), hidden layers \(h_1, h_2, \dots, h_l\), and an output layer for predictions. Each layer is computed as in Eq. 3.

\[ h^{(l)} = \sigma\left(W^{(l)} h^{(l-1)} + b^{(l)}\right) \qquad (3) \]

Here, \(W^{(l)}\) is the weight matrix of layer \(l\), \(b^{(l)}\) is the bias vector of layer \(l\), \(\sigma\) is the activation function (e.g., ReLU or sigmoid), and \(h^{(l-1)}\) is the output of the previous layer. To enable explainability, we incorporate the Shapley Additive Explanations (SHAP) framework. The Shapley value \(\varphi_j\)13 is used to calculate the contribution of each feature to the prediction as in Eq. 4.

\[ \varphi_j = \sum_{S \subseteq N \setminus \{j\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!} \left[ f(S \cup \{j\}) - f(S) \right] \qquad (4) \]

Here, \(S\) is a subset of features, \(N\) is the set of all features, and \(f(S)\) denotes the model prediction using only the features in subset \(S\). Finally, the output layer produces the prediction \(\widehat{y}\), which is compared with the ground truth using a loss function. For binary classification, we use the cross-entropy loss \(\mathcal{L}\) over the \(n\) records as in Eq. 5.

\[ \mathcal{L} = -\frac{1}{n} \sum_{i=1}^{n} \left[ y_i \log \widehat{y}_i + (1 - y_i) \log\left(1 - \widehat{y}_i\right) \right] \qquad (5) \]

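The model parameters \(\theta\) are iteratively updated through gradient descent on the loss \(\mathcal{L}\), as shown in Eq. 6.

\[ \theta \leftarrow \theta - \eta \, \nabla_{\theta} \mathcal{L} \qquad (6) \]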

Here, \(\eta\) is the learning rate. The combined output of PersonalCareNet consists of predictions \(\widehat{y}\) and feature attributions \(\varphi\), which together offer accurate and interpretable output. Providing these two outputs together builds confidence in predictive healthcare, since clinicians can assess why the model makes a decision.
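The loss and update rule can be illustrated on the smallest possible case, a single sigmoid output unit trained with full-batch gradient descent. This is a sketch under simplifying assumptions: the toy data, the learning rate of 0.5, and the function names are illustrative, whereas the reported experiments use the Adam optimizer with a learning rate of 0.001 on a multi-layer network.

```python
import numpy as np

def bce(y, y_hat, eps=1e-12):
    """Binary cross-entropy averaged over the records."""
    y_hat = np.clip(y_hat, eps, 1 - eps)   # guard against log(0)
    return float(-np.mean(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat)))

def predict(w, b, X):
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def gd_step(w, b, X, y, lr):
    """One full-batch gradient descent step for a sigmoid output unit."""
    grad_z = (predict(w, b, X) - y) / len(y)   # dL/dz for BCE combined with a sigmoid
    return w - lr * (X.T @ grad_z), b - lr * grad_z.sum()

# Toy, linearly separable data (made up for illustration).
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])
w, b = np.zeros(1), 0.0
loss_before = bce(y, predict(w, b, X))   # equals log(2) at initialization
for _ in range(200):
    w, b = gd_step(w, b, X, y, lr=0.5)
loss_after = bce(y, predict(w, b, X))
```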

Proposed algorithms

This section outlines the algorithms that define the core functionality of the PersonalCareNet framework. The pipeline consists of three algorithms: the first preprocesses in-hospital clinical data for input to the deep learning model, the second trains the model, and the third produces explainable predictions. Together, they provide outputs that are accurate, transparent, and clinically relevant for personalized and timely health monitoring and decision support.

Algorithm 1 describes the preparation of the MIMIC-III dataset, turning the raw patient data into input for the PersonalCareNet model. It starts by identifying critical clinical variables, such as vital signs, lab measurements, and demographic characteristics, that affect health outcomes. These features are selected for their clinical relevance and their availability across records. The next step is handling missing data. For numerical features, we fill missing values with the mean or median of the observed data, depending on the distribution of the values. For categorical features, we replace missing entries with the most common category. A feature or patient record containing too many missing values can be excluded.

After data cleansing, all numerical features are standardized, i.e., brought to a similar scale. This is important because we do not want to bias the model toward one feature just because it is in a different unit or range. Categorical data are converted to a numerical encoding so that the model can process the input. Next, a dimensionality reduction technique is applied to reduce complexity and eliminate redundancy. This step reduces the data to a smaller set of meaningful components while retaining much of the original information, which improves computational efficiency during model training and reduces noise in the data. The last step is a stratified split into training, validation, and test partitions. This ensures that the ratio of the classes (such as positive and negative) is the same in all the sets. The prepared data are then used in the model training phase of the PersonalCareNet framework.

Algorithm 2 describes the training procedure of PersonalCareNet, which learns predictive patterns from the preprocessed clinical data. The input data have already been standardized and reduced in dimension before training begins. The model’s architecture has an input layer and multiple hidden layers of fully connected neurons. These layers transform the data using activation functions, which help the model learn complex, nonlinear relationships between patient characteristics and health outcomes. Dropout layers are placed between hidden layers to prevent overfitting and improve generalization. During training, these layers randomly turn off some neurons, which forces the model to learn more robust and independent representations. Furthermore, batch normalization may be employed to normalize each layer’s output, which helps stabilize and speed up training.

The model is trained over numerous iterations, called epochs. In each epoch, the model makes predictions with its current parameters, compares them with the actual outcomes, and calculates the prediction error using a loss function suited to classification tasks. Using this error, an optimization algorithm (e.g., Adam or stochastic gradient descent) updates the internal parameters, namely the weights and biases. Each update is intended to reduce the loss and improve the model’s accuracy. Training and validation continue until there is no further improvement on the validation set or a set number of epochs has been reached. At the end of the training phase, the PersonalCareNet model has optimized parameters for making predictions on patient data that it has not seen before. The trained model is then evaluated and combined with the explainability module to provide interpretable results.
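The stopping rule described above can be sketched as a small helper that scans a validation-loss history. The function name and the `min_delta` parameter are hypothetical; the default patience of 10 mirrors the value given in the experimental setup.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 0-indexed, for a validation-loss history.

    Training stops once `patience` epochs have passed without the loss improving
    on the best value by more than `min_delta`, or when the history ends.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Made-up loss history: the best value occurs at epoch 2, then the loss creeps up.
history = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
```

The weights saved at `best_epoch` are the ones that would normally be restored for evaluation.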

Algorithm 3 covers the last step of the PersonalCareNet pipeline, in which the trained model is used to generate predictions for new patient data together with explainable AI-based interpretability9,15 (Fig. 2B). Once training has produced the optimized parameters, the model is deployed to predict outcomes for the test dataset or live input. The model takes each patient record and processes the input features to generate an output, usually a prediction of a health risk or clinical condition in the form of a probability score.

To build trust and support clinical decision-making, each prediction comes with an explanation that indicates which input features contributed most to the predicted outcome. This is done using post hoc explainability techniques such as SHAP (Shapley Additive exPlanations). These techniques examine the model’s behaviour and compute a contribution value for every feature, i.e., how much that feature increased or decreased the prediction relative to a baseline.

The system then produces, for each patient, a list of the features that most strongly affected the model output. These explanations can be displayed as a bar chart, summary plot, or table so that clinicians can understand why the model made its predictions. This is essential in medical applications where decisions must be interpretable, particularly for severe diseases or high-risk predictions.
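To make the contribution values and the ranked list concrete, the sketch below computes exact Shapley values for a toy three-feature risk score and ranks the features for one patient. Everything here is illustrative: `toy_risk`, the patient values, and the baseline are made up, and features outside a coalition are simply replaced by baseline values. Exact enumeration grows exponentially with the number of features, which is why the SHAP library relies on approximations for real models.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for j in range(n):
        others = [i for i in range(n) if i != j]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for S in combinations(others, size):
                total += weight * (value(set(S) | {j}) - value(set(S)))
        phi.append(total)
    return phi

def rank_contributions(names, phi):
    """Sort features by absolute contribution; the sign gives the direction."""
    return sorted(zip(names, phi), key=lambda item: abs(item[1]), reverse=True)

def toy_risk(z):
    # Hypothetical risk score: linear terms plus an age-glucose interaction.
    age, glucose, heart_rate = z
    return 0.02 * age + 0.01 * glucose + 0.005 * heart_rate + 0.0001 * age * glucose

names = ["age", "glucose", "heart_rate"]
x = [70.0, 180.0, 95.0]          # one made-up patient
baseline = [60.0, 100.0, 80.0]   # made-up reference values
phi = shapley_values(toy_risk, x, baseline)
ranked = rank_contributions(names, phi)
```

The contributions sum to the difference between the patient's score and the baseline score, which is the property that lets a clinician read them as a decomposition of the prediction.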

Combining prediction with interpretability means that the system produces accurate outputs along with a clear account of how those outputs are generated. This builds clinician confidence and supports the safe use of AI in clinical workflows. In sum, this algorithm is designed so that PersonalCareNet’s predictions are both accurate and explained, and thus interpretable and actionable in real-life decisions.

Evaluation methodology

We evaluate PersonalCareNet by applying the proposed model to a benchmark healthcare dataset and assessing its predictive accuracy and interpretability with standard metrics. Let the dataset be split into training \(D_{\text{train}}\) and testing \(D_{\text{test}}\) subsets, with \(X_{train}, y_{train}\) and \(X_{test}, y_{test}\) their feature matrices and labels, respectively. The model is trained on \(D_{\text{train}}\), and predictions \(\widehat{y}\) are generated for \(D_{\text{test}}\). Accuracy, Precision, Recall, F1-score, and Area under the Receiver Operating Characteristic Curve (AUC-ROC) are used to assess the performance. Accuracy is the number of correctly predicted samples divided by the total number of samples in \(D_{\text{test}}\), as in Eq. 7.

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (7) \]

Here, \(TP\), \(TN\), \(FP\), and \(FN\) are the numbers of true positives, true negatives, false positives, and false negatives, respectively. To assess the performance for each class, precision and recall are computed as in Eq. 8.

\[ \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN} \qquad (8) \]

For imbalanced datasets, the F1-score, which is the harmonic mean of precision and recall, provides a more holistic measure of the model, as in Eq. 9.

\[ F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (9) \]

The AUC-ROC is also calculated, which measures the model’s ability to distinguish between the positive and negative classes. A ROC curve plots the True Positive Rate (TPR) against the False Positive Rate (FPR), as in Eq. 10.

\[ \text{TPR} = \frac{TP}{TP + FN}, \qquad \text{FPR} = \frac{FP}{FP + TN} \qquad (10) \]
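The classification metrics in this subsection can be sketched in plain Python. The function names and toy labels are illustrative. The AUC is computed through its rank interpretation, the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, which equals the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels coded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn

def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def roc_auc(y_true, scores):
    """AUC as the share of positive-negative pairs ranked correctly (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Made-up labels and predictions for illustration.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(*confusion_counts(y_true, y_pred))
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```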

The area under the ROC curve is the AUC, where values closer to 1 indicate better discriminatory power. To evaluate the model’s explainability, feature importance is analyzed with Shapley values. The average Shapley value \(\bar{\varphi}_j\) over all test samples is computed for each feature \(j\) as in Eq. 11.

\[ \bar{\varphi}_j = \frac{1}{|D_{\text{test}}|} \sum_{i=1}^{|D_{\text{test}}|} \varphi_j^{(i)} \qquad (11) \]

Here, \(\varphi_j^{(i)}\) is the Shapley value of feature \(j\) for the \(i\)-th test sample. Interpretability is measured by comparing the model’s decisions with domain expert annotations. Finally, statistical tests, i.e., paired t-tests or Wilcoxon signed-rank tests, are performed to compare PersonalCareNet against the baseline models. These tests determine whether the observed performance improvements are statistically significant, where the null hypothesis states that there is no significant difference between the two models. We reject or fail to reject the null hypothesis based on the p-value, which makes the results more robust. This evaluation covers both the predictive and the interpretive power of PersonalCareNet.
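The per-feature averaging of Shapley values over test samples can be sketched as below. The attribution matrix is made up. The sketch also shows the mean of absolute values, which is the usual convention in SHAP summary plots; whether the reported importances use signed or absolute values is not stated, so treating the absolute variant as relevant is an assumption.

```python
def mean_attribution(phi_matrix):
    """Column-wise mean of per-sample Shapley values (rows are test samples)."""
    n = len(phi_matrix)
    return [sum(row[j] for row in phi_matrix) / n for j in range(len(phi_matrix[0]))]

def mean_abs_attribution(phi_matrix):
    """Column-wise mean of absolute Shapley values."""
    n = len(phi_matrix)
    return [sum(abs(row[j]) for row in phi_matrix) / n for j in range(len(phi_matrix[0]))]

# Two made-up test samples, two features.
phi_matrix = [
    [0.30, -0.10],
    [-0.30, -0.20],
]
signed = mean_attribution(phi_matrix)        # first feature cancels to 0
absolute = mean_abs_attribution(phi_matrix)  # first feature has the larger magnitude
```

The example illustrates why the choice matters: a feature that pushes predictions strongly in both directions can average to zero when signs are kept, even though it is influential.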

Key methodological contributions

The novelty of the proposed PersonalCareNet framework is that it is designed as an integrated model for interpretable, personalized health risk prediction. In contrast to previous research that applies prediction and explainability approaches independently, we developed an end-to-end model that integrates them within a single framework designed specifically for structured clinical data. We propose a tailored CNN–Attention hybrid network, PersonalCareNet, for tabular clinical features rather than for image data. This architecture supports hierarchical feature extraction and inference of contextual importance in patient health records.

A significant methodological novelty is the concurrent embedding of attention mechanisms and SHAP explanations, which complement each other to provide global and patient-specific interpretability in a single pipeline. Unlike previous works that use post-hoc explainability alone, our model provides interpretability during training and at test time, which improves clinical applicability. It also includes a simplified data preprocessing and feature selection pipeline in which dimensionality reduction is tuned to reduce the computational burden while keeping the main clinical features.

In addition to predictive accuracy, the risk prediction module of PersonalCareNet provides both the predicted clinical outcomes and human-interpretable reasons, ranking the influencing factors for the condition of each patient. This provides a link between black-box performance and actionable clinical understanding. The whole system is modular, scalable, and validated on MIMIC-III. It is designed to extend to multimodal healthcare data and to be adapted for future real-time deployment in edge healthcare environments. Taken together, these methodological contributions position PersonalCareNet as a clinically meaningful and high-performing framework for interpretable personalized health monitoring.

Experimental results

This section presents the experimental results obtained by evaluating the PersonalCareNet framework on the MIMIC-III80 dataset. It covers the environment setup, model performance on standard evaluation metrics, interpretability analysis with SHAP, ablation experiments to assess architectural components, comparison with existing methods, and parametric and non-parametric statistical validation of the robustness and effectiveness of the proposed method.

Experimental setup

The experiments were performed on a workstation with an Intel Core i7 CPU, 32 GB RAM, and an NVIDIA RTX 3080 GPU. The software environment was Python 3.9, with TensorFlow 2.11 as the deep learning framework; Scikit-learn 1.2, NumPy, and Pandas for data processing; and SHAP for explainability integration. For clarity, we organized the codebase into modular scripts for data preprocessing, model training, model explainability, and model evaluation.

To make the data preprocessing pipeline replicable, we applied z-score normalization to continuous variables, mode imputation to categorical attributes, and dimensionality reduction using Principal Component Analysis (PCA), retaining 95% of the variance, all with fixed random seeds. Stratified sampling was used to preserve outcome distributions when splitting the dataset into training (70%), validation (15%), and test (15%) subsets.
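A stratified 70/15/15 split with a fixed seed can be sketched in plain Python as follows. The function name and the seed value 42 are hypothetical; the actual seeds are not given in the text.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Return index lists (train, val, test) that preserve class proportions."""
    rng = random.Random(seed)            # fixed seed makes the split reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]   # remainder goes to the test set
    return train, val, test

# Made-up balanced labels: 100 negatives followed by 100 positives.
labels = [0] * 100 + [1] * 100
train_idx, val_idx, test_idx = stratified_split(labels)
```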

The PersonalCareNet model was trained with Xavier weight initialization and the Adam optimizer. A batch size of 64 was used with the learning rate set at 0.001, and training ran for up to 100 epochs. To limit overfitting, dropout layers with a rate of 0.3 were used after the dense layers. We used early stopping based on validation loss with a patience of 10 epochs. The final architecture consists of three hidden layers of sizes 128, 64, and 32 with ReLU activation. The output layer for binary classification uses a sigmoid function.

The prototype application was implemented as a Python console interface that enabled loading patient records from CSV files, performing predictions using the trained model, and creating visual explanations based on SHAP. To support full reproducibility, all model weights, preprocessing settings, and random seeds were logged and serialized using the JSON and HDF5 formats.

The class distribution in Fig. 3 reveals a clear imbalance in the base data, since negative cases are highly prevalent. Such an imbalance, if not addressed, can skew the model toward the majority (negative) class, thereby reducing sensitivity on positive cases. To address this imbalance, we used random undersampling and obtained the balanced class distribution presented in Fig. 4. This balancing step is essential for healthcare applications, where learning both classes equally is key because false negatives can have the same or more severe repercussions than false positives.

Exploratory data analysis

In this section, we describe the exploratory data analysis (EDA) we performed on the MIMIC-III dataset to understand the distribution of the data, the relationships between features, and how balanced the classes were. Exploratory visualizations, including correlation heatmaps, feature histograms, and SHAP-based feature importance, are used to reveal underlying patterns. These analyses inform model design, improve feature selection, and help assure data quality.

The EDA was carried out on the preprocessed and down-sampled subset of MIMIC-III. The class distributions illustrated in Figs. 3 and 4 show the data before and after random undersampling, which was applied to address the class imbalance. This step balances the representation of the outcome variable and improves the fairness and generalization of model training and testing.

As shown in Fig. 3, label 0 denotes negative cases and label 1 denotes positive cases in the outcome variable class distribution. The dataset is highly imbalanced, with far fewer positive instances. Such a class imbalance can impair the model’s learning and requires techniques such as class weighting, resampling, or algorithmic modification to achieve appropriate and fair predictions.

Fig. 4 shows the class distribution after random undersampling, which reduces the majority class to match the count of the minority class. This yields a balanced distribution of negative and positive outcomes in the data, which is essential for training unbiased machine learning models. A more balanced dataset helps improve model generalization and prevents performance loss on underrepresented classes.
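Random undersampling of the majority class can be sketched as follows. The function name, the seed, and the 90/10 toy labels are illustrative assumptions and do not reflect the actual class counts in the dataset.

```python
import random
from collections import Counter

def random_undersample(labels, seed=42):
    """Return sorted indices with every class reduced to the minority-class count."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = min(counts.values())
    kept = []
    for cls in counts:
        idx = [i for i, y in enumerate(labels) if y == cls]
        kept += rng.sample(idx, target)   # draws without replacement
    return sorted(kept)

# Made-up imbalanced labels: 90 negatives, 10 positives.
labels = [0] * 90 + [1] * 10
kept = random_undersample(labels)
balanced = Counter(labels[i] for i in kept)
```

Undersampling discards majority-class records, so it trades data volume for balance; class weighting is a common alternative when the minority class is very small.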

The distributions of three critical clinical features, age, heart rate, and glucose, are shown in Fig. 5. These distributions are constructed from the balanced dataset after preprocessing and sampling, and so reflect the data used for model training. The plots show that the variables are roughly, but not exactly, normally distributed, which helps in spotting skew, outliers, and the need for normalization. Age presents a moderately skewed distribution that mirrors the age distribution of ICU patients, who are older than patients elsewhere. Heart rate and glucose levels show ranges and variability similar to those in ICU clinical statistics. These trends highlight the variability of patient physiology, which emphasizes the need for accurate predictive models that apply across a wide range of clinical presentations. Understanding these patterns also supports practical feature engineering and model improvement.

Correlations among key clinical variables (age, heart rate, systolic blood pressure, and glucose) are presented as a heatmap in Fig. 6. The low correlation values indicate that these features are mostly uncorrelated, which reduces the risk of multicollinearity. This is beneficial for model training, as each feature can contribute unique information to the model.

Performance evaluation

In this subsection, the performance of the proposed PersonalCareNet model is compared with several state-of-the-art baselines on the MIMIC-III dataset. Effectiveness is measured by accuracy, precision, recall, F1-score, and AUC. Through comparative tables and plots, we show that the proposed model outperforms the baselines and provides more faithful prediction explanations, making it suitable for integration into clinical decision-support systems.

Figure 7 shows the training and validation accuracy of the proposed PersonalCareNet model over 30 epochs. The curves show a learning trajectory characterized by fast early improvement and smooth convergence. The final training and validation accuracies are around 99% and 97.5%, respectively, indicating good generalization. Regularization and early stopping helped achieve stable and monotonic gains in accuracy.

Figure 8 presents the loss evolution of the proposed PersonalCareNet model over 30 epochs. Both the training and validation losses decrease rapidly in the first few epochs, showing efficient learning, and then slowly reach a plateau, demonstrating convergence. The final training and validation losses are about 0.05 and 0.15, respectively. This is consistent with effective training and regularization and with successful generalization to the unseen validation data.

Figure 9 shows the confusion matrices (true positives, false positives, true negatives, and false negatives) for five models. PersonalCareNet yields a higher number of true positives and true negatives, and a significantly lower number of false predictions, than all baseline models. This suggests considerable robustness and accuracy, making it a good candidate for personalized health monitoring applications that track clinical outcomes.

The comparative analysis of PersonalCareNet against conventional and state-of-the-art benchmark models is presented in Table 2. PersonalCareNet achieves the highest accuracy (97.86%) and AUC (98.3%), surpassing models such as TabNet, AutoGluon Tabular, and NODE. These findings support the superior predictive capacity of the framework for clinical risk assessment and indicate its effectiveness over conventional and recent AI models.

Figure 10 presents comparative results for PersonalCareNet and nine baselines on five core evaluation metrics. In Fig. 10a (Accuracy), PersonalCareNet (97.86%) exceeds all other methods, with AutoGluon (93.0%) and TabNet (92.5%) the strongest baselines. In Fig. 10b (Precision), PersonalCareNet achieves 96.2% and has fewer false positives than AutoGluon (91.1%) and XGBoost (87.2%). Figure 10c (Recall) reinforces this point, as PersonalCareNet’s recall (95.4%) is higher than that of the best baseline, AutoGluon (89.7%), which indicates a capacity to reduce false negatives in essential health predictions.

Furthermore, for F1-score (Fig. 10d), which balances precision and recall, PersonalCareNet reaches 95.8%, surpassing the other baselines. Figure 10e (AUC) also shows strong discriminative performance, with PersonalCareNet at 98.3% outperforming TabNet (94.1%) and AutoGluon (94.7%). Across all metrics, the advanced models outperform the classical models, and PersonalCareNet performs best, confirming the balanced predictive power and clinical applicability of the model.
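As a quick consistency check on the reported figures, the F1-score can be recomputed from the reported precision (96.2%) and recall (95.4%) using the harmonic-mean definition. The arithmetic below is a worked illustration added for the reader, not a result from the experiments.

```latex
\[
F_1 = \frac{2 \cdot 0.962 \cdot 0.954}{0.962 + 0.954}
    = \frac{1.835496}{1.916}
    \approx 0.958
\]
```

The recomputed value of about 95.8% agrees with the F1-score reported for PersonalCareNet.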

Explainability and interpretation analysis

In this section, we discuss the interpretability of PersonalCareNet based on SHAP and attention weights. Explainability is of paramount importance in healthcare for trust and clinical insight. We provide global feature importance and patient-specific explanations to provide insights into the model’s decision-making process, to make it transparent, and to facilitate its use in clinical risk assessment.

Figure 11 presents SHAP value explanations for PersonalCareNet. Subfigure (a), depicting global feature importance, shows that age and glucose are the most important features informing model predictions. Subfigure (b) gives a single-patient explanation, in which the contribution of each feature shifts the model output either toward or away from a positive prediction. Subfigure (c) plots SHAP contributions as a function of glucose values and illustrates the nonlinear effect of this feature. Lastly, subfigure (d) compares SHAP values for correct and incorrect predictions, demonstrating that features behave differently when samples are misclassified. Overall, these visualizations add transparency to the model, allowing clinicians to interpret, validate, and gain confidence in the clinical risk predicted by the system.

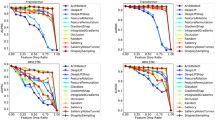

Ablation study

In this section, we perform a systematic ablation study to examine the impact of key components of the PersonalCareNet architecture. The study evaluates how the performance metrics change when modules, including the attention mechanism and SHAP-based interpretability, are progressively removed or changed. The results show that every included component contributes to model accuracy, interpretability, and robustness, which justifies its place in the final design.

Table 3 presents the ablation study results, demonstrating the effect of removing different key components of the PersonalCareNet approach. For all metrics, removing feature selection, dropout, or the attention layer resulted in clearly observable drops in performance. The complete model reached the highest accuracy and AUC, further confirming the relevance of each component for prediction quality and model robustness.

Fig. 12 shows the results of the ablation study, in which the performance of five model variants is compared visually on key evaluation metrics: (a) accuracy, (b) precision, (c) recall, (d) F1-score, and (e) AUC. These plots illustrate how different parts of the PersonalCareNet network contribute to its performance. In subfigure (a), the full model achieves the best result with 97.86% accuracy, whereas removing the attention layer or dropout regularization drops accuracy sharply to 93.25% or 94.76%, respectively. Precision follows the same trend in subfigure (b), where the full model achieves 96.2% and outperforms the base DNN, which lags at 88.0%.

In subfigures (c) and (d), we observe the same pattern of degradation in recall and F1-score when key components are removed. Removing the feature selection step (PCA) drops recall slightly to 91.5%, whereas the full model retains a recall of 95.4%, which matters in healthcare settings where false negatives are critical. The precision–recall balance (F1-score) drops from 95.8% (complete model) to 88.8% (attention-ablated version). For AUC, shown in subfigure (e), the full model reaches 98.3%, validating its high capacity to distinguish between classes. The base model, on the other hand, falls to 92.6%, suggesting poorer discrimination ability. In summary, the ablation study shows that each of these architectural components (PCA for dimensionality reduction, dropout for regularization, and the attention mechanism for emphasizing the most informative features) contributes to a more robust model with superior predictive performance in personalized health risk monitoring.

Comparison with existing methods

This section compares the proposed PersonalCareNet model against recent state-of-the-art explainable healthcare AI methods, using the same evaluation metric for all methods. The comparison indicates that PersonalCareNet combines high accuracy with interpretability and clinical relevance. Visual and tabular comparisons show that the model outperforms most current methods and support its suitability for reliable health monitoring.

Table 4 summarizes the comparison between PersonalCareNet and five recent approaches to healthcare AI. The table lists the dataset, model architecture, explainability technique, and accuracy of each approach. Overall, PersonalCareNet achieves strong predictive performance on the MIMIC-III dataset and provides interpretability through SHAP and attention mechanisms, supporting trustworthy health monitoring.

Figure 13 compares the accuracy of the proposed PersonalCareNet model with five recent state-of-the-art explainable healthcare AI models. PersonalCareNet, evaluated on the MIMIC-III dataset, outperforms most competing models; only one model, which applies Random Forest to EEG data, reports accuracy 0.16% higher than the 97.86% achieved by PersonalCareNet. The selected baselines draw on various data sources, such as IoT, fog-based systems, and electronic health records (EHRs). Despite these differences, PersonalCareNet achieves a good trade-off between predictive performance and model interpretability. Its high accuracy, combined with integrated SHAP and attention-based explanations, meets the reliability and transparency requirements of clinical decision support systems.

Discussion

With the increasing role of artificial intelligence in healthcare, thousands of predictive models can be developed and automatically integrated into clinical decision support [13]. Unfortunately, a gap remains between model performance and interpretability, particularly in applications such as personalized health monitoring. Current models and approaches often sacrifice transparency for accuracy, which means clinicians can neither trust nor verify the inferences made by the AI. Many recent state-of-the-art XAI frameworks are also limited by narrow data inputs, limited generalizability, a lack of real-time interpretability, or poor integration with patient-specific contexts.

In response to these gaps, this study presents PersonalCareNet, a deep learning framework that combines convolutional layers and attention mechanisms with SHAP (Shapley Additive exPlanations)-based interpretability tools. Whereas previous models rely entirely on rule-based explainability or restrict XAI to post-hoc global interpretation, PersonalCareNet builds interpretability into its architecture to provide both model transparency and high accuracy. The system is trained and evaluated on the widely used MIMIC-III dataset, which is large and covers a variety of clinical variables, supporting scalable predictive health analytics. We introduce a novel methodology that combines attention-enhanced deep learning with multi-level SHAP visualizations, both globally and at the level of individual predictions. This allows clinicians to view the model’s outputs and understand the processes behind them, narrowing the gap between AI systems and healthcare providers and building trust.
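To make the distinction between patient-level and global SHAP explanations concrete, the sketch below computes exact Shapley values by brute force for a toy risk function. It is an illustration under stated assumptions, not the PersonalCareNet model or the SHAP library: `toy_risk`, its coefficients, the feature names, the baseline, and the patient values are all hypothetical, and practical SHAP tooling relies on approximations rather than this exhaustive enumeration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x relative to a baseline input.

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2**n in the number of features, so this is only
    practical for very small n.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_risk(features):
    """Hypothetical risk score with one interaction term (illustration only)."""
    heart_rate, lactate, age = features
    return (0.02 * heart_rate + 0.3 * lactate + 0.01 * age
            + 0.05 * lactate * (age > 65))


baseline = [70.0, 1.0, 50.0]  # hypothetical reference patient
patients = [[110.0, 4.0, 72.0], [80.0, 1.5, 40.0]]  # hypothetical patients

# Local (patient-specific) explanations: one attribution per feature.
local = [shapley_values(toy_risk, p, baseline) for p in patients]

# Global importance: mean absolute attribution of each feature.
global_importance = [sum(abs(phi[k]) for phi in local) / len(local)
                     for k in range(3)]
print(local, global_importance)
```

For each patient, the attributions sum to the difference between that patient's score and the baseline score, which is the additivity property that force plots visualize. The exponential cost of exact enumeration also illustrates why explanation overhead matters for real-time use.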

We show experimentally that PersonalCareNet outperforms most previous models with an accuracy of 97.86% and provides more interpretable results through SHAP force plots, dependence graphs, and confusion matrix-based diagnostics. Such insights can be used to pinpoint clinically relevant features that contribute to predictions and to analyze both true positives and misclassifications in depth. This research contributes to robust, interpretable, and deployable prediction-based healthcare systems. The limitations of the present study and potential future work are outlined in Sect. 5.1.

Limitations of the study

Although the PersonalCareNet framework achieves good performance and interpretable behavior, it has limitations. First, the evaluation is limited to the MIMIC-III dataset, whose characteristics may not reflect the variability of other populations or healthcare systems. Second, the model architecture is designed explicitly for tabular EHR data and would likely require considerable reworking for other input modalities such as imaging or text. Third, while SHAP-based explanations improve transparency, their computational overhead is a significant bottleneck for real-time deployment in settings with limited computational resources. In future work, we will address these problems through cross-dataset validation, multimodal extension, optimization at the edge, and a clinical integration scenario.

Conclusion and future work

This study presented PersonalCareNet, a personalized health monitoring framework based on explainable deep learning for predictive healthcare. On the MIMIC-III dataset, the model achieves a classification accuracy of 97.86% and tackles the well-known issue of transparency through SHAP-based global and local explanations. Combining a CNN with attention mechanisms and interpretable outputs improves trust and thus aids clinical decision making. The experimental results show that PersonalCareNet outperforms most of the compared SOTA XAI-based models while providing valuable information for researchers and clinicians. These results highlight the increasing demand for accurate and explainable AI models, particularly in critical areas such as healthcare. Our framework addresses two significant challenges in previous work: interpretability and scalability. Although combining a CNN, attention mechanisms, and SHAP adds architectural complexity, it is critical for balancing predictive accuracy and interpretability, and the modular architecture makes each part easy to maintain.
Some restrictions remain: the results are reported on only one dataset, and computing SHAP in real time is expensive. Because PersonalCareNet is modular, it can be extended to other structured clinical datasets (such as eICU and PhysioNet). In future work, we will extend the model to multimodal data (e.g., medical images and clinical notes), validate its performance and robustness on the eICU and PhysioNet databases and on multi-center datasets, and optimize the framework for real-world deployment on edge devices through compression and modular refinement. We will also emphasize real-time interpretability, generalizability across healthcare settings, and extension to continuous monitoring capabilities. Such progress will make PersonalCareNet a solid, practical tool for next-generation care.

Data availability

The data are available from the corresponding author upon request.

Materials availability

The materials used in this research are available from the corresponding author upon request.

References

Niu, S. et al. Enhancing healthcare decision support through explainable AI models for risk prediction. Decis. Support Syst. 181, 1–12. https://doi.org/10.1016/j.dss.2024.114228 (2024).

Arthi, R. & Krishnaveni, S. Optimized tiny machine learning and explainable AI for trustable and energy-efficient fog-enabled healthcare decision support system. Int. J. Comput. Intell. Syst. 17(1), 229 (2024).

Paneru, B., Paneru, B., Sapkota, S. C. & Poudyal, R. Enhancing healthcare with AI: sustainable AI and IoT-powered ecosystem for patient aid and interpretability analysis using SHAP. Meas. Sens. 36, 1–13. https://doi.org/10.1016/j.measen.2024.101305 (2024).

Sadeghi, Z. et al. A review of explainable artificial intelligence in healthcare. Comput. Electr. Eng. 118, 1–20 (2024).

Khana, F. A., Umar, Z., Jolfaei, A. & Tariq, M. Explainable AI for epileptic seizure detection in Internet of medical things. Elsevier 1–10. https://doi.org/10.1016/j.dcan.2024.08.013 (2024).

Moreno-Sánchez, P. A. Data-driven early diagnosis of chronic kidney disease: development and evaluation of an explainable AI model. IEEE Access 11, 38359–38369. https://doi.org/10.1109/ACCESS.2023.3264270 (2023).

Martino, F. D., Delmastro, F. & Dolciotti, C. Explainable AI for malnutrition risk prediction from m-health and clinical data. Smart. Health. 30, 1–24. https://doi.org/10.1016/j.smhl.2023.100429 (2023).

Annuzzi, G. et al. Exploring nutritional influence on blood glucose forecasting for Type 1 diabetes using explainable AI. IEEE J. Biomed. Health Inform. 28(5), 3123–3133. https://doi.org/10.1109/JBHI.2023.3348334 (2024).

Vo, T. H., Nguyen, N. T. K., Kha, Q. H. & Le, N. Q. K. On the road to explainable AI in drug-drug interactions prediction: A systematic review. Comput. Struct. Biotechnol. J. 20, 2112–2123. https://doi.org/10.1016/j.csbj.2022.04.021 (2022).

Loh, H. W., Ooi, C. P., Seoni, S., Barua, P. D., Molinari, F. & Acharya, U. R. Application of explainable artificial intelligence for healthcare: A systematic review of the last decade. Comput. Methods Programs Biomed. 226, 1–41 (2022).

Yang, G., Ye, Q. & Xia, J. Unbox the black-box for the medical explainable AI via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond. Information. Fusion. 77, 29–52. https://doi.org/10.1016/j.inffus.2021.07.016 (2022).

Jagatheesaperumal, S. K., Pham, Q.-V., Ruby, R., Yang, Z., Xu, C. & Zhang, Z. Explainable AI over the Internet of Things (IoT): Overview, state-of-the-art and future directions. IEEE Open J. Commun. Soc. 3, 2106–2136. https://doi.org/10.1109/OJCOMS.2022.3215676 (2022).

She, W. J. et al. Investigation of a Web-based explainable AI screening for prolonged grief disorder. IEEE Access 10, 41164–41185. https://doi.org/10.1109/ACCESS.2022.3163311 (2022).

Gerlings, J., Jensen, M. S. & Shollo, A. Explainable AI, but explainable to whom? An exploratory case study of xAI in healthcare. Springer 1–31 (2022).

Rasheed, K. et al. Explainable, trustworthy, and ethical machine learning for healthcare: A survey. Comput. Biol. Med. 149, 1–23. https://doi.org/10.1016/j.compbiomed.2022.106043 (2022).

Zheng, Y. et al. Rapid triage for ischemic stroke: a machine learning-driven approach in the context of predictive, preventive and person. EPMA. J. 13(2), 285–298 (2022).

Khodabandehloo, E., Riboni, D. & Alimohammadi, A. HealthXAI: collaborative and explainable AI for supporting early diagnosis of cognitive decline. Future. Generation. Comput. Syst. 116, 168–189. https://doi.org/10.1016/j.future.2020.10.030 (2021).

Zhang, Z., Genc, Y., Wang, D., Ahsen, M. E. & Fan, X. Effect of AI Explanations on Human Perceptions of Patient-Facing AI-Powered Healthcare Systems. J. Med. Syst. 45(6), 64. https://doi.org/10.1007/s10916-021-01743-6 (2021).

Jacobs, M., He, J., F. Pradier, (2021). Designing AI for trust and collaboration in time-constrained medical decisions:a sociotechnical lens. In: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. doi:10.1145/3411764.3445385.

Peng, J. et al. An explainable artificial intelligence framework for the deterioration risk prediction of hepatitis patients. J. Med. Syst. 45 (5). https://doi.org/10.1007/s10916-021-01736-5 (2021).

Christopher, C. Explainable artificial intelligence for predictive modeling in healthcare. J. Healthc. Inform. Res. 6(2), 228–239 (2022).

Combi, C. et al. A manifesto on explainability for artificial intelligence in medicine. Artif. Intell. Med. 133, 1–11 (2022).

Moreno-Sanchez, P. A. Development of an explainable prediction model of heart failure survival by using ensemble trees. 2020 IEEE Int. Conf. Big Data (Big Data). https://doi.org/10.1109/bigdata50022.2020.9378460 (2020).

Layeghian Javan, S. & Sepehri, M. M. A predictive framework in healthcare: case study on cardiac arrest prediction. Artif. Intell. Med. 117, 102099. https://doi.org/10.1016/j.artmed.2021.102099 (2021).

Thambawita, V., Hicks, S. A., Isaksen, J., Stensen, M. H., Haugen, T. B., Kanters,J., … Riegler, M. A. (2021). DeepSynthBody: the beginning of the end for data deficiency in medicine. 2021 International Conference on Applied Artificial Intelligence (ICAPAI) 10.1109/icapai49758.2021.9462062.

Khishigsuren et al. Explainable artificial intelligence based framework for non-communicable diseases prediction. IEEE Access 9, 123672–123688. https://doi.org/10.1109/ACCESS.2021.3110336 (2021).

Castiglioni, I., Rundo, L., Codari, M., Di Leo, G., Salvatore, C., Interlenghi, M.,… Sardanelli, F. (2021). AI applications to medical images: From machine learning to deep learning. Physica Medica, 83, 9–24. doi:10.1016/j.ejmp.2021.02.006.

Dutta, J., Puthal, D. & Yeun, C. Y. Next generation healthcare with explainable AI: IoMT-edge-cloud based advanced eHealth. IEEE 1–6. https://doi.org/10.1109/GLOBECOM54140.2023.10436967 (2023).

Martino, F. D. & Delmastro, F. Explainable AI for clinical and remote health applications: a survey on tabular and time series data. Artif. Intell. Rev. 56, 5261–5315. https://doi.org/10.1007/s10462-022-10304-3 (2023).

Baldauf, M., Fröehlich, P. & Endl, R. Trust Me, I’m a Doctor – User perceptions of AI-driven apps for mobile health diagnosis. 19th International Conference on Mobile and Ubiquitous Multimedia. (2020). https://doi.org/10.1145/3428361.3428362

Saraswat, D. et al. Explainable AI for healthcare 5.0: opportunities and challenges. IEEE Access 10, 84486–84517. https://doi.org/10.1109/ACCESS.2022.3197671 (2022).