Abstract

Segmenting bone and cartilage subregions in knee MRI is difficult because there are many subregions and their boundaries are unclear. To address this, we propose a fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry. First, a transformer-based multilevel region and edge aggregation network segments the bone and cartilage tissues in knee MRI with precise edges. Then, a fibula detection module determines the medial and lateral sides of the knee by detecting the position of the fibula. Next, a subregion segmentation module based on boundary information divides the bone and cartilage tissues into subregions by detecting the subregion boundaries. In addition, to support the proposed model, we established a fibula classification dataset and a knee MRI bone and cartilage subregion dataset. On the fibula classification dataset, the proposed method detected the medial and lateral sides of the knee with an accuracy of 1.000. On the knee MRI bone and cartilage subregion dataset, it attained average Dice scores of 0.953 for bone subregions and 0.831 for cartilage subregions, which demonstrates the effectiveness of the proposed method.


Introduction

Knee magnetic resonance imaging (MRI) segmentation is the basis of MRI-based semi-quantitative scoring of knee osteoarthritis (KOA). In traditional knee MRI bone and cartilage segmentation, doctors segment the MRI manually, slice by slice, based on professional knowledge and accumulated experience1,2. This adds to doctors' workload and consumes considerable time and effort. In addition, fatigue or distraction can lead to incorrect segmentation, which may increase the misdiagnosis rate. Therefore, to free doctors from laborious manual segmentation, improve diagnostic efficiency, and reduce the misdiagnosis rate, researchers have developed knee MRI bone and cartilage segmentation algorithms3,4,5,6. These algorithms fall mainly into traditional segmentation algorithms7,8,9,10 and deep learning-based segmentation algorithms3,4,5,6,7,8,9,10,11,12.

Traditional segmentation algorithms rely on manually designed features (texture, color, shape, etc.) and can be divided mainly into threshold methods7, proximal splitting methods8, region growing methods9, graph-based methods10, etc. These algorithms have achieved some success in bone and cartilage segmentation of knee MRI, but they still suffer from complex design, poor generalization, and low accuracy.

In recent years, deep learning-based algorithms have gradually replaced traditional medical image segmentation algorithms owing to their good generality, high segmentation accuracy, and high efficiency13,14,15. Researchers have accordingly begun to study deep learning-based knee MRI bone and cartilage segmentation16,17. By network architecture, these methods can be divided mainly into UNet18, Mask R-CNN19, Generative Adversarial Network (GAN)20, SegNet21, etc. UNet18 is the most widely used architecture in medical image segmentation and has many improved versions22,23. Ambellan et al.24 combined 3D statistical shape models, 2D UNet, and 3D UNet to achieve precise segmentation of bone and cartilage in normal and diseased knee MRI. Norman et al.25 developed a UNet-based deep learning model that automatically segments cartilage and meniscus, with accuracy comparable to manual segmentation by radiologists. Xiao et al.26 proposed an architecture consisting of multiple CNNs, each responsible for segmenting tissues with relatively similar volumes but different intensity distributions, and used a weighted loss function to mitigate class imbalance. Although many researchers have studied deep learning-based knee MRI bone and cartilage segmentation, subregion segmentation remains extremely challenging because knee MRI contains many bone and cartilage subregions with unclear boundaries27.

To address the difficulty of knee MRI bone and cartilage subregion segmentation caused by the numerous subregions and their unclear boundaries, we propose a fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry. First, a transformer-based multilevel region and edge aggregation network segments bone and cartilage tissues in knee MRI with precise edges. Then, a fibula detection module determines the medial and lateral sides of the knee from the position of the fibula. Next, a subregion segmentation module based on boundary information divides the bone and cartilage tissues into subregions by detecting the subregion boundaries. To support the proposed model, we also established a fibula classification dataset and a knee MRI bone and cartilage subregion dataset. On the fibula classification dataset, the proposed method detected the medial and lateral sides of the knee with an accuracy of 1.000; on the knee MRI bone and cartilage subregion dataset, it attained average Dice scores of 0.953 for bone subregions and 0.831 for cartilage subregions.

Contributions:

(1) A fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry is proposed, which achieves fully automatic segmentation of bone and cartilage subregions in knee MRI.

(2) A fibula detection module is proposed that determines the medial and lateral sides of the knee by detecting the position of the fibula.

(3) A fibula classification dataset and a knee MRI bone and cartilage subregion dataset are established.

Methods

The proposed fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry (KSNet) is shown in Fig. 1 and Algorithm 1. The input of KSNet is a knee MRI, and the output is the bone and cartilage subregion segmentation mask. KSNet consists of the following three parts:

(1) Tissue segmentation of knee bone and cartilage. As shown in Fig. 1, the proposed method first segments the bone and cartilage tissues and then divides them into subregions by detecting boundaries. The quality of the tissue boundaries therefore largely determines the quality of the subregion segmentation. To improve boundary quality, we use the transformer-based multilevel region and edge aggregation network (TBAMNet)28 to segment bone and cartilage tissues, and we adopt a coarse-to-fine segmentation strategy to obtain finer results.

(2) Fibula detection module. In clinical practice, doctors use the fibula to distinguish the medial and lateral sides of the knee: the side with the fibula is the lateral side, and the side without it is the medial side. To detect the medial and lateral sides automatically, we established a fibula classification dataset and propose a fibula detection module (FDM) that determines the medial and lateral sides of the knee from the position of the fibula.

(3) Subregion segmentation module based on boundary information. Building on the bone and cartilage tissue segmentation and the medial and lateral detection, we propose a subregion segmentation module based on boundary information (SSM), which divides the bone and cartilage tissues into subregions by detecting the subregion boundaries.

Fibula detection module

We collected 100 knee MRI cases from the public Osteoarthritis Initiative database, manually annotated each slice for the presence of the fibula, and constructed a fibula classification dataset. On this dataset, we propose a fibula detection module (FDM) to detect the medial and lateral sides of the knee. As shown in Fig. 2, the main steps of the FDM are as follows:

(1) Category prediction and statistics. All 2D slices of each case are fed, in order of slice number, into the 2D classification network, which predicts whether the fibula is present in each slice. The predictions are then summed separately over the low-numbered slices, giving \(N_{low}\), and over the high-numbered slices, giving \(N_{high}\):

\[ N_{low} = \sum_{j=1}^{\langle N_{s}/2 \rangle} \mathrm{Clc}(I_{j}), \qquad N_{high} = \sum_{j=\langle N_{s}/2 \rangle + 1}^{N_{s}} \mathrm{Clc}(I_{j}) \]

Here, \(N_{s}\) is the number of sagittal slices of the case, \(I_{j}\) is the jth slice (\(j = 1, 2, 3, \ldots, N_{s}\)), Clc denotes the classification network, and \(\langle \cdot \rangle\) is the rounding operation.

(2) Medial and lateral detection. The medial and lateral direction D is determined from the low-numbered slice count \(N_{low}\) and the high-numbered slice count \(N_{high}\):

Here, D = 1 denotes the forward direction (from slice 1 to slice \(N_{s}\)) and D = 0 the reverse direction.
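The FDM decision can be sketched in a few lines of Python. This is a minimal sketch, not the authors' code: it assumes the per-slice predictions are already available as 0/1 values ordered by slice number, that the low/high split lies at \(N_{s}/2\) rounded half up, and that D = 1 when more fibula-positive slices fall in the low-numbered half. The function name `detect_direction` is hypothetical, and the paper's exact comparison rule may differ.

```python
def detect_direction(slice_predictions):
    """Hypothetical FDM decision rule (sketch, not the authors' implementation).

    slice_predictions: 0/1 fibula predictions Clc(I_j), ordered j = 1..N_s.
    Returns (N_low, N_high, D).
    """
    preds = [int(p) for p in slice_predictions]
    n_s = len(preds)
    half = int(n_s / 2 + 0.5)   # assumed split point: N_s / 2 rounded half up
    n_low = sum(preds[:half])   # fibula-positive slices among j = 1..half
    n_high = sum(preds[half:])  # fibula-positive slices among j = half+1..N_s
    # Assumed convention: the fibula marks the lateral side, and D = 1 when
    # it appears mainly in the low-numbered half; otherwise D = 0.
    d = 1 if n_low >= n_high else 0
    return n_low, n_high, d


# Example: fibula predicted on slices 11..30 of a 160-slice case.
preds = [0] * 160
for j in range(10, 30):
    preds[j] = 1
print(detect_direction(preds))        # (20, 0, 1)
print(detect_direction(preds[::-1]))  # (0, 20, 0)
```

Because the decision aggregates many slices, a handful of slice-level misclassifications does not flip the case-level result.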

In this paper, ResNet18 is used as the 2D classification network. Its input is a 2D MRI slice, and training is supervised by slice-level classification labels using the focal loss:

\[ L_{focal} = -\alpha\, G\, (1 - P)^{\gamma} \log P - (1 - \alpha)(1 - G)\, P^{\gamma} \log (1 - P) \]

Here, \(\alpha\) weights positive against negative samples, G and P are the classification label and the predicted probability, respectively, and \(\gamma\) weights hard against easy samples.
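For reference, here is a NumPy sketch of the standard binary focal loss written with the symbols defined above: G is the 0/1 label, P the predicted fibula probability, \(\alpha\) the positive/negative weight and \(\gamma\) the easy/hard weighting exponent. The paper does not report its \(\alpha\) and \(\gamma\) values; the defaults below (\(\alpha = 0.25\), \(\gamma = 2\)) are common choices and an assumption here. The authors train in PyTorch, so this is only an illustration of the formula, not their training code.

```python
import numpy as np


def binary_focal_loss(g, p, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss.

    g: 0/1 labels (G); p: predicted probabilities of the positive class (P).
    alpha weights positives vs. negatives; gamma down-weights easy samples.
    """
    g = np.asarray(g, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    pos = -alpha * g * (1.0 - p) ** gamma * np.log(p)
    neg = -(1.0 - alpha) * (1.0 - g) * p ** gamma * np.log(1.0 - p)
    return float(np.mean(pos + neg))


# With gamma = 0 and alpha = 0.5 the loss reduces to half the binary cross-entropy.
print(binary_focal_loss([1, 0], [0.8, 0.3], alpha=0.5, gamma=0.0))  # approx. 0.145
```

Setting \(\gamma > 0\) shrinks the contribution of confidently correct slices, so training focuses on the ambiguous slices near where the fibula appears or disappears.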

Subregion segmentation module based on boundary information

After the bone and cartilage tissues have been segmented and the medial and lateral sides of the knee detected, we exploit the boundary relationships between the bone and cartilage subregions defined by the MRI Osteoarthritis Knee Score (MOAKS)27 and propose a subregion segmentation module based on boundary information (SSM) to segment the bone and cartilage subregions. As shown in Fig. 3, the SSM consists of three parts: patella and cartilage subregion segmentation, tibia and cartilage subregion segmentation, and femur and cartilage subregion segmentation. Its inputs are the bone and cartilage tissue segmentation results and the medial and lateral detection result, and its output is the bone and cartilage subregion segmentation.

Patella and cartilage subregion segmentation

As shown in Fig. 4, the main steps of patella and cartilage subregion segmentation are as follows:

(1) In the axial view, the patellar cartilage segmentation results \(P_{aPC, i}\) of all slices of the case (\(i = 1, 2, 3, \ldots, N_{a}\), where \(N_{a}\) is the number of axial slices) are projected onto a binary image \(I_{aPC}\) of the same size as a slice:

\[ I_{aPC}(x, y) = \max_{1 \le i \le N_{a}} P_{aPC, i}(x, y) \]

Here, (x, y) denotes the pixel position in the image.

(2) Compute the coordinates (\(x_{aPC, low}\), \(y_{aPC, low}\)) of the lowest point of the patellar cartilage in the binary image \(I_{aPC}\). First, compute the lower-right corner (\(x_{aPC, max}\), \(y_{aPC, max}\)) of the minimum bounding rectangle of the patellar cartilage in \(I_{aPC}\). Then, \(x_{aPC, low}\) is the mean x coordinate of the points where the horizontal line \(y = y_{aPC, max}\) intersects the patellar cartilage, and \(y_{aPC, low} = y_{aPC, max}\).

(3) Based on the medial and lateral detection result, the lowest point of the patellar cartilage is shifted 3 mm towards the lateral side; the shifted position is the boundary \(B_{P}\) between the medial and lateral patella,

In the formula, \(r_{x}\) is the spatial resolution of the MRI in the x direction, \(\langle \cdot \rangle\) is the rounding operation, and D is the medial and lateral detection result.

(4) Using the boundary between the medial and lateral patella and the medial and lateral detection result, the patella and patellar cartilage segmentation results are divided into four subregions: patella medial, patella lateral, patellar cartilage medial, and patellar cartilage lateral.
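Steps (1) to (4) can be sketched with NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' code: masks are boolean arrays of shape (slices, rows, columns) with y (rows) increasing downwards, `r_x` is the pixel spacing in mm, the projection is the pixel-wise union over slices, and the sign convention linking D to a +x or -x shift is a hypothetical choice, since the exact formula for \(B_{P}\) is not given in this text. Which of the two returned halves is medial again follows from D. Both function names are hypothetical.

```python
import numpy as np


def patella_boundary(axial_cartilage, r_x, d, offset_mm=3.0):
    """Column index of the medial/lateral patella boundary B_P (sketch).

    axial_cartilage: (N_a, H, W) binary patellar-cartilage masks.
    r_x: pixel spacing along x in mm; d: medial/lateral flag from the FDM.
    """
    projection = np.any(axial_cartilage, axis=0)       # I_aPC: union of all slices
    rows, cols = np.nonzero(projection)
    y_low = rows.max()                                  # bottom edge of bounding box
    x_low = int(round(cols[rows == y_low].mean()))      # mean x on the line y = y_low
    shift = int(round(offset_mm / r_x))                 # 3 mm expressed in pixels
    # Hypothetical convention: d == 1 shifts towards +x, d == 0 towards -x.
    return x_low + shift if d == 1 else x_low - shift


def split_at_column(mask, boundary_x):
    """Split a (..., W) mask into the parts left and right of boundary_x."""
    mask = np.asarray(mask, dtype=bool)
    cols = np.arange(mask.shape[-1])
    return mask & (cols < boundary_x), mask & (cols >= boundary_x)


vol = np.zeros((3, 10, 20), dtype=bool)
vol[0, 5, 4:9] = True    # lowest cartilage row (y = 5), x = 4..8
vol[1, 3, 2:12] = True
b_p = patella_boundary(vol, r_x=0.5, d=1)   # 6 + round(3 / 0.5) = 12
left, right = split_at_column(vol, b_p)
```

Applying the same column split to the patella mask and to the patellar cartilage mask yields the four patellar subregions.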

Tibia and cartilage subregion segmentation

Given the tibia and tibial cartilage segmentation results and the medial and lateral detection result, the key is to determine the inferior margin of the tibia, the medial and lateral boundaries of the tibia, and the anterior and posterior boundaries of the tibial plateau. The resulting tibia and cartilage subregion segmentation is shown in Fig. 5, and its main steps are as follows:

(1) Tibial inferior margin cutting. The inferior margin of the tibia lies 2 cm below the articular surface, so locating the inferior margin reduces to finding the articular surface. As shown in Fig. 6, first, in the sagittal view, the tibial cartilage segmentation results \(P_{sTC, i}\) (\(i = 1, 2, 3, \ldots, N_{S}\), where \(N_{S}\) is the number of sagittal slices) of all slices of the case are projected onto a binary image \(I_{sTC}\) of the same size as a slice:

\[ I_{sTC}(x, y) = \max_{1 \le i \le N_{S}} P_{sTC, i}(x, y) \]

Then, compute the upper-left corner (\(x_{sTC, min}\), \(y_{sTC, min}\)) and the lower-right corner (\(x_{sTC, max}\), \(y_{sTC, max}\)) of the minimum bounding rectangle of the tibial cartilage in \(I_{sTC}\). The horizontal plane at the midpoint \(y_{sTC, mean}\) in the y direction is taken as the articular surface:

\[ y_{sTC, mean} = \frac{y_{sTC, min} + y_{sTC, max}}{2} \]

The articular surface is shifted 20 mm downwards to obtain the inferior margin of the tibia \(T_{M}\):

\[ T_{M} = y_{sTC, mean} + \frac{20}{r_{y}} \]

In the formula, \(r_{y}\) is the spatial resolution of the MRI image in the y direction, in mm per pixel.

The tibia segmentation results \(P_{sTB, i}\) (\(i = 1, 2, 3, \ldots, N_{S}\), where \(N_{S}\) is the number of sagittal slices of the case) are then cut at the inferior margin \(T_{M}\), which gives the cut tibial tissue \(C_{sTB, i}\).
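A NumPy sketch of the inferior-margin cut, assuming boolean masks of shape (slices, rows, columns), rows increasing downwards, and `r_y` in mm per pixel. Here the articular surface is taken as the integer midpoint of the cartilage bounding box, and cutting keeps the tibia rows at or above \(T_{M}\); the function name `cut_tibia` is hypothetical and this is not the authors' implementation.

```python
import numpy as np


def cut_tibia(sagittal_cartilage, sagittal_tibia, r_y, depth_mm=20.0):
    """Return (T_M, C_sTB): inferior-margin row and the cut tibia masks (sketch).

    sagittal_cartilage, sagittal_tibia: (N_S, H, W) binary masks.
    r_y: pixel spacing along y in mm; rows are assumed to grow downwards.
    """
    tibia = np.asarray(sagittal_tibia, dtype=bool)
    projection = np.any(sagittal_cartilage, axis=0)       # I_sTC
    rows = np.nonzero(projection.any(axis=1))[0]           # rows containing cartilage
    y_mean = (int(rows.min()) + int(rows.max())) // 2      # articular surface (integer midpoint)
    t_m = y_mean + int(round(depth_mm / r_y))              # 20 mm below, in pixels
    row_idx = np.arange(tibia.shape[1])[None, :, None]
    cut = tibia & (row_idx <= t_m)                         # drop tibia rows below T_M
    return t_m, cut


cart = np.zeros((2, 100, 50), dtype=bool)
cart[0, 30:35, 10:20] = True
cart[1, 36:41, 10:20] = True     # cartilage spans rows 30..40, surface at row 35
tib = np.zeros((2, 100, 50), dtype=bool)
tib[:, 35:90, 5:45] = True
t_m, c_stb = cut_tibia(cart, tib, r_y=0.5)   # t_m = 35 + 40 = 75
```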

(2) Tibia medial and lateral boundary detection. As shown in Fig. 7, first, in the coronal view, the tibial cartilage segmentation results \(P_{cTC, i}\) (\(i = 1, 2, 3, \ldots, N_{c}\), where \(N_{c}\) is the number of coronal slices) of all slices of the case are projected onto a binary image \(I_{cTC}\) of the same size as a slice:

\[ I_{cTC}(x, y) = \max_{1 \le i \le N_{c}} P_{cTC, i}(x, y) \]

Then, compute the lower-right corner (\(x_{slTC, max}\), \(y_{slTC, max}\)) of the minimum bounding rectangle of the left tibial cartilage in \(I_{cTC}\), and the lower-left corner (\(x_{srTC, min}\), \(y_{srTC, min}\)) of the minimum bounding rectangle of the right tibial cartilage. Finally, based on the medial and lateral detection result, determine the boundary \(B_{LP}\) between the lateral tibia and the subspinous region and the boundary \(B_{MP}\) between the medial tibia and the subspinous region.
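Step (2) can be sketched as below. Instead of a connected-component analysis, this hypothetical helper separates the left and right cartilage plates at the widest empty column gap of the coronal projection, which assumes the two plates do not overlap in x. The mapping from D to which plate is lateral is also an assumption, because the exact formula for \(B_{LP}\) and \(B_{MP}\) is not given in this text.

```python
import numpy as np


def tibia_ml_boundaries(coronal_cartilage, d):
    """Return (B_LP, B_MP) as column indices (sketch).

    coronal_cartilage: (N_c, H, W) binary tibial-cartilage masks.
    d: medial/lateral flag; d == 1 is assumed to mean the left plate is lateral.
    """
    projection = np.any(coronal_cartilage, axis=0)         # I_cTC
    cols = np.nonzero(projection.any(axis=0))[0]           # occupied columns, ascending
    gaps = np.diff(cols)
    if gaps.size == 0 or gaps.max() <= 1:
        raise ValueError("left and right cartilage plates are not separated")
    k = int(np.argmax(gaps))                               # widest empty gap
    x_left_max = int(cols[k])        # right edge of the left plate (x_slTC,max)
    x_right_min = int(cols[k + 1])   # left edge of the right plate (x_srTC,min)
    if d == 1:
        return x_left_max, x_right_min
    return x_right_min, x_left_max


vol = np.zeros((2, 20, 60), dtype=bool)
vol[0, 10, 5:20] = True    # left plate, columns 5..19
vol[1, 11, 35:55] = True   # right plate, columns 35..54
print(tibia_ml_boundaries(vol, d=1))  # (19, 35)
```

The columns strictly between the two returned edges are the candidate subspinous region.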

(3) Tibial plateau anterior and posterior boundary detection. MOAKS divides both the medial and the lateral tibia into anterior, central, and posterior regions based on the anterior and posterior boundaries of the tibial plateau. As shown in Fig. 8, in the sagittal view, the cut tibial tissue \(C_{sTB, i}\) from all lateral slices of the case is projected onto a binary image \(I_{LTB}\) of the same size as a slice. When the medial and lateral direction is \(D = 1\),

When the medial and lateral direction is \(D = 0\),

Compute the upper-left corner (\(x_{sLTB, min}\), \(y_{sLTB, min}\)) and the lower-right corner (\(x_{sLTB, max}\), \(y_{sLTB, max}\)) of the minimum bounding rectangle of the tibia in \(I_{LTB}\); \(x_{sLTB, min}\) and \(x_{sLTB, max}\) are the anterior and posterior boundaries of the lateral tibia, respectively.

Similarly, the cut tibial tissue \(C_{sTB, i}\) from all medial slices of the case is projected onto a binary image \(I_{RTB}\) of the same size as a slice. When the medial and lateral direction is \(D = 0\),

When the medial and lateral direction is \(D = 1\),

Compute the upper-left corner (\(x_{sRTB, min}\), \(y_{sRTB, min}\)) and the lower-right corner (\(x_{sRTB, max}\), \(y_{sRTB, max}\)) of the minimum bounding rectangle of the tibia in \(I_{RTB}\); \(x_{sRTB, min}\) and \(x_{sRTB, max}\) are the anterior and posterior boundaries of the medial tibia, respectively.

(4) Subregion division. In the sagittal view, the medial and lateral tibia and their cartilage are each divided into anterior, central, and posterior subregions using the corresponding anterior and posterior boundaries.
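Steps (3) and (4) for one compartment can be sketched as follows. The anterior and posterior boundaries are the x extent of the projected cut tibia, as in the text (\(x_{min}\) anterior, \(x_{max}\) posterior). How the interval between them is split into anterior, central, and posterior parts is not specified in this section, so the equal-thirds split below is a hypothetical placeholder. The caller is assumed to pass only the lateral (or only the medial) slices selected according to D, and `tibia_ap_labels` is a hypothetical name.

```python
import numpy as np


def tibia_ap_labels(cut_tibia_slices, n_parts=3):
    """Label cut tibia voxels of one compartment (sketch).

    cut_tibia_slices: (n, H, W) binary masks C_sTB of that compartment's slices.
    Returns an int array: 0 outside, 1 anterior, 2 central, 3 posterior.
    """
    masks = np.asarray(cut_tibia_slices, dtype=bool)
    projection = masks.any(axis=0)                         # I_LTB or I_RTB
    cols = np.nonzero(projection.any(axis=0))[0]
    x_min, x_max = int(cols.min()), int(cols.max())        # anterior / posterior boundary
    # Hypothetical split: equal-width parts between x_min and x_max.
    edges = np.linspace(x_min, x_max + 1, n_parts + 1)
    part = np.searchsorted(edges, np.arange(masks.shape[-1]), side="right")
    part = np.clip(part, 1, n_parts)
    return np.where(masks, part[None, None, :], 0)


vol = np.zeros((2, 5, 50), dtype=bool)
vol[0, 2, 10:40] = True          # tibia spans columns 10..39
labels = tibia_ap_labels(vol)    # columns 10-19 -> 1, 20-29 -> 2, 30-39 -> 3
```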

Femur and cartilage subregion segmentation

The femur and cartilage subregion segmentation is shown in Fig. 9, and its main steps are as follows:

(1) Femur medial and lateral boundary detection. As shown in Fig. 10, first, in the coronal view, proceeding from anterior to posterior, find the first slice \(S_{cFC}\) after the appearance of the femoral groove in which the femur is divided into left and right parts. Then, on \(S_{cFC}\), compute the lower-right corner (\(x_{clFC, max}\), \(y_{clFC, max}\)) of the minimum bounding rectangle of the left femoral cartilage and the lower-left corner (\(x_{crFC, min}\), \(y_{crFC, min}\)) of the minimum bounding rectangle of the right femoral cartilage. Finally, based on the medial and lateral detection result, determine the boundary \(B_{F}\) between the medial and lateral femur.
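A sketch of step (1), assuming coronal masks ordered from anterior to posterior and a known index `start` of the first slice after the femoral groove appears (a hypothetical input). As a simplification, a slice counts as "divided into left and right parts" when the femur's column footprint splits into at least two separate runs; connected-component labelling would be a more faithful test. The final combination of the two edges into \(B_{F}\) depends on D and is not reproduced here; all names are hypothetical.

```python
import numpy as np


def column_runs(occupied):
    """Runs of consecutive True entries in a 1D array, as (start, end) inclusive."""
    cols = np.nonzero(occupied)[0]
    if cols.size == 0:
        return []
    breaks = np.nonzero(np.diff(cols) > 1)[0]
    starts = np.concatenate(([cols[0]], cols[breaks + 1]))
    ends = np.concatenate((cols[breaks], [cols[-1]]))
    return list(zip(starts.tolist(), ends.tolist()))


def femur_split_edges(coronal_femur, coronal_cartilage, start=0):
    """Find S_cFC and the inner femoral-cartilage edges on it (sketch).

    coronal_femur, coronal_cartilage: (N_c, H, W) binary masks, anterior to posterior.
    Returns (S_cFC, x_clFC_max, x_crFC_min) or None.
    """
    femur = np.asarray(coronal_femur, dtype=bool)
    cartilage = np.asarray(coronal_cartilage, dtype=bool)
    for i in range(start, femur.shape[0]):
        if len(column_runs(femur[i].any(axis=0))) >= 2:    # femur split into two parts
            runs = column_runs(cartilage[i].any(axis=0))
            if len(runs) < 2:
                return None
            # Right edge of the left cartilage, left edge of the right cartilage.
            return i, runs[0][1], runs[-1][0]
    return None
```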

(2) Femur upper margin cutting. As shown in Fig. 11, first, in the sagittal view, the femoral cartilage segmentation results \(P_{sFC, i}\) of all lateral slices of the case are projected onto a binary image \(I_{slFC}\) of the same size as a slice. When the medial and lateral direction is \(D = 1\),

When the medial and lateral direction is \(D = 0\),

The straight line through the left and right vertices of the femoral cartilage in \(I_{slFC}\) is the upper boundary line of the lateral femur. Then, for each femur slice, compute the line segment along which this upper boundary line intersects the femoral tissue; the midpoint of this segment is the midpoint of the upper boundary of that femur slice.

Similarly, in the sagittal view, the femoral cartilage segmentation results \(P_{sFC, i}\) of all medial slices of the case are projected onto a binary image \(I_{smFC}\) of the same size as a slice. When the medial and lateral direction is \(D = 0\),

When the medial and lateral direction is \(D = 1\),

The straight line through the left and right vertices of the femoral cartilage in \(I_{smFC}\) is the upper boundary line of the medial femur. Then, for each femur slice, compute the line segment along which this upper boundary line intersects the femoral tissue; the midpoint of this segment is the midpoint of the upper boundary of that femur slice.
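Step (2) for one compartment can be sketched as below. The text does not define the "left and right vertices" precisely; this sketch assumes they are the topmost cartilage pixels in the leftmost and rightmost occupied columns of the projection, and that the boundary line is not vertical. For each femur slice it returns the midpoint between the first and last femur pixels crossed by the line, or `None` when the line misses the femur. The function name is hypothetical.

```python
import numpy as np


def upper_boundary_midpoints(sagittal_cartilage, sagittal_femur):
    """Boundary line through the cartilage vertices and per-slice midpoints (sketch).

    sagittal_cartilage, sagittal_femur: (n, H, W) binary masks of one compartment.
    Returns ((x0, y0, x1, y1), midpoints), with None where a slice misses the line.
    """
    proj = np.any(sagittal_cartilage, axis=0)              # I_slFC or I_smFC
    cols = np.nonzero(proj.any(axis=0))[0]
    x0, x1 = int(cols.min()), int(cols.max())
    if x0 == x1:
        raise ValueError("cartilage projection is a single column")
    y0 = int(np.nonzero(proj[:, x0])[0].min())             # assumed left vertex: topmost pixel
    y1 = int(np.nonzero(proj[:, x1])[0].min())             # assumed right vertex: topmost pixel
    slope = (y1 - y0) / (x1 - x0)
    xs = np.arange(proj.shape[1])
    ys = np.rint(y0 + slope * (xs - x0)).astype(int)       # rasterised line, one pixel per column
    keep = (ys >= 0) & (ys < proj.shape[0])
    xs, ys = xs[keep], ys[keep]
    midpoints = []
    for femur in np.asarray(sagittal_femur, dtype=bool):
        hit_x = xs[femur[ys, xs]]                          # columns where the line lies in the femur
        if hit_x.size == 0:
            midpoints.append(None)
            continue
        xm = (int(hit_x.min()) + int(hit_x.max())) / 2.0   # midpoint of the intersection segment
        midpoints.append((xm, y0 + slope * (xm - x0)))
    return (x0, y0, x1, y1), midpoints


cart = np.zeros((1, 30, 40), dtype=bool)
cart[0, 10:20, 5] = True                 # leftmost column, topmost pixel at y = 10
cart[0, 10, 35] = True
cart[0, 12, 35] = True                   # rightmost column, topmost pixel at y = 10
fem = np.zeros((2, 30, 40), dtype=bool)
fem[0, 5:20, 8:30] = True                # slice 1 of the femur; slice 2 is empty
line, mids = upper_boundary_midpoints(cart, fem)
```

Sampling one pixel per column is adequate for the near-horizontal lines expected here; a steep line would need a proper line-rasterisation routine.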

(3) Calculation of the boundaries between the anterior, central, and posterior regions of the femur. In the sagittal view, each slice containing the femur falls into one of the following cases:

A. No tibia is present. The femur and its cartilage are assigned to the central region.

B. The tibia and both the anterior and posterior margin intersection lines exist. The femoral subregions are divided as shown in Fig. 12.

C. The tibia and the anterior margin intersection line exist, but the posterior margin intersection line does not. The femoral subregions are divided as shown in Fig. 13.

D. The tibia and the posterior margin intersection line exist, but the anterior margin intersection line does not. The femoral subregions are divided as shown in Fig. 14.

E. The tibia exists, but neither the anterior nor the posterior margin intersection line exists. The femur and its cartilage are assigned to the central region.
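The case analysis amounts to a small decision table on three per-slice flags. A sketch in Python, where the flag, enum, and function names are hypothetical and the geometric subregion assignment for cases B to D (Figs. 12 to 14) is left out:

```python
from enum import Enum


class FemurSliceCase(Enum):
    A = "no tibia"
    B = "tibia with anterior and posterior intersection lines"
    C = "tibia with anterior intersection line only"
    D = "tibia with posterior intersection line only"
    E = "tibia without either intersection line"


def classify_femur_slice(has_tibia, has_anterior_line, has_posterior_line):
    """Map the flags of a femur-containing sagittal slice to cases A to E."""
    if not has_tibia:
        return FemurSliceCase.A
    if has_anterior_line and has_posterior_line:
        return FemurSliceCase.B
    if has_anterior_line:
        return FemurSliceCase.C
    if has_posterior_line:
        return FemurSliceCase.D
    return FemurSliceCase.E
```

Checking the tibia flag first mirrors the list order: without a tibia, the intersection-line flags are irrelevant.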

Experiments

Fibula classification dataset and knee MRI bone and cartilage subregion dataset

This section introduces the fibula classification dataset and knee MRI bone and cartilage subregion dataset established in this paper.

Fibula classification dataset

Data collection

The MRI cases in the fibula classification dataset all come from the public Osteoarthritis Initiative database. One hundred cases were randomly selected from the baseline (BL) visit; the inclusion criterion was that the MRI sequence be a dual-echo steady-state sequence. Each 3D MRI volume is \(160 \times 384 \times 384\) (160 slices per case, for a total of \(100 \times 160 = 16{,}000\) slices).

Classification annotation of fibula

Two physicians independently reviewed the knee MRI scans in ITK-SNAP and, because the fibula appears in a continuous run of slices, noted the slice numbers at which the fibula appears and disappears. They recorded each patient ID together with these two slice numbers in an Excel spreadsheet. Inconsistent annotations were re-examined and discussed by the two physicians to reach the final annotation.
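Because the fibula occupies a continuous run of slices, the two recorded slice numbers are enough to derive a per-slice label for training the classifier. A minimal sketch, assuming 1-based, inclusive slice numbers; the dictionary standing in for the spreadsheet and the function name are hypothetical.

```python
def fibula_slice_labels(n_slices, first_slice, last_slice):
    """Per-slice 0/1 fibula labels from the recorded appear/disappear slice numbers.

    Slice numbers are assumed to be 1-based and inclusive.
    """
    if not 1 <= first_slice <= last_slice <= n_slices:
        raise ValueError("slice range outside the volume")
    return [1 if first_slice <= j <= last_slice else 0 for j in range(1, n_slices + 1)]


# Hypothetical spreadsheet rows: patient ID -> (appear, disappear).
annotations = {"case_001": (12, 41)}
labels = {pid: fibula_slice_labels(160, a, b) for pid, (a, b) in annotations.items()}
print(sum(labels["case_001"]))  # 30
```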

Knee MRI bone and cartilage subregion dataset

Data collection

We randomly selected 100 MRI cases and their corresponding labels from the OAI-ZIB dataset; each case contains 160 2D slices, for a total of \(100 \times 160 = 16{,}000\) slices. The OAI-ZIB dataset consists of 507 cases with segmentation labels for the femur, femoral cartilage, tibia, and tibial cartilage, manually annotated by doctors.

Semi-automatic segmentation and annotation of patella and cartilage

Two junior physicians independently annotated the patella and patellar cartilage using the semi-automated annotation assistant tool in ITK-SNAP.

Division of bone and cartilage subregions in knee MRI

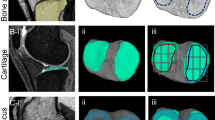

Following MOAKS, two junior physicians independently annotated the subregions using the semi-automated annotation assistant tool in ITK-SNAP, as listed below:

Femur: femur medial anterior (FBMA), femur medial central (FBMC), femur medial posterior (FBMP), femur lateral anterior (FBLA), femur lateral central (FBLC), femur lateral posterior (FBLP).

Femoral cartilage: femoral cartilage medial anterior (FCMA), femoral cartilage medial central (FCMC), femoral cartilage medial posterior (FCMP), femoral cartilage lateral anterior (FCLA), femoral cartilage lateral central (FCLC), femoral cartilage lateral posterior (FCLP).

Tibia: tibia medial anterior (TBMA), tibia medial central (TBMC), tibia medial posterior (TBMP), tibia lateral anterior (TBLA), tibia lateral central (TBLC), tibia lateral posterior (TBLP), subspinous (SS).

Tibial cartilage: tibial cartilage medial anterior (TCMA), tibial cartilage medial central (TCMC), tibial cartilage medial posterior (TCMP), tibial cartilage lateral anterior (TCLA), tibial cartilage lateral central (TCLC), tibial cartilage lateral posterior (TCLP).

Patella: patella medial (PBM), patella lateral (PBL).

Patellar cartilage: patellar cartilage medial (PCM), patellar cartilage lateral (PCL).

Figures 15, 16 and 17 provide examples of annotations for the lateral subregion, medial subregion, and subspinous, respectively.

Inconsistent annotations were re-examined and discussed by the two physicians to reach the final annotation. Finally, a senior physician reviewed and confirmed all annotations.

Preprocessing

Fibula classification dataset

The 100-case fibula classification dataset is divided into a training set (70 cases), a validation set (15 cases), and a test set (15 cases). No data augmentation is used. Each case is normalized to [0, 1] (0–1 standardization) before training, validation, and testing.

Knee MRI bone and cartilage subregion dataset

The 100-case knee MRI bone and cartilage subregion dataset is divided into a training set (70 cases), a validation set (15 cases), and a test set (15 cases). The coarse segmentation network is trained without data augmentation, whereas the multi-directional fine segmentation network is trained with random translation, scaling, rotation, and flipping. Each case is normalized to [0, 1] (0–1 standardization) before training, validation, and testing.
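The per-case 0–1 standardization and the 70/15/15 split can be sketched as below. Per-case min-max scaling is assumed to be what "0–1 standardization" means, and the seeded random split is an illustrative choice; the text does not say how cases were assigned to the three subsets. Both function names are hypothetical.

```python
import numpy as np


def min_max_normalize(volume, eps=1e-8):
    """Scale one case's intensities to [0, 1] (per-case min-max normalization)."""
    v = np.asarray(volume, dtype=np.float32)
    lo, hi = float(v.min()), float(v.max())
    return (v - lo) / max(hi - lo, eps)   # eps guards against a constant volume


def split_cases(case_ids, n_train=70, n_val=15, seed=0):
    """Randomly split case IDs into train/validation/test lists (illustrative)."""
    ids = list(case_ids)
    order = np.random.default_rng(seed).permutation(len(ids))
    shuffled = [ids[i] for i in order]
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

Splitting by case rather than by slice keeps all 160 slices of a patient in the same subset, so no patient contributes slices to both training and testing.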

Implementation details

TBAMNet is implemented in PyTorch and runs on four RTX 3090 GPUs. All of its training uses the Adam optimizer with a learning rate of \(5 \times 10^{-4}\) and a batch size of 8.

The fibula detection module is implemented in PyTorch and runs on one RTX 3090 GPU. It is trained with the Adam optimizer, a batch size of 8, and a learning rate of \(1 \times 10^{-4}\).

UNet18, FG-UNet29, and BSNet30 are implemented in PyTorch and run on two RTX 3090 GPUs. They are trained with the Adam optimizer, a batch size of 8, and a learning rate of \(5 \times 10^{-4}\).

Evaluation

The segmentation of bone and cartilage subregions in knee MRI is evaluated with the Dice similarity coefficient (Dice):

\[ \mathrm{Dice} = \frac{2|A \cap B|}{|A| + |B|} \]

where A and B denote the prediction and the label, respectively.
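A NumPy sketch of the Dice coefficient for one subregion and its average over several subregion labels. The label encoding (one integer per subregion) and the small `eps`, which makes the score 0 rather than undefined when both masks are empty, are assumptions; the text does not state whether Dice is averaged per case or over the pooled test set.

```python
import numpy as np


def dice(a, b, eps=1e-8):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    inter = np.logical_and(a, b).sum()
    return float(2.0 * inter / (a.sum() + b.sum() + eps))


def mean_dice(pred, label, class_ids):
    """Average Dice over the listed subregion labels of an integer label map."""
    return float(np.mean([dice(pred == c, label == c) for c in class_ids]))


pred = np.array([1, 1, 2, 2])
lab = np.array([1, 1, 2, 0])
print(mean_dice(pred, lab, [1, 2]))  # mean of 1.0 and 2/3
```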

The medial and lateral detection of the knee is evaluated as a classification task using accuracy, specificity, and sensitivity:

\[ \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \quad \mathrm{Specificity} = \frac{TN}{TN + FP}, \quad \mathrm{Sensitivity} = \frac{TP}{TP + FN} \]

where TP, FP, TN, and FN denote true positives, false positives, true negatives, and false negatives, respectively.
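A plain-Python sketch of the three metrics computed from binary labels and predictions (1 = positive). Which outcome counts as positive for the medial and lateral detection is not stated here, so that choice is left to the caller; the function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Return (accuracy, specificity, sensitivity) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    specificity = tn / (tn + fp) if tn + fp else float("nan")  # undefined without negatives
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")  # undefined without positives
    return accuracy, specificity, sensitivity


print(classification_metrics([1, 1, 0, 0, 0], [1, 0, 0, 0, 1]))
```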

Experimental results

Medial and lateral detection of knee

Table 1 shows the medial and lateral detection results. For fibula classification in single slices, the 2D classification network achieved an accuracy, specificity, and sensitivity of 0.952, 0.947, and 0.966, respectively, demonstrating its good performance for fibula classification in knee MRI. For medial and lateral detection of the knee, the fibula detection module achieved an accuracy, specificity, and sensitivity of 1.000, 1.000, and 1.000, respectively, which demonstrates the effectiveness of the proposed fibula detection module.

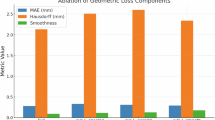

Bone and cartilage subregion segmentation

To demonstrate the effectiveness of KSNet, we compared it with current state-of-the-art medical image segmentation networks.

(1) Patella and cartilage subregion segmentation results

Table 2 compares the patella and cartilage subregion segmentation results. KSNet achieves an average Dice coefficient of 0.975 on the two patellar subregions and 0.883 on the two patellar cartilage subregions, improving segmentation accuracy over the other medical image segmentation networks. Figures 18 and 19 show the lateral and medial patella and cartilage subregion segmentation results, respectively. As Figs. 18 and 19 show, the results of KSNet are closer to the segmentation labels than those of the other networks.

(2) Tibia and cartilage subregion segmentation results

Table 3 shows the tibia and cartilage subregion segmentation results. KSNet achieves an average Dice coefficient of 0.966 on the 7 tibial subregions and 0.793 on the 6 tibial cartilage subregions, improving segmentation accuracy over the other medical image segmentation networks. Figures 20, 21 and 22 show the segmentation results for the lateral tibia and cartilage subregions, the subspinous region, and the medial tibia and cartilage subregions, respectively. As Figs. 20, 21 and 22 show, compared with the other networks, KSNet segments subregion edges better and produces fewer erroneous segmentations.

(3) Femur and cartilage subregion segmentation results

Table 4 shows the femur and cartilage subregion segmentation results. KSNet achieves an average Dice coefficient of 0.930 on the 6 femoral subregions and 0.851 on the 6 femoral cartilage subregions, improving segmentation accuracy over the other medical image segmentation networks. Figures 23 and 24 show the lateral and medial femur and cartilage subregion segmentation results, respectively. As Figs. 23 and 24 show, KSNet segments the subregion edges better than the other networks.

(4) Training time, computation time, and space requirements

Table 5 compares the training time, computation time, and space requirements. The proposed method requires more training and computation time and more space than the other methods, because it introduces edge information, which makes training more demanding. Since medical image segmentation generally does not require strict real-time performance, and our method improves segmentation performance over the other methods, it remains practical.

Conclusion

In this paper, we proposed a fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry to address the difficulty of knee MRI bone and cartilage subregion segmentation caused by numerous subregions and unclear subregion boundaries. First, a transformer-based multilevel region and edge aggregation network segments the bone and cartilage tissues in knee MRI with precise edges. Then, a fibula detection module determines the medial and lateral sides of the knee from the position of the fibula. Next, a subregion segmentation module based on boundary information divides the bone and cartilage tissues into subregions by detecting the subregion boundaries. In addition, we established a fibula classification dataset and a knee MRI bone and cartilage subregion dataset to support the proposed model. On the fibula classification dataset, the proposed method detected the medial and lateral sides of the knee with an accuracy of 1.000. On the knee MRI bone and cartilage subregion dataset, it attained average Dice scores of 0.953 for bone subregions and 0.831 for cartilage subregions, demonstrating the effectiveness of the proposed method.

Data availability

The datasets used and analysed during the current study are available from Zhiyong Zhang (E-mail: zhangsysu2020@163.com) on reasonable request.

References

Esrafilian, A. et al. An automated and robust tool for musculoskeletal and finite element modeling of the knee joint. IEEE Trans. Biomed. Eng. 72, 56–69 (2025).

Huang, R. et al. Memory-guided transformer with group attention for knee MRI diagnosis. Pattern Recogn. 162, 111417 (2025).

Mahendrakar, P., Kumar, D. & Patil, U. A comprehensive review on MRI-based knee joint segmentation and analysis techniques. Curr. Med. Imaging 20, e150523216894 (2024).

Chen, H. et al. Knee cartilage estimation based on knee bone geometry using posterior shape model. IEEE Sens. J. 24, 30600–30607 (2024).

Chen, J., Yuan, F., Shen, Y. & Wang, J. Multimodality-based knee joint modelling method with bone and cartilage structures for total knee arthroplasty. Int. J. Med. Robot. Comput. Assist. Surg. 17, e2316 (2021).

Daneshmand, M., Panfilov, E., Bayramoglu, N., Korhonen, R. & Saarakkala, S. Deep learning based detection of osteophytes in radiographs and magnetic resonance imagings of the knee using 2D and 3D morphology. J. Orthop. Res. 42, 1473–1481 (2024).

Ghatas, M., Lester, R., Khan, M. & Gorgey, A. Semi-automated segmentation of magnetic resonance images for thigh skeletal muscle and fat using threshold technique after spinal cord injury. Neural Regen. Res. 13, 1787–1795 (2018).

Rini, C., Perumal, B. & Rajasekaran, M. Automatic knee joint segmentation using Douglas–Rachford splitting method. Multimedia Tools Appl. 79, 6599–6621 (2020).

Öztürk, C. & Albayrak, S. Automatic segmentation of cartilage in high-field magnetic resonance images of the knee joint with an improved voxel-classification-driven region-growing algorithm using vicinity-correlated subsampling. Comput. Biol. Med. 72, 90–107 (2016).

Shan, L., Zach, C., Charles, C. & Niethammer, M. Automatic atlas-based three-label cartilage segmentation from mr knee images. Med. Image Anal. 18, 1233–1246 (2014).

Yu, A. et al. Unsupervised segmentation of knee bone marrow edema-like lesions using conditional generative models. Bioengineering 11, 526 (2024).

Maddalena, L. et al. Kneebones3dify: Open-source software for segmentation and 3d reconstruction of knee bones from mri data. SoftwareX 27, 101854 (2024).

Agarwal, R., Ghosal, P., Sadhu, A. K., Murmu, N. & Nandi, D. Multi-scale dual-channel feature embedding decoder for biomedical image segmentation. Comput. Methods Progr. Biomed. 257, 108464 (2024).

Agarwal, R. et al. Deep quasi-recurrent self-attention with dual encoder–decoder in biomedical ct image segmentation. IEEE J. Biomed. Health Inform. 1, 1 (2024).

Gupta, N., Garg, H. & Agarwal, R. A robust framework for glaucoma detection using clahe and efficientnet. Vis. Comput. 38, 2315–2328 (2022).

Gaj, S., Yang, M., Nakamura, K. & Li, X. Automated cartilage and meniscus segmentation of knee mri with conditional generative adversarial networks. Magn. Reson. Med. 84, 437–449 (2020).

Dai, W. et al. Can3d: Fast 3d medical image segmentation via compact context aggregation. Med. Image Anal. 82, 102562 (2022).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, part III 18 234–241 (Springer, 2015).

Felfeliyan, B., Hareendranathan, A., Kuntze, G., Jaremko, J. & Ronsky, J. Improved-mask r-cnn: Towards an accurate generic msk mri instance segmentation platform (data from the osteoarthritis initiative). Comput. Med. Imaging Graph. 97, 102056 (2022).

Liu, F. Susan: Segment unannotated image structure using adversarial network. Magn. Reson. Med. 81, 3330–3345 (2019).

Liu, F. et al. Deep convolutional neural network and 3d deformable approach for tissue segmentation in musculoskeletal magnetic resonance imaging. Magn. Reson. Med. 79, 2379–2391 (2018).

Zhou, Z., Siddiquee, M., Tajbakhsh, N. & Liang, J. Unet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imaging 39, 1856–1867 (2019).

Huang, H. et al. Unet 3+: A full-scale connected unet for medical image segmentation. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 1055–1059 (IEEE, 2020).

Ambellan, F., Tack, A., Ehlke, M. & Zachow, S. Automated segmentation of knee bone and cartilage combining statistical shape knowledge and convolutional neural networks: Data from the osteoarthritis initiative. Med. Image Anal. 52, 109–118 (2019).

Norman, B., Pedoia, V. & Majumdar, S. Use of 2d u-net convolutional neural networks for automated cartilage and meniscus segmentation of knee mr imaging data to determine relaxometry and morphometry. Radiology 288, 177–185 (2018).

Xiao, L. et al. Architecture of multiple convolutional neural networks to construct a subject-specific knee model for estimating local specific absorption rate. Appl. Magn. Reson. 52, 177–199 (2021).

Hunter, D. et al. Evolution of semi-quantitative whole joint assessment of knee oa: Moaks (mri osteoarthritis knee score). Osteoarthr. Cartil. 19, 990–1002 (2011).

Chen, S., Zhong, L., Qiu, C., Zhang, Z. & Zhang, X. Transformer-based multilevel region and edge aggregation network for magnetic resonance image segmentation. Comput. Biol. Med. 152, 106427 (2023).

Wang, W., He, J. & Wang, X. Rethinking feature guidance for medical image segmentation. IEEE Signal Process. Lett. 32, 641–645 (2025).

Jiang, H., Li, L., Yang, X., Wang, X. & Luo, M. Bsnet: A boundary-aware medical image segmentation network. Eur. Phys. J. Plus 140, 1 (2025).

Funding

This work was supported in part by the Science and Technology Planning Project of Shenzhen City Polytechnic (2511001) and the Doctoral Research Initiation Project of Shenzhen City Polytechnic (BS22024012).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, S., Zhong, L., Zhang, Z. et al. A fully automatic knee subregion segmentation network based on tissue segmentation and anatomical geometry. Sci Rep 15, 30449 (2025). https://doi.org/10.1038/s41598-025-16241-9

DOI: https://doi.org/10.1038/s41598-025-16241-9