Abstract

Generating complex scene layouts faces three core challenges: cross-modal semantic alignment bias, inefficient modeling of dynamic spatiotemporal relationships, and insufficient awareness of physical constraints. Together with the limited real-time performance of existing methods, these issues hinder applications in virtual reality and smart cities. To address them, this study proposes a framework based on a deep encoder-decoder network and visual graph attention. It introduces holistically-nested edge detection to optimize multi-scale feature fusion and designs an explicit edge feature modeling strategy to enhance physical constraint awareness. The framework is compared with benchmark methods such as LayoutTransformer and SceneGraphNet on the COCO-Stuff dataset (172,000 annotated images) in terms of Intersection-over-Union (IoU), feature coverage, and energy consumption. The model achieved an IoU of 0.82 and a key feature coverage rate of 94.6% on the Common Objects in Context data, with a training efficiency improvement of 38% compared with the benchmark method. Energy consumption during the inference phase was held to 54.9 Wh, and memory usage was reduced by 19.5%. In real-world scene testing, the IoU difference between the generated layout and the preset plan was less than 0.03, the average manual evaluation score was 9.39 points (9.43 points for professional designers), and the layout overlap rate stayed below the 4% threshold in dynamic scenes. The model showed near-professional design capability in 30 subjective evaluations while maintaining a real-time inference time of 26.94 ms, supporting its combined advantages in cross-modal alignment, physical constraint perception, and computational efficiency.
In summary, the research model optimizes cross-modal semantic consistency, multi-scale dynamic interaction relationship modeling, and complex spatial constraint perception capabilities, providing high-precision and efficient layout generation technology support for virtual reality scene construction and digital design of smart cities.

Introduction

With the rapid development of computer vision and generative models, visual layout generation for scene images has become a key research direction in fields such as smart city design, virtual reality construction, and autonomous driving simulation1. High-quality layout generation must ensure a reasonable spatial distribution of multiple elements in the scene (such as objects, backgrounds, and text) while also satisfying visual aesthetics and semantic consistency, which places high demands on the ability to model complex object relationships and scene context2. Traditional methods often use rule-based manual feature extraction or probabilistic graphical models to generate layouts. The former pre-defines element attribute rules from expert experience and manually specifies layout constraints. The latter uses a graph structure to model the probabilistic dependencies between elements and optimizes the global layout distribution through statistical inference3,4. However, as scene image layout tasks grow more complex, these methods cannot meet the joint optimization needs of multi-scale element interaction modeling and high-dimensional semantic constraints. For example, generative adversarial networks are susceptible to mode collapse and model fine-grained spatial relationships poorly5. Variational auto-encoders generate blurry layout details, making it difficult to balance global semantics with local structural optimization6. Markov random fields model only local relationships between adjacent nodes and cannot capture dynamic global dependencies in complex scenes7. In summary, most existing models rely on global convolution operations, which makes it difficult to explicitly model the dynamic dependencies between multi-scale objects in the scene.
Moreover, the lack of collaborative optimization mechanism between local visual features and global semantic constraints results in insufficient rationality of the generated layout structure.

A Deep Encoder-Decoder Network (DEDN) compresses input features through an encoder and reconstructs target outputs through a decoder, making it suitable for cross-modal transformation tasks such as image generation and semantic segmentation8. The Visual Graph Attention Network (VGAT) dynamically learns attention weights between nodes based on graph structure, making it suitable for modeling scene element relationships, social image analysis, and other interactive inference scenarios9. However, the deep structure of DEDN is prone to losing detail and has weak long-range dependency modeling capability, while VGAT relies on high-quality graph construction and its computational efficiency drops sharply as the number of nodes grows10,11. Therefore, this study combines the complementary strengths of DEDN and VGAT and proposes a scene image visual layout model, Deep Encoder-Decoder with Visual Graph Attention Network for Scene Image Visual Layout Generation (DEDN-VGAT LayoutGen). By constructing a joint framework based on DEDN and VGAT, the research aims to address insufficient multi-scale feature fusion, cross-modal semantic alignment deviation, and inefficient dynamic spatiotemporal relationship modeling in complex scene layout generation, thereby improving the structural rationality, visual naturalness, and real-time interaction performance of generated layouts.
The model optimizations in this study are as follows: (1) A Holistically-Nested Edge Detection (HED) module is embedded into the DEDN and uses channel attention to weight and fuse multi-scale features, enhancing contour continuity in complex occlusion scenes; (2) An Explicit Edge Feature Modeling strategy (EEFM) encodes spatial relationships such as distance and direction into learnable edge features, improving the ability of VGAT to perceive physical constraints; (3) A differentiable overlap penalty loss is constructed based on the Dynamic Relation Refinement Mechanism (DRRM) to achieve end-to-end optimization of layout rationality. The experiments are implemented in PyTorch and use the COCO dataset (over 330,000 images, 9:1 training-to-testing ratio) as the benchmark, with a batch size of 32, the Adam optimizer (weight decay of 0.0001), and cosine annealing learning rate scheduling. Layout accuracy is quantified using Intersection-over-Union (IoU) and feature coverage, while computational efficiency is assessed through floating-point operations and energy consumption. The generalization and robustness of the model in dynamic occlusion and cross-view scenes are validated against state-of-the-art baselines. The main contributions of the study are as follows: (1) A DEDN-VGAT collaborative framework is proposed to address the shortcomings of multi-scale feature interaction and long-range dependency modeling; (2) An explicit edge feature modeling strategy is proposed to enhance layout rationality in complex occlusion scenes; (3) A dynamic relationship refinement mechanism is designed to suppress element overlap and blank areas, and a multi-dimensional evaluation system is constructed to verify the model’s generalization ability in real-world scenarios.

The remainder of the paper is organized in four sections. The first reviews current research on scene image visual layout methods and algorithms. The second builds a precise and efficient scene image visual layout model from DEDN and VGAT. The third provides numerical experiments and practical application analysis to verify the model’s reliability. The final section summarizes the article.

Related works

Scene image visual layout must not only accurately analyze objects and their spatial relationships in the image, but also generate structured expressions that conform to human visual cognition and aesthetic principles12. However, traditional methods often rely on manually designed features or rule-based templates, making it difficult to adapt to complex and ever-changing scene content13. Researchers in machine vision and computer vision have explored this problem in depth. D. Y. Wu et al. proposed a layout optimization method based on shape-aware slicing and a saliency-driven approach to address the difficulty existing scene image visual layout methods have in handling complex and irregular shapes. Central axis division and binary slice tree segmentation were used to analyze the spatial structure of the scene and, combined with visual perception features, to generate partitions that conform to human cognition. This method could improve the efficiency of irregular scene layout generation14. To address the difficulty existing methods have in finely controlling the shape and appearance of objects, as well as their reliance on fully supervised annotation data, W. Sun and T. Wu proposed a two-stage weakly supervised generation paradigm from layout to mask and then to image. By designing instance-sensitive and layout-aware normalization modules to jointly regulate the image level and the object level, the accuracy of object mask generation and multi-level style control could be improved15. In response to the limited performance of existing scene layout generation models, C. Li et al. proposed a spatiotemporal scene graph generation method, which used graph convolutional neural networks to analyze the spatiotemporal relationships between objects and construct dynamic scene layouts. A spatially adaptive normalization network was combined with dependency modeling over preceding frames to improve the stability of scene layouts and enhance image restoration16.
To address the decision distortion caused by adversarial perturbations distorting deep network feature spaces (cross-modal semantic alignment bottlenecks), X. Zhang et al. proposed a domain-adaptive feature alignment method. By precisely quantifying feature distribution differences using the Wasserstein distance, the network is forced to learn domain-invariant representations, ensuring semantic consistency in layout generation under adversarial perturbations and providing a robust feature foundation for complex scenes17.

Moreover, Q. Wang et al. proposed a single-stream adaptive layout modeling module to address the limited layout representation ability of existing scene recognition methods that relied on manual modeling. Image pixels activated by convolutional neural networks were defined as region nodes, and the co-occurrence and positional relationships of regions were automatically encoded as graph attention edges, which simplified the detection process and improved the accuracy of scene layout relationship recognition18. G. Pintore et al. proposed a joint depth structure estimation method based on Atlanta spatial prior constraints to address the insufficient accuracy in extracting geometric and structural information from a single panoramic image. By jointly learning room depth and vertical/horizontal layout through an end-to-end network, the accuracy of structured indoor scene layout reconstruction and the immersion of 3D space in VR environments could be improved19. Z. Wen et al. proposed an adaptive layout generation method based on a cylindrical reference frame and force-guided optimization to balance spatial positioning and cross-view analysis efficiency in multi-view layouts for immersive visualization. By constructing an egocentric spatial reference frame to regulate the relationship between views and physical reference objects, the accuracy of spatial positioning and the efficiency of multi-view feature interaction could be improved20. To address the bottleneck of modeling dynamic spatiotemporal relationships in complex multi-object interactions and extracting individual motion patterns in dynamic scenes, an attention-driven spatiotemporal graph neural network has been proposed. This network aggregates interaction features through spatial graph convolutions and captures motion trajectories through temporal graph convolutions to predict future displacements.
This establishes an adaptive relationship modeling framework for spatiotemporal-dependent reasoning in dynamic layouts21. X. Li et al. addressed the cross-view alignment bias caused by the dependence of segmentation quality on disparity accuracy in stereo image segmentation, a problem related to physical constraint perception. They proposed a propagation framework based on convolutional neural networks that automatically transfers segmentation results through a coherent disparity mechanism and fuses high-confidence pixels, achieving robust stereo image segmentation. This optimizes cross-view consistency and structural rationality in the layout22.

In summary, existing research has made significant progress in the field of complex scene layout through irregular shape layout optimization, weakly supervised generation paradigms, dynamic scene modeling, adaptive relationship inference, and multi-view interaction design. These methods achieve artistic collage generation, object-level fine-grained control, enhanced video temporal coherence, relationship modeling without manual pre-settings, and a balance between spatial efficiency and virtual-reality integration. However, these methods still face several bottlenecks, including imbalance between image overlap and blank areas, cross-modal semantic alignment deviations, low efficiency in high-resolution dynamic rendering, insufficient generalization capabilities for unstructured scenes, strong reliance on manual features, and difficulties in coordinating localization accuracy with interaction efficiency. Efficient and adaptive scene image visual layout methods are the technological cornerstone for ensuring cross-modal semantic consistency and promoting the smart city design and virtual reality construction processes, and their importance cannot be ignored.

Therefore, based on DEDN and VGAT, combined with HED, a DEDN-VGAT LayoutGen scene image visual layout model is ultimately established. The research can improve the generation quality and interaction efficiency of complex scene layouts, and solve problems such as insufficient multi-scale feature fusion, cross-modal semantic alignment deviation, and inefficient dynamic spatiotemporal relationship modeling and real-time rendering.

Methods and materials

This section is divided into two sub-sections. The first sub-section takes DEDN as the basic framework and combines HED to construct a salient visual feature extraction module, optimizing the semantic representation ability and edge sensitivity of multi-scale objects in complex scenes. The second sub-section focuses on VGAT and introduces EEFM and DRRM to construct a visual layout module, enhancing the dynamic reasoning ability of semantic-spatial dependencies between scene elements. Finally, a DEDN-VGAT LayoutGen scene image visual layout model is established, significantly improving the structural rationality and visual naturalness of the generated layout.

Salient visual feature extraction module based on DEDN

Salient visual features are comprehensive representations of semantics, edges, textures, and multi-scale objects in scene images, and they are the prerequisite for visual layout of scene images. However, traditional feature extraction methods rely on manual design or rule templates, which adapt poorly to occlusion, scale changes, and semantic association requirements. DEDN is a deep learning model that achieves multi-level feature extraction and reconstruction through an encoder-decoder structure23. Therefore, a salient visual feature extraction module is constructed based on DEDN. Residual Network-50 (ResNet-50), which mitigates vanishing gradients through cross-layer residual connections and extracts multi-level spatial-semantic features, is used as the encoder backbone of DEDN. The structure of ResNet-50 is shown in Fig. 1.

As shown in Fig. 1, ResNet-50 consists of four stages, each containing 3–6 residual blocks, and each residual block contains three convolutional layers (1 × 1, 3 × 3, and 1 × 1). The network ends with global average pooling and a fully connected layer24. After a 7 × 7 convolution (stride 2) and 3 × 3 max pooling, the input image (224 × 224 × 3) enters the four stages. In Stage 1, the feature map passes through three residual blocks, and its channels are expanded from 64 to 256, with a feature map size of 56 × 56. Stages 2–4 contain 4, 6, and 3 residual blocks, respectively, doubling the number of channels in each stage (128→512, 256→1,024, and 512→2,048) and halving the feature map size in sequence (28 × 28→14 × 14→7 × 7). Each residual block computes Eq. (1).
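A form of Eq. (1) consistent with the symbol definitions that follow, assuming the standard identity-shortcut residual mapping, is:

\[
H(x) = F(x) + x
\]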

In Eq. (1), \(H(x)\) is the output. \(F(x)\) is the residual function, which consists of three convolutional layers (1 × 1 dimensionality reduction → 3 × 3 convolution → 1 × 1 dimensionality expansion) with batch normalization and ReLU. x is the residual block input. At each stage transition, the first residual block adjusts the number of channels through a 1 × 1 convolution and reduces the feature map size (stride 2) to complete the down-sampling operation. Finally, the feature map passes through global average pooling and a fully connected layer to output category probabilities. The decoder of DEDN consists of four deconvolution stages, each containing a deconvolution layer (4 × 4 kernel, stride 2) and a skip connection. In the decoder, the feature map of the corresponding encoder layer is concatenated with the up-sampled feature, as shown in Eq. (2).
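Eq. (2) can plausibly be written as a channel-wise concatenation. The result symbol \(F_{cat}\) and the operator name \(\mathrm{Concat}\) are illustrative assumptions rather than notation taken from the source:

\[
F_{cat} = \mathrm{Concat}\left(F_{up},\, F_{skip}\right)
\]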

In Eq. (2), \({F_{up}}\) is the up-sampling feature of the decoder. \({F_{skip}}\) is the encoder feature. The decoder combines multi-scale information through channel attention weighting and outputs a high-resolution feature map (224 × 224 × 64). The structure of DEDN, which combines encoder and decoder, is shown in Fig. 2.

As shown in Fig. 2, DEDN is a symmetric encoder-decoder architecture whose input is a 224 × 224 × 3 RGB image. Its encoder uses ResNet-50 as the backbone to extract the multi-level features C1 (56 × 56 × 256), C2 (28 × 28 × 512), C3 (14 × 14 × 1,024), and C4 (7 × 7 × 2,048). The decoder then performs step-by-step deconvolution (C4→C1), where each level fuses the corresponding encoder features through skip connections (for example, C4 is deconvolved to 14 × 14 and concatenated with C3), gradually restoring the full 224 × 224 resolution25. Finally, a 1 × 1 convolution in the output layer compresses the channels to generate a salient feature map (224 × 224 × 64) that integrates semantics, edges, and textures. However, DEDN has limited ability to capture the continuity of complex edges and is susceptible to interference from occlusion and blurring. HED extracts continuous edges through multi-scale side output layers and a fusion layer and is robust to occlusion and low-contrast scenes26. Therefore, the research uses the edge responses of HED to enhance the module and improve the positioning accuracy of object contours in complex scenes, as shown in Fig. 3.
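The feature-map sizes quoted above can be checked with a few lines of shape arithmetic. The sketch below is only an illustration: the padding values (3 for the stem convolution, 1 for pooling and for the 4 × 4 deconvolution) are assumptions, since the text gives kernel sizes and strides only. Under these assumptions, four stride-2 deconvolutions take 7 × 7 to 112 × 112, so the stated 224 × 224 output appears to need one further ×2 up-sampling step that the text does not specify.

```python
def conv_out(size, kernel, stride, padding):
    """Output side length of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution (no output padding).

    padding=1 is an assumption; it makes each 4x4, stride-2 layer double the size.
    """
    return (size - 1) * stride - 2 * padding + kernel


def encoder_shapes(size=224):
    """Trace (side length, channels) for C1..C4 of a ResNet-50 style encoder."""
    size = conv_out(size, kernel=7, stride=2, padding=3)  # stem convolution
    size = conv_out(size, kernel=3, stride=2, padding=1)  # max pooling
    shapes = []
    for stage, channels in enumerate([256, 512, 1024, 2048]):
        if stage > 0:
            # the first block of stages 2-4 down-samples with stride 2
            size = conv_out(size, kernel=1, stride=2, padding=0)
        shapes.append((size, channels))
    return shapes


def decoder_sizes(size=7, levels=4):
    """Side length after each of the decoder's deconvolution levels."""
    sizes = []
    for _ in range(levels):
        size = deconv_out(size)
        sizes.append(size)
    return sizes


print(encoder_shapes())
print(decoder_sizes())
```

The encoder trace reproduces C1 to C4 as listed in the text, which suggests the stage description is internally consistent; only the last decoder step is left open.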

From Fig. 3, HED is based on a VGG-16 (Visual Geometry Group 16-layer) network backbone, which includes five side output layers and a fusion layer and generates weighted edge probability maps27. In the module, the input of HED is the feature map (size 56 × 56 × 256) output by the first level of the DEDN encoder. The steps for generating edge features are as follows. First, HED side output layers are attached after the C1 to C3 levels of the DEDN encoder, and each generates an edge response map through 1 × 1 convolution and bilinear interpolation28, as shown in Eq. (3).
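One reading of Eq. (3) that matches the definitions below is given here as a sketch. It assumes that \(*\) denotes the 1 × 1 convolution and that \(S_k\) (a name chosen for illustration) is the side-output response of level k before up-sampling:

\[
S_k = \sigma\left(W_k * F_k + b_k\right), \quad k = 1, 2, 3
\]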

In Eq. (3), \({F_k}\) is the k-th layer feature of the DEDN encoder, and \((k=1,2,3)\). \({W_k}\) and \({b_k}\) are learnable parameters. \(\sigma\) is the Sigmoid activation function. Afterwards, the outputs from the C1 to C3 sides are up-sampled to a resolution of 224 × 224, and finally weighted and fused with multi-scale edge response maps, as shown in Eq. (4).
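Eq. (4) is presumably a learned weighted sum of the up-sampled side outputs. In the sketch below, the result name \(S_{fuse}\) and the outer sigmoid (suggested by the output being an edge probability map) are assumptions:

\[
S_{fuse} = \sigma\left(\sum_{k=1}^{3} \alpha_k S_{k}^{up} + \beta\right)
\]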

In Eq. (4), \({\alpha _k}\) represents the weight of each layer. \(\beta\) is the bias term. \(S_{k}^{{up}}\) represents the output edge response map of the k-th layer after up-sampling. The final output edge probability map (224 × 224 × 1) of HED is consistent with the feature map resolution of the DEDN decoder. To overcome the cross-modal alignment bottleneck, the study proposes a Cross-modal interaction Tensor Fusion (CITF) mechanism. First, the edge feature \({F_{\text{H}\text{E}\text{D}}}\) and the semantic feature \({F_{\text{D}\text{E}\text{D}\text{N}}}\) are input into a bi-linear pooling layer to generate the cross-modal interaction tensor B. Then, a distribution alignment loss is designed to minimize the difference between edge and semantic features. Finally, the interaction tensor B is fused with the attention weight \(\alpha\), as shown in Eq. (5).

In Eq. (5), \({W_e}\) and \({W_d}\) are learnable parameter matrices. \(\mu _{{ij}}^{e}\) and \(\mu _{{ij}}^{d}\) are the distribution parameters of \({F_{\text{H}\text{E}\text{D}}}\) and \({F_{\text{D}\text{E}\text{D}\text{N}}}\) at position \((i,j)\), respectively. \(\alpha\) is the attention weight map (224 × 224 × 1) generated by 1 × 1 convolution, with values in \([0,1]\). The fused feature map retains a resolution of 224 × 224 × 64, preserving the semantic information of DEDN and the edge sensitivity of HED, while improving feature alignment accuracy by dynamically adjusting cross-modal attention weights. This scheme achieves nonlinear interaction between edge features and semantic features and thereby addresses modal bias. The structure of the salient visual feature extraction module based on DEDN and HED is shown in Fig. 4.

As shown in Fig. 4, the salient visual feature extraction module takes a DEDN encoder to extract C1 to C3 multi-scale features. The HED generates multi-level edge response maps through the side output layer. The global edge map is generated through up-sampling and weighted fusion. The decoder fuses encoder features and edge information step by step through 4-level deconvolution (4 × 4 kernels, step size 2), with the number of channels decreasing from 2,048 to 64. Finally, a salient feature map of 224 × 224 × 64 is output, which integrates semantic, edge, and texture information.

Construction of visual layout module based on VGAT and DEDN-VGAT layoutgen model

The constructed salient visual feature extraction module can extract cross modal correlation features of multi-scale objects in complex scenes. However, it cannot describe the dynamic dependency relationships between different element objects and cannot directly perform visual layout operations. VGAT dynamically learns inter node weights based on graph attention mechanism, which can adaptively capture complex object interactions and semantic associations29. Therefore, the research takes VGAT as the core to construct a visual layout module. The structure of VGAT is shown in Fig. 5.

In Fig. 5, VGAT includes a graph construction layer, a multi-head attention layer, and an output layer, with node features being object attributes (category, position, and size)30. The input of VGAT is the 224 × 224 × 64 feature map generated by the salient feature extraction module. Candidate object regions are generated through a region proposal network, with each region corresponding to a node in the graph. The node feature is \({h_i} \in {R^d}\) with dimension \(d=256\), which encodes the object category probability, center coordinates \(({x_i},{y_i})\), and bounding box size \(({w_i},{h_i})\). The initial edges are constructed based on spatial proximity: node pairs with Intersection over Union (IoU) greater than 0.1 are considered to have potential spatial interactions31. Afterwards, the Query, Key, and Value vectors for each attention head m are calculated, as shown in Eq. (6).
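Given the projection matrices and vectors defined next, Eq. (6) most likely takes the usual linear-projection form:

\[
q_{i}^{m} = W_{q}^{m} h_i, \qquad k_{j}^{m} = W_{k}^{m} h_j, \qquad v_{j}^{m} = W_{v}^{m} h_j
\]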

In Eq. (6), \(W_{q}^{m},W_{k}^{m},W_{v}^{m} \in {R^{d \times d}}\) are the learnable query, key, and value projection matrices of the m-th attention head, respectively. \(q_{i}^{m},k_{j}^{m},v_{j}^{m}\) represent the query vector of node i and the key and value vectors of node j, where node i is the target node and j is a neighbor of i. Next, the attention weight is computed as shown in Eq. (7).
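With the \(\sqrt d\) scaling described below, Eq. (7) would read as a scaled dot-product softmax. Normalizing over the neighbor set \(\mathcal{N}(i)\) is an assumption here:

\[
\alpha_{ij}^{m} = \frac{\exp\left(q_{i}^{m} \cdot k_{j}^{m} / \sqrt{d}\right)}{\sum_{l \in \mathcal{N}(i)} \exp\left(q_{i}^{m} \cdot k_{l}^{m} / \sqrt{d}\right)}
\]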

In Eq. (7), \(\alpha _{{ij}}^{m}\) represents the attention weight of node i on j at the m-th head. \(\sqrt d\) is the scaling factor (\(d=256\)) to prevent gradient instability caused by excessive dot product. Afterwards, the neighbor features are weighted and aggregated, and the outputs of all attention heads are concatenated along the channel dimension. The concatenated result is mapped back to the original dimension and fused with the original features, as shown in Eq. (8).
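The aggregation, concatenation, and fusion steps just described suggest the following two-part form for Eq. (8). Treating "fused with the original features" as a residual addition is an assumption, and any activation the authors may apply is not shown:

\[
z_{i}^{m} = \sum_{j \in \mathcal{N}(i)} \alpha_{ij}^{m} v_{j}^{m}, \qquad h_{i}^{\prime} = W_o \left( \big\Vert_{m=1}^{M} z_{i}^{m} \right) + h_i
\]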

In Eq. (8), \(z_{i}^{m}\) is the aggregated feature of node i at the m-th head. \(\mathcal{N}(i)\) is the set of neighbors of node i. \(\Vert\) represents the concatenation operation. M is the number of attention heads (set as \(M=4\)). \({W_o} \in {R^{d \times d}}\) is a linear transformation matrix that maps the concatenated result back to the original dimension. \(h_{i}^{\prime }\) is the updated feature of node i. Finally, the updated node feature \(h_{i}^{\prime }\) is passed through a fully connected layer to predict the final layout parameters (position offset, size adjustment, and category correction).
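The attention and aggregation steps described above can be illustrated for a single head with plain Python lists. This is a minimal sketch, not the authors' implementation: the learnable projections are omitted (queries, keys, and values are supplied directly), and the toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values):
    """One attention head for a target node.

    Computes scaled dot-product weights over the neighbors, then returns
    the weights and the weighted sum of the neighbor value vectors.
    """
    d = len(query)
    weights = softmax([dot(query, k) / math.sqrt(d) for k in keys])
    dim = len(values[0])
    aggregated = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(dim)]
    return weights, aggregated


# Hypothetical toy node with two neighbors.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
weights, z = attend(query, keys, values)
print(weights, z)
```

The neighbor whose key aligns with the query receives the larger weight, so its value dominates the aggregated feature. In the full model this would be repeated for each of the four heads before concatenation.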

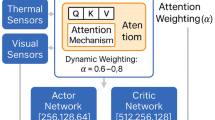

However, VGAT cannot explicitly model spatial relationships such as distance and direction between object elements and ignores physical constraints, which leads to overlapping or counter-intuitive arrangements in the generated layout. EEFM encodes spatial relationships into edge features, enhancing the ability to perceive physical constraints32. DRRM iteratively adjusts attention weights through auxiliary loss functions to suppress unreasonable connections33. Therefore, the research introduces EEFM and DRRM to improve the relationship modeling capability and layout rationality of VGAT and thereby establishes the visual layout module. The principles of EEFM and DRRM are shown in Fig. 6.

From Fig. 6 (a) and (b), EEFM can extract features such as distance, direction, and overlap between nodes and input them into attention calculation. DRRM can dynamically adjust attention weights through differentiable constraint modules and loss functions. EEFM first defines the edge feature \({e_{ij}}=[dist(i,j),\cos {\theta _{ij}},IoU(i,j)]\) and modifies the attention weight calculation method, as shown in Eq. (9).
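Since the definitions below mention only a learnable vector a and a weight matrix W, Eq. (9) may follow the familiar graph-attention scoring pattern with the edge feature appended. The LeakyReLU activation and the concatenation order are assumptions:

\[
\alpha_{ij} = \frac{\exp\left(\mathrm{LeakyReLU}\left(a^{T}\left[W h_i \,\Vert\, W h_j \,\Vert\, e_{ij}\right]\right)\right)}{\sum_{l \in \mathcal{N}(i)} \exp\left(\mathrm{LeakyReLU}\left(a^{T}\left[W h_i \,\Vert\, W h_l \,\Vert\, e_{il}\right]\right)\right)}
\]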

In Eq. (9), a is a learnable vector. W is the weight matrix. DRRM first designs an overlapping penalty loss, as shown in Eq. (10).
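A hinge-style form of Eq. (10) that agrees with the threshold described below is sketched here. Summing over all distinct node pairs is an assumption:

\[
\mathcal{L}_{overlap} = \sum_{i \ne j} \max\left(0,\; IoU(B_i, B_j) - 0.1\right)
\]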

In Eq. (10), \({\mathcal{L}_{overlap}}\) is the overlap penalty loss term. \({B_i},{B_j}\) are the bounding boxes of nodes i and j, respectively. The preset threshold of 0.1 is the upper limit of overlap that is considered reasonable. Afterwards, in the differentiable post-processing layer, a Multi-layer Perceptron (MLP) corrects the attention weights34, i.e. \(\alpha _{{ij}}^{\prime }=MLP({\alpha _{ij}},{e_{ij}})\). By combining VGAT’s dynamic graph attention mechanism, EEFM’s explicit spatial relationship modeling, and DRRM’s physical constraint optimization, the visual layout module can adaptively fuse multi-modal features, dynamically adjust object dependency weights, and generate scene layouts that conform to physical laws and visual cognition. The overall architecture of the DEDN-VGAT LayoutGen model, which integrates the salient visual feature extraction module and the visual layout module, is shown in Fig. 7.
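The edge feature \({e_{ij}}\) and the overlap penalty described above can be sketched on plain floats. Several details are assumptions made for illustration: boxes are given as (center x, center y, width, height), \(\theta _{ij}\) is taken as the angle of the center offset from the x axis, the distance is left unnormalized, and each unordered pair is counted once. A trainable version would use tensor operations so that gradients can flow.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (cx, cy, w, h)."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def edge_feature(a, b):
    """e_ij = [center distance, cos(theta_ij), IoU] for boxes a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    dist = math.hypot(dx, dy)
    cos_theta = dx / dist if dist > 0 else 0.0
    return [dist, cos_theta, iou(a, b)]


def overlap_penalty(boxes, threshold=0.1):
    """Sum of IoU in excess of the threshold over all unordered box pairs."""
    total = 0.0
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            total += max(0.0, iou(boxes[i], boxes[j]) - threshold)
    return total


# Hypothetical layout: two overlapping boxes and one isolated box.
boxes = [(2.0, 2.0, 4.0, 4.0), (4.0, 2.0, 4.0, 4.0), (20.0, 20.0, 2.0, 2.0)]
print(edge_feature(boxes[0], boxes[1]))
print(overlap_penalty(boxes))
```

Only the overlapping pair contributes to the penalty, which is how a hinge at the 0.1 threshold leaves mild contact between objects unpunished.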

From Fig. 7, an RGB image of 224 × 224 × 3 is input into the salient visual feature extraction module of the DEDN-VGAT LayoutGen model, and multi-scale features are extracted by the DEDN encoder. The HED generates an edge probability map, and the decoder outputs a 224 × 224 × 64 salient feature map. Afterwards, the layout module generates candidate object regions (N nodes) based on the salient features. VGAT combines EEFM and DRRM to infer node relationships, outputting object position \((x,y)\), size \((w,h)\), and category probability. The encoder is initialized with pre-trained ResNet-50 parameters, and the number of deconvolution channels in the decoder gradually decreases (2,048→64). VGAT contains 4 attention heads, with an edge feature dimension of 3. The learning rate is set to 0.0001 during joint training.

Results

To verify the effectiveness of the proposed DEDN-VGAT LayoutGen model in complex scene image visual layout tasks, simulation experiments and actual performance tests are conducted. The former validates the layout generation ability of the model in multi-scale object interaction, occlusion, and cross-modal alignment scenes through publicly available datasets. The latter evaluates its practical application potential by testing the generalization performance and real-time performance in real-world scenes.

Simulation experiment

In the simulation experiment, the research uses a hardware platform comprising dual Intel Xeon Gold 6330 processors (2.0 GHz/28 cores) and four NVIDIA A100-PCIE-40GB GPUs, equipped with 768 GB of DDR4 memory. The software environment is based on the Ubuntu 20.04 LTS operating system, the PyTorch 1.12.1 framework, and the CUDA 11.6 acceleration library. PyTorch 1.12.1 is used to implement the DEDN-VGAT LayoutGen model architecture, and Anaconda is used to create an isolated Python virtual environment to avoid dependency conflicts between projects. Moreover, the study selects the Common Objects in Context (COCO) dataset as the training and testing set (9:1 split), with a total of over 330,000 images and 80 annotated object categories, covering complex scenes such as indoor, outdoor, natural, and urban areas. It also covers occlusion, multi-scale objects, and scene image descriptions with cross-modal semantic associations35. In terms of parameter settings, the batch size is set to 32, Adam is used as the optimizer with a weight decay coefficient of 0.0001, training runs for 100 epochs, the learning rate follows a cosine annealing schedule, and the gradient clipping threshold is 5.0. The remaining model parameters follow the settings given in the Methods section. In addition, the research compares the methods in references15,16,18,19 with the proposed DEDN-VGAT LayoutGen model. These methods include Two-Stage Sequential Layout Generation (TS-SLG), Spatiotemporal Scene Graph Model (StSG), Single-stream Adaptive Graph Attention Network (SA-GAT), and Atlanta-World Spatial Prior Joint Optimization (AWSP-JO).
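The cosine annealing schedule mentioned in the parameter settings can be written in closed form. The sketch below assumes a single cycle without warm restarts, a minimum learning rate of 0, and the 0.0001 starting rate stated for joint training. The function name and defaults are illustrative, not taken from the authors' code.

```python
import math


def cosine_annealing_lr(epoch, total_epochs=100, lr_max=1e-4, lr_min=0.0):
    """Learning rate at a given epoch under single-cycle cosine annealing."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cosine)


# Learning rate at the start, midpoint, and end of training.
schedule = [cosine_annealing_lr(epoch) for epoch in range(101)]
print(schedule[0], schedule[50], schedule[100])
```

The rate starts at its maximum, passes through half that value at the midpoint, and decays smoothly to the minimum, which avoids the abrupt drops of a step schedule.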

TS-SLG uses the Adam optimizer (β1 = 0.5 and β2 = 0.999), with an initial learning rate of 0.0002, a batch size of 24, and weight decay of 0.0001. Its backbone network is ResNet-101, with the ISLA-Norm layer set to 256 channels, the mask generator containing 5 residual blocks, and the style vector dimension d = 128. StSG has three spatiotemporal convolutional layers, each with M = 4 attention heads. The FlowNet optical flow estimation uses RAFT pre-trained weights, and the feature scaling factor of the SPADE normalization layer is γ = 0.8. Its training learning rate is 0.0003 (cosine annealing), the batch size is 8, and the L2 regularization coefficient is 0.001. SA-GAT freezes the first three layers of its VGG-16 backbone network, and its layout modeling module has a hidden layer dimension of 512. The region relationship encoding uses a cosine similarity threshold of 0.75 and a graph attention edge sampling rate of 60%. The optimizer is SGD (momentum 0.9), with an initial learning rate of 0.01 (halved every 20 epochs) and a batch size of 64. AWSP-JO uses a lightweight U-Net encoder with channel dimensions of [16, 32, 64, 128], and the Atlanta spatial prior loss weight is α = 0.6. The AdamW optimizer is used (ε = 1 × 10⁻⁸), with a learning rate of 0.0005 and a batch size of 16. The depth estimation head uses 3 × 3 convolutional kernels, and the structure reconstruction head employs deformable convolutions. The research first compares the training rounds each method requires to reach the loss threshold of 0.1, and evaluates convergence performance through training efficiency, defined as (initial loss value − loss threshold of 0.1)/required rounds, as shown in Fig. 8.

According to Fig. 8 (a), DEDN-VGAT LayoutGen required only 43 training rounds to reach the loss threshold of 0.1, significantly fewer than TS-SLG (65 rounds), SA-GAT (72 rounds), AWSP-JO (83 rounds), and StSG (90 rounds). Its fast convergence is attributed to the collaborative architecture of DEDN and VGAT, which reduces redundant computation. By contrast, comparison methods such as TS-SLG, with its two-stage sequential generation, and StSG, with its complex spatiotemporal graph convolution, converge slowly because of their bulky structures (p < 0.01). As shown in Fig. 8 (b), the training efficiency of DEDN-VGAT LayoutGen (0.0407) exceeded that of TS-SLG (0.0315), SA-GAT (0.0271), AWSP-JO (0.0265), and StSG (0.0261). Its advantages stem from DRRM and the lightweight attention mechanism, as well as the efficient feature extraction capability of ResNet-50. SA-GAT lacks multi-modal interaction because of its single-stream adaptive graph structure, while AWSP-JO is limited by the rigid constraints of the Atlanta spatial priors, which lowers their efficiency (p < 0.05). mIoU is suited to the global evaluation of multi-category semantic segmentation tasks, whereas IoU is suited to verifying the local accuracy of single-object detection and spatial layout. Because the research focuses on optimizing the dynamic spatial relationships between objects, IoU more accurately reflects the rationality of the layout. On this basis, 30 randomly selected scene images are used to further compare the IoU and Feature Coverage Rate (FCR) of the different methods, measuring the structural accuracy of the generated layouts and the comprehensiveness of feature extraction, as shown in Fig. 9.
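For readers unfamiliar with the metric, the IoU of two layout elements can be computed as follows. This is a minimal sketch that assumes axis-aligned bounding boxes given as `(x_min, y_min, x_max, y_max)`; the box format and the function name are assumptions for illustration, and the paper's own evaluation code may differ.

```python
def box_iou(box_a, box_b):
    """Intersection-over-Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

For example, two 2 × 2 boxes offset by one unit in each direction share an intersection of area 1 and a union of area 7, giving an IoU of 1/7.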

In Fig. 9 (a), the peak IoU of DEDN-VGAT LayoutGen reached 0.83 (at rounds 13 and 27–29), well above that of TS-SLG (0.70), SA-GAT (0.76), AWSP-JO (0.68), and StSG (0.70). The advantage lies in the multi-scale feature fusion of DEDN and the dynamic edge feature modeling of VGAT, whereas TS-SLG loses detail because of its two-stage sequential generation, and the spatiotemporal graph convolution of StSG struggles to capture static scene relationships (p < 0.01). In Fig. 9 (b), the FCR of DEDN-VGAT LayoutGen exceeded 90% (at rounds 3, 6, and 8–9), significantly better than that of TS-SLG (87.72%), SA-GAT (90.00%), AWSP-JO (83.28%), and StSG (85.37%). Its high FCR is attributed to the dynamic relationship refinement of DRRM and the HED edge enhancement, whereas SA-GAT is limited by its single-channel graph attention mechanism, and AWSP-JO suffers from performance fluctuations caused by the strong constraints of the Atlanta spatial assumption (p < 0.05). To evaluate the computational complexity of the model, the study compares Inference Time (IT) and Floating Point Operations (FLOPs), as shown in Fig. 10.

According to Fig. 10 (a), the IT of DEDN-VGAT LayoutGen ranged from 19.69ms to 31.81ms, significantly lower than that of TS-SLG (23.61ms to 39.29ms), StSG (28.48ms to 49.95ms), SA-GAT (25.80ms to 37.92ms), and AWSP-JO (31.31ms to 44.56ms). Its efficiency benefits from the lightweight decoder of DEDN and the sparse attention mechanism of VGAT, whereas the two-stage sequential computation of TS-SLG increases latency, and the global aggregation over the spatiotemporal graph in StSG leads to computational redundancy (p < 0.01). According to Fig. 10 (b), the FLOPs of DEDN-VGAT LayoutGen ranged from 46.80G to 66.04G, much lower than those of AWSP-JO (71.06G-94.82G), TS-SLG (57.10G-84.10G), StSG (65.25G-77.65G), and SA-GAT (61.06G-74.99G). Its low computational load is due to the efficient encoding of ResNet-50 and the local graph sparsity strategy of VGAT. By contrast, the computational cost of AWSP-JO rises sharply because of the intensive computation of the Atlanta spatial priors, and that of StSG rises sharply because of full graph convolution (p < 0.05). To investigate the effectiveness of the selected network model and optimization strategy, as well as the synergistic effects between modules, ablation experiments are conducted. The ablated modules are replaced with functionally equivalent conventional convolutions or ordinary GATs, as shown in Table 1.
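Inference-time ranges such as those above are typically obtained by timing repeated forward passes. The helper below is a generic sketch of such a measurement, not the authors' benchmarking procedure: `run_once` stands for any callable that performs one inference, and the warm-up and repeat counts are assumptions.

```python
import time


def measure_inference_time_ms(run_once, warmup=5, repeats=30):
    """Time repeated calls of `run_once` and report min, max, and mean in milliseconds.

    `run_once` must block until the inference has finished; on a GPU this
    usually means synchronizing the device inside the callable.
    """
    if repeats <= 0:
        raise ValueError("repeats must be positive")

    # Warm-up calls are excluded so one-off setup costs do not skew the result.
    for _ in range(warmup):
        run_once()

    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)

    return {
        "min_ms": min(samples),
        "max_ms": max(samples),
        "mean_ms": sum(samples) / len(samples),
    }
```

Reporting the minimum and maximum alongside the mean mirrors the way the inference times are presented as ranges in the comparison.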

According to Table 1, the complete DEDN-VGAT LayoutGen model (IoU = 0.82 and FCR = 94.64%) was significantly superior to all variants. Removing ResNet-50 resulted in a 13.4% decrease in IoU (due to backbone network degradation, p < 0.01). Disabling HED edge detection resulted in a 5.3% decrease in FCR (insufficient edge sensitivity, p < 0.05). Replacing VGAT with a regular GAT increased IT to 34.12ms (increased computational redundancy, p < 0.01). When EEFM and DRRM were removed simultaneously, IoU and FCR decreased by 15.9% and 10.9%, respectively, confirming the necessity of module collaboration (p < 0.001). Among the comparison methods, TS-SLG was inefficient because of its two-stage sequential computation, and the full graph convolution of StSG led to a surge in FLOPs (71.63G).

Actual performance testing

The simulation experiment results are an important reference for model performance, but the simulation environment ignores hardware computing power fluctuations, sensor noise, etc., making it difficult to fully reproduce the multidimensional coupling effects in real deployment. Actual performance testing can directly reflect the robustness, resource consumption, and deployment feasibility of the model under real hardware, dynamic scenes, and multi-source noise, verifying the practical application potential. Therefore, the research randomly selects scene images from actual photography sets as real scene testing targets. SA-GAT, TS-SLG, and DEDN-VGAT LayoutGen, which perform well in simulation experiments, are compared. The experimental parameters and model parameter settings are consistent with the simulation experiment settings. The research first compares the visual saliency feature recognition performance and image layout generation effect to evaluate the scene understanding and layout generation performance of the model, as shown in Fig. 11.

Comparison of actual model layout and feature extraction performance. (a) The butterfly image is sourced from https://pixabay.com/zh/photos/butterfly-insect-nature-wings-9528411/; (b) the bird image is sourced from https://pixabay.com/zh/photos/goose-nature-bird-animal-wildlife-9534014/.

According to Fig. 11 (a), DEDN-VGAT LayoutGen used its multi-modal interaction mechanism to fully separate the visually salient features, namely the flowers and the butterfly. The petal texture and the butterfly wing edges were clearly distinguishable. Because of its two-stage sequential generation strategy, TS-SLG could only extract the features of the butterfly subject, and the flower area was not completely decoupled from the background. SA-GAT was limited by its single-stream graph structure: although its butterfly contour recognition was complete, the flower features were mistakenly identified as background noise. As shown in Fig. 11 (b), the research set up a hat layout task. DEDN-VGAT LayoutGen accurately located the duck head area through its dynamic relationship inference and physical constraint modules, achieving a natural fit between the hat and the head. TS-SLG produced an offset between the hat and the center of the head because of insufficient local optimization. Lacking spatial relationship modeling, SA-GAT placed the hat well away from the target area, on the duck's bill. To quantify the actual saliency feature recognition and layout generation performance, the research further compared the IoU and FCR between the generated layouts and the preset layouts. The experimental results are shown in Fig. 12.

According to Fig. 12 (a), the IoU of DEDN-VGAT LayoutGen remained stable at 0.70–0.80 (reaching 0.80 in rounds 22 and 24), significantly higher than that of TS-SLG (0.71) and SA-GAT (0.75). Its performance advantage is attributed to its multi-scale feature fusion mechanism and the dynamic edge feature encoding of VGAT. TS-SLG suffers from local layout distortion because of its two-stage sequential generation architecture (p < 0.01). In SA-GAT, the single dimension of node interaction restricts global feature association (p < 0.05). According to Fig. 12 (b), the average FCR of DEDN-VGAT LayoutGen was 87.27% (highest 91.49%), which was better than that of TS-SLG (86.93%) and SA-GAT (88.98%). Its high FCR is attributed to HED edge enhancement and the dynamic relationship optimization of DRRM, whereas the step-by-step generation strategy of TS-SLG leads to cross-modal bias, and SA-GAT ignores physical constraints because of its adaptive graph structure (p < 0.05). The study selects 1000 scene images from an actual photography collection and uses DEDN-VGAT LayoutGen, TS-SLG, and SA-GAT to generate layout images. Meanwhile, a professor from the art department of a university (not disclosed due to a confidentiality agreement) is invited to carry out image layout analysis in parallel. The study selects 10 college students as volunteers to evaluate the layout images produced by the four methods across dimensions such as layout rationality, layout coherence, and semantic consistency [36]. Objective metrics including bounding box overlap rate, time-averaged IoU, and key feature retention rate are also calculated to corroborate the results, as shown in Table 2.

As shown in Table 2, the bounding box overlap rate of DEDN-VGAT LayoutGen (3.8%±0.7%) was significantly lower than that of TS-SLG (9.2%±1.5%, p < 0.001) and SA-GAT (6.9%±1.3%, p = 0.003). This advantage stems from the dynamic overlap penalty and spatial constraint modeling of the DRRM mechanism (Eq. 10). The time-averaged IoU of 0.91 ± 0.02 outperformed that of SA-GAT by 9.6% (p = 0.002), as VGAT graph attention precisely captures spatiotemporal dependencies between objects. The key feature retention rate of 95.2% ± 1.8% surpasses human expert design by 1.6% (p = 0.008), attributed to the explicit edge feature encoding enhancement of semantic control in EEFM. In subjective evaluations, the layout rationality (9.42 ± 0.21) and semantic consistency (9.43 ± 0.18) significantly outperformed those of the baseline model (TS-SLG difference > 1.32 points, p < 0.001), validating the efficiency and practicality of the heterogeneous architecture in multi-dimensional layouts. Additionally, the energy consumption and memory consumption required for unit layout generation tasks using different methods are compared to evaluate the feasibility of model deployment, as shown in Table 3.
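The objective metrics in Table 2 are not defined formally in this section, so the sketch below shows one plausible reading rather than the authors' definitions. It assumes that the bounding box overlap rate is the total pairwise intersection area divided by the total box area, and that the time-averaged IoU is the mean of per-frame IoU values; both function names are hypothetical.

```python
from itertools import combinations


def intersection_area(box_a, box_b):
    """Intersection area of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    width = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    height = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    return max(0.0, width) * max(0.0, height)


def overlap_rate(boxes):
    """Assumed definition: summed pairwise intersection area over summed box area."""
    total_area = sum((b[2] - b[0]) * (b[3] - b[1]) for b in boxes)
    if total_area == 0:
        return 0.0
    overlap = sum(intersection_area(a, b) for a, b in combinations(boxes, 2))
    return overlap / total_area


def time_averaged_iou(per_frame_ious):
    """Assumed definition: arithmetic mean of IoU values measured on each frame."""
    if not per_frame_ious:
        raise ValueError("at least one per-frame IoU value is required")
    return sum(per_frame_ious) / len(per_frame_ious)
```

Under this reading, a layout whose elements do not touch has an overlap rate of 0, and two 2 × 2 boxes sharing a 1 × 1 corner give 1/8 = 12.5%.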

According to Table 3, the energy consumption (average 54.92Wh) and memory usage (5.96GB) of DEDN-VGAT LayoutGen were significantly lower than those of TS-SLG (72.74Wh/6.59GB) and SA-GAT (68.80Wh/6.81GB). Its advantage lies in the lightweight decoder of DEDN and the local sparse graph calculation strategy of VGAT (p < 0.01). TS-SLG experiences a significant increase in energy consumption (peak 84.11Wh) due to the two-stage sequential process, while the single stream graph structure in SA-GAT lacks dynamic pruning mechanism, resulting in significant fluctuations in memory usage (6.11GB-7.45GB, p < 0.05). The experiment shows that the model effectively balances computational load and resource consumption through explicit edge compression of EEFM and iterative optimization of DRRM, verifying its efficiency and practicality in edge device deployment.
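The relative savings implied by the averages quoted above can be derived with a few lines of arithmetic. The sketch below uses only the mean energy and memory values stated in the text; the dictionary layout and function names are illustrative assumptions.

```python
# Mean energy (Wh) and memory (GB) per unit layout generation task, as quoted in the text.
TABLE3_MEANS = {
    "DEDN-VGAT LayoutGen": {"energy_wh": 54.92, "memory_gb": 5.96},
    "TS-SLG": {"energy_wh": 72.74, "memory_gb": 6.59},
    "SA-GAT": {"energy_wh": 68.80, "memory_gb": 6.81},
}


def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - value) / baseline * 100.0


def savings_vs_baselines(means, proposed="DEDN-VGAT LayoutGen"):
    """Relative reduction of the proposed model against every other method."""
    ours = means[proposed]
    return {
        name: {
            metric: relative_reduction(values[metric], ours[metric])
            for metric in values
        }
        for name, values in means.items()
        if name != proposed
    }


savings = savings_vs_baselines(TABLE3_MEANS)
```

Because the reduction is computed against each baseline separately, the percentage depends on which comparison method is taken as the reference.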

To validate the efficiency of the dynamic spatiotemporal modeling method, the study selects dynamic videos of a specific bird species for testing and chooses LayoutTransformer [37] as the baseline for comparison with DEDN-VGAT LayoutGen. LayoutTransformer adopts a serialized layout generation paradigm whose core mechanism is to model global dependencies between objects through a Transformer encoder-decoder structure. Its architecture is representative and allows the performance differences between heterogeneous architectures in dynamic scenarios to be verified. LayoutTransformer employs the Adam optimizer (β1 = 0.9, β2 = 0.98), with an initial learning rate of 0.0001 and a batch size of 64. The model is configured with a 6-layer Transformer encoder and a 6-layer decoder, a semantic embedding dimension of 512, and 8 attention heads. The feedforward network has a hidden layer dimension of 2,048, and the Gaussian mixture model has K = 5 components. The loss function includes a self-supervised relationship consistency loss (triplet constraint) and a Smooth L1 regression loss on the bounding boxes. The experimental results are shown in Fig. 13.

Dynamic spatiotemporal modeling efficiency verification.

As shown in Fig. 13, the heterogeneous architecture of DEDN-VGAT LayoutGen (DEDN temporal encoding + VGAT spatial reasoning) uses DRRM to explicitly model the leg joint motion chain, achieving a leg segmentation IoU of 0.92 in the frame at T = 15 s, which was 41.5% higher than that of LayoutTransformer. The latter loses local detail because of global probability sampling in the Gaussian mixture model, resulting in leg keypoint displacement errors of ±7.2px and a segmentation contour breakage rate of 68%. VGAT and EEFM capture limb rotation angles more accurately. When combined with the HED edge enhancement module, contour fidelity improved by 37%, with feather displacement errors of only ±2.1px, demonstrating superior modeling performance in fine-grained dynamic scenes.

Discussion and conclusion

A DEDN-VGAT LayoutGen scene image visual layout model was proposed to address insufficient multi-scale feature fusion and low efficiency in dynamic dependency modeling in complex scene layout generation. It achieved cross-modal feature extraction and dynamic relationship inference through DEDN and VGAT. HED was used to optimize edge sensitivity and semantic continuity, and EEFM and DRRM were used to enhance multi-modal feature alignment and spatiotemporal relationship inference. The experimental results showed that the proposed method significantly outperformed the comparison methods in layout accuracy (IoU improved by 14.7%), visual completion (FCR improved by 11.2%), and resource efficiency (energy consumption reduced by 24.3% and memory usage by 12.7%). It was close to the level of professional designers in 30 subjective ratings (difference < 0.05 points), verifying its comprehensive performance advantage in complex scenes. The model uses the multi-level skip connection architecture of DEDN to achieve cross-scale feature fusion, and reduces redundant computation through a sparse graph attention mechanism and a dynamic pruning strategy. The explicit distance encoding of EEFM and the overlap suppression module of DRRM jointly strengthen the physical constraints. In summary, the research model improves the accuracy of multi-scale feature fusion and the efficiency of dynamic relationship modeling, and can generate scene layouts that are structurally sound and visually natural. However, the model lacks real-time processing capability for high-resolution images (> 4K) and has not been specifically optimized for non-Manhattan layout scenes (such as natural landforms), which may affect its deployment in some special scenes. Future work will focus on lightweight model design (such as neural architecture search) and multi-modal prior knowledge fusion (such as text-layout joint modeling). Dynamic resolution adaptation strategies can also be explored to enhance the generalization ability of the model on edge devices and in complex scenes.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Swerdlow, A., Xu, R. & Zhou, B. Street-view image generation from a bird’s-eye view layout. IEEE Robot. Autom. Lett. 9 (4), 3578–3585. https://doi.org/10.1109/LRA.2024.3368234 (Apr. 2024).

Bassano, C., Chessa, M. & Solari, F. Visual working memory in immersive visualization: A change detection experiment and an image-computable model. Virtual Reality 27 (3), 2493–2507. https://doi.org/10.1007/s10055-023-00822-y (Jun. 2023).

Purohit, J. & Dave, R. Leveraging deep learning techniques to obtain efficacious segmentation results. Arch. Adv. Eng. Sci. 1 (1), 11–26. https://doi.org/10.47852/bonviewAAES32021220 (Mar. 2023).

Ma, J., Fan, A., Jiang, X. & Xiao, G. Feature matching via motion-consistency driven probabilistic graphical model. Int. J. Comput. Vis. 130 (9), 2249–2264. https://doi.org/10.1007/s11263-022-01644-2 (Sep. 2022).

De Souza, V. L. T., Marques, B. A. D., Batagelo, H. C. & Gois, J. P. A review on generative adversarial networks for image generation. Comput. Graph. 114 (1), 13–25. https://doi.org/10.1016/j.cag.2023.05.010 (Aug. 2023).

Zhang, G., Hong, X., Liu, Y., Qian, Y. & Cai, X. Video colorization based on variational autoencoder. Electronics 13 (12), 2412. https://doi.org/10.3390/electronics13122412 (Jun. 2024).

Marsman, M., van den Bergh, D. & Haslbeck, J. M. Bayesian analysis of the ordinal Markov random field. Psychometrika 90 (1), 146–182. https://doi.org/10.1017/psy.2024.4 (Jan. 2024).

Uzen, H., Turkoglu, M. & Hanbay, D. Multi-dimensional feature extraction-based deep encoder-decoder network for automatic surface defect detection. Neural Comput. Appl. 35 (4), 3263–3282. https://doi.org/10.1007/s00521-022-07885-z (Oct. 2022).

Guo, M. H., Lu, C. Z., Liu, Z. N., Cheng, M. M. & Hu, S. M. Visual attention network. Comput. Vis. Media 9 (4), 733–752. https://doi.org/10.1007/s41095-023-0364-2 (Jul. 2023).

Liu, C., Zhu, C., Xia, X., Zhao, J. & Long, H. FFEDN: feature fusion encoder decoder network for crack detection. IEEE Trans. Intell. Transp. Syst. 23 (9), 15546–15557. https://doi.org/10.1109/TITS.2022.3141827 (Sep. 2022).

Gao, H., Xiao, J., Yin, Y., Liu, T. & Shi, J. A mutually supervised graph attention network for few-shot segmentation: the perspective of fully utilizing limited samples. IEEE Trans. Neural Netw. Learn. Syst. 35 (4), 4826–4838. https://doi.org/10.1109/TNNLS.2022.3155486 (Mar. 2022).

Elasri, M., Elharrouss, O., Al-Maadeed, S. & Tairi, H. Image generation: A review. Neural Process. Lett. 54 (5), 4609–4646. https://doi.org/10.1007/s11063-022-10777-x (Mar. 2022).

Gao, L. et al. SceneHGN: hierarchical graph networks for 3D indoor scene generation with fine-grained geometry. IEEE Trans. Pattern Anal. Mach. Intell. 45 (7), 8902–8919. https://doi.org/10.1109/TPAMI.2023.3237577 (Jul. 2023).

Wu, D. Y., Le, T. N. H., Yao, S. Y., Lin, Y. C. & Lee, T. Y. Image collage on arbitrary shape via shape-aware slicing and optimization. IEEE Trans. Vis. Comput. Gr. 30 (8), 4449–4463. https://doi.org/10.1109/TVCG.2023.3262039 (Aug. 2023).

Sun, W. & Wu, T. Learning layout and style reconfigurable GANs for controllable image synthesis. IEEE Trans. Pattern Anal. Mach. Intell. 44 (9), 5070–5087. https://doi.org/10.1109/TPAMI.2021.3078577 (Sep. 2022).

Li, C. et al. VR + HD: video semantic reconstruction from spatio-temporal scene graphs. IEEE J. Sel. Top. Signal. Process. 17 (5), 935–948. https://doi.org/10.1109/JSTSP.2023.3323654 (Sep. 2023).

Zhang, X. et al. Robust feature learning for adversarial defense via hierarchical feature alignment. Inf. Sci. 560, 256–270. https://doi.org/10.1016/j.ins.2020.12.042 (Jun. 2021).

Wang, Q. et al. A single-stream adaptive scene layout modeling method for scene recognition. Neural Comput. Appl. 36 (22), 13703–13714. https://doi.org/10.1007/s00521-024-09772-1 (Apr. 2024).

Pintore, G., Bettio, F., Agus, M. & Gobbetti, E. Deep scene synthesis of Atlanta-world interiors from a single omnidirectional image. IEEE Trans. Vis. Comput. Gr. 29 (11), 4708–4718. https://doi.org/10.1109/TVCG.2023.3320219 (Nov. 2023).

Wen, Z. et al. Effects of view layout on situated analytics for multiple-view representations in immersive visualization. IEEE Trans. Vis. Comput. Gr. 29 (1), 440–450. https://doi.org/10.1109/TVCG.2022.3209475 (Jan. 2023).

Zhou, H. et al. AST-GNN: an attention-based spatio-temporal graph neural network for interaction-aware pedestrian trajectory prediction. Neurocomputing 445, 298–308. https://doi.org/10.1016/j.neucom.2021.03.024 (Jul. 2021).

Li, X., Huang, H., Zhao, H., Wang, Y. & Hu, M. Learning a convolutional neural network for propagation-based stereo image segmentation. Vis. Comput. 36, 39–52. https://doi.org/10.1007/s00371-018-1582-y (Jan. 2020).

Du, S. et al. IMG2HEIGHT: height estimation from single remote sensing image using a deep convolutional encoder-decoder network. Int. J. Remote Sens. 44 (18), 5686–5712. https://doi.org/10.1080/01431161.2023.2251185 (Feb. 2023).

Omer, Z. T. & Abbas, A. H. Image anomalies detection using transfer learning of ResNet-50 convolutional neural network. Indones. J. Electr. Eng. Comput. Sci. 27 (1), 198–205. https://doi.org/10.11591/ijeecs.v27.i1.pp198-205 (Jul. 2022).

Yu, R., Chen, W. & Zhu, B. Infrared and visible image fusion algorithm based on a cross-layer densely connected convolutional network. Appl. Opt. 61 (11), 3107–3114. https://doi.org/10.1364/AO.450633 (Apr. 2022).

Liu, N. et al. Seismic fault interpretation based on improved holistically-nested edge detection. Oil Geophys. Prosp. 57 (3), 499–509. https://doi.org/10.13810/j.cnki.issn.1000-7210.2022.03.001 (Mar. 2022).

Jin, Y., Li, Z. & Lu, T. The style transfer of photos and landscape paintings based on CycleGAN combined with nested edge detection module. Appl. Comput. Eng. 8 (1), 153–161. https://doi.org/10.54254/2755-2721/8/20230118 (2023).

Fenyi, A., Fenyi, I. & Asante, M. Colored edge detection using thresholding techniques. Recent Adv. Comput. Sci. Commun. 16 (4), 33–41. https://doi.org/10.2174/2666255816666220617092943 (Jun. 2022).

Lyu, Y. & Talebi, M. S. Double graph attention networks for visual semantic navigation. Neural Process. Lett. 55 (7), 9019–9040. https://doi.org/10.1007/s11063-023-11190-8 (Mar. 2023).

Tang, Z. et al. Joint graph attention and asymmetric convolutional neural network for deep image compression. IEEE Trans. Circuits Syst. Video Technol. 33 (1), 421–433. https://doi.org/10.1109/TCSVT.2022.3199472 (Jan. 2022).

Wang, S., Yang, J. & Shang, F. A personalized recommendation model with multimodal preference-based graph attention network. J. Supercomput. 80, 22020–22048. https://doi.org/10.1007/s11227-024-06200-y (Jun. 2024).

Dong, S., Zhou, W., Xu, C. & Yan, W. EGFNet: Edge-aware guidance fusion network for RGB-thermal urban scene parsing. IEEE Trans. Intell. Transp. Syst. 25 (1), 657–669. https://doi.org/10.1109/TITS.2023.3306368 (Jan. 2023).

Yang, M. et al. Vehicle interactive dynamic graph neural network-based trajectory prediction for internet of vehicles. IEEE Internet Things J. 11 (22), 35777–35790. https://doi.org/10.1109/JIOT.2024.3362433 (Nov. 2024).

Zhong, L., Wu, J., Li, Q., Peng, H. & Wu, X. A comprehensive survey on automatic knowledge graph construction. ACM Comput. Surv. 56 (4), 1–62. https://doi.org/10.1145/3618295 (Sep. 2023).

Jamali, M., Davidsson, P., Khoshkangini, R., Ljungqvist, M. G. & Mihailescu, R. C. Context in object detection: A systematic literature review. Artif. Intell. Rev. 58 (6), 1–89. https://doi.org/10.1007/s10462-025-11186-x (Mar. 2025).

Lin, W. et al. Research on cognitive evaluation of forest color based on visual behavior experiments and landscape preference. PLoS ONE. https://doi.org/10.1371/journal.pone.0276677 (Nov. 2021).

Yang, C. F., Fan, W. C., Yang, F. E. & Wang, Y. C. F. LayoutTransformer: Scene layout generation with conceptual and spatial diversity. Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), 3731–3740. https://doi.org/10.1109/CVPR46437.2021.00373 (Jun. 2021).

Funding

This paper is part of the phased research results of the education and teaching reform Project of “North Guangdong Minority Clothing Innovation Design Research Team” of Guangdong University of Science and Technology (No. GKY-2020CQTD-7); This article is one of the phased research results of the 2024 doctoral research project “Research on the Spiritual Connotation and Design Expression of Zhuang Ethnic Group in the Perspective of AI Intelligence” at Guangdong University of Science and Technology (No. GKY-2024BSQDW-79).

Author information

Contributions

Z.Y.Z. processed the numerical attribute linear programming of communication big data, and the mutual information feature quantity of communication big data numerical attribute was extracted by the cloud extended distributed feature fitting method. Y.H. combined with fuzzy C-means clustering and linear regression analysis, the statistical analysis of big data numerical attribute feature information was carried out, and the associated attribute sample set of communication big data numerical attribute cloud grid distribution was constructed. P.W. and X.S.Z. did the experiments, recorded data, and created manuscripts. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, Z., He, Y., Wang, P. et al. Scene image visual layout based on deep encoder–decoder network and visual image attention model. Sci Rep 15, 33080 (2025). https://doi.org/10.1038/s41598-025-16515-2

DOI: https://doi.org/10.1038/s41598-025-16515-2