Abstract

In multi-objective particle swarm optimization, balancing the convergence and diversity of solutions remains a central challenge. To address it, this paper proposes a novel multi-objective particle swarm algorithm, ASDMOPSO, which improves optimization efficiency through angular division of the external archive and a dynamic update strategy. The algorithm divides the external archive into regions of equal angle and classifies non-dominated solutions accordingly, enabling fine-grained management of solutions and maintaining their diversity throughout the optimization process. When the external archive overflows, the algorithm uses the crowding distance metric to remove solutions from the highest-density region. The paper also presents a multi-stage initialization approach that splits the random initial population into two subpopulations and optimizes one with a genetic algorithm and the other with a differential evolution algorithm, thereby improving the quality of the initial population. To explore the solution space more efficiently, a dynamic flight parameter adjustment technique tunes the algorithm's parameters in real time to balance exploration and exploitation. The proposed algorithm is compared with several representative multi-objective optimization algorithms on 22 benchmark functions, supported by statistical tests, sensitivity analysis, and complexity analysis. The experimental results show that ASDMOPSO is more competitive than the comparison algorithms, with significantly improved optimization efficiency. For example, on the ZDT4 test function its average IGD value is 0.032, better than that of the standard PSO algorithm and of all other comparison algorithms, demonstrating the algorithm's advantage on complex multi-objective optimization problems.


Introduction

In most real-world situations, several objective functions must be optimized simultaneously; such problems are known as multi-objective optimization problems (MOP). Because an MOP involves multiple conflicting objectives, solving it is typically highly complex, which poses a considerable challenge to researchers. Such problems arise in cloud computing task scheduling1,2, renewable energy dispatch3,4, electric vehicle charging scheduling5,6, traffic signal control7,8, path planning9,10, and other fields of great practical significance.

In recent years, metaheuristic algorithms have become a mainstream tool for solving complex optimization problems because they do not rely on problem-specific domain knowledge and possess strong global search capabilities. In the book “Metaheuristics in Machine Learning: Theory and Applications,” Oliva et al.5 systematically reviewed applications of metaheuristic algorithms in machine learning and pointed out that, by building search strategies that mimic natural phenomena, these algorithms can efficiently handle high-dimensional, nonlinear, and multi-constraint optimization problems. A variety of other metaheuristic strategies are also widely used in this field, such as the Dragon Lizard Optimization Algorithm (DLO)12, the Fishing Cat Optimization Algorithm (FCO)13, the Gazelle Optimization Algorithm (GOA)14, the Mixed Moth Flame Optimization Algorithm (MFO)15, the Bat Inspired Algorithm (BA)16, the Chimpanzee Optimization Algorithm (ChOA)17, the Black Widow Optimization Algorithm (BWO)18, and the Particle Swarm Optimization Algorithm (PSO)19. The DLO algorithm draws inspiration from the distinctive biological behaviors of dragon lizards (such as gliding mechanisms and adaptive strategies); by transforming these survival techniques into algorithmic operators, it constructs an efficient search pattern for complex global optimization problems. The FCO algorithm divides the optimization process into four independent stages, each employing a different search method, to maximize the diversity of the search population and balance global exploration with local fine-tuning. The GOA algorithm is inspired by the ability of gazelles to survive in predator-dominated environments: during the exploitation phase, the algorithm simulates the gazelle's calm foraging state.
When it detects threat signals resembling predators, it switches to the exploration phase to mitigate their impact. The two phases alternate until the termination condition is met and the optimal solution is found. The MFO algorithm simulates the phototaxis of moths, modeling the flight paths and aggregation behavior of moths seeking light sources. The BA algorithm simulates how bats emit sounds and receive echoes to locate prey in flight, turning this natural phenomenon into a mathematical optimization process. The ChOA algorithm is inspired by the social behavior of chimpanzees and simulates their strategies in hunting and territorial competition. BWO performs global search by simulating the predatory behavior of black widow spiders.

PSO, a classic swarm intelligence method, is widely recognized as effective for solving optimization problems owing to its fast convergence and ease of implementation. Inspired by the flocking behavior of birds, it can efficiently handle multi-objective optimization problems and improve computational efficiency. Besides single-component heuristic algorithms, hybrid heuristic algorithms that integrate the strengths of different algorithms have become a core strategy for solving complex optimization problems. The traditional PSO algorithm suffers from a rapid loss of particle diversity, which makes it prone to falling into local optima and hinders accurate location of the global optimum. To mitigate this problem, Meddour et al.20 proposed a hybrid DE-PSO algorithm that combines the global search capability of differential evolution (DE) with the local search strength of PSO. However, this algorithm is designed for continuous spaces, which makes it poorly suited to discrete photovoltaic control problems, where relaxing discrete variables into continuous ones degrades solution quality. In smart contract security detection, traditional methods struggle to balance global search and pattern recognition capabilities; AlShorman et al.21 proposed a PSO-ML hybrid framework that couples particle swarm optimization with machine learning, effectively improving detection efficiency. To address PSO's tendency to become trapped in local optima in complex constrained optimization, Shehab et al.22 proposed the MFO-PSO hybrid algorithm, in which moths first locate the region of the global optimum via spiral paths and particles then use social information to accelerate convergence.
Shehab et al.23 also integrated cuckoo search (CS) with the bat algorithm (BA), combining cuckoo brood parasitism with bat echolocation, and achieved better optimization performance on benchmark functions than either algorithm alone. The same authors24 proposed a CS-based hybrid method that combines Lévy-flight random search with local exploitation, significantly improving optimization performance on high-dimensional benchmark functions. Although CS excels at global optimization thanks to the random search capability of Lévy flights, it tends to become trapped in local optima or converge slowly on multi-constrained, nonlinear engineering problems. To address this, Shehab et al.25 introduced a reinforcement learning (RL) mechanism that dynamically adjusts search strategies, such as step size and direction, to adapt to changing constraints, significantly improving adaptability and solution quality in structural optimization. Abualigah et al.26 proposed a hybrid harmony search (HS) feature selection method that mimics the search for musical harmony to identify optimal feature combinations, with significant results. In each PSO iteration, particles update their velocity and position based on their own best position and the best position found by the swarm. Because the objective functions may conflict, no single solution optimizes all of them; the result is a set of trade-off solutions, the Pareto optimal set (PS), whose image in objective space is the Pareto front (PF)27.

To construct a solution set that uniformly covers the approximate Pareto front, an appropriate archiving method is essential. In MOPSO, the strategies used to update and maintain the external archive are critical to both the convergence and the diversity of the Pareto front solutions28. Because the number of non-dominated solutions can grow rapidly, researchers typically rely on an external archive to manage them. The academic community has developed various density-based archive update techniques, among which adaptive grid methods and crowding distance methods are particularly prominent. The effectiveness of adaptive grid methods depends on the initial distribution of solutions and the shape of the objective space. In high-dimensional or discontinuous spaces, dimensionality can significantly distort the diversity assessment, and when particles move beyond the grid boundaries, re-dividing the grid considerably increases computational cost. By contrast, the angle-based archiving mechanism proposed in this paper can store all non-dominated solutions in the external archive at once, effectively reducing computational complexity. To improve grid-based archiving, Leng et al.29 used a grid strategy to manage the external archive and optimize global sampling learning, and introduced an optimized grid distance; experiments show that the method balances diversity preservation with fast convergence. Building on this, Li et al.30 proposed a dual method combining crowding density and advantage variance estimation, in which distribution density is measured by the crowding distance between a solution and its neighbors. This method improves convergence speed while avoiding local optima.
Su et al.31 proposed a dual-archive framework with a convergence archive and a diversity archive, generating better candidate sets through crossover and mutation to search for the global optimum, which significantly improved performance. Other researchers integrated the Parallel Cell Coordinate System (PCCS)32,33,34 into MOPSO to overcome the limitations of traditional methods. However, this approach struggles to capture pairwise correlations between dimensions, and as the number of dimensions grows, the coordinate axes become increasingly dense, making high-dimensional structures considerably harder to interpret. In contrast, the angle division proposed in this paper is a fixed region-division method whose number of divisions does not grow with the number of dimensions, so interpreting high-dimensional structures is comparatively easier.

The quality of the initial population is a key factor in the effectiveness of MOPSO. Most current methods generate the initial population randomly, which leads to poor convergence and introduces random errors, thereby reducing overall stability. Optimizing the initialization stage is therefore of significant practical importance, and researchers have proposed various strategies to improve initial population quality. Shehab et al.35 designed an opposition-based multi-verse optimizer (OMVO) that uses opposition-based learning to generate opposite solutions for candidate solutions and retains the better ones, effectively improving the quality of initial solutions. However, this method must compute the opposite solutions of all particles in every iteration, which increases computational complexity. Daoud et al.36 proposed a gradient-based optimizer (GBO) that integrates gradient information with population search mechanisms, offering a new approach to initialization. However, its initialization relies on gradient information from the objective function (the gradient search rule, GSR), so the problem must be differentiable or have approximable gradients; for non-differentiable or discontinuous problems, the initialization mechanism may be ineffective or inefficient. Abualigah et al.37 proposed a GBO that exploits the synergy between the gradient search rule (GSR) and the local escaping operator (LEO) to escape local optima while maintaining strong exploration. However, the dynamic step-size update rule on which LEO relies suffers from premature convergence. Kuyu et al.38 proposed a dynamic damage-repair mechanism for generating initial solutions, which corrects defects in the initial solution set and improves search accuracy and efficiency.
By contrast, traditional random initialization tends to produce unstable populations, which can cause erratic algorithm behavior.

Beyond initial population optimization, flight parameters (such as the learning factors and the inertia weight) significantly affect optimization performance and efficiency, because they directly govern particle interactions and movement in the solution space. Traditional MOPSO uses fixed parameter settings, which adapt poorly to dynamic optimization problems: when the search landscape changes, fixed parameters can lead to poor convergence, insufficient particle diversity, and a higher risk of premature convergence. To address this issue, researchers have proposed various adaptive parameter adjustment strategies. Han et al.39 designed a parameter tuning method based on particle-to-target distance and population diversity that dynamically balances local exploitation and global exploration to help the algorithm escape local optima. However, this method relies only on whether population distances are increasing or decreasing and does not consider diversity factors specific to multi-objective optimization, limiting its general applicability. Li et al.40 proposed an adaptive collaborative optimization framework that combines particle swarm optimization, Bayesian optimization, and moving-average methods to dynamically adjust process parameters. Although this approach improves production quality and efficiency, the parameters are optimized independently without a collaborative constraint model, which can lead to conflicting parameter combinations. Eschwege et al.41 introduced a “belief space” into the PSO framework, building a model of each particle's beliefs about the environment and its own state; this enables self-adaptive parameter adjustment and a dynamic balance of search behavior, offering a new theoretical perspective on adaptive PSO design and an effective way to improve optimization performance in complex scenarios.
Zhang et al.42 proposed a new approach to parameter optimization that analyzes the diversity and convergence of dynamic equilibrium algorithms through particle conversion efficiency (CE) and coefficient adjustment (AC). However, their study lacks a sensitivity analysis of the core parameters, making it difficult to fully validate the stability and superiority of the method under different parameter configurations. Wang et al.43 improved clustering performance by dynamically assessing feature importance and adjusting feature weights; their adaptive weight update is based on iterative optimization of the membership matrix U and the cluster centers C(r). However, U and C(r) are sensitive to initial values, which may cause the weight adjustment to become trapped in local optima, limiting the practical value of the algorithm.

Beyond improving individual algorithms, many scholars have applied optimization algorithms to real-world scenarios. Shehab et al.44 proposed an improved gradient-based optimizer (IGBO) for practical engineering problems, which balances exploration and exploitation by dynamically adjusting the gradient step size α (decaying exponentially with the number of iterations). However, in engineering problems where the objective function changes drastically, a fixed decay rate may cause premature convergence to a local optimum. For complex problems such as renewable energy scheduling, prediction uncertainty must be balanced against the dynamic adaptability of the optimization strategy. Leveraging swarm intelligence, PSO has been widely applied in engineering, economics, and computer science and has also shown great potential in biomedical diagnostics. For example, Nemudraia et al.45 used the sequence specificity of the Type III CRISPR system to capture and concentrate viral RNA, significantly enhancing the sensitivity of SARS-CoV-2 detection, an achievement that relied on careful tuning of experimental parameters. In addition, Shehab et al.46 noted in their review that neural networks are widely applied in engineering, but their performance depends heavily on architecture and parameter settings, and optimizing these is complex and time-consuming. PSO supports such parameter optimization through swarm intelligence search, helping to accelerate convergence and avoid local optima.

In summary, refining the external archive maintenance strategy, improving initial population generation, and dynamically adjusting parameters make MOPSO more efficient and stable on complex multi-objective optimization problems, improving convergence speed, solution accuracy, and adaptability in dynamic environments. This paper introduces an archive-based method that manages the archive through angle-based partitioning and dynamic updates, balancing population diversity and convergence and significantly enhancing algorithm performance. The main contributions of this study are as follows:

1. In ASDMOPSO, an external archive mechanism that divides regions by angle is proposed; it assigns every non-dominated solution to a suitable region and guides the particles to converge quickly toward the true Pareto front (PF). In addition, when the external archive overflows, a crowding distance metric is used to preferentially remove the most crowded non-dominated solutions in the highest-density region, retaining high-quality solutions and eliminating poor ones. This design accelerates convergence while preserving population diversity.

2. This study proposes a multi-step initialization strategy to obtain better initial populations. First, diverse solutions are generated randomly and split into high- and low-similarity subpopulations using a similarity metric: the high-similarity subpopulation supports local exploitation and the low-similarity subpopulation supports global exploration. A genetic algorithm refines the high-similarity subpopulation through selection, crossover, and mutation, and a differential evolution algorithm improves the low-similarity subpopulation through mutation and recombination. Combining the two algorithms exploits the strengths of each and improves the efficiency and effectiveness of the subsequent optimization.

3. This research introduces a dynamic flight parameter tuning strategy that adjusts the inertia weight and learning factors based on real-time monitoring of population dynamics, such as particle distribution and diversity. Experiments indicate that this approach outperforms traditional fixed-parameter methods, achieving faster convergence and higher-quality solutions.

The remainder of this paper is organized as follows. The “Preliminary work” section reviews the background on multi-objective particle swarm optimization. “The main algorithm of ASDMOPSO” section presents the key elements of the proposed ASDMOPSO algorithm: the external archive update mechanism, the population initialization method, and the dynamic flight parameter adjustment strategy. Section 4 validates the performance of the proposed algorithm through comparative experiments with other multi-objective particle swarm optimization algorithms (MOPSO) and multi-objective evolutionary algorithms (MOEA), together with non-parametric statistical tests (the Friedman rank test and the Wilcoxon test). Finally, Sect. 5 summarizes the research on ASDMOPSO and outlines future research directions.

Preliminary work

Multi-objective optimization problem

A multi-objective optimization problem (MOP) comprises several conflicting objectives and is defined by decision variables, objective functions, and constraints. For minimization, it is typically formulated as:
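Written out in standard form from the symbol definitions in the next paragraph, this reads as follows; the vector notation \(F(x)\) is introduced here for convenience, and the bounds are assumed to apply componentwise:

$$
\begin{aligned}
\min_{x}\quad & F(x) = \left( f_{1}(x), f_{2}(x), \cdots, f_{m}(x) \right)^{T} \\
\text{s.t.}\quad & g_{i}(x) \ge 0, \quad i = 1, 2, \cdots, q \\
& h_{j}(x) = 0, \quad j = 1, 2, \cdots, r \\
& x = \left( x_{1}, x_{2}, \cdots, x_{n} \right), \quad X^{L} \le x \le X^{U}
\end{aligned}
$$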

In these formulas, \(m\) is the number of objective functions and \(n\) is the dimension of the decision variable; \(X^{U}\) and \(X^{L}\) are the upper and lower bounds of the decision variables, respectively. \(f_{i} \left( x \right)\) is the \(i\)-th objective function, \(g_{i} \left( x \right) \ge 0,\left( {i = 1,2, \cdots ,q} \right)\) are the \(q\) inequality constraints, and \(h_{j} \left( x \right) = 0,\left( {j = 1,2, \cdots ,r} \right)\) are the \(r\) equality constraints.

Particle swarm optimization

Particle Swarm Optimization (PSO), proposed by Kennedy and Eberhart in 1995 and inspired by the social behavior of bird flocks and fish schools, is a swarm intelligence algorithm. Known for its simplicity and fast convergence, it is an iterative method that starts from randomly initialized candidate solutions, and it combines strong local search capability with robust global search potential. In the basic multi-objective setting, the swarm consists of particles, and each particle's current position in the \(n\)-dimensional search space represents a candidate solution to the optimization problem. The particles move through the search space with a velocity that determines the direction and distance of their next move, and this velocity is adjusted on the fly according to each particle's own flight history and that of the whole swarm. At each iteration, the following equations are used to update the particles' velocity and position.
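In the standard form implied by the symbol definitions that follow, with \(x_{i}(t)\) and \(v_{i}(t)\) denoting the position and velocity of particle \(i\) at iteration \(t\) (notation assumed here), the update is:

$$
\begin{aligned}
v_{i}(t+1) &= \omega v_{i}(t) + c_{1} r_{1} \left( pbest_{i} - x_{i}(t) \right) + c_{2} r_{2} \left( gbest - x_{i}(t) \right) \\
x_{i}(t+1) &= x_{i}(t) + v_{i}(t+1)
\end{aligned}
$$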

where \(\omega\) is the inertia weight, \(t\) is the iteration number, and \(c_{1}\) and \(c_{2}\) are the self-learning and social learning factors, respectively; these acceleration coefficients control how strongly the particle velocity is pulled toward \(pbest_{i}\), the best position found so far by particle \(i\), and \(gbest\), the best position found by the swarm. Both \(r_{1}\) and \(r_{2}\) are random numbers uniformly distributed in \(\left[ {0,1} \right]\). All symbols are listed in Table 1.

The velocity update formula has three components. The first, the memory (inertia) term, carries over the magnitude and direction of the particle's previous velocity and so maintains continuity of motion. The second, the self-cognition term, reflects the particle's learning from its own past experience; it gives the particle strong local search capability, allowing it to fine-tune its position within a limited area to find a better solution. The third, the social-cognition term, captures how particles collaborate and share knowledge through group learning, thereby exploring the space for better solutions.
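The three-term update can be sketched in Python for a single particle. This is a minimal illustration rather than the authors' implementation: the function name `pso_step` is hypothetical, positions and velocities are plain lists, and \(r_{1}\) and \(r_{2}\) are assumed to be drawn independently for each dimension, a common convention the text does not specify.

```python
import random
from typing import List, Sequence, Tuple


def pso_step(x: Sequence[float], v: Sequence[float], pbest: Sequence[float],
             gbest: Sequence[float], w: float, c1: float, c2: float,
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Hypothetical single-particle PSO update (inertia + cognitive + social)."""
    new_x: List[float] = []
    new_v: List[float] = []
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()  # U[0, 1), drawn per dimension
        vd = (w * v[d]                        # memory (inertia) term
              + c1 * r1 * (pbest[d] - x[d])   # self-cognition term
              + c2 * r2 * (gbest[d] - x[d]))  # social-cognition term
        new_v.append(vd)
        new_x.append(x[d] + vd)               # x(t+1) = x(t) + v(t+1)
    return new_x, new_v
```

For example, when a particle already sits at both \(pbest_{i}\) and \(gbest\), only the memory term survives, so it simply coasts by \(\omega v_{i}(t)\); this is why a decaying or adaptive \(\omega\) matters for settling the swarm.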

The main algorithm of ASDMOPSO

Balancing the diversity and convergence of solutions poses significant challenges for external archive management. The core of ASDMOPSO consists of the external archive update and maintenance strategy, the population initialization strategy, and the dynamic flight parameter tuning strategy. This section gives an in-depth overview of the proposed algorithm. As depicted in Fig. 1, its overall structure comprises three primary components. First, a multi-step method initializes the population to enhance its quality. Second, to store and update particles more efficiently, ASDMOPSO introduces a new external archive update strategy: it splits the external archive into multiple regions of equal angle and assigns each non-dominated solution to the region corresponding to its angle, so that every solution belongs to exactly one region. When the external archive exceeds its predefined capacity, the most crowded non-dominated solutions in the densest region are eliminated using the crowding distance indicator, which drives the population toward the true Pareto front (PF) and promotes the convergence of non-dominated solutions. Third, the algorithm adjusts its flight parameters dynamically based on feedback about the particle distribution to further improve overall performance.

Update and maintenance of external archives

In MOPSO, screening and retaining high-quality particles is the core component: by learning from the best-performing particles, the swarm produces solutions with both better convergence and better diversity. A vital element of this process is the external archive, which records the non-dominated solutions found during the search and supplies a refined pool of high-quality candidates, thereby enhancing overall optimization performance. When the number of non-dominated solutions exceeds the archive's capacity, some must be removed to keep the algorithm stable, and this section focuses on choosing which solutions to keep so that both convergence and diversity are preserved. In the traditional MOPSO algorithm, when the external archive is full, non-dominated solutions are removed at random from the densest grid region, while the newly added solution is never deleted. Although this approach effectively controls the size of the archive, its randomness may cause high-quality convergent solutions to be deleted while newly added low-quality solutions are retained. Effective management of the external archive is therefore essential in MOPSO.

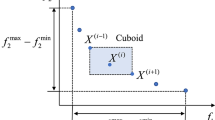

This study introduces the following optimization strategy. First, to account for all non-dominated solutions, the external archive is divided into thirty-six regions \(Q\) of equal angle, and each solution is assigned to a region according to its angle with respect to the reference vector, as shown in Fig. 2a. As shown in Fig. 2b, when the number of solutions exceeds the capacity of the external archive, the distribution of non-dominated solutions in the objective space is examined to identify the region with the highest density. The algorithm then calculates the Euclidean distances between particles in that region to quantify its degree of crowding and, based on this assessment, removes the most crowded non-dominated solutions first so that the solutions in the archive are distributed more uniformly. Figure 2c visualizes this process. If the external archive still exceeds its capacity after these steps, the process is repeated. To keep the solutions evenly distributed along the Pareto front and to maintain diversity, this study always retains the boundary solutions.
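Formula (6) can be written in the common crowding-distance form below. This is a reconstruction from the symbol definitions that follow and rests on assumptions: \(C_{i,j}\) denotes the \(j\)-th objective value of the \(i\)-th solution after sorting by the first objective, no per-objective normalization is applied, and the two boundary solutions (which this study always retains) are treated as having infinite distance.

$$
Dis_{i} = \sum_{j = 1}^{m} \left| C_{i + 1,j} - C_{i - 1,j} \right|
$$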

In formula (6), \(C\) denotes the objective function values of all Pareto-optimal solutions; \(i\) indexes the non-dominated solutions sorted in ascending order of their first objective value; \(j\) denotes the \(j\)-th objective dimension (coordinate) of the \(i\)-th non-dominated solution; and \(Dis\) denotes the crowding distance of a non-dominated solution.

The external archive is updated according to the following principles: new solutions are accepted first, and then the solutions with the smallest crowding distance (that is, the most crowded ones) in the densest region are removed. This process eliminates poorly converging, non-diverse particles from the external archive and keeps the solution set evenly distributed. Pruning continues until the number of non-dominated solutions no longer exceeds the capacity of the external archive. The pseudocode of the archiving operation is given in Algorithm 1, a more intuitive illustration is given in Fig. 2, and Fig. 3 provides a flowchart of Algorithm 1 to aid understanding of the pseudocode.
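The archive update described above can be approximated by the Python sketch below. It is illustrative only and relies on assumptions the text does not fix: two minimized objectives; each solution's angle measured from the \(f_{1}\) axis after min-max normalization of the archive, standing in for the unspecified reference vector, with \([0^{\circ}, 90^{\circ}]\) split into \(Q = 36\) equal sectors; crowding distance computed over the whole archive in the unnormalized form of formula (6) rather than from within-region Euclidean distances; and the two extreme solutions given infinite distance so they are never removed. All function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (f1, f2), both minimized


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated(points: List[Point]) -> List[Point]:
    unique = list(dict.fromkeys(points))  # drop exact duplicates, keep order
    return [p for p in unique if not any(dominates(q, p) for q in unique)]


def region_index(p: Point, lo: Point, hi: Point, q: int) -> int:
    # Normalize to [0, 1]^2, take the angle from the f1 axis (0..pi/2),
    # and map it to one of q equal-angle sectors.
    n1 = (p[0] - lo[0]) / ((hi[0] - lo[0]) or 1.0)
    n2 = (p[1] - lo[1]) / ((hi[1] - lo[1]) or 1.0)
    theta = math.atan2(n2, n1)
    return min(int(theta / (math.pi / 2) * q), q - 1)


def crowding(points: List[Point]) -> Dict[Point, float]:
    # Sort by f1; interior distance = sum_j |C[i+1][j] - C[i-1][j]|;
    # the two extreme (boundary) solutions get +inf and are always kept.
    s = sorted(points)
    dis = {s[0]: math.inf, s[-1]: math.inf}
    for i in range(1, len(s) - 1):
        dis[s[i]] = sum(abs(s[i + 1][j] - s[i - 1][j]) for j in range(2))
    return dis


def update_archive(archive: List[Point], new: List[Point],
                   capacity: int, q: int = 36) -> List[Point]:
    if capacity < 2:
        raise ValueError("capacity must be at least 2 to keep both boundaries")
    pool = nondominated(archive + new)  # new solutions are accepted first
    while len(pool) > capacity:
        lo = (min(p[0] for p in pool), min(p[1] for p in pool))
        hi = (max(p[0] for p in pool), max(p[1] for p in pool))
        dis = crowding(pool)
        regions: Dict[int, List[Point]] = {}
        for p in pool:
            regions.setdefault(region_index(p, lo, hi, q), []).append(p)
        # Densest region that still contains a removable (non-boundary) solution.
        candidates = [r for r in regions.values()
                      if any(math.isfinite(dis[p]) for p in r)]
        densest = max(candidates, key=len)
        victim = min(densest, key=lambda p: dis[p])  # most crowded member
        pool.remove(victim)
    return pool
```

Recomputing the normalization and crowding distances after every single removal is simple but quadratic; it mirrors the "repeat until within capacity" loop of the text rather than aiming for efficiency.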

Multi-step initialization

Prior studies have shown that advanced initialization techniques can substantially improve the chance of finding a globally optimal solution, reduce the uncertainty of search results, and lower computational cost while enhancing solution quality. Currently, the initial populations of most MOPSO algorithms are generated from random distributions, and this randomness may bias the search results, which in turn affects the convergence accuracy and speed of the algorithm. Initialization mechanisms fall into three main categories: random, combinatorial, and generalized. Among them, the multi-step technique within the combinatorial category is particularly prominent. A multi-step technique consists of two or more components, each of which typically further processes and improves the population generated in the previous step. To enhance both the quality and the diversity of the initial population, this study therefore designs a multi-step initialization mechanism, whose overall structure is illustrated in Fig. 4.

First, a random population is generated, and the whole population is then divided, with the help of hierarchical clustering, into two markedly different subpopulations: the high-similarity subpopulation (\(P_{1}\)) and the low-similarity subpopulation (\(P_{2}\)). The similarity threshold is determined from how densely the particles are packed, as follows. First, the average Euclidean distance between each particle and all the other particles is computed. These per-particle average distances are then combined by a quadratic mean (root mean square), and the result is used as the similarity threshold. A particle whose average Euclidean distance is less than this threshold is assigned to the high-similarity subpopulation (\(P_{1}\)); otherwise, it is assigned to the low-similarity subpopulation (\(P_{2}\)). This yields a binary partition of the population into \(P_{1}\) and \(P_{2}\).
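The threshold rule above can be sketched as follows. This hypothetical `split_by_similarity` function implements only the distance-threshold partition described in the text, not the hierarchical clustering step, and it reads "quadratically averaged" as the root mean square of the per-particle average distances, which is an interpretation.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def split_by_similarity(pop: List[Vector]) -> Tuple[List[Vector], List[Vector], float]:
    """Hypothetical split into high-similarity P1 and low-similarity P2."""
    n = len(pop)
    if n < 2:
        raise ValueError("need at least two particles")
    # Average Euclidean distance from each particle to all the others.
    avg = [sum(math.dist(pop[i], pop[k]) for k in range(n) if k != i) / (n - 1)
           for i in range(n)]
    # Threshold: quadratic mean (root mean square) of those averages.
    threshold = math.sqrt(sum(a * a for a in avg) / n)
    p1 = [p for p, a in zip(pop, avg) if a < threshold]   # high similarity
    p2 = [p for p, a in zip(pop, avg) if a >= threshold]  # low similarity
    return p1, p2, threshold
```

Because a quadratic mean is never smaller than the arithmetic mean, this threshold tends to place more particles in \(P_{1}\) than a plain average would, leaving \(P_{2}\) to the clearly isolated ones.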

In previous studies, algorithms often refine the population by randomly selecting particles to mutate, a practice that slows convergence and increases the risk of the population becoming trapped in a local optimum. Because the particles of the high-similarity subpopulation (\(P_{1}\)) are densely distributed, this paper applies a genetic algorithm (GA) to locally optimize \(P_{1}\) and thereby construct a diverse subpopulation \(P_{C}\). At the same time, the differential evolution (DE) algorithm is applied to the low-similarity subpopulation (\(P_{2}\)) to search for the global optimum, yielding a high-quality subpopulation \(P_{D}\) with good convergence. Finally, the two optimized subpopulations are merged to form the initial population Newp. The procedure is given as pseudocode in Algorithm 2, and a flowchart of Algorithm 2 is provided in Fig. 5.

Genetic algorithm optimization

Genetic algorithms, inspired by natural selection and genetics, are well suited to local refinement. During initialization, this study uses a genetic algorithm to refine the high-similarity subpopulation \(P_{1}\). Because its individuals closely resemble one another, the subpopulation is compact, and local optimization with a genetic algorithm can markedly improve the quality of the initial population. The procedure follows Algorithm 3, whose flowchart is shown in Fig. 6.

This study follows the standard genetic algorithm procedure, with the mutation probability set to 0.1 to balance global search and local optimization. First, hierarchical clustering selects the high-similarity subpopulation \(P_{1}\), which is then initialized, and the fitness of every individual in \(P_{1}\) is evaluated. The core steps are crossover and mutation: two parents are chosen at random, and crossover combines their genetic material to produce an offspring. A random number is then drawn and compared with the mutation probability; if it is smaller, a mutation is applied to introduce new genetic traits and increase population diversity; otherwise, the offspring's genetic information is left unchanged. This process repeats until the maximum number of iterations is reached, producing the high-quality subpopulation \(P_{C}\).
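A minimal sketch of the GA refinement of \(P_{1}\) is shown below. Only the mutation probability of 0.1 comes from the text; the scalar `fitness` function being minimized, arithmetic crossover, uniform-reset mutation, and the rule that an offspring replaces the worst individual when it is fitter are all assumptions made for illustration. `ga_refine` is a hypothetical name.

```python
import random

def ga_refine(pop, fitness, bounds, iters=100, pm=0.1, rng=None):
    """Hypothetical GA refinement of P1; `fitness` is a scalar to minimise."""
    rng = rng or random.Random()
    lo, hi = bounds
    pop = [list(x) for x in pop]                       # work on a copy
    for _ in range(iters):
        a, b = rng.sample(range(len(pop)), 2)          # two random parents
        alpha = rng.random()                           # arithmetic crossover weight
        child = [alpha * x + (1 - alpha) * y for x, y in zip(pop[a], pop[b])]
        if rng.random() < pm:                          # mutate with probability pm
            k = rng.randrange(len(child))
            child[k] = rng.uniform(lo, hi)             # uniform reset of one gene
        child = [min(max(v, lo), hi) for v in child]   # keep inside the bounds
        worst = max(range(len(pop)), key=lambda i: fitness(pop[i]))
        if fitness(child) < fitness(pop[worst]):       # steady-state replacement
            pop[worst] = child
    return pop
```

Because an offspring only ever replaces the current worst individual, the best fitness in the population can never get worse under this replacement rule.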

Differential evolution algorithm

Differential evolution, a population-based evolutionary optimization strategy, has proven effective for global population optimization. In this study, differential evolution is applied in the initialization stage to the subpopulation whose members have low similarity and are widely dispersed. For such a subpopulation, global search matters most, which is why differential evolution is used to improve its overall quality. The procedure follows Algorithm 4, whose flowchart is shown in Fig. 7.

Following standard differential evolution practice, the crossover probability (CR) is set to 0.8 and the conventional DE/rand/1 mutation strategy is used. The low-similarity subpopulation \(P_{2}\) is selected by hierarchical clustering; after \(P_{2}\) is initialized, the fitness of each individual is evaluated. The core of the algorithm is mutation followed by crossover: for each target individual \(X_{i}\), three distinct individuals are selected at random to build a mutant vector, and \(X_{i}\) is crossed with this mutant vector to generate a trial individual \(U_{i}\). The objective values of \(X_{i}\) and \(U_{i}\) are then compared: if \(U_{i}\) performs better, it replaces \(X_{i}\); otherwise, \(X_{i}\) is kept. When the iterations finish, the optimized high-quality subpopulation \(P_{D}\) is obtained.
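The DE/rand/1 step with binomial crossover can be sketched as follows. CR = 0.8 follows the text; the scale factor F = 0.5, the scalar `fitness` being minimized, clipping to the bounds, and the name `de_refine` are assumptions. The mutant is \(V_{i} = X_{r1} + F\left( {X_{r2} - X_{r3} } \right)\) with \(r1, r2, r3\) distinct and different from \(i\), which is the standard DE/rand/1 form.

```python
import random

def de_refine(pop, fitness, bounds, iters=50, F=0.5, CR=0.8, rng=None):
    """Hypothetical DE/rand/1/bin refinement of P2; `fitness` is a scalar to minimise."""
    rng = rng or random.Random()
    lo, hi = bounds
    pop = [list(x) for x in pop]                       # work on a copy
    n, d = len(pop), len(pop[0])
    for _ in range(iters):
        for i in range(n):
            r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
            # DE/rand/1 mutant vector V_i, clipped to the bounds
            mutant = [min(max(pop[r1][k] + F * (pop[r2][k] - pop[r3][k]), lo), hi)
                      for k in range(d)]
            j_rand = rng.randrange(d)                  # at least one gene from the mutant
            trial = [mutant[k] if (k == j_rand or rng.random() < CR) else pop[i][k]
                     for k in range(d)]                # trial individual U_i
            if fitness(trial) < fitness(pop[i]):       # keep U_i only if it is better
                pop[i] = trial
    return pop
```

The one-to-one greedy selection means each individual's fitness is non-increasing over the generations.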

Dynamic flight parameters adjustment mechanism

The velocity update formula contains three components: inertia, self-perception, and social perception. The inertia term represents the influence of the previous velocity on the current motion, that is, the particle's tendency to keep moving as before. The self-perception term reflects each particle's own experience: the particle adjusts the distance and direction of its move according to its own best previous position (\(pbest\)). The social term reflects cooperation and information sharing within the swarm: the particle adjusts its move according to the best position found by the swarm (\(gbest\)). The key question in this study is therefore how to tune these parameters so that the algorithm does not converge prematurely to a local optimum and the search remains both broad and thorough.
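The three components can be seen in the canonical PSO velocity and position update, sketched below for a single particle: \(v \leftarrow \omega v + c_{1} r_{1} \left( {pbest - x} \right) + c_{2} r_{2} \left( {gbest - x} \right)\) and \(x \leftarrow x + v\), with \(r_{1}, r_{2}\) drawn uniformly from [0, 1] per dimension. This is the textbook form, assumed here rather than taken from the paper; velocity clamping and boundary handling are omitted, and `pso_step` is a hypothetical name.

```python
import random

def pso_step(x, v, pbest, gbest, w, c1, c2, rng=None):
    """One canonical PSO velocity/position update for a single particle."""
    rng = rng or random.Random()
    new_x, new_v = [], []
    for xd, vd, pd, gd in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        vd_next = (w * vd                      # inertia
                   + c1 * r1 * (pd - xd)       # self-perception (pbest)
                   + c2 * r2 * (gd - xd))      # social perception (gbest)
        new_v.append(vd_next)
        new_x.append(xd + vd_next)
    return new_x, new_v
```

When a particle already sits at both \(pbest\) and \(gbest\), only the inertia term moves it, which is why \(\omega\) governs how long a particle keeps exploring.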

During particle flight, \(gbest\), \(pbest\), and the flight parameters (\(\omega\), \(c_{1}\), and \(c_{2}\)) play a pivotal role in balancing exploration and exploitation across evolutionary states such as convergence, diversity, and stagnation. Numerous studies indicate that larger \(\omega\) and \(c_{1}\) together with smaller \(c_{2}\) enhance global exploration, whereas smaller \(\omega\) and \(c_{1}\) together with larger \(c_{2}\) favor local search. Nonetheless, tuning the MOPSO flight parameters remains challenging. This paper therefore introduces a dynamic adjustment mechanism for these parameters to balance global exploration and local exploitation, detailed as follows.

Here, \(t\) is the current iteration and \(T\) the total number of iterations; \(\omega_{\max } = 0.9\) and \(\omega_{\min } = 0.4\) are the maximum and minimum inertia weights; \(c_{1}\) and \(c_{2}\) are the self-learning and social learning factors, whose maximum values are \(c_{1\max } = c_{2\max } = 2.5\) and whose minimum values are \(c_{1\min } = c_{2\min } = 1.5\).
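The update formulas themselves are not reproduced in this excerpt. The sketch below assumes simple linear schedules in \(t/T\), chosen only because they match the trend described above (large \(\omega\) and \(c_{1}\) with small \(c_{2}\) early, the reverse late) and the stated bounds; the paper's actual formulas may be nonlinear. `flight_params` is a hypothetical name.

```python
def flight_params(t, T, w_max=0.9, w_min=0.4, c_max=2.5, c_min=1.5):
    """Assumed linear schedules for omega, c1 and c2 at iteration t of T."""
    frac = t / T                                # progress of the run in [0, 1]
    w = w_max - (w_max - w_min) * frac          # inertia weight: 0.9 -> 0.4
    c1 = c_max - (c_max - c_min) * frac         # self-learning factor: 2.5 -> 1.5
    c2 = c_min + (c_max - c_min) * frac         # social learning factor: 1.5 -> 2.5
    return w, c1, c2
```

Under this assumption, at the midpoint \(t = T/2\) the parameters are \(\omega = 0.65\) and \(c_{1} = c_{2} = 2.0\).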

General framework of ASDMOPSO

This section describes the overall architecture of ASDMOPSO, whose core components are the multi-step initialization mechanism, the external archive update mechanism, and the dynamic flight parameter adjustment mechanism. Algorithm 5 gives the pseudocode. First, the algorithm applies the multi-step initialization mechanism (Line 1) to generate a high-quality initial population; see Algorithm 2 for details. Next, the initial population is divided into two subpopulations by agglomerative hierarchical clustering, which are then refined by the genetic algorithm and differential evolution to obtain high-quality subpopulations (Line 2); see Algorithms 3 and 4 for details. Subsequently, in Step 5, the algorithm begins saving and updating non-dominated solutions. Whenever the external archive reaches its maximum capacity, Algorithm 1 is invoked to maintain the archive. This cycle continues until the number of non-dominated solutions reaches the maximum capacity of the external archive. To accelerate the convergence of non-dominated solutions toward the true Pareto front, the dynamic flight parameter adjustment mechanism is used when updating particle positions and velocities. Overall, this framework differs significantly from traditional MOPSO techniques: it produces the final feasible solution set through repeated iterations that merge in new populations. A flowchart of Algorithm 5 is given in Fig. 8.

Computational complexity of ASDMOPSO

This section analyzes the computational complexity of ASDMOPSO, whose core steps are external archive update and maintenance, initialization, and dynamic flight parameter adjustment. The key parameters are: population size \(N\), number of objectives \(M\), decision variable dimension \(n\), number of external archive partitions \(Q\), maximum number of iterations \(T\), number of non-dominated solutions in the external archive \(X\) (\(size\left( A \right) = X\)), maximum size of the non-dominated solution set \(G\), and maximum capacity of the external archive \(N_{A}\). The overall complexity is determined by the sub-algorithms (Algorithms 1–4) and the main iterative process. In the initialization phase, the hierarchical clustering in Algorithm 2 and the clustering-based optimization in Algorithms 3 and 4 cost \(O\left( {N^{2} } \right)\), as the analyses below show, and constitute the main computational overhead. Within the \(T\) outer iterations, updating the particles costs \(O\left( {N \cdot n} \right)\), archive maintenance by Algorithm 1 costs \(O\left( {X\log X} \right)\), and flight parameter adjustment costs \(O\left( 1 \right)\). Neglecting lower-order terms, the overall complexity simplifies to \(O\left( {N^{2} + X\log X} \right)\), determined by the dominant terms, which reflects the combined cost of multi-stage optimization and archive maintenance. The complexity of each sub-algorithm is analyzed below.

Computational complexity of Algorithm 1

The time complexity of Algorithm 1 is bounded above by \(O\left( {\left( {G - N_{A} } \right)X\log X} \right)\). While the external archive is not yet full, the cost is \(O\left( 1 \right)\). Once the archive overflows, and assuming the maximum population size is \(G\), the outer WHILE loop executes at most \(G - N_{A}\) times. In each iteration, the main operations (region partitioning, vector computation, angle calculation, and crowding handling) cost \(O\left( {X\log X} \right)\), where \(X\) is the size of set \(A\). Hence the time complexity is \(O\left( {\left( {G - N_{A} } \right)X\log X} \right)\). Considering only the dominant dependence on the input size, this simplifies to \(O\left( {X\log X} \right)\), which reflects the central influence of the archive size \(X\) on the cost.

Computational complexity of Algorithm 2

The time complexity of Algorithm 2 is the sum of the costs of its steps. Step 1 generates a random sample set in \(O\left( N \right)\). Step 2 performs hierarchical clustering, which requires computing the distances between all pairs of points to build a distance matrix, costing \(O\left( {N^{2} } \right)\). Step 3, the particle allocation phase, iterates over all \(N\) particles and computes each particle's mean Euclidean distance (MED) to the remaining particles; these nested loops also cost \(O\left( {N^{2} } \right)\). In Step 4 (differential evolution), the number of iterations is \(T_{DE}\) and a single iteration costs \(O\left( {N \cdot D} \right)\) with \(D = 30\); since \(D\) is constant, a single iteration is \(O\left( N \right)\) and the total is \(O\left( {T_{DE} \cdot N} \right)\). Similarly, Step 5 (genetic algorithm optimization) runs \(T_{GA}\) iterations for a total of \(O\left( {T_{GA} \cdot N} \right)\). Summing all steps gives \(O\left( {N^{2} + \left( {T_{DE} + T_{GA} } \right) \cdot N} \right)\). The overall cost is therefore dominated by the quadratic terms of Step 2 (clustering) and Step 3 (particle allocation); even combined in the worst case, these two terms remain \(O\left( {N^{2} } \right)\) and are the main factors determining efficiency.

Computational complexity of Algorithm 3

The time complexity of the genetic algorithm (Algorithm 3) is determined by the costs of its steps. Step 1 clusters and partitions population \(P_{1}\) in \(O\left( {S^{2} } \right)\), where \(S\) is the size of \(P_{1}\); this is the most computationally intensive part of the algorithm. Steps 2 to 4 (initialization, fitness evaluation, and crossover) cost \(O\left( S \right)\) in total. Step 5 is a main loop of \(T\) iterations, each a simple operation, costing \(O\left( T \right)\). When \(S\) and the input size \(N\) are of the same order of magnitude, the \(O\left( {S^{2} } \right)\) clustering cost becomes \(O\left( {N^{2} } \right)\). Mutation additionally costs \(O\left( D \right)\) per operation (the mutation dimension \(D\) is at most 3), so including mutation the total is \(O\left( {N^{2} + T \cdot D} \right)\). Because \(T \cdot D\) is much smaller than \(N^{2}\), mutation has little effect on the overall cost, and the overall time complexity is dominated by the clustering analysis, giving \(O\left( {N^{2} } \right)\).

Computational complexity of Algorithm 4

The time complexity of the differential evolution algorithm (Algorithm 4) is as follows. Step 1 clusters the low-similarity population \(P_{2}\) in \(O\left( {S^{2} } \right)\), where \(S\) is the size of \(P_{2}\). Random initialization of the population (Step 2) and fitness evaluation of the particles (Step 3) each cost \(O\left( S \right)\). The main loop (Step 7) iterates \(T\) times, and each iteration comprises Steps 4 to 6. Step 4 selects three individuals for each target individual to compute the mutation vector, which requires scanning the population and costs \(O\left( S \right)\); Step 5 uses nested loops in which the outer loop runs over the \(S\) individuals and the inner loop over a fixed 30 dimensions, giving \(O\left( {30S} \right) = O\left( S \right)\); Step 6 iterates over the population to evaluate and update individuals in \(O\left( S \right)\). A single iteration therefore costs \(O\left( S \right)\), and the main loop costs \(O\left( {T \cdot S} \right)\) in total. When \(S\) and the input size \(N\) are of the same order of magnitude, the \(O\left( {S^{2} } \right)\) clustering cost becomes \(O\left( {N^{2} } \right)\). In summary, the algorithm costs \(O\left( {N^{2} } \right)\) for clustering and \(O\left( {T \cdot N} \right)\) for the main loop, that is, \(O\left( {N^{2} + T \cdot N} \right)\) overall. In typical settings the quadratic clustering term dominates, so the time complexity is primarily \(O\left( {N^{2} } \right)\), reflecting the combined cost of population partitioning and iterative optimization.

In-depth analysis: selecting angle segmentation archiving strategies to optimize algorithm performance

Traditional methods store non-dominated solutions in the external archive using adaptive grids, but the number and size of the grid cells are fixed. When particles move beyond the grid boundaries, the grid must be recalculated, which makes stable performance hard to maintain for large populations and increases computational cost. The Parallel Cell Coordinate System (PCCS) has been integrated into MOPSO to overcome the limitations of traditional grid methods. However, this method has difficulty capturing correlations between variables across different dimensions. Moreover, as the number of dimensions grows, the coordinate axes become increasingly dense, making the method very difficult to apply to high-dimensional, complex problems.

To overcome these shortcomings, this paper proposes an angle-based external archive partitioning strategy. Using reference vectors as a benchmark, the strategy divides the archive into equal-angle regions, so that every region has the same angular size while extending without bound along the positive direction of each coordinate axis. This removes the boundary constraints inherent in traditional fixed grids. Note that the proposed angle-based partitioning is a fixed-region method in which the number of partitions does not grow with the dimension, which eases the handling of high-dimensional, complex structures. For storing non-dominated solutions, the strategy stores each solution in a single pass without recomputing angles, significantly reducing computational cost. In addition, angle-based partitioning can precisely locate the region where particles are most densely distributed. Because such dense regions have poor diversity, deleting particles from them first substantially improves the diversity of the algorithm. In practice, the crowding distance is used as the selection criterion (higher values indicate poorer regional diversity): after the crowding distances of the particles within a region are computed, the particle with the highest value is removed, which lets the algorithm maintain precision while achieving better diversity.
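For the bi-objective case, the archive maintenance described above can be sketched as follows. Assumptions: objectives are minimized and normalized by the archive's ideal and nadir points; the Q sectors split the quarter-circle [0, π/2] into equal angles measured from the ideal point (standing in for the paper's reference vectors); and the standard NSGA-II crowding distance is used, under which a smaller value marks a more crowded solution, so the sketch removes the minimum. The text above states that higher values indicate poorer diversity, which suggests the authors' crowding measure is defined differently, so the selection rule would need to be adapted accordingly. All function names are hypothetical.

```python
import math

def crowding_distance(front):
    """Standard NSGA-II crowding distance of each objective vector in `front`."""
    n = len(front)
    if n <= 2:
        return [math.inf] * n
    dist = [0.0] * n
    for m in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][m])
        lo, hi = front[order[0]][m], front[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = math.inf   # boundary solutions are kept
        if hi == lo:
            continue
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist

def sector_of(f, ideal, scale, Q):
    """Index (0..Q-1) of the equal-angle sector containing bi-objective point f."""
    dx = (f[0] - ideal[0]) / scale[0]
    dy = (f[1] - ideal[1]) / scale[1]
    theta = math.atan2(dy, dx)                 # lies in [0, pi/2] because f >= ideal
    return min(int(theta / (math.pi / 2) * Q), Q - 1)

def prune_archive(archive, capacity, Q):
    """Remove solutions from the most populated sector until the archive fits."""
    archive = [tuple(f) for f in archive]
    while len(archive) > capacity:
        ideal = [min(f[m] for f in archive) for m in range(2)]
        nadir = [max(f[m] for f in archive) for m in range(2)]
        scale = [(nadir[m] - ideal[m]) or 1.0 for m in range(2)]   # normalise objectives
        sectors = {}
        for f in archive:
            sectors.setdefault(sector_of(f, ideal, scale, Q), []).append(f)
        densest = max(sectors.values(), key=len)                   # most crowded sector
        cd = crowding_distance(densest)
        victim = densest[min(range(len(densest)), key=lambda i: cd[i])]
        archive.remove(victim)
    return archive
```

Because the sectors are defined by angle rather than by fixed cell boundaries, no grid has to be rebuilt when new solutions fall outside the previous objective ranges; only the normalization changes.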

Angle partitioning does not bias convergence toward the axis directions. The axis directions correspond to the objective values of the super-ideal point; particles approach the ideal point but do not coincide with the super-ideal point. Fig. 9 shows the distribution of 200 particles on the test function ZDT3 after 20, 30, 40, and 50 iterations: the particles show no bias toward the axes, which supports the effectiveness of the strategy. In summary, the strategy both handles the storage of large populations and improves convergence and diversity by precisely controlling the particle distribution, providing an efficient archive management scheme for multi-objective optimization.

Experimental test and performance analysis

Test problems

To systematically validate the overall performance of ASDMOPSO, this study selects three widely used benchmark suites: ZDT47, UF48, and DTLZ49. The ZDT suite contains five bi-objective problems; the UF suite combines three tri-objective and seven bi-objective problems; and the DTLZ suite contains seven tri-objective problems. The ZDT problems exhibit typical non-convex characteristics, the UF problems make convergence to the Pareto front difficult because of their intricate multimodal characteristics, and the DTLZ problems pose particular challenges through their geometric linear correlations and convexity features. Together, these test cases allow a more accurate assessment of how well ASDMOPSO handles multi-objective optimization problems of different types and dimensions. They are effective tools for validating the reliability and efficiency of the algorithm because each has distinctive characteristics and a complex Pareto front (PF), including concave, multimodal, disconnected, and irregular PFs. ASDMOPSO is compared with five mainstream MOPSO algorithms (CMOPSO50, NMPSO51, MOPSOCD52, MOPSO28, and MPSOD53) and five MOEA algorithms (DGEA54, MOEADD55, SPEAR56, NSGAIII57, and IDBEA58). The population size of every algorithm is set to 200 and the number of function evaluations to 10,000. Each algorithm is run independently 30 times on each test problem, and the IGD and HV values are used as performance indicators.
To ensure the authenticity and validity of the results, the experimental data were obtained with MATLAB R2023b under Windows 10 on an Intel(R) Core(TM) i7-7700 CPU @ 3.60 GHz. The source code of all comparison algorithms was obtained from the PlatEMO platform.

This study uses two performance metrics to assess ASDMOPSO against the selected MOPSO and MOEA algorithms: inverted generational distance (IGD)59 and hypervolume (HV)60. Although both metrics capture convergence and diversity, relying on only one of them is insufficient for a comprehensive evaluation; combining them gives a more complete picture of how the algorithm balances convergence and diversity.

The inverted generational distance (IGD) quantifies how closely the Pareto solution set obtained by a multi-objective algorithm approximates the true Pareto front, and it reflects both convergence and diversity. As defined in Eq. (10), a smaller IGD value means that the algorithm's solutions lie closer to the true Pareto front and are better distributed along it.

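In Eq. (10), \(P\) denotes the Pareto solution set obtained by the algorithm, \(F^{ * }\) denotes a set of reference points sampled from the true Pareto front, and \(dist\left( {x_{i}^{ * } ,P} \right)\) denotes the Euclidean distance between a reference point \(x_{i}^{ * } \in F^{ * }\) and its nearest solution in \(P\).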
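IGD is commonly defined as \(IGD\left( {P,F^{ * } } \right) = \frac{1}{{\left| {F^{ * } } \right|}}\sum\nolimits_{x_{i}^{ * } \in F^{ * } } {dist\left( {x_{i}^{ * } ,P} \right)}\). Assuming Eq. (10) takes this standard form, it can be computed as below; `igd` is a hypothetical helper for illustration, not PlatEMO's implementation.

```python
import math

def igd(reference_front, solutions):
    """Mean distance from each reference point on the true PF to its nearest solution."""
    total = sum(min(math.dist(r, s) for s in solutions) for r in reference_front)
    return total / len(reference_front)
```

For example, with reference points (0, 1) and (1, 0), a solution set containing only (0, 1) leaves the second reference point uncovered at distance \(\sqrt 2\), giving an IGD of about 0.707; covering both points gives 0.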

The hypervolume (HV) metric evaluates the overall quality of a solution set by measuring the volume of the objective-space region that is dominated by the solutions and bounded by a reference point \(Z^{r} = \left( {Z_{1}^{r} ,Z_{2}^{r} , \cdots ,Z_{m}^{r} } \right)\). Each solution, together with the reference point as the opposite vertex, defines a hypercube, and HV is the volume of the union of these hypercubes. The metric therefore reflects both how closely the solutions approach the target front and how widely they are spread; higher HV values indicate a better balance between convergence and diversity. HV is computed with the Lebesgue measure \(\delta\) in Eq. (11).

In Eq. (11), \(S\) denotes the set of Pareto-optimal solutions obtained by the algorithm, and \(\delta\) denotes the Lebesgue measure, which computes the volume (area, in two dimensions) of the dominated region.
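For two minimized objectives, the Lebesgue measure in Eq. (11) reduces to the area of a staircase region, which a single sweep over the solutions sorted by the first objective computes exactly. The sketch below covers this special case only; `hv2d` is a hypothetical name, and higher-dimensional HV requires more elaborate algorithms.

```python
def hv2d(points, ref):
    """Area dominated by `points` and bounded by `ref` (two minimised objectives)."""
    # Keep only points that strictly dominate the reference point, sorted by f1.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:                          # skip points dominated in the sweep
            area += (ref[0] - f1) * (best_f2 - f2)  # add the new horizontal strip
            best_f2 = f2
    return area
```

For example, the points (1, 3), (2, 2), and (3, 1) with reference point (4, 4) contribute strips of area 3, 2, and 1, for an HV of 6.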

Experimental parameters setting

This study compares ASDMOPSO with five traditional multi-objective evolutionary algorithms and five multi-objective particle swarm optimization algorithms, all of which are competitive on a wide range of complex test problems. To ensure a fair and objective comparison, every experiment was run independently 30 times, and the parameters of each comparison algorithm were set to the values recommended in its original paper, with all variables and their ranges following the original literature; the specific settings are listed in Table 2. Each test function is further defined by a set of experimental parameters, namely the population size (N), the number of objectives (M), the number of decision variables (n), and the maximum number of function evaluations (FEs), as listed in Table 3. The experimental procedure, results, and comparisons are analyzed in the following sections.

Comparison of indicators

To further verify the evolutionary performance of ASDMOPSO, it is tested on the ZDT, UF, and DTLZ suites and compared with the five MOPSO algorithms and five MOEA algorithms. Tables 4, 5, 6, and 7 report the mean and standard deviation of the IGD and HV metrics of the algorithms on these suites, with the best result in bold and the second-best underlined. To illustrate the distribution and convergence of the solutions, the performance of selected algorithms is also visualized. To quantify whether the differences between algorithms are statistically significant, the results of the 30 independent runs are analyzed with the Wilcoxon rank-sum test at a significance level of α = 0.05. The symbols “+,” “−,” and “≈” indicate that a competing algorithm performs better than, worse than, or comparably to ASDMOPSO, respectively.
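The rank-sum comparison and the +/−/≈ labelling can be sketched as below, assuming a metric to be minimized such as IGD. The sketch uses the large-sample normal approximation with tie-averaged ranks and no tie correction in the variance (SciPy's `scipy.stats.ranksums` computes the same z statistic); deciding the direction of a significant difference by comparing sample means is an assumption, and the function names are hypothetical.

```python
import math

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def rank_sum_test(a, b, alpha=0.05):
    """Two-sided Wilcoxon rank-sum test using the normal approximation."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                                # rank sum of sample a
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))               # two-sided p-value
    return z, p, p < alpha

def wilcoxon_symbol(competitor, proposed, alpha=0.05):
    """'+', '−' or '≈' for a minimised metric such as IGD."""
    _, _, significant = rank_sum_test(competitor, proposed, alpha)
    if not significant:
        return "≈"
    better = sum(competitor) / len(competitor) < sum(proposed) / len(proposed)
    return "+" if better else "−"
```

With 30 runs per algorithm, as in the experiments, the normal approximation is generally considered adequate; for HV, which is maximized, the direction test would be reversed.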

IGD indicator comparison

As Tables 4 and 5 show, the proposed ASDMOPSO algorithm outperforms the other algorithms on the IGD metric. Table 4 compares the IGD of ASDMOPSO with five other particle swarm optimization-based multi-objective algorithms on the 22 test problems, giving the mean and standard deviation for each problem. The mean is the average IGD over the 30 independent runs, and the standard deviation measures the spread across those runs. The bold IGD entries highlight the performance advantage of ASDMOPSO. The penultimate row of Table 4, which summarizes the IGD comparison, shows that ASDMOPSO performs best overall, achieving the best IGD on more than half of the test problems. On the ZDT suite, ASDMOPSO outperforms all comparison algorithms on ZDT2, ZDT3, and ZDT4 and most of them on ZDT1 and ZDT6, where its IGD is second only to MOPSOCD and CMOPSO, respectively. On the UF suite, ASDMOPSO outperforms all five comparison algorithms on six problems (UF3, UF4, UF7, UF8, UF9, and UF10); it ranks second behind CMOPSO on UF2 and UF6 and third behind CMOPSO and NMPSO on UF1 and UF5. On the DTLZ suite, ASDMOPSO outperforms all comparison algorithms on DTLZ3, DTLZ6, and DTLZ7.

Table 5 reports the mean and standard deviation of the IGD metric for ASDMOPSO and five MOEA algorithms on the 22 ZDT, UF, and DTLZ test problems. ASDMOPSO achieves clearly better IGD values than the other algorithms: as the penultimate row of Table 5 shows, it attains the best result on 13 test problems. It surpasses all competing algorithms on all five ZDT problems, underscoring its advantage on this suite. On the UF suite, it performs best on six problems (UF3, UF4, UF7, UF8, UF9, and UF10) and ranks second behind NSGAIII on UF6. On the DTLZ suite, it performs best on two problems. Overall, ASDMOPSO achieves better IGD values than the other algorithms on most test problems, with particularly strong dominance on the ZDT suite.

HV indicator comparison

Table 6 compares the HV metric of ASDMOPSO with those of five other MOPSO algorithms on the 22 ZDT, UF, and DTLZ test problems. ASDMOPSO performs significantly better on most of these benchmarks: it attains the best HV value on nine test problems, whereas CMOPSO and NMPSO do so on only seven and six problems, respectively, and the remaining three algorithms attain none. This indicates the efficiency and robustness of ASDMOPSO on multi-objective optimization problems.

Table 7 compares ASDMOPSO with five other MOEA algorithms on the HV metric. The penultimate row of Table 7 shows that ASDMOPSO obtains the highest HV value on 12 test problems, more than half of the evaluation scenarios, which supports its effectiveness. On the ZDT suite, ASDMOPSO achieves the best HV value on every problem. On the UF suite, it performs best on UF2, UF3, UF7, UF8, UF9, and UF10, more than half of the UF problems. On the DTLZ suite, it likewise outperforms all competitors on DTLZ3. Overall, ASDMOPSO holds a clear advantage in HV across most test scenarios, demonstrating its ability to approach optimal solutions while maintaining diversity.

These results indicate that ASDMOPSO surpasses comparable algorithms in stability and convergence accuracy. We attribute this advantage to three components: the flight parameters are adjusted according to the iteration count, which helps avoid local optima; the population partitioning strategy improves the exploitation of local regions of the search space; and the crowding distance mechanism used in external archive management preserves high-quality non-dominated solutions.

The preceding results show that ASDMOPSO consistently outperforms the comparison algorithms on most ZDT, UF, and DTLZ test problems. Because tabulated data alone do not fully convey this advantage, box plots and Pareto front plots are also presented. The box plots, shown in Figs. 10 and 11, are drawn from the IGD values on the ZDT, UF, and DTLZ benchmark suites. Since IGD measures the distance between the algorithm's non-dominated solutions and the true Pareto front (PF), a lower box indicates smaller IGD values and thus better performance, while a shorter box indicates less variation across runs. The last box in each plot corresponds to ASDMOPSO. Over the 30 independent runs, ASDMOPSO exhibits higher stability, as reflected in its box plots.

Figures 12, 13, and 14 compare the Pareto fronts obtained by ASDMOPSO and the five MOPSO algorithms on the bi-objective test functions ZDT4 and UF4 and the tri-objective test function DTLZ7, respectively. Compared with ASDMOPSO, the Pareto solution sets of the other algorithms either become trapped in local optima or converge poorly; although some of them show advantages in diversity, their solution sets differ significantly from the true PF.

Figures 15, 16, and 17 present the corresponding comparisons between ASDMOPSO and the five MOEAs on ZDT4, UF4, and DTLZ7. These five MOEAs also perform worse than ASDMOPSO: their solution sets become trapped in local optima or converge slowly, and although they have some advantages in diversity, a significant gap remains between their solution sets and the true PF.

Algorithm sensitivity analysis

This section examines how the number of partitions Q of the external archive affects population diversity, and how the boundary values of the core parameters \(\omega\), \(c_{1}\), and \(c_{2}\) in the dynamic inertia weight and learning factor formulas, together with the population size N, affect the performance of ASDMOPSO. The number of regions Q is set in direct proportion to the number of objectives and must also match the population size: with a population size of 200 in this study, Q is set to 36, and a larger population would require a correspondingly larger Q. The value of Q directly affects the final output of the algorithm. If Q is too small, particles tend to concentrate in particular regions, which may cause premature convergence to a local optimum and forfeit the chance to locate the global optimum. If Q is too large, the search becomes redundant, which adds unnecessary computational cost and may reduce learning efficiency and convergence speed. The sensitivity analysis for Q = 12, 24, 36, 48, and 60 (Table 8) shows that the algorithm performs best at Q = 36.
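The idea of dividing the archive into Q equal-angle regions can be illustrated for the bi-objective case. The sketch below is an assumption-laden illustration, not the paper's procedure: it min-max normalizes each objective over the archive, measures each solution's angle from the f1 axis, and splits the quarter circle [0, π/2] into Q sectors of equal width. The paper's treatment of three-objective problems is not covered, and `assign_sectors` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

def assign_sectors(objs: Sequence[Tuple[float, float]], q: int) -> List[int]:
    """Map each bi-objective vector to one of q equal-angle sectors (assumed scheme)."""
    f1 = [p[0] for p in objs]
    f2 = [p[1] for p in objs]
    lo1, lo2 = min(f1), min(f2)
    # Min-max normalization; fall back to 1.0 when an objective is constant.
    span1 = (max(f1) - lo1) or 1.0
    span2 = (max(f2) - lo2) or 1.0
    width = (math.pi / 2) / q  # angular width of one sector
    sectors = []
    for a, b in objs:
        x, y = (a - lo1) / span1, (b - lo2) / span2
        theta = math.atan2(y, x)  # lies in [0, pi/2] because x, y >= 0
        # Clamp so that theta == pi/2 falls into the last sector.
        sectors.append(min(int(theta // width), q - 1))
    return sectors

print(assign_sectors([(0.0, 1.0), (1.0, 0.0), (0.5, 0.5)], 3))  # [2, 0, 1]
```

Counting how many archive members share each sector index gives a simple density signal per region, which is the kind of information a region-based truncation rule can use.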

Because the inertia weight and learning factors are adjusted dynamically, their underlying mechanism is complex and difficult to analyze directly. This paper therefore focuses on the boundary values of the basic parameters \(\omega\), \(c_{1}\), and \(c_{2}\) in the inertia weight and learning factor formulas (Eqs. 7–9), namely \(\omega_{\max}\), \(\omega_{\min}\), \(c_{1\min}\), \(c_{1\max}\), \(c_{2\min}\), and \(c_{2\max}\). A systematic sensitivity analysis of these parameters is conducted to reveal how they influence algorithm performance, since configuring them appropriately is crucial to the overall performance of ASDMOPSO. The results, given in Tables 9, 10, 11, 12, provide a basis for tuning the algorithm's parameters.

The population size N is a critical parameter of ASDMOPSO that directly affects its overall performance, and an appropriate choice of N improves both solution accuracy and efficiency. A sensitivity analysis of N therefore has theoretical and practical value and supports a principled choice of this parameter. Six representative test problems, ZDT2, ZDT3, UF1, UF2, UF7, and DTLZ2, were used to compare the performance of ASDMOPSO under different population sizes (N = 100, 200, 300, 400, 500), as shown in Fig. 18. To ensure reliable results, the algorithm was run independently 30 times for each population size. Statistical analysis of the results shows that ASDMOPSO performs best at N = 200 on all test problems, which provides clear guidance for configuring the algorithm.

Discussion on the algorithm’s convergence rate

To examine its convergence rate, ASDMOPSO was evaluated on three test functions. Figure 19 compares the convergence rate of ASDMOPSO with that of ten other algorithms on ZDT4, UF9, and DTLZ7. The first three subplots of Fig. 19 compare ASDMOPSO with five MOPSO algorithms (CMOPSO, NMPSO, MOPSOCD, MOPSO, and MPSOD), and the last three subplots compare it with five representative MOEAs (DGEA, MOEADD, SPEAR, NSGAIII, and IDBEA), highlighting the strengths and limitations of each algorithm. The results show that ASDMOPSO converges quickly, approaching the true Pareto front (PF) in the early stage of the iterative process. This fast early convergence indicates that the algorithm is robust and can deliver high-quality solutions in complex optimization scenarios.

Discussion on algorithm diversity

To further examine diversity, this section analyzes how the particles are distributed during algorithm execution. Figure 20 shows the particle distribution of ASDMOPSO on six representative test problems: the bi-objective problems ZDT3, UF1, and UF2 and the tri-objective problems UF8, UF10, and DTLZ7. Section 4.4.4 has already shown that the algorithm converges well. Figure 20 further shows that the particles are uniformly distributed and cover a wide range of the objective space, indicating good diversity. Overall, compared with the other algorithms, ASDMOPSO achieves a better balance between convergence accuracy and solution diversity, demonstrating its advantages in overall performance.

Non-parametric statistical test

To evaluate the algorithm rigorously, this study used the Friedman test and the Wilcoxon test to determine the significance of the experimental results. The Friedman scores were computed from both the IGD and HV metrics, using the results of ASDMOPSO and the comparison algorithms in Tables 4, 5, 6, 7, and the resulting average rankings are presented in Tables 13 and 14. Under the IGD metric, ASDMOPSO ranked first on the ZDT benchmark set, the UF benchmark set, and the overall benchmark set. Under the HV metric, it also performed very well on the ZDT benchmark set, the UF benchmark set, and the overall benchmark set. These results confirm the leading position of ASDMOPSO, particularly on the IGD metric (Figs. 21, 22, 23, 24).
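The average rankings reported for the Friedman test can be reproduced from a problems-by-algorithms table of mean metric values. The sketch below ranks the algorithms on each problem (rank 1 is best, ties share the average rank), averages the ranks per algorithm, and computes the standard Friedman statistic \(\chi^2_F = \frac{12n}{k(k+1)}\left[\sum_j \bar{R}_j^2 - \frac{k(k+1)^2}{4}\right]\) for n problems and k algorithms. The table values are made up for illustration; `scipy.stats.friedmanchisquare` offers a library alternative that also returns a p-value.

```python
from typing import List, Sequence, Tuple

def rank_row(values: Sequence[float], higher_is_better: bool = False) -> List[float]:
    """Rank one problem's results (1 = best); tied values share the average rank."""
    keyed = [-v if higher_is_better else v for v in values]
    order = sorted(range(len(keyed)), key=lambda i: keyed[i])
    ranks = [0.0] * len(keyed)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and keyed[order[j + 1]] == keyed[order[i]]:
            j += 1
        for p in range(i, j + 1):
            ranks[order[p]] = (i + j) / 2 + 1  # positions i..j share ranks i+1..j+1
        i = j + 1
    return ranks

def friedman(table: Sequence[Sequence[float]],
             higher_is_better: bool = False) -> Tuple[List[float], float]:
    """Average rank per algorithm (columns) and the Friedman chi-square statistic."""
    n, k = len(table), len(table[0])
    sums = [0.0] * k
    for row in table:
        for j, r in enumerate(rank_row(row, higher_is_better)):
            sums[j] += r
    avg = [s / n for s in sums]
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return avg, chi2

# Hypothetical IGD table: 3 problems x 3 algorithms (lower is better).
igd_table = [[0.1, 0.2, 0.3], [0.1, 0.3, 0.2], [0.2, 0.1, 0.3]]
avg_ranks, chi2 = friedman(igd_table)
```

For HV, where larger values are better, pass `higher_is_better=True` so that the largest value receives rank 1.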

In the Wilcoxon test, the IGD and HV values serve as the comparison criteria. In Tables 4, 5, 6, 7, the Wilcoxon test is applied to the results of 30 independent runs at a significance level of α = 0.05. The results of each comparison algorithm are annotated with “+”, “−”, or “≈”, indicating that its result is significantly better than, significantly worse than, or statistically equivalent to that of ASDMOPSO, respectively. Figures 21, 22, 23, 24 summarize the Wilcoxon comparisons between ASDMOPSO and the other algorithms; the terms “failure,” “victory,” and “draw” in these figures correspond to the comparison algorithm being significantly better than, significantly worse than, or equivalent to ASDMOPSO, respectively. As Figs. 21 to 24 show, ASDMOPSO outperforms all other algorithms on both IGD and HV. Among the compared algorithms, CMOPSO ranks second and NMPSO third. Overall, the Wilcoxon results show that the proposed ASDMOPSO outperforms the competing algorithms across the 22 test problems.
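The “+”, “−”, and “≈” labels can be produced as sketched below. The section does not state which Wilcoxon variant was used; the sketch assumes the rank-sum test, which is the usual choice for two sets of independent runs, with a normal approximation and tie-averaged ranks (no tie correction in the variance). Deciding the direction of a significant difference by comparing means is also an assumption. `scipy.stats.ranksums(x, y)` computes the same normal-approximation test if SciPy is available.

```python
import math
from statistics import mean
from typing import List, Sequence

def _avg_ranks(values: Sequence[float]) -> List[float]:
    """Ranks of the pooled sample; ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for p in range(i, j + 1):
            ranks[order[p]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def rank_sum_p_value(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sided p-value of the Wilcoxon rank-sum test (normal approximation)."""
    n1, n2 = len(a), len(b)
    w = sum(_avg_ranks(list(a) + list(b))[:n1])  # rank sum of sample a
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

def wilcoxon_label(competitor: Sequence[float], asdmopso: Sequence[float],
                   alpha: float = 0.05, minimize: bool = True) -> str:
    """'+' / '−' / '≈' for the competitor relative to ASDMOPSO."""
    if rank_sum_p_value(competitor, asdmopso) >= alpha:
        return "≈"
    better = (mean(competitor) < mean(asdmopso)) if minimize \
        else (mean(competitor) > mean(asdmopso))
    return "+" if better else "−"
```

For IGD the default `minimize=True` applies; for HV, call `wilcoxon_label(hv_runs_other, hv_runs_asdmopso, minimize=False)`.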

In summary, the IGD and HV results, together with the Friedman and Wilcoxon tests, show that the proposed ASDMOPSO algorithm performs very well overall on multi-objective optimization problems. It outperforms the compared algorithms in both of the critical dimensions, convergence and diversity. It generates high-quality solutions with high stability and achieves an effective balance between convergence capability and diversity preservation.

Conclusion

This paper proposes a multi-objective particle swarm optimization algorithm with angular division of the archive and a dynamic update strategy (ASDMOPSO). First, a multi-stage initialization strategy is used to prevent the initial population from converging to local optima and to reduce the error caused by the randomness of the initial population; to improve the quality of the initial population, it combines a genetic algorithm and a differential evolution algorithm. Second, the external archive is divided into equal-angle regions so that non-dominated solutions can be stored and classified efficiently. When the archive exceeds its capacity, the crowding distance metric is used to remove the most densely packed solutions, keeping the number of non-dominated solutions within the predefined limit. This approach promotes convergence of the particles to the Pareto front (PF) and their uniform distribution along it, and significantly increases the convergence rate. Third, a dynamic flight parameter adjustment mechanism guides population updates more effectively, further improving efficiency. In simulation experiments, ASDMOPSO was rigorously compared with five MOPSO and five MOEA algorithms and delivered superior and more comprehensive results on multi-objective optimization problems.
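The multi-stage initialization summarized above (a random population split into two subpopulations, one refined by a genetic algorithm and the other by differential evolution) can be sketched as follows. This is a hypothetical illustration, not the paper's implementation: the choice of operators (uniform crossover with Gaussian mutation; DE/rand/1/bin), the rule that an offspring replaces its parent only if it Pareto-dominates it, the [0, 1] variable bounds, the generation count, and the use of ZDT1 as the objective are all assumptions.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Objective = Callable[[Sequence[float]], Tuple[float, ...]]

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def zdt1(x: Sequence[float]) -> Tuple[float, float]:
    """ZDT1, used here only as a stand-in objective."""
    g = 1 + 9 * sum(x[1:]) / (len(x) - 1)
    return (x[0], g * (1 - math.sqrt(x[0] / g)))

def clip(v: Vector) -> Vector:
    """Keep decision variables inside the assumed [0, 1] box."""
    return [min(1.0, max(0.0, xi)) for xi in v]

def ga_generation(pop: List[Vector], f: Objective, rng: random.Random, pm: float) -> List[Vector]:
    """Uniform crossover + Gaussian mutation; child replaces parent only if it dominates it."""
    out = []
    for parent in pop:
        mate = rng.choice(pop)
        child = [a if rng.random() < 0.5 else b for a, b in zip(parent, mate)]
        child = clip([c + rng.gauss(0.0, 0.1) if rng.random() < pm else c for c in child])
        out.append(child if dominates(f(child), f(parent)) else parent)
    return out

def de_generation(pop: List[Vector], f: Objective, rng: random.Random,
                  F: float = 0.5, CR: float = 0.9) -> List[Vector]:
    """DE/rand/1/bin; trial replaces target only if it dominates it (needs >= 4 members)."""
    n, d = len(pop), len(pop[0])
    out = []
    for i, target in enumerate(pop):
        r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
        j_rand = rng.randrange(d)  # guarantees at least one mutated coordinate
        trial = clip([pop[r1][k] + F * (pop[r2][k] - pop[r3][k])
                      if (rng.random() < CR or k == j_rand) else target[k]
                      for k in range(d)])
        out.append(trial if dominates(f(trial), f(target)) else target)
    return out

def multi_stage_init(n: int, d: int, f: Objective,
                     generations: int = 10, seed: int = 0) -> List[Vector]:
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(d)] for _ in range(n)]  # stage 1: random population
    sub_ga, sub_de = pop[: n // 2], pop[n // 2:]                # stage 2: split in two
    for _ in range(generations):                                 # stage 3: refine each half
        sub_ga = ga_generation(sub_ga, f, rng, pm=1.0 / d)
        sub_de = de_generation(sub_de, f, rng)
    return sub_ga + sub_de
```

For example, `multi_stage_init(200, 30, zdt1)` would return 200 refined particles (N = 200, as in the experiments) to seed the swarm. Because replacement requires dominance, no member of either subpopulation can be worse in every objective than the random individual it started from.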

Although ASDMOPSO performs well on a range of rigorous benchmark tests, there is still considerable room for improvement with respect to practical applications. Its applicability and effectiveness on real-world complex multi-objective problems have not yet been fully verified and require systematic follow-up study. Future research will focus on further refining the algorithm and gradually applying it to general engineering optimization problems.

Data availability

All data generated or analysed during this study are included in this published article.
