Abstract

Owing to the growth of vehicle usage, researchers have increasingly concentrated on abnormal vehicle identification as a way to help avoid accidents. Although many vehicle identification works have applied machine and deep learning approaches, problems remain in handling repetitive frames and in identifying abnormal vehicles among the vehicles seen by a camera. To overcome these challenges, this paper introduces the KFEAVI (Key Frame Extraction based Abnormal Vehicle Identification) technique, which combines a statistical feature extraction technique with a constrained angular second moment technique. The statistical feature extraction technique extracts key frames statistically by using beta distribution estimation, and it handles both gradual and abrupt content changes in frames well. The constrained angular second moment method is applied to locate vehicles and identify abnormal vehicle movement. Experiments are carried out on the Car Accident Detection Dataset (CADP), and several algorithms are compared with KFEAVI to evaluate its performance. The experimental results show that KFEAVI achieves better results in terms of F-score.


Introduction

Surveillance cameras are now installed almost everywhere, and they frequently capture unusual events. People pay more attention to unusual events than to normal ones, but analyzing surveillance footage for unusual events on a 24/7 basis is time- and memory-consuming. Therefore, video analytics systems are needed to differentiate usual from unusual events in surveillance video. An unusual event is an abnormal event that must be distinguished from normal events; however, from the surveillance point of view, an unusual event is not necessarily a suspicious one1,2,3. Exploring normal and abnormal events in surveillance video has become an important task because of increased crime rates. Such tasks are also a key focus of surveillance applications such as traffic monitoring and the detection of vehicle congestion, traffic violations, vehicle intrusion, accidents, terrorism, etc. These problems can be handled using key frame extraction techniques4,5, object detection and tracking techniques6,7, unusual event detection techniques8,9,10,11,12,13, etc.

In the recent past, many surveillance-based applications have been developed using machine and deep learning approaches14,15,16,17,18,19. These approaches detect unusual events using hand-crafted features, statistical features, etc. Although noteworthy progress has been achieved in unusual event detection20,21,22,23, problems remain in handling repetitive frames and in detecting unusual events accurately. Because surveillance cameras record continuously, the frame sequence contains many repetitive frames. To remove these repetitive frames and reduce memory storage, key frames must be extracted. Key frames are the representative frames of the original video, and they can be extracted according to user needs.

To deal with repetitive frames and unusual event detection, this paper introduces the Key Frame Extraction based Abnormal Vehicle Identification (KFEAVI) technique, which combines a statistical feature extraction technique with a constrained angular second moment technique. The statistical feature extraction technique extracts key frames statistically by using beta distribution estimation, and it handles both gradual and abrupt content changes in frames well. The constrained angular second moment method is applied to locate vehicles and identify abnormal vehicle movement. Our contributions are summarized as follows.

1. The KFEAVI methodology is developed for extracting key frames and identifying abnormal vehicles.
2. Beta distribution estimation is applied to the frame sequence to analyze its content and find the similarity between frames.
3. The Constrained Angular Second Moment (CASM) method is developed for identifying vehicles and their locations using a Vehicle Degree Vector (VDV).
4. Beta distribution-based key frame extraction and CASM are fused to identify abnormal vehicles from key frames.

Related work

Many unusual event detection works have been carried out, and considerable progress has been made in the area of surveillance systems. Some of these works are reviewed in this section. Kosmopoulos and Chatzis24 applied pixel-wise computation to the two-dimensional surveillance frame sequence using a holistic visual behaviour understanding technique. Yen and Wang25 presented an abnormal event detection method for crowded scenarios based on the Social Force Model (SFM), which is developed using optical flow computation. In this model, every individual is treated as a moving particle, and the social force is the interaction force acting between every pair of particles. Zhang et al.26 extended the SFM into the Social Attribute-Aware Force Model (SAFM). Similarly, Chaker et al.27 developed an unsupervised social network model for detecting unusual events in crowded scenarios. Amraee et al.28 developed a feature descriptor using the histogram of oriented gradients (HOG) and a Gaussian Mixture Model (GMM) to detect unusual events in the frame sequence. Hung et al.29 extracted SIFT features and used a Spatial Pyramid Matching Kernel (SPMK)-based bag-of-words (BoW) model to describe the motion of a crowd. Sandhan et al.30 developed an unsupervised learning method for unusual event detection, based on the common observation that normal events occur often while abnormal events occur rarely. Tziakos et al.31 explored projection subspace association to address unusual event detection with both labelled and unlabelled data in a supervised learning approach. The authors noted that the labelled information about normal events was limited, possibly because not all kinds of normal events were learned in the training stage; as a result, unusual event detection performance was poor in many cases.
Shi et al.32 developed a model for unusual event detection using spatio-temporal co-occurrence Gaussian mixture models (STCOG). Yin et al.33 developed a method that improves the information content through the dimension of a motion feature vector based on crowd density. Mahadevan et al.34 developed a normal behaviour model by forming a mixture model of dynamic texture features to detect unusual events. Singh and Mohan32 developed an approach for unusual activity recognition based on a graph formulation method and a graph kernel SVM. Gu et al.35 combined the advantages of the GMM and particle entropy to represent the crowd distribution in crowded scenarios. In general, the size of a crowd changes with the number of people appearing in it. To handle the random size and motion patterns of crowds in unusual situations, Lee et al.36 developed a Human Motion Analysis Method (HMAM) based on statistical information and entropy computation. Low-level optical flow features can reflect the relative distance between multiple moving objects in a scene at two different moments, and this analysis is helpful for detecting unusual events in surveillance video.

This paper introduces the Key Frame Extraction based Abnormal Vehicle Identification (KFEAVI) technique, which uses a statistical feature extraction technique and a constrained angular second moment technique to identify abnormal vehicles from extracted key frames.

Proposed KFEAVI methodology

KFEAVI takes a surveillance video as input. Let V be the input surveillance video consisting of M frames, denoted F = {f1, f2, …, fM}, where fj is the j-th frame and j = 1, …, M. The frames fj may contain redundant information. To find the key frames in the frame sequence, the statistical distribution of the frames' colors is analyzed. The key frames are then used to find abnormal vehicles with the constrained angular second moment (CASM) method. The workflow of KFEAVI is shown in Fig. 1.

Statistical feature extraction

For efficient feature representation, this work uses a statistical feature representation model based on the Beta Distribution (BD) of color information in the frame sequence. In general, corresponding regions of adjacent frames contain different content because object motion changes either slowly or rapidly. These differences have to be measured for efficient key frame extraction. Therefore, each frame of the input sequence is divided uniformly into non-overlapping regions. Let rk denote the k-th region, where k = 1, …, K, so that K is the number of regions. The BD of each region is computed using the Standard Deviation (SD) st of rk.
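The uniform division of a frame into K non-overlapping regions can be sketched in Python with NumPy as below. This is an illustrative sketch, not the authors' MATLAB implementation: the grid size (`rows` × `cols`) and the helper name `split_into_regions` are hypothetical, and border pixels that do not fill a complete block are simply dropped.

```python
import numpy as np


def split_into_regions(frame: np.ndarray, rows: int, cols: int) -> list[np.ndarray]:
    """Split a frame (H x W or H x W x C) into rows * cols non-overlapping regions rk.

    Hypothetical helper: each block is (H // rows) x (W // cols) pixels, so any
    leftover pixels at the right or bottom border are ignored in this sketch.
    """
    h, w = frame.shape[:2]
    bh, bw = h // rows, w // cols
    if bh == 0 or bw == 0:
        raise ValueError("grid is finer than the frame")
    regions = []
    for i in range(rows):
        for j in range(cols):
            regions.append(frame[i * bh:(i + 1) * bh, j * bw:(j + 1) * bw])
    return regions  # K = rows * cols regions, ordered row by row


# Example: a 4 x 6 grayscale frame split into a 2 x 3 grid gives K = 6 regions of 2 x 2 pixels.
frame = np.arange(24, dtype=float).reshape(4, 6)
regions = split_into_regions(frame, rows=2, cols=3)
```

Keeping the grid fixed means region k always covers the same image area, so region k of one frame can be compared directly with region k of the next frame.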

Standard deviation computation

The SD of each region is computed from its color content. Let pij denote a pixel of rk, as given in Eq. 1.

where pij is a pixel of rk, m is the number of pixels in a row of rk, and n is the number of pixels in a column of rk. The SD is computed by Eq. 2.

where pij is an individual pixel value, µ is the mean value of rk computed over all of its pixels, and m × n is the total number of pixels in rk.
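Assuming Eq. 1 arranges the region as an m × n pixel matrix and Eq. 2 is the usual population standard deviation, the two definitions can be written as follows. This is a reconstruction from the surrounding definitions, not a verbatim copy of the original equations.

```latex
r_k = \left[\, p_{ij} \,\right]_{m \times n}, \qquad i = 1, \dots, m, \quad j = 1, \dots, n

\mu = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} p_{ij}, \qquad
s_t = \sqrt{ \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} \left( p_{ij} - \mu \right)^{2} }
```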

Beta distribution estimation on region

The Beta Distribution (BD) is employed to model the pixel intensity distribution within a given region. As a continuous probability distribution defined on the interval [0, 1], BD is well-suited for capturing various shapes and characteristics of intensity variations across frame sequences. By computing BD over the normalized pixel values of \(r_k\), the model can effectively represent subtle changes in regional appearance. These distributional shapes can then be compared across consecutive frames to measure similarity and identify potential anomalies in the scene. The BD is computed using Eq. 3.

The normalizing term B(α, β), the beta function, is computed using Eq. 4. Both α and β must be greater than 0.
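Assuming Eqs. 3 and 4 take the standard textbook forms, the beta density is f(x; α, β) = x^(α−1)(1 − x)^(β−1) / B(α, β) and the beta function is B(α, β) = Γ(α)Γ(β) / Γ(α + β). A minimal Python sketch that evaluates both for a normalized pixel value x in (0, 1) is shown below; the function names are hypothetical.

```python
import math


def beta_function(alpha: float, beta: float) -> float:
    """B(alpha, beta) = Gamma(alpha) * Gamma(beta) / Gamma(alpha + beta)."""
    if alpha <= 0 or beta <= 0:
        raise ValueError("alpha and beta must be greater than 0")
    # Log-gamma avoids overflow of math.gamma for large shape parameters.
    return math.exp(math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta))


def beta_pdf(x: float, alpha: float, beta: float) -> float:
    """Beta density x**(alpha-1) * (1-x)**(beta-1) / B(alpha, beta) for x in (0, 1)."""
    if not 0.0 < x < 1.0:
        raise ValueError("x must lie strictly between 0 and 1")
    return x ** (alpha - 1) * (1.0 - x) ** (beta - 1) / beta_function(alpha, beta)
```

For example, `beta_pdf(0.5, 2, 2)` evaluates to 1.5 up to floating-point rounding, since B(2, 2) = 1/6.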

Parameters estimation

The BD has two parameters, α and β, which control its shape. Estimating these parameters produces a probability distribution curve that correctly fits the input data. The parameters are defined by Eqs. 5 and 6.
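Eqs. 5 and 6 are not reproduced in the text. One common way to obtain α and β from a region's mean and standard deviation is the method of moments; the sketch below assumes that estimator and assumes 8-bit pixels scaled to [0, 1] by dividing by 255. Both are assumptions, and `estimate_beta_params` is a hypothetical name.

```python
import numpy as np


def estimate_beta_params(region: np.ndarray, max_value: float = 255.0) -> tuple[float, float]:
    """Method-of-moments estimates of (alpha, beta) for one region rk.

    Assumed form of Eqs. 5 and 6 (not confirmed by the source):
        c = mu * (1 - mu) / st**2 - 1,  alpha = mu * c,  beta = (1 - mu) * c,
    where mu and st are the mean and population SD of the scaled pixel values.
    """
    x = np.asarray(region, dtype=float).ravel() / max_value  # scale pixels to [0, 1]
    mu = float(x.mean())
    var = float(x.std()) ** 2  # population SD (ddof=0), squared
    if var == 0.0 or not 0.0 < mu < 1.0:
        raise ValueError("region is constant or saturated; beta fit is undefined")
    c = mu * (1.0 - mu) / var - 1.0
    if c <= 0.0:
        raise ValueError("variance too large for a beta distribution")
    return mu * c, (1.0 - mu) * c
```

Moment matching fits naturally here because the region SD st is already computed; a maximum-likelihood fit would be an alternative but requires an iterative solver.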

Key frames extraction

In the BD computation, every region rk receives a BD value. To extract key frames, the BD values of corresponding regions are compared using a distance measure (D). Let ABD be the BD value of a region in the current frame and BBD the BD value of the corresponding region in the consecutive frame. The distance for each region is obtained by subtracting BBD from ABD, as given in Eq. 7.

Finally, the D values of all regions in the frame are aggregated. Let DT be the total distance (dissimilarity) of the current frame. For efficient key frame extraction, a threshold η is applied to DT: if DT is greater than η, the frame is termed a key frame, as given in Eq. 8. The threshold η is set experimentally.
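The key frame test can be sketched as below. The text does not specify how each region's beta distribution is reduced to a single BD value, so this sketch takes the per-region values as inputs. It further assumes that D in Eq. 7 is the absolute difference between ABD and BBD, that DT is the sum over the K regions, and that the consecutive frame is the next one. The function names are hypothetical.

```python
import numpy as np


def is_key_frame(bd_current, bd_next, eta: float) -> bool:
    """Key frame test over K corresponding regions (assumed form of Eqs. 7 and 8).

    bd_current[k] and bd_next[k] are the BD values ABD and BBD of region k.
    """
    a = np.asarray(bd_current, dtype=float)
    b = np.asarray(bd_next, dtype=float)
    if a.shape != b.shape:
        raise ValueError("frames must have the same number of regions")
    d = np.abs(a - b)          # D for each region rk (absolute difference assumed)
    d_total = float(d.sum())   # DT for the whole frame
    return d_total > eta       # key frame when DT exceeds the threshold eta


def select_key_frames(bd_sequence, eta: float) -> list[int]:
    """Indices j of frames fj whose DT with respect to frame f(j+1) exceeds eta."""
    return [j for j in range(len(bd_sequence) - 1)
            if is_key_frame(bd_sequence[j], bd_sequence[j + 1], eta)]
```

For example, with per-region BD values [[0.1, 0.2], [0.1, 0.2], [0.5, 0.9], [0.5, 0.9]] and η = 0.3, only frame index 1 is selected, because its regions differ from those of the next frame by 0.4 + 0.7 = 1.1.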

Foreground vehicles identification

The output key frames are used to find the frames containing abnormal events. Let Ke = {K1, K2, K3, …, KN} denote the set of N extracted key frames. These frames consist of background and foreground pixels. Usually, the foreground objects perform the actions that are mainly used to find abnormal events. Therefore, background subtraction is carried out on Ke to identify the foreground pixels. This work uses the background model6 to separate foreground vehicles from background objects using a reference frame.
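The background model of ref. 6 is not reproduced here. As a simple stand-in, reference-frame differencing with a fixed threshold yields a binary foreground mask; the threshold `tau` and the function name `foreground_mask` are assumptions made for illustration only.

```python
import numpy as np


def foreground_mask(key_frame: np.ndarray, reference: np.ndarray, tau: float = 30.0) -> np.ndarray:
    """Binary foreground mask from differencing a key frame against a reference frame.

    Assumptions (not from the paper): inputs share the same shape, a fixed
    intensity threshold tau is used, and colour differences are averaged
    over channels before thresholding.
    """
    k = np.asarray(key_frame, dtype=float)
    r = np.asarray(reference, dtype=float)
    if k.shape != r.shape:
        raise ValueError("key frame and reference frame must have the same shape")
    diff = np.abs(k - r)
    if diff.ndim == 3:           # H x W x C -> H x W
        diff = diff.mean(axis=2)
    return diff > tau            # True marks foreground (candidate vehicle) pixels
```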

The foreground vehicles are segmented from the foreground regions of Ke using a connected component analysis algorithm37. This analysis connects each foreground pixel with its upper, lower, left, and right neighbours. Let Qc, where c = 1, …, w, denote the foreground vehicles, as given in Eq. 9.
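A 4-connected labelling of a binary foreground mask, followed by one centroid per component to serve as each vehicle's location, can be sketched with a breadth-first search as below. This is a generic connected component algorithm, not necessarily the cited one, and the function names are hypothetical.

```python
from collections import deque

import numpy as np


def label_4_connected(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Label 4-connected foreground components of a binary mask.

    Returns (labels, w): labels[y, x] is 0 for background and c in 1..w for
    the c-th component, i.e. the candidate vehicle Qc.
    """
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    h, w_img = mask.shape
    count = 0
    for y in range(h):
        for x in range(w_img):
            if mask[y, x] and labels[y, x] == 0:
                count += 1
                labels[y, x] = count
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    # Upper, lower, left and right neighbours only (4-connectivity).
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w_img and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count


def component_centroids(labels: np.ndarray, count: int) -> list[tuple[float, float]]:
    """(x, y) centroid of each component c = 1..count, used as the vehicle location."""
    centroids = []
    for c in range(1, count + 1):
        ys, xs = np.nonzero(labels == c)
        centroids.append((float(xs.mean()), float(ys.mean())))
    return centroids
```

In MATLAB, which the experiments use, `bwlabel(BW, 4)` from the Image Processing Toolbox performs the same 4-connected labelling.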

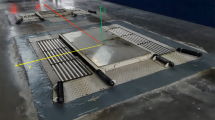

Abnormal vehicle identification using CASM method

Spatial analysis is applied to Qc to find abnormal events. The spatial information of Qc is obtained with the proposed Constrained Angular Second Moment (CASM) method. For this analysis, a Vehicle Degree Vector (VDV) is created as a one-dimensional vector Vf, where f = 1, …, n. In Vf, cells corresponding to vehicle occurrences are set to '1' and the remaining cells to '0'. A vector of the same size is used throughout the frame sequence. An example VDV is shown in Fig. 2.

In the example VDV, four vehicles are present in the frame, so four cells are set to '1' and the remaining cells are set to '0'. In this work, the CASM method uses the VDV to measure the degree of vehicle occurrence under distance constraints. To find abnormal vehicles, vehicle interactions need to be analysed, since close interactions between vehicles may lead to problems such as injuries and accidents. The interaction is calculated with a distance measure (D) by analysing the vehicles' locations, as given in Eq. 10.

where D is the distance between vehicles, and \({q_c}_{(x,y)}\) and \({q_{c+1}}_{(x,y)}\) are the locations of qc and qc+1, respectively. The distance between two vehicles is compared with the threshold η, which is also used by the CASM method. Based on these distance values, the Vehicle Occurrence (VO) is computed using Eq. 11.

In the CASM methodology, an abnormal vehicle interaction (AVI) can be detected within a frame by comparing the spatial distance between consecutive vehicle positions with the minimum threshold η. If the distance between vehicles is greater than η, the vehicle is treated as “isolated” and denoted by '0' in the one-dimensional vector Vf. This vector models the dynamic pattern of vehicle presence and spatial behavior. A decrease in VO in the current frame reflects an abrupt spatial dislocation of vehicles, which may indicate an AVI.
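The VDV and VO computation can be sketched as follows. Several details are assumptions rather than statements of the method: D in Eq. 10 is taken as the Euclidean distance between vehicle centroids, vehicles are ordered left to right, a vehicle's cell is '1' when it lies within η of its preceding or following vehicle and '0' when it is isolated, and VO in Eq. 11 is taken as the number of '1' cells. The function names are hypothetical.

```python
import math


def vehicle_degree_vector(centroids: list[tuple[float, float]], eta: float, size: int) -> list[int]:
    """Fixed-length VDV (cells f = 1..size) built from vehicle centroids (x, y).

    Assumed rule: cell c is 1 if vehicle c is within eta of its preceding or
    following vehicle, and 0 if it is isolated; unused cells stay 0.
    """
    pts = sorted(centroids)  # order vehicles left to right (by x, then y)
    if len(pts) > size:
        raise ValueError("more vehicles than VDV cells")
    # D between consecutive vehicles qc and qc+1 (Euclidean distance assumed for Eq. 10).
    dists = [math.dist(pts[c], pts[c + 1]) for c in range(len(pts) - 1)]
    vdv = [0] * size
    for c in range(len(pts)):
        near_prev = c > 0 and dists[c - 1] <= eta
        near_next = c < len(dists) and dists[c] <= eta
        vdv[c] = 1 if (near_prev or near_next) else 0
    return vdv


def vehicle_occurrence(vdv: list[int]) -> int:
    """VO as the number of 1 cells in the VDV (assumed form of Eq. 11)."""
    return sum(vdv)
```

For three closely spaced vehicles and one distant vehicle, for example centroids (0, 0), (3, 4), (6, 8), and (50, 50) with η = 5.5, the sketch marks the first three cells '1', leaves the distant vehicle isolated as '0', and gives VO = 3.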

To avoid overgeneralization, we limit the scope of AVI detection to the first instances of abnormal vehicle interactions, such as sudden deceleration, collision risk, or disorganized vehicle flow. AVI frames are treated as spatial inconsistencies, as illustrated in Fig. 3.
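Flagging AVI frames from the VO values of successive key frames can be sketched as below. It assumes an AVI is signalled by a drop in VO relative to the previous key frame and, to report only first instances, suppresses further flags while VO keeps dropping. The name `detect_avi_frames` is hypothetical.

```python
def detect_avi_frames(vo_sequence: list[int]) -> list[int]:
    """Indices of frames where VO drops relative to the previous frame.

    Only the first frame of each run of consecutive drops is reported, as a
    stand-in for limiting detection to the first instance of an interaction.
    """
    flagged = []
    in_event = False
    for j in range(1, len(vo_sequence)):
        dropped = vo_sequence[j] < vo_sequence[j - 1]
        if dropped and not in_event:
            flagged.append(j)  # first frame of a new AVI event
        in_event = dropped
    return flagged
```

For a VO sequence [4, 4, 3, 2, 2, 4, 3], the sketch flags frames 2 and 6; the drop at frame 3 continues the event that began at frame 2.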

Experimental results

The proposed KFEAVI method is implemented in MATLAB and evaluated on a car video dataset. The Car Accident Detection Dataset (CADP)38 consists of several car video clips, namely Video 1 (V1), Video 2 (V2), Video 3 (V3), etc. Five of these clips are considered in the experiments. All video clips were captured on various roads using surveillance cameras, and each clip lasts from 3 to 15 min. For the experiments, an average of 300 frames was taken from each clip. To assess the performance of KFEAVI, several existing algorithms are compared with the proposed method.

Performance evaluation

To evaluate the performance of the proposed KFEAVI method, precision, recall, and F-score are computed using Eqs. 12, 13, and 14. True Positive (TP), False Positive (FP), and False Negative (FN) are defined as follows. For ground truth annotation, AVI frames were manually labelled based on visual assessment of abnormal interactions. Specifically, a frame is labelled as a ground truth AVI if a vehicle that was present in the previous frame disappears in the current frame. Only the first AVI frame is labelled, to avoid over-counting prolonged AVIs. A TP occurs when KFEAVI detects an AVI frame that matches a ground truth AVI. An FP occurs when KFEAVI detects an AVI that is not present in the ground truth. An FN occurs when a ground truth AVI is not detected by KFEAVI.
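Assuming Eqs. 12, 13, and 14 are the standard definitions of precision, recall, and F-score, they can be computed from the TP, FP, and FN counts as below. Returning 0 when a denominator is zero is a convention chosen for this sketch, and the function name is hypothetical.

```python
def precision_recall_f(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision = TP/(TP+FP), recall = TP/(TP+FN), F-score = 2PR/(P+R).

    Any ratio whose denominator is zero is returned as 0.0.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f_score
```

For example, 8 TP, 2 FP, and 2 FN give a precision, recall, and F-score of 0.8 each.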

Table 1 lists the numbers of TP, FP, and FN obtained in the experiments. Based on these counts, precision, recall, and F-score were estimated using Eqs. 12, 13, and 14; the results are shown in Table 2. The proposed KFEAVI achieved good results on all video clips. V1 yielded lower results than V2, V3, V4, and V5 because overlapping vehicles appear more often in V1, which slightly degrades performance. A snapshot of an AVI is shown in Fig. 4.

Comparative analysis

To evaluate the performance of the proposed KFEAVI, it is compared on three video clips with the following existing vehicle identification algorithms: PC1, PC2, PC3, SVM, PC1 + SVM + GSP(OR), Automated Vehicle Monitoring System (AVMS), Intelligent Video Analytics Model (IVAM), Leveraging Convolutional Neural Networks (LCNN), and YOLOv8 (Tables 3 and 4).

Ablation study

A stepwise ablation study is carried out to estimate the effectiveness of the proposed KFEAVI method. The study starts with the original frame sequence as the baseline and then integrates two variants into it: KFEAVI applied to the original frame sequence and KFEAVI applied to key frames. For a fair comparison, samples of the same size are used throughout the ablation study. The results are tabulated in Table 5.

As observed in Table 5, the proposed KFEAVI, which incorporates both CASM and beta distribution-based key frame extraction, obtains better results, and each module makes a reasonable contribution to the AVI task. Excluding CASM decreased precision by 21%, recall by 26%, and F-score by 23%. Excluding both the key frame extraction module and CASM decreased precision by 37%, recall by 33%, and F-score by 35%.

Discussion

Tables 3 and 4 demonstrate that the proposed KFEAVI method outperforms existing algorithms in terms of abnormal vehicle interaction (AVI) detection. This improvement is primarily due to KFEAVI’s ability to eliminate duplicate frames by effectively identifying keyframes based on content variation. Through this strategy, frames containing meaningful vehicle activity are isolated, enabling efficient statistical feature extraction and increasing the number of true positive (TP) detections across video clips.

In the AVI module, the Constrained Angular Second Moment (CASM) method is employed to assess the degree of vehicle occurrence under distance constraints. This approach facilitates more accurate detection of vehicle count and position within the frame. The combined use of CASM and spatial distance analysis strengthens KFEAVI’s capability to identify abnormal interactions between vehicles.

Despite its strong performance, the proposed method still encounters false positives (FP) and false negatives (FN), especially in scenarios involving overlapping vehicles, where spatial analysis alone may not suffice. The ablation study confirms that KFEAVI achieves significantly higher accuracy when using selected keyframes as opposed to the full frame sequence, which contains substantial redundant information.

Limitation and future work

One key limitation of the current framework is the absence of temporal modeling, which restricts its ability to detect speed-related anomalies such as sudden stops or speeding. These behaviors often manifest over a sequence of frames and are difficult to capture using spatial analysis alone. Incorporating temporal features, such as vehicle displacement and motion patterns, would allow the system to handle complex scenarios like overlapping vehicles more effectively. Therefore, integrating temporal analysis into the AVI module will be a crucial direction for future research to enhance performance in diverse and dynamic surveillance environments.

Conclusions

This paper introduced KFEAVI, which extracts key frames to identify abnormal vehicles. KFEAVI uses a statistical feature extraction technique and a constrained angular second moment technique. It handles both gradual and abrupt content changes in frames well while extracting key frames, and the constrained angular second moment method is applied to identify abnormal vehicle movement. To evaluate KFEAVI, several algorithms were compared with it, and the experimental results show that KFEAVI achieves better results in terms of F-score. When vehicles overlap in the input frame, KFEAVI is less accurate because it does not consider temporal information. Therefore, KFEAVI can be extended to find abnormal events with temporal analysis.

Data availability

The dataset used in this study is publicly available at https://ankitshah009.github.io/accident_forecasting_traffic_camera.

References

Lu, C., Shi, J. & Jia, J. Abnormal event detection at 150 fps in MATLAB. In: 2013 IEEE International Conference on Computer Vision, pp. 2720–2727 (2013).

Song, H., Sun, C., Wu, X., Chen, M. & Jia, Y. Learning normal patterns via adversarial attention-based autoencoder for abnormal event detection in videos. IEEE Trans. Multimed. 22 (8), 2138–2148 (2020).

Liu, W., Luo, W., Lian, D. & Gao, S. Future frame prediction for anomaly detection - a new baseline. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6536–6545 (2018).

Asha Paul, M. K., Kavitha, J. & Jansi Rani, P. A. Keyframe extraction techniques: A review. Recent Pat. Comput. Sci. 11 (1), 3–16 (2018).

Vennila, T. J. & Balamurugan, V. A stochastic framework for keyframe extraction. In: 2020 Int. Conf. Emerg. Trends Inf. Technol. Eng. (ic-ETITE), pp. 1–5 (2020).

Elhoseny, M. Multi-object detection and tracking (MODT) machine learning model for real-time video surveillance systems. Circuits Syst. Signal Process., pp. 611–630 (Springer, 2020).

Vennila, T. J. & Balamurugan, V. A rough set framework for multihuman tracking in surveillance video. IEEE Sens. (2023).

Xia, L. & Li, Z. An abnormal event detection method based on the Riemannian manifold and LSTM network. Neurocomputing 463, 144–154 (2021).

Xia, L. & Li, Z. A new method of abnormal behavior detection using LSTM network with temporal attention mechanism. J. Supercomput. 77 (4), 3223–3241 (2021).

Wang, J., Xia, L., Hu, X. & Xiao, Y. Abnormal event detection with semi-supervised sparse topic model. Neural Comput. Appl. 31 (5), 1607–1617 (2019).

Coşar, S. et al. Toward abnormal trajectory and event detection in video surveillance. IEEE Trans. Circuits Syst. Video Technol. 27 (3), 683–695 (2017).

Mahadevan, V., Li, W., Bhalodia, V. & Vasconcelos, N. Anomaly detection in crowded scenes. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 1975–1981 (2010).

Cheng, K-W., Chen, Y-T. & Fang, W-H. Video anomaly detection and localization using hierarchical feature representation and Gaussian process regression. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2909–2917 (2015).

Li, T., Chen, X., Zhu, F., Zhang, Z. & Yan, H. Two-stream deep spatial-temporal auto-encoder for surveillance video abnormal event detection. Neurocomputing 439, 256–270 (2021).

Chang, Y., Tu, Z., Xie, W. & Yuan, J. Clustering driven deep autoencoder for video anomaly detection. In: Vedaldi, A., Bischof, H., Brox, T. & Frahm, J-M. (eds) Computer Vision - ECCV 2020, pp. 329–345 (Springer, Cham, 2020).

Sabokrou, M., Fayyaz, M., Fathy, M., Moayed, Z. & Klette, R. Deep-anomaly: fully convolutional neural network for fast anomaly detection in crowded scenes. Comput. Vis. Image Underst. 172, 88–97 (2018).

He, J., Chen, H., Liu, B., Luo, S. & Liu, J. Enhancing YOLO for occluded vehicle detection with grouped orthogonal attention and dense object repulsion. Sci. Rep. 14, 1–14 (2024).

Jeon, H., Kim, D., Kim & Kim, J. PASS-CCTV: Proactive anomaly surveillance system for CCTV footage analysis in adverse environmental conditions. Expert Syst. Appl. 254 (2024).

Venkatrayappa, D. Abnormal event detection in videos using deep embedding. Computer Vision and Pattern Recognition (2024).

Nguyen, T. N. & Meunier, J. Anomaly detection in video sequence with appearance-motion correspondence. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 1273–1283 (2019).

Zhao, Y. et al. Spatio-temporal autoencoder for video anomaly detection. In: Proceedings of the 25th ACM International Conference on Multimedia (MM ’17), pp. 1933–1941 (Association for Computing Machinery, New York, NY, USA, 2017).

Piciarelli, C., Micheloni, C. & Foresti, G. L. Trajectory-based anomalous event detection. IEEE Trans. Circuits Syst. Video Technol. 18 (11), 1544–1554 (2008).

Tung, F., Zelek, J. S. & Clausi, D. A. Goal-based trajectory analysis for unusual behaviour detection in intelligent surveillance. Image Vis. Comput. 29 (4), 230–240 (2011).

Kosmopoulos, D. & Chatzis, S. P. Robust visual behavior recognition. IEEE Signal Process. Mag. 27 (5), 34–45 (2010).

Yen, S. & Wang, C. Abnormal event detection using HOSF. In: IEEE Int. Conf. IT Converg. Secur. (ICITCS), pp. 1–4 (2013).

Zhang, Y., Qin, L., Yao, H. & Huang, Q. Social attribute-aware force model: exploiting richness of interaction for abnormal crowd detection. IEEE Trans. Circuits Syst. Video Technol. 25 (7), 1231–1245 (2015).

Chaker, R., Al Aghbari, Z. & Junejo, I. N. Social network model for crowd anomaly detection and localization. Pattern Recognit. 61, 266–281 (2017).

Amraee, S. et al. Anomaly detection and localization in crowded scenes using connected component analysis. Multimed. Tools Appl. 77 (12), 14767–14782 (2018).

Hung, T., Lu, J. & Tan, Y. Cross-scene abnormal event detection. In: IEEE Int. Symp. Circuits Syst. (ISCAS), pp. 2844–2847 (2013).

Sandhan, T., Srivastava, T., Sethi, A. & Jin, Y. Unsupervised learning approach for abnormal event detection in surveillance video by revealing infrequent patterns. In: IEEE Int. Conf. Image Vis. Comput. N. Z. (IVCNZ), pp. 494–499 (2013).

Tziakos, I., Cavallaro, A. & Xu, L. Local abnormality detection in video using subspace learning. In: IEEE Int. Conf. Adv. Video Signal Based Surveill. (AVSS), pp. 519–525 (2010).

Shi, Y., Gao, Y. & Wang, R. Real-time abnormal event detection in complicated scenes. In: IEEE Int. Conf. Pattern Recognit. (ICPR), pp. 3653–3656 (2010).

Yin, Y., Liu, Q. & Mao, S. Global anomaly crowd behavior detection using crowd behavior feature vector. Int. J. Smart Home 9 (12), 149–160 (2015).

Mahadevan, V. et al. Anomaly detection in crowded scenes. In: IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pp. 1975–1981 (2010).

Singh, D. & Mohan, C. K. Graph formulation of video activities for abnormal activity recognition. Pattern Recognit. 65, 265–272 (2017).

Gu, X., Cui, J. & Zhu, Q. Abnormal crowd behavior detection by using the particle entropy. Optik - Int. J. Light Electron Opt. 125 (14), 3428–3433 (2014).

Lee, C. P., Lim, K. M. & Woon, W. L. Statistical and entropy based abnormal motion detection. In: IEEE Stud. Conf. Res. Dev. (SCOReD), pp. 192–197 (2010).

Shah, A. P., Lamare, J. B., Nguyen-Anh, T. & Hauptmann, A. CADP: A novel dataset for CCTV traffic camera based accident analysis. In: 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), pp. 1–9 (2018).

Ketao & Deng. Anomaly detection of highway vehicle trajectory under the Internet of Things converged with 5G technology. Complexity, 1–12 (2021).

Yadav, S., A. S. & Ojha, A. Smart evaluation of videos for unusual-event identification in automated vehicle monitoring systems. Adv. Eng., 1–7 (2023).

Author information


Contributions

M.A.Y. Peer Mohamed Appa wrote the main manuscript. Vanitha V. contributed significantly to the revision, including data analysis and interpretation of results. Priti Rishi carried out data collection and processing. Shrdda Sagar performed data analysis, interpretation, and visualization. M. Asha Paul designed the framework and reviewed and revised the manuscript. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Peer Mohamed Appa, M.A.Y., Vanitha, V., Rishi, P. et al. Key frame extraction based abnormal vehicle identification technique using statistical distribution analysis. Sci Rep 15, 30957 (2025). https://doi.org/10.1038/s41598-025-16653-7
