Abstract

Opinion mining is more challenging than it was before because of all the user-generated material on social media. People use Twitter (X) to gather opinions on products, advancements, and laws. Sentiment Analysis (SA) examines people’s thoughts, feelings, and views on numerous topics. Tweets can be analyzed to determine public opinion on news, regulations, society, and personalities. The existing SA system has poor prediction performance and needs improvements for instantaneous commercial applications. The insufficient data and complexity of model configuration, which make deep learning (DL) difficult, are the main causes of low accuracy and prediction rates. Convolutional Neural Long Short-Term Memory (OTCNLSTM) optimal tiered blocks with classification learning are proposed in this research to recognize emotions. The objective is to classify tweets as happy or sad. The TCNLSTM model consists of four training blocks for local features. These blocks are designed to extract local emotions hierarchically. The Boosted Killer Whale Predation (BKWOP) strategy is implemented to find the appropriate hyperparameter and its solution set and build a stable neural network model. This research reviews textual emotional classification experiments utilizing various sentiment models and methods. To further analyze this research, a comparative experimental study using the Kaggle Twitter dataset is conducted. The results indicate that the OTCNLSTM model had superior performance compared to the other models.

Similar content being viewed by others

Introduction

The task of assessing the sentiment of textual information has become ever more difficult because of the rapid expansion of social media content on sites such as Facebook and Twitter. Numerous scholarly investigations have thus far concentrated on the analysis of sentiment in textual data. In recent times, there has been a significant accumulation of structured, unstructured, and semi-structured data on social media sites like Twitter. An illustrative instance that exemplifies the prevalence of disinformation in social media is the COVID-19 infodemic, which highlights the potential for misinformation to have a more significant and detrimental impact than even a catastrophic event like a pandemic. Twitter is a highly expeditious and effective platform for microblogging, enabling users to submit concise postings known as tweets. Twitter is a widely utilized application that holds a prominent position in the realm of social media, demonstrating significant achievement. The utilization of Twitter enables the creation of free accounts, which in turn offer a substantial opportunity to reach a vast audience. Twitter can be considered an optimal platform for business and marketing strategies because of its ability to facilitate connections with influential and renowned individuals, such as celebrities and stars.

The opportunity to interact with such people is thus quite alluring to them and the advertisers, and it is therefore an attractive venture to conduct the promotion and sale of their products or services. By use of Twitter, celebrities are able to interact with their fans, and they reach those who are following them. This site is an ideal approach that appeals to the interests of enthusiasts. Nevertheless, this site limits the size of every text to 140 characters. It is also able to share posts or links to the website without any limit on expense to its users. Moreover, it is also a platform where ads are displayed. The existence of personal advertisement groups like other social networking sites does not amount to any problems per se. The swiftness of information transfer through Twitter is based on the instant sensitization of the people following a business account, hence the accuracy of delivery. Sentiment categories have to be allocated on a large scale, and this requires analysis. To satisfy such efforts, exact NLP (natural language processing) techniques and ML (machine learning) models that target text classification are necessary. Twitter gives users an opportunity to analyze its data on a large and broad scale. The automatic classification of text data requires them to be noisy, which further justifies the need for efficient procedures in the same. The past has seen numerous studies carried out regarding the characterization of emotion on Twitter1.

During the past few years, there has been significant potential associated with DL models, such as Convolutional Neural Networks (CNN) and the LSTM, in the SA field. These models have proved their ability to process sequences of any length. DL architecture works in the same way as the human brain2. The system includes several layers which have the possibility to analyze, transfer information and as a result, allow transferring the gained properties to the next layer. The results obtained are relayed to the following layer, and such a process is repeated many times3. In every round, the anticipated outcome will be compared with the labels/actual output and its accuracy. The weights of the elements are then changed. A lot of different DL networks have been suggested, such as CNNs, RBNs, DNNs, RBMs, and LSTM networks. Large amounts of CNN and LSTM layer structures are used in many areas, often with sequence-based or tree-structured models that can effectively find and study local connections within spatial or temporal structures. CNN exhibits notable proficiency in the domains of emotion identification, computer vision, and NLP. Scholars have employed CNN for sentence modelling4. Using convolutional filters, CNN shows a better way to extract n-gram features at different phrase positions.

Additionally, it is capable of learning both short and distant relationships through pooling operations. However, in the context of the feature extraction layer, it is observed that the feature distance has a high dimensionality. Additionally, sparsity characterizes the text data, assigning equal importance to all features. To address these issues, a novel framework called OTCNLSTM is introduced for SA. The novelty of the proposed study is indicated by the following important contributions:

-

Hierarchical Emotional Feature Learning: Unlike conventional CNN-LSTM models, the OTCNLSTM offered extracts hierarchical emotional features through multi-level convolutional blocks before temporal modelling. Such architecture has been designed for tweets that are short, sparse, and rich in context.

-

BKWOP: The optimizer may mimetically model orca behaviour to adaptively balance exploration and exploitation and enhance hyperparameter tuning via boosting-oriented feedback.

-

Optimization-Classified Learning Pipeline: Specifically, this framework treats optimization as more than a parameter search strategic process that behaviour-guided dynamically shapes architecture depth, learning rate, batch size, and length of LSTM memory.

-

Domain-Specific Application to Emotion Polarity: Instead of coarse positive/negative/neutral classification, the model performs emotion-based binary classification (happy versus sad), which is more subtle and reflective of real-world sentiment complexity.

-

Experimental Superiority and Generalizability: The empirical evaluation in the Kaggle Twitter sentiment dataset subset finds the model superior to conventional DL models-CNN, LSTM, and CLSTM, in terms of accuracy, precision, sensitivity, F1 score, and specificity.

OTCNLSTM uses the best hierarchical CLSTM layers to find the spatial and semantic links between Twitter data segments. The research model has a transition methodology that encompasses links between input and state, as well as state-to-state relationships. This model utilizes a hierarchical approach that leverages the convolution operation to learn spatial local cues. Unlike the typical attention model that attends mostly to word-level relevance, the OTCNLSTM model combines multi-level convolutional blocks with CLSTM layers to extract localized emotional features hierarchically prior to sequential modelling. Moreover, the "Boosted Killer Whale Predation" (BKWOP) algorithm used for hyperparameter tuning brings in the behaviour-driven learning paradigm never found in the conventional attention or hierarchical LSTM schemes, largely contributing to greater efficacy in sentiment classification assignments.

Following this, the article will continue with the following sections: Section "Related works" reviews the literature on SA, while Section "Research model" presents the proposed framework for emotion recognition. Section "Results and discussion" provides a comprehensive account of the empirical findings, experimental procedures, analytical discourse, and comparison with current SA methodologies. Section “Conclusion” includes the conclusion and future directions.

Related works

This section focuses on the recognition of emotions based on SA utilizing ML and DL algorithms. Additionally, the limits of these schemes are also addressed as shown in Table 1. Uthirapathy and Sandanam5 introduced the LDA (Latent Dirichlet Allocation) methodology for identifying and classifying various topics within a given dataset. On the other hand, BERT (Bidirectional Encoder Representation from Transformers Uncased) was utilized for sentiment classification in the dataset. The BERT using DL model reads the sentences in the left-to-right direction as well as from right to left. With the aid of a large sample of texts as a training stock, this model employed the language prediction model covered by the hidden mode and sentence prediction strategy to understand the language better and to discover some aberrations in patterns of data. The way that it learnt was also generalizable in its own way, through a wide variety of NLP tasks.

Sharaf Al-deen et al.6 presented another form of model, the DNN-MHAT (deep neural network with a multi-head attention mechanism). This model integrated an accurate DNN that comprehends the context of the text altogether and extracts invariant local features. The Bi-LSTM is combined with a CNN to perform this. It also incorporated a multi-head attention. This mechanism concentrated on the information of the hidden layers of BiLSTM to rapidly identify the words of the text with intensive connections and rely on long-term encoding. These findings indicated that the DNN-MHAT model performed better than the existing best ones when applying the short tweets and the long reviews.

Basiri et al.7 proposed a deep attention-based CNN-RNN model of SA (ABCDM), and the first step of this model was weighting factor GloVe embedding on its embedded layer. Previously, the attention approach has been introduced to highlight some statements, and two GRU and LSTM bidirectional layers have been provided to extract both previous and potential future contexts. Applying the convolution and the pooling method will help to obtain local features, which remain at a certain location and maximally reduce the size of the desired features.

In order to determine the opinions of people on the issue of climate change, Yasin and Arslankaya8 concentrated on the analysis of the Turkish-language tweets containing their discussion of the global warming phenomenon. The tweets were classified by the system into different opinion groups, such as negative, positive, and neutral. The model in question was built by use of ML techniques, K-NN (k nearest neighbour), NB (Naive Bayes) and support vector machine (SVM). The comparisons between accuracy measures were used to check the efficiency of the classifier. Based on the findings, Naive Bayes, K-NN, and SVM algorithms have illustrated 65.43%, 73.51%, and 74.63% of classification accuracy, respectively. These results indicate that the accuracy of the classification could have been improved.

Yin et al.9 demonstrated a thorough study of the most common NLP approaches to retrieving sentiments of people faced with the COVID-19 vaccine on social media platforms. The study was supposed to objectively analyze and illustrate these opinions, and the outcomes of the study can improve the understandability of the difficult material on social networks and provide sufficient empirical support that can be used to sway government decision-making and drafting policies. El Barachi et al.10 developed the sentiment investigation framework based on a BiLSTM model that was used to determine the sentiments of climate change and global warming-related tweets. The feelings were classified into different classifications of emotion. The addressed classifier has achieved a state-of-the-art level, as supported by cross-category accuracy rates of 87.01–89.80%.

Rodrigues et al.11 presented a method for identifying spam and SA in the Twitter data set using ML and deep neural networks. In the study, both the NB algorithms used in ML and the LSTM method used in DL performed better than other methods when evaluating accuracy. Sivakumar and Srinivasulu12 recommended the utilization of LSTM and aspect-based opinion analysis with the incorporation of fuzzy logic for the evaluation of mobile phone reviews. Naramula and Kalaivania13 have introduced a method for sentiment extraction in mobile phone reviews, focusing on certain aspects. The method utilizes artificial intelligence techniques, including SVM, RF, and K-NN. The researchers utilized a dataset sourced from Twitter, which consisted of tweets specifically about reviews of iPhone and Samsung phones. Gandhi et al.14 proposed the development of SA for movie reviews using CNN and LSTM. The methods demonstrate a higher accuracy of 87.74% and 88.02% compared to alternative methods, suggesting potential for further enhancement in performance.

Huang et al.15 proposed a BERT-based linguistic model for preparing Turkish verbal data to make it suitable for ML methods. The utilization of the BERT-based methodology effectively streamlines the data preprocessing procedures. Jain et al.16 employed BERT and dilated DCNN to construct an SA model. It used BERT as the embedding mechanism of words, but the layer DCNN was used to classify and optimise the method. A model that combines BERT and CNN methods in sentiment classification was proposed by Ozcsift et al.17. The model was used to classify sentiment in terms of a large movie review dataset. The method used the BERT embedding method of words and a trained CNN to get a better understanding of the text’s semantic content. Pang et al.18 have developed an aspect-based SA model based on BERT. The methodology has been utilized in drawing aspect-level data out of such a large data set to transfer the aspect attributes to the classification and eventually improve the model. Manju and Gupta19 proposed a BERT-based LDA framework to perform aspects-based SA on a dataset containing restaurant reviews.

Yousaf et al.20 have used various ML models to apply emotion recognition in Twitter data, which involves identifying whether a tweet is happy or unhappy. Using performance comparison in detail, it was revealed that the ideal result was seen with the suggested voting (LR-SGD) classifier with TF-IDF, which was 79 percent accuracy with F1 of 81 percent. In order to make the suggested method more reliable, more tests were done on two separate sets of data: one with binary data and the other with multi-class data. The obtained outcomes exhibited a high level of resilience and consistency.

The study by Bansal et al.21 established a framework employing a DL classification model to distinguish tweets from others and described. Non-situational tweets predominantly express sentiments such as sorrow, anger, and grief. The study has concurrently addressed sentiment classification and informative tweet selection problems with a multi-task learning (MTL) approach within a DL framework. The summarizing method produced clustering solutions through diverse established techniques and subsequently integrated these cluster solutions via generative modelling. The method’s improved efficiency in four disaster-related events demonstrated the framework’s efficacy compared to state-of-the-art methodologies.

An algorithm called Sentiment Analysis of Twitter Social Media Data (SATD) was proposed by Dangi22. The SATD assessed and predicted the sentiment of tweets regarding COVID-19. The methodology predicted the metrics of recall, precision, F1-score, and support utilizing various ML classifiers, including logistic regression, random forest, support vector machine, multinomial Naïve Bayes, and decision trees, across two datasets: the first derived from Twitter during the lockdown and the second during post-lockdown. The experiment and discussion sections indicated that the SATD model exhibited better accuracy.

A method termed the chaotic coyote optimization algorithm (COA)-based temporal weight-AdaBoost support vector machine (SVM) approach was designed by Dangi23 to achieve accurate classifications in the given context. The time-weighted AdaBoost SVM addressed drift-related concerns and facilitated optimal data learning for subsequent classifications. Moreover, class imbalance challenges were addressed by implementing a modified CO algorithm, specifically the chaotic COA. The findings indicated that the methodology classified Twitter-acquired social media information with greater accuracy.

The study by Dangi24 analyzed tweets pertaining to COVID-19 and examined the various ML algorithms along with their performance evaluation on these tweets. The classification methods were utilized to categorize emotions to ascertain their association with non-COVID-19 or COVID-19 topics. The Linear Support Vector Machine (LSVM) attained the greatest test accuracy among these methods. Logistic regression has demonstrated superior performance in ROC_AUC, F1 score, and recall. The random forest classifier has demonstrated superior precision and specificity. Among all methods, stochastic gradient descent (SGD) has shown superior outcomes across all computation parameters. Dangi25 also made a good review on sentiment analysis of movies using machine learning techniques based on data available on Twitter. Table 1 gives the Critical Analysis of the Reviewed Models.

Summary: In the literature above, the researchers have devised numerous ways to enhance the predictive efficacy of SA. However, the observed recognition rates remain relatively low. This paper presents the development of an efficient and significant SA system. The system utilizes optimum TCNLSTM blocks to excerpt hidden emotional cues. These cues are then fed into a sequential model to extract temporal features. After that, using both the center loss and the softmax loss gives chances for the classes, which guarantees and improves the final classification results. BKWOP enhances accuracy in TCNLSTM by guiding the development of appropriate hyperparameter selection. The forthcoming section delivers a comprehensive exposition of the projected framework.

Research model

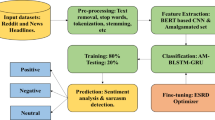

This section presents a deep discussion of the developed SA method, which is based on the OTCNLSTM model. The step-by-step process of the analysis is outlined, and the key components of the model, including the CLSTM architecture, sequential learning approach, centre loss function, and optimal parameter selection, are thoroughly explained. Figure 1 illustrates the comprehensive structure of the proposed design. On the other hand, the problem of recognizing emotions is approached as a classification task with multi-class, utilizing sequential learning and spatiotemporal models. The TCNLSTM model is created on the basis of GRUs (Gated Recurrent Units) and with the help of the use of the centre loss. This is because this model will be successful in determining local as well as global patterns in Twitter data by using raw Twitter content. The intended model architecture is based on three modules, LFTBs with CLSTM, GFTB with GRUs, and multiclass classification using centre and softmax loss, and the preliminary preprocessing stage. Then the processed data is passed into the TCNLSTM model. In this model, the pertinent data are determined and the classification of such sentiments as happiness, unhappiness, sadness, anger and normal is made. In order to improve the accuracy of TCNLSTM, the best parameter is found by the usage of BKWOP, and then, the results are evaluated on the basis of the usage of the Kaggle dataset. The results indicate that the OTCNLSTM model had greater performance when associated with other models.

Since it is a binary sentiment classification of tweets, this study combines OTCNLSTM with an optimizer called BKWOP. As such, the methodology consists of:

Data Preprocessing: Unnecessary data are removed from the raw tweets, after which the Twitter data is standardized using the RMS function.

OTCNLSTM Model Architecture: The proposed model is a hierarchical deep-learning architecture designed to extract local and temporal sentiment patterns from tweets.

-

Tiered Convolutional Blocks: It consists of 4 blocks of 1-dimensional convolution with steadily increasing filter depths of 32, 64, 128, and 256 and kernel size = 3. The main task of the convolution layer is to detect some abstract local semantic features, and then max-pooling is applied to reduce the dimensionality. Batch normalization is applied after each convolution block to stabilize training.

-

Fully Connected Layers: The LSTM output is then sent into two dense layers, with 128 neurons in the first dense layer and 64 neurons in the second dense layer; their activation function is ReLU. Finally, an output layer using sigmoid activation gives a classification output indicating if the sentiment is happy (1) or sad (0).

BKWOP for Hyperparameter Optimization: Optimization Objective: BKWOP aims at the optimization of hyperparameters, e.g., Learning rate, Batch size, Number of LSTM units, Dropout rate, and Number of CNN filters.

Sentiment Classification Process: Preprocessed tweets are fed into the optimized OTCNLSTM model. Local and global emotional patterns are extracted, and the final output layer predicts the sentiment class. The model’s performance is evaluated using accuracy, precision, sensitivity, F1-score, and specificity.

Dataset description

The dataset has a substantial number of tweets that express contrasting viewpoints. The dataset utilized in this work is referred to as "SA on Twitter data" and comprises a total of 99,989 records. Each record is classified as either joyful or unhappy based on its sentiment polarity, represented by the symbols 1 and 0, respectively. The completed dataset includes tweets written in the English language. The dataset comprises various distinct features. This dataset consists of 43,532 negative and 56,457 positive tweets. This dataset is available at Kaggle (https://www.kaggle.com/datasets/jp797498e/twitter-entity-sentiment-analysis).

The dataset was divided with 70% for training, 15% for validation, and 15% for testing using stratified sampling in order to preserve class balance between happy and sad tweets in all subsets. So, the evaluation was fair, and no class imbalance was present in training and testing.

Preprocessing

In this stage, the unnecessary data is eliminated, and the Twitter signal is standardized using the RMS (Root Mean Square) function, as outlined in Eq. (1):

where “RMS” stands for the desired root-mean-square results and \(sf\) is a Twitter data scaling factor of \(l\) for achieving a linear gain based on a lot of data. Normalizing data to a decidable corpus is a typical practice in text data processing. Four LFTBs were built, each with a CLSTM layer, a BN layer, and a pooling layer, to further extract the hidden emotional cues in sequential ways. The LFTBs are hierarchically coupled with one another to find the state-to-state and input-to-state association throughout the Twitter elements. To find out how Twitter posts are related in space and time, a CLSTM layer is employed for optimizing the sequences, storing sequential data in the internal state, and making use of that knowledge for a step-by-step prediction. The long-term context-dependent connections in the Twitter segments are also identified using the GRUs network, and the overall weights are adjusted accordingly. Last, the softmax layer is used to get the final classification probabilities. In the next sections, the GRU-CLSTM will examine and centre loss in greater depth to see how they all fit together.

CLSTM in the SA model

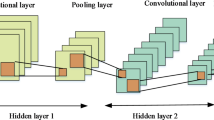

There is a high level of consistency across successive tweets, making emotion identification a sequential problem. By processing the data segments individually and spotting the association during sequential prediction, the suggested SA model can identify emotional states in raw Twitter data. Although LSTM networks are often considered to be superior at sequential modelling tasks26, the generic LSTM network is notorious for ignoring spatial cues in the data being input during processing. As a result, the spatial cues are lost as the sequential cues are modelled as a one-dimensional vector. Because of this, using Twitter data to boost recognition performance is not recommended. Spatial cues will be extremely helpful in enhancing the effectiveness of the model used for emotion recognition in Twitter segments. During its preliminary processing, the CNN network takes into account the geographical structure present in the Twitter data and uses that to its advantage. This method employs the CLSTM (convolution LSTM)27 in place of a traditional CNN network to maintain spatial and temporal signals, including the spatiotemporal data in consecutive Twitter elements. In the CLSTM, the convolutional operation is used for both state transitions and data transitions. For betterment, the correlation between the Twitter sections, the CLSTM, records the spatiotemporal cues during the convolution procedure. Equations (2)–(7) are used by the CLSTM to determine body mass.

The above equations make use of numerous variables to represent different operations performed during the convolution process, such as the sigmoid functions ("σ"), the * (“convolution operations”), the element-wise operations ("+"), and the tanh ("hyperbolic tangent function"). Applying the \(t\)-th step of the CLSTM (denoted as subscript "t") yields the corresponding representations for the various gates, such as the input gates \({ipg}_{t}\), forget gate \({fgg}_{t}\), output gate \({opg}_{t}\) and input modulation gate \({img}_{t}\). Furthermore, \({P}_{t}\) represents the input data while \({hi}_{t}\) and \({ce}_{t}\) referred to as the hidden state and cell state, respectively. The CLSTM extracts temporal information in the first dimension of the input tensor and spatial information in the second and third dimensions of the tensor for use in the convolution operation.

The essential idea behind both the CLSTM and the traditional LSTM network was similar. As shown in Fig. 2, the productivity of one layer helps as the input to the following layer. They differ mostly in their approach. For the CLSTM, the operation of convolution was applied during the change of state-to-state, where the spatiotemporal features were learned. As a result, the CLSTM can concentrate on the most important aspects of the Twitter segments, making it simple to identify the data streams that follow one another on Twitter and ensuring that the prediction performances of the SA model are maintained. Good recognition performances were achieved with the OTCNLSTM layer.

Global feature training block (GFTB)

Modifications to the GRUs enhanced the proposed emotion detection framework’s capability to acquire global cues in the learnt features and comprehend long-term contextual linkages. Here, the stacked GRU network28 is used to learn global features. In contrast, the GRU network (Hochreiter and Schmidhuber) is a variation of the LSTM with two gates, an update gate and a reset gate, and has become popular recently for training with incomplete sequences of input. The update gate in the GRU is comparable to the input and forget gate in an LSTM, and the rearrange gate may be used to re-tune the units in the same manner that it does in an LSTM. Unlike the LSTM module, the GRU module’s internal mechanism does not rely on a dedicated memory cell for the alteration of information within individual units. Equation (8) can be used to determine the generation \({h}_{t}^{j}\) at time "t" between direct interpolation candidates \({h}_{t}^{j}\) and \({h}_{t-1}^{j}\).

where \({z}_{t}^{j}\) represents the evaluation and the stimulation level of the units in the update gate, as shown by Eq. (9).

In GRUs, Eq. (10) is used to activate candidates by the activation of \({\widehat{h}}_{t}^{j}\) Conducted by an update gate.

The element-wise multiplication symbol (*) and the reset gate symbol (\({re}_{t}\)) are used in Eq. (10). For data to be cleared once the gate was disabled, the value should equal 0, as in (\({z}_{t}^{j}\)= 0), and the gate that resets must coincide with the unit. The process through which the unit is instructed to look for the leading variable in a sequence of inputs. The state of the reset gate can be calculated with the help of Eq. (11).

An update gate (represented by "z") controls the current state of a sequence, whereas a reset gate (represented by “re”) activates any short-term dependencies. The GRU network’s update gate is also in charge of managing long-term context. To re-tune the global weights, however, a network is used that can stack bidirectional gated recurrent units (BiGRUs).

Center loss function

Improved model performance was achieved by jointly computing the softmax loss and the main loss of the deep characteristics for the final classification of the Twitter emotions. Although the final class possibilities required for optimal prediction accuracy may be calculated with just these two losses, the softmax loss yields inferior model forecasting performance than the fusing loss because of the significant distance between the classes. In this model, the median "λ" context is used for updating the loss functions and calculating and optimizing the intra/inter-class distances. The proposed function29 determines the amount of information lost while comparing the deep features to the stable centre of their respective classes. The centre loss function was used to determine the smallest possible distance between classes, and the softmax loss function was used to get the largest possible distance between classes (Eqs. (12) and (13)).

To the classifier, "n" and "m" stand for the count of classes and the minimal size of batch, respectively; \(c{y}_{i}\) stands for the class centre \({y}_{i}\) in the ith samples, and the rand number stands for the selection of random values. To determine how far away an object should be to avoid false classifications in real-world conditions, the λ employed symbol for the centre loss. As can be seen in Eq. (14), the emotion classification task was completed utilizing the centre loss with a softmax loss function.

where "L" is the ultimate loss functionality after fusing. Experiments have shown that using the centre loss function is both significant and effective. The hyperparameter is the symbol "λ".

BKWOP-based hyperparameter selection

The reasons to implement the BKWOP algorithm were considered based on:

Exploration–Exploitation Trade-off: BKWOP dynamically allows movements of solution agents with respect to environmental conditions and attack modes (bubble-net strategies). Thus, it prevents premature convergence and the local minima—a problem frequently encountered in PSO and GA.

Boosted Learning: Like the ensemble method of boosting, BKWOP merges multiple search behaviours and feedback processes in order to augment the convergence speed without compromising the diversity.

High-Dimensional Adaptability: Hyperparameter tuning in DL is often manifested as high-dimensional multimodal landscapes. The spiral-driven encircling strategies of BKWOP facilitate the navigation flexibility in such spaces.

In the first step of its optimization process, BKWOP randomly selects N individuals of scale D to form an initial population. Killer whales’ behaviour during the optimizing process can be broken down into two distinct phases according to mathematical criteria: the pursuit phase and the attacking phase.

Chasing phase

When killer whales see a group of fish, they do not immediately begin hunting; rather, they use sonar to organize their efforts and cooperate to capture prey successfully. These killer whales will travel, bringing the group of fish to the external and into a confined area. The killer whales divide the chasing phase of the hunting process into two distinct behaviours: pushing the prey and surrounding the prey. To fine-tune the killer whales (i.e., hyperparameter) capacity to handle these two behaviours independently, first, a constant value is assigned between 0 and 1 to the selection parameter \({x}_{1}\), and then a second number is generated between 0 and 1 at random. For numbers larger than \({x}_{1}\), the forced behaviour is executed; otherwise, the surrounding behaviour is used.

Forcing behaviour

To maximize their value, killer whales will chase groups of fish to the surface when they spot them. When there are few killer whales in a pod (the parameter), the locative gauge of spinning is modest, and the killer whales can zero in on their prey quickly and accurately. Instead, it is more difficult to approach the target spot precisely if the killer whales’ troop is huge, the locative measure of swimming was vast, and the hunting environment was complex. It was essential to keep control over the centre location of the killer whale’s troop to keep it close to the prey and to prevent the killer whale’s troop from diverging from the goal. It not only makes it possible for killer whale search agents to shift nearer to the prey, but it is even necessary. Therefore, chasing can be broken down into two distinct strategies depending on the number of resident killer whales. When the killer whale’s pod is large (rand > UD), the first method is used, whereas the second method is employed when the pod is tiny (rand UD). Exactly, the killer whale’s speed and the connected positions after it had enthused were accessible as given in the following:

where \(iter\) is the current iteration index, \({v}_{c,i,Fi}^{iter}\) is the chasing speed of the i-th killer whale at iterations; subsequently, the first chasing method is determined, \({v}_{c,i,Se}^{iter}\) is the i-th killer whale’s chasing speed at iterations after the next hurtling strategy is determined, and so on. Also \({p}_{c,i,Fi}^{iter}\) represents the i-th killer whale’s location at iterations \(iter\) after finding the initial chasing method, \({p}_{c,i,Se}^{iter}\) represents the i-th killer whale’s location at iterations \(iter\) after determining the next chasing method, and \(Me\) represents the arithmetic mean position of the killer whales’ troop. Probability of choosing a chasing strategy, where P, Q, and R are uniformly distributed between 0 and 1, S is uniformly distributed between 0 and 2, U's esteem is set to 2, and Q is uniformly distributed between 0 and 1.

Surrounding behaviour

Killer whales must cover the groups of fish in a measured circle afterwards, forcing them to the surface. During the surrounding behaviour, killer whales exploit sonar for interacting with all others and choose their next locations according to the position of nearby killer whales. The killer whales shift themselves by applying the locations of three selected killer whales randomly and then determining the locations after swimming as given in the following:

where \({y}_{1},{y}_{2}, {y}_{3}\) are the three killer whales chosen at random from N killer whales and \({y}_{1}\ne {y}_{2}\ne {y}_{3}\), and \({p}_{c,i,Th}^{iter}\) were the i-th killer whale’s locations after finding the next chasing strategy at iterations \(ite{r}_{max}\).

Attacking preys

When a pod of killer whales is hunting its food, the predators take turns diving into the enclosure, attacking the fish inside, lashing their tails on the circle, and then eating the stunned prey. Because too few killer whales will lead to the individual going in one direction, and too many would slow down the algorithm’s convergence velocity, BKWOP has four killer whales, which relate to the four best-attack spots in the circle. Other killer whales can join the four inside by following the same route they took to get there. The direction of movement can be determined by the orientation of randomly selected neighbouring killer whales if the killer whales wish to return to the cage after feeding to trade with other killer whales. Following this technique, the killer whale’s speed and whereabouts when it launches its attack.

where \({v}_{at,i,H}^{iter}\) represents the velocity measurement of the i-th killer whale in its hunt for prey at iteration i and \({v}_{at,i,R}^{it}\) displays the velocity dimension of the i-th killer whale in its return to its cage at iteration it: posit 1, posit 2, posit 3, posit 4. If \({U}_{1}\) is a random number between 0 and 2, \({U}_{2}\) is a random number between 2.5 and 2.5, and posit \(at,i\) is the position of the i-th killer whale after the attacking phase of iteration it.

Based on the initial position and speed of the prey, the original OP adjusts its allocation toward it during the pursuit phase, as illustrated in Eq. (18). However, the OPA entities in the pursuit phase were still vulnerable to local optimal inactivity in some conditions. It means that OP’s issues with immature convergence are still present. Additionally, there are cases where the initial OPA is unable to move from the diversification to the intensification phases successfully. Lévy flight (LF)30 is a strategy that can be used in light of this fact to deal with these difficulties. Using the LF technique, the OP may conduct more in-depth searches and more quickly locate the desired results. This tactic can be used to ensure that Boosted OP (BKWOP) effectively manages global search queries. The quality of the gained solutions in BKWOP is enhanced, and the problem of stagnation can be solved as well. The LF strategy is implemented during the pursuit phase of the OP by being incorporated into the killer whales’ location update equation of the prey forcing behaviour (i.e., Eq. (18)) to increase the killer whales’ likelihood of arriving at the prey’s chosen position. In BKWOP, the following adjustments are made to the specialized formula:

where \(\alpha\) is a random number for all orac scales and corresponds to the step length related to the topic of concern. Additionally, the sign of the indicates the direction of movement: positive for rightward and negative for leftward motion31. Therefore, two criteria must be supplied to carry out LFs or generate random walks: the step lengths of the walk that are subject to the selected Lévy distribution (LD)32, which was indicated by the values of, and the directions of the measure that affects the trip towards the destination, i.e., \(\alpha\). These conditions can be determined most quickly and easily by using the well-known Mantegna procedure for a symmetrical and stable LD32.

The symbol ⊕ indicates multiplications in order of entries. The following is a condensed version of the discussion found in33 regarding a non-trivial strategy for generating a step length sl sample:

where u and v are drawn from a normal distribution. That is

With

Forcing behaviour during the chasing phase is determined by adding the value s with the D-scale obtained from Eq. (26) to the most recent known location of killer whales, \(po{s}_{ch,i}\), where \(\Gamma\) is a typical Gamma function. When it is varied, the distribution \(\beta\) shifts, with longer skips for lesser values and quicker skips for larger ones. Figure 3 shows a flowchart of the proposed BKWOP algorithm for greater context. The BKWOP algorithm’s steps from flowchart are explained below.

Step 1: Initiate the population p of the algorithm using the given values of N, D, lb, and ub. Similarly, set the values for \({iter}_{max}\), × 1, × 2, UD, and h.

Step 2: It is to determine the best as \({p}_{o}\) fitness function to use when evaluating the killer whale’s troop members.

Step 3: using Eqs. (15)–(18) and (19)–(20), the chasing phase was applied in conjunction with the LF technique to individuals in the killer whale’s troop. In this stage, killer whales use the selection parameter \({x}_{1}\) to decide whether to push or encircle their target. Once the prey is found, they use sonar to adjust their positions with it.

Step 4: using Eqs. (21–23), apply the attacking phase to individual killer whale troop members. During this stage, the killer whales initiate an attack on the prey and use sonar to alter their positions; specifically, some of the killer whales will outperform the reef, and their positions will be traded with the pound.

Step 5: The stage involves creating a new populace.

Step 6: The loop is broken at this step. If the current iteration is greater than the \({iter}_{max}\), then it is fulfilled. The process will begin again in the second step if the Optimal Output Solution cannot be determined.

Table 2 presents a detailed hyperparameter space used for the OTCNLSTM model architecture and optimization via BKWOP. The following pseudocode provides the complete end-to-end flow of the OTCNLSTM model developed, optimized using the BKWOP strategy. Data preprocessing is done first. Next, a hierarchical CLSTM-based architecture was developed to extract local as well as global features of sentimentalism. The BKWOP algorithm searches intelligently in the hyperparameter space through behaviour-inspired exploration and Lévy flight strategies. The model is then trained with the optimal parameters and tested on test data, followed by fivefold cross-validation to verify its robustness. This clearly defined pathway facilitates fast training and efficient sentiment classification.

Algorithm: OTCNLSTM with BKWOP.

Results and discussion

Here, the proposed method for emotion recognition on Twitter works by applying it through its paces on real-world data and measuring its performance against other SA methods like BERT-CNN17, LSTM14, and CNN16. The model was created on Python 3.7.9 and Keras, employing TensorFlow for the backend engine. The computing environment comprised a Core i7 CPU, 12 GB RAM, and a 64-bit Windows 10 OS. The OTCNLSTM SA method provided here is tested on publicly available Twitter data from the Kaggle benchmark. Separate sections explain the investigational outcomes of each dataset, including comparisons and discussion with current methodologies. Overall prediction accuracy is shown in percentage form in Table 3, together with the F1-score matrices that demonstrate the robustness of the model. Figure 4 depicts the results of the proposed model’s performance.

Table 4 shows how the proposed OTCNLSTM system stacks up against some of the most popular baseline approaches, including BERT-CNN, LSTM, and CNN. According to the most up-to-date research, 84.5% of studies utilizing the BERT-CNN approach indicated good accuracy, while the most recent result using the 1D-CNN approach was just 78.14%14. Since the current models relied on features with low-level, and the proposed model hierarchically learned higher-level discriminative features of spatiotemporal, the proposed OTCNLSTM system improved accuracy by 5.7% compared to the recent DL models and by exceeding 20% compared to the 1D base model14. For real-time applications, the high identification rates of 78.14% in 2021 and 84.5% in 2022 utilizing the Kaggle Twitter dataset are still too low. However, OTCNLSTM prioritized precision over speed, and it was the first to use optimum convolutional LSTM and the GRUs to extract highly selective features from the original Twitter data and apply them for recognition. Recent advances in DL have led some to declare the OTCNLSTM system a success and the best option for problem monitoring in the real world.

For a given number of emotion classes on Twitter, Fig. 5 provides a comparison of the accuracy performance of the proposed OTCNLSTM to that of existing SA methods like BERT-CNN, LSTM, and CNN. The OTCNLSTM achieves higher precision with less computational overhead. The OTCNLSTM outperforms the state-of-the-art algorithms BERT-CNN, LSTM, and CNN with its 90.4% accuracy due to its optimized local minima with BKWOP and efficient feature extraction blocks like LFTB and GFTB. The suggested approach accurately identified emotions in raw Twitter data and classified them as such. Using a 1D network to analyze a user’s tweets and deduce their emotional state has proven this approach to be superior for usage in commercial settings.

Figure 6 displays a comparison of the proposed OTCNLSTM precision to other SA systems, such as BERT-CNN, LSTM, and CNN, in terms of accuracy for a certain number of emotion classes using a specified Twitter dataset. Using a large dataset improves OTCNLSTM’s precision. The OTCNLSTM outperforms the current BERT-CNN, LSTM, and CNN in terms of precision, achieving a remarkable 89.9%. The suggested OTCNLSTM achieved high precision compared to alternative methods by its efficient preprocessing and feature blocks. The precision numerical evaluation results of the proposed and existing SA schemes are shown in Table 5.

Figure 7 displays a comparison of the proposed OTCNLSTM to other SA systems, such as BERT-CNN, LSTM, and CNN, for a given number of emotion classes in a Twitter dataset, as measured by F1-score performance. The OTCNLSTM F1-score improves with a large Twitter sample. In comparison to baseline algorithms like BERT-CNN, LSTM, and CNN, OTCNLSTM’s hyperparameters provide a much higher quality improvement at a lower computational cost, leading to a high F1-score of 90.4%. The F1-score numerical evaluation results of the proposed and existing SA schemes are shown in Table 6.

Figure 8 compares the proposed OTCNLSTM specificity to existing SA techniques like BERT-CNN, LSTM, and CNN about the number of emotion classes in a standard Twitter dataset. Using a large Twitter dataset improves OTCNLSTM’s specificity. When compared to other algorithms, the OTCNLSTM achieves a far higher specificity of 90.2%. The high specificity achieved by the suggested method is a direct result of the efficient blocks and optimal hyperparameters of OTCNLSTM rather than any other single design factor. Existing techniques are underfitting due to their reliance on simplistic models that perform less when applied to high-dimensional data. The specificity numerical evaluation results of the proposed and existing SA schemes are shown in Table 7.

The number of emotion classes in a specific Twitter dataset is displayed in Fig. 9, along with a comparison of the proposed OTCNLSTM to existing SA schemes like as BERT-CNN, LSTM, and CNN. With a large Twitter dataset, the OTCNLSTM becomes more sensitive. By comparing itself to other algorithms, the OTCNLSTM can achieve a high sensitivity of 90%. Since different BKWOPs have varying capacities for improving sensitivities, the proposed OTCNLSTM’s ability to optimize independently for each layer is an important feature for maximizing efficiency while minimizing costs. The sensitivity numerical evaluation results of the proposed and existing SA schemes are shown in Table 8.

The results of the fivefold cross-validation shown in Table 9 prove the proposed OTCNLSTM model to be dependable and consistent in its applications across numerous performance metrics. The model was able to keep the accuracy very high and consistent with an average of 90.36% across the folds, which accounts for generalization capabilities. In the same way, the precision (89.7%), recall or sensitivity (90.18%), F1-score (90.1%), and specificity (90.06%) show an impressive ability to classify correct happy and sad sentiments in tweets. The near-equal value of the above metrics among all folds proves that the model does well in balancing the detection of positive and negative classes against data variance, proving the model’s suitability in a genuine sentiment analysis task. Figure 10 depicts the graphical chart of the proposed OTCNLSTM model in a five-fold cross-validation performance analysis.

The ablation study in Table 10 presents the impact of the BKWOP model, which substantially enhanced the performance of the OTCNLSTM model in contrast to classical search-based optimization techniques, such as Grid Search and Random Search. BKWOP scores the highest, yielding promising and satisfactory results for all metrics, including accuracy (90.4%), precision (89.7%), recall (90.2%), F1-score (90.1%), and specificity (90.1%)—this is a great margin ahead of Grid Search and Random Search. The reason behind such performance of BKWOP in hyperparameter space exploration and improved model tuning for optimal learning is that it directly aids the increased classification performance and model stability of the proposed framework. Figure 11 depicts the graphical chart of the ablation study comparison for the proposed BKWOP model with Grid Search and Random Search.

This research holds many advantages, where OTCNLSTM is an innovative hierarchical architecture that obtains local emotional patterns from convolutional tiers and global emotional patterns from sequential tiers in tweets. Efficient hyperparameter tuning through the use of the BKWOP strategy aids classification results, ensuring improved accuracy and precision regarding robustness among sentiment categories. The model is highly generalizable and outperforms existing methods (CNN, LSTM, and BERT-CNN) in a setting of actual Twitter data. However, in the process, there have been some limitations, such as being computationally expensive for multi-stage training, the possibility of overfitting with small data, and the extensive hyperparameter search. Moreover, the model’s performance can be affected when tested on a different dataset; hence, there is a strong need for further evaluation and generalization on the multilingual or multi-domain sentiment analysis task.

Conclusion

This research presented an optimized deep learning framework for sentiment analysis by introducing a novel hierarchical architecture named OTCNLSTM, combined with the BKWOP strategy for efficient hyperparameter optimization. The model was designed to classify tweets into binary emotion categories—happy and sad—by hierarchically extracting local semantic features through multiple convolutional LSTM blocks and capturing temporal dependencies using stacked GRUs. The integration of BKWOP enabled a more effective search of the hyperparameter space, significantly improving model performance and stability over traditional tuning methods such as grid and random search. The proposed model was evaluated using the Kaggle Twitter dataset and achieved a recognition accuracy of 90.4%, outperforming competitive models like CNN, LSTM, and BERT-CNN. Additional experiments, including fivefold cross-validation and an ablation study, confirmed the model’s robustness and the impact of BKWOP optimization. Visualization techniques such as confusion matrices and performance comparison charts further supported interpretability. This work contributes a scalable and adaptable solution for emotion recognition in social media, with practical implications for real-time opinion mining, policy analysis, and user behaviour modelling. In future, the work aim to explore multi-modal sentiment analysis and extend the model to multilingual and domain-specific applications for broader generalization and societal relevance. Additionally, a multi-model Twitter SA system can be developed and employed with the CLSTM layer, incorporating innovative architectural designs or structures.

Data availability

The data that support the findings of this study are available within the article.

References

Pouli, V., Kafetzoglou, S., Tsiropoulou, E. E., Dimitriou, A., & Papavassiliou, S. Personalized multimedia content retrieval through relevance feedback techniques for enhanced user experience. In 2015 13th International Conference on Telecommunications (ConTEL) (pp. 1–8). IEEE (2015).

Kalchbrenner, G. E., & Blunsom, P. A convolution neural network for modeling sentence. arXiv preprints, arXiv:1404.2188 (2014).

Mikolov, T., Joulin, A., Chopra, S., Mathieu, M., & Ranzato, M. A. Learning longer memory in recurrent neural network. arXiv preprint, arXiv:1412.7753 (2014).

Kim, Y. Comparative study of CNN and RNN for natural language processing. arXiv preprint arXiv:1408.5882 (2014).

Uthirapathy, S. E. & Sandanam, D. Topic modelling and opinion analysis on climate change twitter data using LDA and BERT model. Proc. Comput. Sci. 218, 908–917 (2023).

Sharaf Al-deen, H. S., Zeng, Z., Al-sabri, R. & Hekmat, A. An improved model for analyzing textual sentiment based on a deep neural network using a multi-head attention mechanism. Appl. Syst. Innov. 4(4), 85 (2021).

Basiri, M. E., Nemati, S., Abdar, M., Cambria, E. & Acharrya, U. R. ABCDM: An attention-based bidirectional CNN-RNN deep model for sentiment analysis. Future Gen. Comput. Syst. 115, 279–294 (2021).

Yasin, K., & Arsalankaya, S. Sentiments analysis of shared tweet on global warming on twitters with data mining method: A case study on Turkish languages. In Computational Intelligences and Neurosciences (Vol. 2020, pp. 1–9) (2020).

Yin, H., et al. Sentiments analysis and topics modelling for COVID-19 vaccines discussion World Wide Web, 25, Springer Sciences and Business Media LLC, pp. 1067–1083 (2022).

El Barachi, M., et al. A novel sentiments analysis framework for monitoring the evolving public opinions in real-time: Case study on climate changes. In Journal of Cleaner Productions (Vol. 312, p. 127820). Elsevier BV (2021).

Rodrigues, Anisha P., et al. Real-time twitter spam detection and sentiment analysis using machine learning and deep learning techniques. In M. Ahmad (Ed.), Computational Intelligence and Neuroscience pp. 1–14 (2022).

Sivakumar, M., & Srinivasulu, R. U. Aspects-based sentiments analysis of mobile phones review using LSTM and fuzzy logics. In International Journal of Data Sciences and Analytics (Vol. 12, Issue 4, pp. 355–367). Springer Sciences and Business Media LLC (2021).

Naramula, V., & Kalaivania, A. Sentiment analysis in aspect term extraction for mobile phone tweets using machine learning techniques. In International Journal of Pervasive Computing and Communications. Emerald (2021).

Gandhi, U. D., et al. Sentiment analysis on twitter data by using convolution neural networks (CNN) and long short-term memory (LSTM). In Wireless Personal Communication. Springer Science and Business Media LLC (2021).

Huang, P., et al. Text sentiment analysis based on Bert and Convolutional Neural Networks. In 2021 5th International Conference on Natural Language Processing and Information Retrieval (NLPIR) (2021).

Jain, P. K., et al. Employing BERT-DCNN with sentic knowledge base for social media sentiments analysis. In Journal of Ambient Intelligences and Humanized Computing. Springer Sciences and Business Media LLC (2022).

Özçift, A., Akarsu, K., Yumuk, F., & Söylemez, C. Advancing natural language processing (NLP) applications of morphologically rich languages with bidirectional encoder representations from transformers (BERT): an empirical case study for Turkish. In Automatika (Vol. 62, Issue 2, pp. 226–238). Informa UK Limited (2021).

Pang, G., Lui, K., Zha, X., He, J., Mo, Z., Pang, Z., & Pu, B. Aspects-Level Sentiments Analysis Approach via BERT and Aspects Features Locations Models. In Z. Duan (Ed.), Wireless Communication and Mobile Computing (Vol. 2021, pp. 1–13). Hindawi Limited (2021).

Manju, V., & Gupta, D. An enhanced guided LDA model augmented with BERT based semantics strength for aspects terms extractions in sentiments analysis. In Knowledge-Based Systems (Vol. 246, p. 108668). Elsevier BV (2022).

Yousaf, A. et al. Emotion recognition by textual tweets classification using voting classifier (LR-SGD). IEEE Access 9, 6286–6295 (2020).

Bansal, D., Groover, R., Saini, N. & Saha, S. GenSumm: a joints framework for multi-tasks tweets classifications and summarizations using sentiments analysis and generative modeling. IEEE Trans. Affect. Comput. 15(4), 1838–1855 (2021).

Dangi, D., Dixit, D. K. & Bhagat, A. Sentiment analysis of COVID-19 social media data through machine learning. Multimedia Tools Appl. 81(29), 42261–42283 (2022).

Dangi, D., Bhagat, A. & Dixit, D. K. Sentiment analysis of social media data based on chaotic coyote optimization algorithm-based time weight-AdaBoost support vector machine approach. Concurr. Comput. Pract. Exp. 34(3), e6581 (2022).

Dangi, D., Dixit, D. K., Bhagat, A., Nair, R., & Varma, N. Analyzing the sentiment by classifying the tweet based on COVID-19 using machine learning classifiers. In 2021 IEEE International conferences on technology, research, and innovations for betterment of society (TRIBES) (pp. 1–6). IEEE (2021).

Dangi, D., Bhagat, A. & Gupta, J. K. Review on sentiment analysis of movie reviews using machine learning techniques based on data available on Twitter. Int. J. Eng. Syst. Model. Simul. 15(5), 253–259 (2024).

Mikolov, T., Joulin, A., Chopra, S., Mathieu, M., & Ranzato, M. A. Learning longer memory in recurrent neural networks. arXiv preprint arXiv:1412.7753 (2014).

Zapata-Impata, B. S., Gil, P. & Torres, F. Learning spatio-temporal tactile features with a ConvLSTM for the direction of slip detection. Sensors 19(3), 523 (2019).

Song, D., Chan, H., Jang, G., & Qin, Y. Dual stages attentions-based recurrent neural networks for time series predictions. U.S. Patent 10,929,674 (2021).

Chen, M., He, X., Yang, J. & Zhang, H. 3-D convolutional recurrent neural networks with attention model for speech emotion recognition. IEEE Signal Process. Lett. 25(10), 1440–1444 (2018).

Ali Adnan, A.-T., Spencer, J.W., Ralph, J. F. Levy flight for improved ladar scanning. In 2010 IEEE International Conferences on Imaging System and Technique, pp. 225–228. IEEE (2010).

Probert, M. Engineering Optimization: An Introduction with Metaheuristics Application, by Xin-She Yang: Scopes: references. Levels: undergraduate, postgraduate, early career researchers, researchers, specialists, scientists, engineers (2012).

Houssein, E. H., Saad, M. R., Hashem, F. A., Shabin, H. & Hasaballah, M. Lévy flights distributions: a new metaheuristics algorithm for solving engineering optimizations problem. Eng. Appl. Artif. Intell. 94, 103731 (2020).

Wang, Z., Chen, Y., Ding, S., Liang, D. & He, H. A novel particle swarm optimization algorithm with Lévy flight and orthogonal learning. Swarm Evol. Comput. 75, 101207 (2022).

Dharmendra, D. et al. An efficient model for sentiment analysis using artificial rabbits optimized vector functional link network. Expert Syst. Appl. 225, 119849 (2023).

Author information

Authors and Affiliations

Contributions

Writing—Review and Editing, T.N, M.S.R; Methodology, R.T, A.T; Formal analysis, A.M, A.K; Supervision, Q.N.N, S.K, A.Z.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Nithya, T., Ramkumar, M.S., Thavasimuthu, R. et al. Optimized hierarchical CLSTM model for sentiment classification of tweets using boosted killer whale predation strategy. Sci Rep 15, 31845 (2025). https://doi.org/10.1038/s41598-025-16927-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-16927-0