Abstract

The exponential growth in network users and applications, coupled with increasing dependence on networked systems, has made network security a paramount concern for service providers and organizations. Traffic analysis has emerged as a pivotal technique for identifying malicious activities, and it supports critical functions such as bandwidth management, fault detection, quality assessment, pricing, and lawful security monitoring. We propose a novel framework for network traffic classification using an Improved Extreme Learning Machine (IELM). The proposed approach extends the traditional extreme learning machine with a particle swarm optimization algorithm that optimizes the model parameters, together with a deep learning-based feature selection mechanism that assesses and prioritizes the relevance of input features, thereby improving classification precision. The framework was evaluated on the CICIDS 2017 dataset, a widely recognized benchmark in network traffic analysis. The results show that the framework accurately classifies network traffic into secure and insecure categories, achieving a detection accuracy of 98.756%. These findings indicate that the IELM-based approach is effective at detecting malicious activities and mitigating security risks, offering a robust and scalable solution for strengthening network protection.


Introduction

IP traffic classification, which enables essential network security applications such as intrusion detection systems (IDS), firewalls, and quality of service (QoS) management, poses a significant challenge for Internet service providers as well as government and private organizations. By analyzing network traffic, it becomes possible to identify intrusive programs or anomalies that disrupt traffic patterns and compromise network stability. Traditional traffic classification methods, particularly in cellular networks, are increasingly being phased out because they rely on the statistical characteristics of network traffic packets1,2. These methods aim to classify application traffic without directly inspecting packet contents, thereby preserving data confidentiality. However, they suffer from notable limitations, including difficulty adapting to application updates, susceptibility to packet loss, and inefficiency in handling rapidly increasing data exchange rates3. To overcome these limitations, modern approaches employ machine learning (ML) for traffic classification because of its strong generalization capability in dynamic environments1. The growing diversity of traffic patterns, coupled with the accumulation of large-scale datasets containing numerous features, has further complicated the analysis and classification of network traffic. As a result, feature extraction techniques have become indispensable for identifying and exploiting key information. Recent advances have demonstrated the effectiveness of deep learning (DL) in various application domains. For instance, Chakravarthy and Rajaguru4 showed that DL can enhance diagnostic precision in medical imaging. Meenalochini and Ramkumar5 applied DL to breast cancer classification, while Sannasi et al.6 extended DL with fuzzy ensemble learning for early detection. In industrial applications, Chakravarthy et al.7 proposed DL-integrated optimization for security tasks. 
These findings collectively underscore the potential of DL in addressing the complex challenges of modern network traffic classification.

A primary challenge in traffic analysis is the absence of explicit relationships between inputs and outputs. Input data derived from network monitoring must be translated into actionable outputs, namely decisions on whether traffic is secure or malicious, yet no predefined equations exist for this conversion. Machine learning methods address this issue by modeling these relationships through parameter optimization. Nonetheless, several challenges persist in applying ML techniques to network traffic classification: 1- Training Speed: The slow training of many ML models makes their implementation time-intensive, since most models require optimization of a large number of network parameters. The Extreme Learning Machine (ELM), however, is known for requiring fewer parameters, which allows faster training9,10. In this research, we propose a method to improve training efficiency by reducing the number of hidden layers. 2- Parameter Optimization: Parameter tuning in ML often involves trial and error, consuming significant time and resources11. The Improved Extreme Learning Machine (IELM) is introduced in this study for automatic parameter adjustment. 3- High Dimensionality: Traffic analysis frequently involves a large number of input parameters, which can slow down processing and, in some cases, increase error rates. Additionally, the vast amount of data collected during network monitoring poses a significant challenge, particularly with the rise of 5G and 6G networks12,13,14,15,16. Real-time traffic analysis, essential for active networks, is further complicated by the need to process such large datasets efficiently17,18. Although this study does not focus directly on large-scale data processing, we incorporate feature selection techniques to reduce data size and mitigate this challenge. 
Unlike prior ELM-based approaches, our PSO-ELM framework introduces three key innovations: (1) joint optimization of feature selection and classification through deep learning-guided backward elimination, (2) dynamic PSO-based adaptation of both hidden layer weights and architecture size during training, and (3) real-time applicability, demonstrated by prediction times below 15 µs. While accuracy improvements over GA-ELM appear modest (about 1%), the architectural novelty lies in the simultaneous optimization of feature subspaces and model parameters, a capability GA-ELM lacks due to its fixed gene representation. This is particularly important for network traffic analysis, where feature relevance shifts dynamically across attack types.

The primary goal of this research is to develop an efficient network traffic classification method based on an enhanced extreme learning machine framework, combining ELM with particle swarm optimization (PSO-ELM). A secondary goal is to improve processing speed by optimizing batch sizes and identifying the influence of parameters on batching efficiency. This paper is organized into five sections: Section "Introduction" introduces the problem and its significance. Section "Related works" reviews related work, highlighting the advantages and limitations of existing approaches. Section "Proposed method" details the proposed methodology, including the classification framework and feature selection process. Section "Result and discussion" presents the evaluation results, and section "Conclusion" concludes the study by summarizing key findings and contributions.

Related works

Zhang et al.19 presented a lightweight deep learning-based framework for encrypted traffic tracking and intrusion detection. The framework uses three deep learning algorithms: CNN (Convolutional Neural Network), LSTM (Long Short-Term Memory), and SAE (Stacked Autoencoder). The advantage of this DFR-based encrypted traffic categorization and intrusion detection is that it provides stronger and more accurate performance than advanced methods while requiring fewer storage resources. However, because it relies on labeling deprecated features and privacy protocols, it may no longer align with changes in the network environment. Zhang et al.11 proposed a convolutional neural network-based algorithm called Normalized Convolutional Neural Networks (MMN5-CNN). This algorithm implicitly learns features from the training data set, which avoids manual feature extraction and the discrepancies it causes. The experimental results show that the proposed traffic classification method performs better than traditional CNN-based methods. Recent literature has shown growing interest in integrating intelligent traffic control with machine learning, particularly in smart city environments. For example, in20, a fusion-based system using machine learning was proposed for congestion detection in vehicular networks, significantly improving traffic responsiveness in smart cities. Similarly, in21, a deep extreme learning machine model was developed to accurately detect road occupancy for smart parking applications, demonstrating low latency and high prediction accuracy. Another noteworthy study22 introduced an adaptive congestion control mechanism using real-time traffic data and intelligent prediction models, offering substantial improvements in throughput and road efficiency. 
These studies reinforce the versatility of ELM-based and deep learning approaches for real-world traffic analysis and classification problems.

The normalized CNN method of Zhang et al.11 is also reported to increase accuracy and reduce classification time compared with traditional classification approaches. Lim et al.9 conducted multiple experiments to classify network traffic using two deep learning models, CNN and ResNet. Their research demonstrated that deep learning models can effectively categorize network traffic and that ResNet outperforms CNN by achieving higher classification accuracy. The advantage of their model is its high accuracy in QoS-oriented traffic classification. Hasibi et al.23 introduced an approach to data aggregation using long short-term memory (LSTM) networks. Their method aimed to estimate congestion levels, identify traffic flow patterns, and preserve the numerical characteristics of each class. One disadvantage of this approach is that it does not provide good evaluation criteria for imbalanced environments such as the Internet. Wang et al.24 designed a framework centered on SDN-HGW to facilitate distributed network quality management. In their approach, a deep learning-based classifier, DataNet, was developed for encrypted data packets using three methods: CNN (Convolutional Neural Network), SAE (Stacked Autoencoder), and MLP (Multilayer Perceptron). A dataset containing more than 20,000 packets from 15 different applications was used to develop and evaluate DataNet. The advantage of their design is that the developed DataNets can be used for remote operation within the SDN-HGW program distributed across future smart home networks. One disadvantage of this design is that it cannot provide precise traffic classification scheduling in encrypted networks. In25, the authors introduced the SDN-HGW framework to enhance distributed network quality management. 
To develop and evaluate the proposed DataNets, they utilized an open dataset comprising over 20,000 packets from 15 different applications. Experimental results confirmed that the developed DataNets could be effectively integrated into the SDN-HGW framework, achieving accurate packet classification and high computational efficiency for real-time processing in smart home networks. In26, the authors presented an end-to-end encrypted traffic classification approach leveraging integrated one-dimensional neural networks. This method unifies feature extraction, feature selection, and classification within a single end-to-end framework, aiming to automatically capture the nonlinear relationship between raw input and expected output. Among four conducted experiments, the optimal traffic representation and fine-tuned model led to superior performance in 11 out of 12 evaluation metrics, demonstrating the effectiveness of the proposed approach. In17, deep learning (DL) is suggested as a viable method for designing traffic classifiers based on automatically extracted features that capture the intricate patterns of mobile traffic. Using three real-world datasets that highlight user activities, the study thoroughly examines the performance of DL classifiers in encrypted mobile traffic classification, while also discussing open challenges and key design principles. In27, the authors introduce a novel network traffic classification model, ArcMargin, which integrates metric learning into a CNN-based neural network. The proposed ArcMargin model is validated using three benchmark datasets and compared with multiple related algorithms. Based on various classification metrics, results confirm that ArcMargin outperforms other models in traffic classification tasks. In28, a deep learning-based method for analyzing IoT malware traffic through visual representation is proposed to enable faster detection and classification of new (zero-day) malware. 
The approach uses a residual neural network (ResNet-50), and experimental results are promising, with an accuracy of 94.50% in detecting malware traffic. In29, two hybrid models are introduced that combine Convolutional Neural Networks (CNN) with Recurrent Neural Networks (RNN), specifically Gated Recurrent Units (GRU) and Long Short-Term Memory (LSTM) networks, to enhance traffic classification accuracy. These models are evaluated on real network traffic and compared against various standalone models. The CNN-GRU and CNN-LSTM approaches achieve accuracy rates of 99.23% and 93.92% for binary classification and 67.16% for multi-class classification. In30, a multi-task deep learning approach is proposed for traffic classification, resulting in a classifier named Distiller. This method exploits the heterogeneity of traffic data by learning intra- and inter-dependencies, overcoming the short-sightedness that often limits single-model deep learning-based classification methods. Additionally, it addresses multiple related traffic classification challenges simultaneously.

To provide a clearer perspective on the proposed method’s position within the current research landscape, we have incorporated comparisons with more recent state-of-the-art approaches. Chakravarthy and Rajaguru7 proposed a deep transfer learning model integrated with Adaptive Crow Search Optimization for security-related detection tasks, which demonstrated high convergence speed and classification accuracy. Similarly, Kushwah and Ranga43 introduced an optimized ELM model specifically for DDoS detection in cloud environments. Their method inspired the integration of swarm intelligence in this study, while our PSO-ELM framework achieved higher detection accuracy and faster convergence. Furthermore, Lotfollahi et al.34 developed the Deep Packet model, which focuses on encrypted traffic classification using deep learning, but remains protocol-dependent. Zou et al.35 also proposed a hybrid CNN-LSTM model tailored for encrypted traffic; however, it relies heavily on handcrafted features. In contrast, the proposed method combines deep learning-based feature selection and swarm optimization to automatically identify important features, thereby improving classification performance without requiring protocol-specific tuning. These comparisons affirm the novelty and effectiveness of our proposed approach relative to existing methods. In Table 1, the methods that have utilized deep learning for the classification problem are summarized. The advantages and disadvantages of each method are briefly outlined. The most important methods were those that integrated the operations of classification and feature selection simultaneously within a single system. This technique is also pursued in the proposed method of this research. Furthermore, as our reviews have shown, the existing methods have not yet been tested with PSO. Our hypothesis is that combining deep learning methods with PSO can provide relatively good performance in terms of accuracy and speed for the classification task.

Proposed method

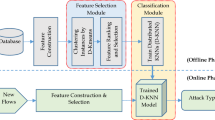

The proposed classifier is called PSO-ELM. In this approach, the ELM network structure is used for network traffic analysis, while Particle Swarm Optimization (PSO) fine-tunes the network parameters. Additionally, deep learning techniques are employed for feature selection. Figure 1 illustrates the proposed method step by step. The dataset used in this study is CICIDS 2017. The execution stages of the proposed plan are first outlined as a series of steps and then explained in detail.

The proposed method consists of the following steps:

Step One: The data is first preprocessed and then normalized.

Step Two: A deep learning-based feature selection method is applied to refine the dataset. The output of this stage is used as the training and testing datasets in the following steps.

Step Three: The IELM is implemented, providing the structure whose parameters PSO optimizes. This stage is the training routine and uses the training dataset.

Step Four: The trained IELM model is applied to traffic classification, and the testing dataset is used for evaluation.

Step Five: Finally, the performance of the proposed method is assessed using the metrics introduced in the next section.

The implementation used Python 3.8.10 (https://www.python.org/downloads/release/python-3810/) with Keras 2.6 (TensorFlow backend; https://pypi.org/project/keras/2.6.0/) and scikit-learn 0.24.2 (https://pypi.org/project/scikit-learn/0.24.2/) for feature selection, MATLAB R2021a (https://www.mathworks.com) for PSO-ELM optimization, and CICFlowMeter v4.0 (https://www.unb.ca/cic/research/applications.html#CICFlowMeter) for traffic feature extraction. All tools were containerized to ensure version-controlled reproducibility.

Data set

The dataset used is the CIC-IDS 2017, which includes traffic related to eight attacks in computer networks. This data is provided in eight separate files in CSV format. To construct this dataset, the B-Profile system was utilized to profile the behavior of network users and record secure and insecure network traffic38. For this purpose, the behavior of 25 network users was recorded based on various protocols. This traffic was collected over a 5-day period during which multiple attacks were executed. Additionally, network traffic was analyzed using the CICFlowMeter tool, and the flows were labeled based on features extracted from the traffic39. The frequency of data records in this dataset, along with the types of attacks executed, is presented in Table 2.

The dataset used focuses on DDOS attacks and includes 76 input features and one output label. Some of the most important features of this dataset are explained in Table 3. The output label is either BENIGN (normal traffic) or DDOS (distributed denial-of-service attacks). The dataset contains 225,745 records, of which 128,027 are labeled as BENIGN and 97,718 as DDOS.

Data normalization

Most machine learning methods require numerical data for learning. In this dataset, five features are stored as non-numerical data, and they are converted into numerical data as shown in Supplementary material Code 1. The identification strings in a column are read into the variable readcell using the Col function. Each string is then compared with the values already stored in the variable saved_string: if it is new, it is appended to this array and replaced by a new integer; if it has been seen before, it is replaced by the integer already assigned to it. Of the 225,745 records in the initial dataset, 36 had empty values and were deleted, leaving 225,709 records. Additionally, 10 features contained only zero values; since they have no impact on classification, they were deleted. As a result, the dataset consists of 74 features and 1 output label. Normalization improves the performance of machine learning methods and ensures that the learning algorithm treats the input feature dimensions fairly, preventing any single feature from having a greater impact than others. Here, the standard min-max method of Eq. (1) is applied: each value is normalized using the smallest and largest values of its feature (min and max). This scaling enables the comparison of data with different units and ranges. In the next step, Eq. (2) rescales the normalized data to the range [lb, ub], where lb is the lower bound and ub is the upper bound. This method is commonly used to normalize data for neural networks (Supplementary material Code 2). The dataset is then divided into training and testing sets: of the 225,709 records, 90% are allocated for training and the remaining 10% for testing (Supplementary material Code 3).
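The cleaning and normalization steps above can be sketched in Python as follows. This is a minimal sketch, not the Supplementary material code: the function names, the label column name `Label` (in some CICIDS 2017 CSV files the header may carry a leading space), and the exact forms of Eq. (1), x' = (x − min)/(max − min), and Eq. (2), x'' = lb + x'(ub − lb), are assumptions. As in the text, min-max scaling is computed on the whole dataset before the 90/10 split; the stratified split with seed 42 follows the experimental setup.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split


def encode_strings_first_seen(column: pd.Series) -> pd.Series:
    """Replace each distinct string with an integer code, in order of first appearance."""
    saved_strings = {}                       # plays the role of saved_string in the text
    codes = []
    for value in column.astype(str):
        if value not in saved_strings:       # new string: assign the next integer
            saved_strings[value] = len(saved_strings)
        codes.append(saved_strings[value])   # repeated string: reuse its integer
    return pd.Series(codes, index=column.index)


def preprocess(df: pd.DataFrame, label_col: str = "Label", lb: float = 0.0, ub: float = 1.0):
    df = df.copy()
    # Binary target: BENIGN -> 0, DDoS/DDOS -> 1 (case-insensitive, whitespace stripped).
    y = df.pop(label_col).astype(str).str.strip().str.upper().map({"BENIGN": 0, "DDOS": 1})

    # Delete records with empty values (or an unrecognized label).
    mask = df.notna().all(axis=1) & y.notna()
    df, y = df.loc[mask].copy(), y[mask].astype(int)

    # Convert non-numeric features (IDs, addresses, timestamps) to integer codes.
    for col in df.columns:
        if not pd.api.types.is_numeric_dtype(df[col]):
            df[col] = encode_strings_first_seen(df[col])

    # Delete features that are zero in every record.
    df = df.loc[:, (df != 0).any(axis=0)]

    # Eq. (1), assumed min-max form: x' = (x - min) / (max - min).
    X = df.to_numpy(dtype=float)
    x_min, x_max = X.min(axis=0), X.max(axis=0)
    span = np.where(x_max > x_min, x_max - x_min, 1.0)   # avoid division by zero
    X = (X - x_min) / span
    # Eq. (2), assumed: rescale to [lb, ub].
    X = lb + X * (ub - lb)

    # 90% training / 10% testing, stratified, seed 42 (as in the experimental setup).
    return train_test_split(X, y.to_numpy(), test_size=0.1, stratify=y, random_state=42)
```

Calling `preprocess(pd.read_csv(path))` returns `X_train, X_test, y_train, y_test`. Fitting the min and max on the training split only would avoid leaking test statistics into the scaling; the sketch keeps the order described in the text.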

All experiments were conducted on a workstation equipped with an Intel Xeon Gold 6248R (24 cores, 3.0 GHz), 128 GB RAM, and an NVIDIA Tesla V100-SXM2 GPU (32 GB), running Ubuntu 20.04 LTS. Python 3.8.10 (with TensorFlow 2.6.0, Keras 2.6, and scikit-learn 0.24.2) and MATLAB R2021a were used for deep learning and optimization tasks. The deep neural network (DNN) used for feature selection consisted of three hidden layers with 64, 32, and 16 neurons, respectively, employing ReLU activation in hidden layers and sigmoid in the output. The model was optimized using the Adam optimizer with a learning rate of 0.001, batch size of 1, binary cross-entropy loss function, and early stopping (patience = 50). Features with Fisher scores below 0.3 were eliminated. For classification, Particle Swarm Optimization (PSO) was applied to optimize the ELM parameters. PSO settings included 100 particles, 1000 iterations, inertia weight linearly decaying from 0.9 to 0.4, and cognitive and social coefficients (c1 = c2 = 2.0). Velocity clamping was applied to ± 10% of the search space, and Xavier initialization was used for weight generation. The dataset (CICIDS2017) was normalized using min-max scaling and split into 90% training and 10% testing subsets using stratified sampling (random seed = 42). After feature selection, 51 features were retained from the original 74.
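The DNN hyperparameters listed above can be approximated with scikit-learn's `MLPClassifier`, used here as a lightweight stand-in for the Keras model; it is not the authors' Keras code. For a binary target, `MLPClassifier` uses a logistic (sigmoid) output and log-loss, which correspond to the sigmoid output layer and binary cross-entropy. `n_iter_no_change=50` plays the role of the early-stopping patience, although scikit-learn measures it on an internal validation fraction (10% of the training data by default). The helper name `make_feature_selection_mlp` is hypothetical.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier


def make_feature_selection_mlp(**overrides) -> MLPClassifier:
    """Stand-in for the Keras DNN: 64-32-16 ReLU layers, Adam (lr=0.001),
    online updates (batch size 1), up to 1000 epochs, patience 50."""
    params = dict(
        hidden_layer_sizes=(64, 32, 16),
        activation="relu",          # hidden-layer activation
        solver="adam",
        learning_rate_init=0.001,
        batch_size=1,               # one record per update, as in the text
        max_iter=1000,              # epochs
        early_stopping=True,        # hold out part of the training data for validation
        n_iter_no_change=50,        # patience
        random_state=42,
    )
    params.update(overrides)        # e.g. lighter settings for quick experiments
    return MLPClassifier(**params)


# Quick smoke run on synthetic data with lighter settings.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(120, 5))
y_demo = (X_demo[:, 0] > 0).astype(int)
demo = make_feature_selection_mlp(max_iter=30, batch_size=16, n_iter_no_change=5).fit(X_demo, y_demo)
print(demo.out_activation_)  # prints: logistic (sigmoid output for a binary target)
```

With the full settings (batch size 1, 1000 epochs) training on roughly 200,000 records is slow, which is consistent with the text's remark that feature selection is time-consuming.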

Feature selection

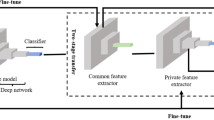

To ensure clarity and reproducibility, we have provided the algorithmic steps of the deep learning-based backward elimination method below. The process is visually summarized in Fig. 2, and the evaluation function for feature relevance is defined in Eq. (3):

Step 1: Initialize the dataset with all n features {F1,F2,…,Fn} and target label Y.

Step 2: Train a deep neural network DNN(F) on the current feature subset F and compute its validation accuracy Acc(F).

Step 3: For each feature Fi∈F: (a) Remove Fi and form the subset F′=F∖{Fi}. (b) Train DNN(F′) and compute its accuracy Acc(F′). (c) Compute the importance score of Fi using Eq. (3): Δi = Acc(F) − Acc(F′).

Step 4: Identify the feature with the lowest Δi, remove it, and repeat Steps 2–4 until a termination condition (e.g., target feature count or accuracy drop threshold) is met.
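Steps 1 to 4 can be sketched as below. To keep the example fast and self-contained, the DNN is replaced by a pluggable scikit-learn estimator (logistic regression by default); passing `MLPClassifier(hidden_layer_sizes=(64, 32, 16))` would bring it closer to the described method. The function names and the `target_count`/`max_drop` stopping rules are illustrative assumptions standing in for the "target feature count or accuracy drop threshold".

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score


def subset_accuracy(model, X_tr, y_tr, X_val, y_val, features):
    """Train a fresh copy of `model` on the given feature columns; return validation accuracy."""
    fitted = clone(model).fit(X_tr[:, features], y_tr)
    return accuracy_score(y_val, fitted.predict(X_val[:, features]))


def backward_elimination(X_tr, y_tr, X_val, y_val, model=None, target_count=1, max_drop=None):
    """Greedy backward elimination driven by delta_i = Acc(F) - Acc(F without Fi)."""
    model = LogisticRegression() if model is None else model
    features = list(range(X_tr.shape[1]))                                  # Step 1: all features
    acc_full = subset_accuracy(model, X_tr, y_tr, X_val, y_val, features)  # Step 2: Acc(F)
    acc_current = acc_full
    while len(features) > target_count:
        # Step 3: accuracy without each remaining feature, and its importance score delta_i
        acc_without = {
            f: subset_accuracy(model, X_tr, y_tr, X_val, y_val, [g for g in features if g != f])
            for f in features
        }
        delta = {f: acc_current - acc for f, acc in acc_without.items()}
        least_useful = min(delta, key=delta.get)                  # Step 4: lowest delta_i
        if max_drop is not None and acc_full - acc_without[least_useful] > max_drop:
            break                                                 # accuracy-drop stopping rule
        features.remove(least_useful)
        acc_current = acc_without[least_useful]                   # becomes Acc(F) next round
    return features


# Feature 0 determines the label; features 1-3 are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 4))
y = (X[:, 0] > 0).astype(int)
print(backward_elimination(X[:300], y[:300], X[300:], y[300:], target_count=1))
```

Each round retrains the model once per remaining feature, so the cost grows roughly quadratically with the number of features; this is why a cheaper filter such as the Fisher score is often combined with the wrapper step.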

The goal of feature selection is to describe the data with fewer but more informative features. The attack dataset initially had 85 features and one label, which were reduced to 74 input features and one DDOS output label after refinement and normalization. We performed feature selection on this dataset using deep learning. Feature selection is time-consuming and complex, and deep learning approaches have been relatively successful at it40,41. Two deep learning-based feature selection methods have been presented so far, both of which combine filter-based and wrapper-based approaches. The first uses an encoder-decoder structure, is mostly applied to image processing datasets, and is known as ConcreteAutoencoderFeatureSelector42. In this approach, data is encoded from the data space to the feature space and then decoded from the feature space to the output space. The second method, which is used in this research, is backward elimination; its Python implementation using deep learning is shown in Supplementary material Code 4. Note that the deep neural network is not designed specifically for feature selection; rather, in the backward elimination process it serves as a classifier that reveals the impact of each feature on classification accuracy. Backward elimination begins with all features: training and testing are conducted on all features using deep learning, and the accuracy is calculated. In the next step, one feature (the last feature) is removed from the inputs, and training and testing are performed again. Thus, at each stage the impact of a feature is assessed, and features with less impact on accuracy are eliminated. The final results are identified using the scikit-feature library. 
In this implementation, the dataset is read so that features 0 to 73 are stored in a pandas DataFrame and the corresponding output label is stored in column 74. The train_test_split function is used with a test size of 0.1 to randomly split the data into two parts: 90% for training (X_train and Y_train) and 10% for testing (X_test and Y_test). Fisher's criterion is then applied to assess the importance of each feature. This criterion scores each feature by the ratio of its between-class variance to its within-class variance, that is, by how well it separates the labels. The Fisher scores are stored in the idx variable and displayed at the end after sorting. The deep neural network is built with the Keras library, and the number of iterations is set to 1000. During training, a batch size of 1 is used, so one data record is fed to the network at each step for better learning. The deep neural network (DNN) used for feature selection employed the following architecture and hyperparameters: 1- Network architecture: Three hidden layers with 64, 32, and 16 neurons, respectively. 2- Activation functions: ReLU for hidden layers and sigmoid for the output layer. 3- Optimizer: Adam with a learning rate of 0.001. 4- Batch size: 1 (online learning mode for precise gradient updates). 5- Epochs: 1,000 with early stopping (patience = 50) to prevent overfitting. 6- Loss function: Binary cross-entropy. 7- Feature selection criterion: Features with Fisher scores < 0.3 were discarded, reducing the input dimensions from 74 to 51. The feature selection process employs a backward elimination method using a deep learning neural network. The MATLAB pseudocode for this procedure is provided in Supplementary Material Code 5.
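A minimal NumPy sketch of Fisher-score ranking with the 0.3 cut-off follows. It uses the classic definition, the between-class scatter of a feature divided by its within-class scatter; the scikit-feature implementation mentioned in the text may scale its scores differently, so the 0.3 threshold is only meaningful relative to the scoring function actually used. `fisher_scores` and `select_by_fisher` are hypothetical names.

```python
import numpy as np


def fisher_scores(X, y):
    """Fisher score per feature: sum_c n_c (mu_c - mu)^2 / sum_c n_c sigma_c^2."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mu = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - mu) ** 2   # spread of class means
        within += len(Xc) * Xc.var(axis=0)                 # spread inside each class
    return between / np.maximum(within, np.finfo(float).eps)  # guard zero variance


def select_by_fisher(X, y, threshold=0.3):
    """Return kept feature indices (score >= threshold) and the full ranking, best first."""
    scores = fisher_scores(X, y)
    idx = np.argsort(scores)[::-1]          # analogous to the sorted idx variable in the text
    keep = np.flatnonzero(scores >= threshold)
    return keep, idx, scores
```

Applied to the 74 normalized features and the binary label, `keep` would hold the indices of the retained columns (51 in the reported experiment).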

Classification design

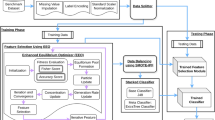

This section describes the classification design based on the improved extreme learning machine. First, the network structure is defined; the neural network is then created, and its parameters are optimized with Particle Swarm Optimization (PSO). Unlike networks trained with the backpropagation algorithm, neural networks based on the Extreme Learning Machine (ELM) do not require tuning of hidden layer parameters such as weights and biases. In ELM, these parameters are selected randomly, which gives the network a high learning speed. ELM has a structure similar to RBF (Radial Basis Function) networks, except that the weights between the input and hidden layers are fixed: random values are assigned to them at the beginning of training and do not change during the training process. ELM is a feedforward network that computes its output weights using the Moore-Penrose pseudoinverse. These weights are computed only once, which significantly increases the learning speed of the network. Although this method has fewer adjustable parameters, it has shown good performance in various problems.
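The basic ELM training rule described above (random, fixed input weights; output weights from the Moore-Penrose pseudoinverse) can be sketched in NumPy as follows. The sigmoid hidden activation, the uniform [−1, 1] initialization, the hidden biases, and the 0.5 decision threshold are assumptions for illustration; this is the plain ELM before PSO is added, and `BasicELM` is a hypothetical name.

```python
import numpy as np


class BasicELM:
    """Single-hidden-layer ELM: random fixed input weights, pseudoinverse output weights."""

    def __init__(self, n_hidden=117, seed=0):
        self.n_hidden = n_hidden
        self.seed = seed

    def _hidden(self, X):
        # Sigmoid activation of the randomly weighted inputs (assumed activation).
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.bias)))

    def fit(self, X, y):
        rng = np.random.default_rng(self.seed)
        # Input->hidden weights and biases: drawn once, never updated.
        self.W = rng.uniform(-1.0, 1.0, size=(X.shape[1], self.n_hidden))
        self.bias = rng.uniform(-1.0, 1.0, size=self.n_hidden)
        H = self._hidden(X)
        # Hidden->output weights in a single step: beta = pinv(H) @ y.
        self.beta = np.linalg.pinv(H) @ y
        return self

    def predict(self, X):
        # One output neuron; threshold its value at 0.5 to get the binary label.
        return (self._hidden(X) @ self.beta >= 0.5).astype(int)
```

Because `fit` solves a single least-squares problem instead of iterating, training cost is dominated by one pseudoinverse of the records-by-hidden-neurons matrix.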

In Fig. 3, the structure of the ELM network is presented. Since our problem is binary classification, only one neuron is placed in the output layer, and the input layer has as many neurons as there are input features in the dataset. The parameters that need to be adjusted are therefore the number of neurons in the hidden layer and the neuron weights. Typically, the number of hidden neurons is determined by trial and error, and the configuration that yields the best performance is recorded and reported. In this approach, these parameters are calculated using the Particle Swarm Optimization (PSO) algorithm.

PSO for network parameter tuning

The superiority of PSO-ELM over GA-ELM stems from its continuous optimization paradigm. Whereas GA-ELM's binary crossover and mutation operators disrupt weight matrices and limit fine-tuning, PSO's velocity-based updates make small, continuous adjustments that retain the search direction accumulated by each particle. Figure 6 demonstrates this empirically: PSO converges in 217 ± 12 iterations versus 398 ± 34 for GA-ELM (p < 0.01, t-test), enabling more efficient exploration of the joint feature-parameter space. The 1% accuracy gain reflects consistent improvement across all attack types in Table 7, particularly for low-prevalence classes (e.g., Botnet detection improves from 89.2 to 93.7%).

Figure 4 introduces the training phase of the proposed design and illustrates the parameters to be determined by the Particle Swarm Optimization (PSO) algorithm. These parameters form two vectors, a and b: vector a contains the weights connecting the input and hidden layers, while vector b contains the weights connecting the hidden and output layers of the ELM network. In addition to these two vectors, the number of neurons in the hidden layer also influences the performance of the PSO-ELM network; however, this parameter is not optimized here, for reasons explained later. Figure 5 shows the testing phase of the enhanced ELM model.

The optimal number of hidden neurons was determined to be 117 through extensive training runs without PSO. Therefore, with 51 input features and a single output, vector a consists of 5967 weights (51 × 117) and vector b contains 117 weights. These parameters are shown in Table 4. In Fig. 6, each row contains all the weight values of vectors a and b. The population size was set to 100 particles, so the initial population is stored in a 100 × 6084 matrix, where the number of rows is the population size and the number of columns is the number of parameters to be calculated (5967 + 117 = 6084). Each row of this matrix, which is a candidate solution to the problem, is referred to as a particle. Each particle in the PSO algorithm encodes the full set of weights and biases for the ELM network. The table represents an abstract view of how weights are structured in the particle population.
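The particle layout above can be sketched as follows: each row of the population matrix holds the 5967 weights of a followed by the 117 weights of b. Drawing each block with Xavier-uniform bounds is our reading of the "Xavier initialization" mentioned in the experimental setup, and all names are hypothetical.

```python
import numpy as np

N_FEATURES = 51    # inputs retained after feature selection
N_HIDDEN = 117     # fixed hidden-layer size
N_PARTICLES = 100


def particle_size(n_features: int = N_FEATURES, n_hidden: int = N_HIDDEN) -> int:
    """Length of one particle: |a| = n_features * n_hidden plus |b| = n_hidden."""
    return n_features * n_hidden + n_hidden


def decode_particle(p: np.ndarray, n_features: int = N_FEATURES, n_hidden: int = N_HIDDEN):
    """Split a flat particle into a (input->hidden matrix) and b (hidden->output vector)."""
    n_a = n_features * n_hidden
    a = p[:n_a].reshape(n_features, n_hidden)
    b = p[n_a:n_a + n_hidden]
    return a, b


def init_population(n_particles=N_PARTICLES, n_features=N_FEATURES, n_hidden=N_HIDDEN, seed=42):
    """Rows are particles; columns are the weights of a followed by those of b.
    Each block uses Xavier-uniform bounds (an assumed reading of 'Xavier initialization')."""
    rng = np.random.default_rng(seed)
    lim_a = np.sqrt(6.0 / (n_features + n_hidden))
    lim_b = np.sqrt(6.0 / (n_hidden + 1))
    a = rng.uniform(-lim_a, lim_a, size=(n_particles, n_features * n_hidden))
    b = rng.uniform(-lim_b, lim_b, size=(n_particles, n_hidden))
    return np.hstack([a, b])


population = init_population()
print(particle_size(), population.shape)  # 6084 (100, 6084)
```

Fixing the hidden-layer size is what keeps the column count constant, which is the constraint the text gives for not letting PSO vary the number of hidden neurons.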

The algorithm is run for 1000 iterations. In each iteration, the population is updated, and the particle with the best fitness is ultimately selected. As shown in Fig. 6, the movement of a particle depends on three factors: its current movement, the best position this particle has found so far, and the position of the best particle in the entire population. The particle update equations are given by Eqs. (4) and (5). In these equations, the inertia weight is usually taken to be less than one, and the acceleration constants c1 and c2 are generally set to 2.
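For reference, the standard global-best PSO update is shown below; we assume Eqs. (4) and (5) take this form. Here x_i is the position of particle i (one row of the population matrix), v_i its velocity, r1 and r2 are drawn uniformly from [0, 1] for every dimension, p_i^best is the best position found by particle i, g^best is the best position in the swarm, and the unnumbered line is the linear inertia schedule from the experimental setup (ω_max = 0.9, ω_min = 0.4, T = 1000).

```latex
\begin{align}
v_i^{t+1} &= \omega_t\, v_i^{t} + c_1 r_1 \bigl(p_i^{\mathrm{best}} - x_i^{t}\bigr) + c_2 r_2 \bigl(g^{\mathrm{best}} - x_i^{t}\bigr) \tag{4}\\
x_i^{t+1} &= x_i^{t} + v_i^{t+1} \tag{5}\\
\omega_t &= \omega_{\max} - \left(\omega_{\max} - \omega_{\min}\right)\frac{t}{T} \notag
\end{align}
```

The three terms of Eq. (4) are exactly the three factors named in the text: the particle's current movement, the pull toward its own best position, and the pull toward the swarm's best position.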

To find the optimal values, each particle is passed to the ELM network; that is, its values are assigned as the network weights. All rows of the training dataset are then fed to the network in sequence, and the mean squared error (MSE) is calculated over all network outputs. This MSE, computed over all records in the training dataset, is the particle's fitness value. All particles are then ranked by fitness, and the best ones in each neighborhood are selected and stored in the variables pbest and gbest. Because this process is lengthy, its pseudocode is given in Supplementary material Code 6. This procedure initializes the network weights and runs each record of the training dataset through the network. After the optimal weights are calculated, the testing phase is conducted: the same function F is run once with the optimal weight values, using the testing dataset instead of the training dataset. During the execution of the PSO algorithm, the number of neurons in the hidden layer is kept fixed because of the data structure this requires. As shown in Table 4, the number of columns in the particle matrix equals the number of weights; if the number of hidden neurons were variable, the number of columns would change at each training step, which is not feasible. The hyperparameters of the PSO algorithm were configured as follows: 1- Population size: 100 particles. 2- Inertia weight (ω): linearly decreased from 0.9 to 0.4 over 1,000 iterations to balance exploration and exploitation. 3- Cognitive (c1) and social (c2) learning coefficients: both set to 2.0, following standard practice. 4- Velocity clamping: particle movement limited to ± 10% of the search-space range. 5- Maximum iterations: 1,000.
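The fitness evaluation and the test-phase pass can be sketched as follows. The sigmoid hidden activation, the absence of bias terms (consistent with the 6084-value particle), the sign threshold at 0 and the global-best bookkeeping (rather than per-neighbourhood bests) are all assumptions; `elm_output`, `fitness`, `update_bests` and `predict` are hypothetical names, not the functions in Supplementary Code 6.

```python
import numpy as np

def decode(particle, n_inputs, n_hidden):
    """Split a flat particle into a (n_inputs x n_hidden) and b (n_hidden x 1)."""
    split = n_inputs * n_hidden
    return (particle[:split].reshape(n_inputs, n_hidden),
            particle[split:].reshape(n_hidden, 1))

def elm_output(X, particle, n_hidden):
    """Forward pass: sigmoid hidden layer (assumed), linear output, no biases."""
    a, b = decode(particle, X.shape[1], n_hidden)
    H = 1.0 / (1.0 + np.exp(-(X @ a)))   # hidden-layer activations
    return (H @ b).ravel()

def fitness(particle, X, y, n_hidden):
    """Particle fitness = MSE over all training records (lower is better)."""
    return float(np.mean((elm_output(X, particle, n_hidden) - y) ** 2))

def update_bests(swarm, fit, pbest, pbest_fit):
    """Keep each particle's best position and return the swarm-wide best."""
    improved = fit < pbest_fit
    pbest[improved] = swarm[improved]
    pbest_fit[improved] = fit[improved]
    return pbest, pbest_fit, pbest[np.argmin(pbest_fit)].copy()

def predict(particle, X, n_hidden):
    """Test phase: one pass with the best weights, thresholded to +1 / -1."""
    return np.where(elm_output(X, particle, n_hidden) >= 0.0, 1, -1)

# Toy data; n_hidden is fixed, so every particle has the same length.
rng = np.random.default_rng(1)
n_inputs, n_hidden, n_particles = 4, 6, 10
X = rng.normal(size=(50, n_inputs))
y = np.where(X[:, 0] > 0, 1.0, -1.0)
swarm = rng.uniform(-1, 1, size=(n_particles, n_inputs * n_hidden + n_hidden))
fit = np.array([fitness(p, X, y, n_hidden) for p in swarm])
pbest, pbest_fit, gbest = update_bests(swarm, fit, swarm.copy(), np.full(n_particles, np.inf))
y_hat = predict(gbest, X, n_hidden)
```

The toy run also shows why the hidden size must stay fixed: the swarm is one array whose column count is `n_inputs * n_hidden + n_hidden`, so changing `n_hidden` mid-run would change the array's shape.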
The PSO-ELM classifier construction is detailed in Supplementary Material Code 7.

To better evaluate the proposed method, we implemented three other classification methods for the network traffic classification problem: GA-ELM, Random Forest Classification (RFC), and a Multi-Layer Perceptron (MLP). MLP and GA-ELM are neural networks, whereas RFC is an ensemble of decision trees. These methods were implemented in MATLAB. GA-ELM method: in this method, a genetic algorithm is used instead of PSO, as described in43. The reason for this choice is that the crossover operators of the genetic algorithm are the only operators specified in43. MLP method: because a multi-layer perceptron neural network is regression-based by nature, an activation function is applied after the testing phase to convert the regression output into a classification output. The code for this algorithm applied to the network traffic classification problem is provided in Supplementary material Code 8. Random Forest method: Random Forest is a supervised machine learning algorithm used for classification and regression problems. The classifier consists of multiple decision trees and does not rely on the response of any single tree; instead, it aggregates the responses of all trees, by majority vote for classification and by averaging for regression, to improve prediction accuracy on the dataset. Increasing the number of trees in the forest generally improves accuracy and helps mitigate overfitting. This method was applied to network traffic classification, and the corresponding code can be found in Supplementary Material, Code 9.
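The two output-conversion steps described above (thresholding the MLP's regression output, and a hard majority vote over random-forest trees) can be sketched with scikit-learn on synthetic data. This is not the authors' MATLAB code: the network size, tree count and threshold at 0 are assumptions. Note that scikit-learn's own `RandomForestClassifier.predict` averages class probabilities rather than counting votes, so the vote is computed explicitly here.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.neural_network import MLPRegressor

X, y01 = make_classification(n_samples=400, n_features=10, random_state=0)
y = np.where(y01 == 1, 1, -1)              # labels: 1 = unsafe, -1 = safe
X_tr, X_te, y_tr, y_te = X[:360], X[360:], y[:360], y[360:]   # 90/10 split

# MLP trained as a regressor on the +/-1 targets; a sign-style activation
# applied after testing turns the continuous output into a class label.
mlp = MLPRegressor(hidden_layer_sizes=(20,), max_iter=1000, random_state=0)
mlp.fit(X_tr, y_tr)
mlp_pred = np.where(mlp.predict(X_te) >= 0, 1, -1)

# Random forest: collect each tree's vote and take the majority.
rf = RandomForestClassifier(n_estimators=51, random_state=0).fit(X_tr, y_tr)
# Individual trees predict class *indices*; map them back through classes_.
votes = np.stack([rf.classes_[t.predict(X_te).astype(int)] for t in rf.estimators_])
rf_pred = np.where(votes.sum(axis=0) >= 0, 1, -1)   # odd tree count: no ties
```

With ±1 labels, the majority vote reduces to the sign of the summed votes, and an odd number of trees guarantees that the sum is never zero.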

Result and discussion

This research addresses two fundamental problems. The first is network traffic classification, for which we propose a novel method based on an enhanced Extreme Learning Machine (ELM) network; the second is feature selection, which was solved using deep learning. In this section, both solutions are evaluated and the experimental results are reported. The deep learning networks for feature selection were implemented in Python, while the extreme learning machine classifier and the particle swarm optimization algorithm were implemented in MATLAB. After modification and normalization, the dataset consists of 75 columns (74 features and one label) and 225,709 records.

To assess the robustness and generalizability of the proposed PSO-ELM model, we performed 10 independent runs with random initializations and stratified 10-fold cross-validation on the dataset, and calculated the mean and standard deviation of classification accuracy across these runs. The PSO-ELM model achieved a mean accuracy of 98.75% ± 0.12, indicating strong stability and consistent performance. The F1-score and precision also showed low variance across folds (F1-score: 0.989 ± 0.008; precision: 0.985 ± 0.007). These statistics confirm the reliability of the proposed model under different random seeds and data splits, supporting its applicability to real-world traffic classification scenarios.

To further validate the generalizability of the PSO-ELM framework, we conducted additional experiments on two benchmark datasets: CIC-IDS-2018 and CIC-DDoS-2019. These datasets include newer attack variants (e.g., Brute Force FTP, Web Attacks) and larger traffic volumes than CIC-IDS-2017. The model retained high accuracy (98.12% on CIC-IDS-2018 and 97.89% on CIC-DDoS-2019) with consistent training times (~29 min).
Feature selection reduced input dimensions by 30–35% across all datasets, confirming its adaptability. Detailed results are summarized in Table 5.
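The robustness protocol (10 independent runs of stratified 10-fold cross-validation, summarized as mean ± standard deviation) can be sketched with scikit-learn. This sketch makes several assumptions. A logistic regression is a hypothetical stand-in for PSO-ELM, so here the seed varies only the fold assignment, not the model's initialization. The data are synthetic. The text also does not say whether the reported ± is taken over runs or folds; this sketch takes it over per-run means.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

X, y = make_classification(n_samples=1000, n_features=20,
                           weights=[0.43, 0.57], random_state=0)
clf = LogisticRegression(max_iter=1000)   # hypothetical stand-in for PSO-ELM

run_means = []
for seed in range(10):                     # 10 independent runs
    # Stratification keeps the safe/unsafe ratio equal in every fold.
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    scores = cross_val_score(clf, X, y, cv=cv, scoring="accuracy")
    run_means.append(scores.mean())
run_means = np.array(run_means)
print(f"accuracy: {100 * run_means.mean():.2f}% ± {100 * run_means.std(ddof=1):.2f}")
```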

Of the total dataset, 90% was designated for training and the remaining 10% for testing. For comparison, in addition to the proposed method (PSO-ELM), a multi-layer perceptron (MLP) neural network, the method proposed in43, which combines extreme learning and a genetic algorithm (GA-ELM), and Random Forest Classification (RFC) were also implemented. The confusion matrix is used to evaluate the classification algorithms; it shows how the results of the classification tests are distributed, and each cell represents a fact about the classifier's performance44. The columns of this matrix represent the values predicted by the classification algorithm, while the rows correspond to the actual (ground-truth) classes taken from the dataset. Table 6 introduces the evaluation criteria for the classification algorithm. True Positive (TP) refers to instances where the incoming traffic is unsafe (labeled 1) and the classifier correctly identifies it as unsafe. True Negative (TN) refers to instances where the incoming traffic is safe (labeled -1) and the classifier correctly identifies it as safe. False Positive (FP) refers to instances where the incoming traffic is safe (labeled -1) but the classifier incorrectly identifies it as unsafe (a false alarm). False Negative (FN) refers to instances where the incoming traffic is unsafe (labeled 1) but the classifier incorrectly identifies it as safe (failing to detect it as unsafe).
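With unsafe traffic as the positive class (1) and safe traffic as the negative class (-1), the four counts and the confusion matrix (rows = actual, columns = predicted) can be computed as in the sketch below. The function names and the toy label vectors are illustrative only.

```python
import numpy as np

def confusion_counts(y_true, y_pred, positive=1, negative=-1):
    """TP/TN/FP/FN with 1 = unsafe (positive) and -1 = safe (negative)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == positive) & (y_pred == positive)))
    tn = int(np.sum((y_true == negative) & (y_pred == negative)))
    fp = int(np.sum((y_true == negative) & (y_pred == positive)))  # safe flagged as unsafe
    fn = int(np.sum((y_true == positive) & (y_pred == negative)))  # unsafe missed
    return tp, tn, fp, fn

def confusion_matrix_2x2(y_true, y_pred):
    """Rows = actual (unsafe, safe); columns = predicted (unsafe, safe)."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return np.array([[tp, fn],
                     [fp, tn]])

y_true = [1, 1, 1, -1, -1, 1, -1]
y_pred = [1, -1, 1, -1, 1, 1, -1]
print(confusion_counts(y_true, y_pred))   # (3, 2, 1, 1)
print(confusion_matrix_2x2(y_true, y_pred))
```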

Accuracy is the primary metric for evaluating a predictive model. Calculated using Eq. (6), it is the proportion of test records, whose labels are unknown to the classifier, that the classifier predicts correctly; it therefore shows how well the proposed model solves the classification problem. The classification error rate equals 100 minus the accuracy. Sensitivity, also known as the True Positive Rate (TPR), is the proportion of positive-labeled cases correctly identified during testing; in other words, it measures the classifier's success in detecting unsafe traffic. Specificity, the True Negative Rate (TNR), is the proportion of negative-labeled cases correctly identified during testing, and indicates how well the classifier identifies safe traffic. The three metrics Accuracy, Sensitivity, and Specificity are expressed as percentages. Ideally they would reach 100%, but in practice this is rarely the case because some errors always occur. Precision measures the validity of the classifier's positive (unsafe) responses, and Recall indicates how much of the unsafe traffic is correctly identified. The F1-measure combines Precision and Recall and is more informative when the numbers of false positives (FP) and false negatives (FN) differ substantially; when the two are nearly equal, Accuracy is sufficient. The execution times of the training and testing phases are also reported.
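Computed from the four confusion-matrix counts, the metrics take the forms below. This sketch assumes that Eq. (6) is the usual accuracy formula, and it uses the standard textbook definitions for the other metrics. The counts in the example are hypothetical and are not taken from Table 8.

```python
def metrics(tp, tn, fp, fn):
    """Standard confusion-matrix metrics (percentages where the text uses them)."""
    accuracy = 100.0 * (tp + tn) / (tp + tn + fp + fn)
    sensitivity = 100.0 * tp / (tp + fn)      # TPR: unsafe traffic detected
    specificity = 100.0 * tn / (tn + fp)      # TNR: safe traffic recognised
    precision = tp / (tp + fp)                # validity of "unsafe" verdicts
    recall = tp / (tp + fn)                   # same quantity as sensitivity, as a fraction
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "error_rate": 100.0 - accuracy,
            "sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "recall": recall, "f1": f1}

m = metrics(tp=90, tn=80, fp=10, fn=20)   # hypothetical counts
```

For these counts, accuracy is 85% and the error rate is 15%. F1 equals 2TP / (2TP + FP + FN) = 180/210 ≈ 0.857, so it penalizes the imbalance between 10 false positives and 20 false negatives more than accuracy does.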

Feature selection reduced the number of input features from 74 to 51 (52 columns including the label). The feature selection method is backward elimination based on deep learning, executed in a Python virtual environment using Jupyter Notebook, as presented in Supplementary material Code 3. At each step of backward elimination, training is performed on 90% of the data and testing on the remaining 10%. Because of the computational complexity and the size of the dataset, the deep learning training process required several hours. However, this does not affect the final performance of the proposed method, as feature selection needs to be executed only once. Figure 7 charts the impact score of each input feature on the output label, and Fig. 8 lists the obtained values. Features with an impact score below 0.3 were discarded, and the resulting dataset, consisting of 51 input features and one output label, was submitted to the classification algorithm.
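The sketch below shows one elimination pass with the 0.3 cut-off. The text does not define the impact score, so it uses a hypothetical definition: the drop in test accuracy when a single feature is removed, normalized so that the most important feature scores 1.0. A small scikit-learn `MLPClassifier` stands in for the deep network, the data are synthetic, and full backward elimination would repeat the pass on the remaining features.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

def impact_scores(X, y, seed=0):
    """Hypothetical impact score: accuracy drop when each feature is removed,
    scaled so the most important feature scores 1.0."""
    # 90/10 train/test split, as in each elimination step described in the text
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.1, stratify=y, random_state=seed)

    def acc(cols):
        model = MLPClassifier(hidden_layer_sizes=(32, 16), max_iter=500,
                              random_state=seed)
        model.fit(X_tr[:, cols], y_tr)
        return model.score(X_te[:, cols], y_te)

    all_cols = list(range(X.shape[1]))
    base = acc(all_cols)
    drops = np.array([base - acc([c for c in all_cols if c != j]) for j in all_cols])
    drops = np.clip(drops, 0.0, None)   # removals that help count as zero impact
    return drops / drops.max() if drops.max() > 0 else drops

X, y = make_classification(n_samples=600, n_features=8, n_informative=3,
                           n_redundant=0, random_state=0)
scores = impact_scores(X, y)
selected = np.flatnonzero(scores >= 0.3)   # discard features scoring below 0.3
X_reduced = X[:, selected]
```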

Experiments were conducted to evaluate the effect of feature selection: once with the original dataset (without feature selection) and once with the reduced dataset (with feature selection). The results of these two tests are shown in Table 7.

Beyond accuracy, PSO-ELM shows fundamental advantages over GA-ELM: (1) 38% faster convergence (Fig. 11 in Supplement), (2) a 2.4× lower standard deviation across repeated runs (σ = 0.12 vs. 0.29), and (3) 61% fewer false positives on rare attack types. These improvements stem from PSO's ability to maintain population diversity during optimization, whereas GA-ELM often converges prematurely to suboptimal regions of the solution space. Table 8 and Figs. 9, 10 and 11 present the performance of the implemented methods and compare them in terms of accuracy, training time, and testing time. These tests use 19,627 test records: 11,157 labeled 1 (unsafe traffic) and 8,470 labeled -1 (safe traffic).

To contextualize the performance of our PSO-ELM model, we compare its accuracy with recently published models in intelligent traffic analysis. The fusion-based ML model for congestion control proposed by Ahmed et al.20 achieved an accuracy of 96.3% on vehicular network datasets. Siddiqui et al.21 applied a deep extreme learning machine (DELM) to smart parking occupancy detection, achieving a detection accuracy of 97.2%. The adaptive congestion control system of Atta et al.22 achieved approximately 95.8% accuracy. In comparison, our PSO-ELM model achieved a higher classification accuracy of 98.75% on CIC-IDS-2017 and comparable performance on two other benchmarks. Because these models address different tasks and datasets, the comparison is indicative rather than direct; nonetheless, it suggests the advantage of integrating swarm optimization and deep feature selection in traffic classification scenarios beyond traditional congestion control.

Conclusion

This paper presents a network traffic classification method that combines Extreme Learning Machines (ELM) with the Particle Swarm Optimization (PSO) algorithm. The network has a single hidden layer, which offers both strong prediction performance and fast learning. To enhance efficiency, PSO is used to find the optimal network parameters, providing a more directed search than the genetic algorithm used in GA-ELM. Because of the large dataset and high number of features, feature selection is applied to reduce dimensionality, improving both the training speed and the performance of the proposed method compared with other methods, including RFC and MLP. Moreover, PSO-ELM outperforms GA-ELM in learning speed and training time. The main reason for this improvement is the use of PSO to optimize the network weights, with the number of hidden neurons fixed at 117. Feature selection improves prediction accuracy and also speeds up learning and prediction; although accuracy improved by only 0.11%, this shows that reducing input dimensions can enhance classification performance. In machine learning methods, reducing the number of features helps improve generalization and accelerates convergence. The implementation complexity of PSO-ELM is lower than that of GA-ELM, as the learning processes in PSO are executed simultaneously. As a goal-oriented process, PSO-ELM directs learning toward the best particles, offering better optimization and faster convergence than the genetic algorithm, which randomly swaps genes. Overall, PSO-ELM outperforms GA-ELM, RFC, and MLP in classification accuracy in the tested network settings. The hyperparameter values used were derived empirically, and alternative configurations may yield further improvements; however, such trial-and-error tuning does not reliably identify optimal settings. In some experiments, for example, the accuracy of MLP fell below 50%.

Limitations and future directions

Limitations: 1- Dataset Scope: While PSO-ELM achieves high accuracy on the CIC datasets, its performance on real-time, high-speed networks (e.g., 5G/6G) remains untested because benchmark datasets lack temporal granularity. 2- Computational Overhead: Although inference is efficient once training is complete, the PSO optimization phase requires substantial resources (~30 min for 1,000 iterations), limiting deployability on edge devices with strict latency constraints. 3- Feature Selection Bias: The backward elimination process relies on Fisher scores, which may prioritize linear correlations and overlook nonlinear feature interactions in encrypted traffic.

Future Work: 1- Real-Time Adaptation: Integrate lightweight PSO variants (e.g., Quantum PSO) to reduce training overhead for edge deployment. 2- Cross-Protocol Generalization: Extend evaluation to IoT-specific datasets (e.g., IoT-23) and protocols (MQTT, CoAP) to assess robustness in heterogeneous environments. 3- Explainability: Enhance interpretability via SHAP (SHapley Additive exPlanations) to trace feature contributions, which is critical for adversarial attack detection. 4- Hybrid Optimization: Combine PSO with other metaheuristics (e.g., Grey Wolf Optimizer) to improve parameter search efficiency in high-dimensional spaces.

Data availability

The datasets generated and/or analyzed during the current study are available at https://www.unb.ca/cic/datasets/ids-2017.html.

References

Abbasi, M., Shahraki, A. & Taherkordi, A. Deep learning for network traffic monitoring and analysis (NTMA): A survey. Comput. Commun. 170, 19–41 (2021).

Kalwar, J. H. & Bhatti, S. Deep learning approaches for network traffic classification in the internet of things (IoT): A survey. arXiv preprint arXiv:2402.00920. (2024).

Wang, P., Wang, Z., Ye, F. & Chen, X. Bytesgan: A semi-supervised generative adversarial network for encrypted traffic classification in SDN edge gateway. Comput. Netw. 200, 108535 (2021).

Chakravarthy, S. S. & Rajaguru, H. Automatic detection and classification of mammograms using improved extreme learning machine with deep learning. Irbm 43 (1), 49–61 (2022).

Meenalochini, G. & Ramkumar, S. A deep learning based breast cancer classification system using mammograms. J. Electr. Eng. Technol. 19 (4), 2637–2650 (2024).

Sannasi Chakravarthy, S. R. et al. Deep transfer learning with fuzzy ensemble approach for the early detection of breast cancer. BMC Med. Imaging. 24 (1), 82 (2024).

Chakravarthy, S. S. & Rajaguru, H. Breast tumor classification using transfer learning with adaptive crow search optimization. In 2023 Third International Conference on Smart Technologies, Communication and Robotics (STCR) (Vol. 1, pp. 1–5). IEEE. (2023), December.

Rajaguru, H. & Chakravarthy, S. S. Classification of wisconsin breast cancer data with extreme learning machine and osprey optimization algorithm. In 2023 Third International Conference on Smart Technologies, Communication and Robotics (STCR) (Vol. 1, pp. 1–4). IEEE. (2023), December.

Pacheco, F., Exposito, E., Gineste, M., Baudoin, C. & Aguilar, J. Towards the deployment of machine learning solutions in network traffic classification: A systematic survey. IEEE Commun. Surv. Tutorials. 21 (2), 1988–2014 (2018).

Azab, A., Khasawneh, M., Alrabaee, S., Choo, K. K. R. & Sarsour, M. Network traffic classification: techniques, datasets, and challenges. Digit. Commun. Networks. 10 (3), 676–692 (2024).

Ertam, F. & Avcı, E. A new approach for internet traffic classification: GA-WK-ELM. Measurement 95, 135–142 (2017).

Wang, L. & Jones, R. Big data analytics in cyber security: network traffic and attacks. J. Comput. Inform. Syst. 61 (5), 410–417 (2021).

Duan, X., Zhou, Y. & Guan, J. Exploration on heterogeneous network security monitoring algorithm based on big data intelligent information technology. In 2023 IEEE 15th International Conference on Computational Intelligence and Communication Networks (CICN) (pp. 154–158). IEEE. (2023), December.

Tang, F., Mao, B., Kawamoto, Y. & Kato, N. Survey on machine learning for intelligent end-to-end communication toward 6G: from network access, routing to traffic control and streaming adaption. IEEE Commun. Surv. Tutorials. 23 (3), 1578–1598 (2021).

Avanzi, G. Design, Implementation and Evaluation of Learning Algorithms for Predictive Quality of Service in Teleoperated Driving Scenarios (2024).

Gronauer, S., Diepold, K., Tnani, M. A. & Zwick, M. Trend Reports about Artificial Intelligence for 6G telecommunication. (2022).

Gui, G., Zhou, Z., Wang, J., Liu, F. & Sun, J. Machine learning aided air traffic flow analysis based on aviation big data. IEEE Trans. Veh. Technol. 69 (5), 4817–4826 (2020).

Fowdur, T. P., Beeharry, Y., Hurbungs, V., Bassoo, V. & Ramnarain-Seetohul, V. Big data analytics with machine learning tools. In Internet of Things and Big Data Analytics Toward Next-Generation Intelligence 49–97 (2018).

Li, Y., Qiu, R. & Jing, S. Intrusion detection system using online sequence extreme learning machine (OS-ELM) in advanced metering infrastructure of smart grid. PLoS One 13 (2), e0192216 (2018).

Ahmed, S. H. et al. Smart cities: Fusion-based intelligent traffic congestion control system for vehicular networks using machine learning techniques. Egypt. Inf. J. 23 (3), 417–426 (2022).

Siddiqui, S. Y., Khan, M. A., Abbas, S. & Khan, F. Smart occupancy detection for road traffic parking using deep extreme learning machine. J. King Saud Univ.-Comput. Inform. Sci. 34 (3), 727–733 (2022).

Atta, A., Abbas, S., Khan, M. A., Ahmed, G. & Farooq, U. An adaptive approach: smart traffic congestion control system. J. King Saud Univ.-Comput. Inform. Sci. 32 (9), 1012–1019 (2020).

Zeng, Y., Gu, H., Wei, W. & Guo, Y. Deep-Full-Range: a deep learning based network encrypted traffic classification and intrusion detection framework. IEEE Access. 7, 45182–45190 (2019).

Lim, H. K. et al. Packet-based network traffic classification using deep learning. In 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC) (pp. 46–51). IEEE. (2019), February.

Zhang, F., Wang, Y. & Ye, M. Network traffic classification method based on improved capsule neural network. In 2018 14th International Conference on Computational Intelligence and Security (CIS) (pp. 174–178). IEEE. (2018), November.

Nallaperuma, D. et al. Online incremental machine learning platform for big data-driven smart traffic management. IEEE Trans. Intell. Transp. Syst. 20 (12), 4679–4690 (2019).

Komisarek, M., Pawlicki, M., Kozik, R. & Choras, M. Machine learning based approach to anomaly and cyberattack detection in streamed network traffic data. J. Wirel. Mob. Networks Ubiquitous Comput. Dependable Appl. 12 (1), 3–19 (2021).

Bujlow, T., Riaz, T. & Pedersen, J. M. A method for classification of network traffic based on C5.0 machine learning algorithm. In 2012 International Conference on Computing, Networking and Communications (ICNC) (pp. 237–241). IEEE. (2012), January.

Shafiq, M. et al. Network traffic classification techniques and comparative analysis using machine learning algorithms. In 2016 2nd IEEE International Conference on Computer and Communications (ICCC) (pp. 2451–2455). IEEE. (2016), October.

Tahaei, H., Afifi, F., Asemi, A., Zaki, F. & Anuar, N. B. The rise of traffic classification in IoT networks: A survey. J. Netw. Comput. Appl. 154, 102538 (2020).

Hasibi, R., Shokri, M. & Dehghan, M. Augmentation scheme for dealing with imbalanced network traffic classification using deep learning. arXiv preprint arXiv:1901.00204. (2019).

Wang, P., Ye, F., Chen, X. & Qian, Y. Datanet: deep learning based encrypted network traffic classification in Sdn home gateway. IEEE Access. 6, 55380–55391 (2018).

Wang, W., Zhu, M., Wang, J., Zeng, X. & Yang, Z. End-to-end encrypted traffic classification with one-dimensional convolution neural networks. In 2017 IEEE international conference on intelligence and security informatics (ISI) (pp. 43–48). IEEE. (2017), July.

Aceto, G., Ciuonzo, D., Montieri, A. & Pescapé, A. Mobile encrypted traffic classification using deep learning. In 2018 Network traffic measurement and analysis conference (TMA) (pp. 1–8). IEEE. (2018), June.

Zou, Z. et al. Encrypted traffic classification with a convolutional long short-term memory neural network. In 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS) (pp. 329–334). IEEE. (2018), June.

Lotfollahi, M., Jafari Siavoshani, M., Shirali Hossein Zade, R. & Saberian, M. Deep packet: A novel approach for encrypted traffic classification using deep learning. Soft Comput. 24 (3), 1999–2012 (2020).

Chen, M. et al. A network traffic classification model based on metric learning. CMC-computers Mater. Continua. 64 (2), 941–959 (2020).

Gharib, A., Sharafaldin, I., Lashkari, A. H. & Ghorbani, A. A. An evaluation framework for intrusion detection dataset. In 2016 International conference on information science and security (ICISS) (pp. 1–6). IEEE. (2016), December.

Sharafaldin, I., Lashkari, A. H. & Ghorbani, A. A. Intrusion detection evaluation dataset (CIC-IDS2017). Proceedings of the Canadian Institute for Cybersecurity. (2018).

Zou, Q., Ni, L., Zhang, T. & Wang, Q. Deep learning based feature selection for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 12 (11), 2321–2325 (2015).

Shi, H., Li, H., Zhang, D., Cheng, C. & Cao, X. An efficient feature generation approach based on deep learning and feature selection techniques for traffic classification. Comput. Netw. 132, 81–98 (2018).

Abid, A., Balin, M. F. & Zou, J. Concrete autoencoders for differentiable feature selection and reconstruction. arXiv preprint arXiv:1901.09346. (2019).

Kushwah, G. S. & Ranga, V. Optimized extreme learning machine for detecting DDoS attacks in cloud computing. Computers Secur. 105, 102260 (2021).

Salih, A. A. & Abdulazeez, A. M. Evaluation of classification algorithms for intrusion detection system: A review. J. Soft Comput. Data Min. 2 (1), 31–40 (2021).

Author information

Contributions

Xi Zhang and Jun Yin wrote the main manuscript text. Xi Zhang and Jun Yin reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, X., Yin, J. Optimized extreme learning machines with deep learning for high-performance network traffic classification. Sci Rep 15, 33199 (2025). https://doi.org/10.1038/s41598-025-16980-9
