Abstract

Fake news consists of fabricated stories with no verifiable facts, sources, or quotes, often created to mislead readers or for economic gain, such as click bait. While the spread of fake news on social media has been widely recognized for its profound political, economic, and social consequences, existing research on detection methods remains limited in the context of low-resource languages. In particular, studies addressing Amharic are scarce, and to date, no research has investigated multimodal approaches for fake news detection in this language. This study aims to develop a multimodal fake news detection system for Amharic. Data was collected using Face pager and the Facebook Graph API 13.0, resulting in a dataset of 23,856 news stories. Various preprocessing techniques were applied, including text cleaning, tokenization, normalization, and visual content processing involving noise removal, and other image preprocessing techniques. The study evaluates several deep learning architectures, including combinations of CNN with BiLSTM, CNN for both image and text, CNN with CNN-BiLSTM, and CNN with attention-based BiLSTM. Among these, the CNN with attention-based BiLSTM model demonstrated superior performance across all evaluation metrics, achieving an accuracy of 98%. Therefore, we proposed the CNN with attention-based BiLSTM model for multimodal Amharic fake news detection.

Similar content being viewed by others

Introduction

Social media is now a major platform for sharing and accessing information. Its rapid growth has also enabled the spread of misinformation. This poses serious risks to political, economic, and social stability. Detecting and controlling fake news is therefore essential to ensure accurate and trustworthy information1. In Ethiopia, misinformation has already contributed to social unrest. Platforms like Facebook and Twitter are key sources of fake news. They provide open spaces for users to post content without censorship or filtering. As a result, fake news often spreads faster and wider than reports from traditional media2. Over the past five years, such content has fueled unrest, accelerated political movements, and intensified protests3. This has deepened societal divisions and threatened national unity. The challenge highlights the urgent need for effective computational methods to detect and mitigate fake news, especially in low-resource languages like Amharic.

Artificial Intelligence techniques hold significant potential for academics aiming to develop models capable of automatically detecting fake news. However, fake news detection is a challenging task, as it requires models that can summarize news content and compare it with verified information to determine its authenticity. Moreover, comparing fake news with real news is a complex endeavor due to the subjective and opinion-based nature of news content2.

Many researchers have studied fake news detection, particularly in the English language4,5,6. However, due to the lack of well-structured datasets and the absence of ground truth required for initial annotation, few studies have focused on Amharic fake news detection7. The existing studies in the Amharic language primarily analyze the textual content of Facebook posts, even though most fake news on social media includes both text and images. In contrast to the aforementioned findings, most researchers working on fake news detection have focused mainly on the textual aspect of the news.

Despite the evident and disruptive nature of the problem, there has been little to no practical intervention from either governmental bodies or academic institutions in applying technological solutions to detect fake news in Amharic. Furthermore, the lack of annotated datasets has made this task even more difficult and largely unexplored. The main objective of this study is to develop an Amharic fake news dataset that incorporates both textual and visual content and to use various deep learning algorithms to detect multimodal fake news in the Amharic language. The key contributions of this study are as follows:

-

This study introduces the first multimodal fake news detection model specifically designed for the Amharic language.

-

It presents a newly collected and processed dataset of 23,856 Amharic news stories, integrating both textual and visual content.

-

It explores and compares multiple deep learning architectures, including CNN-BiLSTM and attention-based models, for multimodal analysis.

Related works

The detection of fake news has emerged as a pivotal area of research, aimed at curbing the dissemination of misinformation by employing advanced computational techniques to assess the credibility of news content. Recently, deep learning methodologies have gained prominence in this field, particularly in the context of social media. A growing body of work has explored the integration of multimodal data combining both textual and visual elements to enhance the accuracy and robustness of fake news detection models. This section categorizes relevant studies into three main areas: (1) research on Amharic fake news detection using text-based approaches, (2) multimodal fake news detection in the English language, and (3) studies focused on identifying fake images on social media.

Amharic fake news detection (text-only approaches)

Gereme et al.7 conducted a significant study titled “Combating Fake News in ‘Low-Resource’ Languages: Amharic Fake News Detection.” This research made several notable contributions. Firstly, it introduced a deep learning-based model tailored for Amharic fake news detection. Secondly, to address the lack of existing resources, the authors developed a new dataset named ETH_FAKE, along with a general-purpose Amharic corpus. Additionally, they designed a custom word embedding Amharic FastText Word Embedding (AMFTWE) to improve model accuracy. However, their study primarily utilized homogeneous datasets and did not address challenges associated with heterogeneous data sources.

Tazeze8 also focused on the Amharic language, proposing a dataset for fake news classification into “real” and “fake” categories. The study utilized various feature extraction techniques, including count vectorization, TF-IDF, and n-grams. It employed multiple machine learning algorithms, such as Naive Bayes, Support Vector Machines (SVM), Logistic Regression, Random Forest, Stochastic Gradient Descent, and Passive Aggressive Classifier. Notably, the Naive Bayes and Passive Aggressive Classifier models achieved high performance, with TF-IDF-based models reaching up to 96% accuracy.

Arega9 ntroduced a machine learning model aimed at identifying and classifying fake news on social media, leveraging TF-IDF for feature extraction. However, the study lacked details regarding the specific algorithms used and did not report performance metrics.

Woldeyohannis10 proposed a feature fusion-based approach to detect fake news in the Amharic language, addressing the limitations of content-only detection. The study incorporated multiple feature extraction techniques, including TF-IDF, n-gram weighted TF-IDF, and word2vec. The authors used Logistic Regression, SVM, and Random Forest algorithms for classification. Their experimental results showed that combining Random Forest with word2vec achieved the highest precision, reaching 99.67%. Despite this success, the research was constrained by the limited size of the dataset and did not explore heterogeneous datasets.

Multimodal fake news detection (English language)

Palani et al.4 introduced a pioneering multimodal framework for fake news detection using a combination of BERT and capsule neural networks. This study was among the first to apply such an approach, integrating both textual and visual data from social media posts to evaluate news authenticity. BERT was employed for textual feature extraction, while capsule neural networks handled visual content. The fusion of these modalities significantly enhanced classification performance, outperforming traditional methods.

Wang et al.5 developed a fine-grained multimodal fusion network that leverages scaled dot-product attention mechanisms to effectively combine textual and image data. Their contribution lies in proposing a sophisticated model that performs nuanced feature fusion, thereby improving detection accuracy through enhanced inter-modality interactions.

Kumari and Ekbal11 proposed an attention-based multimodal factorized bilinear pooling (MFB) architecture for fake news detection. Their framework comprises four core modules: an attention-based stacked bidirectional LSTM (ABS-BiLSTM) for extracting textual features, an attention-based multilevel CNN-RNN (ABM-CNN-RNN) for visual feature extraction, an MFB module for feature fusion, and a multi-layer perceptron (MLP) for classification. The study provided empirical evidence that combining textual and visual modalities yields better model performance. The overal summary of related works is shown in Table 1.

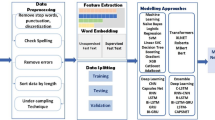

Methodology

This study employs an experimental research methodology. Experimental research is a scientific approach in which one or more independent variables are deliberately manipulated to observe their effects on one or more dependent variables. This method involves careful planning of the experimental setup, including decisions regarding the number of trials, the selection of parameters and weights, the choice of methodologies, and the datasets to be used.

Data collection and preparation

Data collection

For this study, a total of 23,856 multimodal news articles, comprising both text and images, were collected from diverse sources. The annotation process followed established news literacy principles to ensure rigorous and reliable labeling of fake news content. Linguistic experts were involved to verify textual information, checking for factual accuracy, misleading statements, and sensational language. Simultaneously, images were analyzed for manipulation, contextual relevance, and consistency with the accompanying text. Annotation was done by multiple experts to reduce bias and increase reliability, and any discrepancies were resolved through consensus. This approach ensured that both textual and visual modalities were carefully evaluated, providing a high-quality, multimodal dataset for subsequent fake news detection experiments. The methods and sources used for data collection are presented in both tabular and graphical formats, as illustrated in Fig. 1.

As depicted in Fig. 1, the data collection process involved scraping news content from selected Ethical considerations such as demographic bias, content sensitivity, and data consent, were addressed before collecting both text and images using Facepager for our multimodal dataset. Facebook pages using Facepager in conjunction with the Facebook Graph API version 13.0. The raw data was subsequently cleaned and filtered to remove irrelevant or duplicate entries. Following this, the refined data was annotated and structured to form the final dataset used for training the multimodal fake news detection model.

Data preprocessing

Data preprocessing involves removing noise and distortions from the dataset to simplify the fake news detection task and improve the model’s performance14. In this study, data preprocessing is divided into two distinct parts, as image and text data require separate preprocessing techniques adapted to their specific characteristics. In the multimodal preprocessing stage, textual data were cleaned to remove noise such as non-Amharic characters, while images were standardized in size and format to ensure consistency. Although emojis, punctuation, and code-mixed tokens can carry valuable cues for fake news detection, they were not specifically utilized in this study. Text normalization focused on Amharic language structures, and image preprocessing emphasized clarity and uniformity, providing a clean input for the multimodal model.

Preprocessing of the textual data

Cleaning

To ensure the data is suitable for representation through word embeddings, a thorough cleaning process is applied. This procedure eliminates all irrelevant special characters, symbols, emojis, and any non-Amharic characters from the dataset.

Normalization

The Amharic writing system contains multiple characters that share the same pronunciation, but there is no standardized convention for using them consistently in written words. This often leads to inconsistencies in spelling. For instance, the word power (“hail”) can be written in several different forms, including  . To address this issue, characters with identical sounds were replaced with their canonical forms as defined in this study.

. To address this issue, characters with identical sounds were replaced with their canonical forms as defined in this study.

Tokenization

After cleaning and normalizing the raw data, the next preprocessing step is tokenization, which involves breaking the text into smaller units called tokens. Once tokenization is complete, each word is represented using the selected embedding method for the vectorization process.

Word-embedding

Word embedding is a feature learning technique that maps each word or phrase in a vocabulary to an N-dimensional real-valued vector. Its goal is to represent words in a lower-dimensional space while preserving their semantic relationships15. In this study, FastText is used as the word embedding model to generate vector representations of words. Developed by Facebook, FastText enhances the traditional Word2Vec approach by incorporating subword information, allowing it to create more accurate embeddings for both known and out-of-vocabulary words. After preprocessing the dataset and converting it into an analyzable format, FastText transforms words into vectors that capture semantic similarities and relationships. These vectors are then passed through the embedding layer of the deep learning model, resulting in an N × M matrix, where N is the length of the longest sentence in the dataset and M is the embedding dimension. This process enables the model to utilize FastText’s advanced subword modeling capabilities, improving its ability to understand and represent complex word relationships in various natural language processing tasks.

Image preprocessing

Image resizing

This step is essential when adjusting the total number of pixels to standardize image dimensions. Additionally, resizing the image helps reduce preprocessing time and computational cost. In this study, images are resized to a resolution of 100 × 100 pixels.

Convert RGB to gray scale

Converting a color image to grayscale involves transforming the 24-bit RGB values into 8-bit grayscale values. After resizing, the image is converted from the RGB format, which has three channels, to a grayscale format with a single channel.

Histogram equalization

Histogram equalization is a non-linear technique designed to enhance image brightness, making it more suitable for human visual perception. In this study, we employed the Contrast Limited Adaptive Histogram Equalization (CLAHE) method to achieve this enhancement.

Noise removal

After adjusting the image intensity, the next step is noise removal, which helps enhance the overall performance of the proposed model. In this study, a median filter is applied to effectively reduce noise and minimize its impact on the images. Additionally, the preprocessing includes adaptation to the unique characteristics of misinformation images to enhance feature extraction.

Before discussing the deep learning approaches employed, it is important to explain why the late fusion method was chosen and to review the fusion techniques currently available in the state of the art.

Fusion models

Social media content typically includes multimedia posts such as text, images, audio, or video. To accurately classify these posts, data from different modalities must be analyzed, and the prediction probabilities derived from each modality should be integrated to determine a final class label. Multimodal Fusion, in this context, refers to the process of combining features from multiple modalities to make a prediction. This fusion can occur at different stages namely early, late, or intermediate in the processing pipeline16.

Early fusion

Early fusion involves combining features from multiple modalities into a single feature vector, which is then input into the model for prediction17. Handling features with high granularity can be challenging, as the fusion of preprocessed features from different modalities often leads to very high-dimensional data. Figure 2 demonstrates the early fusion approach in a multimodal fake news detection system. In this approach, data from multiple modalities such as text, images, and other sources are integrated at an early stage, prior to classification. This allows the classifier to leverage the combined information from all modalities, leading to more informed predictions and enhancing the effectiveness of the fake news detection model.

Late fusion

Late fusion, also known as decision-level fusion, focuses on combining the outputs or decisions from multiple independently trained modalities17. This method is feature-independent, and errors from different modalities are generally uncorrelated. The architecture of the late fusion approach employed in this study is illustrated in Fig. 3.

Intermediate fusion

Intermediate fusion involves generating representation layers often a shared layer that integrate features from different modality-specific processing streams18. This fusion layer can take the form of a single layer that combines multiple input channels, or a structured combination of layers that merge different sets of modalities at varying levels of abstraction.

In our work, we adopt the late fusion approach. This strategy allows the integration of outputs from independently trained classifiers, each handling different modalities, making it well-suited for scenarios where modality-specific models are developed separately.

Deep learning approaches used

As discussed in the previous sections, we developed a model that integrates both text and image modalities to detect multimodal Amharic news. Prior to building the combined model, we initially evaluated unimodal models separately one for processing text content and another for handling image content.

Text based models

CNN

Convolutional Neural Networks (CNNs) are widely used for image categorization due to their ability to effectively extract visual features19. Beyond image processing, CNNs have also been successfully applied to various Natural Language Processing (NLP) tasks, including text classification20. In this context, text data is first converted into a vectorized format, similar to how images are represented. Word embeddings of the input text are organized into a matrix, which is then passed through a convolutional layer containing multiple filters of varying sizes to capture diverse textual patterns. Following the convolution process, a pooling layer condenses the information, and the resulting outputs are concatenated to form a comprehensive feature vector. This vector is then passed through a fully connected layer to perform the final classification of the text21.

BiLSTM

Bidirectional Long Short-Term Memory (BiLSTM) networks enhance traditional LSTM models by enabling information to flow in both forward and backward directions during the processing of sequential data22. This bidirectional structure allows the model to capture context from both past and future tokens in a sequence, leading to a more comprehensive understanding of the input text. Such an approach is especially beneficial for tasks like text classification, where capturing the full context of a sentence or document is crucial for making accurate predictions.

Attention based BiLSTM

Models based on Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have demonstrated strong performance in text classification tasks23. However, these models often suffer from limited interpretability, making it difficult to understand or justify classification errors, as the underlying representations are not easily accessible. To address this issue, attention-based models have been effectively applied in text classification tasks, offering improved insight into the decision-making process24. The attention mechanism enables the model to assign different levels of importance to individual inputs. It first aggregates significant words into sequence-level vectors and then combines these sequences into comprehensive text-level representations25. y applying attention at both the word and sentence levels, the model can assess the contribution of each component to the final classification decision, which is valuable for both practical applications and interpretability. The growing popularity of attention-based mechanisms is largely due to their ability to improve text classification performance while providing insights into the reasoning behind predictions. The architecture of the attention-based Bi-LSTM model is presented in Fig. 4 below. This model is designed to capture spatial-temporal dependencies from sequential data. The lower section of the architecture represents the spatial time series inputs, denoted as Xt − 2,Xt − 1,…X_{t-2}, X_{t-1}, … Xt − 2,Xt − 1,…, which include both direct and indirect temporal parameters. These inputs are fed into LSTM layers that capture the temporal relationships between the time steps.

Architecture of attention-based BiLSTM26.

Hybrid CNN with bidirectional‑lstm

The hybrid CNN-BiLSTM model represents a sophisticated approach that integrates the strengths of Convolutional Neural Networks (CNNs) and Bidirectional Long Short-Term Memory (BiLSTM) networks, enhancing the processing and understanding of sequential data27. CNNs are well-known for their capability to extract meaningful features by applying convolutional filters that detect local patterns within input data. When applied to text, CNNs can effectively identify important n-grams or word sequences, generating feature maps that capture these key patterns. These feature maps then serve as inputs to the BiLSTM network. BiLSTM networks excel at handling sequential data by modeling long-range dependencies. Unlike standard LSTMs that process data in a single direction, BiLSTM’s analyze sequences both forward and backward. This bidirectional approach enables the model to gather contextual information from both past and future elements in the sequence, thereby improving its comprehension of the overall context.

In a hybrid CNN-BiLSTM model, the initial feature extraction performed by the CNN is followed by sequence modeling through the BiLSTM28. The CNN’s feature maps are typically flattened or pooled and then fed into the BiLSTM, which further processes these features to capture and integrate contextual relationships. The combination of CNN-derived features with BiLSTM-generated embeddings provides a comprehensive representation of the data, which is then used for downstream tasks such as classification or prediction.

This hybrid architecture takes advantage of CNNs’ ability to capture local features and patterns, alongside BiLSTM’s strength in understanding contextual relationships within sequences, resulting in enhanced performance in tasks such as text classification, sentiment analysis, and named entity recognition29. By integrating these two complementary approaches, the model develops a deeper and more comprehensive representation of sequential data, making it highly effective for applications that demand both precise feature extraction and contextual awareness.

Image based models

CNN

In deep learning, Convolutional Neural Networks (CNNs) are a prominent type of neural network widely used for image recognition tasks30. CNNs utilize a distinctive process called convolution, which can be mathematically defined as an operation between two functions resulting in a third function. This output function illustrates how the shape of one function is modified or influenced by the other. A CNN is composed of multiple layers of artificial neurons mathematical units inspired by biological neurons in the human brain. These artificial neurons process inputs by computing weighted sums and then producing an output through an activation function31.

Input stage The input to a Convolutional Neural Network (CNN) is typically an image represented as a grid of pixels32. For example, an image of a car is provided to the network in the form of a tensor that maintains the image’s spatial dimensions along with its color channels.

Feature learning stage

Convolution + ReLU the input image is processed through multiple convolutional layers, where filters (or kernels) are applied to detect key features like edges, corners, and textures33. The output from each convolutional layer is then passed through a Rectified Linear Unit (ReLU) activation function, which is defined in Eq. (1).

This non-linear activation function is crucial for enabling the network to model complex patterns and interactions in the data.

Pooling After the convolutional layers, pooling layers are applied to reduce the spatial dimensions of the feature maps while retaining the most important information34. Pooling not only decreases computational complexity but also helps prevent overfitting. Max-pooling is commonly used, which selects the highest value within a defined region of the feature map, as shown in Eq. 2.

This sequence of convolution, ReLU, and pooling operations is iteratively applied, allowing the network to progressively learn more abstract and complex representations as it deepens.

Flattening The feature maps generated by the final convolutional layer are flattened into a one-dimensional vector35. This vector is essential because it acts as the input to the subsequent fully connected layers, facilitating the shift from feature extraction to the classification stage.

Classification stage

Fully connected layer The flattened vector is fed into one or more fully connected (dense) layers, which combine the features extracted during the convolutional process to generate predictions regarding the class of the input image36.

Activation The output of the final fully connected layer is typically passed through activation function, which converts the raw logits into a probability distribution over the possible classes.

Figure 5 illustrates the architecture of the Convolutional Neural Network (CNN) used for image classification. The model takes input images (shown on the left) and processes them through a series of layers designed to learn and extract relevant features, ultimately classifying the image into categories such as “real” or “fake.” The input passes through multiple convolutional layers, where filters detect important features like edges and textures. Each convolutional layer is followed by a ReLU activation function, which introduces non-linearity to enhance the model’s learning capacity. Pooling layers are also incorporated to down sample the feature maps, reducing their spatial dimensions while preserving critical information and decreasing computational load. After several convolution and pooling stages, the extracted features are flattened into a one-dimensional vector and passed through fully connected layers for deeper analysis. The final output layer is a sigmoid activation that assigns probability scores to each class, determining whether the image is real or fake. CNNs are especially effective for image recognition tasks due to their ability to automatically learn spatial feature hierarchies.

Architecture of CNN for image Classification37.

Proposed model architecture

The proposed architecture illustrated in Fig. 6 presents a deep learning-based multimodal framework for Amharic fake news detection, integrating both textual and visual content derived from a Multimodal Amharic News Dataset. The process begins with the separation of the dataset into textual and visual components. The textual content undergoes a preprocessing stage where noise and irrelevant elements are cleaned and normalized to enhance feature quality. This refined text is then passed through an attention-based Bidirectional Long Short-Term Memory (BiLSTM) network, which captures contextual dependencies from both past and future directions in the sequence while giving more weight to informative words through the attention mechanism. Simultaneously, the visual content (such as images accompanying news articles) is preprocessed to standardize size, resolution, and format before being input to a Convolutional Neural Network (CNN), which extracts deep spatial features representing the visual semantics of the news. The outputs of both the attention-based BiLSTM (for text) and CNN (for images) are then merged in a fusion layer, which aligns and integrates multimodal features into a joint representation. This unified feature vector is subsequently fed into a dense (fully connected) layer that learns high-level abstractions and patterns relevant for classification. Finally, the output from the dense layer is used by the classification layer to determine whether a given Amharic news instance is real or fake, resulting in a robust Multimodal Amharic News Classification Model that leverages both textual and visual modalities to improve detection accuracy and reliability. Late fusion was chosen as it allows independent training of text and image models, making it more robust to modality-specific noise and more practical than early or intermediate fusion in Amharic fake news detection.

Experimental result

The experiments in this study are primarily divided into two categories: those conducted on unimodal data and those involving multimodal data.

Experimenting on uni-modal data

Experiment on text data BiLSTM model

On the text data we have conducted one experiment which is using BiLSTM model. The BiLSTM model achieves the resulted which is indicated in Fig. 7.

As we observe in the above Fig. 7, the BiLSTM model has an over fitting problem that occurs when the gap between validation and training accuracy is very wide.

Experimenting on image data CNN model

As shown in Fig. 8 above, the validation accuracy of the model increases rapidly from epoch one to epoch five, from 0.5 to 0.9. After epoch five, it varies from epoch to epoch, and the graph shows up and down. Even though this model has less accuracy compared to CNN without data augmentation, it has high performance because the over fitting problem slightly decreases. Table 2 presents the classification report, which highlights the performance of the fake news detection model on the test dataset. The model achieved an accuracy of 99%, with precision, recall, and F1-scores close to 99% for both the “Fake” and “Real” news categories. The macro and weighted averages further confirm the robustness of the model, reflecting its strong ability to correctly classify both fake and real news instances.

Experiment on multimodal data

After testing the individual dataset whether it fits to the model or not, we go forward to the actual task of this research which is combining image and text together for developing multimodal Amharic fake news detection. As we have discussed in previous section, we have applied late fusion with concatenation method to develop the multimodal model. For developing multimodal model, we have conducted experiments on several combinations of deep learning algorithms from those combinations we apply BiLSTM and CNN, CNN for both image and text, Combination of CNN and BiLSTM for text and CNN for image. The results of the experiments are discussed below.

Result of experiments on multimodal data

We have conducted four different experiments to select best model for Multimodal based Amharic Fake news detection. The results of those experiments are discussed below.

Experiment one: image CNN text the combination of CNN and BiLSTM

In this experiment we have used CNN for textual content of our data and combination of CNN and BiLSTM for textual content of our data. The result of the experiment presented both in tabular and graphical way.

In the training and validation accuracy graph for the CNN and CNN-BiLSTM models as indicated in Fig. 9, validation accuracy briefly surpasses training accuracy in the first epoch. From the second to fifth epoch, both accuracies progress in parallel with minimal gap. After the fifth epoch, validation accuracy shows fluctuations, while training remains stable. Early stopping halts training at epoch 12 due to stagnation. Overall, the model demonstrates slightly better performance during training.

In the context of evaluating the model’s performance, Table 3 presents the classification report, summarizing the key metrics such as precision, recall, F1-score, and support for both the “Fake” and “Real” news categories as indicated in Fig. 10. The confusion matrix for Experiment One is presented in Fig. 10.

Experiment two: CNN and BiLSTM

In CNN and BiLSTM experiment, we use CNN to analyze image content and BiLSTM for text content. We combine these models into a multimodal model using late fusion. The results of this experiment are presented in both tables and graphs to visually show how the model performed.

Figure 11, presented above, illustrates the training and validation accuracy of Experiment Two. Initially, during the training process, the validation accuracy exceeds the training accuracy until the end of the second epoch. However, after this point, the validation accuracy consistently declines while the training accuracy continues to rise. This widening gap between the two curves indicates that the model is experiencing over fitting. Over fitting occurs when a model demonstrates strong performance on the training data but fails to generalize effectively to validation or test data. Consequently, the training process was halted early at epoch 6 due to these observations.

Table 4 outlines the classification performance of the model, showcasing precision, recall, F1-score, and support values for both “Fake” and “Real” news categories.

The confusion matrix for Experiment two is presented in Fig. 12.

Experiment three: CNN

In this experiment, we used CNNs for both image and text models. Finally, we combined them by concatenating their outputs to create a multimodal model using late fusion. The results of this experiment are presented below in both table and graph formats.

Figure 13, displayed above, depicts the training and validation accuracy for Experiment Three. Initially, during the training process, both training and validation accuracy exhibited a slight increase, maintaining a modest gap until the end of the sixth epoch. However, after epoch 6, the training accuracy continued to rise, while the validation accuracy remained relatively stable. This notable disparity between the training and validation accuracies suggests that the model is experiencing over fitting. Consequently, the training process was terminated early at the 14th epoch based on these observations. In comparison to Experiment 2 (CNN with BiLSTM), this model showed less over fitting. However, it exhibited more over fitting compared to Experiment 1 (CNN and the combination of CNN-BiLSTM models).

Table 5 presents the classification report for Experiment Three. This table summarizes the model’s performance in terms of precision, recall, F1-score, and support for the “Fake” and “Real” classes. The report indicates that the precision for the “Fake” class is 0.95, while the recall stands at 0.88, resulting in an F1-score of 0.92. Conversely, the “Real” class exhibits a precision of 0.89, a recall of 0.96, and an F1-score of 0.92. The overall accuracy of the model across all instances is reported as 0.92, with both the macro and weighted averages also reflecting a consistent performance of 0.92 across the metrics. The confusion matrix for Experiment three is presented in Fig. 14.

Experiment four: attention-based BiLSTM and CNN

In this experiment, we used a CNN for images and an attention-based BiLSTM for text. We combined them using late fusion to create a multimodal model. The results are shown below in both table and graph formats to illustrate the model’s performance.

The analysis of the training and validation accuracy depicted in Fig. 15, which illustrates the results of Experiment Four, reveals several key trends. Initially, the validation accuracy commenced at 0.90, whereas the training accuracy started at 0.70. During the first four epochs, there was a notable and rapid increase in training accuracy, accompanied by a modest rise in validation accuracy. Between the fifth and tenth epochs, both training and validation accuracy exhibited fluctuations, suggesting instability in model performance. Notably, after the tenth epoch, the training and validation accuracy curves converged, indicating that the model reached its optimal performance compared to the models developed in the preceding experiments.

Table 6 presents the classification report for Experiment Four. This table summarizes the model’s performance metrics, including precision, recall, F1-score, and support for both the “Fake” and “Real” classes. The results indicate that the precision for the “Fake” class is 0.97, with a recall of 0.99 and an F1-score of 0.98. For the “Real” class, the precision is 0.99, the recall is 0.97, and the F1-score is 0.98. Overall, the model achieved an accuracy of 0.98 across the dataset of 2386 samples, with both the macro and weighted averages reflecting a consistent performance of 0.98 across the metrics. The confusion matrix for Experiment four is presented in Fig. 16 and the summary of a deep learning model is shown in Fig. 17.

Model summary

The attention-based BiLSTM model architecture integrates two parallel input streams: one for spatial and the other for sequential data. The spatial stream processes 100 × 100 × 1 input images through convolutional and max pooling layers, reducing the dimensions to 10 × 10 × 128 while increasing feature depth. The sequential stream handles input via an embedding layer and a BiLSTM, producing a 64-unit output enhanced by an attention mechanism. The outputs from both streams are concatenated, followed by flattening, dense, and dropout layers for dimensionality reduction and regularization. A final fully connected layer produces the output. This design effectively captures both spatial and temporal dependencies, with approximately 53.96 million trainable parameters.

Results and discussion

In this study, we developed a multimodal model for detecting fake news in Amharic, utilizing data collected from Facebook, a popular social media platform in Ethiopia. The data extraction was performed using Facepager and the Facebook Graph API 13.0, focusing on news posts containing both images and text. For preprocessing, we applied a series of steps to the text data, including cleaning, normalization, tokenization, and vectorization with pre-trained Amharic FastText word embeddings. Multiple textual models were developed and evaluated, such as Bidirectional Long Short-Term Memory (BiLSTM), Convolutional Neural Networks (CNN), attention-based BiLSTM, and a hybrid model combining CNN and BiLSTM. Images were resized to 100 × 100 pixels, converted to gray scale, and had their contrast adjusted using Contrast Limited Adaptive Histogram Equalization (CLAHE) with a 2.0 clip limit, followed by noise reduction with median blur. CNNs were used for feature extraction and classification of the images.

Four experiments were conducted to determine the most effective model for Amharic fake news detection. The first experiment combined CNN for image analysis with CNN and BiLSTM for text processing, achieving an accuracy of 97%. The second experiment used CNN and BiLSTM, resulting in 90% accuracy. The third experiment applied CNN to image and text models, achieving 92% accuracy. The fourth experiment, which combined CNN for images with attention-based BiLSTM for text, reached the highest accuracy of 98%. This model had only 40 incorrect classifications, making it the most effective in terms of accuracy and error rates. Our findings highlight that attention-based and hybrid models significantly improve multimodal Amharic fake news detection, and meticulous preprocessing of both image and text data is crucial for enhancing the performance of deep learning models. Our multimodal model achieved an accuracy of 98%, outperforming the 88.5% accuracy reported by Wang et al.9 using a fine-grained multimodal fusion approach. Similar to Palani et al.8, we combined textual and visual features for multimodal detection, though our use of attention-based BiLSTM further improved performance.

The experimental results revealed that combining CNN for images with attention-based BiLSTM for text yielded the highest performance, underscoring the complementary strengths of both modalities: CNNs effectively capture visual cues such as manipulated or misleading images, while attention-based BiLSTMs address Amharic’s complex morphology and contextual dependencies. Nonetheless, certain sociolinguistic challenges remain, particularly in cases of code-mixing, spelling variations, and culturally specific expressions, which can mislead the text model despite careful preprocessing. These failure cases highlight both the potential and the limitations of multimodal fusion, emphasizing the need for richer sociolinguistic resources and adaptive models to better reflect the nuances of Amharic online discourse.

Conclusion

The primary objective of this research was to address a significant drawback of social media: the rapid dissemination of fake news, which misleads individuals and disrupts societal stability. This study aimed to detect Amharic fake news by leveraging both textual and visual (multimodal) content. To achieve this, we utilized a newly collected dataset comprising both images and text obtained from Facebook through Facebook’s Graph API 13.0. Since no pre-existing dataset met our needs, we created our own. For the textual content, we employed pre trained FastText word embeddings, an extension of Word2Vec, to enhance the understanding of news by deep learning models. For image feature extraction, we used Convolutional Neural Networks (CNNs). We conducted four experiments to identify the most effective model for multimodal Amharic fake news detection. These experiments included: CNN for images combined with BiLSTM for text, CNN for images combined with CNN-BiLSTM for text, CNN applied to both text and image models, and CNN for images combined with attention-based BiLSTM for text. The models achieved accuracies of 90%, 97%, 92%, and 98%, respectively. Our findings indicate that the attention-based BiLSTM model combined with CNN for images yielded the highest performance.

The contributions of this study are as follows: Firstly, it represents the first attempt at multimodal Amharic fake news detection using both textual and visual content from Facebook posts. Secondly, we collected and annotated multimodal data from scratch, a particularly challenging task given the subjective nature of text annotation. To ensure consistency, we adhered to the annotation guidelines established by the News Literacy Project and we also include linguistic experts from journalism and media communication department.

Although the proposed multimodal model achieved strong performance, with the attention-based BiLSTM combined with CNN reaching 98% accuracy, several limitations remain. The dataset, while newly constructed and annotated, is limited in scope and size compared to the vast and diverse nature of real-world social media data, which may affect generalizability. Furthermore, ethical considerations such as demographic bias, content sensitivity, and data consent require continued attention to ensure fairness and responsible use. The model also focuses on binary classification, leaving opportunities to expand toward more nuanced categorization of misinformation types, such as satire, false context, or fabricated content. Despite these challenges, the model has promising deployment potential: it could be integrated into social media monitoring tools, fact-checking systems, or governmental and non-governmental platforms aimed at mitigating the harmful impact of fake news in Ethiopia and other low-resource language contexts.

Future research could advance multimodal Amharic fake news detection by incorporating image captioning, expanding and enriching datasets, and developing hierarchical models to classify nuanced misinformation subtypes such as satire, false context, and fabricated content, while also exploring OCR techniques to extract embedded text from images as a potential enhancement.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

Allcott, H. & Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211 (2017).

Masciari, E., Moscato, V., Picariello, A. & Sperli, G. A deep learning approach to fake news detection. Lect Notes Comput. Sci. 12117(3), 113–122. https://doi.org/10.1007/978-3-030-59491-6_11 (2020).

Palani, B., Elango, S. & Viswanathan, V. Fake: A multimodal deep learning framework for automatic fake news detection using capsule neural network and BERT. https://doi.org/10.1007/s11042-021-11782-3 (Springer, 2021).

Wang, J. et al. Citation: FMFN: Fine-grained multimodal fusion networks for fake news detection. Appl. Sci. 12, 1093. https://doi.org/10.3390/app12031093 (2022).

Wang, Y. et al. EANN: event adversarial neural networks for Multi-Modal fake news detection 849–857 (2018).

Gereme, F., Zhu, W. & Ayall, T. Combating Fake News in ‘ Low-Resource ’ (Amharic Fake News Detection Accompanied by Resource Crafting, 2021).

Haile, J. M. Social media for diffusion of conflict & violence in ethiopia: beyond gratifications, international journal of educational development. Int. J. Educ. Dev. 108(103063), 0738–0593. https://doi.org/10.1016/j.ijedudev.2024.103063 (2024).

Tazeze, T. Building a dataset for detecting fake news in amharic Language Building a dataset for detecting fake news in amharic Language. No June. https://doi.org/10.48175/IJARSCT-1362 (2021).

Arega, K. L. Classification and detection of amharic Language fake news on social media using machine learning approach. 04, 1–6 (2022).

Woldeyohannis, M. M. Amharic Fake news detection on social media using feature fusion Amharic fake news detection on social media using feature fusion as a result of advancing technology, ease of access and a low-cost platform (2021).

Kumari, R., Ekbal, A., AMFB. & Attention based multimodal factorized bilinear pooling for multimodal fake news detection. Expert Syst. Appl. 184, 115412. https://doi.org/10.1016/j.eswa.2021.115412 (2021).

Shankar, R., Srivastava, A., Gupta, G., Jadhav, R. & Thorate, U. Fake image detection using machine learning. Int. J. Creat Res. Thoughts 8(5), 2320–2882 (2020).

AlShariah, N. M. & Jilani Saudagar, A. K. Detecting fake images on social media using machine learning. Int. J. Adv. Comput. Sci. Appl. 10(12), 170–176. https://doi.org/10.14569/ijacsa.2019.0101224 (2019).

Kh, S., Juzaiddin, M., Aziz, A. & Ridzwan, M. Heliyon review Article A review of fake news detection approaches: A critical analysis of relevant studies and highlighting key challenges associated with the dataset, feature representation, and data fusion. Heliyon 9(10), e20382. https://doi.org/10.1016/j.heliyon.2023.e20382 (2023).

Ayalew, A. A. & Asfaw, T. T. Model from Amharic texts (2021).

Kiela, D., Grave, E., Joulin, A. & Mikolov, T. Efficient large-scale multi-modal classification. 32nd AAAI Conf. Artif. Intell. AAAI 2018 5198–5204 (2018). https://doi.org/10.1609/aaai.v32i1.11945

Gadzicki, C. Z. K. R. K. and Early vs late fusion in multimodal convolutional neural networks. in International Conference on Information Fusion (FUSION) 1–6 (2020). https://doi.org/10.23919/FUSION45008.2020.9190246

Li, S. & Tang, H. Multimodal Alignment and Fusion: A Survey, no. c, pp. 1–20, [Online]. (2024). Available: http://arxiv.org/abs/2411.17040

Tripathi, M. Analysis of convolutional neural network based image classification techniques. J. Innov. Image Process. 3(2), 100–117. https://doi.org/10.36548/jiip.2021.2.003 (2021).

Haitao, W., Jie, H., Xiaohong, Z. & Shufen, L. A short text classification method based on n-gram and Cnn. Chin. J. Electron. 29(2), 248–254. https://doi.org/10.1049/cje.2020.01.001 (2020).

Li, Q. et al. A Survey on Text Classification: From Shallow to Deep Learning. http://arxiv.org/abs/2008.00364 (2020)

Pavlatos, C., Makris, E., Fotis, G., Vita, V. & Mladenov, V. Enhancing electrical load prediction using a bidirectional LSTM neural network. Electron 12(22), 1–13. https://doi.org/10.3390/electronics12224652 (2023).

Zulqarnain, M., Ghazali, R., Hassim, Y. M. M. & Rehan, M. A comparative review on deep learning models for text classification. Indones J. Electr. Eng. Comput. Sci. 19(1), 325–335. https://doi.org/10.11591/ijeecs.v19.i1.pp325-335 (2020).

Zheng, J. & Zheng, L. A hybrid bidirectional recurrent convolutional neural network Attention-Based model for text classification. IEEE Access. 7, 106673–106685. https://doi.org/10.1109/ACCESS.2019.2932619 (2019).

Galassi, A., Lippi, M. & Torroni, P. Attention in natural Language processing. IEEE Trans. Neural Netw. Learn. Syst. 32(10), 4291–4308. https://doi.org/10.1109/TNNLS.2020.3019893 (2021).

Prerana-Mukherjee Attention-based-BiLSTM-model. ResearchGate. https://www.researchgate.net/profile/Prerana-Mukherjee-2/publication/332299950/figure/fig1/AS:745796773355521@1554823215194/Attention-based-BiLSTM-model-consists-of-a-BiLSTM-layer-and-the-attention-mechanism.png (2019).

Shan, L., Liu, Y., Tang, M., Yang, M. & Bai, X. CNN-BiLSTM hybrid neural networks with attention mechanism for well log prediction. J. Pet. Sci. Eng. 205, 108838. https://doi.org/10.1016/j.petrol.2021.108838 (2021).

Das, H. S. & Roy, P. A CNN-BiLSTM based hybrid model for Indian Language identification. Appl. Acoust. 182, 108274. https://doi.org/10.1016/j.apacoust.2021.108274 (2021).

Rahim, M. A. et al. An enhanced hybrid model based on CNN and BiLSTM for identifying individuals via handwriting analysis. C - Comput. Model. Eng. Sci. 140(2), 1689–1710. https://doi.org/10.32604/cmes.2024.048714 (2024).

Taye, M. M. Theoretical Understanding of convolutional neural network: concepts, architectures, applications, future directions. Computation 11(3). https://doi.org/10.3390/computation11030052 (2023).

Sharma, P. Basic Introduction to Convolutional Neural Network in Deep Learning, Analytics Vidhya. https://www.analyticsvidhya.com/blog/2022/03/basic-introduction-to-convolutional-neural-network-in-deep-learning/ (2022).

Hossain, M. A. & Alam Sajib, M. S. Classification of Image using Convolutional Neural Network (CNN), Glob. J. Comput. Sci. Technol. 19, 13–18. https://doi.org/10.34257/gjcstdvol19is2pg13 (2019).

Diwan, A. & Roy, A. K. CNN-Keypoint Based Two-Stage Hybrid Approach for Copy-Move Forgery Detection, IEEE Access, vol. 12, no. March, pp. 43809–43826, (2024). https://doi.org/10.1109/ACCESS.2024.3380460

Gholamalinezhad, H. & Khosravi, H. Pooling Methods in Deep Neural Networks, A Review. http://arxiv.org/abs/2009.07485 (2020).

Sun, Y. The neural network of one-dimensional convolution: An example of the diagnosis of diabetic retinopathy. IEEE Access. 7, 69657–69666. https://doi.org/10.1109/ACCESS.2019.2916922 (2019).

Mocsari, E. & Stone, S. S. Colostral iga, igg, and IgM-IgA fractions as fluorescent antibody for the detection of the coronavirus of transmissible gastroenteritis. Am. J. Vet. Res. 39(9), 1442–1446 (1978).

Boukhris, A. CNN architecture for image classification. ResearchGate. https://www.researchgate.net/profile/Abdelouafi-Boukhris/publication/371263439/figure/fig2/AS:11431281178108040@1690809967327/CNN-architecture-for-image-classification.png (2023).

Author information

Authors and Affiliations

Contributions

Problem identification and designed the analysis (Alemu Desu and Eshete Derb); Collected the data (Nuru Endris and Alemu Belay); Model Implementation (Alemu Desu; Performed the result analysis (Alemu Desu, Eshete Derb and Bedru Yimam); Wrote the paper (Alemu Desu); Revised the paper (All authors).

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Geto, A.D., Emiru, E.D., Seid, N.E. et al. Multimodal based Amharic fake news detection using CNN and attention-based BiLSTM. Sci Rep 15, 34447 (2025). https://doi.org/10.1038/s41598-025-17579-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-17579-w