Abstract

When predicting adverse complications due to Type 2 Diabetes, often two different approaches are taken: predictions based on clinical data or those using administrative health data. No studies have assessed whether these two approaches reach comparable predictions. This study compares the predictive performance of these two data sources and examines the algorithmic fairness of the developed models. We developed XGBoost models to predict the two-year risk of nephropathy, tissue infection, and cardiovascular events in Type 2 Diabetes patients. The models using only clinical data achieved an average AUC of 0.78, while the models using administrative health data alone achieved 0.77. A hybrid model combining both data types resulted in an average AUC of 0.80, across complications. The models showed that laboratory data were key for predicting nephropathy, whereas comorbidity and diabetes age were most important for tissue infection. For cardiovascular events, age and a history of congestive heart failure was the most important predictors. Our analysis identified bias on the feature sex in all three outcomes: models tended to underestimate risk for females and overestimate it for males, indicating a need to address fairness in these applications. This study demonstrates the effectiveness of ML models using both data types for predicting diabetes complications. However, the presence of sex bias highlights the importance of improving model fairness for reliable clinical use.

Similar content being viewed by others

Introduction

Diabetes is a growing global problem. In 2021, the International Diabetes Federation estimated that 537 million adults are living with diabetes globally, but by 2030 this number is projected to increase to 643 million adults1. Increasing global prevalence is driven by two factors: diabetes incidence has doubled worldwide between 1990 and 2021, and the mortality rate of the population with diabetes has plateaued and even declined in some high-income countries, resulting in a growing population who live a greater proportion of their lives with diabetes2,3. Diabetic complications contribute significantly to suffering and premature mortality in people with diabetes, and reviews have estimated cardiovascular complications alone are the cause of over 27% of deaths of patients with diabetes globally3. Effective prediction and prevention of diabetic complications has been crucial for reducing the harms caused by diabetes complications, and will continue to be essential with the growing global population with diabetes4,5.

Recent developments in machine learning have shown promise in predicting the risk of future complications for patients with diabetes6,7,8,9,10. Accurate prediction of complications has the potential to enable precision medicine strategies which increase the efficiency of preventive disease management. Existing literature points to two separate use cases of such predictive models: first, as a prognostic tool in clinical settings, or second as a population risk management tool in a public health context.

In most studies, the use case of the model as a clinical or population health tool is determined either a priori or based on data availability. Clinical decision-making tools are often derived from information available in electronic health records (EHR) which includes basic demographics such as age and sex, diagnosis and procedure histories, and lab results from inpatient and outpatient settings. Conversely, while lab results are rarely available to public health administrators there are many other types of data including income and education gradients, total healthcare utilization, and race or ethnicity which can be predictive of health risk and may be absent from clinical decision-making tools. For our purposes, we make the distinction between the two data environments along these lines: clinical data (EHR) include basic demographics, diagnoses, and lab results, while administrative health data (AHD) include basic demographics, healthcare utilization statistics, and additional non-health data including income, education, and race/ethnicity.

Clinical ML approach

The clinical decision-making strain of literature relies on medical and biological data often available for the treating physician. The aim of these models is to provide the treating physician with prognostic predictions of the risk of complications to be used to risk stratify patients making preventive treatment guidelines more personalized. This literature has mainly relied on classical statistical approaches to predict across different complication measures with AUC ranging between 0.66 and 0.7511. The most used features are simple demographics such as age, sex and ethnicity; lifestyle measures related to smoking status, BMI and physical activity; and laboratory test results and drug prescriptions related to HbA1C, blood pressure, hypertension and cholesterol11. Recently ML approaches have been applied and a review of this literature by Tan et al. (2023) found 32 studies comprising 87 ML modeling approaches to predict diabetes complications12. Neural network and ensemble models are the most applied ML approaches, where random forest models seemed to have the best predictive performance12. Age, BMI and duration of diabetes are the most used features, but many studies also included laboratory results. The predictive performance outperformed the conventional statistical approaches with an AUC for microvascular approximately 0.85 and an AUC for macrovascular complications of approximately 0.7012.

Public health ML approach

In many settings, biometrics and detailed clinical outcomes data are unavailable for non-clinical use cases. Instead, a second strain of literature relies on predictive models that leverage administrative data including demographics, diagnoses and healthcare utilization from claims data, and income and education data when available. These studies examine the risk of complications in patients with diabetes from a public health perspective. Ravaut et al (2021) produces a public health-oriented machine learning model which imposes limited restrictions on the population under study and uses AHD alone to predict the risk of many different complications in patients with diabetes in Ontario, Canada6. Their models report an AUC of approximately 0.78, and they find age, sex, immigration status, income levels, and area of residence to be consistently important predictors of the risk of complication. Vimont et al (2023) used AHD from the public insurance system in France to predict risk of complication in patients withh diabetes, producing AUC ranging from 0.72 to 0.79 for cardiovascular complications and 0.67 to 0.85 for other complications13. Dworzynski et al (2020) predict comorbidities in patients with diabetes in Denmark using Danish administrative register data, which contains information about individual demographics, economics, education level, and health information including diagnoses, procedures, and prescriptions14. These authors also achieve high accuracy in their predictive models across the population of Denmark based on administrative data alone. While these studies differ in their populations, observation period, feature, and outcomes, all of them achieve reasonable predictive power despite the lack of access to clinical data.

Objective

The objective of this study is to compare the predictive performance of ML models using AHD to those using EHR and laboratory data in identifying cardiovascular events, tissue infection, and nephropathy as a result of diabetes. We select subsets of data for each approach to mimic implementation strategies either with public health authorities (AHD data) or at the physicians point of care (EHR data).

To do this, we develop multiple Extreme Gradient Boosting (XGBoost) models, that aim to predict the two-year risk of a range of adverse complications for patients with type 2 diabetes (T2D) patients. We also investigate the magnitude of algorithmic fairness within the models to assess whether there is significant discrimination against specific subgroups. This implies that the developed model may yield skewed evaluations of predicted risk towards a certain subgroup.

Results

Model comparison and performance analysis

In line with the related literature, we use the Area Under the ROC Curve (AUC) as the primary metric for evaluating the performance of the model. AUC serves as the basis for comparing relative performance across different data sources. We report the F1 score, recall, and precision in the supplementary material, Table S1, along with the ROC curves in Fig. S1. The performance results are presented in Fig. 1. For the clinical model (depicted in green) the baseline model (Model C1) demonstrates an average AUC of 0.74, suggesting that health status and basic demographic factors are effective predictors across the outcomes. Including laboratory results in the clinical model (Model C2) increases the average AUC to 0.78. This enhancement in predictive performance is predominantly driven by a substantial improvement in the nephropathy outcome, while outcomes cardiovascular events and tissue infections exhibit little to no significant increase in performance.

Test result plot. The y-axis shows the AUC of the given model on the x-axis. Each dot represents the average test AUC score across 5 runs with a random restart. The upper and lower lines show the min/max AUC score in a given model, indicating the variation within the model’s test score. Models are specified in Table 2 and segments are described in Table 1.

For the AHD model (depicted in blue), the baseline model (Model A1) achieves an average AUC of 0.77. The incorporation of advanced demographics, such as education, income, and household characteristics, does not appear to augment model performance.

Finally, a model (Model Full, depicted in red) that includes both clinical and AHD is the best performing model, with an average AUC of 0.80. With all data included, nephropathy is the best performing model (0.88 AUC) and the cardiovascular model has the worst performance (0.72).

Overall, the clinical and AHD seem to have similar performances, this is likely due to strong contributions by the health status and basic demographics segments.

Feature contributions across models and outcomes

Figure 2 illustrates the top six features that contribute the most to each outcome, ranked by their importance for the Model Full. For the nephropathy outcome, laboratory test results are the most important group feature. In contrast, predictions for tissue infection and cardiovascular events were primarily driven by demographic variables and health status.

Mean absolute SHAP values for the top six features across the three predicted outcomes for Model Full. Feature contributions are computed on the test dataset using SHAP values, aggregated over five runs. Bars indicate the mean contribution across runs, and error bars represent the corresponding standard deviation. These values reflect the relative importance of each feature to the model’s predictions.

Across all models, Nephropathy still retained predictive power when excluding the laboratory data (Model A2), indicating that information from comorbidities also carry predictive value. For tissue infection, we find that CCI comorbidity, the diabetes age (T2D age), and sex are consistently among the top predictors. And for the cardiovascular events outcome, Age, ECI (Congestive Heart Failure), and Sex are the most important features. Across all models and outcomes, health status indicators, particularly the CCI comorbidity, showed consistently high importance. This suggests that overall disease burden plays a critical role in predicting future complications.

Examining algorithmic fairness

To evaluate predictive performace across subpopulations, we assessed algorithmic fairness across different demographic and clinical subgroups: individuals in the lowest income quartile (Q1), those with primary education, sex, Danish ethnicity, and absence of HbA1c measurement during the observation period.

In the case of nephropathy, the models exhibited poorer performance for individuals without an HbA1c test and females. Little to no signs of substantial unfairness were observed in other tested variables. These results are presented in supplementary Table S2. Tissue infection and cardiovascular complication models were differentially biased by sex but not by any other variables. Model fairness patterns across groups were consistent across different model types, where different features were included.

Across all outcomes, the most notable discrimination is by sex. We assessed this by comparing performance metrics for males and females across all three complications. Figure 3, illustrates this discrimination across metrics. All models assign a higher base risk to males than females, particularly in cardiovascular events, where nephropathy was the least biased model. This pattern is reflected in the strong sex differences in statistical parity. We also see a higher FPR rate for males across all models, and a higher TPR for males for cardiovascular events and tissue infection. Females are conversely over-represented in the negative prediction of complications. Overall accuracy is greater for females than males across models, driven by high FPR and therefore overestimation of complications in males.

Fariness assessment across complications for the feature sex. Figures representing the ratio for metrics TPR, FPR, PPV, STP and ACC. Values are the mean ratio across five runs. Error bars represent the standard deviation of the five runs. Red areas represent threshold (0.8, 1.25). Males are the reference group, i.e. if the ratio is <1, the metric rate is higher for males than females. Orange bars represent model discrimination towards females, blue bars represent discrimination towards males, and grey bars conclude no discrimination in the given metric.

Nephropathy barely exceeded the \(\epsilon\) threshold in two cases. Despite being the least biased model, some bias still exists. Tissue infection exceeds the thresholds in almost all metrics, indicating a highly biased model. Similarly, for cardiovascular events, the threshold is exceeded in four metrics, suggesting significant bias, with the largest ratios observed among all models.

Discussion

The objective of this study is to compare the predictive performance of machine learning models in identifying diabetic complications using data from two perspectives: a clinical setting with EHR and laboratory data, and a public health setting using administrative health and demographic data. A secondary objective was to assess the algorithmic fairness of the models with all available data.

Our results show that in general, predictive performance is quite comparable between the clinical and public health approaches, despite variation in model performance across complications. Across complications, models using age, sex, and diagnosis history alone had the worst predictive performance. Inclusion of lab data or hospital utilization and socio-demographic characteristics improved model performance, and inclusion of both categories of information produces the strongest performing models (except cardiovascular complications, where health data does not augment the performance of AHD models).

Algorithmic fairness analysis revealed evidence of strong bias in predictive models by sex, and bias when HbA1c data was missing for nephropathy. Similar sex bias against females, including high false negative rates, in ML models have been documented for other health-related outcomes15,16. Existing literature documents both differential risk of diabetic complications and differences in the predicted risk scores between sexes, which could contribute to the model unfairness17,18. Divergence in health outcomes between sexes and the existence of sex-related bias in ML prediction, point to the importance of reporting fairness in ML modeling. This may highlight a need for development of sex-specific models of diabetic complications.

The two data sources and approaches to ML prediction of diabetes complications show little difference in predictive performance, and predictions are quite comparable at the individual level. Nonetheless, model performance is greatest with the inclusion of both data types. These findings suggest that the two approaches can supplement each other without a risk of contradictory predictions and implicated treatment recommendations, and that inclusion of all available data in either setting is advantageous.

This study has several limitations. We only evaluated a two-year buffer between predictor measurement and outcome, and did not explore how alternative buffer durations might affect model performance. Optimal buffer lengths may differ by outcome e.g., cardiovascular events might benefit from a longer buffer to capture relevant risk factors in time for intervention.

We assessed model performance exclusively using XGBoost. While more advanced algorithms may improve accuracy, our focus was on relative rather than absolute performance. XGBoost remains a strong and commonly used algorithm for structured data due to its robustness, interpretability, and scalability, making it appropriate for this study. However, different models might rely on different features for prediction, and algorithmic fairness assessment could be different, using other models.

The models were developed using data from Denmark, which may limit their generalizability to other populations. Differences in healthcare policies, clinical practices, and individual health behaviors across countries could affect both predictive performance and fairness outcomes.

Some performance metrics, particularly the F1-score and precision, were relatively low, which reflects the highly imbalanced nature of the data. However, the primary aim of the study was to assess relative differences across data sources and to evaluate the algorithmic fairness of the models. While the absolute values of these metrics should be interpreted cautiously, the fairness-oriented insights remain valid and highlight an important consideration when applying ML in decision-making contexts.

Methods

Data source

The data used in this study are from the Danish national health registers. All health data is reported when a patient among others is hospitalized, visits a GP, or picks up medication at the pharmacy. Other registers contain information on demographics and socioeconomic status. All data is stored safely and is not accessible to the public. Each citizen in Denmark is identifiable by a Social Security number (CPR). This CPR number is encrypted to a unique personal identification number (PNR). PNR is a key, that enables linkage of data across registers. Table 1 illustrates the specific database.

The outcomes, nephropathy, tissue infection and cardiovascular events are defined by ICD-10 codes from The National Patient Register (LPR). The specific ICD-10 codes used can be found in supplementary Table S3.

For each outcome, we develop five different models in stages to evaluate the model performance, using different data sources. The specifications of the five models are specified in Table 2.

Having comorbidities and multimorbidity are well known risk factors for disease progression. The Charlson Comorbidity Index (CCI) is based on ICD10 diagnoses19 and is one of the most used measures for morbidity20. We include CCI as a binary indicator equal to 1 if a patient have had one of the included ICD10 diagnoses in the past five years. To improve coverage of morbidity signals for the prediction we add features from alternative measure of morbidity by also including features based on the Elixhauser Comorbidity Index (ECI)21. This index is also based on ICD10 diagnoses and we add binary features for presence of congestive heart failure, historic registration of depression, and registered obesity

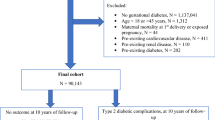

Cohort and exclusion criteria

In Denmark, T2D is defined by The Danish Health Data Authority, who have developed an algorithm for selected chronic diseases and severe mental disorders called RUKS22. Using the RUKS algorithm23, all PNR on T2D patients are obtained in the period 2014-2019. This makes it possible to link all health information on these patients through PNR, in the different registers.

Laboratory test results were only accessible for regions of Southern Denmark, Northern Jutland and Zealand in the defined time period. This means that patients with diabetes living in the capital region and the region of Central Jutland are excluded, effectively cutting the patient population in half. Using this cohort, we end up with 104,341 eligible unique patients with diabetes. Descriptive statistics of the cohort characteristics are in the supplementary materials, Table S4, as well as the descriptive statistics of training, validation, and test sets.

Study design

The dataset consists of instances, each instance is an observation period, a buffer period and a target window. This has been applied for each eligible patient, such that multiple observations for a given patient are possible. Each instance is offset by three months, so that target windows are non-overlapping. This approach is illustrated in Figs. 4 and 5, and has been used in similar prior studies6,24.

Data Point creation Shows how each data point/instance is created. Each blue and orange represents a sample in the data and is explained in Fig. 4. For instance, Patient 1 might have 5 different instances. Each instance is added to the dataset as a different data point. Patient 2 only has one instance, this could be due to death post first data point, therefore additional data points are not created. For patient 3, there is two data points. The late entry could be due to later diagnosis.

For the observation period, we used one year of data, which is sufficient to capture the relevant characteristics of each instance. This choice is further supported by clinical practice, where an HbA1c test is recommended at least once a year25.

The buffer period is the period between observation and prediction. The buffer period is set to two years, meaning that the prediction is two years post the observation window. This makes it possible to make a prediction of the two-year risk of adverse outcomes. The two-year buffer is chosen since a possible adverse outcome of complication in some cases is avoidable if intervened or decelerated, depending on the complication.

The target window of outcome prediction, ’complication prediction’, is 3 months following the buffer. With this data structure, a patient can generate up to 8 instances. In the case where the data does not allow a complete instance, the instance is excluded.

An example of the first two instances of a patient could be: instance 1: Observation period from January 2014 to December 2014 a buffer from January 2015 to December 2016 and the target window from January 2017 to March 2017. Instance 2: Observation period April 2014 to March 2015, a buffer from April 2015 to March 2017, and the target window from April 2017 to June 2017.

The data is randomly split into three different datasets on the individual level. All instances belonging to an individual are in the same dataset. For instance the Fig. 5, all instances of patient 1 are in training data, all instances of patient 2 are in validation, and all instances of patient 3 are in the test.

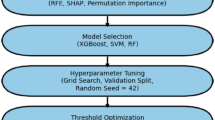

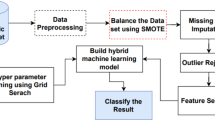

Model development

The project was implemented in Python version 3.10.10. Key libraries utilized include xgboost (v1.7.5) for model training, shap (v0.41.0) for explainability analysis, and dalex (v1.6.0) for model fairness assessment. Other core packages can be found in supplementary Table S5.

We use XGBoost, an efficient and powerful machine learning algorithm, for our predictions26. XGBoost is widely applied in studies utilizing tabular data, demonstrating robust performance on tabular applications6,24. For tasks such as this, it has been proven to be a well-performing algorithm, even when compared to deep learning techniques27. Additionally, it is able to handle missing values, and as laboratory test results tend to have a rather large frequency of missing values - as some patients do not get tested - this is necessary. Missing values might even provide useful information28, and could therefore recognize these behavioral patterns.

Since the distribution of classes is imbalanced for all outcomes due to the low frequency of diabetes complication in any 3-month period, it is necessary to balance the data to avoid biased predictions and poor performance. To do so, the training data are undersampled such that the dimension of negative cases are equivalent to the positive cases. Undersampling was applied exclusively to the training data. The validation and test datasets were preserved with their original class distribution.

To evaluate the performance of the trained models, each model was retrained five times using random restarts. We do this to account for differences in the undersampled training set introduced by the random undersampling of negative cases. By repeating the training process multiple times, we aim to address the differences and report a more robust model performance.

To find the most optimal hyperparameters in the XGBoost model29, we used a grid search approach to find the parameters that resulted in the most optimal model. For each model and each outcome, the most optimal hyperparameters that maximized the validation AUC were found using the python module Hyperopt30. The final hyperparameters for each model and outcome are presented in supplementary Table S6.

Feature importance analysis

To investigate which features contribute most to the model predictions, we analyzed feature importance across the different outcomes. Feature importance was calculated using SHAP (SHapley Additive exPlanations) values in python module shap. We computed the mean absolute SHAP values for each feature, averaged over five independent runs to ensure stability and robustness. To estimate the feature contributions, we used the test data. The top six features, ranked by their average SHAP values, are reported for each model and outcome in Fig. 2. Additional SHAP analyses are in the supplementary materials, Fig. S2.

Evaluating the algorithmic fairness of the models

To investigate potential disparities in machine learning models, we compare various performance metrics using the confusion matrix, supplementary Fig. S3: True Positive Rate (TPR) \(\frac{TP}{TP+FN}\), False Positive Rate (FPR) \(\frac{FP}{FP+TN}\), Predictive parity ratio (PPV) \(\frac{TP}{TP+FP}\), Statistical Parity (STP) \(\frac{TP+FP}{TP+FP+TN+FN}\), and Accuracy (ACC) \(\frac{TP+TN}{TP+FP+TN+FN}\). We analyze these metrics for one subgroup (e.g., males) in comparison to its counterpart (e.g., females) to gain insights into model performance across these subpopulations.

A feature is considered biased if the ratio of performance metrics between two groups falls outside a specified range. When comparing males and females, we determine a model to be fair if this ratio lies within the range: \(\epsilon< \frac{metric_{female}}{metric_{male}} < \frac{1}{\epsilon }\). Where \(\epsilon\) is set to 0.8, as this is the most commonly used threshold, and corresponds to the four-fifths rule31.

A machine learning model may exhibit discrimination against a subgroup if there is encoded bias within the data, if there is missing or imbalanced data, or if the model relies heavily on sensitive attributes. Biases in the data may stem from historical inequalities, non-representative sampling, or societal stereotypes, which can subsequently be learned and perpetuated by the model32,33. For prediction using health data, it is also possible that two subgroups are biologically different and therefore experience different prognoses.

Data availability

The data for this study is not available to the public due to its identifiable nature, and is in a closed and secure environment, on Statistics Denmark. Data protections are governed by the Danish Data Protection Agency. Researchers obtain data access through Statistics Denmark in accordance with rules for the use of data: https://www.dst.dk/en/TilSalg/Forskningsservice/Dataadgang. Correspondence and requests for materials should be addressed to the corresponding author.

References

IDF. (International Diabetes Federation) Diabetes facts & figures (2021). https://www.idf.org/aboutdiabetes/what-is-diabetes/facts-figures.html.

Ong, K. L. et al. Global, regional, and national burden of diabetes from 1990 to 2021, with projections of prevalence to 2050: A systematic analysis for the global burden of disease study 2021. Lancet 402, 203–234. https://doi.org/10.1016/S0140-6736(23)01301-6 (2023).

Ling, W. et al. Global trend of diabetes mortality attributed to vascular complications, 2000–2016. Cardiovasc. Diabetol. 19, 182. https://doi.org/10.1186/s12933-020-01159-5 (2020).

Ali, M. K., Pearson-Stuttard, J., Selvin, E. & Gregg, E. W. Interpreting global trends in type 2 diabetes complications and mortality. Diabetologia 65, 3–13. https://doi.org/10.1007/s00125-021-05585-2 (2022).

Harding, J. L., Pavkov, M. E., Magliano, D. J., Shaw, J. E. & Gregg, E. W. Global trends in diabetes complications: A review of current evidence. Diabetologia 62, 3–16. https://doi.org/10.1007/s00125-018-4711-2 (2019).

Ravaut, M. et al. Predicting adverse outcomes due to diabetes complications with machine learning using administrative health data. npj Digit. Med. 4, 1–12. https://doi.org/10.1038/s41746-021-00394-8 (2021).

Fan, Y. et al. Machine learning approaches to predict risks of diabetic complications and poor glycemic control in nonadherent type 2 diabetes. Front. Pharmacol. https://doi.org/10.3389/fphar.2021.665951 (2021).

Mora, T., Roche, D. & Rodríguez-Sánchez, B. Predicting the onset of diabetes-related complications after a diabetes diagnosis with machine learning algorithms. Diabetes Res. Clin. Pract. 204, 110910. https://doi.org/10.1016/j.diabres.2023.110910 (2023).

Kanbour, S. et al. Machine learning models for prediction of diabetic microvascular complications. J. Diabetes Sci. Technol. 18, 273–286. https://doi.org/10.1177/19322968231223726 (2024).

Nicolucci, A. et al. Prediction of complications of type 2 Diabetes: A Machine learning approach. Diabetes Res. Clin. Pract. 190, 110013. https://doi.org/10.1016/j.diabres.2022.110013 (2022).

Schiborn, C. & Schulze, M. B. Precision prognostics for the development of complications in diabetes. Diabetologia 65, 1867–1882. https://doi.org/10.1007/s00125-022-05731-4 (2022).

Tan, K. et al. Evaluation of machine learning methods developed for prediction of diabetes complications: A systematic review. J. Diabetes Sci. Technol. https://doi.org/10.1177/19322968211056917 (2023).

Vimont, A., Béliard, S., Valéro, R., Leleu, H. & Durand-Zaleski, I. Prognostic models for short-term annual risk of severe complications and mortality in patients living with type 2 diabetes using a national medical claim database. Diabetol. Metab. Syndrome 15, 128. https://doi.org/10.1186/s13098-023-01105-x (2023).

Dworzynski, P. et al. Nationwide prediction of type 2 diabetes comorbidities. Sci. Rep. 10, 1776. https://doi.org/10.1038/s41598-020-58601-7 (2020).

Straw, I. & Wu, H. Investigating for bias in healthcare algorithms: A sex-stratified analysis of supervised machine learning models in liver disease prediction. BMJ Health Care Inform. 29, e100457. https://doi.org/10.1136/bmjhci-2021-100457 (2022).

Li, F. et al. Evaluating and mitigating bias in machine learning models for cardiovascular disease prediction. J. Biomed. Inform. 138, 104294. https://doi.org/10.1016/j.jbi.2023.104294 (2023).

Ciarambino, T. et al. Influence of gender in diabetes mellitus and its complication. Int. J. Mol. Sci. 23, 8850. https://doi.org/10.3390/ijms23168850 (2022).

Seghieri, G. et al. The effect of sex and gender on diabetic complications. Curr. Diabetes Rev. 13, 148–160. https://doi.org/10.2174/1573399812666160517115756 (2017).

Charlson, M., Pompei, P., Ales, K. & MacKenzie, C. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J. Chronic Dis. https://doi.org/10.1016/0021-9681(87)90171-8 (1987).

Huntley, A., Johnson, R., Purdy, S. & Valderas, J. Measures of multimorbidity and morbidity burden for use in primary care and community settings: A systematic review and guide. Ann. Fam. Med. https://doi.org/10.1370/afm.1363 (2012).

Elixhauser, A., Steiner, C., Harris, D. & Coffey, R. Comorbidity measures for use with administrative data. Med. Care https://doi.org/10.1097/00005650-199801000-00004 (1998).

Sundhedsdatastyrelsen. Udvalgte kroniske sygdomme og svære psykiske lidelser (2022).

Sundhedsdatastyrelsen. Algoritmer for udvalgte kroniske sygdomme og svære psykiske lidelser. Tech. Rep., Sundhedsdatastyrelsen (2023). https://www.esundhed.dk/-/media/Files/Dokumentation/Register-for-Udvalgte-Kroniske-Sygdomme-og-Svaere-Psykiske-Lidelser/29_Algoritmer_for_RUKS.ashx?la=da&hash=B728D2AB7C4926371C9D68FFC0762537.

Yi, S. E. et al. Predicting hospitalisations related to ambulatory care sensitive conditions with machine learning for population health planning: derivation and validation cohort study. BMJ Open https://doi.org/10.1136/bmjopen-2021-051403 (2022).

Diabetesforeningen. Langtidsblodsukker (HbA1c) og type 2-diabetes. https://diabetes.dk/diabetes-2/fakta-om-type-2/blodsukker-og-maling/langtidsblodsukker-hba1c.

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, vol. 13-17-August-2016, 785–794, https://doi.org/10.1145/2939672.2939785 (2016).

Solheim Bojer, C. & Meldgaard, J. P. Learnings from Kaggle’s Forecasting Competitions (2020). https://arxiv.org/ftp/arxiv/papers/2009/2009.07701.pdf.

Perez-Lebel, A., Varoquaux, G., Le Morvan, M., Josse, J. & Poline, J. B. Benchmarking missing-values approaches for predictive models on health databases. GigaScience https://doi.org/10.1093/gigascience/giac013 (2022).

DMLC. XGBoost Parameters (2022). https://xgboost.readthedocs.io/en/stable/parameter.html.

Bergstra, J., Komer, B., Eliasmith, C., Yamins, D. & Cox, D. D. Hyperopt: A python library for model selection and hyperparameter optimization. Computat. Sci. Discov. 8, 014008. https://doi.org/10.1088/1749-4699/8/1/014008 (2015).

Wiśniewski, J. & Biecek, P. fairmodels: A Flexible Tool For Bias Detection, Visualization, And Mitigation. ArXiv Preprint https://doi.org/10.48550/arXiv.2104.00507 (2022).

Chouldechova, A. & Roth, A. The Frontiers of Fairness in Machine Learning. ArXiv Preprint https://doi.org/10.48550/arXiv.1810.08810 (2018).

Pessach, D. & Shmueli, E. Algorithmic Fairness. ArXiv Preprint http://arxiv.org/abs/2001.09784 (2020).

Author information

Authors and Affiliations

Contributions

Designing the study: A.A, E.J & K.R.O.; Development of the models: A.A. under supervision by R.R., K.R.O & E.J.; Manuscript written by A.A., E.J. & K.R.O with inputs from R.R. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics statement

This study is based on micro-data from administrative registers and was conducted on a secure server at Statistics Denmark. Register data is not subject to the requirement of consent and the study complies with GDPR and Danish data security regulations. The Regional Scientific Ethical Committees for Southern Denmark assessed the study and concluded that no further ethical approval was needed (cf. section 14, subsection 1, Act on Research Ethics Review of Health Research Projects – file number: 20192000-99). Analysis of register data was conducted in strict accordance with the guidelines of Statistics Denmark and the Danish Data Protection Agency. Data access through Statistics Denmark is granted only under stringent conditions, ensuring full compliance with information security and data confidentiality standards https://www.dst.dk/en/OmDS/strategi-og-kvalitet/datasikkerhed-i-danmarks-statistik.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Aagaard, A., Röttger, R., Johnson, E.K. et al. Comparing the predictive performance of diabetes complications using administrative health data and clinical data. Sci Rep 15, 33035 (2025). https://doi.org/10.1038/s41598-025-18079-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-18079-7