Abstract

Contouring organs-at-risk (OARs) is a critical yet time-consuming step in head and neck (HaN) radiotherapy planning. Auto-segmentation methods have been widely studied, and commercial solutions are increasingly entering clinical use. However, their adoption warrants a comprehensive, multi-perspective evaluation. The purpose of this study is to compare three commercial artificial intelligence (AI) software solutions (Limbus, MIM and MVision) for HaN OAR auto-segmentation on a cohort of 10 computed tomography images with reference contours obtained from the public HaN-Seg dataset, from both observational (descriptive and empirical) and analytical (geometric, dosimetric and psychometric) perspectives. The observational evaluation included vendor questionnaires on technical specifications and radiographer feedback on usability. The analytical evaluation covered geometric (Dice similarity coefficient, DSC, and 95th percentile Hausdorff distance, HD95), dosimetric (dose constraint compliance, OAR priority-based analysis), and psychometric (5-point Likert scale) assessments. All software solutions covered a broad range of OARs. Overall geometric performance differences were relatively small (Limbus: 69.7% DSC, 5.0 mm HD95; MIM: 69.2% DSC, 5.6 mm HD95; MVision: 66.7% DSC, 5.3 mm HD95); however, statistically significant differences were observed for smaller structures such as the cochleae, optic chiasm, and pituitary and thyroid glands. Differences in dosimetric compliance were overall minor, with the lowest compliance observed for the oral cavity and submandibular glands. In terms of qualitative assessment, radiographers gave the highest average Likert rating to Limbus (3.9), followed by MVision (3.7) and MIM (3.5). With few exceptions, the software solutions produced good-quality AI-generated contours (Likert ratings ≥ 3), yet some editing should still be performed to reach clinical acceptability. 
Notable discrepancies were seen for the optic chiasm and in cases affected by mouth bites or dental artifacts. Importantly, no clear relationship emerged between geometric, dosimetric, and psychometric metrics, underscoring the need for a multi-perspective evaluation without shortcuts.

Introduction

Radiotherapy (RT) requires precise planning to deliver therapeutic doses of ionizing radiation to the tumor while protecting the surrounding healthy tissues, known as organs-at-risk (OARs). Accurate delineation of the tumor and OARs from the acquired planning images is critical to ensure local tumor control and prevent damage to normal tissue. The delineation process is typically done manually and relies heavily on the expertise of radiation oncologists, radiographers, and dosimetrists. However, numerous studies have shown that manual delineations are subjective, prone to errors, and very time-consuming1. The automation of the contouring process has long been a major research and application focus in RT, which has, in the past decade, witnessed the transition from atlas-based to artificial intelligence (AI) methods that attempt to provide accurate auto-segmentations of tumors and OARs2,3. Moreover, with the introduction of adaptive RT, auto-segmentation is becoming paramount as it facilitates rapid adjustments to treatment plans in response to daily anatomical changes4,5,6.

To address these challenges, several AI algorithms have been developed that make use of deep learning models, which are trained on annotated datasets to learn the patterns and features associated with different anatomical tissues and organs, and then applied to analyze medical images and perform auto-segmentation of the same structures on new images7. These algorithms typically employ convolutional neural network (CNN) architectures, such as U-Net8 and its variants, with nnU-Net9 standing out as an open-source framework that provides a fully automated pipeline as well as self-configuration to the characteristics of the provided dataset. Recently, novel frameworks such as TotalSegmentator10 or Segment-Anything-Model11,12 have started to gain acceptance, especially because they are pre-trained on large standardized datasets. As a result, several commercial AI solutions have emerged to facilitate the contouring process13, which vary considerably in terms of the number of structures and types of images that can be contoured, the methods used to seamlessly integrate the software within the RT workflow, and overall software usability. With the availability of commercial solutions, studies focusing on their comparison and clinical acceptability have emerged14, reporting that the performance of these software solutions can differ in accuracy and consistency across diverse patient populations.

From this perspective, the head and neck (HaN) cancer is particularly challenging to treat with RT since this region is surrounded by a large number of lymph nodes and OARs15,16,17,18, which is also reflected in the number of studies comparing the commercial software solutions for HaN cancer site19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40 (Table 1). While these comparison studies focus on multiple software solutions, few of them perform their complete assessment (Fig. 1) from an observational and analytical perspective. While observational evaluation can be divided into descriptive evaluation, which encompasses various surveys and questionnaires, and empirical evaluation, which is more useful as it may consist of user-feedback on the AI-generated contours or even their manual corrections, analytical evaluation is the primary focus of such studies because it results in measurable properties. In fact, all existing studies (Table 1) performed geometric evaluation, which refers to the quantitative assessment of the spatial agreement between the AI-generated and reference contours using the geometric metrics, such as the Dice similarity coefficient (DSC) or Hausdorff distance (HD) with its 95th percentile (HD95). On the other hand, relatively few of them complement the geometric evaluation with dosimetric evaluation, which assesses how quantitative differences in contouring affect the radiation dose distribution received by OARs or target volumes using the dosimetric metrics, such as the average dose (Dmean), maximal dose (Dmax) or dose-volume histogram (DVH), or with psychometric evaluation, which encompasses standardized techniques involving human judgment of contour quality, such as Likert ratings or Turing tests. 
All three evaluations were performed only in the studies of Heilemann et al.24 and Chen et al.27, but their dosimetric analysis is limited to reporting deviations only in terms of Dmean, Dmax and/or DVH, an overly generalized approach that does not adequately reflect clinical decision-making, which commonly relies on specific dosimetric constraints and OAR prioritization41,42. Another relevant aspect is the time-based evaluation that measures the time differences between manual contouring and AI auto-segmentations or even manual corrections of AI-generated contours; however, a substantial body of evidence already demonstrates that AI auto-segmentation algorithms can considerably accelerate the auto-segmentation process1. Last but not least, it is important to note that the large amount of data required to train these algorithms raises ethical concerns regarding data privacy, patient consent and dataset representativeness39.

The rapid evolution of AI auto-segmentation algorithms therefore necessitates a thorough evaluation to ensure that these solutions can meet the legal and ethical requirements as well as the demands of clinical practice. As none of the existing studies (Table 1) encompasses the whole spectrum of observational and analytical evaluations (Fig. 1), this study aims to fill this gap by systematically performing a multi-perspective evaluation of three commercial solutions for auto-segmentation of OARs from a publicly available dataset of HaN images to provide clinicians, researchers, hospital administrators and policymakers with evidence-based insights into their strengths and limitations, and hence facilitate the safe integration of these technologies into clinical practice.

Methods

Experimental setup

Data The recently published publicly available HaN-Seg dataset43, which consists of paired computed tomography (CT) and magnetic resonance (MR) images of the same patients with corresponding manually obtained three-dimensional contours for up to 30 OARs in the HaN region, was used in this study. Manual delineations were performed by an RT technologist or radiation oncologist, and then curated by a medical imaging researcher following established delineation guidelines44, aided by co-registered MR images to improve the delineation of soft tissues16, with the overall inter-observer variability in contouring OARs from CT images estimated at 71.2 ± 18.2% and 4.9 ± 4.1 mm in terms of DSC and HD95, respectively16. From the HaN-Seg dataset, which was also used for the recently organized HaN-Seg segmentation challenge45, 10 representative cases were selected to include both straightforward cases – hereinafter referred to as normal cases (n = 3: case_02, case_08, case_12), and common clinical challenges such as mouth bites (n = 3: case_15, case_23, case_34) and artifacts (n = 4: case_05, case_22, case_26, case_27). The extracted CT images, acquired using either the Philips Brilliance Big Bore (Philips Healthcare, Best, the Netherlands) or Siemens Somatom Definition AS (Siemens Healthineers, Erlangen, Germany) scanner, were retained at their original resolution (in-plane resolution: 0.56–0.98 mm; slice thickness: 2.0–3.0 mm) and converted from the originally released NIfTI to DICOM format before being uploaded to the AI software, with no dedicated metal artifact reduction techniques46 applied before further processing. For the purpose of this study, the dataset was complemented with corresponding clinical RT dose distribution maps in the DICOM RTDOSE format, generated using the Varian Eclipse treatment planning system (Varian, Palo Alto, CA, USA). 
Out of 10 cases, one was treated with intensity modulated RT (IMRT; Monte Carlo algorithm, 7 fields and 35 × 2.0/1.6 fractionation), while the remaining nine received volumetric modulated arc therapy (VMAT; analytical anisotropic algorithm, six with 2 × 360° arcs and 35 × 2.0/1.6 fractionation, one with 2 × 210° arcs and 35 × 2.0/1.6 fractionation, one with 220° + 80° arcs and 30 × 2.0 fractionation, one with 2 × 220° arcs and 33 × 2.0/1.7 fractionation).

Software Six commercial software companies that include AI auto-segmentation of OARs for the HaN region in their portfolio were invited, and three accepted to participate in this study: (i) Limbus AI (Regina, SK, Canada) – part of Radformation Inc. (New York, NY, USA) – with software Limbus Contour v1.7.0, (ii) MIM Software Inc. (Cleveland, OH, USA) – part of GE HealthCare Inc. (Chicago, IL, USA) – with software Contour ProtegeAI v7.3.5 and (iii) MVision AI (Helsinki, Finland) with software MVision Contour v1.2. The corresponding software are hereinafter referred to as Limbus, MIM and MVision, respectively, and were used to segment 13 OARs in the devised dataset: brainstem, cochleae (left, L/right, R), lacrimal glands (L/R), lips, mandible, optic chiasm, optic nerves (L/R), oral cavity, parotid glands (L/R), pituitary gland, spinal cord, submandibular glands (L/R) and thyroid. All AI-generated contours were produced using the cloud-based software and exported locally as DICOM RTSTRUCT files, which were then converted back to NIfTI format for further evaluation.

Compliance with ethical standards All procedures in this study were performed in accordance with the institutional guidelines and ethical standards of the Declaration of Helsinki. The study was approved by the ethical committee of the Institute of Oncology Ljubljana, Slovenia (approval no. ERID-EK/139), which also waived the requirement for informed consent from the study subjects due to the retrospective study design.

Code availability The code for performing numerical evaluations and statistical analyses was developed in SciPy, an open-source Python library for scientific and technical computing (https://scipy.org/), and is available from the corresponding author upon reasonable request. The geometric performance metrics were computed using the recently released open-source software MeshMetrics47,48, version v0.1.0 (https://doi.org/10.5281/zenodo.16896595).

Observational evaluation

Descriptive evaluation The participating companies were asked to complete a technical specification questionnaire about their software, consisting of six sections with a total of 28 questions (Q) that were based on literature findings49 and consultations with radiographers: (1) general information about the company (2Q), (2) methodological design and algorithm validation (10Q), (3) compliance of the AI software with legal and hospital standards (5Q), (4) software interoperability and data management (3Q), (5) supported anatomies, OARs, and tumor sites (6Q), and (6) additional features for contouring support (2Q).

Empirical evaluation Two radiographers were invited to provide qualitative comments on the AI-generated contours for each case.

Analytical evaluation

Geometric evaluation The MeshMetrics software47,48 was used to compute the volumetric DSC and HD95 between the AI-generated and curated reference contours, defined as DSC = 2|X∩Y|/(|X|+|Y|) and HD95 = max{dk95%(X,Y), dk95%(Y,X)}, where X and Y are the two volumetric contours being compared, |X|, |Y| and |X∩Y| represent the number of voxels in X, Y, and their volumetric overlap, respectively, while dk95%(X,Y) and dk95%(Y,X) denote the 95th percentile of the Euclidean distances from voxels in X to the surface of Y, and vice versa. Although other metrics for evaluating the segmentation quality exist, such as those derived from radiomic features50, DSC and HD95 remain standard geometric benchmarks in RT workflows. DSC is a relative metric that quantifies volumetric overlap, with 100% indicating perfect and 0% no overlap, which is sensitive to the count of true positive voxels relative to both contours. While it is robust to small contour shifts in large structures, it also heavily penalizes the same absolute error in the case of small structures. On the other hand, HD95 is an absolute metric that is typically expressed in millimeters, and is only lower-bounded, with 0 mm indicating a very close alignment between the two contours. It is less sensitive to extreme outliers than HD, and serves to identify local contour mismatches even when overall volume overlap is high. However, it is still sensitive to small segmentation errors in the case of small, complex or irregular structures.
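The two definitions above can be illustrated with a minimal voxel-based sketch. It is not the MeshMetrics implementation used in the study and does not reproduce its API; the function names, the six-neighbour surface extraction, the surface-to-surface distances and the nearest-rank percentile are all assumptions made for illustration, and empty contours are not handled in `hd95`.

```python
import math

# Six face neighbours used to decide whether a voxel lies on the contour surface.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def dsc(x, y):
    """Dice similarity coefficient in percent for two sets of voxel indices."""
    x, y = set(x), set(y)
    if not x and not y:
        return 100.0  # convention assumed here: two empty contours agree
    return 100.0 * 2 * len(x & y) / (len(x) + len(y))


def surface(voxels):
    """Voxels with at least one face neighbour outside the contour."""
    voxels = set(voxels)
    return {
        (i, j, k)
        for (i, j, k) in voxels
        if any((i + di, j + dj, k + dk) not in voxels for di, dj, dk in NEIGHBOURS)
    }


def percentile_nearest_rank(values, q):
    """q-th percentile using the nearest-rank rule (one of several conventions)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100.0 * len(ordered)))
    return ordered[rank - 1]


def directed_d95(a, b, spacing):
    """95th percentile of distances (mm) from the surface of a to the surface of b."""
    a_mm = [tuple(c * s for c, s in zip(p, spacing)) for p in surface(a)]
    b_mm = [tuple(c * s for c, s in zip(p, spacing)) for p in surface(b)]
    distances = [min(math.dist(p, q) for q in b_mm) for p in a_mm]
    return percentile_nearest_rank(distances, 95)


def hd95(x, y, spacing=(1.0, 1.0, 1.0)):
    """Symmetric HD95: the larger of the two directed 95th percentile distances."""
    return max(directed_d95(x, y, spacing), directed_d95(y, x, spacing))


def cube(x0):
    """Toy 4 x 4 x 4 contour whose first index starts at x0."""
    return {(i, j, k) for i in range(x0, x0 + 4) for j in range(4) for k in range(4)}


reference, predicted = cube(0), cube(1)  # same cube shifted by one voxel
print(dsc(reference, predicted), hd95(reference, predicted))
```

The brute-force nearest-neighbour search is quadratic in the number of surface voxels, so it only suits small structures or toy data; mesh-based or distance-transform approaches scale better.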

Dosimetric evaluation A forward dosimetric analysis was performed to evaluate the dosimetric impact of the AI auto-segmentations51,52. A single dose distribution, originally created at the Institute of Oncology Ljubljana, Slovenia, during RT planning based on the selected CT image in the HaN-Seg dataset and corresponding clinical segmentations of OARs, was projected onto both AI-generated and curated reference contours. Although a variety of dosimetric metrics exist, including those based on the recently introduced field of dosiomics53, we computed Dmean, Dmax and the amount of volume receiving more than 54 Gy of dose (V54 Gy) that are among the standard dosimetric benchmarks in RT workflows. Compliance with clinical dose constraints and priority-based analysis was conducted based on OAR-specific international guidelines41,42,54,55 that are routinely applied in clinical practice.
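As a rough illustration of the forward analysis described above, the sketch below evaluates one fixed dose distribution under two different contours of the same OAR. The flattened list representation, the function name and the choice to report V54 Gy as a percentage of the contour volume are assumptions made here; the study does not state whether V54 Gy was expressed in relative or absolute terms.

```python
def dose_metrics(dose_gy, mask, threshold_gy=54.0):
    """Dmean, Dmax and the relative volume above a threshold inside one contour.

    dose_gy: flattened dose values in Gy, one per voxel.
    mask:    flattened contour mask of equal length (truthy = inside).
    """
    inside = [d for d, m in zip(dose_gy, mask) if m]
    if not inside:
        raise ValueError("contour is empty")
    above = sum(1 for d in inside if d > threshold_gy)
    return {
        "Dmean": sum(inside) / len(inside),
        "Dmax": max(inside),
        "V54Gy": 100.0 * above / len(inside),  # percent of contour volume (assumed)
    }


# Toy example: the same dose grid projected onto a reference and an AI contour.
dose = [10.0, 30.0, 56.0, 60.0, 5.0]
reference_mask = [1, 1, 1, 1, 0]
ai_mask = [1, 1, 1, 0, 0]  # the AI contour misses the hottest voxel

ref = dose_metrics(dose, reference_mask)
ai = dose_metrics(dose, ai_mask)
delta = {name: ai[name] - ref[name] for name in ref}
print(ref, ai, delta)
```

Because the dose grid is held fixed, any difference in `delta` comes purely from the contour, which is the point of a forward analysis; here the missing voxel lowers Dmax by 4 Gy and would hide a hot spot from a Dmax-based constraint.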

Psychometric evaluation Two radiographers were asked to evaluate the quality of the AI-generated contours using a 5-point Likert scale56: 1 – unusable, 2 – major edits required, 3 – minor edits that are necessary, 4 – minor edits that are not necessary, 5 – clinically acceptable. One case was re-evaluated to assess the intra-rater reliability. For this analysis, only the OARs contoured at our department were considered (i.e. brainstem, cochleae, mandible, optic chiasm, optic nerves, parotid glands, spinal cord). The intra-rater agreement was evaluated using the Kendall rank correlation coefficient (τ)57, which measures the ordinal association between two measured quantities (τ = −1: perfect disagreement, reverse ranking; τ = 0: no correlation, random ranking; τ = +1: perfect agreement, identical ranking).
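To make the agreement measure concrete, the following sketch computes Kendall's τ for two rating sessions by counting concordant and discordant pairs. It assumes the tie-corrected τ-b variant, which is a natural choice for Likert data with many tied ratings and is the default of `scipy.stats.kendalltau`; the study does not state which variant was used, and the ratings below are invented for illustration.

```python
import math


def kendall_tau_b(a, b):
    """Kendall's tau-b between two equally long sequences of ordinal ratings."""
    concordant = discordant = tied_a_only = tied_b_only = 0
    n = len(a)
    for i in range(n):
        for j in range(i + 1, n):
            da, db = a[i] - a[j], b[i] - b[j]
            if da == 0 and db == 0:
                continue  # tied in both sessions: contributes to neither term
            if da == 0:
                tied_a_only += 1
            elif db == 0:
                tied_b_only += 1
            elif da * db > 0:
                concordant += 1
            else:
                discordant += 1
    pairs = concordant + discordant
    denominator = math.sqrt((pairs + tied_a_only) * (pairs + tied_b_only))
    if denominator == 0:
        return float("nan")  # undefined when one session has constant ratings
    return (concordant - discordant) / denominator


# Hypothetical Likert ratings of four contours in two sessions by one rater.
first_session = [3, 4, 4, 5]
second_session = [3, 4, 5, 5]
print(kendall_tau_b(first_session, second_session))
```

The pairwise loop is quadratic in the number of ratings, which is not a concern for the small number of contours rated per case.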

Relationships between metrics We investigated the relationship between average Likert ratings and two geometric metrics, DSC and HD95, as well as the inter-rater agreement for each OAR. Additionally, leveraging the diverse set of available metrics (Fig. 1), we performed a correlation analysis across all AI software solutions using the Pearson (r) and Spearman (ρ) coefficients57, which measure the linear and monotonic relationships between two variables according to their values and ranks, respectively (r, ρ = −1: perfect negative linear/monotonic decreasing relationship; r, ρ = 0: no linear/monotonic relationship; r, ρ = +1: perfect positive linear/monotonic increasing relationship). This analysis included DSC, HD95, Dmax, Dmean, average Likert ratings, and distance to target, aiming to visualize and explore the potential relationships within the analytical metric triangle: geometric, dosimetric, and psychometric.
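The distinction between the two coefficients can be shown with a short standard-library sketch in which Spearman's ρ is computed as Pearson's r on average ranks. The function names and the toy data are assumptions for illustration; in SciPy the equivalent calls would be `scipy.stats.pearsonr` and `scipy.stats.spearmanr`.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient r between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined for a constant variable
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2.0 + 1.0
        for position in range(start, end + 1):
            ranks[order[position]] = mean_rank
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: Pearson's r applied to the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# A monotonic but non-linear toy relationship: rho is 1, r is below 1.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
print(pearson(x, y), spearman(x, y))
```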

Results

Observational evaluation

Descriptive evaluation The findings of the questionnaire are presented in detail in Supplementary Material, Table S1, and can be, at the time of writing, summarized as follows:

1. Market share The market share of the software companies ranged from 100 to 470 hospitals.

2. Methods used to develop the algorithm All software solutions made use of a convolutional neural network based on the U-Net architecture45. The data used to develop the algorithms was obtained from clinical hospitals and open-source databases. A large number of images from different scanners encompassing a wide range of clinical cases was used by all software companies to ensure the generalizability of the AI software. In addition, Limbus also included diagnostic CT and MR linac data to further improve the generalizability of the model. All software companies made use of a wide range of clinical guidelines to develop the AI models according to the structure being delineated. MVision offered the option to delineate some OARs according to the preferred guideline, such as those of the Danish Head and Neck Cancer Group (DAHANCA), European Organisation for Research and Treatment of Cancer (EORTC), Groupe d’Oncologie Radiothérapie Tête et Cou (GORTEC), Head and Neck Cancer Program Study Group (HKNPCSG), National Cancer Institute of Canada Clinical Trials Group (NCIC CTG), National Cancer Research Institute (NCRI), NRG Oncology and Trans-Tasman Radiation Oncology Group (TROG), united under the multi-national consensus guidelines of Brouwer et al.44. All software companies asked medical or radiation oncologists working within the company and at various clinical centers to segment the images. However, MVision also involved medical and dental students for the initial segmentations. All software companies made use of multidisciplinary peer review to assess the quality of the segmentations used for both training and validation. The quality of the algorithms was assessed quantitatively by geometric metrics (e.g. DSC and HD95) and qualitatively by experts. 
All companies collaborated with clinical centers to assess the quality of the algorithms and collected additional data on the impact of introducing AI on the segmentation time, inter-rater agreement, and dosimetry of any deviations in the AI segmentation from the gold standard contours.

3. Certification and data management All software solutions are marked with European conformity (i.e. CE-marked). MVision and MIM offered a cloud or hospital-based storage system, while Limbus offered only a hospital-based storage system. For the cloud-based solution of both MVision and MIM, the data is pseudonymised before being sent to the segmentation software available on the cloud. All solutions offered a user authentication feature. MVision and MIM can audit user trail events, whereas Limbus can log events but does not provide a user trail.

4. Interoperability with RT systems All software solutions offered seamless integration with the main RT equipment vendors. This enabled users to import data from and export data to the RT planning station directly, thus facilitating the editing of structures without the need to learn new features.

5. Type of delineation models offered The software solutions varied in the number of OARs offered: MVision offered the highest number of HaN OARs for CT (n = 32), followed by Limbus (n = 22) and MIM (n = 18). Moreover, Limbus and MVision offered the option to assist the hospital with the development of AI models if required.

6. Additional features All software solutions offered a quality assurance tool to quantitatively assess the quality of the AI auto-segmentation models. In addition, MVision also offered a clinical staff training platform to facilitate the delineation of OARs according to the established clinical guidelines.

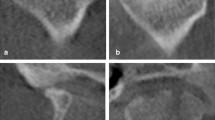

Empirical evaluation According to the feedback provided by the radiographers, the main issues were under- or over-segmentation of the optic nerve and chiasm (cf. last example in the first row in Fig. 2), as well as of the spinal cord and brainstem (cf. third row in Fig. 2). Moreover, they also pointed out how the presence of dental artifacts, mouth bites and tumors affected the auto-segmentation accuracy of the mandible and oral cavity (cf. second row in Fig. 2). Radiographers also noted discrepancies in the auto-segmentation of the parotid glands in the lateral direction (cf. first row in Fig. 2).

Fig. 2 Visualization of the reference and AI-generated contours, showing axial slices from the same patient (top row), three patients with dental artifacts and three with mouth bites (middle row, left to right), and mid-sagittal slices from different patients (bottom row). Red arrows highlight substantial deviations between reference and AI-generated contours.

Analytical evaluation

Geometric evaluation The geometric performance of the AI-generated contours, assessed against curated reference segmentations for 13 OARs available across all three software solutions, is summarized in Table 2 and visualized in Figs. 3 and 4. Overall, Limbus achieved the highest mean DSC (69.7%), followed by MIM (69.2%) and MVision (66.7%). In terms of HD95, Limbus also performed best (lowest average value of 5.0 mm), followed by MVision (5.3 mm) and MIM (5.6 mm). Statistical significance was assessed using the Wilcoxon signed-rank test with Bonferroni correction, with the per-OAR results shown in Fig. 3. For the overall performance, statistically significant differences in DSC were observed only between Limbus and MVision (marginal, p = 0.048), while in HD95 they were observed between MIM and MVision (p = 0.009) as well as between Limbus and MVision (p = 0.032). High DSC values (≥ 80%) were achieved for the brainstem, mandible, parotid glands, submandibular glands, and thyroid gland, while a notably low performance was observed for the optic chiasm (DSC < 50%), especially for MIM (Fig. 4). Statistically significant differences in DSC were observed for the brainstem, cochleae, optic chiasm, oral cavity, parotid glands, pituitary, spinal cord, and thyroid gland. For HD95, the best performance was noted for the cochleae, mandible, pituitary gland, spinal cord, and thyroid gland (HD95 < 4 mm), whereas the oral cavity showed the poorest performance (HD95 > 8 mm). Statistically significant differences in HD95 were found for the brainstem, left cochlea, parotid glands, and thyroid gland.
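The paired testing procedure mentioned above can be sketched as follows. This is a standard-library illustration rather than the study's code, which was developed in SciPy (where `scipy.stats.wilcoxon` would be the natural call): it drops zero differences, ranks the absolute differences with average ranks, and obtains an exact two-sided p-value from the sign-permutation distribution. The family of three pairwise software comparisons used for the Bonferroni factor and the DSC values are assumptions made for illustration.

```python
def wilcoxon_exact(x, y):
    """Exact two-sided p-value of the Wilcoxon signed-rank test for paired samples."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 1.0
    # Doubled average ranks of |d| stay integers even when magnitudes are tied.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks2 = [0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        for position in range(start, end + 1):
            ranks2[order[position]] = start + end + 2
        start = end + 1
    total = sum(ranks2)
    w_plus = sum(r for r, d in zip(ranks2, diffs) if d > 0)
    # counts[s]: number of sign assignments whose positive (doubled) rank sum is s.
    counts = [0] * (total + 1)
    counts[0] = 1
    for r in ranks2:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]
    w = min(w_plus, total - w_plus)
    return min(1.0, 2.0 * sum(counts[: w + 1]) / 2 ** n)


def bonferroni(p_value, n_comparisons):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p_value * n_comparisons)


# Hypothetical DSC values (%) of two software solutions on the same 10 cases.
software_a = [82, 75, 91, 68, 77, 85, 79, 88, 73, 90]
software_b = [81, 73, 88, 64, 72, 79, 72, 80, 64, 80]
p_raw = wilcoxon_exact(software_a, software_b)
p_adjusted = bonferroni(p_raw, n_comparisons=3)
print(p_raw, p_adjusted)
```

With only 10 cases, the smallest attainable two-sided p-value is 2/1024, reached when one software is better in every case, so very small p-values cannot arise from a single OAR-level comparison of this size.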

Fig. 3 Box plots of the Dice similarity coefficient (DSC), 95th percentile Hausdorff distance (HD95), and average Likert ratings for different organs-at-risk, comparing the three AI software solutions. Asterisks indicate statistically significant differences between AI software solutions based on the Wilcoxon paired signed-rank test with Bonferroni correction (∗ → 0.05 > p > 0.01; ∗∗ → 0.01 > p > 0.001).

Because the cranial and caudal limits of the brainstem and spinal cord varied between the curated and AI-generated contours, the brainstem auto-segmentations were truncated at the caudal end of the curated mask to ensure a fair comparison, while the spinal cord auto-segmentations were truncated at both the cranial and caudal ends. A similar approach that focuses on the geometric agreement rather than the effects of different contouring guidelines has also been adopted in previous studies16,38. Moreover, although the AI-generated contours were compared against curated contours defined by a single observer43, the estimated overall inter-observer variability of delineating OARs from the same dataset (i.e. DSC of 71.2%, HD95 of 4.9 mm)16 was similar to the results obtained by the three software solutions, suggesting that they perform at a level comparable to human experts.
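The truncation step can be sketched as a simple clipping of the AI-generated voxels to the slice range spanned by the curated contour. The voxel-set representation, the function name and the assumption that the slice index grows in the cranial direction are illustrative choices made here and are not taken from the study's code.

```python
def truncate_to_reference(ai_voxels, ref_voxels, clip_caudal=True, clip_cranial=True, axis=2):
    """Keep only AI voxels within the cranio-caudal extent of the reference contour.

    Voxels are (i, j, k) index tuples; `axis` selects the slice index, which is
    assumed to increase in the cranial direction.
    """
    slices = [v[axis] for v in ref_voxels]
    lowest, highest = min(slices), max(slices)
    kept = set()
    for v in ai_voxels:
        if clip_caudal and v[axis] < lowest:
            continue  # below the caudal end of the reference
        if clip_cranial and v[axis] > highest:
            continue  # above the cranial end of the reference
        kept.add(v)
    return kept


# Toy one-voxel-wide column: the AI contour spans slices 0-9, the reference 3-6.
ai_contour = {(0, 0, k) for k in range(10)}
reference_contour = {(0, 0, k) for k in range(3, 7)}

# Brainstem-style truncation: caudal end only.
brainstem_like = truncate_to_reference(ai_contour, reference_contour, clip_cranial=False)
# Spinal-cord-style truncation: both cranial and caudal ends.
cord_like = truncate_to_reference(ai_contour, reference_contour)
print(len(brainstem_like), len(cord_like))
```

Clipping removes only the disagreement about where a structure starts and ends along the body axis; in-plane differences within the shared slice range still contribute fully to DSC and HD95.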

Fig. 4 Visual analysis of the relationships between different types of metrics, reported separately for each organ-at-risk (one per row). The first two columns show scatter plots comparing the average Likert rating (horizontal axis) with two geometric metrics (vertical axis): Dice similarity coefficient (DSC) and 95th percentile Hausdorff distance (HD95). The third column illustrates the inter-rater agreement between the two Likert scale raters.

Dosimetric evaluation The results of the compliance with dosimetric constraints are presented in Table 3 and Fig. 4. Overall compliance rates were similar across the three software solutions, with Limbus, MIM, and MVision achieving compliance in 70.5%, 70.0%, and 71.2% of cases, respectively. The highest compliance was achieved for the brainstem, cochleae, lacrimal glands, optic chiasm, optic nerves, and pituitary gland, while the lowest compliance was observed for the oral cavity and submandibular glands. In terms of the priority-based assessment, 5 out of 10 test cases met the highest-priority constraints (P1) with Limbus and MIM, while 6 cases did so with MVision. For the P4 constraints, 4, 2, and 3 cases met the criteria with Limbus, MIM, and MVision, respectively. Notably, none of the software solutions satisfied all dosimetric constraints for all OARs within the same patient.

Psychometric evaluation The Likert scale ratings of the two radiographers demonstrated good intra-rater agreement, with Kendall’s τ coefficients of 0.76 (p = 0.022) for radiographer 1 and 0.84 (p = 0.004) for radiographer 2. In contrast, the inter-rater agreement shown in Fig. 4 was lower, with τ of 0.45 (p = 0.001). Overall, Limbus received the highest mean rating (3.9), followed by MVision (3.7) and MIM (3.5) (Table 2). In terms of clinical usability, 100%, 90%, and 97% of segmentations received an average Likert rating ≥ 3 and 57%, 51%, and 50% a rating ≥ 4 for Limbus, MIM, and MVision, respectively. The difference in ratings was highly significant between Limbus and MIM (p = 0.0001), and less pronounced, though still statistically significant, between Limbus and MVision (p = 0.049) as well as between MVision and MIM (p = 0.024). Across all three software solutions, the optic nerves received the highest mean Likert ratings, while the optic chiasm received the lowest. Statistically significant per-OAR differences between the three software solutions were observed for the mandible and optic chiasm, with the largest discrepancies noted for the latter.

Relationships between metrics The relationships between geometric and psychometric evaluation, stratified by OARs, AI software solutions, and dosimetric compliance, are visually presented in Fig. 4. A more general visualization across all OARs and AI software solutions is shown in Fig. 5, indicating that no correlation exists among the geometric metrics and average Likert ratings across all OARs. These observations were confirmed using the Pearson and Spearman coefficients, which consistently yielded low values (< 0.1) for all comparisons conducted across all OARs and all AI software solutions.

Discussion

A review of the literature revealed that studies comparing commercial AI software solutions often focus on a limited set of evaluations (typically one or a few), and rarely conduct comprehensive observational and analytical assessments (Table 1). However, evaluating these solutions from multiple perspectives is essential, given the complexity of clinical workflows, which demand regulatory compliance, stringent data security, seamless integration with existing hospital systems, intuitive user interfaces, and robust performance that genuinely accelerates the RT planning process. Due to the sequential nature of RT planning, the least optimal step can eventually become a bottleneck, ultimately determining the efficiency of the entire workflow. Comprehensive multi-perspective evaluations are therefore crucial, and to this end, we systematically analyzed three AI software solutions from the observational (using descriptive and empirical evaluations) and analytical (using geometric, dosimetric, and psychometric evaluations) perspective (Fig. 1).

Observational evaluation

Findings from the questionnaire did not reveal any major differences between the three AI software solutions in terms of certification, data interoperability, or underlying methodologies. Limbus is the only software solution that does not offer cloud-based storage; however, this is not a critical limitation, as strict regulations often require hospital-based storage. Differences were observed in the level of customization available for contouring standards – both Limbus and MVision support the development of proprietary hospital models – while greater variability was noted in the number of supported OARs, with MVision covering the most (32) and MIM the fewest (18) OARs for the HaN region. All software companies are actively working to expand their functionality, including support for additional OARs, lymph node segmentation, and delineation on cone beam CT (CBCT) and MR images. However, none currently supports multimodal segmentation that combines CT and MR images, despite their common use in HaN RT planning43,58.

Fig. 5 Scatter plots showing the relationship between the distance from the organ-at-risk to the target tissue and various metrics, including Dice similarity coefficient (DSC), 95th percentile Hausdorff distance (HD95), average Likert rating, average dose (Dmean) and maximal dose (Dmax). (Note: Jitter was added to average Likert ratings to enhance readability).

In line with the European Medical Device Regulation (MDR)59, post-market surveillance is required for all clinically used software. All software companies provide quantitative tools for comparing AI-generated with reference contours; however, none currently offers annotation functionalities that would allow users to flag discrepancies for continuous model refinement. This limitation reflects a broader challenge in data governance: strict regulations and institutional policies often constrain data sharing between clinical centers and AI developers, impeding the iterative improvement of AI algorithms. Addressing this sensitive issue requires the development of secure, ethical, and consent-driven data-sharing frameworks. Such frameworks would enable active refinement of AI models, particularly benefiting patients at deployment sites, but would also require collaboration between regulatory, medical, and technical stakeholders.

Analytical evaluation

The geometric evaluation highlights the well-known volume dependency of the DSC metric, where smaller organs tend to have lower DSC scores23,43,60,61,62,63,64. This underscores the complementary value of boundary-based metrics such as HD95 in assessing the geometric performance. For example, although cochleae auto-segmentations produced by Limbus achieved a DSC of just over 50%, the corresponding HD95 was around 2 mm, and Likert ratings indicated that only minor or no edits were needed (average Likert rating of 3.6). A similar pattern was observed for the optic nerves, which scored a moderate DSC of around 65% and an HD95 of approximately 5.0 mm. Despite these relatively poor geometric scores, the optic nerves achieved the highest Likert ratings, which clearly demonstrates that geometric metrics alone offer only a limited insight into the clinical usability of OAR contours. A strong overall geometric performance was achieved for the mandible (DSC > 90%, HD95 < 2 mm); however, this did not translate into an equally strong dosimetric compliance (only 50% for both MIM and MVision) or qualitative ratings (relatively moderate Likert rating of 3.6). This mismatch is most likely due to the impact of dental artifacts and mouth bites, which, as noted by raters, can confuse the AI models (cf. second row, fifth column in Fig. 2). When comparing the geometric performance among AI software solutions, the most pronounced differences were found in smaller OARs such as the cochleae, lacrimal glands, optic chiasm, and pituitary gland. Interestingly, these geometric differences were less reflected in the dosimetric results and qualitative scores. With the exception of poor ratings for the optic chiasm and excellent ones for the optic nerves, the Likert ratings for the remaining OARs generally ranged between 3 and 4, suggesting only minor revisions were necessary.

Nonetheless, the Likert ratings of the two radiographers presented in the third column in Fig. 4 also highlight the subjectivity of qualitative evaluation. Low inter-rater agreement was particularly notable at the higher end of the scale (ratings ≥ 3), where the first rater appeared more inclined to give scores of 4 or 5 compared to the second one. At the lower end of the scale (ratings ≤ 2), both raters showed better alignment (cf. optic chiasm in Fig. 4). Notably, since Likert ratings were overall skewed toward higher points, this observation may not be statistically robust.
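One common way to quantify agreement between two raters on an ordinal scale is a linearly weighted Cohen's kappa. The sketch below is an assumption for illustration only and is not necessarily the statistic used in this study; the function name weighted_kappa and the example ratings are hypothetical.

```python
import numpy as np

def weighted_kappa(ratings_a, ratings_b, n_levels=5):
    """Linearly weighted Cohen's kappa for two raters on a 1..n_levels scale."""
    a = np.asarray(ratings_a, dtype=int) - 1
    b = np.asarray(ratings_b, dtype=int) - 1
    observed = np.zeros((n_levels, n_levels), dtype=float)
    for i, j in zip(a, b):
        observed[i, j] += 1.0
    observed /= observed.sum()
    # Agreement expected by chance from the two marginal distributions.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0))
    rows, cols = np.indices((n_levels, n_levels))
    weights = np.abs(rows - cols) / (n_levels - 1)  # linear disagreement weights
    chance = (weights * expected).sum()
    if chance == 0.0:
        return float("nan")  # undefined when both raters use one identical level
    return float(1.0 - (weights * observed).sum() / chance)

# Hypothetical ratings, not data from this study: rater A scores higher than
# rater B at the upper end of the scale, while both agree at the lower end.
rater_a = [4, 5, 4, 5, 3, 2, 1, 4]
rater_b = [3, 3, 4, 3, 3, 2, 1, 3]
print(f"weighted kappa = {weighted_kappa(rater_a, rater_b):.2f}")
```

With ratings concentrated at the upper end of the scale, chance agreement is high and kappa becomes sensitive to a few discordant pairs, which is consistent with the caution expressed above about statistical robustness.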

In terms of dosimetric compliance, small OARs generally performed very well. This can be explained by the fact that small boundary deviations, while impactful in geometric terms, rarely translate into significant dosimetric changes. However, this does not apply universally, as the proximity to the target volume and the resulting steep dose gradients can also result in large dosimetric deviations. Conversely, the oral cavity and submandibular glands performed poorly from this perspective. The obtained results are consistent with the observations of Koo et al.51 and are most likely attributable to two factors: the presence of mouth bites and thus inaccurate segmentations (Fig. 2), and proximity to the tumor (cf. first row in Fig. 5), which results in steep dose gradients where even small geometric errors can lead to large dosimetric deviations, especially given that these OARs are not small. The priority-based analysis further suggests that AI software solutions are not yet ready for fully autonomous use without expert review. In most cases where P1 constraints were not met, the cause was related to spinal cord auto-segmentations. We hypothesize this was due to over-contouring of this structure, as this issue was also noted by the two radiographers. An alternative explanation could be the lack of clearly defined anatomical boundaries between the spinal cord and brainstem, or differences in contouring practice regarding the cranial extent of the spinal cord. However, this effect was most likely mitigated by the applied cropping of the AI-generated contours based on the reference cranial and caudal limits, making over-contouring the more likely primary issue (under-contouring is rather unlikely, as P1 constraints are based on Dmax and volume thresholds, and not on Dmean).
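The argument that over-contouring, rather than under-contouring, explains unmet Dmax-based constraints can be sketched on a synthetic example. Everything below is assumed for illustration: the linear dose gradient, the box-shaped contours, the helper names and the 28 Gy limit are hypothetical and are not the dose distributions or constraints used in this study.

```python
import numpy as np

def dmax(dose, mask):
    """Maximum dose (Gy) inside a boolean mask."""
    return float(dose[mask].max())

def dmean(dose, mask):
    """Mean dose (Gy) inside a boolean mask."""
    return float(dose[mask].mean())

def box(col_start, col_stop, shape=(50, 50)):
    """Rectangular stand-in for a contour, spanning the given columns."""
    mask = np.zeros(shape, dtype=bool)
    mask[20:30, col_start:col_stop] = True
    return mask

# Hypothetical 2D dose slice: a linear gradient from 0 to 70 Gy along the columns.
dose = np.tile(np.linspace(0.0, 70.0, 50), (50, 1))

reference = box(10, 20)  # stand-in for the reference contour
over = box(8, 22)        # over-contoured: contains the reference
under = box(12, 18)      # under-contoured: contained in the reference

limit_gy = 28.0  # hypothetical Dmax limit, chosen only for this illustration
for name, mask in (("reference", reference), ("over", over), ("under", under)):
    print(f"{name:9s} Dmax={dmax(dose, mask):5.2f} Gy  "
          f"Dmean={dmean(dose, mask):5.2f} Gy  "
          f"meets limit: {dmax(dose, mask) <= limit_gy}")
```

A contour that contains the reference can only keep or increase Dmax, whereas a contour contained in the reference can only keep or decrease it. In this symmetric toy case Dmean is the same for the reference and the over-contoured box, which shows that the two dose statistics can respond very differently to the same contouring error.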

To study the relationships among the triangle of analytical metrics (Fig. 1), namely geometric, dosimetric, and psychometric, we performed an analysis across all OARs and AI software solutions (Fig. 5), and found no clear correlations or monotonic trends. While this could be due to the limited number of cases or low inter-rater agreement, our results suggest that the search for a universal proxy metric to replace expert evaluation or dosimetric assessment may be futile65. Notably, previous studies have already shown weak or no correlation between geometric and dosimetric metrics52,61,66,67,68,69, and in this study we additionally show that linking either to Likert ratings is similarly non-trivial. The contrast between the optic chiasm and optic nerves illustrates this well. That said, if any relationships exist, they are most likely OAR-specific. For example, Fig. 4 suggests a stronger metric coherence when analyses are performed per-OAR or across groups of similar structures (e.g. by volume or proximity to the target). This hypothesis, however, requires a more thorough validation on a larger cohort. Moreover, while we evaluated the geometric performance with DSC and HD95, other metrics such as those derived from radiomic features50 may provide additional information about the segmentation quality and serve as a valuable supplement, especially for identifying regions of poor or incorrect contouring. Similarly, the dosimetric performance may be evaluated with the emerging field of dosiomics53, which focuses on extracting radiomic features from dose distribution maps and, in contrast to standard dosimetric metrics, preserves the spatial information about the dose. Such analyses extend beyond the standard benchmarks of RT workflows, but may be considered in future work.
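A monotonic trend between two metrics is commonly tested with the Spearman rank correlation. The sketch below is a plain NumPy version given for illustration; it is an assumption and not a description of the analysis code behind Fig. 5, and the paired values are hypothetical.

```python
import numpy as np

def average_ranks(values):
    """Ranks starting at 1, with tied values receiving their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

# Hypothetical per-OAR values, not results from this study.
dsc_percent = [52.0, 65.0, 91.0, 78.0, 40.0, 84.0]
likert_mean = [3.6, 4.4, 3.6, 3.9, 2.1, 3.2]
print(f"Spearman rho (DSC vs. Likert) = {spearman(dsc_percent, likert_mean):.2f}")
```

A coefficient near zero on pooled data does not rule out a relationship within a single OAR, so the same function could be applied per OAR or per group of similar structures once more cases are available.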

Comparison to existing studies

For the studies presented in Table 1, the comparison of the geometric, dosimetric and psychometric performance across studies is reported in Supplementary Material, in Tables S2, S3 and S4, respectively. The comparison of the geometric performance in terms of DSC and HD95 (Table S2) indicates that, in general, our results are similar to those reported by existing studies, as the difference is often within ± 5%pt and ± 5 mm, respectively. Apart from a few exceptions, such as the results of D’Aviero et al.19, who reported extremely good performance for practically all OARs, the results of Hoque et al.25, who reported relatively poor performance for cochleae, parotid glands and submandibular glands, or the results of Chen et al.27, who reported poor performance for the spinal cord, most studies reported similar DSC and HD95 for specific OARs. A similar conclusion can be drawn for the comparison of the dosimetric performance (Table S3), where the dose differences are in general of the same order of magnitude, except for the results of Chen et al.27 in the case of lacrimal glands, the mandible and parotid glands, and the results of Sarria et al.35 in the case of lips, where the deviations are larger than those reported by other studies. Finally, the comparison of the psychometric performance (Table S4) indicates that Likert ratings are well aligned across all studies for all observed OARs, except for the results of Hu et al.26, who reported a considerably lower average rating for the mandible. It has to be noted that different studies evaluated the software solutions on different datasets; moreover, commercial software solutions improve with time, with updated versions being continuously released, which introduces bias in such performance comparisons. Nevertheless, as our analyses were performed on a publicly available dataset, i.e. the HaN-Seg dataset43, the obtained results may represent a reference benchmark for eventual future evaluations of segmentation software for OARs in the HaN region.

Limitations and future work

One of the limitations of this study is that the image subset may not fully represent the variability in patient anatomies, pathologies, or tumor locations, which could impact algorithm robustness in broader clinical use. While we included cases with diverse clinical challenges to mitigate this issue (i.e. mouth bites and artifacts), additional experiments on datasets spanning different demographics, CT scanners, and clinical conditions are needed to generalize such findings27,39. Another limitation is the use of forward dosimetric analysis51, which does not capture the full impact of auto-segmentation on RT planning in comparison to performing a full replanning. However, access to RT planning infrastructure was unavailable, and prior work by van Aalst et al.52 supports forward analysis as a valid and scalable alternative. An inherent limitation of this type of study is that the results reflect the performance of AI models at the time of evaluation. As software companies continue to improve algorithms with expanded training data and user feedback, ongoing performance monitoring remains essential. Future work could address some of these limitations, explore AI auto-segmentation of lymph nodes, and extend the evaluation framework to other anatomical sites and imaging modalities (e.g. CBCT and MR).

Conclusions

Observational evaluation confirmed that all three AI software solutions comply with key regulatory requirements, including rigorous annotation, peer review, and validation processes to ensure segmentation quality. The main comparative differences were observed in the number of supported OARs and the flexibility to adapt segmentation styles to local clinical standards. All software companies are actively advancing their models by incorporating more data and expanding support for additional OARs, lymph nodes, and imaging modalities. The analytical evaluation compared AI auto-segmentations to manually generated reference contours using a triad of metrics: geometric, dosimetric, and psychometric. While overall differences among software solutions were modest, statistically significant differences were observed for certain OARs. Psychometric assessment with Likert ratings indicated that the majority of auto-segmentations were of reasonably good quality. However, manual editing was often indicated for clinical acceptability, particularly for complex structures like the optic chiasm, or in challenging scenarios involving imaging artifacts, the proximity of tumors to OARs, and the presence of mouth bites. Importantly, no clear correlation was observed among geometric, dosimetric, and psychometric metrics, underscoring the limited redundancy among them and highlighting the value of multi-perspective evaluation frameworks.

Data availability

The HaN-Seg dataset43 is publicly available online (https://doi.org/10.5281/zenodo.7442913). The cases used in this study are available from the corresponding author upon reasonable request.

Change history

04 November 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-26873-6

Abbreviations

- AI: Artificial intelligence
- CT: Computed tomography
- CNN: Convolutional neural network
- DSC: Dice similarity coefficient
- HD: Hausdorff distance
- HD95: Hausdorff distance, 95th percentile
- HaN: Head and neck
- IMRT: Intensity modulated radiation therapy
- MR: Magnetic resonance
- OAR: Organ-at-risk
- RT: Radiotherapy
- VMAT: Volumetric modulated arc therapy

References

Vaassen, F. et al. Evaluation of measures for assessing timesaving of automatic organ-at-risk segmentation in radiotherapy. Phys. Imaging Radiat. Oncol. 13, 1–6. https://doi.org/10.1016/j.phro.2019.12.001 (2020).

Vrtovec, T., Močnik, D., Strojan, P., Pernuš, F. & Ibragimov, B. Auto-segmentation of organs at risk for head and neck radiotherapy planning: from atlas-based to deep learning methods. Med. Phys. 47, e929–e950. https://doi.org/10.1002/mp.14320 (2020).

De Biase, A. et al. Clinical adoption of deep learning target auto-segmentation for radiation therapy: challenges, clinical risks, and mitigation strategies. BJR Artif. Intel. 1, ubae015. https://doi.org/10.1093/bjrai/ubae015 (2024).

Delaby, N. et al. Practical and technical key challenges in head and neck adaptive radiotherapy: the GORTEC point of view. Phys. Med. 109, 102568. https://doi.org/10.1016/j.ejmp.2023.102568 (2023).

Yu, X-L. et al. Real-time morphological and dosimetric adaptation in nasopharyngeal carcinoma radiotherapy: insights from autosegmented fractional fan-beam CT. Radiat. Oncol. 20, 68. https://doi.org/10.1186/s13014-025-02643-6 (2025).

Mastella, E. et al. A systematic review of the role of artificial intelligence in automating computed tomography-based adaptive radiotherapy for head and neck cancer. Phys. Imaging Radiat. Oncol. 33, 100731. https://doi.org/10.1016/j.phro.2025.100731 (2025).

Bibault, J-E. & Giraud, P. Deep learning for automated segmentation in radiotherapy: a narrative review. Br. J. Radiol. 97, 13–20. https://doi.org/10.1093/bjr/tqad018 (2024).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In: 18th International Conference on Medical Image Computing and Computer Assisted Intervention – MICCAI 2015, Springer, Lecture Notes in Computer Science, vol. 9351, pp. 234–241, (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Isensee, F., Jaeger, P., Kohl, S., Petersen, J. & Maier-Hein, K. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods. 18, 203–211. https://doi.org/10.1038/s41592-020-01008-z (2021).

Wasserthal, J. et al. TotalSegmentator: robust segmentation of 104 anatomic structures in CT images. Radiol. Artif. Intell. 5, e230024. https://doi.org/10.1148/ryai.230024 (2023).

Shen, Y. et al. FastSAM3D: An efficient segment anything model for 3D volumetric medical images. In 27th International Conference on Medical Image Computing and Computer Assisted Intervention – MICCAI 2024, Springer, Lecture Notes in Computer Science, vol. 15012, pp. 542–552, (2024). https://doi.org/10.1007/978-3-031-72390-2_51

Xu, G. et al. A segment anything model-guided and match-based semi-supervised segmentation framework for medical imaging. Med. Phys. 52, 4513–4527. https://doi.org/10.1002/mp.17785 (2025).

Rong, Y. et al. NRG oncology assessment of artificial intelligence deep learning-based auto-segmentation for radiation therapy: current developments, clinical considerations, and future directions. Int. J. Radiat. Oncol. Biol. Phys. 119, 261–280. https://doi.org/10.1016/j.ijrobp.2023.10.033 (2024).

Can Erdur, A. et al. Deep learning for autosegmentation for radiotherapy treatment planning: state-of-the-art and novel perspectives. Strahlenther Onkol. 201, 236–254. https://doi.org/10.1007/s00066-024-02262-2 (2025).

Guzene, L. et al. Assessing interobserver variability in the delineation of structures in radiation oncology: a systematic review. Int. J. Radiat. Oncol. Biol. Phys. 115, 1047–1060. https://doi.org/10.1016/j.ijrobp.2022.11.021 (2023).

Podobnik, G., Ibragimov, B., Peterlin, P., Strojan, P. & Vrtovec, T. vOARiability: interobserver and intermodality variability analysis in OAR contouring from head and neck CT and MR images. Med. Phys. 51, 2175–2186. https://doi.org/10.1002/mp.16924 (2024).

Nielsen, C. et al. Interobserver variation in organs at risk contouring in head and neck cancer according to the DAHANCA guidelines. Radiother Oncol. 197, 110337. https://doi.org/10.1016/j.radonc.2024.110337 (2024).

Atasoy, B. M. et al. The role of radiotherapy planning images in monitoring malnutrition and predicting prognosis in head and neck cancer patients: a pilot study. Radiat. Oncol. 20, 70. https://doi.org/10.1186/s13014-025-02645-4 (2025).

D’Aviero, A. et al. Clinical validation of a deep-learning segmentation software in head and neck: an early analysis in a developing radiation oncology center. Int. J. Environ. Res. Public. Health. 19, 9057. https://doi.org/10.3390/ijerph19159057 (2022).

Hobbis, D. et al. ASTRO annual 2022 meeting: comparisons of clinical and reference standard contours to AI auto-segmentation: an evaluation of 5 commercial models in head and neck organ at risk delineation. Int. J. Radiat. Oncol. Biol. Phys. 114, e98. https://doi.org/10.1016/j.ijrobp.2022.07.888 (2022).

Radici, L. et al. Implementation of a commercial deep learning-based auto segmentation software in radiotherapy: evaluation of effectiveness and impact on workflow. Life (Basel). 12, 2088. https://doi.org/10.3390/life12122088 (2022).

Anaya, V. M. Artificial intelligence based auto-contouring solutions for use in radiotherapy treatment planning of head and neck cancer. IPEM Transl 6, 100018. https://doi.org/10.1016/j.ipemt.2023.100018 (2023).

Doolan, P. J. et al. A clinical evaluation of the performance of five commercial artificial intelligence contouring systems for radiotherapy. Front. Oncol. 13, 1213068. https://doi.org/10.3389/fonc.2023.1213068 (2023).

Heilemann, G. et al. Clinical implementation and evaluation of auto-segmentation tools for multi-site contouring in radiotherapy. Phys. Imaging Radiat. Oncol. 28, 100515. https://doi.org/10.1016/j.phro.2023.100515 (2023).

Hoque, S. M. H. et al. Clinical use of a commercial artificial intelligence-based software for autocontouring in radiation therapy: geometric performance and dosimetric impact. Cancers (Basel). 15, 5735. https://doi.org/10.3390/cancers15245735 (2023).

Hu, Y. et al. Clinical assessment of a novel machine-learning automated contouring tool for radiotherapy planning. J. Appl. Clin. Med. Phys. 24, e13949. https://doi.org/10.1002/acm2.13949 (2023).

Chen, L. et al. Validation of a deep-learning segmentation model for adult and pediatric head and neck radiotherapy in different patient positions. Phys. Imaging Radiat. Oncol. 29, 100527. https://doi.org/10.1016/j.phro.2023.100527 (2024).

Johnson, C. L. et al. Clinical validation of commercial deep-learning based auto-segmentation models for organs at risk in the head and neck region: a single institution study. Front. Oncol. 14, 1375096. https://doi.org/10.3389/fonc.2024.1375096 (2024).

Kim, Y. W., Biggs, S. & Claridge Mackonis, E. Investigation on performance of multiple AI-based auto-contouring systems in organs at risks (OARs) delineation. Phys. Eng. Sci. Med. 47, 1123–1140. https://doi.org/10.1007/s13246-024-01434-9 (2024).

Lê, A. T. et al. Head and neck automatic multi-organ segmentation on dual-energy computed tomography. Phys. Imaging Radiat. Oncol. 32, 100654. https://doi.org/10.1016/j.phro.2024.100654 (2024).

Luan, S. et al. Accurate and robust auto-segmentation of head and neck organ-at-risks based on a novel CNN fine-tuning workflow. J. Appl. Clin. Med. Phys. 25, e14248. https://doi.org/10.1002/acm2.14248 (2024).

Maes, D. et al. Framework for radiation oncology department-wide evaluation and implementation of commercial artificial intelligence autocontouring. Pract. Radiat. Oncol. 14, e150–e158. https://doi.org/10.1016/j.prro.2023.10.011 (2024).

Mody, P. et al. Large-scale dose evaluation of deep learning organ contours in head-and-neck radiotherapy by leveraging existing plans. Phys. Imaging Radiat. Oncol. 30, 100572. https://doi.org/10.1016/j.phro.2024.100572 (2024).

Moktan, H. et al. ASTRO annual 2024 meeting: evaluating commercial auto-segmentation software generated contours on MRI and their clinical acceptability for cranial stereotactic radiosurgery. Int. J. Radiat. Oncol. Biol. Phys. 120, e167. https://doi.org/10.1016/j.ijrobp.2024.07.376 (2024).

Sarria, G. R. et al. Artificial intelligence–based autosegmentation: advantages in delineation, absorbed dose-distribution, and logistics. Adv. Radiat. Oncol. 9, 101394. https://doi.org/10.1016/j.adro.2023.101394 (2024).

Temple, S. W. P. & Rowbottom, C. G. Gross failure rates and failure modes for a commercial AI-based auto-segmentation algorithm in head and neck cancer patients. J. Appl. Clin. Med. Phys. 25, e14273. https://doi.org/10.1002/acm2.14273 (2024).

Way, S. M. et al. ASTRO annual 2024 meeting: physician assessment and clinical evaluation of the suitability of deep learning based auto segmentation contours for organs at risk delineation in radiation oncology. Int. J. Radiat. Oncol. Biol. Phys. 120, e594. https://doi.org/10.1016/j.ijrobp.2024.07.1309 (2024).

Finnegan, R. N. et al. Geometric and dosimetric evaluation of a commercial AI auto-contouring tool on multiple anatomical sites in CT scans. J. Appl. Clin. Med. Phys. 26, e70067. https://doi.org/10.1002/acm2.70067 (2025).

Rusanov, B. et al. Guidance on selecting and evaluating AI auto-segmentation systems in clinical radiotherapy: insights from a six-vendor analysis. Phys. Eng. Sci. Med. 48, 301–316. https://doi.org/10.1007/s13246-024-01513-x (2025).

Sharma, D. et al. Geometric and dosimetric evaluation of a RayStation deep learning model for auto-segmentation of organs at risk in a real-world head and neck cancer dataset. Clin. Oncol. 41, 103796. https://doi.org/10.1016/j.clon.2025.103796 (2025).

Lee, A. et al. International guideline on dose prioritization and acceptance criteria in radiation therapy planning for nasopharyngeal carcinoma. Int. J. Radiat. Oncol. Biol. Phys. 105, 567–580. https://doi.org/10.1016/j.ijrobp.2019.06.2540 (2019).

Jensen, K. et al. The Danish head and neck cancer group (DAHANCA) 2020 radiotherapy guidelines. Radiother Oncol. 151, 149–151. https://doi.org/10.1016/j.radonc.2020.07.037 (2020).

Podobnik, G. et al. The head and neck organ-at-risk CT and MR segmentation dataset. Med. Phys. 50, 1917–1927. https://doi.org/10.1002/mp.16197 (2023).

Brouwer, C. et al. CT-based delineation of organs at risk in the head and neck region: DAHANCA, EORTC, GORTEC, HKNPCSG, NCIC CTG, NCRI, NRG oncology and TROG consensus guidelines. Radiother Oncol. 117, 83–90. https://doi.org/10.1016/j.radonc.2015.07.041 (2015).

Podobnik, G. et al. HaN-Seg: the head and neck organ-at-risk CT and MR segmentation challenge. Radiother Oncol. 198, 110410. https://doi.org/10.1016/j.radonc.2024.110410 (2024).

Selles, M., van Osch, K. A. C., Maas, M., Boomsma, M. F. & Wellenberg, R. H. H. Advances in metal artifact reduction in CT images: a review of traditional and novel metal artifact reduction techniques. Eur. J. Radiol. 170, 111276. https://doi.org/10.1016/j.ejrad.2023.111276 (2024).

Podobnik, G. & Vrtovec, T. Metrics revolutions: Groundbreaking insights into the implementation of metrics for biomedical image segmentation. arXiv 2410.02630 https://doi.org/10.48550/arXiv.2410.02630 (2024).

Podobnik, G. & Vrtovec, T. HDilemma: are open-source Hausdorff distance implementations equivalent? In: 27th International Conference on Medical Image Computing and Computer Assisted Intervention – MICCAI 2024, Springer, Lecture Notes in Computer Science, vol. 15009, pp. 308–317, (2024). https://doi.org/10.1007/978-3-031-72114-4_30

Hurkmans, C. et al. A joint ESTRO and AAPM guideline for development, clinical validation and reporting of artificial intelligence models in radiation therapy. Radiother Oncol. 197, 110345. https://doi.org/10.1016/j.radonc.2024.110345 (2024).

Maffei, N. et al. Radiomics classifier to quantify automatic segmentation quality of cardiac sub-structures for radiotherapy treatment planning. Phys. Med. 83, 278–286. https://doi.org/10.1016/j.ejmp.2021.05.009 (2021).

Koo, J., Caudell, J., Latifi, K., Moros, E. & Feygelman, V. Essentially unedited deep-learning-based OARs are suitable for rigorous oropharyngeal and laryngeal cancer treatment planning. J. Appl. Clin. Med. Phys. 25, e14202. https://doi.org/10.1002/acm2.14202 (2024).

Van Aalst, J. et al. Clarifying the dosimetric impact of autosegmentation inaccuracies. In: 20th International Conference on the use of Computers in Radiation therapy – ICCR 2024, pp. 53–56, (2024). https://udl.hal.science/hal-04720234v1

Liang, B. et al. Extracting 3D Spatial features from dose distribution to predict incidence of radiation pneumonitis. Front. Oncol. 9, 269. https://doi.org/10.3389/fonc.2019.00269 (2019).

Bisello, S. et al. Dose–volume constraints for organs at risk in radiotherapy (CORSAIR): an all-in-one multicenter–multidisciplinary practical summary. Curr. Oncol. 29, 7021–7050. https://doi.org/10.3390/curroncol29100552 (2022).

Osorio, E. et al. Dysphagia at 1 year is associated with mean dose to the inferior section of the brain stem. Int. J. Radiat. Oncol. Biol. Phys. 117, 903–913. https://doi.org/10.1016/j.ijrobp.2023.06.004 (2023).

Baroudi, H. et al. Automated contouring and planning in radiation therapy: what is ‘clinically acceptable’? Diagnostics 13, 667. https://doi.org/10.3390/diagnostics13040667 (2023).

Sprent, P. & Smeeton, N. C. Applied Nonparametric Statistical Methods, 4th Edition, Chapman and Hall/CRC, (2007).

Podobnik, G., Strojan, P., Peterlin, P., Ibragimov, B. & Vrtovec, T. Multimodal CT and MR segmentation of head and neck organs-at-risk. In: 26th International Conference on Medical Image Computing and Computer Assisted Intervention – MICCAI 2023, Springer, Lecture Notes in Computer Science, vol. 14223, pp. 745–755, (2023). https://doi.org/10.1007/978-3-031-43901-8_71

Regulation, E. U. 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices. https://eur-lex.europa.eu/eli/reg/2017/745/ (2017).

Maier-Hein, L. et al. Metrics reloaded: recommendations for image analysis validation. Nat. Methods. 21, 195–212. https://doi.org/10.1038/s41592-023-02151-z (2024).

Van Dijk, L. et al. Improving automatic delineation for head and neck organs at risk by deep learning contouring. Radiother Oncol. 142, 115–123. https://doi.org/10.1016/j.radonc.2019.09.022 (2020).

Chen, X. et al. A deep learning-based auto-segmentation system for organs-at-risk on whole-body computed tomography images for radiation therapy. Radiother Oncol. 160, 175–184. https://doi.org/10.1016/j.radonc.2021.04.019 (2021).

Brunenberg, E. et al. External validation of deep learning-based contouring of head and neck organs at risk. Phys. Imaging Radiat. Oncol. 15, 8–15. https://doi.org/10.1016/j.phro.2020.06.006 (2020).

Mlynarski, P., Delingette, H., Alghamdi, H., Bondiau, P-Y. & Ayache, N. Anatomically consistent CNN-based segmentation of organs-at-risk in cranial radiotherapy. J. Med. Imaging. 7, 014502–014502. https://doi.org/10.1117/1.JMI.7.1.014502 (2020).

Kuperman, V. Y. & Altundal, Y. New index for quantitative comparison of dose distributions in radiotherapy. Med. Phys. 52, 4804–4814. https://doi.org/10.1002/mp.17702 (2025).

van Rooij, W. et al. Deep learning-based delineation of head and neck organs at risk: geometric and dosimetric evaluation. Int. J. Radiat. Oncol. Biol. Phys. 104, 677–684. https://doi.org/10.1016/j.ijrobp.2019.02.040 (2019).

Poel, R. et al. The predictive value of segmentation metrics on dosimetry in organs at risk of the brain. Med. Image Anal. 73, 102161. https://doi.org/10.1016/j.media.2021.102161 (2021).

Guo, H. et al. The dosimetric impact of deep learning-based auto-segmentation of organs at risk on nasopharyngeal and rectal cancer. Radiat. Oncol. 16, 113. https://doi.org/10.1186/s13014-021-01837-y (2021).

Marquez, B. et al. Analyzing the relationship between dose and geometric agreement metrics for auto-contouring in head and neck normal tissues. Diagnostics 14, 1632. https://doi.org/10.3390/diagnostics14151632 (2024).

Acknowledgements

The authors thank the software companies Limbus AI (part of Radformation Inc.), MIM Software Inc. (part of GE HealthCare Inc.) and MVision AI for their participation.

Funding

This work was funded by the Ministry for Education, Sport, Youth, Research and Innovation in Malta under the Tertiary Education Scholarship Scheme (TESS), and by the Slovenian Research and Innovation Agency (ARIS) under grants P2-0232 and J2-60042.

Author information

Contributions

GP, CB, SM and TV contributed to the study design. SM collected the data, GP and CB performed the experiments and statistical analysis, and CJD, SM and TV interpreted the results. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional Information

The original online version of this Article was revised: The original version of this Article contained an error in Figure 2, where the entire figure was duplicated on the next page.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Podobnik, G., Borg, C., Debono, C.J. et al. Geometric, dosimetric and psychometric evaluation of three commercial AI software solutions for OAR auto-segmentation in head and neck radiotherapy. Sci Rep 15, 33396 (2025). https://doi.org/10.1038/s41598-025-18598-3

DOI: https://doi.org/10.1038/s41598-025-18598-3