Abstract

Earth observations from low Earth orbit satellites provide vital information for decision makers to better manage time-sensitive events such as natural disasters. For the data to be most effective for first responders, low latency is required between data capture and its arrival to decision makers. A major bottleneck is in the bandwidth-limited downlinking of the data from satellites to ground stations. One approach to overcome this challenge is to process at least some of the data on-board and prioritise pertinent data to be downlinked. In this study we propose a Physics Aware Neuromorphic Network (PANN) to detect changes caused by natural disasters from a sequence of multi-spectral satellite images and produce a change map, enabling relevant data to be prioritised for downlinking. The PANN used in this study is motivated by physical neural networks comprised of nano-electronic circuit elements known as “memristors” (nonlinear resistors with memory). The weights in the network are dynamic and update in response to varying input signals according to memristor equations of state and electrical circuit conservation laws. The PANN thus generates physics-constrained dynamical output features which are used to detect changes in a natural disaster detection task by applying a distance-based metric. Importantly, this makes the whole model training-free, allowing it to be implemented with minimal computing resources. The PANN was benchmarked against a state-of-the-art AI model and achieved comparable or better results in each natural disaster category. It thus presents a promising solution to the challenge of resource-constrained on-board processing.

Introduction

Earth Observation (EO) from low Earth orbit satellites provides large volumes of data which are used in a diverse range of applications, including land cover monitoring1, food security2 and gas leak detection3. Machine Learning (ML) techniques have become a critical tool for processing the enormous quantity of EO data4,5, with new ML models being designed for change detection tasks that specifically use EO data6,7. An area in which ML using EO data can have a significant impact is the management of natural disasters8,9,10. For the data to be most effective for first responders, low latency is required between data capture and its arrival to decision makers. One of the largest bottlenecks is the downlinking of data due to the limited bandwidth and coordination of available ground stations11. This problem is expected to grow in the future as more data is collected on board satellites due to advances in sensor technology (e.g. hyper-spectral cameras) and an increasing number of satellites12. One way to overcome this challenge is to process some of the data on-board and prioritise pertinent data to be downlinked, particularly for time-sensitive applications like natural disaster management. On-board processing will also be critical for future spacecraft deployed to remote sites in the solar system, or for monitoring solar weather in near-real-time.

Traditional algorithms have been developed to detect changes in EO images, for example BFAST13, which has been applied to detecting changes in forests14. Algorithms such as BFAST rely on spectral indices as input. These are a relatively simple pixel comparison method to highlight objects of interest such as burn areas15, diseased crops16 and flood mapping17. These indices are effective as they utilise domain knowledge regarding the object of interest. For example, the normalised difference vegetation index is commonly used to incorporate knowledge about the wavelengths that the Chlorophyll pigment absorbs18. More sophisticated indices, which involve more than just a normalised difference between two spectral bands, have been proposed to improve the detection15,19. The advantage of spectral indices is that they provide a simple, low computational cost method to determine objects of interest quickly, with reasonable accuracy. Additionally, they do not require training or large datasets to deploy, unlike ML models.
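
As an illustration of how little computation a spectral index needs, the sketch below computes the normalised difference vegetation index, (NIR - Red) / (NIR + Red), per pixel. The function names and the reflectance values are hypothetical and chosen only for this example; returning 0 where both bands are zero is also an assumption of the sketch.

```python
def normalised_difference(band_a, band_b, eps=1e-12):
    """Per-pixel normalised difference (a - b) / (a + b) of two bands.

    Bands are 2D lists of equal shape. Pixels where a + b is (near) zero
    are set to 0.0, which is a choice made for this sketch.
    """
    return [
        [(a - b) / (a + b) if abs(a + b) > eps else 0.0
         for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(band_a, band_b)
    ]


def ndvi(nir, red):
    """Normalised difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return normalised_difference(nir, red)


# Hypothetical 2 x 2 reflectance values: vegetation reflects strongly in the
# near infrared and absorbs red light, so it yields a high index.
nir = [[0.50, 0.40], [0.30, 0.10]]
red = [[0.10, 0.10], [0.30, 0.10]]
index = ndvi(nir, red)
```

Pixels with equal near-infrared and red reflectance give an index of zero, while vegetated pixels approach one.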

AI models based on deep ML, specifically Convolutional Neural Networks (CNNs), have been applied to several natural disaster tasks using EO data, including flood segmentation20,21, detecting earthquake affected buildings22 and volcanic eruptions23. To increase the performance of these AI models, often the number of layers and weights within the model needs to be increased. Chintalapati et al.24 showed how the architecture of CNN models has evolved to increase performance on an EO image classification task, which resulted in an increase in the number of weights in the models, with the two best performing models, CoCa25 and BASIC-L26, using 2.1 and 2.44 billion parameters, respectively. It was noted that most deep learning AI models have so many parameters that they would exceed the memory budget on most EO satellites. In recent years, work has been done to design smaller AI models specifically for use on-board satellites27,28. Furthermore, missions such as \(\Phi\)-Sat-129 and OPS-SAT30 have also designed satellites to accommodate AI models on-board. Although these models have been specifically designed for on-board use, all the training still occurs on the ground because of the large computational overhead. Only inference is performed on-board. Since AI models are trained on the ground, they often cannot be trained with the on-board sensor data, leading to degraded performance31,32. Work has been done to retrain models, once operational, with new data as it is acquired33,34.

In this study, we introduce the Physics Aware Neuromorphic Network (PANN), an AI model with a novel architecture modelled after physical neural networks comprised of nano-electronic circuit elements known as memristors (nonlinear resistors with memory) that mimic synapses35,36,37. Like biological synapses, the memristive network weights continuously update in response to varying input signals, constrained by physical laws embedded within the model. The PANN, therefore, actively learns as new inputs are fed into the network, such as from a sensor on-board a satellite. As such, the PANN takes a significant shift away from the conventional artificial neural network AI paradigm, as the gradient-descent, back-propagation training of weights is completely replaced by physics-constrained dynamical equations. This is also in contrast to physics-informed neural networks, where the physics is embedded as a component of the model in the artificial neural network paradigm. Typically, this is done by modifying activation functions, gradient descent optimisation techniques, network structure, or loss functions38,39. The added physics normally takes the form of a partial differential equation and is task specific, for example, incompressible Navier-Stokes equations for fluid flow problems40, whereas with the PANN, the physics is independent of the task.

Physical memristive networks, which the PANN is modelled after, have demonstrated learning tasks such as image classification and sequence memory41, voice recognition42,43, long-/short-term memory and contrastive learning44, as well as regression tasks of varying complexity45,46,47 and chaotic time series prediction48. The PANN model has also been used to demonstrate these and other ML tasks, including transfer learning and multi-task learning49,50,51,52,53,54,55,56. These ML tasks were implemented using reservoir computing, where only weights in a single output layer need to be trained. This approach offers a substantial advantage over deep neural network models as it does not need computationally intensive training algorithms and thus does not consume as much energy. These properties make the PANN an ideal candidate for use on-board satellites.

In this study, we use a natural disaster dataset released by Růžička et al.28, which contains a time series of multi-spectral EO images from Sentinel-2, to evaluate the performance of the PANN model on a change detection problem. We benchmark the PANN against a state-of-the-art AI model and achieve comparable or better results. We also evaluate the performance of the PANN on a secondary dataset of the same natural disasters using multi-spectral EO images from LandSat-8, to compare the model's performance on different sensors. Additionally, we use a distance measurement to detect changes, bypassing the need for an output layer altogether. Hence, we do not need to train any part of the model and the PANN does not need to be trained on the ground prior to deployment.

This article is organised as follows: in “Results” we show the results from the PANN model and compare them to a deep learning model for the same change detection task. We also show visualisations of the features the PANN model extracts. In “Discussion” we outline key findings from the results and their implication for use on-board satellites. Finally, “Methods” presents the details of the PANN model and workflow.

Results

The PANN model is used here to detect regions of change from natural disasters using EO multi-spectral images. The change detection task requires the model to take in a sequence of five images, where the final image is after a natural disaster event. The model outputs a change feature map that highlights the regions affected by the natural disaster, by breaking the images into smaller tiles and assigning a change likelihood score to each tile. The PANN model used in this study is an adaptive network, with weights that adjust with each new input. This allows the PANN to generate features from the inputs without requiring any training (see Methods for details). To determine if a tile has changed, the distances between inputs are compared in the feature space rather than the input space (pixel comparison). By applying a distance-based metric directly to the feature space, no training is performed at any stage in the model. The dataset includes four different categories of natural disaster events: fires, floods, hurricanes and landslides. Additionally, each natural disaster event is accompanied by a change mask which is used only to evaluate the performance of the PANN model.
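
The tile-scoring step can be sketched as follows. The actual score is defined by Eq. (3) in Methods; this sketch assumes, for illustration only, that the score of the final tile is its minimum distance to the same tile in each earlier frame, so that a tile scores highly only if it differs from every earlier observation. The function name `change_score` and the three-dimensional feature vectors are hypothetical.

```python
import math


def change_score(features, distance=math.dist):
    """Score the last tile of a sequence against all earlier tiles.

    features: list of feature vectors for one tile, ordered in time.
    distance: callable taking two vectors; Euclidean distance by default.

    Assumption of this sketch: the score is the minimum distance between
    the final feature vector and each earlier one.
    """
    *earlier, latest = features
    if not earlier:
        raise ValueError("need at least one earlier frame")
    return min(distance(latest, previous) for previous in earlier)


# Hypothetical feature vectors for one tile over five frames.
unchanged = [[1.0, 0.0, 0.0]] * 4 + [[1.0, 0.1, 0.0]]
changed = [[1.0, 0.0, 0.0]] * 4 + [[0.0, 1.0, 1.0]]

score_unchanged = change_score(unchanged)
score_changed = change_score(changed)
```

Passing a different `distance` callable swaps the metric without altering the scoring logic.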

Figure 1 shows the output change maps produced by the PANN model for each type of natural disaster event. Alongside the change maps are the RGB images directly before and after the natural disaster events and the associated change mask, which also includes clouds from the images directly before and after the natural disaster event. Although only one image is shown before the natural disaster event in Fig. 1, a total of four images before the natural disaster event were used to create the displayed change maps. For the four natural disaster events, the main areas of change have been correctly identified as changes in the change maps, with a limited amount of noise in the images being considered as a change, such as the vegetation colour change in the fire natural disaster event (top row), or the sediment being washed into the ocean in the flood natural disaster event (second row). Clouds are detected as changes, but are masked out of the change maps shown in Fig. 1, since they are ignored when evaluating the change maps to allow for a more direct comparison to a state-of-the-art deep learning model (see Methods for details).

RGB images (columns 1 and 2) directly before and after a natural disaster event, the corresponding target change mask (column 3) and the output change map produced by the PANN model (column 4) for an example of each type of natural disaster event: fire (top row), flood (second row), hurricane (third row) and landslide (bottom row). Cloud cover has been masked from the images and corresponds to yellow in the change mask, with genuine differences coloured aqua. Satellite images are from Sentinel-2, compiled by Růžička et al. and available in their Git repository57.

For each natural disaster event a change map was created, with the order in which the natural disasters were passed into the PANN model being randomised. We evaluated the quality of the change maps by calculating the Area Under the Precision–Recall Curve (AUPRC), which we create tile-wise. The change likelihood score for each tile is given by Eq. (3) in Methods, which depends on the distance metric used to measure the position of the tiles in the feature space. Table 1 reports the overall AUPRC as a percentage for each of the natural disaster categories using three different distance metrics, Euclidean, Cosine and Correlation distances. The Correlation distance significantly outperforms the other distance metrics and is also much more consistent across the different natural disaster classes.
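
A tile-wise AUPRC can be sketched as below. The text does not state which estimator of the area was used, so this sketch assumes the step-wise (average precision) form, and it assumes each tile already carries a binary label derived from the change mask. The function name `average_precision` and the example values are hypothetical.

```python
def average_precision(labels, scores):
    """Step-wise area under the precision-recall curve.

    labels: 1 for tiles labelled as changed, 0 otherwise.
    scores: change likelihood score per tile (higher = more likely changed).
    Tied scores are kept in their input order, a simplification of this sketch.
    """
    positives = sum(labels)
    if positives == 0:
        raise ValueError("at least one positive tile is required")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    true_positives = 0
    area = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            true_positives += 1
            # Recall rises by 1 / positives at each changed tile; weight the
            # precision at that rank by this recall increment.
            area += (true_positives / rank) / positives
    return area


# Hypothetical scores for four tiles, two of which are labelled as changed.
example = average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
```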

We benchmark our PANN model against a state-of-the-art variational autoencoder model58, called RaVAEn, which was specifically designed to be a relatively small AI model that could be deployed on-board satellites. We also compare the PANN model to a baseline method that uses the same change score equation but compares the tiles in the pixel space rather than in a feature space, as the two AI models do. When comparing models, it should be noted that the PANN has an automatic feature engineering process incorporated into the model setup that is not present in the RaVAEn model or the baseline method. Table 2 reports the performance of the three different models for each of the natural disaster classes. Both AI models, RaVAEn and PANN, outperform the baseline method in all classes. The RaVAEn and PANN models achieve comparable results for fires, hurricanes and landslides, with the PANN slightly ahead for fires and hurricanes, while RaVAEn is slightly ahead for landslides. For floods, the PANN achieves the highest results by a significant margin with a score 14% higher than that of RaVAEn.

To assess how well the PANN model generalises to data from different sensors, we evaluated the model on a subset of the natural disaster events using EO images from LandSat-8 and compared the results to the Sentinel-2 data (see Methods for details). Table 3 reports the performance of the PANN and baseline models for the LandSat-8 data and the comparative results for the equivalent subset in the Sentinel-2 data. The PANN model outperforms the baseline method for both the Sentinel-2 data and the LandSat-8 data in every natural disaster category. Both models achieve higher performance when using data from Sentinel-2 compared to LandSat-8. Additionally, the difference in performance between datasets is much larger for the baseline method, which shows a significant decrease in performance when using the LandSat-8 data.

Feature space

The AI models RaVAEn and PANN outperform the baseline method, since they can extract the most relevant features from the tiles and group similar tiles together, while keeping dissimilar tiles further apart in their feature spaces. To visualise the high dimensional feature space of the PANN, we used the Uniform Manifold Approximation and Projection (UMAP)59 method to reduce the dimensions of the feature space into 2D. The UMAP used 20 neighbours, a minimum distance of 0.4 and the correlation distance, unless otherwise stated. Figure 2 shows the UMAP 2D projection of the feature space for a flooding event. The left-hand side shows the position of the tiles in the image directly before and after the flooding event. For tiles after the flooding event, the percentage change in each tile is denoted by the colour bar. The right-hand side of the figure shows the same projection of the feature space but with the tile images instead of points. The change tiles are grouped together at the bottom of the projections and the remaining tiles are also sorted according to the features in the tiles, with greener farmland tiles on the left-hand side and tiles with the white objects on the right. Tiles that have missing values, from the north alignment of the swath, are also grouped towards the bottom right, with the tiles that have the missing values across the top being separated from the tiles with the missing values on the right-side of the tile. For comparison with the UMAP projection for the pixel space, see Supplementary Fig. S1 online.

2D UMAP projections of the PANN feature space, using the correlation distance for a flood natural disaster event: Left—positions of before (blue) and after (orange) tiles, with percentage change in the after tiles indicated by the colour bar; Right—the corresponding tile images. The tiles with flooding occupy a distinct arc-like cluster at the bottom of the projection.

Like most dimensionality reduction techniques, UMAP aims to reduce the number of dimensions while maintaining the overall structure and information of the original, higher dimensional space and thus depends on the distance metric used to measure the distances between points. Figure 3 shows the UMAP projection of the feature space for a hurricane natural disaster for the three distance metrics used in Table 1 and shows how the clustering of the tiles varies depending on the distance metric being used. This provides a visual qualitative tool to understand the large range in results reported in Table 1 due to the change in distance metric being used.

Discussion

In this study, we introduced the PANN model for a natural disaster change detection task and compared it against the state-of-the-art AI model RaVAEn and a baseline method. All three models receive the same multi-spectral EO images as inputs and use the same change detection equation, given by Eq. (3), for each tile in the image. The difference in the model performances, therefore, is attributed to their ability to extract the most relevant features from the inputs, while ignoring noise within the inputs. We note, however, that while the PANN model receives the same inputs as the other models, only the inputs corresponding to the bands selected by the automatic feature engineering process, on a scene-by-scene basis, are passed to the relevant individual networks. This inherently allows the PANN model to ignore irrelevant information as determined by the domain knowledge embedded in the feature engineering process. The baseline method does not extract any features from the inputs and instead does the comparison directly in the pixel space. This makes the baseline method more sensitive to noise in the images and, as expected, it exhibits the lowest performance as reported in Table 2. The AI models, on the other hand, extract meaningful features from the tiles and hence outperform the baseline method in every natural disaster category. The PANN and RaVAEn models achieve comparable results in three of the four natural disaster categories, demonstrating that both models extract equally useful features for these categories. For flood natural disasters, however, the PANN model outperforms the RaVAEn model and thus arguably extracts more meaningful features.

Although being able to extract the features is the most important task for the PANN model, how the feature space is navigated is also important for the performance of the PANN model. This is seen in Table 1, where the same feature space can produce significantly different results based on the distance metric used to navigate the feature space. This is visualised in the UMAP projections in Fig. 3, where different distance metrics are used to create the projections. The feature space produced by the PANN has a high dimensionality and, as such, the relative Euclidean distance between points tends to decrease60. This would result in the change likelihood score decreasing, particularly for change tiles, and is likely why the Euclidean distance does not produce the best results. What is surprising is the large difference between the Cosine and Correlation distances, given that both metrics are angle-based measures. The Cosine distance is given by Eq. (4) and measures the angle between the vectors in the feature space. The Correlation distance is the same as the Cosine distance except each sample is mean centred first, as given by Eq. (5). The mean correction for the Correlation distance effectively changes the location of the origin and hence the angle between the vectors also changes61. One possible reason why the mean correction has such a large impact on the performance is that it helps reduce covariate shift in the output sequence that can arise from the dynamical nature of the PANN. The covariate shift effect can be seen in Fig. 3 (and Supplementary Fig. S2), where there is a much greater overlap of points at the different timesteps (that are not changes) in the Correlation projection compared to that of the Cosine projection. Interestingly, batch normalisation layers were introduced to deep neural networks to help reduce the covariate shift between layers in the networks62 and have been widely accepted to speed up training times and increase performance. We note that the PANN does not have any batch normalisation layers, while the RaVAEn model does, and that the best performing metrics for the models are the Correlation and Cosine distance, respectively.
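
The effect of mean centring can be illustrated with a minimal sketch using the standard definitions of the two metrics: the Cosine distance is one minus the cosine of the angle between two vectors, and the Correlation distance is the same quantity computed after subtracting each vector's own mean. The example vectors are hypothetical; the constant offset between them is only a simple stand-in for a drift in the output between timesteps, not a model of the PANN dynamics.

```python
import math


def cosine_distance(u, v):
    """One minus the cosine of the angle between u and v.

    Assumes neither vector has zero length.
    """
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def correlation_distance(u, v):
    """Cosine distance after subtracting each vector's own mean.

    Assumes neither vector is constant, otherwise the centred vector is zero.
    """
    mean_u = sum(u) / len(u)
    mean_v = sum(v) / len(v)
    return cosine_distance([a - mean_u for a in u], [b - mean_v for b in v])


# Hypothetical feature vectors: v equals u plus a constant offset.
u = [0.1, 0.4, 0.2, 0.9]
v = [a + 2.0 for a in u]

d_cosine = cosine_distance(u, v)
d_correlation = correlation_distance(u, v)
```

In this example the Cosine distance is clearly non-zero because the offset rotates the vector relative to the origin, whereas the Correlation distance is zero up to rounding because the offset is removed by the centring.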

From the results in Table 3, two clear trends are evident. The first is that both the PANN and the baseline method perform better when using data from Sentinel-2 than from LandSat-8 across all natural disaster categories. This can largely be explained by the decrease in resolution. The Sentinel-2 data has a spatial resolution of 10 m, with a tile size of \(32 \times 32\) pixels and a tile resolution of 320 m, while the LandSat-8 data has a spatial resolution of 30 m, with a tile size of \(16 \times 16\) pixels and a tile resolution of 480 m. As a result, the models are not able to resolve finer details when determining if a change has occurred in a LandSat-8 tile. This difference in resolution can be seen in Fig. 4, which shows the change mask and output change map for both datasets for one of the landslides. Additionally, in Fig. 4, more noise is evident in the LandSat-8 change map than in the Sentinel-2 change map, particularly in the areas where there is no change. This would also contribute to the decrease in performance. The second trend in Table 3 is that the decrease in performance using the LandSat-8 data relative to the Sentinel-2 data is significantly smaller for the PANN model than for the baseline method. This shows that the PANN model is able to generalise to different sensor data much better than the baseline method, further showing that the PANN model is able to extract meaningful features from the input data.
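
The tile footprints quoted above follow from multiplying the tile width in pixels by the pixel size, and the ratio of the footprints gives the difference in ground area per tile:

\[
32 \times 10\,\mathrm{m} = 320\,\mathrm{m}, \qquad 16 \times 30\,\mathrm{m} = 480\,\mathrm{m}, \qquad \left( \frac{480\,\mathrm{m}}{320\,\mathrm{m}} \right)^{2} = 2.25 .
\]

Each LandSat-8 tile therefore covers 2.25 times the ground area of a Sentinel-2 tile while containing \(16 \times 16 = 256\) pixels rather than \(32 \times 32 = 1024\).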

Our results show that the PANN model achieves comparable or better results than the state-of-the-art RaVAEn AI model for the natural disaster change detection task conducted in this study. Most importantly, the PANN was able to achieve this result by creating a mapping from the input space to the feature space without any training. The PANN is training-free as it continuously adapts with each new input. The model equations are relatively lightweight and thus all the processing of the data by the PANN model could be carried out using only CPUs in this study. The compute resources, time and memory, were measured for the PANN to process a patch sequence of \(574 \times 509\) pixels over 5 frames, corresponding to roughly a 25 \(\hbox {km}^2\) region. Using 8 cores on an Intel Xeon Scalable processor, the PANN model takes 19 s and uses approximately 1 GB of memory (see Supplementary Fig. S3). We note that the model could be optimised to further decrease memory requirements by reducing the floating-point precision of the PANN from 64 bit to 32 or 16 bit precision. We also note that the processing time for the PANN model is not directly comparable to the inference time of RaVAEn or other AI change detection models, as the processing time to evaluate a scene also includes updating of the PANN weights with each input. Unlike conventional AI models, the PANN model does not require any additional time and compute resources for training. The physics-based equations that constitute the PANN model are a significant deviation from conventional deep multi-layered bipartite neural networks, which typically use mathematical activation functions to perform nonlinear transformations and stochastic gradient descent with backpropagation as the learning algorithm to train network weights into an optimal static state. As such, the iterative training process is computationally expensive and usually requires cloud-based GPUs63 to produce a trained model. As the PANN simulates a physical device, based on memristive nanowire networks, we expect the computational resources to significantly decrease when tasks are implemented on the physical hardware (see e.g.41,44,48,51,64).

The PANN model is relatively sensor agnostic since it does not need to be pre-trained with data from a specific sensor, and the model design of having a separate network for each spectral band (see Methods for details) allows it to receive images with a varying number of spectral bands. Having a separate PANN for each band also allows a simple training-free method to add domain knowledge to the model in the form of an automatic feature engineering mechanism that determines which spectral bands, and hence which PANNs, are used (see Methods for details). In this study, only relatively minor adjustments to the feature engineering mechanism were required when changing the sensor data from the Sentinel-2 satellite to the LandSat-8 satellite. In addition, having a separate PANN for each band combined with the feature engineering means only a subset of the PANNs are required, further reducing the computational resources. Having multiple smaller networks, however, means that interactions between bands may not be fully captured, as compared to a single larger network, where information from all the bands is integrated while being processed. Alternatively, another model design that could be considered is multiple networks with a hierarchical structure65.

The training-free nature of the PANN makes it an ideal candidate for extreme edge computing applications, particularly for EO analysis on-board satellites. Specifically, there are two main challenges that are inherently addressed by the training-free nature of the PANN. The first is that ML models typically do not generalise well and need to be trained for a specific sensor. When ML models are trained using data from a different sensor, there is often a notable decrease in performance31,32. Furthermore, training an ML model with only simulation data can be difficult24. The training-free nature of the PANN therefore makes it a promising candidate to circumvent this issue. The second is that ML models are often retrained as new data from the desired sensor become available to increase performance and to account for any data-shift problems. This process requires the new data to be downlinked, to retrain the model on the ground before uplinking the updated model to the satellite33. The dynamical nature of the PANN would allow this whole process to be bypassed as the PANN inherently updates and learns from each new input from the sensor.

In this study, we have demonstrated the feasibility of the PANN for detecting changes from natural disasters within the context of its potential deployment on-board satellites. Based on these simulation results, future work would include testing a prototype of the hardware device, based on memristive nanowire networks, which the PANN is modelled after. Based on previous studies with such neuromorphic devices41,44,48,51,64, it is expected that implementation on the low-power hardware device will substantially reduce processing times and energy consumption, and could be achieved using only the on-board compute. The methodology outlined in this study could be used when implementing in hardware with one key change: the physical network devices may need larger electrode grids than the \(16 \times 16\) grid used here, as additional electrodes would be needed to read out voltages from the larger physical networks. To date, devices with up to 128 electrodes have been fabricated66, although interestingly, experimental results suggest that using readouts from only a subset of electrodes may be sufficient to capture the most salient output features41. This may be attributed to how the electrodes integrate signals from the many nanowires with which they make electrical contact. This means that when implementing in a physical device, the option of using one large network that processes all the spectral bands together may be viable. Multiple configurations for the electrode placement would be possible, provided the contact electrodes evenly sample the underlying nanowire network.

In conclusion, we demonstrated a training-free AI model, the PANN, for a natural disaster EO change detection task that contained four types of natural disasters: fires, floods, hurricanes and landslides. We benchmarked our model against a state-of-the-art AI model and achieved better results for floods and comparable results in the remaining three categories, without any training. We also showed that the PANN model can generalise to other EO sensors. We found that the PANN was able to achieve these results due to its ability to extract meaningful features from the inputs and sort them accordingly in the feature space. Being able to navigate the feature space, therefore, plays a vital role in the model’s performance. The training-free nature of the PANN makes it an ideal candidate for use on-board satellites, processing EO data at the extreme edge.

Methods

Data

The primary dataset used in this study is a time series of multi-spectral EO images of natural disasters released by Růžička et al.28. The images are level 1C pre-processed multi-spectral images from the Multi-Spectral Imager67 camera on-board the Sentinel-2 Earth observation satellite constellation. Only the 10 highest spatial resolution bands are in the dataset. All bands have a spatial resolution of 10 m. The bands that are not naturally at 10 m resolution were interpolated to the higher resolution. Table 4 shows the specific bands used in this study along with the corresponding index number used to reference the bands. The dataset covers four categories of natural disasters: fires, floods, hurricanes and landslides. Each category includes five natural disaster events, except for floods which have four, giving a total of 19 natural disaster events. The individual samples were created by tiling each event, with no overlap, into \(32 \times 32\) pixel sequence tiles, resulting in a total of 62,655 samples used to evaluate the PANN model. For each natural disaster event there are five sequential images; the first four are all before the natural disaster event and the final image is after. Along with each natural disaster event, there is an accompanying change mask that contains three labels: unaffected regions, affected regions, and clouds from the fourth and fifth images. An example of a hurricane disaster event is shown in Fig. 5, where damage to the island vegetation can be seen in the fifth image and the change mask image shows the unaffected regions in purple, the affected region in aqua and the clouds in yellow. The change mask in this study is only used to evaluate the performance of the proposed model.
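
The non-overlapping tiling into sequence tiles can be sketched as follows. The text does not say how scene edges that are not a multiple of the tile size are handled, so this sketch assumes partial tiles at the bottom and right edges are dropped. It also handles a single band for brevity. The function names are hypothetical.

```python
def tile_origins(height, width, tile=32):
    """Top-left (row, col) of each non-overlapping tile x tile window.

    Assumption of this sketch: partial tiles at the bottom and right edges
    of the scene are dropped.
    """
    return [
        (row, col)
        for row in range(0, height - tile + 1, tile)
        for col in range(0, width - tile + 1, tile)
    ]


def extract_tile_sequence(frames, row, col, tile=32):
    """Cut the same window out of every frame of a time series.

    frames: list of single-band 2D images (lists of rows), ordered in time.
    Returns one tile per frame, i.e. a sequence tile.
    """
    return [
        [image_row[col:col + tile] for image_row in frame[row:row + tile]]
        for frame in frames
    ]


# Hypothetical scene: five 64 x 64 single-band frames, frame t filled with t.
frames = [[[float(t)] * 64 for _ in range(64)] for t in range(5)]
origins = tile_origins(64, 64)
sequence = extract_tile_sequence(frames, *origins[-1])
```

Under the edge-dropping assumption, a \(574 \times 509\) pixel scene yields 17 by 15 complete tiles.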

In this study, we created a second dataset to evaluate the performance of the PANN on data from a different sensor, the LandSat-8 Operational Land Imager68. This was done by selecting the natural disaster events in the Sentinel-2 dataset that did not have any clouds obscuring the change regions in the change mask. This left 8 natural disaster events: 2 fires, 2 floods and 4 landslides. The Top of Atmosphere images from the LandSat-8 satellite with the 9 highest spatial resolution bands of 30 m were used. To create the time series of images for each natural disaster event, only images with 40% cloud cover or less were used. Like the Sentinel-2 dataset, each natural disaster event has five sequential images, with the first four before the event and the final image after. The corresponding change mask from the Sentinel-2 dataset was used after resizing to 30 m resolution using the nearest-neighbour method and adding the clouds in the fourth and fifth images only. Clouds were detected automatically using the Google Earth Engine LandSat simple cloud score algorithm69, with a score \(\ge 55\)% considered a cloud. As with the Sentinel-2 dataset, individual samples were created by tiling each event, with no overlap, into \(16 \times 16\) pixel sequence tiles, resulting in a total of 8,199 samples.

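The data was preprocessed by first taking the log of the RGB pixel values and then scaling the values to the interval [-1, +1] for each band in the data. For a pixel value \(x_b\) in band b, this process is described by Eq. (1):

\[ x'_b = 2\,\frac{\log (x_b) - \text{min}_b}{\text{max}_b - \text{min}_b} - 1, \qquad (1) \]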

where the min and max values for each band are fixed and were chosen to be consistent with Ref. 28 for the Sentinel-2 dataset, to allow a direct comparison with their state-of-the-art variational autoencoder model. A different set of min and max values were manually selected by visual inspection for the LandSat-8 dataset. Missing values were set to a value just above zero of 0.005.
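The preprocessing step can be illustrated with a minimal sketch. It assumes that the per-band bounds are expressed in log space, that missing or non-positive pixels are replaced by 0.005 before the log is taken, and that values falling outside the bounds are clipped; the function name and the example bounds are hypothetical and are not the values used for either dataset.

```python
import math

MISSING_FILL = 0.005  # value the text assigns to missing pixels


def normalise_band(pixels, log_min, log_max):
    """Log-transform one band and rescale it to the interval [-1, +1].

    log_min and log_max are fixed per-band bounds, assumed here to be
    given in log space. Out-of-range values are clipped (an assumption).
    """
    out = []
    for p in pixels:
        if p is None or p <= 0:  # treat absent or non-positive values as missing
            p = MISSING_FILL
        scaled = 2.0 * (math.log(p) - log_min) / (log_max - log_min) - 1.0
        out.append(max(-1.0, min(1.0, scaled)))
    return out


# Hypothetical bounds: log values between 0 and 2 map onto [-1, +1].
example = normalise_band([1.0, math.exp(1.0), math.exp(2.0), None], 0.0, 2.0)
```

Because the bounds are fixed per band rather than computed per image, the same pixel value always maps to the same input level, which keeps the images of a sequence comparable with one another.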

Figure 5. An example of a hurricane natural disaster scene, with the first four images taken before the hurricane and the fifth image taken after. The change mask (last column) shows the affected (aqua) and unaffected (purple) regions along with the clouds (yellow) in the images directly before and after the hurricane. Satellite images are from Sentinel-2, compiled by Růžička et al. and available in their GitHub repository57.

Model architecture

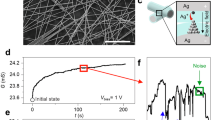

The PANN is based on a physically motivated model of a neuromorphic network composed of self-organised nanowires with resistive switching memory (memristive) electrical junctions49. The model is built by first simulating a nanowire network and then converting it into a graph representation, which is used in the PANN. This process is shown in Fig. 6. In this study, the network for the PANN was created by distributing 803 nanowires over a \(158 \times 158 \, \mu\)m 2D plane with nanowire centres sampled from a generalised normal distribution with a beta value of 5 and with nanowire orientations sampled on \([0,\pi ]\). Nanowire lengths were sampled from a Gaussian distribution with an average of \(30 \, \mu\)m and a standard deviation of \(6 \, \mu\)m. As nanowire networks are nano-electronic systems, input signals are delivered via electrodes, which were modelled with a diameter of \(5 \, \mu\)m and with evenly spaced placements in a \(16\times 16\) grid over the 2D plane with a margin of \(15 \, \mu\)m, giving a pitch of \(8 \, \mu\)m between electrodes. Where the nanowires overlap with other nanowires or electrodes, electrical junctions are formed whose internal states evolve in time, as described below. Figure 6a depicts the nanowire network model, with the nanowires displayed as grey lines and nanowire–nanowire junctions as small grey dots. The electrodes are shown as the large green circles and the nanowire–electrode junctions are shown as small dark green dots. Figure 6b shows the corresponding graphical representation of the network used in the PANN. The black nodes correspond to the nanowires and the green nodes correspond to the electrodes, used as input nodes. The edges correspond to the electrical junctions. The resulting network had a total of 1059 nodes and 12,279 edges. Of these, the 256 electrode nodes were used as input nodes and, from the remaining 803 nodes, 400 were selected at random as output nodes (note that in a real physical device, electrodes would also serve as output nodes).

Figure 6. (a) Nanowire representation of the PANN model. The nanowires are the grey lines and the electrodes are the large green circles. Nanowire–nanowire junctions are shown as grey dots and nanowire–electrode junctions are shown as dark green dots. (b) Graph representation of the PANN. Green nodes are the input nodes which correspond to the electrodes. Black nodes correspond to the nanowires, while edges correspond to the nanowire–nanowire and nanowire–electrode junctions.
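The conversion from a nanowire layout to a graph can be sketched as follows. This is a simplified illustration rather than the simulator used in this study: the scale parameter `alpha` of the generalised normal distribution, the uniform sampling of orientations, and all function names are assumptions, and the electrodes (which would add the input nodes and the nanowire–electrode edges) are omitted.

```python
import math
import random


def gen_normal(rng, beta, alpha):
    """Zero-mean generalised normal sample with shape beta and scale alpha."""
    magnitude = alpha * rng.gammavariate(1.0 / beta, 1.0) ** (1.0 / beta)
    return magnitude if rng.random() < 0.5 else -magnitude


def sample_wires(n, side, rng, mean_len=30.0, sd_len=6.0, beta=5.0, alpha=None):
    """Sample n nanowires as line segments ((x1, y1), (x2, y2)) in micrometres."""
    alpha = side / 2.0 if alpha is None else alpha  # hypothetical scale
    wires = []
    for _ in range(n):
        cx = side / 2.0 + gen_normal(rng, beta, alpha)
        cy = side / 2.0 + gen_normal(rng, beta, alpha)
        half = 0.5 * rng.gauss(mean_len, sd_len)
        theta = rng.uniform(0.0, math.pi)  # uniform orientation is assumed
        dx, dy = half * math.cos(theta), half * math.sin(theta)
        wires.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return wires


def segments_cross(p1, p2, q1, q2):
    """True if segment p1-p2 properly intersects segment q1-q2."""
    def orient(a, b, c):
        return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

    d1, d2 = orient(q1, q2, p1), orient(q1, q2, p2)
    d3, d4 = orient(p1, p2, q1), orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def build_graph(wires):
    """Each wire is a node; each crossing pair of wires is an edge (junction)."""
    return [
        (i, j)
        for i in range(len(wires))
        for j in range(i + 1, len(wires))
        if segments_cross(*wires[i], *wires[j])
    ]


# Small example; the study itself uses 803 nanowires on a 158 x 158 um plane.
wires = sample_wires(50, 158.0, random.Random(0))
edges = build_graph(wires)
```

The pairwise intersection test scales quadratically with the number of wires, which remains manageable for a network of this size because the graph only needs to be generated once.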

The network in the PANN has a heterogeneous, neuromorphic topology and operates like a complex electrical circuit with nonlinear circuit components known as memristors53,70. As such, inputs are treated as input voltage signals and Kirchhoff’s conservation laws are solved at each time step to calculate the node voltage distribution across the network. The edge weights are conductances that evolve in time according to a memristor equation of state:

with all edges set to an initial state \(\lambda (t=0) = 0\). In this study, the following parameters were used: \(V_{\text {reset}} = 5\times 10^{-3}\,\)V, \(V_{\text {set}} = 1\times 10^{-2}\,\)V and \(\lambda _{\text {max}} = 1.5\times 10^{-2}\,\)V s. Full details of the model and its validation against experimental nanowire networks are provided in Refs. 51,53. In contrast to artificial neural networks, the dynamic nature of these physical neuromorphic networks means that the weights are not trained, which not only makes them more energy efficient, but also allows all the data to be used for evaluating the model.
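As an illustration of how a single edge state could be advanced in time, the following minimal sketch assumes a threshold-driven form for the equation of state, of the kind used in earlier nanowire network models: the state grows when the magnitude of the junction voltage exceeds \(V_{\text {set}}\), is unchanged between \(V_{\text {reset}}\) and \(V_{\text {set}}\), decays towards zero below \(V_{\text {reset}}\), and is bounded by \(\lambda _{\text {max}}\). This form, the decay factor `b`, the explicit Euler step and the function names are assumptions made for illustration; the mapping from \(\lambda\) to conductance and the Kirchhoff solve are omitted.

```python
def sign(x):
    """Return -1, 0 or +1 according to the sign of x."""
    return (x > 0) - (x < 0)


def step_junction(lam, v, dt, v_set=1e-2, v_reset=5e-3, lam_max=1.5e-2, b=10.0):
    """Advance one junction state lam (in V s) by an explicit Euler step dt (in s).

    v is the voltage across the junction. The parameter b (decay speed-up)
    is hypothetical; the other defaults are the values quoted in the text.
    """
    if abs(v) > v_set:
        rate = (abs(v) - v_set) * sign(v)            # growth above the set threshold
    elif abs(v) < v_reset:
        rate = b * (abs(v) - v_reset) * sign(lam)    # decay below the reset threshold
    else:
        rate = 0.0                                   # state held between thresholds
    new = lam + rate * dt
    if abs(v) < v_reset and new * lam < 0:
        new = 0.0                                    # decay stops at zero
    return max(-lam_max, min(lam_max, new))


# Drive a junction from rest with a constant 20 mV for ten 0.1 s steps.
lam = 0.0
for _ in range(10):
    lam = step_junction(lam, 0.02, 0.1)
```

Under these assumptions the state depends on the history of the input rather than on its instantaneous value alone, which is the property that allows the network to respond differently to a changed final image in a sequence.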

Model setup

Figure 7 shows the process of feeding the natural disaster image sequence into the PANN model. First an automatic feature engineering step determines which bands to use for that specific natural disaster event. This is motivated by a physical understanding of the event: for example, water exhibits the greatest contrast compared to land at infrared wavelengths. The images are then subdivided into a series of \(32\times 32\) pixel sequence tiles, \(x^{a,b} _N(t)\), where a, b is the location of the tile and \(N \le 10\) is the number of bands as determined by the feature engineering process. The images are tiled column by column starting at the top left hand corner. The tile sequence is passed through a max pooling layer that has a pooling size (2,2), a stride of 2 and does not use any padding, hence halving the spatial resolution of the tile sequence to \(16\times 16\) pixels. The tile sequence is then split into individual bands, creating the input signals \(U^{a,b}_m (t)\), where m is the index to each PANN for each spectral band as given in Table 4. As such, there are a total of 10 identical PANN networks, each taking inputs for a specific band. The readout values of the PANNs used for the given tile sequence are concatenated to give the final features, \(F^{a,b}(t)\) for that tile location. Hence, not all 10 PANNs are used for every natural disaster event, as demonstrated in Fig. 7, where only PANN numbers 01-03 and 09 are used and the greyed out PANNs (PANN 04 – 08 and 10) are not used for this natural disaster event.

Figure 7. Diagram of the PANN workflow showing the pipeline from the input images to the output features, \(F^{a,b}(t)\), from the networks. First, automatic feature engineering is applied to the input images to determine which bands of the images to use. The images are then broken into a sequence of small \(32\times 32\) pixel tiles, \(x^{a,b} _N(t)\), where a, b is the location of the tile and N is the number of bands. The creation of the tile sequence is shown by the coloured (red, orange, yellow, lime and green) boxes, which are then fed into the max pooling layer. The bands are separated, as denoted by \(\bigotimes\), with each band being fed into a unique PANN model. In this example \(\hbox {PANN}_{01-03}\) and \(\hbox {PANN}_{09}\) are used. The outputs from all the PANNs that are used are concatenated together, as denoted by \(\bigoplus\), to produce the feature sequence \(F^{a,b}(t)\) for tile \(x^{a,b} _N(t)\). Satellite images are from Sentinel-2, compiled by Růžička et al. and available in their GitHub repository57.
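The tiling and max pooling stages of this workflow can be sketched for a single band as follows. The sketch assumes that "column by column" means the tiles of the first column are produced before moving to the next column, and that incomplete tiles at the image edges are dropped; the function names are hypothetical.

```python
def tile_image(image, size=32):
    """Split one band (a list of rows) into non-overlapping size x size tiles.

    Returns {(a, b): tile}, with a the tile row and b the tile column.
    Incomplete tiles at the right and bottom edges are dropped (an assumption).
    """
    rows, cols = len(image), len(image[0])
    tiles = {}
    for b in range(cols // size):       # column by column, from the top left
        for a in range(rows // size):
            tiles[(a, b)] = [
                row[b * size:(b + 1) * size]
                for row in image[a * size:(a + 1) * size]
            ]
    return tiles


def max_pool_2x2(tile):
    """Max pooling with pool size (2, 2), stride 2 and no padding."""
    return [
        [
            max(tile[r][c], tile[r][c + 1], tile[r + 1][c], tile[r + 1][c + 1])
            for c in range(0, len(tile[0]) - 1, 2)
        ]
        for r in range(0, len(tile) - 1, 2)
    ]


# A synthetic 64 x 96 band gives a 2 x 3 grid of 32 x 32 tiles.
band = [[r * 96 + c for c in range(96)] for r in range(64)]
tiles = tile_image(band)
pooled = max_pool_2x2(tiles[(0, 0)])            # 16 x 16 after pooling
signals = [v for row in pooled for v in row]    # 256 values, one per input node
```

The pooled \(16\times 16\) tile contains 256 values, which matches the 256 input nodes of each PANN, so each pooled pixel can drive one electrode.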

To determine whether a change from a natural disaster occurred at a given tile location, the distance between the images of the tile sequence is measured in the feature space. It is assumed that if there is any change it is in the last image of the sequence, so only the distances between the final image and each of the previous images are compared. This is the same change score method as introduced in Ref. 28. Formally, for a sequence of T images, the change score, S, is given by

\[ S = \min _{i=1,\dots ,T-1} \text {dist}\left( F^{a,b}(t_T),\, F^{a,b}(t_i) \right), \qquad (3) \]

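where dist is an arbitrary distance metric. In this study we focus on three different distance metrics: Euclidean, Cosine and Correlation. Unless otherwise stated, the Correlation distance was used. The Cosine distance is an angle-based metric and, for two feature vectors \(u\) and \(v\), is given by

\[ \text {dist}_{\cos }(u, v) = 1 - \frac{u \cdot v}{\Vert u \Vert _2 \, \Vert v \Vert _2}. \qquad (4) \]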

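The Correlation distance is the mean-corrected version of the Cosine distance. For two feature vectors \(u\) and \(v\) with element means \(\bar{u}\) and \(\bar{v}\), it is given by

\[ \text {dist}_{\text {corr}}(u, v) = 1 - \frac{(u - \bar{u}) \cdot (v - \bar{v})}{\Vert u - \bar{u} \Vert _2 \, \Vert v - \bar{v} \Vert _2}. \qquad (5) \]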

Distances between the tile sequence images are compared directly in the feature space, so that it is not necessary to learn a mapping function from the feature space to a target variable. This means it is not necessary to train any part of the model.
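The training-free scoring step can be illustrated with a short sketch. The three distances follow their standard definitions; aggregating the distances to the earlier images with a minimum is an assumption made here, following the change score approach of Ref. 28, and the function names and toy feature vectors are hypothetical.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def cosine(u, v):
    """Cosine distance: one minus the cosine of the angle between u and v."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return 1.0 - dot / (norm_u * norm_v)  # undefined for all-zero vectors


def correlation(u, v):
    """Correlation distance: cosine distance of the mean-centred vectors."""
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    return cosine([x - mean_u for x in u], [y - mean_v for y in v])


def change_score(features, dist=correlation):
    """Score the last feature vector against every earlier one.

    features is a list of feature vectors ordered in time, oldest first.
    The minimum over the earlier images is used (an assumption).
    """
    latest = features[-1]
    return min(dist(latest, f) for f in features[:-1])


# Toy feature sequences for one tile: the second ends with a reversed pattern.
unchanged = change_score([[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 2.0, 3.0]])
changed = change_score([[1.0, 2.0, 3.0], [1.0, 2.0, 3.5], [3.0, 2.0, 1.0]])
```

Taking the minimum means that a tile only receives a high score when the final image differs from all of the earlier images, so a single unusual earlier image (for example one containing cloud) does not by itself raise the score.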

To evaluate the quality of the change maps produced by the PANN we use the area under the precision-recall curve (AUPRC)71. To calculate the curve, we treated each pixel in the output change maps as a positive or negative example, across all natural disaster events in that category. As such, a separate precision-recall curve, and a corresponding AUPRC value, was produced for each class of natural disaster. Areas labelled as clouds in the change mask were ignored when calculating the AUPRC. This was done to be consistent with Ref. 28 and to allow for a more accurate comparison between the PANN and RaVAEn models.
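A pixel-wise AUPRC with cloud pixels excluded can be sketched as below. The sketch uses the average-precision estimator, which is one common way of computing the area under a precision-recall curve and is not necessarily the estimator used in this study; the integer label encoding and the function name are hypothetical, and tied scores are not treated specially.

```python
UNAFFECTED, AFFECTED, CLOUD = 0, 1, 2  # hypothetical encoding of the change mask


def auprc(scores, labels):
    """Average precision over pixels, ignoring pixels labelled as cloud.

    scores: change score per pixel (higher means more likely changed).
    labels: change mask value per pixel, using the encoding above.
    """
    pairs = [(s, l) for s, l in zip(scores, labels) if l != CLOUD]
    pairs.sort(key=lambda p: p[0], reverse=True)
    n_pos = sum(1 for _, l in pairs if l == AFFECTED)
    if n_pos == 0:
        raise ValueError("no affected pixels to evaluate")
    true_pos = 0
    area = 0.0
    for rank, (_, label) in enumerate(pairs, start=1):
        if label == AFFECTED:
            true_pos += 1
            area += (true_pos / rank) / n_pos  # precision at this recall step
    return area


# Five pixels pooled from the events of one category; the cloud pixel is ignored.
value = auprc([0.9, 0.8, 0.7, 0.6, 0.95], [1, 0, 1, 0, 2])
```

Pooling the pixels of all events in a category before sorting corresponds to producing one curve per natural disaster class rather than one per event.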

Automatic feature engineering

The automatic feature engineering process is performed once for each natural disaster scene, before the normalisation and tiling stages. The process leverages common indices such as the normalised burn ratio index72 and the normalised difference vegetation index73 to calculate natural disaster class scores. Using these scores, a binary decision tree is followed, as shown in Fig. 8, to determine what class of natural disaster is in the scene. The score for each natural disaster class is calculated by creating an index image for each of the images directly before and after the natural disaster event using Eq. (6), where BX and BY are predetermined bands that depend on the type of natural disaster being assessed. Each index image is converted to a binary image using a predefined threshold value, and a difference image is created by a pixel-wise comparison of the two binary images. The final score is the percentage of pixels that record a change. Once a natural disaster type has been selected, the feature engineering process then selects a subset of bands to be used in the rest of the model depending on the natural disaster class. The specific values and bands used for the natural disaster class score are shown in Fig. 8. See Supplementary Fig. S4 online for the specific values and bands used for the LandSat-8 data.

Figure 8. Decision tree for the automatic feature engineering step. At each branch a natural disaster score is calculated for the different categories. For each score the bands and threshold values used to create the index image are given. The bands used for each natural disaster type once selected are also shown.
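The class score calculation can be sketched as follows. The sketch assumes that the index image takes the normalised difference form shared by the normalised burn ratio and the normalised difference vegetation index, that binarisation marks pixels above the threshold, and that the pixel-wise comparison flags pixels whose binary value differs between the two images. The function names, band values and threshold are hypothetical; the bands and thresholds actually used are those given in Fig. 8.

```python
def index_image(band_x, band_y):
    """Normalised difference (BX - BY) / (BX + BY) per pixel, on flat lists."""
    return [
        (x - y) / (x + y) if (x + y) != 0 else 0.0
        for x, y in zip(band_x, band_y)
    ]


def class_score(before, after, threshold):
    """Percentage of pixels whose thresholded index differs before and after.

    before and after are (band_x, band_y) pairs of flat pixel lists taken
    from the images directly before and after the event.
    """
    bin_before = [v > threshold for v in index_image(*before)]
    bin_after = [v > threshold for v in index_image(*after)]
    changed = sum(b != a for b, a in zip(bin_before, bin_after))
    return 100.0 * changed / len(bin_before)


# Four pixels: the index drops below the threshold in two of them after the event.
before = ([0.8, 0.8, 0.8, 0.8], [0.2, 0.2, 0.2, 0.2])
after = ([0.8, 0.3, 0.8, 0.3], [0.2, 0.7, 0.2, 0.7])
score = class_score(before, after, threshold=0.1)
```

Each branch of the decision tree would compare a score of this kind, computed with the bands and threshold for the class being tested, against a cut-off to decide which branch to follow.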

We note that more sophisticated index images74,75 could be used to categorise the natural disasters and potentially further improve results. Furthermore, similar feature engineering processes could be used to tune the model to detect changes from specific natural disasters or even clouds. The focus here is to show that the simplest automatic feature engineering process, one that does not require training, can be used to easily incorporate domain knowledge and improve the performance of the model.

Data availability

We are releasing the full code alongside this paper at https://github.com/ssmi9157/ChangeDetectionPANN. The Sentinel-2 dataset analysed in this study is available in the RaVAEn repository https://github.com/spaceml-org/RaVAEn and the LandSat-8 dataset is available at https://zenodo.org/records/16916355.

References

Vali, A., Comai, S. & Matteucci, M. Deep learning for land use and land cover classification based on hyperspectral and multispectral earth observation data: A review. Remote Sens. 12, 2495 (2020).

Maguluri, L. P. et al. Sustainable agriculture and climate change: A deep learning approach to remote sensing for food security monitoring. Remote Sens. Earth Syst. Sci. 1–13 (2024).

Růžička, V. et al. Semantic segmentation of methane plumes with hyperspectral machine learning models. Sci. Rep. 13, 19999 (2023).

Ferreira, B., Iten, M. & Silva, R. G. Monitoring sustainable development by means of earth observation data and machine learning: A review. Environ. Sci. Eur. 32, 1–17 (2020).

Parelius, E. J. A review of deep-learning methods for change detection in multispectral remote sensing images. Remote Sens. 15, 2092 (2023).

Diakogiannis, F. I., Waldner, F. & Caccetta, P. Looking for change? roll the dice and demand attention. Remote Sens. 13, 3707 (2021).

Chen, H. & Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 12, 1662 (2020).

Bello, O. M. & Aina, Y. A. Satellite remote sensing as a tool in disaster management and sustainable development: towards a synergistic approach. Procedia Soc. Behav. Sci. 120, 365–373 (2014).

Huyck, C., Verrucci, E. & Bevington, J. Remote sensing for disaster response: A rapid, image-based perspective. In Earthquake hazard, risk and disasters, 1–24 (Elsevier, 2014).

Le Cozannet, G. et al. Space-based earth observations for disaster risk management. Surv. Geophys. 41, 1209–1235 (2020).

Karapetyan, D., Minic, S. M., Malladi, K. T. & Punnen, A. P. Satellite downlink scheduling problem: A case study. Omega 53, 115–123 (2015).

Selva, D. & Krejci, D. A survey and assessment of the capabilities of cubesats for earth observation. Acta Astronaut. 74, 50–68 (2012).

Verbesselt, J., Hyndman, R., Newnham, G. & Culvenor, D. Detecting trend and seasonal changes in satellite image time series. Remote Sens. Environ. 114, 106–115 (2010).

Rodriguez, P. S., Schwantes, A. M., Gonzalez, A. & Fortin, M.-J. Monitoring changes in the enhanced vegetation index to inform the management of forests. Remote Sens. 16, 2919 (2024).

Alcaras, E., Costantino, D., Guastaferro, F., Parente, C. & Pepe, M. Normalized burn ratio plus (nbr+): a new index for sentinel-2 imagery. Remote Sens. 14, 1727 (2022).

Zheng, Q., Huang, W., Cui, X., Shi, Y. & Liu, L. New spectral index for detecting wheat yellow rust using sentinel-2 multispectral imagery. Sensors 18, 868 (2018).

Farhadi, H., Ebadi, H., Kiani, A. & Asgary, A. Introducing a new index for flood mapping using sentinel-2 imagery (sfmi). Comput. Geosci. 194, 105742 (2025).

Pettorelli, N. The normalized difference vegetation index (Oxford University Press, USA, 2013).

Jiang, Z., Huete, A. R., Didan, K. & Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 112, 3833–3845 (2008).

Nemni, E., Bullock, J., Belabbes, S. & Bromley, L. Fully convolutional neural network for rapid flood segmentation in synthetic aperture radar imagery. Remote Sens. 12, 2532 (2020).

Rudner, T. G. et al. Multi3net: segmenting flooded buildings via fusion of multiresolution, multisensor, and multitemporal satellite imagery. In Proceedings of the AAAI Conference on Artificial Intelligence 33, 702–709 (2019).

Qing, Y. et al. Operational earthquake-induced building damage assessment using cnn-based direct remote sensing change detection on superpixel level. Int. J. Appl. Earth Obs. Geoinf. 112, 102899 (2022).

Rosso, M. P., Sebastianelli, A., Spiller, D., Mathieu, P. P. & Ullo, S. L. On-board volcanic eruption detection through cnns and satellite multispectral imagery. Remote Sens. 13, 3479 (2021).

Chintalapati, B. et al. Opportunities and challenges of on-board ai-based image recognition for small satellite earth observation missions. Adv. Space Res. (2024).

Yu, J. et al. Coca: Contrastive captioners are image-text foundation models. arXiv preprint arXiv:2205.01917 (2022).

Chen, X. et al. Symbolic discovery of optimization algorithms. Adv. Neural. Inf. Process. Syst. 36, 49205–49233 (2023).

Abid, N. et al. Ucl: Unsupervised curriculum learning for water body classification from remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 105, 102568 (2021).

Růžička, V. et al. Ravæn: unsupervised change detection of extreme events using ml on-board satellites. Sci. Rep. 12, 16939 (2022).

Giuffrida, G. et al. The \(\phi\)-sat-1 mission: The first on-board deep neural network demonstrator for satellite earth observation. IEEE Trans. Geosci. Remote Sens. 60, 1–14 (2021).

Labrèche, G. et al. Ops-sat spacecraft autonomy with tensorflow lite, unsupervised learning, and online machine learning. In 2022 IEEE Aerospace Conference (AERO), 1–17 (IEEE, 2022).

Koh, P. W. et al. Wilds: A benchmark of in-the-wild distribution shifts. In International conference on machine learning, 5637–5664 (PMLR, 2021).

Derksen, D. et al. Few-shot image classification challenge on-board. In Workshop-data centric AI, neurIPS (2021).

Mateo-García, G. et al. In-orbit demonstration of a re-trainable machine learning payload for processing optical imagery. Sci. Rep. 13, 10391 (2023).

Růžička, V. et al. Fast model inference and training on-board of satellites. In IGARSS 2023-2023 IEEE International Geoscience and Remote Sensing Symposium, 2002–2005 (IEEE, 2023).

Christensen, D. V. et al. 2022 roadmap on neuromorphic computing and engineering. Neuromorphic Comput. Eng. 2, 022501 (2022).

Song, M.-K. et al. Recent advances and future prospects for memristive materials, devices, and systems. ACS Nano 17, 11994–12039 (2023).

Kuncic, Z. & Nakayama, T. Neuromorphic nanowire networks: Principles, progress and future prospects for neuro-inspired information processing. Adv. Phys. X 6, 1894234 (2021).

Karniadakis, G. E. et al. Physics-informed machine learning. Nat. Rev. Phys. 3, 422–440 (2021).

Cuomo, S. et al. Scientific machine learning through physics-informed neural networks: Where we are and what’s next. J. Sci. Comput. 92, 88 (2022).

Jin, X., Cai, S., Li, H. & Karniadakis, G. E. Nsfnets (navier-stokes flow nets): Physics-informed neural networks for the incompressible navier-stokes equations. J. Comput. Phys. 426, 109951 (2021).

Zhu, R. et al. Online dynamical learning and sequence memory with neuromorphic nanowire networks. Nat. Commun. 14, 6697 (2023).

Lilak, S. et al. Spoken digit classification by in-materio reservoir computing with neuromorphic atomic switch networks. Front. Nanotechnol. 3. https://doi.org/10.3389/fnano.2021.675792 (2021).

Kotooka, T. et al. Thermally Stable Ag2Se Nanowire Network as an Effective In-Materio Physical Reservoir Computing Device. Adv. Electron. Mater. 10, 2400443. https://doi.org/10.1002/aelm.202400443 (2024).

Loeffler, A. et al. Neuromorphic learning, working memory, and metaplasticity in nanowire networks. Sci. Adv. 9, eadg3289 (2023).

Sillin, H. O. et al. A theoretical and experimental study of neuromorphic atomic switch networks for reservoir computing. Nanotechnology 24, 384004 (2013).

Stieg, A. Z. et al. Self-organization and Emergence of Dynamical Structures in Neuromorphic Atomic Switch Networks. In Adamatzky, A. & Chua, L. (eds.) Memristor Networks, 173–209, https://doi.org/10.1007/978-3-319-02630-5_10 (Springer International Publishing, 2014).

Michieletti, F., Pilati, D., Milano, G. & Ricciardi, C. Self-organized criticality in neuromorphic nanowire networks with tunable and local dynamics. Adv. Funct. Mater. 2423903 (2025).

Milano, G. et al. In materia reservoir computing with a fully memristive architecture based on self-organizing nanowire networks. Nat. Mater. 21, 195–202 (2022).

Kuncic, Z. et al. Neuromorphic information processing with nanowire networks. In 2020 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (IEEE, 2020).

Fu, K. et al. Reservoir computing with neuromemristive nanowire networks. In 2020 International Joint Conference on Neural Networks (IJCNN), 1–8, https://doi.org/10.1109/IJCNN48605.2020.9207727 (2020).

Hochstetter, J. et al. Avalanches and edge-of-chaos learning in neuromorphic nanowire networks. Nat. Commun. 12, 4008 (2021).

Zhu, R. et al. Harnessing adaptive dynamics in neuro-memristive nanowire networks for transfer learning. In 2020 International Conference on Rebooting Computing (ICRC), 102–106 (IEEE, 2020).

Zhu, R. et al. Information dynamics in neuromorphic nanowire networks. Sci. Rep. 11, 13047 (2021).

Loeffler, A. et al. Modularity and multitasking in neuro-memristive reservoir networks. Neuromorphic Comput. Eng. 1, 014003. https://doi.org/10.1088/2634-4386/ac156f (2021).

Zhu, R., Eshraghian, J. & Kuncic, Z. Memristive reservoirs learn to learn. In Proceedings of the 2023 International Conference on Neuromorphic Systems, ICONS ’23, https://doi.org/10.1145/3589737.3605989 (Association for Computing Machinery, New York, NY, USA, 2023).

Baccetti, V., Zhu, R., Kuncic, Z. & Caravelli, F. Ergodicity, lack thereof, and the performance of reservoir computing with memristive networks. Nano Express 5, 015021. https://doi.org/10.1088/2632-959X/ad2999 (2024).

Růžička, V. et al. Ravaen. GitHub https://github.com/spaceml-org/RaVAEn (2022). Accessed: May 1, 2024.

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. In International Conference on Learning Representations (2014).

McInnes, L., Healy, J. & Melville, J. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426 (2018).

Xia, S. et al. Effectiveness of the euclidean distance in high dimensional spaces. Optik 126, 5614–5619 (2015).

Korenius, T., Laurikkala, J. & Juhola, M. On principal component analysis, cosine and euclidean measures in information retrieval. Inf. Sci. 177, 4893–4905 (2007).

Ioffe, S. & Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning, 448–456 (2015).

Thompson, N. C., Greenewald, K., Lee, K., Manso, G. F. et al. The computational limits of deep learning. arXiv preprint arXiv:2007.05558 (2020).

Milano, G., Montano, K. & Ricciardi, C. In materia implementation strategies of physical reservoir computing with memristive nanonetworks. J. Phys. D Appl. Phys. 56, 084005 (2023).

Fan, D., Sharad, M., Sengupta, A. & Roy, K. Hierarchical temporal memory based on spin-neurons and resistive memory for energy-efficient brain-inspired computing. IEEE Trans. Neural Netw. Learn. Syst. 27, 1907–1919 (2015).

Demis, E. C. et al. Nanoarchitectonic atomic switch networks for unconventional computing. Jpn. J. Appl. Phys. 55, 1102B2 (2016).

Drusch, M. et al. Sentinel-2: Esa’s optical high-resolution mission for gmes operational services. Remote Sens. Environ. 120, 25–36 (2012).

Knight, E. J. & Kvaran, G. Landsat-8 operational land imager design, characterization and performance. Remote Sens. 6, 10286–10305 (2014).

Gorelick, N. et al. Google earth engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 202, 18–27 (2017).

Loeffler, A. et al. Topological properties of neuromorphic nanowire networks. Front. Neurosci. 14, https://doi.org/10.3389/fnins.2020.00184 (2020).

Davis, J. & Goadrich, M. The relationship between precision-recall and roc curves. In Proceedings of the 23rd international conference on Machine learning, 233–240 (2006).

García, M. L. & Caselles, V. Mapping burns and natural reforestation using thematic mapper data. Geocarto Int. 6, 31–37 (1991).

Huang, S., Tang, L., Hupy, J. P., Wang, Y. & Shao, G. A commentary review on the use of normalized difference vegetation index (ndvi) in the era of popular remote sensing. J. For. Res. 32, 1–6 (2021).

Filipponi, F. Bais2: Burned area index for sentinel-2. In Proceedings 2, 364 (2018).

Muhsoni, F. F., Sambah, A. B., Mahmudi, M. & Wiadnya, D. Comparison of different vegetation indices for assessing mangrove density using sentinel-2 imagery. GEOMATE J. 14, 42–51 (2018).

Acknowledgements

The authors acknowledge the use of the Artemis High Performance Computing resource at the Sydney Informatics Hub, a Core Research Facility of the University of Sydney. S.S. is supported by an Australian Government Research Training Program (RTP) Scholarship.

Author information

Contributions

S.S. and Z.K. designed the experiments. S.S. performed the experiments with guidance from C.P. and Z.K. S.S., C.P. and Z.K. analysed the results. S.S. drafted the manuscript with consultation from Z.K. All authors critically reviewed the manuscript. Z.K. supervised the project.

Ethics declarations

Competing interests

Z.K. is a founder and equity holder of Emergentia, Inc., which filed a non-provisional U.S. patent application (no. 18/334,243) for the software simulator described in this work. C.P. was employed by Trillium Technologies during this work. The other author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Smith, S., Purcell, C. & Kuncic, Z. Training-free AI for earth observation change detection using physics aware neuromorphic networks. Sci Rep 15, 35292 (2025). https://doi.org/10.1038/s41598-025-19057-9

DOI: https://doi.org/10.1038/s41598-025-19057-9