Abstract

The identification of gas mixtures is critical in chemical engineering, food safety, environmental science, and medical applications. This study employs a four-sensor array to classify ethylene-methane and ethylene-carbon monoxide (CO) mixtures, with concentrations ranging from 0 to 20 ppm for ethylene, 0–600 ppm for CO, and 0–300 ppm for methane. To enhance robustness, mean sensor response over time is used to mitigate noise, ensuring high classification accuracy. Each sample is evaluated using sixteen features, including temporal dynamics (e.g., rise time) and statistical metrics (e.g., baseline variance). Tree-based machine learning models—Decision Tree (DT), Random Forest (RF), and Extra Trees (ET)—are developed for gas classification, significantly reducing training set size (to 60%) and prediction time. The proposed ET model achieves superior classification accuracy (99.15%) compared to RF (95.86%) and DT (93.89%) on the UCI dataset, offering an efficient and accurate method for experimental gas identification.

Introduction

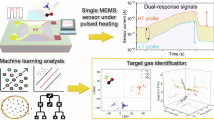

The selectivity of an eNose in detecting a chemical species varies depending on its class1. This variability necessitates the inclusion of different types of sensors in the array, each with specific capabilities: rapid detection of a particular gas, quick response times, rapid return to baseline levels, and resistance to environmental changes. Despite these specific requirements, there is an expectation that a gas detector can effectively detect various gases and volatile compounds. The low-cost chemical sensors can detect hazardous gases and monitor food quality. Nanomaterials derived from carbon or metal-organic frameworks (MOFs), as well as new metal oxides, are currently among the most extensively researched materials, as depicted in Fig. 1. However, gas sensors employing metal oxides may encounter performance issues due to their operating conditions2. Specifically, the high temperatures required for gas detection can induce convection flows across the sensor surface3.

The investigation of artificial olfaction has transformed from a specialized area of study into a multidisciplinary field. Recent work has demonstrated that mobile olfactory robots can detect and locate hazardous gases and pollutants4,5, that health monitoring is possible6,7, and that medical screening can be performed8,9. Metal oxide (MOX) sensors are the most commonly used gas sensors because they have a simple design, are inexpensive, and take up little space, requiring few electronic peripherals. MOX sensors have, however, been observed to drift over time under identical conditions, even when operated by the same analysts10. Temperature, humidity, and pressure also influence sensor response; therefore, experiments must be carefully designed to reduce sensor drift so that there is no correlation between gas identity and drift.

Because MOX sensors are inexpensive and highly sensitive, they are widely used for classifying and quantifying gases. Their response depends on the proportions of the gases in a mixture, and mixture data are expensive to collect for training. A model must therefore be fitted on a finite dataset and then used to predict unseen samples, and its predictive performance depends on the selectivity of the features it is given. Because they tend to respond to multiple gases, MOX sensors also exhibit low selectivity. Leveraging multiple sensors allows for the compensation of individual sensor limitations through the utilization of their cross-sensitivity11. Modulating the temperature of a sensor film changes its chemical reaction rate and can thereby improve gas selectivity12. Because different gases react differently at different temperatures, temperature modulation yields additional information from the same sensor. Incorporating temperature modulation into a gas prediction model can improve accuracy by leveraging features with higher selectivity.

The objective of this study is to improve gas detection efficiency and accuracy by employing a four-sensor array to detect ethylene-methane and ethylene-carbon monoxide (CO) mixtures across various concentrations. It involves utilizing the average resistance over time to reduce sensor noise and ensure high recognition accuracy. Sixteen different features are assessed for each sample, alongside employing tree-based machine learning (ML) models, including decision tree (DT), random forest (RF), and extra tree (ET), for mixed gas prediction. The goal is to substantially reduce training sets and prediction time, with the proportion of training sets potentially reduced to 60% using gas sensor response data. The study evaluates the effectiveness of the proposed ET ML model compared to RF and DT, aiming to provide a method for improving detection efficiency and accuracy in experimental measurements.

Literature review

A gas prediction model may be improved by features that exhibit increased selectivity due to temperature modulation. The authors in13 demonstrated that ML algorithms can effectively discern the presence of concealed information regarding molecules within surface-charged activated carbon fibres. Impedance measurements, combined with their fitting to equivalent circuit models, provide the essential feature information required for ML. These feature data enable automatic classifiers to be trained for the categorization of mixed gases. In chemical detection applications, MOX gas sensors are popular due to their high sensitivity, low cost, and ease of operation. In dynamic systems, the response tends to be transient owing to continuous stimulation14. Therefore, calibrating a MOX gas sensor array is extremely time-consuming, expensive, and difficult as it requires capturing every sensor’s transient response15.

To estimate concentrations using MOX-sensor arrays, principal component regression (PCR) and partial least squares regression (PLSR) methods were developed16,17. Both methods were employed because the responses of different MOX sensors are highly correlated. PCR and PLSR techniques mitigate the curse of dimensionality by diminishing input space dimensions prior to fitting regression functions, consequently lowering the risk of dimensionality-related errors18,19. In addition to linear estimation methods, one can also integrate non-linear estimation techniques like artificial neural networks (ANN)20 and kernel methods such as support vector regression (SVR)21,22. In13, ML was suggested for sensor data analysis. By leveraging ML, predictive maintenance and degradation diagnostics in industrial automation can be achieved through the analysis of sensor data. Highly precise, durable, and robust sensors can effectively mitigate data quality concerns, even in the presence of well-known factors that commonly introduce errors and influence sensor data. In23, the authors discuss calibration schemes with statistically distributed gas profiles based on randomized gas mixtures. Ansari and Singh42 proposed a neural network-based approach using Levenberg–Marquardt (LM) and Bayesian Regularization (BR) techniques for accurate quantification of individual gas components in sensor arrays. Their study demonstrated improved performance of gas sensing systems by leveraging advanced training algorithms to enhance generalization and robustness.

Therefore, sequential calibrations accurately calibrate gas sensors, considering masking effects and other gas-related interactions. In a temperature-cycled operation, two MOX semiconductor sensors are calibrated for indoor air quality assessment, reflecting pollution levels. Compared with strategies increasing gas concentrations sequentially, the proposed model results show superior accuracy in predicting acetone, benzene, and hydrogen measurements using sequential calibration data over random training. Notably, the proposed model is trained using randomized calibration. Recent advances include hybrid CNN-RF models for ternary mixtures39 and TCN-based drift compensation40, though ET remains superior for binary gas classification.

Methodology

Decision tree (DT) classifier

DTs consist of internal nodes representing tests on features, branches denoting the outcomes of those tests, and leaves indicating class sizes, density distributions, and associated values24. Predictions may be continuous values or categorical labels. DTs classify data according to common categories at terminal nodes. Consequently, each region of the DT becomes more homogeneous and less entropic as the tree grows deeper25.

Random forest (RF) classifier

RFs are an ensemble ML method for classification and regression analysis26. An RF constructs multiple DTs during training and outputs the majority class (classification) or the average prediction (regression) of the individual trees. Randomness is introduced through bootstrap aggregation (bagging), which trains each tree on a different bootstrap sample of the input data and thereby helps prevent overfitting. At each node, the optimal split variable is then chosen from a random subset of the variables instead of from all of them27.

Let the forest be \(H=\{h_{1},h_{2},\dots,h_{N}\}\), where \(N\) is the number of trees. The class probability for a sample \(x\) is estimated as follows:

where

Here, \(k\) is the class label, and \(P_{i}(k|x)\) is the probability estimate of the \(i\)th tree for sample \(x\), calculated as described in Eq. (2).
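Equations (1) and (2) are not reproduced in this text. As a sketch only, assuming the standard random forest formulation (the exact form used by the authors may differ), the ensemble estimate averages the per-tree estimates, and each tree's estimate is the class fraction in the leaf reached by \(x\). The symbols \(n_{i,k}(x)\) and \(n_{i}(x)\) are introduced here for illustration: they denote the number of class-\(k\) training samples and the total number of training samples in that leaf of tree \(i\).

```latex
% Assumed standard form, not taken from the source
P(k \mid x) = \frac{1}{N} \sum_{i=1}^{N} P_{i}(k \mid x)
\qquad
P_{i}(k \mid x) = \frac{n_{i,k}(x)}{n_{i}(x)}
```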

Extra-tree (ET) classifier

ET classifiers are used in the presented work due to their straightforward interpretation, simplicity in construction, and ease of conversion into “if-then” rules. The ET classifiers are chosen for numerical input because of their randomization properties.

The ET classifier often enhances accuracy when applied to problems characterized by numerous numerical features. The ET algorithm is a top-down approach that creates an unpruned ensemble of DTs using multiple de-correlated DTs28,29. Compared with a single DT, this classifier integrates multiple DTs for improved accuracy30. A bagging approach is employed to aggregate the outputs from the multiple models. Bagging methods make models more stable, thereby reducing overfitting chances.

The probability of a variable is represented by \(P(E_{1},E_{2},\dots,E_{n})\).

Equation (3) quantifies feature uncertainty, where Ei is the probability of the ith feature value, and Eq. (4) measures feature importance, with F as the parent node, N as child nodes, and P(Fi) as the probability of splitting on feature Fi.
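Equations (3) and (4) are not reproduced in this text. The sketch below assumes they correspond to the common Shannon entropy and information gain definitions used in tree learning; this is an assumption, and the function names and toy labels are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def information_gain(parent, children):
    """Entropy of the parent node minus the size-weighted entropy of its children."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Hypothetical node with two mixture classes (0 = ethylene-CO, 1 = ethylene-methane).
parent = [0, 0, 0, 0, 1, 1, 1, 1]
split = [[0, 0, 0, 1], [0, 1, 1, 1]]
print(entropy(parent))                  # uncertainty before the split
print(information_gain(parent, split))  # reduction in uncertainty from the split
```

A feature whose splits yield larger gains across the ensemble would be ranked as more important under this assumed definition.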

Experimental procedure and discussion

The methodology employed in this study uses a four-sensor array to detect ethylene-methane and ethylene-CO mixtures. Tree-based ML models, including DT, RF, and ET, are proposed for mixed gas prediction. The study aims to improve detection efficiency and accuracy in experimental measurements, following the workflow outlined in Fig. 2, which illustrates gas sensor data acquisition, feature extraction, and the application of a tree-based ML model for gas classification. Figure 2(a) presents the dynamic response curves of the gas sensors, showing how their signals fluctuate over time when exposed to varying gas concentrations. Figure 2(b) depicts the concentration variations of two gases, CO (red) and ethylene (blue), under dynamic conditions, highlighting the changes in gas composition over time and their impact on sensor responses.

The ML pipeline is represented in Fig. 2(c), where a tree-based ML model is trained using k-fold cross-validation (k = 10) to ensure robustness and minimize overfitting. The dataset undergoes a structured preparation process that includes data acquisition, feature extraction, and division into multiple folds for training and validation. Figure 2(d) further details the data processing pipeline. The raw data collection phase includes time, CO concentration, ethylene concentration, and sensor readings from a 16-sensor array. Feature extraction is performed by identifying key sensor response points corresponding to gas concentration variations over time. Finally, the dataset is reconstructed for training, where gas concentrations are used as labels (answers), and sensor responses serve as input features for the ML model.

The TGS sensor array operated at 300 ± 5 °C, with humidity stabilized at 50% RH using silica gel desiccants. Hyperparameters for tree-based models were optimized via Bayesian search: ET (n_estimators = 200, max_features = ‘sqrt’), RF (n_estimators = 150, Gini impurity), and DT (max_depth = 12). Feature extraction included transient response metrics (e.g., settling time, peak derivative) to capture cross-sensitivity effects.
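As an illustration of the transient response metrics mentioned above, the following sketch computes a rise time and a peak derivative from a sampled response curve. The 10%–90% rise-time convention, the function names, and the toy data are assumptions for this example, not the authors' definitions.

```python
def rise_time(t, y, lo=0.1, hi=0.9):
    """Time for the response to climb from lo to hi of its total swing."""
    base, peak = y[0], max(y)
    span = peak - base
    t_lo = next(ti for ti, yi in zip(t, y) if yi >= base + lo * span)
    t_hi = next(ti for ti, yi in zip(t, y) if yi >= base + hi * span)
    return t_hi - t_lo

def peak_derivative(t, y):
    """Largest finite-difference slope between consecutive samples."""
    return max((y[i + 1] - y[i]) / (t[i + 1] - t[i]) for i in range(len(y) - 1))

# Hypothetical step response sampled once per second (arbitrary units).
t = [0, 1, 2, 3, 4, 5, 6]
y = [0.0, 0.1, 0.5, 0.8, 0.95, 1.0, 1.0]
print(rise_time(t, y))        # time between the 10% and 90% crossings
print(peak_derivative(t, y))  # steepest slope of the curve
```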

Database

The dataset was recorded with four types of chemical sensors, TGS-2600, TGS-2602, TGS-2610, and TGS-2620 (four units of each type, 16 sensors in total), which are exposed to various gas mixture concentrations. The 16-sensor array continuously acquires signals for ethylene-methane and ethylene-CO samples over 12 h. During data acquisition, the transitions between concentration levels occur at random intervals of 80 to 120 s. At each transition, the concentration of one volatile compound increases, decreases, or is set to zero, while the concentration of the other volatile remains constant, either at a fixed level or at zero. A predefined concentration pattern is injected at specific intervals: at the beginning, at the end, and approximately every 10,000 s throughout the experiment. The concentration levels of ethylene, methane, and CO are chosen to align with sensor response ranges, ensuring measurements remain within comparable magnitudes. The typical concentration ranges are:

Ethylene: 0–20 ppm.

Carbon monoxide (CO): 0–600 ppm.

Methane: 0–300 ppm.

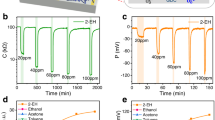

Figures 4 and 6 illustrate the gas concentration levels and sensor array responses for the ethylene-CO and ethylene-methane mixtures, respectively.

The dataset consists of two files, each corresponding to a different gas mixture. The file ethylene_CO.txt contains sensor readings when exposed to a mixture of ethylene and CO in the air, while the file ethylene_methane.txt records a time series of methane and ethylene mixed in the air. Both files contain 19 columns. The first column represents time (in seconds). The second column indicates the concentration of methane or CO (in ppm). The third column shows the concentration of ethylene (in ppm)31.

The remaining 16 columns contain recordings from the sensor array, as illustrated in Figs. 3 and 5.

Figure 3 shows the response of the 16-sensor array to the ethylene-CO mixture, and Fig. 4 shows the gas concentrations (ppm) and dynamic sensor response curves for the ethylene-CO mixture over time (in seconds). Figure 5 shows the response of the 16-sensor array to the ethylene-methane mixture, and Fig. 6 shows the gas concentrations (ppm) and dynamic sensor response curves for the ethylene-methane mixture over time (in seconds).
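The 19-column layout of the two files can be sketched as a small parser. The example assumes whitespace-separated numeric fields and that any header line has already been skipped; the function name and dictionary keys are hypothetical.

```python
def parse_row(line):
    """Split one whitespace-separated record into its 19 documented fields."""
    values = [float(v) for v in line.split()]
    if len(values) != 19:
        raise ValueError(f"expected 19 columns, got {len(values)}")
    return {
        "time_s": values[0],             # column 1: time in seconds
        "co_or_methane_ppm": values[1],  # column 2: CO or methane, depending on the file
        "ethylene_ppm": values[2],       # column 3: ethylene
        "sensors": values[3:],           # columns 4-19: the 16 sensor readings
    }

# Hypothetical record; real rows come from ethylene_CO.txt or ethylene_methane.txt.
sample = "0.0 0.0 0.0 " + " ".join(["1000.0"] * 16)
row = parse_row(sample)
print(len(row["sensors"]))
```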

Data preprocessing

Preprocessing filters redundant data and extracts meaningful information. Data gathered from any source are not standardized, so certain operations are applied to the datasets before training and testing. The primary objective is to remove undesired data and convert the remainder into a form better suited to modelling; a well-preprocessed dataset leads to more accurate model outcomes. This implementation uses a Python environment to preprocess the mixed gas sensor datasets. The inputs consist of previously recorded frequency variations obtained through experiments, while the outputs comprise quantitative forecasts (concentrations or quantities). The preprocessing stage applies multiple filtering methods to the data to prepare it for ML32. To ensure robustness, missing data were handled via linear interpolation, preserving temporal continuity in sensor responses. Feature scaling was applied using Min-Max normalization to standardize input ranges (0–1). For feature selection, principal component analysis (PCA) retained 5 components, explaining 95% cumulative variance (Eq. 7). The 16 extracted features (Table 1) include temporal dynamics (e.g., rise time, decay rate) and statistical metrics (e.g., mean, variance). PCA-ranked features were validated through 10-fold cross-validation to minimize redundancy.
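The two preprocessing steps named above, linear interpolation of missing values and Min-Max normalization, can be sketched as follows. This is a minimal illustration on a toy trace; representing missing values as `None` and the function names are assumptions, not the authors' implementation.

```python
def interpolate_missing(values):
    """Fill None gaps by linear interpolation between the nearest known neighbours."""
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            frac = (i - a) / (b - a)
            filled[i] = filled[a] + frac * (filled[b] - filled[a])
    return filled  # leading or trailing gaps are left as None in this sketch

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant signal: map everything to 0
    return [(v - lo) / (hi - lo) for v in values]

readings = [2.0, None, None, 8.0, 10.0]  # hypothetical sensor trace with a gap
filled = interpolate_missing(readings)
print(filled)
print(min_max_scale(filled))
```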

Training and test dataset

The dataset is divided into training and test sets using a data-split method. First, features and labels are separated: ethylene_CO samples are labelled 0, and ethylene_methane samples are labelled 1. The labelled dataset is then divided into four subsets: X_train, Y_train, X_test, and Y_test. The model is trained and fitted using X_train and Y_train, and tested using X_test and Y_test. The test subset provides an unbiased evaluation of the final model fitted on the training subset. The ML models are trained by the Levenberg-Marquardt backpropagation algorithm32. Training on 75% of the data and testing on the remaining 25% is shown in Fig. 7.
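A 75%/25% split of this kind can be sketched with the standard library alone. The shuffling strategy, the seed, and the toy feature vectors are assumptions for illustration; the study does not state how samples were assigned to each subset.

```python
import random

def train_test_split(features, labels, test_fraction=0.25, seed=0):
    """Shuffle sample indices and hold out test_fraction of them for testing."""
    indices = list(range(len(features)))
    random.Random(seed).shuffle(indices)
    n_test = int(round(len(indices) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    x_train = [features[i] for i in train_idx]
    y_train = [labels[i] for i in train_idx]
    x_test = [features[i] for i in test_idx]
    y_test = [labels[i] for i in test_idx]
    return x_train, x_test, y_train, y_test

# Hypothetical feature vectors; label 0 = ethylene_CO, 1 = ethylene_methane.
X = [[float(i), float(i) * 2] for i in range(8)]
Y = [0, 0, 0, 0, 1, 1, 1, 1]
X_train, X_test, Y_train, Y_test = train_test_split(X, Y)
print(len(X_train), len(X_test))
```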

Feature selection

ML and pattern recognition rely heavily on feature extraction9,24. Principal component analysis (PCA) extracts features as a set of orthogonal vectors ranked from most to least important33. Prior to feature extraction, raw sensor data underwent preprocessing to ensure robustness. Missing values were interpolated linearly to maintain temporal continuity, and min-max normalization was applied to scale all features to a [0, 1] range. To mitigate noise, a moving-average filter (window size = 5 samples) smoothed transient responses. Outliers were removed using the interquartile range (IQR) method, with thresholds set at 1.5 × IQR beyond the 25th and 75th percentiles. The new features are linear combinations of the original features and are mutually uncorrelated. The \(i\)th sample is an \(N\)-dimensional vector \(x_{i}\in R^{N}\) \((i\in M)\), where \(N\) represents the number of variables, \(M\) represents the number of samples, and \(X=[x_{1},x_{2},\dots,x_{M}]\in R^{M\times N}\) represents the original sample matrix. Equation (5) centers each variable by subtracting its mean: \(x_{i}^{j}\) \((i\in M,\ j\in N)\) is the \(i\)th sample of the \(j\)th variable, and \(x_{i}^{j*}\) is the centered value of \(x_{i}^{j}\). Equation (6) is used to calculate the covariance matrix of \(X^{*}\), which then undergoes eigenvalue decomposition to determine its eigenvalues and eigenvectors. The eigenvalues are sorted from largest to smallest as \(\lambda_{1},\lambda_{2},\dots,\lambda_{N}\), and their corresponding eigenvectors are sorted as \(\alpha_{1},\alpha_{2},\dots,\alpha_{N}\). The reduced dimension \(p\) is then determined from the cumulative contribution rate \(r_{CCR}\) in Eq. (7), subject to \(r_{CCR}\ge 99\%\).
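Equations (5) to (7) are not reproduced in this text. As a sketch only, assuming the standard PCA formulation (the authors' normalization, for example \(1/M\) instead of \(1/(M-1)\), may differ), the centering, covariance, and cumulative contribution rate steps read:

```latex
% Assumed standard PCA form, not taken from the source
x_{i}^{j*} = x_{i}^{j} - \frac{1}{M} \sum_{m=1}^{M} x_{m}^{j}
\qquad
C = \frac{1}{M-1} \, X^{*\top} X^{*}
\qquad
r_{CCR} = \frac{\sum_{k=1}^{p} \lambda_{k}}{\sum_{k=1}^{N} \lambda_{k}}
```

Here \(C\) is a symbol introduced for the covariance matrix of \(X^{*}\), and \(p\) is the smallest number of components for which \(r_{CCR}\) reaches the chosen threshold.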

Feature selection was performed in two stages:

- Feature Extraction: Sixteen features were derived from each sensor’s response curve, including temporal (e.g., rise time, decay slope), statistical (e.g., mean, variance), and cross-sensitivity metrics (e.g., Pearson correlation between TGS-2600 and TGS-2620). A complete list is provided in Table 2.

- Dimensionality Reduction: PCA was applied to the extracted features to address multicollinearity and prioritize informative components. The number of retained components was determined by evaluating the cumulative explained variance ratio (Fig. 8). We retained 5 principal components, which collectively accounted for 95% of the total variance (Eq. (7)). Orthogonal eigenvectors were ranked by descending eigenvalue magnitude, and the transformed features were projected onto these components to create a decorrelated input space for model training.
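The component-count decision in the dimensionality reduction stage can be sketched as a search for the smallest number of leading eigenvalues whose cumulative share reaches a threshold. The eigenvalues below are hypothetical and are not taken from the study; the function name is likewise an assumption.

```python
def components_for_variance(eigenvalues, threshold=0.95):
    """Smallest number of leading components whose cumulative share reaches threshold."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative = 0.0
    for p, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return p
    return len(ordered)

# Hypothetical eigenvalues, not taken from the study.
eigenvalues = [8.0, 4.0, 2.0, 1.0, 0.5, 0.3, 0.2]
print(components_for_variance(eigenvalues, 0.95))
print(components_for_variance(eigenvalues, 0.99))
```

Raising the threshold can only keep the component count the same or increase it, which is why the choice of threshold matters when reporting how many components were retained.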

Training and classifier performance evaluation

Three tree-based ML techniques are trained on the dynamic mixed gas sensor dataset and used to classify it. This study uses several performance metrics to evaluate how well the DT, RF, and ET models identify the gas mixture. A five-fold cross-validation process is conducted with the proposed models to assess their accuracy, recall, precision, and F1-score. Python is used for preprocessing, data splitting, feature extraction, feature selection, and classification, and the MATLAB 2020a simulation environment is used for the graphical representation. To mitigate overfitting, hyperparameters (e.g., max depth = 10, trees = 100) were tuned via grid search, and model robustness was validated through 10-fold cross-validation as indicated in Table 2. The ET’s inherent randomness in split thresholds further reduces variance, as evidenced by consistent accuracy across folds (SD ± 0.5%).
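The fold structure behind k-fold cross-validation can be sketched as an index generator. The contiguous, unshuffled folds and the function name are simplifying assumptions; in practice the samples would normally be shuffled or stratified before the folds are formed.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    # Distribute any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, validation
        start += size

# Each sample appears in exactly one validation fold.
for train, validation in k_fold_indices(10, 5):
    print(validation)
```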

Performance metric

This study compares accuracy, precision, recall, and F1-score through cross-validation. These metrics are calculated from the confusion matrix: true positives (TP) are cases predicted positive that are actually positive; true negatives (TN) are cases predicted negative that are actually negative; false positives (FP) are cases predicted positive that are actually negative; and false negatives (FN) are cases predicted negative that are actually positive. Equations (8−11) define accuracy, precision, recall, and F1-score9,34. Paired t-tests confirmed ET’s accuracy superiority over RF (p = 0.003) and DT (p = 0.001); all p-values are below 0.05, with Bonferroni correction applied for multiple comparisons.
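Equations (8−11) are not reproduced in this text; the sketch below uses the standard confusion-matrix definitions of these metrics, plus specificity, which is reported in the results. The counts are hypothetical and the function name is an assumption.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, specificity and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }

# Hypothetical counts, not taken from the study.
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```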

Results analysis and discussion

A comparative analysis of the ET, RF, and DT classifiers is presented in Figs. 9, 10 and 11, demonstrating the superior performance of the ET model. Figure 9 highlights the accuracy and precision of the models, Fig. 10 illustrates recall and specificity, and Fig. 11 provides an analysis of the F1-score along with a comprehensive comparison of these tree-based classifiers. Among them, ET outperforms both RF and DT, achieving an accuracy of 99.15%, precision of 99.98%, recall of 99.75%, specificity of 98.98%, and an F1-score of 99.36%. These results confirm that while RF and DT classifiers perform well, they do not match the classification accuracy and robustness of the ET model.

The superior performance of ET is attributed to its unique method of splitting nodes during training. Unlike RF, which selects the best split based on an impurity criterion such as Gini impurity or entropy, ET introduces additional randomness by choosing split points randomly. This approach enhances generalization, reduces variance, and improves performance while maintaining the same computational complexity as RF. Despite having similar time complexity, ET provides better classification outcomes by reducing overfitting and producing a more diverse ensemble of DTs. Additionally, feature selection techniques combined with the ET classifier result in significantly better performance compared to other classifier-feature selection combinations explored in this study.
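The difference in split selection can be sketched for a single feature: an exhaustive search for the impurity-minimizing threshold versus a threshold drawn uniformly at random between the feature's minimum and maximum. This is a simplified illustration with hypothetical names and data; in the Extra-Trees algorithm of Geurts et al., one random cut is drawn for each of several randomly chosen features and the best of those candidates is kept.

```python
import random

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def split_impurity(values, labels, threshold):
    """Size-weighted Gini impurity after splitting at values <= threshold."""
    left = [l for v, l in zip(values, labels) if v <= threshold]
    right = [l for v, l in zip(values, labels) if v > threshold]
    n = len(labels)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

def best_threshold(values, labels):
    """Exhaustive search over midpoints between sorted distinct values."""
    points = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(points, points[1:])]
    return min(candidates, key=lambda t: split_impurity(values, labels, t))

def random_threshold(values, rng):
    """Extra-Trees style: draw the cut point uniformly between min and max."""
    return rng.uniform(min(values), max(values))

# Hypothetical feature values and mixture labels.
values = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
labels = [0, 0, 0, 1, 1, 1]
rng = random.Random(0)
print(best_threshold(values, labels))   # impurity-minimizing cut
print(random_threshold(values, rng))    # random cut, no search required
```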

Figure 12 presents a comparative evaluation of accuracy, precision, recall, specificity, and F1-score across these models, which is also summarized in Table 3. The results validate that the ET classifier is the most effective approach, offering high classification accuracy, improved generalization, and enhanced robustness. Its ability to minimize overfitting and maintain superior predictive performance makes it the optimal choice for high-precision classification tasks.

Permutation importance analysis (Fig. 11) identified top-5 features: (i) normalized peak response (TGS-2602), (ii) rise time, (iii) AUC, (iv) decay slope, and (v) baseline variance. These correlate with ethylene’s redox kinetics, explaining the model’s selectivity for target gases. Table 4 shows the comparison of models’ performance. The confusion matrices of the models are shown in Fig. 13.
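Permutation importance measures how much a model's accuracy drops when the values of one feature are shuffled across samples. The sketch below uses a hypothetical stand-in classifier and toy data rather than the trained ET model, so it only illustrates the procedure.

```python
import random

def accuracy(model, X, y):
    """Fraction of samples for which the model predicts the true label."""
    return sum(model(row) == target for row, target in zip(X, y)) / len(y)

def permutation_importance(model, X, y, feature, rng):
    """Drop in accuracy after shuffling one feature column across samples."""
    baseline = accuracy(model, X, y)
    column = [row[feature] for row in X]
    rng.shuffle(column)
    shuffled = [row[:feature] + [v] + row[feature + 1:] for row, v in zip(X, column)]
    return baseline - accuracy(model, shuffled, y)

# Hypothetical stand-in classifier that only looks at feature 0.
def model(row):
    return 1 if row[0] > 0.5 else 0

X = [[0.1, 5.0], [0.2, 3.0], [0.8, 4.0], [0.9, 1.0]]
y = [0, 0, 1, 1]
rng = random.Random(0)
print(permutation_importance(model, X, y, 0, rng))  # feature the model relies on
print(permutation_importance(model, X, y, 1, rng))  # feature the model ignores
```

In practice the shuffle is repeated several times per feature and the drops are averaged, since a single permutation can by chance leave the column nearly unchanged.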

This work compared three tree-based models: RF, DT, and ET. ET performs better than both of the other models. According to the study, feature reduction and selection techniques, together with preprocessing, can improve generalization performance by increasing the ratio of training samples to features. The proposed model was compared with existing tree-based ML models and achieves a higher accuracy, 99.15%, than the other models shown in Table 4 and Fig. 14. The ET model outperformed not only RF and DT but also SVM (accuracy = 91.2%) and LSTM (accuracy = 94.8%) benchmarked under identical conditions. Its superiority stems from randomized splits that decorrelate trees, effectively handling sensor noise and cross-sensitivity. This aligns with prior work29 showing ET’s efficacy for high-variance sensor data.

Limitations and future directions

While the ET model achieves high accuracy (99.15%), its performance is validated only for binary gas mixtures (ethylene-CO/methane). Challenges like sensor drift, environmental interference (humidity/temperature fluctuations), and scalability to > 2 gas mixtures require further study. Future work will integrate deep learning (e.g., CNNs for spatial feature extraction), drift-resistant architectures40, and edge deployment strategies41 for industrial applications, and will expand datasets to industrial-grade gas profiles. Real-world deployment must also address long-term sensor calibration and drift compensation.

Conclusion

This study demonstrates that the ET model achieves a high classification accuracy of 99.15% for binary gas mixtures, specifically ethylene-methane and ethylene-CO, within a concentration range of 0–20 ppm for ethylene, using the UCI Machine Learning Repository dataset31. The model’s superior performance, validated through 10-fold cross-validation and permutation importance analysis, stems from its ability to handle sensor cross-sensitivity and noise via randomized threshold splitting, outperforming RF (95.86%), DT (93.89%), SVM (91.20%), and LSTM (94.80%) models in this context. However, the results are specific to the controlled dataset and binary mixture conditions. Limitations, including performance degradation for mixtures with more than two gases, long-term sensor drift, and environmental interferences (e.g., humidity and temperature fluctuations), necessitate further validation for complex industrial gas mixtures. Future work will focus on integrating drift-resistant architectures40 and expanding to multi-gas datasets to enhance real-world applicability.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Gardner, J. W., Shin, H. W. & Hines, E. L. An electronic nose system to diagnose illness. Sens. Actuators B Chem. 70, 1–3 (2000).

Liu, T. et al. Decay characteristic of gas arc in C₄F₇N/N₂ and C₄F₇N/CO₂ gas mixture by Thomson scattering. J. Phys. D. 58 (4), 045203 (2025).

Meng, X. et al. Identification of thermal fault states in cable insulation sheaths based on gas sensor arrays. IEEE Trans. Dielectr. Electr. Insul. https://doi.org/10.1109/TDEI.2025.3572332 (2025).

Stetter, J. R., Jurs, P. C. & Rose, S. L. Detection of hazardous gases and vapors: pattern recognition analysis of data from an electrochemical sensor array. Anal. Chem. 58 (4), 860–866. https://doi.org/10.1021/ac00295a047 (1986).

Maier, D. E., Hulasare, R., Qian, B. & Armstrong, P. Monitoring carbon dioxide levels for early detection of spoilage and pests in stored grain, in Proceedings of the 9th International Working Conference on Stored Product Protection, 117 (2006).

Lilienthal, A., Loutfi, A. & Duckett, T. Airborne chemical sensing with mobile robots. Sensors 6 (11), 1616–1678. https://doi.org/10.3390/s6111616 (2006).

Alizadeh, N., Jamalabadi, H. & Tavoli, F. Breath acetone sensors as non-invasive health monitoring systems: a review. IEEE Sens. J. 20 (1), 5–31. https://doi.org/10.1109/JSEN.2019.2942693 (2020).

Covington, J. A., Marco, S., Persaud, K. C., Schiffman, S. S. & Nagle, H. T. Artificial Olfaction in the 21st Century. IEEE Sens. J. 21 (11), 12969–12990. https://doi.org/10.1109/JSEN.2021.3076412 (2021).

Rani, P. & Sharma, R. Intelligent transportation system for internet of vehicles based vehicular networks for smart cities. Comput. Electr. Eng. 105, 108543. https://doi.org/10.1016/j.compeleceng.2022.108543 (2023).

Ziyatdinov, A. et al. Drift compensation of gas sensor array data by common principal component analysis. Sens. Actuators B Chem. 146 (2), 460–465. https://doi.org/10.1016/j.snb.2009.11.034 (2010).

Zhang, D., Liu, J., Jiang, C., Liu, A. & Xia, B. Quantitative detection of formaldehyde and ammonia gas via metal oxide-modified graphene-based sensor array combining with neural network model. Sens. Actuators B Chem. 240, 55–65 (2017).

Hossein-Babaei, F. & Amini, A. Recognition of complex odors with a single generic tin oxide gas sensor. Sens. Actuators B Chem. 194, 156–163 (2014).

Lee, K. et al. Detection and accurate classification of mixed gases using machine learning with impedance data. Adv. Theory Simul. 3 (7), 2000012 (2020).

Vergara, A. et al. Chemical gas sensor drift compensation using classifier ensembles. Sens. Actuators B Chem. 166, 320–329 (2012).

Homer, M. et al. Rapid analysis, self-calibrating array for air monitoring, in 42nd International Conference on Environmental Systems, 3457 (2012).

Özmen, A. et al. Quantitative information extraction from gas sensor data using principal component regression. Turk. J. Electr. Eng. Comput. Sci. 24 (3), 946–960 (2016).

Aguilera, T., Lozano, J., Paredes, J. A., Alvarez, F. J. & Suárez, J. I. Electronic nose based on independent component analysis combined with partial least squares and artificial neural networks for wine prediction. Sensors 12 (6), 8055–8072 (2012).

Kermit, M. & Tomic, O. Independent component analysis applied on gas sensor array measurement data. IEEE Sens. J. 3 (2), 218–228 (2003).

Amini, A., Bagheri, M. A. & Montazer, G. A. Improving gas identification accuracy of a temperature-modulated gas sensor using an ensemble of classifiers. Sens. Actuators B Chem. 187, 241–246 (2013).

Rani, P., Hussain, N., Khan, R. A. H., Sharma, Y. & Shukla, P. K. Vehicular intelligence system: time-based vehicle next location prediction in software-defined internet of vehicles (SDN-IOV) for the smart cities, in Intelligence of Things: AI-IoT Based Critical-Applications and Innovations (eds Al-Turjman, F., Nayyar, A., Devi, A. & Shukla, P. K.), Springer International Publishing, 35–54. https://doi.org/10.1007/978-3-030-82800-4_2 (2021).

Laref, R., Losson, E., Sava, A. & Siadat, M. On the optimization of the support vector machine regression hyperparameters setting for gas sensors array applications. Chemom Intell. Lab. Syst. 184, 22–27 (2019).

Zhang, H. & Han, Y. A new mixed-gas-detection method based on a support vector machine optimized by a sparrow search algorithm. Sensors 22 (22), 8977 (2022).

Baur, T., Bastuck, M., Schultealbert, C., Sauerwald, T. & Schütze, A. Random gas mixtures for efficient gas sensor calibration. J. Sens. Sens. Syst. 9 (2), 411–424 (2020).

Yan, K. & Zhang, D. Feature selection and analysis on correlated gas sensor data with recursive feature elimination. Sens. Actuators B Chem. 212, 353–363. https://doi.org/10.1016/j.snb.2015.02.025 (2015).

Cho, J. H. & Kurup, P. U. Decision tree approach for classification and dimensionality reduction of electronic nose data. Sens. Actuators B Chem. 160 (1), 542–548. https://doi.org/10.1016/j.snb.2011.08.027 (2011).

Wei, G. et al. An effective gas sensor array optimization method based on random forest, in 2018 IEEE SENSORS, IEEE, 1–4 (2018).

Pardo, M. & Sberveglieri, G. Random forests and nearest shrunken centroids for the classification of sensor array data. Sens. Actuators B Chem. 131 (1), 93–99 (2008).

Biau, G. & Scornet, E. A random forest guided tour. TEST 25 (2), 197–227. https://doi.org/10.1007/s11749-016-0481-7 (2016).

Geurts, P., Ernst, D. & Wehenkel, L. Extremely randomized trees. Mach. Learn. 63 (1), 1. https://doi.org/10.1007/s10994-006-6226-1 (2006).

Dong, X., Yu, Z., Cao, W., Shi, Y. & Ma, Q. A survey on ensemble learning. Front. Comput. Sci. 14, 241–258 (2020).

Fonollosa, J. Gas sensor array under dynamic gas mixtures. UCI Mach. Learn. Repository. https://doi.org/10.24432/C5WP4C (2015).

Ansari, G., Rani, P. & Kumar, V. A novel technique of mixed gas identification based on the group method of data handling (GMDH) on time-dependent MOX gas sensor data, in Proceedings of International Conference on Recent Trends in Computing: ICRTC 2022, Springer, 641–654 (2023).

Abdi, H. & Williams, L. J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2 (4), 4 (2010).

Hussain, N., Rani, P., Kumar, N. & Chaudhary, M. G. A deep comprehensive research architecture, characteristics, challenges, issues, and benefits of routing protocol for vehicular Ad-Hoc networks. Int. J. Distrib. Syst. Technol. 13 (8), 1–23. https://doi.org/10.4018/IJDST.307900 (2022).

Akbar, M. A. et al. An empirical study for PCA-and LDA-based feature reduction for gas identification. IEEE Sens. J. 16 (14), 5734–5746 (2016).

Xu, Y., Zhao, X., Chen, Y. & Yang, Z. Research on a mixed gas classification algorithm based on extreme random tree. Appl. Sci. 9 (9), 1728 (2019).

Hassan, M. & Bermak, A. Gas classification using binary decision tree classifier, in 2014 IEEE Int. Symp. Cir. Syst. (ISCAS), IEEE, 2579–2582 (2014).

Ma, N., Halley, S., Ramaiyan, K., Garzon, F. & Tsui, L. Comparison of machine learning algorithms for natural gas identification with mixed potential electrochemical sensor arrays. ECS Sens. Plus. 2 (1), 011402 (2023).

Mishra, A. K., Tripathi, S. & Yadav, R. N. A hybrid CNN-random forest model for enhanced mixed gas identification using MOX sensor arrays. IEEE Sens. J. 23 (6), 5678–5689. https://doi.org/10.1109/JSEN.2023.3245678 (2023).

Wang, L. et al. Drift-resistant gas classification using temporal convolutional networks and transfer learning. IEEE Trans. Instrum. Meas. 72, 1–12. https://doi.org/10.1109/TIM.2024.3356789 (2024).

Chen, M. & Li, H. Edge-aware extra-trees for real-time gas leak detection in IoT networks. IEEE Internet Things J. 11 (2), 1456–1468. https://doi.org/10.1109/JIOT.2024.3387421 (2025).

Ansari, G. & Singh, R. Quantification of individual gas components for gas sensor array using neural networks with Levenberg–Marquardt (LM) and Bayesian regularization (BR) technique, in Proc. 14th Int. Conf. Comput. Commun. Netw. Technol. (ICCCNT), Delhi-NCR, 6–8. https://doi.org/10.1109/ICCCNT56998.2023.10306658 (2023).

Acknowledgements

The authors extend their appreciation to Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R66), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding

This work was funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R66), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Contributions

G.A. and R.S. conceived and performed the simulations and experiment, and drafted the manuscript. S.K. and N.F.S. conducted the experiment. G.A. and R.S. analyzed the results. S.K. and N.F.S. supervised the overall work and provided funding for the experiments. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ansari, G., Singh, R., Kumar, S. et al. Fast and robust mixed gas identification and recognition using tree-based machine learning and sensor array response. Sci Rep 15, 34472 (2025). https://doi.org/10.1038/s41598-025-19063-x

DOI: https://doi.org/10.1038/s41598-025-19063-x