Abstract

This study presents a systematic review and comparison of geometry-based and regression model-based techniques for accurately estimating the physical properties of avocados, particularly mass, using a manually collected cross-sectional dataset. The aim is to support effective and cost-efficient grading, weighing, and packaging in the agricultural and food engineering industries. The Frustum method consistently outperforms the other approaches across all slice configurations, achieving its lowest errors at 20 slices: a Root Mean Square Percentage Error (RMSPE) of 4.24%, a Mean Absolute Percentage Error (MAPE) of 3.43%, a Coefficient of Determination (\(R^2\)) of 97.78%, and an Explained Variance Score (EVS) of 97.63%. This highlights its robustness and reliability for precise mass estimation without requiring large datasets or complex computations. To ensure the reliability of the regression models, hyper-parameter optimization and K-fold cross-validation were employed to identify optimal model configurations and minimize the risk of over-fitting. Among the regression-based methods, Ridge Regression also performs strongly, with an average RMSPE of 4.30%, MAPE of 3.52%, \(R^2\) of 97.71%, and EVS of 97.58% across 5 folds at 15 slices, making it a competitive and stable alternative. LASSO Regression and Elastic Net Regression delivered strong and consistent results across the evaluation metrics, followed by Linear Regression. In contrast, the MLP Regressor and Gradient Boosting Regressor exhibited notable variability between folds, indicating issues with stability and generalization.
The dimensions of the avocados were also estimated, with errors below 1.53% and model-fit values above 99%, showing that the models determine both the width and the length of the avocado with high accuracy. These findings offer a comparative basis for choosing a suitable model according to the characteristics of the task, including dataset availability, stability, and the required level of precision. The outcomes also show that these methodologies are applicable to state-of-the-art automation technologies, with a focus on robotic harvesting and grading solutions in precision agriculture and modern intelligent food processing systems.


Introduction

Avocado (Persea americana Mill.) is an economically and nutritionally valuable crop in tropical and subtropical regions. The fruit is a rich source of lipids, vitamins, minerals, and bio-active compounds, which makes it an essential part of human diets and the food industry worldwide [1]. Global avocado production has increased steadily over the past decades, with major producers including Mexico, Colombia, Peru, the Dominican Republic, Indonesia, and other producers in Asia [2,3]. Traditionally, post-harvest sorting and grading of avocados has been done manually, either by visually judging size, shape, and color or by measuring directly with calipers and weighing scales [4]. Although these methods are cheap and simple, they are slow, subjective, labor intensive, and prone to human error, especially with large quantities of fruit [5]. Manual sorting also cannot deliver the consistent throughput that supply chains demand. These constraints have prompted the use of modern technologies such as computer vision, image analysis, and machine learning, which enable non-destructive, objective, and high-throughput assessment of fruit characteristics [6,7,8]. Such strategies not only make post-harvest operations more accurate but also enhance automation in precision agriculture.

Estimating fruit mass and physical characteristics is a core component of agricultural technology, serving the goals of increasing yield, efficiency, and quality while decreasing costs. Growing global food demand, driven by an ever-growing population, makes efficiency imperative and the use of precision agriculture and automation inevitable. These technologies allow producers to manage resources effectively, meet customers' requirements, and conform to industry standards. Among the parameters involved, density and attributes such as size, shape, and surface area are important for quality assessment and for downstream operations such as sorting, grading, and packaging.

Early technological advances

Prior work in related areas such as computer vision and image processing has shown that estimating these physical features is challenging but feasible. For example, Lorestani and Tabatabaeefar (2006) [9] showed how length, width, and height could be used in models to determine the mass and volume of kiwis. Their coefficient of determination \(R^2\) reached 0.97, indicating that their approach to mass estimation is quite accurate. Emadi et al. (2009) [10] considered the mechanical characteristics of melons, including compression and shear stresses, in a study of size and shape characteristics. Their study not only offered correct classification (all varieties were statistically similarly classified, with p > 0.05) but also supported the optimization of automated grading systems by incorporating such mechanical properties into machine grading. Further work extended these methods to other fruit types, incorporating both visual and tangible qualities. Liming and Yanchao (2010) [11] proposed a non-destructive method to model the surface area and symmetry of strawberries for proper packing and aesthetic purposes. Their research achieved high accuracy, which in turn helped improve sorting and packaging: classification and color-grading accuracy exceeded 90%, with an object size detection error of no more than 5%. The methods developed by Clayton et al. (1995) [12] were aimed at estimating the surface area of apples, which is important for ripening behavior and shelf-life prediction. Their model was reported to give producers improved precision that is useful for inventory management.
These results highlight the significance of consumer-sensitive characteristics such as symmetry in post-harvest processes, as well as of properties such as fruit surface area in internal decision-making.

Recent technological advances

More recently, developments in technology have improved the efficiency of these estimation techniques. Sripaurya et al. (2021) [13] used a portable, non-contact, six digital channel near-infrared device to measure the soluble solids and maturity of bananas. Their system not only estimates size and shape precisely but also classifies seven ripeness levels well, with a standard error of prediction of 0.17% and a standard error for the color stages of 0.38%. Likewise, Chen et al. (2022) [14] proposed a method based on convolutional neural networks to detect citrus fruit maturity for selective robotic picking, motivated by the fact that citrus fruits on a tree are at different ripening stages. Fruit detection is done using YOLOv5, while maturity levels are classified by a 4-channel ResNet34 that takes both saliency maps and RGB images as input. Their approach achieved an accuracy of 95.07%, which is 3.14% and 18.24% higher than the VGG16 model and KNN, respectively. Oyefeso et al. (2018) [15] presented mathematical models to predict the mass and volume of sweet and Irish potatoes from their geometry. The most accurate models for both crops were derived from projected area and volume, with coefficients of determination of 98.10% for sweet potatoes and 96.80% for Irish potatoes. Such findings are useful for planning the effective handling and processing of these tuber crops. Qin et al. (2023) [16] proposed a novel Intelligent Flexible Manipulator System with Flexible Tactile Sensing (IFMSFTS), motivated by worldwide post-harvest kiwifruit losses of up to 25%. This system employs a flexible manipulator with a force sensor to evaluate firmness and relates firmness to ripeness in order to categorize kiwifruit maturity levels.
The authors applied Principal Component Analysis (PCA) to reduce the data dimension and used two classifiers, K-nearest neighbor (KNN) and support vector machine (SVM), for training and testing. The classification rates were 97.5% and 96.24%, respectively, so KNN performed slightly better than SVM. This technology is well suited to classifying ripeness and minimizing fruit losses, thereby encouraging sustainable kiwifruit production.

However, applying these techniques in large-scale, high-throughput production lines remains difficult. The sophisticated methods reviewed above tend to deliver high accuracy at the price of slow computation, which sharply constrains their use in the dynamic context of agriculture. While such approaches have produced good results mainly in laboratory environments, practical problems need solutions that are fast, accurate, and affordable. Overcoming these limitations is crucial for automated systems that must estimate fruit mass and physical characteristics under varied conditions.

2D vision-based approaches for physical characteristics estimation

Mass estimation, in particular, is vital for tasks such as sorting, grading, and packaging, which are integral to maintaining consistent quality and profitability. In contrast to ripeness detection and quality grading, little is known about the methods to estimate the mass of fruits non-destructively, quickly, and on a large scale.

Hieu M. Tran et al. (2023) presented a series of studies on measuring irregularly shaped fruits, focusing on the dimensions, volume, and mass of starfruit (Averrhoa carambola). These physical characteristics are important for the design of grading, sizing, and packaging equipment in the agricultural industry. Traditional approaches struggle with objects of complex shape and may require several measurements or complex three-dimensional modeling. Tran et al. therefore proposed methods based on image processing and simple mathematical algorithms that achieved high accuracy while remaining practical to implement. Both studies develop solutions that bypass the traditional shortcomings of volume measurement, and they report near-perfect accuracy for volume and mass estimation, particularly for starfruit and similar fruits in industrial applications.

The first study by Tran et al. [7] measured the physical attributes of starfruit directly from photographs, using a dual-camera setup to obtain top-view and body-view images. Image processing techniques, together with the conical frustum formula and other mathematical methods, were used to slice the starfruit virtually along its principal axis and compute its volume. Mass was then estimated by combining the volume with the fruit density, since mass is proportional to volume. The method was tested using 255 training samples and achieved an overall mean accuracy of 99.16% for volume and 98.59% for mass. This approach illustrates why simplicity and reconfigurability are advantageous in manufacturing environments and shows that it can form a solid basis for developing fruit measurement gauges. In the second investigation, Tran et al. [8] reduced the complexity of the system by using a single camera while still retaining high accuracy. The process was divided into two phases. In the first phase, a photograph was taken from the primary viewpoint and image processing was applied to slice the fruit virtually along the sagittal line. The volume of each slice was estimated using the disc and conical frustum methods, and the sum of these volumes gave the total volume. In the second phase, a linear regression (\(R^2\) = 0.9205) was used to predict mass from volume. The simplified workflow was tested on 300 samples and achieved 99% accuracy for volume and mass, making it a simple and usable method for measuring starfruit in industrial use.

In addition, Huynh et al. (2022) [17] designed a simple vision system with a single top-view camera to estimate sweet potato attributes. Using geometrical transformations, the product is sliced virtually along its longitudinal axis, and the total volume is obtained as the sum of the slice volumes. Because volume and weight are strongly related, volume provides an excellent estimate of mass; the system achieved an accuracy of 96% for volume and 95% for weight. The system is fast and does not require computationally heavy 3D reconstruction, which makes it practical and cost-effective for real-world applications.

Implications and applications

Although geometric and statistical methods for predicting the weight of agricultural produce have advanced recently, work dealing specifically with avocado fruit is scarce. Previous investigations also based their predictions on simple geometric variables, which limits the scope of the results and their applicability to large-scale production. To bridge this gap, the present study proposes two complementary solutions for determining avocado mass: a geometry-based frustum approach and machine learning regression on morphological features and image-based attributes. The goal is to improve the precision and robustness of avocado weight prediction in a manner that remains feasible for practical farming. The study addresses the following research questions: (1) How well can geometric and image-based characteristics improve mass prediction accuracy over traditional prediction methods? (2) Which regression algorithms give the best avocado weight estimates? (3) What do the proposed methods contribute to automation opportunities such as vision-based weighing systems or robotic harvesting systems? To address these questions, Section 2 describes the materials and methods, where the measurement techniques, model frameworks, validation strategies, and relevant formulas are detailed. Section 3 reports the results and discussion, including performance analysis of the proposed approaches and comparisons with existing studies. Finally, Section 4 concludes with the main findings, implications for agricultural automation, and future research directions.

Materials and methods

This section presents the materials and methodological framework of the study. It begins with the hardware setup employed for image acquisition and physical measurements (Section 2.1), followed by an overview of the dataset and the associated preprocessing tasks (Section 2.2). The core of this study lies in two complementary methodological approaches: the geometry-based frustum approach (Section 2.3) and the model-based regression approach (Section 2.4), which are described in detail with their respective assumptions, formulations, and computational procedures. Finally, the evaluation metrics and validation strategies are presented (Section 2.5), providing the basis for a systematic comparison between the two approaches.

Figure 1 illustrates the overall framework for estimating the length, width, and mass of avocados using two distinct methodologies: the Geometry-based Approach and the Model-based (Machine Learning) Approach. The process begins with data collection, in which sample images are captured together with the corresponding physical measurements. In the subsequent Image Processing stage, each object is rotated so that its longest edge is vertical, which removes orientation-related distortion before the dimensions are measured.

In the Geometry-based Approach, specifically the frustum method, the assessment relies on the direct proportionality between mass and volume. It involves assessing the circularity of the cross-sections and computing the volume of the object, which together give a direct calculation of mass from geometrical considerations. The Model-based Approach, by contrast, feeds the processed features into regression models, namely Linear Regression, Ridge Regression, LASSO Regression, Elastic Net Regression, MLP Regressor, and Gradient Boosting Regressor. Hyperparameter optimization is performed so that each model operates at its best configuration, and K-fold cross-validation is used to validate the models reliably. Finally, the Evaluation and Comparison stage assesses both approaches in terms of processing time, RMSPE, MAPE, coefficient of determination (\(R^2\)), and explained variance score (EVS). RMSPE and MAPE capture different aspects of estimation accuracy: RMSPE squares the percentage errors, which gives larger errors more weight and makes it sensitive to outliers, whereas MAPE averages the absolute percentage differences between observed and predicted values and therefore reflects the typical size of the estimation error. In contrast, model-fit measures such as \(R^2\) and EVS indicate how well the predictors explain the variation in the target variable. This two-part evaluation gives both an accurate judgment of individual estimates and a coherent view of the model as a whole. The framework thus combines geometric reasoning, predictive modeling, and hyperparameter tuning to provide accurate and reliable mass estimation.
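The four evaluation metrics named above can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard textbook definitions (the formulas actually used in the study are those given in its evaluation section); the function names and the three mass values are hypothetical.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def rmspe(y_true, y_pred):
    """Root mean square percentage error, in percent."""
    terms = [((t - p) / t) ** 2 for t, p in zip(y_true, y_pred)]
    return 100.0 * math.sqrt(_mean(terms))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    terms = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * _mean(terms)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = _mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def explained_variance(y_true, y_pred):
    """Explained variance score: 1 - Var(residuals) / Var(y_true)."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_r, mean_t = _mean(residuals), _mean(y_true)
    var_r = _mean([(r - mean_r) ** 2 for r in residuals])
    var_t = _mean([(t - mean_t) ** 2 for t in y_true])
    return 1.0 - var_r / var_t


# Hypothetical masses in grams, for illustration only.
mass_true = [200.0, 300.0, 400.0]
mass_pred = [210.0, 291.0, 400.0]

print(rmspe(mass_true, mass_pred), mape(mass_true, mass_pred))
print(r2_score(mass_true, mass_pred), explained_variance(mass_true, mass_pred))
```

Under these definitions EVS differs from \(R^2\) only in that it ignores a constant bias in the residuals, so EVS is never smaller than \(R^2\) on the same data.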

Hardware setup

The data collection process relied on a purpose-built hardware system that captures a top view of the object under controlled lighting conditions, as illustrated in Fig. 2. An A4 sheet (297 mm \(\times\) 210 mm) on the bottom plate served as the background reference, and a digital camera (Canon EOS 60D with a Tamron 17–50 F2.8 lens) was mounted on the top frame at a distance of 400 mm from the bottom plate. Each fruit was placed centrally on the A4 sheet to capture a single top-view image. A total of 250 avocado samples were collected, corresponding to 250 images. The imaging chamber had dimensions of 297 mm \(\times\) 210 mm \(\times\) 400 mm (volume \(\approx 25,000 \,\text {cm}^3\)). To reduce shadows and enhance image quality, LED strips (12VDC, 60 LED/m, 7W/m, 6500 K) with a total luminous flux of approximately 300 lm were installed along the inner edges of the box. This arrangement ensured uniform lighting and minimized shadow effects, facilitating subsequent image pre-processing and analysis.

Within the chamber, each specimen is positioned on the bottom plate and photographed, and the resulting image is calibrated to obtain the physical size of a pixel. This calibration step is what makes accurate estimation of the object's dimensions, volume, and mass possible. All image processing is implemented in Python, with OpenCV used for object detection and feature extraction. Processing is carried out on a computer with a 10th-generation Intel Core i7 processor and 16 GB of RAM. The repeatability of this setup makes it well suited to capturing the geometrical and structural parameters of objects such as avocados for subsequent analysis with both the geometry-based and machine learning methods.
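As a rough illustration of the calibration step, the known size of the A4 reference sheet can be used to convert pixel measurements to millimetres. The sketch below is an assumption about how such a scale factor could be derived, not the study's actual procedure; the pixel counts and the function name are hypothetical.

```python
A4_LONG_MM = 297.0   # long side of the A4 reference sheet
A4_SHORT_MM = 210.0  # short side of the A4 reference sheet


def mm_per_pixel(sheet_long_px, sheet_short_px):
    """Average the scale obtained from both sides of the sheet."""
    scale_long = A4_LONG_MM / sheet_long_px
    scale_short = A4_SHORT_MM / sheet_short_px
    return (scale_long + scale_short) / 2.0


# Hypothetical example: the sheet spans 2970 x 2100 pixels in the image.
scale = mm_per_pixel(2970, 2100)

# A fruit measured as 1500 pixels long then corresponds to this many mm.
length_mm = 1500 * scale
print(scale, length_mm)
```

Averaging the two sides is a simple design choice; a noticeable difference between the two scales would suggest lens distortion or a tilted camera.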

Dataset overview

Dataset collection

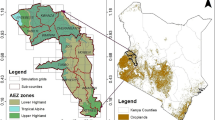

This study used the long-shaped avocado 034 variety, the most popular avocado variety in the Central Highlands of Vietnam. The samples varied in shape and dimensions, as summarized statistically in Table 1.

The volume, mass, length, and width of each sample were determined using standard laboratory procedures. Linear dimensions were measured with a digital caliper (Vinasemi-150, Vietnam; readability 0.10 mm), and mass was measured with a digital weighing scale (SF-400; readability 0.10 g). Volume was measured by the water displacement method, which other researchers have found reliable [8]. The measured variables show moderate variation: volume ranges from 117 ml to 488 ml and mass from 118 g to 479 g. Length, which ranged from 126.40 mm to 269.16 mm, was more variable than width, which ranged from 47.83 mm to 83.88 mm. These diverse measurements provide a solid basis for estimating and analyzing the geometrical characteristics of avocados, and their mass in particular, with both geometric and machine learning methodologies.

Image processing

Figure 3 illustrates the preprocessing and orientation alignment pipeline for an avocado sample.

Figure 3a shows the raw image of the avocado. No noise-reduction pre-processing is applied, because the image is captured in a controlled environment with good lighting and background. Next, in Fig. 3b, the avocado is segmented from the background with the deep learning model U2-Net [18], which produces a binary mask. This step ensures that nothing other than the object of interest is processed further. Figure 3c shows how the longest side of the object, which closely resembles an ellipse, is determined [19,20]. First, the boundary points \((x_i, y_i)\) are extracted using the Canny Edge Detector. An ellipse is then fitted to these points. The general equation of an ellipse is given by:

\[Ax^2 + Bxy + Cy^2 + Dx + Ey + F = 0\]

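where \(A, B, C, D, E, F\) are the parameters of the ellipse, subject to the condition \(B^2 - 4AC < 0\). These parameters are estimated by least-squares fitting, where the design matrix for \(n\) boundary points is constructed as:

\[\textbf{D} = \begin{bmatrix} x_1^2 & x_1 y_1 & y_1^2 & x_1 & y_1 & 1 \\ \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\ x_n^2 & x_n y_n & y_n^2 & x_n & y_n & 1 \end{bmatrix}\]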

The parameter vector \(\textbf{p} = [A, B, C, D, E, F]^T\) is found by minimizing the residual errors between the data points and the fitted ellipse:

\[\min_{\textbf{p}} \left\| \textbf{D}\textbf{p} - \textbf{0} \right\|^2\]

where \(\textbf{D}\) is the design matrix built from the boundary coordinates, \(\textbf{D}\textbf{p}\) is the value of the ellipse equation at each boundary point for the parameter vector \(\textbf{p}\), and \(\textbf{0}\) is the ideal response in which every point lies exactly on the ellipse. In practice, a normalization constraint on \(\textbf{p}\) (for example \(\Vert \textbf{p}\Vert = 1\)) is needed to exclude the trivial solution \(\textbf{p} = \textbf{0}\). Once the ellipse has been fitted, its geometric properties can be calculated. The semi-major axis (\(a\)) and semi-minor axis (\(b\)) are derived using:

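The center of the ellipse, \((x_0, y_0)\), is determined by solving the linear system:

\[\begin{bmatrix} 2A & B \\ B & 2C \end{bmatrix} \begin{bmatrix} x_0 \\ y_0 \end{bmatrix} = \begin{bmatrix} -D \\ -E \end{bmatrix}\]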

and the orientation of the ellipse is found by computing the eigenvectors of the covariance matrix:

The eigenvector corresponding to the largest eigenvalue gives the direction of the major axis, and the angle (e.g., \(5.20^\circ\) as shown in Fig. 3c) is calculated as:

Finally, the longest side of the object, which corresponds to the length of the major axis, is computed as \(2a\). In Fig. 3d, the object is rotated to the 0\(^\circ\) position so that the major axis is vertical and all samples are oriented the same way. This alignment is necessary to make the data uniform, so that dimensions can be measured reliably for the geometry-based approach and features can be extracted consistently for the model-based approach. Lastly, the length and width of the avocado are obtained from the axis-aligned bounding box that encloses the object on the blank A4 background, as shown in Fig. 3e.
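The orientation and alignment steps can be sketched without any image library by working directly on boundary points. The sketch below is a simplified stand-in for the pipeline described above: it estimates the major-axis direction from the covariance matrix of the boundary points (using the closed-form angle of the dominant eigenvector of a 2 × 2 symmetric matrix) rather than from a fitted conic, and it uses mathematical axes with \(y\) pointing up, whereas image coordinates have \(y\) pointing down. The synthetic ellipse and all function names are hypothetical.

```python
import math


def major_axis_angle(points):
    """Angle in degrees between the major axis and the x-axis."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Direction of the eigenvector with the largest eigenvalue of
    # the covariance matrix [[sxx, sxy], [sxy, syy]].
    return math.degrees(0.5 * math.atan2(2.0 * sxy, sxx - syy))


def rotate(points, angle_deg, center):
    """Rotate points counter-clockwise by angle_deg about center."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    cx, cy = center
    return [(cx + (x - cx) * c - (y - cy) * s,
             cy + (x - cx) * s + (y - cy) * c) for x, y in points]


def ellipse_points(a, b, tilt_deg, n=360):
    """Synthetic boundary: ellipse with semi-axes a, b tilted by tilt_deg."""
    t = math.radians(tilt_deg)
    pts = []
    for k in range(n):
        u = 2.0 * math.pi * k / n
        x, y = a * math.cos(u), b * math.sin(u)
        pts.append((x * math.cos(t) - y * math.sin(t),
                    x * math.sin(t) + y * math.cos(t)))
    return pts


# Hypothetical boundary: semi-axes 100 and 40 units, tilted by 30 degrees.
boundary = ellipse_points(100.0, 40.0, 30.0)
angle = major_axis_angle(boundary)

# Rotate so that the major axis becomes vertical, then read the length
# and width from the axis-aligned bounding box.
aligned = rotate(boundary, 90.0 - angle, (0.0, 0.0))
length = max(y for _, y in aligned) - min(y for _, y in aligned)
width = max(x for x, _ in aligned) - min(x for x, _ in aligned)
print(angle, length, width)
```

On real images, OpenCV's `cv2.fitEllipse` returns the center, axis lengths, and rotation angle of the fitted ellipse directly.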

Geometry-based approach

Linear relationship between mass and volume evaluation

The scatter plot (shown in Fig. 4) represents the relationship between the mass and volume of avocado samples, with a fitted linear regression model denoted by the equation:

where \(y\) denotes the mass (g) and \(x\) denotes the volume (ml). The coefficient of determination (\(R^2\) = 98.66%) indicates a strong positive linear relationship, so volume is a reliable indicator of mass. The slope of 1.01 means that each 1 ml increase in volume corresponds to an increase in mass of approximately 1.01 g. Given this strong relationship, volume can be regarded as a viable parameter for estimating the mass of avocados.

Circularity cross-sectional shape assessment

To assess the circularity of the cross-sectional shape of avocado fruits, cross-sections were collected manually (4–5 cross-sections per fruit, giving a total of 1100 cross-sections for 250 samples) and compared with their circumscribed circles, as shown in Fig. 5. The raw slices of the avocado in Fig. 5a are first binarized to segment the cross-sectional regions of interest. The fitEllipse method explained above is then used to obtain the parameters of the ellipse drawn in Fig. 5b, namely its center and major radius, from which the circumscribed circle in Fig. 5c is constructed. The black area represents the actual cross-section, whose area is compared with that of the red circumscribed circle. Across all cross-sectional layers, the average overlap ratio (area of the actual cross-section relative to the circumscribed circle) was 98.20%, demonstrating that the cross-sections of avocados are almost perfectly circular. Because this ratio is consistently high across all 1100 cross-sections, the height of the avocado at each slice is nearly equal to its width, so the width seen from the top view can be taken as the diameter of the circle shown in Fig. 5c.
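The overlap ratio described above is simply the cross-section area divided by the area of the circle whose radius is the major radius of the fitted ellipse. A minimal sketch, using a hypothetical elliptical cross-section instead of a segmented image:

```python
import math


def overlap_ratio(section_area, major_radius):
    """Cross-section area relative to its circumscribed circle."""
    return section_area / (math.pi * major_radius ** 2)


# Hypothetical cross-section: an ellipse with semi-axes 30.0 mm and 29.5 mm.
section_area = math.pi * 30.0 * 29.5
ratio = overlap_ratio(section_area, 30.0)
print(f"{100.0 * ratio:.2f}%")
```

For an exactly elliptical section the ratio reduces to the minor radius divided by the major radius. With a binary mask, `section_area` would instead be the number of foreground pixels multiplied by the area of one pixel.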

Volume calculation

This algorithm estimates the volume of an object by decomposing it into elementary frustums of right circular cones, as shown in Fig. 6. Each frustum represents one slice of the object, and its tapered sides follow the object's outline, giving a much tighter approximation of the shape. The total volume of the object is computed by adding the volumes of these frustums. The volume of each frustum is calculated using the following formula [8]:

\[V_i = \alpha \, \frac{\pi l}{3} \left( R_i^2 + R_i r_i + r_i^2 \right)\]

where \(\alpha = 98.20\%\) is the ratio of the object's cross-sectional area to the area of the circumscribed circle, which reflects its high circularity, \(l\) is the frustum length, and \(R_i\) and \(r_i\) are the bottom and top radii of the \(i^{th}\) frustum. The calculated volume is then converted to mass using the mass-volume relationship established in the previous step, which completes the estimation of the object's physical attributes.
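The volume computation can be sketched as follows, assuming the standard frustum volume \(\pi l (R^2 + Rr + r^2)/3\) scaled by the circularity factor \(\alpha\). The radius profile, the slice length, and the use of the slope 1.01 g/ml alone to convert volume to mass (ignoring any intercept of the fitted line) are illustrative assumptions.

```python
import math

ALPHA = 0.9820  # circularity factor reported in the text


def frustum_volume(R, r, l, alpha=ALPHA):
    """Volume of one conical frustum, scaled by the circularity factor."""
    return alpha * math.pi * l / 3.0 * (R * R + R * r + r * r)


def total_volume(radii, slice_len, alpha=ALPHA):
    """Sum the frustums between consecutive radii along the major axis."""
    return sum(frustum_volume(radii[i], radii[i + 1], slice_len, alpha)
               for i in range(len(radii) - 1))


# Hypothetical radius profile in mm, sampled every 20 mm along the fruit.
radii_mm = [0.0, 20.0, 30.0, 35.0, 35.0, 30.0, 20.0, 0.0]
volume_ml = total_volume(radii_mm, 20.0) / 1000.0  # mm^3 -> ml

# Illustrative conversion using only the reported slope of about 1.01 g/ml.
mass_g = 1.01 * volume_ml
print(volume_ml, mass_g)
```

Increasing the number of slices shortens each frustum and lets the stacked frustums follow the outline more closely, at the cost of more width measurements per image.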

Model-based approach

Features extraction

The features used by the estimation models describe the geometry, shape profile, and proportions of the object in enough detail to permit accurate and realistic predictions, as listed in Table 2. The global geometric features \(Mean\_Width\) and \(Std\_Width\) capture the overall size of the object and its variability. Slice-level quantities such as \(Middle\_Width\_Part\) and \(Length\_Part\) characterize the cross-sectional profile and the lengths of the vertical segments locally, which helps capture changes in shape along the object. Quartile features, computed for each of four sections (Q1-Q4), give \(Mean\_Width\), \(Std\_Width\), \(Width\_Trend\), and \(Length\_Ratio\) as local descriptors of size distribution, variability, and proportionality. \(Width\_Trend\), derived from the differences between width measurements within each quartile, shows where the object widens or narrows, while \(Length\_Ratio\) normalizes each quartile's length by the total length so that proportions can be compared. Together, these features provide a rich, multi-faceted view of the global size of the object, its local irregularities, and the behavior within each region, which improves the models.
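A possible way to compute features of this kind from a list of slice widths is sketched below. The exact definitions are those of Table 2; here the population standard deviation, the mean of consecutive differences as the trend, equal slice lengths, and the dictionary key names are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def width_features(widths, slice_len):
    """Global and per-quartile width features (needs at least 4 slices)."""
    n = len(widths)
    total_len = n * slice_len
    feats = {"Mean_Width": mean(widths), "Std_Width": pstdev(widths)}
    q = n // 4
    for k in range(4):
        start = k * q
        end = (k + 1) * q if k < 3 else n  # last quartile takes the remainder
        part = widths[start:end]
        diffs = [b - a for a, b in zip(part, part[1:])]
        feats[f"Q{k + 1}_Mean_Width"] = mean(part)
        feats[f"Q{k + 1}_Std_Width"] = pstdev(part)
        feats[f"Q{k + 1}_Width_Trend"] = mean(diffs) if diffs else 0.0
        feats[f"Q{k + 1}_Length_Ratio"] = len(part) * slice_len / total_len
    return feats


# Hypothetical widths in mm for 8 slices, each 5 mm long.
widths_mm = [20.0, 30.0, 40.0, 50.0, 60.0, 60.0, 50.0, 40.0]
features = width_features(widths_mm, 5.0)
print(features["Mean_Width"], features["Q1_Width_Trend"], features["Q4_Width_Trend"])
```

A positive trend marks a quartile in which the fruit widens and a negative trend one in which it narrows.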

K-fold cross-validation

K-Fold Cross-Validation [21] is a robust technique for testing the effectiveness of machine learning models and consists of dividing the data into \(k\) equal parts. The model is trained on \(k-1\) folds and tested on the remaining fold, so that every fold serves as test data exactly once and as training data in the other iterations. This process is repeated \(k\) times, and the results are averaged to provide a comprehensive assessment of the model's performance. K-Fold Cross-Validation has several advantages. First, it makes the best use of the collected data, since every sample is used for both training and testing over the course of the iterations. Second, it reduces the risk of over-fitting and gives a more general picture of model performance. Last but not least, it avoids relying on one specific train-test split and gives a better view of how consistent the model is. This study uses 5-fold cross-validation: the data are split into five folds, and the model is trained and tested five times, with each fold used for testing exactly once.
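The splitting procedure can be sketched in plain Python. The generator below is an illustrative implementation, not the one used in the study (libraries such as scikit-learn provide an equivalent `KFold` class); the seed and function name are hypothetical.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists; each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


# 250 samples and 5 folds, as in the study: 200 train / 50 test per iteration.
splits = list(k_fold_indices(250, k=5))
for train, test in splits:
    print(len(train), len(test))
```

The per-fold metric values would then be averaged to obtain the reported cross-validated scores.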

Linear regression

Linear Regression [22] is a statistical technique for modeling the relationship between continuous variables, in which the dependent variable responds to changes in the independent variable. The technique seeks the straight line that minimizes the discrepancies between the observed and estimated values. For a single independent variable, the model can be written as:

\[y = \beta _0 + \beta _1 x + \epsilon\]

where \(y\) is the dependent variable, \(x\) is the independent variable, \(\beta _0\) is the y-intercept, \(\beta _1\) is the slope of the line, and \(\epsilon\) is the error or residual. When multiple independent variables are involved, the model generalizes to:

\[y = \beta _0 + \beta _1 x_1 + \beta _2 x_2 + \cdots + \beta _p x_p + \epsilon\]

where \(x_1, x_2, \ldots , x_p\) are the independent variables, and \(\beta _1, \beta _2, \ldots , \beta _p\) are their respective coefficients. The coefficients are estimated with the Ordinary Least Squares (OLS) method, which minimizes the sum of the squared residuals. Linear Regression is commonly used in economics, biology, and engineering for tasks such as prediction, trend analysis, and evaluating the relationships between variables. It is easy to understand and explain, which makes it a good regression tool, although it is less effective for highly complex or nonlinear relationships.
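For a single predictor, the OLS estimates have a well-known closed form: the slope is the ratio of the covariance of \(x\) and \(y\) to the variance of \(x\), and the intercept follows from the means. The sketch below applies it to three hypothetical volume and mass pairs chosen for easy arithmetic; they are not data from the study.

```python
def ols_fit(x, y):
    """Closed-form simple linear regression: returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical volumes (ml) and masses (g).
volume = [100.0, 200.0, 300.0]
mass = [103.0, 204.0, 305.0]
beta0, beta1 = ols_fit(volume, mass)
print(beta0, beta1)
```

With several predictors the same least-squares criterion is solved with matrix methods, for example the normal equations or a QR decomposition.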

LASSO regression

LASSO Regression, or Least Absolute Shrinkage and Selection Operator [23], is a regularized linear regression technique that incorporates \(L_1\) regularization to improve the generalization of predictive models. The method addresses over-fitting by penalizing the absolute values of the regression coefficients, effectively shrinking them towards zero. The objective function for LASSO is defined as:

\[\min_{\beta} \left\{ \sum _{i=1}^{n} \left( y_i - \sum _{j=1}^{p} x_{ij} \beta _j \right)^2 + \lambda \sum _{j=1}^{p} |\beta _j| \right\}\]

where:

- \(y_i\) represents the observed response for the \(i\)-th sample.
- \(x_{ij}\) denotes the \(j\)-th feature of the \(i\)-th sample.
- \(\beta _j\) are the regression coefficients.
- \(\lambda\) is the regularization parameter controlling the trade-off between model fit and coefficient shrinkage.

The \(L_1\) penalty term \(\sum _{j=1}^{p} |\beta _j|\) imposes sparsity on the regression coefficients, which makes LASSO especially useful for data that are multi-collinear or contain many predictors. Unlike unregularized regression, LASSO shrinks the less informative coefficients, which makes the resulting model more stable. Although LASSO is often associated with feature selection, because it can set some coefficients exactly to zero, in this study that property is a secondary benefit; the main purpose of the method here is robust regression. By limiting model complexity, LASSO can improve predictive accuracy, especially when the number of predictors exceeds the number of observations or when many predictors are present.
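The way the \(L_1\) penalty produces exact zeros can be illustrated with a coordinate-descent sketch. It assumes the unscaled objective \(\sum _i (y_i - \sum _j x_{ij}\beta _j)^2 + \lambda \sum _j |\beta _j|\) with no intercept, for which each coordinate update is a soft-thresholding step with threshold \(\lambda /2\). The solver, the function names, and the toy data are illustrative assumptions, not the implementation used in the study.

```python
def soft_threshold(rho, t):
    """Shrink rho towards zero by t; values within [-t, t] become exactly zero."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize sum_i (y_i - x_i . beta)^2 + lam * sum_j |beta_j| by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho, z = 0.0, 0.0
            for i in range(n):
                # Prediction from all features except j (partial residual)
                pred_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
                z += X[i][j] ** 2
            beta[j] = soft_threshold(rho, lam / 2.0) / z
    return beta


# Hypothetical toy data: feature 0 is strongly related to y, feature 1 only weakly
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y = [2.0, 0.1, 2.0, 0.1]
beta_lasso = lasso_coordinate_descent(X, y, lam=1.0)
beta_ols = lasso_coordinate_descent(X, y, lam=0.0)
print(beta_lasso, beta_ols)
```

With \(\lambda = 0\) both coefficients are retained, while with \(\lambda = 1\) the weak coefficient is set exactly to zero and the strong one is shrunk.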

Ridge regression

Ridge Regression24, also known as \(L_2\) regularized regression, is an extension of linear regression that reduces variance and handles multi-collinearity by adding a penalty on the squared coefficients to the cost function. This penalty improves the stability and generalization of predictive models. The objective function for Ridge Regression is defined as:

$$\begin{aligned} \min _{\beta } \left\{ \sum _{i=1}^{n} \left( y_i - \sum _{j=1}^{p} x_{ij} \beta _j \right) ^2 + \lambda \sum _{j=1}^{p} \beta _j^2 \right\} , \end{aligned}$$

where:

- \(y_i\) represents the observed response for the \(i\)-th sample.
- \(x_{ij}\) denotes the \(j\)-th feature of the \(i\)-th sample.
- \(\beta _j\) are the regression coefficients.
- \(\lambda\) is the regularization parameter that controls the trade-off between model fit and coefficient shrinkage.

The \(L_2\) penalty term \(\sum _{j=1}^{p} \beta _j^2\) discourages large regression coefficients, thus stabilizing the model and reducing sensitivity to multi-collinearity. Like LASSO, Ridge Regression shrinks the coefficients towards zero, but it does not set them exactly to zero and therefore does not perform feature selection.

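Ridge Regression solves the optimization problem using the following closed-form solution:

$$\begin{aligned} \hat{\boldsymbol{\beta }} = \left( \textbf{X}^{\top } \textbf{X} + \lambda \textbf{I} \right) ^{-1} \textbf{X}^{\top } \textbf{y}, \end{aligned}$$

where:

- \(\textbf{X}\) is the design matrix of predictor variables.
- \(\textbf{y}\) is the vector of observed responses.
- \(\textbf{I}\) is the identity matrix.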

By effectively managing the trade-off between model complexity and prediction accuracy, Ridge Regression is particularly useful in scenarios with highly correlated predictors or datasets with a large number of features. It ensures reliable model performance and maintains computational efficiency even in challenging data environments.
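The closed-form Ridge solution \((\textbf{X}^{\top }\textbf{X} + \lambda \textbf{I})^{-1}\textbf{X}^{\top }\textbf{y}\) can be sketched in plain Python for small problems. The helper names and toy data are hypothetical, and no intercept is fitted, matching the penalized objective without a \(\beta _0\) term. The example uses two perfectly collinear features, a case in which ordinary least squares has no unique solution but the Ridge system remains solvable.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= factor * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]


def ridge_closed_form(X, y, lam):
    """Return beta = (X^T X + lam * I)^(-1) X^T y."""
    n, p = len(X), len(X[0])
    xtx = [[sum(X[i][a] * X[i][b] for i in range(n)) + (lam if a == b else 0.0)
            for b in range(p)] for a in range(p)]
    xty = [sum(X[i][a] * y[i] for i in range(n)) for a in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical toy data with two identical (perfectly collinear) columns
X = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [2.0, 4.0, 6.0]
beta_ridge = ridge_closed_form(X, y, lam=1.0)
print(beta_ridge)
```

The penalty splits the effect evenly between the two duplicated features and keeps both coefficients non-zero, in contrast to the exact zeros an \(L_1\) penalty can produce.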

Elastic net regression

Elastic Net Regression25 is a regularization technique that combines the strengths of both \(L_1\) (LASSO) and \(L_2\) (Ridge) regularization to address their individual limitations. By introducing a mixed penalty term, Elastic Net achieves a balance between feature selection and coefficient shrinkage, making it particularly effective for handling multi-collinear data and high-dimensional datasets. The objective function for Elastic Net is defined as:

$$\begin{aligned} \min _{\beta } \left\{ \sum _{i=1}^{n} \left( y_i - \sum _{j=1}^{p} x_{ij} \beta _j \right) ^2 + \lambda _1 \sum _{j=1}^{p} |\beta _j| + \lambda _2 \sum _{j=1}^{p} \beta _j^2 \right\} , \end{aligned}$$

where:

- \(y_i\) represents the observed response for the \(i\)-th sample.
- \(x_{ij}\) denotes the \(j\)-th feature of the \(i\)-th sample.
- \(\beta _j\) are the regression coefficients.
- \(\lambda _1\) and \(\lambda _2\) are the regularization parameters for the \(L_1\) and \(L_2\) penalties, respectively.

The \(L_1\) penalty \(\lambda _1 \sum _{j=1}^{p} |\beta _j|\) encourages sparsity in the regression coefficients, setting some of them to exactly zero, effectively performing feature selection. Meanwhile, the \(L_2\) penalty \(\lambda _2 \sum _{j=1}^{p} \beta _j^2\) ensures that coefficients are shrunk proportionally, improving stability and reducing sensitivity to multi-collinearity. The combination of these penalties allows Elastic Net to retain the advantages of both methods while mitigating their weaknesses.

Elastic Net is particularly valuable when:

- The predictors are highly correlated, where Ridge’s proportional shrinkage complements LASSO’s sparsity.
- The number of predictors exceeds the number of observations, as both \(L_1\) and \(L_2\) regularization control model complexity effectively.

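The impact of \(\lambda _1\) and \(\lambda _2\) is governed by a mixing parameter \(\alpha\), which determines the balance between the \(L_1\) and \(L_2\) penalties for an overall regularization strength \(\lambda\):

$$\begin{aligned} \lambda _1 = \alpha \lambda , \quad \lambda _2 = (1 - \alpha ) \lambda , \end{aligned}$$

where \(0 \le \alpha \le 1\). For \(\alpha = 1\), Elastic Net reduces to LASSO, and for \(\alpha = 0\), it becomes Ridge Regression.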
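The role of the mixing parameter can be illustrated numerically. The sketch below assumes the parameterization \(\lambda _1 = \alpha \lambda\) and \(\lambda _2 = (1-\alpha )\lambda\) for an overall strength \(\lambda\); software packages differ in the exact scaling they use, so the function name, this parameterization, and the coefficient values should be read as illustrative assumptions.

```python
def elastic_net_penalty(beta, lam, alpha):
    """Return lam1 * sum|beta_j| + lam2 * sum(beta_j^2), with lam1 = alpha*lam and lam2 = (1-alpha)*lam."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    lam1 = alpha * lam
    lam2 = (1.0 - alpha) * lam
    l1_term = sum(abs(b) for b in beta)
    l2_term = sum(b * b for b in beta)
    return lam1 * l1_term + lam2 * l2_term


# Hypothetical coefficient vector
beta = [1.0, -2.0, 0.0]
pure_lasso = elastic_net_penalty(beta, lam=0.5, alpha=1.0)   # only the L1 term remains
pure_ridge = elastic_net_penalty(beta, lam=0.5, alpha=0.0)   # only the L2 term remains
mixed = elastic_net_penalty(beta, lam=0.5, alpha=0.5)
print(pure_lasso, pure_ridge, mixed)
```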

By leveraging the strengths of both LASSO and Ridge regression, Elastic Net provides a flexible and robust framework for regression, ensuring optimal predictive performance and feature interpretability in diverse data scenarios.

Multi-layer perceptron regressor

MLP Regressor, or Multi-Layer Perceptron Regressor26, is a type of artificial neural network designed for datasets with continuous target values. Unlike linear regression, the MLP Regressor applies non-linear transformations through multiple layers of neurons to capture the relationship between the features and the target variable. The MLP model consists of an input layer, one or more hidden layers, and an output layer. Each layer is composed of neurons (or nodes) connected to the next layer, with weights \(\textbf{W}\) and biases \(\textbf{b}\) applied to the connections. The mathematical formulation for the output of an MLP is given by:

$$\begin{aligned} \hat{y} = f\left( \textbf{W}_L \, g\left( \textbf{W}_{L-1} \cdots g\left( \textbf{W}_1 \textbf{x} + \textbf{b}_1 \right) \cdots + \textbf{b}_{L-1} \right) + \textbf{b}_L \right) , \end{aligned}$$

where:

- \(\textbf{x}\) represents the input feature vector.
- \(\textbf{W}_l\) and \(\textbf{b}_l\) are the weights and biases for the \(l\)-th layer.
- \(g(\cdot )\) is the activation function, typically a non-linear function such as ReLU (Rectified Linear Unit) or sigmoid.
- \(f(\cdot )\) is the final activation function, which may be linear for regression tasks.
- \(L\) is the total number of layers in the network.

To optimize the weights and biases, MLP Regressor uses backpropagation with a gradient-based optimization algorithm such as stochastic gradient descent (SGD) or Adam. The loss function commonly used for regression tasks is the Mean Squared Error (MSE):

$$\begin{aligned} \text {MSE} = \frac{1}{n} \sum _{i=1}^{n} \left( y_i - \hat{y}_i \right) ^2, \end{aligned}$$

where \(y_i\) are the true target values, \(\hat{y}_i\) are the predicted values, and \(n\) is the number of samples. The MLP Regressor performs well when the relationship between the features and the target variable is highly non-linear. However, it is very sensitive to its hyper-parameters, including the number of layers, the number of neurons per layer, the learning rate, and the choice of activation functions.
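The forward pass and the MSE loss can be sketched in a few lines. The network below has one hidden ReLU layer and a linear output; its weights, biases, inputs, and targets are hypothetical hand-picked values, not trained parameters, and the training step (backpropagation) is deliberately omitted.

```python
def relu(v):
    """Element-wise ReLU activation g(.)."""
    return [max(0.0, a) for a in v]


def affine(W, b, x):
    """Compute W x + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def mlp_forward(x, layers):
    """Apply ReLU after every hidden layer and a linear (identity) output activation f(.)."""
    h = x
    for W, b in layers[:-1]:
        h = relu(affine(W, b, h))
    W_out, b_out = layers[-1]
    return affine(W_out, b_out, h)


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),  # W_1, b_1
    ([[2.0, 1.0]], [0.5]),                     # W_2, b_2
]
inputs = [[3.0, 1.0], [1.0, 3.0]]
targets = [5.0, 2.0]
predictions = [mlp_forward(x, layers)[0] for x in inputs]
loss = mse(targets, predictions)
print(predictions, loss)
```

For the second input, the first hidden unit receives a negative pre-activation and is clipped to zero by ReLU, which is the source of the model's non-linearity.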

Gradient boosting regressor

The Gradient Boosting Regressor27 is an application of the general Gradient Boosting algorithm to regression problems. In Gradient Boosting, models are built stage-wise: each new model is fitted to reduce the residuals of the models built before it. The weak learner is normally a decision tree, and each tree is fitted to the negative gradient of the loss function in order to minimize the prediction error. The predictive model is incrementally improved, with the final prediction expressed as:

$$\begin{aligned} \hat{y}(x) = \sum _{m=1}^{M} \alpha _m h_m(x), \end{aligned}$$

where \(h_m(x)\) represents the prediction of the \(m\)-th weak learner, \(\alpha _m\) is its learning rate (step size), and \(M\) is the total number of learners. In the regression setting the weak learners predict continuous outputs, while the boosting process captures non-linear relationships efficiently. The Gradient Boosting Regressor is particularly effective because it allows flexible control over the loss function, supports regularization against over-fitting through shrinkage and sub-sampling, and reports feature importance.
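The stage-wise procedure can be sketched with one-split regression stumps as weak learners. The sketch makes several simplifying assumptions that are not from the study: squared-error loss (for which the negative gradient equals the residual), a single feature, a constant learning rate shared by all learners, an initial constant prediction equal to the mean of the targets, and hypothetical toy data.

```python
def fit_stump(x, r):
    """Fit a one-split regression stump to targets r on a single feature x."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        thr = (lo + hi) / 2.0
        left = [ri for xi, ri in zip(x, r) if xi <= thr]
        right = [ri for xi, ri in zip(x, r) if xi > thr]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - lm) ** 2 for ri in left) + sum((ri - rm) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lm, rm)
    _, thr, lm, rm = best
    return lambda v: lm if v <= thr else rm


def gradient_boost(x, y, n_learners=50, learning_rate=0.1):
    """Boost stumps on residuals (the negative gradient of squared-error loss)."""
    f0 = sum(y) / len(y)          # initial constant prediction (assumption)
    learners = []
    pred = [f0] * len(y)
    for _ in range(n_learners):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        h = fit_stump(x, residuals)
        learners.append(h)
        pred = [pi + learning_rate * h(xi) for pi, xi in zip(pred, x)]
    return lambda v: f0 + learning_rate * sum(h(v) for h in learners)


def train_mse(model, x, y):
    return sum((yi - model(xi)) ** 2 for xi, yi in zip(x, y)) / len(y)


# Hypothetical toy data with a step-like, non-linear pattern
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]
baseline_mse = train_mse(lambda v: sum(y) / len(y), x, y)
mse_5 = train_mse(gradient_boost(x, y, n_learners=5), x, y)
mse_50 = train_mse(gradient_boost(x, y, n_learners=50), x, y)
print(baseline_mse, mse_5, mse_50)
```

The training error falls as learners are added; in practice the number of learners and the learning rate are tuned on held-out folds, since training error alone does not indicate generalization.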

Hyper-parameter optimization

Tuning the hyper-parameters28 is a crucial step in developing efficient machine learning models, as it significantly affects their practical performance. For the six regression models used in this study (Linear Regression, Ridge Regression, LASSO Regression, Elastic Net Regression, Multi-Layer Perceptron (MLP) Regressor, and Gradient Boosting Regressor), we employed a grid search strategy combined with K-Fold Cross-Validation. This approach selects the best combination of hyper-parameters by evaluating model performance over multiple data splits, thereby enhancing generalizability and robustness. Each model was tuned using a predefined parameter grid tailored to its architecture and requirements. The hyper-parameter spaces explored and the optimized values obtained during the grid search process are summarized in the second column of Table 3. By systematically searching these parameter grids, the study obtained optimized configurations for each regression model in the context of avocado mass estimation. The selected parameters improve the models’ ability to handle the features derived from the dataset and to adapt to the varying complexity of the prediction tasks.

The last column of Table 3 lists the optimal values obtained for the relevant models. For models without tunable hyper-parameters at initialization, such as Linear Regression, the default settings were used. The evaluation metrics of each regression model showed that hyper-parameter optimization improved model performance. These optimized configurations were employed in subsequent experiments to ensure consistent and reliable comparisons across the methods.
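A grid search combined with K-fold cross-validation can be sketched as follows. For brevity the model is a single-feature Ridge fit without intercept, the folds are assigned by index modulo \(k\), and the score is the mean squared error; the candidate grid and the toy data are hypothetical and do not correspond to the values in Table 3.

```python
def fit_ridge_1d(x, y, lam):
    """Single-feature ridge without intercept: beta = sum(x*y) / (sum(x^2) + lam)."""
    return sum(a * b for a, b in zip(x, y)) / (sum(a * a for a in x) + lam)


def cv_mse(x, y, lam, k=5):
    """Average test MSE over k folds (sample i belongs to fold i % k)."""
    n = len(x)
    fold_errors = []
    for f in range(k):
        test = [i for i in range(n) if i % k == f]
        train = [i for i in range(n) if i % k != f]
        beta = fit_ridge_1d([x[i] for i in train], [y[i] for i in train], lam)
        fold_errors.append(sum((y[i] - beta * x[i]) ** 2 for i in test) / len(test))
    return sum(fold_errors) / k


def grid_search(x, y, grid, k=5):
    """Score every candidate in the grid and return the one with the lowest CV error."""
    scores = {lam: cv_mse(x, y, lam, k) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical noise-free toy data (y = 2x), so no shrinkage is the best choice
x = [float(i) for i in range(1, 11)]
y = [2.0 * xi for xi in x]
best_lam, scores = grid_search(x, y, grid=[0.0, 0.1, 1.0, 10.0])
print(best_lam, scores)
```

Because every candidate is scored on the same folds, the comparison between hyper-parameter values does not depend on a single favorable split.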

Performance evaluation

Model performance is commonly evaluated with standard error measures such as Root Mean Square Error (RMSE) and Mean Absolute Error (MAE)29. However, these indicators are expressed in absolute terms and can mask relative differences across fruit sizes; this paper therefore uses Root Mean Square Percentage Error (RMSPE) and Mean Absolute Percentage Error (MAPE)30. Expressing the error as a percentage makes model accuracy easier to interpret and compare. The proportion of variance explained by the models was measured with the coefficient of determination (\(R^2\))31, and the Explained Variance Score (EVS)32 provided an additional indicator of predictive consistency.

- Root Mean Squared Percentage Error30: RMSPE is the square root of the mean squared percentage residual, a measure of a model’s prediction error expressed as a percentage.

$$\begin{aligned} \text {RMSPE} = \sqrt{\frac{1}{n} \sum _{i=1}^{n} \left( \frac{y_i - \hat{y}_i}{y_i} \right) ^2} \times 100, \end{aligned}$$(20)

where \(y_i\) are the true values, \(\hat{y}_i\) are the predicted values, and \(n\) is the number of samples. RMSPE is non-negative, with 0% indicating a perfect fit and lower values indicating a better fit; it has no fixed upper bound and can exceed 100% when predictions are far from the true values. RMSPE is particularly useful for understanding relative error because it is expressed in percentage terms, which makes it easier to compare across datasets with different scales. However, it can become excessively large if the true values (\(y_i\)) are close to zero, a case that should be handled carefully in the preprocessing step to avoid misleading results. RMSPE also penalizes large errors more heavily, which is beneficial when reducing large discrepancies in predictions is critical.

- Mean Absolute Percentage Error30: MAPE measures the average percentage error between predicted and actual values, providing an intuitive metric for the accuracy of a model.

$$\begin{aligned} \text {MAPE} = \frac{1}{n} \sum _{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| \times 100, \end{aligned}$$(21)

where \(y_i\) are the true values, \(\hat{y}_i\) are the predicted values, and \(n\) is the number of samples. MAPE is expressed as a percentage and typically lies between \(0 \%\) and \(100 \%\), although it can exceed \(100 \%\) for very poor predictions; a lower value indicates better model performance. Unlike RMSPE, which penalizes large errors more heavily because the percentage errors are squared and is therefore sensitive to outliers, MAPE is a straightforward average of absolute percentage errors that does not emphasize larger deviations. RMSPE and MAPE are therefore used together: RMSPE highlights large individual deviations from the observed values, while MAPE summarizes the typical relative error of the model.

- Coefficient of Determination31: \(R^2\) measures how much of the variation in the target variable is accounted for by the model. It is defined as:

$$\begin{aligned} R^2 = \left( 1 - \frac{\text {SS}_{\text {res}}}{\text {SS}_{\text {tot}}} \right) \times 100, \end{aligned}$$(22)

where \(\text {SS}_{\text {res}} = \sum _{i=1}^{n} (y_i - \hat{y}_i)^2\) is the residual sum of squares, and \(\text {SS}_{\text {tot}} = \sum _{i=1}^{n} (y_i - \bar{y})^2\) is the total sum of squares, with \(\bar{y}\) the mean of the true values. An \(R^2\) value close to 100% indicates that the model accounts for most of the variation in the data, which makes \(R^2\) a useful measure of how well the model captures the trends in a given dataset.

- Explained Variance Score32: EVS provides another measurement of the proportion of variance explained by the model:

$$\begin{aligned} \text {EVS} = \left( 1 - \frac{\text {Var}(y - \hat{y})}{\text {Var}(y)} \right) \times 100, \end{aligned}$$(23)

where \(\text {Var}(\cdot )\) represents the variance of the respective variable. EVS describes how well the model reproduces the dispersion of the target values and is used alongside \(R^2\). Higher EVS values (with a maximum of 100%) indicate that the model captures the variability of the data well. While \(R^2\) measures how well the model explains the variance in the target variable relative to its mean value, penalizing both bias and variance errors, EVS focuses on the proportion of variance explained by the model, without penalizing systematic bias in predictions.
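The four metrics in Eqs. (20) to (23) can be computed directly from their definitions, as in the sketch below. The function names and the toy values are hypothetical; the predictions are deliberately offset by a constant bias of 10 units to illustrate the difference between \(R^2\) and EVS noted above.

```python
import math


def rmspe(y_true, y_pred):
    """Root mean squared percentage error, Eq. (20)."""
    n = len(y_true)
    return math.sqrt(sum(((t - p) / t) ** 2 for t, p in zip(y_true, y_pred)) / n) * 100


def mape(y_true, y_pred):
    """Mean absolute percentage error, Eq. (21)."""
    n = len(y_true)
    return sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n * 100


def _variance(values):
    """Population variance."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def r2_percent(y_true, y_pred):
    """Coefficient of determination in percent, Eq. (22)."""
    y_mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - y_mean) ** 2 for t in y_true)
    return (1 - ss_res / ss_tot) * 100


def evs_percent(y_true, y_pred):
    """Explained variance score in percent, Eq. (23)."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return (1 - _variance(residuals) / _variance(y_true)) * 100


# Hypothetical values: every prediction is 10 units too high
y_true = [100.0, 200.0, 300.0, 400.0]
y_pred = [110.0, 210.0, 310.0, 410.0]
metrics = {
    "RMSPE": rmspe(y_true, y_pred),
    "MAPE": mape(y_true, y_pred),
    "R2": r2_percent(y_true, y_pred),
    "EVS": evs_percent(y_true, y_pred),
}
print(metrics)
```

Because the residuals are constant, their variance is zero and EVS stays at 100%, whereas \(R^2\) drops below 100% because it also penalizes the systematic bias.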

Results and discussion

Processing time

The processing time in milliseconds reported in Table 4 comprises the training time, for the model-based approaches, and the execution time. Execution time covers reading images or matrices, data processing, computation, and saving results. Notably, processing a single image on the current hardware takes 100-120 milliseconds, which accounts for part of the overall processing time.

The Frustum method has the lowest execution time, from 142 to 156 milliseconds. This efficiency is due to its relatively simple computation and the absence of a learning step. In contrast, machine learning methods such as Linear Regression, Ridge Regression, Elastic Net Regression, and Gradient Boosting Regressor had higher execution times of about 150 to 176 ms, because of the time needed for model loading and prediction. The MLP Regressor has the highest execution time at 213 ms, reflecting the computational complexity of deeper neural networks.

Despite the slightly higher execution times, these machine learning methods offer greater flexibility and scalability, especially for feature-rich datasets. The training times for these approaches, ranging from 15,070 ms to 21,164 ms, reflect the trade-off between initial computational investment and subsequent predictive capability, making them suitable for large-scale and dynamic agricultural applications.

Dimension evaluation

Table 5 and Fig. 7 present the performance for the width and length dimensions in terms of RMSPE, MAPE, \(R^2\), and EVS. Both dimensions are estimated with high precision, with RMSPE below 1.53% and MAPE below 1.23%, indicating low percentage errors. The width is slightly better than the length in RMSPE (1.13% versus 1.53%) and in MAPE (0.84% versus 1.23%), but these differences are small and have little practical significance. The \(R^2\) and EVS values for both dimensions are above 99%, indicating high predictive power and ability to explain variation. For \(R^2\) and EVS, the length attains mean values of 99.08% while the width attains 99.02%, indicating that the model fits the length data marginally better than the width data. This could be attributed to a stronger feature relationship or a lower noise level in the length measurements. Overall, the metrics vary only slightly between the two dimensions, and this uniformity shows that the model estimates the dimensions reliably, with high precision and accuracy. The error is small enough to make the model suitable for applications such as construction, packaging, manufacturing, and other settings that require precise dimensions.

Mass evaluation

In the visual representation of the results in Figs. 8, 9, 10, 11, each column depicts the model’s performance at a given slice count. The darker part of a column represents the minimum value achieved across 5 folds, while the lighter part represents the maximum value. The gray line within each column indicates the average value across folds, providing an additional layer of insight into the central tendency of the model’s performance. This layered representation highlights the variation in model performance across folds while emphasizing the overall trend through the average values.

Root mean squared percent error

The RMSPE is a widely used evaluation metric in regression analysis, particularly for models where proportional errors are critical; the results are shown in Fig. 8. A lower RMSPE value indicates that a model is effectively minimizing proportional prediction errors, contributing to better model performance and reliability across different sample scales. In this analysis, RMSPE serves as a crucial measure to compare the predictive accuracy of the geometry-based and regression techniques across a range of slice counts (from 10 to 50).

The Frustum method demonstrated the best performance across all slice counts, achieving consistently low RMSPE values ranging between 4.24% (at 20 slices) and 4.48% (at 10 slices). Its minimal variation across slice counts highlights its robustness and reliability, making it a top choice for this dataset. Linear Regression gave fair results, with RMSPE values ranging from a minimum of 3.62% to a maximum of 9.09% and an average of 5.76%, which points to moderate performance; the wide variation at higher slice counts may indicate stability problems under some conditions. Ridge Regression showed little difference between its minimum and maximum RMSPE values. Its average RMSPE was below 4.89% for all slice counts, with a maximum of 5.86% and a minimum of 3.38%, showing that it works well under different conditions thanks to its penalty term. LASSO Regression also performed well, with a slightly wider error range than Ridge Regression but acceptable results across slice counts. Its mean RMSPE stayed around 4.90% across most slice counts, indicating fair and stable performance. Elastic Net Regression was as efficient as the Ridge and LASSO models, with RMSPE values close to 5%. However, its higher maximum values, such as 6.44% at a slice count of 10, indicate sensitivity to data fluctuations, and it is less resistant to them than Ridge or LASSO. The MLP Regressor was less stable in both mean and variance, with the largest range between minimum and maximum RMSPE. For example, at a slice count of 10 the RMSPE varied between 4% and 9.17%. Compared with the other models, its average RMSPE was considerably higher, especially at large slice counts, which may indicate a lack of generality and flexibility as well as inconsistency. The Gradient Boost Regressor (GBR) performed moderately, with RMSPE values fluctuating between 4.51% and 8.78% across all slice counts. The average values were relatively stable, indicating consistent performance without extremes, but it did not outperform Ridge, LASSO, or Frustum.

Overall, the Frustum method stands out as the most reliable choice due to its consistently low RMSPE values. Ridge Regression and LASSO Regression also demonstrated an excellent balance between accuracy and stability, supported by their regularization penalties, making them strong alternatives. While Elastic Net Regression showed good performance, its sensitivity to data variations warrants caution. The MLP Regressor and GBR, though competitive in some scenarios, exhibited higher variability, making them less reliable compared to the top-performing models.

Mean absolute percentage error

Whereas RMSPE squares the proportional differences between actual and estimated values before averaging them, MAPE averages their absolute values and so gives a direct estimate of accuracy as a percentage of the actual value. RMSPE is more appropriate when large errors are more critical than small ones, because it incorporates squared values, while MAPE weights the relative errors of all data points equally. The Mean Absolute Percentage Error is a crucial metric in regression analysis that measures the average percentage deviation of predictions from the actual values; the results are shown in Fig. 9. A lower MAPE indicates that the model predicts well in settings where relative errors strongly affect the outcome.

For every slice count, the Frustum method again gave the lowest MAPE, varying between 3.39% at 40 slices and 3.73% at 10 slices. Its exceptional stability, as indicated by minimal variation in MAPE values, highlights its robustness and reliability in minimizing prediction errors across varying data scales. Linear Regression proved moderately accurate, with minimum MAPE between 3.06% and 4.71% and maximum MAPE reaching 5.96% as the slice count increased to its highest value. Its average MAPE also fluctuated slightly, more so at larger slice counts, which points to possible instability of the method as the configuration changes. LASSO Regression also performed well, with minimum MAPE values ranging from 2.80% to 3.31% and maximum values from 4.19% to 4.74%. Its average MAPE remained below 3.82% in all cases, which makes LASSO a viable contender for minimizing errors with reasonable accuracy. The Ridge Regression results were close to those of LASSO Regression, with minimum MAPE values ranging from 2.83% to 3.76% and maximum values below 4.62%. Its average MAPE values were below 4%, which suggests consistent and accurate predictions with modest fluctuation compared to the other models. Elastic Net Regression showed a wider spread, with minimum values from 2.78% to 3.84% and maximum values from 3.97% to 4.77%. Although it is less regular than Ridge and LASSO, its average MAPE fluctuates around 4%. The MLP Regressor displayed considerable variability in its performance, with minimum MAPE between 3.16% and 4.98% and a maximum as high as 9.69% at a slice count of 35. The trend in its average MAPE at higher slice counts indicates an inability to maintain a consistent level of performance, making it less accurate than the methods discussed above. Lastly, the Gradient Boost Regressor produced moderately accurate results, with minimum MAPE between 3.88% and 3.99% and maximum MAPE up to 6.37%. Its average MAPE was likewise moderate at 5.37%, indicating consistency but no advantage over Ridge or LASSO Regression across slice counts.

Comparing MAPE across the methods, the Frustum method had the lowest errors and the most accurate results for all slice counts. Ridge Regression and LASSO Regression also demonstrated high accuracy with low and stable MAPE values, making them stable methods. Elastic Net Regression had a similar level of accuracy but slightly higher variance. Linear Regression achieved competitive accuracy but was sensitive to changes in the slice count. Although the MLP Regressor and Gradient Boosting Regressor outperformed the other models in specific cases, their variance was substantially higher, which indicates a weakness of these approaches.

Coefficient of determination

The \(R^2\), or Coefficient of Determination, is a widely used performance metric in regression analysis that quantifies how well a model explains the variability of the target variable; the results are shown in Fig. 10. An \(R^2\) value closer to 100% indicates a better fit, signifying that the model effectively captures the variation in the dataset. Conversely, a lower \(R^2\) indicates that the model fails to explain the target variable sufficiently. To compare the regression methods, \(R^2\) scores were assessed for slice counts ranging from 10 to 50.

The Frustum method consistently demonstrated high \(R^2\) values across all slice counts, ranging from 97.74% to 97.83%. This low variation combined with a high average \(R^2\) indicates a strong capacity to track variability in the target value, reinforcing the reliance on this method in the current analysis. Linear Regression showed comparable performance but with some variability in its \(R^2\) scores. Its minimum \(R^2\) values varied from 92.81% to 95.89%, and its maximum \(R^2\) peaked at 98.62% at the first slice count. Even though its average \(R^2\) was above 96% overall, it was not as stable across slice counts as Frustum or Ridge Regression. LASSO Regression also performed well, with minimum \(R^2\) ranging from 95.05% to 96.74% and maximum \(R^2\) consistently greater than 98.76%. Its average \(R^2\) stayed around 97.33%, which underlines the model’s good and consistent performance despite changes in the slice count. Ridge Regression showed consistently high \(R^2\) values. Its minimum \(R^2\) values vary between 95.52% and 97.05%, its maximum values exceed 98.80% for all slice counts, and the model achieves the highest \(R^2\) value of 99.17% at a slice count of 10. Its average \(R^2\) values were close to those of LASSO Regression, indicating that it describes the variability of the data reliably.

Elastic Net Regression gave results nearly equivalent to those of Ridge and LASSO Regression, with minimum \(R^2\) varying from 95.51% to 96.90% and maximum values above 97.93% for all slice counts. Its average \(R^2\) values, close to 97.3%, and the modest fluctuations in \(R^2\) indicate an overall reliable model, although one slightly more sensitive to data variability than Ridge and LASSO. The MLP Regressor showed large fluctuations in performance, with minimum \(R^2\) as low as 84.47% and maximum \(R^2\) up to 98.44%. Its average \(R^2\) values, especially at higher slice counts, show scatter and inconsistency, making it less reliable than Ridge and LASSO Regression. The Gradient Boost Regressor (GBR) gave moderate performance, with minimum \(R^2\) ranging from 91.52% to 93.04% and maximum \(R^2\) from 95.60% to 97.24%. Its average \(R^2\) values hovered around 94.10%, offering no distinct advantage over Ridge and LASSO Regression.

The Frustum method stands out as the most robust model, delivering the highest average and most stable \(R^2\) values across all slice counts. Ridge Regression and LASSO Regression were almost equally good, explaining a consistently high share of the variability in the dataset. Elastic Net Regression followed closely but displayed slightly higher sensitivity to variations in data. The MLP Regressor and GBR, while competitive in some scenarios, exhibited greater variability and were less reliable compared to the top-performing models. These results underscore the superiority of Frustum, Ridge, and LASSO Regression for tasks requiring models with high explanatory power and stability.

Explained variance score

The Explained Variance Score (EVS) is a performance metric that measures how well a regression model captures the variability of the target variable; the results are shown in Fig. 11. EVS is expressed as a percentage, where values close to 100% indicate that the model explains most of the variability and lower values indicate a weaker ability to explain it. It provides important information about the performance of a model and its ability to generalize. In this study, EVS is assessed for the regression methods at slice counts from 10 to 50.

Frustum method has shown improved performance with values of the EVS lying in between 97.55% and 97.64%. The small variation between the minimum and maximum values highlights the robustness and reliability of the Frustum method across all slice counts. Its ability to explain most of the variability in the target variable makes it the top-performing technique in this study. Moreover, Linear Regression showed moderate performance, with minimum EVS values ranging between 92.81% and 95.88% and maximum values peaking at 98.23% for a slice count of 35. However, the larger variability in EVS values, especially at higher slice counts, suggests potential instability. The average EVS values hovered around 96.18%, indicating a solid performance but with room for improvement in robustness. Furthermore, another most accurate algorithm is LASSO Regression which shows minimum and maximum EVS between 95.03% - 96.74% and above 98.54% respectively for all slices. The average of EVS values was significantly higher and ranged only at the Level of 96.89% or higher, confirming the net model’s capability to generalize for proximal target variability and to demonstrate the reliability in employing the LASSO Regression. Using Ridge Regression, it was just as effective as LASSO Regression, and had minimum and maximum percent rates for EVS ranging from 95.43% to 97.02%, and above 98.49% respectively depending on the slice counts. The average EVS values were quite comparable with the LASSO Regression and thus, it is preferred in regression analysis problems with almost equivalent Level of generalization.Same as before, Elastic Net Regression yielded results comparable with the Ridge and LASSO with minimum EVS at 95.45% to a maximum of 98.78%. The plot of the average extreme value scores is averagely below that of Ridge and LASSO where the algorithm has performed impressively but shows slightly increased sensitivity to the fluctuations in data variation. 
In contrast, the MLP Regressor displayed high volatility, with minimum EVS values ranging from 83.05% to 93.33% and maximum values from 94.56% to 98.34%. It achieved an average EVS of 93.75%, but fluctuations at individual slice counts reduce its robustness and consistency. Finally, the Gradient Boosting Regressor achieved stable but modest results, with minimum EVS values ranging from 83.05% to 93.33% and maximum values peaking at 98.39%. The average EVS values, ranging from 92.01% to 95.52%, underscore its consistent performance without significant extremes. However, it was outperformed by Ridge, LASSO, and Elastic Net Regression.
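The minimum, maximum and average values discussed above are summaries of per-fold scores. A minimal sketch of such a summary is given below. The helper name `summarize_folds` and the five fold scores are hypothetical and serve only to illustrate the calculation.

```python
from statistics import mean


def summarize_folds(scores):
    """Return the min, max, mean and peak-to-peak range of per-fold scores."""
    lowest, highest = min(scores), max(scores)
    return {
        "min": lowest,
        "max": highest,
        "mean": mean(scores),
        "range": highest - lowest,  # peak-to-peak fluctuation across folds
    }


# Hypothetical EVS values (%) for 5 folds at one slice count; not from the study.
fold_evs = [95.0, 96.5, 97.0, 98.0, 96.0]
summary = summarize_folds(fold_evs)
print(summary)
```

A small range together with a high mean corresponds to what the text describes as a stable model, while a wide range signals fold-to-fold instability even when the mean looks competitive.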

Similar to other evaluation metrics, the Frustum method stands out as the most robust and reliable model, delivering consistently high EVS values across all slice counts. Ridge Regression and LASSO Regression also demonstrated exceptional performance, combining high average EVS with minimal variability. Elastic Net Regression followed closely but displayed slightly higher sensitivity to variability in data. MLP Regressor and GBR, while achieving competitive average EVS values, showed higher variability, making them less reliable for tasks demanding consistent generalization. These findings highlight the superiority of Frustum, Ridge, and LASSO Regression in tasks requiring models with strong and stable predictive capabilities.

Results comparison across methods

The geometry-based method and the six regression methods were compared using the 5-fold average of each metric at each slice count, with the metrics prioritized in the order RMSPE, \(R^2\), MAPE and EVS, together with the highest execution time recorded. The results are summarized in Table 6.
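One way to read the priority order is as a lexicographic ranking, in which RMSPE decides first and the later metrics only break ties. That interpretation is an assumption, as are the names `MethodResult` and `rank_methods`. The sketch below applies it to the Frustum and Ridge Regression values reported in this study.

```python
from typing import NamedTuple


class MethodResult(NamedTuple):
    name: str
    rmspe: float  # lower is better
    r2: float     # higher is better
    mape: float   # lower is better
    evs: float    # higher is better


def rank_methods(results):
    """Sort best-first by RMSPE, then R^2, then MAPE, then EVS (assumed order)."""
    # Negate the "higher is better" metrics so ascending sort puts the best first.
    return sorted(results, key=lambda r: (r.rmspe, -r.r2, r.mape, -r.evs))


# Values reported in this study for the two leading methods (all in percent).
results = [
    MethodResult("Ridge Regression", rmspe=4.30, r2=97.71, mape=3.52, evs=97.58),
    MethodResult("Frustum", rmspe=4.24, r2=97.78, mape=3.43, evs=97.63),
]
ranking = [r.name for r in rank_methods(results)]
print(ranking)
```

With these two entries the ranking is decided by RMSPE alone, and the remaining metrics point in the same direction.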

Table 6 highlights the performance of the methods averaged over the range of slice counts (10 to 50 slices). The Frustum method emerged as the top-performing geometry-based approach, achieving the lowest RMSPE (4.24%) and MAPE (3.43%), along with high \(R^2\) (97.78%) and EVS (97.63%), demonstrating its reliability and computational efficiency with an execution time of just 156 ms. Among the regression-based models, Ridge Regression showed the best accuracy, with an RMSPE of 4.30%, MAPE of 3.52%, \(R^2\) of 97.71%, and EVS of 97.58%, while LASSO Regression delivered competitive results with slightly higher error metrics. Linear Regression and Elastic Net Regression provided solid performance but were slightly outperformed by Ridge and LASSO Regression. In contrast, the Gradient Boosting Regressor exhibited moderate results, while the MLP Regressor displayed the highest error rates (RMSPE and MAPE), the longest execution time (213 ms) and the highest peak-to-peak fluctuation, reflecting its instability and inefficiency for this task.
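For readers who wish to reproduce the two error metrics, the sketch below assumes their standard definitions: MAPE is the mean of the absolute relative errors and RMSPE is the root of the mean squared relative errors, both in percent. The function names and the two sample fruits are illustrative assumptions, not data from this study.

```python
import math


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, in percent."""
    rel = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(rel) / len(rel)


def rmspe(y_true, y_pred):
    """Root Mean Square Percentage Error, in percent."""
    rel_sq = [((t - p) / t) ** 2 for t, p in zip(y_true, y_pred)]
    return 100.0 * math.sqrt(sum(rel_sq) / len(rel_sq))


# Made-up masses in grams: relative errors are 5% and 4%.
measured = [200.0, 250.0]
estimated = [190.0, 260.0]
print(f"MAPE  = {mape(measured, estimated):.2f}%")
print(f"RMSPE = {rmspe(measured, estimated):.2f}%")
```

RMSPE is never smaller than MAPE on the same data because squaring weights large relative errors more heavily. This matches the pattern in the reported results, where each method's RMSPE exceeds its MAPE.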

Given the relatively limited number of studies focused on avocado weight prediction, several relevant works were selected for comparison. Mokria et al. (2022) [33] studied five avocado cultivars (Hass, Fuerte, Ettinger, Nabal, and Reed) in Ethiopia, modeling fruit mass from simple geometric attributes, namely lengths and diameters. The reported coefficients of determination (\(R^2\)) were 63-80%, which serve as a benchmark in this analysis. In contrast, Ornek et al. (2018) [34] used ANN methods, including BP, LM, and PUNN, to estimate the weight of carrots, whose shape resembles an elongated pear and is therefore somewhat similar to that of an avocado. Their models, based on features such as lengths and diameters, achieved \(R^2\) values between 94.30% and 95.70%, indicating a strong model fit. Although these methods performed well, they are still surpassed by the predictive accuracy demonstrated in the current study, which leverages more advanced models and a broader set of features for mass estimation.

In addition, a recent work by Sabouri et al. (2025) [35] compared fruit mass estimation in plum based on similar geometric characteristics, namely length and diameter. The analysis used various regression models, such as Support Vector Regression, Multivariate Linear Regression, Multi-Layer Perceptron, and Decision Tree, and yielded an \(R^2\) of 92.67%. This work was included because its use of geometric features in mass estimation aligns closely with the methodology in this study. However, there are differences in the choice of models and features; for instance, the current study employs more advanced models with regularization techniques, such as regression variants with strong penalties, to capture non-linear relationships more effectively. Additionally, a richer set of features, combining both morphological and image-based attributes, is used to provide a more comprehensive representation of the fruit’s physical characteristics.

Conclusion

The results of this research provide a clear comparison of the geometry-based and model-based methods that can be applied to determine the dimensions and mass of avocados. By evaluating the results with several metrics, namely RMSPE, MAPE, \(R^2\) and EVS, the findings make it possible to assess the strengths and weaknesses of the selected methods in terms of accuracy, reliability and consistency. Moreover, a detailed estimation framework of this type, which combines geometric aspects with sophisticated artificial neural networks for agriculture, remains a new approach that fills gaps not extensively discussed previously.

The Frustum method was identified as the approach that offered the lowest error rates, in terms of both RMSPE and MAPE, and great stability across all slice counts. Among the regression-based approaches, the Ridge and LASSO Regression models balance accuracy and stability and therefore perform well compared to the other models. Elastic Net Regression gave a similar performance but demonstrated slightly higher fluctuation on the given data. In contrast, the MLP Regressor and Gradient Boosting Regressor had comparatively higher variance and occasionally unpredictable fluctuations, which makes them less suitable for most uses.
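As a reminder of the geometric idea behind a frustum-based estimate, the sketch below sums the volumes of conical frustums between consecutive slices and multiplies the total by a density. It is only an illustration under stated assumptions: circular cross-sections, equally spaced slices and a uniform density. The function names, radii and density value are hypothetical and do not reproduce the implementation used in this study.

```python
import math


def frustum_volume(r1, r2, h):
    """Volume of a conical frustum with end radii r1, r2 and height h."""
    return math.pi * h * (r1 * r1 + r1 * r2 + r2 * r2) / 3.0


def estimate_mass(radii, slice_height, density):
    """Sum frustum volumes between consecutive radii and scale by density."""
    volume = sum(
        frustum_volume(a, b, slice_height) for a, b in zip(radii, radii[1:])
    )
    return density * volume


# Hypothetical cross-section radii (cm) along the fruit's long axis.
radii_cm = [0.0, 1.5, 2.8, 3.4, 3.2, 2.0, 0.0]
mass_g = estimate_mass(radii_cm, slice_height=1.5, density=1.0)  # assumed g/cm^3
print(f"Estimated mass: {mass_g:.1f} g")
```

Increasing the number of slices shortens each frustum and lets the piecewise-linear profile follow the fruit outline more closely, which is why the slice count is a natural parameter to vary.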

Regarding dimensional accuracy, the width and length predictions yielded very low RMSPE and MAPE values, not exceeding 1.53%, and \(R^2\) and EVS values above 99%. Calibrating the dataset with a standard A4 sheet of paper produced these good results and supports their reliability in real-world applications such as automatic grading, sorting and measuring. By addressing both mass and dimensional estimation, this work offers a different perspective on how geometric and predictive parameters can be used to assess agricultural produce.

Execution times varied considerably among methods. The Linear, Ridge, LASSO and Elastic Net Regression models and the Gradient Boosting Regressor required less prediction time than the more complex models, with prediction times ranging from 154 to 180 ms. The more complex MLP Regressor took longer, from 174 ms up to 213 ms in this analysis. The Frustum method, which combines geometric calculations with image processing, maintained a competitive execution time of 142–156 ms. Additionally, the initial loading and processing of each image required 100–120 ms (included in the execution times above), a factor to consider in real-time or high-throughput scenarios. These findings underscore the need to balance computational efficiency with accuracy and to select the method according to the specific requirements and constraints of the application.
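Execution times of this kind can be collected with a simple wall-clock harness. The sketch below is one possible way to do so and is not the measurement procedure used in this study. The helper `time_ms` and the stand-in `dummy_predict` function are hypothetical.

```python
import time


def time_ms(func, *args, repeats=5):
    """Call func(*args) several times; return its result and timings in ms."""
    timings = []
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = func(*args)
        timings.append((time.perf_counter() - start) * 1000.0)
    return result, timings


def dummy_predict(values):
    """Stand-in for a real estimator; simply doubles each input."""
    return [2.0 * v for v in values]


prediction, timings = time_ms(dummy_predict, [100.0, 200.0])
# Report the highest time observed, mirroring a worst-case figure.
print(f"max = {max(timings):.3f} ms over {len(timings)} runs")
```

Timing the image loading step separately from the prediction step would make it easier to see which part dominates in a real-time pipeline.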

In summary, this study breaks new ground by presenting a systematic and integrated approach for estimating the physical properties of avocados, combining geometry and machine learning in ways that have not been fully explored before. The Frustum method is found to be the most efficient and effective solution, especially for applications in which minimizing proportional error is essential and performance depends strongly on the data configuration. Ridge and LASSO Regression also provide good solutions that are both accurate and fast. The insights from this study and its carefully curated dataset provide a firm foundation for using such models in practical domains such as agricultural quality assurance for other kinds of fruit or objects, automated sorting systems, and precision-driven food packaging workflows. Future research will focus on optimizing computational efficiency and exploring hybrid or ensemble models [36] to further enhance performance. A feature selection stage for machine learning models [37,38,39] could also reduce resource usage, remove insignificant features and increase accuracy. Furthermore, CNN models [40] or integrated learning [41] are being considered to further improve accuracy.

Data availability

The dataset used in this study can be made available upon reasonable request. Please contact the corresponding author, Dr. Son V. T. Dao, at son.daovutruong@rmit.edu.vn or dvtson@hcmiu.edu.vn for inquiries.

References

Dreher, M. L. & Davenport, A. J. Hass avocado composition and potential health effects. Crit. Rev. Food Sci. Nutr. 53, 738–750. https://doi.org/10.1080/10408398.2011.556759 (2013).

Huang, K.-M., Guan, Z., Blare, T. & Hammami, A. M. Global avocado boom. Choices Mag. 38 (2021).

Our World in Data. Avocado production (2025). Data from Food and Agriculture Organization of the United Nations (FAO), updated March 17 (2025).

Ariwaodo, C. A. Handling strategies and facilities for horticultural crops. Open Access Libr. J. 9, https://doi.org/10.4236/oalib.1108577 (2022).

Cubero, S., Aleixos, N., Moltó, E., Gómez-Sanchis, J. & Blasco, J. Advances in machine vision applications for automatic inspection and quality evaluation of fruits and vegetables. Food Bioprocess Technol. 4, 487–504. https://doi.org/10.1007/s11947-010-0411-8 (2011).

Patel, K. K., Kar, A., Jha, S. N. & Khan, M. A. Machine vision system: a tool for quality inspection of food and agricultural products. J. Food Sci. Technol. 49, 123–141. https://doi.org/10.1007/s13197-011-0321-4 (2012).

Tran, H. M. et al. Physical characteristics estimation for irregularly shaped fruit using two cameras. In 2023 IEEE Statistical Signal Processing Workshop (SSP), 165–169, https://doi.org/10.1109/SSP53291.2023.10207992 (2023).

Tran, H. M. et al. A new approach for estimation of physical properties of irregular shape fruit. IEEE Access 11, 46550–46560. https://doi.org/10.1109/ACCESS.2023.3273777 (2023).

Lorestani, A. & Tabatabaeefar, A. Modelling the mass of kiwi fruit by geometrical attributes. Int. Agrophysics 20, 135–139 (2006).

Emadi, B., Abbaspour-Fard, M. H. & Yarlagadda, P. K. Mechanical properties of melon measured by compression, shear, and cutting modes. Int. J. Food Prop. 12, 780–790. https://doi.org/10.1080/10942910802056143 (2009).

Liming, X. & Yanchao, Z. Automated strawberry grading system based on image processing. Comput. Electron. Agric. 71, S32–S39, https://doi.org/10.1016/j.compag.2009.09.013 (2010). Special issue on computer and computing technologies in agriculture.

Clayton, M., Amos, N. D., Banks, N. H. & Morton, R. H. Estimation of apple fruit surface area. New Zealand J. Crop. Hortic. Sci. 23, 345–349. https://doi.org/10.1080/01140671.1995.9513908 (1995).

Sripaurya, T., Sengchuai, K., Booranawong, A. & Chetpattananondh, K. Gros Michel banana soluble solids content evaluation and maturity classification using a developed portable 6 channel NIR device measurement. Measurement 173, 108615. https://doi.org/10.1016/j.measurement.2020.108615 (2021).

Chen, S., Xiong, J., Jiao, J. et al. Citrus fruits maturity detection in natural environments based on convolutional neural networks and visual saliency map. Precis. Agric. 23, 1515–1531. https://doi.org/10.1007/s11119-022-09895-2 (2022).

Oyefeso, B. O. & Raji, A. O. Estimating mass and volume of Nigerian grown sweet and Irish potato tubers using their geometrical attributes. Adeleke Univ. J. Eng. Technol. 1, 123–130 (2018).

Qin, L., Zhang, J., Stevan, S., Xing, S. & Zhang, X. Intelligent flexible manipulator system based on flexible tactile sensing (ifmsfts) for kiwifruit ripeness classification. J. Sci. Food Agric. 104, 273–285. https://doi.org/10.1002/jsfa.12916 (2024).

Tri, T. M., Huynh, L. T. & Dao, S. V. T. A vision-based method to estimate volume and mass of fruit/vegetable: Case study of sweet potato. Int. J. Food Prop. 25, 717–732. https://doi.org/10.1080/10942912.2022.2057528 (2022).

Qin, X. et al. U2-net: Going deeper with nested u-structure for salient object detection. Pattern Recognit. 106, https://doi.org/10.1016/j.patcog.2020.107404 (2020).

Gander, W., Golub, G. H. & Strebel, R. Least-squares fitting of circles and ellipses. BIT Numer. Math. 34, 558–578. https://doi.org/10.1007/BF01934268 (1994).

Fitzgibbon, A. W., Pilu, M. & Fisher, R. B. Direct least square fitting of ellipses. IEEE Trans. Pattern Anal. Mach. Intell. 21, 476–480. https://doi.org/10.1109/34.765658 (1999).

Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI), 1137–1145 (1995).

Draper, N. R. & Smith, H. Applied Regression Analysis (Wiley-Interscience, 1998).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. Royal Stat. Soc. Ser. B (Methodological) 58, 267–288 (1996).

Hoerl, A. E. & Kennard, R. W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 12, 55–67 (1970).

Zou, H. & Hastie, T. Regularization and variable selection via the elastic net. J. Royal Stat. Soc. Ser. B (Statistical Methodology) 67, 301–320 (2005).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Annals Stat. 29, 1189–1232 (2001).

Hastie, T., Tibshirani, R. & Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Springer, 2009), 2nd edn.

Willmott, C. J. & Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 30, 79–82. https://doi.org/10.3354/cr030079 (2005).

Hyndman, R. J. & Koehler, A. B. Another look at measures of forecast accuracy. Int. J. Forecast. 22, 679–688. https://doi.org/10.1016/j.ijforecast.2006.03.001 (2006).