Abstract

With the development of artificial intelligence education, the human-computer interaction and human-human interaction in virtual learning communities such as Zhihu and Quora have become research hotspots. This study has optimized the research dimensions of the virtual learning system in colleges and universities based on neural network algorithms and the value of digital intelligence in the humanities. This study aims to improve the efficiency and interactive quality of students’ online learning by optimizing the interactive system of virtual learning communities in colleges. Constructed an algorithmic model for a long short-term memory (LSTM) network based on the concept of digital humanities integration. The model uses attention mechanism to improve its ability to comprehend and process question-and-answer (Q&A) content. In addition, student satisfaction with its use was investigated. The Siamese LSTM model with the attention mechanism outperforms other methods when using Word2Vec for embedding and Manhattan distance as a similarity function. The performance of the Siamese LSTM model with the introduction of the attention mechanism improves by 9%. In the evaluation of duplicate question detection on the Quora dataset, our model outperformed the previously established high-performing models, achieving an accuracy of 91.6%. Students expressed greater satisfaction with the updated interactive platform. The model in this study is more suitable than other published models for processing the SemEval Task 1 dataset. Our Q&A system, which implements simple information extraction and a natural language understanding method to answer questions, is highly rated by students.

Similar content being viewed by others

Introduction

The 2025 World Digital Education Conference has put forward the core topic of “Development and Transformation of Education in the Intelligent Age”, which represents that the global education system is undergoing disruptive changes and systematic restructuring through AI technology1. With the rapid development of artificial intelligence technology, online Q&A platforms have gradually formed a new paradigm of “human-machine collaboration”. Platforms represented by Zhihu not only rely on AI to achieve efficient responses and knowledge aggregation, but also retain the in-depth insights and experience sharing of real users, integrating digital efficiency with human warmth. This dual-track mechanism of “technology empowerment + interpersonal interaction” not only enhances the quality of information and scene adaptability, but also reconstructs the trust foundation of knowledge dissemination, becoming an important carrier for exploring the evolution of human-machine relationships and knowledge ecosystems.

Information and communication technologies such as the internet and mobile phones can assist young learners in their learning. Consequently, online learning platforms have been recognized as indispensable tools in emergency education. Remote online learning has become extremely crucial, gradually gaining equal importance to face-to-face learning. Online learning platforms can ensure a certain level of interaction between college students and teachers during teaching sessions. Interactive teaching can produce satisfactory results for students. However, teachers are unable to continuously and timely address the numerous questions raised by students online. Therefore, in this situation, an automatic question answering system is needed to improve students’ learning efficiency. Online Q&A platforms are becoming increasingly popular. When comparing question answering systems with search engines, it can be observed that question answering systems can provide users with concise and clear answers, particularly with the technological breakthroughs of generative artificial intelligence GAI)2and large language models3. Thus, Q&A systems have grown in popularity and effectiveness as a means of retrieving information from the Internet4. While large language models (LLMs) represent the current state-of-the-art in natural language processing (NLP), their exclusion from this study is justified by several pragmatic considerations. First, the substantial computational resources required by LLMs pose challenges to maintaining the real-time responsiveness essential in educational Q&A interactions. Second, the high deployment costs—particularly those associated with GPU utilization—limit the feasibility of implementing such models in resource-constrained educational institutions. Third, this study prioritizes the development of lightweight and interpretable architectures that support scalability and sustainable integration within institutional infrastructures.

However, an examination of current online learning platforms revealed that, although these platforms have made significant progress in building independent learning content, the use of interactive Q&A systems by students remains minimal. In traditional face-to-face teaching, students’ questions can be solved in a timely manner through teacher–student or peer-to-peer interactions. However, online platforms have limited interaction mechanisms, resulting in students’ questions not being answered instantly, which affects learning outcomes. Therefore, developing efficient and interactive Q&A systems is important. The system should satisfy two core functions: answering students’ questions quickly to meet immediate requirements and ensuring high accuracy of answers to precisely match relevant answers in the question pool. Although communities such as Stack Overflow attempt to solve this problem by manually tagging duplicate questions, the operation is cumbersome and easy to miss, which is inefficient and affects accuracy and completeness. To accomplish this, it is necessary to take advantage of advances in natural language processing (NLP) techniques, particularly text similarity computations.

The pre-existing knowledge base is constructed using a large amount of Q&A data and text, and text similarity is used to compare students’ new questions with the pre-existing questions, find the most relevant known questions and answers, and provide timely and accurate support for students. This method overcomes the limitations of manual operations, significantly improves the efficiency and accuracy of answers, and provides new possibilities for developing online learning platforms. The introduction of NLP technology, particularly text similarity calculations, can significantly improve the performance of interactive Q&A systems in online learning platforms, meet students’ immediate learning requirements, improve their learning experience, and promote the development of independent learning content.

Neural network algorithms have been widely used in related studies. The neural network model is used in the field of NLP, and with the extensive use of neural networks for the task of detecting repetitive problems, the Manhattan long short-term memory (MaLSTM) model was generated and used to deal with the detection of repetitive data. A deep-neural-network-based logic and activity learning model (DNN-LALM) was proposed to improve learners’ proficiency in cognitive and task-oriented activities. This demonstrates that the application of neural network algorithms can help interactive Q&A platforms overcome limitations, improve efficiency and accuracy, and improve user satisfaction.

Based on this, this study focuses on the use of neural network techniques to optimize the Q&A systems of a college virtual learning community. In particular, the application of Siamese neural networks and long short-term memory (LSTM) networks in interactive systems was investigated, in addition to how the attention mechanism can help improve the model’s ability to understand and process questions. By comparing the performance of models using traditional algorithms with those using the attention mechanism, we demonstrate that technical optimization can significantly improve the effectiveness of online interactive systems. The primary objective of this study is to design a model that is both deployable and scalable within application-oriented university environments, where computational infrastructure is often limited. This study excludes Transformer models that require high-end GPU servers. Furthermore, users in these educational contexts, particularly students and instructors, generally value responsiveness and the delivery of concise, relevant answers within question-answering systems. Therefore, the adoption of a lightweight architecture, specifically an LSTM-based model augmented with an attention mechanism, is more appropriate for satisfying the low-latency and high-interactivity demands of virtual learning platforms. Compared with transformer-based architectures, the Siamese LSTM with attention mechanism provides greater transparency and interpretability of the matching process between question pairs. This is particularly important in educational contexts where trust, explainability, and pedagogical alignment are key concerns. In addition, this study focuses on students’ satisfaction with these technologically improved quiz systems, which not only reflects the effectiveness of the technology but also shows its usefulness in real educational scenarios. We anticipate that this study’s in-depth analyses and experiments will provide scientific and technical support for the future development of virtual learning communities in higher education.

Literature review

Generating artificial intelligence in education

Presently, attention to ChatGPT is gradually increasing, both at home and abroad, and its role in the field of education is becoming increasingly prominent and a research hotspot. Most people, especially students, have a positive attitude toward the use of ChatGPT. Students can obtain real-time answers and feedback to questions in a variety of contexts and improve creative thinking through the guidance of prompts, while educators can assist generate lesson plans and learning reports, thus reducing the burden and improving teaching and research capabilities5. Other studies have shown that GAI can test and evaluate the learning process and outcomes of students and assist teachers understand the learning progress and problems encountered by students in a timely manner to adjust teaching strategies6. It can be observed that GAI is increasingly being used in education and teaching to provide more opportunities to continuously improve the quality and efficiency of education. Moreover, some studies have reported that fewer college students use ChatGPT to answer teachers’ questions in class, and even fewer use it for homework evaluation and feedback7. Currently, online learning platforms designed and used by AI systems are constantly evolving, and research on platform effectiveness, user experience, feedback, and payment willingness is constantly being conducted8. Therefore, interactive systems play an increasingly important role in learning platforms designed for college students and teachers.

Online question and answer community

With the development of virtual learning communities, the frequency of using online Q&A platforms among college students is increasing9. Existing online question-answering communities (CQA) offer numerous advantages worth learning from online Q&A systems in college. Subjective questions posed by various users can receive more targeted responses on CQA websites, whereas traditional web searches frequently provide users with detailed information that already exists on the Internet, which may not necessarily match the context referred to in the question. For example, the widely popular Quora CQA, which is based on real-identity social networking, encourages users to build reputations, and accessing user histories allows them to assess the reputations of other users. Moreover, combining social voting systems with unique answer ranking algorithms enables users to identify and promote high-quality answers. Piazza is the most popular educational CQA, and many professors use it to support students in their courses. Students ask questions and receive answers from instructors or other students. Piazza demonstrates the benefits of CQA websites as educational supplements, although their functionality are limited. For example, each question displays only one student’s answer, and student answers are posted in a wiki-style format, requiring other students to edit previous answers rather than post their own10. Stack Overflow CQA is the most favored among programming learners. It is a successful and rapid professional question-answering website where users receive the first answer to their questions in approximately 11 min, resulting in a large number of new questions being generated daily11.

Semantic duplication detection

The generalized duplicate detection process is typically embedded within a broadly defined data-cleaning process that not only removes duplicates but also performs various other steps to improve the overall quality of the data12. Identifying duplicate texts is crucial in many fields such as plagiarism detection, information retrieval, text summarization, and question answering. Research and competition in Q&As in the NLP field have been increasing in recent years. Researchers have conducted a variety of studies on semantic similarity and expected better data results. Among these, corpus-based methods16 are common. These methods use the information obtained from the corpus to calculate text similarity and can be divided into methods based on the bag-of-words model, search-engine-based approach, and neural-network-based approach. The bag-of-words model is based on the distribution hypothesis that the context of words and their semantics are similar. The basic concept is to represent a document as a combination of words without considering the order in which the words appear. This implies weak generalization ability and an inadequate understanding of sentence semantics. The calculation of text similarity using a neural network model to generate word vectors (word vectors, word embeddings, or distributed representations) is a method that has been extensively studied in the field of NLP. Convolutional neural network (CNN) architectures applied to matching sentences13 are one example of this trend.

Use of machine learning in semantic repetition detection

Numerous researchers are gradually attempting to use relevant deep-learning methods to automatically detect duplicate questions. Chevallier et al. used an original method to detect quasi-duplicate datasets based on feature extraction and deep learning14. Wang et al. developed three deep learning methods based on Word2Vec, CNN, recurrent neural network (RNN), and LSTM to detect duplicate questions in Stack Overflow15. RNNs16 and LSTM models17 adapt naturally to variable-length inputs. By mapping a variable-length sequence onto a fixed-length vector, the semantic information contained in a sentence can be encoded. Thus, finding a more efficient question similarity computation model requires considerable effort. With the emergence of various improved LSTM models, Mueller and Thyagarajan offered a straightforward method for exploring sentence similarity. They proposed the MaLSTM model18 based on a Siamese recurrent architecture that is powerful enough to complete these complex understanding tasks. Regrettably, the MaLSTM model is limited in demonstrating impressive results. It suffers from dependency on its fixed mode, which is a Siamese deep network that calculates the similarity of sentences by relying on a simple Manhattan metric19.

Attention mechanism to compensate for the the MaLSTM model

The shortcoming of the MaLSTM model has a remedial solution. The attention mechanism, inspired by the human visual system, originates from the field of computer vision. The selective attention mechanism of human vision can be explained as follows: when we are looking at one thing, we must always pay attention to where we are currently focusing. It enables humans to quickly screen high-value information from a large volume of information while using limited attention resources20. It was first introduced in the NLP field through machine-translation tasks. The proposition of Nikolas Adaloglou is highly innovative: memory is attention across time21. The core concept of the attention mechanism is based on weighted averaging with dynamic weighting. When applied to sequence tasks, because sequence elements have a temporal order, dynamically weighting each element of the sequence generates memory over time22.

By amalgamating the aforementioned methodologies, the primary concern addressed in this design is to improve the accuracy attained after training data from online question-answering systems in colleges. The core issue lies in identifying a methodology for refining the foundational model to align it with the requirements of an existing system.

Research questions

Based on the above summary and elaboration of the relevant research, this study sought to answer the following research questions:

H1: Can the accuracy of the model be improved using Word2Vec for word embedding and Manhattan distance for similarity measurement?

H2: Can the Siamese LSTM model, which introduces an attention mechanism, improve accuracy?

H3: How will the model in this study process the SemEval Task 1 dataset compared with previously published models?

H4: How do students evaluate updated interactive systems?

H5: What innovations were realized in this study’s Q&A system?

Research design

Given the initial stage of developing a new online college Q&A system that lacks the accumulation of pre-existing question data, all new questions raised by users require system members (whether teachers or student accounts) to search and provide answers. Therefore, the development team decided to collect question data with clearly defined answers from the Stack Overflow and Quora communities and imported them into the system several years ago. This method reduces the labor and time required during the initial stages of construction.

Siamese neural network

A Siamese network was used to measure the similarity between two inputs. It has two inputs, X1 and X2, feeds them into two neural networks, and then converts them into vectors Gw (X1) and Gw (X2). The distance Ew between the two output vectors is calculated using a distance metric. The sample for training the Siamese network is a tuple (X1, X2, y). The label y = 0 indicates that X1 and X2 are of different types (not similar, not duplicates). The label y = 1 indicates that X1 and X2 are of the same type (similar, duplicate). Design of the LOSS function is as follows:

-

1.

When the two input samples are not similar (y = 0), the loss gets smaller if the distance Ew gets larger.

-

2.

When the two input samples are similar (y = 1), the loss gets larger if the distance Ew gets larger.

\(\:{\text{L}}_{+}\left({\text{X}}_{1},{\text{X}}_{2}\right)\) represents the loss when y = 1, \(\:{\text{L}}_{-}\left({\text{X}}_{1},{\text{X}}_{2}\right)\) denotes the loss at y = 0, and the loss function can be expressed as follows:

Data

During the initial phase of system updates, the majority of the test data we imported were sourced from publicly available Quora datasets (First-Quora-Dataset-Release-Question-Pairs) provided by Iyer et al., supplemented by a smaller portion collected from the Stack Exchange Data Explorer (Stack Overflow is a sub-domain within the Stack Exchange).

The Quora dataset comprises 404,290 pairs of potential duplicate questions, with each pair containing IDs, complete text, and a binary tag indicating whether the pair is indeed a duplicate. The analysis revealed that 36.92% of the questions are duplicates, amounting to 111,780 distinct pairs. The total number of questions after deduplication is 537,933. The Quora dataset was randomly partitioned using an 80:10:10 ratio, allocating 80% of the data (323,432 pairs) for training, 10% (40,429 pairs) for validation, and 10% (40,429 pairs) for testing. This splitting strategy follows standard practices in machine learning research to ensure robust model training while preserving sufficient data for unbiased evaluation and parameter tuning.

Data collection efforts from the Stack Exchange Data Explorer focused primarily on two tables: “Post” and “PostLinks.” Two fields from the “Post” table were selected, namely, “Id” and “Title.” The “Id” field from the “Post” table served as the “PostId” field in the “PostLinks” table to identify duplicate question pairs.

We selected 150,000 related question pairs, 150,000 exact duplicate question pairs, and 50,000 random unrelated question pairs from an existing online system.

The formal definition of duplicate question detection can be written as a pair of questions, q1 and q2, and a model that learns this function can be trained as follows:

where 1 represents that Qa and Qb are duplicates, and 0 otherwise.

If the answer to question A already exists and the new question B is a duplicate, the answer to question A can now be used directly for question B. If Qi and Qdi are duplicates,

Given the question, “Which is the best digital marketing institution in Shanghai?” Which types of questions are related? “Which is the best digital marketing institute in Shenzhen?” can be related or connected because of an established or discoverable relation. The two questions always have common characteristics, and the topic of the question is the same. However, these two questions are not duplicates. If Qi and Qri are related,

The two pairs of questions have no connection. They contain irrelevant concepts and details. If Qi and Qui are unrelated, then

.

The actual data fields are:

-

1.

id: the id of a dataset question pair;

-

2.

qid1, qid2: unique ids of each question;

-

3.

question 1, question 2: the full content of each question;

-

4.

is duplicate: the target variable, set to 1 if question 1 and question 2 have the same meaning, and 0 otherwise.

In the loading training stage, text inputs were converted into a list of words. With the preprocessing of data, extra space and specific symbols were removed, the abbreviation form was changed, and the punctuation was corrected. The inputs in string form were processed into a list as outputs, after which each entry of the outputs was a single word from the text.

The next step in embedding preparation was to turn each word into its embedding. The starting place for all zero embeddings was reserved, although this was never used. During the embedding file loading period, iteration was done through the text of both questions in the row; questions as words were replaced with questions as number representations. As shown in Table 1, the training data were converted into word indices.

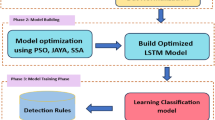

Research algorithm

Determination of the evaluation system

Satisfaction with using online learning platforms in universities was investigated using a summary and collation of related literature, as well as the characteristics of the updated interactive platform system in this study. As shown in Fig. 1, this study draws on the Technology Satisfaction Model (TSM) and adapts it by dividing The platform knowledge into the Self effectiveness (SLE) dimension, Functional use, Usage time, and Q&A Help into the perceived usefulness (PCUN) dimension, and finally dividing System evaluation into the perceived ease of use (PCEU) dimension. The indicator system contains five level 1 indicators: the platform knowledge (TPk), functional use (FU), usage time (UT), Q&A help (QA), and system evaluation (SE). TPk contains five questions to assess students’ familiarity with the e-learning platform; FU contains four questions to test students’ use of the platform’s interactive system; UT contains three questions to test the length of time and planning of using the e-learning platform and answering Q&A questions; and QA contains three questions to test students’ use of the platform’s interactive system. System evaluation (SE) consists of three questions to test the evaluation of the feeling of using the interactive platform system to understand students’ inclination and satisfaction with the interactive platform system.

Neural network model

The Siamese network is a “neural network of twins,” and the “twin relationship” of neural networks is achieved by sharing weights. In this design, a Siamese network is used to measure the similarity between the questions. Two sets of data are simultaneously input into a neural network and converted into N*1-dimensional vectors using this neural network. Subsequently, the distance between these two vectors is calculated using a numerical function (such as cosine similarity), and the obtained distance is used to measure the similarity of the original input data.The Siamese LSTM comprises two LSTMs (LSTMa and LSTMb) that share weights.

The word embedding dimension was set to 300 to maintain consistency with the dimensionality of the pretrained Word2Vec vectors employed in this study. This configuration ensures full compatibility with the embedding model trained on the Google News corpus, which has been extensively validated in NLP tasks and is recognized for its robust generalization capabilities across diverse Linguistic contexts. Regarding the hidden and output layer dimensions, a series of preliminary experiments were conducted with values ranging from 32 to 128. Based on performance evaluations using the validation set, a dimension size of 50 was identified as optimal, offering a balanced compromise between model expressiveness, computational efficiency, and generalization capacity. This configuration was found to be sufficiently expressive to capture semantic regularities pertinent to duplicate question detection, while simultaneously mitigating the risks of overfitting and excessive training time. Similarity function (\(\:{h}_{{T}_{a}}^{\left(a\right)}\) and \(\:{h}_{{T}_{b}}^{\left(b\right)}\) represent two sentences):

In this function, the final representation of sentences \(\:{h}_{{T}_{a}}^{\left(a\right)}\) and \(\:{h}_{{T}_{b}}^{\left(b\right)}\) is the hidden layer output of the last time step of LSTM. The inputs of \(\:LST{M}_{a}\) and \(\:LST{M}_{b}\) are \(\:{F}_{1}=\left\{{\nu\:}^{\left(a\right)},...,{\nu\:}_{{T}_{a}}^{\left(a\right)}\right\}\) and \(\:{F}_{2}=\left\{{\nu\:}^{\left(b\right)},...,{\nu\:}_{{T}_{b}}^{\left(b\right)}\right\}\), respectively. Among it, \(\:{T}_{a}\ne\:{T}_{b}\).

We calculated the Manhattan distance, as defined by the Siamese LSTM model. This research identified that the Manhattan distance outperformed other reasonable alternatives. After obtaining the similarity, all the parameters were fed into the model.

Optimizer selection

Our model chose the Adam optimizer later. The name Adam is derived from adaptive moment estimation. It is an optimization algorithm that requires only first-order gradients to replace the traditional stochastic gradient descent process. It can update the neural network weights iteratively based on the training data.

The advantages are as follows:

-

1.

straightforward implementation;

-

2.

efficient calculation;

-

3.

less memory required;

-

4.

invariance of diagonal gradient scaling;

-

5.

suitable for solving optimization problems with large-scale data and parameters;

-

6.

suitable for non-stationary targets;

-

7.

suitable for solving problems with very high noise or sparse gradients.

Pretrained embeddings

Pretrained word vectors have dominated the field of NLP for a long time. We used two pre-trained methods (Word2Vec and fastText) to identify the optimal choice for the model. Word2Vec focuses only on the byproduct of the model after training the model parameters, specifically the weight of the neural network. These parameters are referred to as word vectors.

FastText is a fast text classifier developed by Facebook that provides simple and efficient text categorization and representation learning methods. For instance, it can learn that “boys,” “girls,” “men,” and “women” refer to specific genders and can store these values in relevant documents.

Because the Word2Vec model does not consider word order, and its output is simply word vectors, capturing the semantic similarity between words and selecting a language model as an alternative can benefit from it.

We used WikiText 103 (28,595 preprocessed Wikipedia articles and 103 million words) as the pretrained language model to replace the word-embedding methods. The WikiText language modeling dataset is a collection of over 100 million tokens extracted from a set of verified and featured articles on Wikipedia. It is well suited for models that can take advantage of long-term dependencies. Merity et al.23. introduced the dataset in detail.

-

1.

Distance Function

The distance between two vectors is mathematically called the distance of the vector, which is also known as the similarity measurement between samples. This is reflected by the extent to which certain objects are close to or distant from each other. Intuitively, the closer the distance, the easier it is to classify two samples; the farther it is, the more different they are. The basis for this division is referred to as the distance.

-

2.

Cosine Distance

Geometrically, the angle cosine can be used to measure the difference between two vector directions. This concept is used in machine learning to measure the differences between sample vectors. The cosine of the angle between two n-dimensional sample points a (x11, x12, …, x1n) and b (x21, x22, …, x2n) is as follows:

The range is \(\:\left[-\text{1,1}\right]\). The larger the cosine, the smaller the angle between the two vectors (more similar), and the smaller the cosine, the larger the angle between the two vectors (more different).

-

3.

Manhattan Distance

As implied by the name, driving from one crossroad to another in Manhattan is not a straight line between two points. The actual driving distance was the “Manhattan distance.” The Manhattan distance between two n-dimensional sample points \(\:a\left({x}_{11},{x}_{12},...,{x}_{1n}\right)\) and \(\:b\left({x}_{21},{x}_{22},...,{x}_{2n}\right)\) is as follows:

Attention mechanism

Considering an input that appears in sequence form, each word in the sequence contributes to the meaning or representation of an entire part. However, not all words provide the same information.

The weight of each word in the sentence should differ. There are always keywords and irrelevant words. To prevent the keyword information from being erased during the transmission of the cell state, we must assign a probability to each word to understand how it affects the entire sequence.

In this design, we used an attention mechanism. It measures the importance of a word or part of a sequence using a softmax function and a context vector ut. Consider an RNN model as an example. Now, we also have one example input sentence: “I love you,” and our target output word is: Y. At time step i, the output value Hi of the hidden layer is known, and our purpose is to calculate the attentional distribution probability of the words “I,” “love,” and “you” to target output Yi when generating Yi. Therefore, we can use Hi at time step i to compare the RNN hidden layer node state hj corresponding to each word in the input sentence, that is, obtain the alignment possibility of the target word Yi and each input word using function F (hj, Hi). Function F adopts different methods in various studies. The output of the function F is then normalized using softmax to obtain the attention weight. After calculating the importance of each word, we obtain a weighted sum of the sequence vector representation ν as a weight-based all-word annotation.

Briefly, each hidden state is weighted based on the output content of each time step, and the final representation of the sentence is accumulated as follows:

The following is a visualization of simple attention. The words with the largest and second-largest weights were identified from dark to light in the sentence:

How do I read and find my YouTube comments?

Why do girls want to be friends with the guy they reject?

Although using the attention mechanism in the Siamese LSTM model was found to increase the computational load, the model performance could be improved.

During runtime, two query texts for comparison undergo identical preprocessing through the same pretrained embedding layer, ensuring consistent initialization of embedding layer parameters across the model. Subsequently, they are processed by two fully symmetrical neural networks, each characterized by identical structures and parameters. The incorporation of an attention mechanism enables the model to dynamically allocate distinct weights to inputs at each time step, thereby highlighting the relative importance of each input within its context. Finally, these weighted representations are fused and serve as inputs for subsequent tasks. Figure 2 depicts the structure of our final model.

Parameter saving

It took more than ten hours to run the training program each time. Therefore, it is natural to consider methods to save time. If users can save weight, bias, and other parameters obtained after running the training program, this will significantly save time.

The callback function is an important module. It is not a function but a class for collecting information or performing actions during the training process. Callbacks can be used to obtain a view of the internal states and statistics of the model during training. For example, we frequently wish to record the training and test errors of each epoch, and this information must be collected using the callback function. “Check-Point” is used to save the model after every epoch; “History” records the training and test information; “EarlyStopping” is used to end the training before it has converged; “LearningRateScheduler” supports adjusting the learning rate based on the policy from the user; this reduces the learning rate when a metric has stopped improving; and “CSVLogger” streams epoch results to a CSV file.

This is added when the user starts training. If the model must be reloaded later, the “load weights” method is used to load the checkpoint just saved, with need to start from scratch.

Evaluation

During the evaluation phase, Keras with a TensorFlow Backend was used, and the experiments were conducted on a college server equipped with an NVIDIA GTX 1080. The operating system was Ubuntu 16.04. Each script, including preparation and training, took approximately 21 h on the central processing unit (CPU) but only 8 h on the graphics processing unit (GPU). CPUs and GPUs process the same task differently. A CPU is required to run the majority of software, whereas a GPU is best at focusing all computing power on a specific task. This is because a CPU consists of a few cores optimized for sequential serial processing, which helps maximize the performance of a single task. By contrast, a GPU uses thousands of smaller and more efficient cores for massively parallel architectures designed to handle multiple functions simultaneously.

Results

Neural network model

Experiment: Comparison of the Siamese LSTM model with different combinations of training components, different embedding files (Word2Vec or fastText), different similarity functions (cosine distance or Manhattan distance), and with or without an attention mechanism.

In the evaluation of the experimental results, this design used the training data accuracy rate (ACC) after 25 epochs, recall rate (Recall), precision rate (Precision), and harmonic average value of the precision and recall rates (F1) to evaluate effectiveness. The specific calculation methods for the four evaluation indicators are as follows:

Among the formulas, the specific meaning of each parameter is as follows:

TP (true positive): judged as a positive sample, and the judgment is correct;

TN (true negative): judged as a negative sample, and the judgment is correct.

FP (false positive): judged as a positive sample but is a negative sample.

FN (false negative): judged as a negative sample but is a positive sample.

As listed in Table 2, the Siamese LSTM network has an attention layer that selects Word2Vec for embedding and selects the Manhattan distance as a similarity function that outperforms the other combination. These data confirmed the superiority of the model combination.

Moreover, we can determine whether the use of the attention mechanism significantly widens the differences in the accuracy of the models. Given the same question similarity calculation function and embedding file, the accuracy of the model using the attention mechanism was estimated to be approximately 9% higher than that of the model without the attention mechanism. This further confirms the correctness of our decision to add the attention layers.

In the same table, we can still see that selecting different same-question similarity calculation functions can also affect the final accuracy. Given the same embedding file and the same situation of applying the attention mechanism, the accuracy of the model using the Manhattan measure was over 1% higher than that of the model using the cosine function.

The new Quora dataset was introduced as a Kaggle competition dataset in 2017 and has since been utilized extensively by researchers. This dataset is a filtered and preprocessed subset of the Quora Question Pairs dataset. In 2019, Lakshay Sharma et al. established a baseline using fundamental models and explored various approaches, including tree-based models, Continuous Bag of Words (CBOW) neural networks, and Long Short-Term Memory (LSTM) recurrent neural networks24. We adopted their evaluation methodology, incorporating modifications such as using the GloVe vectors to get word embeddings and using Dropout for regularization. It is noteworthy that Sharma’s team identified some annotation errors or ambiguities in the source data. Given the limited number of affected entries, we chose to retain the original content without modification.

As listed in Table 3, our model (Siamese LSTM + Attention) achieved commendable accuracy and f-score in conjunction with Sharma’s testing results.

In the most recent evaluation, we compared the test results of similar models from studies conducted between 2017 and 202225. Our results can be summarized as “Siamese LSTM with Manhattan Difference + Attention”, it demonstrated a slight accuracy advantage, as illustrated in Table 4.

Furthermore, the comparative analysis indicates that the Siamese LSTM baseline model performed robustly in this context. Incorporating an attention mechanism also achieved significantly higher accuracy (91.6%) than all baselines: exceeding the standard LSTM by 13.2% points (78.4%) and surpassing the closest competitor (Siamese LSTM with Square Difference and Addition, 89.1%) by 2.5% points. Additionally, different distance metrics had a minor yet discernible impact on the overall model performance.

We have also conducted tests and comparisons on other data sets in the early stage. For the convenience of comparison, our model structure can be summarized as “MaLSTM features + Attention Mechanism.”

Reviewing past research, Lien and Kouylekov used the methodology of textual entailment in conjunction with graph-structured meaning representations, advanced semantic technologies, and formal reasoning tools to elevate their system’s metrics to the forefront among comparable general-purpose semantic parsing systems26. Bowman et al. introduced the Stanford Natural Language Inference Corpus, a freely accessible collection of 570k annotated sentence pairs. They discovered that the information learned by neural network models trained on this corpus could be leveraged to improve performance on standard datasets27. Bjerva et al. adopted a supervised approach to develop a semantic similarity system. It is worth noting that the random forest (RF) regressor is used to determine the similarity between sentence pairs, achieving an accuracy rate of approximately 82%29. Zhao et al. developed five advanced systems, each using an identical feature set but using distinct classifiers or regressors, including a support vector machine (SVM), RF, gradient boosting (GB), K-nearest neighbors (KNN), and stochastic gradient descent (SGD). These systems demonstrate favorable outcomes in the SemEval Task, achieving an accuracy rate approaching 84%, highlighting the effectiveness of their ECNU models31.

Mueller and Thyagarajan proposed a Siamese adaptation of an (LSTM network for data labeling. Combined with an SVM, this novel model surpasses the performance of all previously implemented and more intricate neural network systems. Given that this model relies on pretrained word embeddings as inputs to the LSTM, the authors anticipate further improvements in prediction accuracy with the expansion of pretrained word-embedding datasets18.

This overview traces the progression from general-purpose semantic parsing techniques to the application of supervised learning with RF through experimentation with neural network models. It then delves into the comparative analysis of SVM, RF, GB, KNN, and SGD, culminating in the adoption of LSTM and the innovative MaLSTM model, which marks a steady improvement in accuracy. Table 5 shows that our method outperforms all previous methods when training the SemEval Task 1 data.

SemEval is a series of international NLP research workshops. SemEval Task 1 data are a normal sentence pair. However, whether the dataset consists of questions or not affects the training and similarity calculations. The data fields include sentence pair ID, sentence A, sentence B, semantic relatedness gold label (on a 1–5 continuous scale), and textual entailment gold label (NEUTRAL, ENTAILMENT, or CONTRADICTION). Table 6 shows 3 rows of sample SemEval Task 1 data.

SemEval Task 1 data just require some minor adjustments to be used in our model, listed as follows:

− adding sequence number format based on our question pairs format, “id” start from 0 and inserting two new columns “qid1” and “qid2”;

− deleting the “relatedness” column;

− changing the “entailment judgment” column to “is duplicate”;

− replace all “ENTAILMENT” as “1,” “NEUTRAL” and “CONTRADICTION” as “0”;

− convert the entire “txt” file to “csv” format.

The adaptation of the SemEval Task 1 dataset was undertaken to ensure alignment with the core objective of this study—namely, the detection of semantically equivalent (i.e., duplicate) question pairs. To achieve this, the original three-way entailment classification (ENTAILMENT, NEUTRAL, CONTRADICTION) was transformed into a binary classification scheme. This binary conversion facilitated a consistent evaluation framework across all datasets used in this study (i.e., Quora, Stack Overflow, and SemEval), allowing the application of a unified model architecture without task-specific modifications. It is acknowledged that direct performance comparisons with models originally developed for full natural language inference classification tasks may introduce limitations in fairness due to differences in task formulation. To address this issue, we restricted our comparative analysis to baseline systems from prior studies that employed either semantic similarity scoring or binary entailment evaluation on the SemEval dataset. As presented in Table 5, the selected reference models (e.g., MaLSTM + SVM, ECNU, LangPro) were chosen specifically for their methodological alignment with our binary classification setting, thereby ensuring greater comparability and interpretive validity of the results.

The adjusted data format is shown in Table 7.

Our training data comprise question pairs, and each question has its own “id” tag. In this case, the answer program can be modified from the Siamese LSTM model. All questions from our training data can be saved as raw alignment data to establish a corpus. Including the online question-answering systems for college question pairs and SemEval Task 1 data. The saved content is only the “id” and the vector representations rendered by the Siamese LSTM model. When we enter a new question and want to detect the questions that are duplicated with this new one, we only compare the vector representations of questions, calculate the similarity between them, and finally use the “id” tag to query the question content.

The basic Siamese LSTM splits the data into left and right parts. After loading all the information from the corpus, we built the left-part inputs. The converted question was entered into vector form using the method defined in the Siamese LSTM model. Corresponding to each left input, the vector form of the new question was filled in for the right input. We compared similarity, which is equivalent to calculating the similarity between a new question and each question in the corpus.

Finally, the “id” corresponding to the question with higher similarity is returned to the user. Even multiple “id” values can be returned, ranging from high to low based on similarity. This kind of Q&A search system allows users to ask questions in natural language and accurately and quickly find answers to questions from a large amount of data. Completely new questions will be posted on the platform, awaiting responses from instructors. It is noteworthy that answers from students with strong knowledge and skills may also be included as standard answers.

The computational performance of the proposed model was evaluated on both an NVIDIA GTX 1080 GPU and a standard Intel CPU platform. Experimental results indicate that the model achieves a favorable Balance between predictive accuracy and processing efficiency. Specifically, the average inference latency remained below 200 milliseconds per query, rendering it suitable for real-time deployment in educational question-answering scenarios. The model architecture is inherently modular and supports parallel computation. The integration of pretrained Word2Vec embeddings and the adoption of computationally efficient similarity measures, such as Manhattan distance, contribute to reduced inference overhead.

Application oriented interactive learning system for university

This study designed an operational flowchart for an interactive learning system in universities, including six parts: interactive learning, data collection, data processing and training, real-time optimization technology, feedback collection mechanism, and continuous model optimization, as shown in Fig. 3.

The interactive learning system for universities includes mobile and web platforms, mainly serving university students and teachers. The services are divided into AI services and manual services, among which AI services include open source data warm boot 、LSTM + Attention、 Instant question and answer, belonging to the level of Human-Machine interactive. Human services include providing feedback on incorrect answers, annotating new questions for teachers, verifying responses from students, teachers, and researchers, and belong to the level of interpersonal interaction. Real time data collection begins with user question input, synchronously obtaining user profiles. In the data processing stage, text cleaning and spelling correction are performed, and dynamic injection of learning progress, knowledge graph association, and historical question and answer triple context is performed to correlate information. The data training is conducted through Quora and dataset, and compared and tested with SemEval and Task data. This model continuously updates and optimizes the interactive learning system through three paths: real-time optimization technology, feedback collection mechanism, and continuous model optimization. Real time optimization technology implements dynamic model selection (lightweight model for simple problem routing/complete model for complex problem routing), mixed precision acceleration, and high-frequency result caching. The feedback mechanism combines explicit feedback with implicit feedback, where explicit feedback includes user ratings, accuracy feedback, and manual verification; Implicit feedback includes depth of inquiry, analysis of dwell time, and identification of knowledge gaps. Continuous model optimization is based on daily incremental training: mining low scoring answers and high questioning difficulties, using adversarial samples and knowledge system updates to transfer teacher model soft labels to student models. The final output of multimodal response includes core answers (including formula rendering), dynamic knowledge graph, related exercises, and recommended videos, forming a closed-loop learning system of “data acquisition intelligent reasoning feedback optimization”.

The interactive learning system is based on human-machine collaborative evolution and constructs a structure of “intelligent processing humanistic care”. Through the dynamic context perception (learning portrait/knowledge graph) and multimodal response (formula rendering/AR demonstration) of the human-computer interaction layer, the system realizes a personalized cultivation path; The teacher-student collaboration mechanism and historical Q&A reuse in the interpersonal interaction layer reflect interpersonal interaction. In the dimension of interactive learning, closed-loop feedback systems enable machines to continuously optimize and promote a spiral of “problem-solving knowledge construction”. Ultimately, the visualization of knowledge graphs reproduces the inheritance of disciplinary context, personalized learning is reflected in learning path recommendations, and the deep analysis of problems by big language models embodies the spirit of digital humanities.

Satisfaction survey results

Basic information

In total, 377 valid questionnaires were collected through surveys. The survey institutions are higher vocational colleges and universities, and the distribution of the survey respondents’ academic years is concentrated in the freshman (29.7%), sophomore (68.4%), and junior (1.9%) years of colleges and universities, of which 213 (56.5%) are males and 164 (43.5%) are females, and the largest number of majors are in the field of big data technology and application (25.2%).

Satisfaction analysis

In this design, we updated the version of the online Q&A system. We performed a series of adjustments to the basic Siamese LSTM model. The basic concept of the control variable method was used. This study used the Likert 5-point scale to survey 377 college students who used online question and answer platforms.Table 8 shows the dimensions, and variable of the satisfaction survey. Through reliability and validity testing, the reliability of this study is above 0.85, and the KMO is above 0.83.

First, under the same conditions, the experimental results corresponding to the Manhattan distance method as the distance formula are always superior to those corresponding to the cosine distance method. Second, an attention mechanism is added without changing the other parts of the original model, i.e., an attention layer is added after each LSTM layer to select critical information for the current research mission from a wide range of data. The accuracy can achieve an improvement of approximately 10% in some cases. Following the previous adjustments, we attempted to use a pretrained language model to replace word-embedding methods. The pretrained language model helps sentences from question pairs maintain semantic relationships. These results are applicable to the two sample datasets in which we provide question pairs from online Q&A systems in college and Stack Overflow question pairs. Our system implements simple information extraction and a natural language understanding method (based on the Siamese LSTM model) to answer human questions.

As shown in Fig. 4, It can be seen that AC has the highest score (4.4), with “I am satisfied with the accuracy of the platform’s Q&A system” indicating that students are satisfied with the accuracy and efficiency of answering questions on the online platform. PU (3.98) has the second highest score, with the question “The updated platform Q&A system is better to use than before” indicating that students are satisfied with the use of the updated online system. HS (3.97) and PQ (3.97) have the third highest scores, with “Our school’s online learning platform allows me to maintain high standards (quality) of online learning” and “When I need help with answering questions, I tend to ask questions on the platform’s Q&A system” This indicates that schools have a high level of support for students’ online learning, and students often use online platforms to answer questions. As can be seen from the figure, the score of Other use (UAP) is the lowest among all the questions (2.55), and the original question of this question is “If I have any questions, I will search for some additional platforms to find answers”, It can be seen that this question item is a negative question, so it is reasonable for this question item to have a low score. This also indirectly indicates that students have a high satisfaction with the question and answer platform in this study and a high usage rate of the platform.

Conclusion

This study focuses on the application of neural network models in Q&A systems for virtual learning communities in universities, using the basic idea of the control variable method.

We made a series of adjustments to the basic Siamese LSTM model and arrived at the following conclusions:

-

5.1

Adopting Word2Vec as the word embedding method and using Manhattan distance as the similarity metric can significantly improve the accuracy of the model.

-

5.2

The effectiveness of the Siamese LSTM model combined with the attention mechanism was experimentally verified, and the introduction of the attention mechanism improved the performance of the model by approximately 9% when compared with the model without it.

-

5.3

In comparison with pre-publication models of various types, the method in this study shows superior performance when processing the SemEval Task 1 dataset.

-

5.4

In the satisfaction survey, the students rated the updated interactive system highly. The survey results showed that students were satisfied with the accuracy of the platform.

-

5.5

Our answer system implements simple information extraction and natural language understanding methods to answer human questions.

Data availability

Data can be available upon reasonable request. For access to the data, please contact the first author Hao Cao at M23092100031@cityu.edu.mo.

References

Zheng, Z. et al. Educational development and transformation in the intelligent era: Overview of the 2025 World Digital Education Conference. Open Educ. Res. 31(3), 17–25 (2025).

Deldjoo, Y. Understanding biases in ChatGPT-based recommender systems: Provider fairness, temporal stability, and recency. ACM Trans. Recomm Syst. https://doi.org/10.1145/3690655 (2024).

Deldjoo, Y. & Di Noia, T. Cfairllm Consumer fairness evaluation in large-language model recommender system. ACM Trans. Intell. Syst. Technol. https://doi.org/10.1145/3725853 (2025).

Biancofiore, G. M. et al. Interactive question answering systems: literature review. ACM Comput. Surv. 56 (9), 239 (2024).

Wang, K. et al. ISEMSS. Analyzing public sentiment towards AI: Insights from video comments on Bilibili and YouTube—A ChatGPT case study. In Proc. 7th Int. Semin. Educ. Manag. Soc. Sci. Atlantis Press (2023). (2023).

Lihui, S. & Liang, Z. Generative artificial intelligence literacy: conceptual evolution, framework construction, and improvement path. Mod. Distance Educ. 1, 11–21 (2025).

Yan, L. et al. Investigation of college students’ generative artificial intelligence (GAI) usage status and its implication: taking Zhejiang university as an example. Open. Educ. Res. 30 (1), 89–98 (2024).

Li, D. & Xing, W. A comparative study on sustainable development of online education platforms at home and abroad since the twenty-first century based on big data analysis. Educ. Inf. Technol. 30, 16023–16044 (2025).

Li, D. et al. Structural equation modeling and validation of virtual learning community constructs based on the Chinese evidence. In Proc. of the Interactive Learning Environments 1–16 (2025).

Wang, Y. et al. MCQA: A responsive question-answering system for online education. Sens. Mater. 35 (12), 4325–4336 (2023).

Zolduoarrati, E., Licorish, S. A. & Stanger, N. Harmonising contributions: exploring diversity in software engineering through CQA mining on stack overflow. ACM Trans. Softw. Eng. Methodol. 33 (7), 179 (2024).

Nauman, F. & Herschel M. An Introduction To Duplicate Detection (Springer Nature, 2022).

Ba, J., Mnih, V. & Kavukcuoglu, K. Multiple object recognition with visual attention. arXiv preprint arXiv:1412.7755 (2014).

Chevallier, M. et al. Detecting near duplicate dataset with machine learning. Int. J. Comput. Inf. Syst. Ind. Manag Appl. 14, 374–385 (2022).

Wang, L., Zhang, L. & Jiang, J. Duplicate question detection with deep learning in stack overflow. IEEE Access. 8, 25964–25975 (2020).

Socher, R. Recursive deep learning for natural language processing and computer vision. Stanf. Univ. (2014). http://purl.stanford.edu/xn618dd0392

Soutner, D. & Müller, L. Application of LSTM neural networks in language modelling. In Proc. Springer Berlin Heidelberg (2013).

Mueller, J. & Thyagarajan, A. Siamese recurrent architectures for learning sentence similarity. In Proc. AAAI Conf. Artif. Intell. (2016).

Sammut, C. & Webb, G. I. Encyclopedia of Machine Learning and Data Mining (Springer, 2017).

Ruyi, B. A general image orientation detection method by feature fusion. Vis. Comput. 40 (1), 287–302 (2024).

Adaloglou, N. & Karagiannakos, S. How attention works in deep learning: Understanding the attention mechanism in sequence models. AI Summer (2020). https://theaisummer.com/attention/

Niu, Z., Zhong, G. & Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 452, 48–62 (2021).

Merity, S. et al. Pointer sentinel mixture models. ArXiv preprint arXiv:1609.07843 (2016).

Sharma, L. et al. Natural language understanding with the Quora question pairs dataset. arXiv preprint arXiv:1907.01041 (2019).

Kumari, R. et al. Detection of semantically equivalent question pairs. In Proc. Intell. Hum. Comput. Interact., Springer Int. Publ. (2021).

Lien, E. & Kouylekov, M. Semantic parsing for textual entailment. In Proc. 14th Int. Conf. Parsing Technol. (2015).

Bowman, S. R. et al. A large annotated corpus for learning natural language inference. arXiv preprint arXiv:1508.05326 (2015).

Abzianidze, L. A tableau prover for natural logic and language. In Proc. Conf. Empir. Methods Nat. Lang. Process. (2015).

Bjerva, J., Bos, J., Van der Goot, R., et al. The meaning factory: Formal semantics for recognizing textual entailment and determining semantic similarity. In Proc. 8th Int. Workshop Semant. Eval. (SemEval 2014) (2014).

Jimenez, S., Duenas, G., Baquero, J., et al. UNAL-NLP: Combining soft cardinality features for semantic textual similarity, relatedness and entailment. In Proc. 8th Int. Workshop Semant. Eval. (SemEval 2014) (2014).

Zhao, J., Zhu, T., Lan, M. ECNU: One stone two birds: Ensemble of heterogenous measures for semantic relatedness and textual entailment. In Proc. 8th Int. Workshop Semant. Eval. (SemEval 2014) (2014).

Lai, A., Hockenmaier, J. Illinois-lh: A denotational and distributional approach to semantics. In Proc. 8th Int. Workshop Semant. Eval. (SemEval 2014) (2014).

Acknowledgements

The authors would like to gratefully acknowledge the volunteers for their support and the participants who were interviewed for giving up their time to contribute to this study.

Funding

This paper is a research achievement of the key research of higher education teaching reform in Jilin Province in 2025, “Analysis of network learning behavior based on Data mining and its teaching strategy”. (Project Number: 2025UT3S558005B); Key research Project of Jilin International Studies University in 2024, “Research on the Development and Cultivation Mechanism of University Teachers’ Artificial Intelligence Competence from the Perspective of Lifelong Learning”, (Project number: JW2024ZDB010).

Author information

Authors and Affiliations

Contributions

Hao Cao: Research design, Formal analysis; Xingman Yu: Software; Pingping Han: Data analysis; Jun Peng: Analysis and Verification; Deming Li: Methodology, Funding. Hao Cao and Xingman Yu contributed equally to this work and should be regarded as co-first authors.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics statement

All experimental protocols were approved by the Ethics Review Committee of Jilin International Studies University(JW20240311).We declare that we will strictly abide by the relevant regulations of the national laws and the ethics review committee in conducting research and protect the rights and privacy of the subjects.We have obtained informed consent from all subjects.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Cao, H., Yu, X., Han, P. et al. Interactive learning system neural network algorithm optimization. Sci Rep 15, 35498 (2025). https://doi.org/10.1038/s41598-025-19436-2

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-19436-2