Abstract

The ocean plays a critical role in regulating global climate and ecosystems, making accurate prediction of ocean environmental conditions essential for disaster prevention, sustainable resource management, and ecological protection. A novel ocean environment prediction method based on a Multiscale Spatiotemporal Network (MSSTN) has been developed to improve the accuracy of ocean water quality forecasting. Spatial and temporal data are exploited by integrating deep learning techniques, including Graph Convolutional Networks (GCNs) and attention mechanisms, to capture the complex spatial similarities and temporal dependencies of oceanic events. Buoy observations and remote sensing data are used, and multivariate time series analysis is performed to predict ocean water quality metrics such as chlorophyll a concentration. Quantitative evaluations on Fujian coastal data show that MSSTN reduced the Mean Absolute Percentage Error (MAPE) by 1.035 mg/L in 1-day predictions, a 12.4% improvement relative to the best existing method, and by 1.226 mg/L in 1-week forecasts (a 19.8% improvement over the best existing method). The model maintains a MAPE below 2.5% in 1-month projections, outperforming conventional methods in both short-term and long-term prediction accuracy and stability.


Introduction

The ocean, a vast reservoir of natural resources, plays an important role in shaping global environmental and climatic conditions. Accurate perception and sustainable utilization of ocean dynamics are essential for the continued progress of human civilization. However, ocean pollution has emerged as a significant global crisis, posing severe threats to marine biodiversity and human well-being1. Each year, approximately 8–10 million metric tons of plastic waste enter the oceans, accumulating on the seabed, entangling marine organisms, and being ingested by species across the food web. This pervasive pollution leads to the direct mortality of over one million marine animals annually, including seabirds and sea turtles. Moreover, agricultural nutrient runoff has created more than 400 hypoxic “dead zones,” covering over 245,000 square kilometers of ocean, where depleted oxygen levels destroy marine habitats and disrupt fish populations2. The ecological balance of these habitats faces unprecedented challenges.

Beyond its environmental consequences, ocean pollution severely affects human health. Industrial runoff introduces heavy metals such as lead and mercury into marine ecosystems, contaminating seafood and causing neurological damage, kidney disorders, and developmental problems in humans. In addition, nutrient pollution drives toxic algal blooms, which further compromise food safety and public health. The annual “China Environmental Condition Bulletin” indicates that the state of China’s marine ecological environment remains a concern, with severe water pollution in coastal areas and frequent green tides, red tides, eutrophication, and salt erosion3,4. These problems greatly constrain the development of the marine economy and highlight the urgent need for effective management strategies.

Marine monitoring technology is crucial for marine disaster prevention and mitigation. The collection, analysis, and use of multi-dimensional marine monitoring data not only advance marine scientific research but also provide reliable data support for a range of application sectors, such as marine ecological environment monitoring departments, marine disaster early-warning departments, national defense and security departments, and the marine consumer sector5,6. To address marine red tide disasters, Fujian Province has established more than 380 base stations and over 5,000 sets of buoy collectors, using BeiDou satellites for data transmission, to monitor the marine ecological environment in real time7,8. The aim of this initiative is to analyze and predict the marine environment from the resulting large volume of monitoring data.

Deep learning has recently shown strong potential for spatiotemporal prediction tasks. For example, Long Short-Term Memory (LSTM) networks, which are designed to learn from sequential data, have been combined with Convolutional Neural Networks (CNNs), which excel at identifying spatial patterns. This combination has been applied successfully to sea surface temperature (SST) forecasting9, improving accuracy compared with time-only models. Sarkar et al.10 further extended this paradigm by integrating LSTM networks with numerical estimators to achieve precise SST forecasts across diverse spatiotemporal scales, demonstrating the feasibility of combining deep learning with physical models. For performance comparison, Ali et al.11 systematically evaluated deep learning, machine learning, and statistical models for SST and significant wave height (SWH) prediction; their findings highlighted the superior performance of deep learning in capturing nonlinear oceanic dynamics. Xu et al.11 designed a specialized LSTM architecture for global SST prediction that reduced the Root Mean Square Error (RMSE) by 20% compared with traditional ocean models, performing particularly well in coastal and equatorial regions. Notably, deep learning has also achieved breakthrough progress in forecasting long-term climatic phenomena. The CNN model developed by Yoo-Geun Ham et al.12, published in Nature, enabled effective 18-month predictions of the El Niño-Southern Oscillation (ENSO), surpassing existing dynamical models in Niño3.4 index accuracy. This milestone underscores the potential of deep learning for climate-scale ocean forecasting. To address spatial resolution limitations, Hu et al.13 incorporated residual structures and attention mechanisms, substantially improving the spatial resolution of wind speed, SWH, and wave period data. This approach provides a new methodology for reconstructing high-precision marine environmental fields. 
Collectively, these studies outline the evolution of deep learning in ocean prediction: from single-parameter forecasting to multi-physical field coupling, from short-term predictions to climate-scale projections, and from purely data-driven approaches to hybrid physics-informed frameworks.

Recent advances in deep learning, particularly LSTM-CNN hybrids for SST prediction and CNN-based ENSO forecasting, demonstrate AI’s potential in marine science. Despite these advances, two challenges remain unresolved. First, many models overlook the dynamic spatial dependencies that occur as pollutants spread or as conditions change across connected marine regions. Second, long-term temporal patterns, such as seasonal cycles or recurring pollution events, are often ignored. To address these gaps, we propose a new Multiscale Spatiotemporal Network (MSSTN). This model combines Graph Convolutional Networks (GCNs), which represent monitoring stations as nodes in a network and capture their spatial interactions, with a Temporal Transformer module, which excels at modeling long-term dependencies in time series data. By integrating these components, the MSSTN can dynamically learn both spatial and temporal features, improving the accuracy and reliability of ocean environment predictions.

Data processing

In this study, the features relevant to ocean environment prediction are categorized into hydrological, meteorological, and chemical features. These features capture the complex dynamics of the ocean environment and are used to construct the spatial relationships between monitoring stations. Hydrological features mainly include temperature, salinity, waves, water level, and ocean currents; they describe the physical state of the ocean and are fundamental to understanding spatial and temporal variations in the marine environment. Meteorological features primarily include sea surface temperature, atmospheric pressure, wind direction, wind speed, and geopotential height; they capture the influence of atmospheric conditions on the ocean and are essential for predicting phenomena such as sea surface temperature anomalies and wave heights. Chemical features include marine water quality data such as dissolved oxygen, pH, alkalinity, nitrate, nitrite, heavy metals, and suspended solids, as well as chlorophyll a (Chl-a) and blue-green algae (BGA), which are key indicators for predicting red tide occurrences. These features describe the chemical state of the ocean and are vital for assessing water quality and potential ecological risks. In addition, spatial features describe the geographical information of each monitoring station and do not change over time. They can be extracted from Point of Interest (POI) data (for example, the number of locations of specific types around the target area), and spatial map information is used to construct the relationships between monitoring stations.
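As a minimal sketch (not the authors' code), the three feature families can be concatenated into one vector per station and compared by Euclidean distance. The dictionary keys `hydro`, `meteo`, and `chem`, the function names, the example readings, and the per-column z-score normalization are illustrative assumptions; the text does not specify how features are scaled.

```python
import numpy as np

def aggregate_station_features(stations):
    """Concatenate hydrological, meteorological and chemical features per station.

    stations: list of dicts with keys "hydro", "meteo", "chem" (1-D sequences whose
    lengths are the same for every station). Returns an (N, F) matrix whose columns
    are z-score normalised (an illustrative choice, not taken from the paper).
    """
    rows = [np.concatenate([s["hydro"], s["meteo"], s["chem"]]) for s in stations]
    X = np.asarray(rows, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # constant columns would otherwise divide by zero
    return (X - mean) / std

def pairwise_euclidean(X):
    """(N, N) Euclidean distances between station feature vectors; smaller = more similar."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

# Hypothetical readings: [temperature, salinity], [pressure], [DO, Chl-a]
stations = [
    {"hydro": [20.1, 31.0], "meteo": [1012.0], "chem": [6.5, 2.1]},
    {"hydro": [20.3, 30.8], "meteo": [1011.5], "chem": [6.4, 2.4]},
    {"hydro": [24.0, 28.0], "meteo": [1008.0], "chem": [4.9, 9.8]},
]
X = aggregate_station_features(stations)
D = pairwise_euclidean(X)
```

Normalizing before computing distances keeps a large-valued variable such as pressure from dominating the comparison.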

To effectively model the spatial relationships between different monitoring stations, we integrate the extracted features into a comprehensive graph structure. This integration involves the following steps:

1. Feature Aggregation: We aggregate the hydrological, meteorological, and chemical features to form a unified feature representation for each monitoring station. This aggregated feature vector captures the multidimensional characteristics of the ocean environment at each location.

2. Graph Construction: In the ocean environment, pollution events in one area are often correlated with events in nearby areas; regions that are close together typically experience similar numbers of ocean environmental events14. To describe this correlation, the model introduces regional distance information. In addition, regions with similar features often exhibit similar ocean environment patterns15. To represent the spatial relationships between monitoring stations, we define an undirected graph \(G = (V, E, A)\) containing \(N\) nodes (all monitoring stations within the monitored area), where \(V\) (\(v_i \in V\)) is the set of stations, \(E\) (\(\langle v_i, v_j\rangle \in E\)) is the set of edges representing potential interactions between stations, and \(A \in \mathbb{R}^{N\times N}\) is a spatial weight matrix whose element \(A_{i,j}\) quantifies the spatial correlation between \(v_i\) and \(v_j\); the edge weight thus represents the strength of the relationship between the stations. In the proposed model, Euclidean distance is used to represent these relationships: the smaller the distance, the stronger the correlation.

1) POI graph: The POI graph is a key component of the model that encodes the environmental similarity between regions. It is constructed from POI data, such as the types of monitoring stations or environmental landmarks within a region. Each region is represented by a POI vector whose dimension equals the number of POI categories and whose entries give the number of POIs of each category within region \(i\). In the POI graph \(G_{POI} = (V, E_p, A_p)\), \(V\) is the set of nodes, corresponding to the monitoring stations (or regions) in the ocean environment; \(E_p\) is the set of edges, which indicate the environmental similarity between pairs of stations or regions; and \(A_p\) is the adjacency matrix that encodes the strength of the spatial relationship between nodes, each element of which represents the similarity between two nodes.

Here, the vector of region \(i\) comprises three types of feature vectors (hydrological, meteorological, and chemical features), and \(\sigma_p\) is the parameter that controls the scale of the similarity matrix.

2) Event graph: A regional pollution event similarity graph \(G_{Event} = (V, E_c, A_c)\) is established to encode the similarity of historical pollution events between regions. Each region is represented by an event vector whose dimension equals the number of time gaps in the spatiotemporal pollution event data; each entry is the number of pollution events that occurred in region \(i\) during the corresponding time gap. \(V\) is again the set of nodes, corresponding to regions or monitoring stations; \(E_c\) is the set of edges, indicating the similarity of historical events (e.g., pollution events) between regions; and \(A_c\) is the adjacency matrix representing the strength of the connections between regions based on the similarity of their historical pollution events. It is defined as follows:

3) Regional topological graph: A regional distance graph \(G_{Dist} = (V, E_d, A_d)\) is established to encode the spatial proximity between regions, using a Gaussian kernel weighting function to define the edge weights. \(V\) is the set of nodes, again corresponding to regions or monitoring stations; \(E_d\) is the set of edges representing the spatial topological relationships between regions based on their geographical proximity; and \(A_d\) is the adjacency matrix representing the strength of the spatial relationship between stations, which here is determined by the geographical distance between stations or regions. It is defined as follows:

Here, \(Dist(i,j)\) is the Manhattan distance between regions \(i\) and \(j\), and \(\sigma_d\) is the parameter controlling the scale of the adjacency matrix.
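The three adjacency matrices can be sketched in NumPy as follows. The forms are assumptions chosen for illustration: a Gaussian kernel \(\exp(-d^2/\sigma^2)\) on the Euclidean distance between POI vectors for \(A_p\), cosine similarity of event-count vectors for \(A_c\), and a Gaussian kernel on the Manhattan distance between region coordinates for \(A_d\) (the last one follows the description of the distance graph). Function names and example values are hypothetical.

```python
import numpy as np

def gaussian_weights(dist, sigma):
    """Gaussian kernel exp(-dist^2 / sigma^2): distance 0 -> 1, large distance -> ~0."""
    return np.exp(-(dist ** 2) / sigma ** 2)

def poi_adjacency(poi_vectors, sigma_p):
    """A_p: Gaussian kernel on Euclidean distance between regional POI vectors (assumed form)."""
    diff = poi_vectors[:, None, :] - poi_vectors[None, :, :]
    return gaussian_weights(np.linalg.norm(diff, axis=-1), sigma_p)

def event_adjacency(event_counts):
    """A_c: cosine similarity of per-time-gap event-count vectors (assumed form)."""
    norms = np.linalg.norm(event_counts, axis=1, keepdims=True)
    unit = event_counts / np.where(norms == 0, 1.0, norms)  # regions with no events stay all-zero
    return unit @ unit.T

def distance_adjacency(coords, sigma_d):
    """A_d: Gaussian kernel on the Manhattan distance between region coordinates."""
    manhattan = np.abs(coords[:, None, :] - coords[None, :, :]).sum(axis=-1)
    return gaussian_weights(manhattan, sigma_d)

# Hypothetical three-region example
coords = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
events = np.array([[1.0, 0.0, 2.0], [2.0, 0.0, 4.0], [0.0, 3.0, 0.0]])
pois = np.array([[3.0, 1.0], [3.0, 1.0], [0.0, 5.0]])
A_d = distance_adjacency(coords, sigma_d=2.0)
A_c = event_adjacency(events)
A_p = poi_adjacency(pois, sigma_p=1.0)
```

Regions 0 and 1 are close, have proportional event histories, and share a POI profile, so they receive strong weights in all three graphs, while region 2 is nearly disconnected.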

Proposed methodology

Framework overview

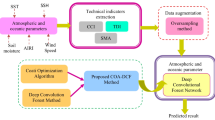

Multi-view Graph Convolutional Networks with attention mechanisms (MGCN) are well suited to multidimensional problems between nodes16,17, and Transformers have significant advantages in capturing long-range spatiotemporal dependencies18. Building on both, we designed a spatiotemporal distribution prediction model for the ocean environment; its overall structure is shown in Fig. 1. The model consists of a network that learns the spatial similarity features of ocean monitoring regions, a generator network that learns the spatiotemporal features of marine water quality events, and a decoder network that predicts distribution features. First, the Multi-Scale Graph Convolutional Network (MSGCN), a multi-node marine water quality prediction method, takes three types of input: the regional Point of Interest (POI) feature map, the regional ocean environment similarity map, and the regional distance map. This step extracts key spatial similarity features of different ocean regions. Next, the Spatiotemporal Transformer Model (STTM), a dynamic temporal module based on a graph attention network, integrates the spatial similarity features from the first step with the spatiotemporal features of marine environment events; its multi-layer encoder stores this information as high-dimensional spatiotemporal memory. Finally, this memory is passed to the decoder, which, combined with the preceding spatiotemporal sequence of marine water quality, outputs the predicted future spatiotemporal sequence.

Feature extraction with MSGCN

To handle complex and diverse spatial similarity information, the MSGCN network is organized as shown in Fig. 2. It is divided into three modules: (1) independent convolutional networks for regional POI functional similarity, regional marine water quality similarity, and inter-regional distance, which capture the spatial similarity features of marine water quality regions; (2) a joint convolution layer that fuses and expresses these spatial similarity features; and (3) a spatial channel attention network that adaptively learns the importance weights of the embeddings, better capturing the relationships between different features and producing a comprehensive fused representation of spatial similarity features and categories, which is output as the key spatial similarity features of marine water quality regions. By performing separate and joint convolutions on the graphs, the spatial convolution module captures the spatial similarity features of marine water quality regions more effectively.

In the spatiotemporal pollution prediction problem, the spatiotemporal sequence of pollution events at each time point can be regarded as a tensor \(X \in \mathbb{R}^{C\times H\times W}\), and the prediction process involves forecasting the changes in the pollution spatiotemporal sequence for the next \(M\) time points from the spatiotemporal sequence data of the previous \(N\) time points. The model takes a historical sequence extracted from the spatiotemporal pollution event data, which can be viewed as a tensor \(X \in \mathbb{R}^{B\times T\times C\times H\times W}\), where \(B\) is the batch size, \(T\) is the length of the historical pollution event time series, \(C\) is the number of channels, corresponding to the number of pollution event categories, and \(H\) and \(W\) are the height and width of the grid, respectively.
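To make the tensor layout concrete, the sketch below slices a gridded series of shape (T_total, C, H, W) into training pairs of \(N\) historical and \(M\) future frames; batching \(B\) such pairs gives inputs of shape \(B\times T\times C\times H\times W\) with \(T = N\). The helper name `make_windows`, the stride-1 sliding window, and the toy grid are assumptions for illustration.

```python
import numpy as np

def make_windows(series, n_hist, m_future):
    """Slide over a (T_total, C, H, W) series and return (inputs, targets).

    inputs:  (S, n_hist, C, H, W), the previous N frames of each sample
    targets: (S, m_future, C, H, W), the next M frames of each sample
    """
    t_total = series.shape[0]
    n_samples = t_total - n_hist - m_future + 1
    if n_samples <= 0:
        raise ValueError("series is too short for the requested window lengths")
    x = np.stack([series[s:s + n_hist] for s in range(n_samples)])
    y = np.stack([series[s + n_hist:s + n_hist + m_future] for s in range(n_samples)])
    return x, y

# Hypothetical grid: 10 time steps, 1 event category, 2 x 2 cells
series = np.arange(10 * 1 * 2 * 2, dtype=float).reshape(10, 1, 2, 2)
x, y = make_windows(series, n_hist=4, m_future=2)
batch = x[:3]  # a batch of B = 3 samples: shape (B, T, C, H, W) with T = N = 4
```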

(1) Individual convolution

To capture information in the POI feature space, a graph is constructed from the node feature matrix \(X_{poi}\), represented as \(Graph_{poi} = (A_{poi}, X_{poi})\), where \(A_{poi}\) is the adjacency matrix of the POI graph. After multi-layer GCN operations on \(Graph_{poi}\), the output representation \(Z_{poi}\) is the node embedding that captures the specific information in the POI feature space. The formula is as follows19:

where \(W_{poi}^{(k)}\) is the weight matrix of the \(k\)-th GCN layer, ReLU is the activation function, the initial embedding is \(Z_{poi}^{(0)} = X_{poi}\), \(A'_{poi}\) is the adjacency matrix of the graph, and \(D'_{poi}\) is its degree matrix, which enters the propagation as \({D'_{poi}}^{-1/2}\). The same computation is applied to the marine water quality pattern graph (feature space) and the distance graph (topological space) to obtain the regional marine water quality output \(Z_{event}\) and the regional distance output \(Z_{dist}\).
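A minimal NumPy sketch of the multi-layer propagation, assuming the widely used symmetric-normalized rule of Kipf and Welling, \(Z^{(k)} = \mathrm{ReLU}\big({D'}^{-1/2} A' {D'}^{-1/2} Z^{(k-1)} W^{(k)}\big)\) with \(A' = A + I\) (self-loops). Whether ref. 19 adds self-loops in exactly this way is an assumption, as are the toy graph and random weights.

```python
import numpy as np

def normalize_adjacency(A):
    """Return D'^{-1/2} A' D'^{-1/2} with A' = A + I (self-loops assumed)."""
    A_prime = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_prime.sum(axis=1))
    return d_inv_sqrt[:, None] * A_prime * d_inv_sqrt[None, :]

def gcn_forward(A, X, weights):
    """Multi-layer GCN: Z^(0) = X, Z^(k) = ReLU(A_hat @ Z^(k-1) @ W^(k))."""
    A_hat = normalize_adjacency(A)
    Z = X
    for W in weights:
        Z = np.maximum(A_hat @ Z @ W, 0.0)  # ReLU activation
    return Z

rng = np.random.default_rng(0)
A_poi = np.array([[0.0, 1.0, 0.0],
                  [1.0, 0.0, 1.0],
                  [0.0, 1.0, 0.0]])          # hypothetical 3-station POI graph
X_poi = rng.normal(size=(3, 4))              # 4 input features per station
weights = [rng.normal(size=(4, 8)), rng.normal(size=(8, 2))]
Z_poi = gcn_forward(A_poi, X_poi, weights)
```

Calling `gcn_forward` with the event and distance adjacency matrices in place of `A_poi` yields the corresponding \(Z_{event}\) and \(Z_{dist}\).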

(2) Joint convolution

The joint convolution uses a parameter-sharing strategy to extract features common to three spatial views: POI functional similarity (e.g., industrial zones, sewage outlets), historical event similarity (e.g., recurring pollution incidents), and geographical distance (e.g., coastal proximity). This design rests on the prior knowledge that regions that are spatially adjacent (high distance correlation), have similar POI distributions (high functional correlation), and show convergent historical event patterns (high event correlation) are likely to exhibit strongly correlated marine water quality variations. Applying the shared convolution to each of the three input graphs yields three output embeddings, \(Z_{dist}\), \(Z_{event}\), and \(Z_{poi}\). The formula is as follows:

where \(W_c^{(k)}\) is the weight matrix of the \(k\)-th layer of the shared GCN, \(\alpha\) denotes one of the three spaces, \(Z_\alpha^{(k-1)}\) is the node embedding of the \((k-1)\)-th layer in space \(\alpha\), and \(Z_\alpha^{(0)} = X_\alpha\). The shared embedding \(Z\) of the three spaces is:

The output of the joint convolution module is:
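A sketch of the parameter-sharing idea: the same list of weight matrices is applied to each of the three graphs, and the three resulting embeddings are fused by a simple mean. The mean fusion, the self-loop normalization, the view names, and the function names are assumptions for illustration, not the paper's exact formulation.

```python
import numpy as np

def sym_norm(A):
    """Symmetric normalisation D'^{-1/2} (A + I) D'^{-1/2}."""
    A_prime = A + np.eye(A.shape[0])
    d = 1.0 / np.sqrt(A_prime.sum(axis=1))
    return d[:, None] * A_prime * d[None, :]

def joint_gcn(graphs, shared_weights):
    """Apply one shared GCN to every view and fuse the results.

    graphs: dict mapping a view name ("poi", "event", "dist") to (A_alpha, X_alpha);
            every X_alpha must have the same number of columns.
    shared_weights: list of weight matrices W_c^(k), reused for all views.
    Returns the per-view embeddings and their mean (mean fusion is an assumption).
    """
    per_view = {}
    for name, (A, X) in graphs.items():
        A_hat = sym_norm(A)
        Z = X
        for W in shared_weights:
            Z = np.maximum(A_hat @ Z @ W, 0.0)
        per_view[name] = Z
    Z_common = sum(per_view.values()) / len(per_view)
    return per_view, Z_common

rng = np.random.default_rng(1)
X = rng.normal(size=(4, 3))
A_ring = np.array([[0, 1, 0, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 1, 0]], dtype=float)
A_full = np.ones((4, 4)) - np.eye(4)
W_shared = [rng.normal(size=(3, 5))]
per_view, Z_common = joint_gcn(
    {"poi": (A_ring, X), "event": (A_full, X), "dist": (A_ring, X)}, W_shared
)
```

Because the weights are shared, two views with the same graph and features produce identical embeddings; only differences in graph structure make the views diverge.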

(3) Spatial channel attention module

The spatial channel attention mechanism enables the model to adaptively focus on important spatial and channel features obtained from the spatial convolution module, thereby enhancing the model’s performance. Channel attention computes the global average pooling and global maximum pooling of the input features, concatenates the two, and calculates the weights through a fully connected layer. These weights are then applied to the channel dimension of the input features. This weighting can help the model focus on more meaningful feature channels for specific tasks. The formula is as follows20:

Here, \(M_c(F)\) is the channel attention map, \(\sigma\) is the sigmoid function, MLP is a multi-layer perceptron, and AvgPool(F) and MaxPool(F) denote average pooling and max pooling, respectively.
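A NumPy sketch of channel attention in the style of CBAM, where a shared two-layer MLP is applied to the average-pooled and max-pooled channel descriptors and the two outputs are summed before the sigmoid. The prose above describes concatenating the two descriptors before a fully connected layer, which is a different variant; the MLP shapes and the reduction ratio `r` here are assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F, W0, W1):
    """CBAM-style channel attention for a feature map F of shape (C, H, W).

    W0 (C // r, C) and W1 (C, C // r) form an MLP shared by both pooled descriptors.
    Returns the reweighted features and the channel weights M_c(F) in (0, 1).
    """
    def mlp(v):
        return W1 @ np.maximum(W0 @ v, 0.0)

    avg = F.mean(axis=(1, 2))                # global average pooling -> (C,)
    mx = F.max(axis=(1, 2))                  # global max pooling -> (C,)
    M_c = sigmoid(mlp(avg) + mlp(mx))
    return F * M_c[:, None, None], M_c       # broadcast one weight per channel

rng = np.random.default_rng(2)
C, r = 8, 4
F = rng.normal(size=(C, 5, 6))
W0 = rng.normal(size=(C // r, C))
W1 = rng.normal(size=(C, C // r))
F_refined, M_c = channel_attention(F, W0, W1)
```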

Spatial attention computes the average and maximum values of the input features along the channel dimension, concatenates the two, and calculates the weights through a convolution layer. These weights are then applied to the spatial dimension of the input features. This weighting helps the model focus on more meaningful spatial areas for specific tasks. The formula is as follows:

Here, \(M_s\) is the spatial attention map, \(\sigma\) is the sigmoid function, \(f\) is the convolution operation, and AvgPool(F') and MaxPool(F') denote average pooling and max pooling of the input features \(F'\) along the channel dimension, respectively.
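A sketch of spatial attention, assuming a single 7 × 7 convolution with zero padding over the stacked channel-average and channel-max maps, as in CBAM. The kernel size, the random kernel, and the helper `conv2d_same` are illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, kernel):
    """Single-output 2-D cross-correlation with zero 'same' padding.

    x: (C_in, H, W); kernel: (C_in, k, k) with odd k.
    """
    _, k, _ = kernel.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding on H and W
    H, W = x.shape[1:]
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(F_prime, kernel):
    """M_s = sigmoid(f([AvgPool(F'); MaxPool(F')])), pooling along the channel axis."""
    avg = F_prime.mean(axis=0)                # (H, W)
    mx = F_prime.max(axis=0)                  # (H, W)
    M_s = sigmoid(conv2d_same(np.stack([avg, mx]), kernel))
    return F_prime * M_s[None, :, :], M_s     # one weight per spatial location

rng = np.random.default_rng(3)
F_prime = rng.normal(size=(8, 6, 5))
kernel = rng.normal(scale=0.1, size=(2, 7, 7))  # 7 x 7 kernel (assumed, as in CBAM)
F_out, M_s = spatial_attention(F_prime, kernel)
```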

The spatial channel attention module dynamically weights the feature importance across distinct graph convolution branches (POI/event/topology), enabling context-aware fusion of heterogeneous data modalities. This module prioritizes pollution-sensitive regions during algal blooms while suppressing sensor noise in open-sea areas.

Spatio-temporal dependency modeling with STTM

Marine environment forecasting is essentially a spatiotemporal sequence prediction problem that requires consideration of both the temporal and spatial dimensions. The multi-scale MSGCN extracts spatial information by aggregating K-order neighboring nodes, but the features of the nodes themselves also need to be emphasized. Therefore, to further improve the accuracy of marine environment forecasting, the STTM model was designed, as shown in Fig. 3; it uses a Transformer to capture the long-term dynamic information of each individual node. The model consists of three components: an Encoder, a Translator, and a Decoder. The Encoder extracts the spatial features of historical frames, the Translator learns spatiotemporal evolution and propagation, and the Decoder integrates these to predict the spatial features of future frames. By separating spatial feature fusion from spatiotemporal feature learning, this structure allows a CNN-based model to also propagate spatiotemporal features effectively.

Encoder

The encoder adopts a stacking strategy that combines the CGS module with the D-Block module. It consists of a composite structure of Ns Basic Conv Blocks (BCB) and Auxiliary Optimal Blocks (AOB). When processing its input, the encoder reshapes the data from (B, T, C, H, W) to (B×T, C, H, W), merging the batch dimension B and the time dimension T so that the model focuses on extracting spatial features. Within each group of the BCB-AOB-BCB structure, BCB and AOB use convolutions with a stride of 1, which preserve spatial resolution and prevent the loss of shallow details (e.g., sudden pollution sources) during early downsampling, while the AOB at the end of each group uses a convolution with a stride of 2. This gradual downsampling (rather than a single downsampling step) retains high-frequency features (e.g., rapid water quality fluctuations near discharge outlets) while reducing computation. In traditional models, excessive reliance on stacked BCB modules often causes significant loss of spatial detail. Interspersing AOB modules within the BCB stack enhances the extraction of deep features while effectively mitigating the loss of shallow detail. This approach improves the model’s control of spatial features and the accuracy of forecasts for high-value areas.
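The batch-time merge and the gradual downsampling can be traced with simple shape arithmetic. The sketch assumes 3 × 3 kernels with padding 1 and a constant channel count, neither of which is specified above; it only shows how each stride-2 block halves the spatial size while stride-1 blocks keep it.

```python
import numpy as np

def conv_out_size(size, kernel=3, stride=1, padding=1):
    """Output length of a convolution along one spatial axis (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

def encoder_shapes(B, T, C, H, W, n_groups):
    """Trace frame shapes through n_groups of stride-1 blocks, each group ending in a stride-2 block."""
    shapes = [(B * T, C, H, W)]  # batch and time already merged
    for _ in range(n_groups):
        H, W = conv_out_size(H), conv_out_size(W)                      # stride 1: size kept
        H, W = conv_out_size(H, stride=2), conv_out_size(W, stride=2)  # stride 2: roughly halved
        shapes.append((B * T, C, H, W))
    return shapes

# Merging B and T: frame t of sample b lands at row b * T + t.
x = np.random.default_rng(0).normal(size=(2, 10, 1, 64, 64))  # (B, T, C, H, W)
frames = x.reshape(2 * 10, 1, 64, 64)                          # (B*T, C, H, W)
print(encoder_shapes(2, 10, 1, 64, 64, n_groups=2))
```

For odd sizes the stride-2 step rounds up (25 becomes 13), which the decoder must account for when restoring the original resolution.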

Translator

The Translator employs Nt convolutional Transformer modules (Conv Transformer Blocks, CTB) to learn and process spatiotemporal features, effectively alleviating the loss of long-term spatiotemporal information in CNN models while making full use of both global and local information. Input features are reshaped from (B, T, C, H, W) to (B, T×C, H, W), merging the time dimension T and the channel dimension C so that the dynamic changes in spatiotemporal features are processed jointly. The CTB incorporates a bidirectional Transformer mechanism (BDTB, Bi-Direction Transformer Block). It begins with a convolution layer of kernel size 11 that expands the number of feature channels and is intended to model long-term dependencies explicitly (e.g., the semi-monthly tidal cycle). Its receptive field covers 11 consecutive timesteps, which corresponds to 11 days (264 h) when each timestep is one day; this contrasts with the 7 × 1 kernel used by Xu et al.11 for weekly-scale SST prediction. Next, a residual connection structure21 is used for feature learning. A 3 × 3 convolution layer first captures local spatiotemporal features; the 3 × 3 kernel is a classic choice in CNNs that balances receptive field size against computational cost. For marine environment prediction, 3 × 3 kernels effectively capture local spatial features (e.g., pollutant dispersion between adjacent monitoring stations) while avoiding the redundant computation of larger kernels (e.g., 5 × 5). The 3 × 3 convolution is followed by a 1 × 1 pointwise convolution that linearly combines the feature channels and maps them to a higher-dimensional space, enhancing the expressive capability of the features. BDTB then learns global spatiotemporal information, allowing the model to capture and integrate long-range dependencies across the entire input. 
Finally, the input features and the output of BDTB are merged and fused through a convolution layer of size 3 × 3, which not only utilizes the global information provided by BDTB but also retains the details of local features, enabling the learning of the interrelationship between local monitoring data and global monitoring data.
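The Translator's reshape and the receptive-field arithmetic behind the kernel-size discussion can be checked with a short sketch. The function names and toy tensor are hypothetical, and the receptive-field formula assumes stride-1, undilated convolutions of equal size.

```python
import numpy as np

def to_translator_layout(x):
    """(B, T, C, H, W) -> (B, T*C, H, W): time steps and channels share one axis."""
    B, T, C, H, W = x.shape
    return x.reshape(B, T * C, H, W)

def from_translator_layout(y, T):
    """Inverse of to_translator_layout, given the number of time steps T."""
    B, TC, H, W = y.shape
    return y.reshape(B, T, TC // T, H, W)

def stacked_receptive_field(kernel, n_layers):
    """Receptive field along one axis of n stride-1, undilated convolutions of equal size."""
    return 1 + n_layers * (kernel - 1)

x = np.arange(2 * 4 * 3 * 5 * 5, dtype=float).reshape(2, 4, 3, 5, 5)
y = to_translator_layout(x)
print(y.shape, stacked_receptive_field(3, 2), stacked_receptive_field(11, 1))
```

Two stacked 3 × 3 layers already cover a 5 × 5 neighborhood, which is one reason small kernels are preferred over a single 5 × 5 kernel.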

Conventional bidirectional Transformers are used mainly in natural language processing (NLP); a representative example is the BERT language model22, which relies on masking to achieve a bidirectional understanding of context. The bidirectional Transformer designed in this paper (BDTB) instead implements bidirectionality through a bidirectional multi-head self-attention (BMA) mechanism, which processes dynamic spatiotemporal sequences in both forward and backward directions. This strengthens spatiotemporal information extraction and alleviates the loss of spatiotemporal information. The bidirectional attention also better captures changes in high-value areas, making it well suited to spatiotemporal sequence problems, and is crucial for capturing rapidly changing features in complex dynamic scenes. By adding reverse counterparts (\(\widehat{Q}\) and \(\widehat{K}\)) to the existing query (Q) and key (K) vectors, BMA enables the model to extract key features from both directions of the data simultaneously: the reverse \(\widehat{Q}\) and \(\widehat{K}\) identify important information in the reversed features that may otherwise be overlooked, thereby strengthening the feature filtering performed with the sequential Q and K. BDTB is built by concatenating bidirectional multi-head self-attention units and multi-layer perceptron (MLP) blocks; together these modules synthesize and refine spatiotemporal features and implement high-order nonlinear feature transformations. Layer normalization (LN) is applied before each attention and MLP module to normalize its input, which improves training stability and accelerates convergence. 
Because the BDTB design lacks an intrinsic bias toward spatial positions, its ability to perceive spatial features is limited. Following the spatial data rearrangement method of MobileViT23, the BDTB’s perception of spatial features has been strengthened.

Bidirectional multi-head self-attention relies on scaled dot-product attention, operating on sequential queries Q, sequential keys K, reverse queries \(\:\widehat{Q}\), reverse keys \(\:\widehat{K}\), and values V.

Here, \(d_k\) is the key dimension, and \(W_i\) and \(W^O\) are weight matrices. Note that to compute the reverse \(\widehat{Q}\) and \(\widehat{K}\), the features must first be reversed along the channel dimension. After \(\widehat{Q}\widehat{K}^{T}\) is computed, the result is reversed again along the channel dimension to restore the sequential order, as shown in Fig. 4, where the symbol ↑↓ denotes the channel reversal operation.
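The sketch below is one possible reading of BMA, not the authors' implementation. It assumes single-head attention computed across channel tokens (rows of \(X\), for example the T×C channels of the Translator layout), channel-mixing projections \(W_q, W_k, W_v\) playing the role of 1 × 1 convolutions, and additive fusion of the sequential and reversed score matrices before the softmax. The reversal has to precede a channel-mixing step: if it were applied after a token-wise projection and undone after the product, \(\widehat{Q}\widehat{K}^{T}\) would equal \(QK^{T}\) exactly.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def bidirectional_attention(X, Wq, Wk, Wv):
    """Single-head sketch of bidirectional self-attention over channel tokens.

    X: (C, N), i.e. C channel tokens, each a flattened map of N = H*W values.
    Wq, Wk, Wv: (C, C) channel-mixing projections (1 x 1 convolution analogue).
    Returns the attended features (C, N) and the attention weights (C, C).
    """
    d_k = X.shape[1]
    Q, K, V = Wq @ X, Wk @ X, Wv @ X
    X_rev = X[::-1]                            # reverse along the channel axis
    Q_rev, K_rev = Wq @ X_rev, Wk @ X_rev      # reverse queries and keys
    S_seq = Q @ K.T / np.sqrt(d_k)
    S_rev = (Q_rev @ K_rev.T)[::-1, ::-1] / np.sqrt(d_k)  # reverse again to restore order
    A = softmax(S_seq + S_rev, axis=-1)        # additive fusion (assumption)
    return A @ V, A

rng = np.random.default_rng(4)
C, N = 6, 20
X = rng.normal(size=(C, N))
Wq, Wk, Wv = (rng.normal(size=(C, C)) / np.sqrt(C) for _ in range(3))
out, A = bidirectional_attention(X, Wq, Wk, Wv)
```

With identity projections the reversed scores coincide with the sequential ones, so in this reading the reverse branch contributes new information only through the channel-mixing projections.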

Decoder

The decoder stacks Ns layers of a composite TCB (Transposed Convolution Block) + AOB + BCB structure to progressively construct predictions for future frames. The transposed convolution performs upsampling with a stride of 2, restoring the deep feature maps to a resolution that matches the original input. The AOB and BCB layers refine the features with stride-1 convolutions, ensuring the quality of output details. Through the skip connections of the Decoder module, shallow historical input features are used to recover fine details in the forecast results. The overall decoder architecture mirrors that of the encoder.
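Transposed-convolution shape arithmetic for the stride-2 upsampling, using the output-size formula documented for PyTorch's `ConvTranspose2d`. The default kernel size 4 and padding 1 are assumptions; with them, each decoder group exactly doubles the spatial size and mirrors the encoder's stride-2 downsampling.

```python
def deconv_out_size(size, kernel=4, stride=2, padding=1, output_padding=0, dilation=1):
    """Output length of a transposed convolution (PyTorch ConvTranspose2d formula)."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1

def decoder_sizes(H, W, n_groups):
    """Spatial size after each decoder group; stride-1 AOB/BCB blocks keep the size."""
    sizes = [(H, W)]
    for _ in range(n_groups):
        H, W = deconv_out_size(H), deconv_out_size(W)
        sizes.append((H, W))
    return sizes

print(decoder_sizes(16, 16, n_groups=2))
```

Without `output_padding`, a 3 × 3 kernel with padding 1 gives 2H − 1 rather than 2H, so encoder and decoder feature maps would no longer align for the skip connections.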

Dynamic spatiotemporal dependencies are captured by the Bi-Direction Transformer Block (BDTB), which replaces traditional RNN architectures. BDTB uses bidirectional attention to model both forward temporal processes (pollutant dispersion trajectories) and reverse ones (contamination source tracing), effectively mitigating long-term dependency loss and gradient decay.

Model evaluation and results

The research object is the water quality of a nearshore estuary in Fujian, China, and the ecological environment data of this estuary serve as the research sample. Based on the GPS coordinates of each measurement point, the monitoring area is divided into 10 regions, and the data are split into training and test sets at a ratio of 80:20. The predicted Chl-a content, which directly reflects the occurrence of algal blooms, is used as the representative indicator. Our dataset combines multiple sources of observations collected between 2018 and 2023 and consists of three parts. First, buoy observations account for 50% of the data. These are hourly data from 4,023 buoys in Fujian’s coastal monitoring system, covering parameters such as dissolved oxygen, chlorophyll-a, and sea surface temperature (SST). Second, satellite remote sensing accounts for 20%: MODIS-Aqua L3 SST at 1 km daily resolution (available for free download at https://ladsweb.modaps.eosdis.nasa.gov/archive/) and VIIRS chlorophyll-a at 750 m daily resolution (free to download from https://lpdaac.usgs.gov/). These data were resampled to 0.1° grids by nearest-neighbor interpolation, and pixels with over 30% cloud contamination were removed. Finally, reanalysis data account for 30%. These are outputs from NMDIS (including salinity, currents, and wind fields, freely downloadable at https://mds.nmdis.org.cn), which were downscaled to the buoy locations by bilinear interpolation.
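Two preprocessing steps above can be sketched briefly: bilinear interpolation of a regular reanalysis grid to a buoy position, and an 80:20 split. The split shown is chronological, which is an assumption (it keeps test data after training data and avoids temporal leakage); how the split was drawn is not stated. Function names and grid values are illustrative.

```python
import numpy as np

def bilinear_at(field, lats, lons, lat, lon):
    """Bilinearly interpolate a regular (lat, lon) grid to a single buoy position.

    field: (len(lats), len(lons)); lats and lons must be strictly increasing.
    Points outside the grid are extrapolated from the nearest edge cell.
    """
    i = int(np.clip(np.searchsorted(lats, lat) - 1, 0, len(lats) - 2))
    j = int(np.clip(np.searchsorted(lons, lon) - 1, 0, len(lons) - 2))
    ty = (lat - lats[i]) / (lats[i + 1] - lats[i])
    tx = (lon - lons[j]) / (lons[j + 1] - lons[j])
    lower_row = (1 - tx) * field[i, j] + tx * field[i, j + 1]
    upper_row = (1 - tx) * field[i + 1, j] + tx * field[i + 1, j + 1]
    return (1 - ty) * lower_row + ty * upper_row

def chronological_split(n_steps, train_frac=0.8):
    """Index ranges for a time-ordered train/test split (80:20 by default)."""
    cut = int(round(n_steps * train_frac))
    return range(0, cut), range(cut, n_steps)

lats = np.array([24.0, 24.1, 24.2])
lons = np.array([118.0, 118.1, 118.2])
field = 2.0 * lats[:, None] + 3.0 * lons[None, :]   # hypothetical smooth field
value = bilinear_at(field, lats, lons, 24.05, 118.15)
train_idx, test_idx = chronological_split(100)
```

Bilinear interpolation reproduces any field that is linear in latitude and longitude exactly, which makes such a field a convenient sanity check.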

The spatiotemporal alignment of the data was carried out in three steps. First, we used UTC timestamps to synchronize the time dimension of the data, ensuring that all the data points were in the same time frame. Second, we registered the spatial information of the data to the WGS84 coordinate system, making sure that the locations were accurately represented and consistent across different data sources. Finally, we performed feature fusion by means of attention-weighted concatenation (see Sect. 3.2).
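A sketch of the first alignment step: converting logger timestamps to UTC and flooring them to the hour so that hourly records from different sources share keys. It assumes, hypothetically, that buoy clocks record China Standard Time (UTC+8) in the format shown.

```python
from datetime import datetime, timedelta, timezone

CST = timezone(timedelta(hours=8))   # assumed logger clock: China Standard Time (UTC+8)

def to_utc_hour(local_str, fmt="%Y-%m-%d %H:%M:%S", tz=CST):
    """Parse a local timestamp, convert it to UTC and floor it to the hour."""
    local = datetime.strptime(local_str, fmt).replace(tzinfo=tz)
    utc = local.astimezone(timezone.utc)
    return utc.replace(minute=0, second=0, microsecond=0)

stamp = to_utc_hour("2020-01-01 07:45:10")
print(stamp.isoformat())
```

Note that the conversion can move a record to the previous calendar day, so date-based joins should be done after conversion, not before.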

In practice, missing data follow several patterns, which can be roughly grouped into four types. (1) Missing completely at random (MCAR): missing values are independent of one another and appear as isolated, randomly distributed points. (2) Time block missing: the readings of the same buoy are missing over consecutive time steps. (3) Spatial block missing: most buoys lack a given monitoring parameter at the same time. (4) Parameter block missing: all monitoring parameters of a given buoy are missing at a specific time. Temporal interpolation algorithms (e.g., linear and spline interpolation) struggle with time block gaps, and spatial interpolation algorithms (e.g., IDW and Kriging) are not applicable to spatial block gaps. In our experimental comparisons, the Spatio-Temporal Heterogeneous Covariance method (ST-HC)24 achieved lower mean absolute error (MAE) and mean relative error (MRE) than the other methods for all four patterns, so we adopted it. ST-HC proceeds as follows. First, the dataset with missing values is divided into homogeneous spatial regions. Next, the most relevant spatial and temporal sampling sequences are selected for imputation, and spatial and temporal contribution weights are calculated to obtain the best linear unbiased estimates in the spatial and temporal dimensions. Finally, correlation coefficients are used to determine the spatial and temporal weights, and the two estimates are combined into an overall estimate of each missing value.
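To make the four missing-data patterns concrete, the sketch below generates a boolean mask of each type over a (time, buoy, parameter) array and shows a simplified version of the final ST-HC combination step. The mask generator, its `rate` and `coverage` values, and the rule that weights are proportional to the non-negative correlation coefficients are illustrative assumptions; the full ST-HC method24 additionally computes best linear unbiased estimates within homogeneous regions, which is not reproduced here.

```python
import numpy as np


def pattern_mask(shape, pattern, rng, rate=0.1, coverage=0.8):
    """Boolean mask (True = missing) over a (time, buoy, parameter) array.

    Illustrates the four missing-data patterns; rate and coverage are
    illustrative values, not taken from the study.
    """
    T, B, P = shape
    mask = np.zeros(shape, dtype=bool)
    if pattern == "mcar":  # isolated, independent gaps
        mask = rng.random(shape) < rate
    elif pattern == "time_block":  # one buoy's sensor missing over consecutive steps
        length = max(1, T // 4)
        start = rng.integers(0, T - length + 1)
        mask[start:start + length, rng.integers(B), rng.integers(P)] = True
    elif pattern == "spatial_block":  # most buoys lack one parameter at the same step
        mask[rng.integers(T), rng.random(B) < coverage, rng.integers(P)] = True
    elif pattern == "parameter_block":  # all parameters of one buoy missing at one step
        mask[rng.integers(T), rng.integers(B), :] = True
    else:
        raise ValueError(f"unknown pattern: {pattern}")
    return mask


def fuse_estimates(x_space, x_time, r_space, r_time):
    """Combine spatial and temporal estimates with correlation-based weights.

    Simplified stand-in for the final ST-HC step: each dimension's weight is
    proportional to its (non-negative) correlation coefficient.
    """
    r_s, r_t = max(r_space, 0.0), max(r_time, 0.0)
    if r_s + r_t == 0.0:
        return 0.5 * (x_space + x_time)
    w_s = r_s / (r_s + r_t)
    return w_s * x_space + (1.0 - w_s) * x_time
```

The masks also explain the failure modes noted above: a time block leaves no nearby samples along the time axis for linear or spline interpolation, and a spatial block leaves no neighboring buoys for IDW or Kriging.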

Outliers can substantially degrade the performance of prediction models. To identify them, we employed the Spatio-Temporal Outlier Detection (STOD) method25, which accounts for the spatio-temporal neighborhood as well as the spatio-temporal autocorrelation and heterogeneity among entities, thereby improving detection accuracy.
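The idea of judging each value against its spatio-temporal neighborhood can be illustrated with a much simpler rule than STOD. The sketch below is a swapped-in robust heuristic, not STOD itself: a value is flagged when it deviates from the median of its spatial neighbors (same time step) and temporal neighbors (same node, adjacent steps) by more than `k` times their median absolute deviation. The neighbor dictionary, `k`, and `window` are hypothetical.

```python
import numpy as np


def st_outlier_flags(x, neighbors, k=3.0, window=1):
    """Flag values that deviate strongly from their spatio-temporal neighbourhood.

    x: array of shape (time, node); neighbors: dict node -> list of neighbouring nodes.
    A point is flagged when |x - median(ref)| > k * MAD(ref), where ref holds the
    spatial neighbours at the same time step and the same node at adjacent steps.
    """
    T, N = x.shape
    flags = np.zeros(x.shape, dtype=bool)
    for t in range(T):
        for n in range(N):
            ref = [x[t, m] for m in neighbors.get(n, [])]
            ref += [x[s, n] for s in range(max(0, t - window), min(T, t + window + 1)) if s != t]
            ref = np.asarray(ref, dtype=float)
            ref = ref[~np.isnan(ref)]
            if ref.size < 3:  # too little context to judge this point
                continue
            med = np.median(ref)
            mad = np.median(np.abs(ref - med)) + 1e-9  # avoid division-like zero thresholds
            flags[t, n] = abs(x[t, n] - med) > k * mad
    return flags
```

Medians and MAD are used instead of means and standard deviations so that a single spike does not inflate the reference statistics of its own neighbors.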

The proposed model was implemented in Python with the PyTorch 1.8.0 deep learning framework. Training was performed on a PC with 2× Intel Xeon Gold 6138 CPUs, 128 GB of RAM, and 4× NVIDIA GeForce RTX 4090 GPUs. In the STTM, the Encoder and Decoder modules use Ns = 2 with 2 GroupNorm groups, and the Translator module uses Nt = 3 with 8 GroupNorm groups. These depths were determined through ablation experiments: increasing the depth (e.g., Ns = 3 or Nt = 4) led to overfitting on the validation set and doubled the training time. The GroupNorm group numbers reflect feature complexity: shallow spatial features benefit from coarser grouping, while deeper spatiotemporal interactions require finer granularity. The bidirectional self-attention in the BDTB module uses 4 heads. Ablation studies showed that 4 heads gave the best balance between computational efficiency and prediction accuracy for coastal water quality forecasting: fewer heads (e.g., 2) failed to capture tidal (12.4 h) and diurnal (24 h) patterns simultaneously, while more heads (e.g., 8) introduced noise from the sparse buoy data. This is consistent with findings on marine Transformer adaptations by Xu et al.11, where 4-head configurations outperformed alternatives in SST prediction. The baseline models use the hyperparameters of their official open-source implementations. All models share the same basic training settings: the Adam optimizer with a learning rate of 10⁻⁴, 100,000 training iterations, and a batch size of 2.
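The reported settings can be collected in a small configuration object. The sketch below records the values stated in the text and adds two structural checks: PyTorch's `GroupNorm` requires the channel count to be divisible by the number of groups, and multi-head attention requires the embedding size to be divisible by the number of heads. The class name and the `hidden_channels` value are hypothetical, since the channel width is not reported.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MSSTNTrainingConfig:
    """Hyperparameters reported in the text; hidden_channels is an assumption."""
    ns: int = 2                          # Encoder/Decoder depth (Ns)
    encdec_groupnorm_groups: int = 2
    nt: int = 3                          # Translator depth (Nt)
    translator_groupnorm_groups: int = 8
    bdtb_heads: int = 4                  # bidirectional self-attention heads in BDTB
    learning_rate: float = 1e-4          # Adam
    iterations: int = 100_000
    batch_size: int = 2
    hidden_channels: int = 64            # hypothetical: not reported in the text

    def validate(self) -> None:
        # GroupNorm needs channels divisible by the group count.
        for groups in (self.encdec_groupnorm_groups, self.translator_groupnorm_groups):
            if self.hidden_channels % groups:
                raise ValueError(
                    f"{self.hidden_channels} channels not divisible by {groups} groups")
        # Multi-head attention needs the embedding size divisible by the head count.
        if self.hidden_channels % self.bdtb_heads:
            raise ValueError("embedding size must be divisible by the number of heads")
```

With 64 channels, for instance, each of the 4 attention heads would operate on a 16-dimensional subspace; a width such as 10 would be rejected because it cannot be split into 8 GroupNorm groups.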

The choice of 100,000 training iterations was based on extensive experiments in which training and validation losses were closely monitored. The training loss decreased steadily and began to level off at around 80,000 iterations; the validation loss followed a similar trend, declining until about 90,000 iterations and then fluctuating slightly. Neither a significant rise in validation loss (which would indicate overfitting) nor a stagnating training loss (which would indicate underfitting) was observed. By 100,000 iterations both losses were stable, and further iterations did not improve performance.
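The convergence judgment described above was made by monitoring the loss curves. A hypothetical automatic check with the same intent is sketched below: training is considered to have plateaued when the best validation loss in the most recent window improves on the best earlier value by less than a relative tolerance. The window size, tolerance, and evaluation interval are assumptions, not settings from the study.

```python
def has_plateaued(val_losses, window=5, rel_tol=1e-3):
    """Return True when the best validation loss in the latest `window` evaluations
    improves on the best earlier loss by less than `rel_tol` (relative).

    val_losses: validation losses in evaluation order (e.g. one value every
    few thousand iterations; the interval is a hypothetical choice).
    """
    if len(val_losses) < 2 * window:
        return False  # not enough history to judge
    best_before = min(val_losses[:-window])
    best_recent = min(val_losses[-window:])
    return (best_before - best_recent) < rel_tol * abs(best_before)
```

Comparing window minima rather than individual values makes the check tolerant of the slight fluctuations in validation loss that the text reports after about 90,000 iterations.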

Evaluation metrics

Regression evaluation metrics fall into three categories: the MAE series (derived from the mean absolute error), the MSE series (derived from the mean squared error), and the R² series (goodness of fit). In our experiments, we selected a representative metric from each of the first two categories; MAE itself is also reported in the comparative analysis.

- Mean Absolute Percentage Error (MAPE), from the MAE series, measures the average error as a percentage of the observed values. It provides an intuitive sense of how far predictions deviate from reality in relative terms (e.g., “on average, predictions are 8% off”).

- Root Mean Squared Logarithmic Error (RMSLE), from the MSE series, is particularly suitable for spatiotemporal data with long-tailed distributions. By applying a logarithmic transformation, RMSLE reduces the influence of very large errors and emphasizes accuracy on smaller values, which are often more critical in environmental prediction.

Together, these metrics provide a balanced assessment: MAPE captures relative error in a way that is easy to interpret, while RMSLE highlights the model’s ability to handle skewed data distributions without being overly penalized by extreme outliers.
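For reference, the conventional definitions of these metrics, together with MAE, which is also reported, are given below. We assume, without confirmation from the text, that the paper uses these standard forms, in particular the log(1 + x) form of RMSLE.

```latex
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right|,
\qquad
\mathrm{MAPE} = \frac{100\%}{N}\sum_{i=1}^{N}\left|\frac{y_i - \hat{y}_i}{y_i}\right|,
\qquad
\mathrm{RMSLE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\ln\left(1+\hat{y}_i\right) - \ln\left(1+y_i\right)\right)^{2}}
```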

In these formulas, y_i and ŷ_i denote the observed and predicted values at time step i, respectively, and N is the length of the prediction sequence.
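A direct NumPy implementation of the standard definitions is sketched below. It assumes the conventional forms (MAPE in percent, RMSLE with `log1p`); the function names are illustrative.

```python
import numpy as np


def mae(y, y_hat):
    """Mean absolute error, in the units of the data (e.g. mg/L)."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.mean(np.abs(y - y_hat)))


def mape(y, y_hat):
    """Mean absolute percentage error in percent; undefined where y == 0."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    if np.any(y == 0):
        raise ValueError("MAPE is undefined for zero observations")
    return float(100.0 * np.mean(np.abs((y - y_hat) / y)))


def rmsle(y, y_hat):
    """Root mean squared logarithmic error using log(1 + x); requires values > -1."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.sqrt(np.mean((np.log1p(y_hat) - np.log1p(y)) ** 2)))
```

Because MAPE is expressed in percent while MAE carries the units of the data, a reduction in MAPE is measured in percentage points and a reduction in MAE in mg/L. RMSLE depends on ratios: predicting 99 for an observed 9 gives an RMSLE of ln 10, the same as predicting 999 for an observed 99.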

Comparative analysis

Seven baseline models were trained and tested on the same dataset; they are listed as Mod1–Mod7 in Table 1.

To systematically evaluate the contributions of the proposed MSSTN to marine spatiotemporal prediction, the seven baselines were grouped into five classes: traditional statistical methods (Mod1: Neural Network + ARIMA), pure temporal models (Mod2: LSTM), spatiotemporal hybrid models (Mod3: CNN-GRU), graph-based models (Mod4: TSGN, Mod5: GCN-LSTM, Mod6: ST-GCN), and attention-enhanced models (Mod7: STCANET). Together they cover four key marine prediction paradigms (statistical, temporal, spatiotemporal hybrid, and graph/attention-based), which allows us to isolate MSSTN’s contributions: multi-scale graph fusion versus single-graph designs (Mod5/Mod6), dynamic bidirectional attention versus unidirectional attention (Mod7), and cross-modal regularization versus dependence on a single sensor type (Mod3).

This section compares the proposed MSSTN with the seven baselines Mod1–Mod7 listed in Table 1, using one day and one week in June 2023 as test cases. The evaluation results of each method are shown in Fig. 5.

As shown in Fig. 5, in the 1-day lead prediction the IMAPE (1.678%), IRMSLE (0.0206), and IMAE (25.241 mg/L) of MSSTN are lower by 0.042%, 0.0068, and 1.035 mg/L, respectively, than those of Mod6 (ST-GCN; IMAPE 1.720%, IRMSLE 0.0274, IMAE 26.276 mg/L), and the difference is statistically significant according to the Diebold-Mariano test (p < 0.05; see Sect. 4.5). This improvement likely reflects the advantage of MSSTN’s dynamic graph fusion over ST-GCN’s static spatial granularity. In the 1-week lead prediction, the IMAPE, IRMSLE, and IMAE of MSSTN are lower than those of Mod6 by 0.029%, 0.0049, and 1.226 mg/L, respectively. Compared with Mod7, the three indicators decrease by 0.126%, 0.0032, and 2.011 mg/L in 1-day lead predictions and by 0.037%, 0.0035, and 1.395 mg/L in 1-week lead predictions. MSSTN also outperforms the remaining baselines. These results reflect the advantage of combining a multi-scale spatiotemporal graph convolutional network with a Transformer for multi-node Chl-a prediction.
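The absolute reductions quoted for the 1-day lead comparison with Mod6 (ST-GCN) can be restated as relative reductions with a few lines of arithmetic. The values below are taken from the text; the helper name is illustrative.

```python
# 1-day lead metrics reported above for Mod6 (ST-GCN) and MSSTN
baseline = {"IMAPE (%)": 1.720, "IRMSLE": 0.0274, "IMAE (mg/L)": 26.276}
msstn = {"IMAPE (%)": 1.678, "IRMSLE": 0.0206, "IMAE (mg/L)": 25.241}


def reductions(base, new):
    """Absolute and relative (percent of the baseline value) reduction per metric."""
    return {k: (base[k] - new[k], 100.0 * (base[k] - new[k]) / base[k]) for k in base}


for name, (absolute, relative) in reductions(baseline, msstn).items():
    print(f"{name}: -{absolute:.4g} ({relative:.1f}% of the baseline value)")
```

With these values, the relative reductions come to about 2.4% (IMAPE), 24.8% (IRMSLE), and 3.9% (IMAE), which shows that the 1.035 mg/L figure is an absolute IMAE difference rather than a percentage.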

Analysis of model prediction performance at different nodes

This section examines the model’s prediction performance at nodes deployed in different locations. Ten monitoring nodes in different regions were selected for 1-day and 1-week lead predictions, and Fig. 6 shows the performance of MSSTN at each of them. The nodes were chosen for their diverse environmental conditions and spatial relationships, and they represent different levels of pollution exposure and environmental dynamics.

As shown in Fig. 6(a) and (b), MSSTN achieves the lowest IMAPE at 7 of the 10 nodes in both 1-day and 1-week lead predictions. According to Fig. 6(c) and (d), it achieves the lowest IRMSLE at 8 nodes in 1-day lead predictions and at 7 nodes in 1-week lead predictions. Table 2 reports only the mean values over the 10 nodes, omitting node-level values, to focus on the overall comparison of models; MSSTN attains the minimum for all four indicators (IMAPE and IRMSLE for 1-day and 1-week predictions). Node-level IMAPE values for each model (e.g., Node 5 and Node 8, which represent typical estuarine and aquaculture zones) are given in Supplementary Table S1. To further visualize the consistency of MSSTN’s performance at key nodes, Supplementary Figure S1 presents the Chl-a prediction curves of all models at Node 5 (near a river estuary with high nutrient input) and Node 8 (in a coastal aquaculture zone with seasonal fluctuations). These curves show that MSSTN captures abrupt algal bloom events (Node 5) and cyclic Chl-a variations (Node 8) more accurately than the baselines, complementing the statistical results in Table 2 and Fig. 6.
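The two node-level summaries used above (the number of nodes at which a model attains the lowest error, and the mean error across nodes reported in Table 2) can be computed as sketched below. The input layout and the example numbers are hypothetical; ties are resolved in favor of the first model listed.

```python
import numpy as np


def summarize_nodes(errors):
    """errors: dict model -> per-node metric values (lower is better).

    Returns, for each model, (number of nodes where it attains the lowest error,
    mean error across nodes).
    """
    models = list(errors)
    table = np.array([errors[m] for m in models], dtype=float)  # shape (model, node)
    winners = np.argmin(table, axis=0)  # index of the best model at each node
    return {m: (int(np.sum(winners == i)), float(table[i].mean()))
            for i, m in enumerate(models)}
```

The two summaries can disagree: a model may win at most nodes yet have a higher mean if it fails badly at one node, which is why both the per-node panels in Fig. 6 and the means in Table 2 are informative.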

Among the baselines, the graph- and attention-based models Mod4–Mod7 outperform Mod1–Mod3 (Figs. 5 and 6), so we compare them more closely with MSSTN. Figures 5 and 6 show that Mod4 (TSGN) performs worst among these models, likely because of its static graph construction strategy: unlike MSSTN’s dynamic graph fusion, Mod4 uses fixed adjacency matrices to model spatial relationships and therefore cannot adapt to tide- and current-driven pollution dispersion. In addition, Mod4’s single-scale graph convolution struggles to capture multi-level spatial dependencies (e.g., nearshore-offshore interactions), whereas MSSTN’s MSGCN integrates POI graphs, event graphs, and topological graphs via joint convolution, enabling adaptive feature fusion. The ablation experiment supports this interpretation: removing MSSTN’s joint convolution module (MO4) increases the 1-day lead IMAPE by 0.16% (Table 3), highlighting the importance of dynamic multi-graph integration for oceanic spatiotemporal modeling.
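The contrast between a single fixed adjacency matrix and the fusion of several graphs can be illustrated with one graph-convolution step over a weighted mixture of normalized adjacency matrices. This is a generic sketch under stated assumptions, not the MSGCN joint convolution, whose exact formulation may differ: the graphs (e.g., POI, event, topological) are mixed with softmax weights computed from per-graph scores, and each graph uses the standard symmetric GCN normalization. With fixed scores the mixture is static; making the scores depend on the input would make the fusion dynamic.

```python
import numpy as np


def normalize_adjacency(a):
    """Symmetric normalisation D^-1/2 (A + I) D^-1/2 used by standard GCN layers."""
    a_hat = a + np.eye(a.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def multi_graph_conv(x, adjacencies, graph_scores, w):
    """One graph-convolution step over a softmax-weighted mixture of graphs.

    x: (nodes, in_features) node features.
    adjacencies: list of (nodes, nodes) matrices, e.g. POI, event and topological graphs.
    graph_scores: one score per graph (learnable in a real model; hypothetical here).
    w: (in_features, out_features) weight matrix.
    """
    scores = np.asarray(graph_scores, dtype=float)
    alpha = np.exp(scores - scores.max())  # softmax over graphs
    alpha /= alpha.sum()
    mixed = sum(a_k * normalize_adjacency(adj) for a_k, adj in zip(alpha, adjacencies))
    return np.maximum(mixed @ x @ w, 0.0)  # ReLU activation
```

A single-graph layer such as the one in Mod4 corresponds to passing one fixed adjacency matrix, in which case the mixture weights play no role.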

Scatter plots of the prediction results of all models show the relationship between observed and predicted Chl-a values; points closer to the diagonal indicate better agreement with the observations. The metrics shown are RMSE (lower is better) and R² (higher is better). MSSTN achieves the closest alignment with the observed values among all models.

To compare the Chl-a values predicted by the different models with the observations more directly, Fig. 7 shows the 1-day lead prediction scatter plots for Node 5, color-coded by point density and annotated with the root mean square error (RMSE) and the coefficient of determination (R²). MSSTN and Mod7 (STCANET) exhibit the smallest residuals and the highest goodness of fit. For MSSTN, most points lie close to the regression line, indicating high accuracy in the Chl-a prediction task.
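RMSE and R² as used in such scatter plots can be computed as follows. This sketch computes R² directly from the predictions (1 minus the ratio of residual to total sum of squares); an alternative convention reports the squared correlation of a fitted regression line, and which one Fig. 7 uses is not stated, so treat that choice as an assumption.

```python
import numpy as np


def rmse(y, y_hat):
    """Root mean square error, in the units of the data."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def r2(y, y_hat):
    """Coefficient of determination 1 - SS_res / SS_tot (can be negative)."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

Under this convention, predicting the observed mean everywhere gives R² = 0, and R² reaches 1 only when all points fall on the diagonal; the squared-correlation convention can reach 1 for predictions that are biased but perfectly linearly related to the observations.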

In summary, the experimental analysis suggests that MSSTN’s dynamic graph fusion mitigates data sparsity by leveraging spatial correlations with neighboring nodes (e.g., Node 1 borrows features from Node 2 via the POI graph), while the Transformer’s bidirectional attention captures long-range dependencies even when local data are limited.

Multi-time scale prediction performance analysis

To compare the medium- and long-term prediction performance of the models more directly, data from February 1 to March 4, 2019 (32 days) at Nodes 9 and 10 were used for a monthly analysis, as shown in Fig. 8. Figure 8(a) shows that, at Node 9, MSSTN has a clear advantage, with higher prediction accuracy than the baselines at all 9 prediction times. Its IMAPE is 0.15% and 3.96% lower than those of the best baseline (Mod7) and the worst baseline (Mod1), respectively, demonstrating excellent prediction performance. Figure 8(b) shows the IRMSLE of each model’s monthly predictions at Node 9; MSSTN again gives the most accurate results, with an IRMSLE 0.004 and 0.074 lower than those of the best baseline for this metric (Mod6) and the worst (Mod1), respectively. Figure 8(c) and (d) present the IMAPE and IRMSLE for Node 10, where MSSTN performs similarly, suggesting that the Transformer improves the accuracy of long-term Chl-a prediction.

The multi-time-scale comparison of prediction indices evaluates MSSTN and the baselines at different forecasting horizons; lower IMAPE and IRMSLE indicate better accuracy. MSSTN consistently achieves lower errors at all time scales, confirming that it maintains accuracy even in longer-term forecasts.

DM test

Because the difference in prediction errors between two models may not be statistically significant, the superiority of a model’s predictive ability cannot be judged from the magnitudes of MAPE, MAE, and RMSLE alone. To test whether the differences in predictive accuracy are significant, a Diebold-Mariano (DM) test was conducted33. The DM test checks whether the mean difference in forecast losses between two models differs from zero while allowing for serially correlated forecast errors, so it goes beyond comparing single error values. MSSTN was compared pairwise with each of the seven baselines (Mod1–Mod7); the results are shown in Fig. 9.

When Model A is compared with Model B, the null hypothesis is that the two models have equal predictive accuracy. If the p-value is below the 5% significance level, the null hypothesis is rejected and the two models differ significantly; if it is above 5%, the difference is not significant. In addition, with the loss differential defined as Model A’s loss minus Model B’s, a negative DM statistic indicates that Model A is superior to Model B. In our experiments, the p-values of the pairwise tests of MSSTN against all seven baselines are below 5% and all DM statistics are negative (Fig. 9), indicating that MSSTN has significantly better predictive capability than the other seven models.
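A minimal sketch of the DM test in its original form is given below, assuming squared-error loss and the asymptotic normal approximation. The loss differential is d_t = L(e_A,t) − L(e_B,t), so a negative statistic favors Model A, matching the sign convention above. The long-run variance includes autocovariances up to lag h − 1 for an h-step forecast. Ref. 33 may use a variant, such as the Harvey–Leybourne–Newbold small-sample correction, so the exact numbers could differ.

```python
import math

import numpy as np


def diebold_mariano(e_a, e_b, h=1, loss=np.square):
    """Diebold-Mariano test for equal predictive accuracy of models A and B.

    e_a, e_b: forecast errors of the two models on the same targets.
    Returns (DM statistic, two-sided p-value under the standard normal).
    DM < 0 means model A has the smaller average loss.
    """
    d = loss(np.asarray(e_a, float)) - loss(np.asarray(e_b, float))
    n = d.size
    d_bar = d.mean()
    dc = d - d_bar
    # Autocovariances of the loss differential up to lag h - 1.
    gamma = [np.dot(dc[k:], dc[:n - k]) / n for k in range(h)]
    long_run_var = gamma[0] + 2.0 * sum(gamma[1:])
    if long_run_var <= 0:
        raise ValueError("non-positive long-run variance; test is not defined")
    dm = d_bar / math.sqrt(long_run_var / n)
    p = math.erfc(abs(dm) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|dm|))
    return dm, p
```

In a comparison such as MSSTN versus Mod6, `e_a` would hold MSSTN's errors and `e_b` the baseline's errors on the same test points.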

Ablation experiment

To verify the effectiveness of the key components of the model, ablation experiments were conducted with four variants of MSSTN: MO1 uses MSGCN but removes STTM; MO2 uses STTM but removes MSGCN; MO3 replaces the BDTB in STTM with a conventional Transformer; and MO4 removes the joint convolution module in MSGCN. Table 3 presents the results of the ablation experiments.
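The ablation design can be written down as a small table of configuration flags, which makes explicit that each variant changes exactly one component of the full model. The class and field names below are hypothetical labels for the components named in the text.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Variant:
    use_msgcn: bool = True     # multi-scale graph convolution (MSGCN)
    use_sttm: bool = True      # spatiotemporal Transformer module (STTM)
    attention: str = "bdtb"    # "bdtb" or "vanilla" (conventional Transformer)
    joint_conv: bool = True    # joint convolution inside MSGCN


ABLATIONS = {
    "MSSTN": Variant(),
    "MO1": Variant(use_sttm=False),
    "MO2": Variant(use_msgcn=False),
    "MO3": Variant(attention="vanilla"),
    "MO4": Variant(joint_conv=False),
}


def changed_components(v, ref=Variant()):
    """Names of the fields in which variant v differs from the full model."""
    return [f.name for f in fields(v) if getattr(v, f.name) != getattr(ref, f.name)]
```

Changing one factor at a time means that any accuracy difference between a variant and MSSTN in Table 3 can be attributed to that single component.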

Table 3 shows that each key module of MSSTN contributes to its prediction accuracy. The comparison between MO1 and MSSTN demonstrates the contribution of STTM. MO2 has the largest error of all variants, indicating that MSGCN is indispensable. The results of MO3 show that BDTB is better suited than a conventional Transformer for mining temporal features, and the results of MO4 show that joint convolution captures the correlations between nodes in finer detail, improving prediction accuracy.

Conclusion

This study proposed a Multiscale Spatiotemporal Network (MSSTN) that combines Graph Convolutional Networks (GCNs) and a Spatiotemporal Transformer Module (STTM) to improve marine environment prediction. By capturing both dynamic spatial dependencies between monitoring stations and long-term temporal patterns, the model addresses two key limitations of existing approaches: static modeling of spatial relationships and limited capture of long-term temporal dependencies.

Quantitative evaluations confirm MSSTN’s superiority over seven baseline models: for 1-day Chl-a forecasts, it reduces IMAE by 1.035 mg/L relative to the best conventional model (ST-GCN). The model maintains robust performance across temporal scales, with a 1.226 mg/L IMAE reduction relative to ST-GCN for weekly predictions (19.8% better than the best baseline) and IMAPE below 2.5% throughout 32-day projections. These quantitative results highlight the model’s effectiveness in capturing complex spatiotemporal dependencies and its potential for accurate long-term marine environment forecasting.

In terms of broader applicability, the MSSTN framework has strong potential to be transferred to other geographical contexts, including different coastal systems and even open-ocean settings, provided sufficient monitoring data are available. Moreover, although this study focused on chlorophyll-a concentration, the framework is flexible and could be adapted to predict other environmental indicators such as temperature, salinity, nutrient levels, or pollutant concentrations. However, its successful application elsewhere will depend on the density and quality of the available monitoring data and on the coverage of observation stations. Areas with sparse or irregular monitoring may present challenges, but as observing networks expand, the utility of MSSTN will increase accordingly.

In summary, the MSSTN provides a reliable and scalable framework for forecasting marine environmental parameters. Its ability to capture multiscale spatial interactions and long-term temporal patterns has direct implications for marine disaster early warning, pollution management, and sustainable coastal development. Future work will focus on extending the model to additional ocean parameters and testing its transferability to other regions, further enhancing its value as a decision-support tool for marine ecosystem management.

Data availability

The input data used in this study are freely available on the official website of the National Marine Data Center of China at https://mds.nmdis.org.cn. Requests for other data used in this study can be sent to the corresponding author.

References

Khuduzade, A. & Gasimova, E. Ecological condition of the areas contaminated with oil and oil products on the Absheron Peninsula. European J. Nat. History 12–16 (2019).

Enevoldsen, H., Isensee, K., Lee, Y. J. & Intergovernmental Oceanographic Commission. State of the Ocean Report (2024).

Han, D. & Currell, M. J. Review of drivers and threats to coastal groundwater quality in China. Sci. Total Environ. 806, 150913 (2022).

Wu, J. et al. Pollution, sources, and risks of heavy metals in coastal waters of China. Hum. Ecol. Risk Assessment: Int. J. 26, 2011–2026 (2020).

Mahrad, B. E. et al. Contribution of remote sensing technologies to a holistic coastal and marine environmental management framework: A review. Remote Sens. 12, 2313 (2020).

Yang, Z. et al. UAV remote sensing applications in marine monitoring: Knowledge visualization and review. Sci. Total Environ. 838, 155939 (2022).

Qingyu, L. Analysis on the marine monitoring buoy data variation characteristics and cause of Karlodinium digitatum red tide in Putian sea area, Fujian Province. J. Fisheries Res. 44, 444 (2022).

Yang, S., Fang, Q., Ikhumhen, H. O., Meilana, L. & Zhu, S. Marine spatial planning for transboundary issues in bays of Fujian, China: a hierarchical system. Ecol. Ind. 136, 108622 (2022).

He, H. et al. Forecasting sea surface temperature during typhoon events in the Bohai Sea using spatiotemporal neural networks. Atmos. Res. 309, 107578 (2024).

Sarkar, P. P., Janardhan, P. & Roy, P. Prediction of sea surface temperatures using deep learning neural networks. SN Appl. Sci. 2, 1458 (2020).

Ali, A., Fathalla, A., Salah, A., Bekhit, M. & Eldesouky, E. Marine data prediction: an evaluation of machine learning, deep learning, and statistical predictive models. Comput. Intell. Neurosci. 2021, 8551167 (2021).

Ham, Y. G., Kim, J. H. & Luo, J. J. Deep learning for multi-year ENSO forecasts. Nature 573, 568–572 (2019).

Hu, Y. et al. Research on High-Resolution reconstruction of marine environmental parameters using deep learning model. Remote Sens. 15, 3419 (2023).

Mitchell, R. N., Kirscher, U., Kunzmann, M., Liu, Y. & Cox, G. M. Gulf of Nuna: astrochronologic correlation of a Mesoproterozoic oceanic euxinic event. Geology 49, 25–29 (2021).

Gaidai, O., Ashraf, A., Cao, Y., Sheng, J. & Zhu, Y. Ocean windspeeds forecast by Gaidai multivariate risk assessment method, utilizing deconvolution scheme. Results Eng. 23, 102796 (2024).

Truong, H., Tello, A., Lazovik, A. & Degeler, V. Graph neural networks for pressure estimation in water distribution systems. Water Resour. Res. 60, e2023WR036741 (2024).

Wang, B. et al. Spatial-MGCN: a novel multi-view graph convolutional network for identifying spatial domains with attention mechanism. Brief. Bioinform. 24, bbad262 (2023).

Kumar, R., Mendes-Moreira, J. & Chandra, J. Spatio-temporal parallel transformer based model for traffic prediction. ACM Trans. Knowl. Discovery Data (2024).

Li, D., Zhao, W., Hu, J., Zhao, S. & Liu, S. A long-term water quality prediction model for marine ranch based on time-graph convolutional neural network. Ecol. Ind. 154, 110782 (2023).

Park, J., Woo, S., Lee, J. Y. & Kweon, I. S. A simple and light-weight attention module for convolutional neural networks. Int. J. Comput. Vision. 128, 783–798 (2020).

Bi, X. et al. Structure-adaptive graph neural network with temporal representation and residual connections. World Wide Web. 26, 3389–3408 (2023).

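Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. in Proceedings of NAACL-HLT 4171–4186 (Minneapolis, Minnesota, 2019).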

Mehta, S. & Rastegari, M. MobileViT: light-weight, general-purpose, and mobile-friendly vision transformer. arXiv preprint arXiv:2110.02178 (2021).

Deng, M., Fan, Z., Liu, Q. & Gong, J. A hybrid method for interpolating missing data in heterogeneous spatio-temporal datasets. ISPRS Int. J. Geo-Information. 5, 13 (2016).

Liu, Q. et al. Spatio-temporal outliers detection within the space-time framework. Yaogan Xuebao- J. Remote Sens. 15, 457–464 (2011).

Khozani, Z. S., Banadkooki, F. B., Ehteram, M., Ahmed, A. N. & El-Shafie, A. Combining autoregressive integrated moving average with long short-term memory neural network and optimisation algorithms for predicting ground water level. J. Clean. Prod. 348, 131224 (2022).

Pravallika, M. S., Vasavi, S. & Vighneshwar, S. Prediction of temperature anomaly in Indian ocean based on autoregressive long short-term memory neural network. Neural Comput. Appl. 34, 7537–7545 (2022).

Zhang, Z. et al. Monthly and quarterly sea surface temperature prediction based on gated recurrent unit neural network. J. Mar. Sci. Eng. 8, 249 (2020).

Sun, Y. et al. Time-series graph network for sea surface temperature prediction. Big Data Res. 25, 100237 (2021).

Liu, J., Wang, L., Hu, F., Xu, P. & Zhang, D. Spatiotemporal fusion prediction of sea surface temperatures based on the graph convolutional neural and long short-term memory networks. Water 16, 1725 (2024).

Ye, M. et al. Graph convolutional network assisted SST and Chl-a prediction with multi-characteristics modeling of spatio-temporal evolution. IEEE Trans. Geosci. Remote Sens. (2023).

Xie, C., Chen, P., Man, T. & Dong, J. STCANet: spatiotemporal coupled attention network for ocean surface current prediction. J. Ocean. Univ. China. 22, 441–451 (2023).

Mohammed, F. A. & Mousa, M. A. in Theory and Applications of Time Series Analysis: Selected Contributions from ITISE 2019 6. 443–458 (Springer).

Author information


Contributions

Conceptualization, Bin Zeng; Data curation, Houpu Li; Funding acquisition, Houpu Li; Methodology, Rui Wang; Project administration, Bin Zeng; Validation, Bin Zeng; Writing – original draft, Rui Wang.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zeng, B., Wang, R. & Li, H. Ocean environment prediction methods based on deep learning and spatiotemporal feature fusion. Sci Rep 15, 35618 (2025). https://doi.org/10.1038/s41598-025-19620-4
