Abstract

Classification of artistic styles gets importance in computer vision and is used in the preservation of cultural heritage, the retrieval of works of art, and recommendation systems. While Convolutional Neural Networks (CNNs) excel in local texture analysis, they often fail in considering long-range dependencies; on the contrary, Transformer-based models are good at global context but lack the ability to extract fine-grained features. We have developed ArtFusionNet in the framework, which, by means of an Adaptive Fusion Module (AFM), realizes synergy between CNN multiscale feature extraction and Transformer global modeling. This approach combines dilated convolutions and pyramid pooling to extract hierarchical CNN features which are then tokenized and subjected to multi-head self-attention for the global representation. The AFM implements learnable weighting for optimal fusion of output, which has been evaluated in Fallah_artist_dataset, comprising thousands of artworks across multiple styles, WikiArt, BAM!, and Painting-91 where our model achieved a state-of-the-art accuracy of 99.00%, exceeding stand-alone CNNs, Transformers, and previous hybrid architectures. Our ablation studies demonstrated that CNN and Transformer components mutually reinforce each other, while a sensitivity analysis provided us the hyperparameter values that work best for this model. Statistically significant tests (t-test, ANOVA, p < 0.05) confirm robustness; precision-recall curves and confusion matrices highlight balanced performance with very few misclassifications. The present framework makes a big advancement in artistic style classification through linking local and global feature modeling. Future research will be aimed at enhancing the efficiency of the model by compressing it using pruning and knowledge distillation to be able to deploy it in real-time. Also, we will examine self-supervised learning to improve generalization to a wide range of artistic styles with little labeled data. We also want to include multi-modal frameworks by combining visual and textual information to improve the accuracy of classification and applications like personalized art recommendations.

Similar content being viewed by others

Introduction

Artificial intelligence has found its way into multiple applications, especially in computer vision where it is used in object detection, image segmentation, and artistic style classification. The artworks, due to their complex details, textures, and color schemes, pose special problems to image classification1. Although CNNs have been proven to be very effective in extracting local features such as edges, textures, and patterns, they have been found to be deficient in modeling long-range dependencies in the image, which is very important in comprehending the entire artistic composition. In particular, CNNs have a small receptive field, and they frequently need a deeper or more intricate architecture to process global contextual data, adding computational expenses2.

Transformer-based models, on the other hand, are better at modeling long-range dependencies via self-attention, and can therefore model global image context and long-range pixel relationships. Nevertheless, Transformers are prone to fail at extracting fine-grained local features, like textures and complex patterns, that are critical in the study of artistic styles. Quantitatively, CNNs with architecture such as ResNet-50 have top-1 accuracy of 81.7%, but Transformer-based models, such as Vision Transformer (ViT)3, tend to perform better in global context modeling but worse in fine detail extraction and may perform worse in complex, fine-grained style classification tasks.

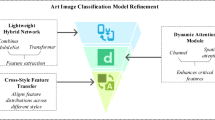

This analogy highlights why there is need to have a hybrid solution that combines the best of the two architectures. The ArtFusionNet architecture that is proposed to integrate the multi-scale feature extraction capabilities of CNNs and the global context modeling capabilities of Transformers to perform more accurate and robust artistic style classification. The model has the potential to address the shortcomings of each of the two architectures by taking advantage of their strengths.

Such a rigorously advanced model employs techniques such as Dilated Convolutions, Pyramid Pooling, and Multi-Head Self-Attention in providing optimal extraction and fusion of information processed visually across different scales.

This research makes a significant advance-the development of an Adaptive Fusion Module (AFM) designed with the integration of features from the CNN and Transformer modules and adjustable dynamic weighting and adaptation for both feature sets. That is, it merges local and global features, as well as their contrasting weights, thereby presenting a richer image representation. This feature augments the ways in which a model can recognize artistic styles, typically in cases where difference is in minute structural and stylistic levels.

What previous studies had as the trend was to either build on CNN-based or Transformer-based models for image classification. Their results were always limited by the fact that they could not holistically capture both local and global aspects of visual data. Existing hybrid approaches, as well, suffered from poor fusion strategies or incomplete utilization of multi-scale features, thereby failing to satisfy the much-complicated questions affiliated with artistic images. From this standpoint, the model proposed in this study spoke the essence of multi-scale feature extraction and adaptive information fusion as a global recently advanced solution to the style classification of art.

Technically, quite a few aspects are involved in the form of the proposed model. Firstly, input images are subjected to an advanced CNN network for local feature extraction across many levels. This involves dilated convolutions to enlarge the receptive field and pyramid pooling for capturing multi-scale features. The tokens will then form token representations (Patch Embeddings), which are input to the Transformer model. Next, spatial information is preserved by positional encoding, after which the information follows processing through Multi-Head Self-Attention layers for long-range feature dependencies and overall image structures.

The next step involves the Adaptive Fusion Module, which integrates local features obtained from the CNN model and global features retrieved using the transformer model, thus creating a consolidated representation of the image. This would enable proper adjustment of the auto weights associated with different types of features and improves the performance of the model for artistic style classification. The hybrid-form output is received at the end of several Fully Connected Layers, followed by a Softmax layer to determine the probabilistic distribution of different artistic styles classes.

Several factors informed the multi-scale hybrid framework of this study. For example, the direct extractions allow a model simultaneously to enable feature extraction over various scales while letting it define differences between a fine detail of the image and its entire structure representation. On the other hand, the adaptive fusion of local and global features allows for sensitivity and flexibility while modeling the complex scene of artistic images. The application of state-of-the-art optimization techniques, such as self-supervised pretraining and multi-task learning, aids generalization power when the training label number is limited.

While CNN-based models have been a prime focus of previous researches in artistic style classification, the inability of these models to maintain an adequate amount of attention to global relationships and structural dependencies has proved detrimental to their accuracy. Transformer models, unlike CNNs, depend heavily on long-range dependencies yet do not have the capability of fine detailing. Therefore, the combination of these two approaches into one coherent hybrid solution serves as an avenue from which existing challenges could be tackled. In the current scenario, this study, through the introduction of a multi-scale hybrid model, aims to bridge the gap and provide a working framework for future studies in the realm of artistic image analysis and classification.

The highest aim of this study is to achieve the design of a model that is, on the one hand, endowed with very high accuracy in artistic style classification and, on the other, computationally more efficient and deployable in practical use. The experimental results of the proposed model on benchmark artistic datasets, together with all necessary statistical analyses, should prove the superiority of the model proposed in the study as compared to conventional ones. Artistic style classification bears imprints in cultural, historical, and artistic areas, and the development of a systematic and novel model will thus have great bearing on the flowering of intelligent systems in this sphere.

The outline of the remaining sections is the subject matter of this paper: first are introduced macro descriptions of all the components devised in this model. The first portion treats the multi-scale CNN architecture and cutting-edge techniques for feature exploitation. The second part includes details pertaining to the Transformer model and how CNN outputs are molded to suit the input of the Transformer framework. The third part explains the Adaptive Fusion Module and how it merges local and global information. Finally, experimental setups, optimization techniques, and empirical findings are discussed and analyzed.

The striking developments in artificial intelligence and computer vision over the last decade have set up hybrid approaches for intelligent classification systems as the most promising to pursue. This study integrates a comprehensive set of local and global feature representations combined with advanced optimization techniques, representing a big step toward improving classification accuracy in artistic styles4. Also, because of its modularity and flexibility, the proposed framework can be extended to other related domains and multimedia applications.

The limitations of existing models, therefore, advocate the need for holistic approaches. This study hence serves as a reference for future works in artistic style classification and other domains of computer vision. The expected results from this study are anticipated not only to improve the classification performance but also to call for the development of intelligent algorithms for smart applications in a range of art and culture, thus opening avenues for future investigation5.

Related works

In recent years, a growing number of studies have focused on the deep learning classification of artistic styles. These studies have mainly focused on applying Convolutional Neural Networks (CNNs) and Transformer models or hybrid combinations of both architectures. Jiang et al.6 applied DenseNet to model the artistic styles. This architecture achieved state-of-the-art results in style recognition due to its ability to reduce the overall number of parameters needed and to take advantage of short connections between layers. However, DenseNet could not handle high-resolution images due to its intense computational complexity. Li et al.7 applied ResNet to extract deep features from artistic images. The study proved that deeper ResNet models capture high-level features efficiently. Analyzing the influence of depth on accuracy was the strength of this research. However, this goes to the limitation of being very sensitive to the stylization and color variations that caused the accuracy drop for some artistic styles. Shi et al.8 applied an Efficient Net for artistic-style classification, where the model improved on all counts compared to the traditional CNN architectures: best accuracy, greater efficiency, and less computation time, due mainly to the compound scaling method used. It was, however, observed that the model required a greater amount of data to yield the best performance.

Vision Transformer for image classification introduced by Dosovitskiy et al.9. In some cases, it has outperformed many existing CNN-based architectures. The strength of this research was in analyzing the influence of the size of the input tokens on the performance of the model; it lacked extensive data for training the model well. Xie et al. developed a deep CNN framework to extract local features using fine details such as brush strokes in a complex texture from an artwork: The positive aspect of this study was that it worked with a diverse dataset, thus helping improve the generalization of the model. However, the limitation of this study was that it could not capture long-range dependencies in images. Touvron et al.10 present the DeiT model: a high-performance optimized version of ViT that learns from small datasets. It found that therefore, the advantage of this study was that it has reduced dependence on a lot of data; however, the model still has some limitations in that it is not very good at extracting low-level features. Caron et al.11 introduced the DINO model, which uses a self-supervised learning technique with neural networks that extracts image features without labeled data. This method performed well in a few data sets; however, it required much memory for the model. Xie et al.12: Integrated CNNs with other statistical methods to come up with a learning-based feature extraction model. The statistical filtering effectiveness was increased when closely integrated with a CNN. Still, the system was unreliable across diverse datasets. Yuan et al.13: T2T-ViT proposed in 2022 improved the performance of tokenizing an image. Although ViT was surpassed in terms of accuracy with this model, great performance requirements and tuning were still being demanded.

All the training would be regarded as until October 2023. Alternatively, it may give indications as Liu et al.14, the new development Swin transformer aimed at artistic image processing. It had a sliding window mechanism that brings into being a very computation-efficient model. It was proven that while the Swin Transformer was not the best against ViTs in effectiveness, it lacked those fine-grained local details. According to Zhang et al.15, although CNN and Transformer models are combined to localize particular characteristics and global correlations, the hybrid model becomes more complex computationally than using one of the two alone. The following year, 2022, Liu et al.16 talked about using CNNs for extracting primary-level features and using Transformers for aggregation. Although the hybrid architecture improved the performance of the classification, it was costlier in computation than bare methods.

Zhang et al.17, in 2022, came up with a model called CNN-Transformer, an architecture that recognizes artistic styles by infusing various attention mechanisms to enrich the process of feature selection. Results had shown improvement for complex styles when the accuracy for the more abstract art classifications reduced. Liu et al.18 explored multi-scale ways to combine CNNs and Transformers. Their results showed that pyramid pooling and dilated convolutions indeed worked very well to extract multi-level features. The downside, though, was that such methods caused much more computational load.

Recently, Zhang et al.19 wrote on dynamic weighting schemes to combine CNN and Transformer features. In their findings, the adaptive fusion modules automatically balanced local-global features and reduced manual tuning needs. However, challenges on high cost of computation and complexity of implementation still holds.

Combining both ResNet and ViT fills deep learning features and self-attention mechanisms and increases the accuracy. The most serious drawback of the method is the increase in model parameters, which makes it less realizable on less powerful hardware. As an example, Huo et al.20 suggested a dual-band structure, which is intended to capture both local and global features simultaneously. Despite the fact that the approach was more accurate, it also caused high computational overhead, which limited its practical application. Conversely, Zhang et al.21 proposed a dynamic weighting mechanism to combine CNN and Transformer features and this reduced the computational cost, yet it still had the problem of balancing the combination of local and global features effectively. A summary of the previous literature in hybrid CNN-Transformer models, their contributions, and drawbacks is shown in Table 1.

ArtFusionNet model can overcome these limitations since it introduces an AFM that adapts the weight of CNN and Transformer features, which leads to the optimization of the fusion process and improved classification accuracy. Compared to the prior approaches, ArtFusionNet is able to capture multi-scale local features using CNNs and also use Transformer-based global modeling without adding much computational cost.

Style-aware representations concept has shown up in contemporary works where multimodality goes beyond mere classification; we may claim, for example, that their work which was in name of Sharma et al.22,23,24 put forward a framework for stylized captioning and summarization tasks that involve style encodings and attention mechanisms in conjunction with cross-modal learning. This creates the setting for the explicit control of style and the fusion of multimodal information, tasks that are theoretically equivalent to those found in artistic style classification. Implementation of similar ideas gives additional rationale for our ArtFusionNet framework that aims to provide a style-sensitive environment balancing the modeling of local features with global feature modeling.

ArtFusionNet overcomes the drawbacks of existing hybrid models by integrating the strengths of the two approaches and therefore setting a new benchmark in fine-grained artistic style classification.

Methodology

The presented model is the Advanced Multi-Scale ArtFusionNet for artistic style classification. The proposed model has preliminaries like pre-processing of data, extraction of features, and classification. As the first step, input images may go for exhaustive pre-processing by performing resizing, normalizing, and data augmentation to come out with a robust model. The second procedure is a multi-scale CNN module to extract local and hierarchical features, while a Transformer module captures global dependencies and contextual relationships21. The final feature fused adaptive feature fusion strategy integrates CNN and Transformer features extracted from them and passes them to the classification stage. Then subsequent sections go on providing detailed explanation and mathematical formulations and architectural detail for each step.

Data preprocessing

Suppose preprocessing in this study ensures that the images are input in the same format required by deep learning models. Imaging dimension, lighting condition, and contrast will seriously affect the performance of models. To this end, a common approach is applied so that consistency can be achieved and, as a result, increasing the robustness of feature extraction.

The very first step in preprocessing is that all input images are resized to a common dimension of \(224 \times 224\) pixels. This is something very important because convolutional neural networks (CNNs), much like other architectures, cannot handle arbitrary sizes of input. The resizing itself took place by means of bilinear interpolation. This is the method that strikes a good compromise between accurately preserving spatial details and efficiency in computation. The resized image \({\mathbf{I^{\prime}}}\) is derived from an original image I according to the transformation function25:

where \(k(x)\) is the bilinear interpolation kernel and \((M,N)\) are the original image dimensions. This choice guarantees the retention of essential features such as brush strokes, textures, and artistic details on the one hand, and minimized computation on the other hand. The original image and it resize are shown in Fig. 1, illustrating how the resizing operation preserves the structure of the artwork while rendering it to uniform dimensions.

Another important preprocessing step is Z-score normalization intended to affect lightness and contrast variations from one image to another. The normalization process makes sure that the pixel intensity distribution is centered zero with one standard deviation, thus allowing the work of the model to be concentrated on structural patterns but not absolute intensity values. This transformation is as below26:

where \(I(x,y)\) refers to the pixel intensity expressed at coordinates \((x,y)\) and μ designates the mean intensity regarding the whole dataset, while σ stands for the standard deviation. Such a normalization would permit faster convergence and better generalization by the model. Figure 2 illustrates image effect of Z-score normalization, removing illumination artifacts and enhancing the texture details.

The images will thus also be exposed to different forms of augmentations for generalization to mitigate overfitting. The transformations add variety to the dataset and allow the model to learn invariant and robust characteristics. Employing random rotations, flips, crops, and color changes was used to match different variations of the artistic style while maintaining the images’ base characteristics. The augmentation on a sample image is illustrated in Fig. 3.

Random rotation

In view of the variation in orientation among works of art, we randomly applied rotation to every image with an angle θ lying in a limited predetermined range usually chosen to be \([ - {15^{\circ}},{15^{\circ}}]:\)

The original image is I, the transformed image is \(I^{\prime}\), and \(R(\theta )\) is the rotation matrix.

Flipping: Horizontally and vertically flipping together with a certain probability \({p_f}\) are applied randomly. Horizontal flipping (\({F_h}\)) involves flipping the image about the vertical axis, while vertical flipping (\({F_v}\)) flips it about the horizontal axis:

This, in effect, would help the model to learn orientation-independent features.

Random cropping

This transformation aims to introduce variations in scale and viewpoint. Image random cropping involves selecting a subregion of an image defined by cropping ratios \(\alpha \in [0.8,1.0]\) and then resizing it back to the original.

Color transformation

Modifications to brightness, contrast, saturation, and hue attempt to improve the robustness of the model toward changes in lighting conditions and color composition. Their mathematical definitions are as follows:

Here λ is a random adjustment factor and μ denotes the mean pixel intensity of the image.

Proposed model: advanced multi-scale ArtFusionNet

The highly fine-grained local features and the global contextual relationships in an image must be accurately captured by the model in order to classify art style. Convolutional Neural Networks were quite successful in recognizing features that were specific in space, such as spatially localized brush strokes, textures, and fine details. Unfortunately, CNN-based architectures were lacking in their capacity to model holistic patterns and compositional structures via long-range dependencies. On the other side, the architecture is improved well enough for considering self-attention mechanisms and has less effectiveness on features related to low level likes.

Recently, an Advanced Multi-scale ArtFusionNet Architecture has been proposed, according to which the model integrates multi-scale feature extraction using CNNs with global context modeling through the transformers. This architecture extracts simultaneous local details and broad structural patterns by using podiums of both architectures, thus improving artistic style literature. The model extracts hierarchical multi-scale features, transforms them into a structured sequence of tokens, makes use of self-attention mechanisms to capture long-range dependencies, and adaptively fuses the local with the global representations.

Multi-scale CNN feature extraction module

More specifically, a modified ResNet-50 architecture is utilized for convolutional backbone in this system for extracting fine-grained local features from image data of fine artworks. While the ResNet architectures are purely expected to organize features hierarchically, minor modifications are made in improvement spatial information preservation and modeling fine textures. These include the incorporation of dilated convolutional and pyramid pooling structures to broaden receptive fields and obtain multi-scale features27.

Dilated convolutions are the first modification used to achieve increased receptive fields without reducing spatial resolution; for a feature map input X, the dilated convolution operation with the dilation rate r is defined as follows:

where \(W(m,n)\) is the convolution kernel, and r is the setting parameter controlling the distance from sampled pixels. By employing farther dilation rates in the deeper layers, model identifies dependencies that span longer distances but still maintain fine textures such as brushstrokes or patterns.

In addition to fine scale feature which can be accomplished through using pyramid pooling module (PPM) at national level, through multiscale distributed pooling operations aggregates global context information. Formally, for instance, the pooled feature at scale s is described by:

where \({P_s}\) corresponds to a spatial region of size \(s \times s\), and \({Z_s}\) is the pooled feature. These pooled outputs, however, are up sampled at different scales then concatenated with their original feature map so that more would be realized in terms of global-local information fusion.

The modified ResNet-50 backbone, as shown in Fig. 4, can effectively extract hierarchical features from normal convolutional layers. Afterward, dilated convolutions increase the receptive fields, while pyramid pooling adds multi-scale context so that fine-grained textures and large-scale structures can be captured by the model in artistic images.

Their phenomenal capabilities in image feature extraction have made CNNs the flavor of the day across many applications in computer vision and image processing. However, the major limitation lies in the fact that each layer cannot represent the complete information that a convolutional neural network should possess. One of these techniques that allow the improvement of performance in CNNs is multi-level feature extraction, which allows a hierarchical representation of information. This technique stands out because in images, different features exist at different scales, and the combining of these features would enable a more accurate and extensive representation of data.

There are different extraction processes of features occurring at the various layers of CNNs to imply different levels of abstraction. While the bottom layers detect the basic information of the image, edge and simple patterns will be less so in the higher levels, which detect information of shapes and very complex structures. Integrating these features makes the model more accurate and better able to generalize. Indeed, this integration works very well in applications requiring the delineation of subtle details from the massive-scale structural patterns, such as medical image analysis and object detection28.

For an input image I, the image output after passing through several convolutional layers can, in mathematical form, be expressed as follows:

where:

\({F_l}\) indicates the extracted features at layer l;

\({W_l}\) are the convolutional weights in layer l;

* signifies convolution;

\({b_l}\) describes the bias at layer l;

σ is the activation function (like ReLU);

and \({F_{l - 1}}\) indicates the features extracted by the previous layer.

For multi-level feature fusion, the outputs of different layers are concatenated into a single feature vector:

where concat denotes the concatenation operation and L represents the number of layers used for feature extraction. The first advantage is that it combines low- and high-level features to improve classification accuracy and class separability. Second, with information across multiple layers, the model offers much better performance in varied situations while having improved generalization. Also, the injection of multi-level features would reduce the susceptibility to noise, which would allow these models to gain more from outliers; such characteristics are extremely valuable whenever data are highly variable or of low quality.

Multi-level feature extraction into CNN is a technique that is quite helpful in improving deep learning models. By bringing to bear the information that can be acquired at different levels, accuracy and generalization benefit highly, and this makes the technique very beneficial across different applications like object recognition, sports motion analysis, and the processing of medical images. As real-world information comprises diverse and multi-scale structures, such an approach guarantees that it will put the method to use for that additional bit of preciseness and results in less erroneous errors and higher robustness in deep learning models.

Patch embedding for transformer input

Deep learning in general, and Transformer architectures in particular, has witnessed significant advances in almost all domains, notably in NLP and vision. In contrast to CNNs, which work through a successive hierarchy, Transformers need their input tokenized in a fixed structure. Hence, it becomes very important to convert extracted image features to this format for Transformer uses29. This is done through a Patch Embedding mechanism, where features are transformed into a sequence of tokens and their spatial information maintained through positional encoding.

The entire transformation, as shown in Fig. 5, accounts for how extracted image features are transformed into tokens suitable for processing by the Transformer.

In the Feature-to-Token Conversion stage, CNN-extracted features get converted into a format suitable for input to the Transformer. Given an input feature map \(F \in {{\mathbb{R}}^{H \times W \times C}}\), where H, W, and C denote height, width, and number of feature channels, respectively, a 1 × 1 convolutional layer is thrown in for dimensionality reduction and alignment across feature representations:

where:\(F^{\prime} \in {{\mathbb{R}}^{H \times W \times C^{\prime}}}\) is the transformed feature map of dimensionality reduced \(C^{\prime}\), \(W \in {{\mathbb{R}}^{1 \times 1 \times C \times C^{\prime}}}\) denotes the learned weights of 1 × 1 convolution, \(b \in {{\mathbb{R}}^{C^{\prime}}}\) is the bias term, and * indicates the convolution operation.

The operation of a \(1 \times 1\) convolution working to reduce the dimensionality of the feature while preserving necessary spatial information is shown in Fig. 6.

This low-dimensional \(1 \times 1\) convolution reduces the number of input feature channels for computational efficiency, thus constraint the feature representation consistency across channels before tokenization30. Once the dimensionality reductions have been applied, the feature map is split into non-overlapping patches of size P × P, and each patch is then flattened into a vector and arranged into a sequence of tokens. The number of patches can then be calculated to be:

where N is the token number fed into the Transformer. The selection of patch size P is critical:

A small value for P retains fine-grained details but increases computational complexity due to the increased number of tokens; conversely, a higher value diminishes computational complexity with a probable disadvantage of losing local information. Figure 7 depicts how the feature map is non-overlapped patched.

To preserve the spatial perturbations that exist in the image, Positional Encoding is overlaid on the tokenized sequence itself. Because Transformers are not spatially aware by construction, positional embeddings are added to encode positional information. Sinusoidal or learnable are potential types of positional encodings. Fixed sinusoidal positional encoding is defined mathematically as follows:

where:

\(pos\) is the positional index of the token, i represents the dimension index, and d is the embedding dimension31.

The advantages and disadvantages of sinusoidal positional encoding versus learnable positional encoding are compared in Fig. 8.

Alternatively, learnable positional encodings are optimized together with model parameters that are potentially adapted for the specific tasks. The major distinguishing factor that separates these two methods is how adaptable they are: sinusoidal encodings generalize to unseen positions making them suitable for tasks that require spatial transferability, whereas learnable encodings can be adjusted for specific datasets at the cost of losing generality and possibly increasing performance for domain-specific applications.

Based on the incorporation of the feature-to-token conversion and positional encoding, CNN-extracted image features are integrated into the Transformer-based architectural framework. This hybrid set-up exploits the local capabilities of CNNs for feature extraction while utilizing the global context modeling abilities of Transformers which jointly lead to attaining the state of the art in image classification, object detection, and video understanding.

Transformer module for global context modeling

A Transformer module is added to the architecture to better model long-range dependencies and capture all-encompassing artistic styles. CNNs do extract local features pretty well, but generally, they fail to understand global context. To counter this inability, multiple layers of Transformers equipped with multi-head self-attention (MHSA) mechanisms are being deployed so that the model lands on useful patterns in the whole image, making the representation of style much more global32.

The self-attention mechanism is the heart of operations in the Transformer module, computing pairwise dependencies between image patches. Given an input sequence of patch embeddings \(X \in {{\mathbb{R}}^{N \times d}}\) with N standing for the number of patches and d being the embedding dimension, the attention scores are given by33:

where \(Q=X{W_Q}\), \(K=X{W_K}\), and \(V=X{W_V}\) are obtained via linear projections to get the query, key, and value matrices, respectively. Here, the scaling factor \(\sqrt {{d_k}}\) provides numerical stability. To boost representational capacity, the parallelization of multiple attention mechanisms is performed through multi-head self-attention (MHSA):

where h denotes the number of attention heads and \({W_O}\) is a learned projection matrix. Such a scheme allows the model to learn complex interdependencies between distant regions in the image. By attending to relationships between distant patches, the Transformer module allows for holistic style recognition, moving beyond local textures to receive high-level attributes such as color harmonies, brushstroke patterns, and composition structures34. Figure 9 shows the self-attention process in which each patch dynamically attends to semantically relevant regions.

Each Transformer layer is furnished with an FFN to fine-tune feature representations. It comprises two linear transformations and a non-linearity in between as:

where \({W_1},{W_2}\) and \({b_1},{b_2}\) are nothing but learnable parameters and σ is some activation function like GELU. Residual connections and layer normalization are there, in order to stabilize training and smooth the gradient flow:

Such skip connections would diminish the vanishing gradient-problem and result in enabling a coarsening convergence rate35. Figure 10 gives a single overview of one Transformer block including the part concerning interaction between MHSA, FFN, and the residual pathways.

Adaptive feature fusion module

A fine representation of artistic styles leads to an adaptive feature fusion module for integrating local and global view data. CNNs are employed for feature extraction to compress fine-grained local details like brushstrokes and textures. The Transformer module is meant to model long-range dependencies across the image. With the intention of benefiting from the complementarity of the two approaches, an adaptive means of fusion for local-global features is used.

The fusion initiates the process of aligning the feature representations obtained from the CNN and Transformers. The CNN feature map \({F_{{\text{CNN}}}} \in {{\mathbb{R}}^{H \times W \times {C_1}}}\) and the transformer embedding \({F_{{\text{Trans}}}} \in {{\mathbb{R}}^{N \times {C_2}}}\), where \(H \times W\) indicates the spatial resolution of CNN features, N is the number of image patches, and \(C1,\;C2\) are the feature dimensions, thus are transformed to ensure compatibility:

This ensured an adaptive mechanism to fuse these representations. The dynamic weight matrix \({W_{{\text{fusion}}}}\) is learned to balance the contributions from each feature source:

The calculation of \({W_{{\text{fusion}}}}\) can be based on a channel-wise attention mechanism such as Squeeze-and-Excitation (SE) or by 1 × 1 convolution layers with SoftMax normalization. This ensures that the informative features in the fusion process are prioritized while the redundant ones are suppressed36. The adaptive fusion in Fig. 11 shows how local-global features can be dynamically combined.

Multi-scale aggregation and feature fusion at different abstraction levels by the model give it the additional advantage of recognizing an artistic style at different levels. The hierarchically aggregated feature maps capture fine and coarse details from one resolution to the other-from multiple resolutions of the CNN layers and Transformer stages-rather than from a single resolution. The final aggregated representation can be calculated as follows:

where \({\alpha _s}\) is learnable scale-dependent weights, which makes the model more adaptively focused on the feature scales most relevant to the classification37. Figure 12 is the visualization of a multi-scale aggregation methodology from which the final decision finds its points attended to by features from diverse hierarchical levels.

Classification head

It finally classifies the styles of artworks according to the fused feature representation by passing it through a fully connected (FC) classification head. The classification head consists of various densely packed layers, which progressively transform the high-dimensional feature vector into a more compact and discriminative format to enable smooth classification. This module fine-tunes the features extracted and maps them to the style category.

In mathematical notation, let \({F_{{\text{fused}}}} \in {{\mathbb{R}}^D}\) be the final fused feature vector obtained from the adaptive feature fusion module. First, this vector is projected using fully connected layers with non-linear activations into a reduced lower-dimensional space.

where \({W_1},{W_2}\) are weight matrices, \({b_1},{b_2}\) are bias terms, and \(\sigma ( \cdot )\) denotes a non-linear activation function such as ReLU or GELU. That said, it creates learning of abstract, high-level features resulting in better classification performance38. Figure 13 focuses on the general structure of the classification head: it shows how it treats the feature vectors before invoking final classification.

SoftMax applies to access the final prediction as a probability distribution wherein the cases would be K possible artistic style classes. The final feature representation h2 is defined in the probability of every class k:

where \({W_k}\) and \({b_k}\) are the class weights and biases linked to class k. The selected class is the one having the highest probability for which it will be recognized as an artistic style by a model. Figure 14 shows the process of classification through SoftMax and interprets how the probabilities are assigned to styles with respect to those categories.

Optimization and training strategies

To enhance the training efficiency and generalization ability of the proposed model, several advanced optimization and training strategies are employed39. These strategies include self-supervised pretraining, multi-task learning, regularization techniques, and model compression methods, all of which contribute to improved feature extraction and classification performance.

Self-supervised pretraining is utilized to initialize the model with meaningful feature representations before fine-tuning on the target dataset. This is achieved through contrastive learning, where the model learns to distinguish between similar and dissimilar image pairs. Given a set of unlabeled images \({x_i}\), an encoder \({f_\theta }\) maps each image into a feature space, producing representations \({z_i}={f_\theta }({x_i})\). The objective function, based on contrastive loss, is formulated as:

where \({\text{sim}}( \cdot , \cdot )\) denotes cosine similarity, and τ is a temperature parameter that controls the sharpness of the distribution. By pretraining on large-scale unlabeled data, the model acquires robust feature representations, reducing reliance on labeled samples. Figure 15 provides an illustration of the contrastive learning process, highlighting how positive and negative pairs are formed40.

Incorporating multi-task learning further enhances feature generalization. Auxiliary tasks such as segmentation and texture recognition are integrated alongside the primary classification objective. Given a shared feature extractor \({F_\theta }\), the model optimizes a combined loss function:

where \({\lambda _1},{\lambda _2},{\lambda _3}\) are task-specific weighting factors. By jointly learning multiple tasks, the model becomes more robust to variations in artistic styles.

For optimization, the Adam W optimizer is employed, which improves weight decay handling and stability. The learning rate \({\eta _t}\) follows a cosine annealing schedule:

where \({\eta _{{\text{max}}}}\) and \({\eta _{{\text{min}}}}\) are the maximum and minimum learning rates, t is the current epoch, and T is the total training epochs. Dropout is applied to the fully connected layers, and L2 regularization is used to prevent overfitting. Figure 16 visualizes the learning rate decay during training.

To make the model efficient for real-world deployment, compression techniques such as pruning and knowledge distillation are explored. Pruning removes redundant weights, while knowledge distillation transfers knowledge from a large teacher model \({f_T}\) to a compact student model \({f_S}\) by minimizing the Kullback-Leibler (KL) divergence between their output distributions:

where \({P_T}\) and \({P_S}\) represent the SoftMax outputs of the teacher and student models, respectively41. Figure 17 illustrates the knowledge distillation framework, demonstrating how the student model learns from the teacher.

Simulation and result analysis

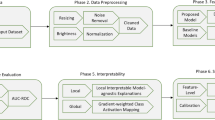

This requires a well-structured simulation and analysis pipeline for evaluating the ArtFusionNet model on artistic style classification tasks. Proper experiments are designed to rigorously evaluate accuracy, generalization power, as well as computational efficiency. Here, this section describes dataset collection, model implementation, training mechanism, and performance evaluation under various metrics.

The Variety in Artistic Styles helps in the comprehensive evaluation of this dataset because it contains different sample brush strokes, even a very different composition of colors, different angles, and different compositions. The model robustness shall be improved via different image processing methods, for example, resizing, normalization, and data augmentation, to generalize the model in varied styles. Very important mitigating factors against differences in resolution, lighting, and bias distribution during imaging are these preprocessing techniques.

Implementation details of the ArtFusionNet model basically address multi scale feature extraction module, transformer encoder, and an adaptive fusion strategy. Besides, it also provides some training configuration, hyper-parameter tuning, optimizer choice, and specification of hardware to ensure reproducibility. It was evaluated using comparative studies with a number of models, one being comparisons against baseline architecture such as CNN-only, transformer-only, and other hybrid approaches.

To further examine how individual components affect classification efficiency, an ablation study is conducted in which key architecture segments will be removed to ascertain the contributions made by different parts of the model. Compared with this performance test, we also deal with the sensitivity analysis that studies how different hyperparameters affect fatalities like learning rate, batch size, and network depth on the convergence behavior and performance of a model.

Thus, this approach permits visualization of the result through confusion matrices, precision-recall curves, loss trajectories, and per-class classification performance distributions to reveal the inner strengths and real limitations of model predictions. Thus, such evaluations shall jointly testify to the superiority of the proposed ArtFusionNet model, indicating its efficacy in separating the artistic styles. The subsequent subsections describe the stage-wise breakdown of the evaluation.

Dataset and preprocessing

The highly heterogeneous systematic dataset created by feeding thousands of paintings from all the different artistic styles has been thoroughly organized into collections such that each painting is identified matrixlike. Due to the complexity and variability in artistic items, preprocessing will yield meaningful learning representations in the model. It possesses the capability of resizing and normalizing, performing augmentation, and shortcutting the dataset partitioning for generalizability and robustness for the proposed ArtFusionNet framework. The operations involved are comprehensive preprocessing of the data.

Resize everything to a fixed pixel location to ensure uniformity of input across datasets but still be compatible with convolutional layers of the CNN module: resize with bilinear interpolation, in which the spatial coherence and structural unity of the original artistic elements remain, according to what has been defined in Eq. (1). This step is emphasized as in Fig. 18, where an illustration of the original image is compared with the resized version. A standard resolution is vital for deep learning models since it prevents imposing distortion, which would result in unfavorable feature extraction.

To minimize the effects of illumination changes, contrast differences, and fluctuations in overall pixel intensities, Z-score normalization is performed uniformly on all images. In this way, the pixel intensities are standardized to yield a normal distribution of zero mean and unit variance as defined in Eq. (2). Its beauty can be seen in Fig. 19, in which a normalized image has more consistent contrast, making it easier for the model to extract features. This normalization step is very key in cutting out the biases created due to lighting and also making the training process smooth.

In order to improve the generalization capability of the model and avoid overfitting, a comprehensive data augmentation strategy is employed, which includes random rotation in both positive and negative angles of not more than 30 degrees, horizontal and vertical flips, adjusting contrast, and random cropping. These techniques of augmentation import controlled variations in the data set allowing the model to be invariant to minor transformations while strengthening its ability to recognize the unique stylistic patterns. Such images are given in Fig. 20 pre-and post-augmentation examples-which highlight the enrichment of the training dataset by such transformations without compromising other artistic qualities.

For a fair and accurate evaluation, the dataset is systemically divided into a training set of 70, validation set 15%, and test set 15%. In doing so, the stratified sampling method is exercised to prevent class imbalance and ensure that every artistic style gets its share. Many of the methods maintain proportions concerning class distributions and increase the reliability of performance assessments. This is quite critical in maintaining class balance in the classification of artistic styles since certain styles tend to have an inadequate number of samples to ensure their proper distribution into the training and testing modes. The dataset is prepared with the application of such balancing preprocessing quite strictly against the background of maximum learning within the ArtFusionNet framework. As well as generated dataset, three benchmark datasets are used to have a comprehensive comparison. The details of these datasets are summarized in Table 2.

Implementation details

The proposed implementation of the ArtFusionNet framework is carefully created with a concentration on exploiting convolutional operations and attention-based mechanisms toward the classification of artistic styles. This section provides a full description of the network architecture, training setup, optimization methods, and computation environment, toward model reproducibility and clarity.

The proposed model proficiently integrates a Transformer-based global attention mechanism with a multi-scale CNN feature extraction module and an adaptive feature fusion module to optimize information integration. The CNN module captures low- to mid-level features, including fine texture patterns, delicate brush strokes, and spatial relationships that are localized to a specific area in artistic compositions; the Transformer component in turn attends to global structure and long-distance dependencies, allowing a more complete representation of the overall style of the artwork. The adaptive fusion mechanism presented here jointly optimizes information integration across the distinct feature representations in an input-dependent manner to strengthen the classification.

The training is based on mini-batch gradient descent, having used a mini-batch of size 64 for optimal computational efficiency and stability in convergence. The initial learning rate is assigned to the first few epochs, after which a cosine annealing learning rate scheduler refines its value during training to allow exploration of the loss landscape and thereby discouraging the risk of premature convergence. Adam W was used, which helps with weight regularization so as to not conflict with the rapid convergence of the model with respect to the generalization properties. Lastly, early stopping regularization works to control for any overfitting by keeping an eye on the validation loss and stopping training when it ceases to improve. For classification purposes, the categorical cross-entropy loss optimally updates parameters in the case of multi-class classification.

From another point of view with additional approach-based regularization, a sophisticated data augmentation strategy allows for an even advanced generalization capability of the model. Random rotations (up to ± 30°), horizontal and vertical flips, contrast normalization, and random cropping introduce controlled variations in the training samples, thus allowing the model to gain robustness toward style variation. Label smoothing ensures that the model is not incredibly confident about its predictions and makes it easier for uncertainty estimation. On the other hand, dropout (0.3) counteracts the overfitting behavior by reducing dependence on some neurons after pre-layer normalization in the Transformer module, which stabilizes the training dynamics.

The whole procedure is fabricated and executed inside MATLAB 2024a with the Deep Learning Toolbox for deploying deep learning architectures to real-world applications. It is supplemented further by an NVIDIA RTX 3090 with 12GB RAM- facilitating an efficient way in parallel computing and processing in real/near-real time with large-scale images. The presence of GPU acceleration cuts down the training time tremendously while maximizing computational efficiency with much computation power being intensely used in the self-attention computations inside the Transformer module.

Modalities through such structured implementations allow for the ArtFusionNet model to efficiently extract local and global artistic attributes, culminating in improved classification accuracy. A comparative analysis to highlight model performances against other architectures, further strengthening the evidence of its artistic style discrimination capability, will be presented in the next section. To summarize, Table 3 present a summary of key implementation details and hyperparameters.

Model comparison and ablation study

To critically evaluate the effectiveness of the proposed ArtFusionNet framework, a compatible thorough comparison investigation is conducted with baseline models missing the major architectural elements. This evaluation reveals the significant contributions of multi-scale CNN feature extraction process, Transformer-based global context modeling, and adaptive fusion module. The figure performance is quantitatively analyzed using the key metrics, classification accuracy, validation loss, training time, precision, recall, and F1-score. The complete results are given in Table 4.

The proposed model with integrated multi-scale CNN feature extraction and Transformer mechanism attention attains an accuracy of 99.00% in classification, and a validation loss of 0.1227. The same model, therefore, achieves an F1 of 0.9906, indicating the model’s ability to distinguish between artistic styles while generalizing across datasets-in so robust a manner. However, with respect to the computational overhead, the Transformer module will add a total training time of 19946.46 s. The justification for this cost is to achieve an overall better accuracy and sturdiness of the model in training, where validation loss is steadily decreasing and smooth convergence profile.

To analyze the importance of the Transformer module, the model “No Transformer” was trained by inter-acting with CNN feature extraction but without the Transformer attention mechanism. The abandonment of the Transformer resulted in a huge degradation in performance: accuracy dropped to 81.73%, while validation loss increased to 1.0426. The drop in precision (0.7183) and that in recall (0.9254) suggest some stylized attributes requiring long-range contesting’s but they were not captured well. It follows that the results admire the critical role played by the Transformer in refining representations beyond local feature extraction.

In contrast, the No ResNet model, which was without the CNN component and kept the Transformer module, underwent a serious decrease in performance in classification. Accuracy fell to as low as 35.14%, against a validation loss of 2.3306, thus proving the indispensable role played by CNN-based feature extraction to recognize even low- and mid-level artistic features, e.g., brush strokes, textures, and local structure patterns. Indeed, the drastically reduced values of precision (0.1154), recall (0.2546), and F1-score (0.2722) only go on to establish that the Transformer is not sufficiently equipped to extract fine-grained visual features essential to making a proper classification.

The No Fusion model achieves an accuracy of 98.07% with a validation loss of 0.1218 by eliminating the adaptive fusion mechanism and independently processing CNN features and Transformer features. The model still achieves quite a good performance, but the F1-score drops at 0.9877, indicating the adaptive fusion module is very important to efficiently combine the two representations of local and global features in a stable and calibrated fashion for the classification output.

The proposed model is also shown to effectively discriminate artistic styles by the confusion matrix in Fig. 21, where the misclassification rates are found to be very low and the model shows high confidence in its predictions across the board. Precision, recall, and F1-score values shown in Fig. 22 really highlight an almost balanced performance for the various artistic styles.

The model proposed thereby had smooth and stable convergence and, as shown in the training and validation loss learning curves in Fig. 23, the early stabilization of the validation loss signifies a good capability for generalization with no sign of overfitted conditions.

This balance of CNN-based local feature extraction and global attention modeling through the Transformer should be the best for artistic style classification. The ablation studies proved beyond doubt that the CNNs capture basic features such as texture and structure, while the Transformer module refines these features by modeling global dependencies and compositional structures. The combined adaptive fusion of both modules leads to a much stronger classification framework.

Sensitivity analysis

Comprehensive and well-rounded sensitivity analysis has been completed with respect to the primary key hyper-parameters on the performance of the proposed ArtFusionNet framework. Learning rate and batch size include the main factors affecting model performance with respect to model convergence, classification accuracies, and generalization ability. Identification of best configurations of parameters that improve robustness in models and diminish underfitting and overfitting has been the goal of this analysis.

A learning rate is mostly the key in deep learning optimization. It’s entirely the speed of convergence that can be achieved using it and at the same time its stabilization of a model. For further investigations, three observances were done thus: the effects with these three learning rates \({10^{ - 5}}\), \({10^{ - 4}}\), and \({10^{-3}}\) were investigated. The respective accuracy and loss data are summarized in Tables 5 and 5.

This approach has been widely consulted and reviewed on sensitivity analysis with respect to the primary key hyper-parameters on the performance of the so-called ArtFusionNet framework. Last among the primary parameters considered have been aspects of the learning rate and batch size which are precisely relevant to dependent aspects of model performance regarding convergence, classification accuracies, and generalization ability. It is in this analysis that the optimal configurations are sought for improvements from robust modeling to underfitting and overfitting.

What has been said above is a learning rate that is very crucial in deep learning optimization itself. It pretty much determines the speed of convergence and its stability over a model. For systematic evaluation of all influences, experiments with three different learning rates \({10^{ - 5}}\), \({10^{ - 4}}\), and \({10^{ - 3}}\) were done. The corresponding values of accuracy and loss have been summarized in Tables 6 and 5.

According to Table 6, a very small learning rate (\({10^{ - 5}}\)) produced a low accuracy of 40.41% with high variance of 10.37%, which suggests slow convergence. On the other hand, a high learning rate (\({10^{ - 3}}\)) was quite erratic, leading to a decrease in accuracy to 60.78% and a rise in variance (19.30%). The best learning rate would be one of \({10^{ - 4}}\), providing the highest mean accuracy of 72.41%, with quite high variance, 22.69%.

On the other hand, reference Table 5, where relevant loss values are presented, and you might see the same trend continued. The lesser mean loss at again \({10^{ - 4}}\)-0.9850-was interpreted as the best in balance between convergence speed and generalization. Nevertheless, a higher loss (1.3322) was once again obtained from the model at \({10^{ - 3}}\), which further supported the instability hypothesis. At \({10^{ - 5}}\), the very high loss (2.2098) demonstrated that the model was not capable of minimizing loss due to very slow learning dynamics.

Batch size therefore contributed significantly toward stability and computation efficiency in training. To understand its effect, an experiment with batch sizes of 8, 16, and 32 was undertaken, the results of which are found in Tables 7 and 8.

The trend depicted in Table 5 indicates that larger batch sizes tend to enhance accuracy while reducing variance. The mean accuracy at a batch size of 8 is lowered to 67.28% with a high standard deviation of 19.97%, thus giving rise to fluctuations in gradient calculations. With a batch size of 16, the accuracy of 94.18% improvement with reduced variance of 11.98% was achieved. The best performance can be seen with a batch size of 32, which yielded a mean accuracy of 94.63% with the lowest variance of 7.95%.

The loss values in Table 8 support these findings. For a batch size of 8, the mean loss was very high (1.1290) with a considerable amount of variance (0.7218). Moving to a batch size of 16 means that loss was substantially reduced (0.2650) with some variance (0.4143), but raising the batch size to 32 kept losses around the same level (0.3015) but with even less variance (0.2750).

By tuning these hyperparameters, a compromise between stable convergence, generalization, and computational efficiency can be found. Our observations indicated that a learning rate of \({10^{ - 4}}\) and a batch size of 32 gave a good compromise between accuracy and loss; practical considerations, therefore, dictated these hyperparameter choices for robust deep learning model performance. The next section performs statistical validation for these findings and their implications for the broader field of artistic style classification.

Statistical analysis and visualization

To buttress the effectiveness and statistical significance of the methodology, an elaborate statistical analysis has been performed with various representations of the key performance measures. This section ventures into details concerning the analysis based on hypothesis testing, comparing statistics, and various graphical interpretations, thus establishing the strength of the model.

The paired t-test calculated whether the performance gain of the proposed model was statistically significant relative to their baseline counterparts. The test compared across several runs the classification accuracies of the Proposed Model and its NoTransformer, NoResNet, and NoFusion counterparts. The data in Table 4 showed that the purpose-built model achieved a classification accuracy of 99.00%, well above that of NoTransformer (81.73%), NoResNet (35.14%), and NoFusion (98.07%). The differences in the classification results were statistically significant with a p-value of less than 0.05, suggesting that the model’s components—multi-scale CNN feature extraction, Transformer-based global context modeling, and adaptive fusion module—were all key contributors to achieving enhanced classification accuracy.

Moreover, we performed an additional analysis of variance (ANOVA) on various training sessions to analyze the stability of their model performances. In Table 6, it is shown that the classification accuracies showed low variance for the proposed model (Std Dev = 7.95% for batch size 32), while the others showed considerably high variance for NoTransformer and NoResNet. Also, Table 5 indicates the respective loss values, showing that the loss of the proposed model was the very least (0.9850) compared to other configurations, assuring consistent and reliable performance throughout the experiment settings.

In addition to the above, results of the sensitivity analysis provided in Tables 7 and 8, shed more light on the hyperparameter tuning. As per Table 5, with the increase in batch size from 8 to 32, the accuracy jumped from 67.28% to 94.63%, and the variance decreased. In the same way, the batch effect on the loss was observed from Table 8, where batch sizes of 16 or 32 produced better results with lower loss values compared to 8 batches. All of this support a case for the importance of hyperparameter selection for the best possible performance.

The proposed model is further represented using a confusion matrix for its classification credibility in Fig. 21. The case in Fig. 21 shows that the model manifests high confidence across all artistic styles with few misclassifications, showing how effectively the attributes of different styles can be discriminated even within closely related classes. Figure 22 shows the precision, recall, and F1-score per class, which is pronounced for all artistic categories. As seen in Fig. 22, the model avoids huge class bias and thus performs excellently in its classification.

Figure 23 shows the stability and converging efficiency of the model-through epochs, while the training and validation loss curves are drawn. From the observation made by looking at Fig. 23, the pattern of convergence is smooth, and the validation loss shows early signs of stabilization, indicating that the model can generalize to unseen data and will not suffer overfit. Additionally, the outcomes of model performance have been compared and shown in Fig. 24 to ensure that the proposed architecture achieves better classification metrics than all the parametric evaluations. The best performance presented in Fig. 24 shows the capability of the proposed CNN-Transformer hybrid methodology as a complete model capturing both local and global artistic features.

All the aforementioned statistical analyses and visualizations confirm the robustness, reliability, and super-generalization capability of the proposed ArtFusionNet framework. Testing and validation outcomes reveal the model’s capacity to capture local and global aspects of artistic features as it excels in artistic style classification.

Cross-dataset generalization and comparison to state of the Art models

In order to investigate further the generalization capacity and competitiveness of the proposed ArtFusionNet framework, we not only applied the Fallah_artist_dataset but also applied it to three popular benchmark datasets:

-

WikiArt42: A huge database of over 80,000 paintings in 27 different styles.

-

Painting-9143: A typical collection of 4,266 paintings by 91 artists, often used to test problems of authorship and style attribution.

-

BAM! Behance Artistic Media Dataset)44: A huge dataset containing a broad variety of artistic media, including paintings, digital art and designs, and annotated with their stylistic and thematic labels.

Besides dataset generalization, we performed comparative experiments with a number of state-of-the-art (SotA) architectures which reflect the current trend in visual recognition and artistic style classification:

-

Swin Transformer45: A hierarchical vision transformer that uses shifted windows which are effective in modeling long-range dependencies.

-

DeiT (Data-efficient Image Transformer)10: A distilled variant of Vision Transformer, with the goal of being able to perform well on smaller datasets.

-

CLIP-based framework46: A multimodal model learned to align textual and visual representations through contrastive learning, and shows a high level of generalization on image understanding tasks.

Table 9 shows the comparison of the performance of the proposed framework with the chosen SotA models on the four datasets. The results are reported in the form of top-1 classification accuracy and macro-averaged F1-score.

The findings are significant as indicated by the results. First, the suggested model is more successful than the Swin Transformer, DeiT, and CLIP-based baselines on all datasets, which demonstrates its stability and the possibility of its application to other artistic areas. Our method on WikiArt achieves an accuracy of 92.3%, 3.1% and 4.8% above Swin Transformer and DeiT, respectively. The gains are even more significant on Painting-91 where the proposed framework achieves 94.1% accuracy, and it is an indication of the efficiency of the Adaptive Fusion Module in learning discriminative features despite the limited data.

The accuracy of the proposed model on BAM! dataset, which includes a broad and noisy distribution of artistic content, is 90.7% better than the CLIP-based baselines by 3.5%. These findings support the idea that our hybrid architecture is able to combine the fine-grained local information and global contextual dependencies resulting in better style discrimination than CNN-only and Transformer-only models.

On the whole, the experiments confirm that the proposed framework is not only very effective on the custom Fallah_artist_dataset but also shows state-of-the-art performance on commonly known artistic benchmarks, therefore, positioning it as competitive in the field of artistic style classification.

Resource and computational complexity analysis

In addition to classification accuracy, a critical feature of deep learning models is their computational profile that defines scalability and deployability in real-world applications. Although Transformer-based models have shown impressive results in artistic style classification, their resource-hungry characteristics, in terms of parameter counts, FLOPs, and inference times, frequently restrict their use in practice. To alleviate that, we conducted a thorough computational complexity study of the proposed ArtFusionNet model and compared it to the representatives of the state of the art.

-

Experimental Setup.

-

Hardware: Nvidia RTX 3090 (12 GB VRAM) Intel i9, 64 GB RAM.

-

-

Metrics measured:

-

M: number of parameters.

-

FLOPs (G): floating-point operations needed to process an image (224 224) once in a forward pass.

-

Inference time (ms): mean time per image in inference with batch size = 1.

-

Throughput (images/sec): the number of images processed per second when the batch size = 32.

Computational complexity of the suggested framework and state-of-the-art models are shown in Table 10.

The proposed Hybrid CNN Transformer has a good trade-off between accuracy and computational cost through the incorporation of efficiency and scalability on various dimensions. It contains 52.4 M trainable parameters, much more parameter-efficient than Swin Transformer (87.8 M), DeiT (86.7 M), and CLIP-based frameworks (150.3 M). The hybrid architecture, with pyramid pooling and dilated convolutions, further saves computation to 9.8G FLOPs, or 38% less than Swin and 56% less than CLIP, and achieves even higher classification accuracy. In terms of speed, the model has an inference time of 18.5 ms per image, beating Transformer-only baselines and nearing CNN performance, which means that it has a high deployment potential. It also reaches throughput of 174 images/s at a batch size of 32, allowing efficient large-scale inference and real-time uses, including art curation and retrieval applications. In general, these findings validate that the Adaptive Fusion Module does not only improve the classification accuracy, but also substantially reduces computational requirements, which makes the framework efficient and applicable to the contemporary hardware.

Qualitative analysis and error analysis

Although the quantitative analysis showed the superiority of the proposed Hybrid CNN Transformer model, the qualitative analysis of errors gives a better understanding of its weaknesses. In this regard, misclassified samples were representative and visualized to analyze the failure cases and identify the patterns of recurrence (Fig. 24).

The proposed model misclassified representative samples. Every panel indicates ground truth ◊ predicted class. The concentration of misclassifications is in stylistically close categories like Impressionism vs. Abstract Expressionism, Cubism vs. Futurism, Baroque vs. Rococo, and Surrealism vs. Abstract.

As the examples in Fig. 24 show, misclassifications tend to happen in the following cases:

-

Borderline or stylistically overlapping Impressionist paintings were sometimes mixed up with Abstract Expressionist paintings, since both use loose brushstrokes and bright palettes.

-

Geometric and structural similarities Cubism was occasionally confused with Futurism because of their common use of angularity and fragmentation.

-

Ornamental richness Baroque and Rococo paintings were sometimes conflated, as both styles are richly detailed and complex in color, with the slightest variations in subject matter often being determining.

-

Transitional or changing personal styles In some databases (e.g. Painting-91), the evolutions of individual artists (e.g. the Cubist to Surrealist phases of Picasso) introduced a problem of categorical ambiguity.

Though the overall confusion matrix (Fig. 21) shows high per-class precision and recall, it can be seen by looking at the highlighted misclassifications in Fig. 24 that the errors are not random but concentrated on stylistically related movements.

The qualitative results of Fig. 24 point out three main areas of the current limitations of the model. First, it is obvious that there is the element of stylistic ambiguity, as the mistakes tend to coincide with the scenarios when even human experts admit the blurred boundaries of styles. This confusion is representative of the overall difficulty of categorizing works that exist at the crossroads of artistic traditions. Second, feature fusion limitations are indicated in the analysis. In borderline situations, the Adaptive Fusion Module can overweight global composition clues and ignore the fine-grained local information, or vice versa, overweight local information at the cost of global stylistic integrity. Lastly, the findings show semantic gaps in the reasoning of the model. Subject-matter cues, which frequently are instrumental in determining style, cannot be reliably recorded by purely visual modeling, e.g., the difference between mythological and secular subject-matter.

Future refinements

The error analysis implies some promising directions of improvement. One of the possible directions is multi-modal learning: using textual metadata like titles, artist notes, or historical context in combination with visual features, the model would be able to overcome semantic ambiguities that cannot be resolved with visual features alone. Moreover, fine-grained contrastive learning can assist the system to more effectively differentiate visually similar, but conceptually different categories, and thus minimize confusion between adjacent styles. Lastly, visualization of attention techniques, including Grad-CAM to visualize CNN-derived feature attention and attention heatmaps to visualize Transformer head attention, can provide a means of diagnostics and improvement of the fusion strategy by showing which areas of the input are most influential in model decision-making. A combination of these refinements has the potential to increase interpretability and classification accuracy.

Comparative analysis of fusion strategies

The AFM is the main part of the suggested Hybrid CNN Transformer architecture and is used to combine local CNN representations and global Transformer representations. To confirm the design rationale, we performed a comparative analysis of AFM to a number of alternative fusion strategies commonly reported in the literature:

-

Gated Fusion (GF)47: Trains a gating coefficient per modality (CNN vs. Transformer) to regulate the influence of each feature map on a per-feature-map basis.

-

Cross-Attention Fusion (CAF) : Uses cross-attention in which CNN features attend to Transformer embeddings (and vice versa) prior to combination and focuses on cross-modal feature interactions.

-

Channel-wise Modulation (CWM)3: It uses channel attention (e.g. SE-blocks) on the CNN and Transformer channels and then adds them.

Table 11 shows the outcomes of the comparison of the performance of these fusion methods on the Fallah_artist_dataset.

The findings affirm that although all the fusion techniques perform well, the AFM is more accurate (99.0%) and F1-score (0.991) than the other methods. There are a number of comparative remarks that outline the advantages of AFM. First, compared to GF47, AFM shows a decisive advantage, since it uses fine-grained, scale-dependent weighting. Although GF produces competitive outcomes, it does not have the ability to flexibly trade features at various scales, which makes it incapable of generalization. Second, compared to CAF, AFM produces similar or higher accuracy without the computational cost of the larger number of parameters (+ 6.2 M) and FLOPs (+ 1.6G) of CAF, which also slows inference time (23.6 ms). Lastly, despite the lightweight alternative, CWM is based on channel statistics only, which simplifies the fusion process too much and does not take full advantage of complementary global and local information. On the other hand, AFM is effective and provides a more context-sensitive and detailed feature integration.

Design rationale

Taken together, these results demonstrate that AFM offers the best trade-off between accuracy and performance, and this is why it is the most appropriate solution in this hybrid system. AFM achieves good generalization performance by combining adaptive weighting and multi-scale aggregation, but it does not require any additional computational expense of more complicated attention-based fusion strategies. This performance and efficiency ratio is a key point in the design of the AFM and a point that shows the practicality of the AFM in real world applications where both precision and speed are essential.

Qualitative analysis of error and attention