Abstract

Facial expression recognition (FER) has advanced applications across computer vision, the Internet of Things, and artificial intelligence, supporting domains such as medical escort services, learning analysis, fatigue detection, and human-computer interaction. The accuracy of these systems is of utmost importance and depends on effective feature selection, which directly affects their ability to detect facial expressions across various poses. This research proposes a new hybrid approach, QIFABC (Hybrid Quantum-Inspired Firefly and Artificial Bee Colony Algorithm), which combines the Quantum-Inspired Firefly Algorithm (QIFA) with the Artificial Bee Colony (ABC) method to enhance feature selection for a multi-pose facial expression recognition system. The proposed algorithm exploits the attributes of both QIFA and ABC to improve search space exploration and, in turn, the robustness of the features used for FER. Firefly agents first move toward brighter fireflies; once an agent is itself the brightest, the search transitions to the ABC algorithm, which targets positions with the highest nectar quality. To evaluate the efficacy of QIFABC, feature selection is also conducted using the QIFA, FA, and ABC algorithms. The selected features are used to classify facial expressions with the deep neural network model ResNet-50. The FER system is tested on the multi-pose facial expression benchmark datasets RaF (Radboud Faces) and KDEF (Karolinska Directed Emotional Faces). Experimental results show that QIFABC with ResNet-50 achieves accuracies of 98.93%, 94.11%, and 91.79% for front, diagonal, and profile poses on the RaF dataset, respectively, and 98.47%, 93.88%, and 91.58% on the KDEF dataset.

Introduction

The development of artificial intelligence (AI)-based technologies has significantly expanded research into interaction technology. Voice recognition technologies, which draw on one of the senses most critical to human interaction, have been developed to manage routine human tasks through voice commands and AI speakers1. Furthermore, vision-based sensing is essential for AI systems to accurately comprehend human behavior2. Facial expressions often convey a person's behavior and emotional state more effectively than verbal or written communication. Indeed, Mehrabian3 pointed out that facial expressions account for 55% of emotional disclosure, compared with 38% for tone of voice and only 7% for the words spoken. This explains the substantial research conducted on FER during the past decade2.

FER involves analyzing human emotions by identifying movements of facial features. However, FER is distinct from human emotion recognition (HER). HER infers emotional states from a variety of cues, including gestures, posture, emotional voice, facial expressions, gaze direction, and contextual information, whereas FER uses visual information specifically to classify facial movements or deformations into predefined categories4. In addition, facial expressions can reflect aspects beyond emotion, including mental processes, physiological activity, and verbal and non-verbal communication4,5. These different sources of facial expressions are depicted in Fig. 1.

FER technology allows machines to offer personalized services by adapting to individuals' emotional states, thereby enhancing the user experience. However, developing an effective FER system entails significant challenges: facial expressions vary widely with differences in facial features, environmental conditions, and pose. Previous research has made notable progress in addressing these challenges by developing techniques to improve FER performance6,7,8,9,10,11, but a critical gap remains in optimizing feature selection for multi-pose recognition. Current methods struggle to select the most relevant features across varying poses, which directly limits the accuracy of FER systems. This paper addresses this gap by introducing QIFABC, a novel hybrid approach to feature selection that leverages quantum-inspired metaheuristic algorithms.

The proposed QIFABC algorithm hybridizes the QIFA and ABC algorithms. QIFA is a quantum-inspired version of the firefly algorithm (FA) that incorporates attributes of quantum computing (QC)12. QC leverages quantum-mechanical phenomena such as entanglement and superposition to solve complex problems efficiently13. FA mimics the flashing behavior of fireflies, which communicate through light intensity, and optimizes solutions efficiently by focusing on promising regions of the search space14. ABC is an intelligent method in which bee agents exhibit swarm intelligence to explore and exploit solutions, providing robustness on complex problems with minimal parameter usage15.

Although newer optimization algorithms exist, such as crayfish optimization16, red fox optimization17, and the reptile search algorithm18, FA and ABC were selected because of their complementary strengths. FA's local search ability is effective in narrowing down the search space, while ABC excels at global exploration by simulating the movement of bees toward high-quality nectar positions. This dual approach allows QIFABC to balance exploration and exploitation effectively, which is crucial for solving complex multi-pose facial expression recognition problems.

Furthermore, according to Wolpert’s No Free Lunch Theorem19, no single optimization algorithm is universally superior to others for all problem types20. In this study, the choice of FA and ABC is based on their demonstrated success in similar optimization tasks and their ability to adapt well to the specific characteristics of FER, such as multi-pose expression recognition, where both local and global search strategies are crucial for feature optimization.

The overall FER system comprises four modules: image processing, feature extraction, feature selection, and expression classification. The first module, image processing, removes the background region and extracts facial elements from the image; this work adopts the Viola-Jones algorithm to extract facial components from the raw image. The processed image with its facial elements is then used for feature extraction, the second module. This is performed with three-channel convolutional neural networks (TCNN), which extract features of the whole face, the mouth region, and the eye region, using one channel for each. In the third module, the proposed QIFABC approach enhances the extracted features by selecting a robust feature set. The final module, expression classification, is performed with ResNet-50. Together, these techniques form a novel FER system that recognizes expressions effectively. Figure 2 presents an outline of the proposed FER system. The system is evaluated on the RaF and KDEF datasets, which contain multi-pose facial expressions: the front pose (face at 0 degrees), the diagonal pose (face at ± 45 degrees), and the profile pose (face at ± 90 degrees).

The main contributions of the research work are listed below:

- Introduced QIFABC, a novel hybrid optimization method that combines the QIFA and ABC algorithms to enhance feature selection for multi-pose FER.
- Leveraged the complementary strengths of QIFA (local search) and ABC (global exploration) to effectively optimize features for multi-pose FER.
- Evaluated the feature optimization performance of the QIFA, FA, and ABC algorithms and compared their efficacy with that of the proposed method.
- Conducted three experiments on facial expression recognition across different poses: front (0 degrees), diagonal (± 45 degrees), and profile (± 90 degrees), demonstrating multi-pose expression recognition.
- Compared the performance of the proposed FER system with state-of-the-art techniques, confirming its enhanced accuracy and robustness across varied facial expressions and poses.
- Achieved significant performance improvements on the benchmark datasets (RaF and KDEF), with accuracies of 98.93%, 94.11%, and 91.79% for front, diagonal, and profile poses on RaF, and 98.47%, 93.88%, and 91.58% on KDEF, respectively.

The remainder of the paper is organized as follows. Section 2 reviews recent literature on recognizing facial expressions. Section 3 details the image processing and feature extraction modules of the system. Section 4 describes the proposed QIFABC algorithm for feature selection, including an introduction to the FA, QIFA, and ABC methods. Section 5 describes the classification module of the FER system, which uses the ResNet-50 deep neural network model. Section 6 presents the experiments conducted and the results obtained for multi-pose expressions. Section 7 discusses the limitations of the proposed work, and Sect. 8 concludes the research and indicates potential areas for future exploration.

Literature review

In recent years, the FA and ABC algorithms have gained prominence as effective optimization techniques in machine learning and related domains. FA, inspired by the flashing behavior of fireflies, excels in solving optimization problems through its robust local search ability. ABC, modeled on the foraging behavior of honeybees, has proven global exploration capabilities with minimal parameter dependence.

Recent advances have demonstrated the applicability of these algorithms to optimizing machine learning models and addressing complex real-world problems. Jovanovic et al.21 discussed the application of FA to tuning machine learning models for fraud detection. Jovanovic et al.22 explored a diversity-oriented FA metaheuristic for tuning machine learning models in industrial applications. Mamindla and Ramadevi23 illustrated the capability of the ABC algorithm in hyperparameter tuning for medical image classification. Bacanin et al.24 described the application of ABC to optimizing the connection weights and hidden units of artificial neural networks (ANNs). Additionally, Park et al.25 demonstrated the utility of ABC in hybrid optimization frameworks, particularly its integration with support vector machines (SVM) for designing efficient motors.

These studies demonstrate the flexibility and adaptability of the FA and ABC algorithms and establish their relevance for feature selection and optimization in multi-pose FER systems. By exploiting the complementary capabilities of the two algorithms, the proposed QIFABC technique addresses the problem of balancing exploration and exploitation in feature optimization, which is particularly crucial for recognizing facial expressions across varying poses.

The ability to accurately recognize facial expressions across varying poses is essential for enhancing the capabilities of automated systems in areas like human-computer interaction and security. Despite significant progress, variations in facial poses present a persistent challenge, often degrading the performance and accuracy of systems. This literature review explores feature selection approaches, which are crucial for improving the performance of multi-pose FER systems. Although numerous studies have addressed the detection and classification of expressions in frontal images, accuracy significantly decreases with increased pose deviation. This section reviews existing methodologies, focusing on different feature selection techniques for improving robustness and adaptability across various facial poses.

Mistry et al.26 presented a feature selection method that embeds a micro-genetic algorithm (mGA) in the swarm intelligence algorithm of particle swarm optimization (PSO). The approach mitigates the local optima and premature convergence of traditional PSO by retaining the original swarm in a non-replaceable memory. Evaluations on the CK+ and MMI datasets indicate that this method outperforms traditional GA and PSO algorithms. The authors suggest that incorporating cuckoo search and firefly algorithms could further increase search diversity, thus enhancing the robustness of feature selection for FER. Mlakar et al.27 modified the DEMO (Differential Evolution for Multi-objective Optimization) algorithm to select features for FER effectively. The initial features were extracted using histograms of oriented gradients with difference feature vectors. The authors evaluated the method on three datasets, CK, MMI, and JAFFE, and reported effective performance on all of them. Sreedharan et al.28 used the Grey Wolf Optimization (GWO) method to select optimized features from a feature set obtained with the Scale Invariant Feature Transform. Tested on the CK and JAFFE datasets, this technique outperformed other conventional and metaheuristic feature selection methods. Ghosh et al.29 introduced a novel algorithm, the Late Hill Climbing-Based Memetic Algorithm (LHCMA), to select features for classifying facial expressions. In LHCMA, local search is conducted by combining the minimal-Redundancy Maximal-Relevance (mRMR) and local hill climbing (LHC) methods. The system was evaluated on the JAFFE and RaF datasets and showed effective expression recognition after the features were optimized with LHCMA.

Further, Saha et al.30 presented a novel supervised filter harmony search algorithm (SFHSA) for feature selection that integrates mRMR and cosine similarity. The mRMR method assesses the suitability of candidate features, while cosine similarity helps eliminate redundant features from the extracted set. The efficacy of this method was validated on the JAFFE and RaF datasets. Given the positive results, the authors propose that the approach could be adapted to address big data challenges. Ghazouani31 developed a genetic programming-based framework to optimize features for recognizing facial expressions. This framework uses tree-based genetic programming as its core component and comprises three functional layers: feature selection, feature fusion, and classification. Experiments were performed on the MUG, CK+, DISFA, and DISFA+ datasets, and the method was tested with three different combinations of appearance and geometric features. Asha32 explored several variants of the gravitational search algorithm (GSA) for optimizing features in FER, namely the standard GSA (SGSA), binary GSA (BGSA), and fast discrete GSA (FDGSA). Experiments were conducted on fixed-pose expressions from the KDEF and JAFFE datasets. FDGSA performed best in feature selection, significantly enhancing classification performance compared with the other variants.

Furthermore, Kumar et al.33 introduced an improved quantum-inspired gravitational search algorithm (IQIGSA) for feature selection to recognize facial expressions effectively. In IQIGSA, the positioning of the best agents is enhanced using a random vector and a mass parameter, which helps prevent agents from becoming trapped in local optima and increases feature diversity. The authors compared its performance with traditional GSA and QIGSA in experiments on front and half-side pose expressions from the RaF and JAFFE datasets. IQIGSA performed more effectively, particularly for front pose expressions, although its performance declined slightly for half-side poses. Bhatt et al.34 explored various quantum-behaved metaheuristic methods for feature optimization, including the quantum-inspired gravitational search algorithm (QGSA), quantum-inspired genetic algorithm (QGA), quantum-inspired firefly algorithm (QFA), and quantum-inspired particle swarm optimization (QPSO). These methods were tested on front, diagonal, and profile pose expressions from the RaF and KDEF datasets. QFA showed superior feature selection performance, although recognition accuracy declined as the facial angle deviated further from frontal. Dirik35 combined an adaptive neuro-fuzzy inference system (ANFIS) with PSO to enhance feature optimization in FER systems. This approach used action units for classification and deeper analysis of expressions, and experimental evaluations on the MUG dataset indicate its effective performance. However, the method was tested only on front pose expressions and was not investigated for head pose deviations or occlusions.

The reviewed feature selection techniques show that numerous studies have contributed significantly to effective feature selection for FER. Despite these advances, methods that improve both accuracy and reliability are still needed, especially for non-frontal poses. To address these challenges, this work presents a novel hybrid QIFABC algorithm that combines the QIFA and ABC algorithms, and applies it to select features more effectively and robustly for front, diagonal, and profile pose expressions.

Image processing and feature extraction

Image processing is the first module of the FER system: images are processed to remove non-facial regions and to extract the face and facial components. The FER system uses the Viola-Jones algorithm, which employs Haar-like features to capture facial structures36. The algorithm converts the image into an integral image so that the sum of pixel values over any rectangular area can be computed quickly and efficiently, and it uses a cascade of classifiers trained with the AdaBoost learning method to distinguish faces from non-faces efficiently.

The fast calculations enabled by integral images are crucial for detecting features across different scales and positions with minimal computational overhead. Haar-like features capture oriented contrasts between facial regions. For example, the eye region is typically darker than the cheeks, and this contrast can be evaluated quickly by subtracting the sum of pixel intensities under the white rectangle from the sum under the black rectangle, using the integral image. AdaBoost uses these Haar-like features to train classifiers that assess the presence of facial components: it assigns weights to numerous weak classifiers associated with these features and combines them into a strong classifier. The output of each weak learner, given a feature (f) and a threshold value (\(\:\theta\:\)), is determined by Eq. (1).

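$$\:h\left(x,f,p,\theta\:\right)=\left\{\begin{array}{ll}1, & \text{if}\;p\,f\left(x\right)<p\,\theta\:\\ 0, & \text{otherwise}\end{array}\right.$$(1)

Where f(x) is the value of feature f on the input sub-window x, and the polarity p (+1 or -1) indicates the direction of the inequality.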
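The integral-image lookup and the weak learner above can be illustrated with a minimal pure-Python sketch. It assumes a grayscale window given as a list of rows and uses a simple vertical two-rectangle Haar-like feature (upper region minus lower region); the helper names `integral_image`, `rect_sum`, `two_rect_feature`, and `weak_classifier` are hypothetical and do not correspond to OpenCV or to the original Viola-Jones code.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """Integral image with an extra zero row/column: ii[y][x] = sum of img above-left."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of pixel intensities in a w x h rectangle at (x, y) using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Vertical two-rectangle feature: upper-half sum minus lower-half sum."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


def weak_classifier(f_value: float, theta: float, p: int) -> int:
    """Weak learner: 1 if p * f < p * theta, else 0 (p = +1 or -1 is the polarity)."""
    return 1 if p * f_value < p * theta else 0


# Toy 4 x 4 window: dark "eye" band on top, brighter "cheek" band below.
window = [[50] * 4, [50] * 4, [200] * 4, [200] * 4]
ii = integral_image(window)
print(rect_sum(ii, 0, 0, 4, 4))              # 2000
print(two_rect_feature(ii, 0, 0, 4, 4))      # -1200
print(weak_classifier(-1200, theta=0, p=1))  # 1
```

Whatever the rectangle size, each `rect_sum` call costs four lookups, which is why features can be evaluated cheaply at every scale and position.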

The cascade structure enables detailed analysis of promising face-like regions by swiftly rejecting non-face regions, thus accelerating the face detection process. After a region has passed all the stages in the cascade, it is classified as a positive instance, which confirms the detection of a face and its corresponding facial components. Here, the Viola-Jones algorithm is employed to extract the whole face as well as the facial components, including the mouth, eyes, and eyebrows.
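The early-rejection behaviour of the cascade can be sketched as follows. Each stage is a weighted vote of weak learners compared with a stage threshold, as in AdaBoost; the feature names, weights, and thresholds below are hypothetical placeholders, whereas in practice they would be learned during training.

```python
from typing import Callable, Dict, List, Tuple

Window = Dict[str, float]
WeakLearner = Callable[[Window], int]
Stage = Tuple[List[Tuple[WeakLearner, float]], float]  # (weighted learners, threshold)


def cascade_detect(window: Window, stages: List[Stage]) -> bool:
    """Accept a window only if every stage's weighted vote reaches its threshold."""
    for learners, threshold in stages:
        score = sum(alpha * h(window) for h, alpha in learners)
        if score < threshold:
            return False  # early rejection: the remaining stages are never evaluated
    return True


# Hypothetical weak learners operating on precomputed feature values.
def dark_eye_band(w: Window) -> int:
    return 1 if w["eye_minus_cheek"] < -100 else 0


def symmetric(w: Window) -> int:
    return 1 if abs(w["left_minus_right"]) < 20 else 0


stages: List[Stage] = [
    ([(dark_eye_band, 1.0)], 0.5),                    # cheap first stage
    ([(dark_eye_band, 0.6), (symmetric, 0.4)], 0.9),  # stricter second stage
]
print(cascade_detect({"eye_minus_cheek": -1200, "left_minus_right": 5}, stages))  # True
print(cascade_detect({"eye_minus_cheek": 0, "left_minus_right": 5}, stages))      # False
```

Most background windows fail the first inexpensive stage, so the costlier later stages run only on the few face-like candidates.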

Feature extraction then uses the whole face together with the facial components. The proposed FER system employs a TCNN approach in which each of the three CNN channels is dedicated to a specific region: the whole face, the mouth, and the eye region (including the eyebrows). This yields a face region-based CNN, \(\:{F}_{CNN}\), a mouth region-based CNN, \(\:{M}_{CNN}\), and an eye region-based CNN, \(\:{E}_{CNN}\).

TCNN is chosen over a conventional CNN because of its speed and efficient network structure, which draws on both the LeNet-5 and VGGNet architectures for faster and more accurate feature extraction8. In TCNN, LeNet-5 is enhanced with architectural attributes of VGGNet: the traditional 5 × 5 convolutional kernels of the standard LeNet-5 are replaced with 3 × 3 kernels, and the convolutional layers are deeper than in the typical LeNet-5. These modifications enable the TCNN to learn complex patterns more effectively and extract more detailed features.

In feature extraction, the convolution kernel plays a significant role in selecting low- and high-frequency features through its weight parameters. Low-frequency features are basic contour features of the image, such as the whole face, which have a large mean value; high-frequency features are edge features, such as the mouth and eyes, which have a large variance. Therefore, convolution kernels with a high mean are used to extract features of whole-face expressions, while kernels with a high variance are used to extract features of local regions such as the mouth, eyes, and eyebrows. With c denoting channels, l filters, h height, and w width of the convolution kernel (K), the mean (m) and variance (v) are evaluated by Eqs. (2) and (3), respectively.

After the mean (m) and variance (v) of the convolution kernel (K) are evaluated, a threshold \(\:{Th}_{m}\) is assigned for the mean and \(\:{Th}_{v}\) for the variance. A kernel whose mean exceeds \(\:{Th}_{m}\) is used to extract features from the entire face, while a kernel whose variance exceeds \(\:{Th}_{v}\) is used to extract local features of the mouth, eyes, and eyebrows.
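The threshold rule can be sketched in a few lines. Because Eqs. (2) and (3) are not reproduced here, this sketch assumes that m and v are the plain mean and population variance over all weights of a kernel, and it routes each kernel independently; the helper names `flatten` and `route_kernel` and the threshold values are hypothetical.

```python
from statistics import fmean, pvariance
from typing import List, Union

Nested = Union[float, List["Nested"]]


def flatten(kernel: Nested) -> List[float]:
    """Flatten nested kernel weights (e.g. channels x height x width) into one list."""
    if isinstance(kernel, (int, float)):
        return [float(kernel)]
    return [v for sub in kernel for v in flatten(sub)]


def route_kernel(kernel: Nested, th_m: float, th_v: float) -> List[str]:
    """Tag a kernel as 'global' (mean > Th_m) and/or 'local' (variance > Th_v)."""
    weights = flatten(kernel)
    roles = []
    if fmean(weights) > th_m:
        roles.append("global")  # smooth, low-frequency: whole-face contours
    if pvariance(weights) > th_v:
        roles.append("local")   # edge-like, high-frequency: mouth, eyes, eyebrows
    return roles


smooth = [[[0.5, 0.5, 0.5]] * 3]  # 1 channel, 3 x 3, constant weights
edge = [[[-1.0, 0.0, 1.0]] * 3]   # 1 channel, 3 x 3, vertical-edge pattern
print(route_kernel(smooth, th_m=0.3, th_v=0.2))  # ['global']
print(route_kernel(edge, th_m=0.3, th_v=0.2))    # ['local']
```

A constant kernel acts as a local average (low-pass), while a zero-mean kernel with alternating signs responds to edges, which matches the mean/variance intuition in the text.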

The TCNN approach runs all three CNN channels in parallel to extract features of the whole face and of the facial components, including the mouth, eyes, and eyebrows. The convolution layer extracts the features using Eq. (4).

Where, \(\:{X}_{p,\:q}^{z}\) are the elements of the input feature map, \(\:{W}_{p,q}^{z}\) are the weights of the convolution kernel (K), and \(\:{Y}_{n}^{z}\) is the output feature map at layer z. The notation \(\:\otimes\:\) describes the convolution operation between \(\:{X}_{p,\:q}^{z}\) and \(\:{W}_{p,q}^{z}\) at position (p, q). \(\:{b}_{q}^{z}\) is the bias added to the entire feature map with a uniform value across the feature map.
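The operation described for Eq. (4) can be illustrated with a standard "valid" convolution. The exact index convention of Eq. (4) is not reproduced here, so this is a generic sketch: each output position sums the products of the input window and the kernel weights, and a single bias shared by the whole feature map is added. The function names are hypothetical, and, as in most CNN libraries, the kernel is not flipped (strictly, cross-correlation).

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix, bias: float = 0.0) -> Matrix:
    """'Valid' 2-D convolution as used in CNNs (cross-correlation, kernel not flipped),
    with one scalar bias added uniformly to every output position."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            acc = 0.0
            for p in range(kh):
                for q in range(kw):
                    acc += x[i + p][j + q] * k[p][q]
            out[i][j] = acc + bias
    return out


def conv_multichannel(xs: List[Matrix], ks: List[Matrix], bias: float = 0.0) -> Matrix:
    """Sum the per-channel responses, then add the shared bias once."""
    maps = [conv2d_valid(x, k) for x, k in zip(xs, ks)]
    return [[sum(m[i][j] for m in maps) + bias for j in range(len(maps[0][0]))]
            for i in range(len(maps[0]))]


x = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
k = [[1, 0], [0, -1]]  # diagonal-difference kernel
print(conv2d_valid(x, k, bias=0.5))                 # [[-3.5, -3.5], [-3.5, -3.5]]
print(conv_multichannel([x, x], [k, k], bias=0.5))  # [[-7.5, -7.5], [-7.5, -7.5]]
```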

The features extracted from the output feature map using the TCNN approach are retained for the feature selection process, which is described in the subsequent section.

Feature selection

Feature selection is crucial for identifying robust and precise features that recognize facial expressions effectively across various poses. It improves performance by reducing the dimensionality of the data and also improves computational efficiency, resulting in faster and more accurate detection of human emotional states. This section introduces the proposed QIFABC algorithm for feature selection in FER systems, preceded by an overview of the FA, QIFA, and ABC methods.

Quantum-inspired firefly algorithm (QIFA)

QIFA combines the FA method with the principles of QC. FA, introduced by Yang, is a metaheuristic algorithm inspired by the natural behavior of fireflies37. Fireflies use their flashing to attract other fireflies as well as potential prey. Each firefly species emits a unique flashing pattern; in FA, the brightness of a firefly is associated with the objective function to be optimized for the problem. FA is governed by three basic rules for its agents, described as follows:

1. Fireflies are unisex, so they are attracted to each other on the basis of brightness (light intensity) \(\:{I}_{i}\) alone, which is directly related to the objective function value \(\:f\left({x}_{i}\right)\) at each firefly's position \(\:{x}_{i}\). This relationship is expressed by Eq. (5).

$$\:{I}_{i}\propto\:{f(x}_{i})$$(5)

2. The attractiveness (\(\:\beta\:\)) between fireflies decreases exponentially with the square of the distance (r) between them. The value of \(\:\beta\:\) between fireflies i and j is evaluated using Eq. (6).

$$\:\beta\:\left({r}_{ij}\right)={\beta\:}_{0}{e}^{\left(-\gamma\:{{r}_{ij}}^{2}\right)}$$(6)

Where \(\:{\beta\:}_{0}\) is the attractiveness at distance r = 0 and \(\:\gamma\:\) is the light absorption coefficient. The distance \(\:{r}_{ij}\) between fireflies i and j is the Euclidean distance \(\:{r}_{ij}=\parallel{x}_{i}-{x}_{j}\parallel\).

3. Each firefly is deterministically attracted toward brighter fireflies while also taking a random step to enhance exploration of the search space. The movement of firefly i toward a brighter firefly j is calculated using Eq. (7).

$$\:{x}_{i}^{t+1}={x}_{i}^{t}+{\beta\:}_{0}{e}^{\left(-\gamma\:{r}_{ij}^{2}\right)}\left({x}_{j}^{t}-{x}_{i}^{t}\right)+\epsilon(rand-0.5)$$(7)

Where \(\:rand\) is a random number drawn uniformly from the range [0, 1] and \(\:\epsilon\) is a randomization parameter that controls the step size of the random component. A higher value of \(\:\epsilon\) favors exploration, while a lower value emphasizes exploitation.
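The three rules translate almost directly into code. The following sketch implements Eqs. (6) and (7) for continuous positions and treats brightness as the objective value f(x) for maximization, per Eq. (5); the parameter defaults (β0 = 1, γ = 1, ε = 0.2) and the function names are illustrative assumptions, not settings reported for QIFABC.

```python
import math
import random
from typing import Callable, List, Optional

Vector = List[float]


def attractiveness(r: float, beta0: float, gamma: float) -> float:
    """Eq. (6): beta(r) = beta0 * exp(-gamma * r^2)."""
    return beta0 * math.exp(-gamma * r * r)


def move_firefly(xi: Vector, xj: Vector, beta0: float, gamma: float,
                 eps: float, rng: random.Random) -> Vector:
    """Eq. (7): step of firefly i toward brighter firefly j plus a random perturbation."""
    beta = attractiveness(math.dist(xi, xj), beta0, gamma)
    return [a + beta * (b - a) + eps * (rng.random() - 0.5) for a, b in zip(xi, xj)]


def fa_iteration(pop: List[Vector], f: Callable[[Vector], float], beta0: float = 1.0,
                 gamma: float = 1.0, eps: float = 0.2,
                 rng: Optional[random.Random] = None) -> List[Vector]:
    """One FA sweep (maximization): brightness I_i is taken as f(x_i), per Eq. (5)."""
    rng = rng or random.Random(0)
    pop = [list(x) for x in pop]
    for i in range(len(pop)):
        for j in range(len(pop)):
            if f(pop[j]) > f(pop[i]):  # firefly j is brighter than firefly i
                pop[i] = move_firefly(pop[i], pop[j], beta0, gamma, eps, rng)
    return pop


def neg_sphere(x: Vector) -> float:
    return -sum(t * t for t in x)  # brightest at the origin


rng = random.Random(42)
swarm = [[rng.uniform(-3, 3), rng.uniform(-3, 3)] for _ in range(8)]
for _ in range(20):
    swarm = fa_iteration(swarm, neg_sphere, rng=rng)
```

With γ = 0 every firefly sees full attractiveness regardless of distance; larger γ makes attraction local, which is what gives FA its neighbourhood-focused search.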

These rules enable FA to solve complex optimization problems effectively. To integrate the attributes of quantum computing into FA, the algorithm is first transformed into a binary FA (BFA), a discrete variant of FA suited to binary problems whose variables take values of either 0 or 1. However, moving firefly i toward firefly j via Eq. (7) turns binary positions into real numbers, which would change the discrete problem into a continuous one. This must be prevented to keep the outputs binary. Sayadi et al.38 addressed this issue with a sigmoid function \(\:S\left({x}_{i}\right)\) that keeps the values within binary limits. The bit positions \(\:{x}_{i}\) of the fireflies in BFA are evaluated by Eq. (8).

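$$\:{x}_{i}=\left\{\begin{array}{ll}1, & \text{if}\;{RN}_{U}<S\left({x}_{i}\right)\\ 0, & \text{otherwise}\end{array}\right.$$(8)

Where \(\:{RN}_{U}\) is a uniform random number in the interval [0, 1], and the sigmoid function \(\:S\left({x}_{i}\right)\) is given by Eq. (9).

$$\:S\left({x}_{i}\right)=\frac{1}{1+{e}^{-{x}_{i}}}$$(9)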
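A minimal sketch of the sigmoid-based binarization follows. It assumes the standard logistic sigmoid and the common rule that a bit becomes 1 when a uniform random number falls below S(x); the function names are hypothetical. The sigmoid is written in a numerically stable form so that very negative inputs do not overflow `math.exp`.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    """Logistic function S(x) = 1 / (1 + e^-x), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(position: List[float], rng: random.Random) -> List[int]:
    """Bit j becomes 1 when a uniform random number RN_U falls below S(x_j)."""
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


rng = random.Random(0)
real_step = [2.3, -0.4, 0.0, -3.1]  # e.g. a real-valued position after a firefly move
bits = binarize(real_step, rng)     # a 0/1 list; large positive values favour 1
```

Because the mapping is stochastic, a value of 0 gives each bit an even chance, while strongly positive or negative values push the bit almost deterministically toward 1 or 0.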

Like BFA, quantum computing represents outputs in binary form, but a quantum bit (q-bit) can be in state 0, state 1, or a superposition of both. The superposition of quantum states is given by Eq. (10).

$$\:\left|\psi\:\right\rangle={C}_{1}\left|0\right\rangle+{C}_{2}\left|1\right\rangle$$(10)

Where \(\:{C}_{1}\) and \(\:{C}_{2}\) are complex probability amplitudes: \(\:{\left|{C}_{1}\right|}^{2}\) and \(\:{\left|{C}_{2}\right|}^{2}\) are the probabilities of observing the q-bit in state 0 and state 1, respectively, and must satisfy the normalization condition \(\:{\left|{C}_{1}\right|}^{2}+{\left|{C}_{2}\right|}^{2}=1\). The state of a q-bit can be modified by a quantum gate, represented by a unitary operator U. Among the many quantum gates, such as the Hadamard, NOT, rotation, and controlled-NOT gates, the rotation gate is chosen to update q-bits because of its broad and effective use in heuristic algorithms12. The unitary operator of the rotation gate with rotation angle \(\:{\theta\:}_{i}\) (for \(\:i=1,2,\dots\:,n\)) is given by Eq. (11).

$$\:U\left({\theta\:}_{i}\right)=\left[\begin{array}{cc}\text{cos}{\theta\:}_{i} & -\text{sin}{\theta\:}_{i}\\ \text{sin}{\theta\:}_{i} & \text{cos}{\theta\:}_{i}\end{array}\right]$$(11)
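The effect of the rotation gate on a single q-bit can be checked numerically. This sketch assumes real-valued amplitudes, as is common in quantum-inspired evolutionary algorithms, and applies the 2 × 2 rotation matrix to the amplitude pair (C1, C2); the names `rotate` and `prob_one` are hypothetical.

```python
import math
from typing import Tuple

Qubit = Tuple[float, float]  # real amplitudes (C1, C2) of states |0> and |1>


def rotate(q: Qubit, theta: float) -> Qubit:
    """Apply U(theta) = [[cos, -sin], [sin, cos]] to the amplitude vector (C1, C2)."""
    c1, c2 = q
    return (math.cos(theta) * c1 - math.sin(theta) * c2,
            math.sin(theta) * c1 + math.cos(theta) * c2)


def prob_one(q: Qubit) -> float:
    """Probability |C2|^2 of observing the q-bit as 1."""
    return q[1] ** 2


q = (1 / math.sqrt(2), 1 / math.sqrt(2))  # equal superposition: P(0) = P(1) = 0.5
q = rotate(q, math.pi / 4)                # rotate by 45 degrees
print(round(prob_one(q), 6))              # 1.0
print(round(q[0] ** 2 + q[1] ** 2, 6))    # 1.0 (normalization preserved)
```

Since U is orthogonal, any rotation keeps |C1|^2 + |C2|^2 = 1; only the split of probability between 0 and 1 changes, which is exactly what the algorithm exploits.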

QIFA uses a dynamic rotation angle approach to determine the magnitude of the rotation angle and a coordinate rotation gate approach to update q-bits. The rotation angle can therefore be computed directly with Eq. (12), without a predefined lookup table.

Where \(\:\theta\:\) is the magnitude of the rotation angle, which decreases monotonically from \(\:{\theta\:}_{max}\) to \(\:{\theta\:}_{min}\) as the iteration count increases. The final binary positions of the fireflies are updated using Eq. (13).

Where \(\:{RN}_{U}\) is the uniform random number that lies in the interval [0, 1].
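Because the exact forms of Eqs. (12) and (13) are not reproduced in this section, the following is only a hypothetical sketch of the idea: it assumes a linear decay of the rotation magnitude from θmax to θmin, a sign rule that rotates each q-bit toward the corresponding bit of the best firefly, and a measurement that yields 1 with probability |C2|^2. None of these choices should be read as the exact QIFA update, and the angle values used below are arbitrary.

```python
import math
import random
from typing import List, Tuple

Qubit = Tuple[float, float]  # real amplitudes (C1, C2) of |0> and |1>


def rotation_magnitude(t: int, t_max: int, theta_max: float, theta_min: float) -> float:
    """Hypothetical linear schedule: theta_max at t = 0, theta_min at t = t_max."""
    return theta_max - (theta_max - theta_min) * t / t_max


def update_qubit(q: Qubit, best_bit: int, theta: float) -> Qubit:
    """Rotate toward the best firefly's bit (assumes non-negative amplitudes;
    general rules also consult the sign of C1 * C2)."""
    angle = theta if best_bit == 1 else -theta
    c1, c2 = q
    return (math.cos(angle) * c1 - math.sin(angle) * c2,
            math.sin(angle) * c1 + math.cos(angle) * c2)


def measure(qubits: List[Qubit], rng: random.Random) -> List[int]:
    """Collapse each q-bit to a bit: 1 with probability |C2|^2, else 0."""
    return [1 if rng.random() < c2 * c2 else 0 for _, c2 in qubits]


theta = rotation_magnitude(t=10, t_max=500, theta_max=0.05 * math.pi,
                           theta_min=0.001 * math.pi)
q = update_qubit((1 / math.sqrt(2), 1 / math.sqrt(2)), best_bit=1, theta=theta)
bits = measure([q] * 8, random.Random(0))
```

Large early angles move probability mass quickly (exploration), while the shrinking angle in later iterations makes updates finer (exploitation).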

Artificial bee colony algorithm (ABC)

The ABC algorithm, developed by Karaboga, is a metaheuristic algorithm that mimics the foraging behavior of honeybees39. It is particularly efficient for global exploration and for solving complex problems with fewer control parameters than other algorithms, such as ant colony optimization, particle swarm optimization, the firefly algorithm, and genetic algorithms40.

In the ABC algorithm, artificial bees take one of three roles: employed bees, onlooker bees, and scout bees. Employed bees explore the initial food sources (potential solutions) and share their findings with the onlooker bees in the hive. Onlooker bees evaluate these sources and select food sources according to their quality. Scout bees search for new food sources to replace those that have been exhausted41.

The ABC algorithm initiates its process by initializing the population P with N food sources, where each food source represents a solution to the problem. In population P, consider \(\:{X}_{i}=\left\{{x}_{i,1},{x}_{i,2},\dots\:,{x}_{i,D}\right\}\) to be the ith solution in D-dimensional space. The value of \(\:{X}_{i}\) is initially randomly generated using Eq. (14).

$$\:{X}_{i,j}={X}_{min,j}+rand\left[0,\:1\right]\left({X}_{max,j}-{X}_{min,j}\right)$$(14)

Where \(\:{X}_{i,j}\) is the jth parameter of the ith food source, \(\:rand\left[0,\:1\right]\) is a random number in the range [0, 1], and \(\:{X}_{min,j}\) and \(\:{X}_{max,j}\) are the lower and upper bounds of the jth parameter.

For each solution \(\:{X}_{i}\), an employed bee generates a new candidate solution \(\:{V}_{i}\) in the neighbourhood of the food source \(\:{X}_{i}\) by changing a single parameter j, using a randomly selected neighbour \(\:{X}_{k}\). The new solution \(\:{V}_{i}\) is evaluated using Eq. (15).

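$$\:{V}_{i,j}={X}_{i,j}+{\phi\:}_{i,j}\left({X}_{i,j}-{X}_{k,j}\right)$$(15)

Where \(\:{X}_{k}\) is a food source selected at random from the population such that \(\:k\ne\:i\), j is a randomly chosen parameter index, and \(\:{\phi\:}_{i,j}\) is a random number in the range [−1, 1].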

Next, \(\:{X}_{i}\) and \(\:{V}_{i}\) are compared using greedy selection: \(\:{X}_{i}\) is replaced with \(\:{V}_{i}\) if \(\:{V}_{i}\) is of superior quality; otherwise \(\:{X}_{i}\) is retained.
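The initialization and the employed-bee step with greedy selection can be sketched as below. This sketch assumes a minimization objective (as in Karaboga's original formulation) and omits clipping of V_i to the parameter bounds; the names `init_source` and `employed_step` are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def init_source(lo: Vector, hi: Vector, rng: random.Random) -> Vector:
    """Random food source: X_ij = X_min,j + rand[0, 1] * (X_max,j - X_min,j)."""
    return [lo_j + rng.random() * (hi_j - lo_j) for lo_j, hi_j in zip(lo, hi)]


def employed_step(pop: List[Vector], i: int, f: Callable[[Vector], float],
                  rng: random.Random) -> bool:
    """One employed-bee move on source i; returns True if X_i was replaced."""
    k = rng.choice([n for n in range(len(pop)) if n != i])  # neighbour, k != i
    j = rng.randrange(len(pop[i]))                          # single parameter to change
    phi = rng.uniform(-1.0, 1.0)
    v = list(pop[i])
    v[j] = pop[i][j] + phi * (pop[i][j] - pop[k][j])
    if f(v) < f(pop[i]):  # greedy selection (minimization assumed)
        pop[i] = v
        return True
    return False          # caller would increment this source's trial counter


def sphere(x: Vector) -> float:
    return sum(t * t for t in x)


rng = random.Random(1)
pop = [init_source([-5.0, -5.0], [5.0, 5.0], rng) for _ in range(6)]
for _ in range(100):
    for i in range(len(pop)):
        employed_step(pop, i, sphere, rng)
```

Greedy selection guarantees that no food source ever gets worse, while the neighbour-difference term shrinks naturally as the population converges.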

Onlooker bees choose among the food sources found by employed bees with a probability \(\:{p}_{i}\) based on the fitness value \(\:fit\left({X}_{i}\right)\) of each solution. The value of \(\:{p}_{i}\) is evaluated using Eq. (16).

$$\:{p}_{i}=\frac{fit\left({X}_{i}\right)}{\sum\:_{n=1}^{N}fit\left({X}_{n}\right)}$$(16)

In Eq. (16), the fitness value \(\:fit\left({X}_{i}\right)\) is evaluated by Eq. (17).

Where \(\:{f}_{i}\) is the objective function value of the ith solution. A higher fitness value \(\:fit\left({X}_{i}\right)\) yields a higher \(\:{p}_{i}\), so onlooker bees are more likely to select the better solution \(\:{X}_{i}\).

Similarly, onlooker bees evaluate the quality of the revised solutions \(\:{V}_{i}\) and replace the existing solutions \(\:{X}_{i}\) with \(\:{V}_{i}\) whenever \(\:{V}_{i}\) is determined to be superior.
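The onlooker selection can be sketched as fitness-proportional (roulette-wheel) sampling. Since Eq. (17) is not shown in this section, the sketch assumes the fitness transform commonly used in ABC for minimization, fit = 1/(1 + f) for f ≥ 0 and fit = 1 + |f| otherwise, under which a lower objective value gives a higher fitness. The function names are hypothetical; `random.Random.choices` is the standard-library weighted sampler.

```python
import random
from typing import List


def fitness(f_value: float) -> float:
    """Common ABC fitness for minimization (assumed): 1/(1 + f) if f >= 0, else 1 + |f|."""
    return 1.0 / (1.0 + f_value) if f_value >= 0 else 1.0 + abs(f_value)


def selection_probs(f_values: List[float]) -> List[float]:
    """Eq. (16): p_i = fit_i / sum_n fit_n, so fitter sources attract more onlookers."""
    fits = [fitness(v) for v in f_values]
    total = sum(fits)
    return [fv / total for fv in fits]


def choose_source(probs: List[float], rng: random.Random) -> int:
    """Roulette-wheel choice of a food source index."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


probs = selection_probs([0.0, 1.0, 3.0])  # objective values of three food sources
print([round(p, 3) for p in probs])        # [0.571, 0.286, 0.143]
picked = choose_source(probs, random.Random(0))
```

Sampling rather than always picking the best keeps some pressure on weaker sources, which helps the colony avoid collapsing onto a single region too early.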

Further, the scout bees analyze the improvements in the solutions. If the solutions cannot be revised after the defined number of iterations, then they are abandoned. Then, scout bees perform exploration and search for new solutions using Eq. (14). The process continues until the termination criteria are met and the optimized solutions are determined.
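The scout phase can be sketched with a per-source counter of failed improvement attempts. The threshold name `limit`, and the convention that the counters are incremented or reset by the employed and onlooker phases, are assumptions based on common ABC implementations rather than details given in this section.

```python
import random


def scout_phase(population, trials, limit, x_min, x_max, rng=random):
    """Abandon food sources that have not improved for more than `limit` trials.

    trials[i] counts consecutive failed improvement attempts of source i.
    Abandoned sources are re-initialized at random, as in Eq. (14).
    Returns the indices of the replaced sources.
    """
    replaced = []
    for i, count in enumerate(trials):
        if count > limit:
            population[i] = [lo + rng.random() * (hi - lo)
                             for lo, hi in zip(x_min, x_max)]
            trials[i] = 0
            replaced.append(i)
    return replaced


# Usage: only the second source has exceeded the limit of 5 failed trials.
pop = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
trials = [0, 7, 2]
replaced = scout_phase(pop, trials, limit=5, x_min=[0.0, 0.0],
                       x_max=[1.0, 1.0], rng=random.Random(3))
```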

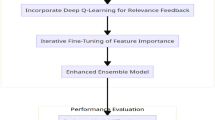

Proposed QIFABC algorithm for feature selection

The proposed QIFABC algorithm hybridizes the QIFA and ABC algorithms for feature selection in multi-pose FER. The process begins by generating quantum firefly agents, which are converted into binary fireflies by quantum measurement so that the attraction mechanism of FA can be applied. Each agent moves toward brighter fireflies to optimize the features, and this search continues iteratively as long as a brighter firefly can be found. Once the current firefly is determined to be the brightest, the agents switch to the movement strategy of the ABC algorithm and are attracted to positions where bees have superior nectar quality. Iterating this search-and-transition process, with the combined movement strategy of both algorithms, refines the feature solutions for multi-pose FER.

The complexity of QIFABC, measured in fitness function evaluations (FFEs), depends strongly on the interaction between the QIFA and ABC components. QIFA requires fewer FFEs owing to its quantum-based attraction mechanism, whereas the ABC component adds FFEs because it performs a more exhaustive search by comparing nectar quality across multiple positions. Nevertheless, the combination of both algorithms ultimately improves the efficiency of the feature selection process on complex datasets.

The algorithm was run with a population of 120 solutions and a maximum of 500 iterations, and results were collected over 30 independent runs to ensure robustness. The feature solutions were encoded as binary vectors, in which each element indicates the presence or absence of a feature in the feature set. The initial feature solution of a quantum firefly agent is represented by \(\:{QF}_{i}=\left\{{QF}_{i1},{QF}_{i2},\dots\:,{QF}_{im}\right\}\), where m is the number of features.
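The run settings and the binary encoding described above can be captured in a few lines. The class and function names are hypothetical, and the feature values in the usage line are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QIFABCConfig:
    """Run settings reported in this section (field names are illustrative)."""
    population_size: int = 120
    max_iterations: int = 500
    independent_runs: int = 30


def apply_mask(features, mask):
    """Keep the features whose bit in the binary solution vector is 1 (selected)."""
    if len(features) != len(mask):
        raise ValueError("mask length must equal the feature count m")
    return [f for f, bit in zip(features, mask) if bit == 1]


config = QIFABCConfig()
selected = apply_mask([0.31, 1.20, -0.52, 2.04], [1, 0, 0, 1])  # keeps 0.31 and 2.04
```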

In the q-bit representation, each quantum firefly-based feature solution \(\:{QF}_{i}\) is represented as a string of m quantum bits, as given by Eq. (18).

Where \(\:{QF}_{i}\) is defined by the angle vector Θ, whose elements are the angles \(\:{\theta\:}_{ij}\). Each angle \(\:{\theta\:}_{ij}\) lies in \(\:\left[0,\:\frac{\pi\:}{2}\right]\) for \(\:1\le\:j\le\:m\).

The pair of numbers \(\:\left(\text{cos}\left({\theta\:}_{ij}\right),\:\text{sin}\left({\theta\:}_{ij}\right)\right)\) defines the probability amplitudes of each quantum bit. The squared amplitude \(\:{\left|\text{sin}\left({\theta\:}_{ij}\right)\right|}^{2}\) is the probability of selecting the feature, while \(\:{\left|\text{cos}\left({\theta\:}_{ij}\right)\right|}^{2}\) is the probability of rejecting it, satisfying the normalization condition of quantum computing: \(\:{\left|\text{sin}\left({\theta\:}_{ij}\right)\right|}^{2}+{\left|\text{cos}\left({\theta\:}_{ij}\right)\right|}^{2}=1\). At the initial stage (t = 0), the population of quantum fireflies is highly diverse with respect to selecting or rejecting features in \(\:{QF}_{i}\). The initial quantum firefly feature solutions \(\:{QF}_{i}^{t=0}\) are given by Eq. (19).

Next, the movement of the fireflies is analyzed after converting the quantum firefly feature solutions (\(\:{QF}_{i}\)) into binary firefly feature solutions (\(\:{BF}_{i}\)) by quantum measurement. For each q-bit of the angle vector Θ, a random number \(\:{Num}_{R}\) is generated in the interval [0, 1] and used to determine the corresponding bit of the binary firefly feature solution. Equation (20) assigns the value 1 or 0 to each bit.
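For reference, the angle-based q-bit string and a uniform initial superposition can be written as below. This is an assumed form consistent with the amplitude definitions above, not a reproduction of Eqs. (18) and (19); in particular, initializing every angle at π/4, so that each feature starts with a selection probability of 1/2, is borrowed from standard quantum-inspired evolutionary algorithms.

```latex
QF_i=\begin{bmatrix}\cos\theta_{i1} & \cos\theta_{i2} & \cdots & \cos\theta_{im}\\
\sin\theta_{i1} & \sin\theta_{i2} & \cdots & \sin\theta_{im}\end{bmatrix},
\qquad \theta_{ij}\in\left[0,\tfrac{\pi}{2}\right]

\theta_{ij}^{0}=\frac{\pi}{4}\;\Rightarrow\;
\left|\cos\theta_{ij}^{0}\right|^{2}=\left|\sin\theta_{ij}^{0}\right|^{2}=\frac{1}{2},
\qquad 1\le j\le m
```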

The quantum measurement does not rely on any previous quantum firefly feature solutions. Features are selected according to Eq. (20) over multiple passes of the measurement operation until a feasible solution is found. Each round of measurement gives another chance to features that satisfy the constraints but were not selected in earlier rounds. This iterative measurement process ensures an effective conversion of \(\:{QF}_{i}\) into \(\:{BF}_{i}\).

A less bright \(\:{BF}_{i}\) moves toward a brighter \(\:{BF}_{j}\) according to their attractiveness, which depends on the distance between the fireflies. The distance \(\:{Dist}_{new}\) between firefly solutions is computed by Eq. (21).

\(\:{Dist}_{new}\) is a modified variant of the classical Hamming distance (\(\:{D}_{ch}\)) that distinguishes between configurations with equal Hamming distance but different attractiveness42. This formulation enhances the effectiveness of FA. \(\:{BF}_{i}\) and \(\:{BF}_{j}\) are considered different if and only if \(\:{Dist}_{new}\left({BF}_{i},\:{BF}_{j}\right)=1\) and \(\:{BF}_{ik}\ne\:{BF}_{jk}\) for \(\:1\le\:k\le\:m\). In Eq. (21), m denotes the size of the feature solutions. The value of \(\:{D}_{ch}({BF}_{i},\:{BF}_{j})\) is computed using Eq. (22).
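The measurement step can be sketched as follows, assuming that Eq. (20) sets a bit to 1 when \(\:{Num}_{R}<{\left|\text{sin}\left({\theta\:}_{ij}\right)\right|}^{2}\) and to 0 otherwise. The feasibility test is a hypothetical placeholder (here, at least one feature must be selected), since the constraints are not specified in this section.

```python
import math
import random


def measure(thetas, rng=random):
    """Collapse one quantum firefly (angles theta_ij) into a binary feature mask.

    Assumed Eq. (20): bit j is 1 when a random number in [0, 1) falls below
    |sin(theta_ij)|^2, the probability of selecting feature j.
    """
    return [1 if rng.random() < math.sin(t) ** 2 else 0 for t in thetas]


def measure_until_feasible(thetas, is_feasible, max_rounds=100, rng=random):
    """Repeat the measurement until the hypothetical `is_feasible` check passes."""
    for _ in range(max_rounds):
        bits = measure(thetas, rng)
        if is_feasible(bits):
            return bits
    raise RuntimeError("no feasible binary solution found")


# Usage: 10 features at theta = pi/4 (selection probability 0.5 each);
# "feasible" here simply means that at least one feature is selected.
rng = random.Random(42)
mask = measure_until_feasible([math.pi / 4] * 10, lambda b: sum(b) > 0, rng=rng)
```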
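The classical Hamming distance can be sketched as follows, assuming Eq. (22) is the usual count of positions at which two binary feature solutions differ. The modified \(\:{Dist}_{new}\) of Eq. (21) follows the formulation of ref. 42 and is not reproduced here.

```python
def hamming_distance(bf_i, bf_j):
    """Classical Hamming distance D_ch: number of positions k with BF_ik != BF_jk."""
    if len(bf_i) != len(bf_j):
        raise ValueError("binary feature solutions must both have length m")
    return sum(a != b for a, b in zip(bf_i, bf_j))


d = hamming_distance([1, 0, 1, 1, 0], [1, 1, 0, 1, 0])  # differs at k = 2 and k = 3
```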


After the distance is computed and the current \(\:{BF}_{i}\) is compared with the other \(\:{BF}_{j}\), the position of \(\:{QF}_{i}\) is updated as the current firefly moves toward the brighter firefly solution \(\:{QF}_{j}\). The movement of \(\:{QF}_{i}\) is computed using Eq. (24), which is based on Eq. (12).

Where \(\:{\theta\:}_{ik}^{t+1}\) and \(\:{\theta\:}_{ik}^{t}\) denote the angles of the kth q-bit at iterations (t + 1) and t, respectively. This quantum movement alters the probability of selecting each feature in \(\:{BF}_{i}\). To explore the search space efficiently, the following rule is applied: the kth q-bit of \(\:{QF}_{i}\) is replaced with its new value if the kth bit of \(\:{BF}_{i}\) differs from that of \(\:{BF}_{j}\); otherwise, the existing value of \(\:{QF}_{i}\) is retained. The replacement of \(\:{QF}_{i}^{t}\) with the new solution \(\:{QF}_{i}^{t+1}\) is given by Eq. (25), based on the movement strategy of Eq. (24).

The movement strategy in Eq. (25) follows the principles of FA: the position changes because \(\:{BF}_{i}\) is less bright than \(\:{BF}_{j}\). If, instead, the current firefly solution \(\:{QF}_{i}\) is determined to be the brightest, it would not explore the solution space any further. To avoid this premature convergence, the concept of the ABC algorithm is incorporated: the movement of \(\:{QF}_{i}\) is then determined using Eq. (26), according to the positions of the bees with the best nectar quality in the ABC algorithm.

In Eq. (26), the values of \(\:{\theta\:}_{ik}^{t}\) and \(\:{p}_{i}\) are obtained from Eqs. (27) and (28), which adapt Eqs. (14) and (16) of the ABC algorithm, respectively.

Where \(\:{\theta\:}_{min,k}^{t}\) and \(\:{\theta\:}_{max,k}^{t}\) represent the minimum and maximum values of the rotation angles, respectively, and \(\:{p}_{i}\left({\theta\:}_{ik}^{t}\right)\) is the probability of selecting the solution \(\:{\theta\:}_{ik}^{t}\) based on its fitness value \(\:fit\left({\theta\:}_{ik}^{t}\right)\).

After \(\:{QF}_{i}\) moves according to the ABC algorithm, new solutions \(\:{QF}_{i}^{t+1}\) are generated using Eq. (25), in the same way as for the FA movement strategy.

The search for the best feature solutions continues until the maximum number of iterations is reached and all feature solutions \(\:{QF}_{i}\) have been analyzed. The pseudocode of the proposed QIFABC algorithm for feature selection is presented in Algorithm 1.
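A sketch of the FA-style angle update, restricted to differing bits, is given below. It assumes that Eq. (12) is the standard firefly move, that Eq. (6) is the standard attractiveness \(\:\beta\:={\beta\:}_{0}{e}^{-\gamma\:{r}^{2}}\), and that the distance r is scaled to [0, 1]. The parameter values and the clipping of angles to [0, π/2] are illustrative choices, not values from this work.

```python
import math
import random

HALF_PI = math.pi / 2


def attractiveness(r, beta0=1.0, gamma=1.0):
    """Standard FA attractiveness beta = beta0 * exp(-gamma * r^2) (assumed Eq. (6))."""
    return beta0 * math.exp(-gamma * r * r)


def move_towards(theta_i, theta_j, bf_i, bf_j, r, alpha=0.1, rng=random):
    """Move quantum firefly i towards brighter firefly j in angle space.

    Assumed Eq. (24): theta_ik += beta * (theta_jk - theta_ik) + alpha * (rand - 0.5).
    Assumed Eq. (25): only q-bits whose binary values differ are replaced.
    """
    beta = attractiveness(r)
    new_theta = list(theta_i)
    for k, (t_i, t_j) in enumerate(zip(theta_i, theta_j)):
        if bf_i[k] != bf_j[k]:
            moved = t_i + beta * (t_j - t_i) + alpha * (rng.random() - 0.5)
            new_theta[k] = min(max(moved, 0.0), HALF_PI)  # keep a valid q-bit angle
    return new_theta


# Usage: bits 1 and 3 differ, so only those two angles move.
theta_i, theta_j = [0.2, 0.2, 1.3, 1.3], [1.3, 0.2, 0.2, 1.3]
bf_i, bf_j = [0, 0, 1, 1], [1, 0, 0, 1]
r = sum(a != b for a, b in zip(bf_i, bf_j)) / len(bf_i)  # Hamming distance / m
new_theta = move_towards(theta_i, theta_j, bf_i, bf_j, r, rng=random.Random(7))
```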

Algorithm 1

Pseudo Code of the Proposed QIFABC Algorithm for Feature Selection.

Set up the parameters for both the QIFA and ABC algorithms.

Generate the initial quantum firefly feature solutions \(\:{QF}_{i}^{t=0}=\left\{{QF}_{i1}^{0},\:{QF}_{i2}^{0},\:\dots\:,\:{QF}_{im}^{0}\right\}\) using Eq. (19).

Convert the quantum firefly feature solutions (\(\:{QF}_{i}\)) to binary firefly feature solutions \(\:{BF}_{i}^{t=0}=\left\{{BF}_{i1}^{0},\:{BF}_{i2}^{0},\:\dots\:,\:{BF}_{im}^{0}\right\}\) using quantum measurement.

Calculate the fitness value for each \(\:{BF}_{i}\) and retain the current best solutions.

Evaluate the distance \(\:{Dist}_{new}\) between the fireflies using Eq. (21) and compare the brightness of the binary firefly feature solutions.

t = 1;

while (\(\:t\le\:{t}_{max}\)) do

for (\(\:i=1:n\), each quantum firefly) do

for (\(\:j=1:n\), each quantum firefly) do

if (\(\:\beta\:\left({BF}_{i}^{t}\right)<\beta\:\left({BF}_{j}^{t}\right)\)) then

Calculate the distance \(\:{Dist}_{new}\) between the binary firefly feature solutions \(\:{BF}_{i}^{t}\) and \(\:{BF}_{j}^{t}\) using Eq. (21).

Evaluate the attractiveness (\(\:\beta\:\)) between \(\:{BF}_{i}^{t}\) and \(\:{BF}_{j}^{t}\) using Eq. (6).

Determine the new position of the quantum firefly feature solutions (\(\:{QF}_{i}^{t+1}\)) according to the QIFA algorithm using Eq. (24).

else

% (\(\:\beta\:\left({BF}_{i}^{t}\right)\ge\:\beta\:\left({BF}_{j}^{t}\right)\))

Determine the new position of the quantum firefly feature solutions (\(\:{QF}_{i}^{t+1}\)) according to the ABC algorithm using Eq. (26).

endif

Compute the fitness function for the new population \(\:{BF}_{i}^{t+1}\).

Store the current best binary feature solutions.

end for

end for

t = t + 1;

end while
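To make Algorithm 1 concrete, the loop can be sketched in Python as below. This is a minimal sketch under several assumptions, not the authors' implementation: the q-bits start at θ = π/4; Eq. (20) selects a feature with probability sin²θ; attractiveness is \(\:{e}^{-\gamma\:{r}^{2}}\) with r the Hamming distance divided by m; the QIFA branch applies an FA-style angle update only to differing bits (Eq. (25)); and the ABC branch, whose exact form in Eqs. (26) to (28) is not reproduced here, is replaced by a roulette-selected partner and an Eq. (15)-style perturbation of one angle. The objective `toy_fitness` is a hypothetical, non-negative stand-in for the actual feature-selection fitness, and n and t_max are kept small for speed.

```python
import math
import random

HALF_PI = math.pi / 2


def qifabc(fitness, m, n=10, t_max=50, alpha=0.1, gamma=1.0, seed=0):
    """Illustrative sketch of Algorithm 1; maximizes a non-negative `fitness` over 0/1 masks."""
    rng = random.Random(seed)

    def measure(theta):
        # Assumed Eq. (20): bit k is 1 with probability sin^2(theta_k).
        return [1 if rng.random() < math.sin(t) ** 2 else 0 for t in theta]

    thetas = [[math.pi / 4] * m for _ in range(n)]  # assumed Eq. (19)
    masks = [measure(th) for th in thetas]          # quantum measurement
    scores = [fitness(b) for b in masks]            # brightness = fitness
    best_i = max(range(n), key=lambda s: scores[s])
    best_mask, best_score = list(masks[best_i]), scores[best_i]

    for _ in range(t_max):
        for i in range(n):
            for j in range(n):
                if j == i:
                    continue
                if scores[i] < scores[j]:
                    # QIFA branch: move towards brighter firefly j (Eqs. (24)-(25)).
                    r = sum(a != b for a, b in zip(masks[i], masks[j])) / m
                    beta = math.exp(-gamma * r * r)
                    for k in range(m):
                        if masks[i][k] != masks[j][k]:
                            step = beta * (thetas[j][k] - thetas[i][k])
                            step += alpha * (rng.random() - 0.5)
                            thetas[i][k] = min(max(thetas[i][k] + step, 0.0), HALF_PI)
                else:
                    # ABC branch (stand-in for Eqs. (26)-(28)): roulette-pick a
                    # partner by fitness and perturb one angle relative to it.
                    weights = [0.0 if s == i else scores[s] + 1e-9 for s in range(n)]
                    p = rng.choices(range(n), weights=weights, k=1)[0]
                    k = rng.randrange(m)
                    phi = rng.uniform(-1.0, 1.0)
                    moved = thetas[i][k] + phi * (thetas[i][k] - thetas[p][k])
                    thetas[i][k] = min(max(moved, 0.0), HALF_PI)
                # Re-measure firefly i, update its brightness, and keep the best mask.
                masks[i] = measure(thetas[i])
                scores[i] = fitness(masks[i])
                if scores[i] > best_score:
                    best_mask, best_score = list(masks[i]), scores[i]
    return best_mask, best_score


# Usage with a hypothetical toy objective: agreement with a known target mask.
target = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
toy_fitness = lambda mask: sum(a == b for a, b in zip(mask, target)) / len(target)
best_mask, best_score = qifabc(toy_fitness, m=len(target), seed=1)
```

Seeding a private `random.Random` instance makes each run reproducible, which is convenient when repeating the search over independent runs with different seeds.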

The feature selection performance of the proposed QIFABC algorithm was also compared with that of the QIFA and ABC algorithms. The statistics of the objective function obtained over 30 independent runs are reported in Table 1.

These metrics provide a robust assessment of the algorithm's performance, reflecting not only its optimization quality but also the stability and reliability of the metaheuristic approach.

The statistical significance of the improvements achieved by the QIFABC algorithm was further evaluated through a comparative analysis against the FA, QIFA, and ABC algorithms. A two-tailed t-test was performed on the best, worst, mean, and median scores across 30 independent runs to determine whether the performance differences were statistically significant. The obtained p-values were below 0.05, indicating that the improvements in performance are statistically significant.

In addition, the proposed QIFABC algorithm outperforms the baseline QIFA and ABC algorithms in feature selection accuracy, as evidenced by its best, mean, and median scores across all independent runs. These results highlight the effectiveness of the hybrid algorithm for feature selection in multi-pose FER tasks.
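The significance test can be sketched with the standard library, assuming it compares the 30 per-run objective values of two algorithms with Welch's unequal-variance t-test; the section does not state which t-test variant was used. The p-value below uses a normal approximation to the t distribution, which is adequate only for large degrees of freedom; `scipy.stats.ttest_ind(a, b, equal_var=False)` gives the exact two-sided value. The run values are synthetic.

```python
import math
import random
import statistics


def welch_t_test(a, b):
    """Two-tailed Welch t-test; returns (t, df, p) with a normal-approximation p."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    p = math.erfc(abs(t) / math.sqrt(2))  # two-tailed p under N(0, 1)
    return t, df, p


# Hypothetical objective values from 30 runs of two algorithms (synthetic data).
rng = random.Random(0)
runs_a = [rng.gauss(0.95, 0.01) for _ in range(30)]
runs_b = [rng.gauss(0.90, 0.01) for _ in range(30)]
t, df, p = welch_t_test(runs_a, runs_b)
```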

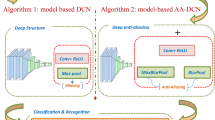

Expression classification

Expression classification is performed using the deep neural network model ResNet-50, which was designed to make very deep architectures trainable through residual blocks whose skip connections bypass layers. Figure 3 presents the internal learning process of a residual block in ResNet, where x is the input to the block, y is its output, and F(x) is the residual function. This relationship is given by Eq. (29).

ResNet is preferred over traditional CNN architectures because plain CNNs become harder to train as layers are added. As the depth of a plain CNN increases, accuracy saturates and then degrades, and the training error itself rises; this degradation stems from optimization difficulty rather than overfitting. ResNet addresses this issue with residual connections, which add the input of a block to the output of its stacked layers, so that the network learns residual functions; this eases training and improves accuracy.

The ResNet-50 model consists of 50 weighted layers (49 convolutional layers and one fully connected layer), together with pooling layers. The network is built from 3-layer bottleneck blocks and requires about \(\:3.8\times\:{10}^{9}\) FLOPs43. The network architecture of the ResNet-50 model is presented in Table 2.
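As a minimal illustration of the residual mapping, assuming Eq. (29) is the standard \(\:y=F\left(x\right)+x\), a toy block with fully connected layers is sketched below. Real ResNet-50 blocks use convolutions and batch normalization, which are omitted here; applying ReLU after the addition follows the original ResNet design.

```python
def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]


def matvec(w, v):
    """Dense layer without bias: returns W v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x), with residual branch F(x) = W2 ReLU(W1 x).

    The identity shortcut requires F(x) to have the same size as x.
    """
    fx = matvec(w2, relu(matvec(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])


# With zero weights, F(x) = 0 and the block passes a non-negative input through.
identity_out = residual_block([1.0, 2.0], [[0, 0], [0, 0]], [[0, 0], [0, 0]])
```

The zero-weight case shows why residual learning helps: a block can fall back to the identity mapping simply by driving its residual branch toward zero.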
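The figure of 50 layers can be checked with a short count, using the standard ResNet-50 configuration of 3, 4, 6, and 3 bottleneck blocks in its four stages. Following the usual convention, only weighted layers are counted, so pooling layers and the 1 × 1 projection shortcuts are excluded.

```python
# Bottleneck blocks per stage in ResNet-50 (conv2_x to conv5_x).
BLOCKS_PER_STAGE = [3, 4, 6, 3]
LAYERS_PER_BOTTLENECK = 3  # 1x1 -> 3x3 -> 1x1 convolutions

stem_conv = 1  # initial 7x7 convolution (conv1)
final_fc = 1   # fully connected classification layer
conv_layers = stem_conv + LAYERS_PER_BOTTLENECK * sum(BLOCKS_PER_STAGE)  # 1 + 3 * 16 = 49
weight_layers = conv_layers + final_fc                                   # 50
```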

Experimental evaluations

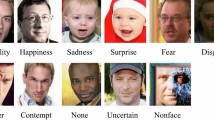

The experiments are performed on two FER datasets, RaF44 and KDEF45. The RaF dataset, created by Langner et al.44, is a multi-pose dataset featuring 67 models (28 males and 39 females). These models exhibit eight different expressions, each captured with three different gaze directions and five different camera yaw angles. The KDEF dataset, developed by Lundqvist et al.35, also focuses on multi-pose facial expressions and includes 70 individuals (35 males and 35 females). Each individual displays seven expressions, captured from five different yaw angles.

The current research work conducts experiments on seven expression classes (fear, disgust, happy, surprise, neutral, anger, and sad) with available gaze directions in each dataset. The work analyzes the expressions for the five different face pose angles, encompassing front pose (0 degrees), diagonal pose (± 45 degrees), and profile pose (± 90 degrees). This includes the RaF dataset with 7,035 images (201 images for each expression at each angle) and the KDEF dataset with 4,900 images (140 images for each expression at each angle).

The evaluation of the proposed FER system is measured in terms of recognition rate (%), which is described by Eq. (30).
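A short sketch of the recognition rate follows, assuming Eq. (30) is the usual ratio of correctly classified test images to all test images, expressed as a percentage. With 40 test images per class, the QIFABC front-pose rates for RaF in Table 3 (95% for fear, 97.5% for disgust, 100% otherwise) correspond to 277 of 280 correct images, or 98.93%, which agrees with the front-pose RaF accuracy in the abstract. Only the diagonal counts follow from Table 3; the placement of the misclassified images is hypothetical.

```python
def recognition_rate(confusion):
    """Overall recognition rate (%): correctly classified / all test images * 100."""
    correct = sum(confusion[c][c] for c in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return 100.0 * correct / total


def per_class_rates(confusion):
    """Recognition rate (%) of each actual class; rows are actual classes."""
    return [100.0 * row[c] / sum(row) for c, row in enumerate(confusion)]


classes = ["fear", "disgust", "happy", "surprise", "neutral", "anger", "sad"]
correct_counts = {"fear": 38, "disgust": 39}  # every other class: 40 of 40
confusion = []
for a, name in enumerate(classes):
    row = [0] * len(classes)
    row[a] = correct_counts.get(name, 40)
    row[(a + 1) % len(classes)] += 40 - row[a]  # hypothetical placement of errors
    confusion.append(row)

overall = recognition_rate(confusion)  # 277 / 280 correct, about 98.93%
```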

Results and discussion

To assess the FER system, 80% of the data is allocated for training, while the remaining 20% is reserved for testing. This corresponds to holding out 40 images from the RaF dataset and 28 images from the KDEF dataset for each expression class at each of the five yaw angles. Performance is also evaluated with feature selection carried out by the QIFA, FA, and ABC algorithms. The expression recognition results for the proposed QIFABC algorithm and the other feature selection methods are presented in three experiments, categorized by face pose: front pose (0 degrees), diagonal pose (± 45 degrees), and profile pose (± 90 degrees).
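The image counts and the split can be checked with simple arithmetic. The sketch rounds the 20% test share down, which reproduces the 40 (RaF) and 28 (KDEF) held-out images per class and angle stated above; the RaF figure of 201 images per class and angle follows from 67 models and 3 gaze directions. The function name is hypothetical.

```python
def split_counts(per_class_per_angle, n_classes=7, n_angles=5, test_percent=20):
    """Return (total images, test images, training images per class per angle)."""
    total = per_class_per_angle * n_classes * n_angles
    n_test = per_class_per_angle * test_percent // 100  # rounded down
    return total, n_test, per_class_per_angle - n_test


raf = split_counts(67 * 3)  # 67 models x 3 gaze directions = 201 images
kdef = split_counts(140)
```

With 40 RaF test images per class and angle, a pose that covers two yaw angles (± 45 or ± 90 degrees) has 80 test images per class, so each image is worth 1.25 percentage points, consistent with rates such as 96.25% and 93.75% reported for those poses.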

Experiment 1: front pose (0 degrees)

This experiment evaluates the results for front pose expressions (face at 0 degrees) of both the RaF and KDEF datasets. The recognition rate (%), derived from the confusion matrix results, is used as the evaluation metric. The confusion matrix results are illustrated using Sankey diagrams in Figs. 4 and 5 for the RaF and KDEF datasets, respectively. The recognition rates (%) for the front pose expression images of the RaF and KDEF datasets are presented in Tables 3 and 4, respectively.

Figure 4 shows that the proposed FER system using the QIFABC feature selection method exhibits the least confusion among expressions for the front pose facial images of the RaF dataset, whereas the FER systems with the other feature selection methods show greater confusion. The individual FA and ABC algorithms exhibit the most confusion among expression classes, particularly fear, disgust, surprise, and sad. The system using QIFA for feature selection shows less confusion than the individual algorithms but more than the proposed QIFABC approach; with QIFA, fear and disgust are the most confused classes.

Figure 5 describes the results for the front pose facial images of the KDEF dataset. The pattern of expression confusion is similar to that observed for the RaF dataset: the FA feature selection method shows the most confusion, followed by the ABC method, then the QIFA method, and finally the proposed QIFABC approach, which shows the least confusion.

The recognition rates in Table 3 for the front pose expressions of the RaF dataset show the effective performance of the proposed QIFABC algorithm, which achieves a 100% recognition rate for all expressions except fear and disgust, with recognition rates of 95% and 97.5%, respectively. The other methods also achieve good, though lower, recognition rates. Among the compared feature selection methods, FA yields the lowest recognition rates, ranging from a minimum of 82.5% for the disgust expression to a maximum of 97.5% for the neutral class. ABC performs better than FA, with a minimum of 85% for the fear class and a maximum of 100% for the happy class. QIFA outperforms both FA and ABC but remains less effective than QIFABC, with a minimum of 92.5% for the fear class and a maximum of 100% for the happy, surprise, anger, and sad classes.

Table 4 presents the recognition rates for the front pose expressions of the KDEF dataset. For this dataset as well, the classification outcomes using the proposed QIFABC algorithm are superior to those using the other feature selection methods (FA, ABC, and QIFA). For the front pose expressions of the KDEF dataset, QIFABC attains a 100% recognition rate for all expressions except fear and sad, with recognition rates of 96.43% and 92.86%, respectively. QIFA achieves a minimum of 89.29% for the fear and sad expressions and 100% for all other expressions. FA achieves a minimum of 85.71% for the fear and disgust expressions and a maximum of 100% for the happy expression. ABC achieves a minimum of 85.71% for the fear expression and a maximum of 100% for the anger expression.

Experiment 2: diagonal pose (± 45 degrees)

This experiment determines the results for the diagonal pose expressions (face at ± 45 degrees) of both the RaF and KDEF datasets. The Sankey diagrams based on the confusion matrix results are presented in Figs. 6 and 7 for the diagonal pose expression images of the RaF and KDEF datasets, respectively.

The recognition rates (%) for the experiments on the diagonal pose expression images of the RaF and KDEF datasets are presented in Tables 5 and 6, respectively.

Figure 6 illustrates the results related to the diagonal pose expressions of the RaF dataset. The confusion of expressions in the diagonal pose evaluations is higher compared to the front pose expressions. For the proposed FER approach utilizing the QIFABC feature selection method, the fear and disgust classes are identified as the most confused expression classes. The FER system with the feature selection method of QIFA shows confusion among the fear, disgust, and sad classes. The confusion in expressions using the QIFA algorithm is higher compared to the QIFABC approach, and this confusion further increases with the ABC and FA algorithms. Using the FA algorithm, the most confused expression classes are fear, disgust, and surprise. With the ABC algorithm, the most confused classes are disgust and surprise.

Further, Fig. 7 depicts the results related to the diagonal pose expressions of the KDEF dataset. In this illustration, the proposed FER system utilizing the QIFABC feature selection method shows that the fear, disgust, and sad classes are the most confused expression classes among the seven expression classes. Similarly, the QIFA algorithm exhibits confusion primarily among the fear and disgust classes. The individual algorithms FA and ABC also indicate confusion among the fear, disgust, and sad expression classes.

The results in Tables 5 and 6 show that the proposed FER system with the QIFABC feature selection method achieves higher recognition rates than the other methods. For the RaF dataset, the QIFABC, QIFA, and FA algorithms achieved maximum recognition rates of 100%, 97.5%, and 92.5%, respectively, for the anger expression, while the ABC algorithm achieved a maximum recognition rate of 96.25% for the happy expression. For the KDEF dataset, the QIFABC, QIFA, and ABC algorithms achieved maximum recognition rates of 100%, 98.21%, and 96.43%, respectively, for the anger expression, while the FA algorithm achieved a maximum recognition rate of 94.64% for the neutral expression.

Experiment 3: Profile pose (± 90 degrees)

This experiment assesses the results for the profile pose expressions (face at ± 90 degrees) of both the RaF and KDEF datasets. The Sankey diagrams illustrating the confusion matrix results for the profile pose expression images of the RaF and KDEF datasets are shown in Figs. 8 and 9, respectively. The recognition rates (%) for the experiments on the profile pose expression images of the RaF and KDEF datasets are presented in Tables 7 and 8, respectively.

Figures 8 and 9 show the results for the profile pose expressions of the RaF and KDEF datasets, respectively. The confusion of expressions in the profile pose evaluations is even higher than in the front and diagonal pose expressions. For the profile poses of both datasets, the QIFABC algorithm shows less confusion of expressions compared to the QIFA, FA, and ABC algorithms. In the profile pose results of both datasets, the most confused class is the fear expression, while the least confused class is the anger expression.

The results in Tables 7 and 8 show higher recognition rates for the proposed FER system with the QIFABC feature selection method than for the other algorithms, as observed for the front and diagonal pose expressions of both datasets. For the RaF dataset, QIFABC achieved a maximum recognition rate of 96.25% for the anger expression. The QIFA algorithm achieved a maximum of 93.75% for the happy, neutral, and anger expressions, while the FA and ABC algorithms each achieved a maximum of 93.75% for the anger expression. For the KDEF dataset, the QIFABC, QIFA, and ABC algorithms achieved maximum recognition rates of 98.21%, 98.21%, and 96.43%, respectively, for the anger expression, and the FA algorithm achieved a maximum recognition rate of 92.86% for the neutral expression.

Comparison with state-of-the-art techniques

The performance comparison of the presented FER systems with state-of-the-art techniques includes the existing techniques presented by Kumar et al.10, Asha22, Kumar et al.23, Bhatt et al.24, and Yaddaden36. The comparative analyses for the RaF and KDEF datasets are illustrated in Tables 9 and 10, respectively.

Kumar et al.10 used the feature selection method of the quantum-inspired binary gravitational search algorithm (QIBGSA) with a deep convolutional neural network (DCNN) classifier for experiments on the front and diagonal pose expressions of the KDEF dataset. Asha22 explored different variants of GSA for feature selection, including SGSA, BGSA, and FDGSA, for experiments on the front pose expressions of the KDEF dataset. Asha22 used DCNN and extended DCNN (EDCNN) for classification. Kumar et al.23 used IQIGSA for feature selection and DCNN for classification, with experiments on the front pose expressions of the RaF dataset and the front and diagonal pose expressions of the KDEF dataset. Bhatt et al.24 utilized different quantum-behaved metaheuristic methods for feature selection, including QGSA, QFA, QPSO, and QGA, for experiments on the front, diagonal, and profile pose expressions of the RaF and KDEF datasets. Bhatt et al.24 performed classification using DCNN extended with a residual block (DCNNR). Yaddaden46 incorporated PCA for feature selection and a multi-class support vector machine (MCSVM) for experiments on the front pose expressions of the RaF and KDEF datasets.

The performance comparison results depicted in Tables 9 and 10 indicate the superior performance of the proposed FER system using the QIFABC feature selection method for the multi-pose expressions of the RaF and KDEF datasets compared to state-of-the-art techniques.


Limitations and future work

Although the proposed QIFABC algorithm demonstrates significant potential for feature selection in multi-pose FER, there are a few limitations that can be addressed as future work.

First, the computational complexity of the hybrid algorithm can be challenging for larger datasets or higher-dimensional feature spaces. This may hinder real-time applications or the use of the method in scenarios with extensive data.

Second, the efficacy of the QIFABC algorithm may be sensitive to the choice of hyperparameters, including the population size and the number of iterations. Careful tuning is essential, because suboptimal values may degrade performance or lead to inefficient convergence.

Third, although the QIFABC algorithm generally converges well, it may occasionally become trapped in local optima in more complex search spaces. This could lead to slower convergence or to solutions that fall short of what the algorithm can otherwise achieve.

The interaction between feature selection and classification is explored with ResNet-50, but there is potential for further enhancement by integrating other advanced classifiers.

Finally, the experiments were conducted on the RaF and KDEF datasets, which contain specific facial expressions captured in controlled environments. The algorithm may face challenges on real-world data with diverse lighting conditions, poses, and expressions.

The aforementioned challenges highlight opportunities for future research work. Addressing these limitations could enhance the scalability, robustness, and applicability of the proposed method for broader implementation in diverse and complex scenarios.

Conclusion

This paper has described a multi-pose FER system with a focus on enhancing feature selection through the proposed QIFABC approach, which incorporates the attributes of QC, FA, and ABC algorithms. The FER system conducted initial feature extraction using the TCNN method, feature selection using the proposed QIFABC approach, and expression classification using the ResNet-50 model. Feature selection is also performed using the QIFA, FA, and ABC algorithms to determine the effectiveness of the proposed QIFABC approach.

The presented FER systems are tested on the front, diagonal, and profile pose expressions of the RaF and KDEF datasets. The experimental evaluations indicate better recognition rates for the front pose expressions, followed by reduced recognition rates for the diagonal pose expressions, and further reduced rates for the profile pose expressions. The performance comparison indicates the superior performance of the proposed FER system using the QIFABC algorithm for feature selection compared to other feature selection methods (QIFA, FA, and ABC algorithms) and state-of-the-art techniques.

These findings illustrate the potential of the QIFABC algorithm to optimize features effectively for multi-pose FER. The integration of quantum-inspired strategies with metaheuristic algorithms proved effective in enhancing the accuracy and efficiency of FER systems.

The limitations of the proposed work were discussed in the previous section and provide opportunities for further exploration. Addressing these challenges could extend the scalability, robustness, and applicability of the proposed QIFABC algorithm to diverse, real-world scenarios. Moreover, future research may explore the integration of advanced classifiers or hybrid approaches to further improve recognition performance, especially for the diagonal and profile pose expressions.

Data availability

The datasets used in this study, RaF (Radboud Faces) and KDEF (Karolinska Directed Emotional Faces), are publicly available for research purposes. These datasets can be accessed through the following links:

• RaF dataset (https://rafd.socsci.ru.nl/Overview.html)

• KDEF dataset (https://www.kdef.se/download-2/register)

References

Kim, J. H., Kim, B. G., Roy, P. P. & Jeong, D. M. Efficient facial expression recognition algorithm based on hierarchical deep neural network structure. IEEE Access. 7, 41273–41285 (2019).

Corneanu, C. A., Simón, M. O., Cohn, J. F. & Guerrero, S. E. Survey on RGB, 3D, thermal, and multimodal approaches for facial expression recognition: history, trends, and affect-related applications. IEEE Trans. Pattern Anal. Mach. Intell. 38 (8), 1548–1568 (2016).

Mehrabian, A. Communication without words. In Communication Theory 193–200 (Routledge, 2017).

Fasel, B. & Luettin, J. Automatic facial expression analysis: a survey. Pattern Recogn. 36 (1), 259–275 (2003).

Mao, X., Xue, Y., Li, Z. & Bao, H. Layered fuzzy facial expression generation: social, emotional and physiological. In Affective Computing 83 (IntechOpen, 2008).

Jampour, M. & Javidi, M. Multiview facial expression recognition, a survey. IEEE Trans. Affect. Comput. 13 (4), 2086–2105 (2022).

PrabhakaraRao, T. et al. Oppositional Brain Storm Optimization with Deep Learning based Facial Emotion Recognition for Autonomous Intelligent Systems. IEEE Access. (2024).

He, Y., Zhang, Y., Chen, S. & Hu, Y. Facial Expression Recognition Using Hierarchical Features With Three-Channel Convolutional Neural Network. IEEE Access. (2023).

Liu, T. et al. Facial expression recognition on the high aggregation Subgraphs. IEEE Trans. Image Process. 32, 3732–3745 (2023).

Kumar, Y., Verma, S. K. & Sharma, S. Quantum-inspired binary gravitational search algorithm to recognize the facial expressions. Int. J. Mod. Phys. C. 31 (10), 2050138 (2020).

Ji, L., Wu, S. & Gu, X. A facial expression recognition algorithm incorporating SVM and explainable residual neural network. Signal. Image Video Process. 17 (8), 4245–4254 (2023).

Wong, L. A., Shareef, H., Mohamed, A. & Ibrahim, A. A. Novel quantum-inspired firefly algorithm for optimal power quality monitor placement. Front. Energy. 8, 254–260 (2014).

Ross, O. H. M. A review of quantum-inspired metaheuristics: going from classical computers to real quantum computers. IEEE Access. 8, 814–838 (2019).

Wang, J. et al. Improvement and application of hybrid firefly algorithm. IEEE Access. 7, 165458–165477 (2019).

Karaboga, D. Artificial bee colony algorithm. Scholarpedia. 5 (3), 6915 (2010).

Jia, H., Rao, H., Wen, C. & Mirjalili, S. Crayfish optimization algorithm. Artif. Intell. Rev. 56 (Suppl 2), 1919–1979 (2023).

Połap, D. & Woźniak, M. Red fox optimization algorithm. Expert Syst. Appl. 166, 114107 (2021).

Abualigah, L., Abd Elaziz, M., Sumari, P., Geem, Z. W. & Gandomi, A. H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 191, 116158 (2022).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1 (1), 67–82 (1997).

Trojovský, P. A new human-based metaheuristic algorithm for solving optimization problems based on preschool education. Sci. Rep. 13 (1), 21472 (2023).

Jovanovic, D. et al. Tuning machine learning models using a group search firefly algorithm for credit card fraud detection. Mathematics 10 (13), 2272 (2022).

Jovanovic, L. et al. Machine learning tuning by diversity oriented firefly metaheuristics for industry 4.0. Expert Syst. 41 (2), e13293 (2024).

Mamindla, A. K. & Ramadevi, D. ANN-ABC meta-heuristic hyper parameter tuning for mammogram classification. J. Theor. Appl. Inf. Technol. 101 (2023).

Bacanin, N. et al. Artificial neural networks hidden unit and weight connection optimization by quasi-refection-based learning artificial bee colony algorithm. IEEE Access. 9, 169135–169155 (2021).

Park, J. W., Koo, M. M., Seo, H. U. & Lim, D. K. Optimizing the Design of an Interior Permanent Magnet Synchronous Motor for Electric Vehicles with a Hybrid ABC-SVM Algorithm. Energies 16 (13), 5087 (2023).

Mistry, K., Zhang, L., Neoh, S. C., Lim, C. P. & Fielding, B. A micro-GA embedded PSO feature selection approach to intelligent facial emotion recognition. IEEE Trans. Cybern. 47 (6), 1496–1509 (2016).

Mlakar, U., Fister, I., Brest, J. & Potočnik, B. Multi-objective differential evolution for feature selection in facial expression recognition systems. Expert Syst. Appl. 89, 129–137 (2017).

Sreedharan, N. P. N. et al. Grey wolf optimisation-based feature selection and classification for facial emotion recognition. IET Biom. 7 (5), 490–499 (2018).

Ghosh, M., Kundu, T., Ghosh, D. & Sarkar, R. Feature selection for facial emotion recognition using late hill-climbing based memetic algorithm. Multimedia Tools Appl. 78, 25753–25779 (2019).

Saha, S. et al. Feature selection for facial emotion recognition using cosine similarity-based harmony search algorithm. Appl. Sci. 10 (8), 2816 (2020).

Ghazouani, H. A genetic programming-based feature selection and fusion for facial expression recognition. Appl. Soft Comput. 103, 107173 (2021).

Asha. Deep neural networks-based classification optimization by reducing the feature dimensionality with the variants of gravitational search algorithm. Int. J. Mod. Phys. C 32 (10), 2150137 (2021).

Kumar, Y., Kant Verma, S. & Sharma, S. An Improved Quantum-Inspired Gravitational Search Algorithm to Optimize the Facial Features. Int. J. Pattern Recognit. Artif. Intell. 35 (14), 2156004 (2021).

Bhatt, A. et al. Quantum-inspired meta-heuristic algorithms with deep learning for facial expression recognition under varying yaw angles. Int. J. Mod. Phys. C 33 (04), 2250045 (2022).

Dirik, M. Optimized ANFIS model with hybrid metaheuristic algorithms for facial emotion recognition. Int. J. Fuzzy Syst. 25 (2), 485–496 (2023).

Viola, P. & Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Vol. 1, I-I (IEEE, 2001).

Yang, X. S. Firefly algorithm, stochastic test functions and design optimisation. Int. J. Bio-Inspired Comput. 2 (2), 78–84 (2010).

Sayadi, M., Ramezanian, R. & Ghaffari-Nasab, N. A discrete firefly meta-heuristic with local search for makespan minimization in permutation flow shop scheduling problems. Int. J. Ind. Eng. Comput. 1 (1), 1–10 (2010).

Karaboga, D. & Akay, B. A comparative study of artificial bee colony algorithm. Appl. Math. Comput. 214 (1), 108–132 (2009).

Xiao, S., Wang, W., Wang, H. & Zhou, X. A new artificial bee colony based on multiple search strategies and dimension selection. IEEE Access. 7, 133982–133995 (2019).

Akay, B. & Karaboga, D. A modified artificial bee colony algorithm for real-parameter optimization. Inf. Sci. 192, 120–142 (2012).

Zouache, D., Nouioua, F. & Moussaoui, A. Quantum-inspired firefly algorithm with particle swarm optimization for discrete optimization problems. Soft. Comput. 20, 2781–2799 (2016).

Jindal, S., Sachdeva, M. & Kushwaha, A. K. Quantum behaved intelligent variant of gravitational search algorithm with deep neural networks for human activity recognition. Kuwait J. Sci. 50 (2A) (2023). https://doi.org/10.48129/kjs.18531

Langner, O. et al. Presentation and validation of the Radboud faces database. Cogn. Emot. 24 (8), 1377–1388 (2010).

Goeleven, E., De Raedt, R., Leyman, L. & Verschuere, B. The Karolinska directed emotional faces: a validation study. Cogn. Emot. 22 (6), 1094–1118 (2008).

Yaddaden, Y. An efficient facial expression recognition system with appearance-based fused descriptors. Intell. Syst. Appl. 17, 200166 (2023).

Funding

No external funding received for this research work.

Author information

Contributions

Mu Panliang: Conceptualization, Methodology, Writing - original draft. Sanjay Madaan: Investigation, Validation, Writing - review & editing, Supervision. Siddiq Babaker: Conceptualization, Writing - review & editing. Gowrishankar J.: Validation, Writing - review & editing. Ali Khatibi: Investigation, Writing - review & editing. Anas Ratib Alsoud: Conceptualization, Writing - review & editing. Vikas Mittal: Investigation, Writing - review & editing. Lalit Kumar: Methodology, Writing - review & editing. A. Johnson Santhosh: Writing - review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Panliang, M., Madaan, S., Babikir Ali, S. et al. Enhancing feature selection for multi-pose facial expression recognition using a hybrid of quantum inspired firefly algorithm and artificial bee colony algorithm. Sci Rep 15, 4665 (2025). https://doi.org/10.1038/s41598-025-85206-9

DOI: https://doi.org/10.1038/s41598-025-85206-9